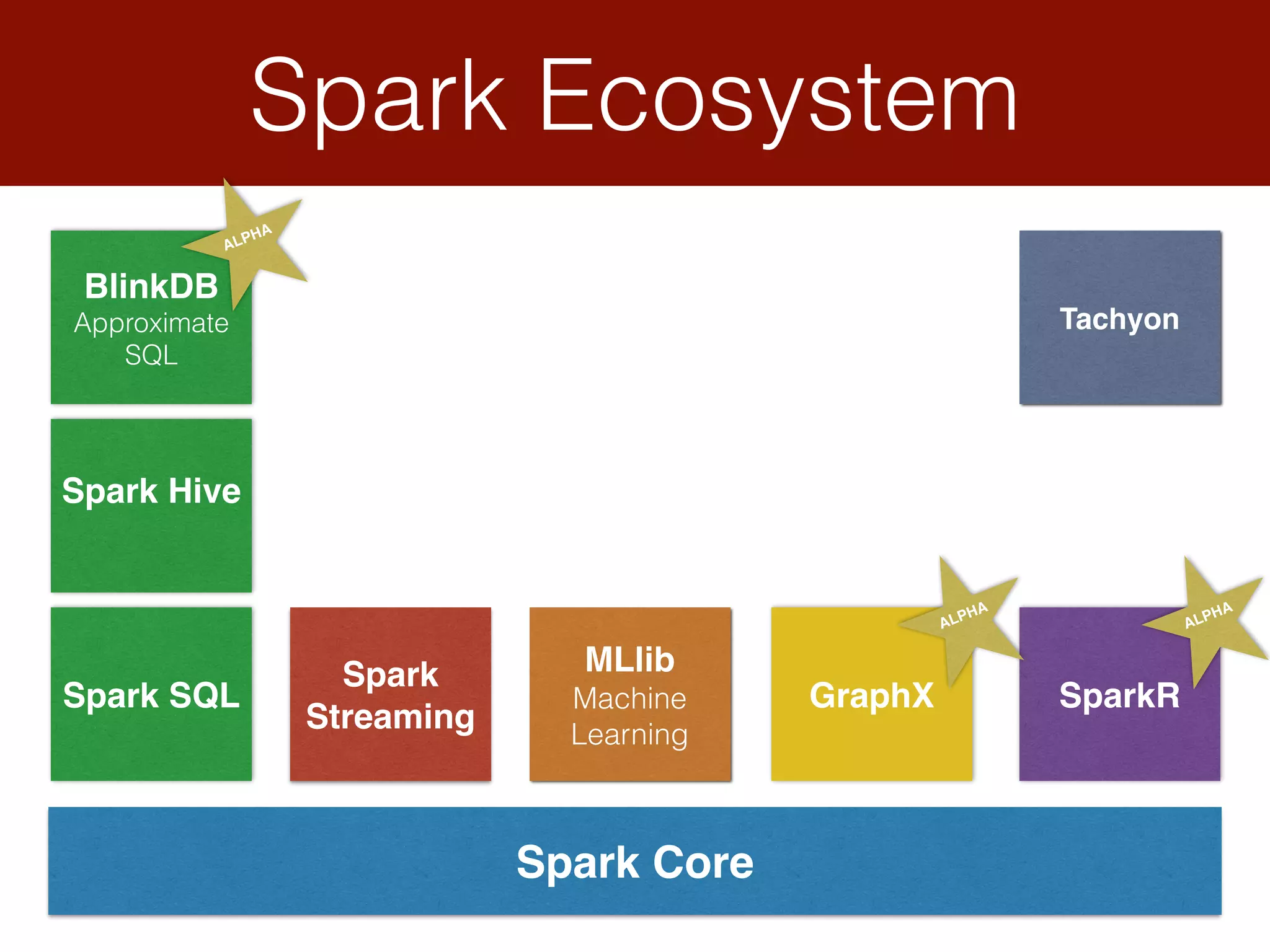

Spark is a framework for clustered in-memory data processing. It was developed at UC Berkeley and is now an Apache top-level project. Spark uses cluster-wide memory to speed up computations on large data. The core abstraction in Spark is the resilient distributed dataset (RDD), which acts as a fault-tolerant collection of objects across a cluster. Spark also provides APIs for batch processing, streaming, SQL, machine learning, and graph processing.

![RDD Word Count val sc = new SparkContext()

val input: RDD[String] = sc.textFile("/tmp/word.txt")

val words: RDD[(String, Long)] = input

.flatMap(line => line.toLowerCase.split("s+"))

.map(word => word -> 1L)

.cache()

val wordCountsRdd: RDD[(String, Long)] = words

.reduceByKey(_ + _)

.sortByKey()

val wordCounts: Array[(String, Long)] = wordCountsRdd.collect()](https://image.slidesharecdn.com/sparkintroscalaug-150326162834-conversion-gate01/75/Intro-to-Apache-Spark-12-2048.jpg)