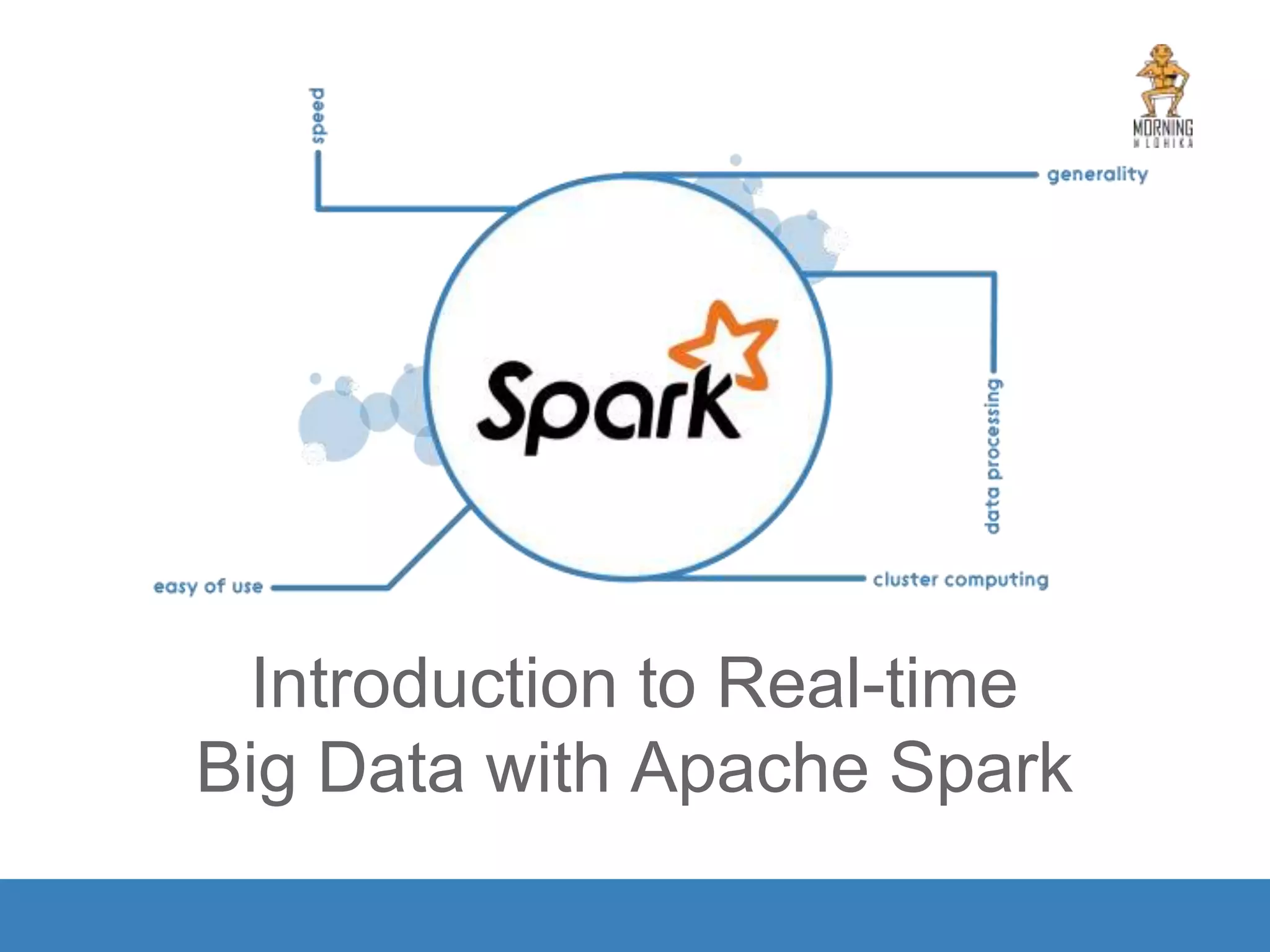

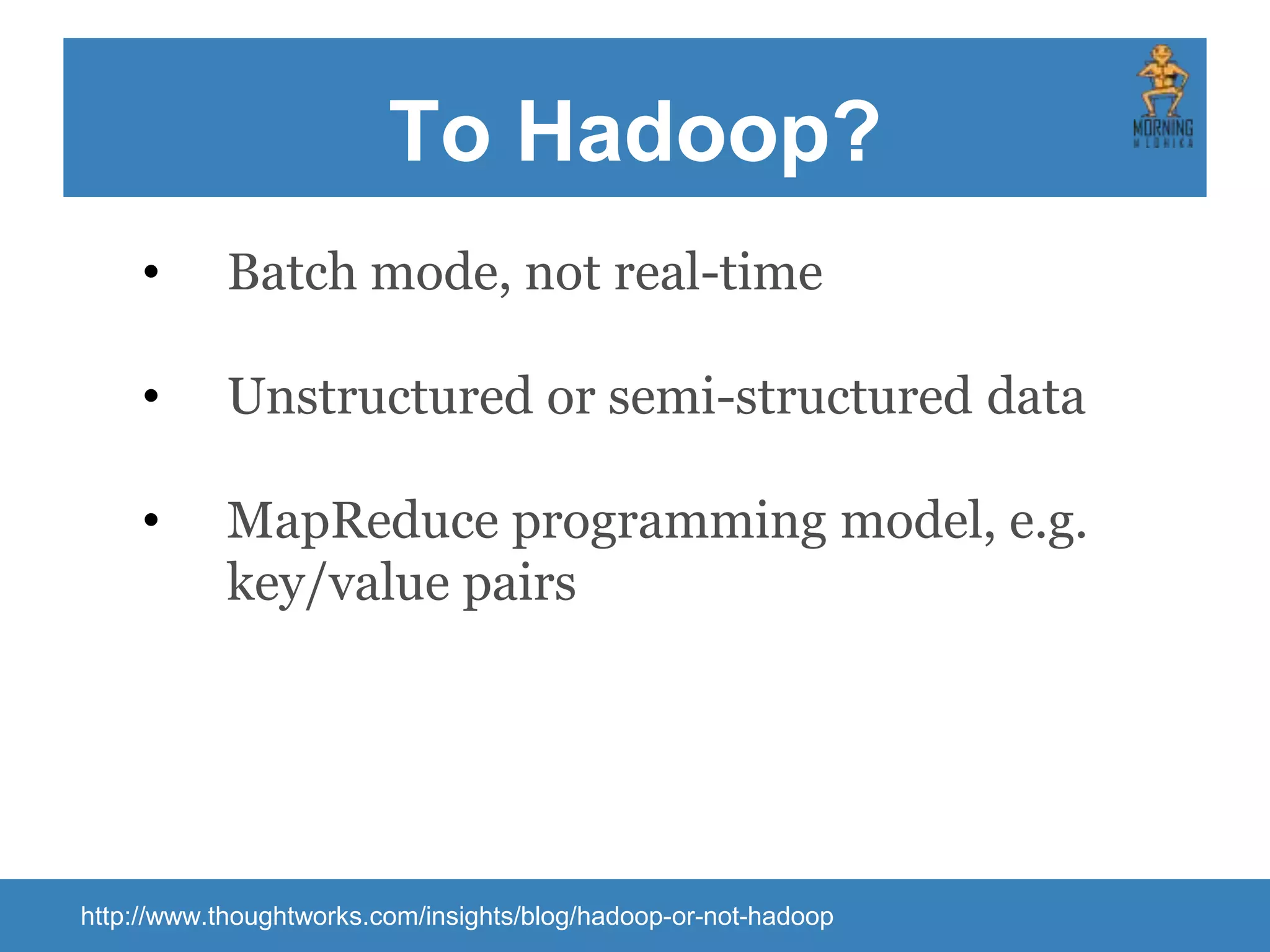

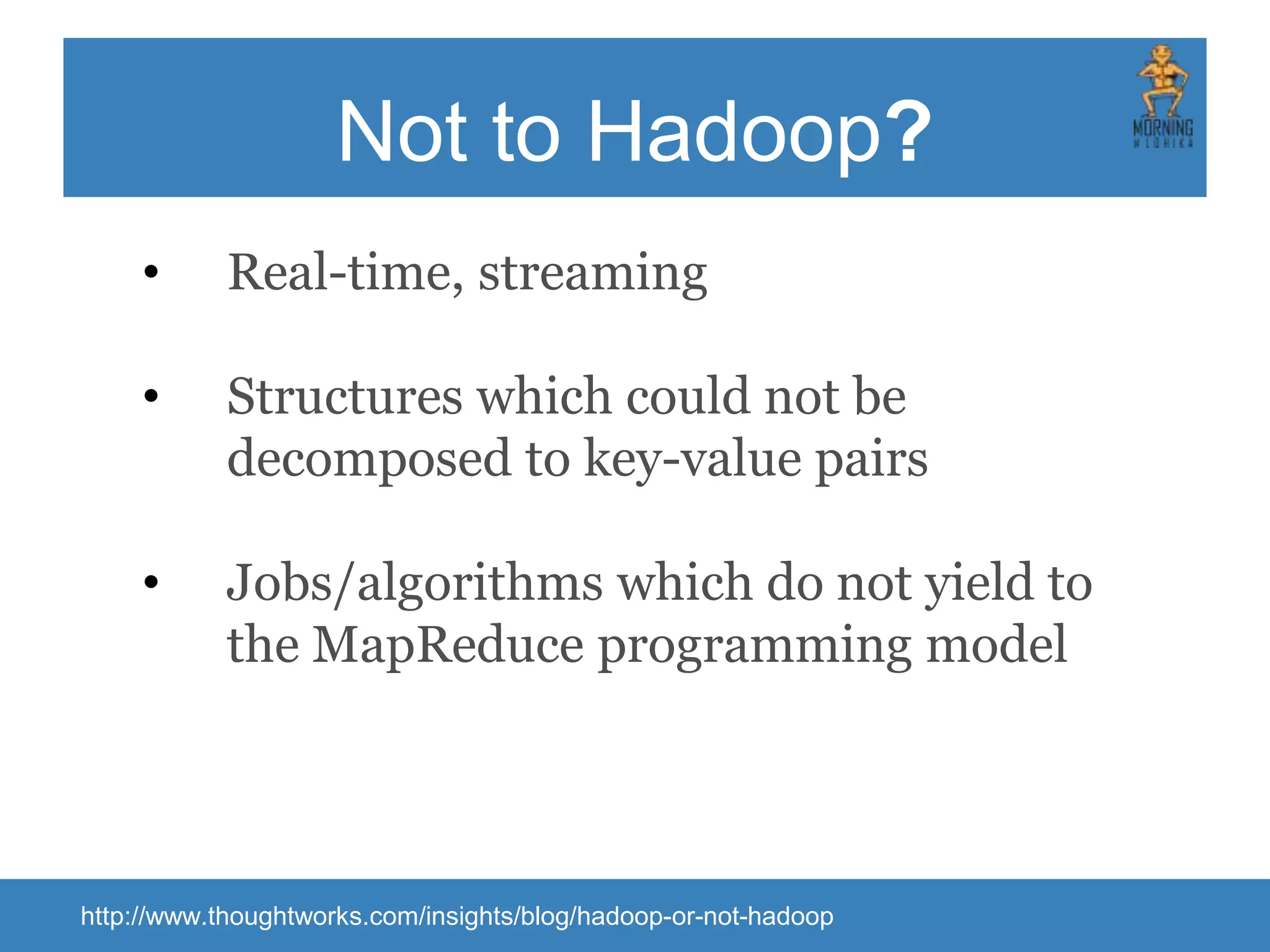

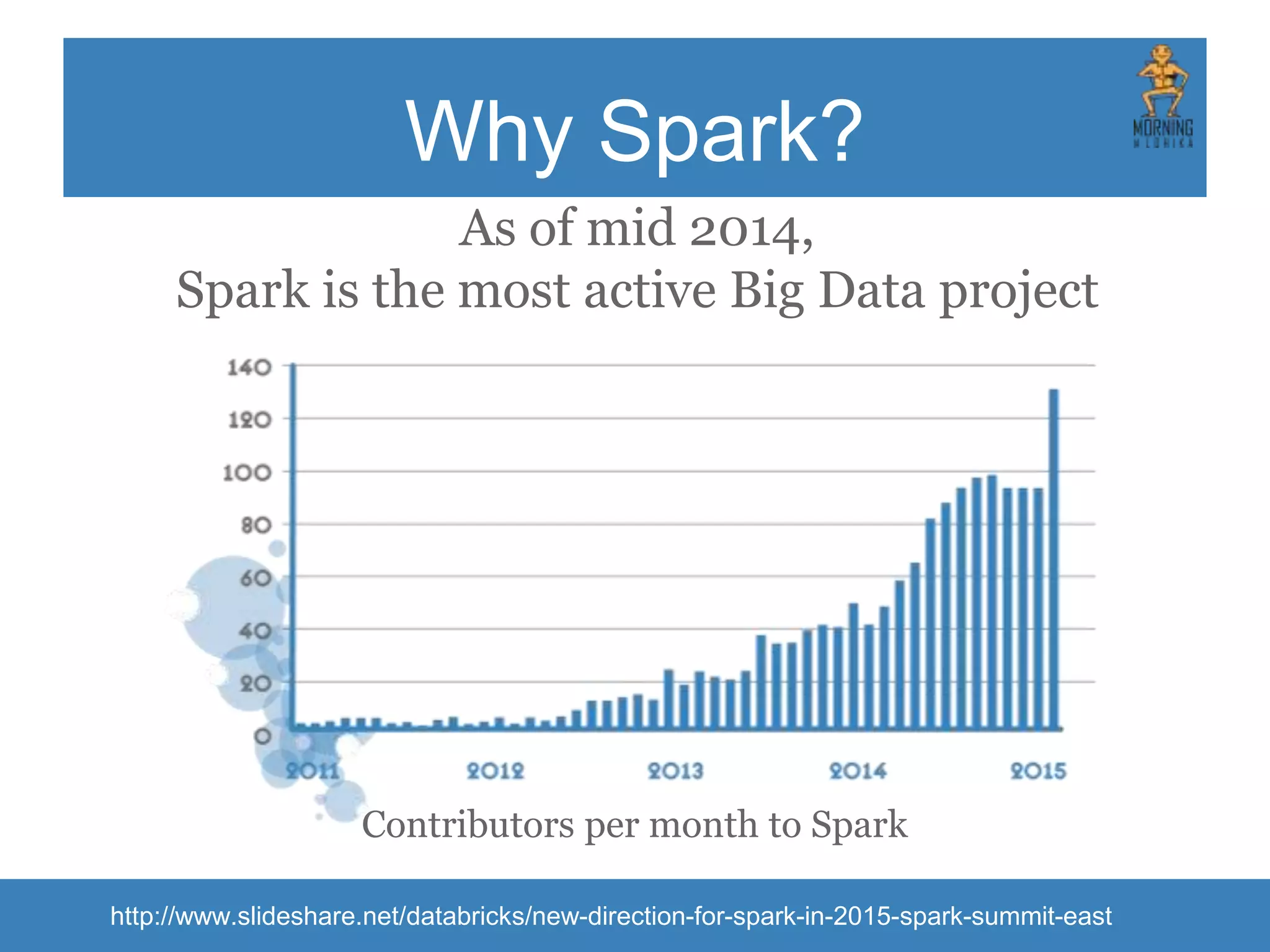

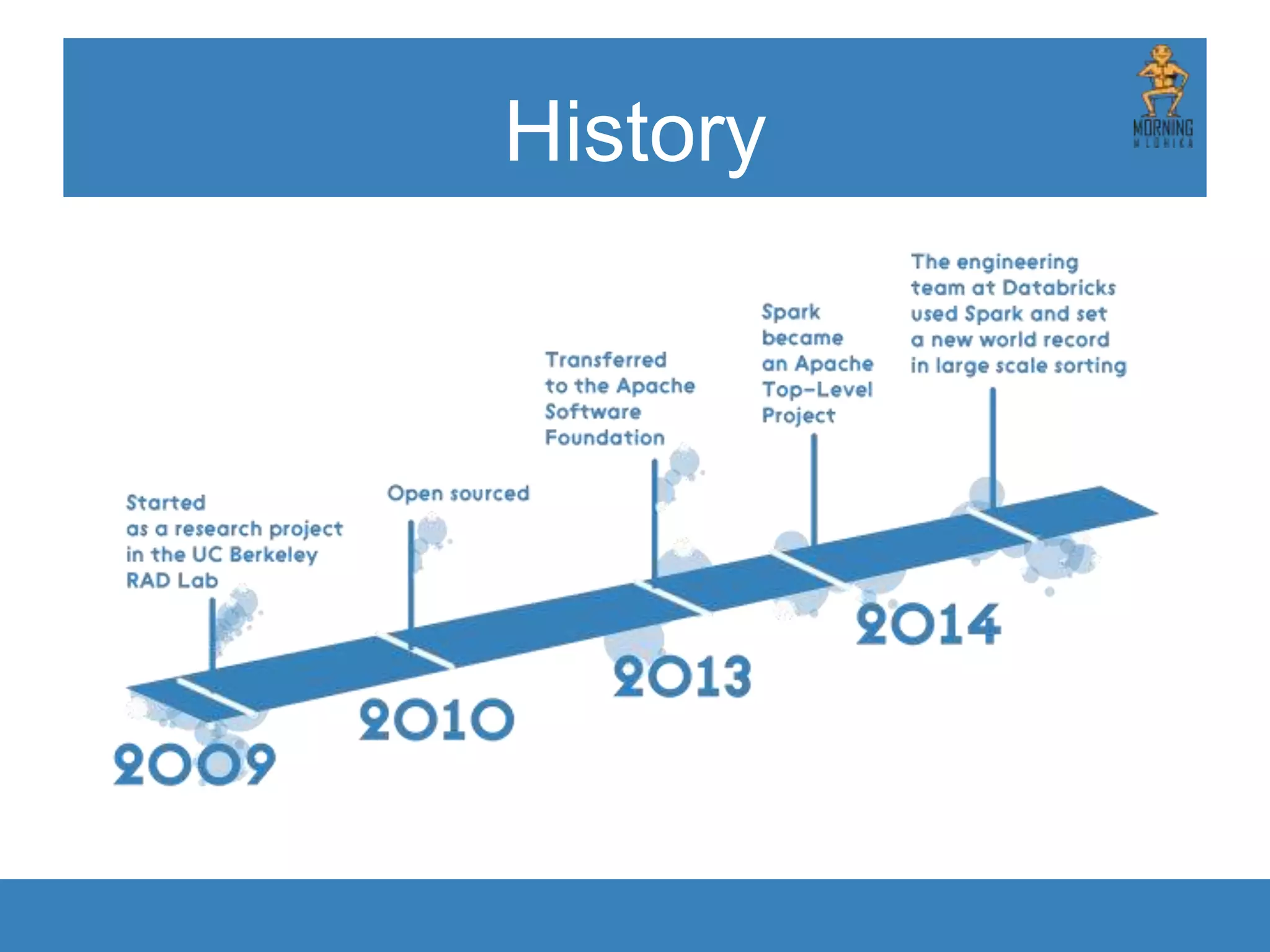

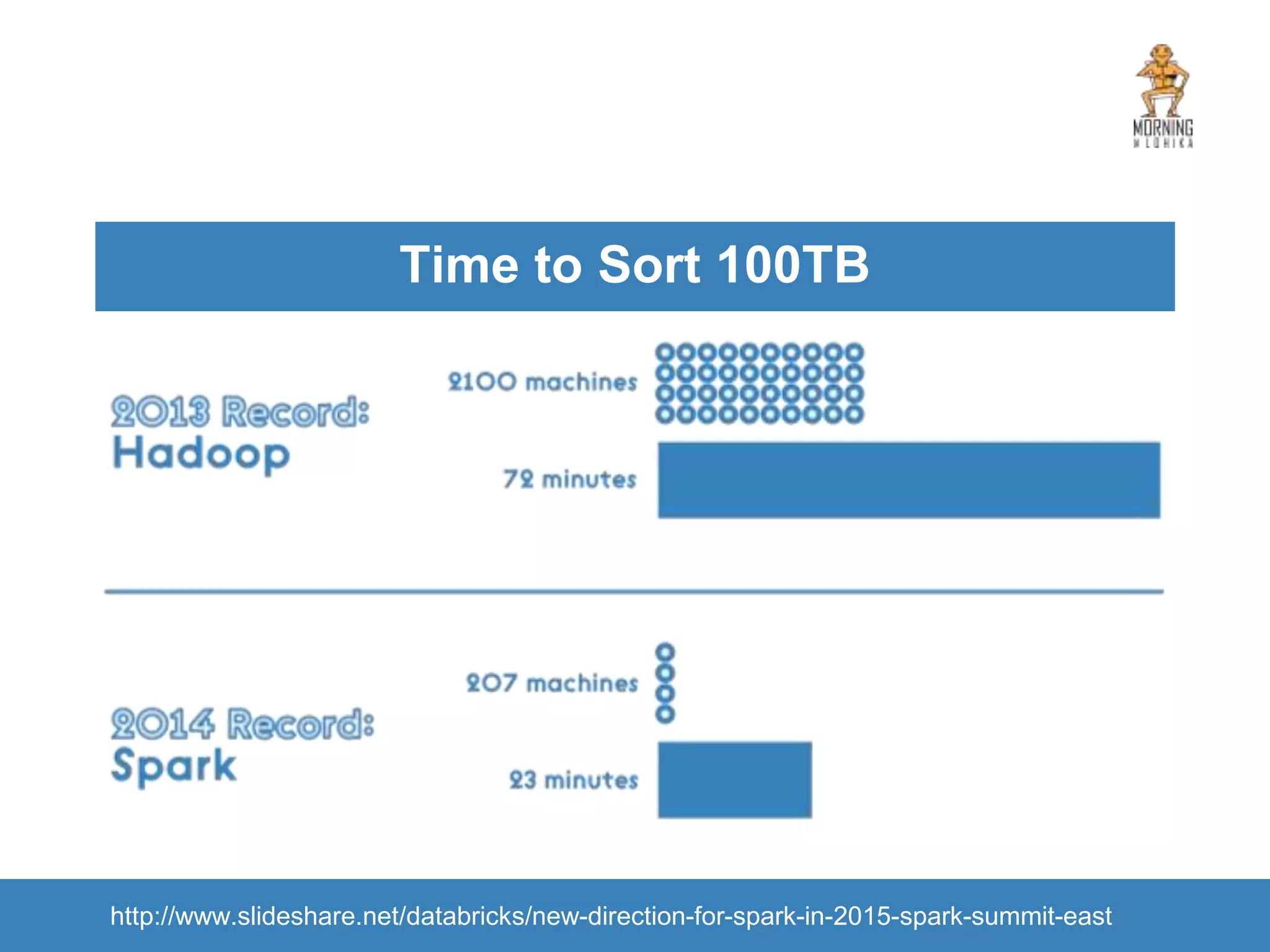

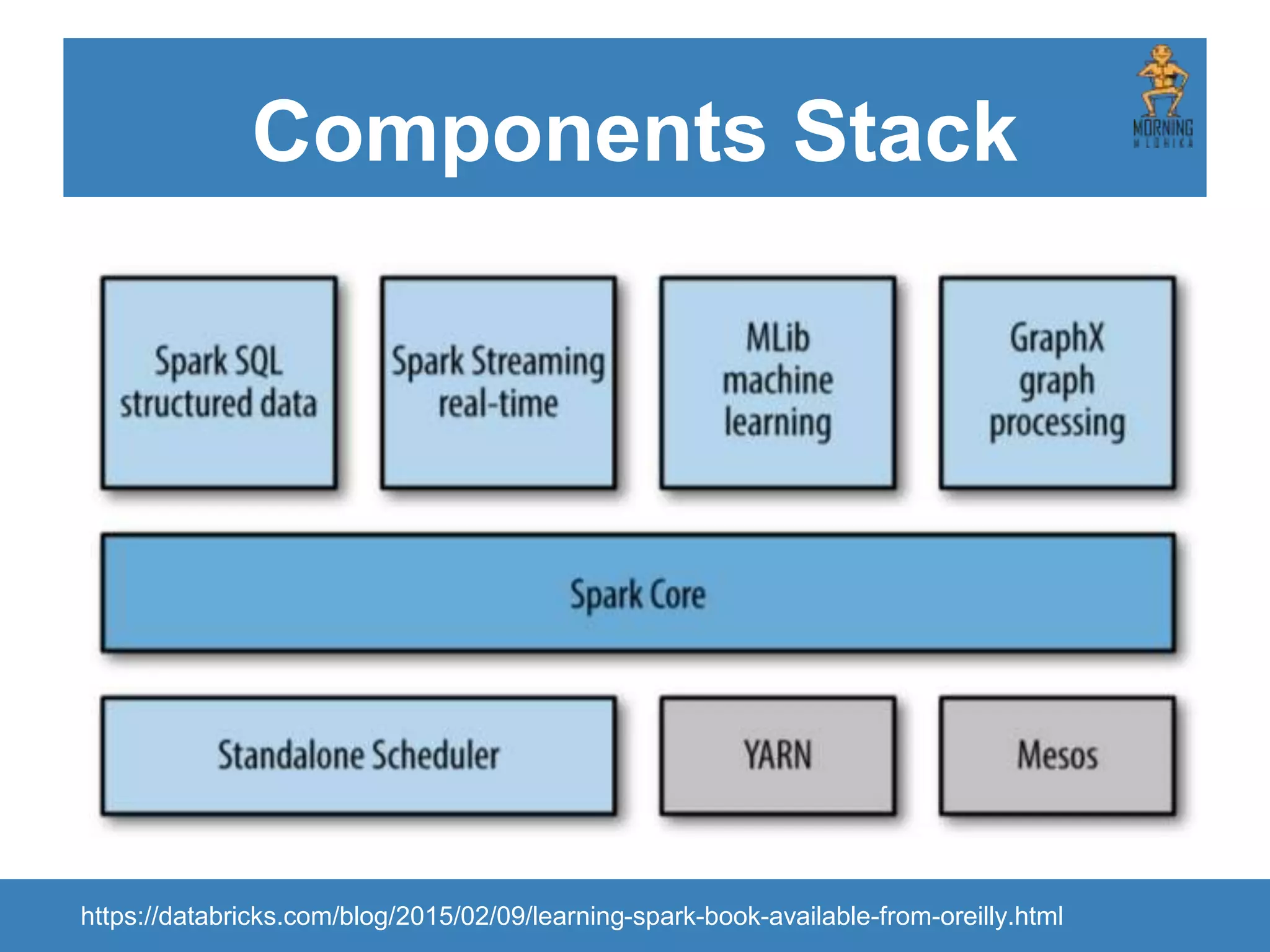

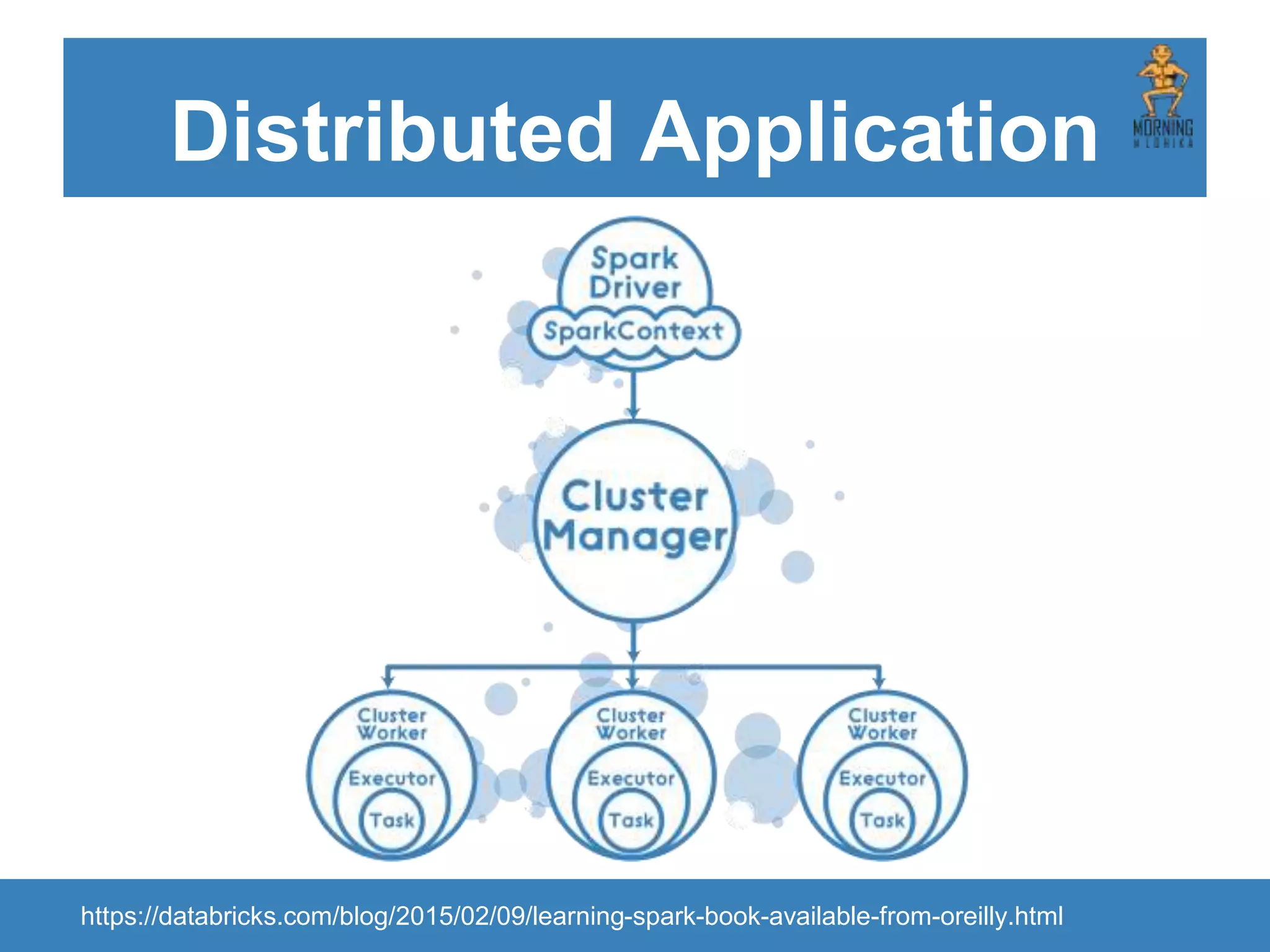

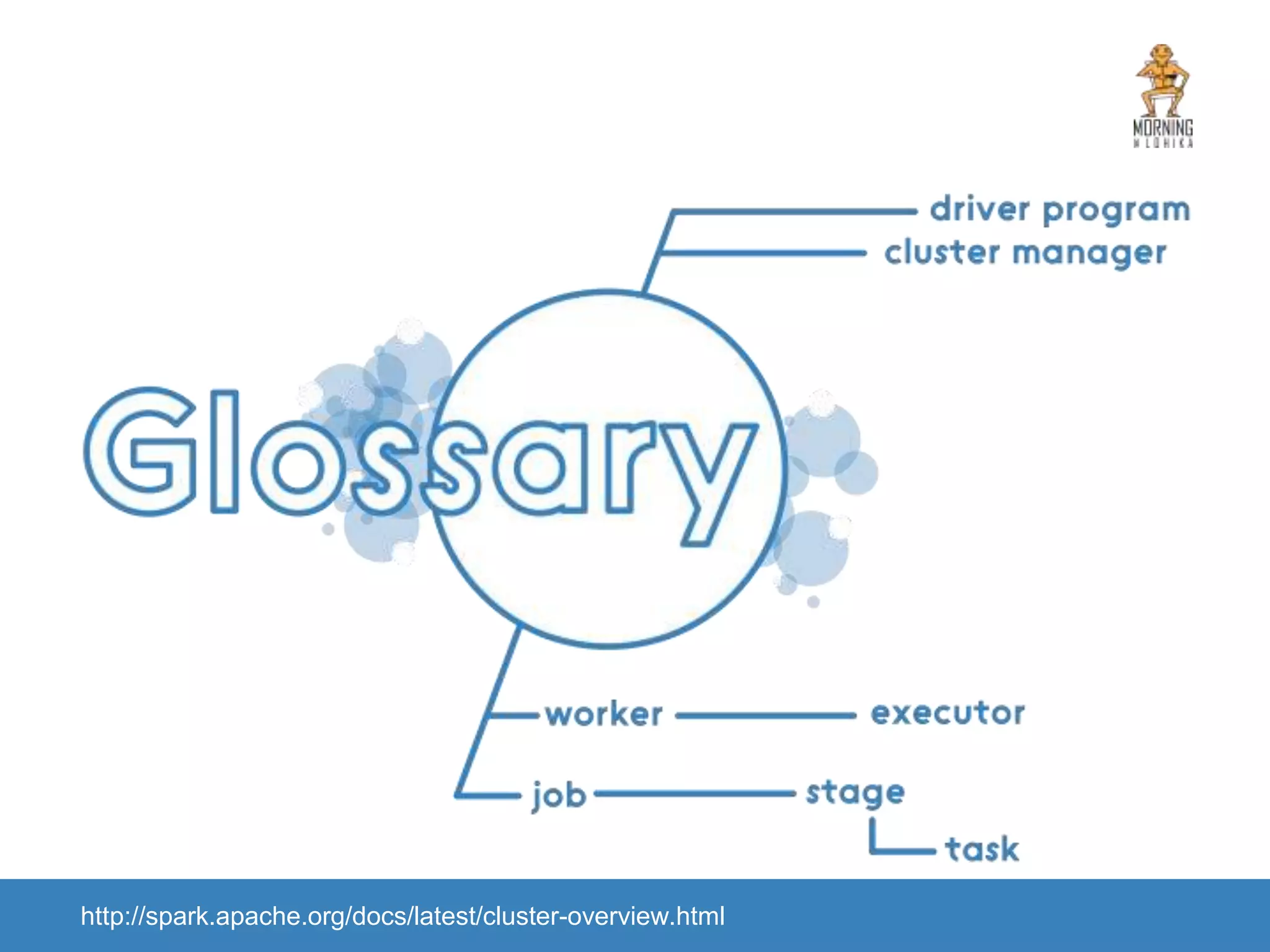

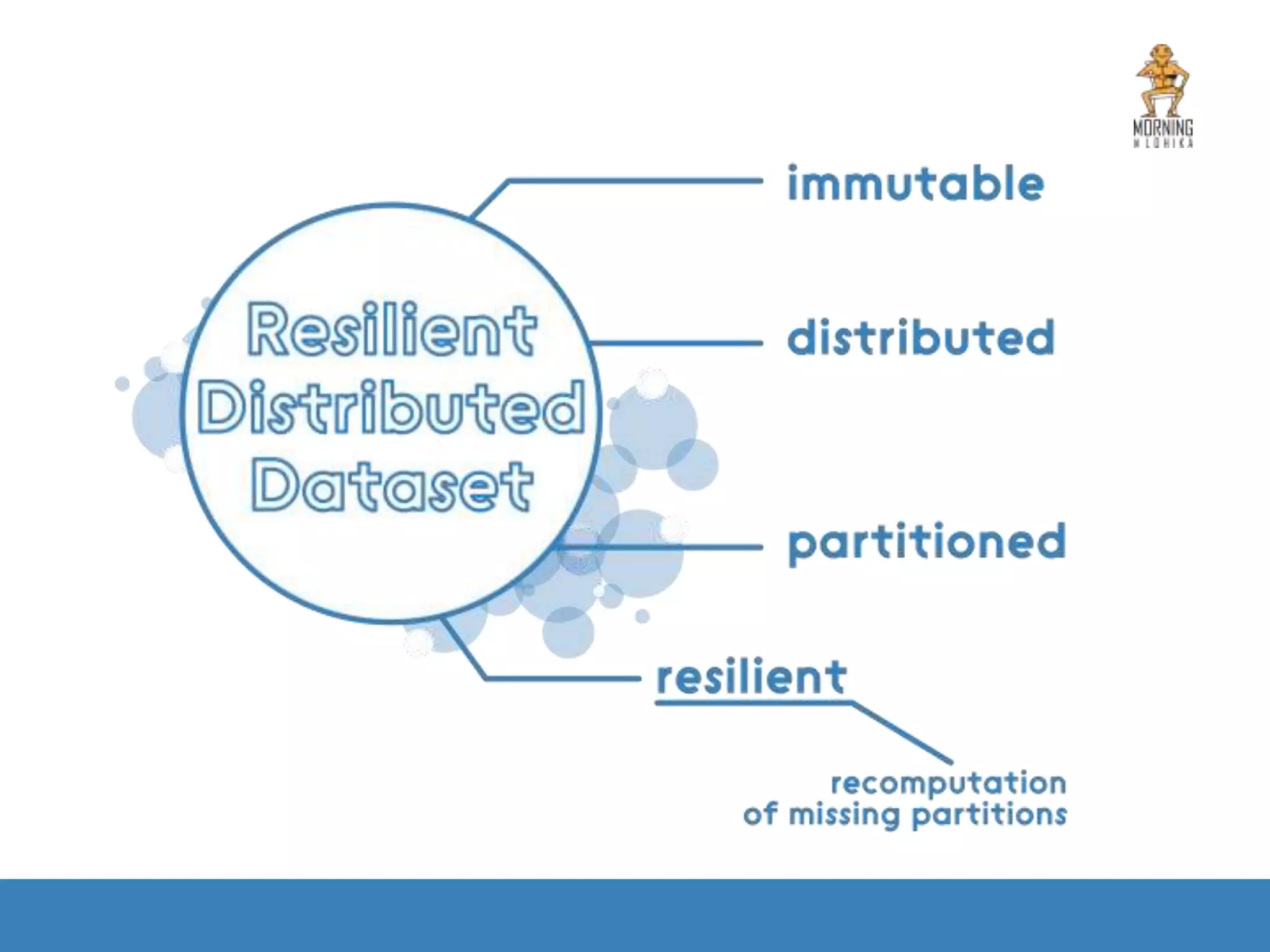

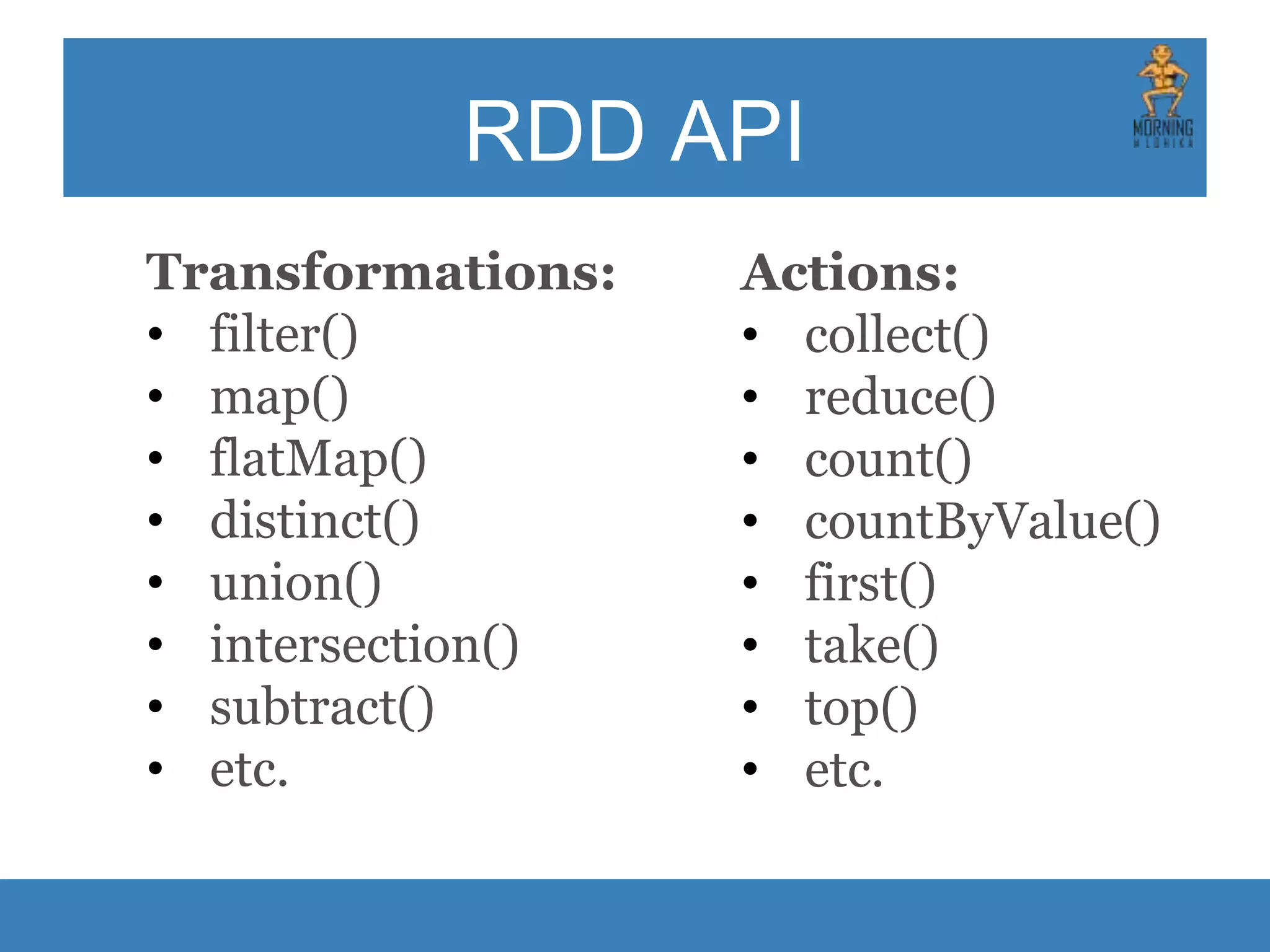

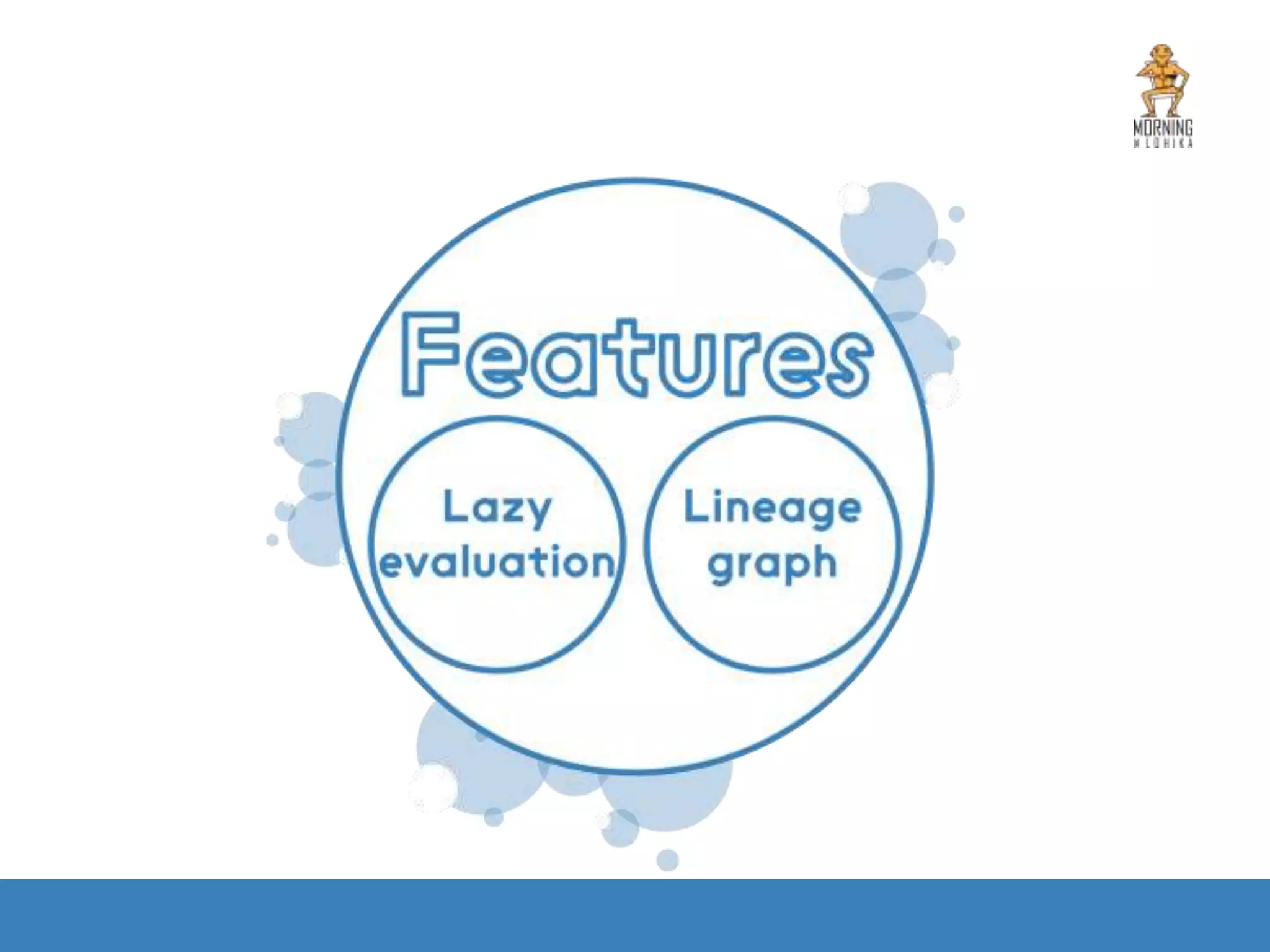

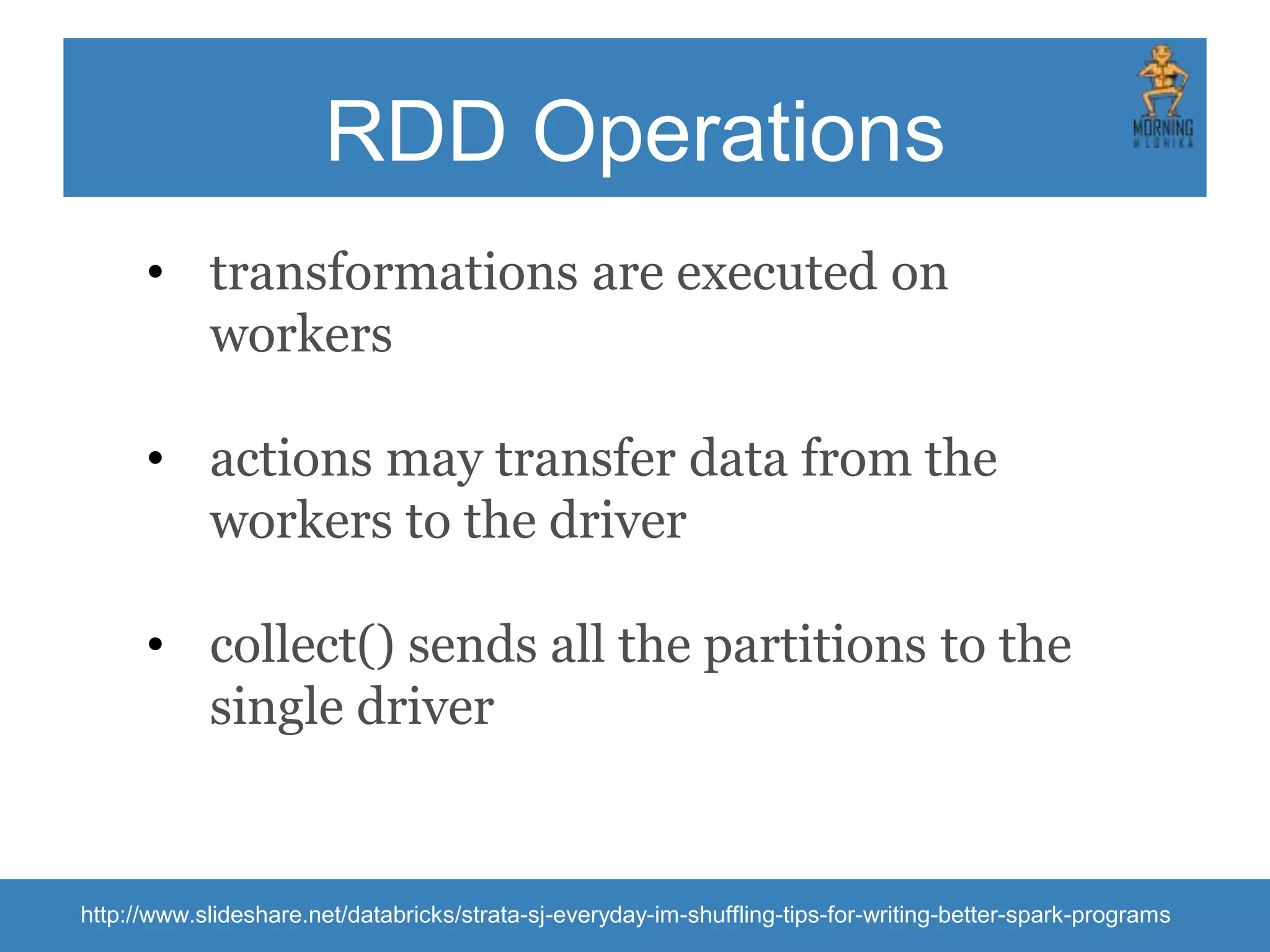

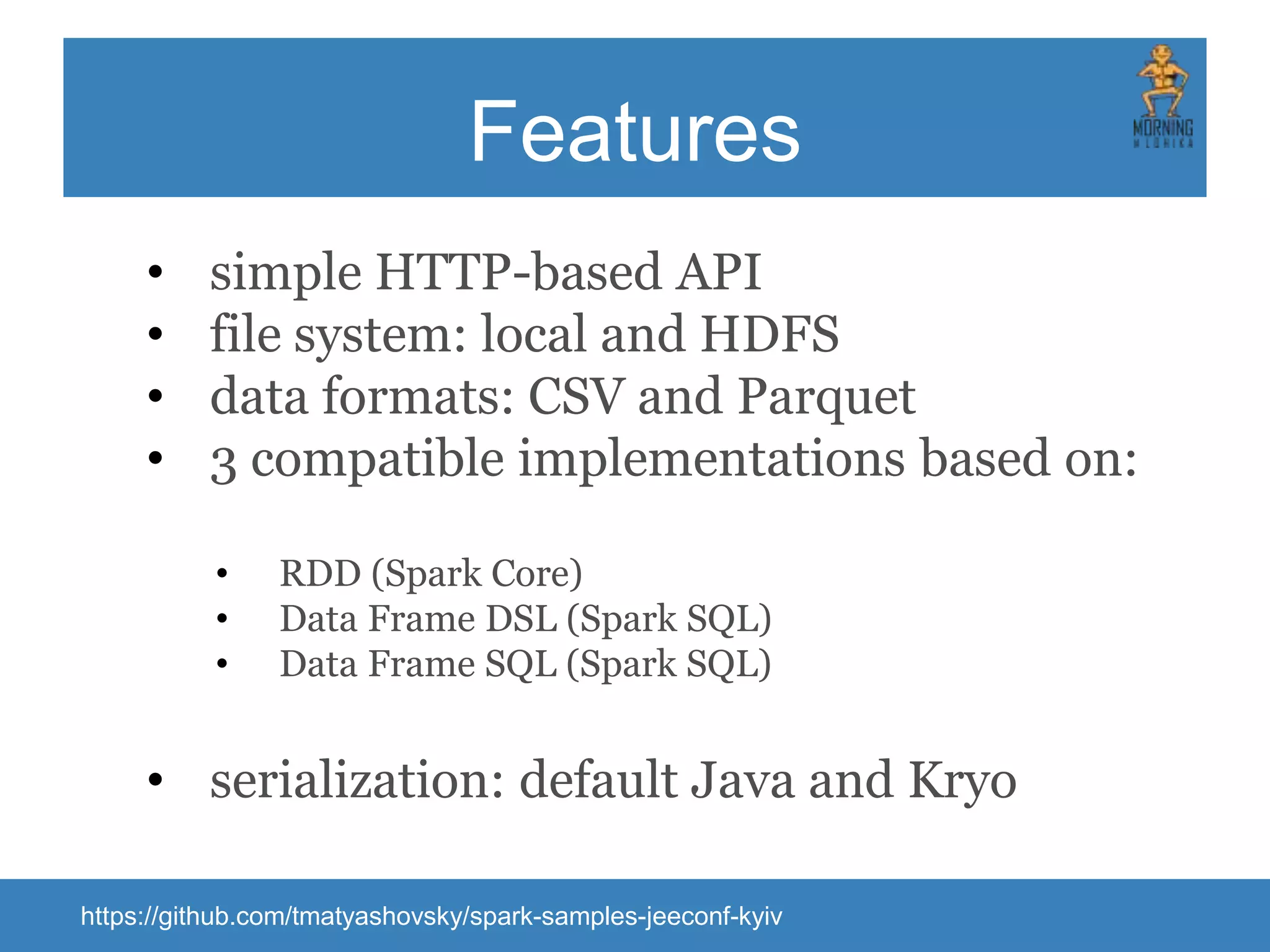

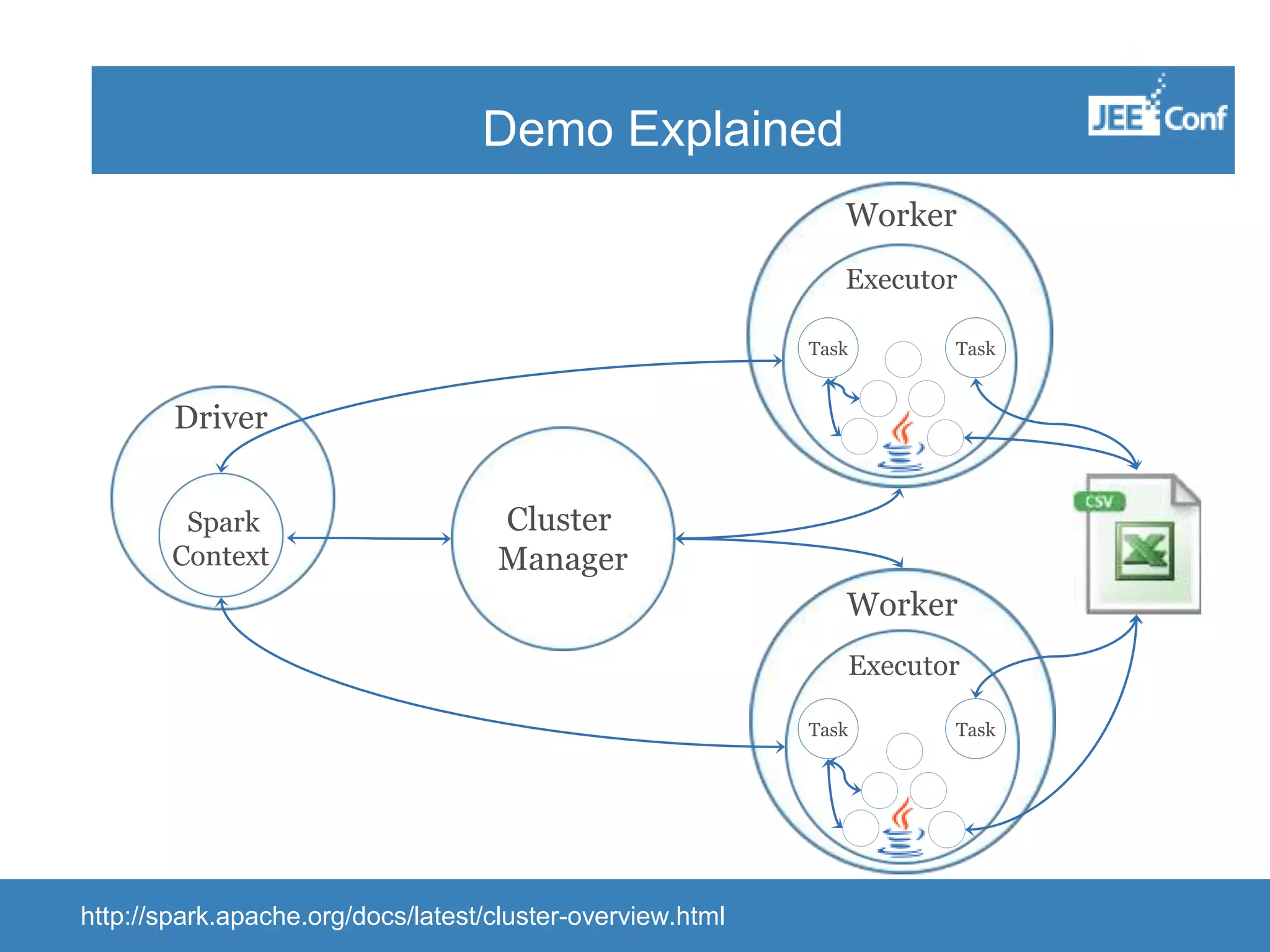

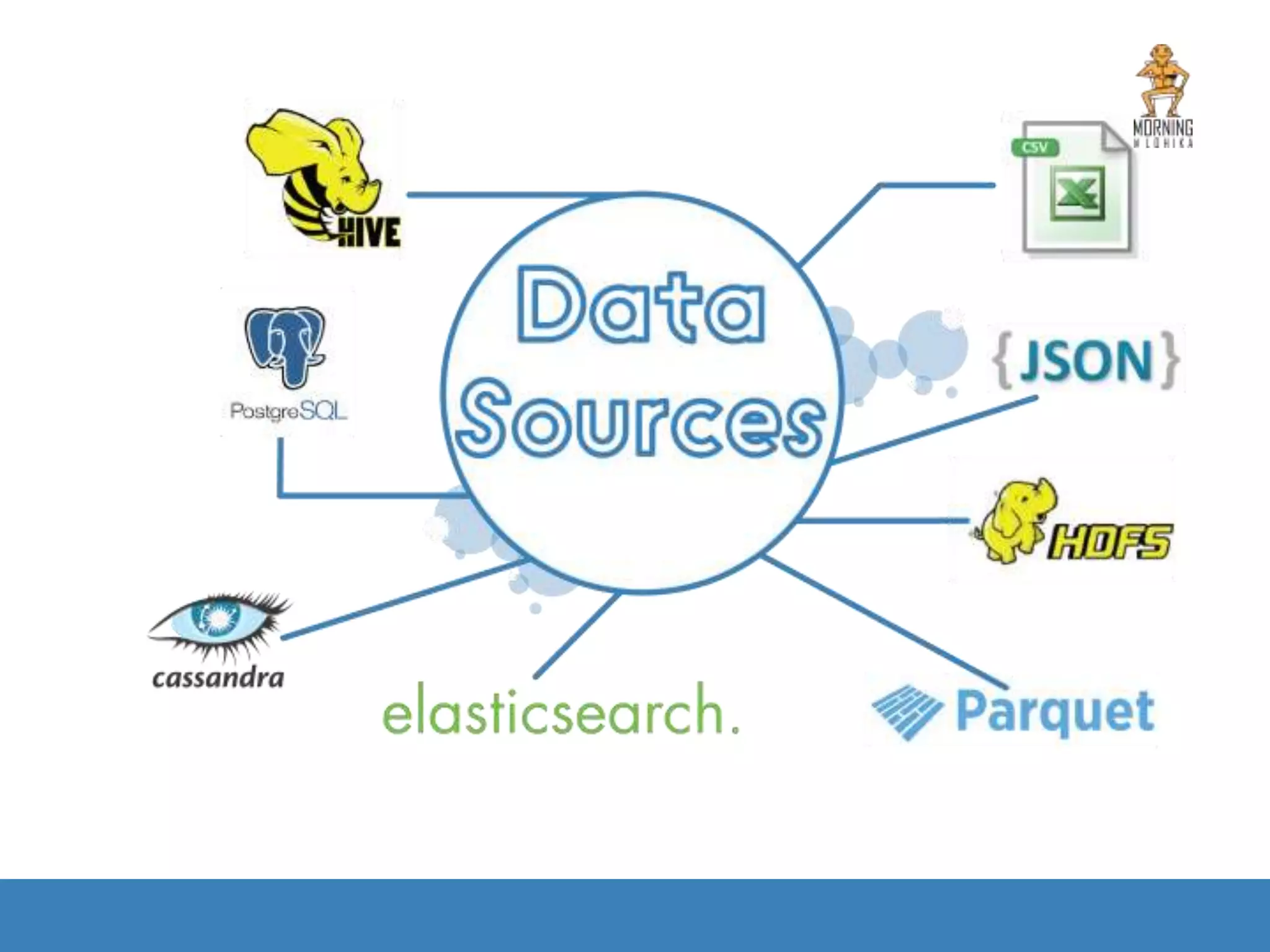

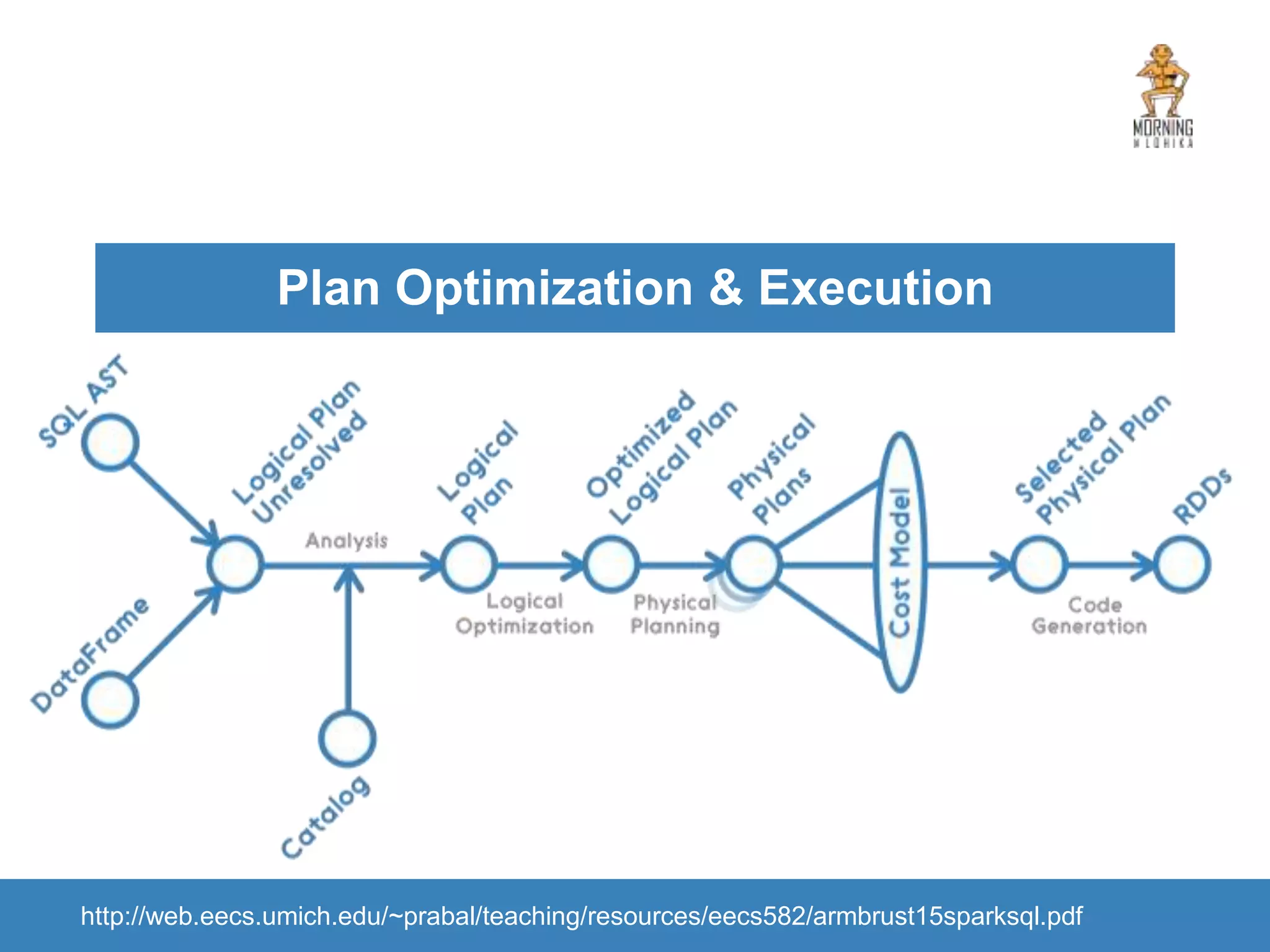

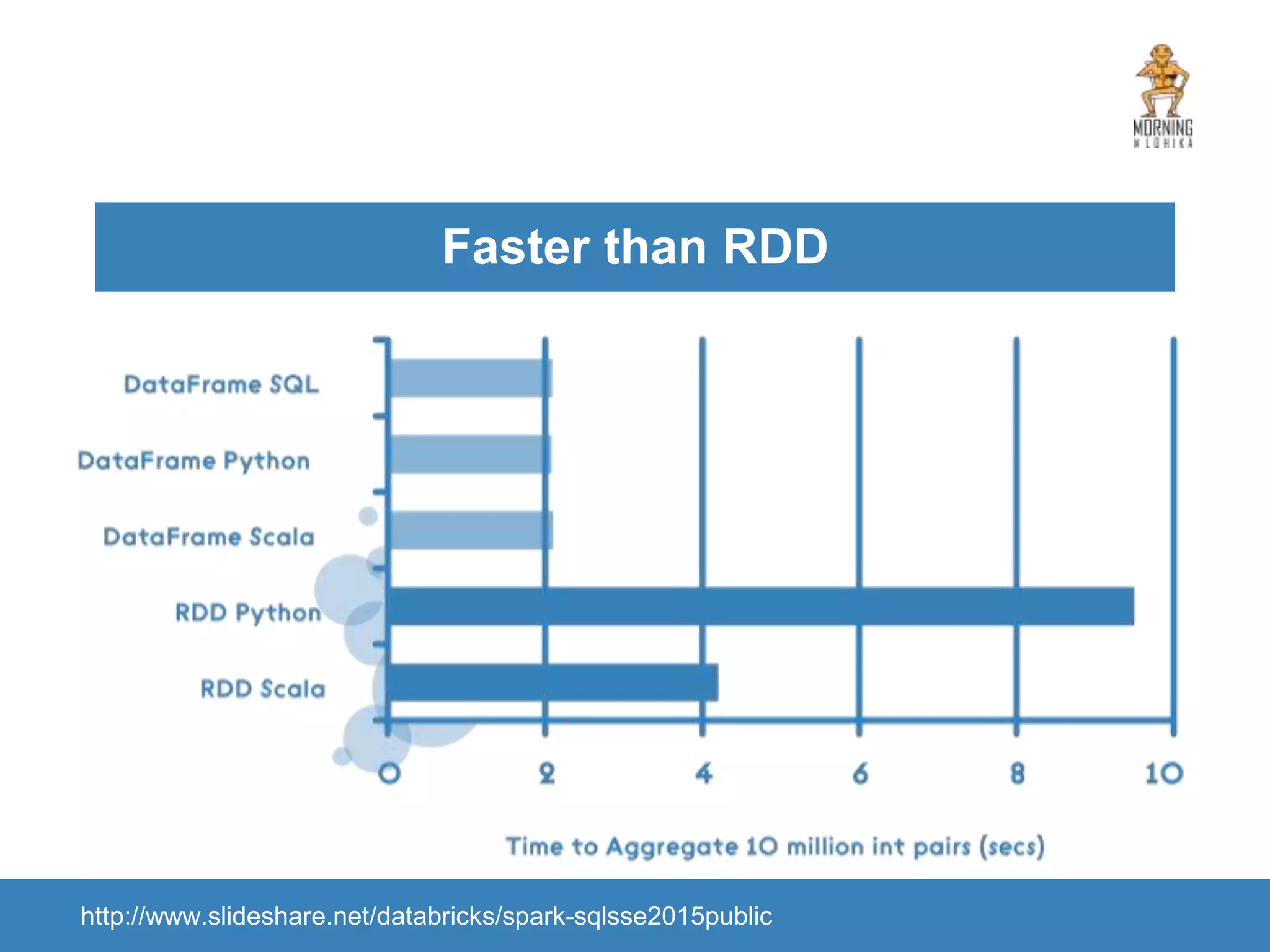

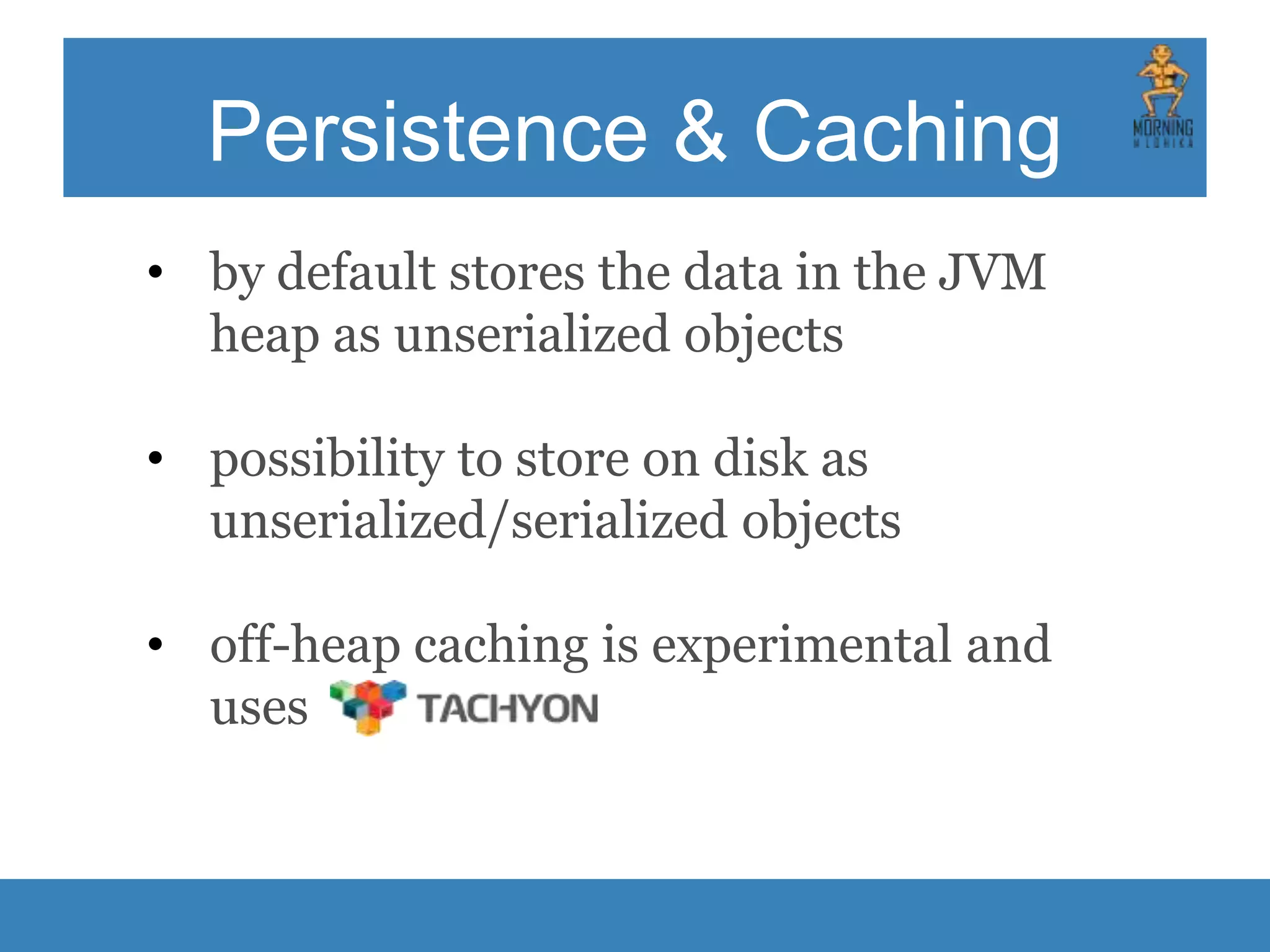

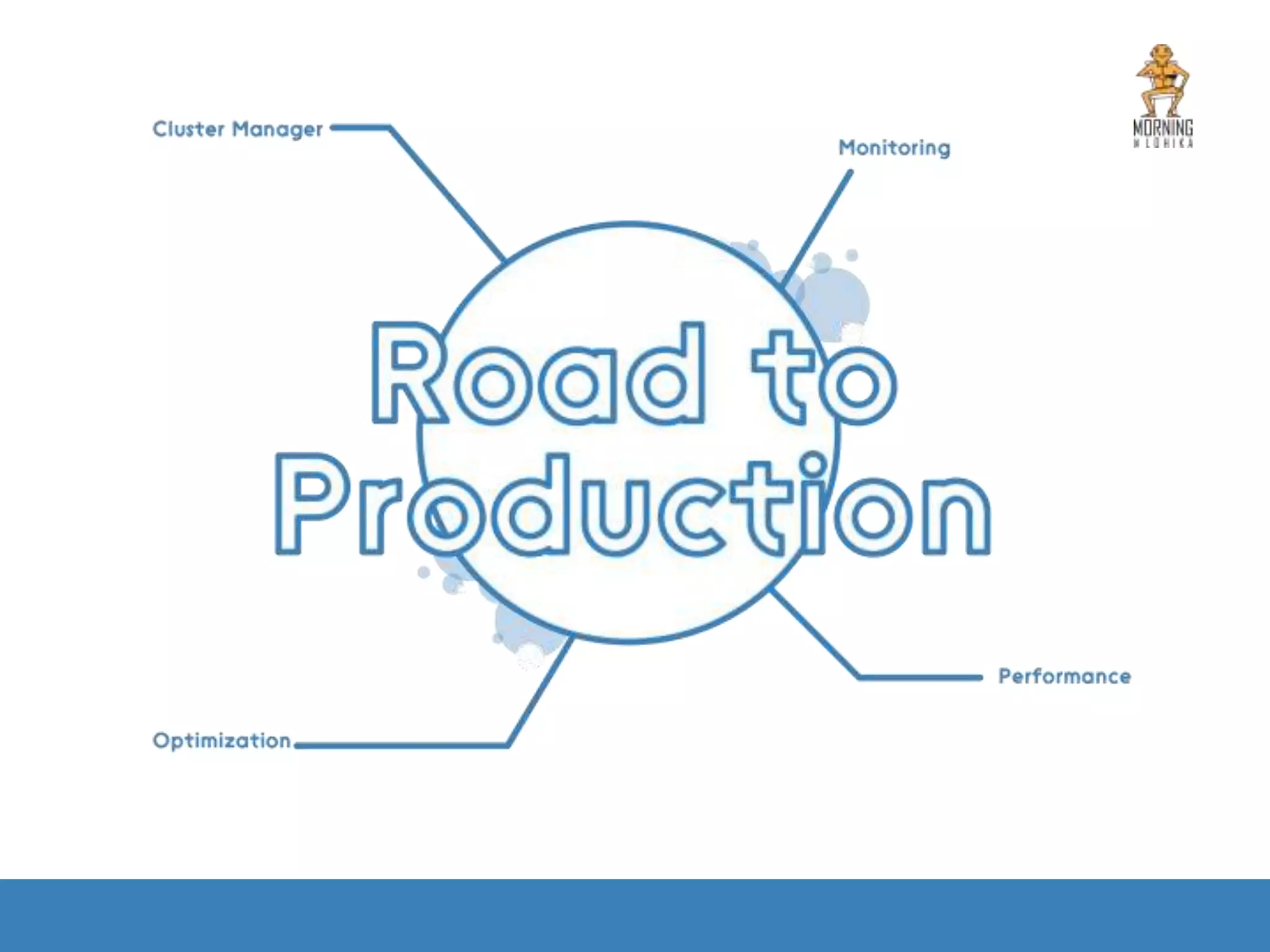

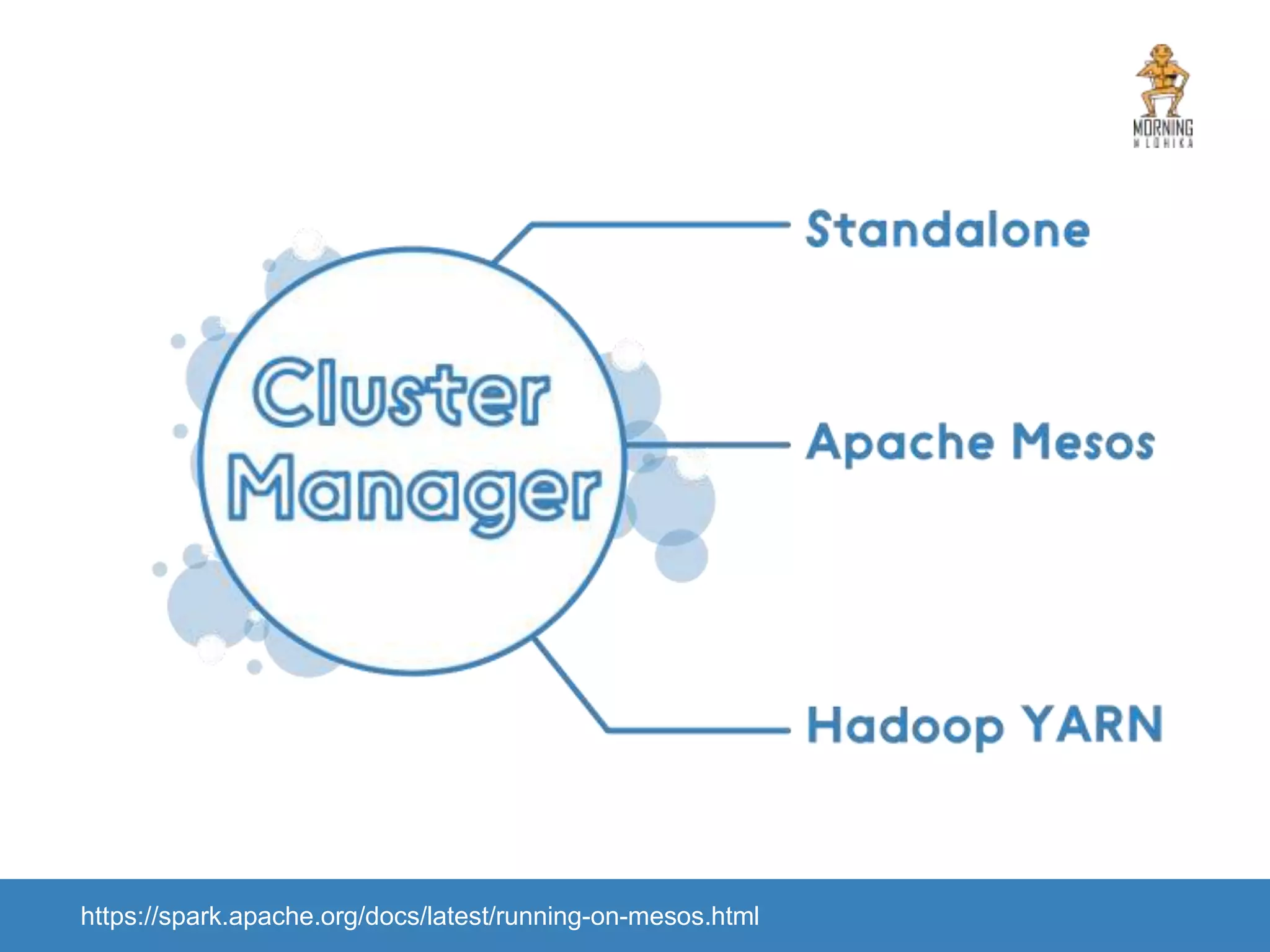

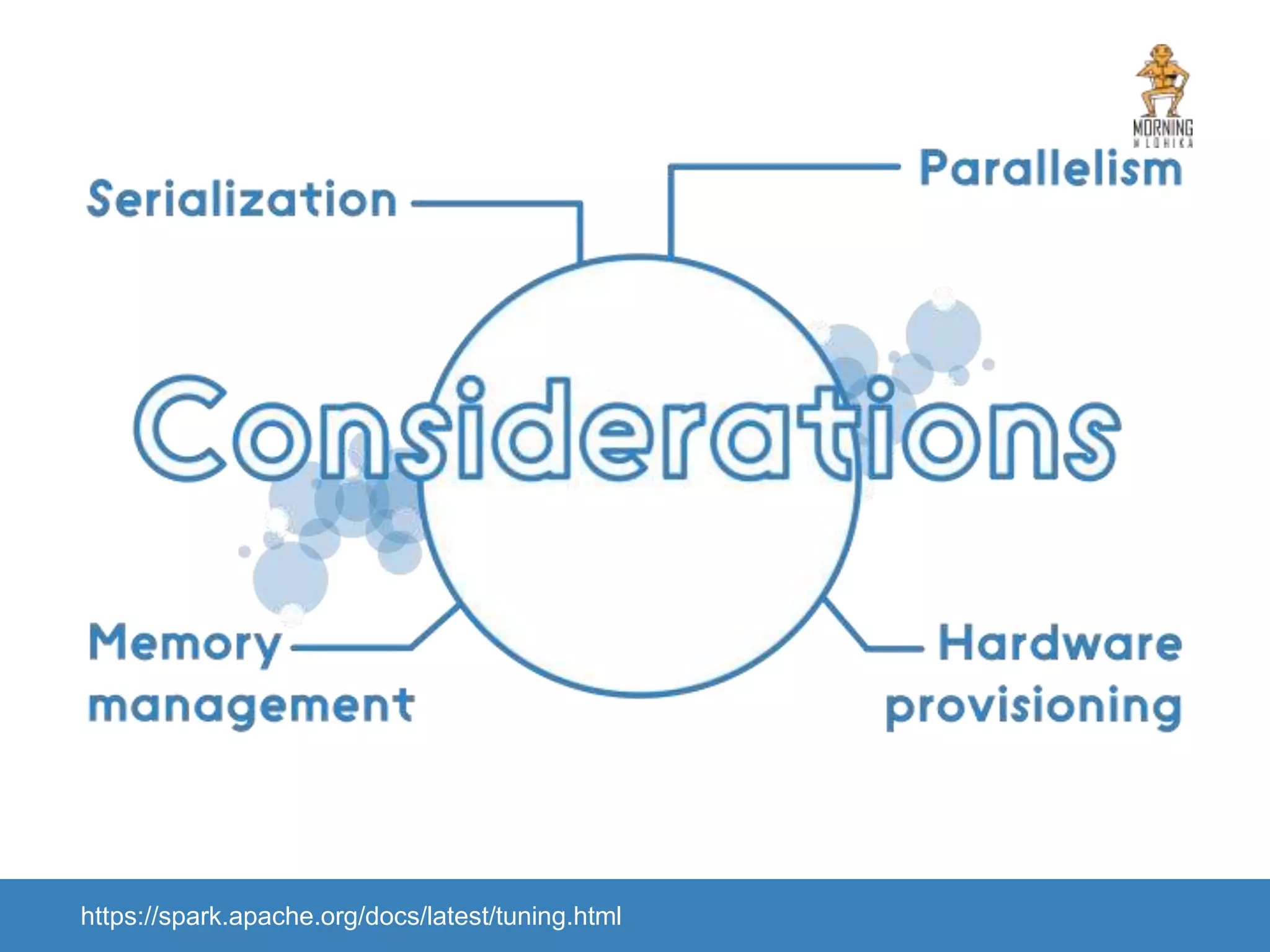

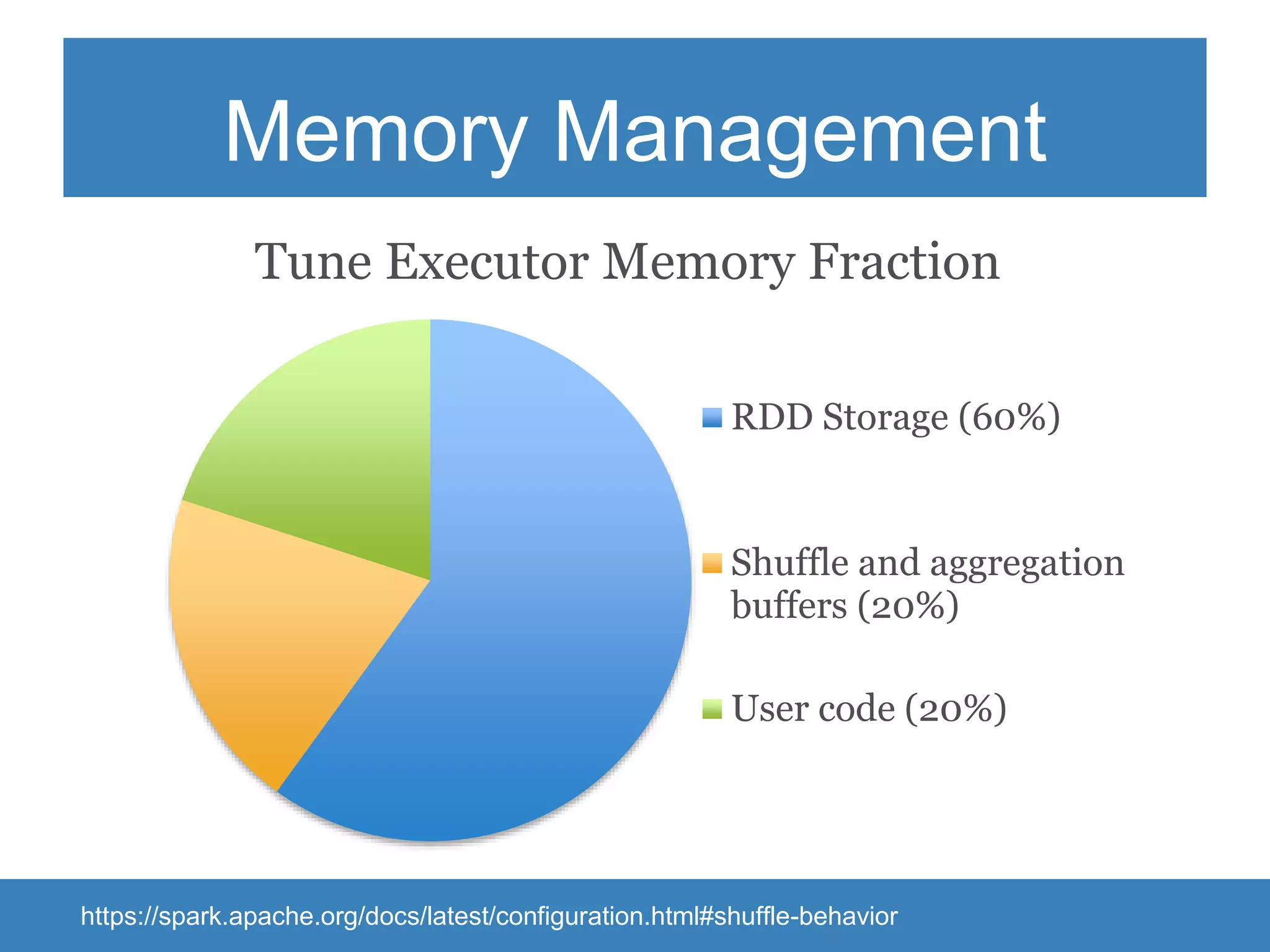

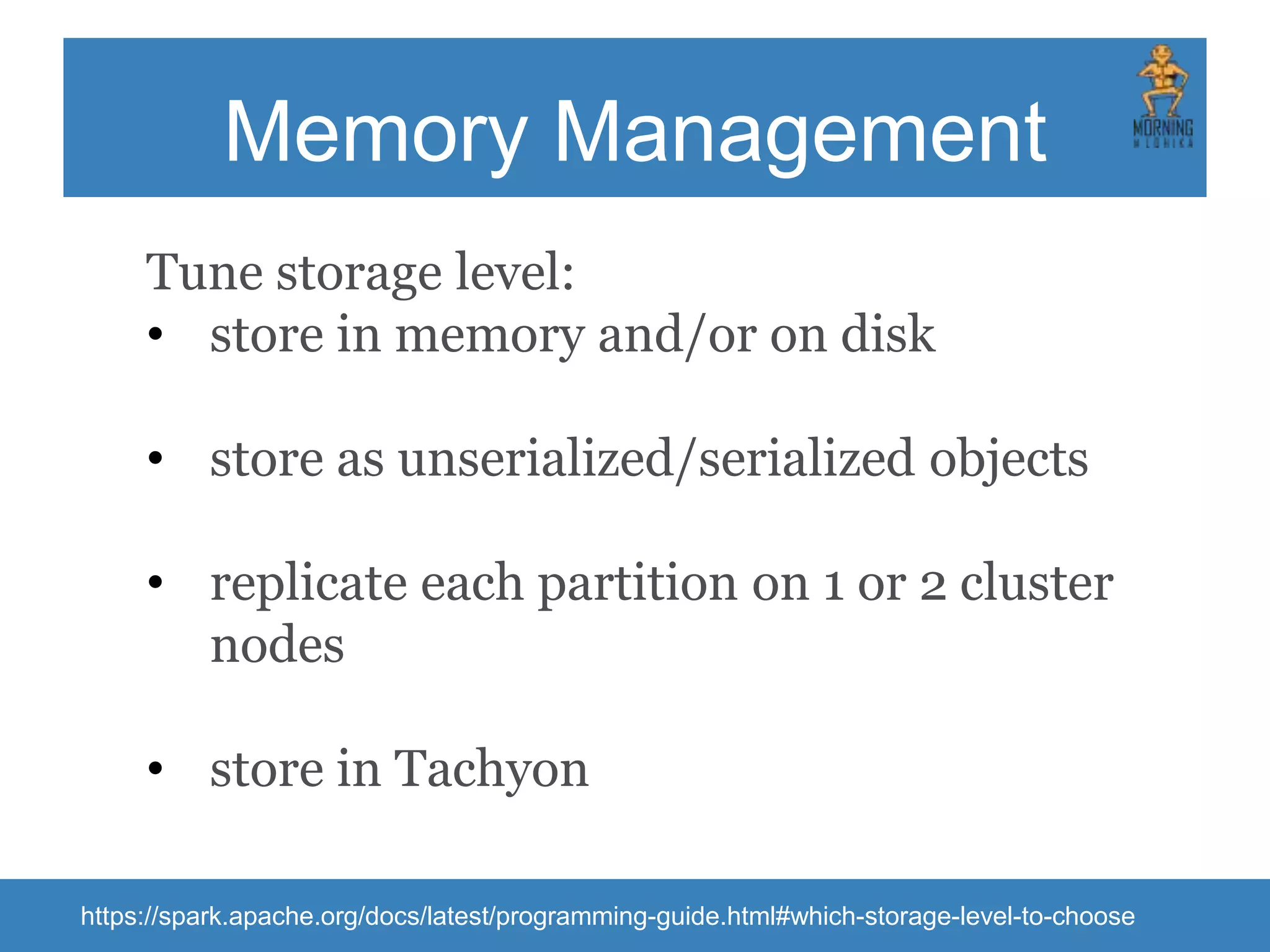

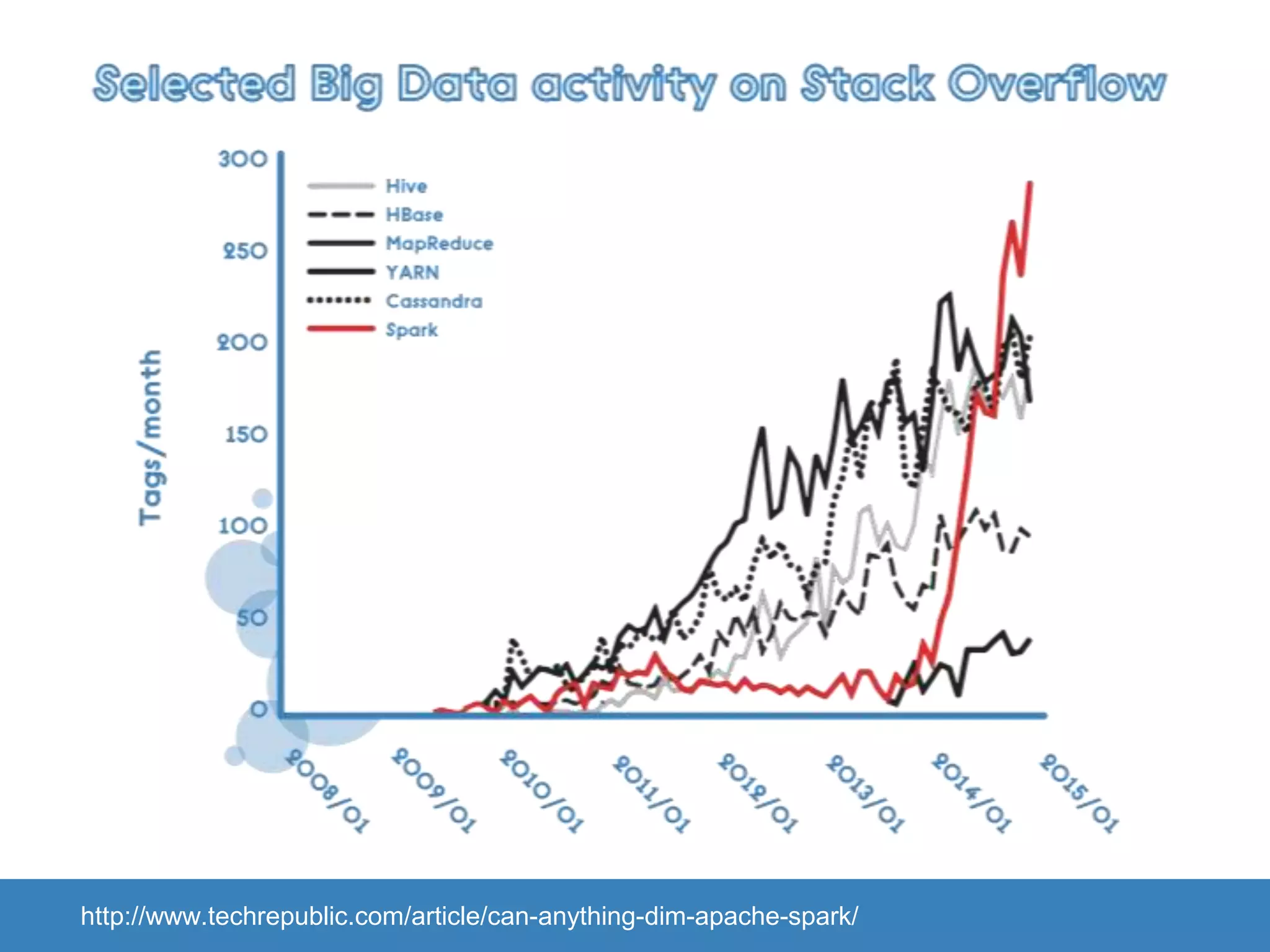

The document provides an introduction to Apache Spark, focusing on its features, capabilities, and advantages over traditional big data solutions like Hadoop. It covers key concepts, core abstractions, applications, and integrations with various technologies, emphasizing Spark's efficiency and speed due to in-memory data processing. Additionally, it highlights the importance of proper memory management and monitoring while working with Spark for large-scale data analytics.