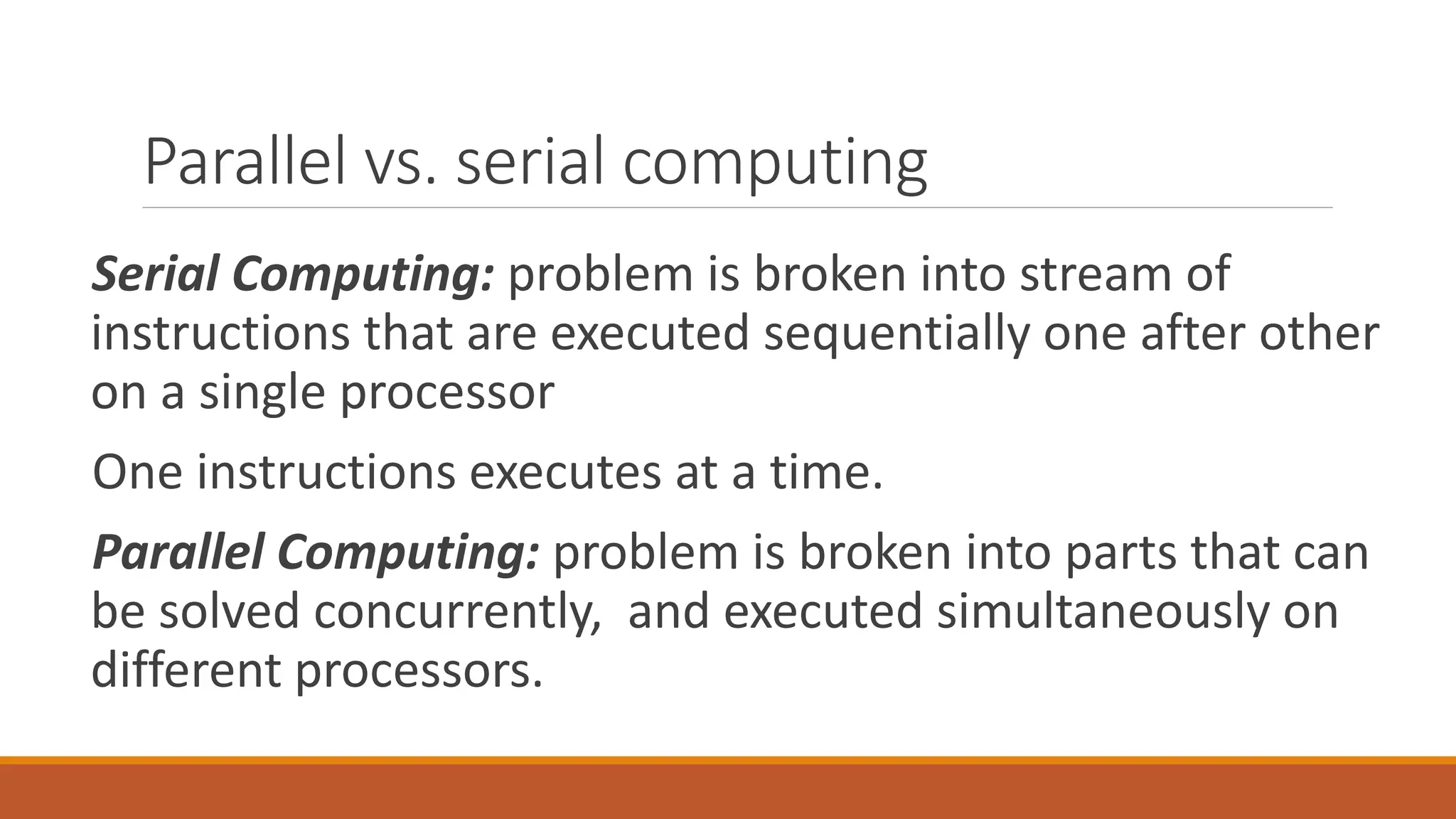

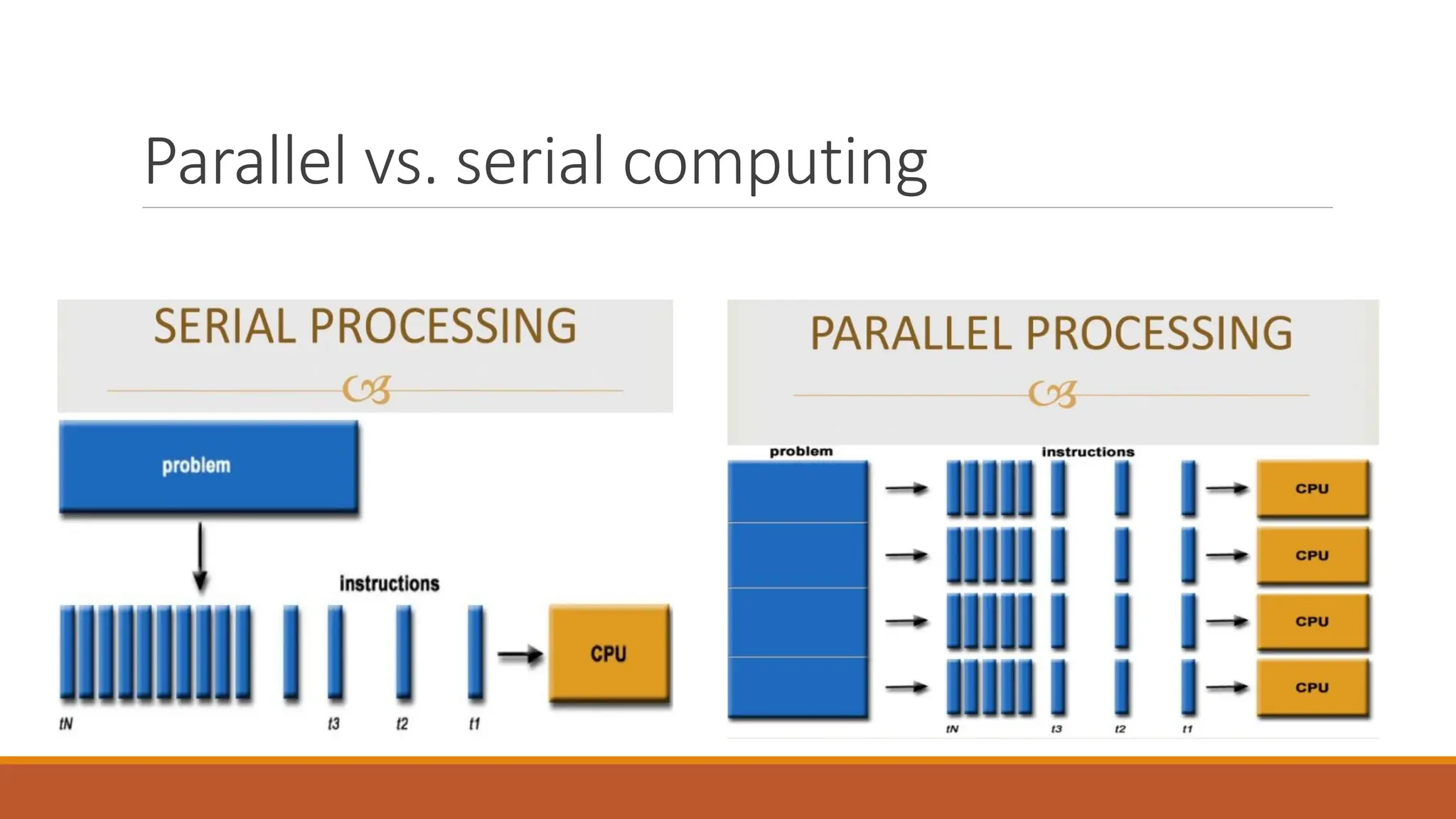

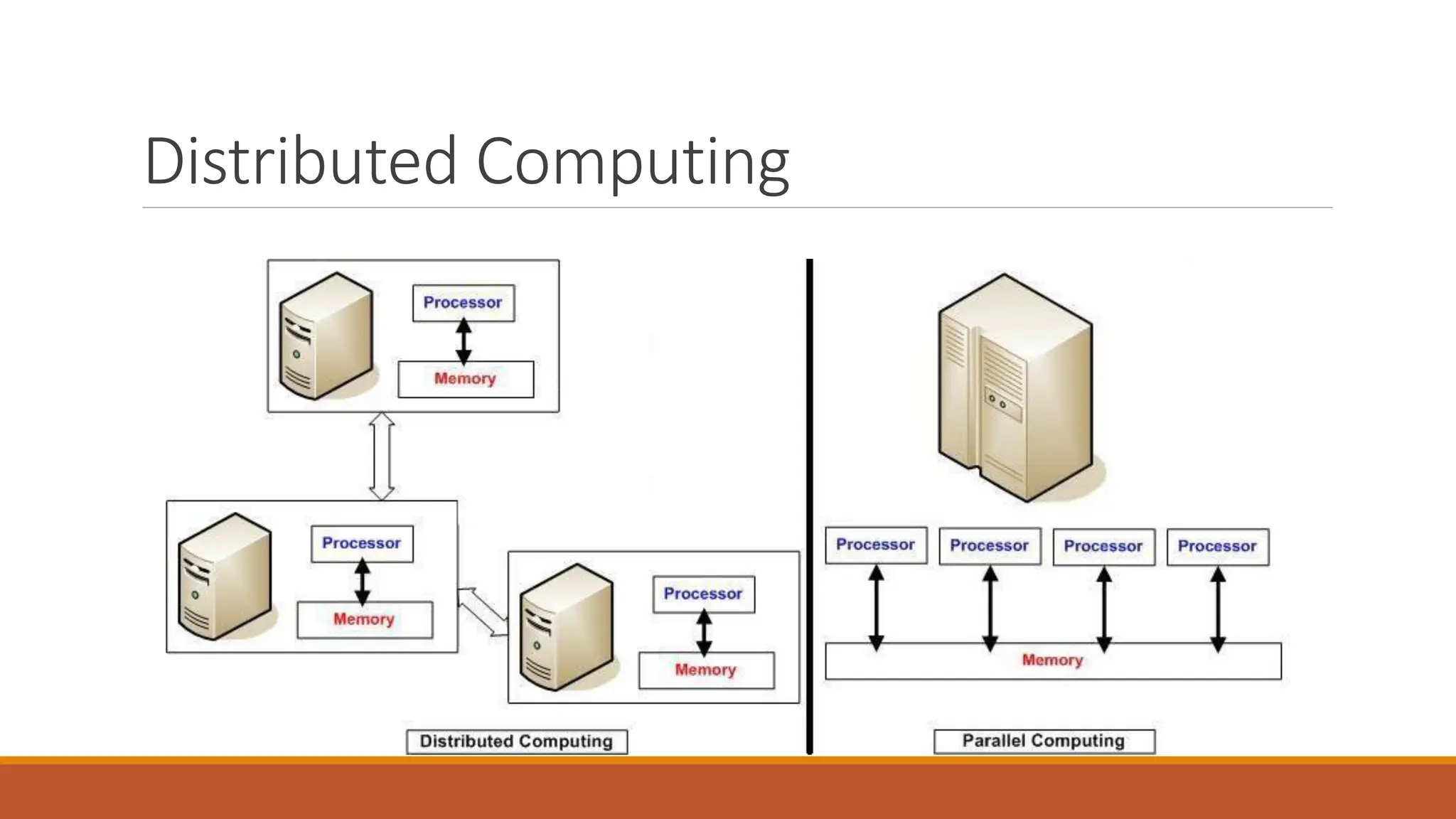

Parallel and distributed computing systems use multiple processors or computers simultaneously to solve large computational problems faster than a single machine could. Parallel computing breaks a problem into parts that are solved concurrently on different processors, while distributed computing uses multiple independent computers that coordinate by message passing and appear to users as a single system. Both approaches improve scalability, make it possible to solve problems too large for one computer, and provide the throughput needed to serve many users concurrently.
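The idea of breaking a problem into parts that are solved concurrently can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the function names `split_into_chunks` and `parallel_sum_of_squares` and the choice of a sum of squares as the workload are assumptions made for this example. It uses a thread pool from the standard library to keep the sketch simple. For CPU-bound work in CPython, a process pool (`concurrent.futures.ProcessPoolExecutor`) would usually be the better fit.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(data, num_chunks):
    """Split data into num_chunks contiguous slices of near-equal size."""
    size, remainder = divmod(len(data), num_chunks)
    chunks, start = [], 0
    for i in range(num_chunks):
        # The first `remainder` chunks each take one extra element.
        end = start + size + (1 if i < remainder else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The per-worker task: solve one part of the problem independently."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, num_workers=4):
    """Decompose the problem, solve the parts concurrently, combine results."""
    chunks = split_into_chunks(data, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        # Each chunk is handed to a worker; map preserves chunk order.
        partial_sums = list(pool.map(sum_of_squares, chunks))
    # Combining the partial results is the only sequential step.
    return sum(partial_sums)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10)), num_workers=3))
```

The same decompose, compute, and combine pattern carries over to distributed computing, where the chunks would be sent to independent machines as messages rather than handed to workers in one process.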