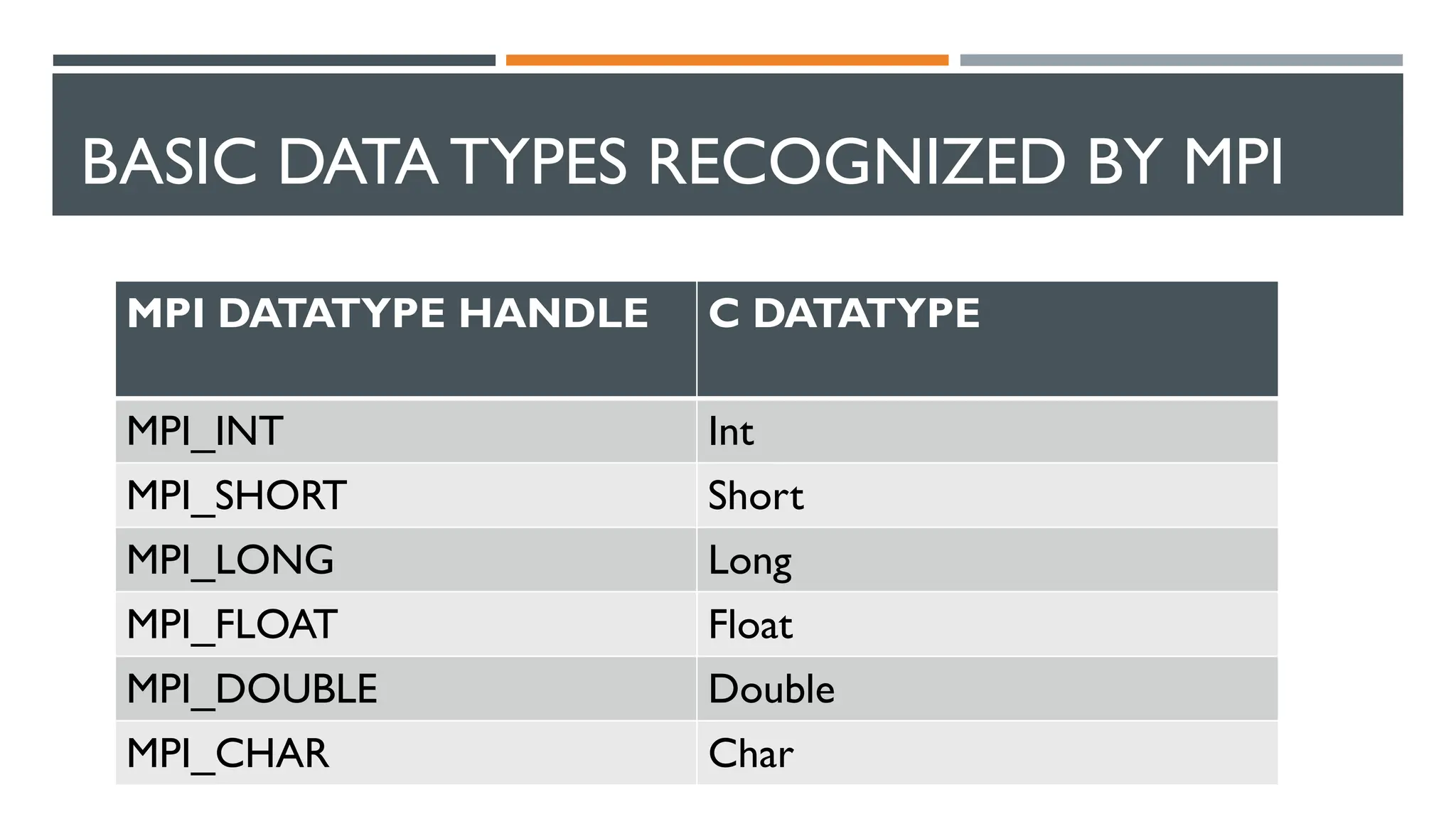

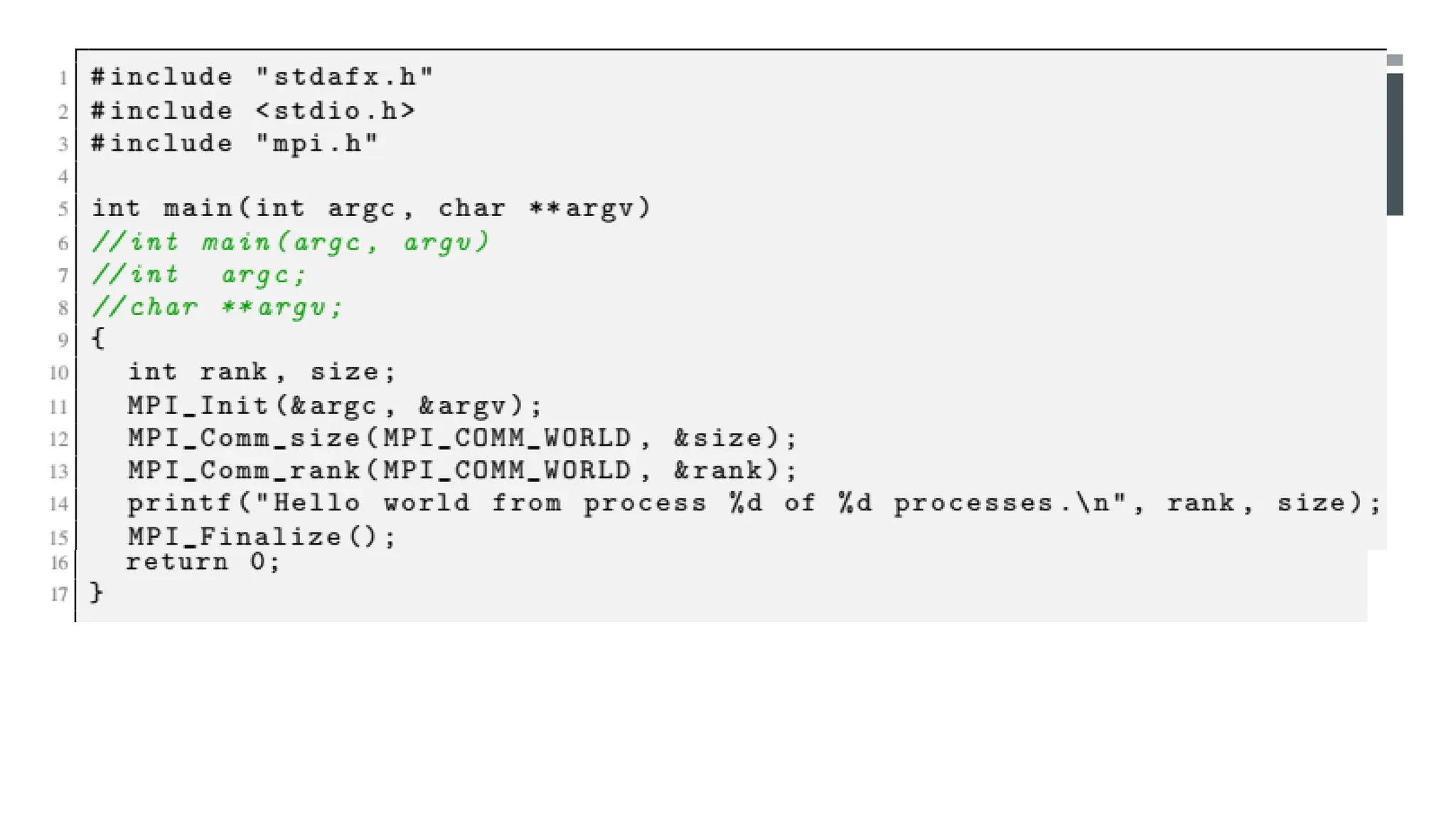

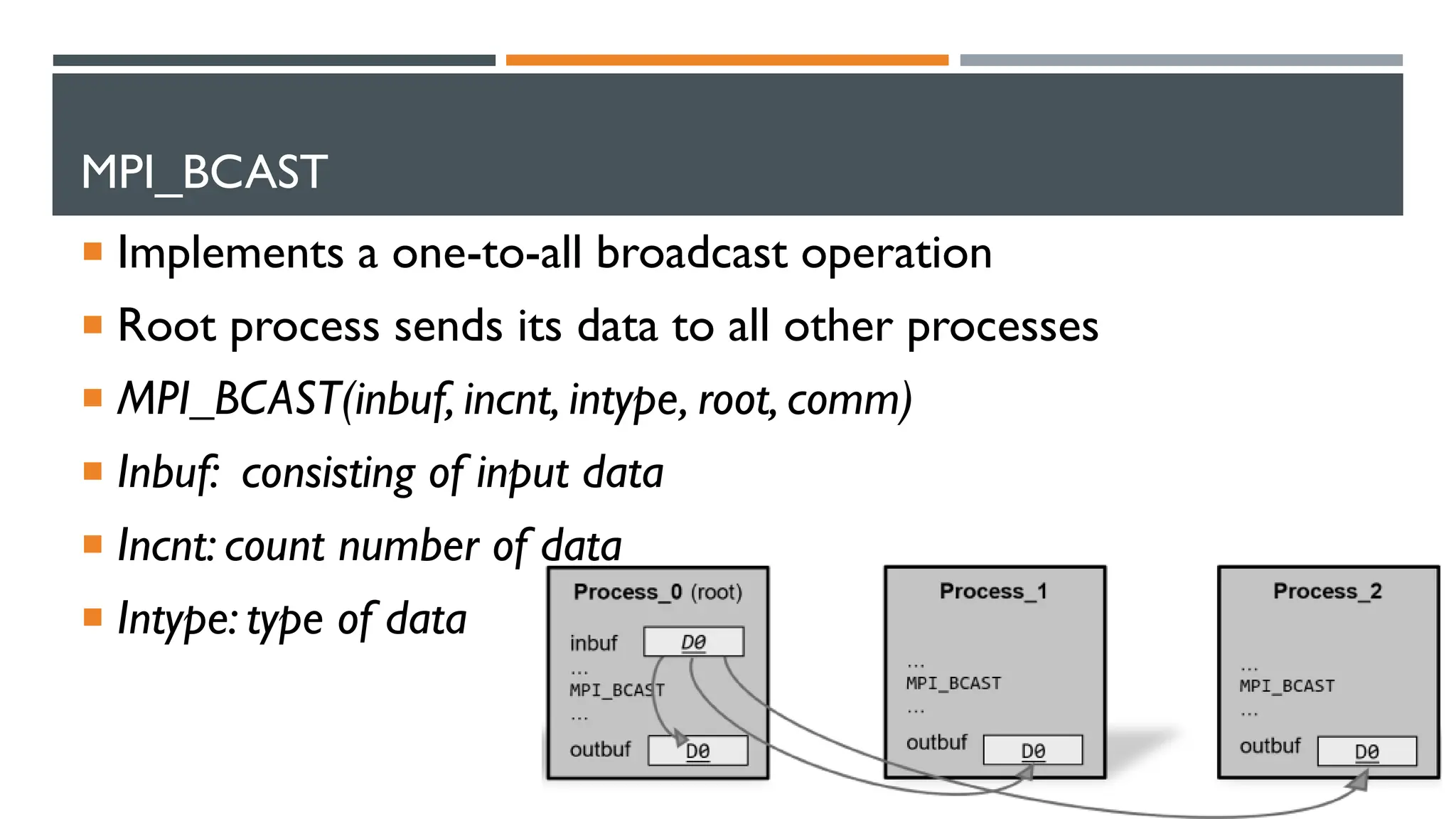

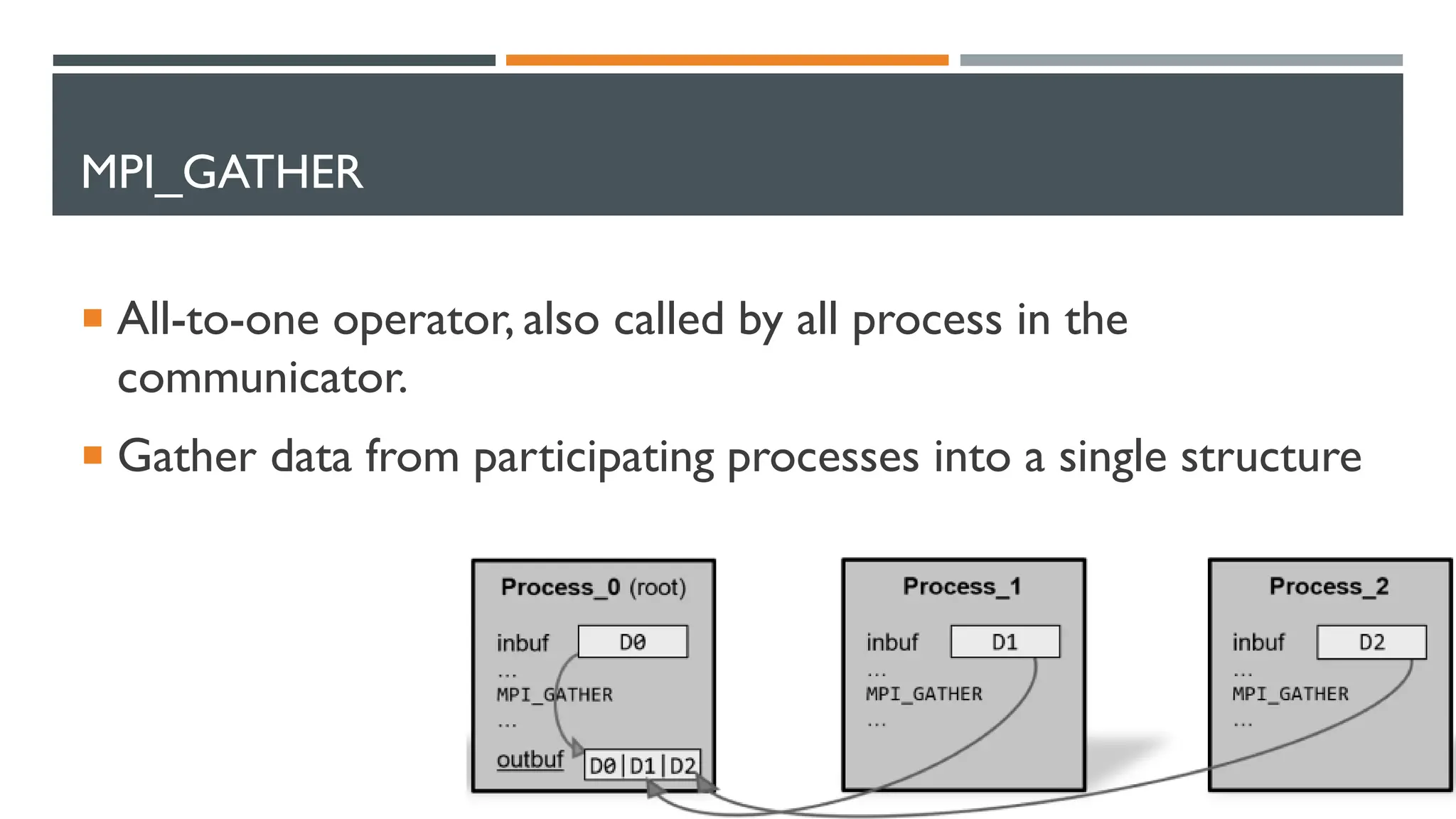

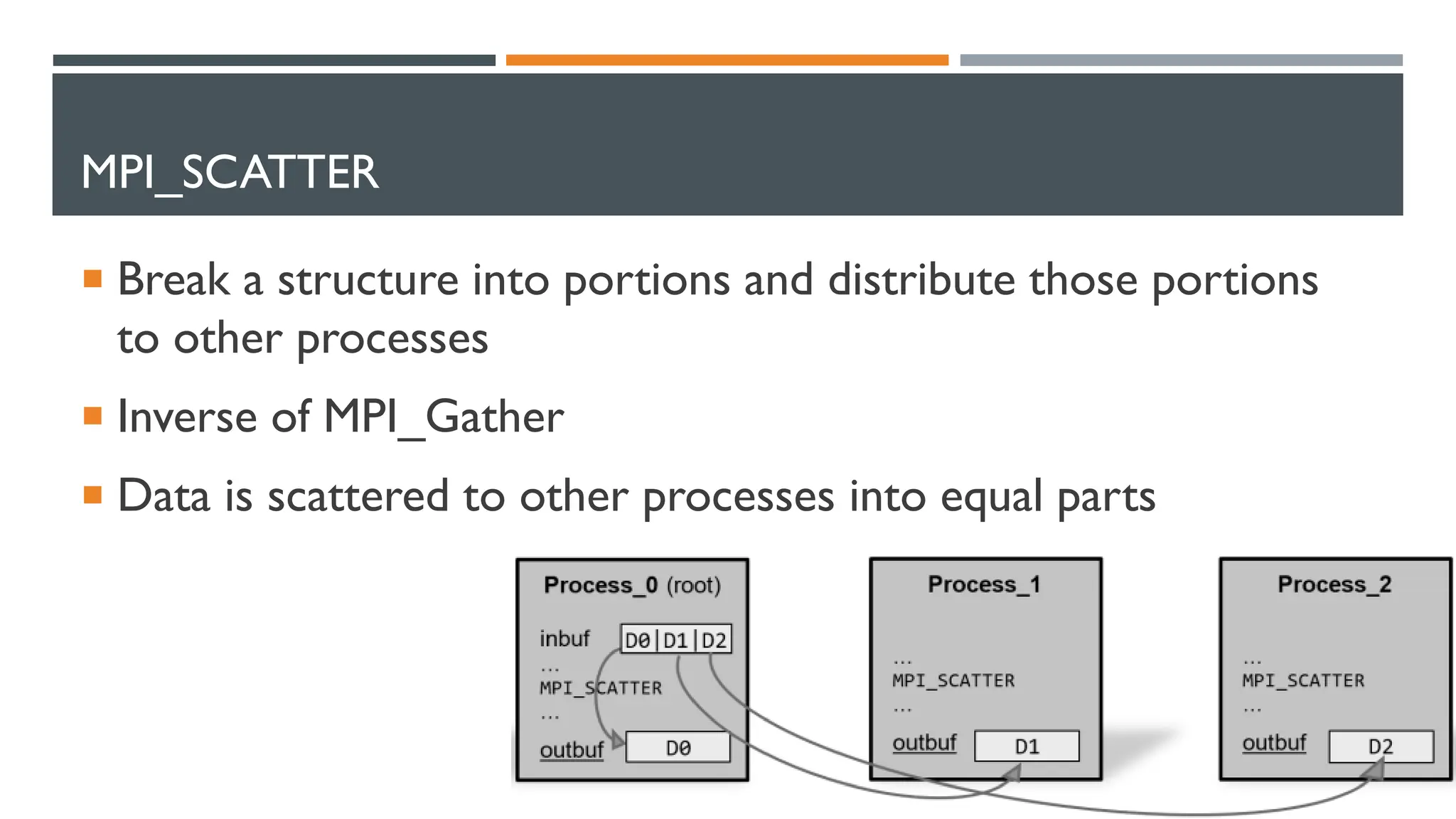

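MPI (Message Passing Interface) is a standardized interface for message passing between processes on distributed-memory parallel computers. Implementations such as MPICH and Open MPI provide it as a library. It defines point-to-point routines such as send and receive (`MPI_Send`, `MPI_Recv`), and collective routines such as broadcast, gather, and scatter (`MPI_Bcast`, `MPI_Gather`, `MPI_Scatter`). An MPI program consists of autonomous processes that communicate by exchanging messages. Each process is identified by its rank within a communicator. In the common point-to-point pattern, a process addresses a message to a specific recipient by that rank.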
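Both families of routines can be illustrated without an MPI installation. The following is a toy, standard-library-only Python sketch, not MPI or mpi4py. Threads stand in for processes, and a hypothetical `ToyComm` class gives each "rank" rank-addressed `send`/`recv`. It also provides `bcast`, `scatter`, and `gather`, built naively on top of those two calls. The names `ToyComm`, `run`, `ring_program`, and `sum_program` are invented for illustration.

```python
import queue
import threading
from typing import Any, Callable, List, Optional


class ToyComm:
    """One rank's handle on a toy communicator (hypothetical; not MPI or mpi4py)."""

    def __init__(self, rank: int, size: int, mailboxes: List[List[queue.Queue]]) -> None:
        self.rank = rank
        self.size = size
        # mailboxes[dest][source] is a FIFO of messages from `source` to `dest`,
        # so receiving from one source never matches another rank's message.
        self._mailboxes = mailboxes

    # --- point-to-point: messages are addressed by rank ---
    def send(self, obj: Any, dest: int) -> None:
        self._mailboxes[dest][self.rank].put(obj)  # unbounded queue: never blocks

    def recv(self, source: int) -> Any:
        return self._mailboxes[self.rank][source].get()  # blocks until a message arrives

    # --- collectives, built here from point-to-point calls ---
    def bcast(self, obj: Any, root: int) -> Any:
        if self.rank == root:
            for dest in range(self.size):
                if dest != root:
                    self.send(obj, dest)
            return obj
        return self.recv(root)

    def scatter(self, chunks: Optional[List[Any]], root: int) -> Any:
        if self.rank == root:
            assert chunks is not None and len(chunks) == self.size
            for dest in range(self.size):
                if dest != root:
                    self.send(chunks[dest], dest)
            return chunks[root]
        return self.recv(root)

    def gather(self, obj: Any, root: int) -> Optional[List[Any]]:
        if self.rank == root:
            return [obj if src == root else self.recv(src) for src in range(self.size)]
        self.send(obj, root)
        return None


def run(size: int, program: Callable[[ToyComm], Any]) -> List[Any]:
    """Run `program` once per rank, each in its own thread, and collect return values."""
    mailboxes = [[queue.Queue() for _ in range(size)] for _ in range(size)]
    results: List[Any] = [None] * size

    def worker(rank: int) -> None:
        results[rank] = program(ToyComm(rank, size, mailboxes))

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(size)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


def ring_program(comm: ToyComm) -> int:
    # Point-to-point: pass own rank to the right neighbour, receive from the left.
    comm.send(comm.rank, dest=(comm.rank + 1) % comm.size)
    return comm.recv(source=(comm.rank - 1) % comm.size)


def sum_program(comm: ToyComm) -> int:
    # Collectives: rank 0 scatters 1..12, each rank sums its share,
    # rank 0 gathers the partial sums, then broadcasts the total to everyone.
    chunks = None
    if comm.rank == 0:
        numbers = list(range(1, 13))
        chunks = [numbers[i::comm.size] for i in range(comm.size)]
    partial = sum(comm.scatter(chunks, root=0))
    partials = comm.gather(partial, root=0)
    total = sum(partials) if partials is not None else None
    return comm.bcast(total, root=0)


if __name__ == "__main__":
    print(run(4, ring_program))  # [3, 0, 1, 2]
    print(run(4, sum_program))   # [78, 78, 78, 78]
```

Two simplifications separate this from real MPI. First, the toy `send` never blocks, whereas a standard-mode `MPI_Send` may block until a matching receive is posted, so a naive ring of blocking sends can deadlock in MPI. Second, MPI implementations are free to use more efficient algorithms (for example, tree-based broadcast) than the linear loops used here. What carries over is the contract: every rank in the communicator calls each collective, in the same order.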