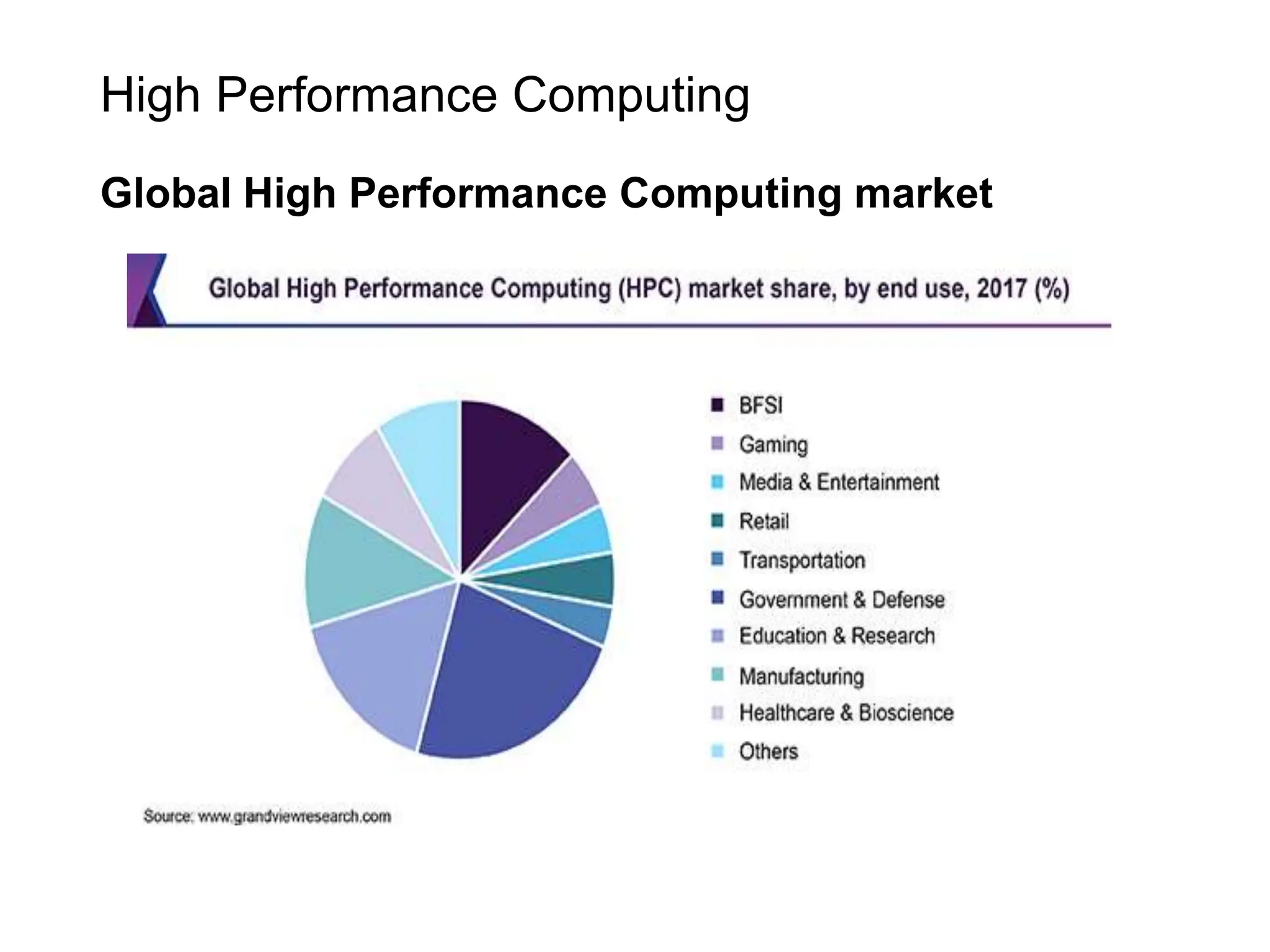

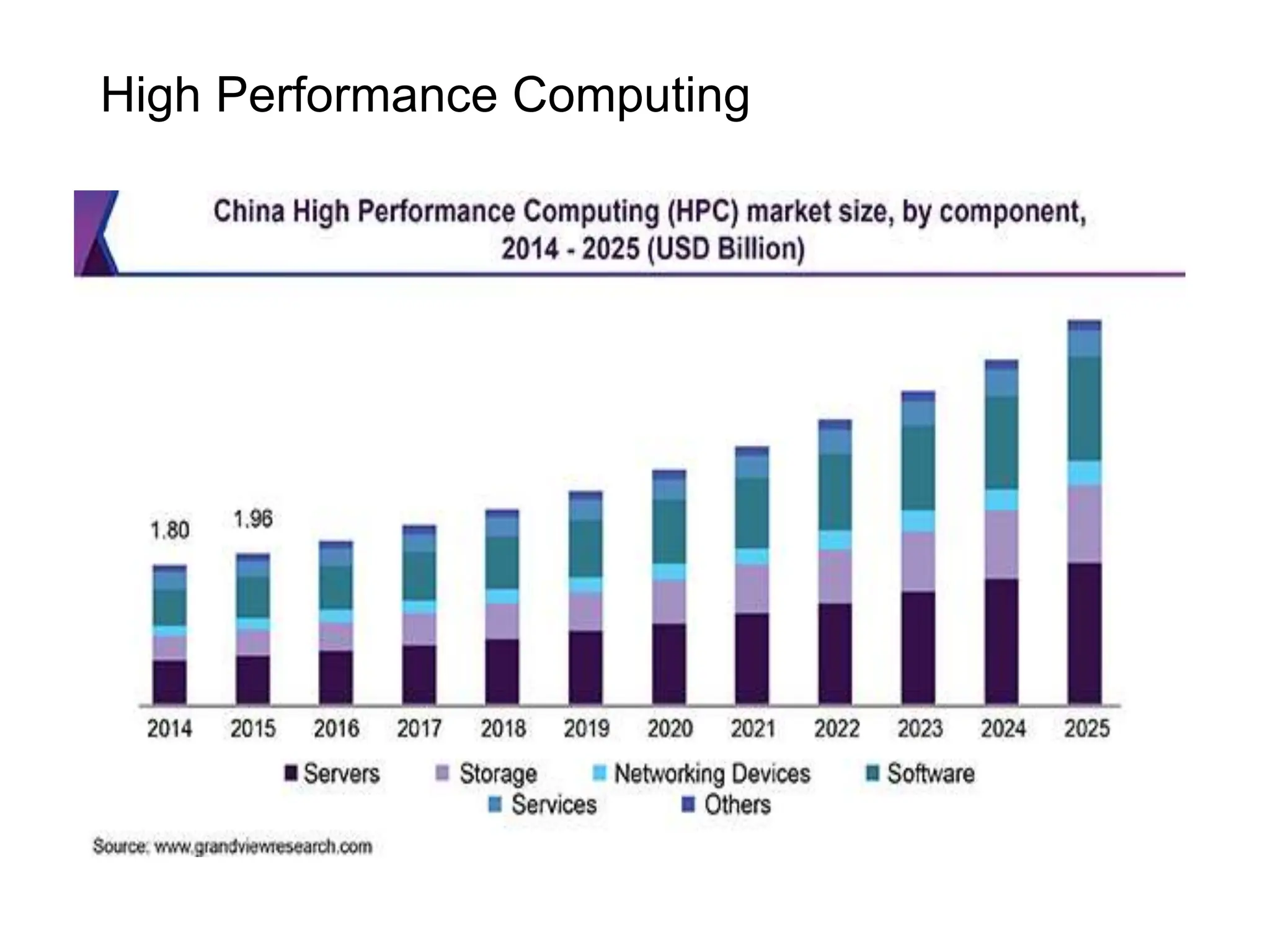

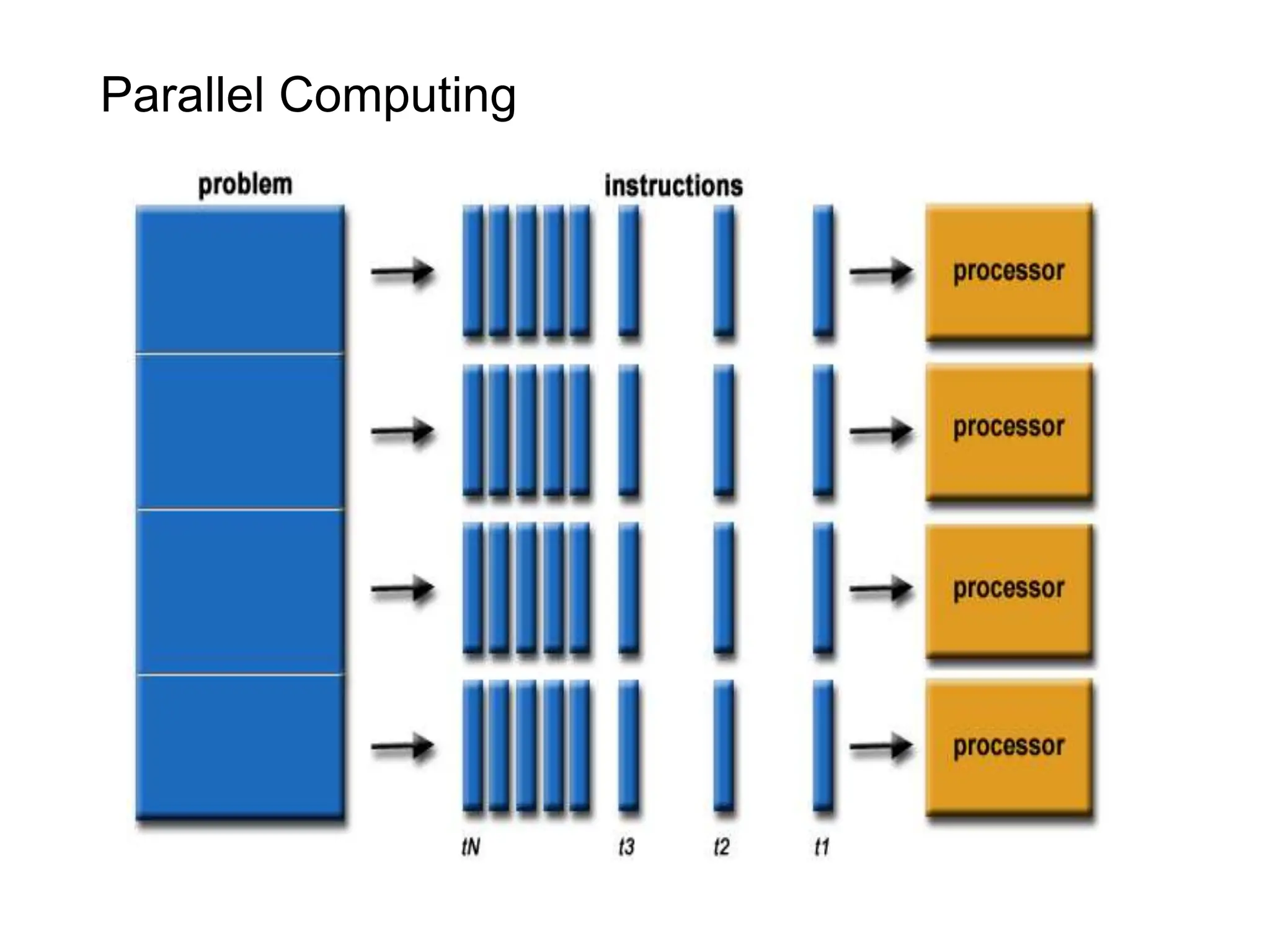

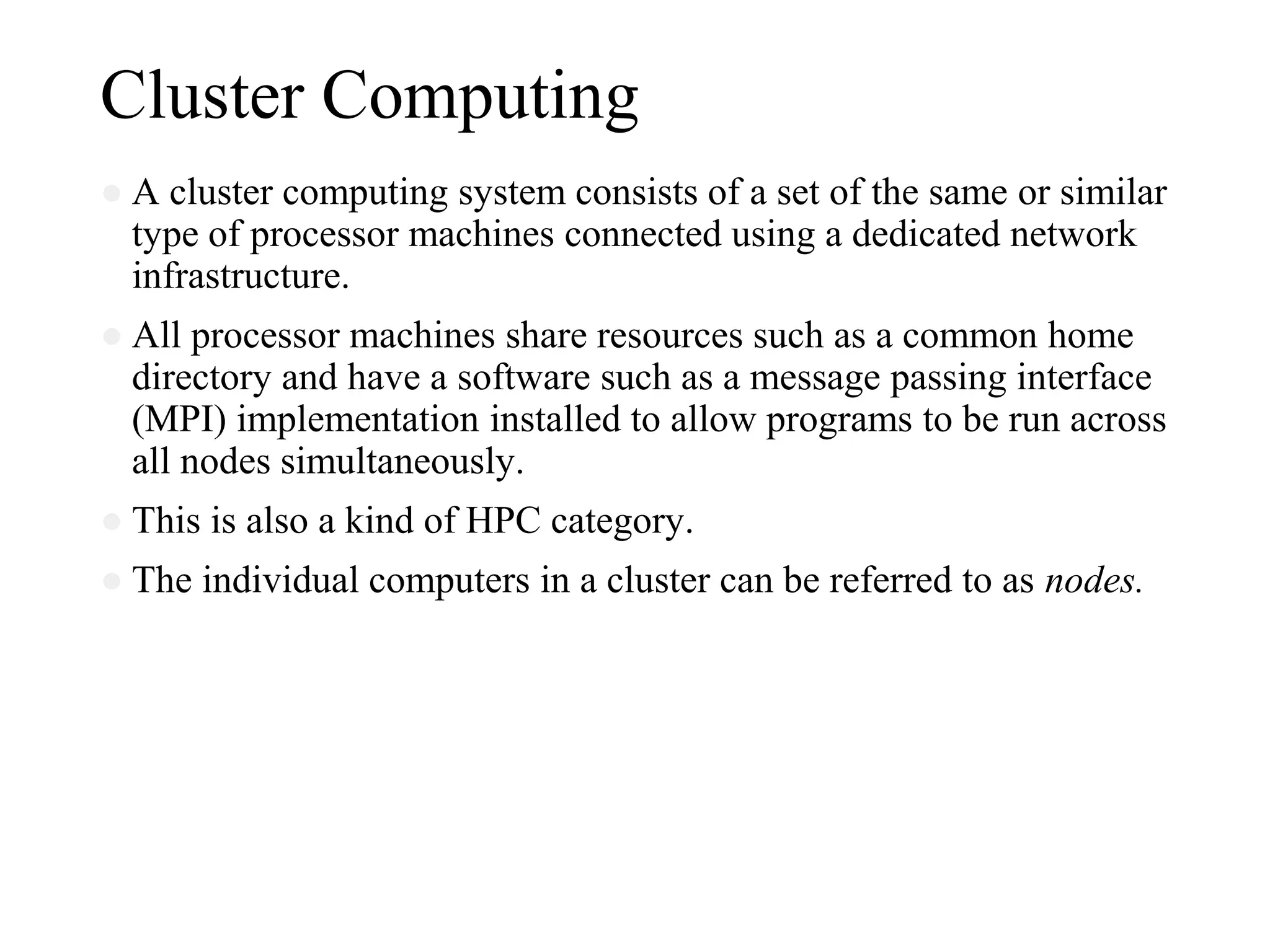

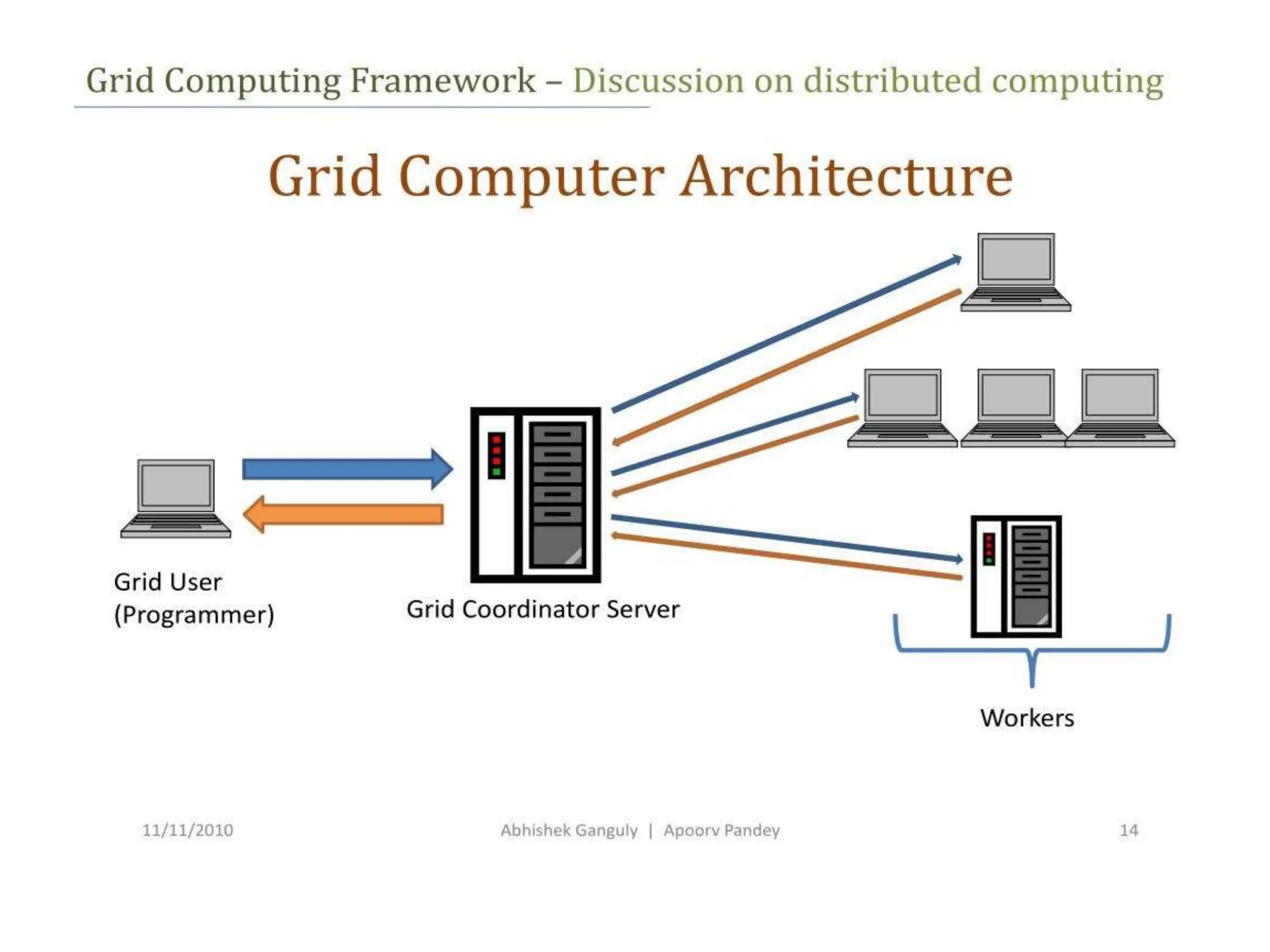

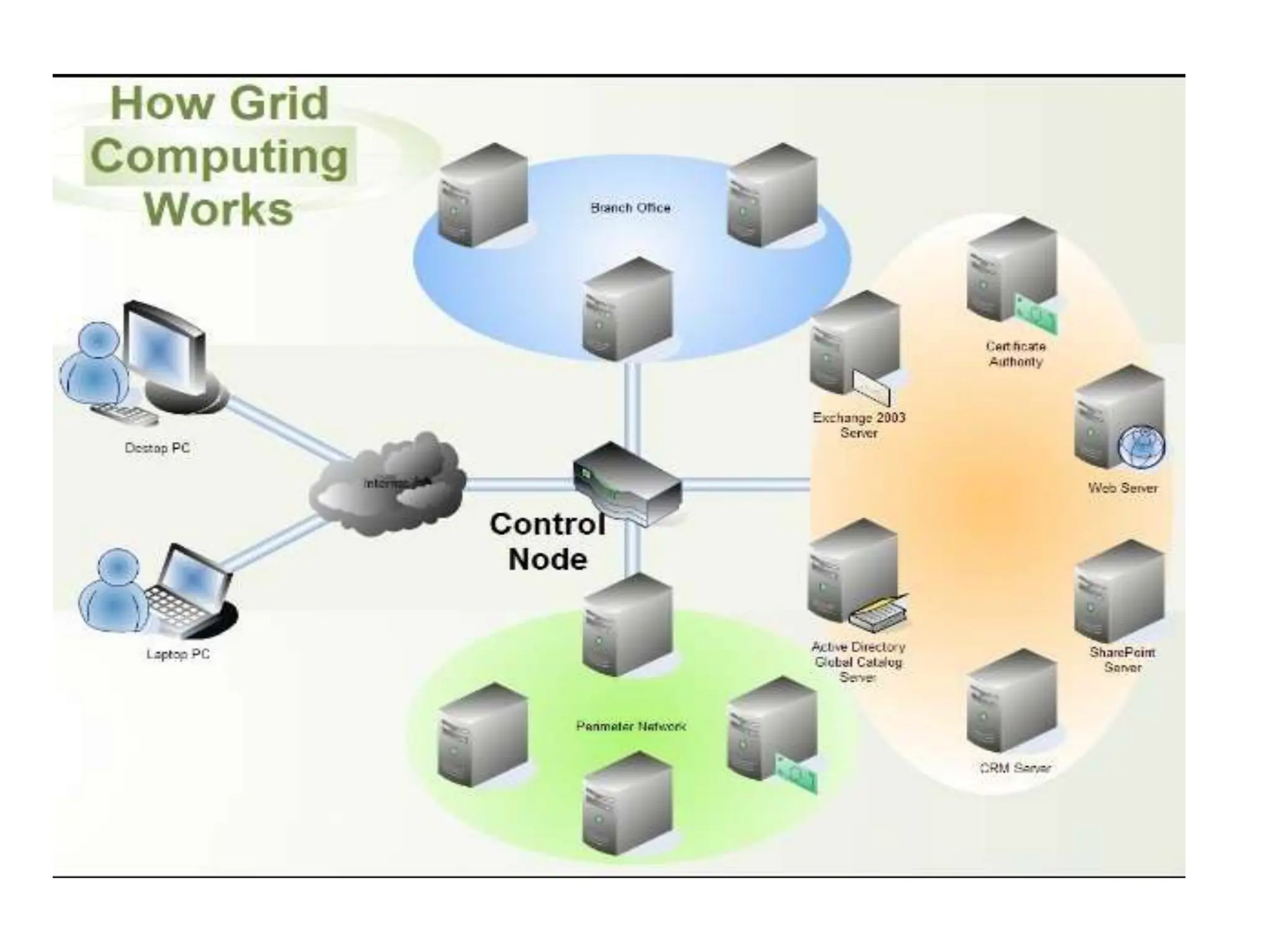

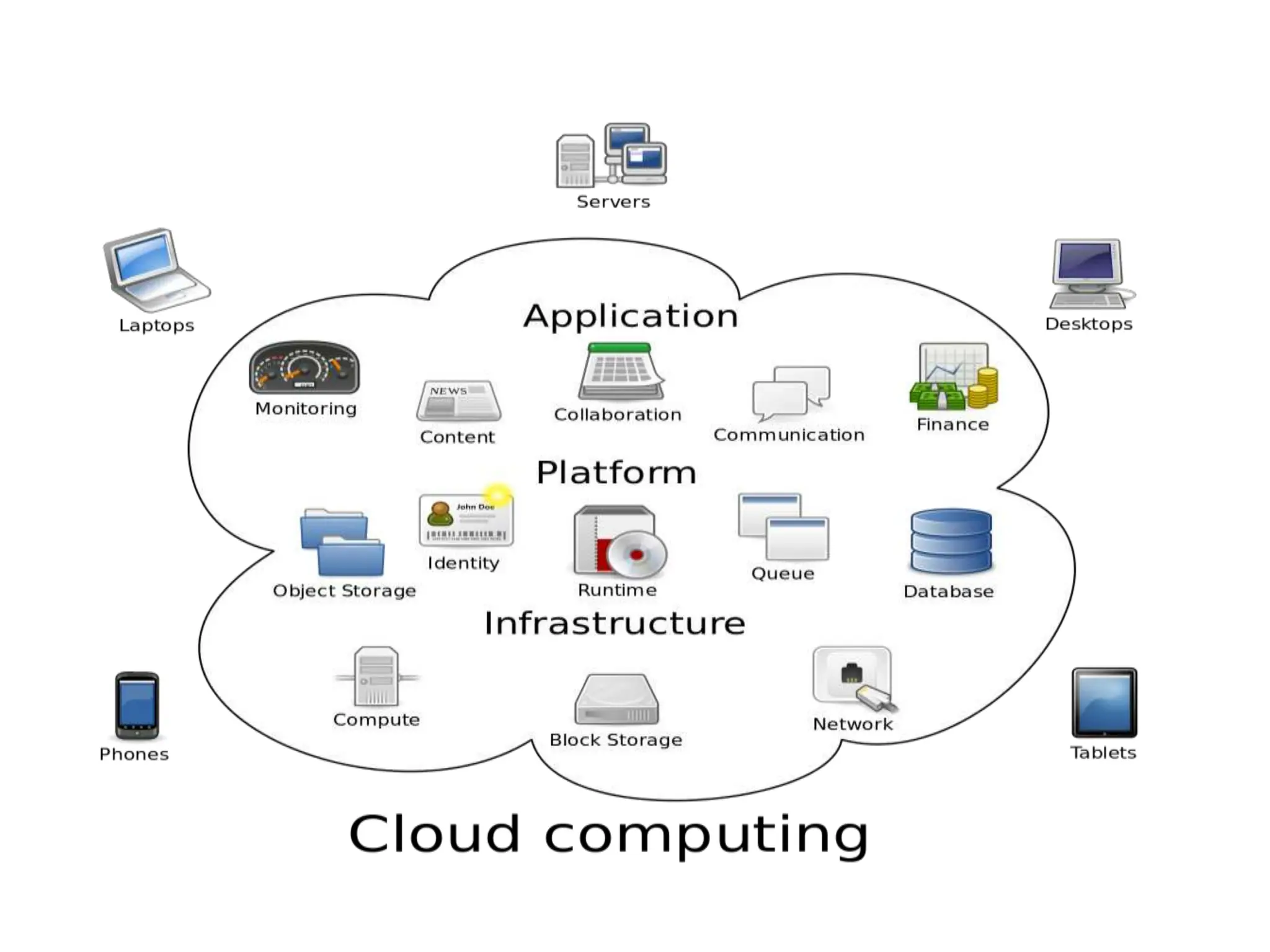

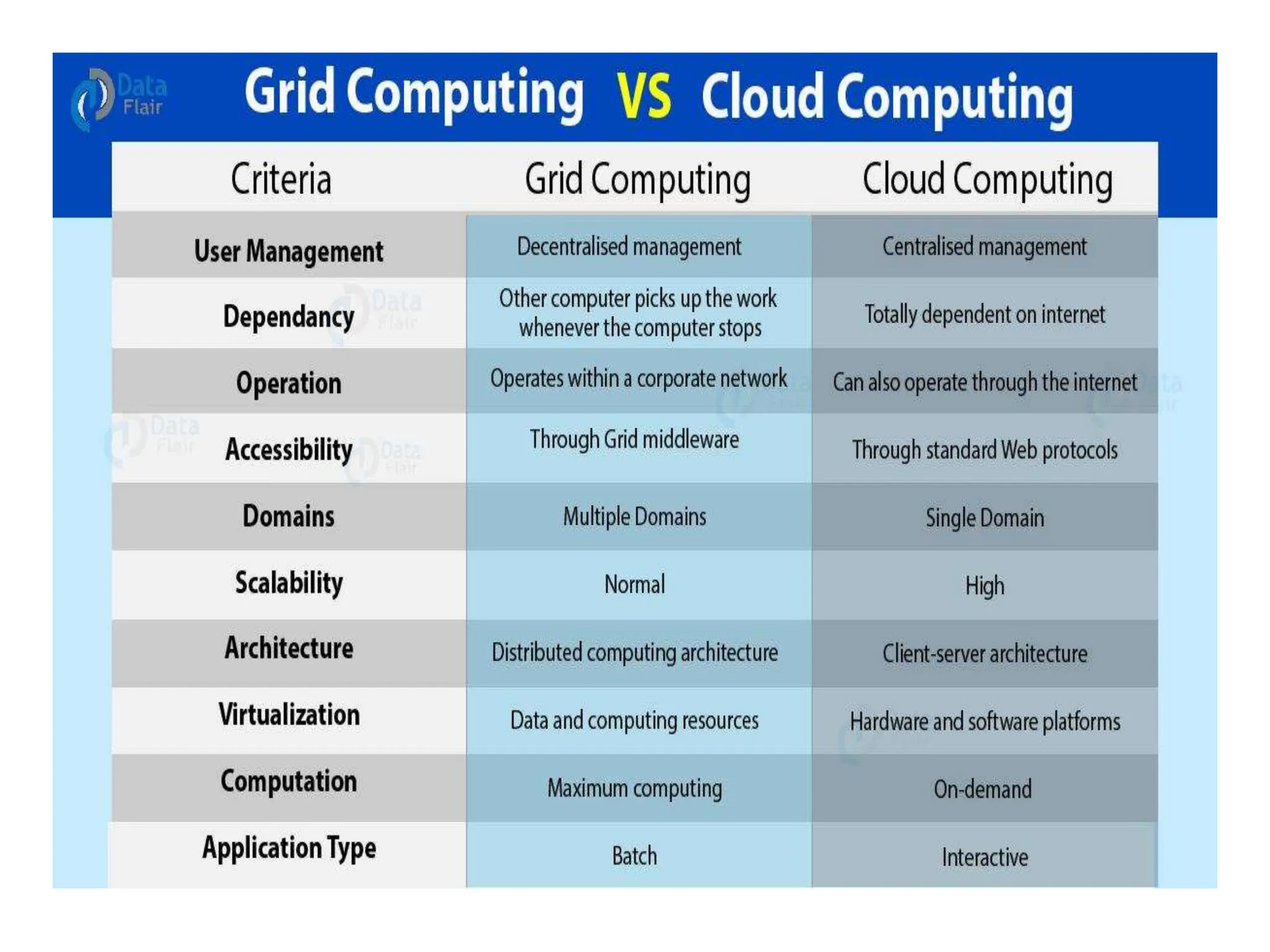

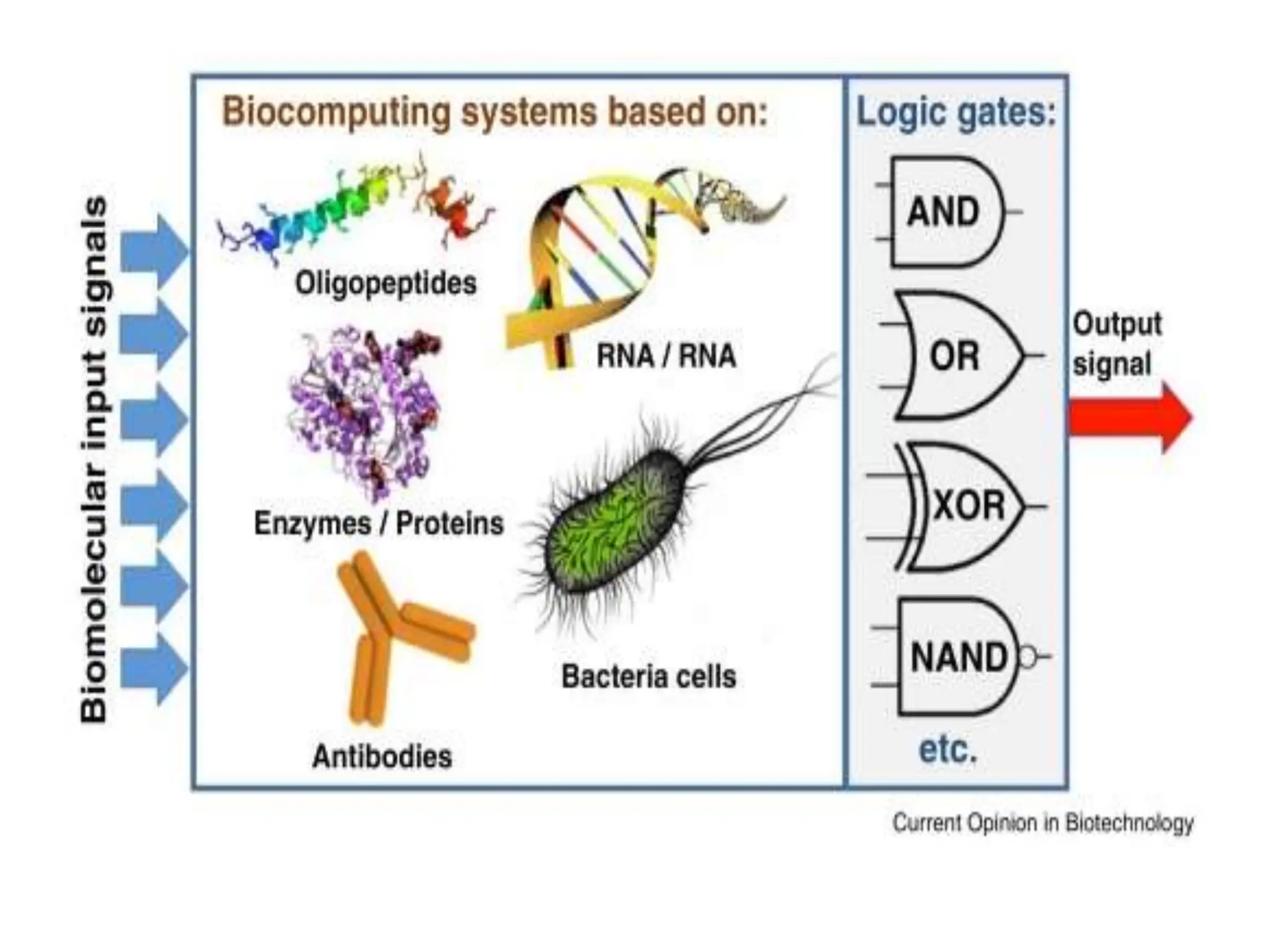

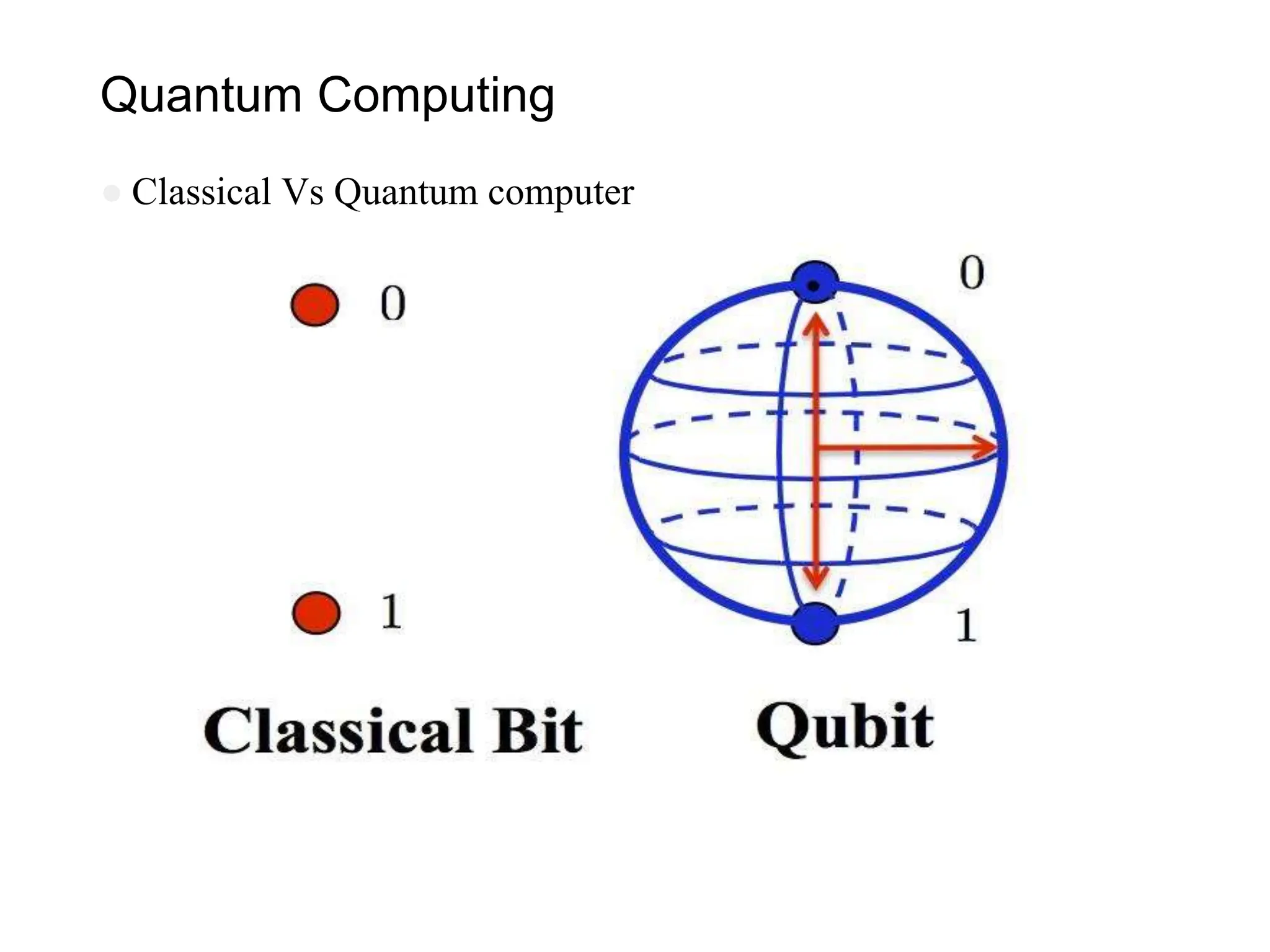

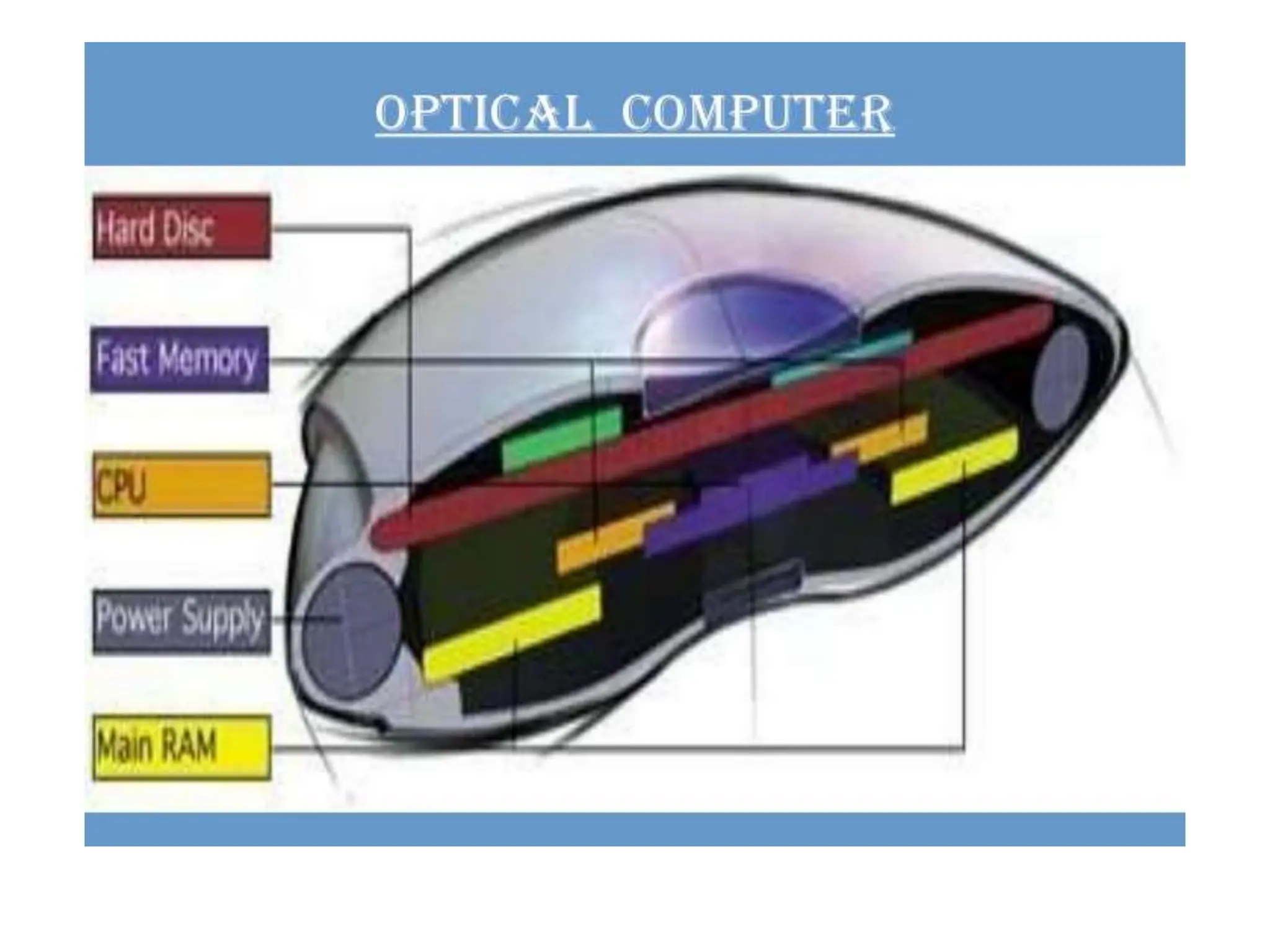

The document provides an overview of several computing paradigms, including high-performance computing, parallel computing, and cloud computing, and details their characteristics and applications. It discusses the role of these paradigms in solving complex scientific problems and in optimizing resource usage across different environments. It also highlights the evolution toward emerging technologies such as biocomputing, quantum computing, and optical computing as possible directions for future computing systems.