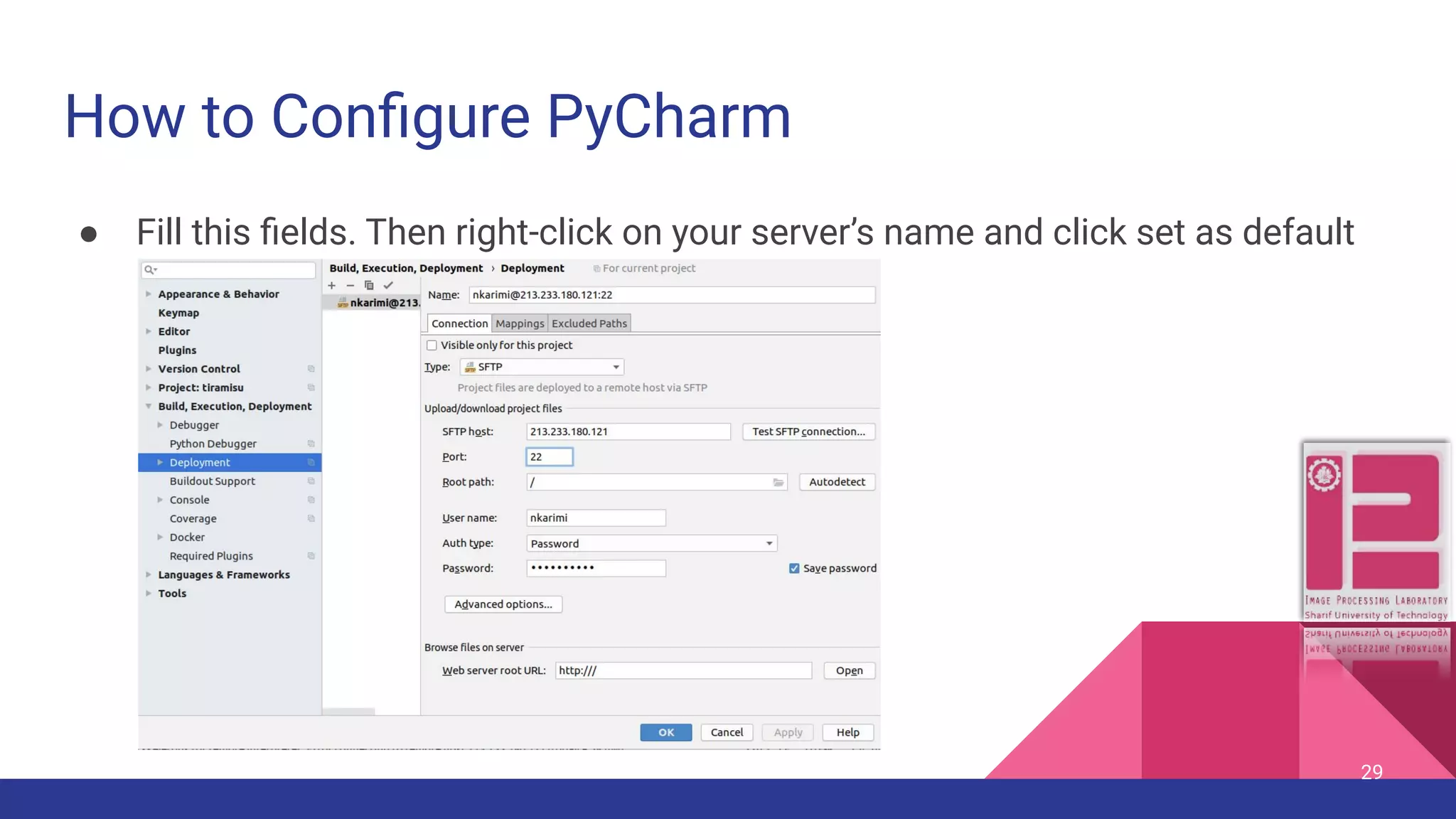

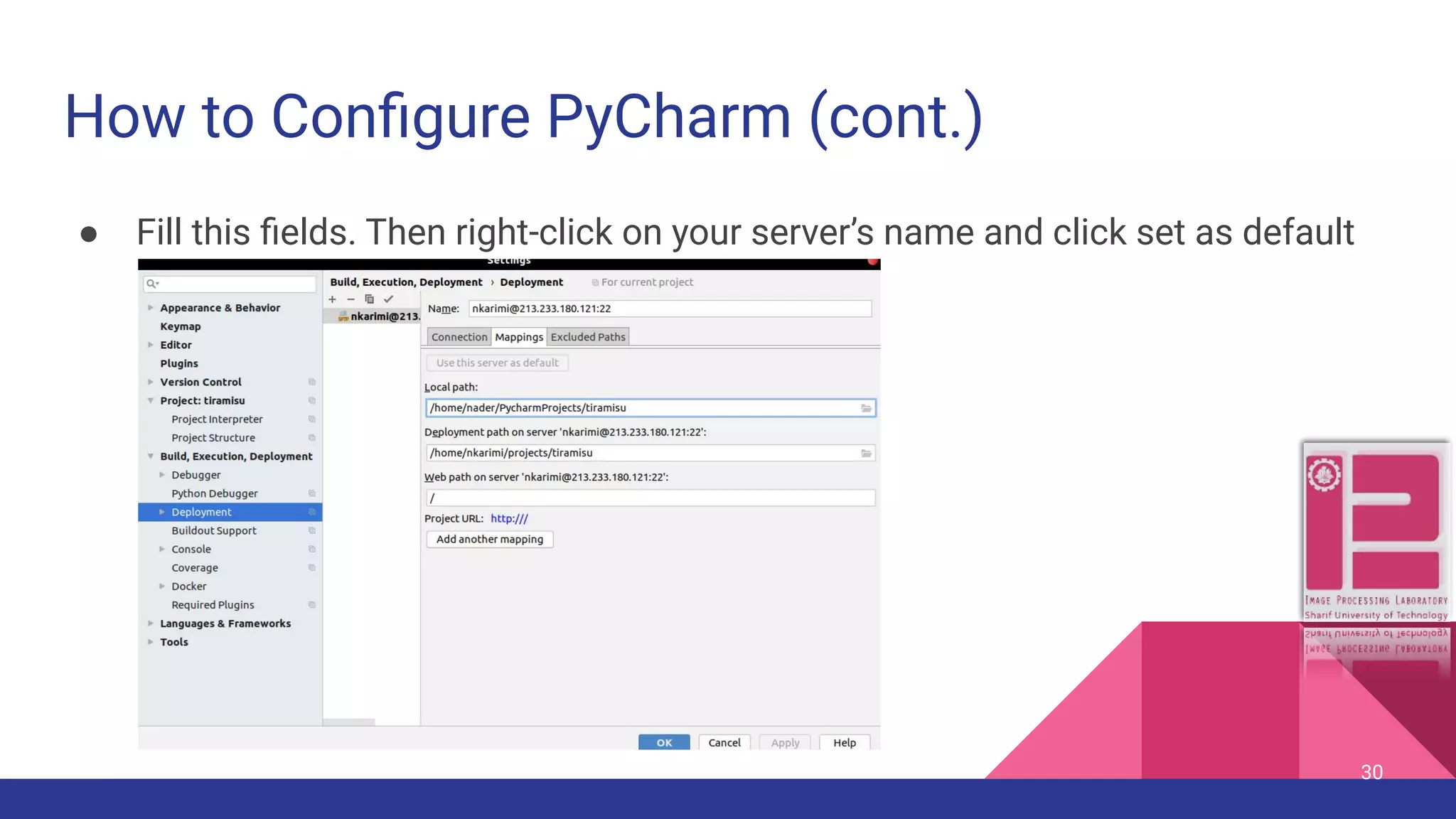

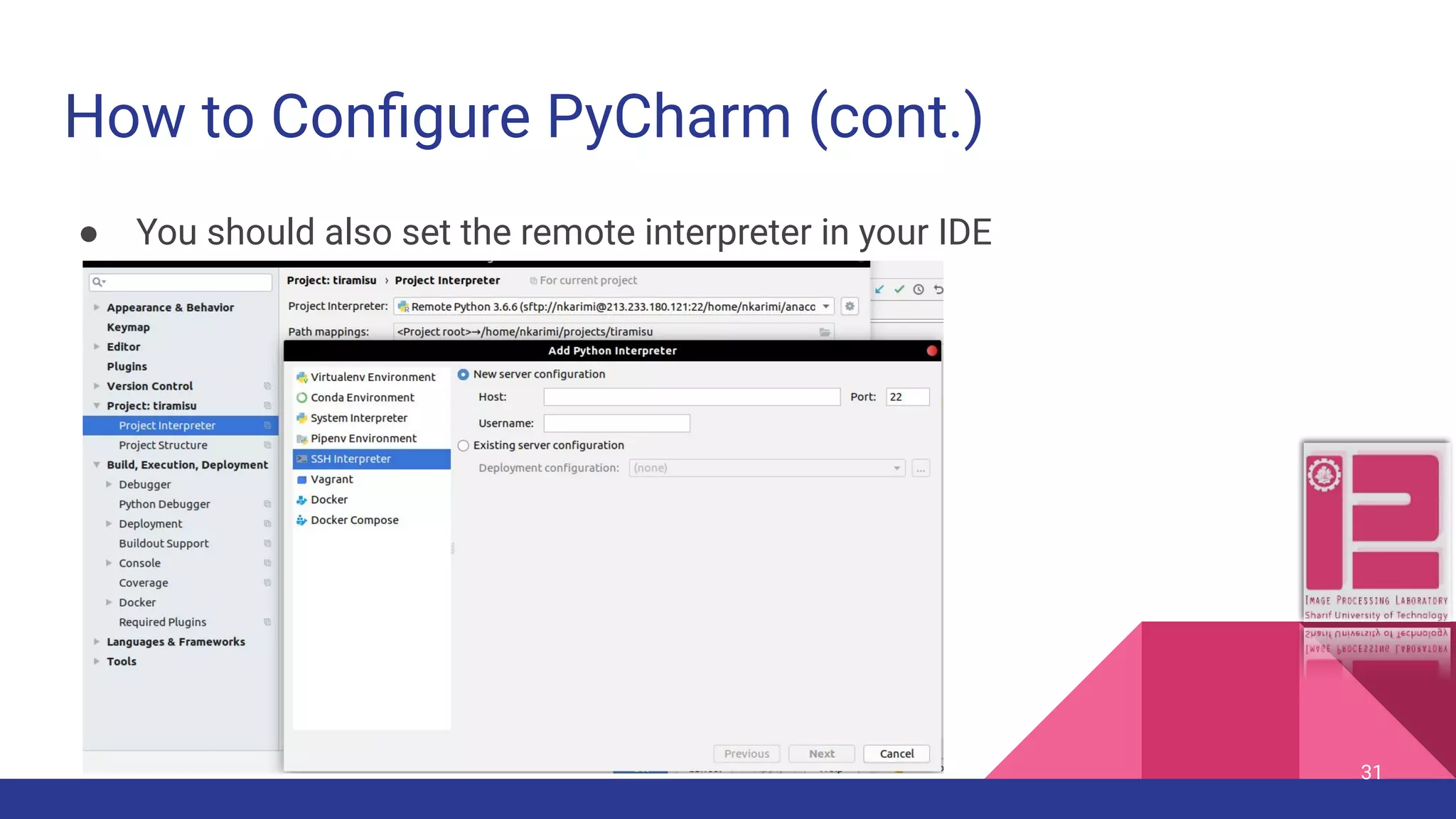

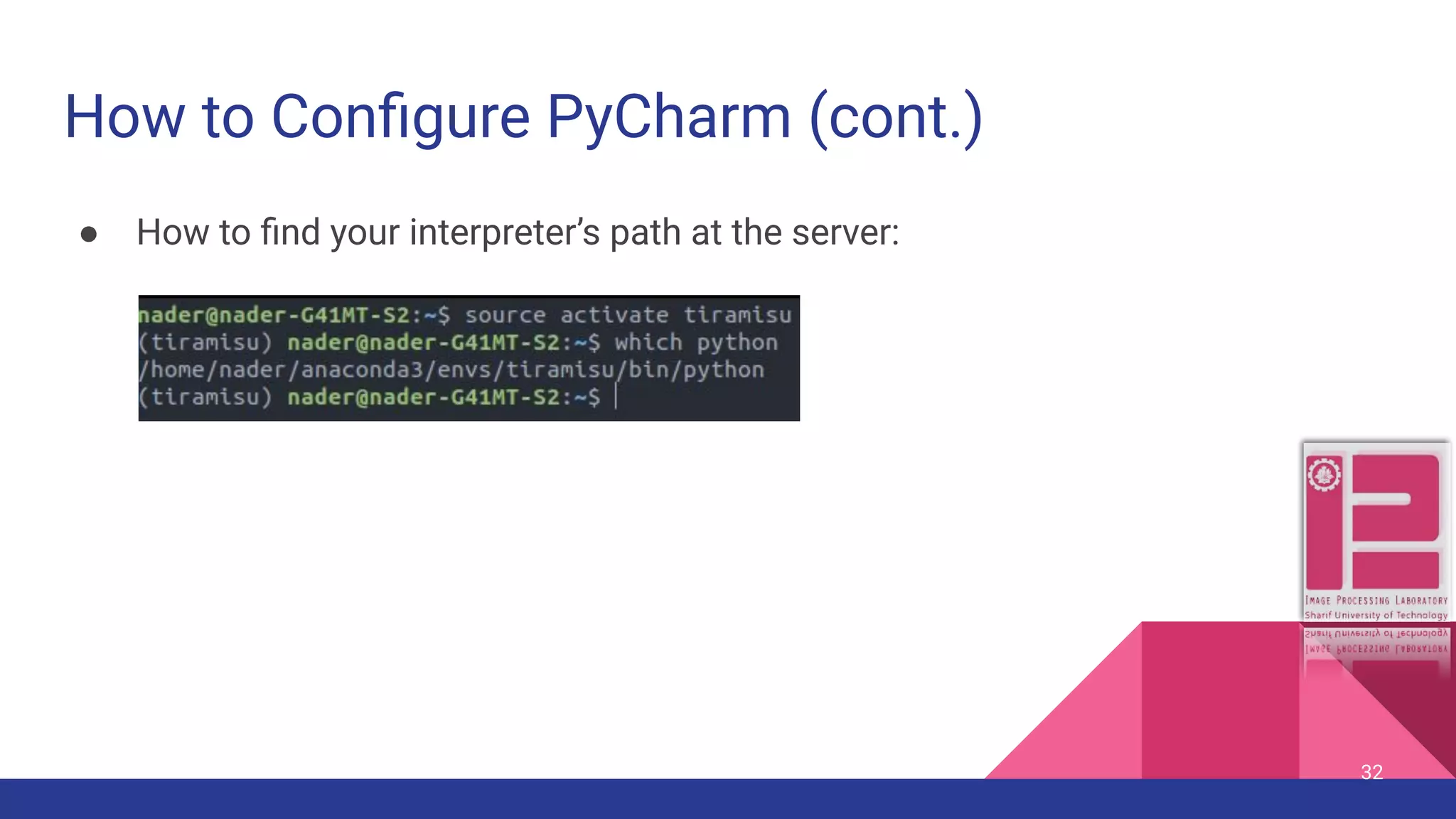

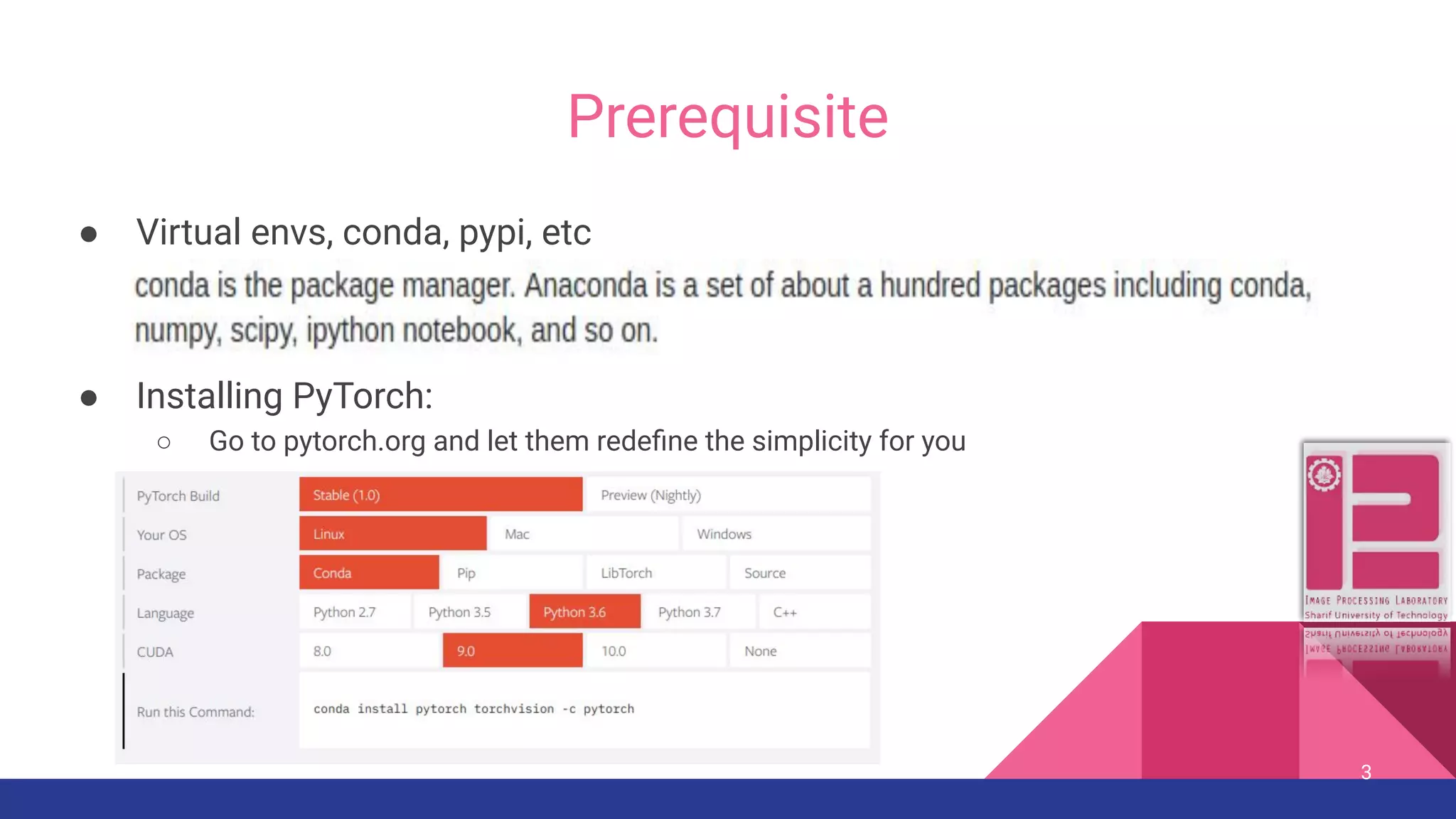

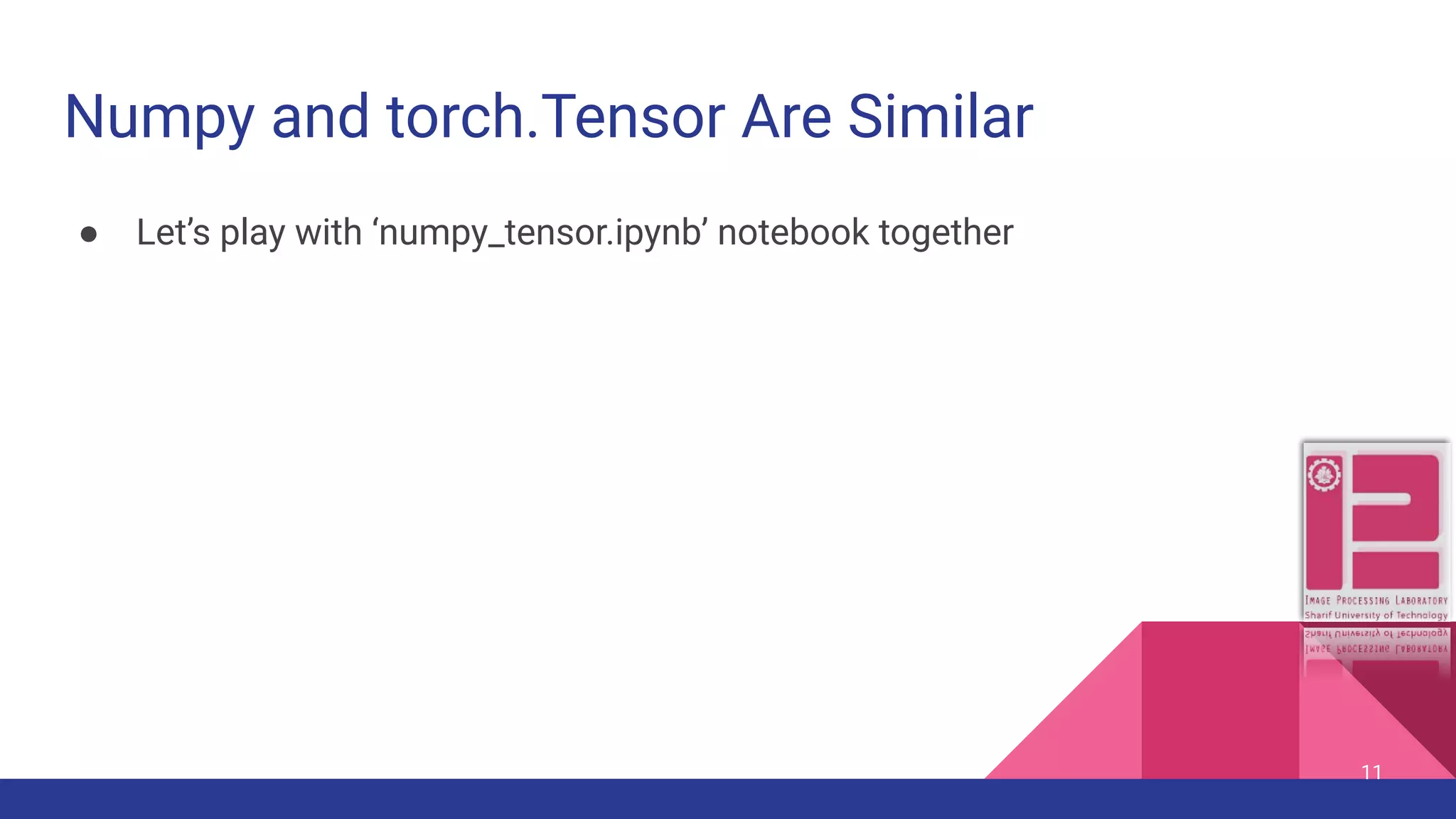

This document is a guide for getting started with PyTorch, covering necessary prerequisites like installing PyTorch, using Jupyter notebooks, and basic concepts such as tensors and neural networks. It also includes useful links and commands for operating on a remote Linux server, detailing essential operations and tools to facilitate deep learning projects. Further, it provides insights into integrating PyTorch with various development environments such as PyCharm.

![Some Useful Linux Commands](https://image.slidesharecdn.com/pytorchcrashcourse-191006165457/75/PyTorch-crash-course-23-2048.jpg)

Some Useful Linux Commands

| Command | Task | Options (`[.]` means optional) |
| --- | --- | --- |
| `cd` | Change directory | |
| `pwd` | Print working directory | |
| `ls` | List the contents of a directory | `[path]`, `[-{a,l,h,...}]` |
| `touch` | Create a file | `[parent_directory/]filename` |
| `nano` | Simple text editor | `filename` |
| `cat` | Show the content of a file | `filename` |
| `head` | Show the first lines of a file | `filename` |
| `tail` | Show the last lines of a file | `filename` |
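The file commands from the slide above can be tried safely in a throwaway directory. The following is a minimal sketch, assuming a POSIX-like shell with `mktemp` available; the file name `notes.txt` and its contents are made up for illustration and are not part of the original slides.

```shell
# Work in a temporary directory so nothing in a real project is touched.
workdir="$(mktemp -d)"
cd "$workdir"            # change directory
pwd                      # print the working directory

touch notes.txt          # create an empty file (hypothetical name)
printf 'line %s\n' 1 2 3 4 5 > notes.txt   # fill it with five short lines

ls -alh                  # list contents: all entries, long format, human-readable sizes
cat notes.txt            # show the whole file
head -n 2 notes.txt      # show the first two lines
tail -n 2 notes.txt      # show the last two lines
```

`nano notes.txt` would open the same file in an interactive editor; it is left out here because it waits for keyboard input.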

![Some Useful Linux Commands (cont.)](https://image.slidesharecdn.com/pytorchcrashcourse-191006165457/75/PyTorch-crash-course-24-2048.jpg)

Some Useful Linux Commands (cont.)

- `watch -n1 {command-name}`: shows the result of a command with a periodic refresh (here, every second). Example: `watch -n1 gpustat`
- `screen {[-x screen-name], [-list]}`: use this so that your program keeps running on the server after you disconnect
  - Examples:
    - `screen`: create a new screen and go to it
    - `screen -list`: list all available screens (attached or detached)
    - `screen -x screen-name`: attach to an available screen
- `gpustat`: install with `pip install gpustat`
  - `watch -n1 gpustat` is a useful combination