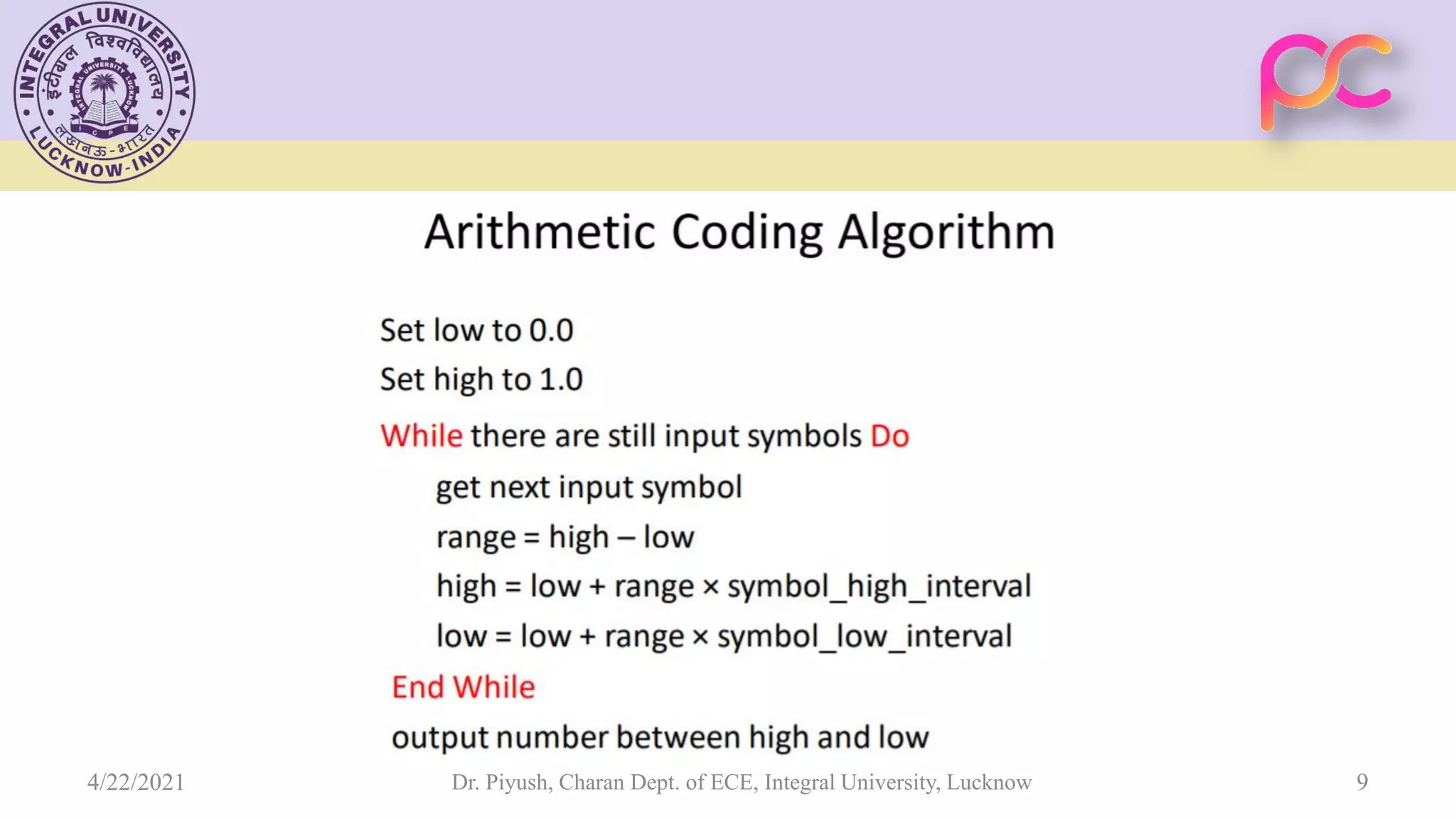

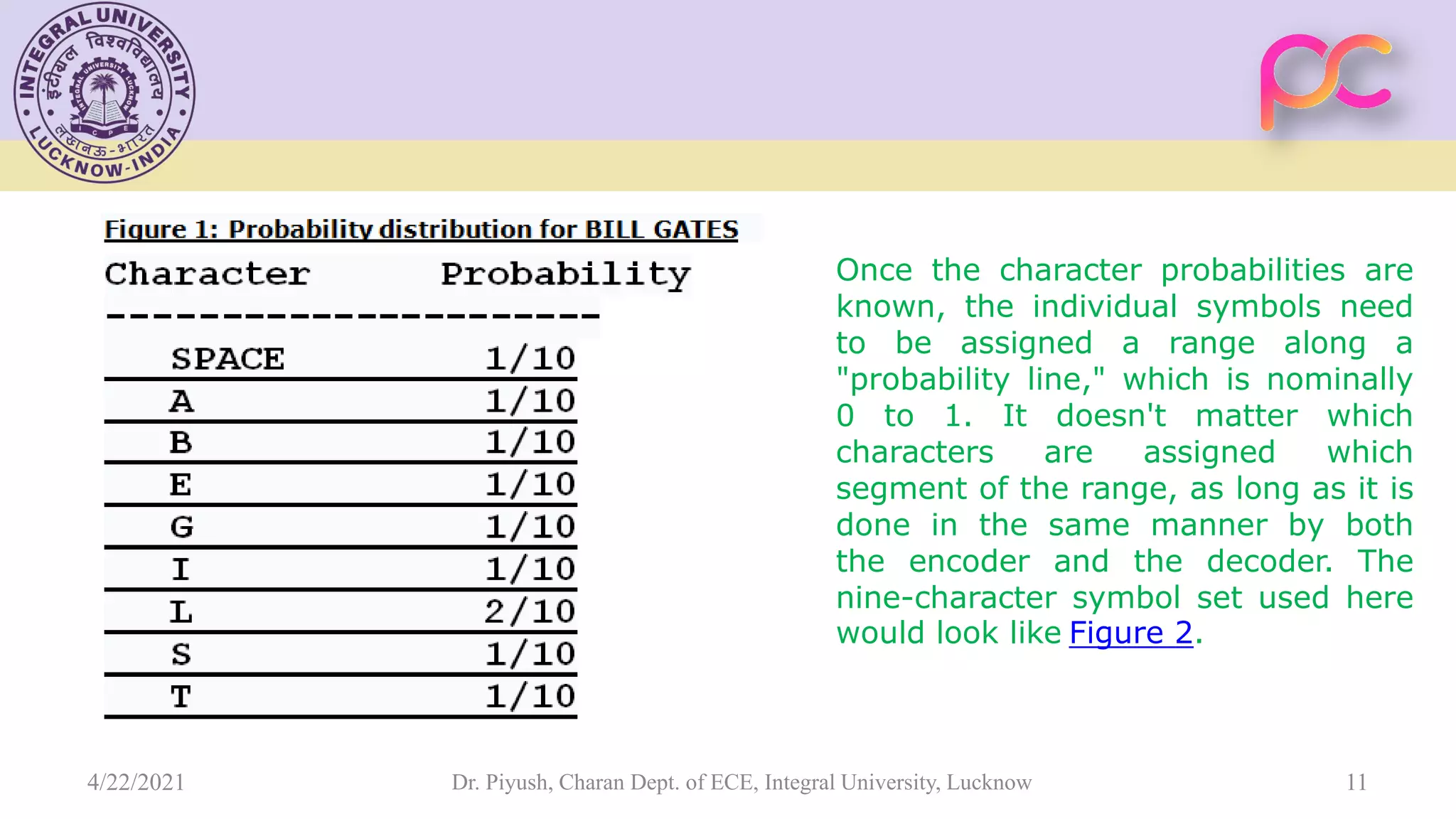

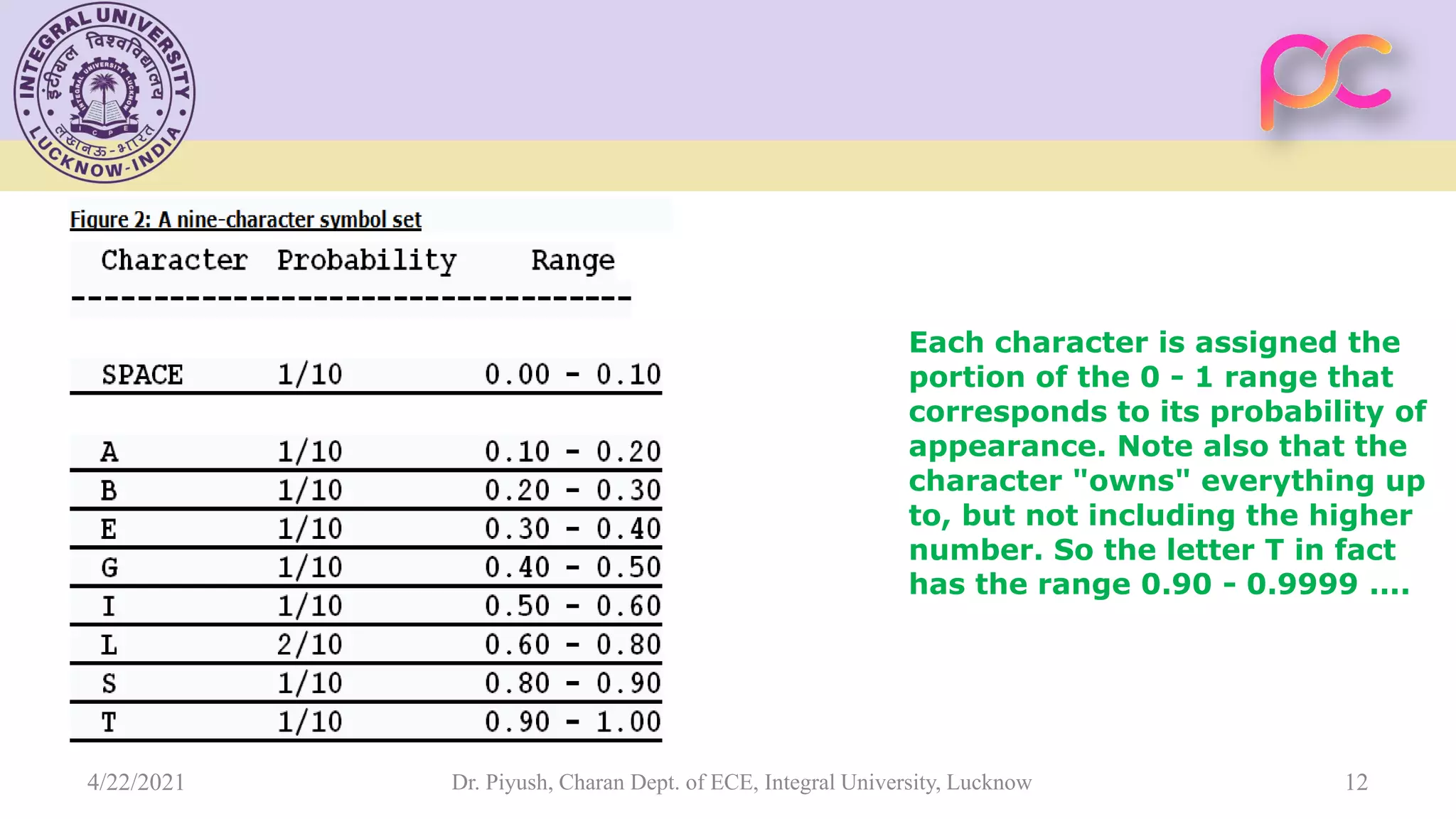

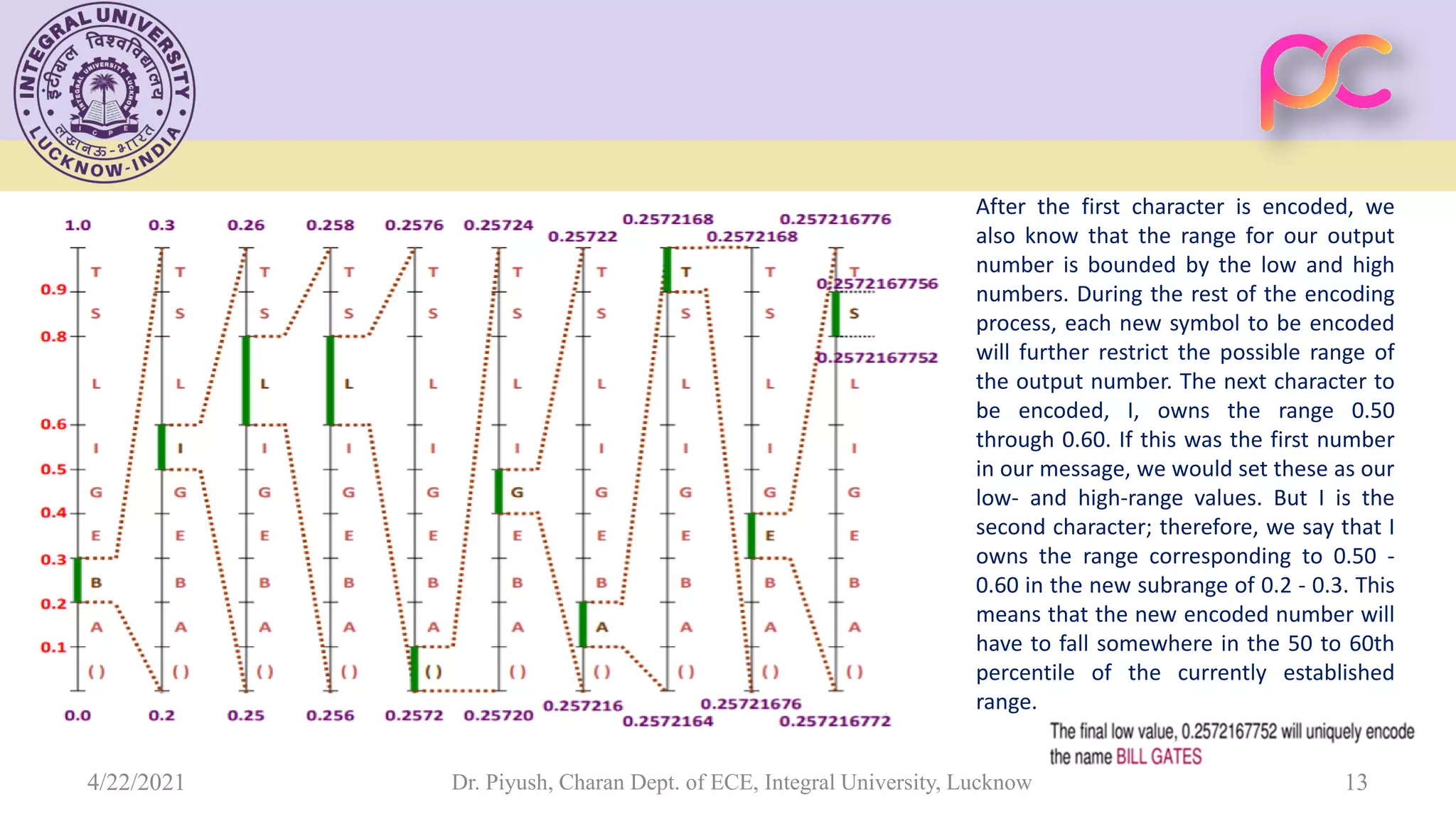

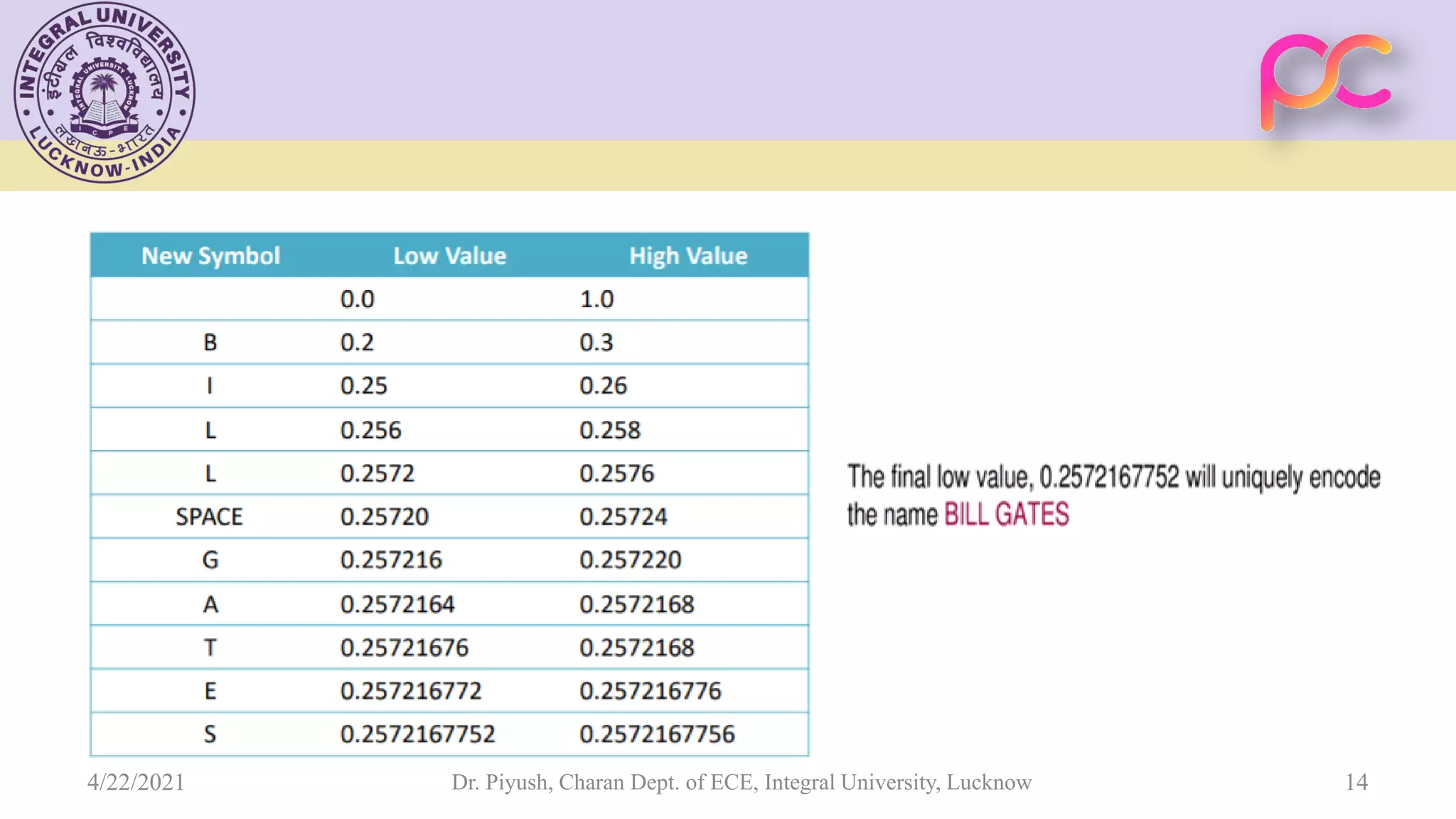

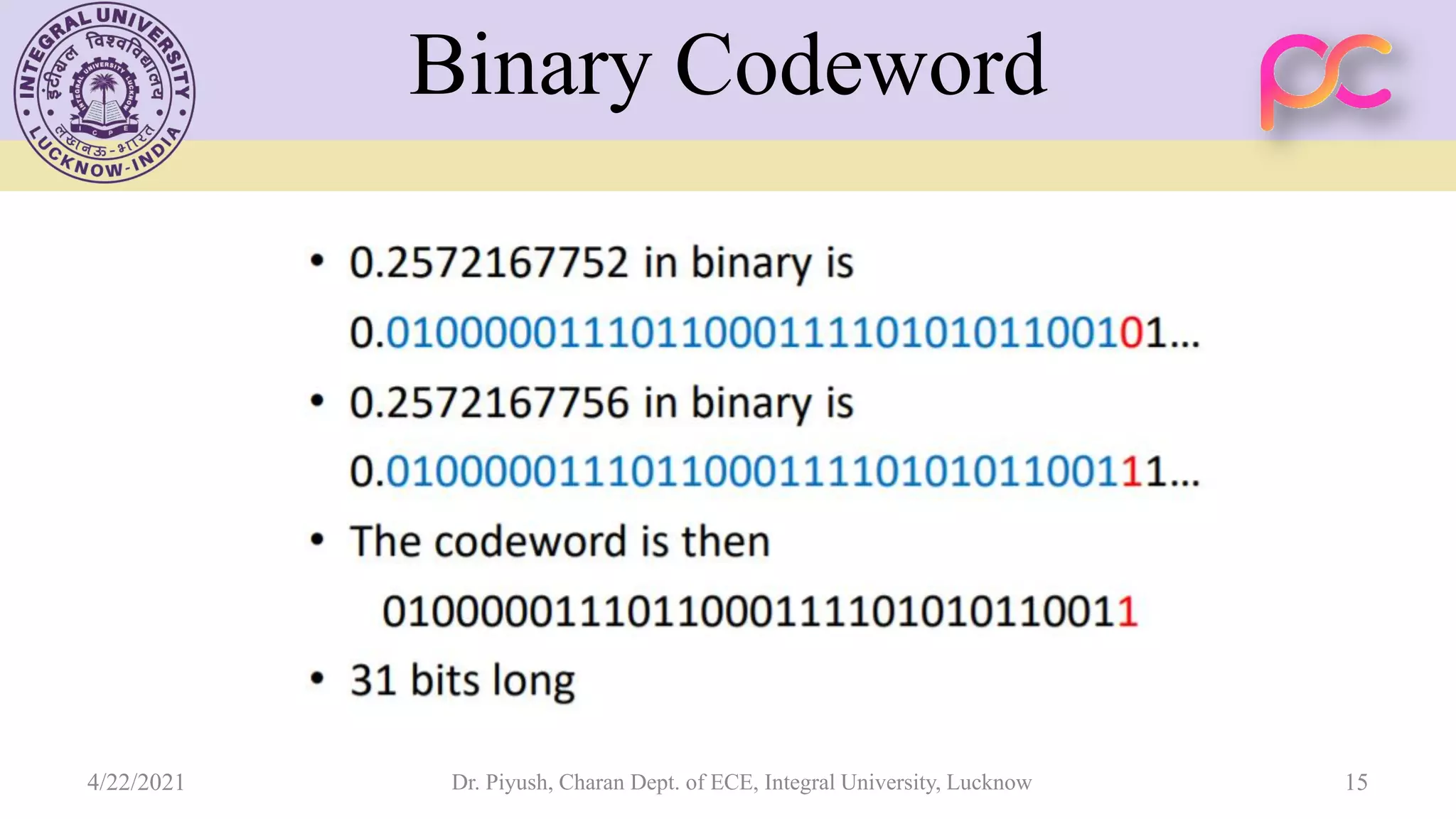

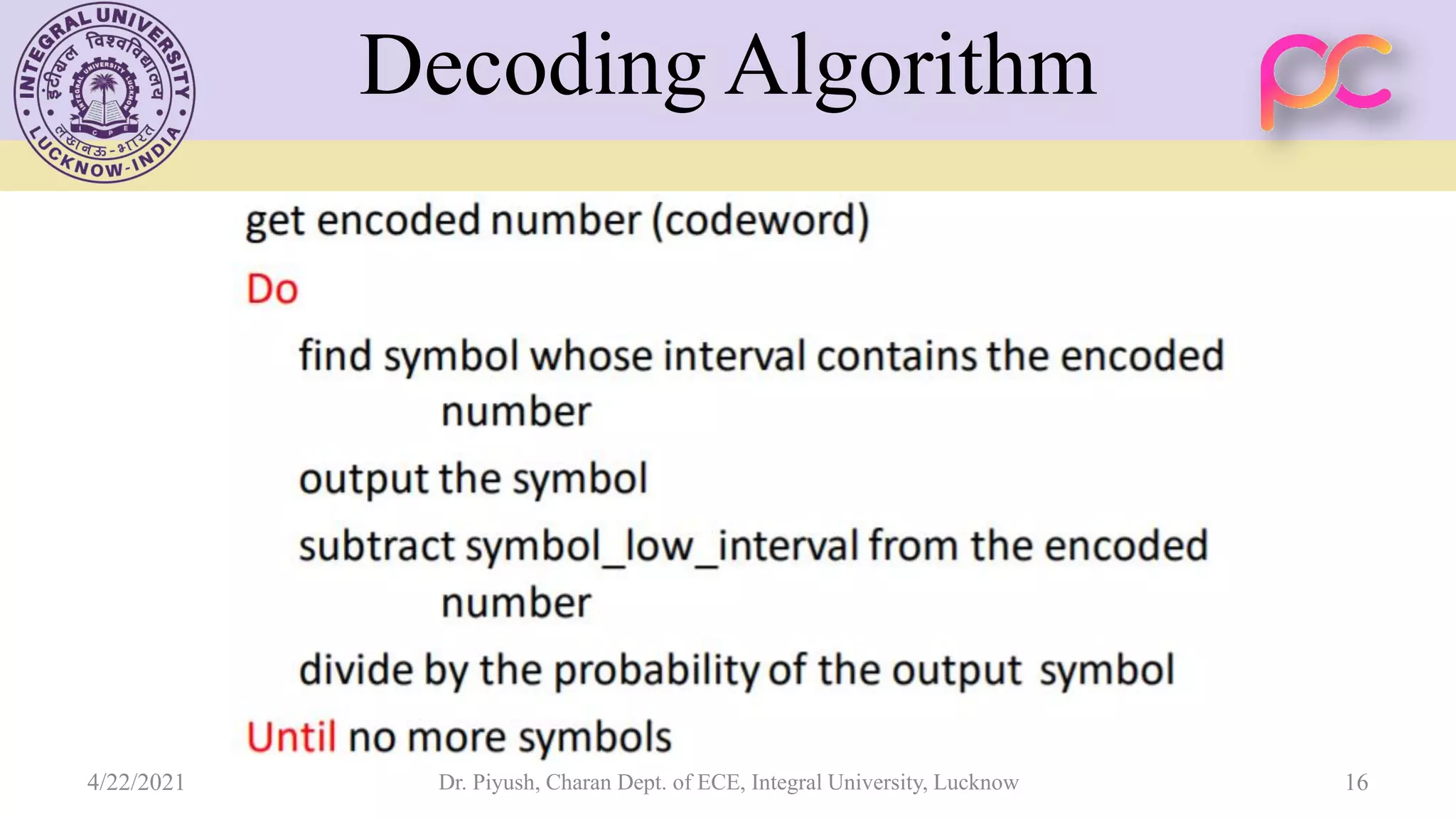

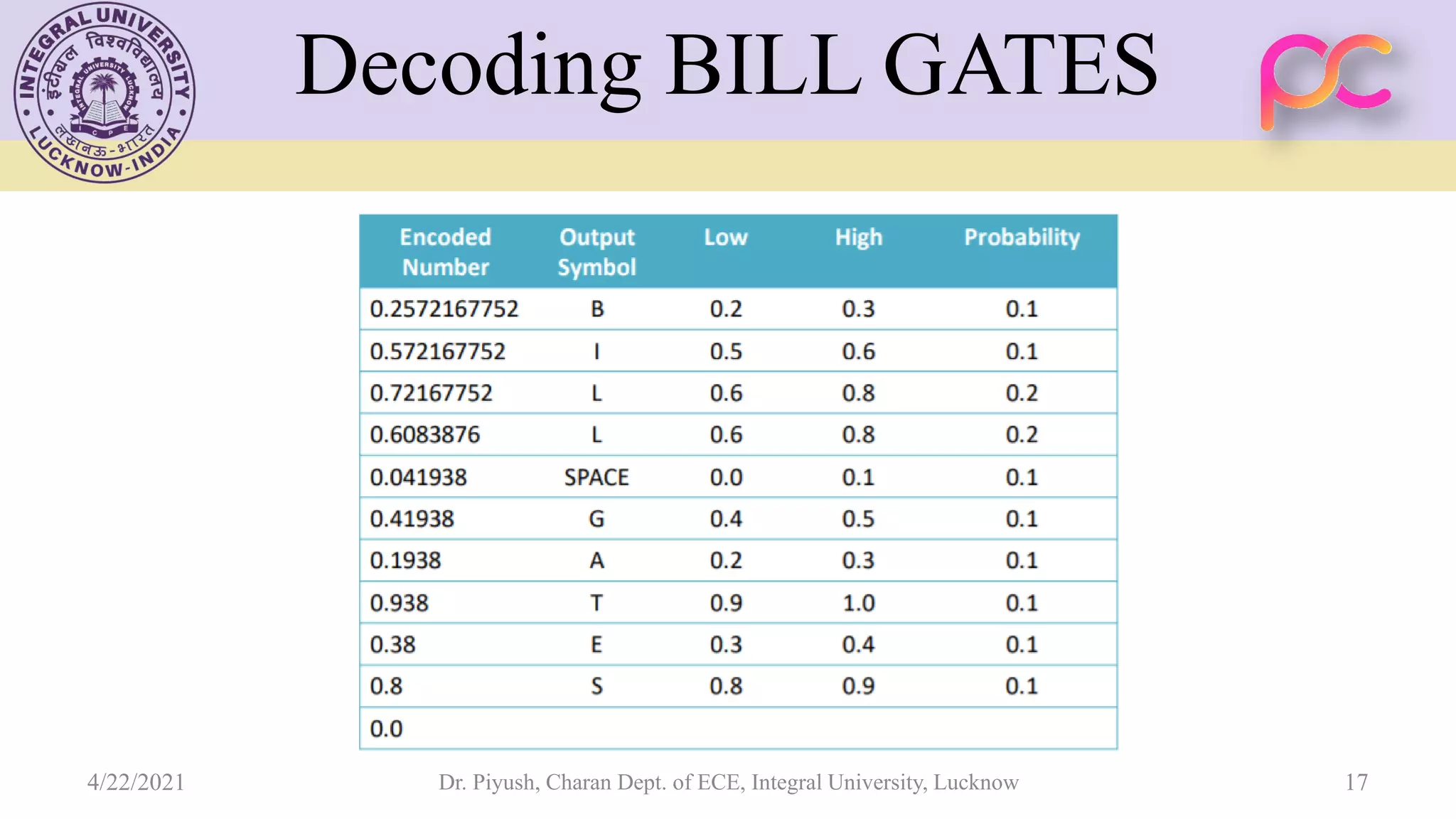

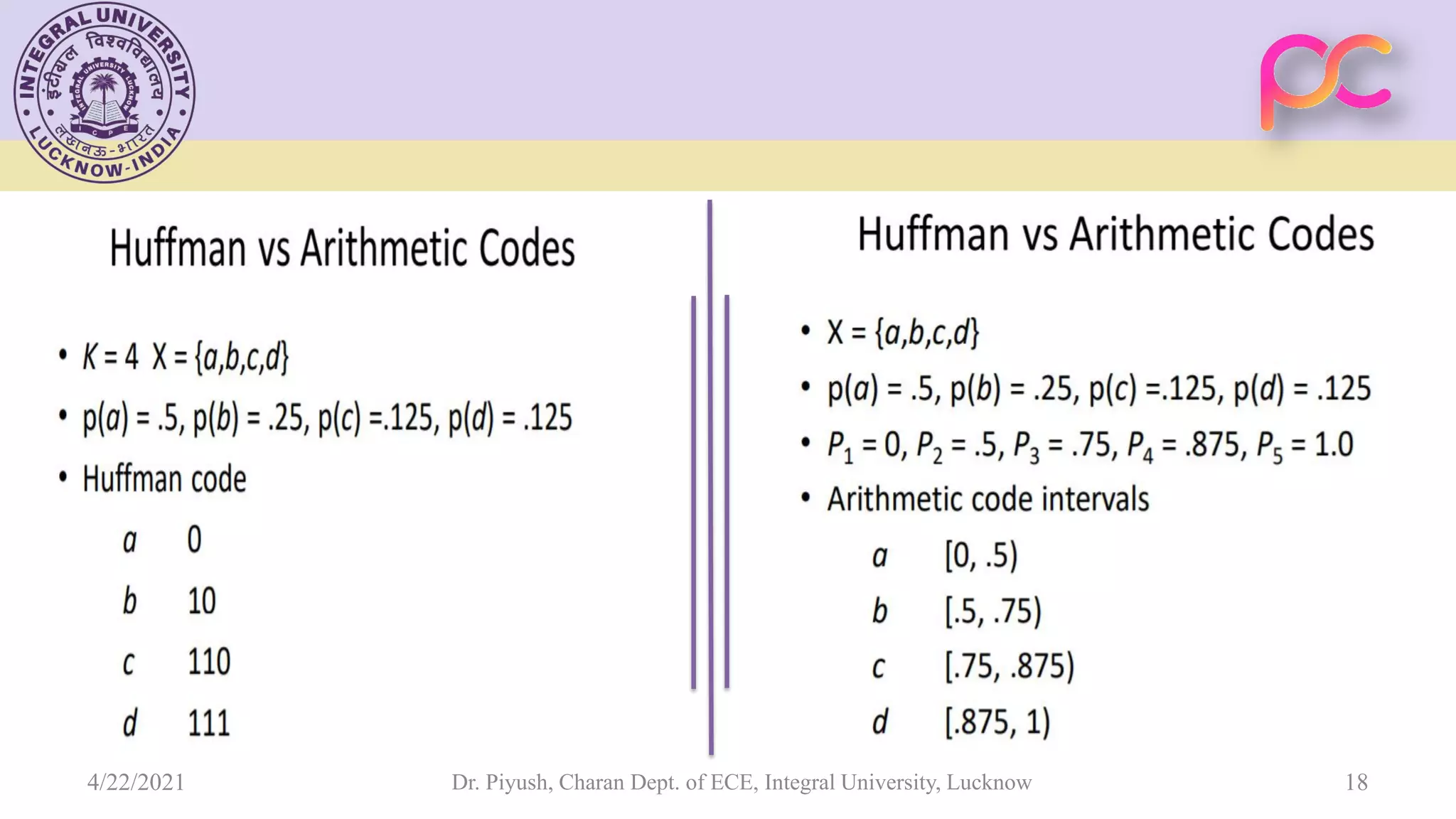

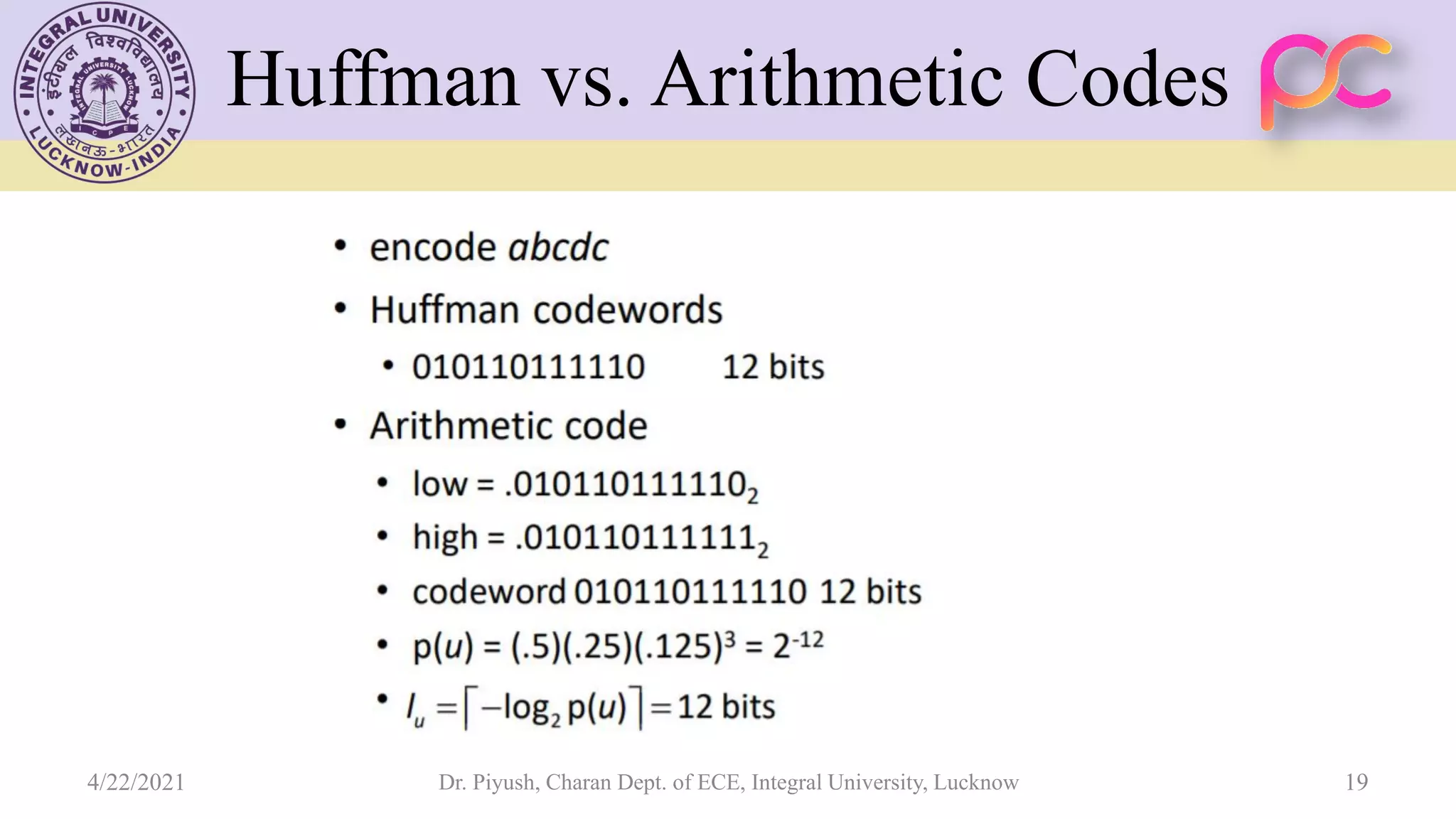

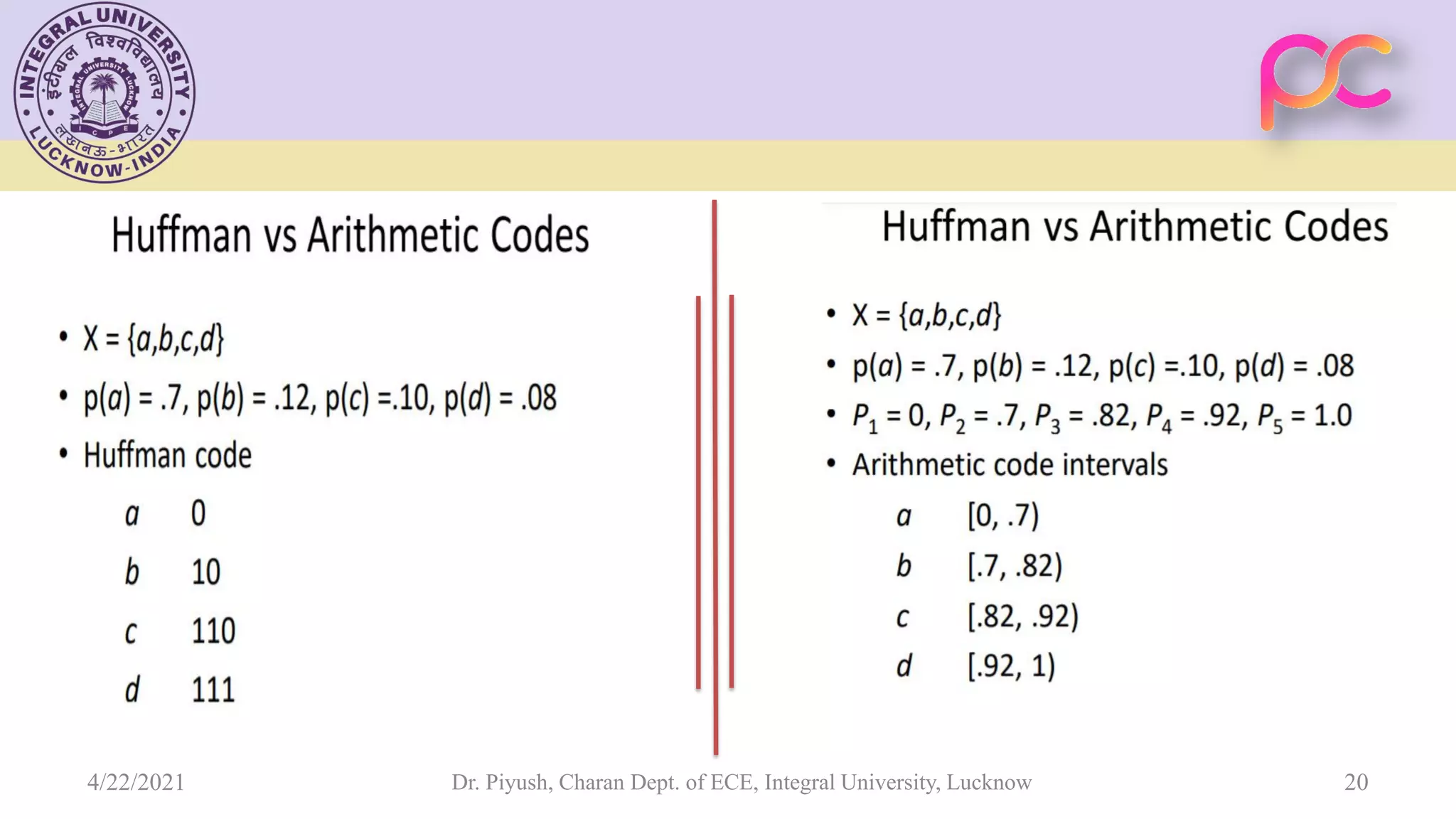

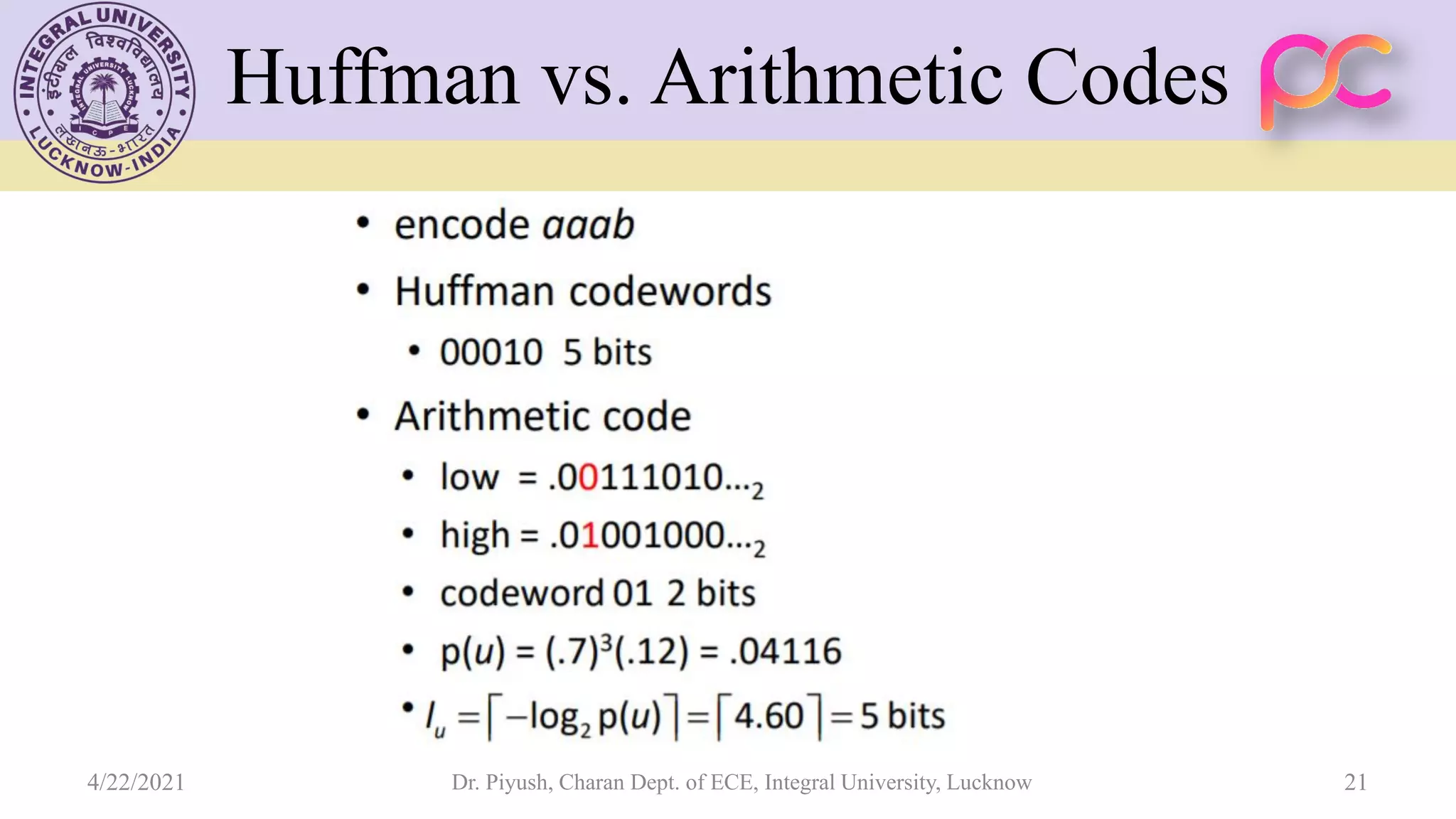

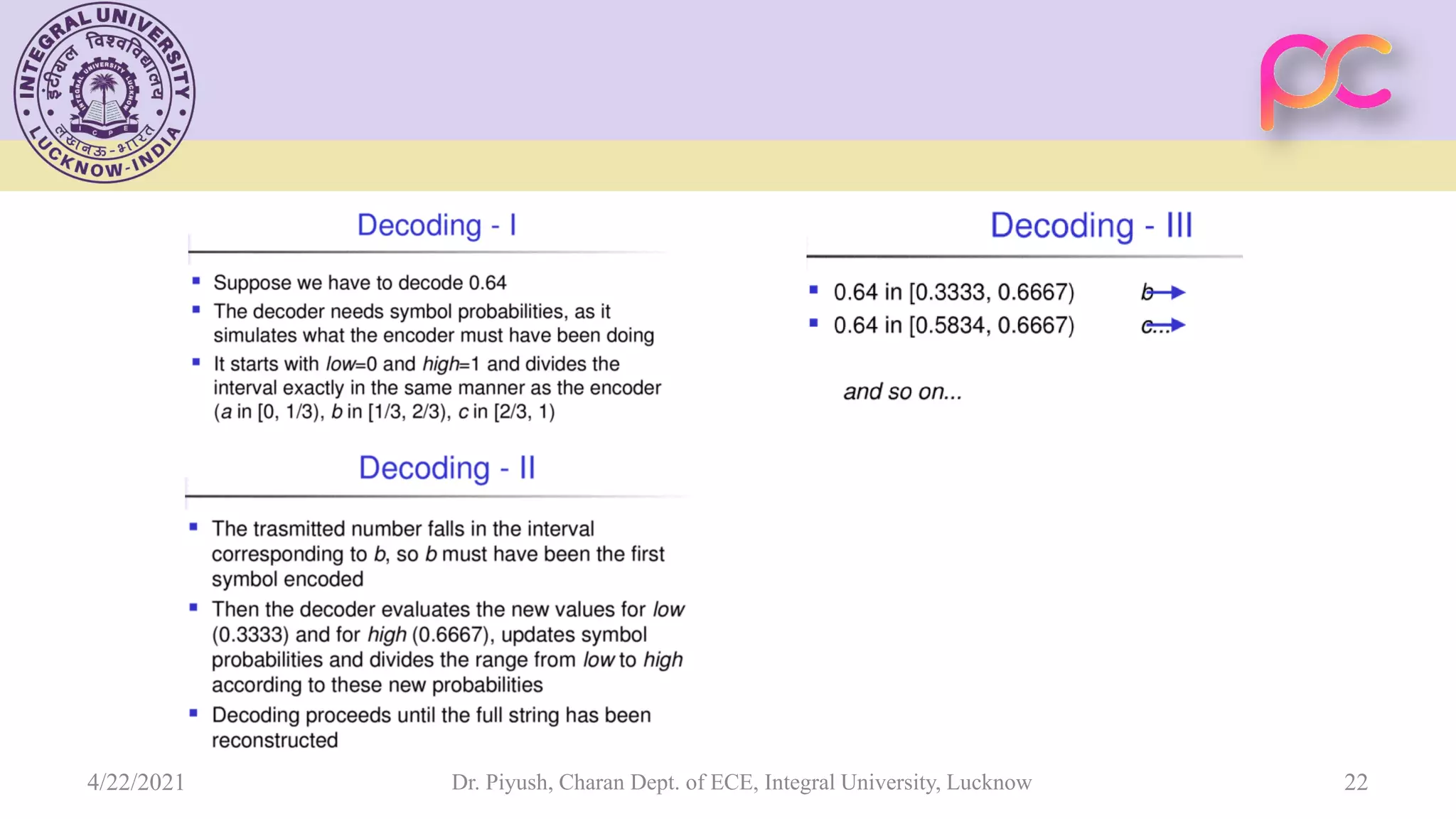

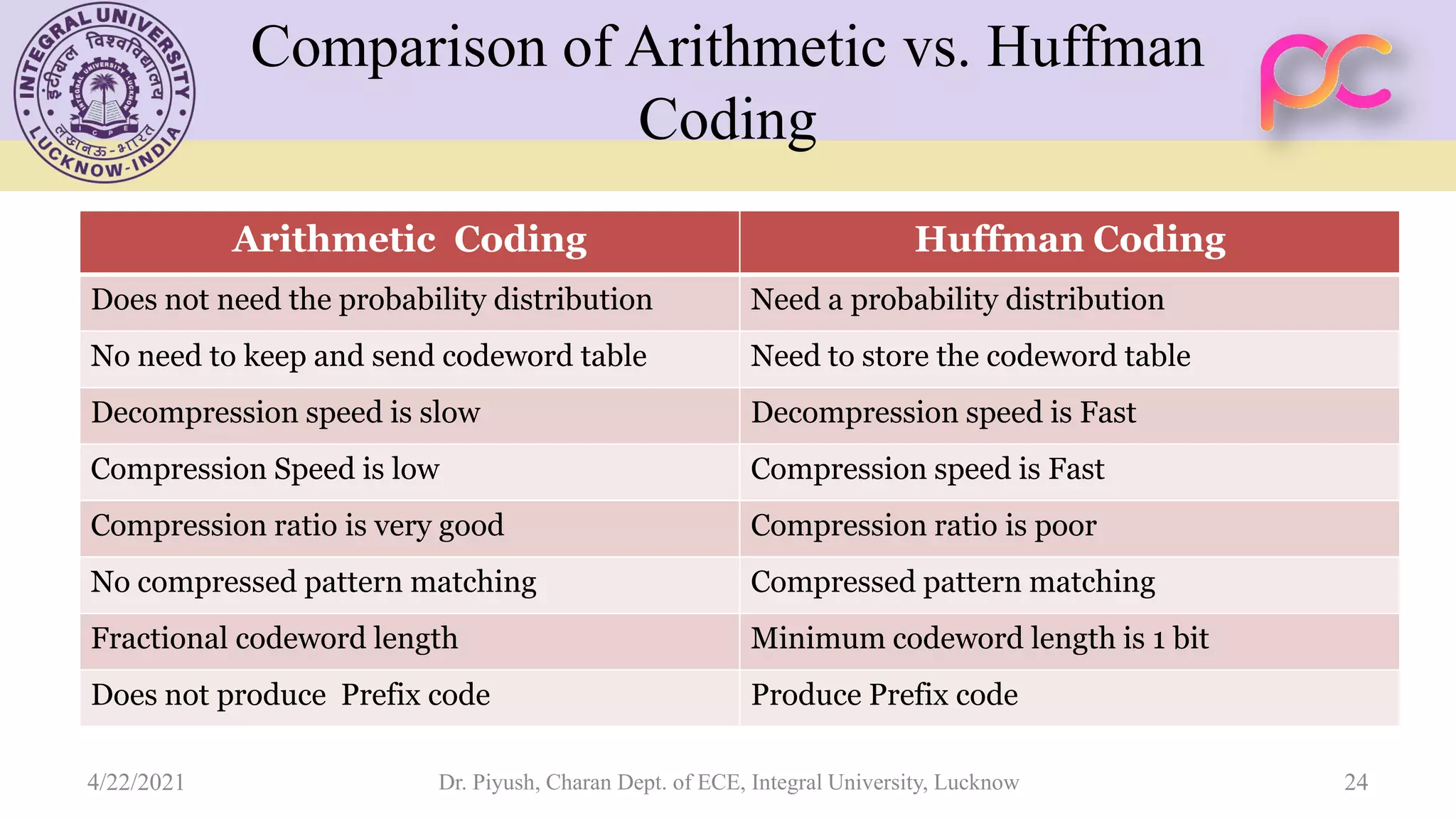

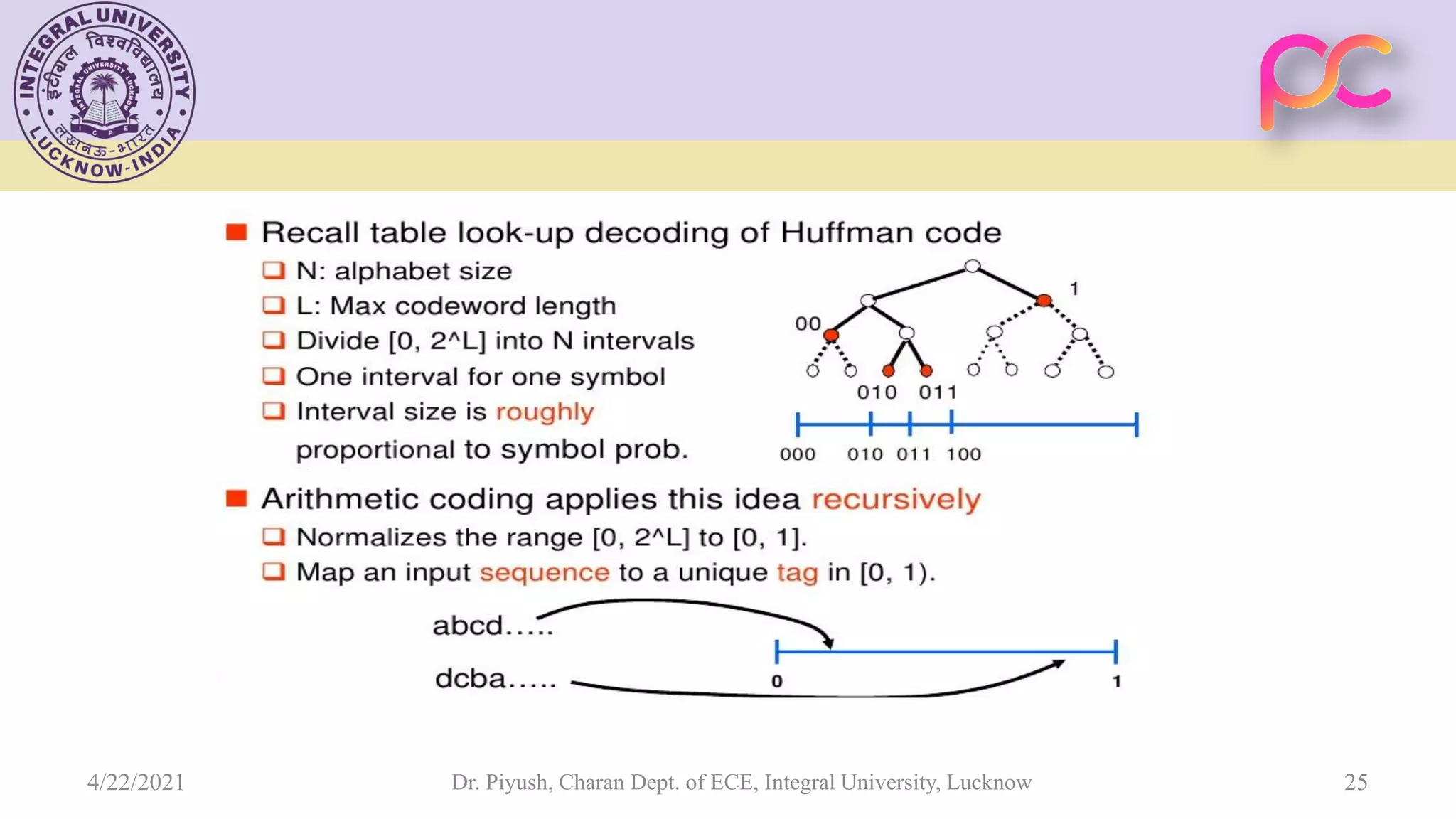

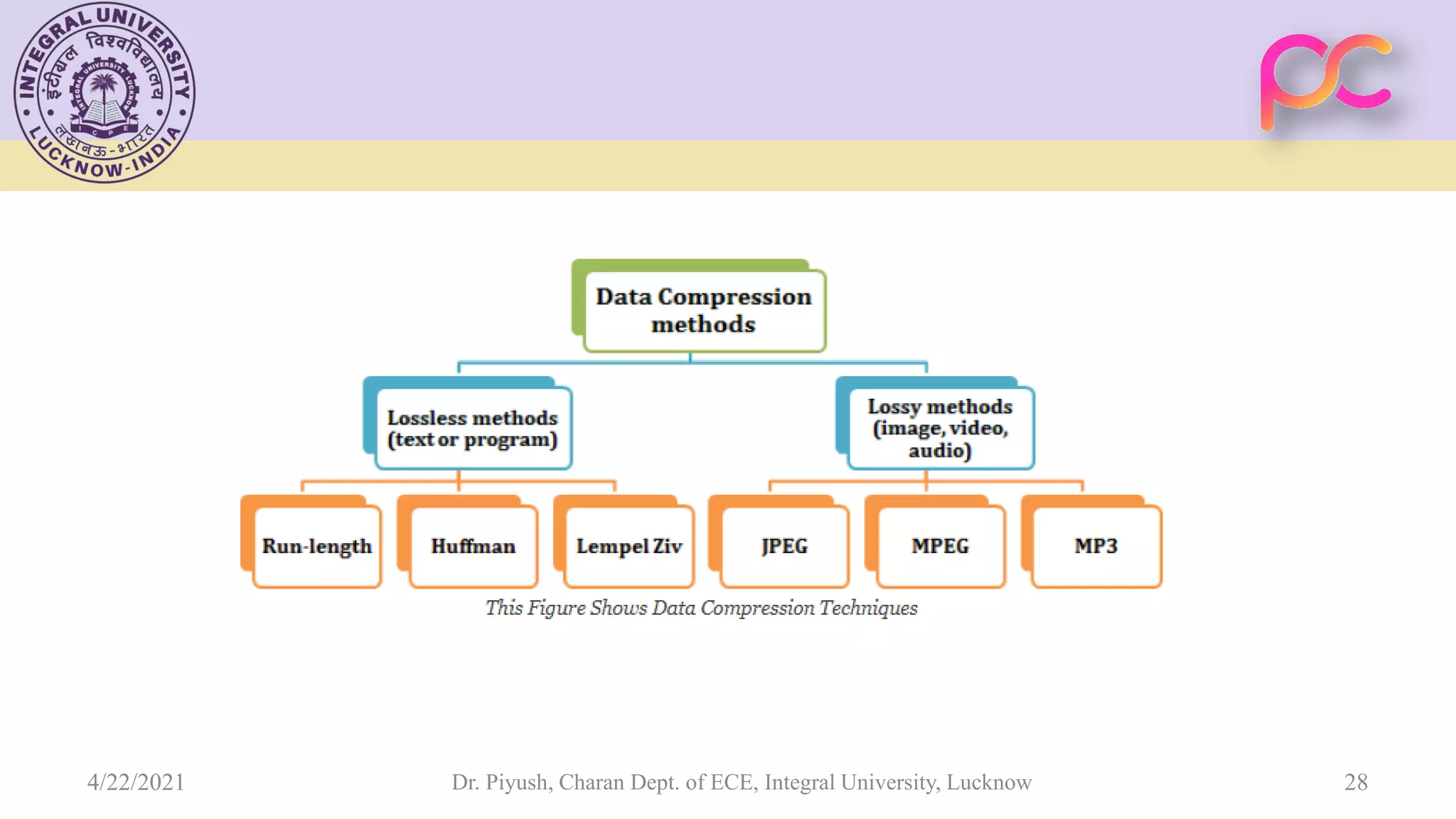

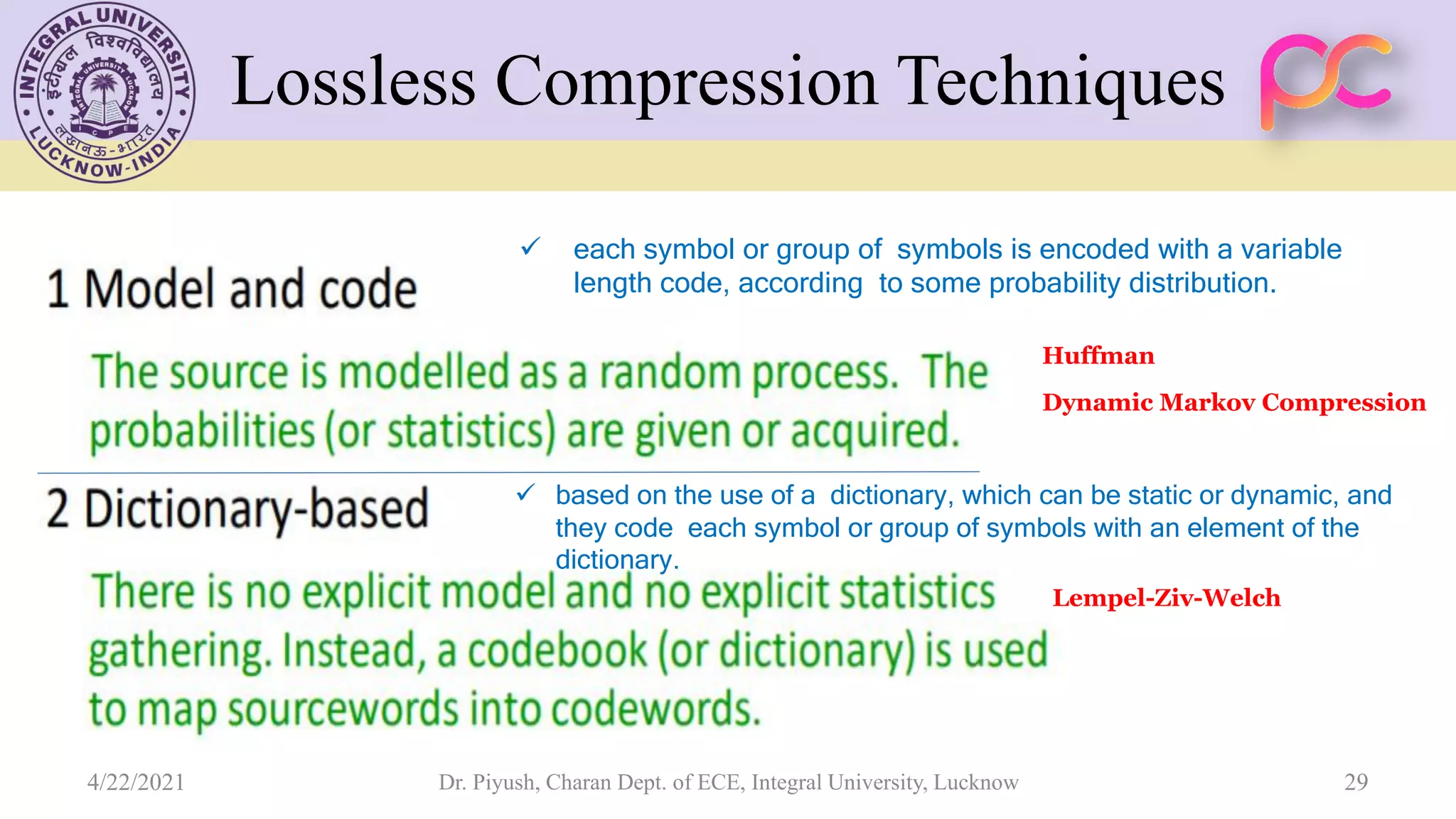

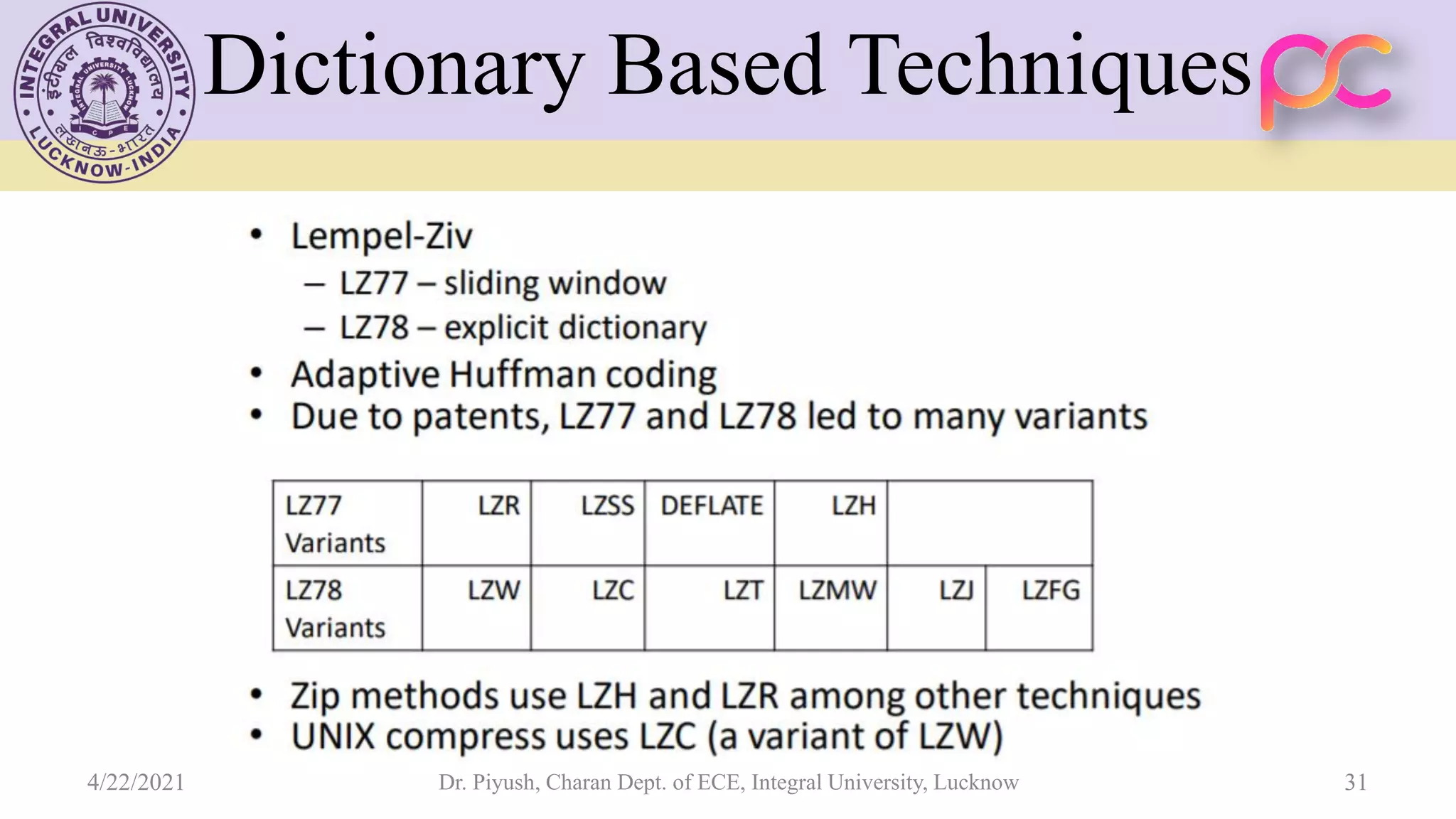

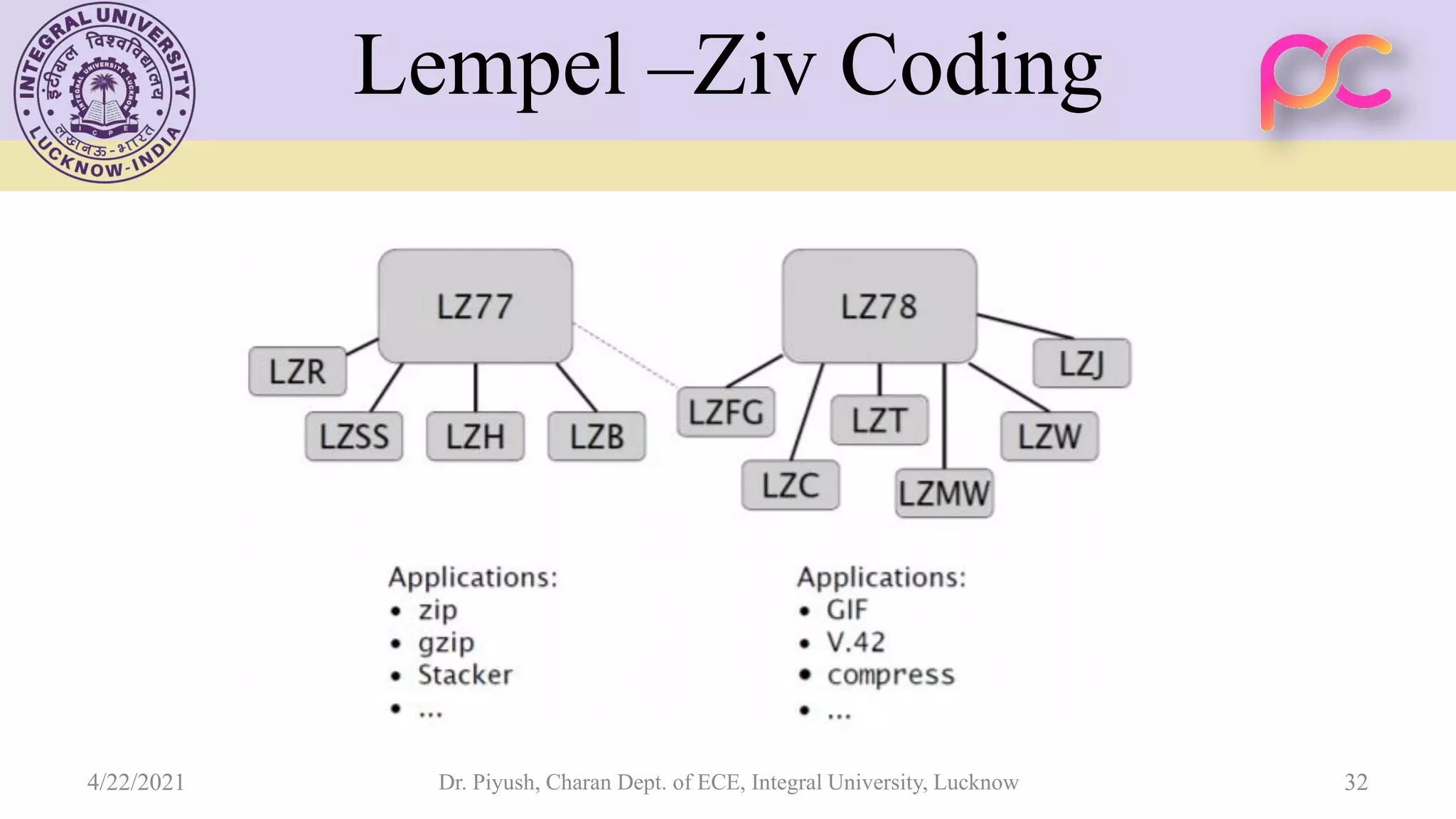

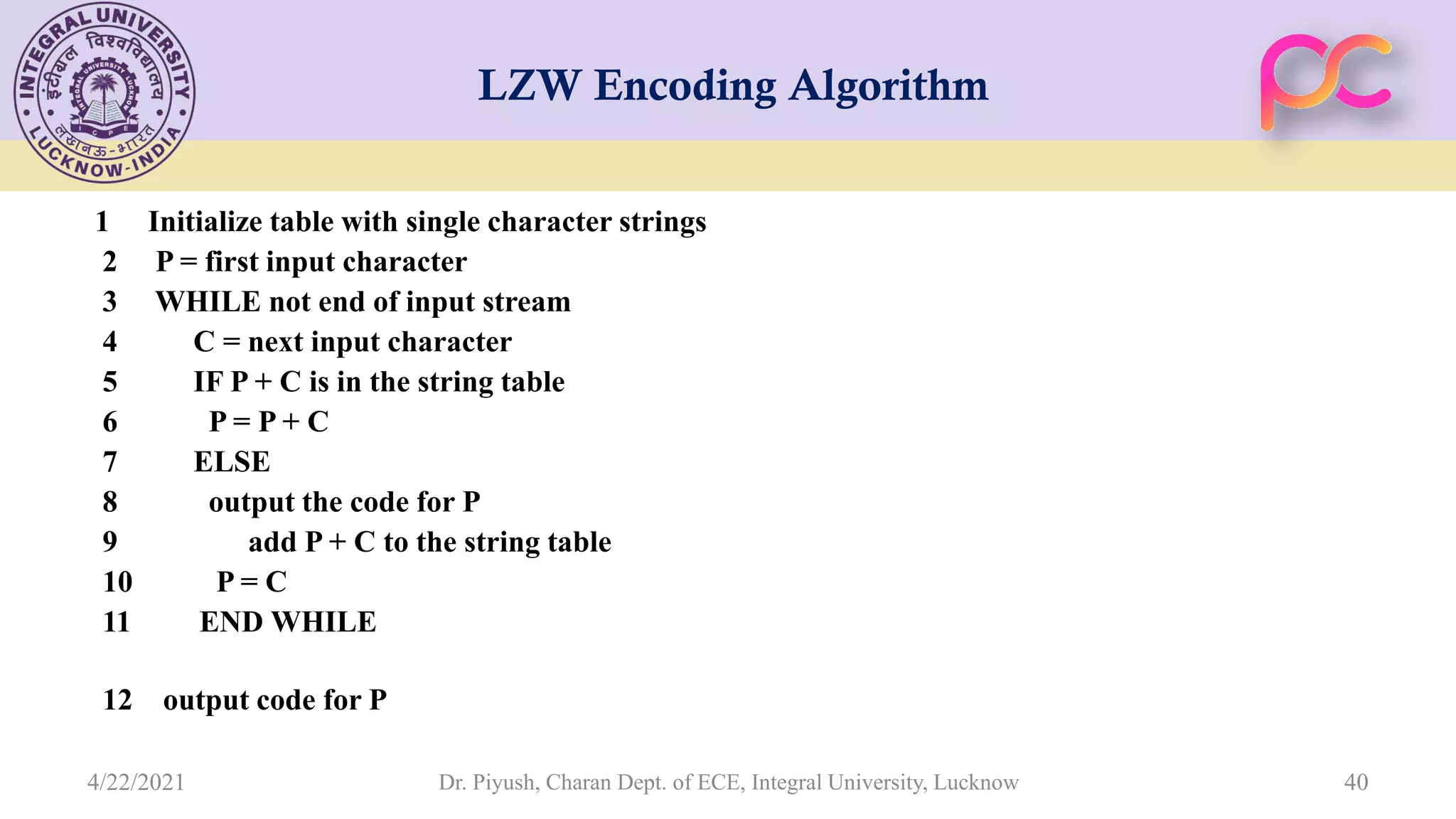

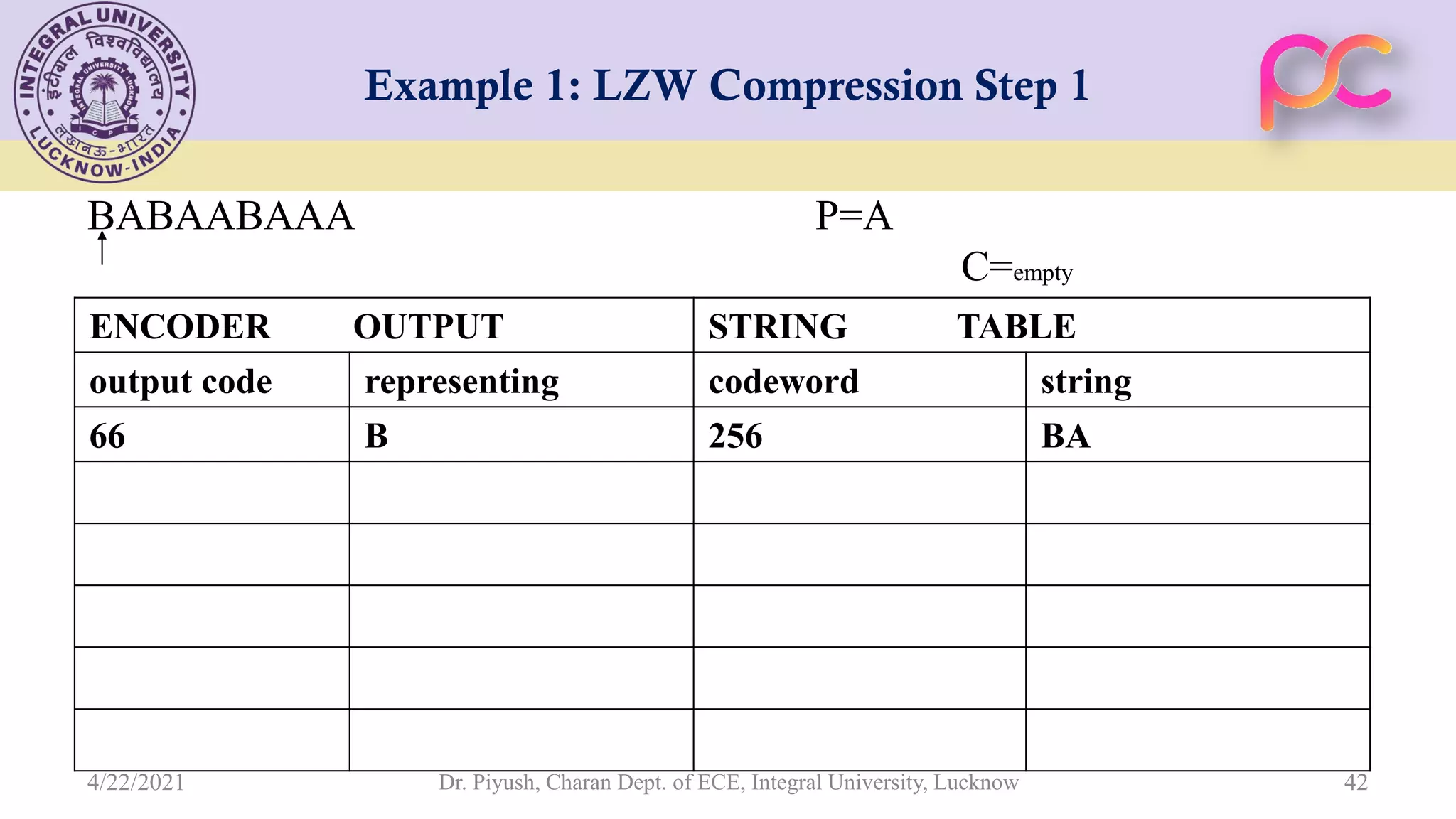

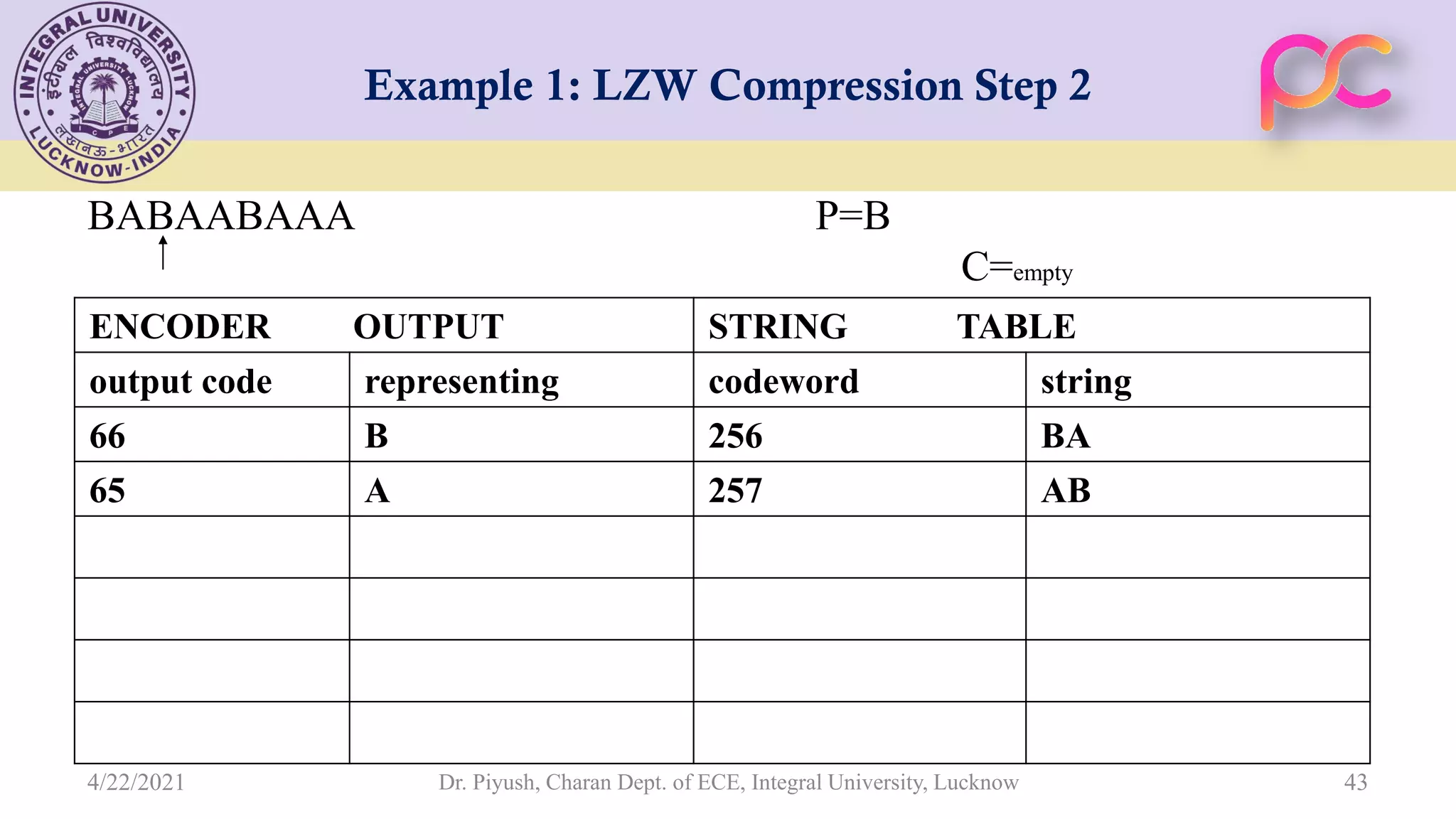

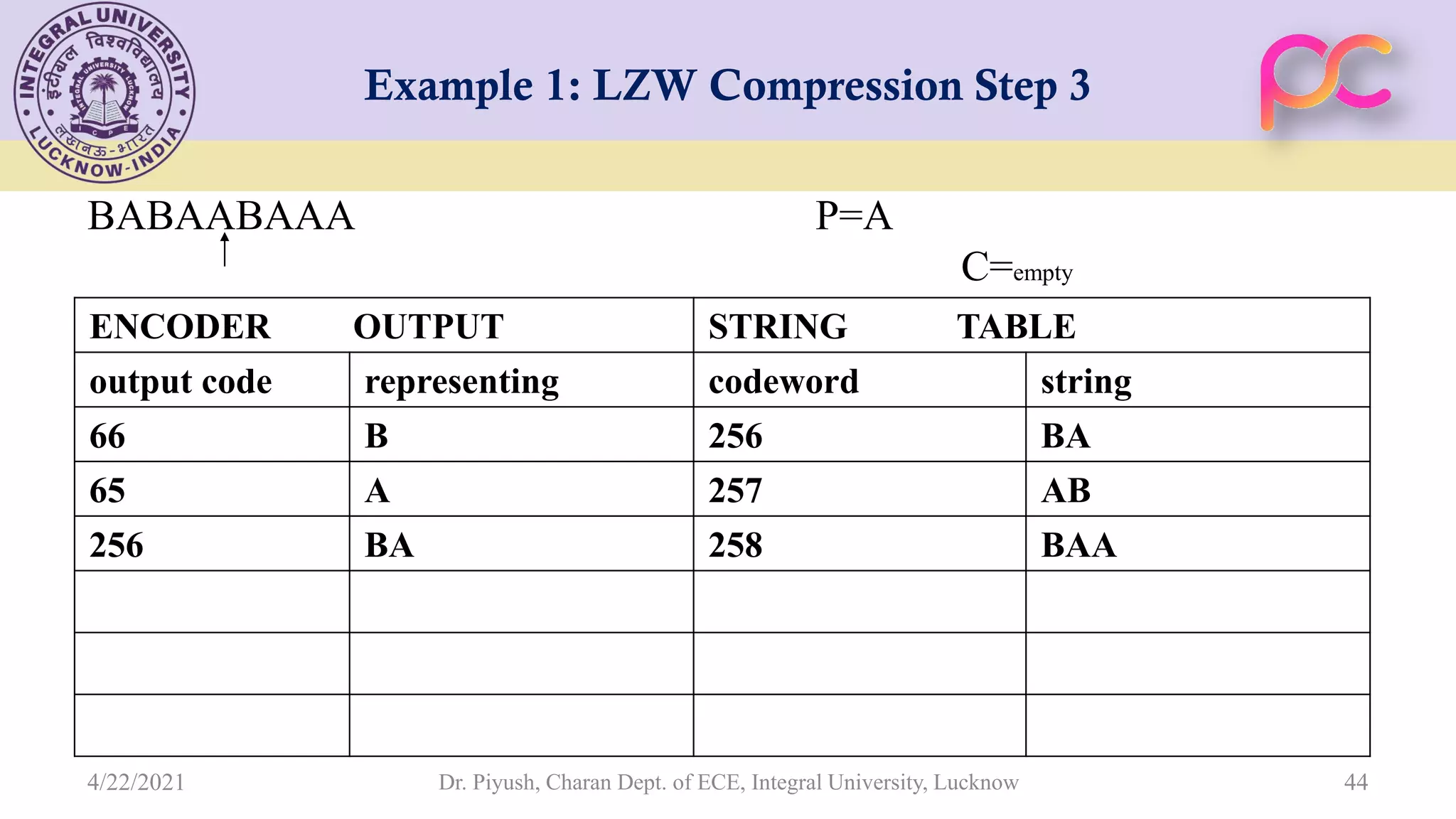

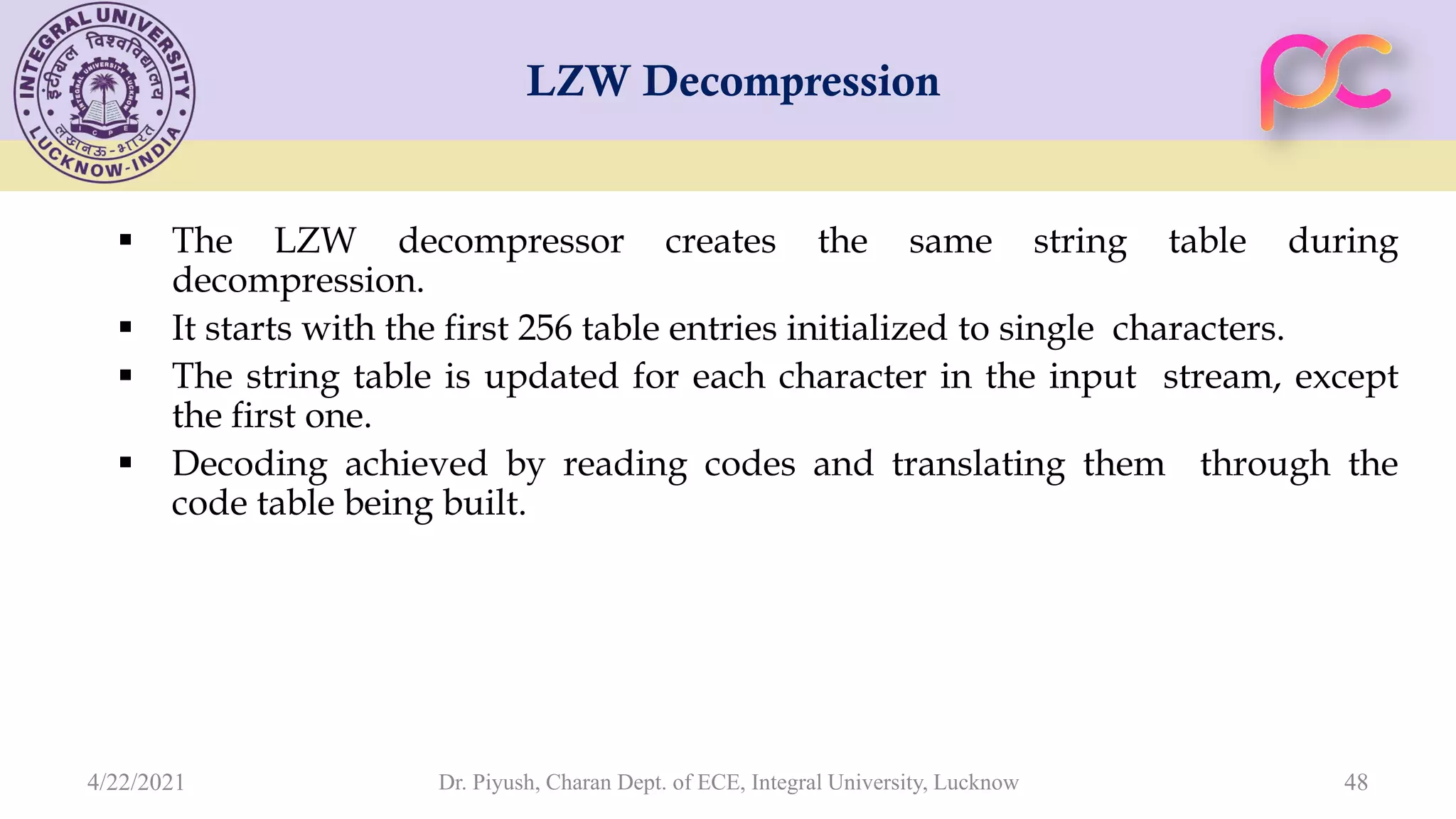

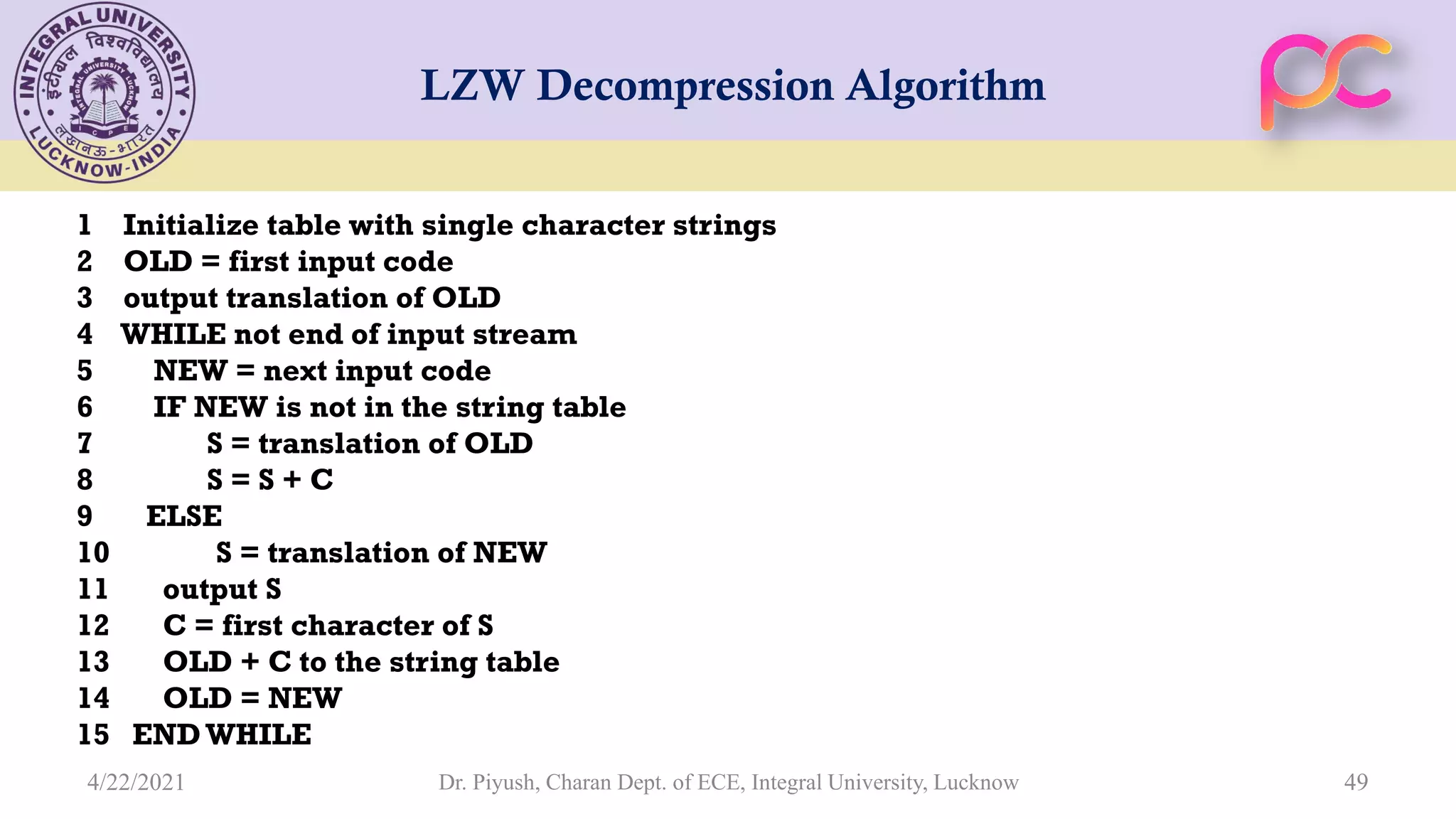

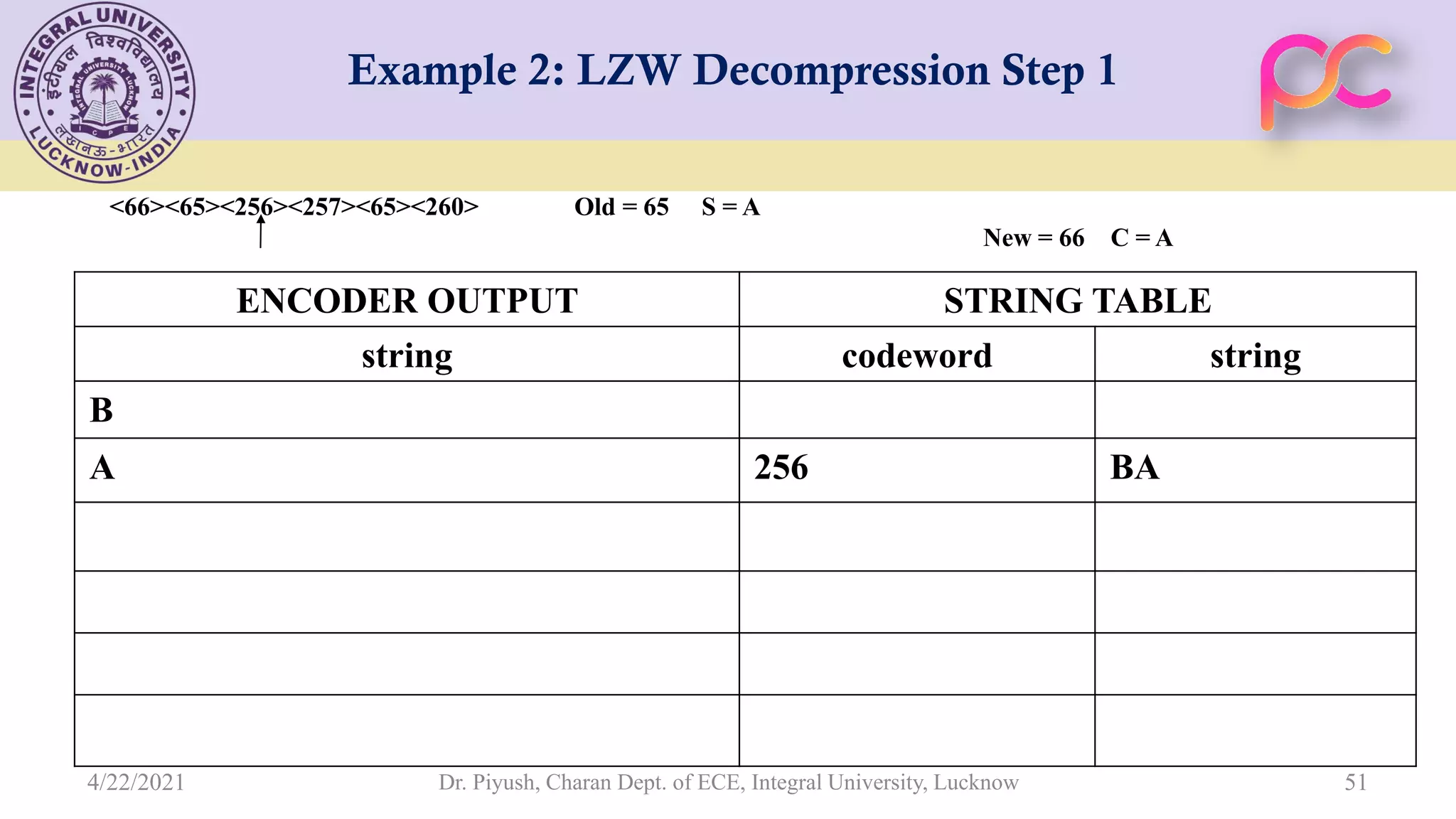

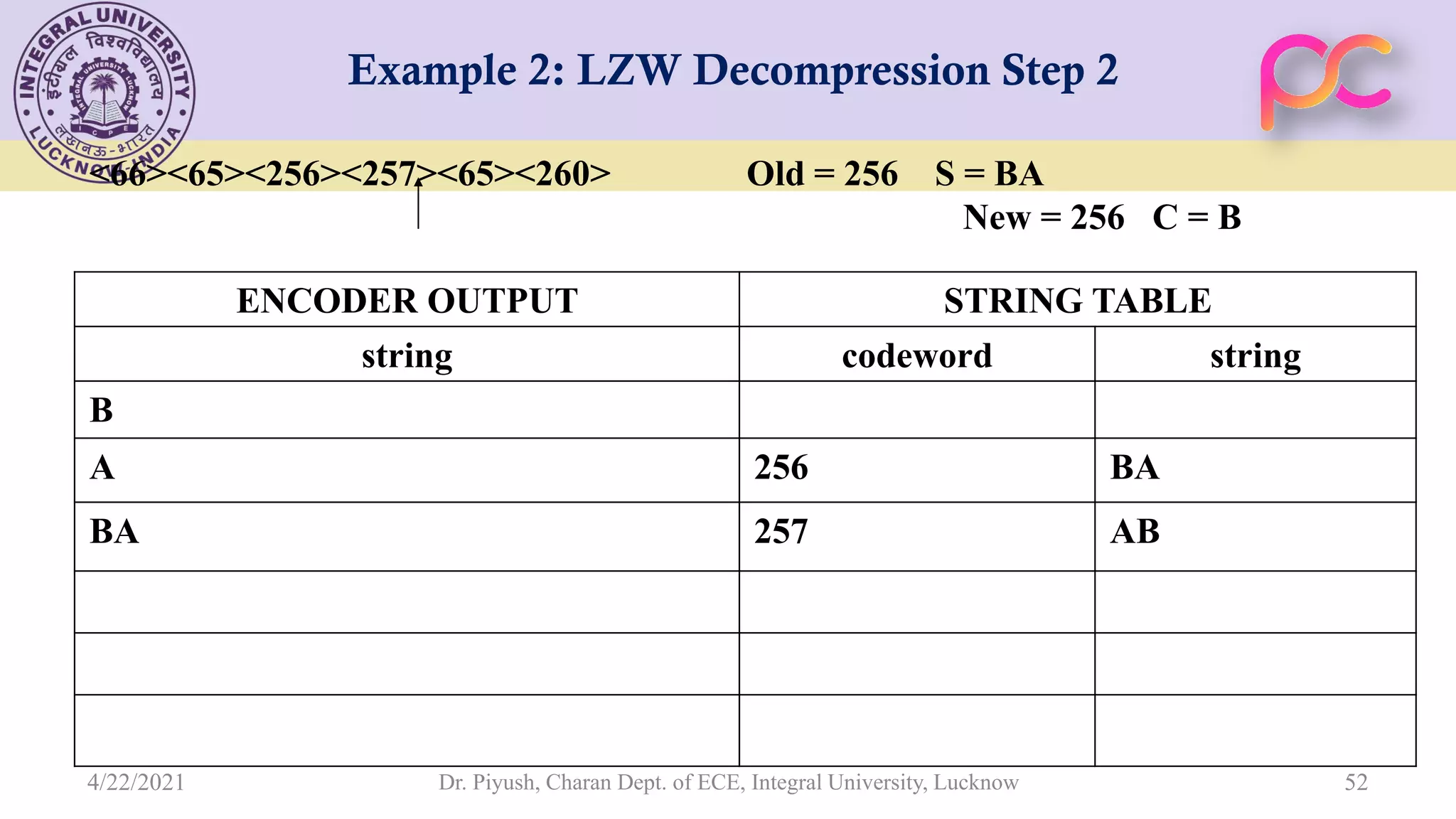

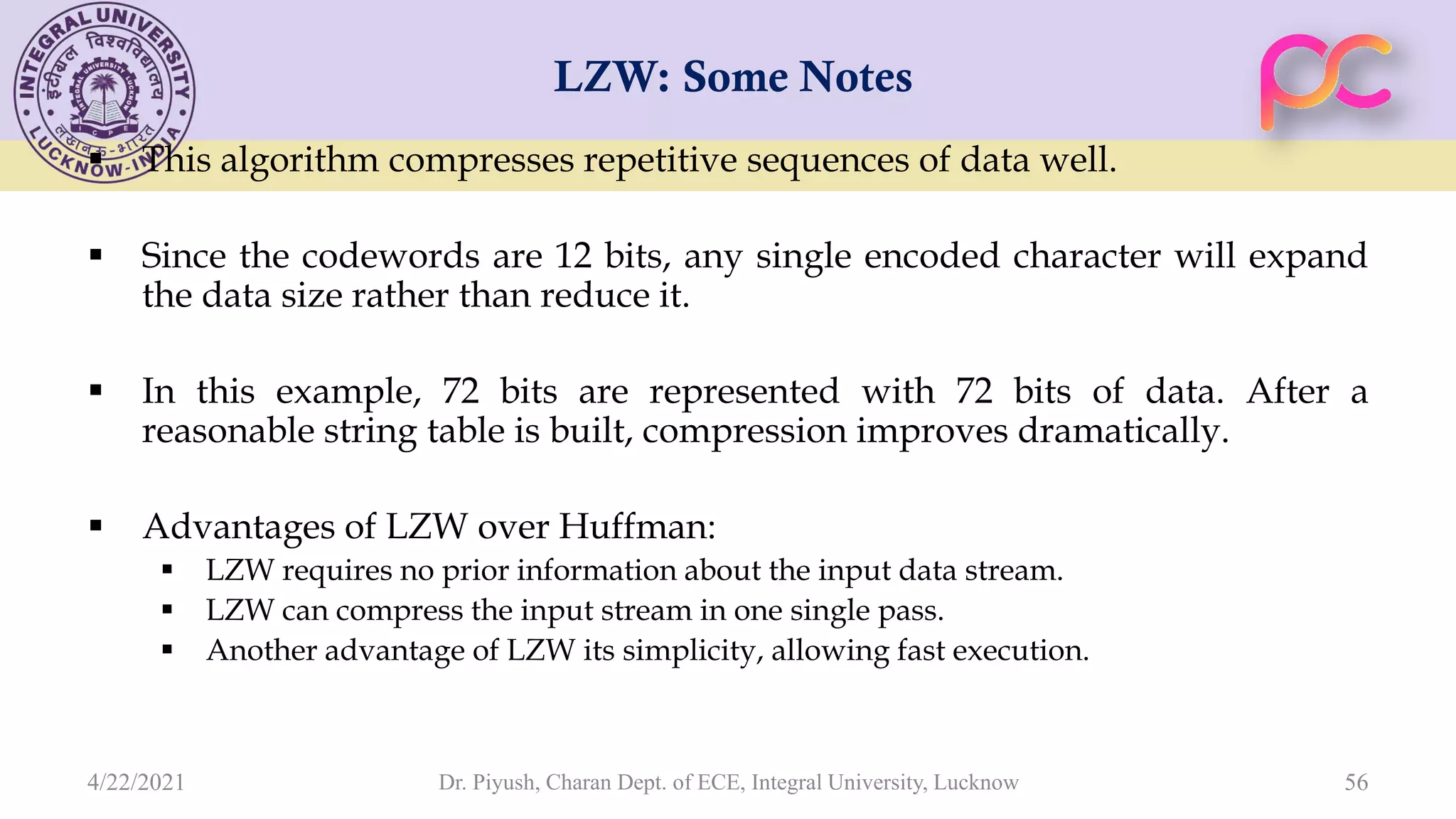

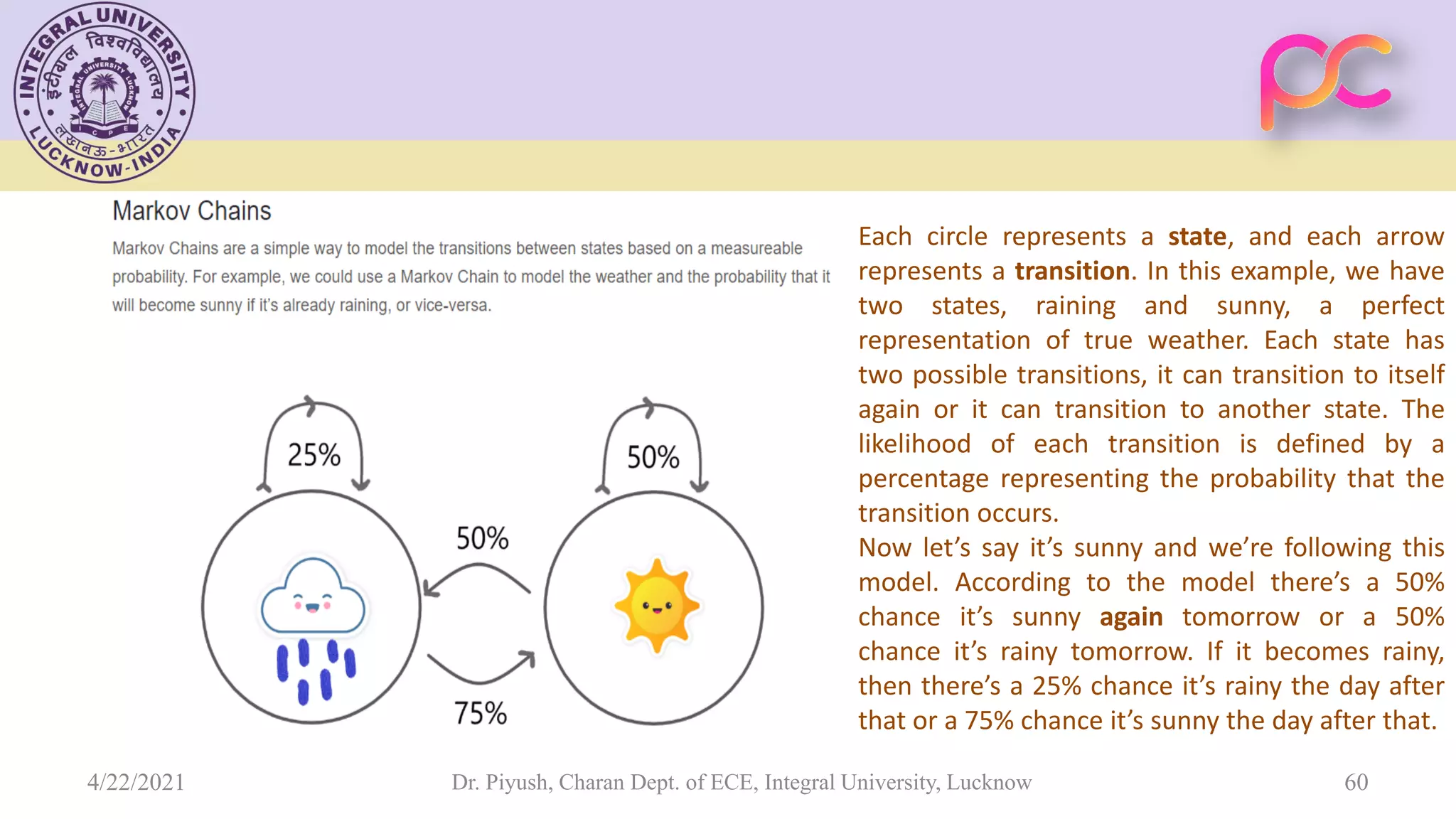

The document provides lecture notes on arithmetic coding for data compression, covering topics such as arithmetic coding encoding and decoding algorithms, comparing arithmetic coding to Huffman coding, dictionary techniques like Lempel-Ziv coding, and applications of lossless compression techniques. Arithmetic coding assigns a unique identifier or tag to a sequence and then gives that tag a unique binary code, while dictionary techniques code each symbol or group of symbols with an element from a static or dynamic dictionary.

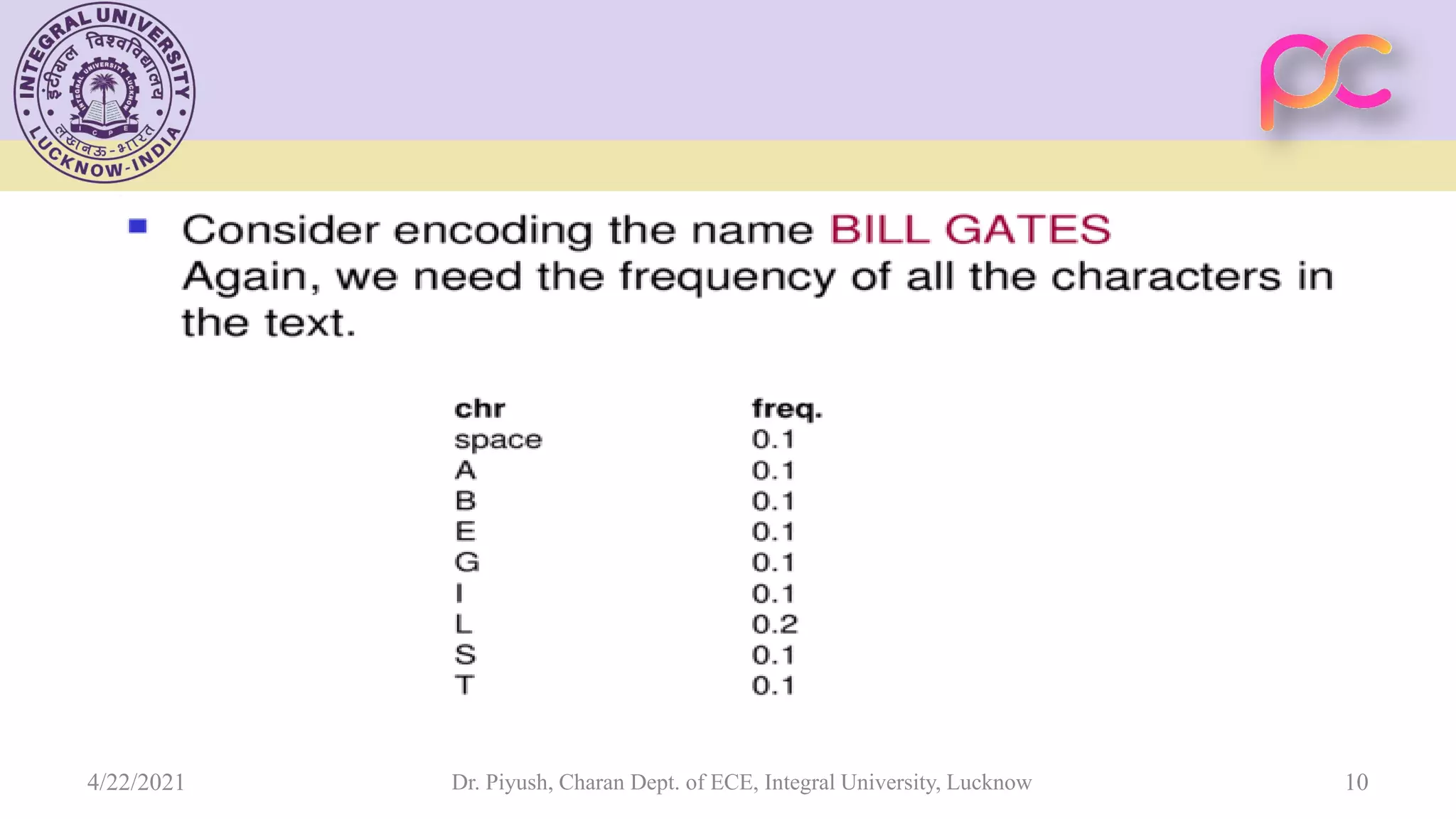

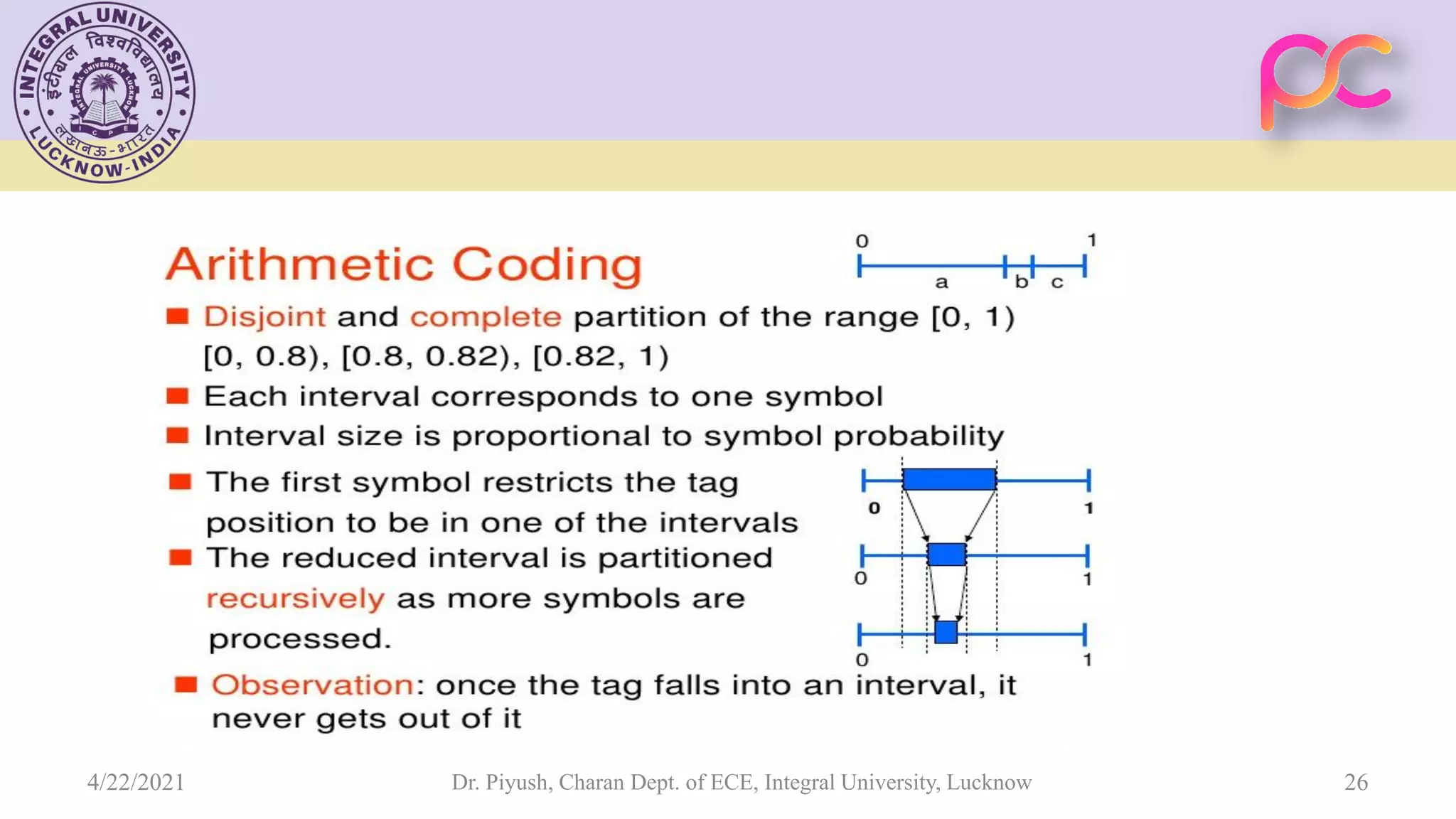

![ Assume we know the probabilities of each symbol of the data source, we can allocate to each symbol an interval with width proportional to its probability, and each of the intervals does not overlap with others. This can be done if we use the cumulative probabilities as the two ends of each interval. Therefore, the two ends of each symbol x amount to Q[x-1] and Q[x]. Symbol x is said to own the range [Q[x-1], Q[x]). Dr. Piyush, Charan Dept. of ECE, Integral University, Lucknow 6 4/22/2021](https://image.slidesharecdn.com/unit3-210422102054/75/Unit-3-Arithmetic-Coding-6-2048.jpg)