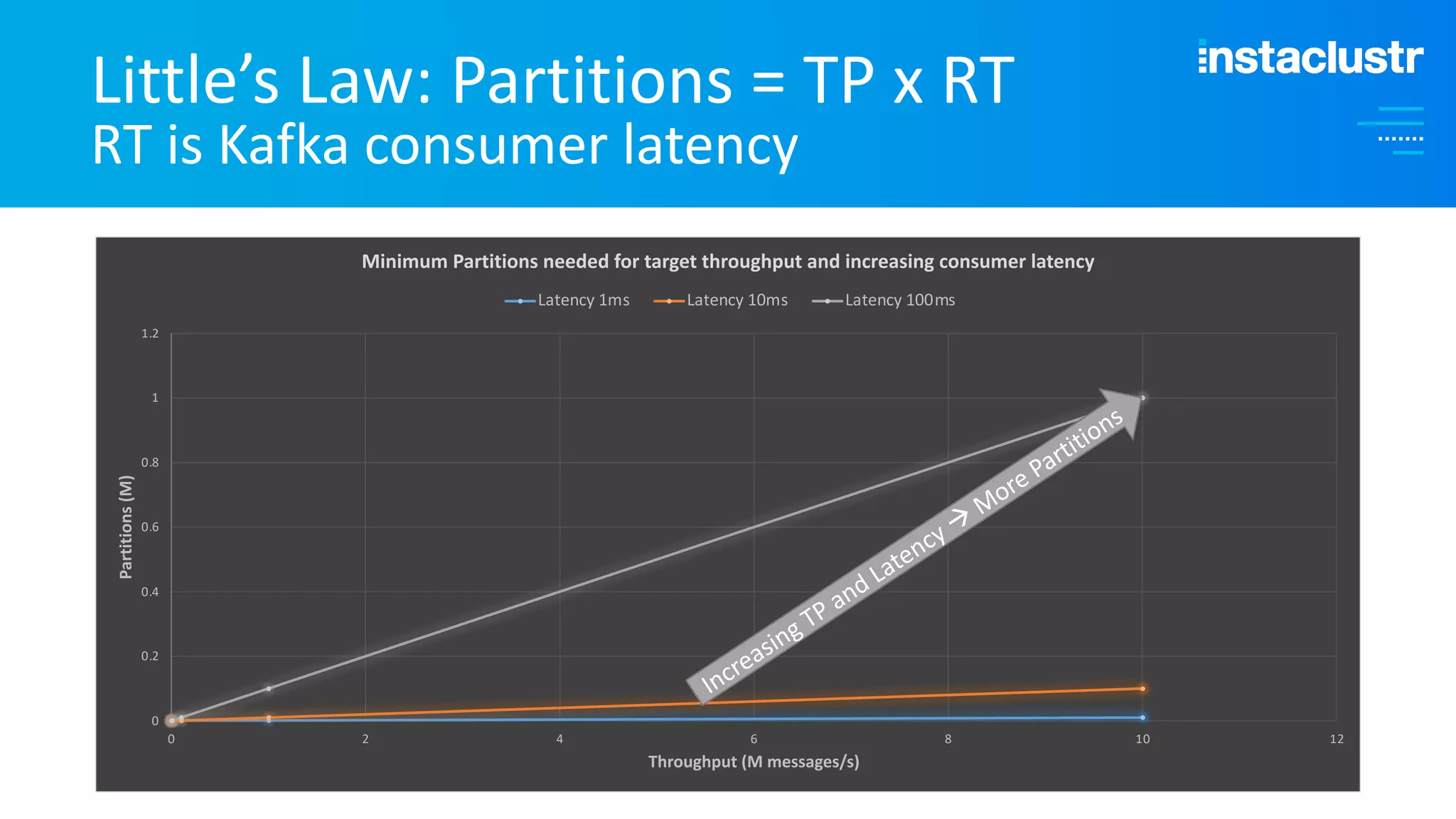

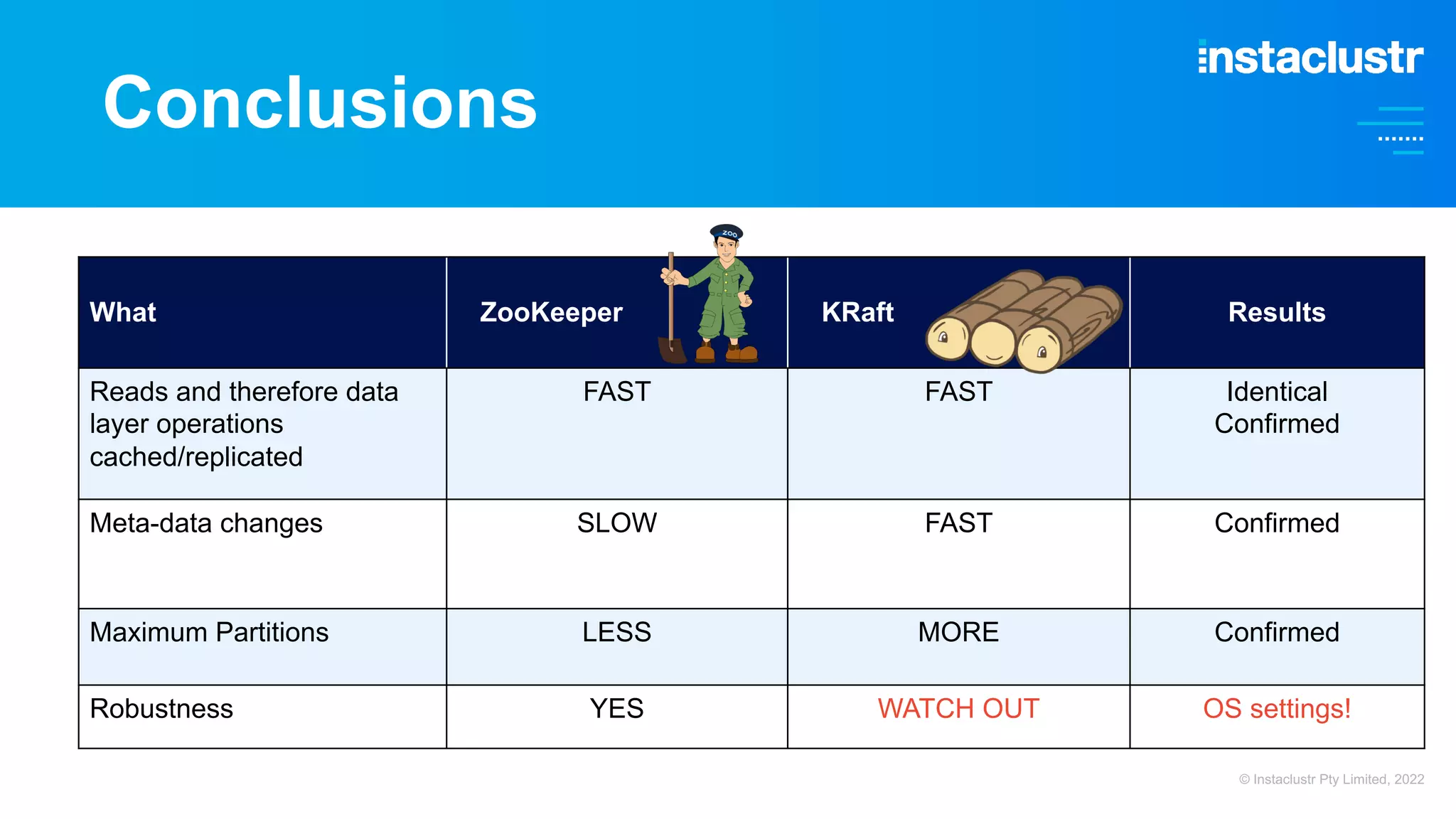

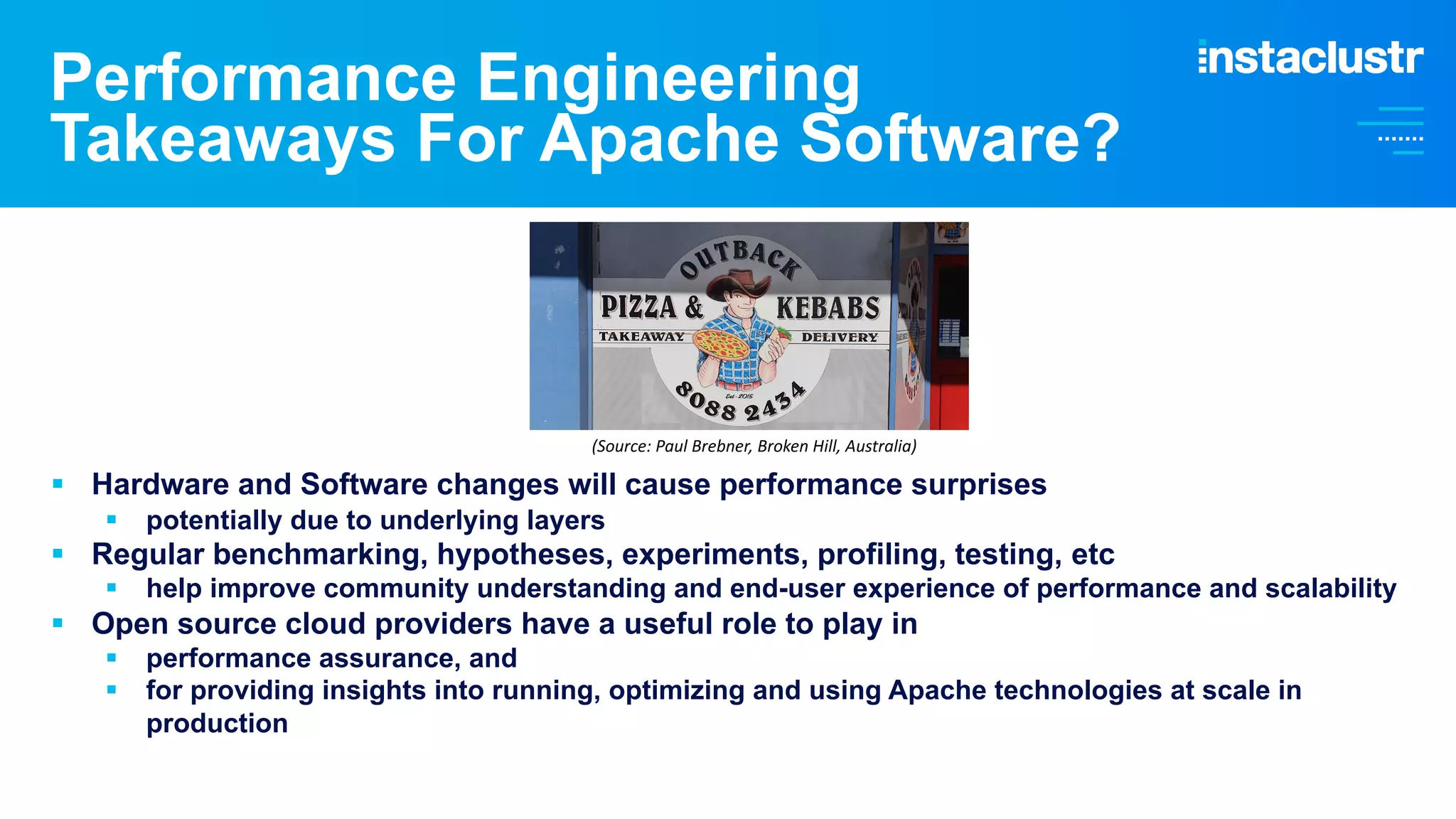

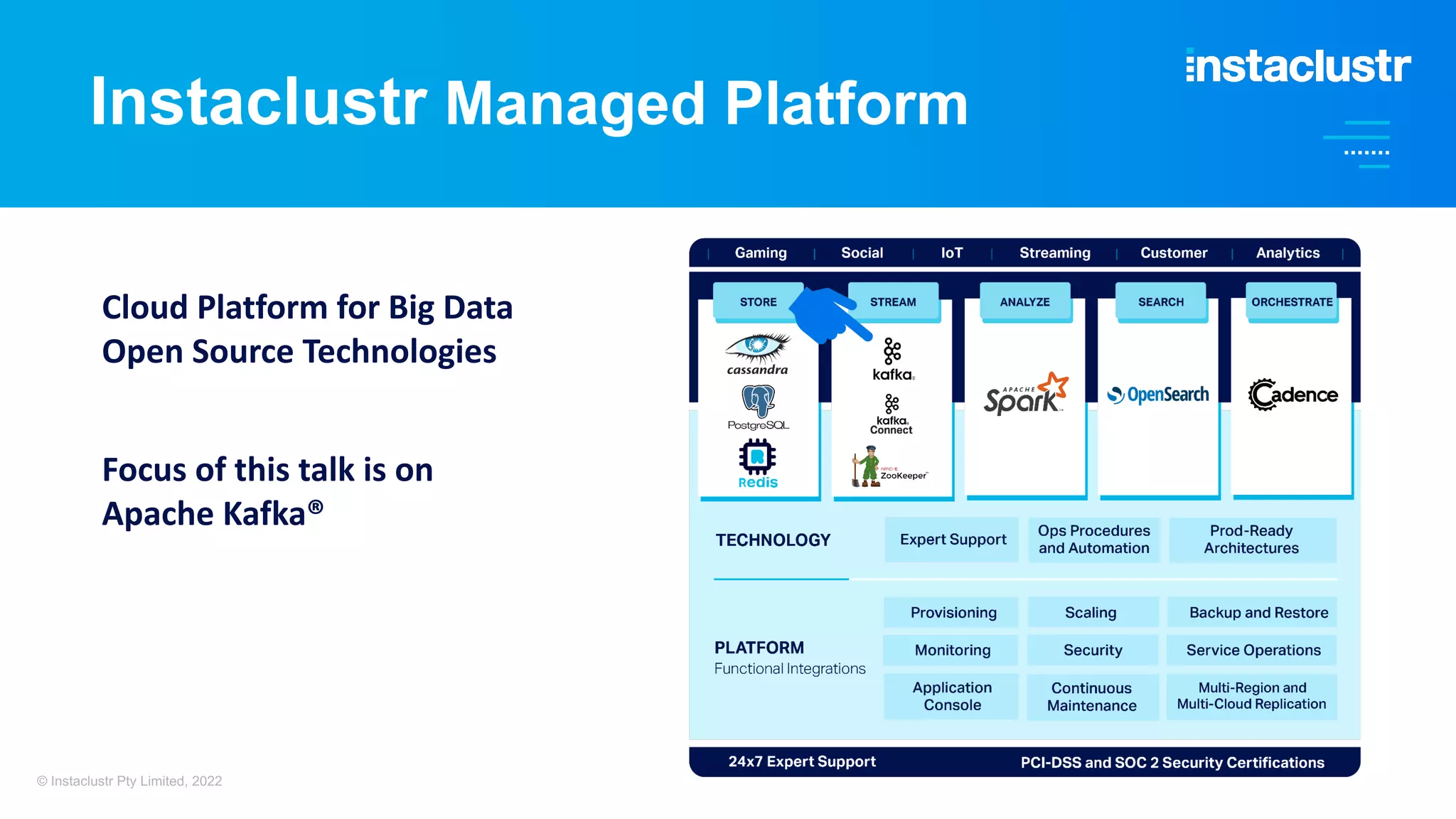

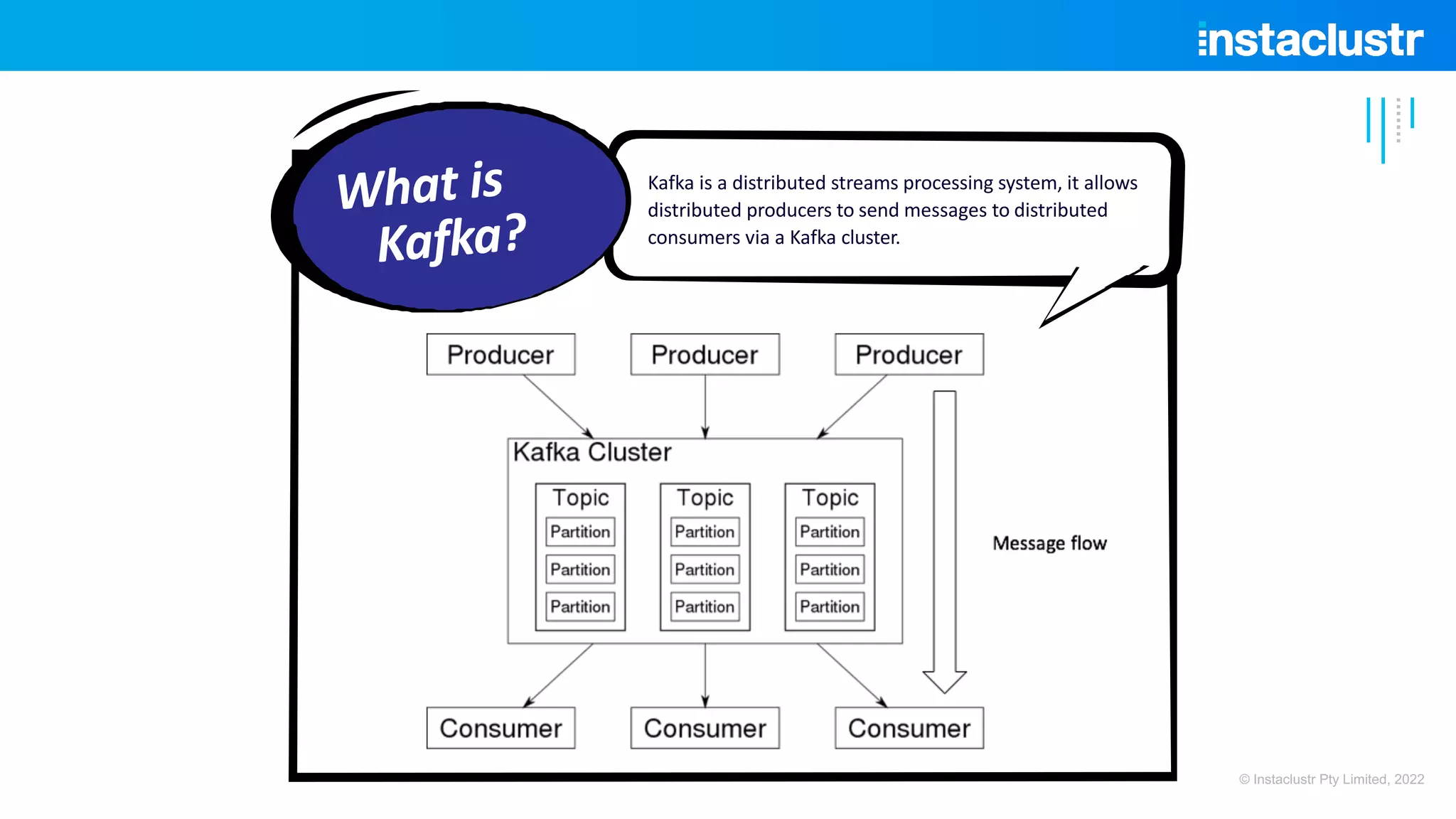

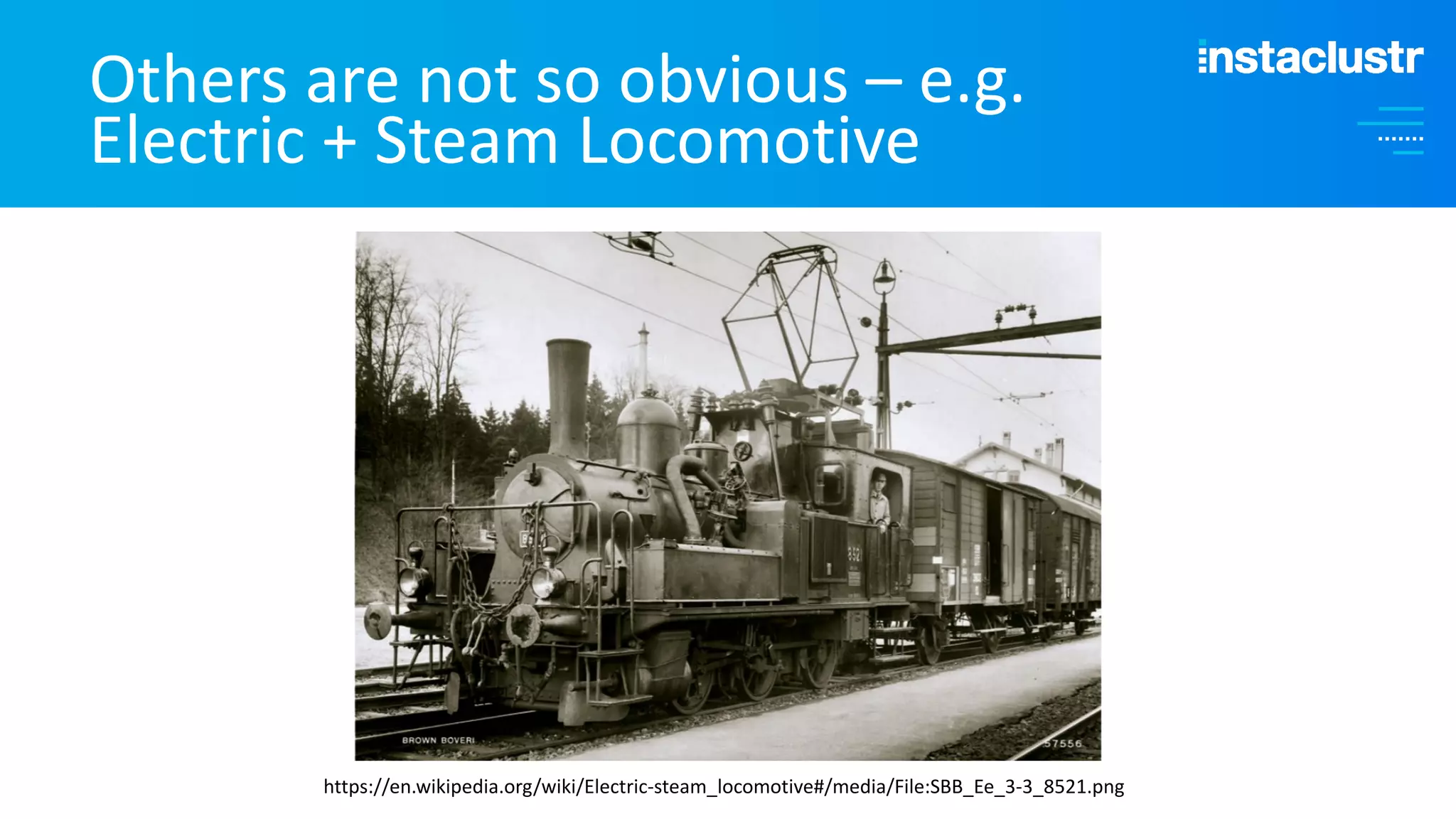

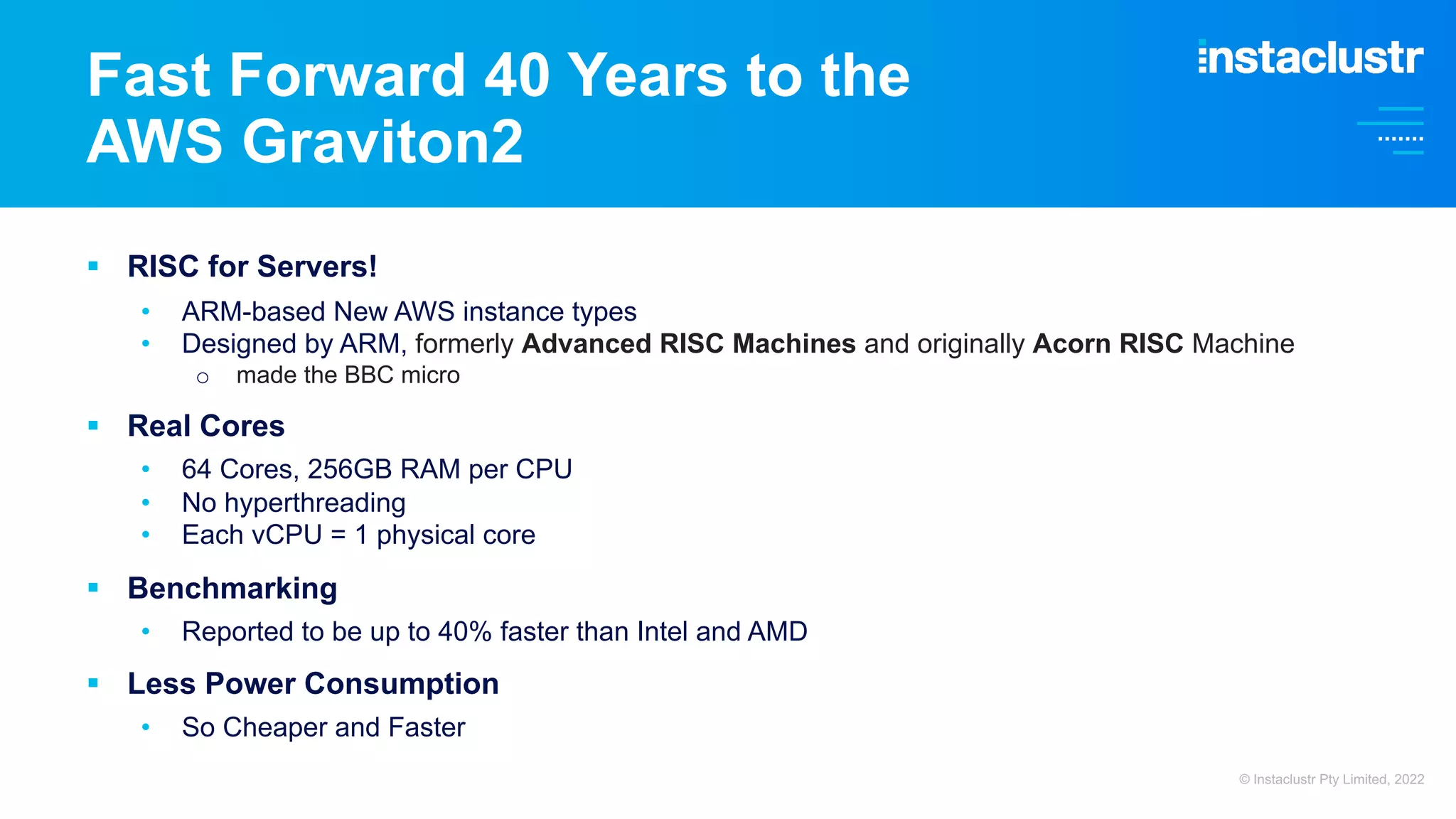

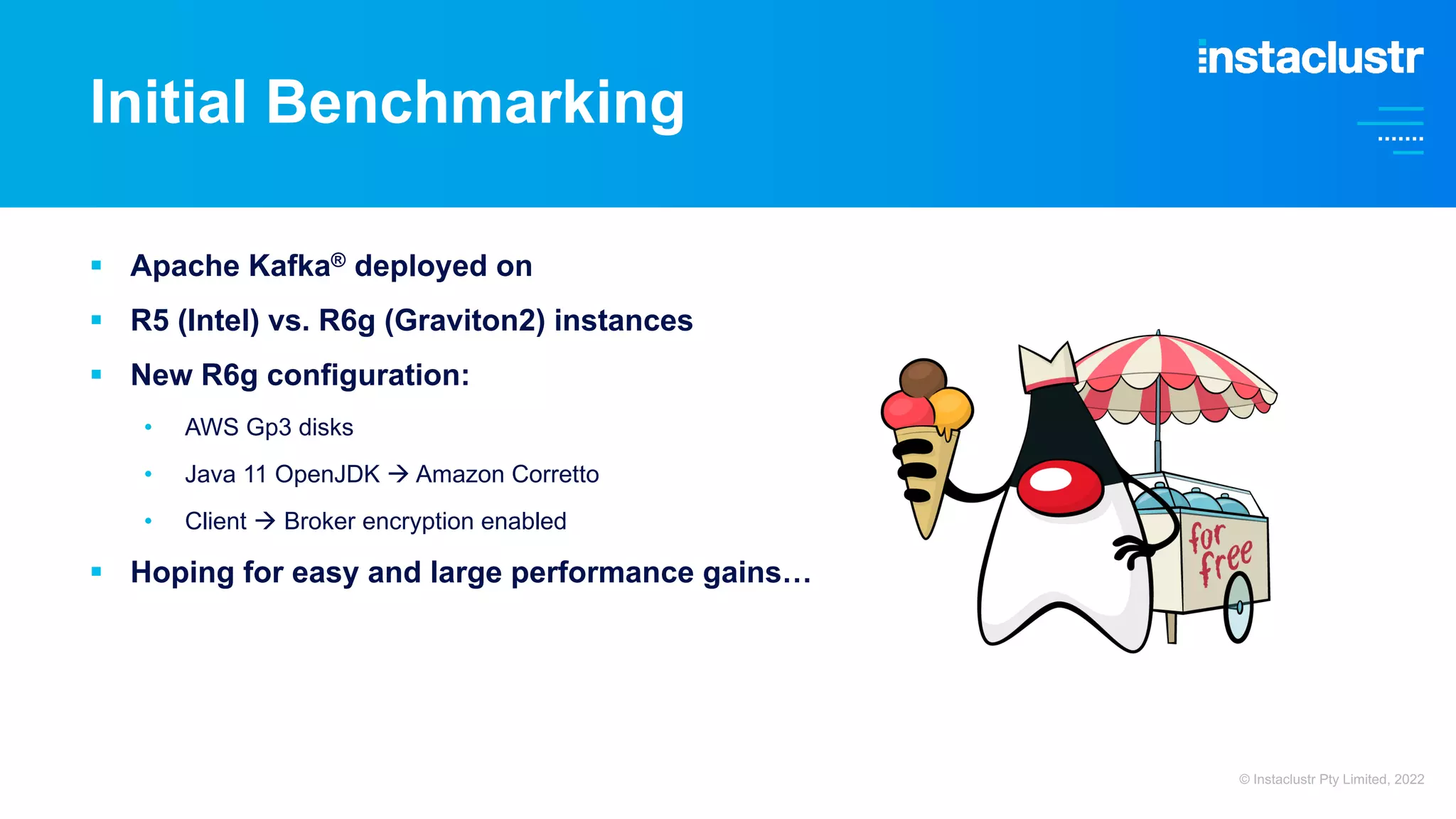

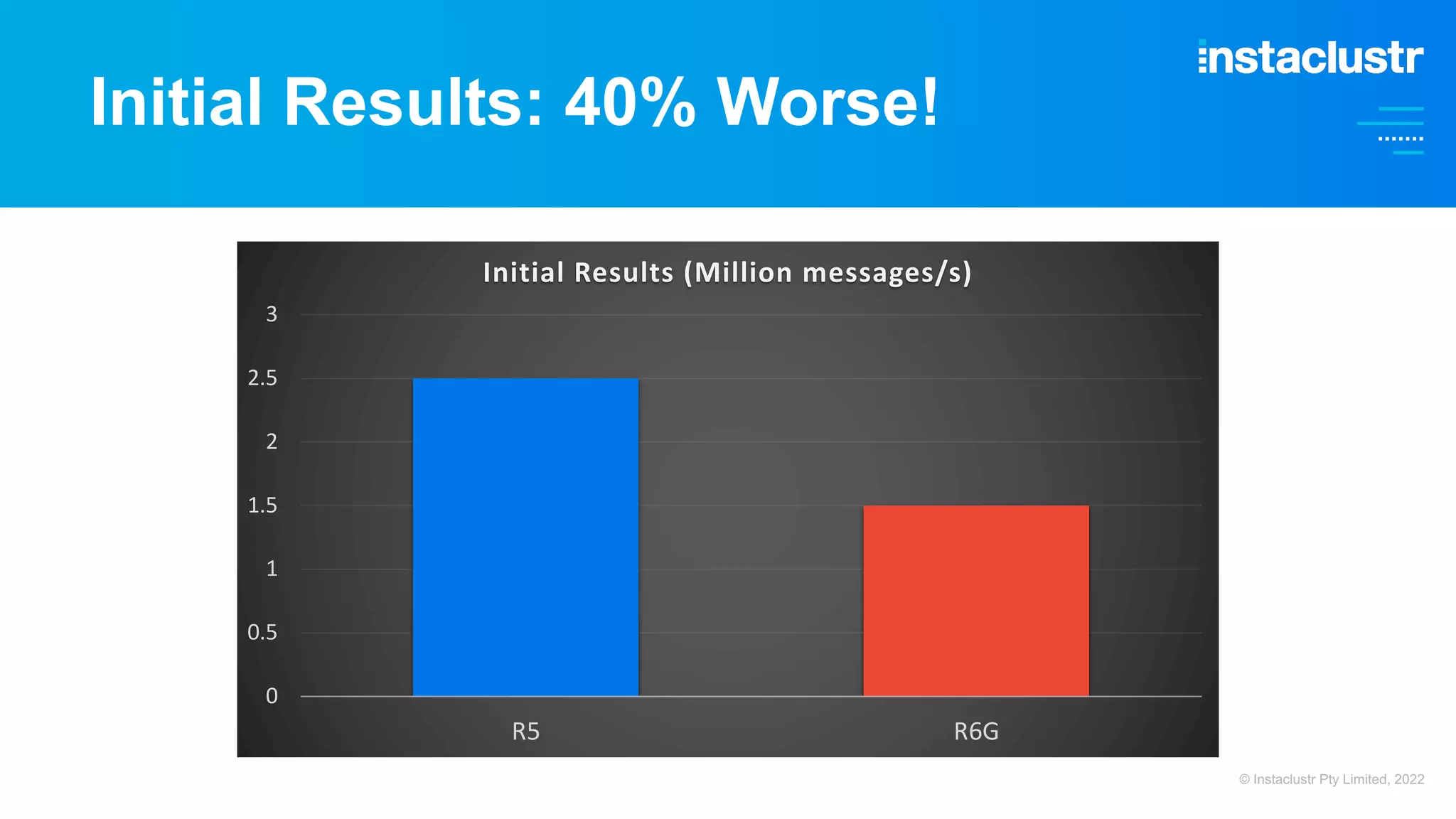

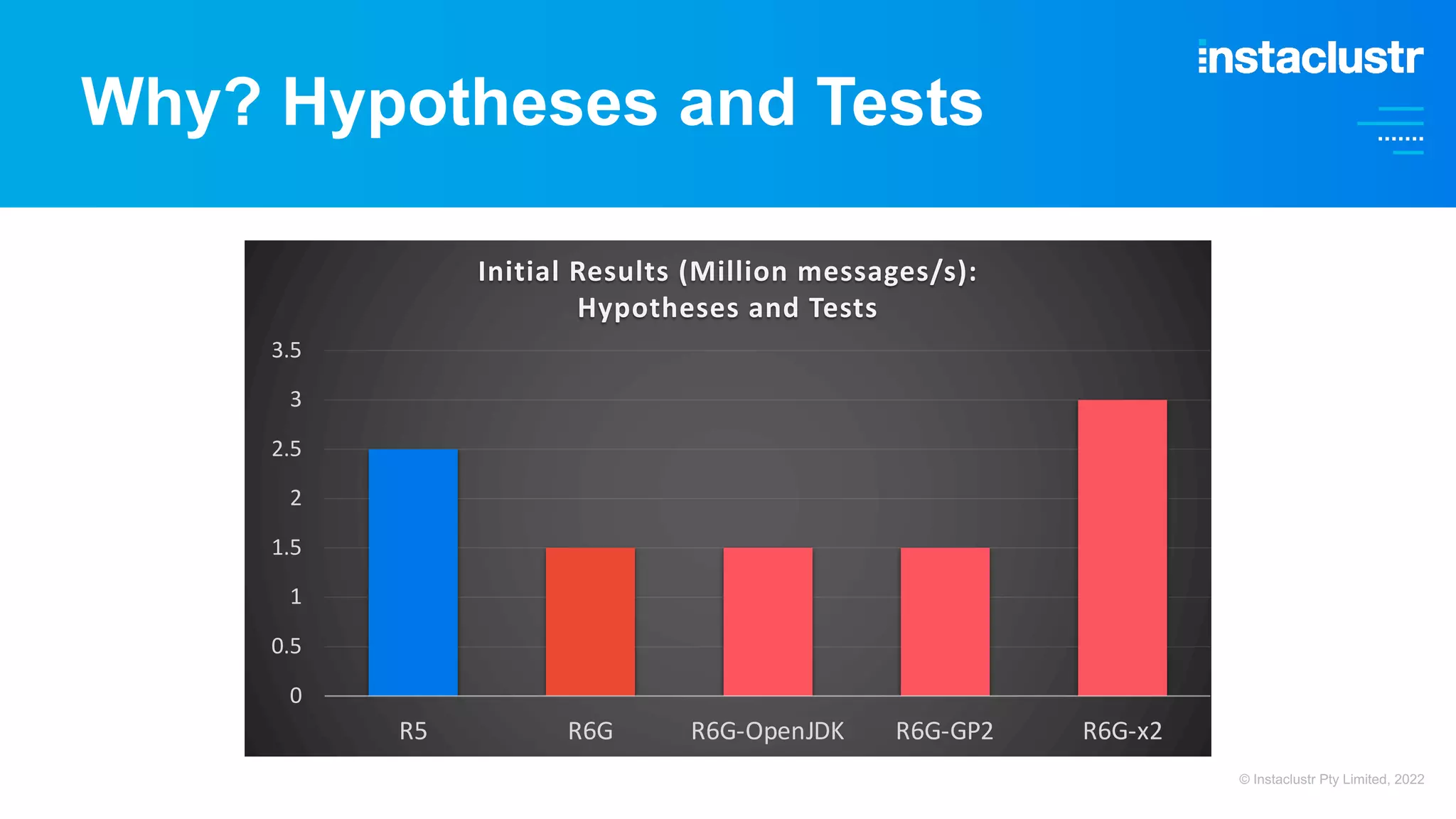

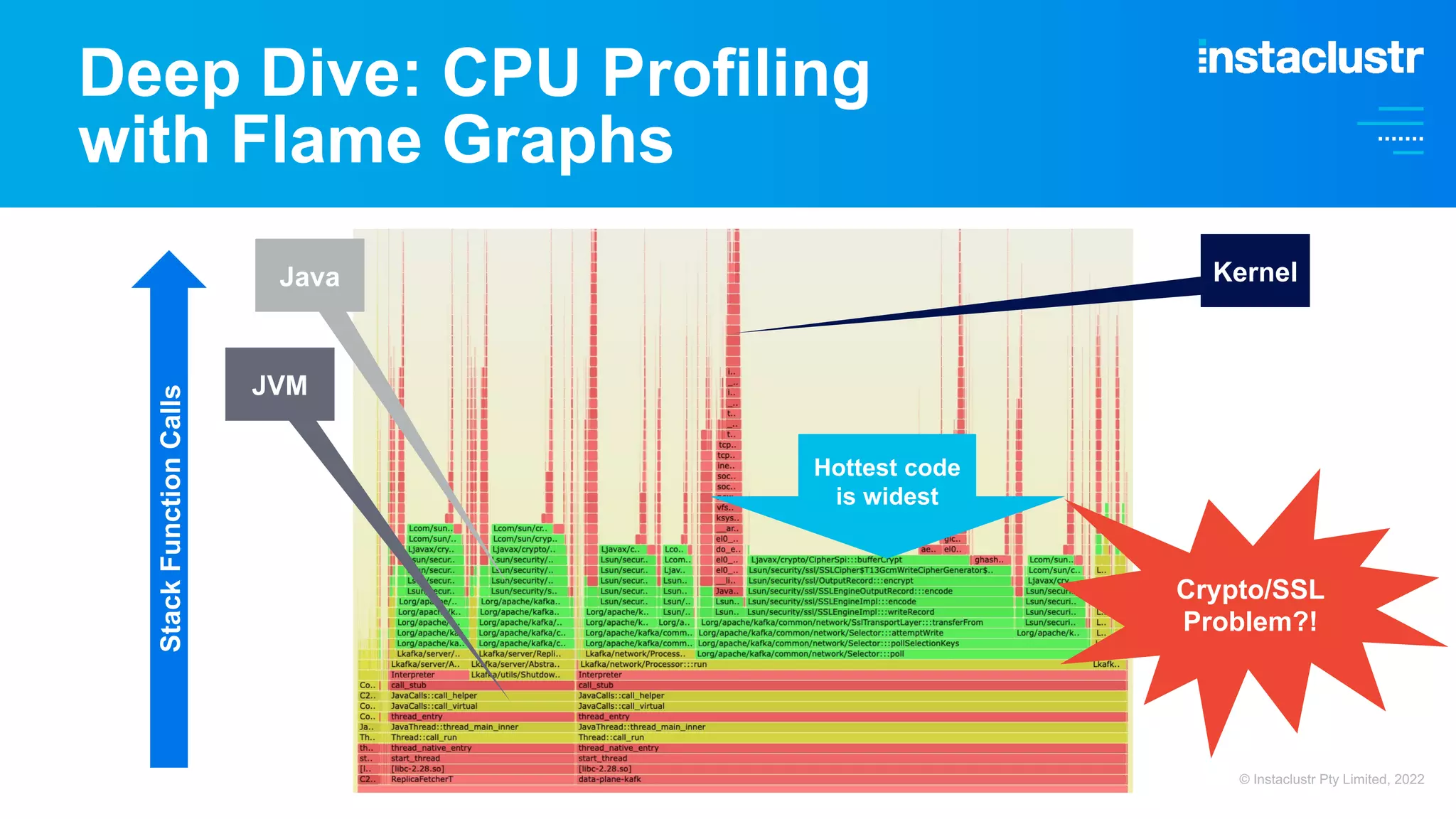

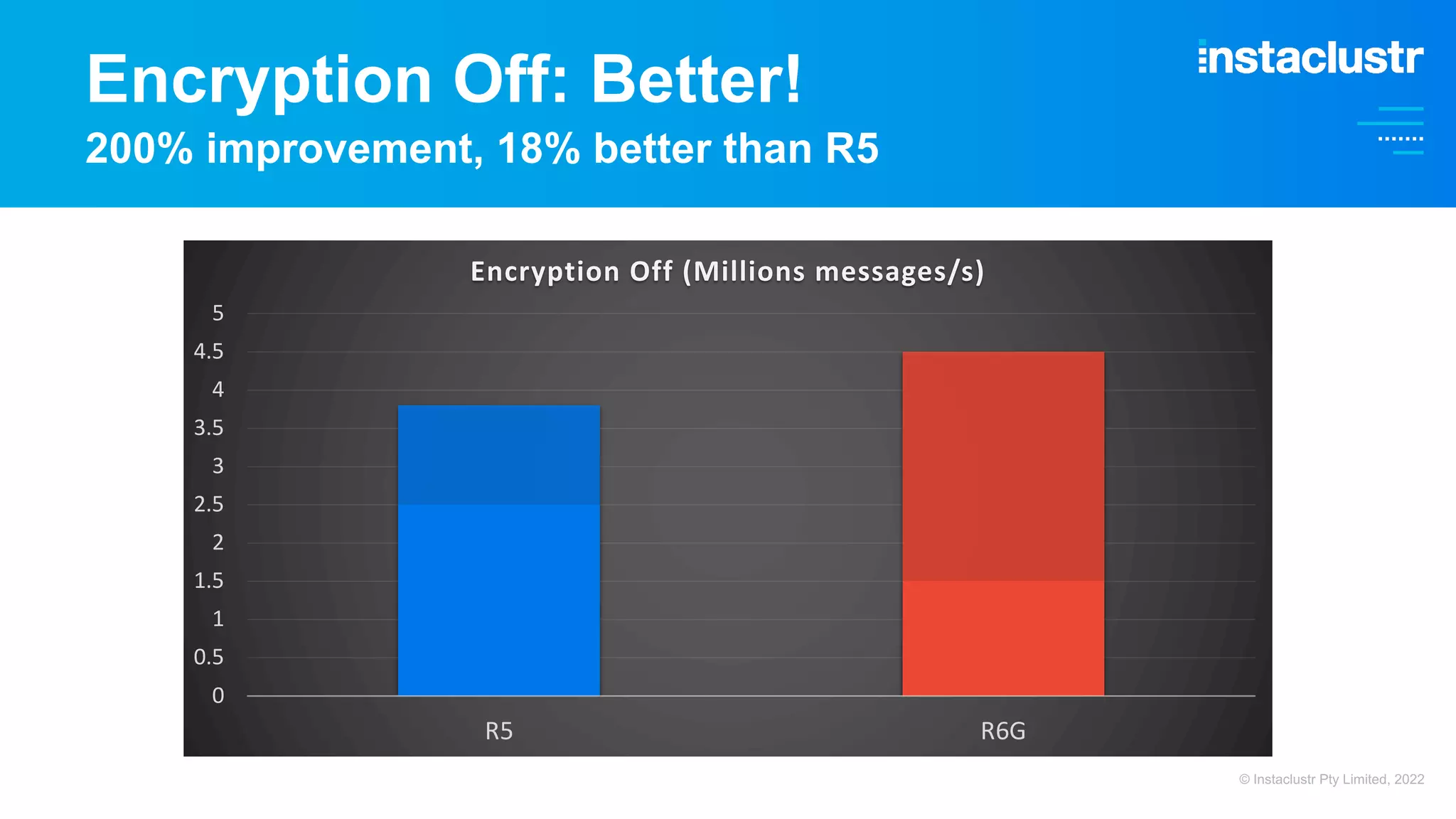

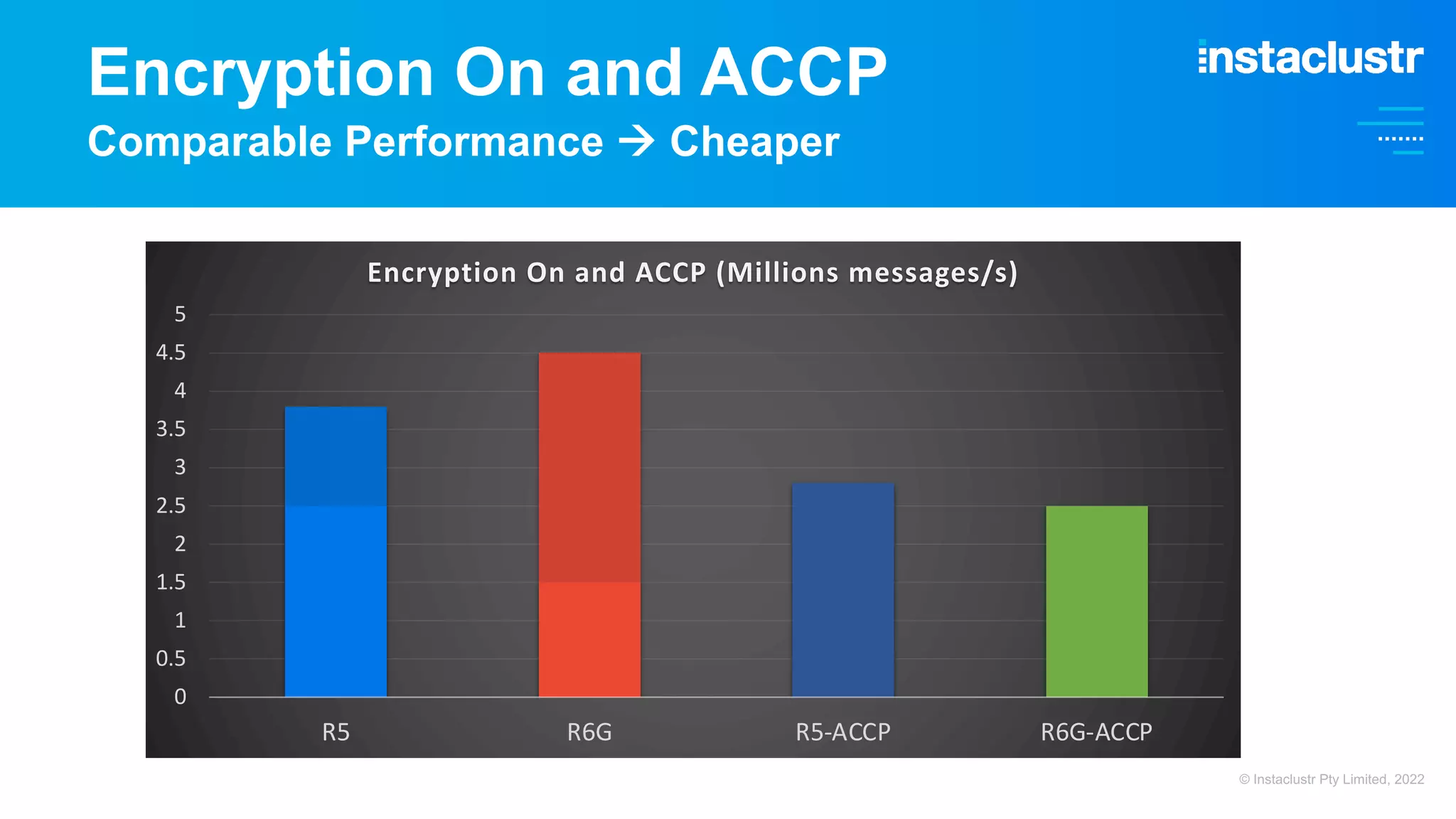

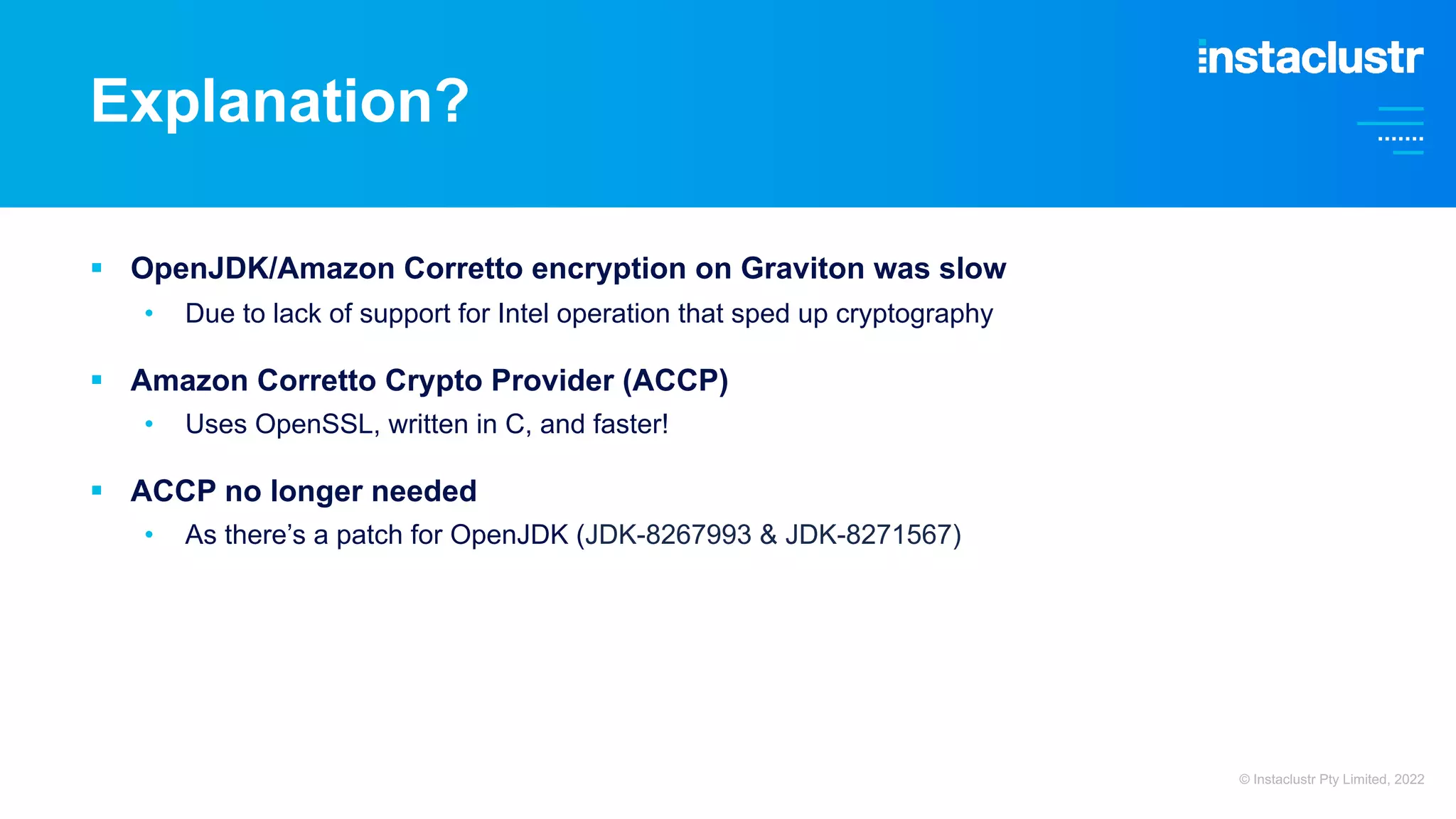

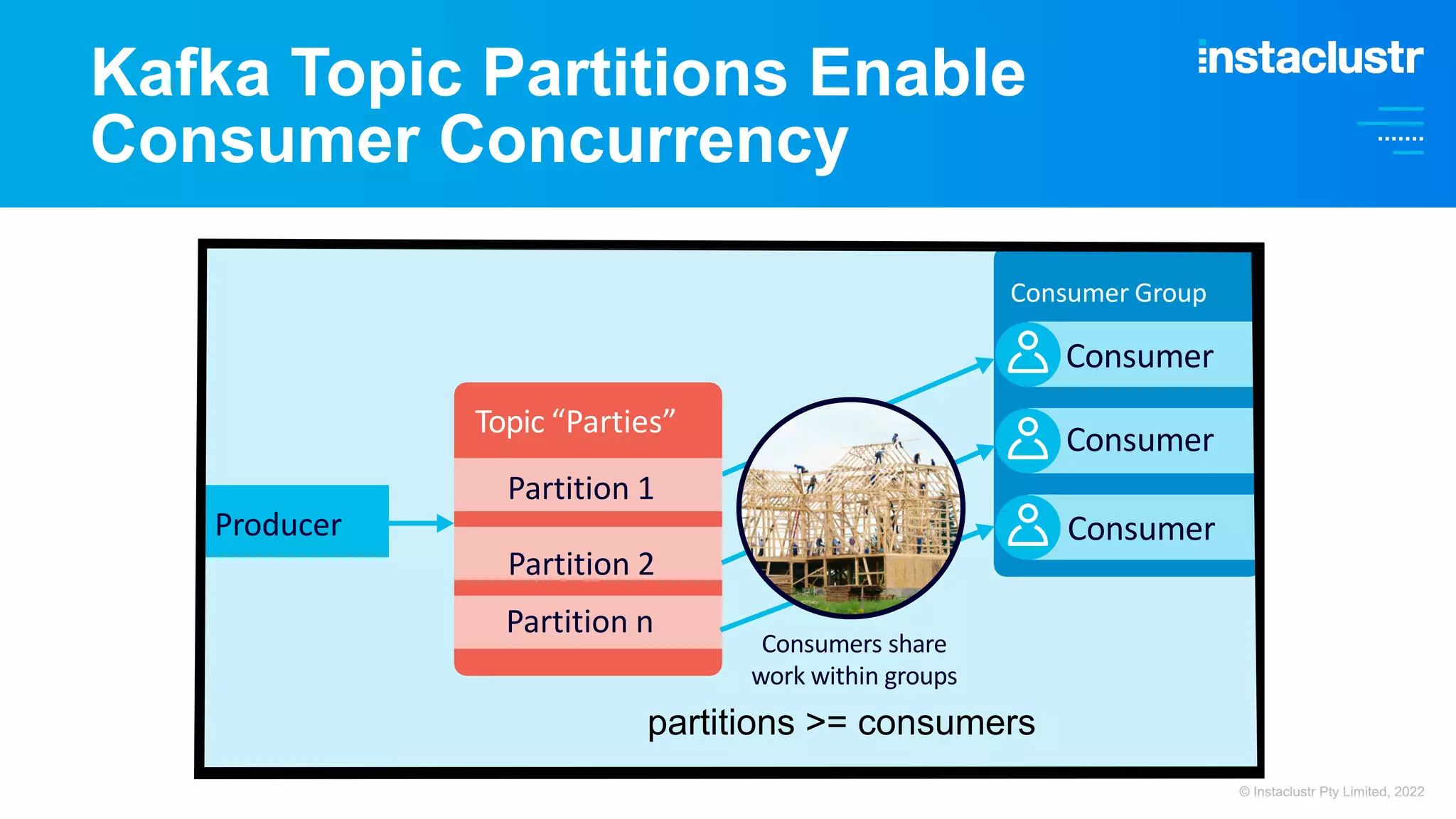

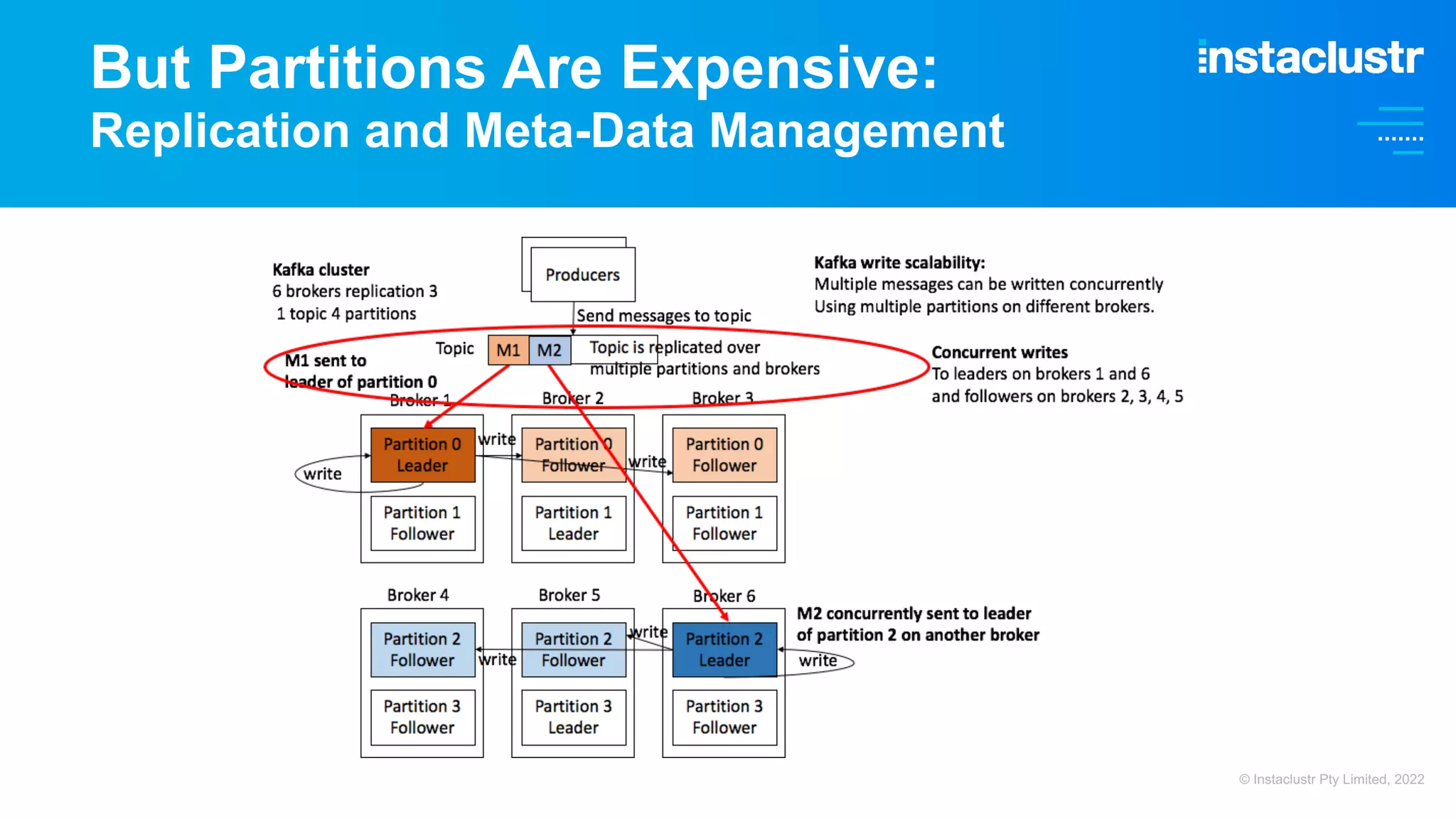

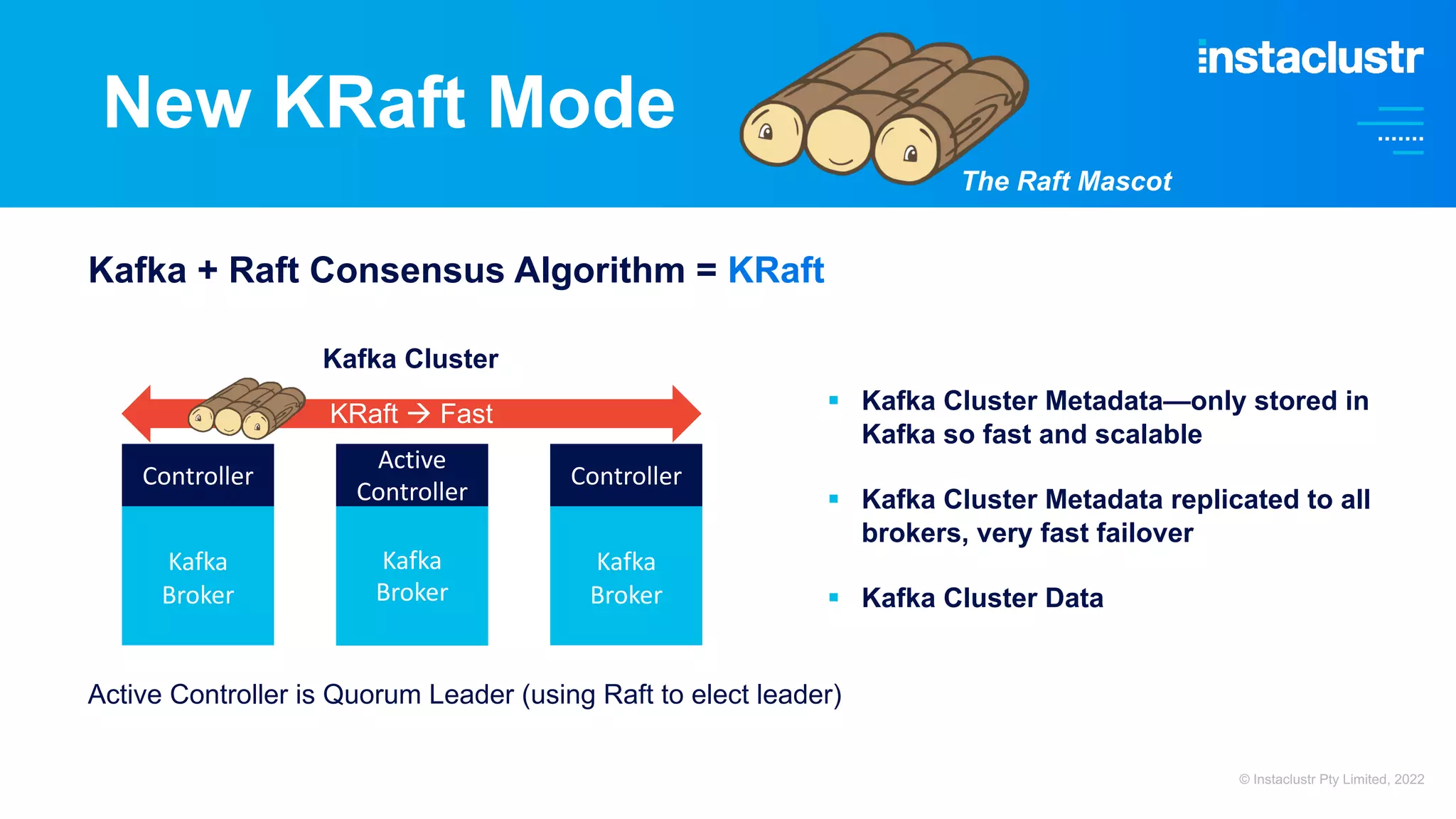

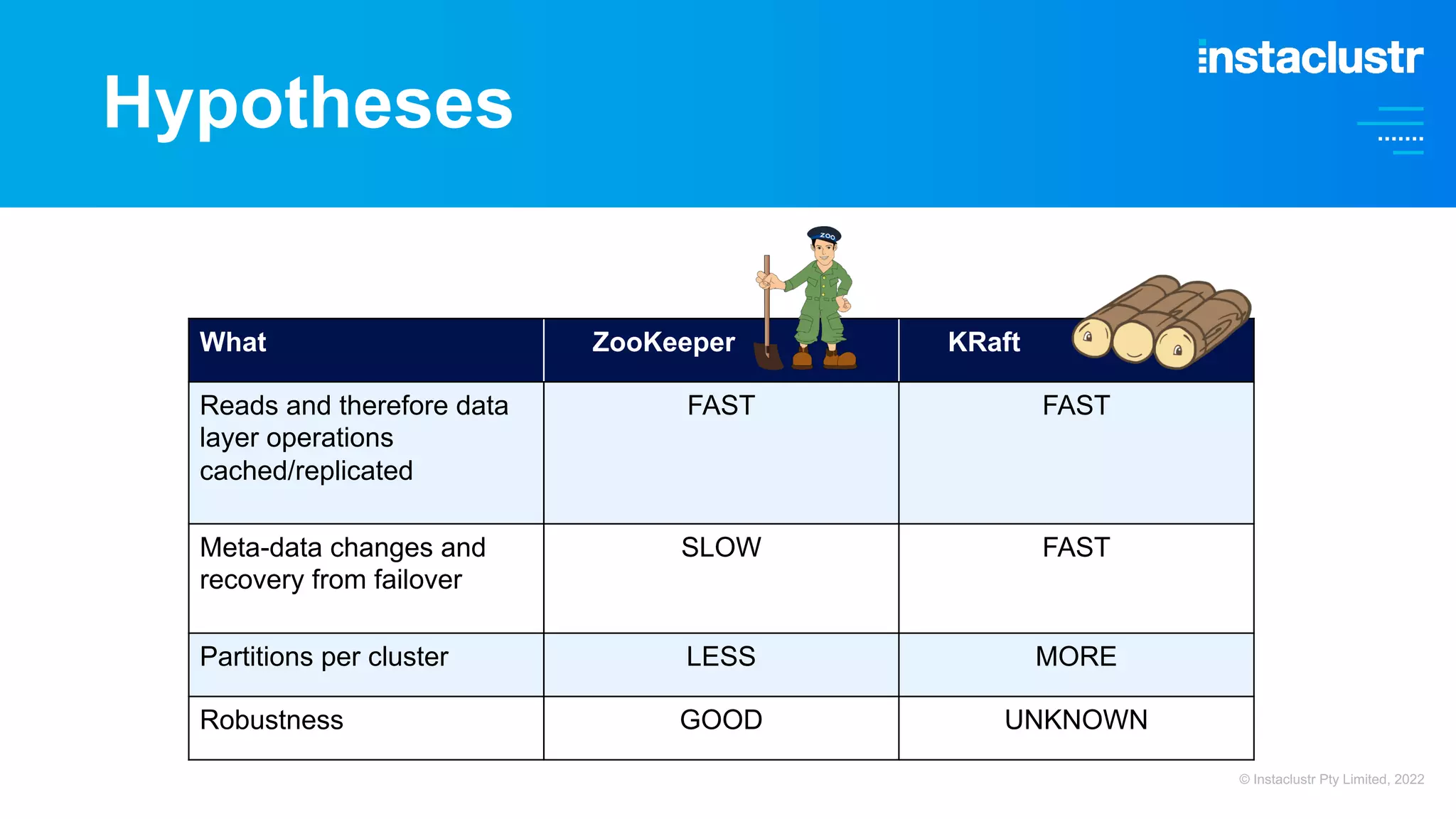

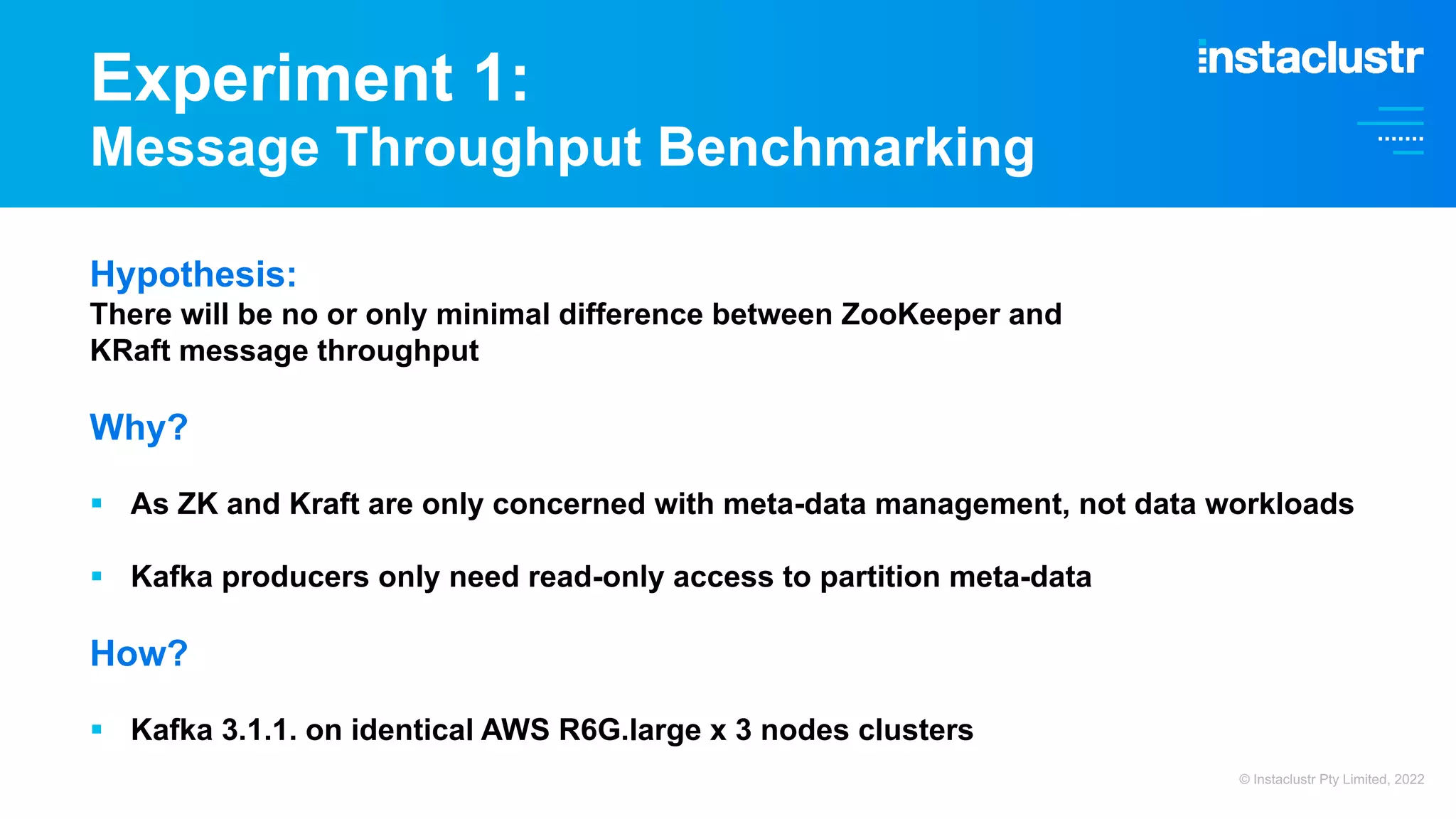

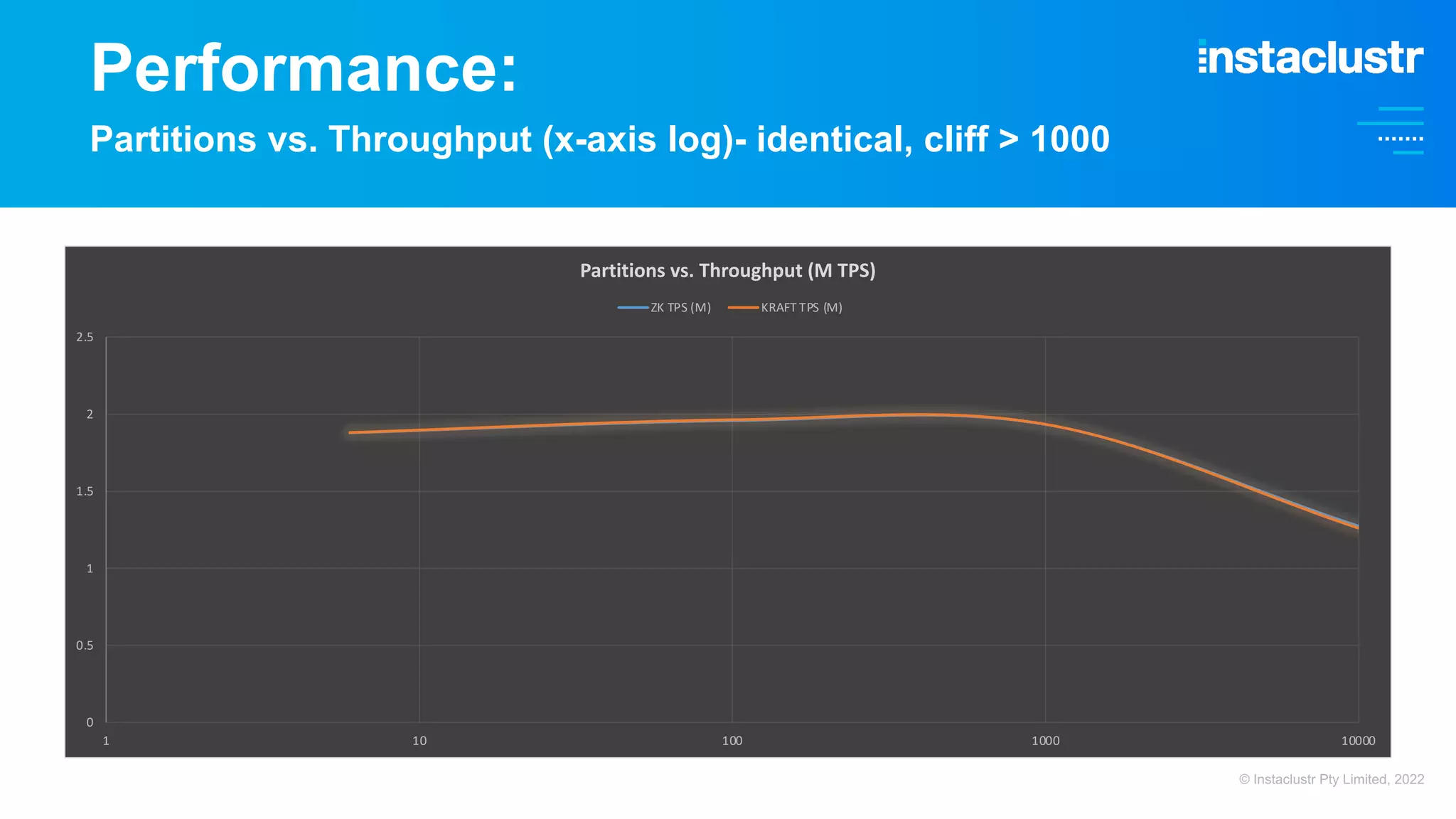

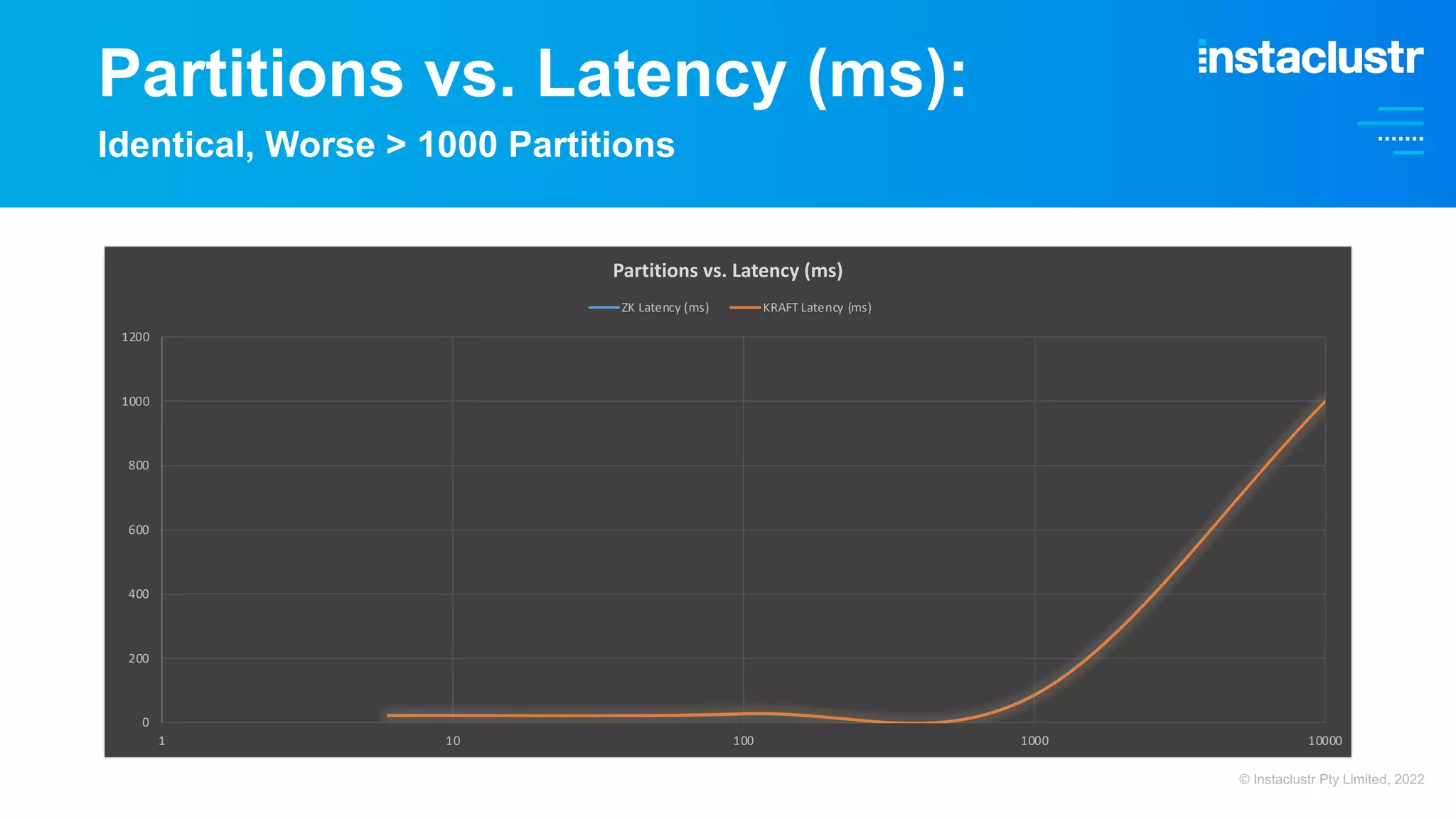

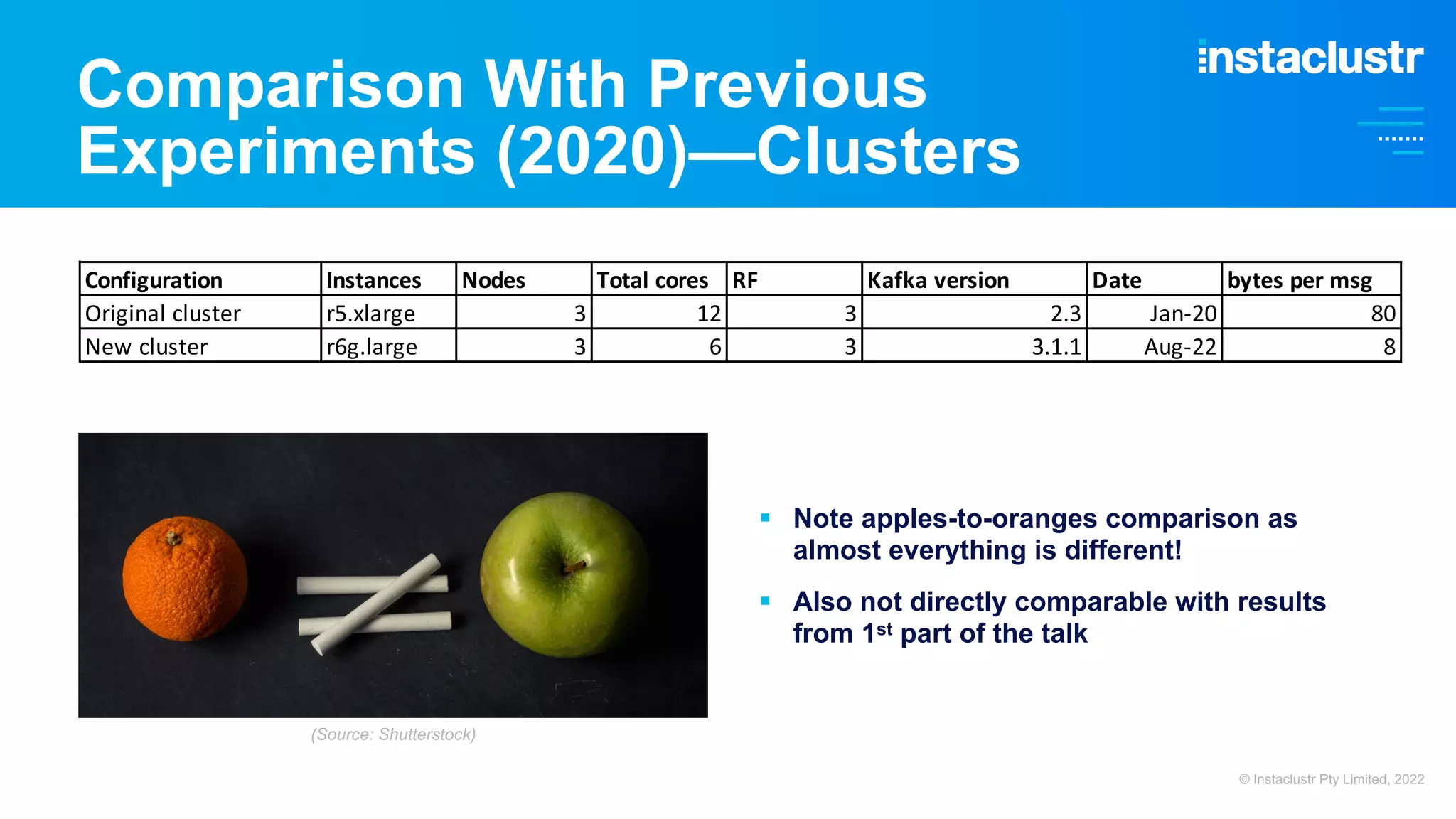

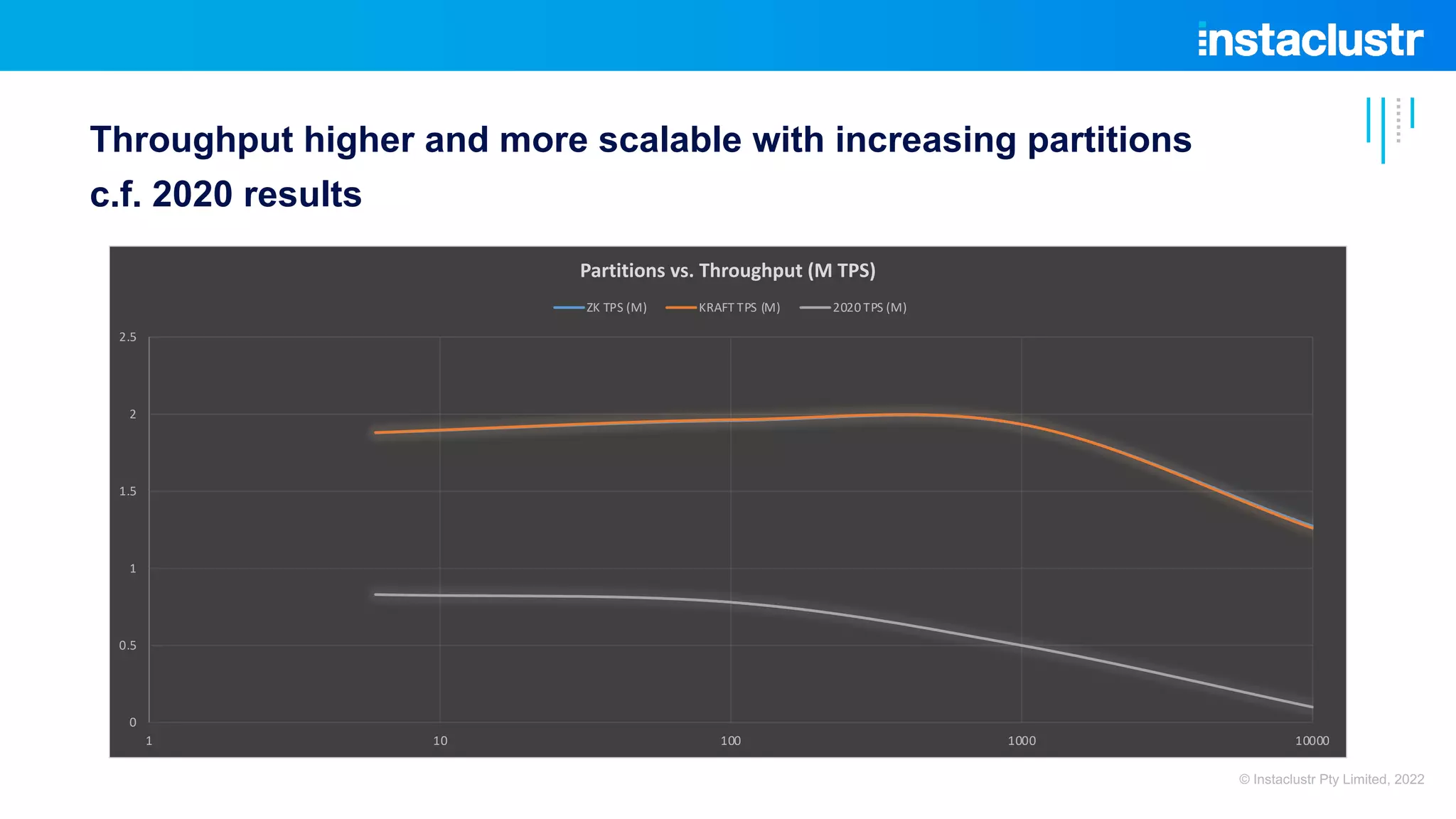

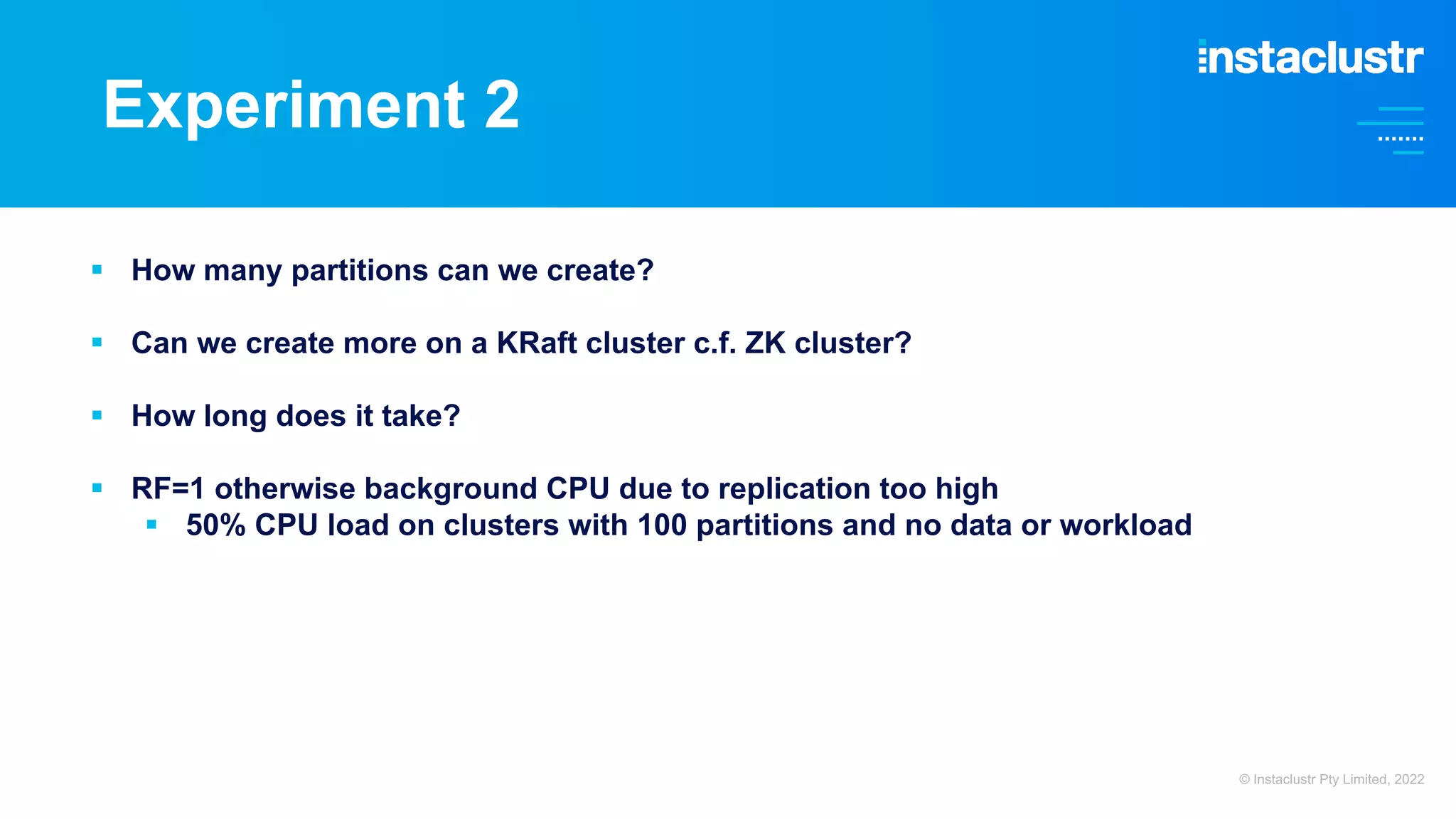

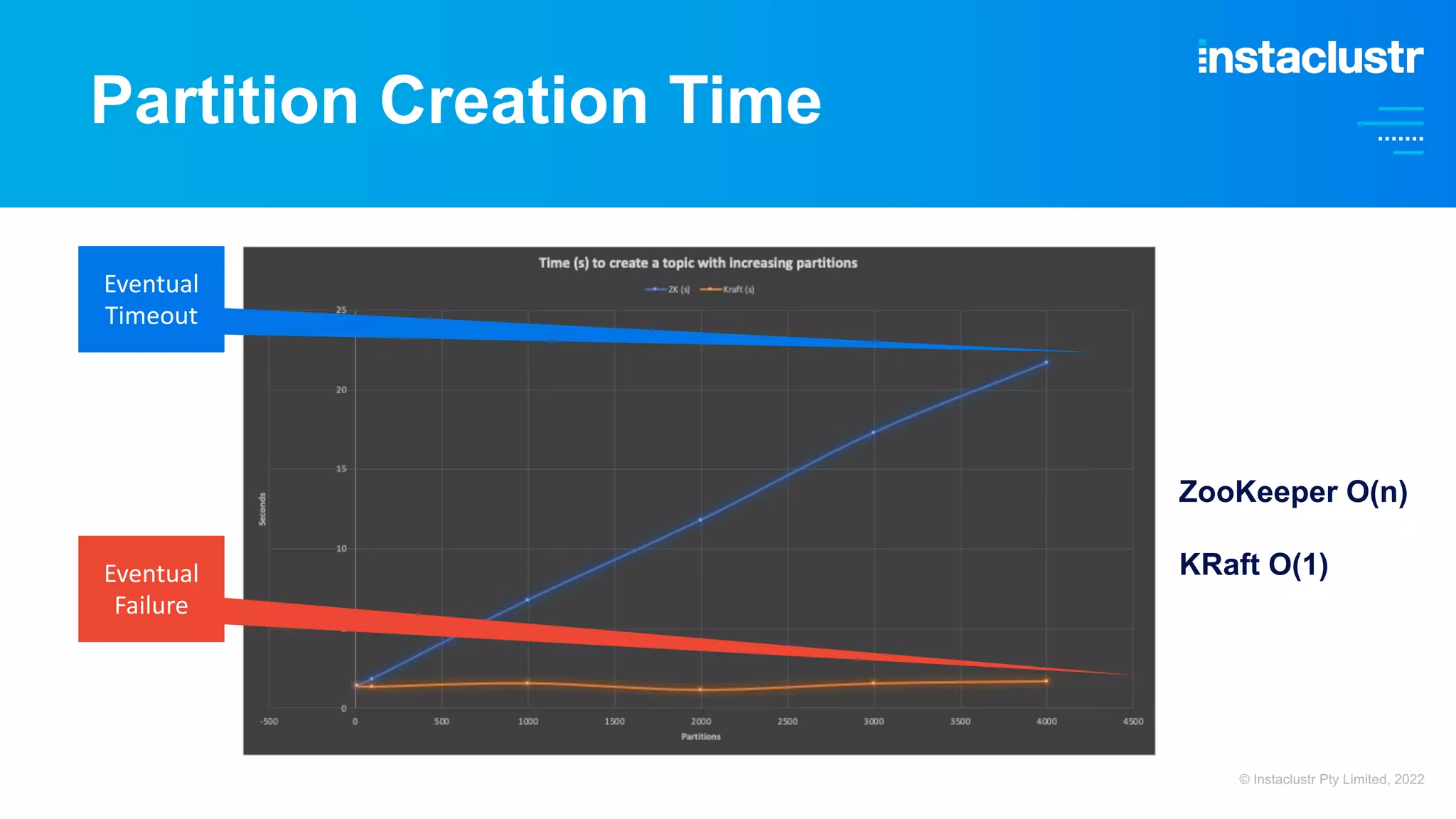

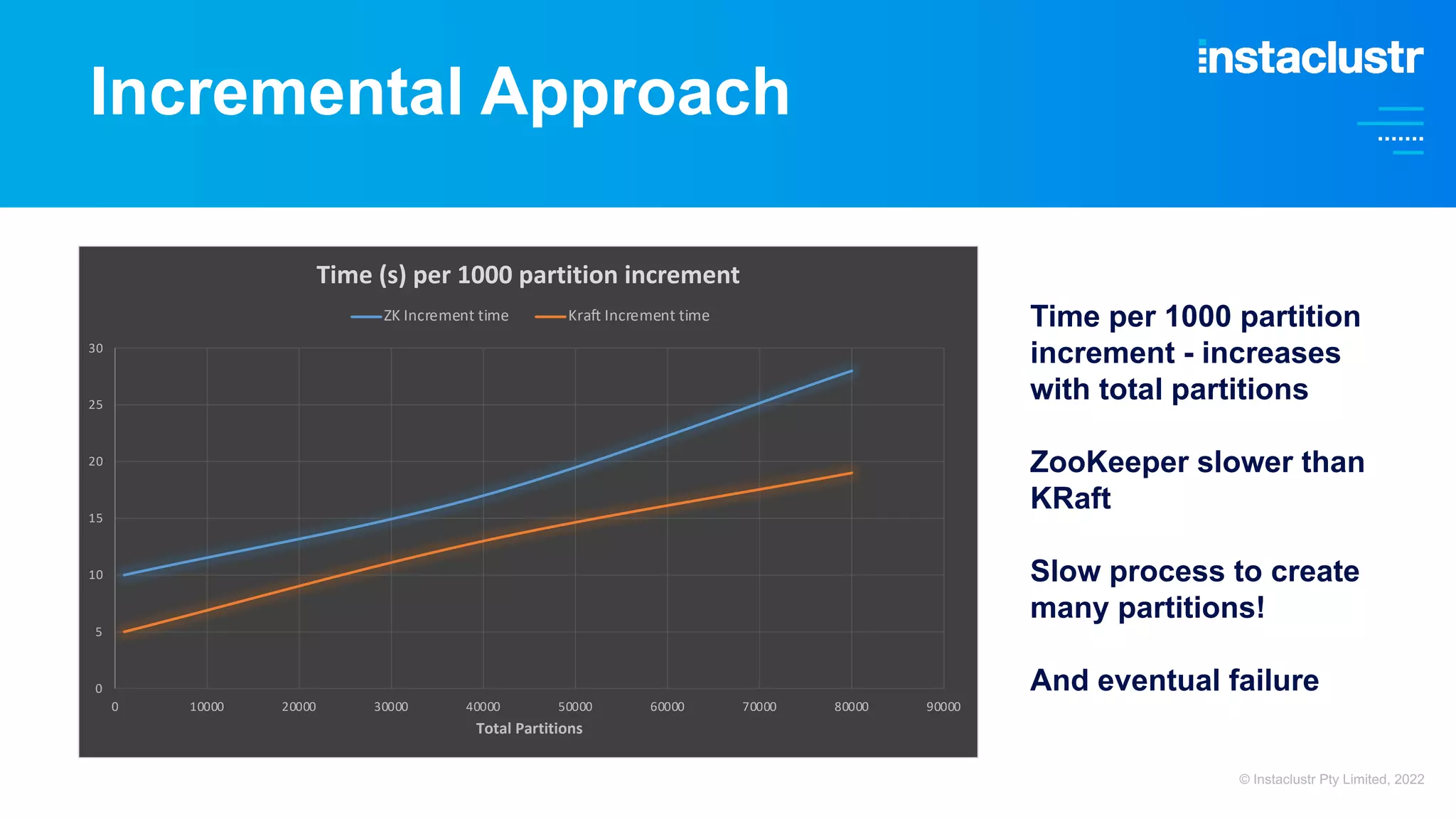

The presentation examines how hardware and software version changes affect Apache Kafka performance and scalability, focusing on benchmark results comparing Intel and Graviton2 (Arm) instances. It describes initially disappointing results caused by cryptographic overhead, and compares traditional ZooKeeper-based Kafka with the new KRaft mode, which improves scalability and partition management. The key takeaway is that regular benchmarking and experimentation are essential for understanding and improving performance in cloud environments.

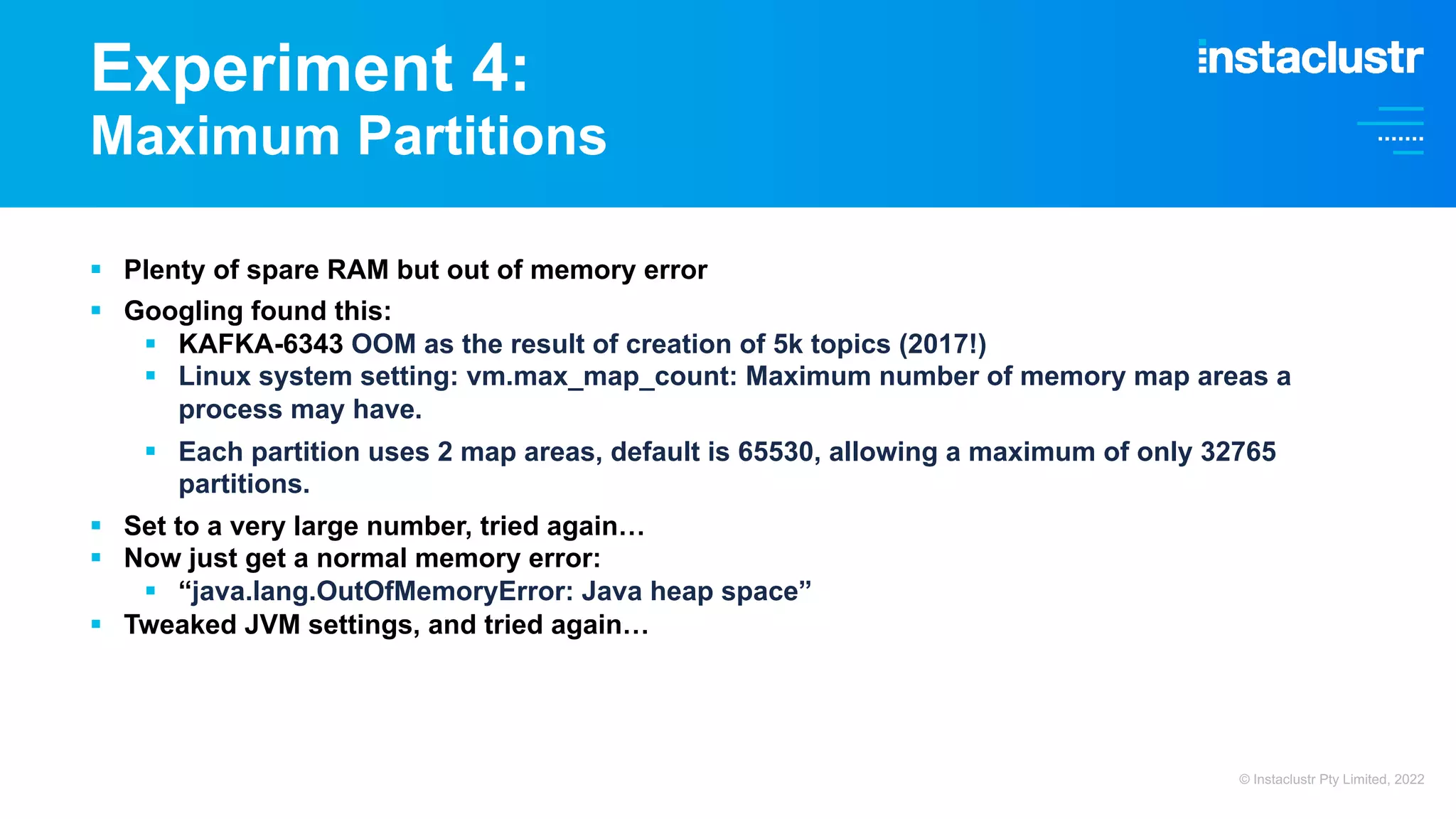

![Errors Included](https://image.slidesharecdn.com/04brebnertheimpactof-221017052408-c57b3c00/75/The-Impact-of-Hardware-and-Software-Version-Changes-on-Apache-Kafka-Performance-and-Scalability-39-2048.jpg)

Errors included:

- `Error while executing topic command : The request timed out.`
- `ERROR org.apache.kafka.common.errors.TimeoutException: The request timed out.`
- From curl: `{"errors":[{"name":"Create Topic","message":"org.apache.kafka.common.errors.RecordBatchTooLargeException : The total record(s) size of 56991841 exceeds the maximum allowed batch size of 8388608"}]}`
- `org.apache.kafka.common.errors.DisconnectException: Cancelled createTopics request with correlation id 3 due to node 2 being disconnected`
- `org.apache.kafka.common.errors.DisconnectException: Cancelled createPartitions request with correlation id 6 due to node 1 being disconnected`

A historical error: `panic("Shannon and Bill* say this can't happen.");`

\* “Shannon and Bill” = Bill Shannon, 1955-2020 (Sun, UNIX, J2EE).

© Instaclustr Pty Limited, 2022
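The `RecordBatchTooLargeException` on this slide reports a 56991841-byte Create Topic request against an 8388608-byte (8 MiB) limit, so the request was roughly 6.8 times too large. A minimal sketch of how such a request could be split into smaller ones follows. It assumes, purely for illustration, that every partition contributes the same number of bytes to the request; the slide does not say how many partitions were requested, so the 100,000 used below is hypothetical.

```python
# Figures from the RecordBatchTooLargeException on the slide.
REQUEST_BYTES = 56_991_841   # total record(s) size of the failed Create Topic request
MAX_BATCH_BYTES = 8_388_608  # maximum allowed batch size (8 MiB)


def min_requests(total_bytes: int, max_batch_bytes: int) -> int:
    """Lower bound on the number of requests needed to keep each under the limit."""
    if max_batch_bytes <= 0:
        raise ValueError("max_batch_bytes must be positive")
    # Integer ceiling division, avoiding floating-point rounding.
    return (total_bytes + max_batch_bytes - 1) // max_batch_bytes


def split_partitions(total_partitions: int, total_bytes: int, max_batch_bytes: int) -> list[int]:
    """Split a partition count into chunks whose estimated size fits max_batch_bytes.

    ASSUMPTION: each partition adds total_bytes / total_partitions bytes to the
    request; real per-partition sizes may differ, so leave headroom in practice.
    """
    if total_partitions <= 0 or total_bytes <= 0:
        raise ValueError("total_partitions and total_bytes must be positive")
    # Largest chunk with chunk * total_bytes / total_partitions <= max_batch_bytes.
    per_request = (max_batch_bytes * total_partitions) // total_bytes
    if per_request < 1:
        raise ValueError("a single partition already exceeds the batch limit")
    chunks = []
    remaining = total_partitions
    while remaining > 0:
        chunk = min(per_request, remaining)
        chunks.append(chunk)
        remaining -= chunk
    return chunks


if __name__ == "__main__":
    print("minimum requests:", min_requests(REQUEST_BYTES, MAX_BATCH_BYTES))
    # HYPOTHETICAL partition count; the slide does not state the real one.
    plan = split_partitions(100_000, REQUEST_BYTES, MAX_BATCH_BYTES)
    print("requests:", len(plan), "partitions per request:", plan[0])
```

Integer arithmetic is used throughout so that the "fits under the limit" check is exact rather than subject to float rounding.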

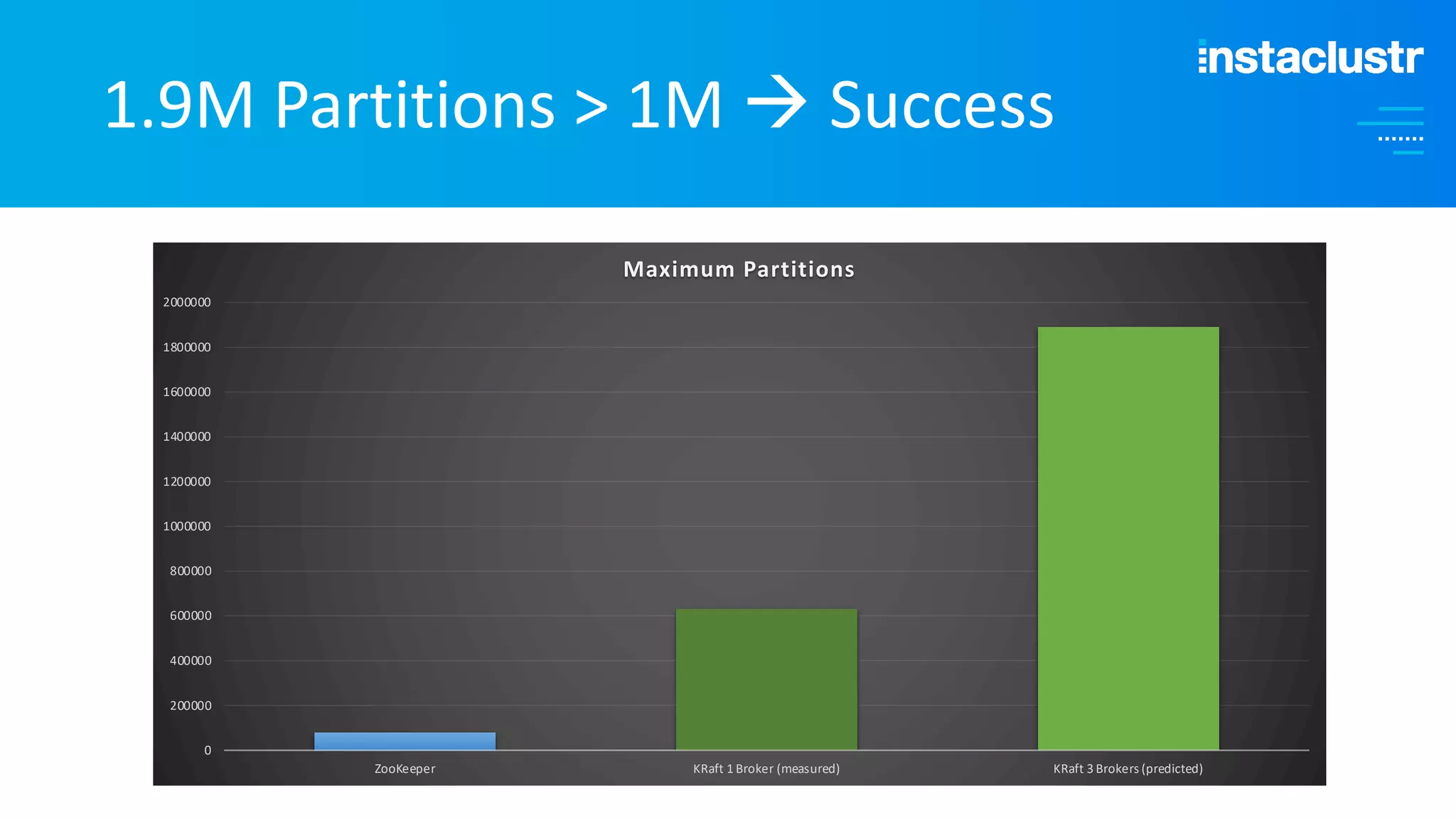

![Experiment 4: Maximum Partitions](https://image.slidesharecdn.com/04brebnertheimpactof-221017052408-c57b3c00/75/The-Impact-of-Hardware-and-Software-Version-Changes-on-Apache-Kafka-Performance-and-Scalability-45-2048.jpg)

Experiment 4: Maximum Partitions

- Final attempt to reach 1 million+ partitions on a cluster (RF=1 only, however).
- Used a manual installation of Kafka 3.2.1 on a large EC2 instance.
- Hit limits at around 30,000 partitions:
  - `ERROR [BrokerMetadataPublisher id=1] Error publishing broker metadata at 33037 (kafka.server.metadata.BrokerMetadataPublisher) java.io.IOException: Map failed`
  - `# There is insufficient memory for the Java Runtime Environment to continue.`
  - `# Native memory allocation (mmap) failed to map 65536 bytes for committing reserved memory.`
- More RAM needed? No, it didn't help.
- More file descriptors? 2 descriptors are used per partition, and only 65535 are available by default on Linux. Increased the limit, but it still failed.
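The slide's file-descriptor reasoning is a simple division: at 2 descriptors per partition, a 65535 limit runs out at about 32,767 partitions, in the same range as the roughly 30,000 where the experiment failed. Because raising the descriptor limit did not help, the same check can be applied to other per-process kernel limits. The sketch below does that; the `vm.max_map_count` figures (Linux default 65530, and roughly 2 memory maps per partition for Kafka's offset and time index files) are an assumption offered as one candidate to test, not the deck's diagnosis. The `reserved` parameter is a hypothetical allowance for resources the broker uses regardless of partition count.

```python
def partition_ceiling(os_limit: int, per_partition: int, reserved: int = 0) -> int:
    """Partitions a single broker process can hold before os_limit is exhausted.

    os_limit: per-process kernel limit (e.g. open file descriptors)
    per_partition: units of that resource consumed per partition
    reserved: HYPOTHETICAL units used by the JVM/broker independent of partitions
    """
    if per_partition <= 0:
        raise ValueError("per_partition must be positive")
    return max(0, (os_limit - reserved) // per_partition)


# Slide figures: 2 descriptors per partition, 65535 by default on Linux.
FD_CEILING = partition_ceiling(65_535, 2)

# ASSUMPTION (not from the slide): vm.max_map_count defaults to 65530 on Linux,
# and each single-segment partition memory-maps about 2 index files.
MMAP_CEILING = partition_ceiling(65_530, 2)

if __name__ == "__main__":
    print("file-descriptor ceiling:", FD_CEILING)
    print("memory-map ceiling (assumed):", MMAP_CEILING)
```

Both ceilings land near the observed failure point, which is why checking every per-partition kernel limit, not just file descriptors, may be worthwhile before adding RAM.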