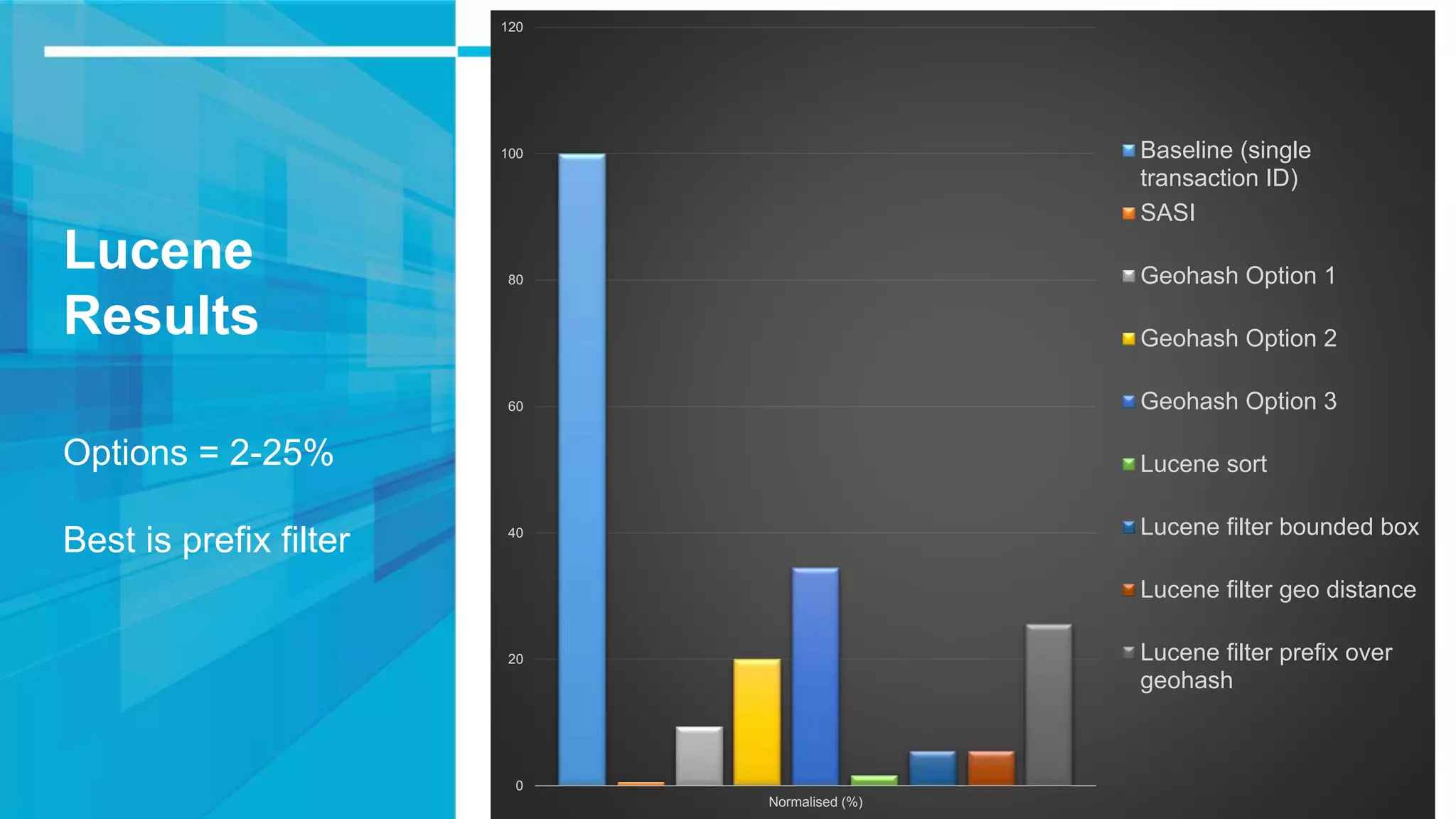

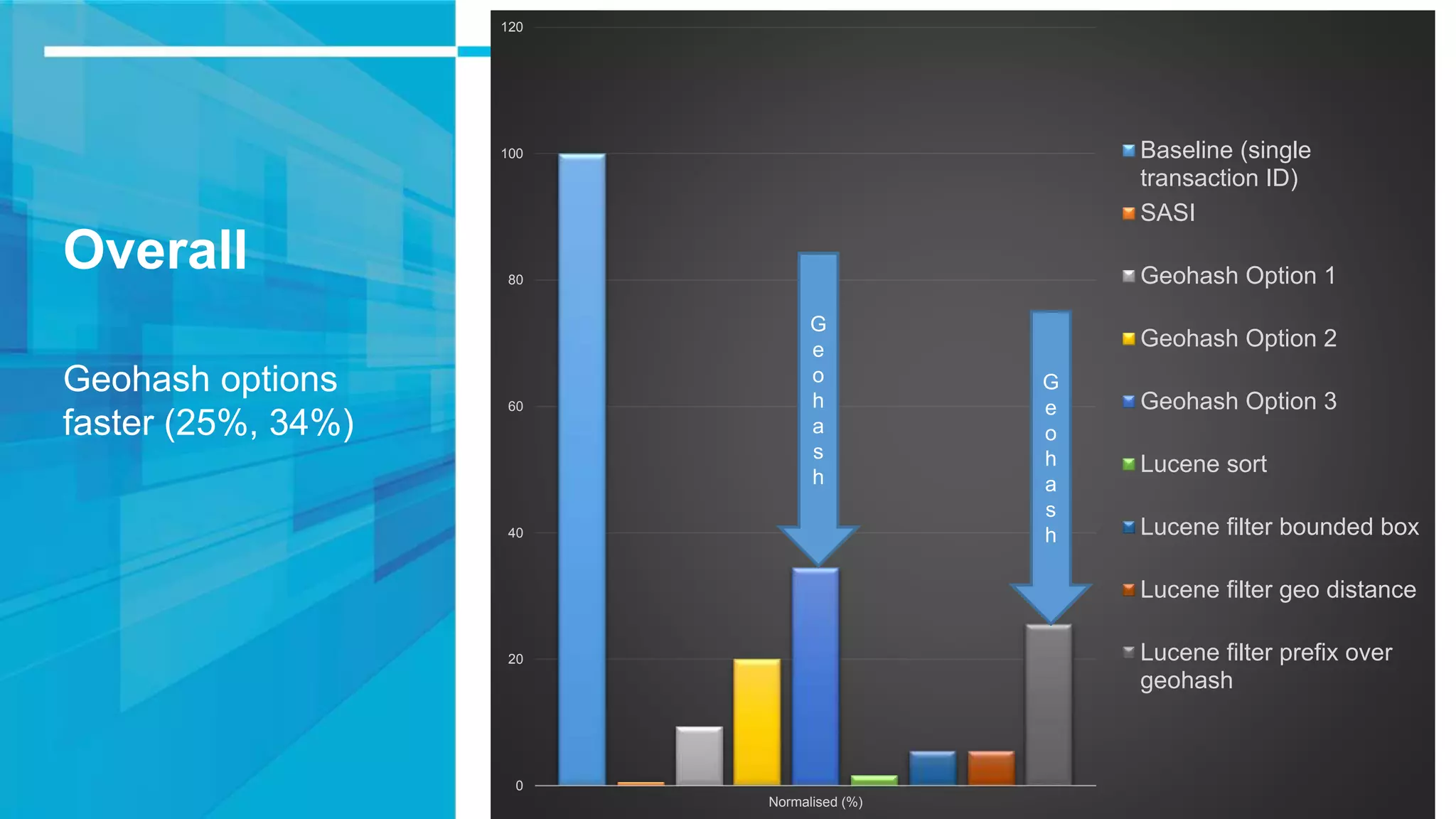

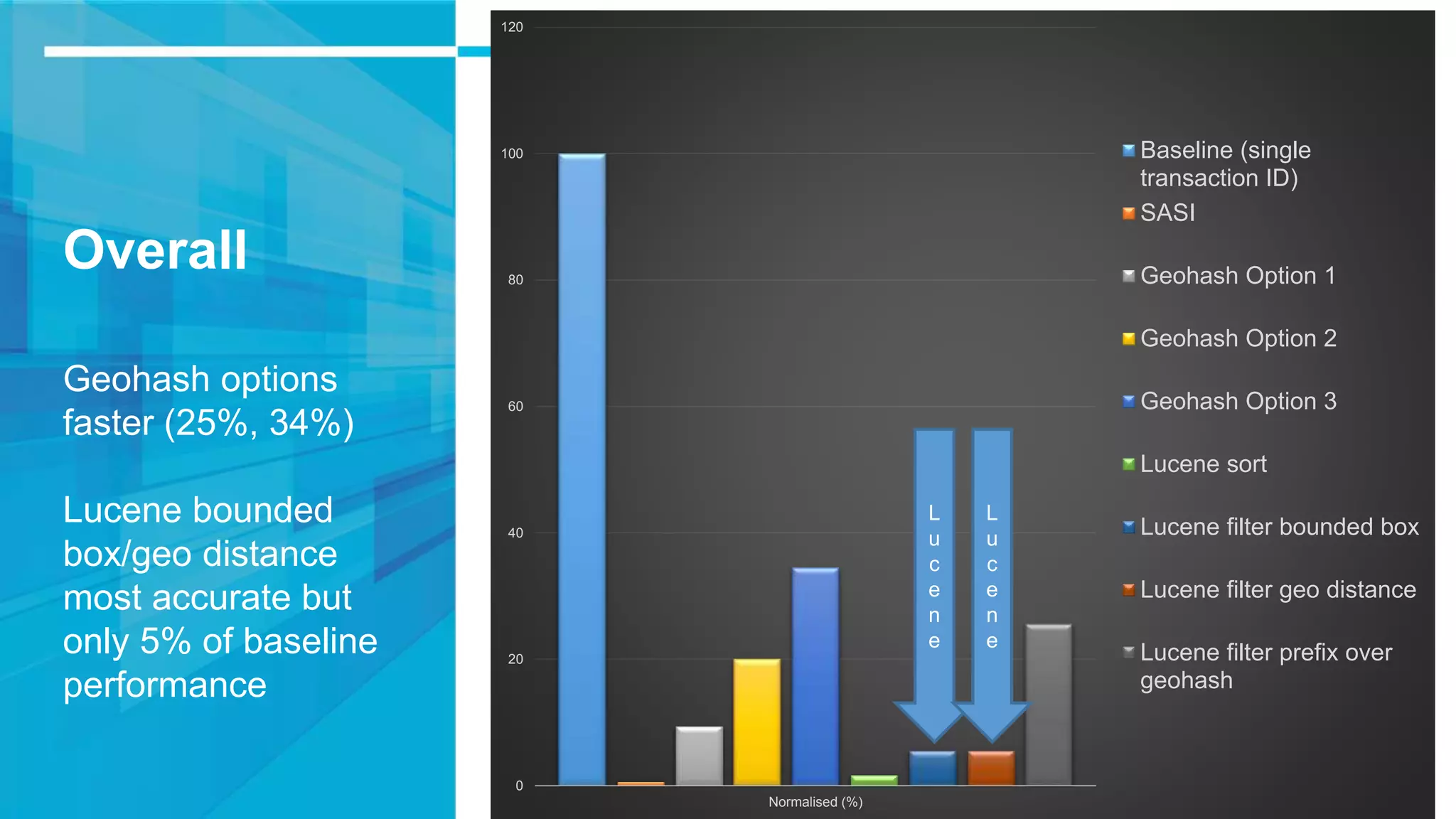

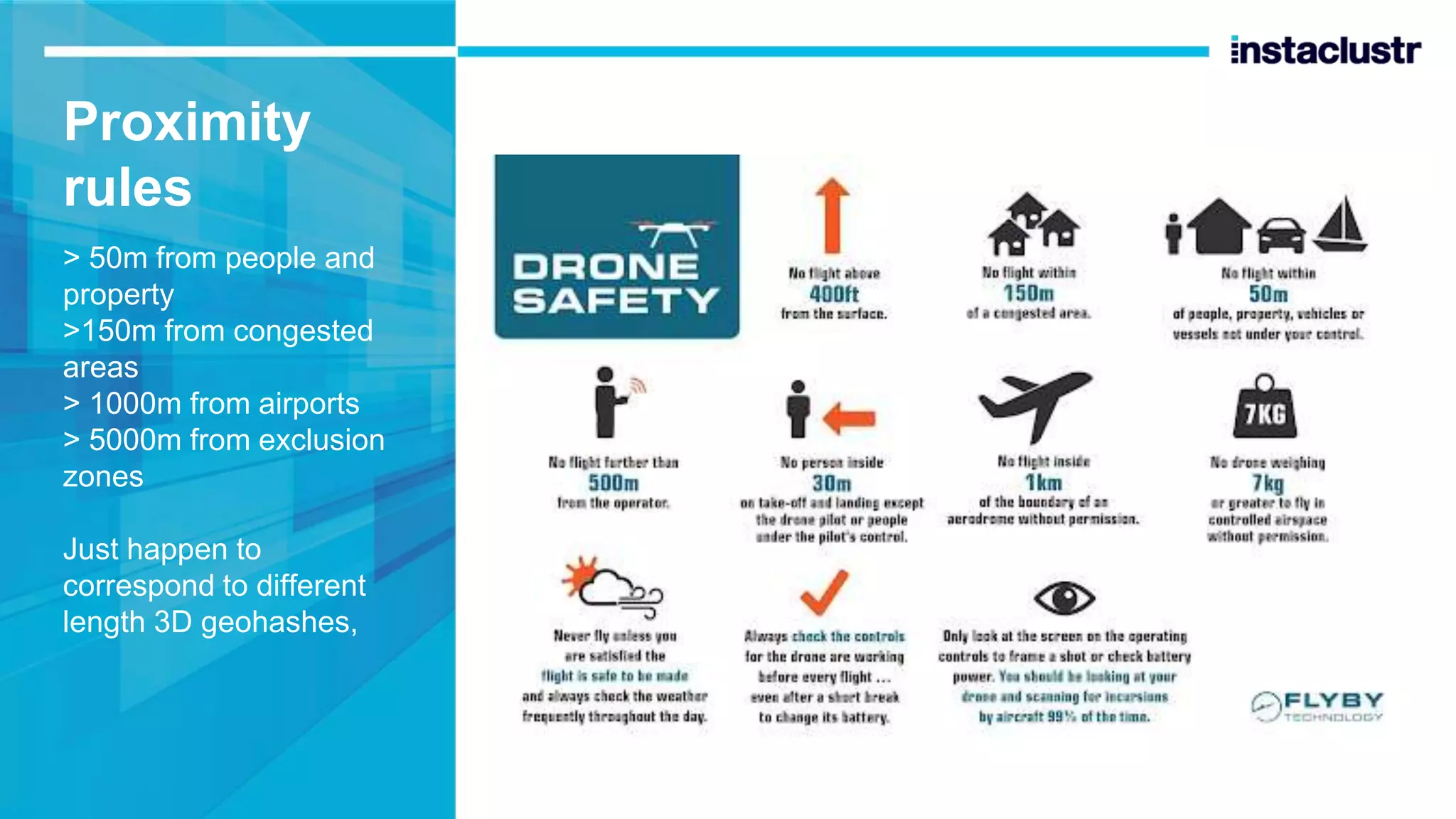

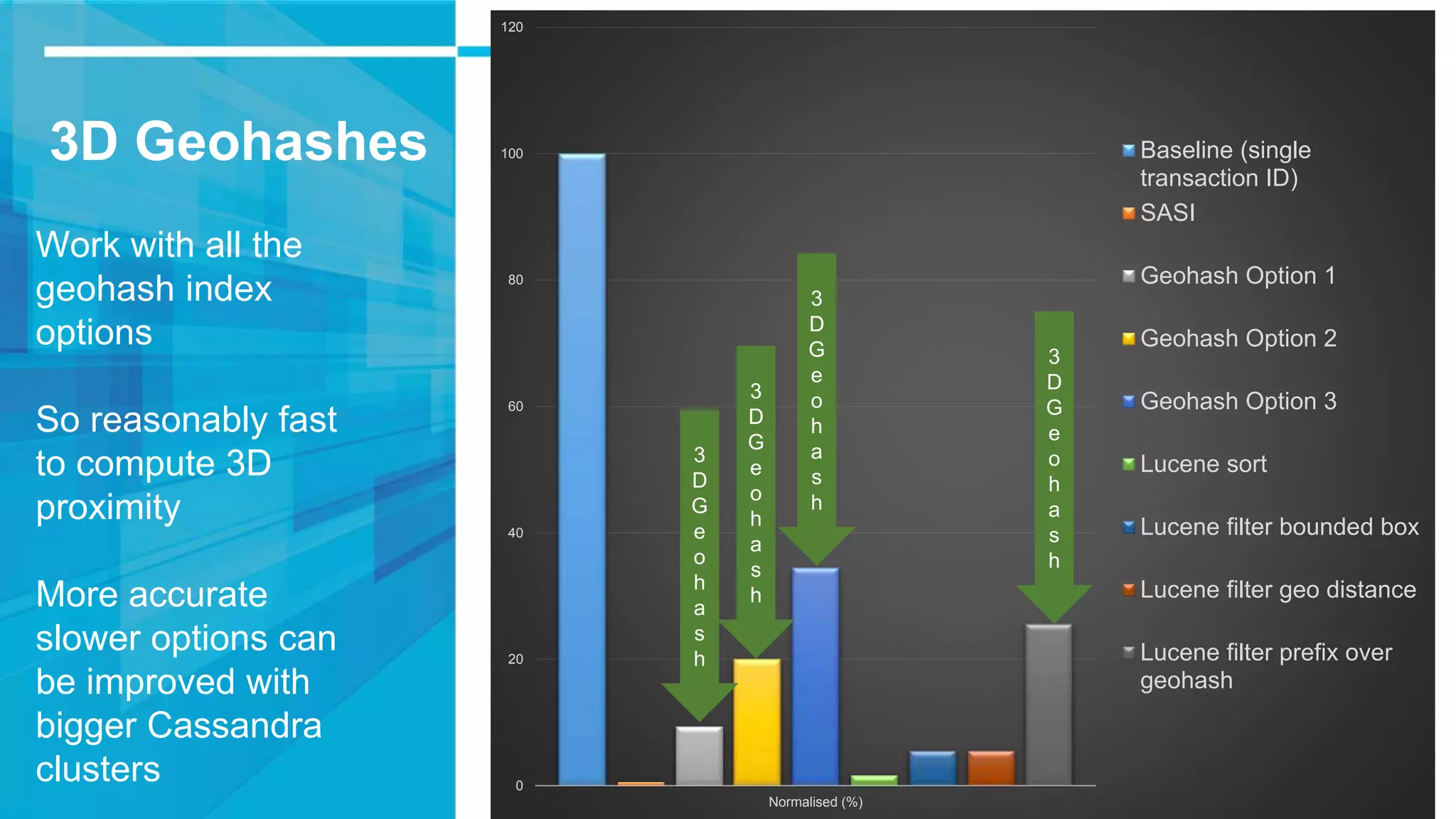

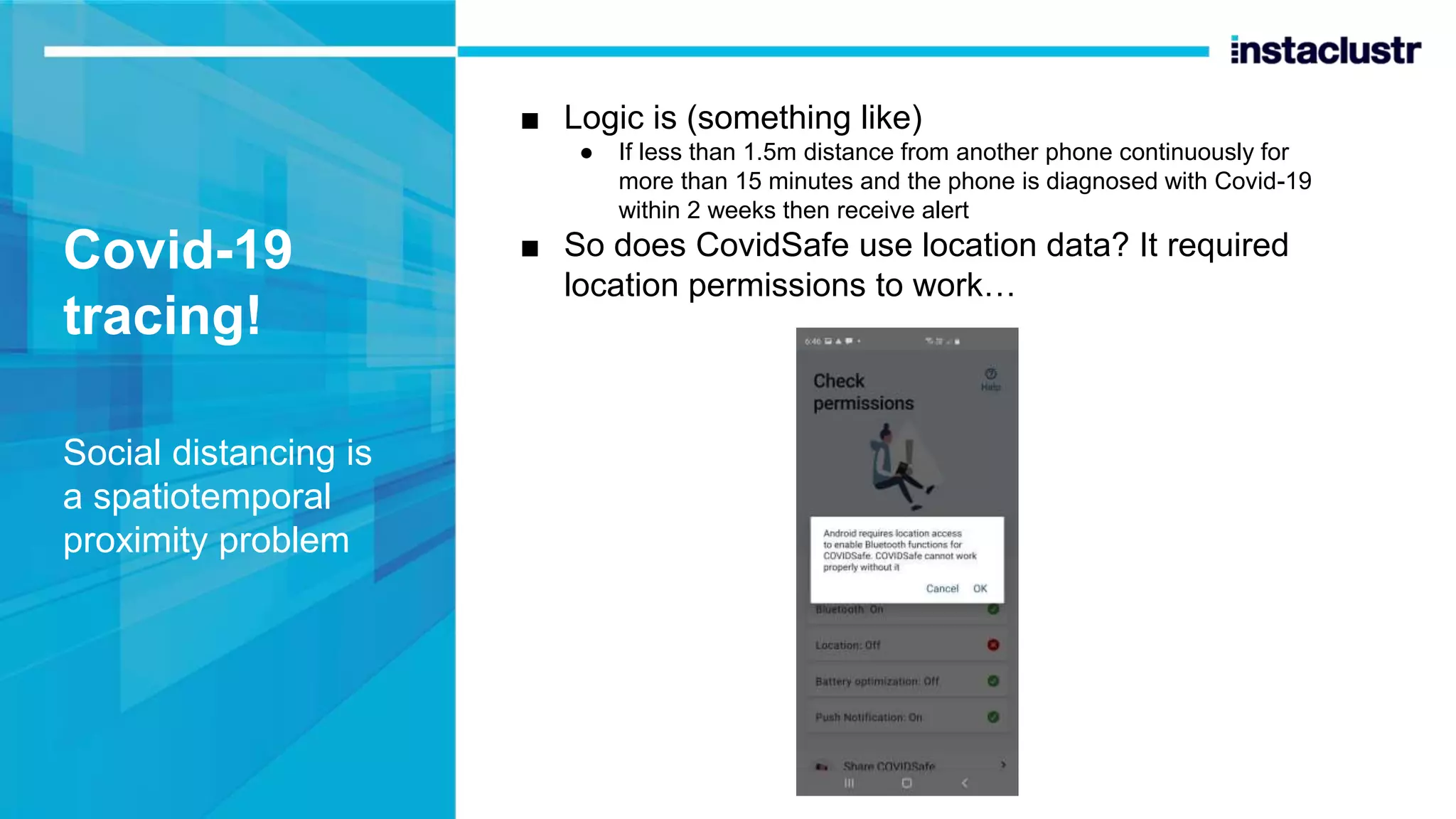

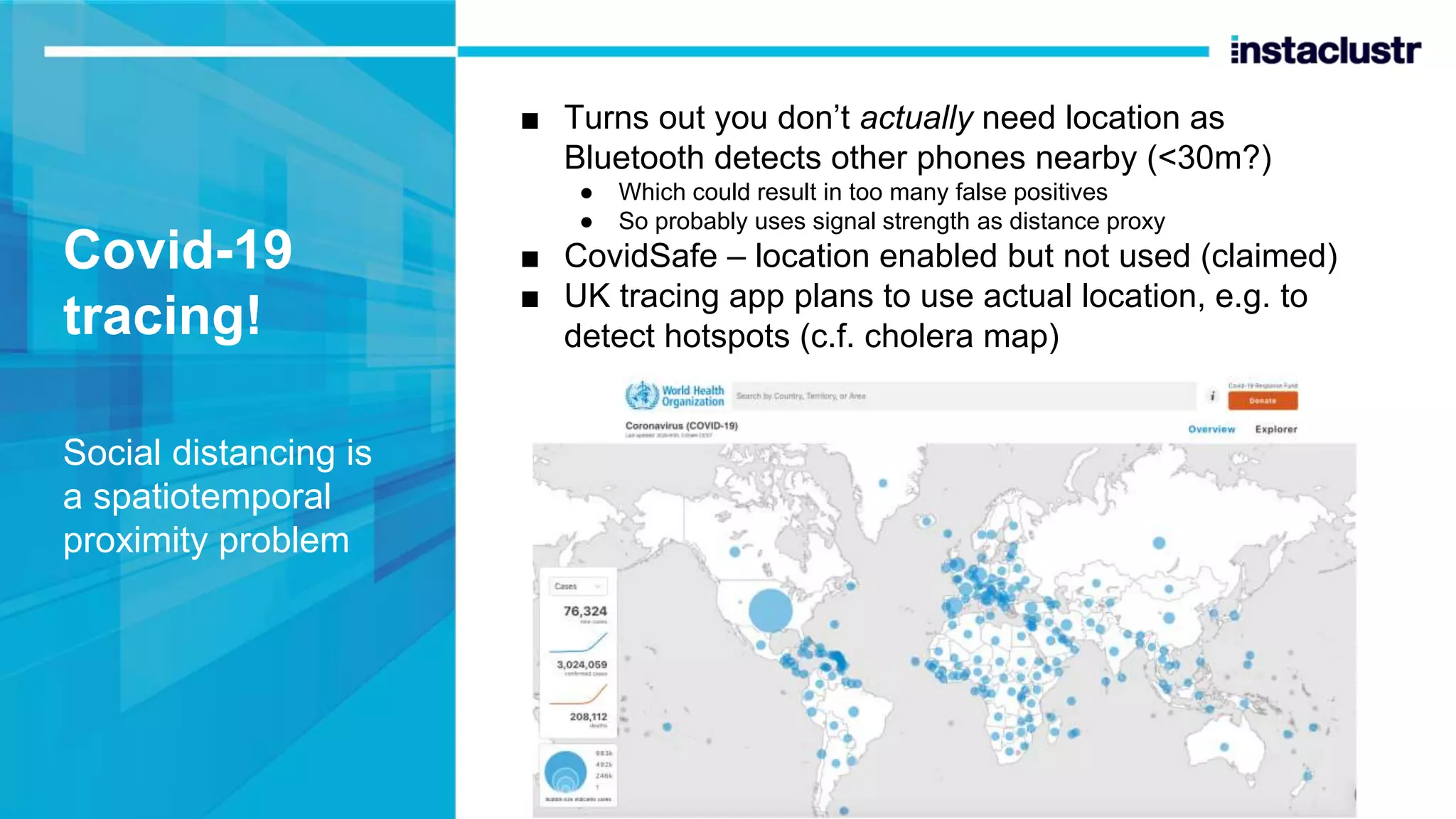

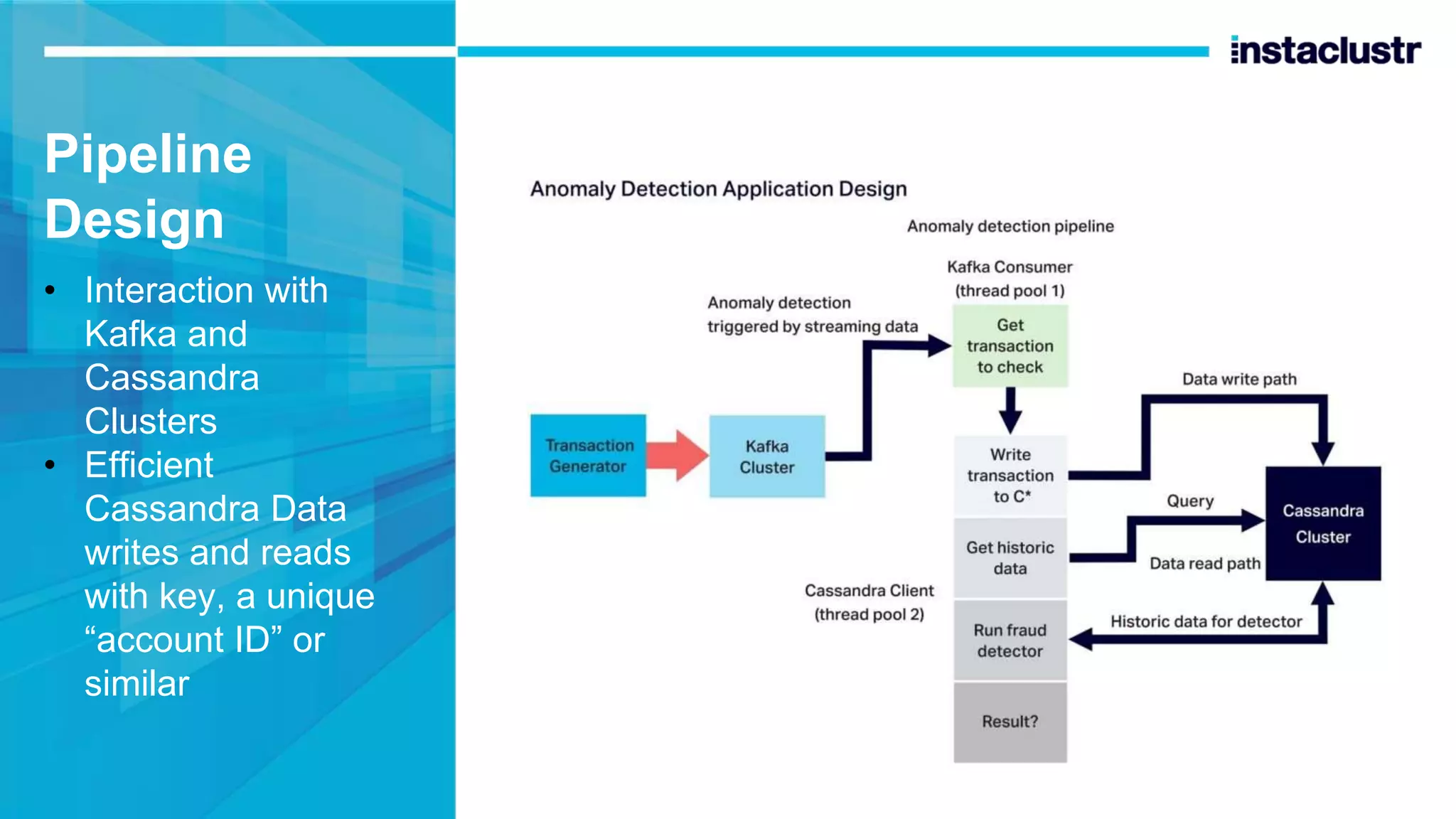

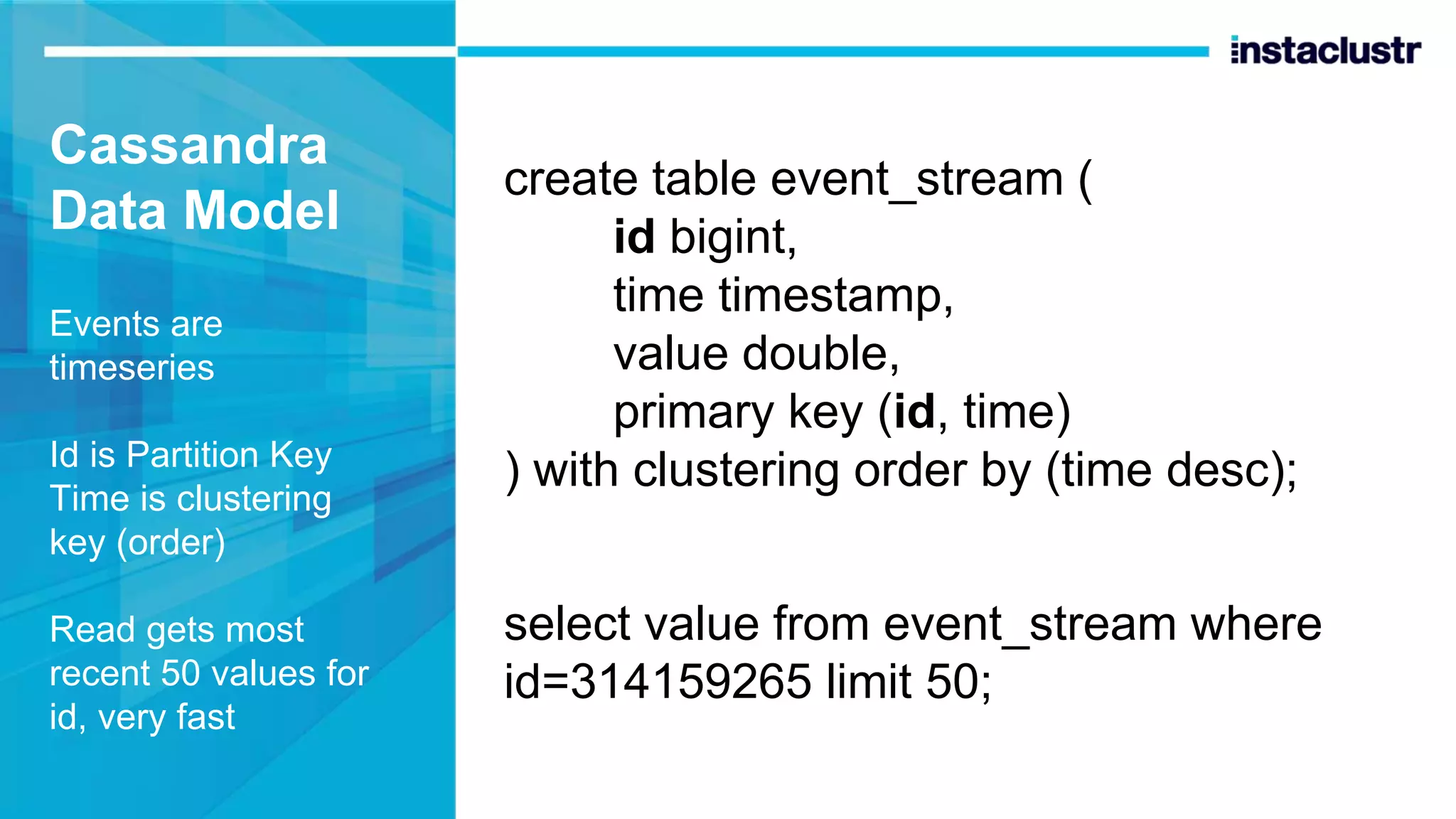

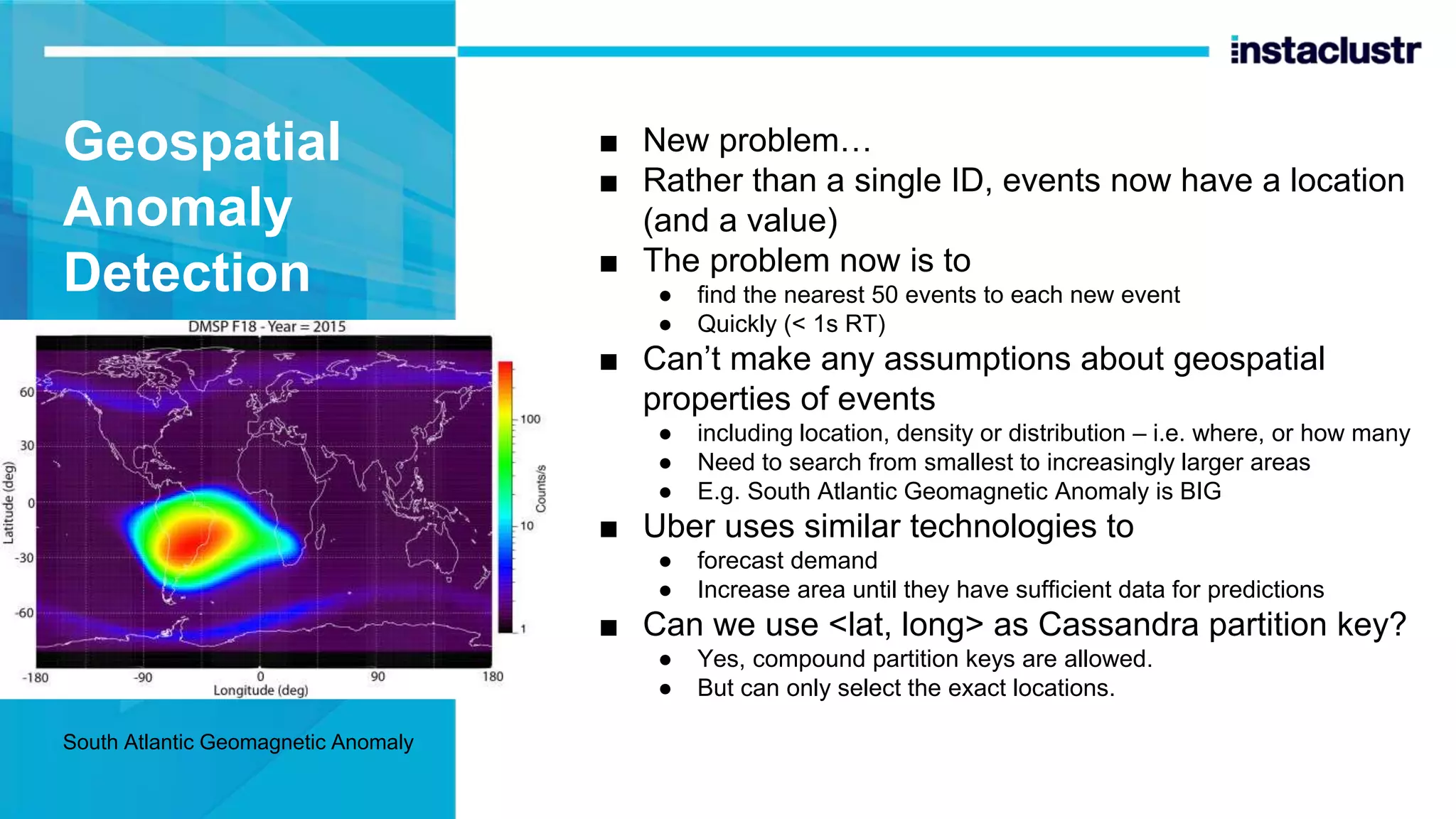

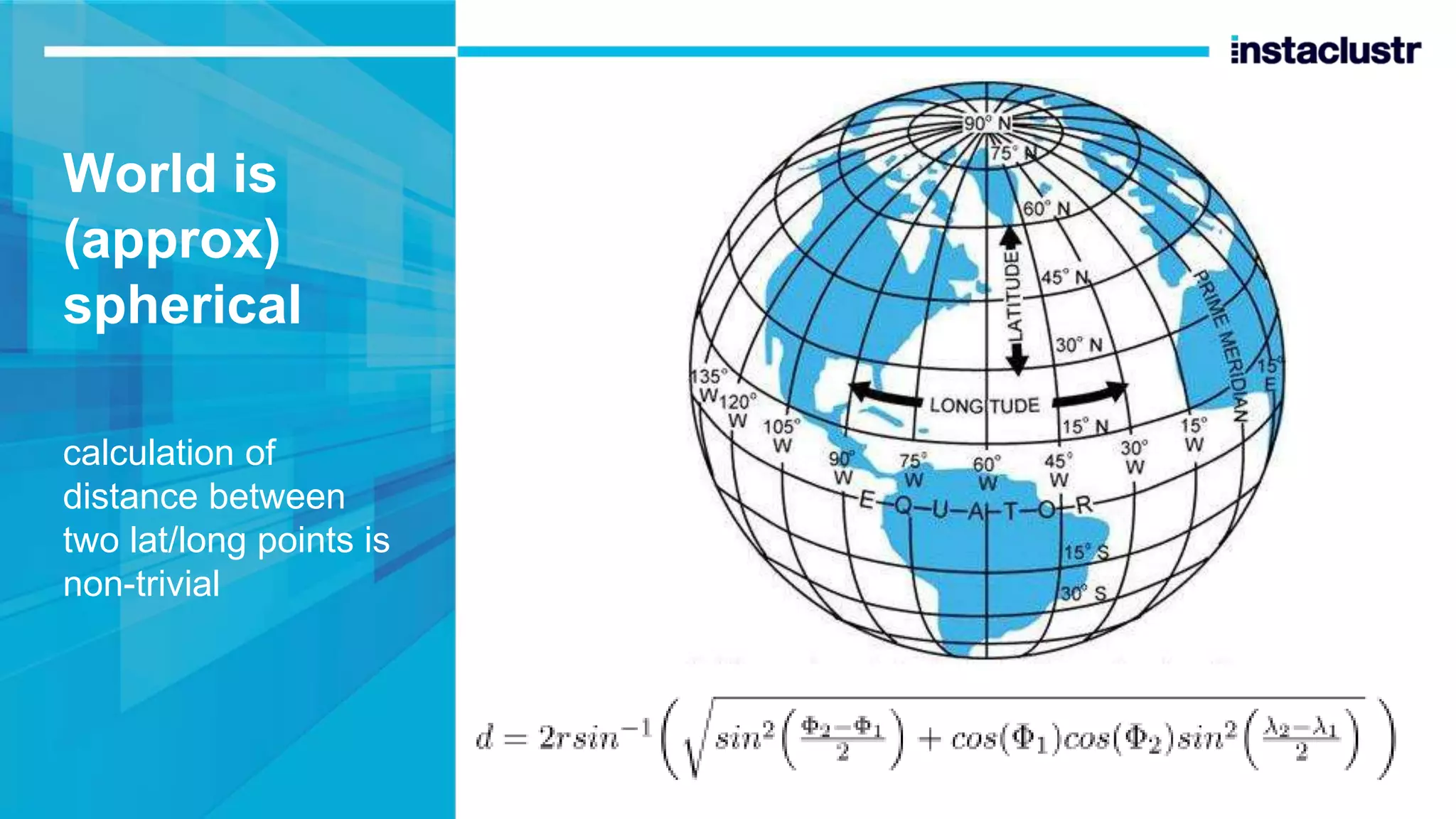

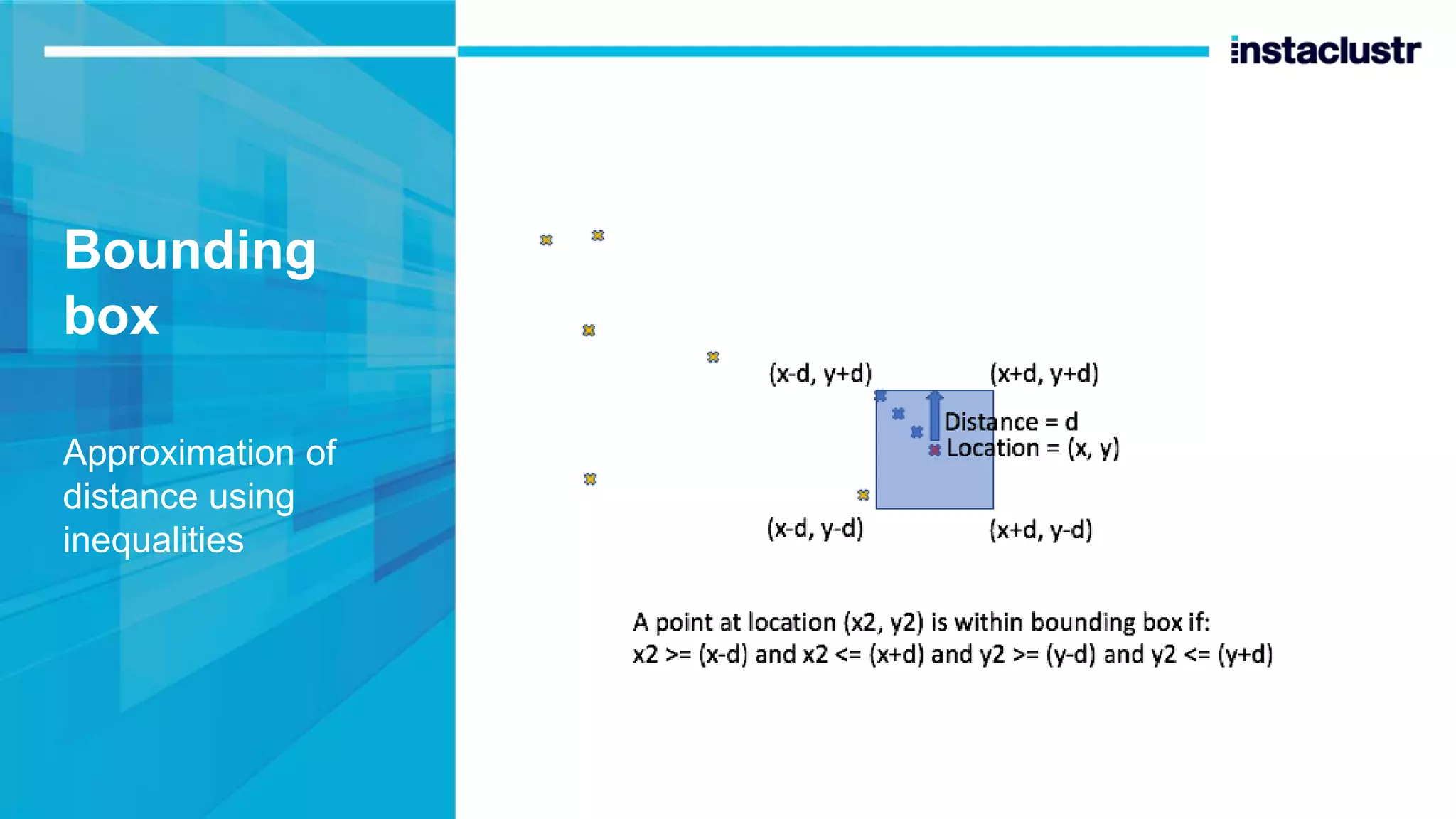

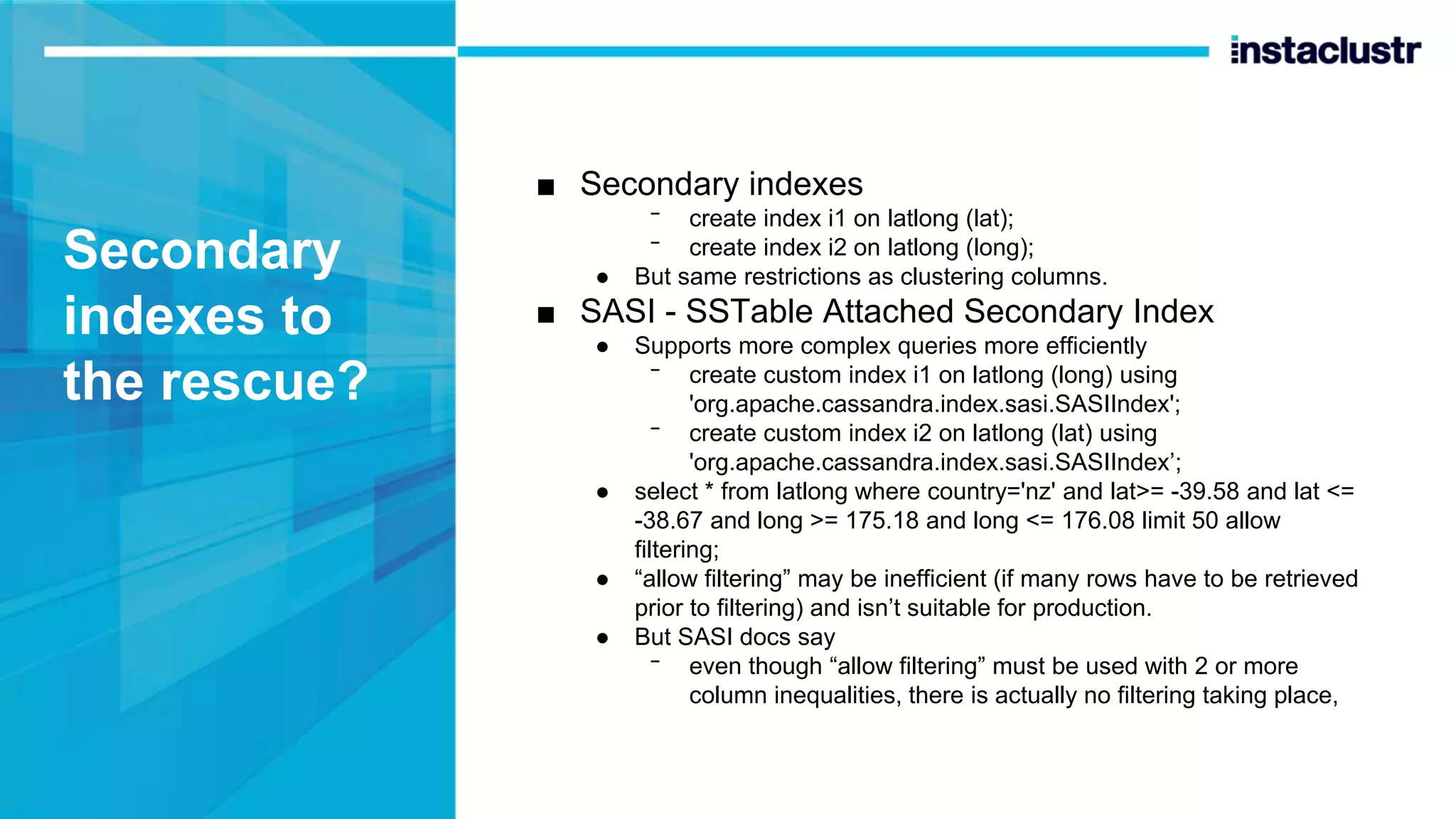

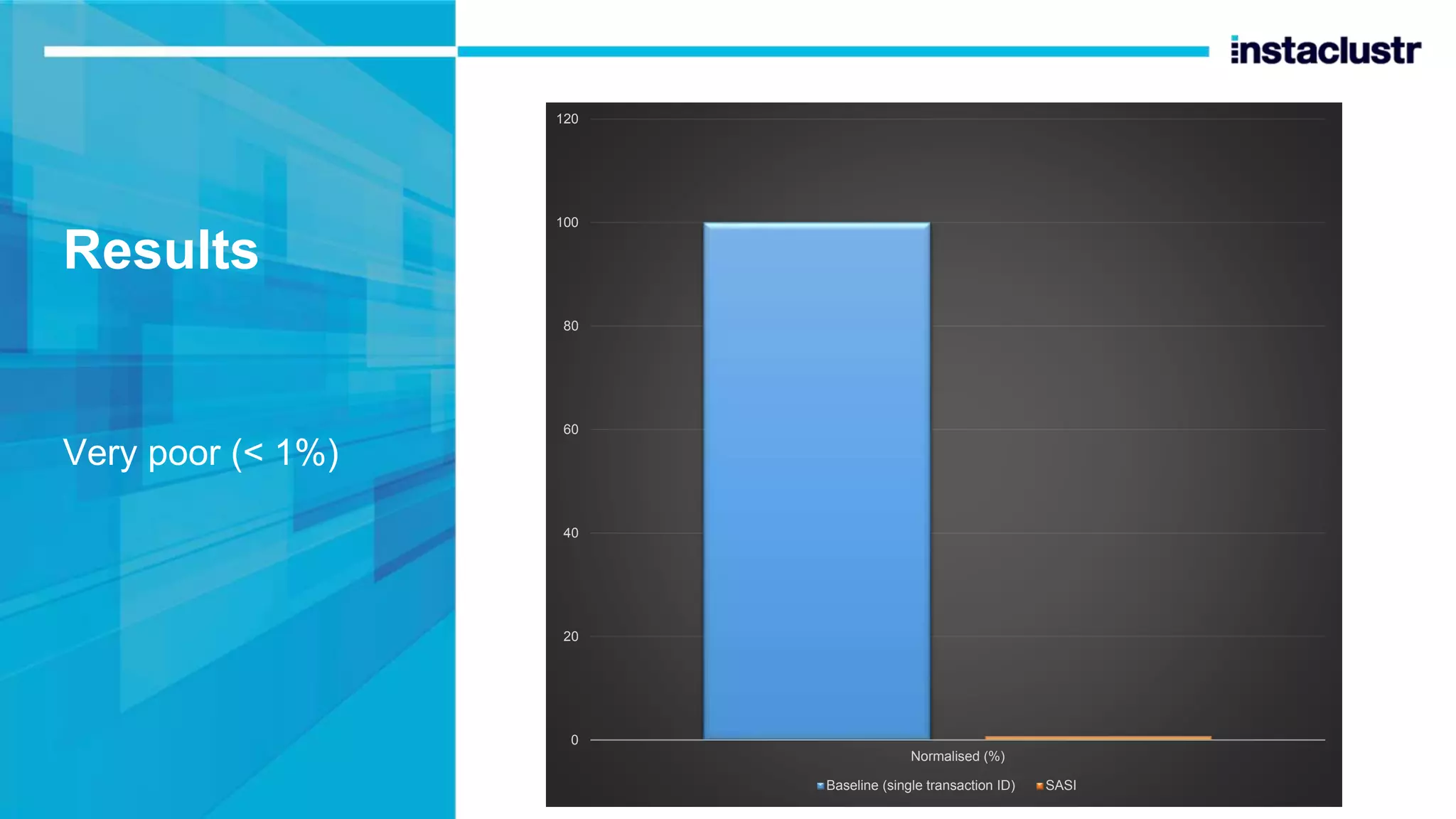

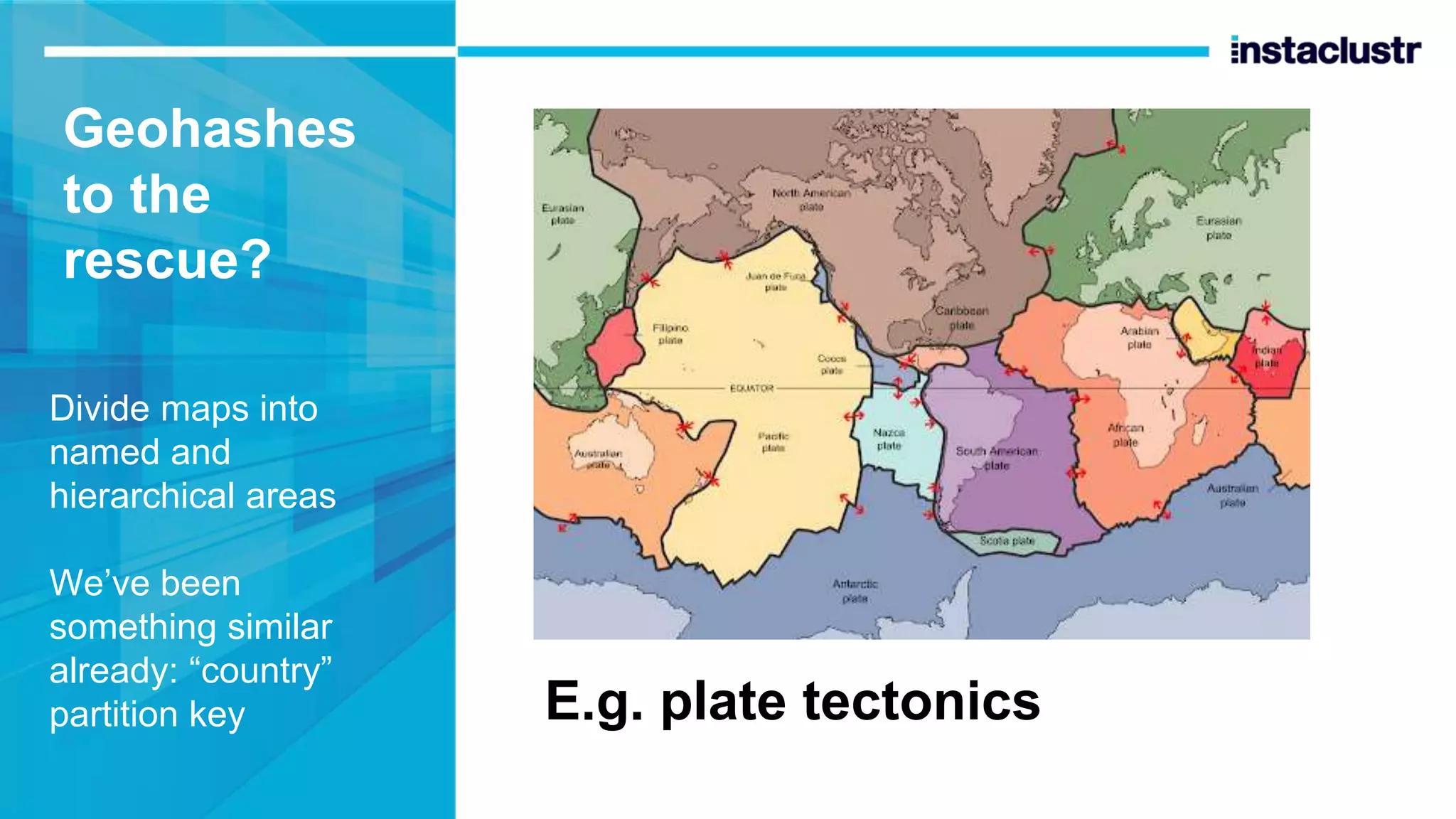

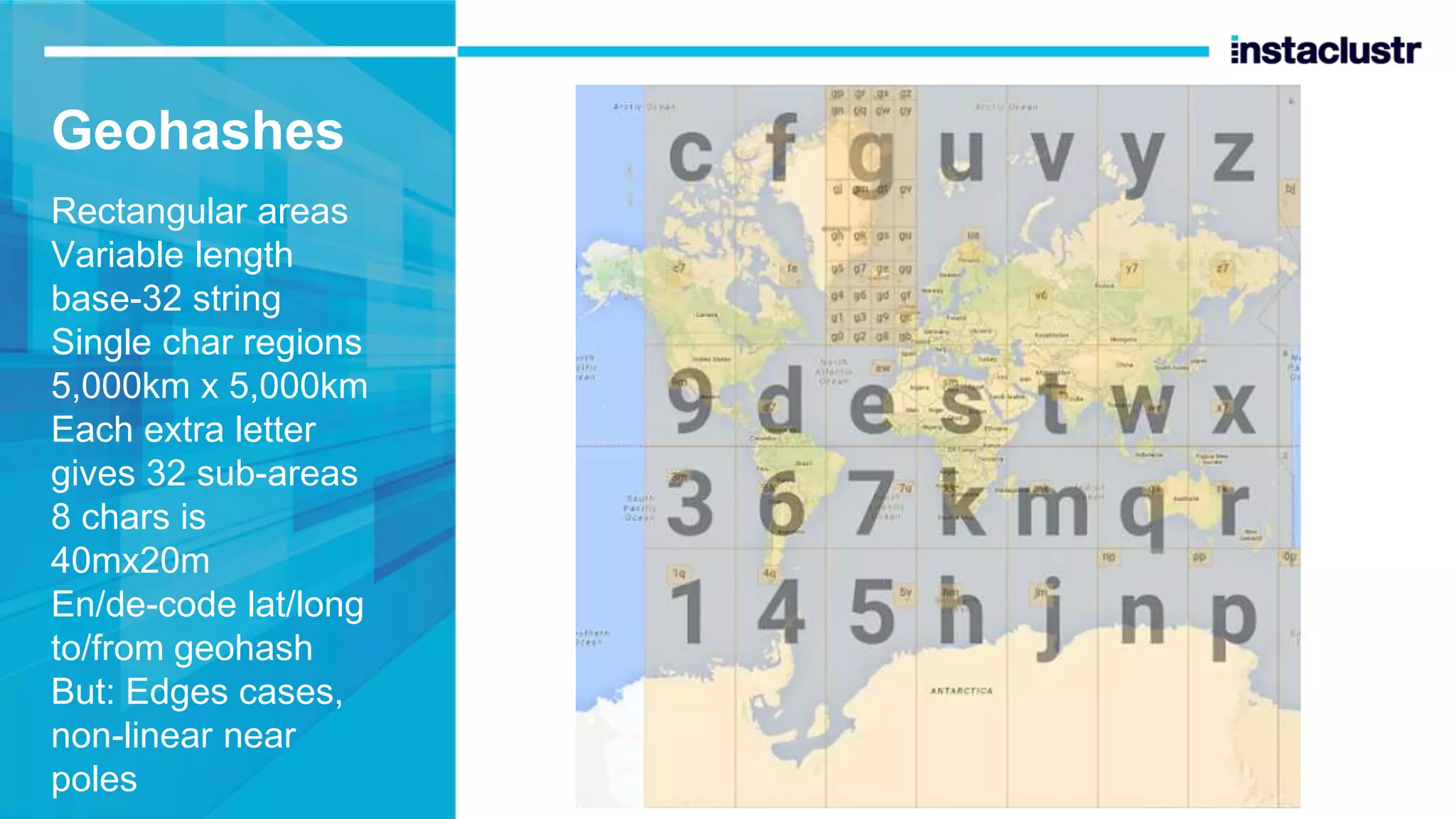

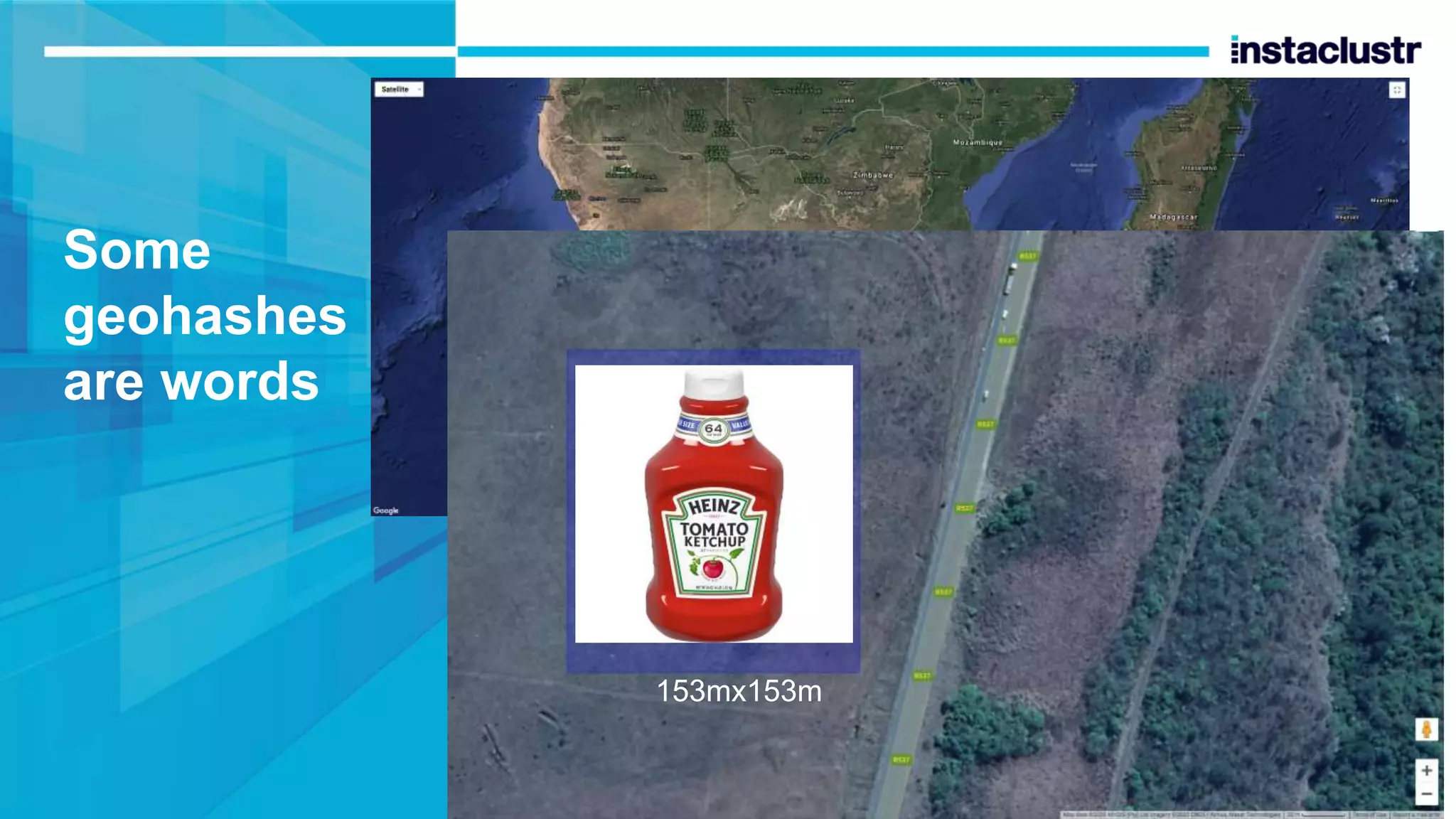

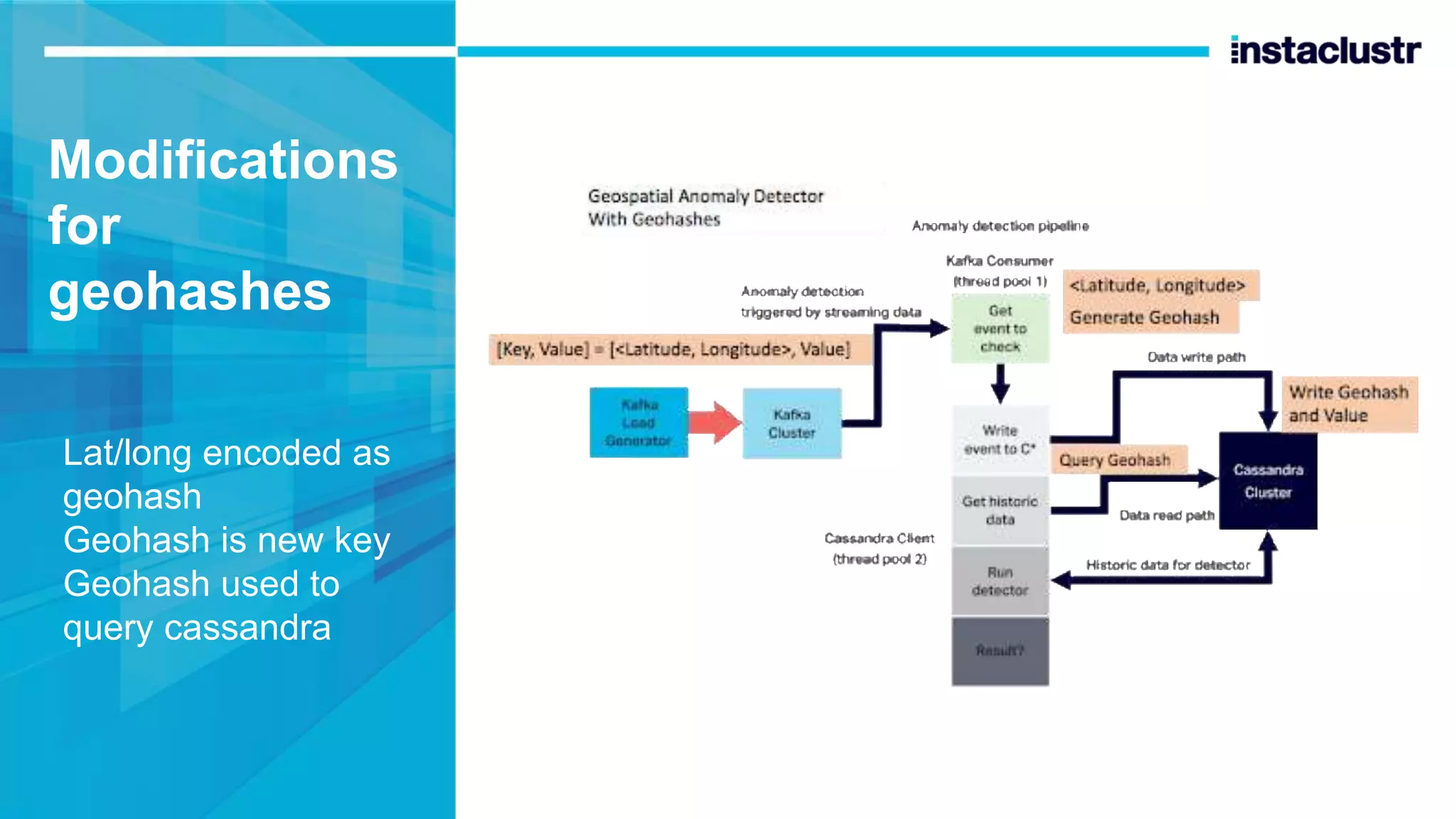

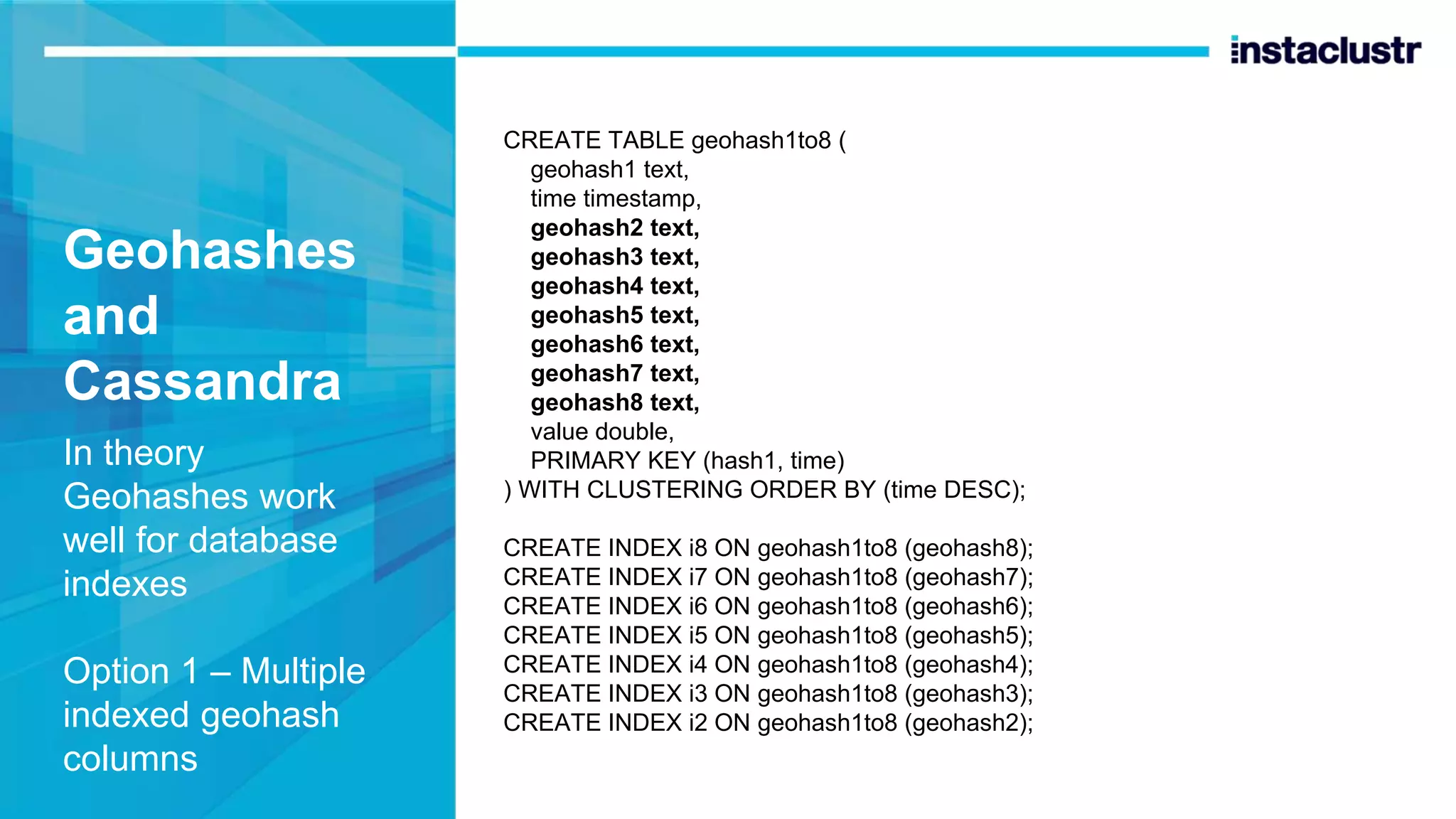

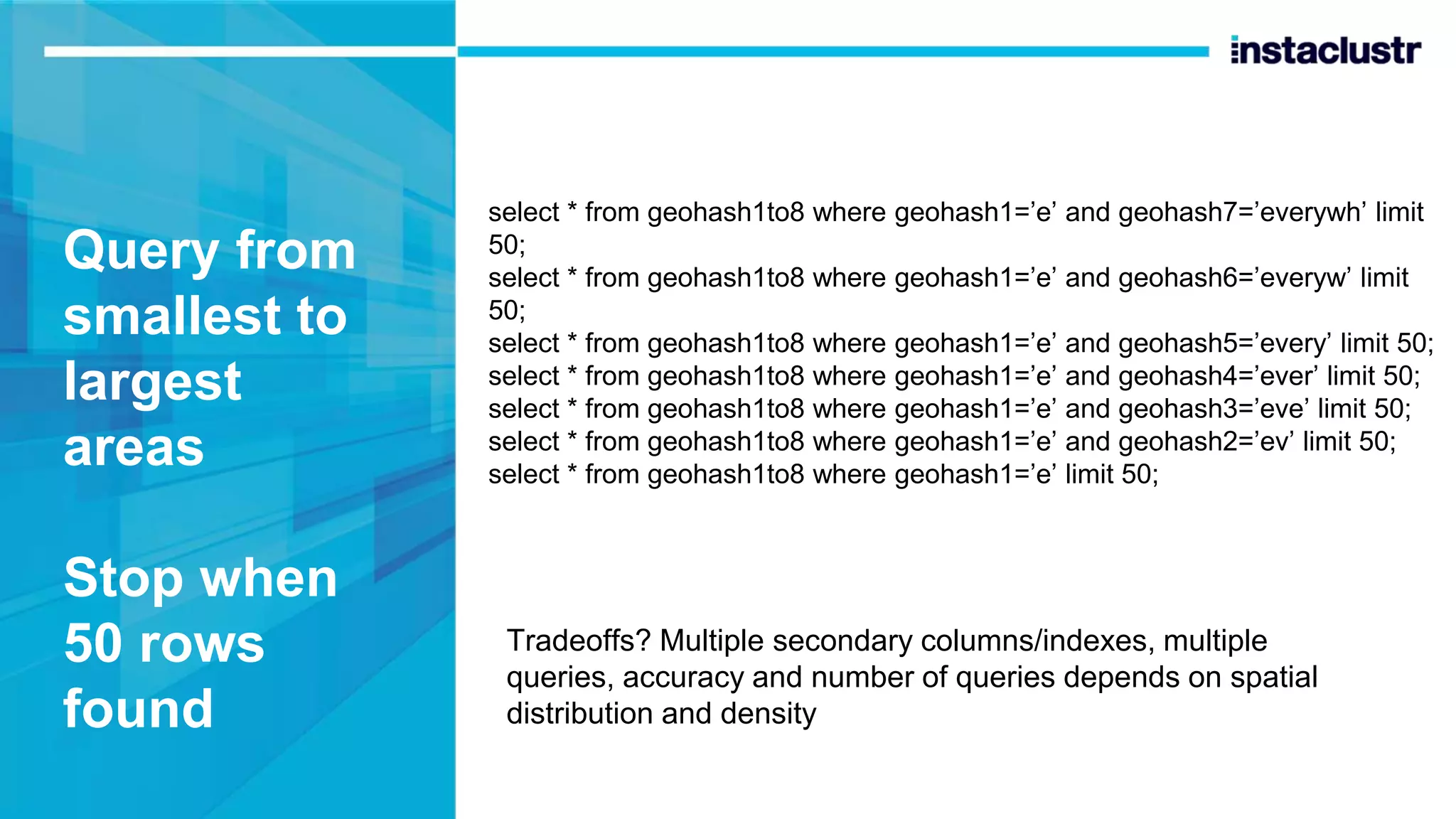

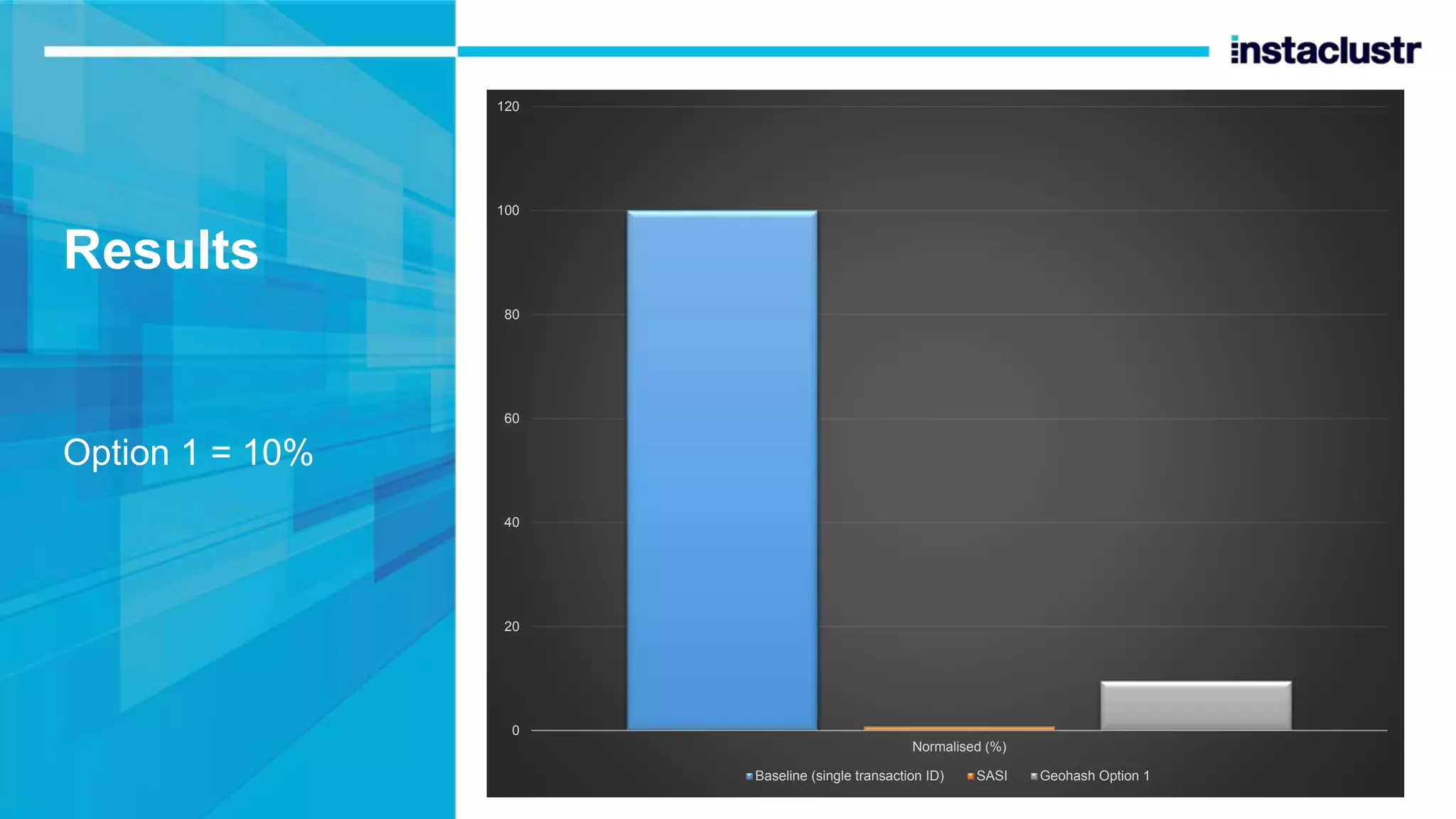

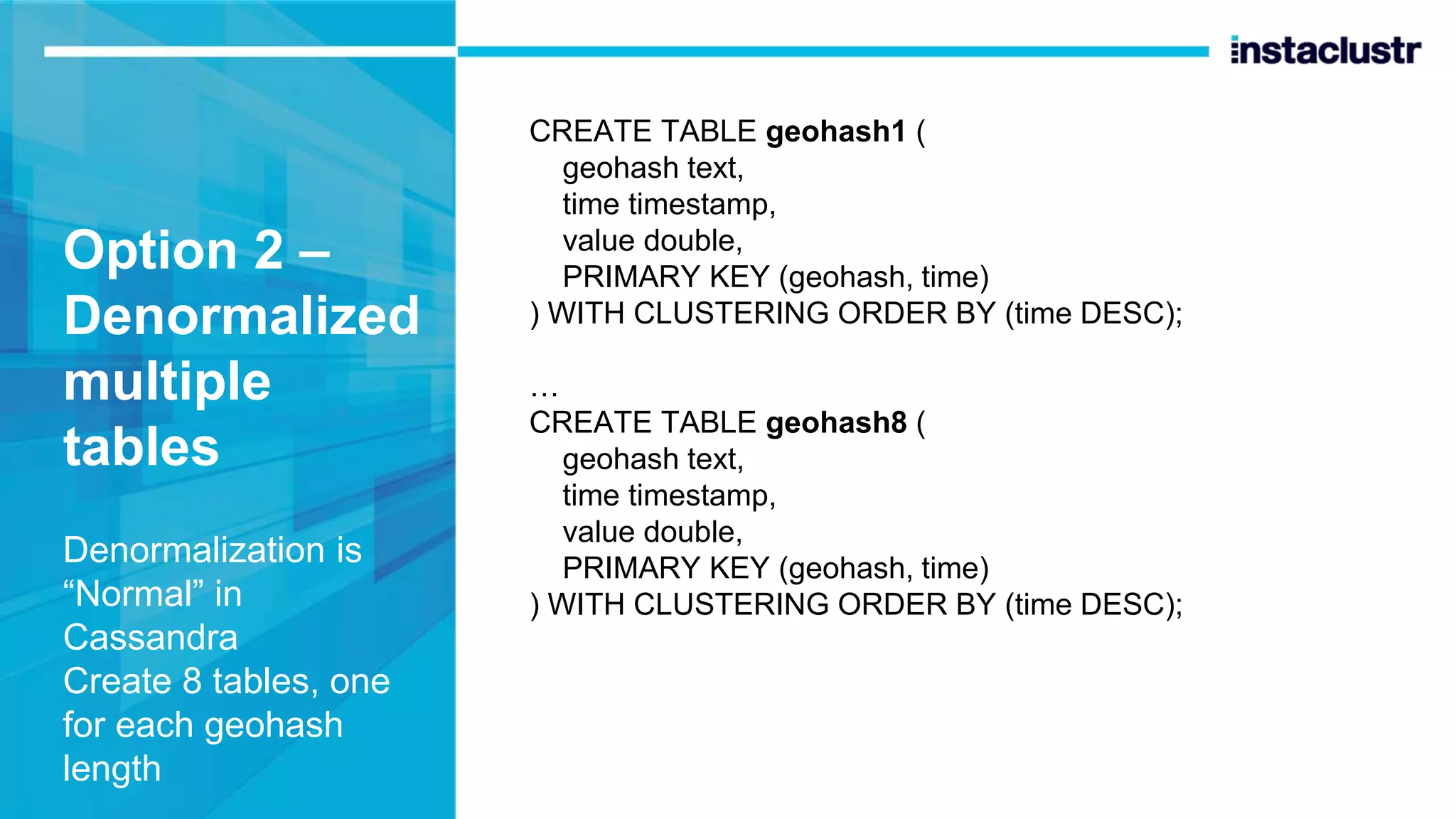

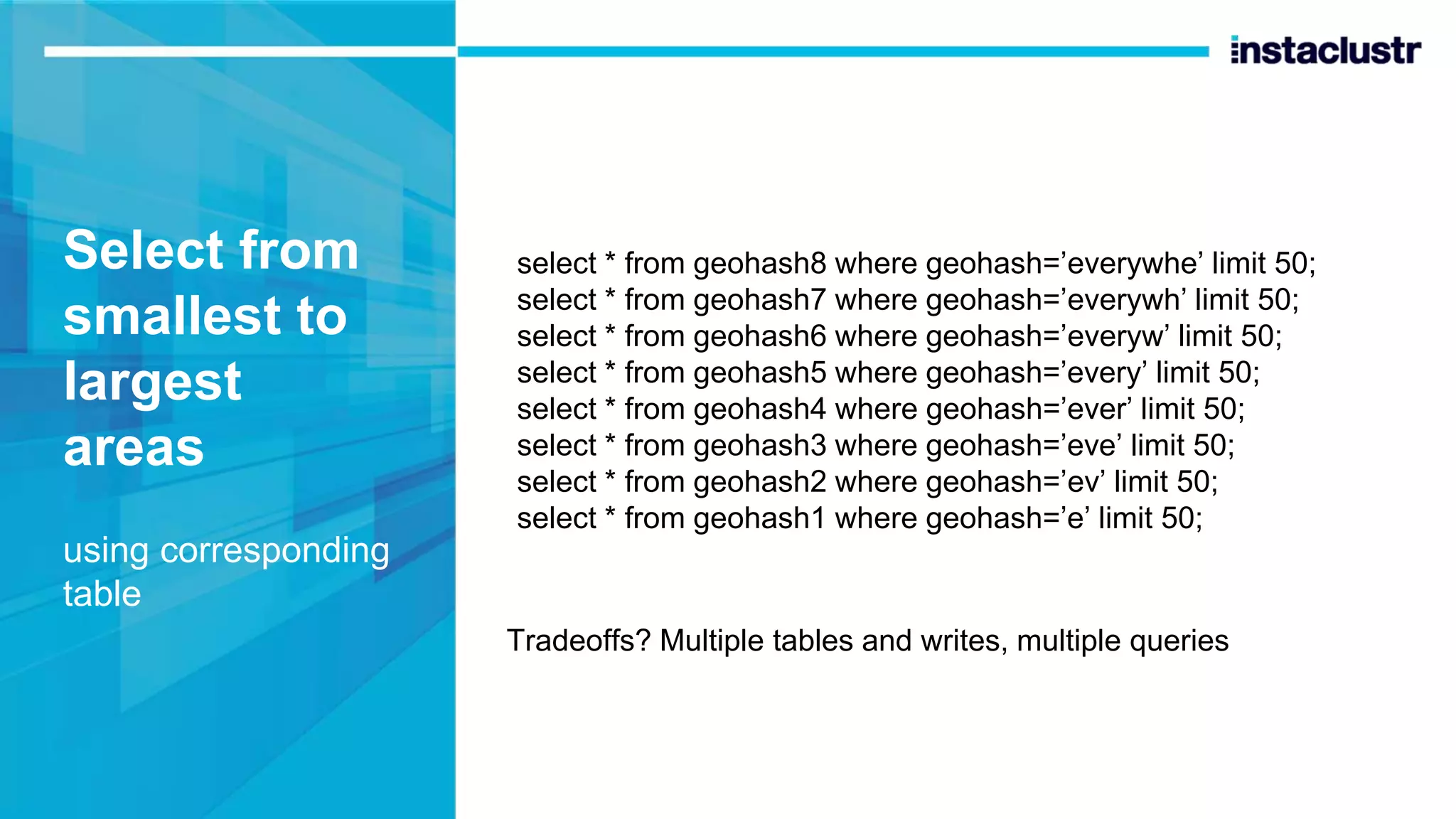

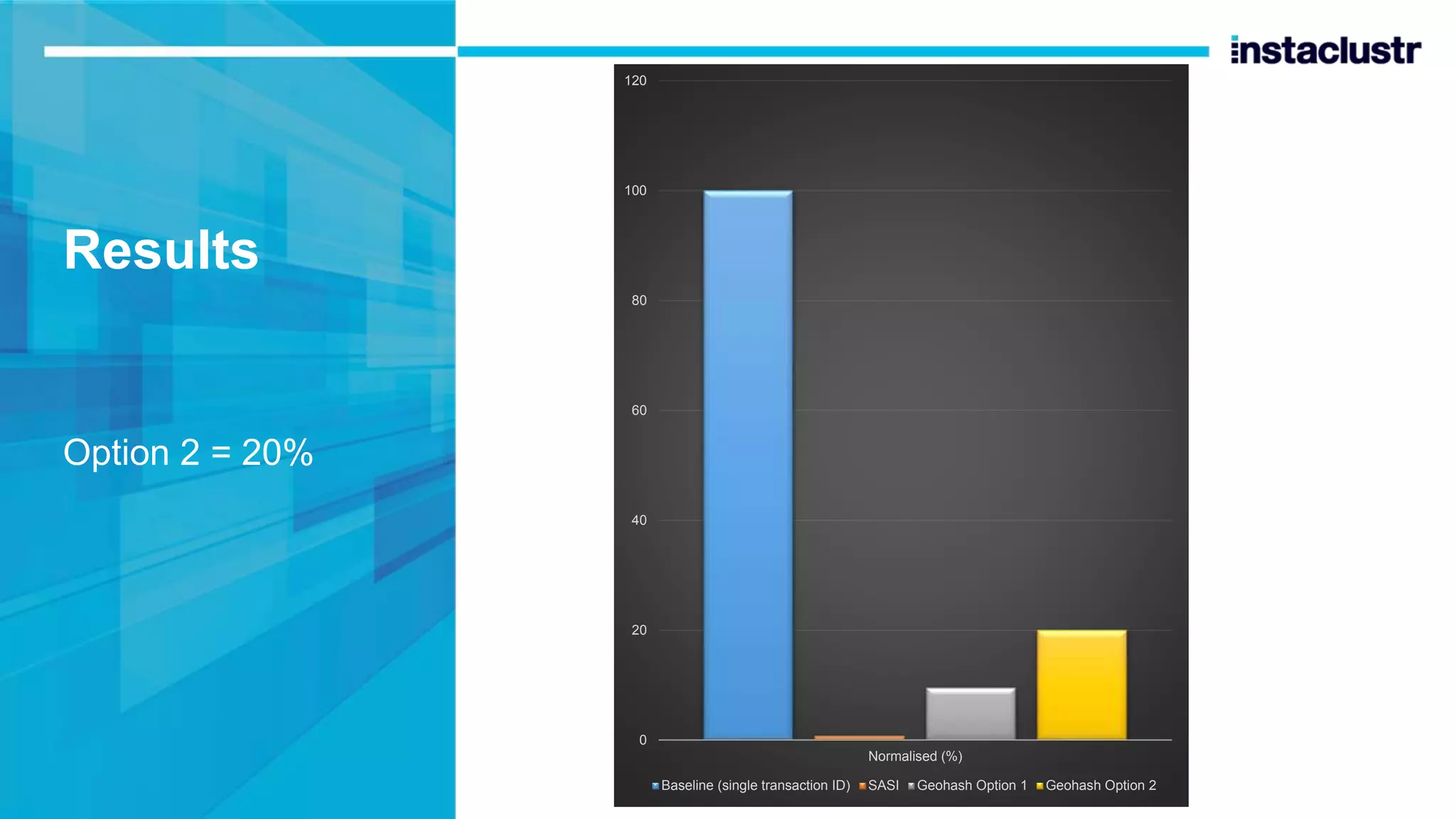

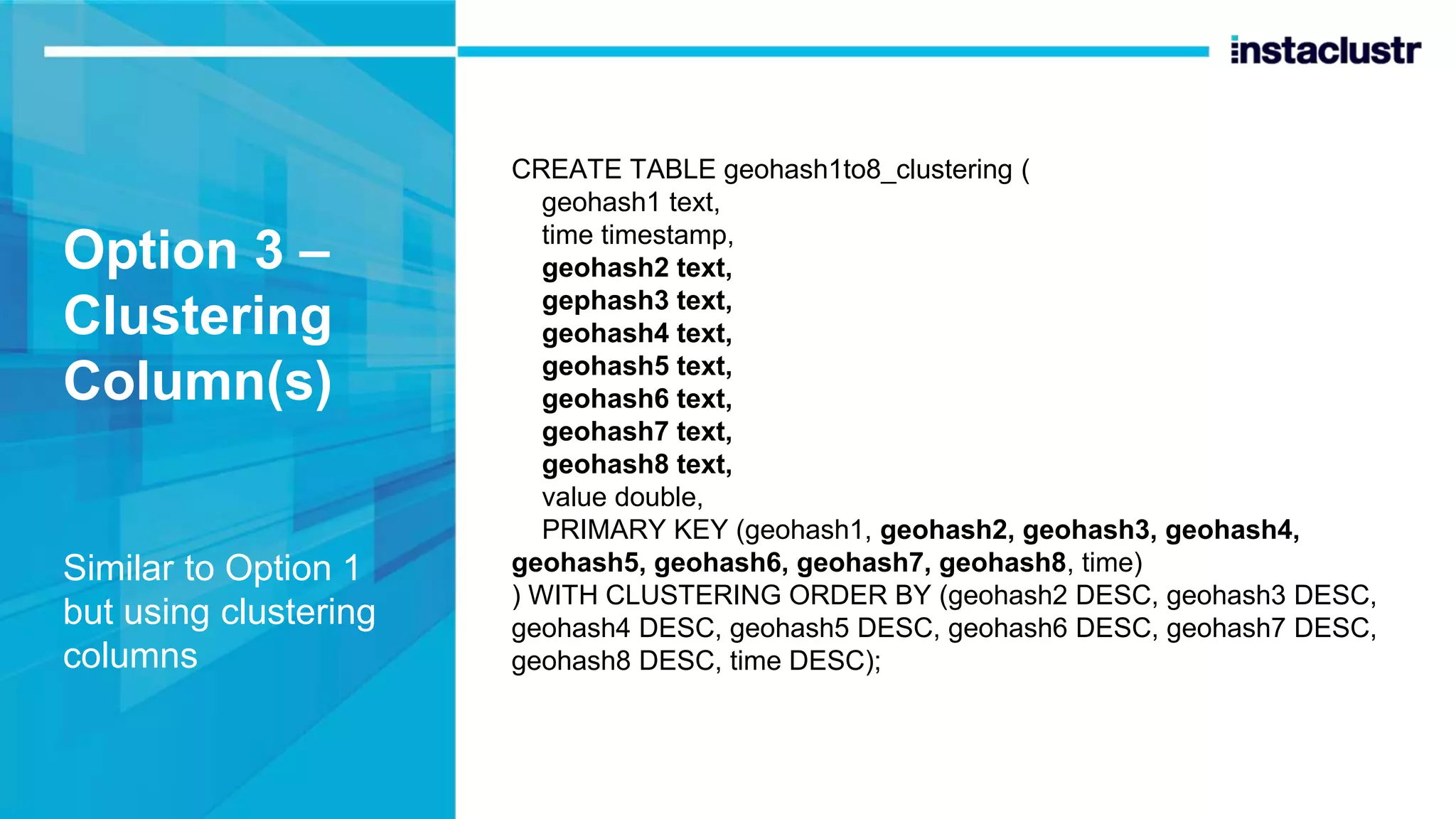

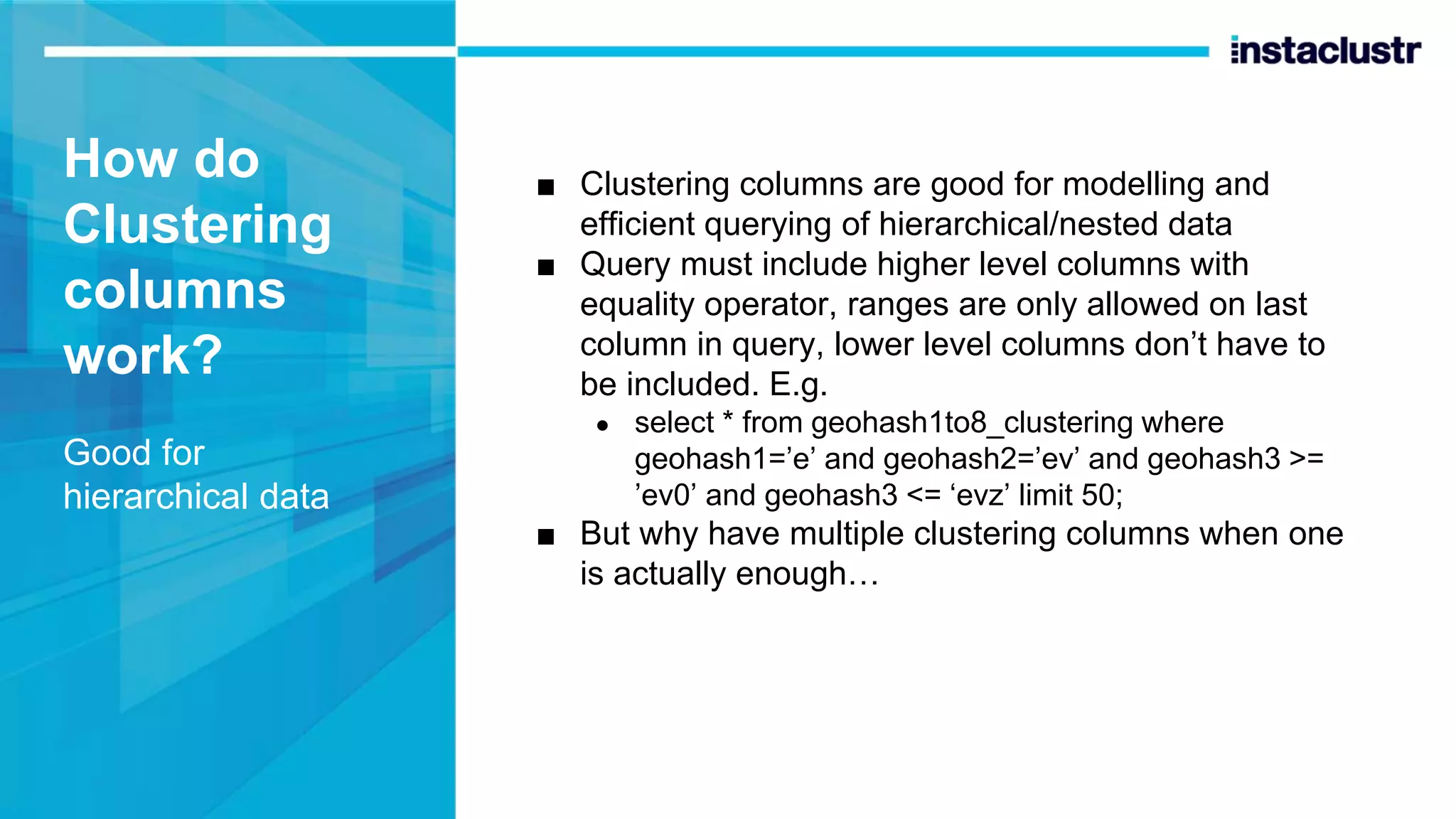

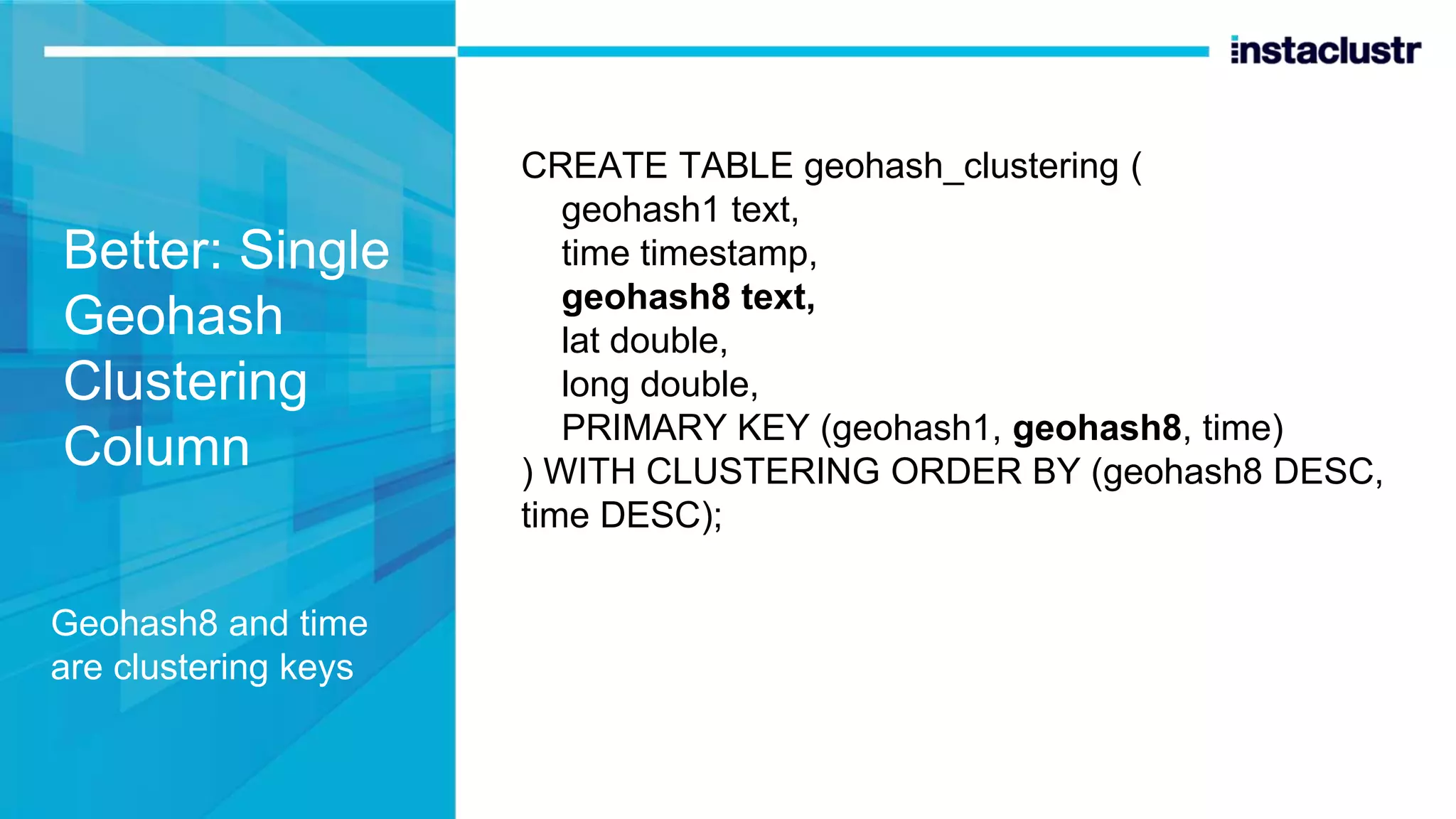

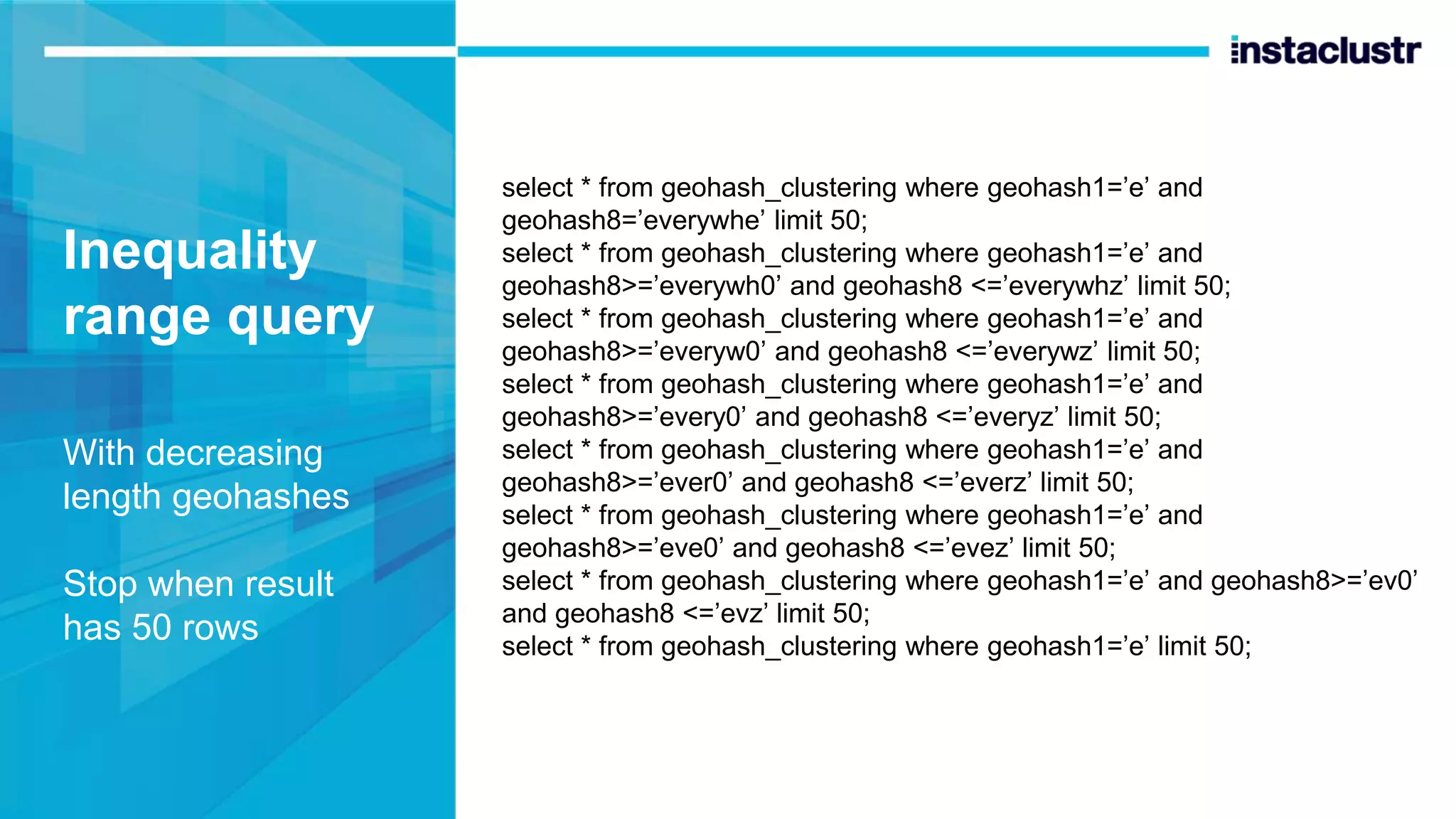

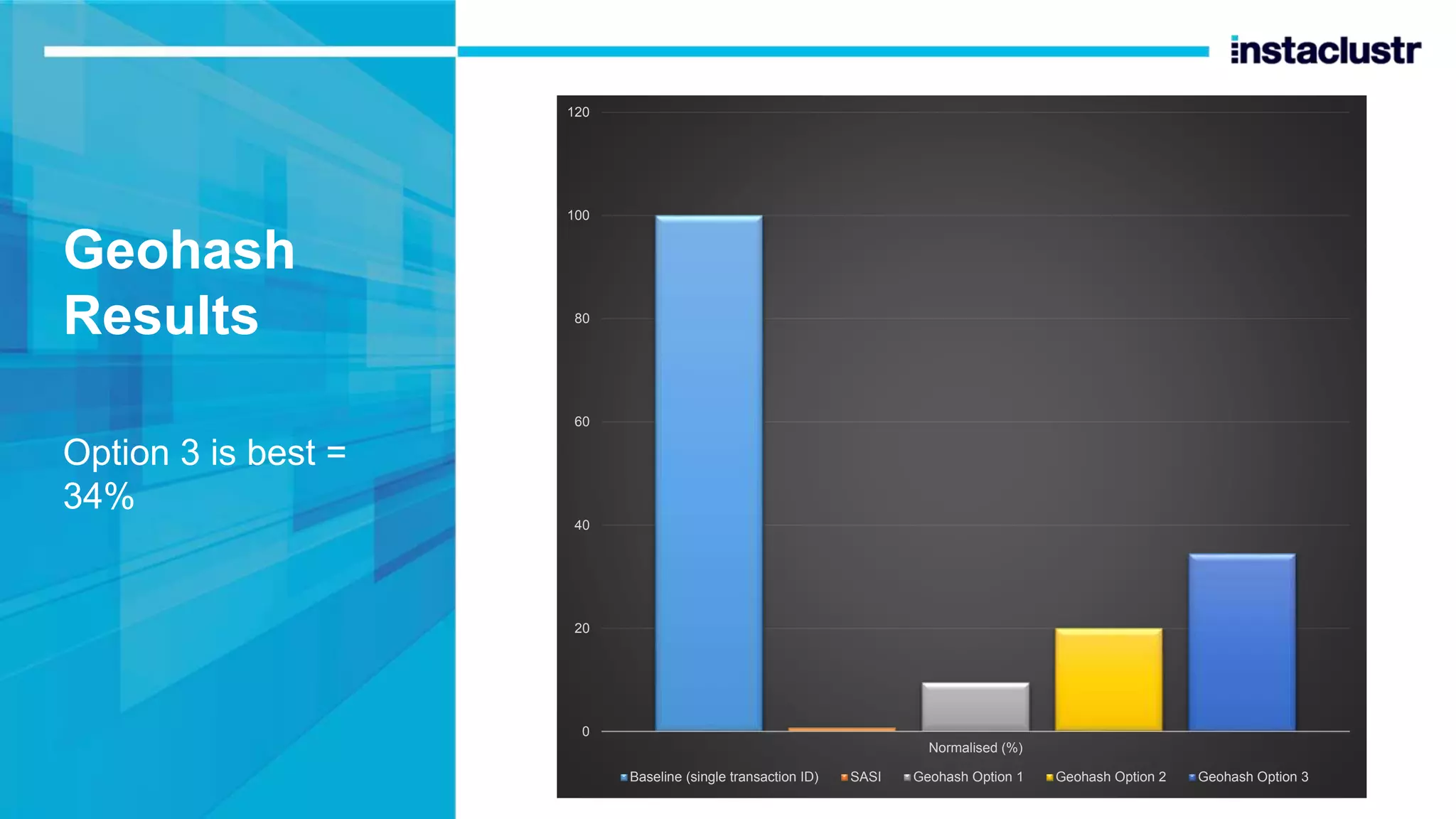

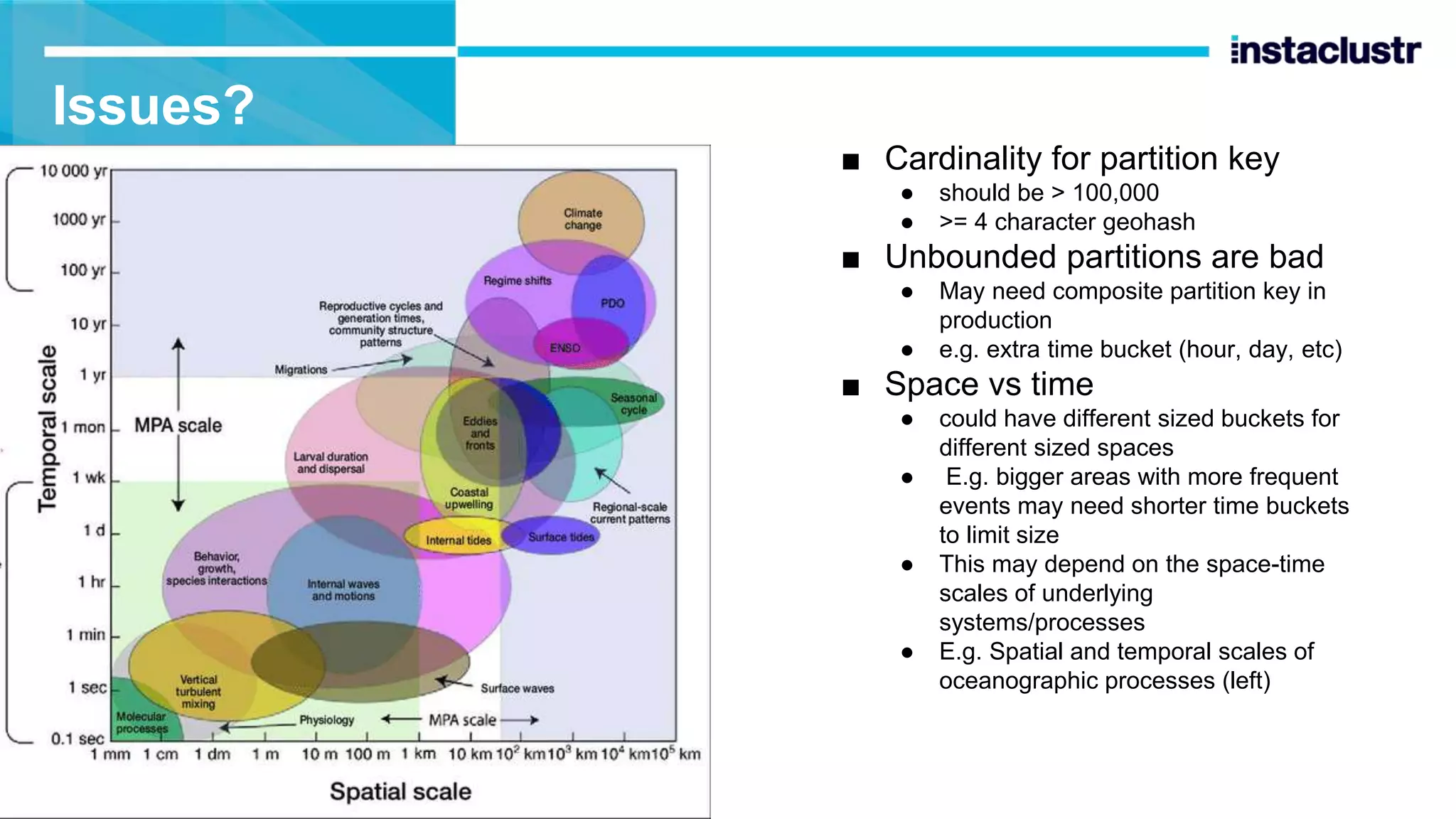

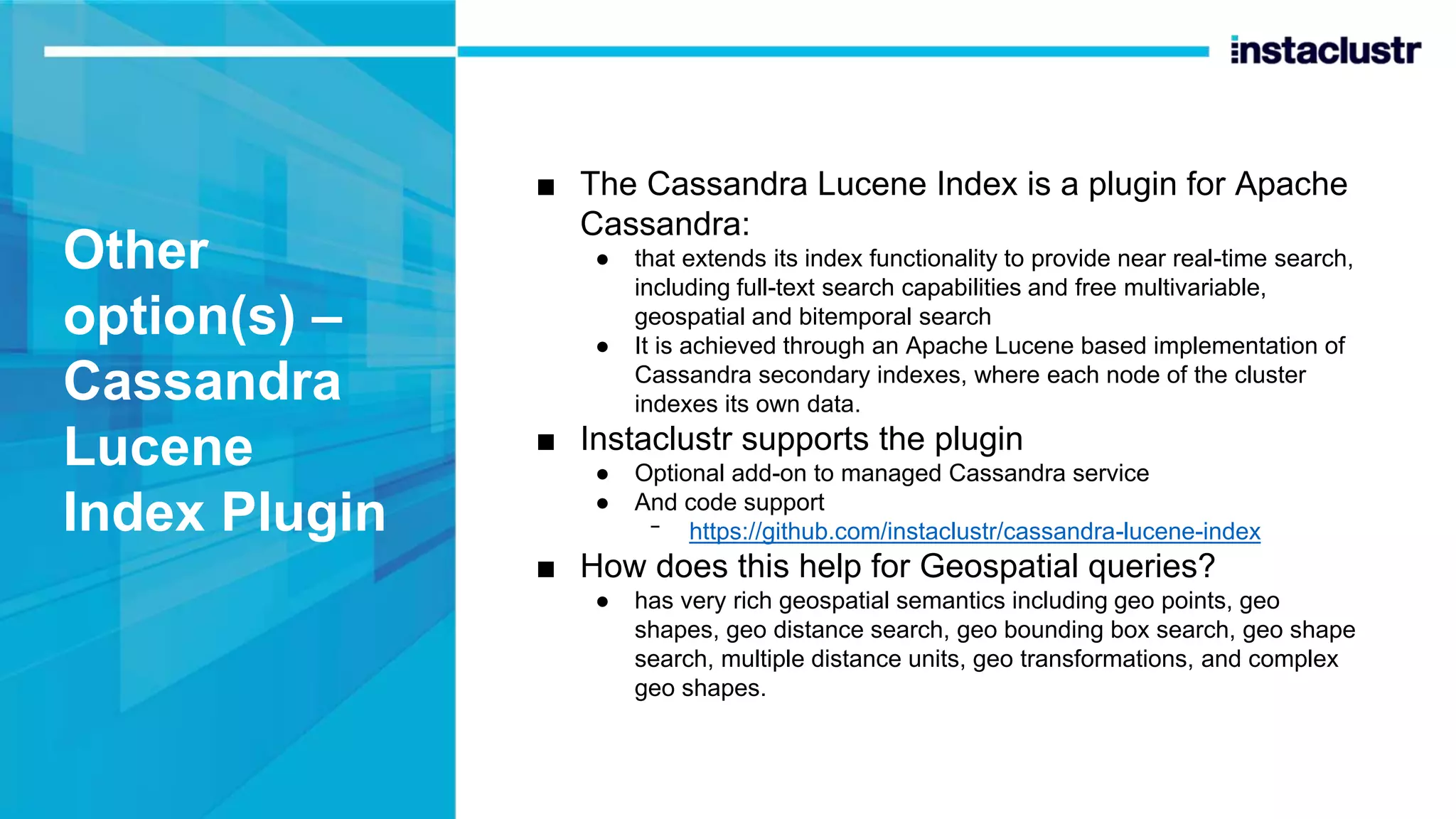

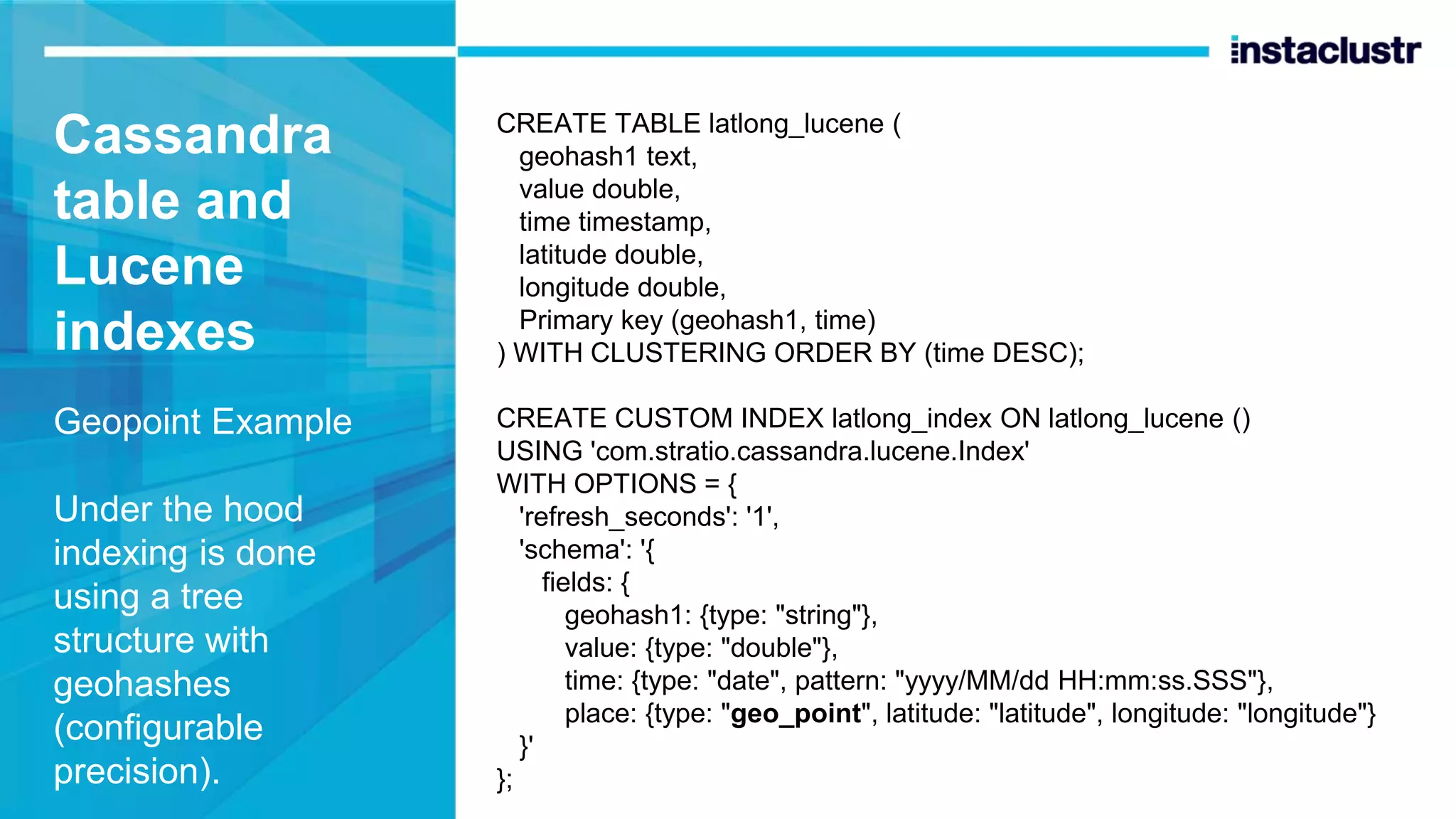

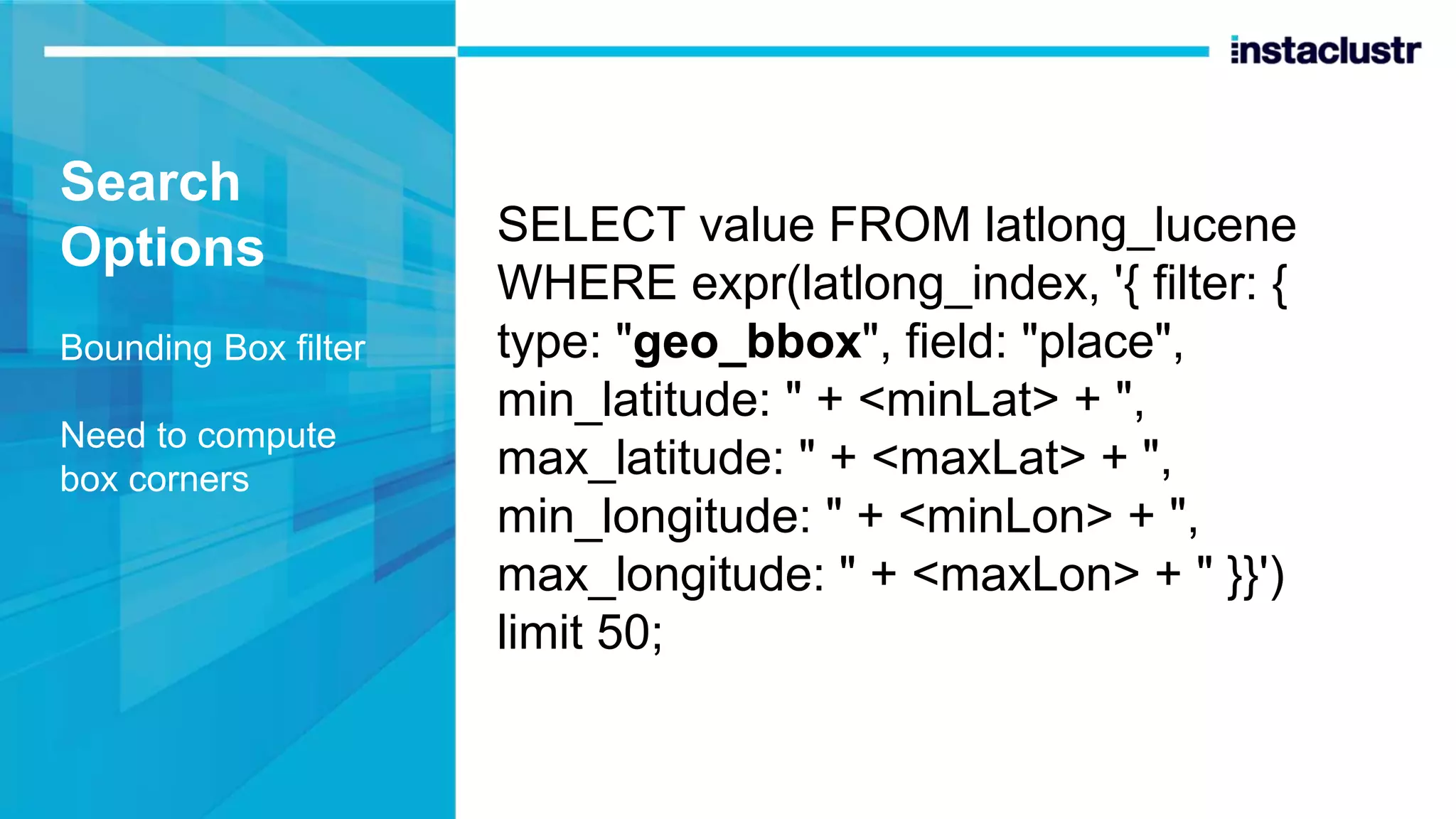

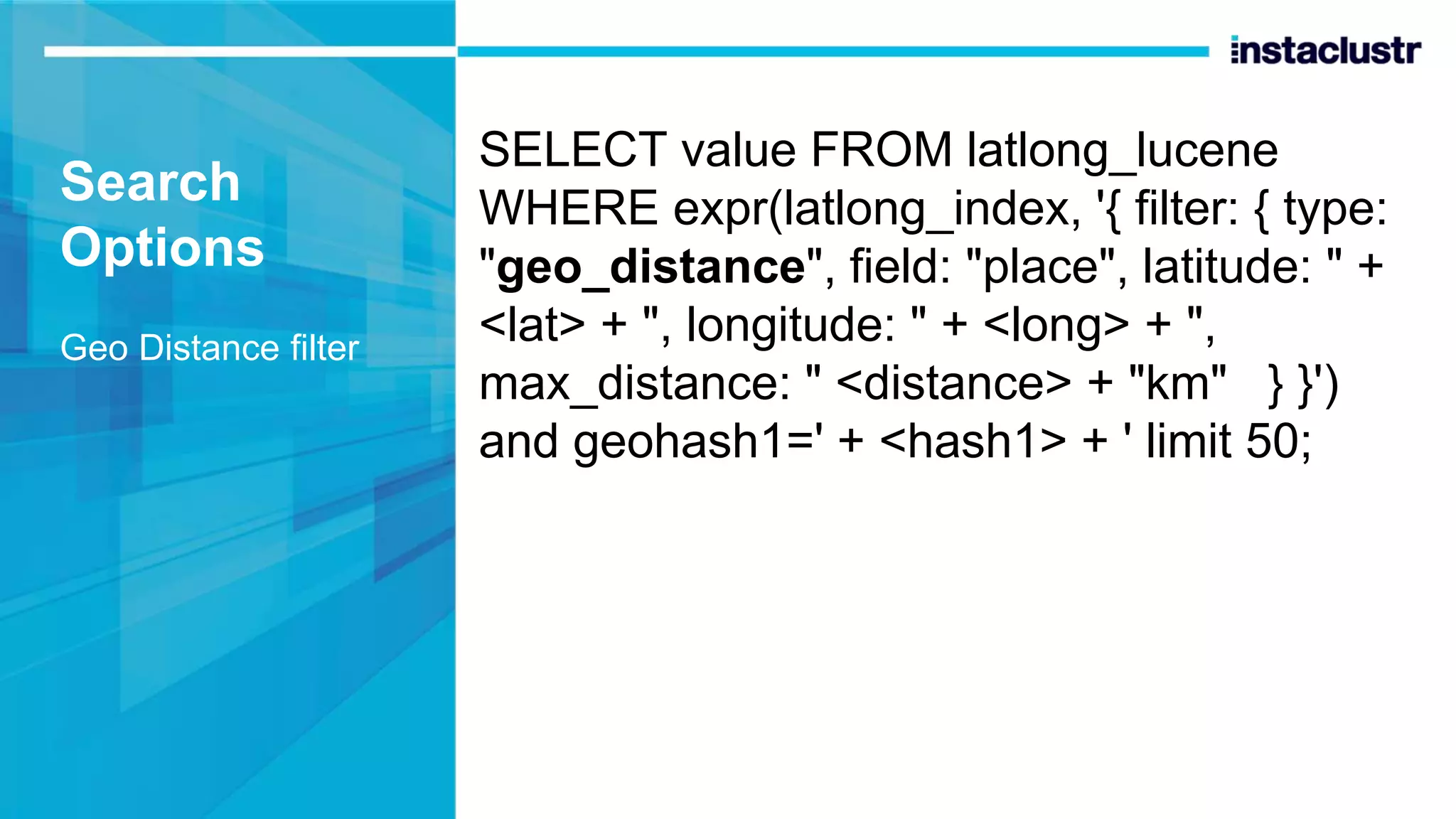

This talk covers the use of Apache Kafka and Cassandra for massively scalable, real-time geospatial data processing. It presents anomaly detection techniques and the challenges of querying spatial data, and walks through several ways to represent and query location data efficiently: bounding boxes, secondary indexes, and geohashes. It also weighs the trade-offs between SASI (SSTable-Attached Secondary Index) and other indexing approaches, and briefly introduces the Cassandra Lucene Index plugin for richer geospatial search.
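Geohashes come up repeatedly in the talk, so here is a minimal sketch of how one is computed, assuming the standard geohash scheme: alternately bisect the longitude and latitude ranges (longitude first), record one bit per bisection, and emit every 5 bits as a character of the geohash base-32 alphabet. The function name `geohash_encode` is hypothetical and is not taken from the talk's code.

```python
# Standard geohash base-32 alphabet (omits a, i, l, o)
BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"


def geohash_encode(lat: float, lon: float, precision: int = 8) -> str:
    """Encode a point as a geohash by alternately bisecting longitude and latitude."""
    lat_rng = [-90.0, 90.0]
    lon_rng = [-180.0, 180.0]
    chars = []
    bits = 0      # bits accumulated for the current character
    value = 0     # 5-bit value of the current character
    even = True   # geohash starts with a longitude bit
    while len(chars) < precision:
        # Pick the range to bisect; `rng` aliases lon_rng or lat_rng, so updates stick.
        rng, coord = (lon_rng, lon) if even else (lat_rng, lat)
        mid = (rng[0] + rng[1]) / 2
        if coord >= mid:
            value = (value << 1) | 1  # upper half -> bit 1
            rng[0] = mid
        else:
            value <<= 1               # lower half -> bit 0
            rng[1] = mid
        even = not even
        bits += 1
        if bits == 5:
            chars.append(BASE32[value])
            bits, value = 0, 0
    return "".join(chars)


print(geohash_encode(42.6, -5.6, 5))  # prints "ezs42"
```

Because each extra character only refines the same bisection, a longer geohash of a point always starts with its shorter geohash. That prefix property is what makes geohashes usable as coarse-to-fine spatial keys in Cassandra.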

![Bounding boxes and Cassandra? Use "country" as the partition key and lat/long/time as clustering keys. But a query with multiple inequalities can't be run: CREATE TABLE latlong ( country text, lat double, long double, time timestamp, PRIMARY KEY (country, lat, long, time) ) WITH CLUSTERING ORDER BY (lat ASC, long ASC, time DESC); select * from latlong where country='nz' and lat >= -39.58 and lat <= -38.67 and long >= 175.18 and long <= 176.08 limit 50; InvalidRequest: Error from server: code=2200 [Invalid query] message="Clustering column "long" cannot be restricted (preceding column "lat" is restricted by a non-EQ relation)"](https://image.slidesharecdn.com/bigdatameetupgeotalkinstaclustraprilupdate-200430060616/75/Massively-Scalable-Real-time-Geospatial-Data-Processing-with-Apache-Kafka-and-Cassandra-Melbourne-Distributed-Meetup-30-April-2020-online-24-2048.jpg)
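The error arises because rows in a partition are stored sorted by the clustering columns, so Cassandra can serve one contiguous slice on `lat`, but the matching `long` values are scattered across that slice. One common workaround (not necessarily the approach the talk recommends) is to keep only the `lat` range in CQL and apply the `long` inequality on the client. The sketch below simulates that over an in-memory list sorted in the table's clustering order; the row shape and the `bbox_query` helper are hypothetical.

```python
from bisect import bisect_left, bisect_right
from typing import List, Tuple

# Hypothetical row shape (lat, long, time), sorted like the table's
# clustering order: lat ASC, long ASC, time DESC.
Row = Tuple[float, float, int]


def bbox_query(rows: List[Row], lat_min: float, lat_max: float,
               long_min: float, long_max: float, limit: int = 50) -> List[Row]:
    # Step 1: the part Cassandra accepts, a range slice on the first
    # clustering column (lat) within one partition.
    lats = [r[0] for r in rows]
    start = bisect_left(lats, lat_min)
    end = bisect_right(lats, lat_max)
    # Step 2: the part Cassandra rejects, the second inequality (long),
    # applied here as a client-side filter over the lat slice.
    result = []
    for row in rows[start:end]:
        if long_min <= row[1] <= long_max:
            result.append(row)
            if len(result) == limit:
                break
    return result


rows = sorted(
    [(-39.0, 175.5, 1), (-39.0, 177.0, 2), (-38.0, 175.5, 3),
     (-40.0, 175.5, 4), (-38.7, 176.0, 5)],
    key=lambda r: (r[0], r[1], -r[2]),  # clustering order
)
print(bbox_query(rows, -39.58, -38.67, 175.18, 176.08))
# prints [(-39.0, 175.5, 1), (-38.7, 176.0, 5)]
```

The cost is that every row in the latitude band is read, even if most fall outside the longitude bounds. Against a real cluster the client would also need to page through the whole `lat` slice, since a CQL `limit` would be applied before the longitude filter.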

![Search Options Sort Sophisticated but complex semantics (see the docs) SELECT value FROM latlong_lucene WHERE expr(latlong_index, '{ sort: [ {field: "place", type: "geo_distance", latitude: " + <lat> + ", longitude: " + <long> + "}, {field: "time", reverse: true} ] }') and geohash1=<geohash> limit 50;](https://image.slidesharecdn.com/bigdatameetupgeotalkinstaclustraprilupdate-200430060616/75/Massively-Scalable-Real-time-Geospatial-Data-Processing-with-Apache-Kafka-and-Cassandra-Melbourne-Distributed-Meetup-30-April-2020-online-48-2048.jpg)
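The Lucene sort above orders results by distance from a query point, then by newest `time` (`reverse: true`), after `geohash1=<geohash>` has narrowed the candidates. To make those semantics concrete, here is a client-side sketch of the same ordering. It assumes great-circle distance via the haversine formula with a mean Earth radius of 6371 km; the slides do not say which distance formula the plugin uses internally, and the helper names are hypothetical.

```python
import math
from typing import List, Tuple

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius

# Hypothetical row shape: (lat, long, time, value)
Row = Tuple[float, float, int, str]


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def sort_by_distance_then_time(rows: List[Row], lat: float, lon: float,
                               limit: int = 50) -> List[Row]:
    # Primary key: nearest first. Secondary key: most recent time first,
    # mirroring {field: "time", reverse: true}.
    return sorted(rows, key=lambda r: (haversine_km(lat, lon, r[0], r[1]), -r[2]))[:limit]


rows = [(0.0, 1.0, 10, "far"), (0.0, 0.5, 5, "near-old"), (0.0, 0.5, 9, "near-new")]
print([r[3] for r in sort_by_distance_then_time(rows, 0.0, 0.0)])
# prints ['near-new', 'near-old', 'far']
```

Doing this in the client means fetching every candidate in the geohash cell before sorting; the plugin's advantage is that the ordering and the `limit 50` are applied server-side.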

![Search Options – Prefix filter prefix search is useful for searching larger areas over a single geohash column as you can search for a substring SELECT value FROM latlong_lucene WHERE expr(latlong_index, '{ filter: [ {type: "prefix", field: "geohash1", value: <geohash>} ] }') limit 50 Similar to inequality over clustering column](https://image.slidesharecdn.com/bigdatameetupgeotalkinstaclustraprilupdate-200430060616/75/Massively-Scalable-Real-time-Geospatial-Data-Processing-with-Apache-Kafka-and-Cassandra-Melbourne-Distributed-Meetup-30-April-2020-online-51-2048.jpg)
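The slide notes that a prefix filter is similar to an inequality over a clustering column. The reason is that in sorted order, all geohashes sharing a prefix form one contiguous run, so a prefix match is a range scan from `prefix` up to the first string past it. The sketch below shows this on a sorted Python list; `prefix_range` is a hypothetical helper and is not how the Lucene plugin implements the filter.

```python
from bisect import bisect_left
from typing import List


def prefix_range(sorted_hashes: List[str], prefix: str) -> List[str]:
    """Return every geohash starting with `prefix` as one contiguous slice."""
    # Any string starting with `prefix` sorts at or after `prefix` itself...
    start = bisect_left(sorted_hashes, prefix)
    # ...and before prefix + "{", because "{" (0x7B) sorts after "z",
    # the largest character in the geohash alphabet.
    end = bisect_left(sorted_hashes, prefix + "{")
    return sorted_hashes[start:end]


hashes = sorted(["ezs42", "u4pru", "ezs4b", "ezt00", "ezs40", "ezs5"])
print(prefix_range(hashes, "ezs4"))  # prints ['ezs40', 'ezs42', 'ezs4b']
```

A shorter prefix selects a larger area, which is why this lets a single geohash column serve searches at several resolutions.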