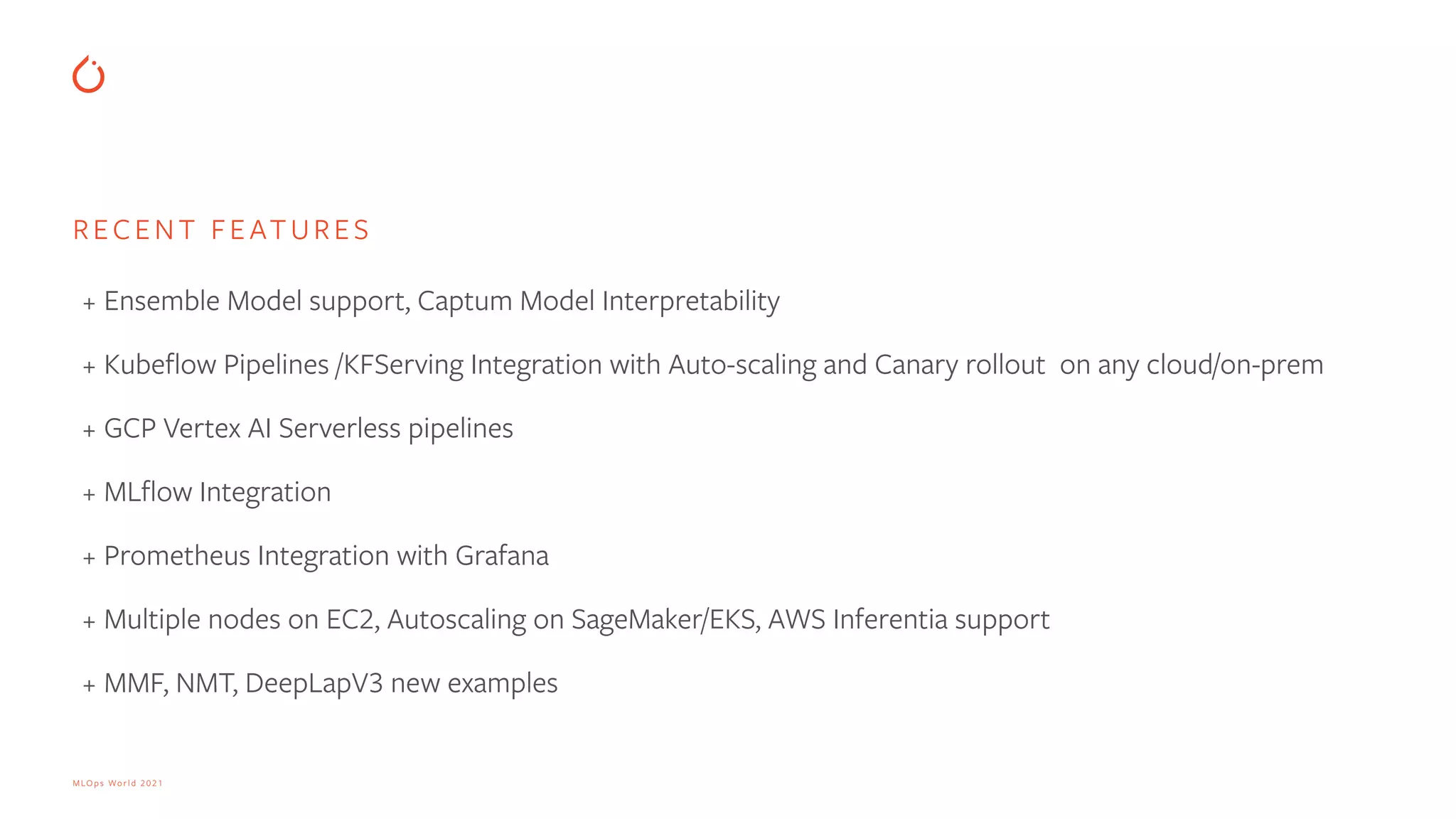

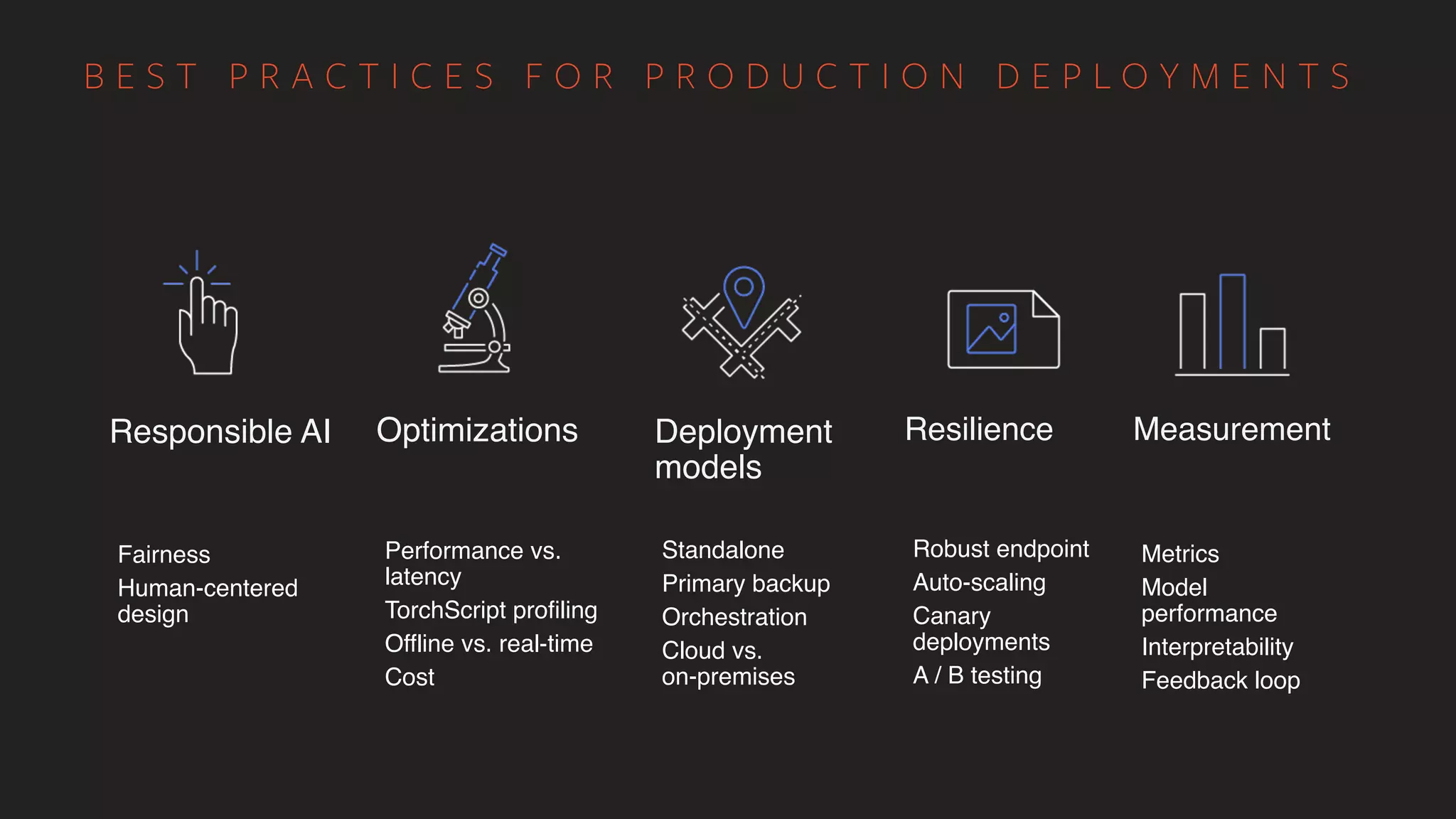

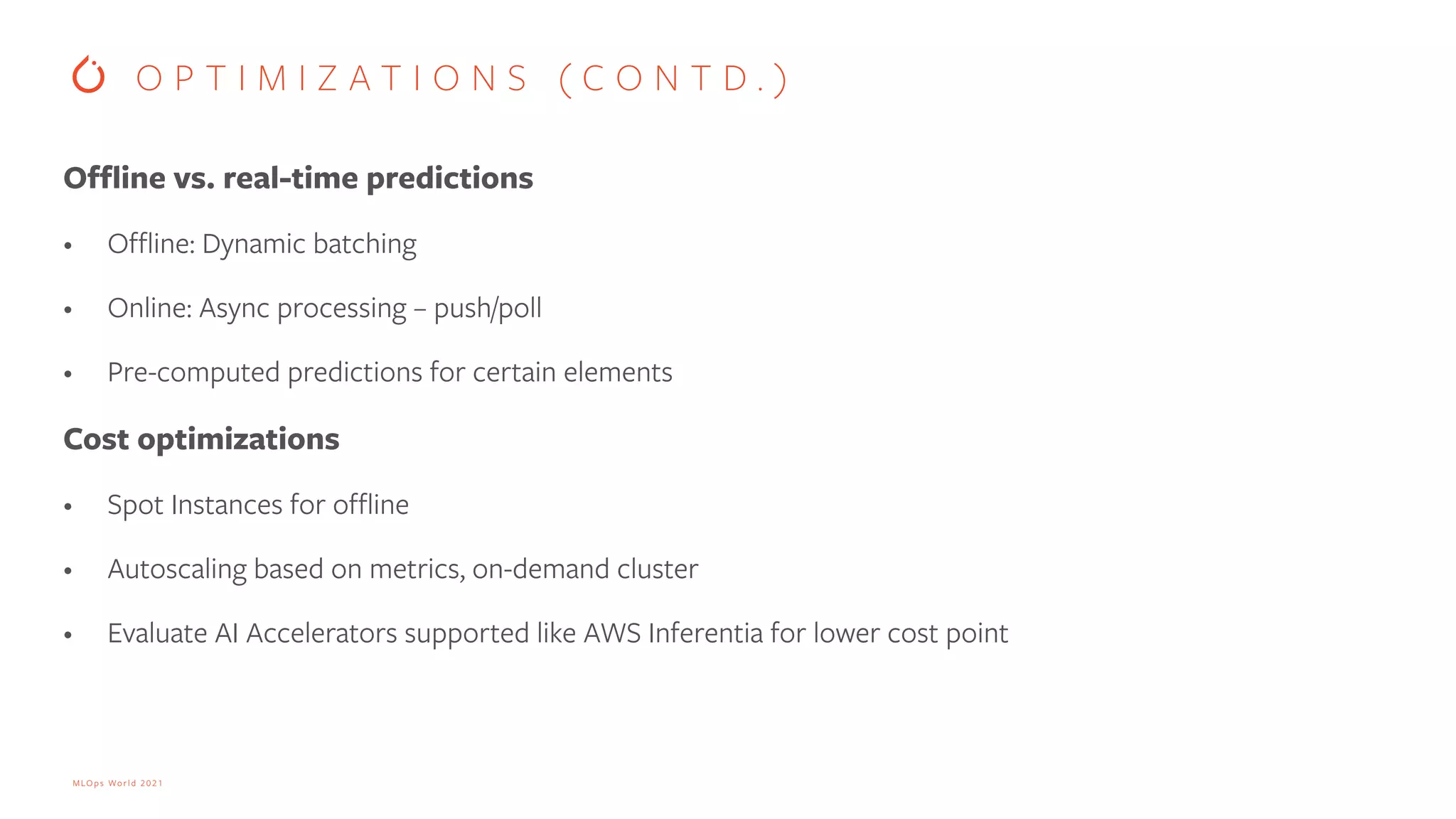

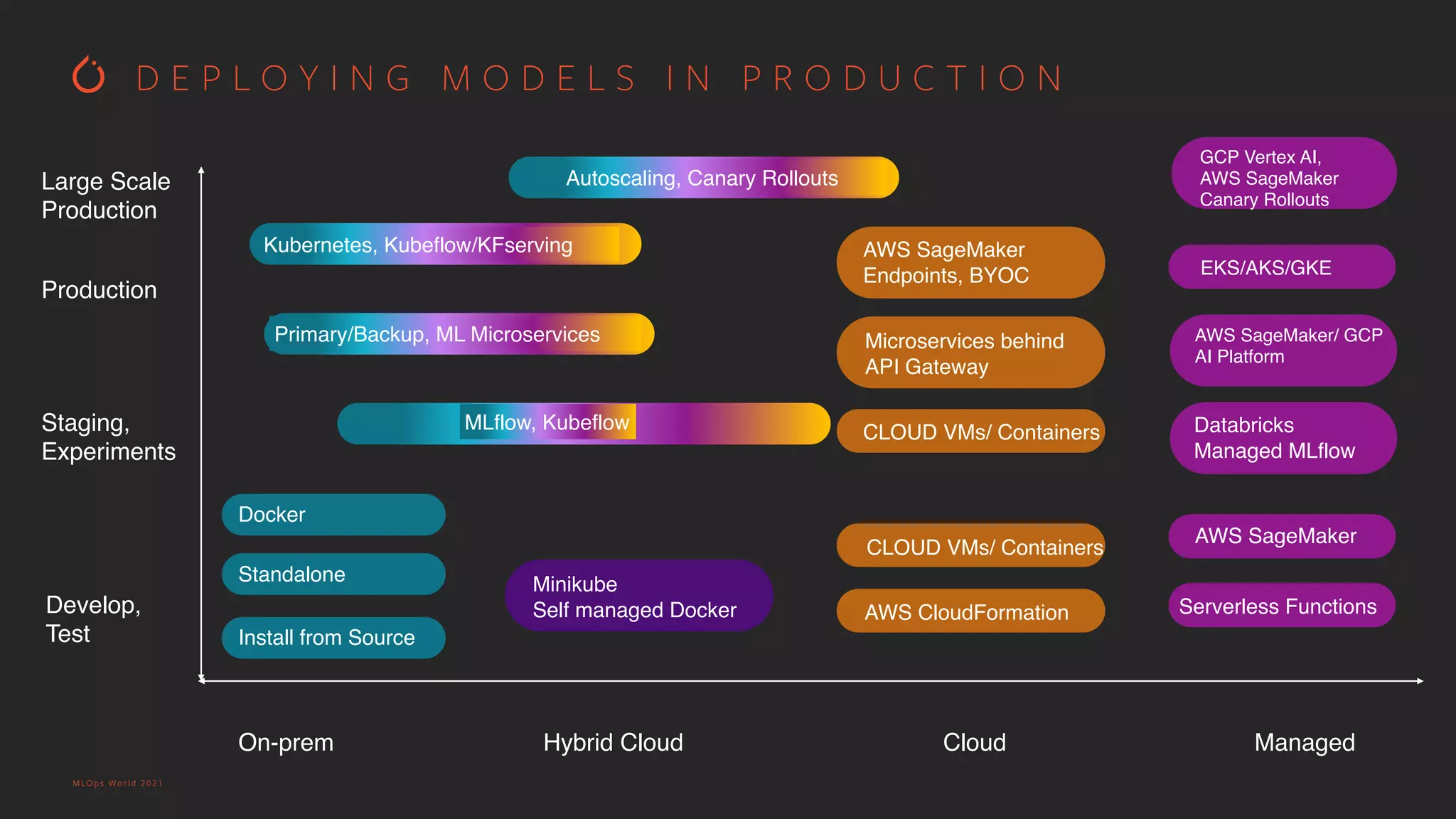

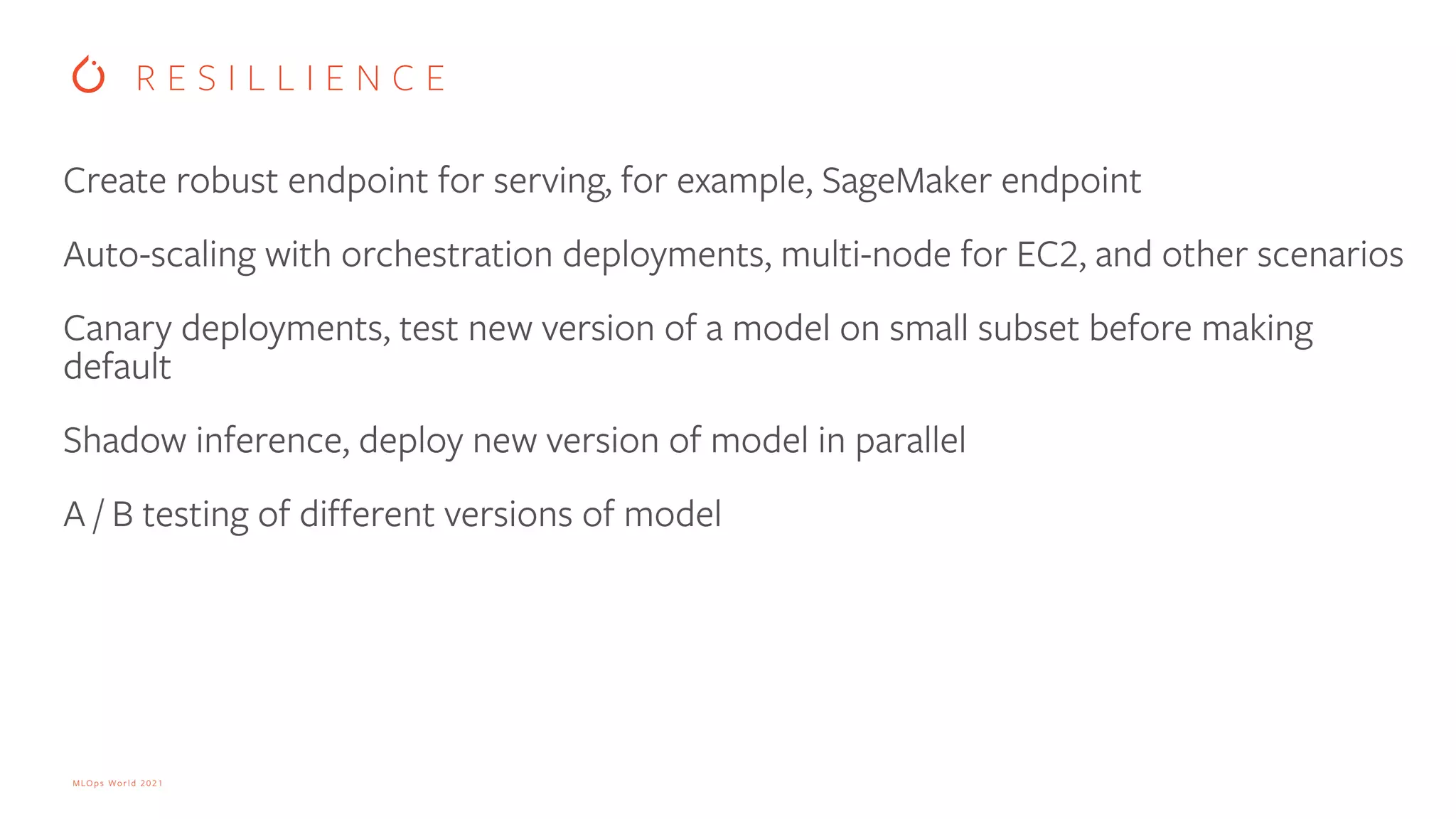

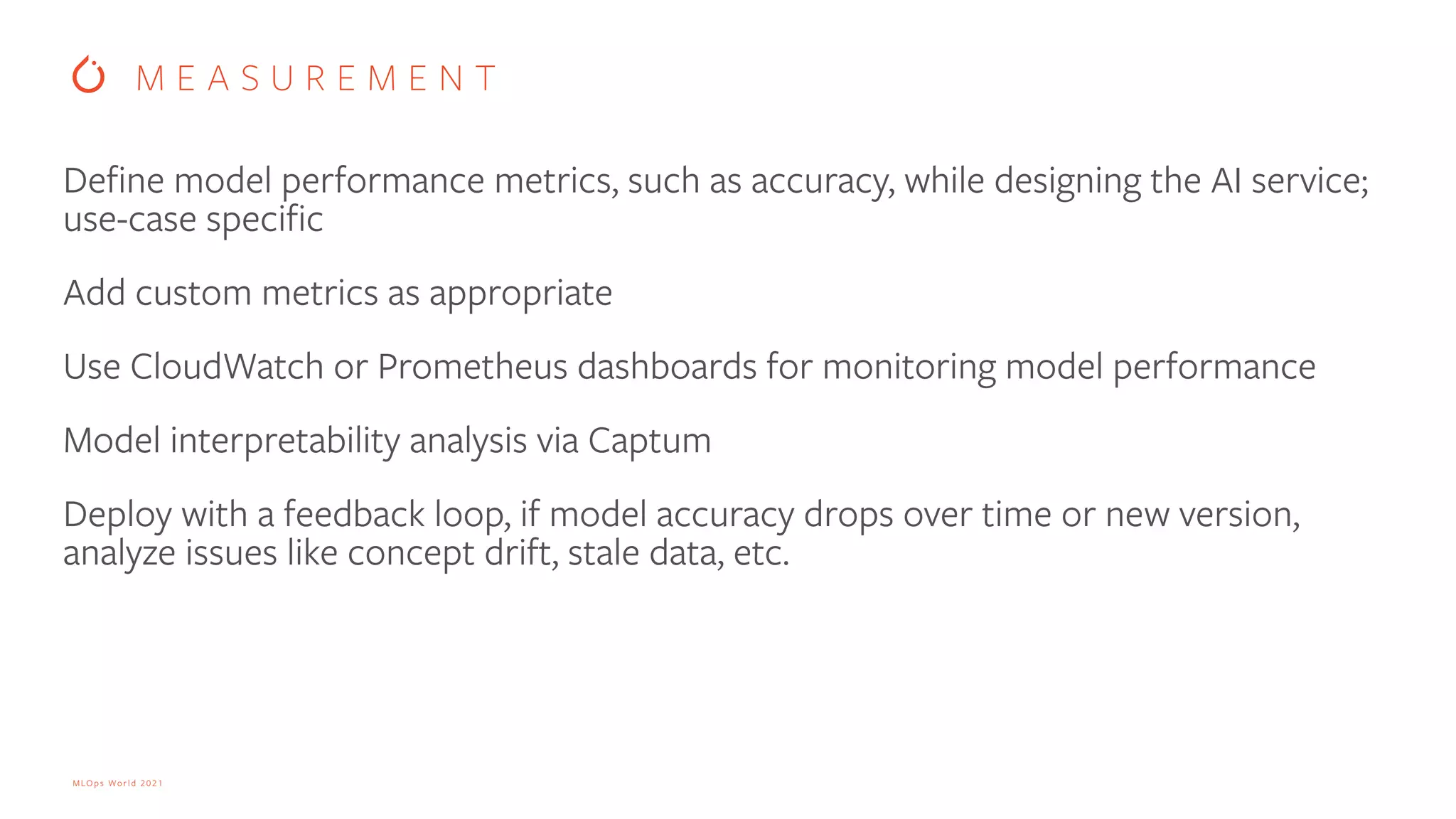

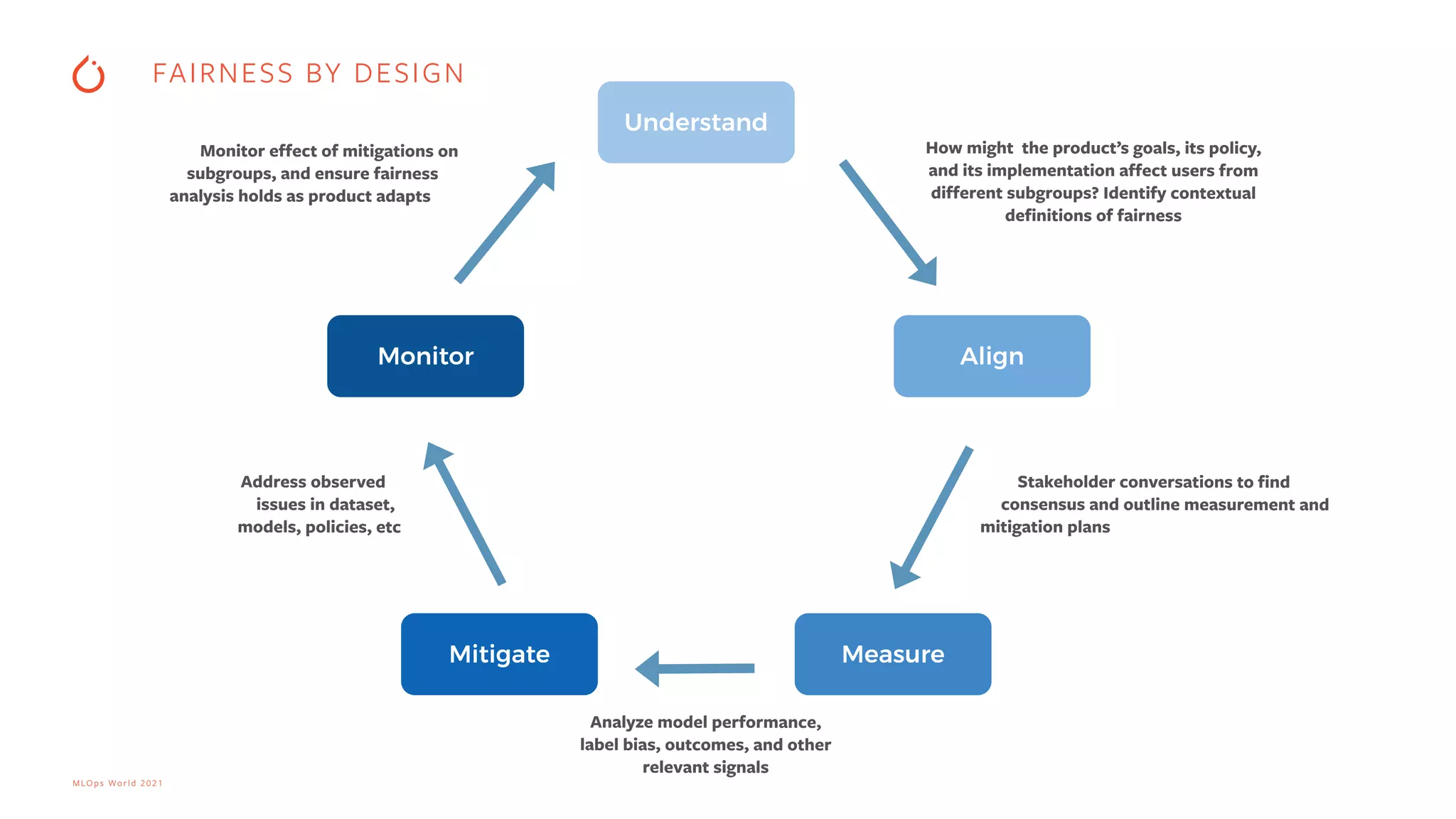

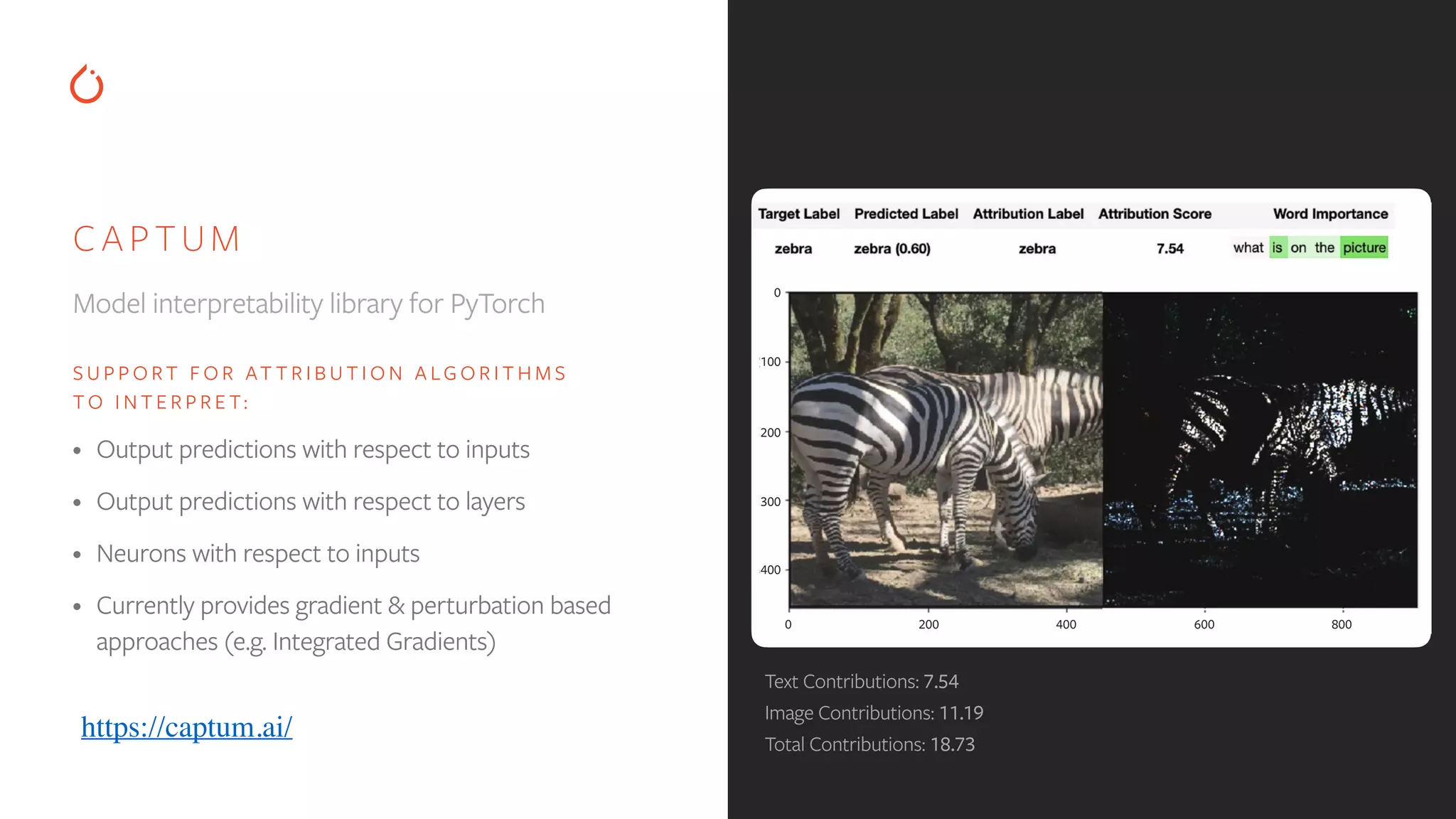

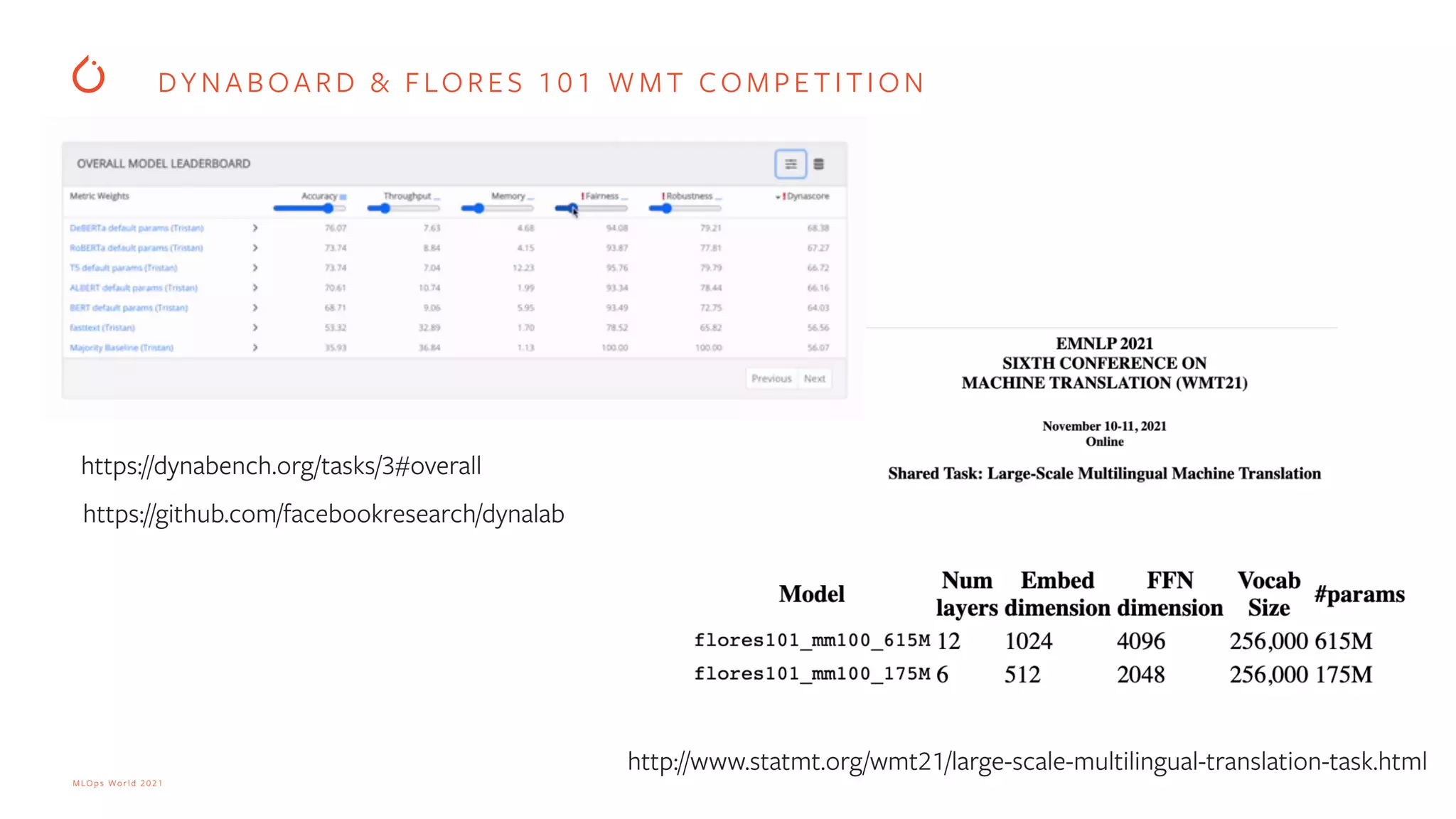

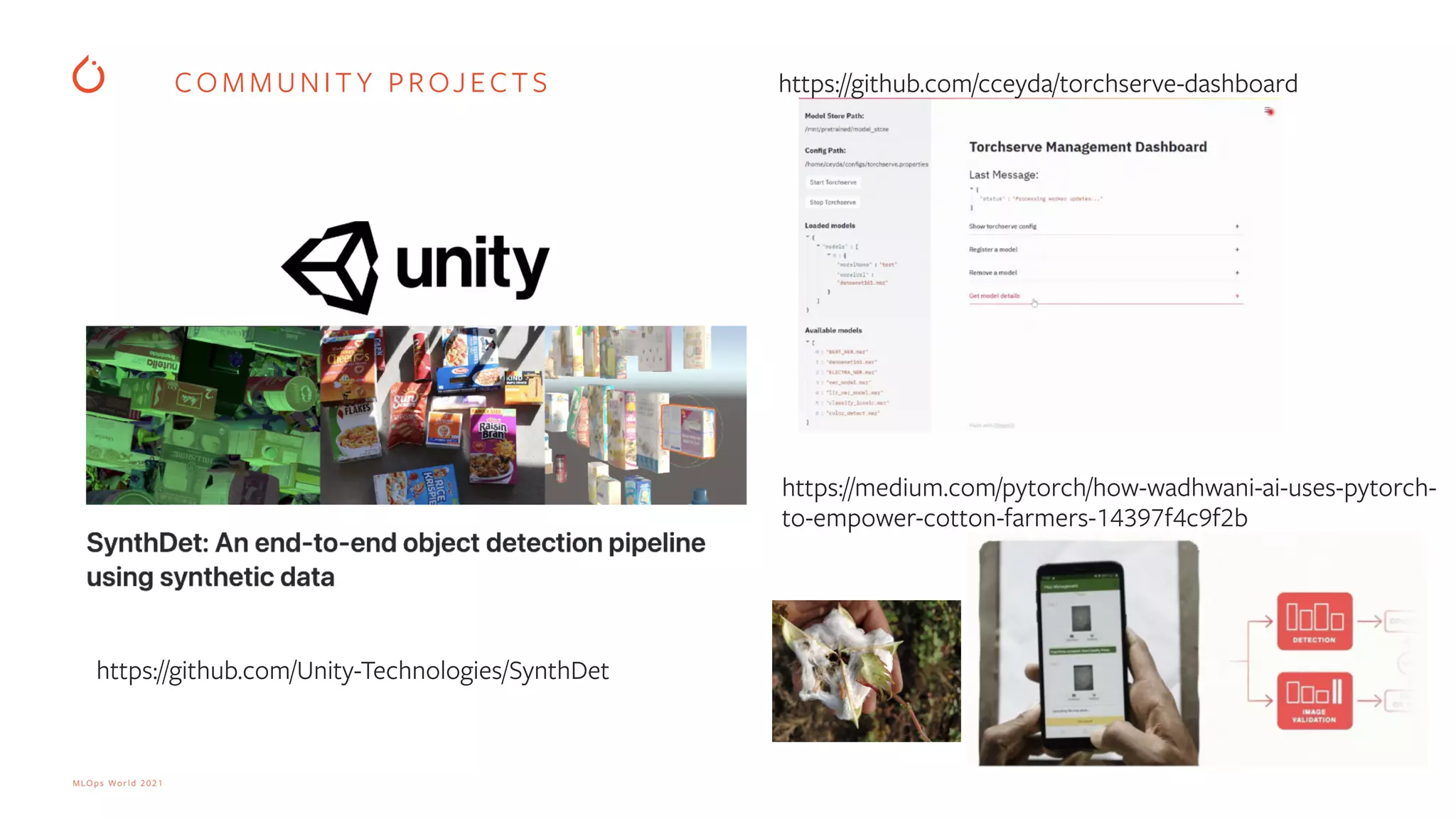

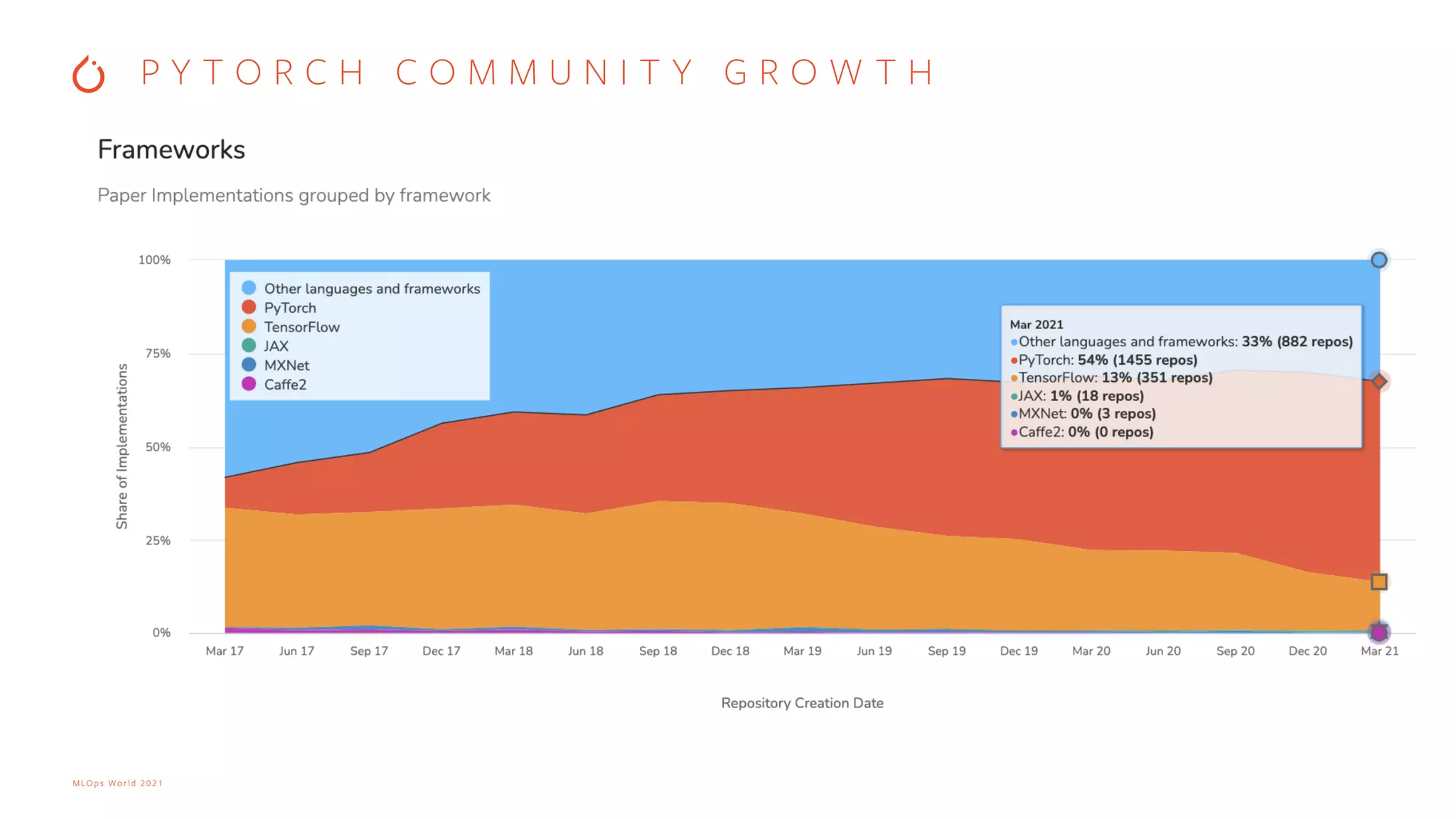

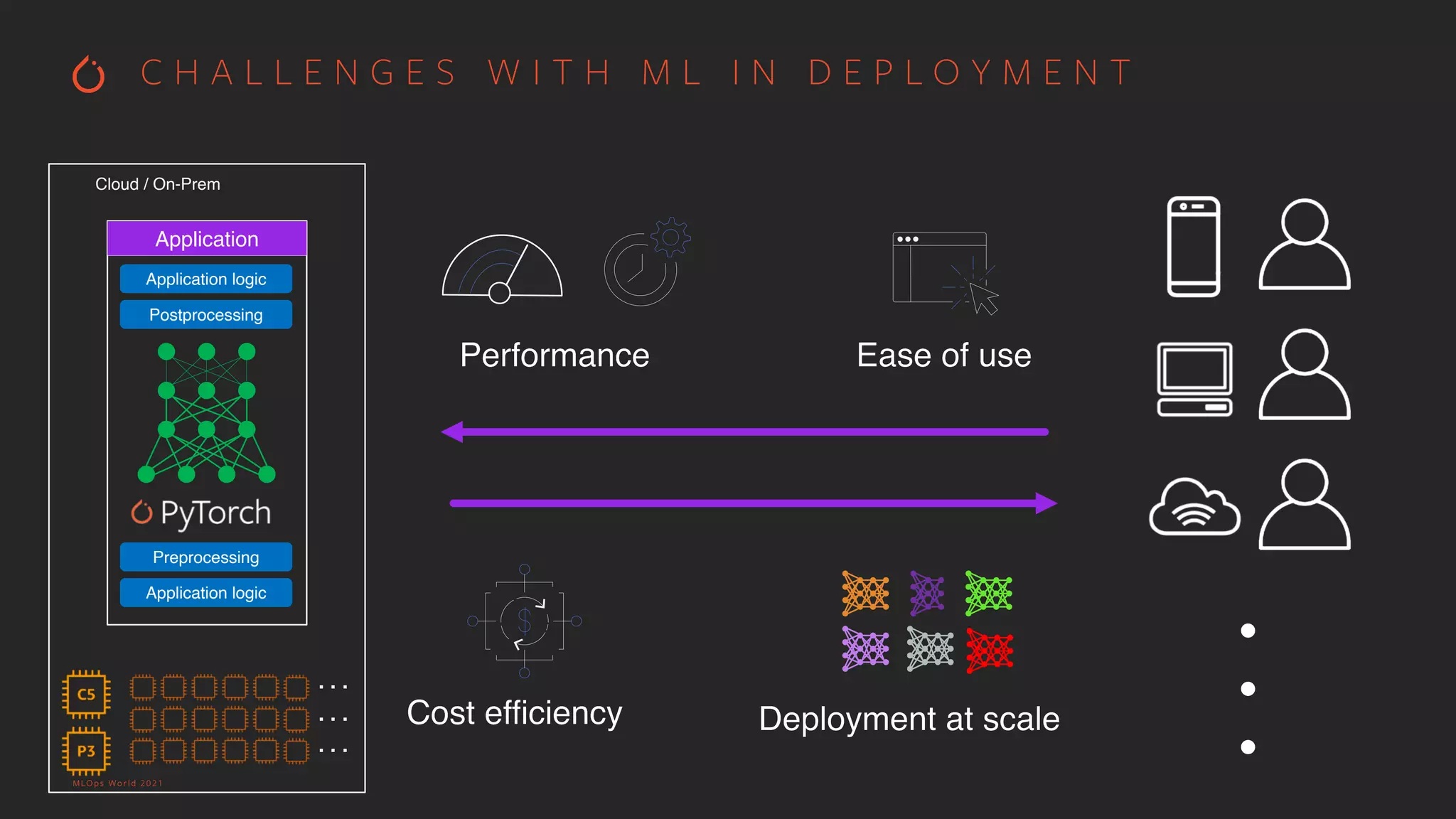

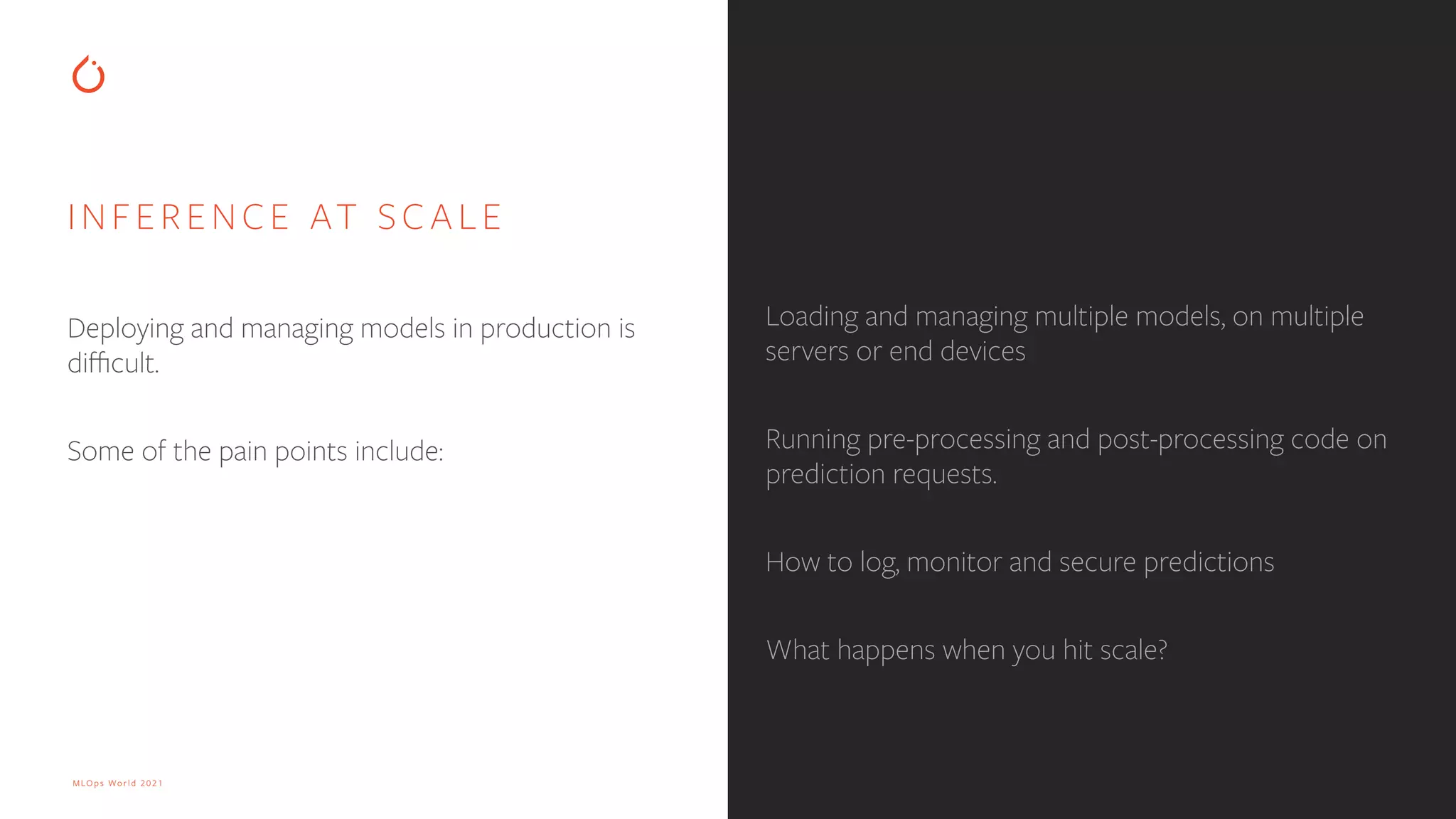

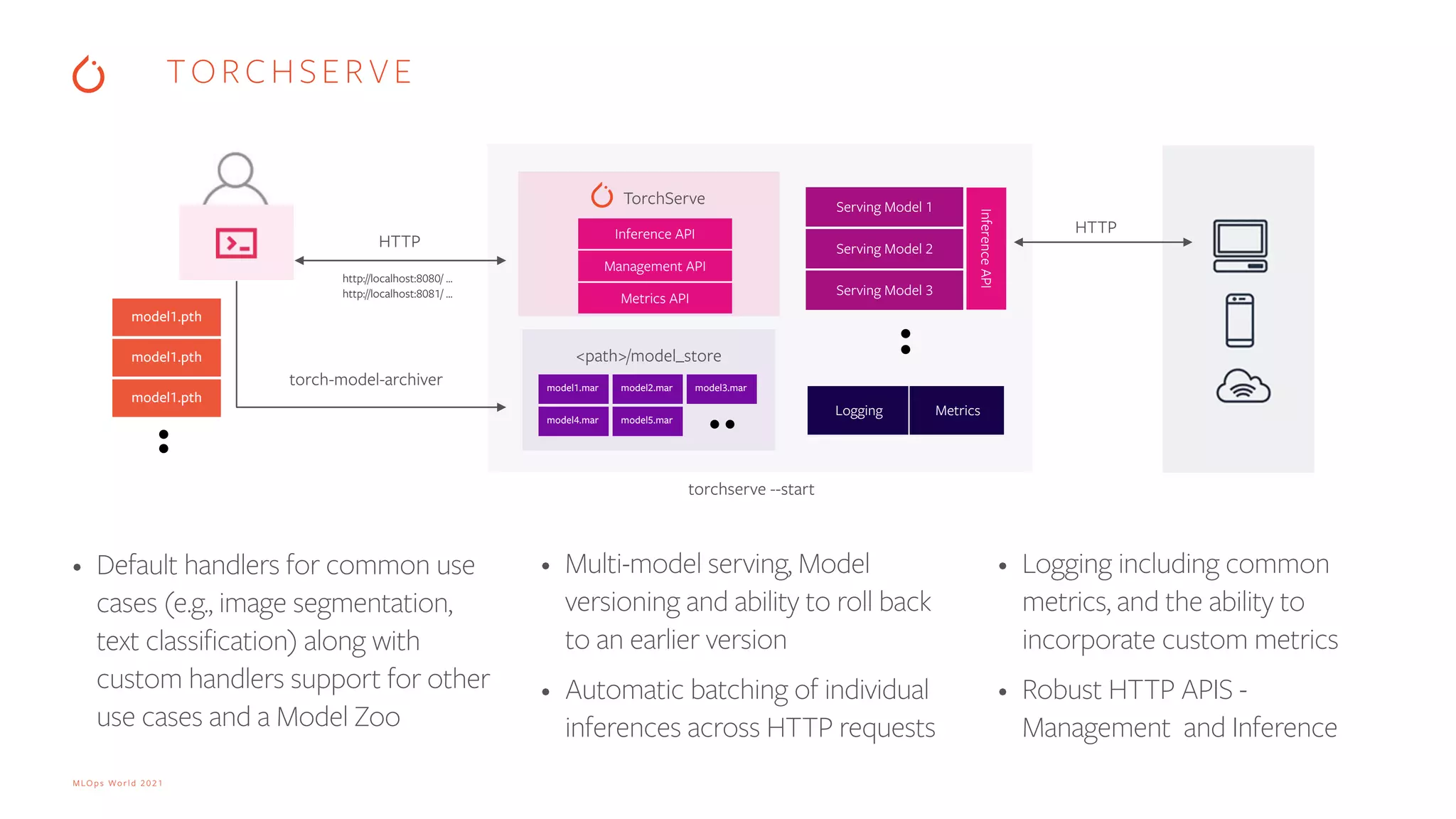

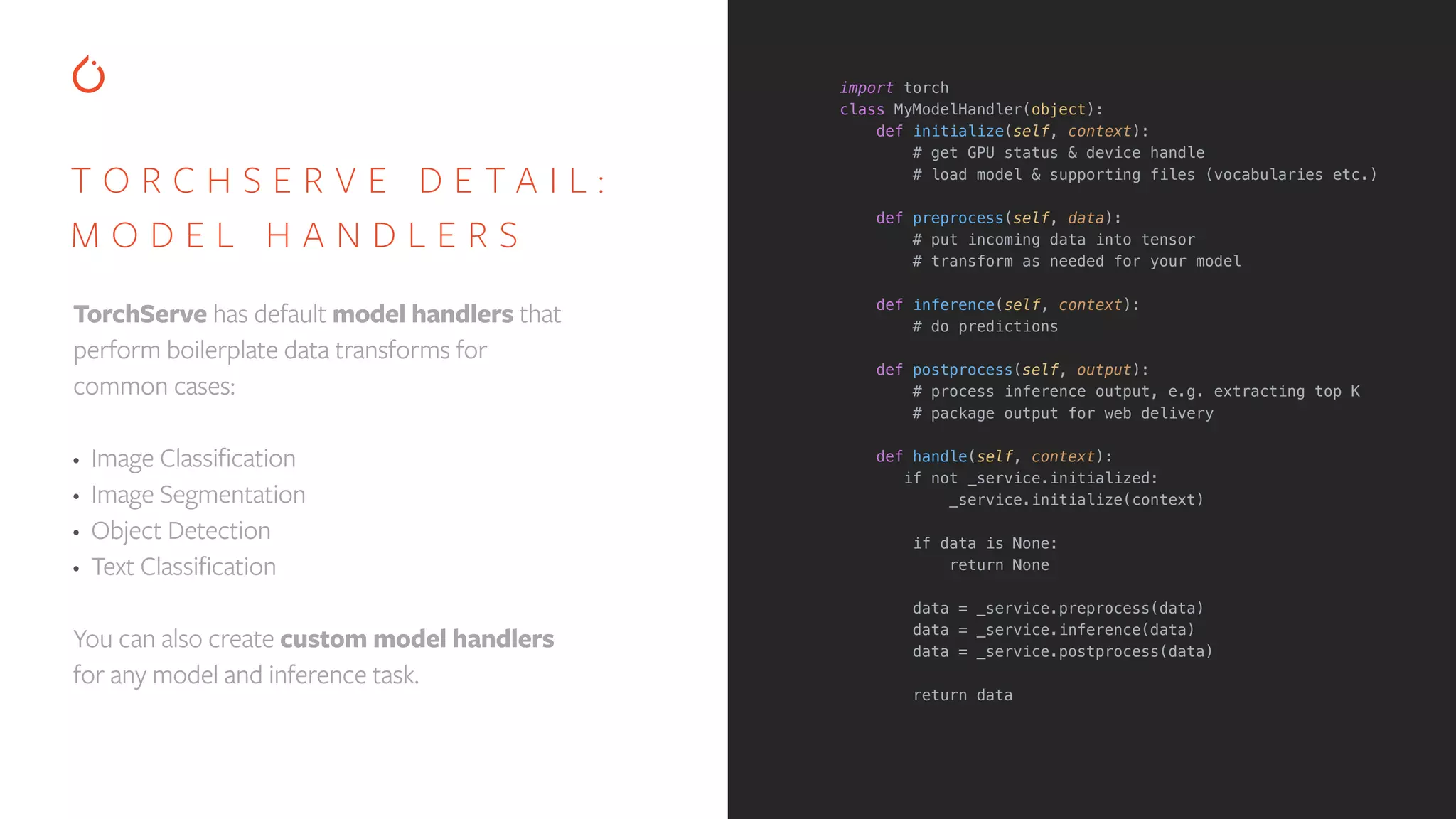

The document discusses challenges and best practices for deploying machine learning models in production, particularly using TorchServe, a tool for serving PyTorch models at scale. It details capabilities such as default model handlers, dynamic batching, performance monitoring, and the significance of implementing fairness and explainability in AI systems. Key features include integration with cloud services, handling of model versioning, and custom metrics for effective MLOps management.

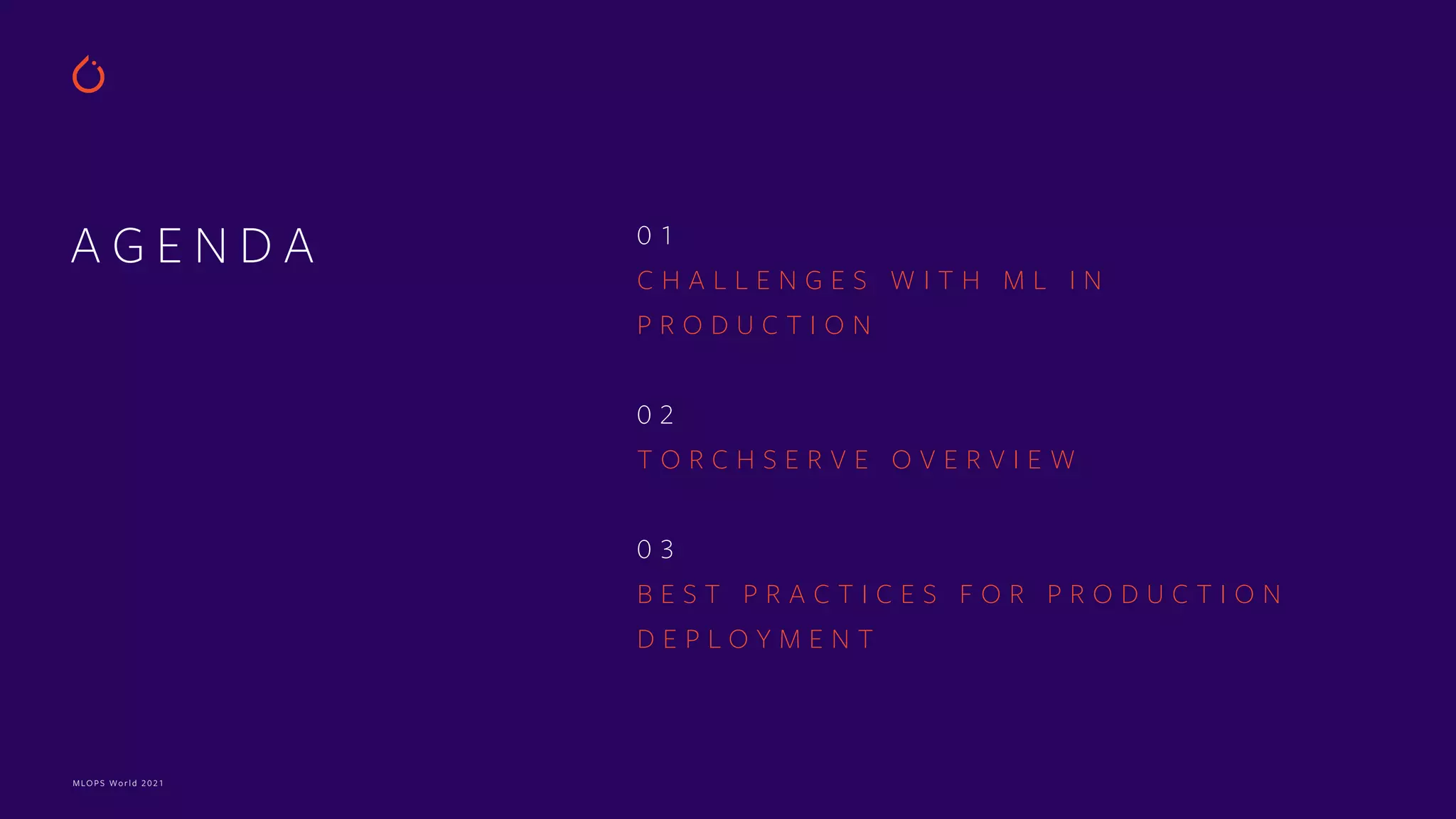

![D Y N A M I C B A T C H I N G Via Custom Handlers • Model Configuration based • batch_size Max batch size • max_batch_delay The max batch delay time TorchServe waits to receive batch_size number of requests

• (Coming soon) Batching support in default handlers curl localhost:8081/models/resnet-152 { "modelName": "resnet-152", "modelUrl": "https://s3.amazonaws.com/model-server/ model_archive_1.0/examples/resnet-152-batching/resnet-152.ma "runtime": "python", "minWorkers": 1, "maxWorkers": 1, "batchSize": 8, "maxBatchDelay": 10, "workers": [ { "id": "9008", "startTime": "2019-02-19T23:56:33.907Z", "status": "READY", "gpu": false, "memoryUsage": 607715328 } ] } https://github.com/pytorch/serve/blob/master/docs/batch_inference_with_ts.md](https://image.slidesharecdn.com/scalingaiinproductionusingpytorch-mlops-210624071408/75/Scaling-AI-in-production-using-PyTorch-10-2048.jpg)

![M E T R I C S Out of box metrics with ability to extend • CPU, Disk, Memory utilization • Requests type count • ts.metrics class for extension • Types supported - Size, percentage, counter, general metric • Prometheus metrics support available # Access context metrics as follows metrics = context.metrics # Create Dimension Object from ts.metrics.dimension import Dimension # Dimensions are name value pairs dim1 = Dimension(name, value) . dimN= Dimension(name_n, value_n) # Add Distance as a metric # dimensions = [dim1, dim2, dim3, ..., dimN] metrics.add_metric('DistanceInKM', distance, 'km', dimensions=dimensions) # Add Image size as a size metric metrics.add_size('SizeOfImage', img_size, None, 'MB', dimensions) # Add MemoryUtilization as a percentage metric metrics.add_percent('MemoryUtilization', utilization_percent, None, dimensions) # Create a counter with name 'LoopCount' and dimensions metrics.add_counter('LoopCount', 1, None, dimensions) # Log custom metrics for metric in metrics.store: logger.info("[METRICS]%s", str(metric)) https://github.com/pytorch/serve/blob/master/docs/metrics.md](https://image.slidesharecdn.com/scalingaiinproductionusingpytorch-mlops-210624071408/75/Scaling-AI-in-production-using-PyTorch-11-2048.jpg)