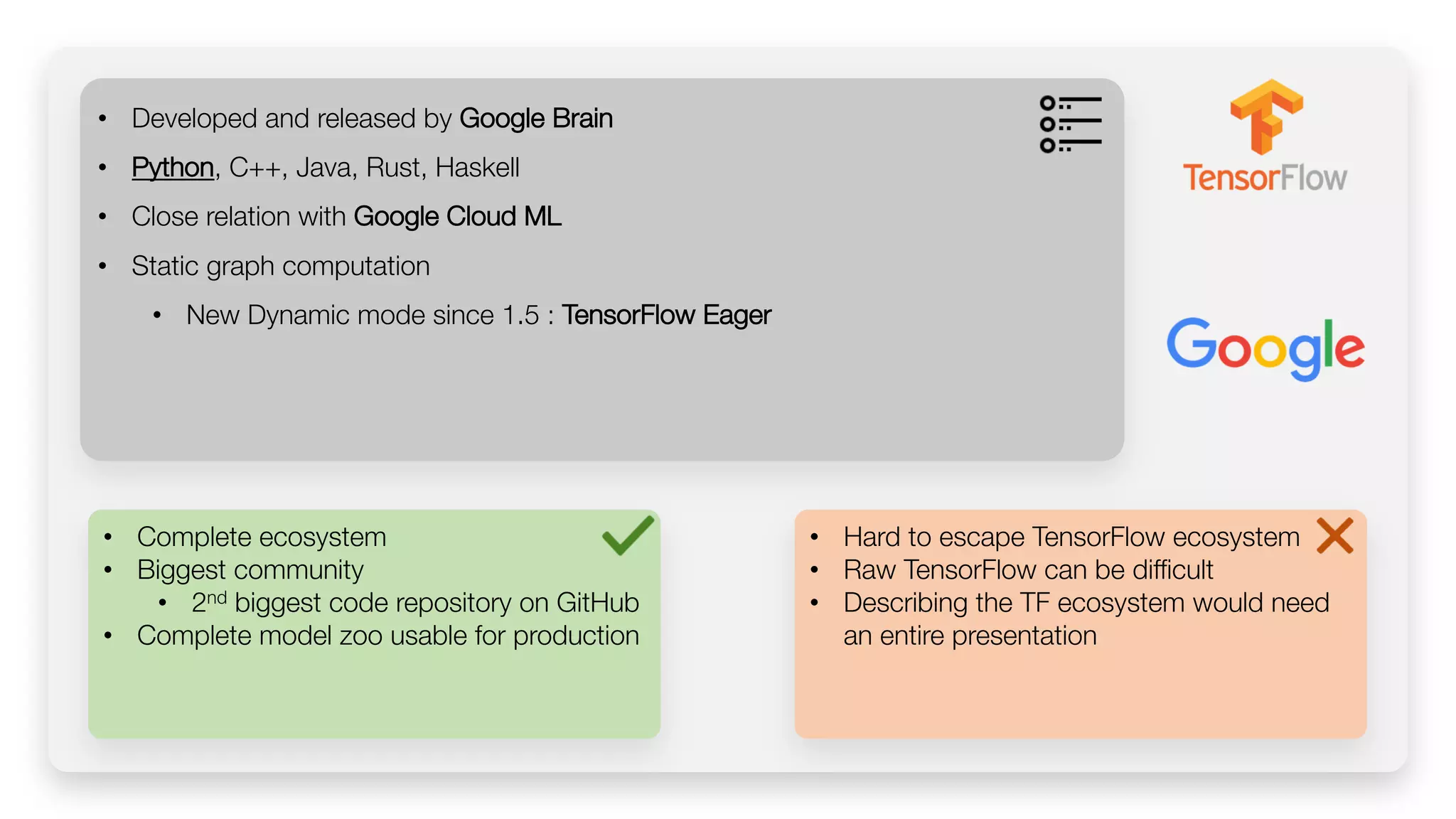

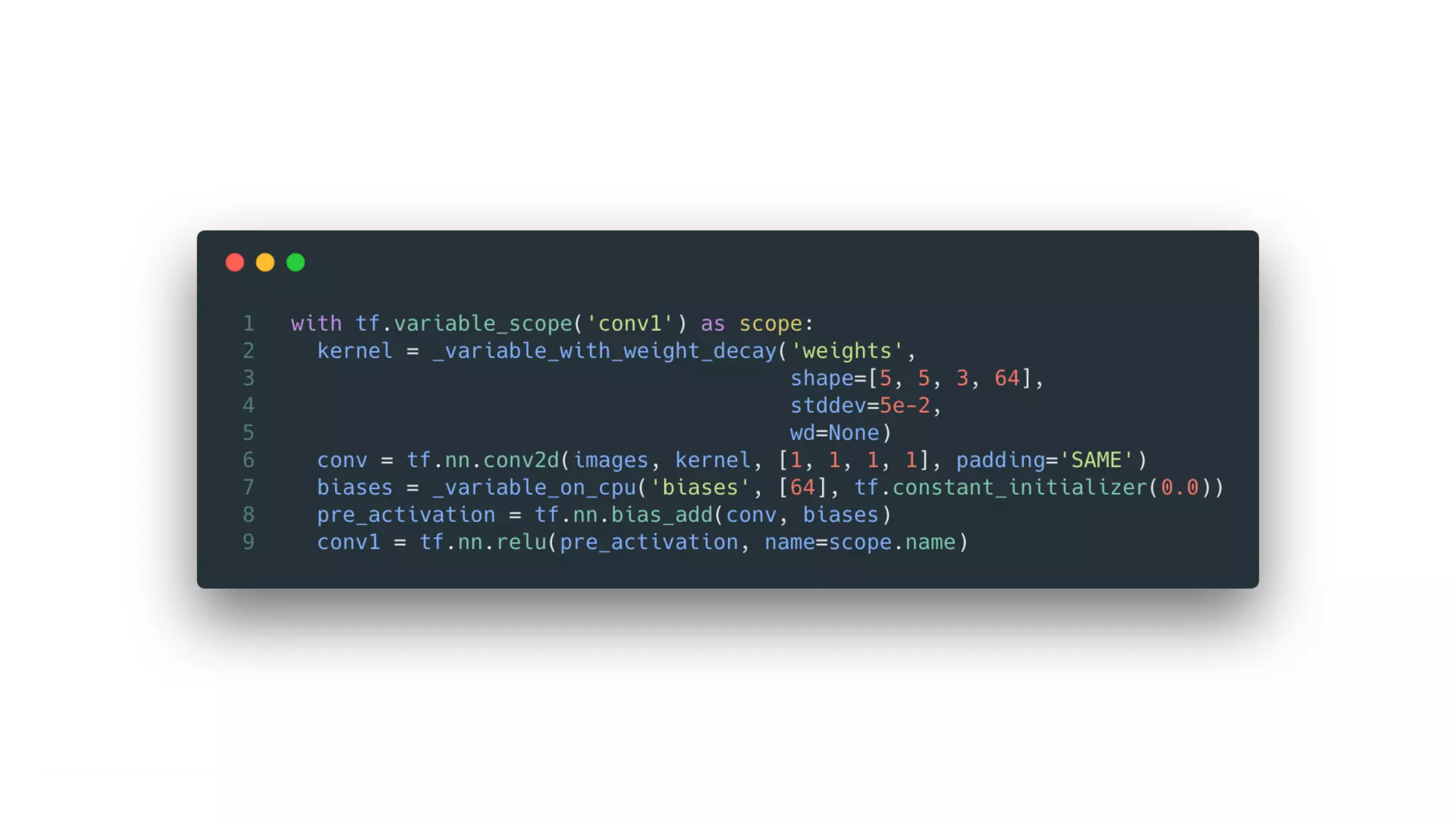

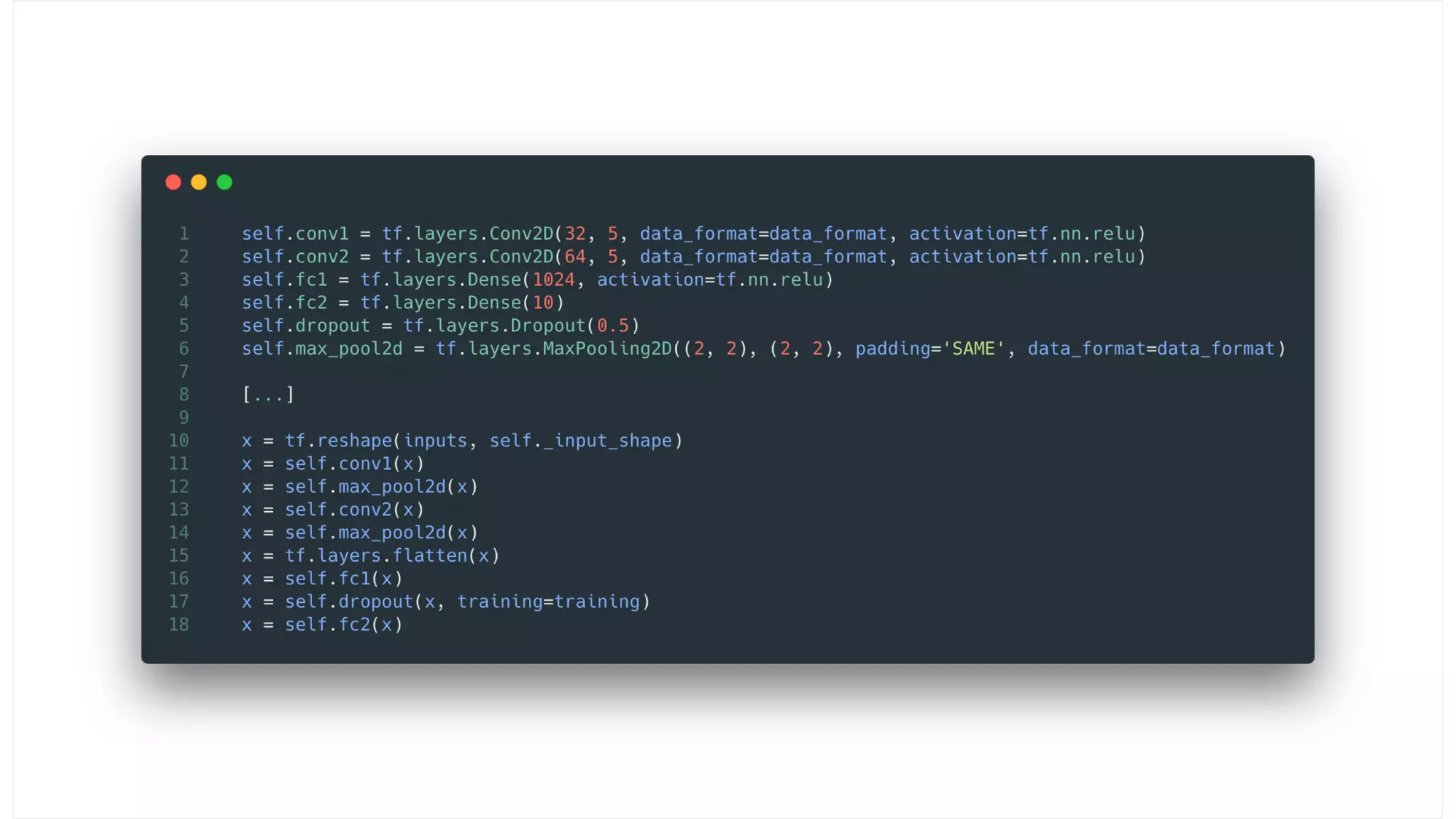

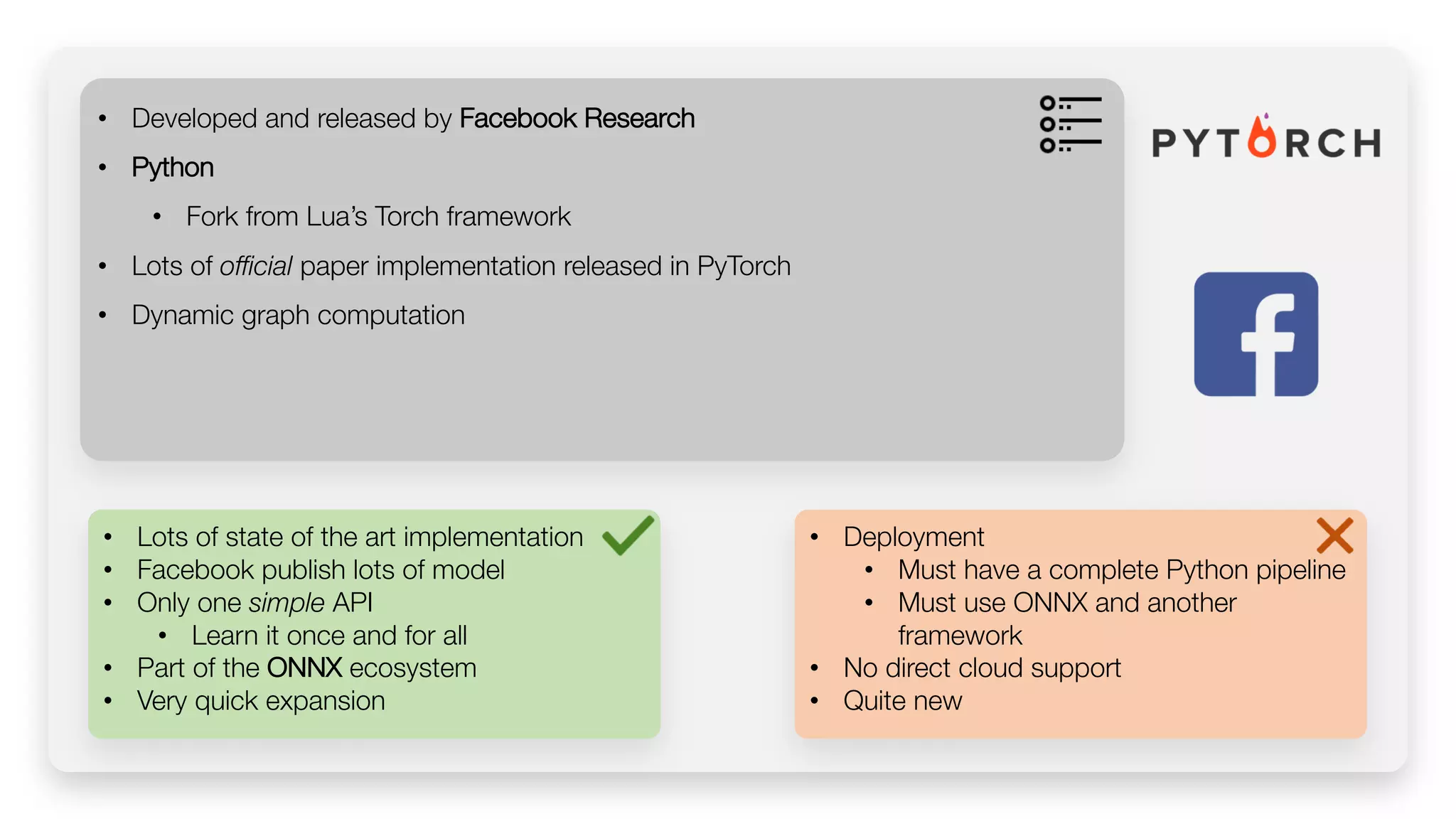

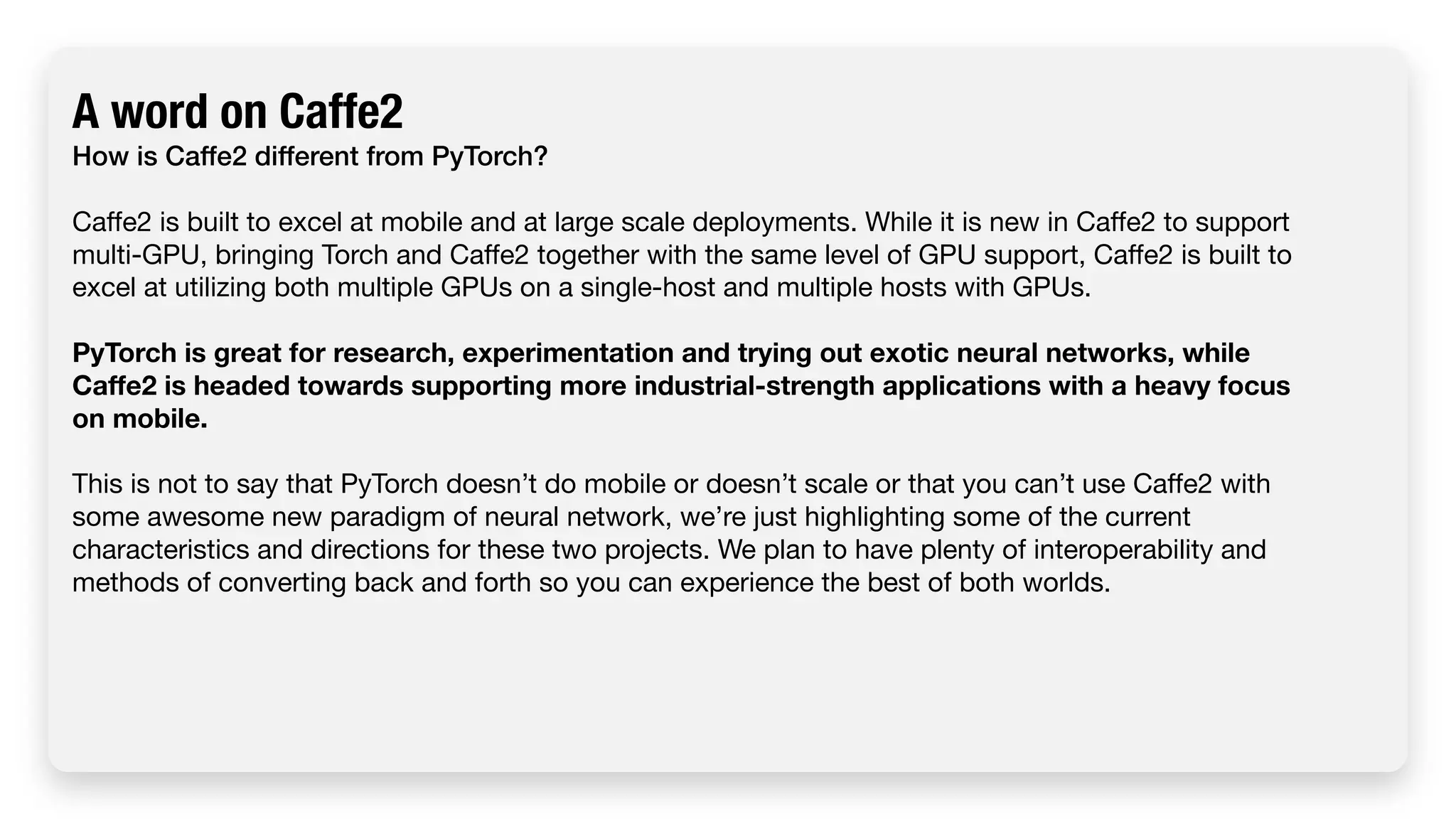

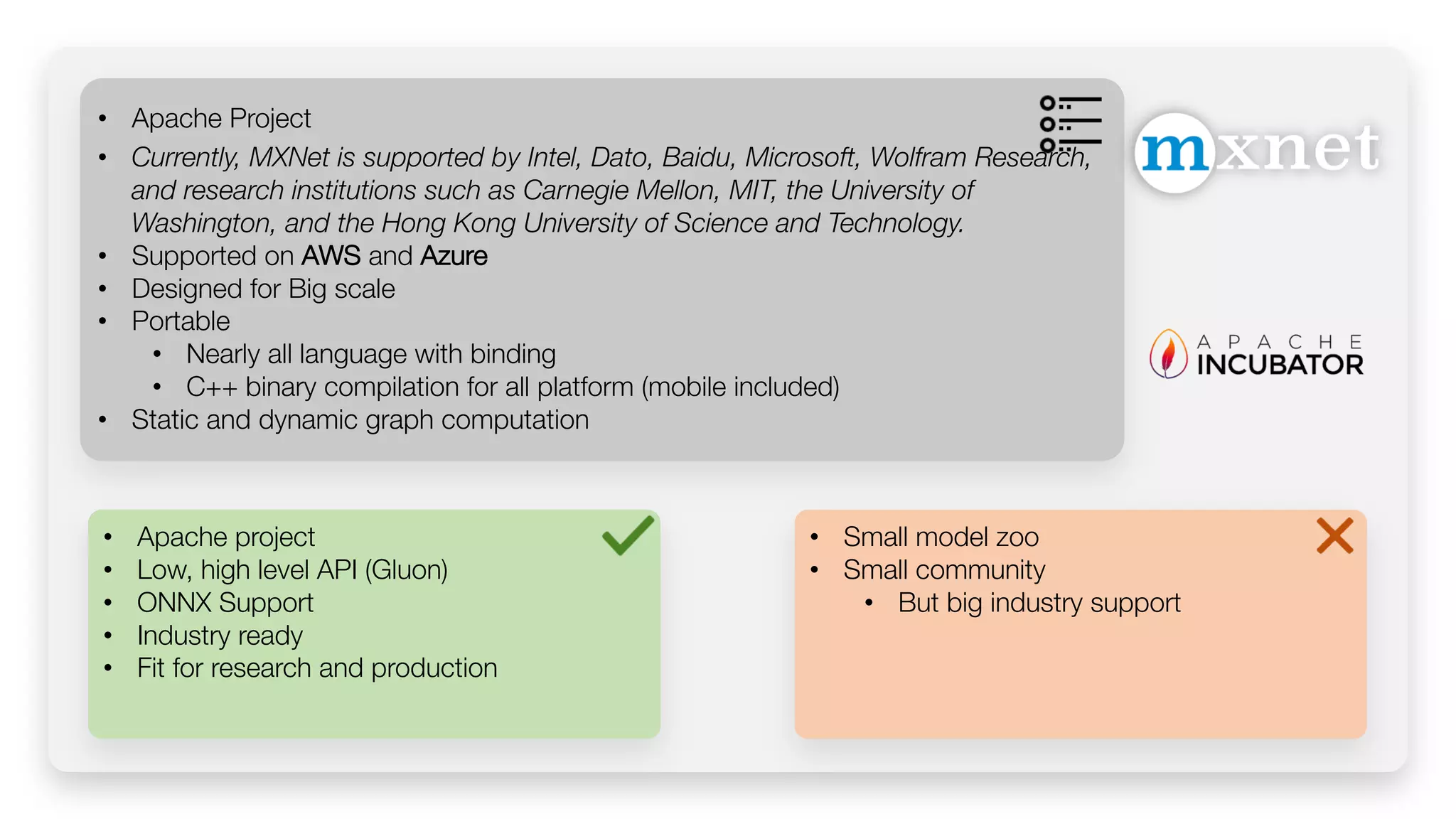

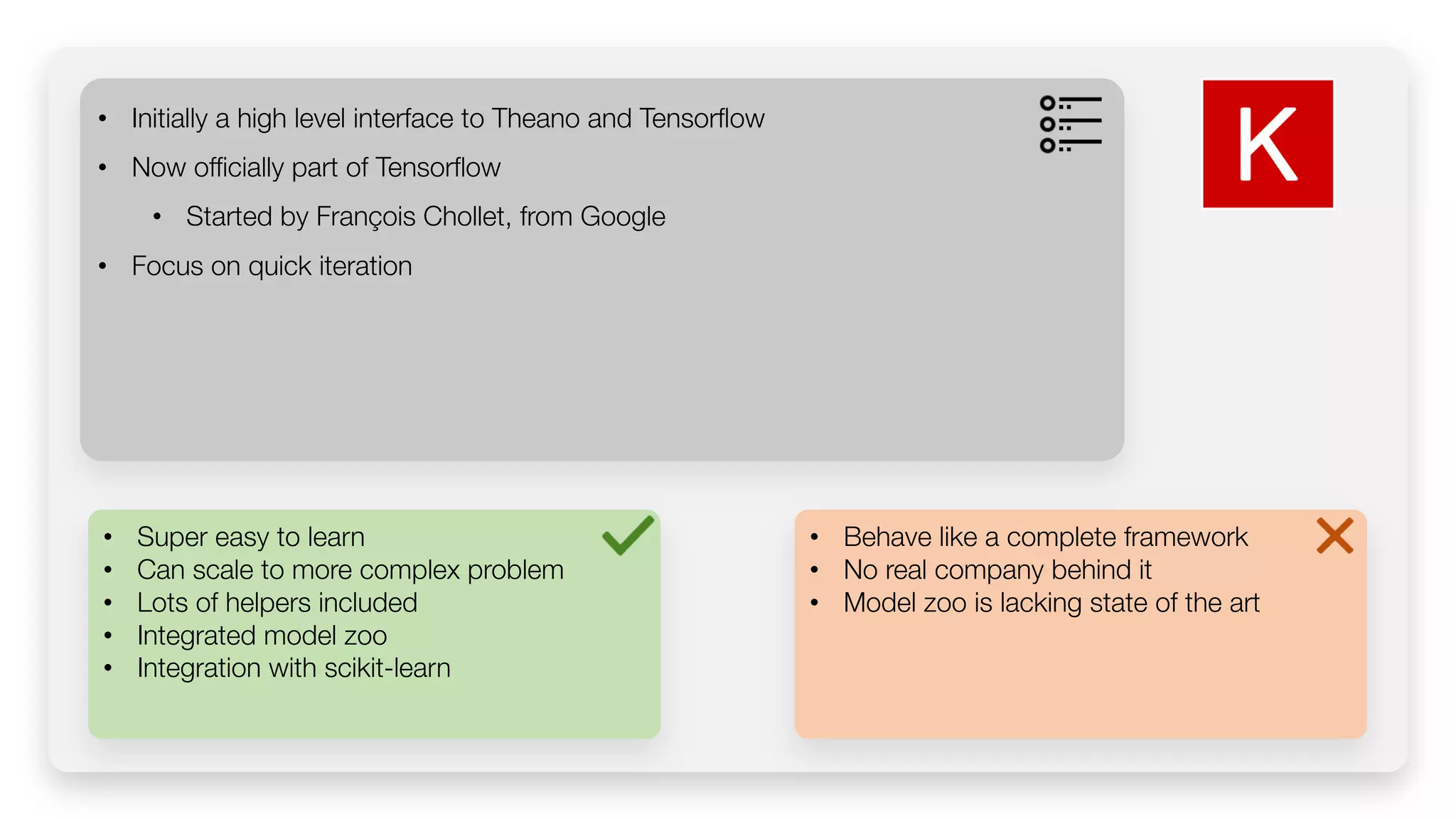

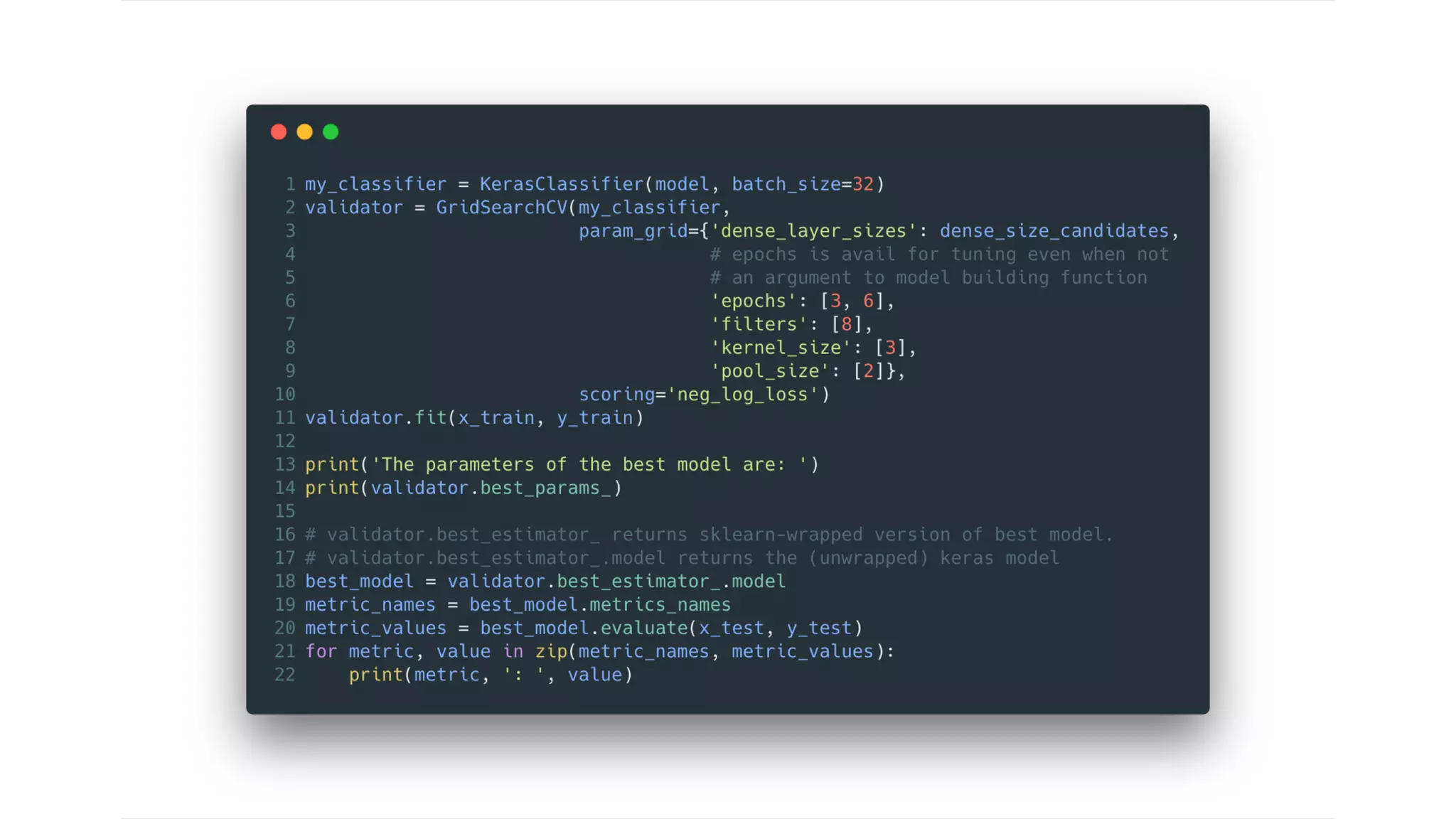

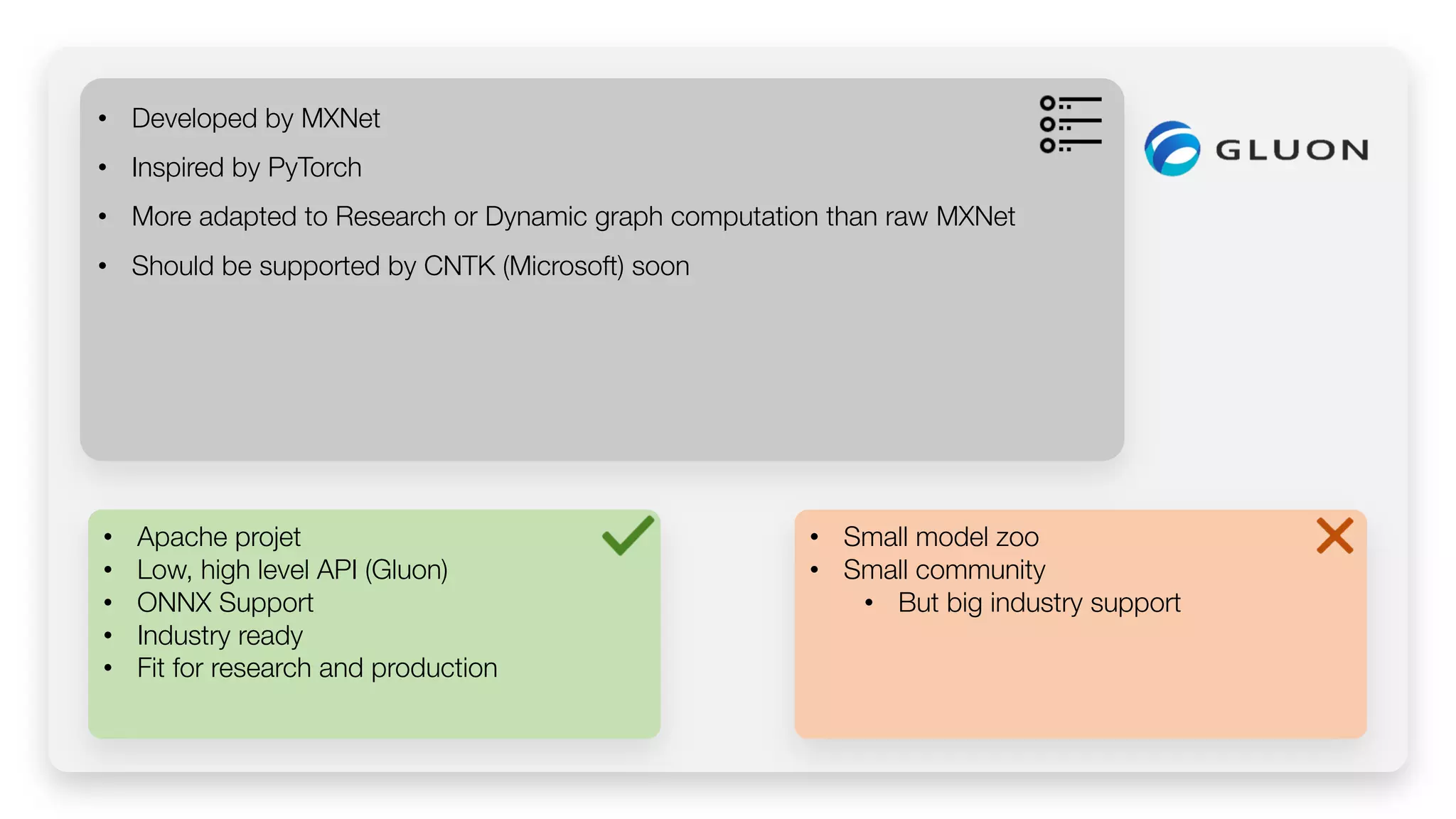

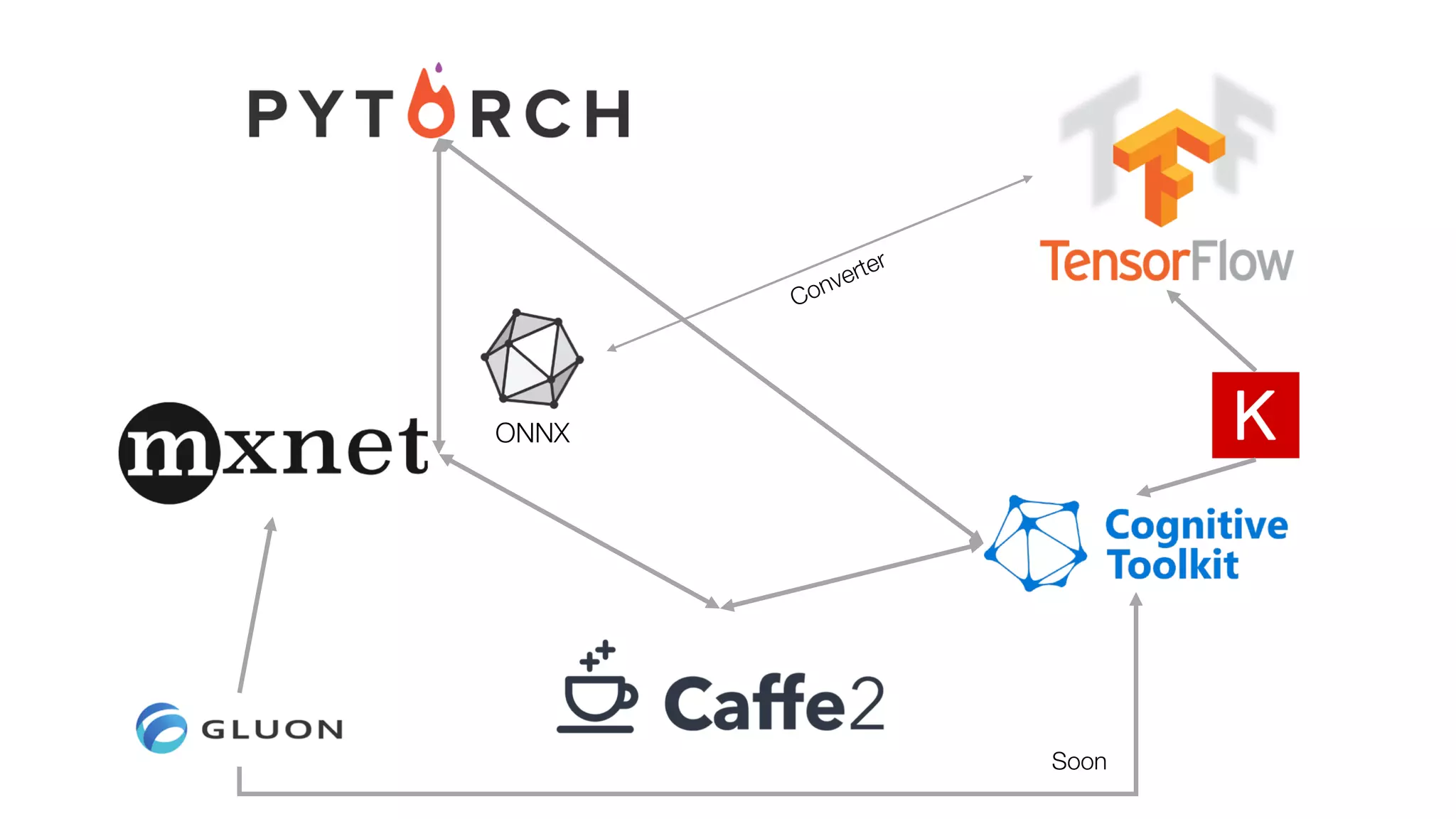

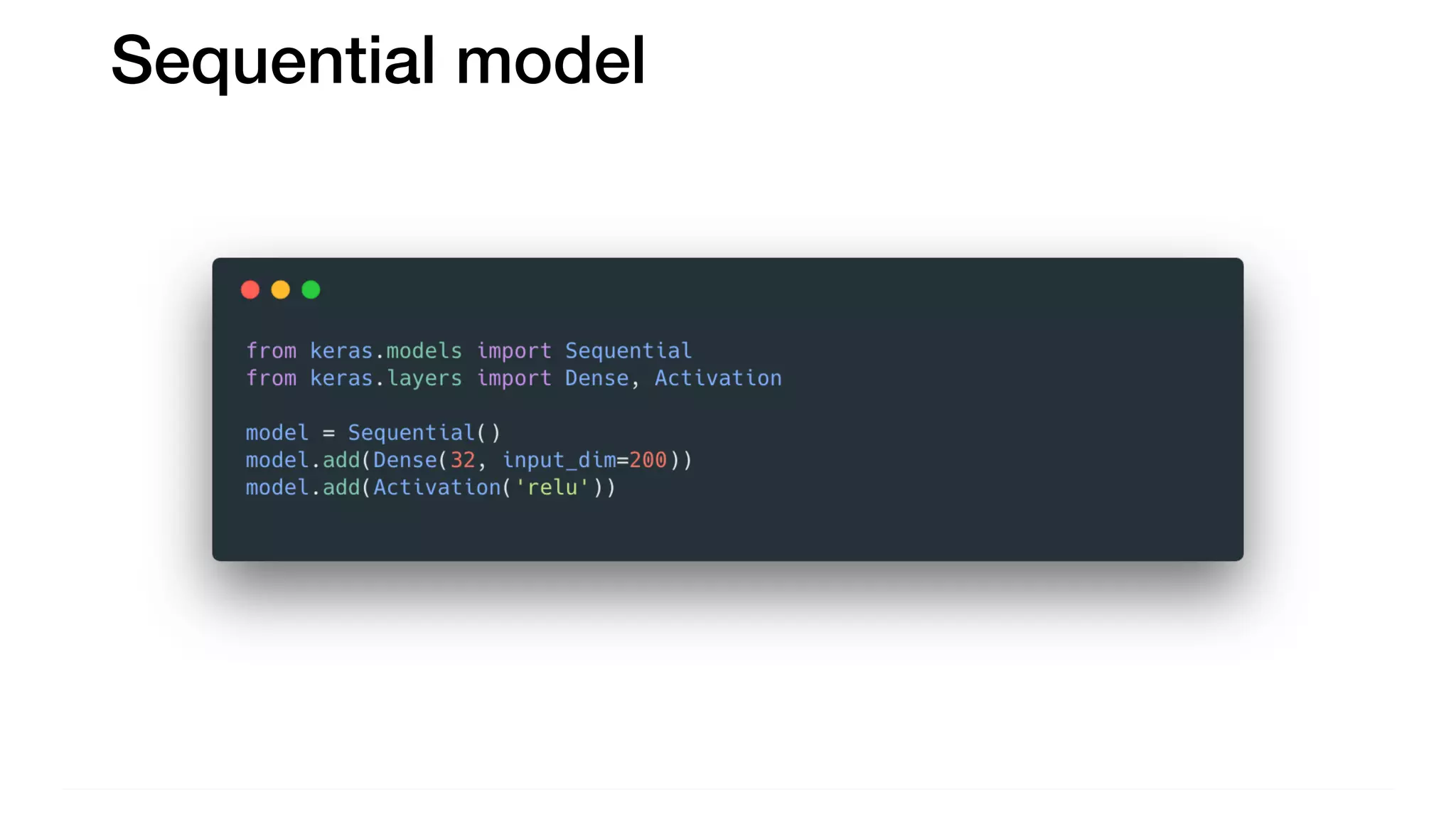

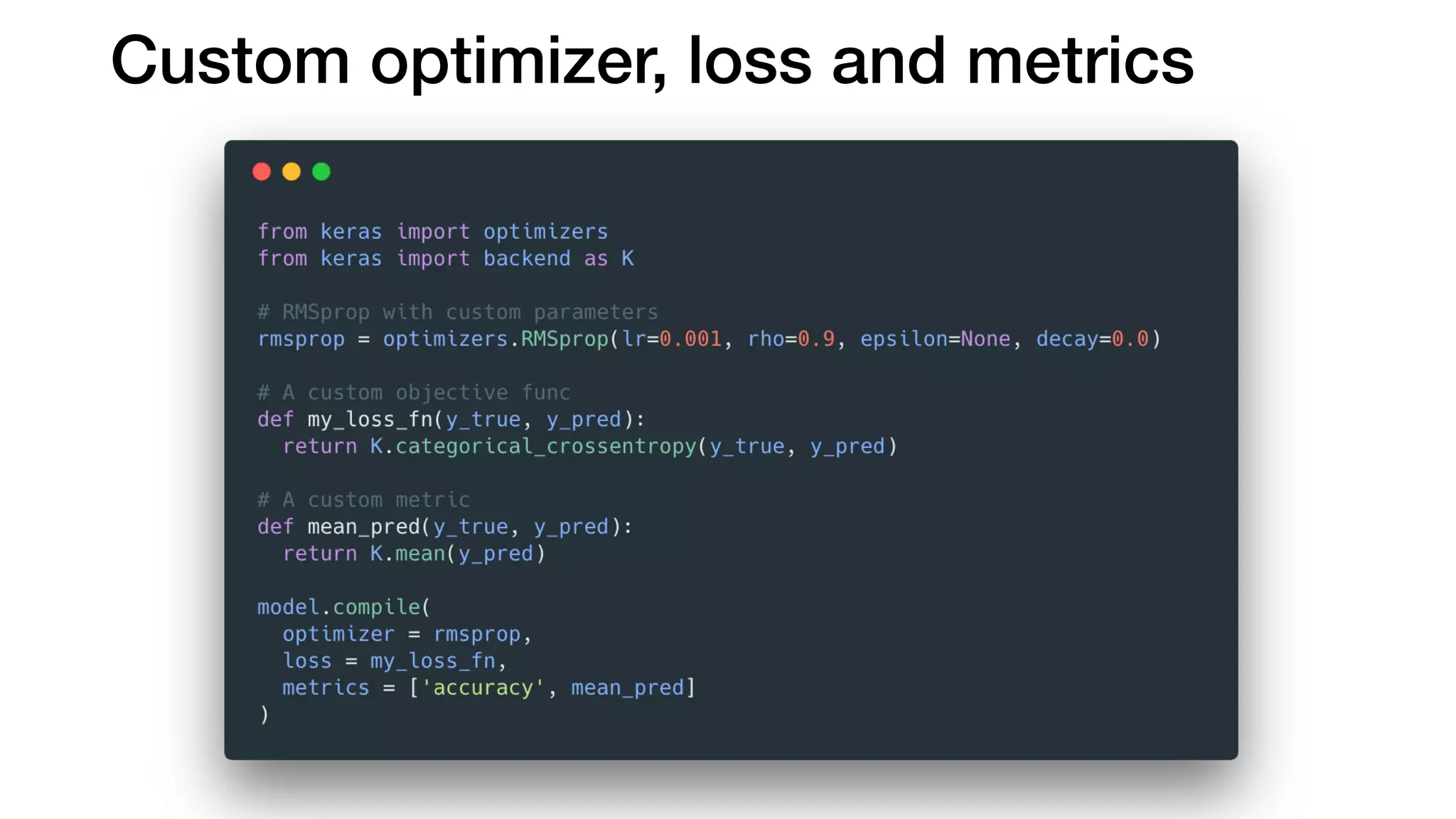

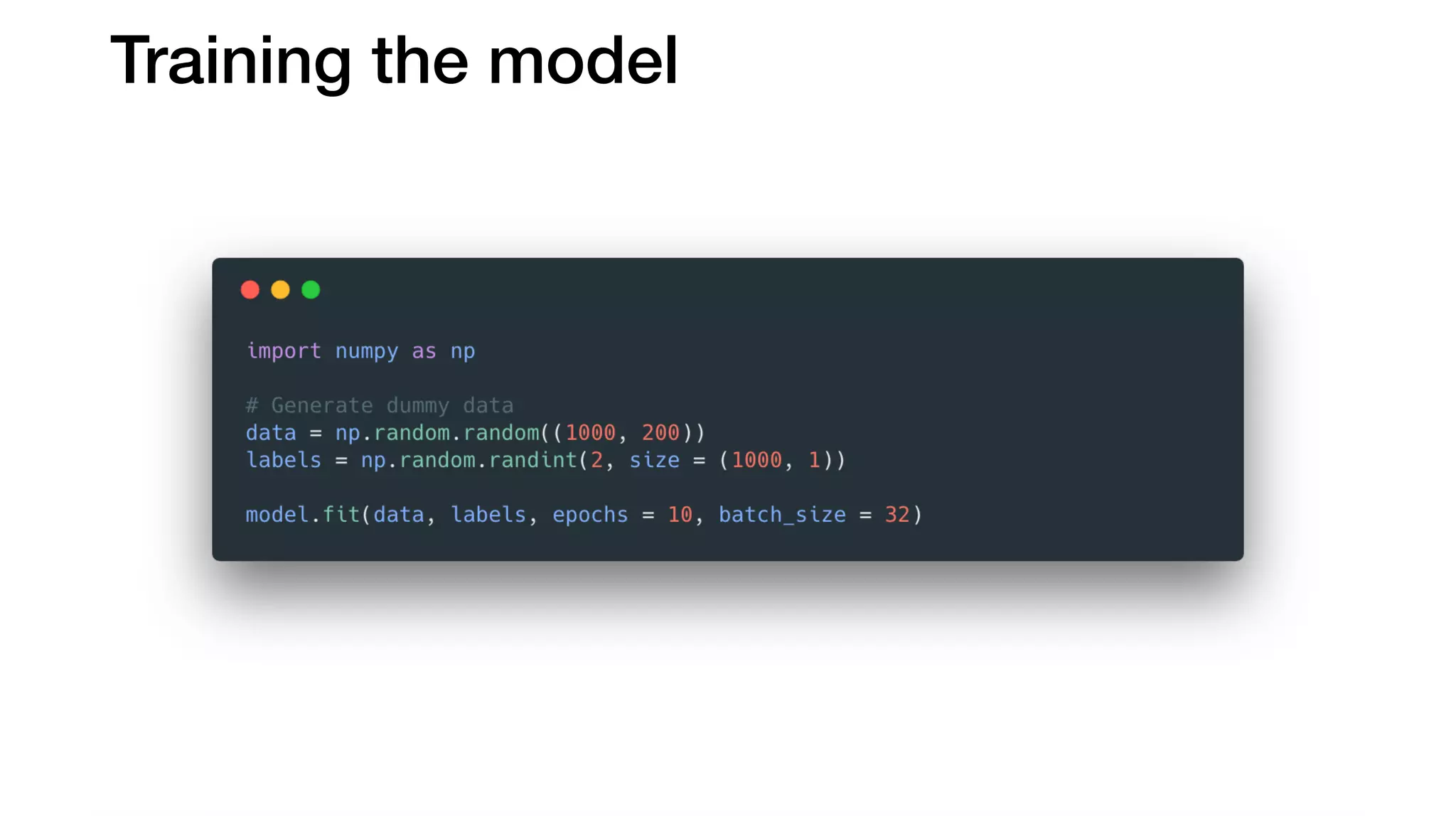

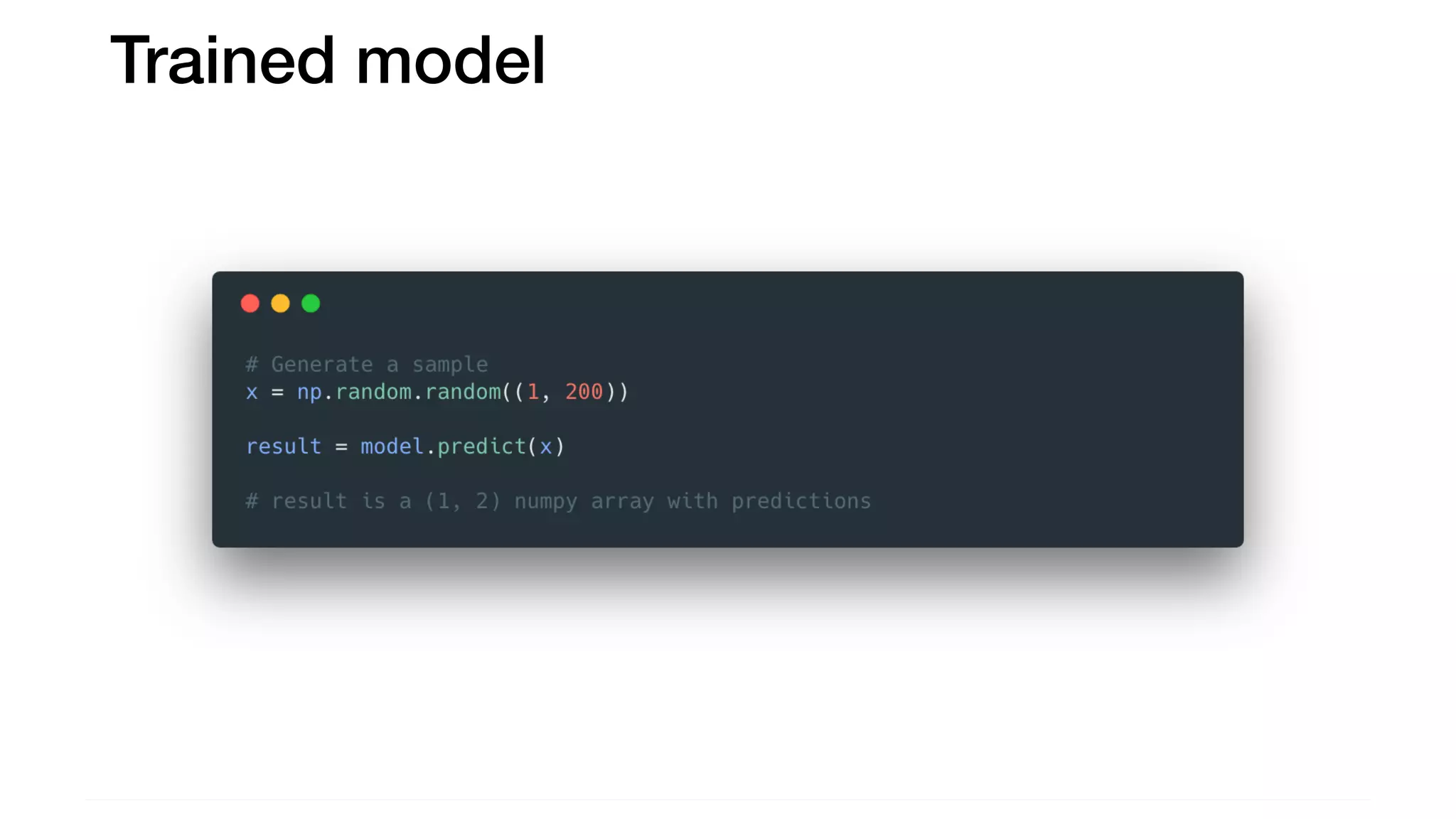

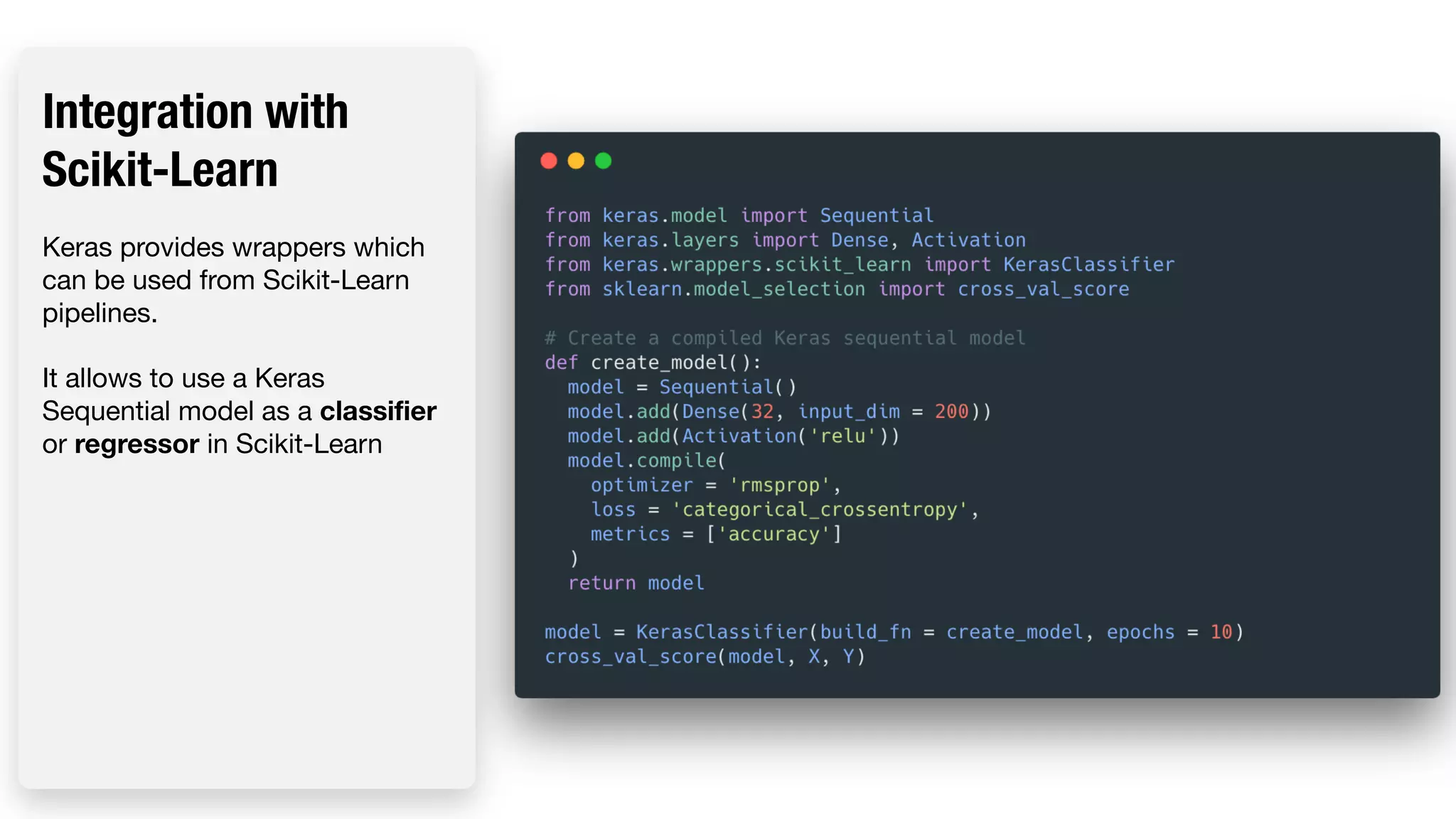

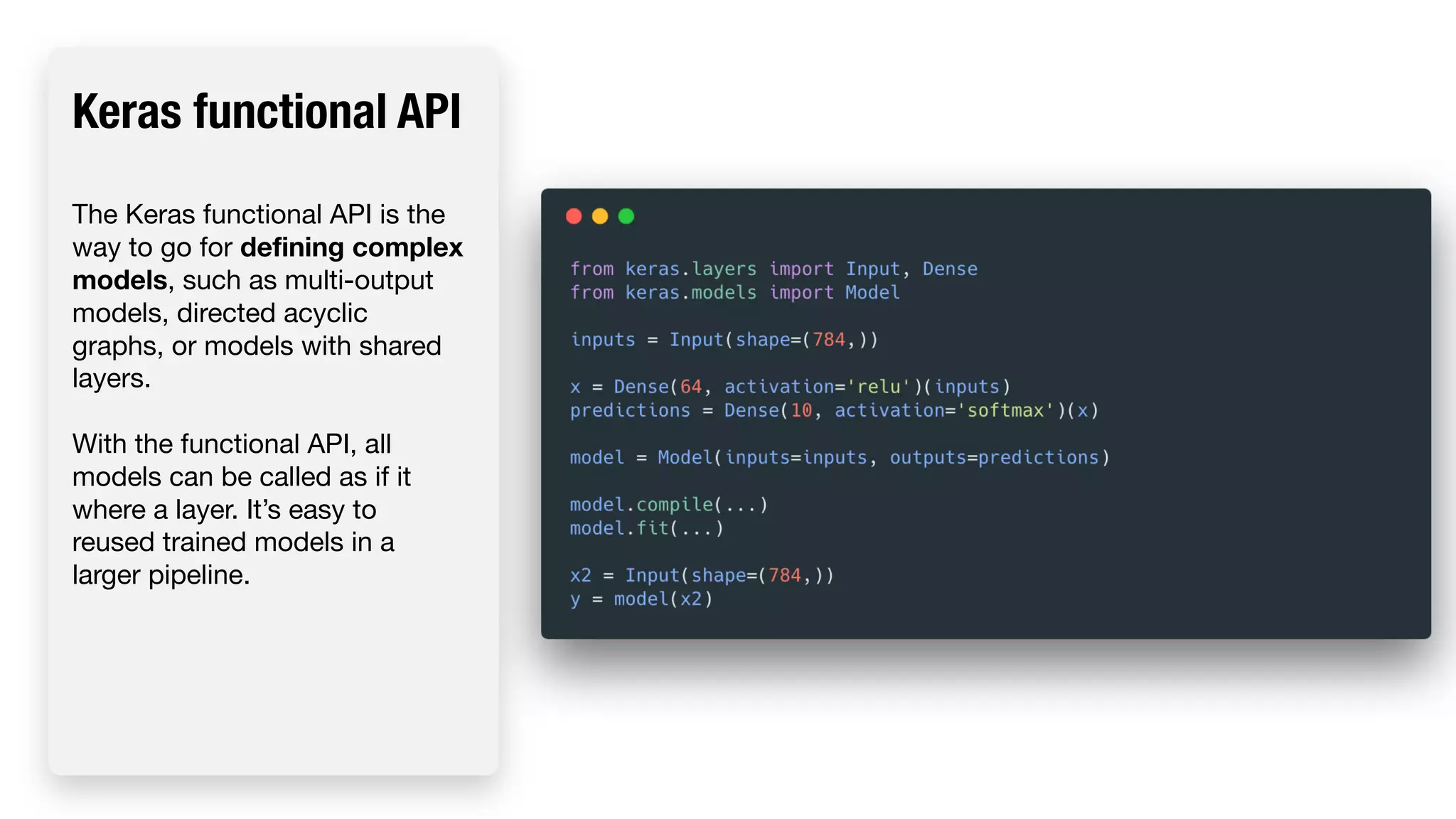

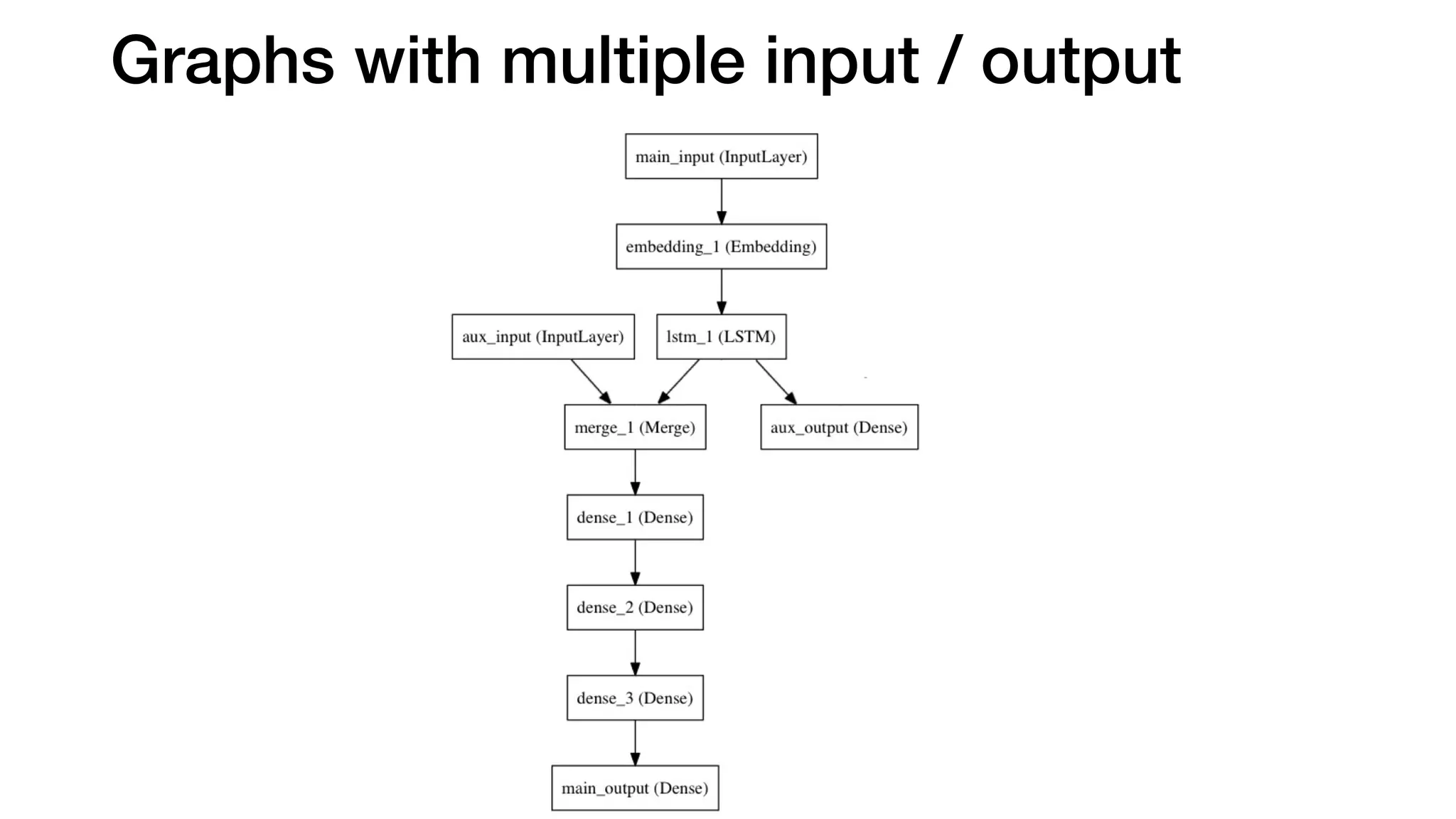

The document compares several machine learning frameworks, highlighting the strengths and weaknesses of TensorFlow, PyTorch, and Caffe2. It argues that AI tools need to interoperate and introduces ONNX (Open Neural Network Exchange), a shared model format that lets a model trained in one framework be used in another. It also covers Keras, a user-friendly high-level library for building neural networks, describing its features and how it integrates with other tools.