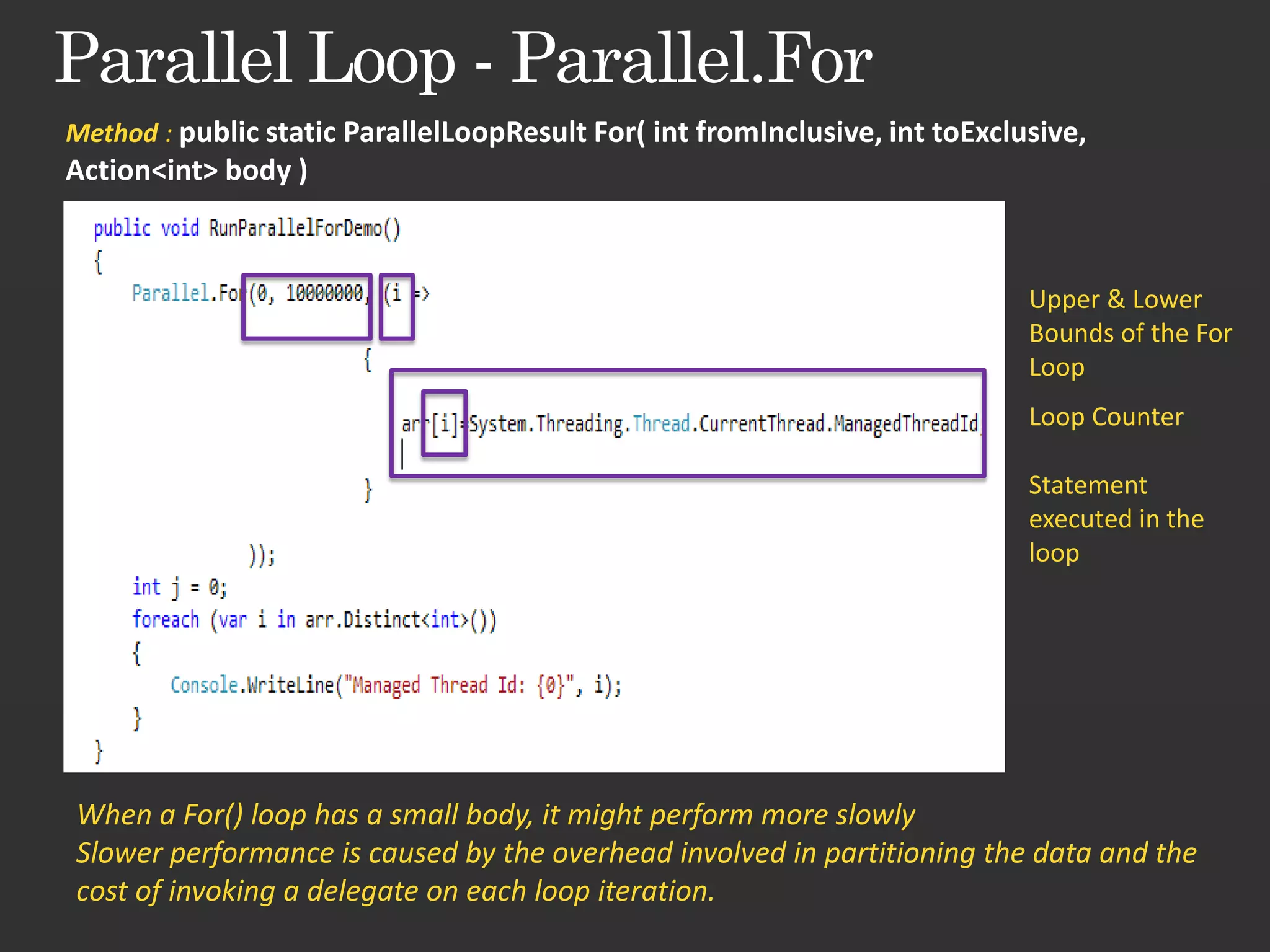

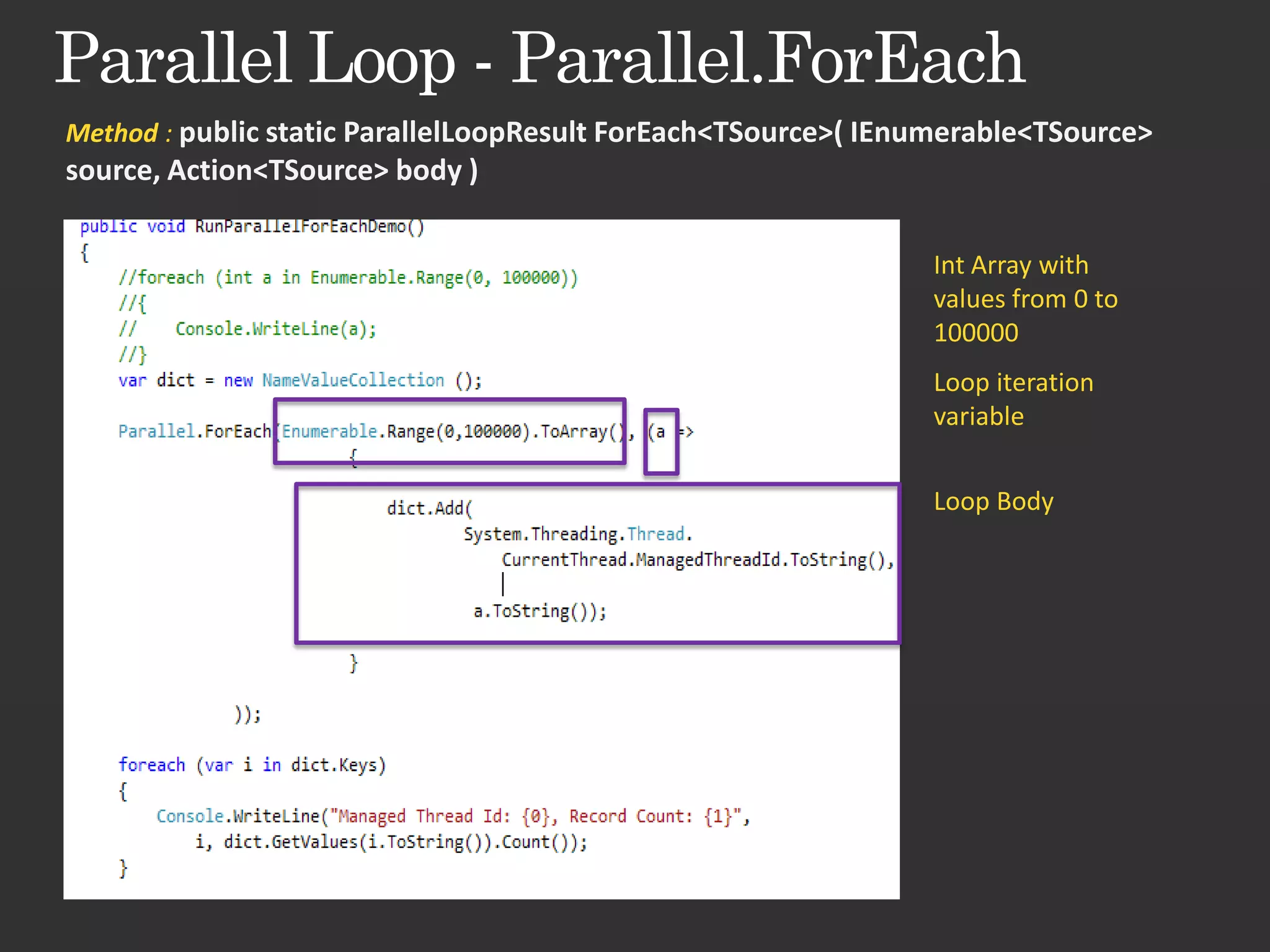

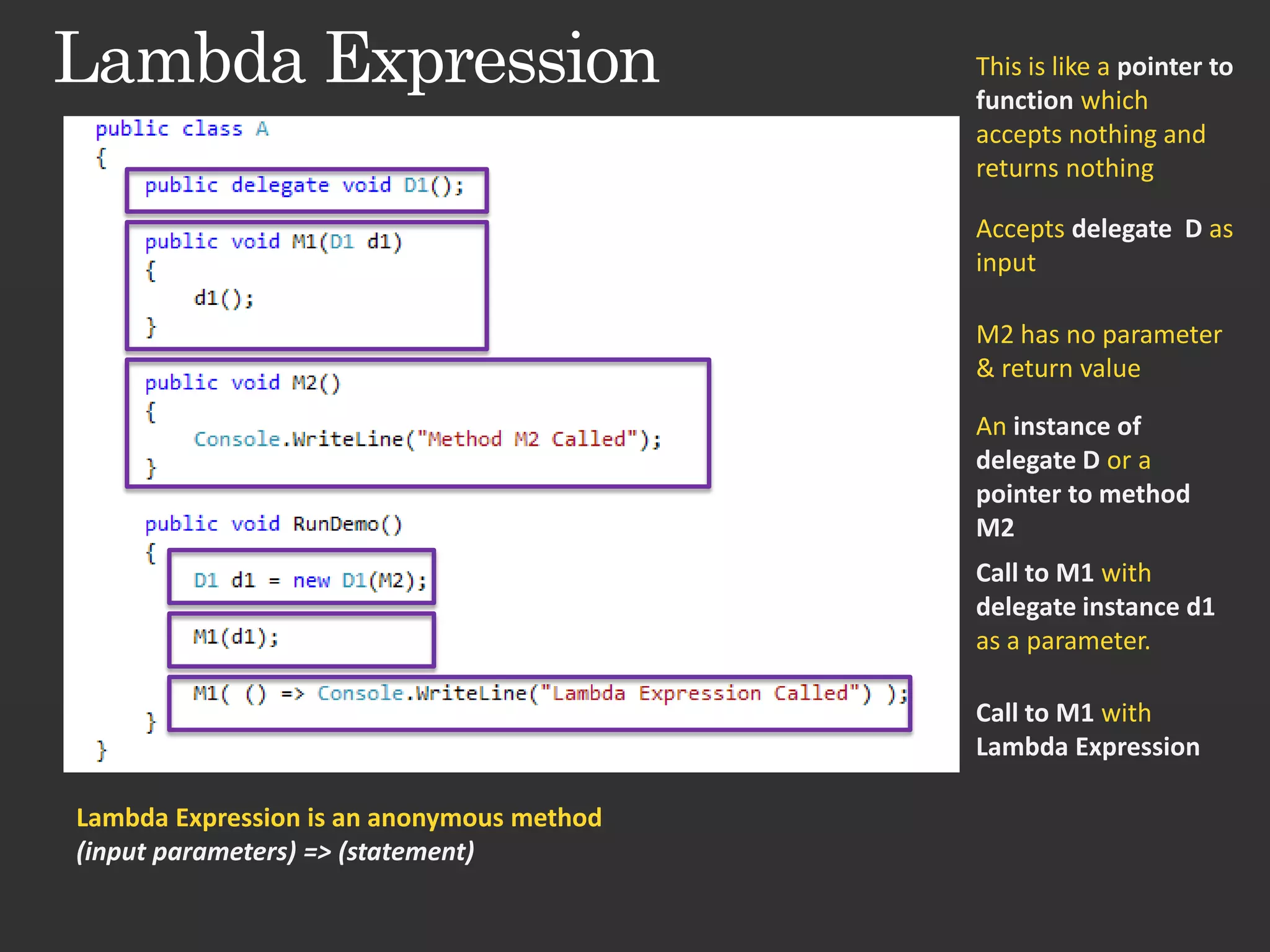

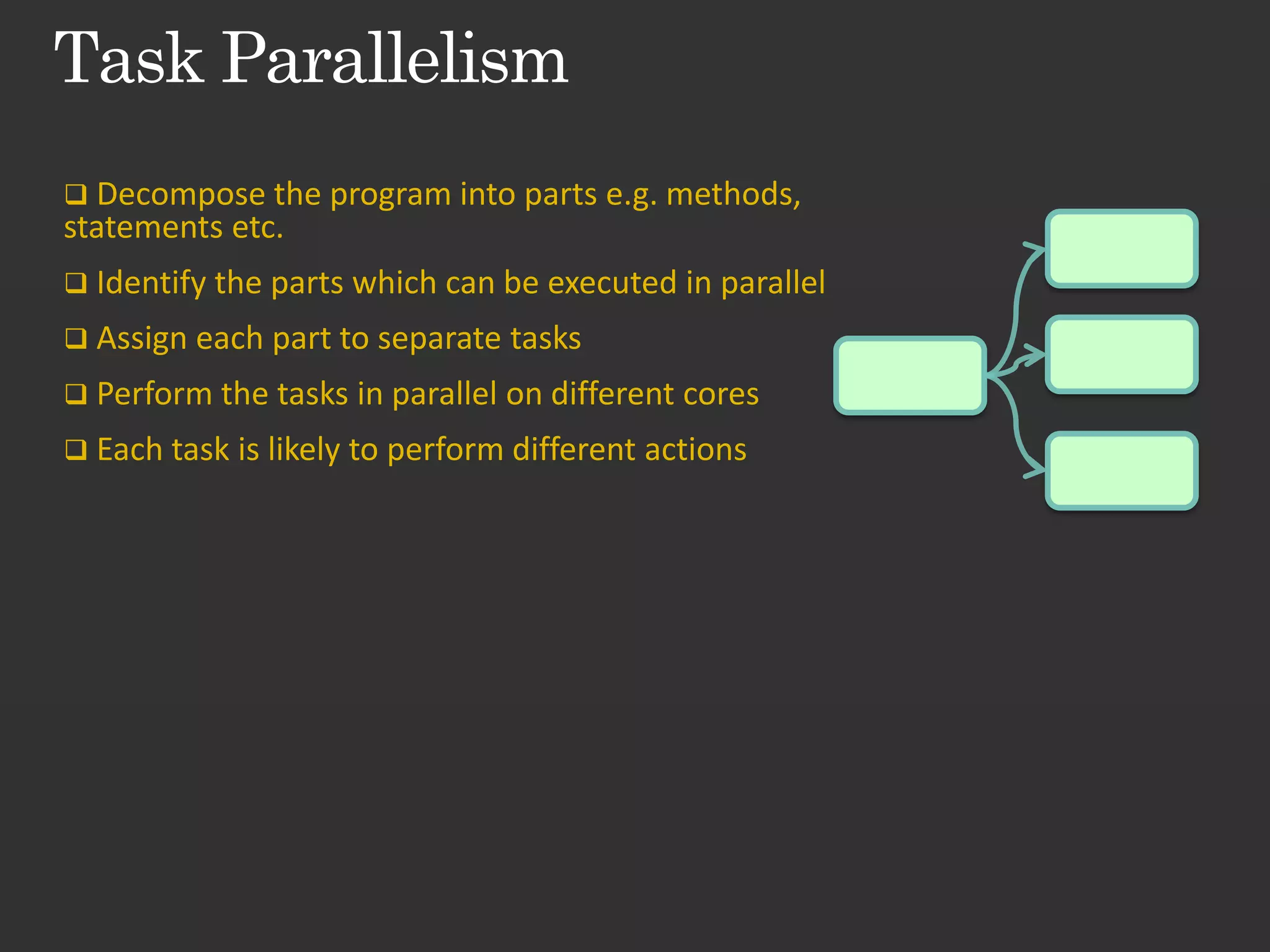

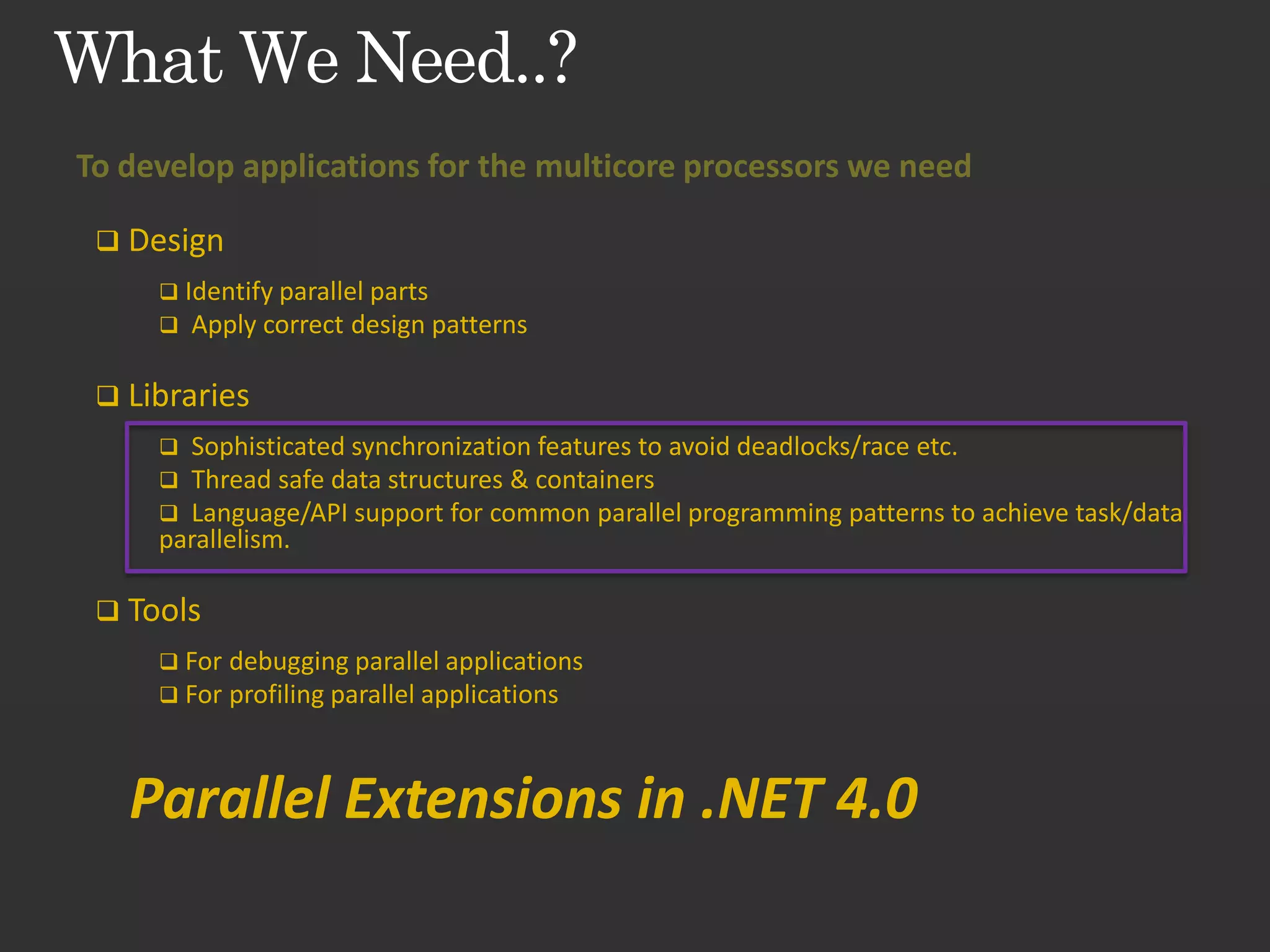

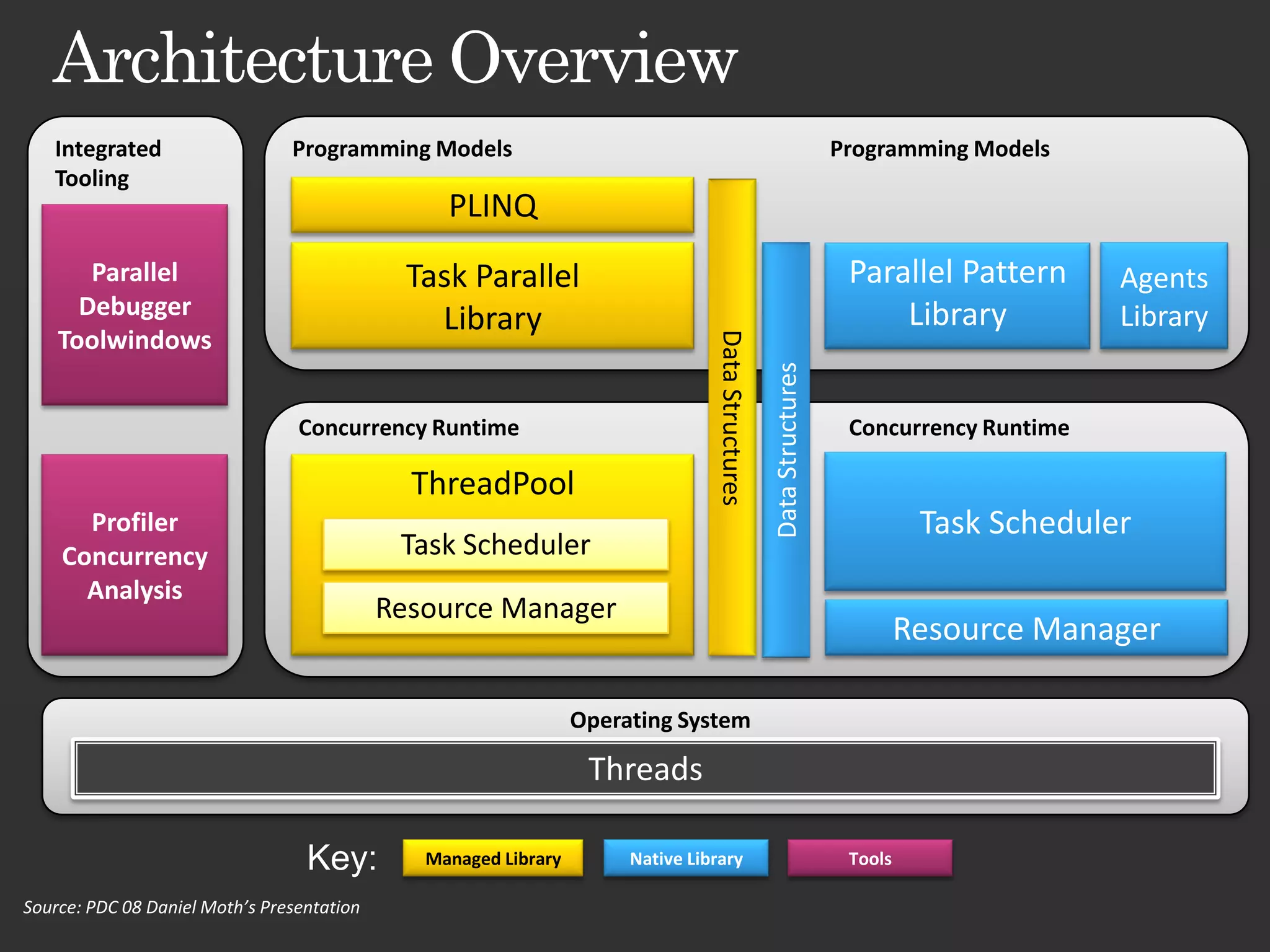

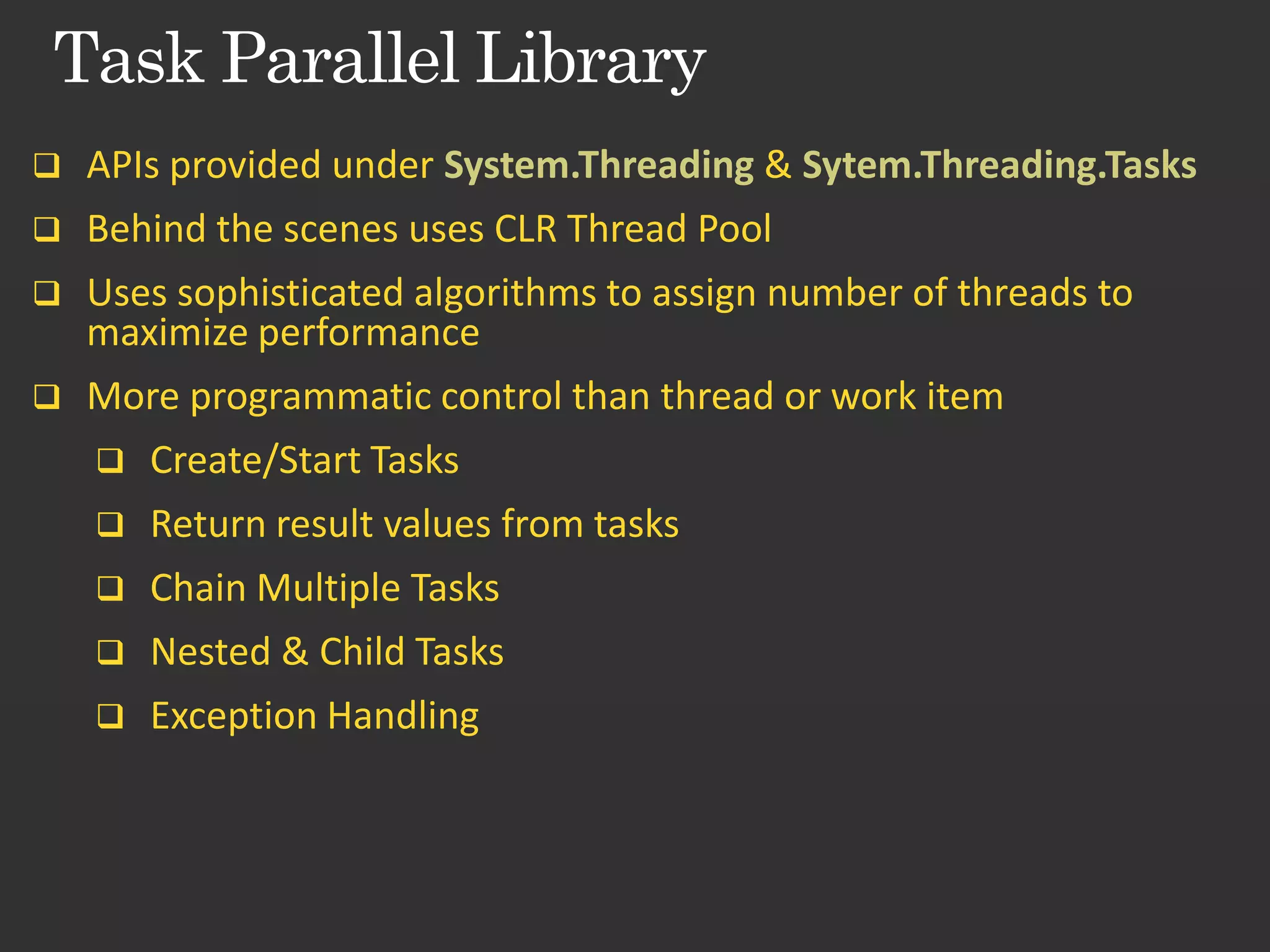

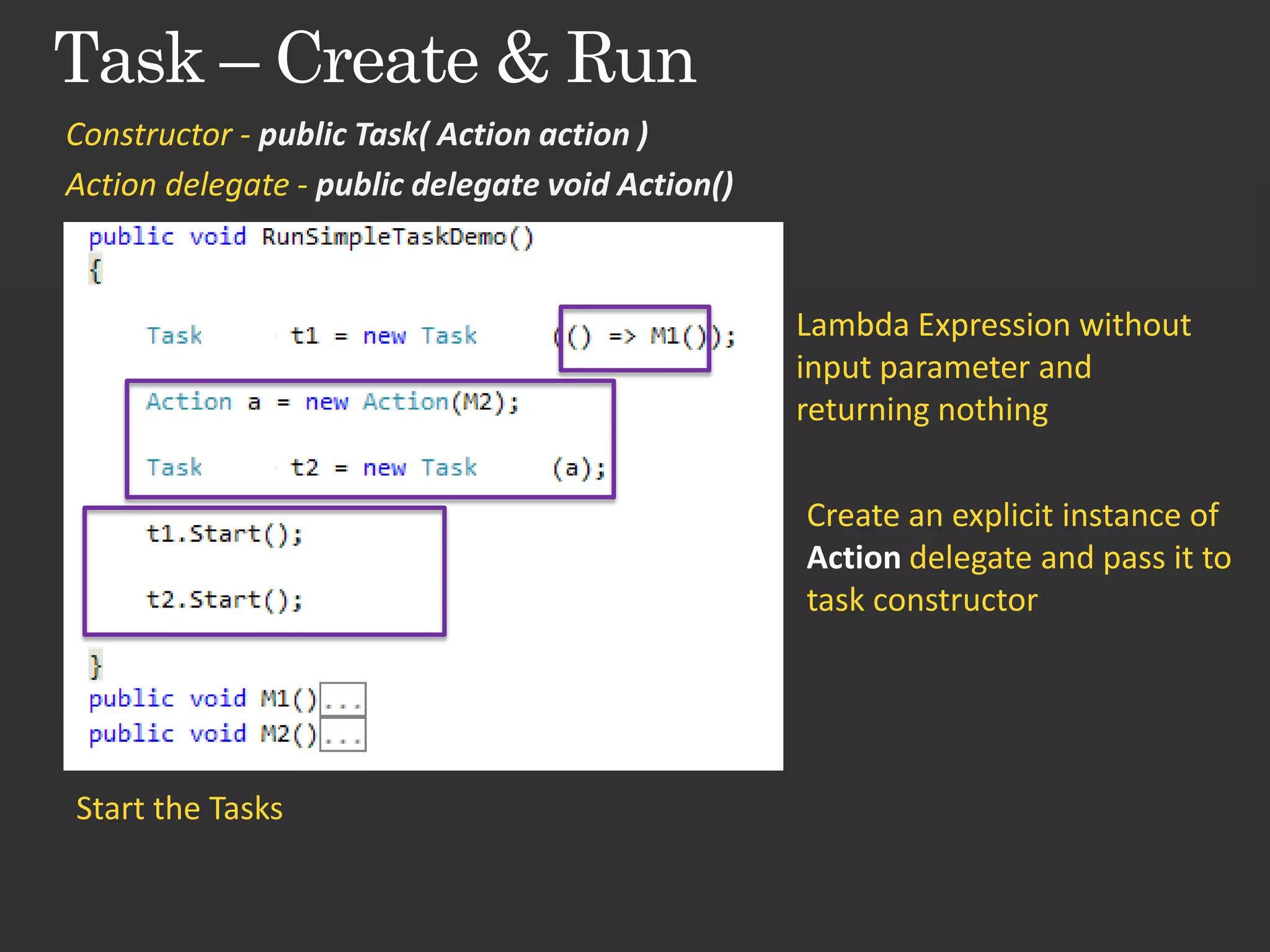

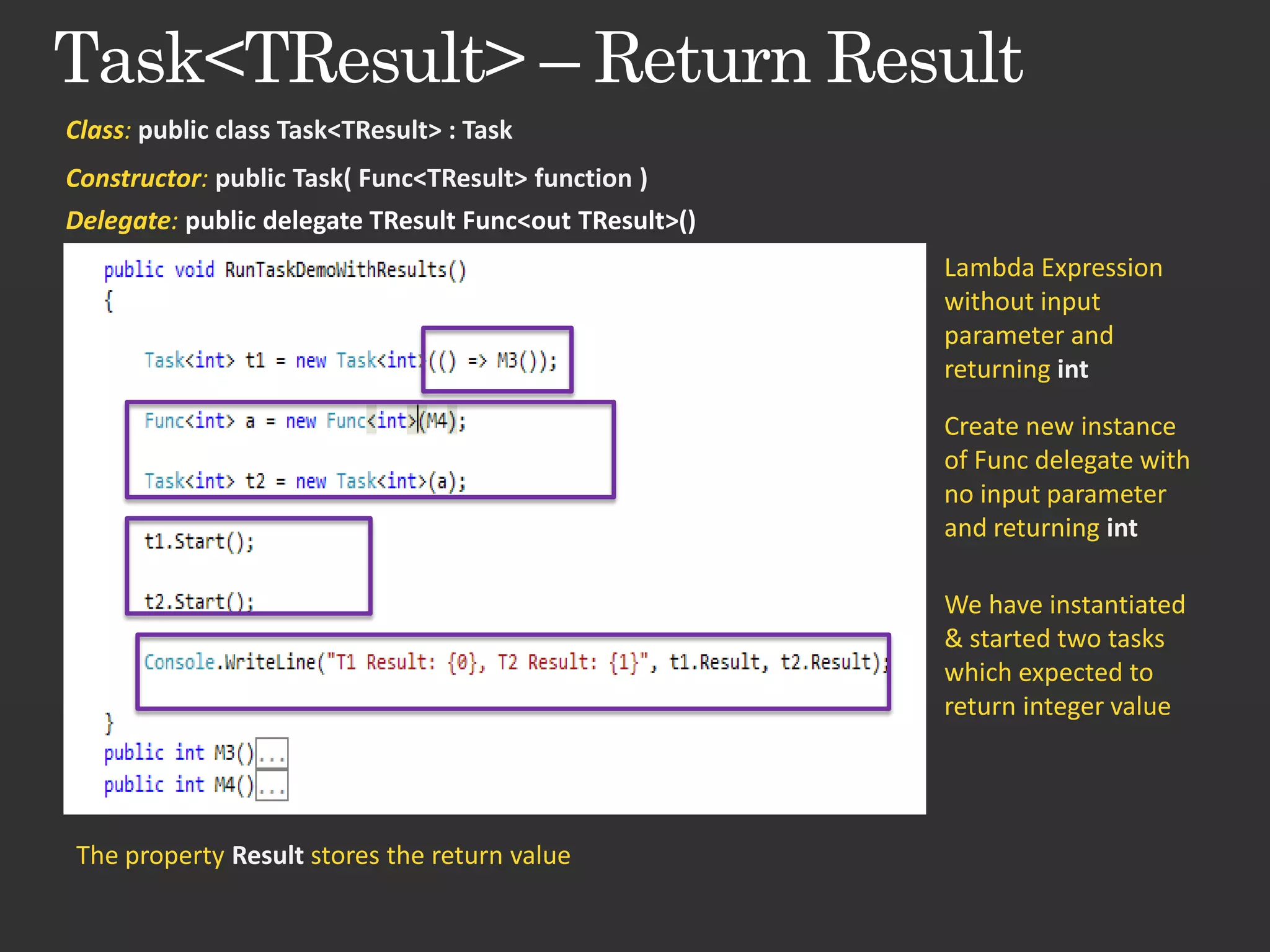

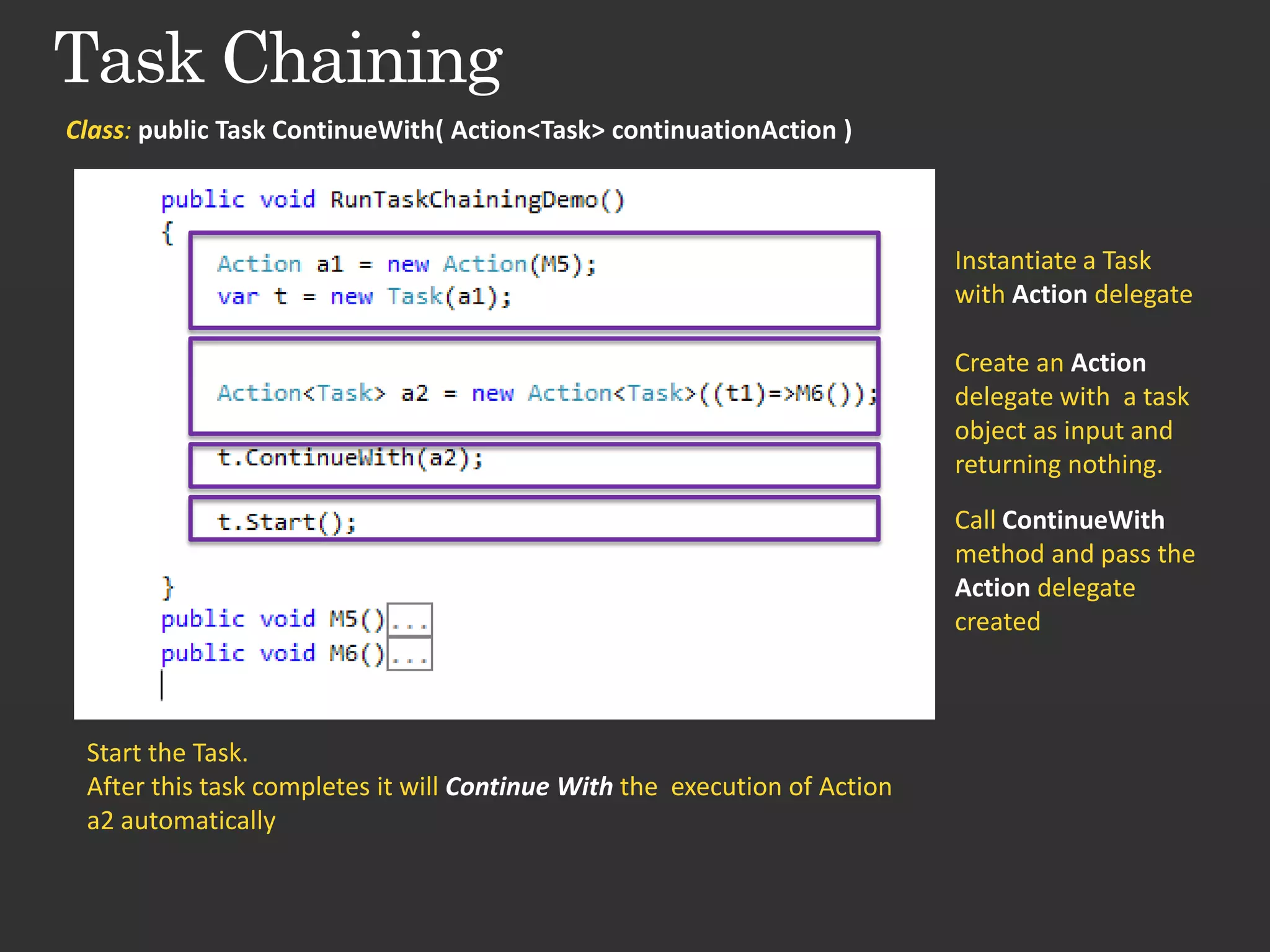

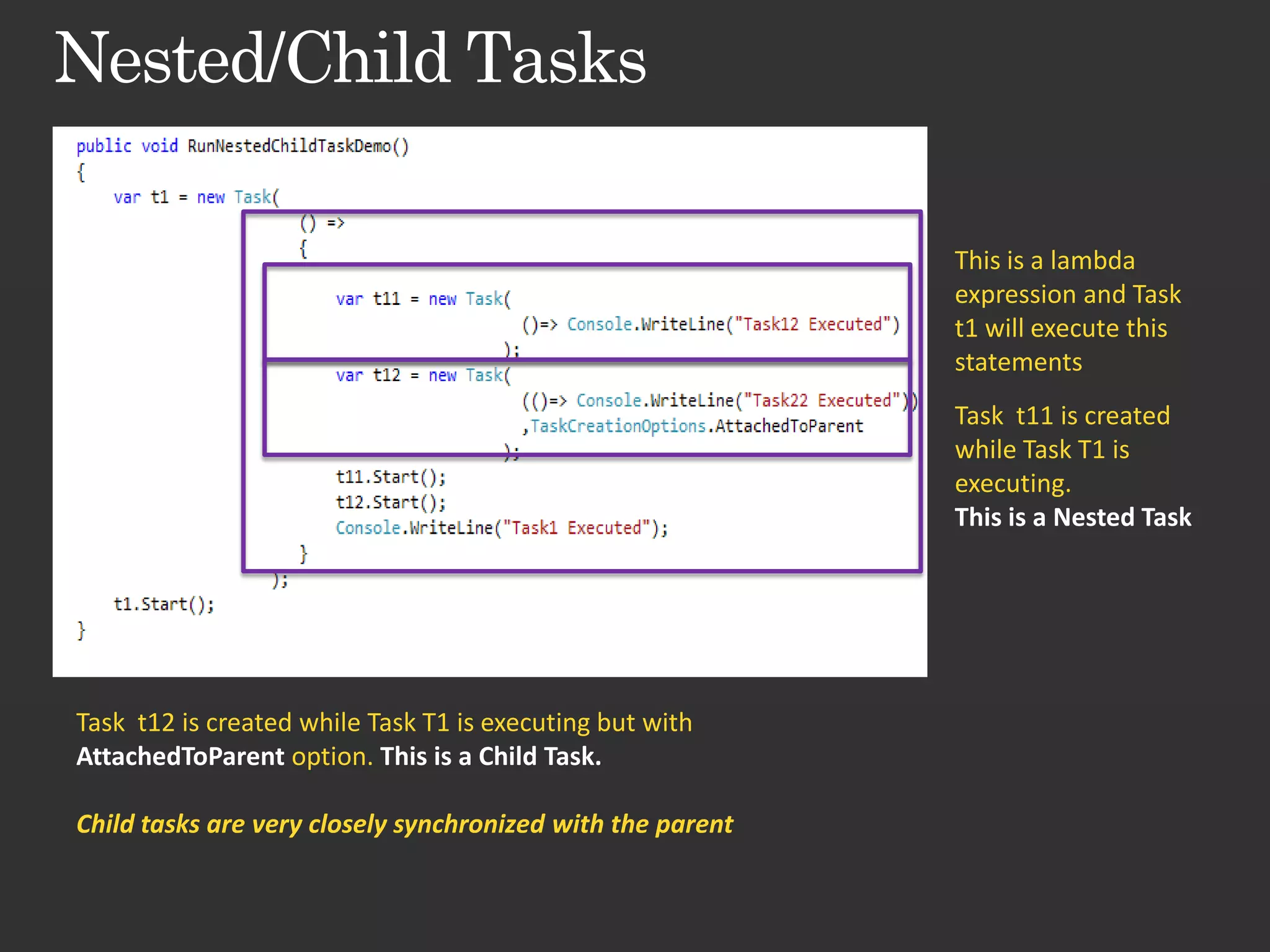

This document covers parallel programming in .NET 4.0: task parallelism, data parallelism, and coordination data structures. It gives an overview of tasks, explains how to create and start them using lambda expressions and delegates, and demonstrates continuations, which chain additional actions to run after a task completes.
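As a rough illustration of the task creation and continuation ideas summarized above, here is a minimal sketch that uses only APIs available in .NET 4.0. The class name `TaskDemo` and the helper `SumThenFormat` are hypothetical names chosen for this example and do not come from the slides.

```csharp
using System;
using System.Threading.Tasks;

public static class TaskDemo
{
    // Hypothetical helper: adds two numbers on a task, then formats
    // the result in a continuation chained onto that task.
    public static string SumThenFormat(int a, int b)
    {
        // Create a task from a lambda expression. It is not scheduled
        // until Start() is called.
        var sumTask = new Task<int>(() => a + b);

        // Chain a continuation; it runs only after sumTask completes
        // and receives the finished task as its argument.
        Task<string> formatted = sumTask.ContinueWith(t => "sum = " + t.Result);

        sumTask.Start();

        // Reading Result blocks until the continuation has finished.
        return formatted.Result;
    }

    public static void Main()
    {
        // Create a task from an explicit Action delegate and start it.
        Action greet = () => Console.WriteLine("hello from a task");
        var greetTask = new Task(greet);
        greetTask.Start();
        greetTask.Wait();

        // Task.Factory.StartNew creates and starts a task in one step.
        Task<int> square = Task.Factory.StartNew(() => 7 * 7);
        Console.WriteLine(square.Result);       // prints 49

        Console.WriteLine(SumThenFormat(2, 3)); // prints "sum = 5"
    }
}
```

The sketch separates creation from starting in `SumThenFormat` to show that a continuation can be attached before the antecedent task runs; `Task.Factory.StartNew` is the shorter form when no such separation is needed.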

![Method: public static void Invoke(params Action[] actions). Three Action delegates are created; the three delegates will be invoked, possibly in parallel.](https://image.slidesharecdn.com/ctd-parallelprogramming-100712232218-phpapp01/75/Parallel-Programming-in-NET-29-2048.jpg)
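The slide above describes `Parallel.Invoke` being given three `Action` delegates. The slide's own code is not reproduced in the text, so the following is only a sketch of that pattern; the class name `InvokeDemo`, the method `RunThree`, and the delegate bodies are assumptions made for illustration.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class InvokeDemo
{
    // Hypothetical helper: runs three Action delegates through
    // Parallel.Invoke and returns how many of them completed.
    public static int RunThree()
    {
        int completed = 0;

        // Three Action delegates are created. Interlocked.Increment keeps
        // the shared counter correct if the delegates run concurrently.
        Action first = () => Interlocked.Increment(ref completed);
        Action second = () => Interlocked.Increment(ref completed);
        Action third = () => Interlocked.Increment(ref completed);

        // The delegates are invoked, possibly in parallel. The call
        // returns only after all of them have finished.
        Parallel.Invoke(first, second, third);

        return completed;
    }

    public static void Main()
    {
        Console.WriteLine(RunThree()); // prints 3
    }
}
```

Because `Parallel.Invoke` gives no guarantee about execution order or about whether the delegates actually run concurrently, the delegates here avoid depending on one another and touch shared state only through an atomic operation.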