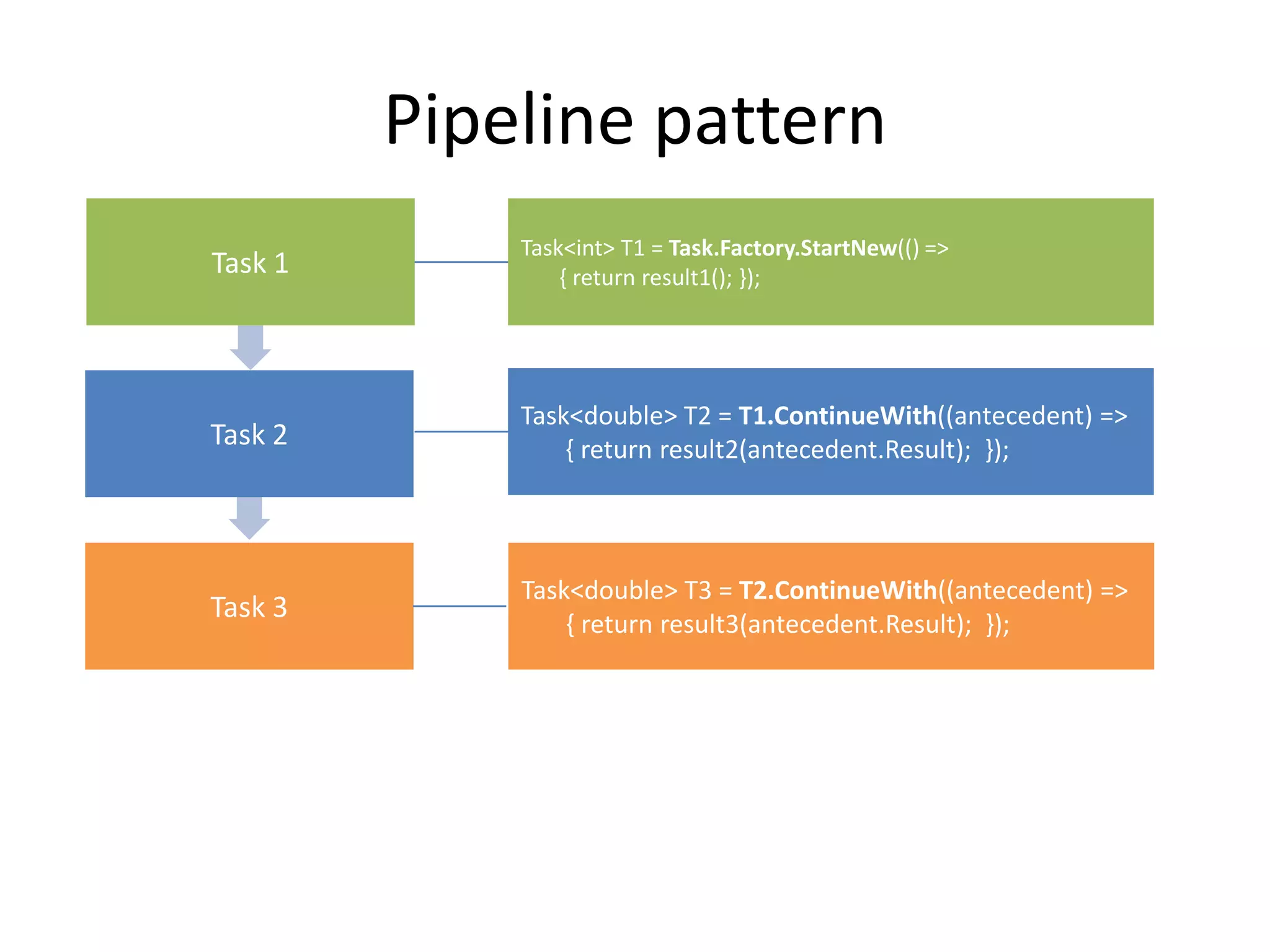

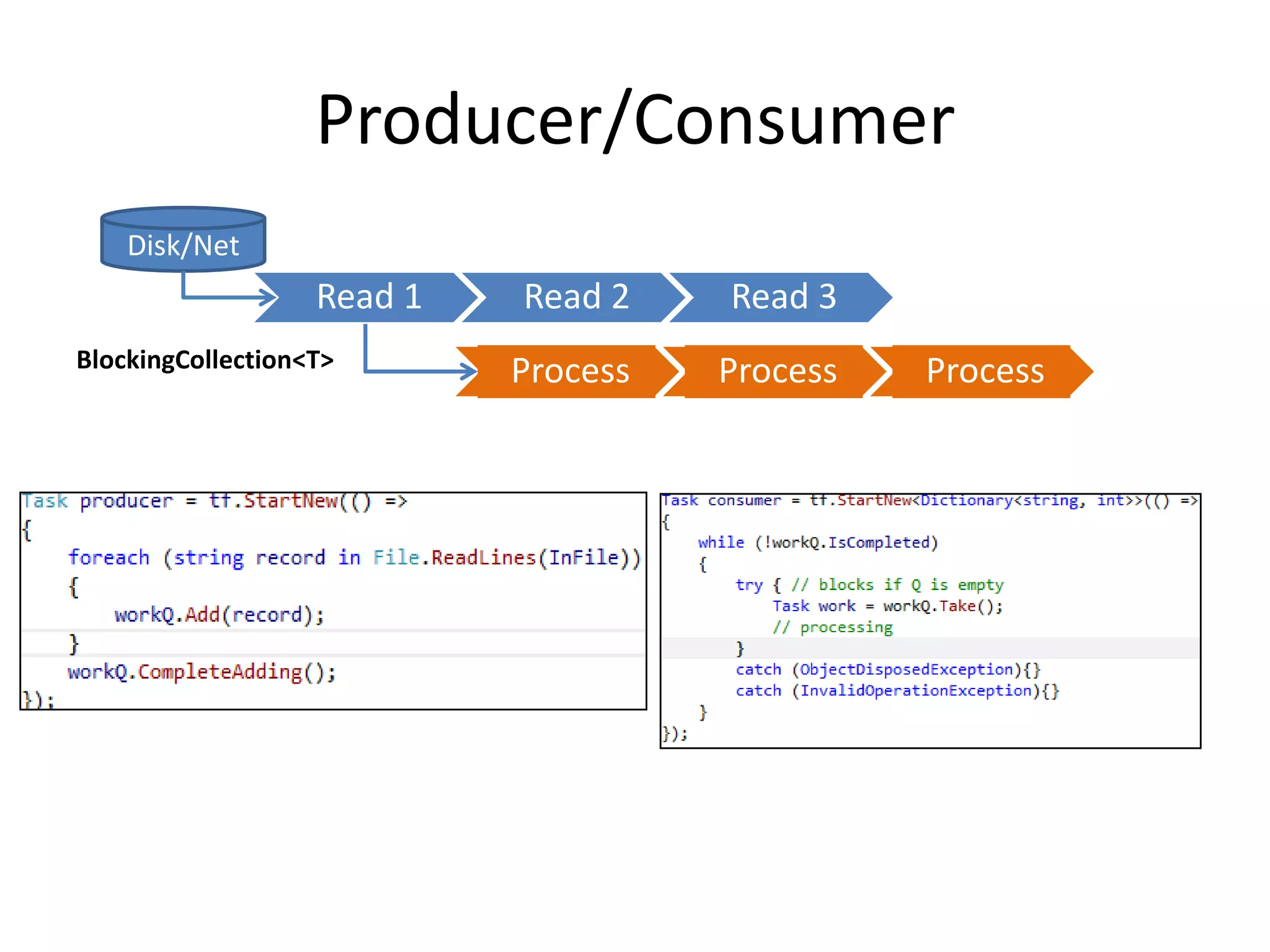

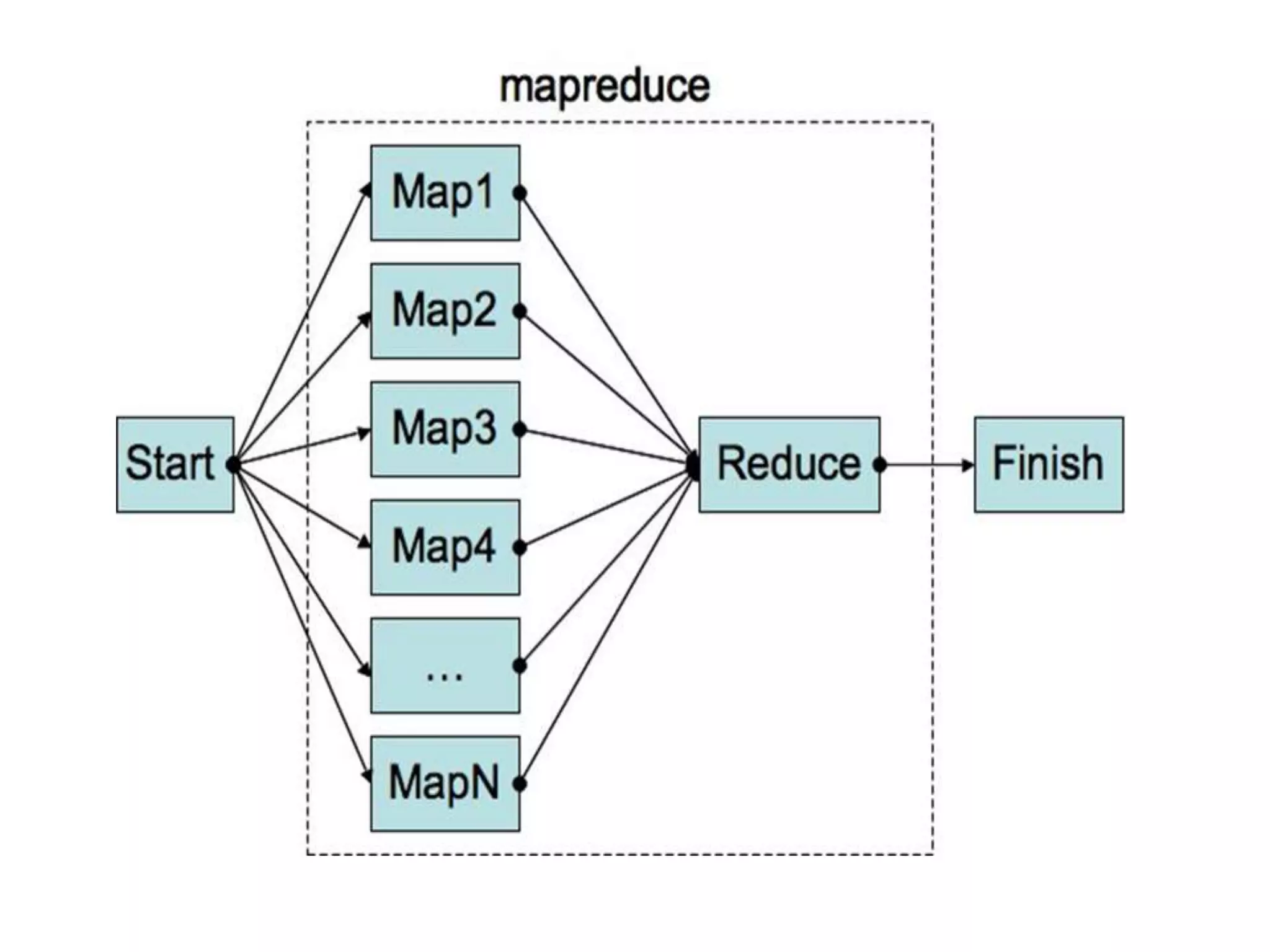

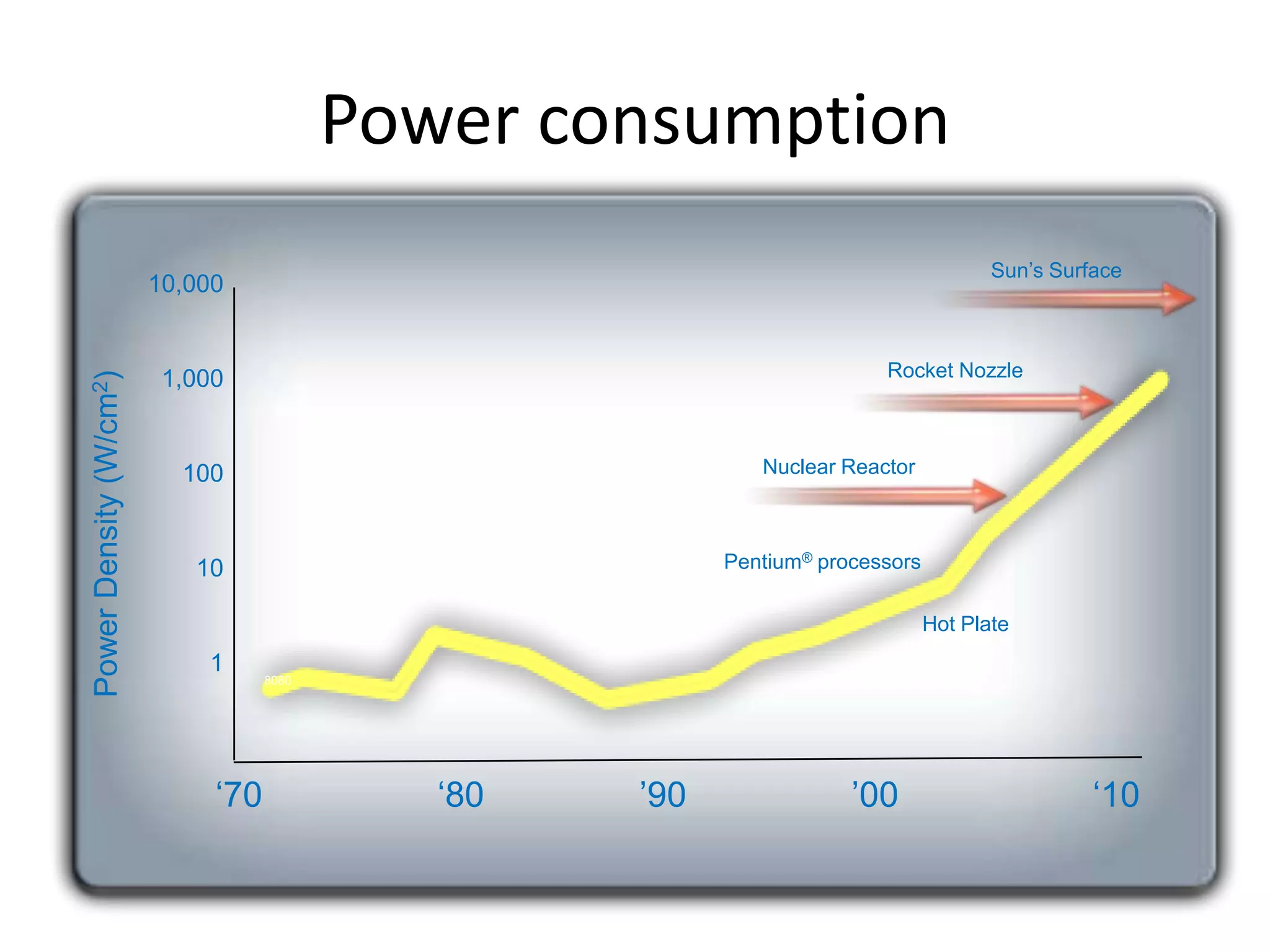

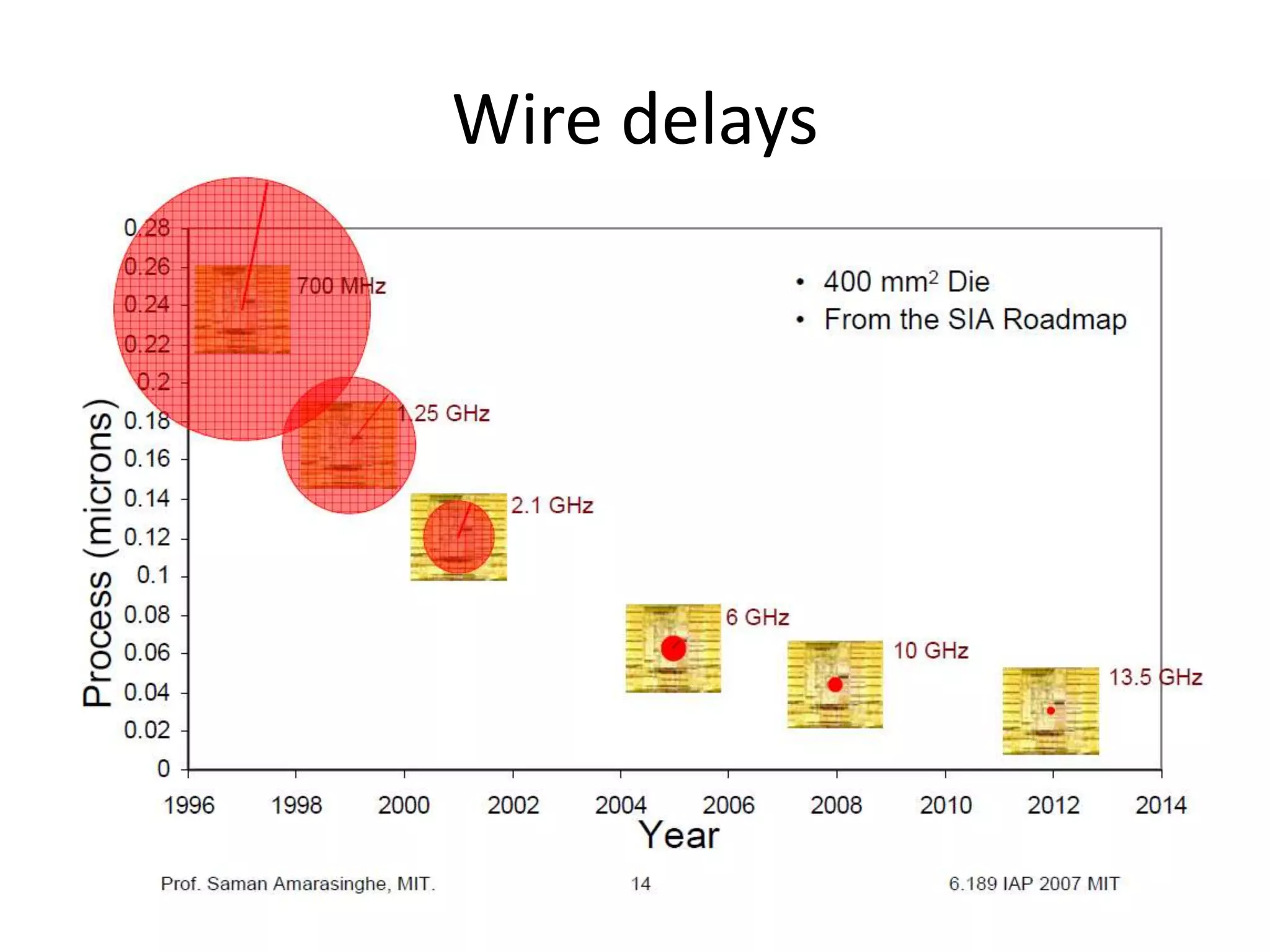

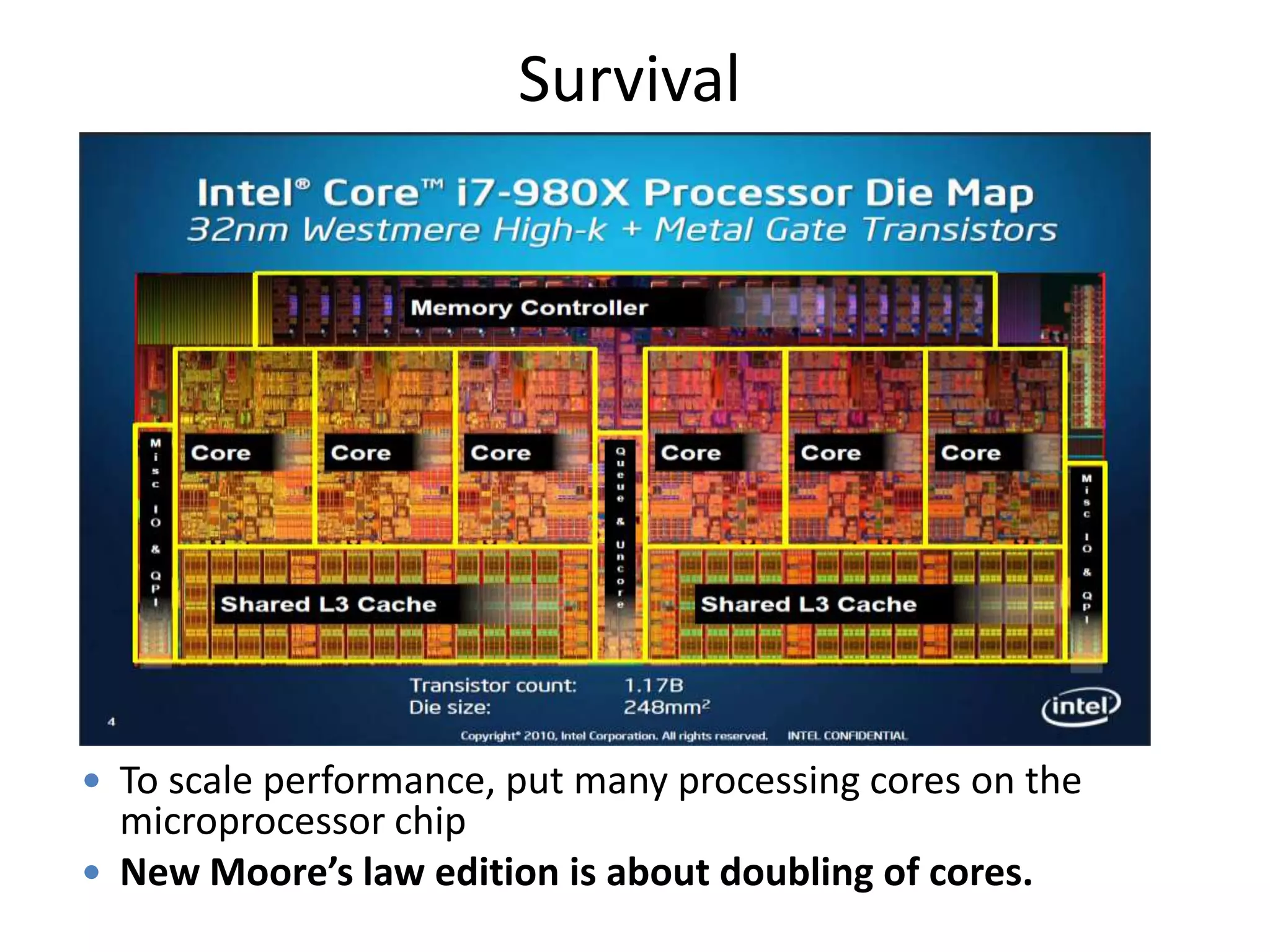

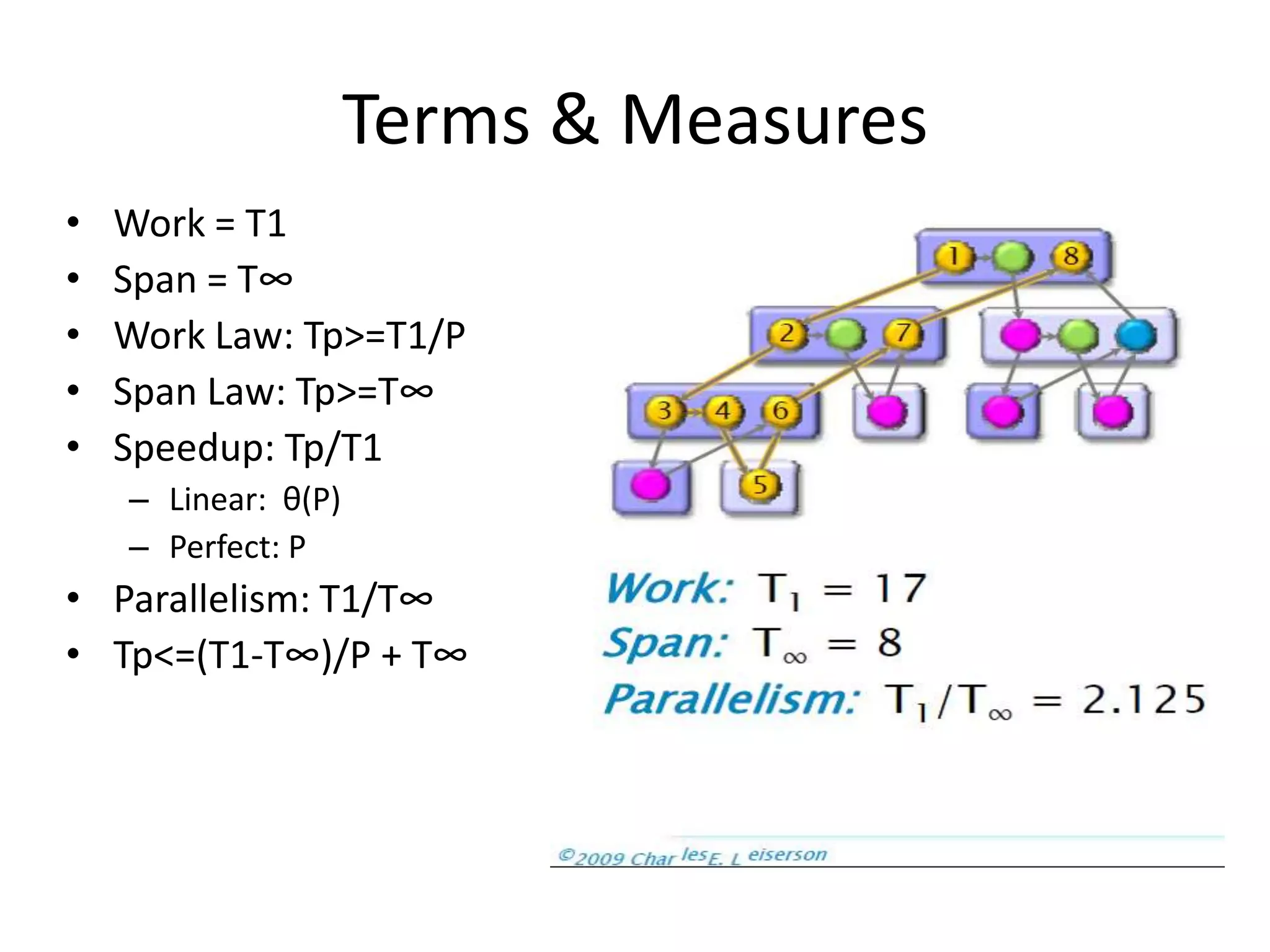

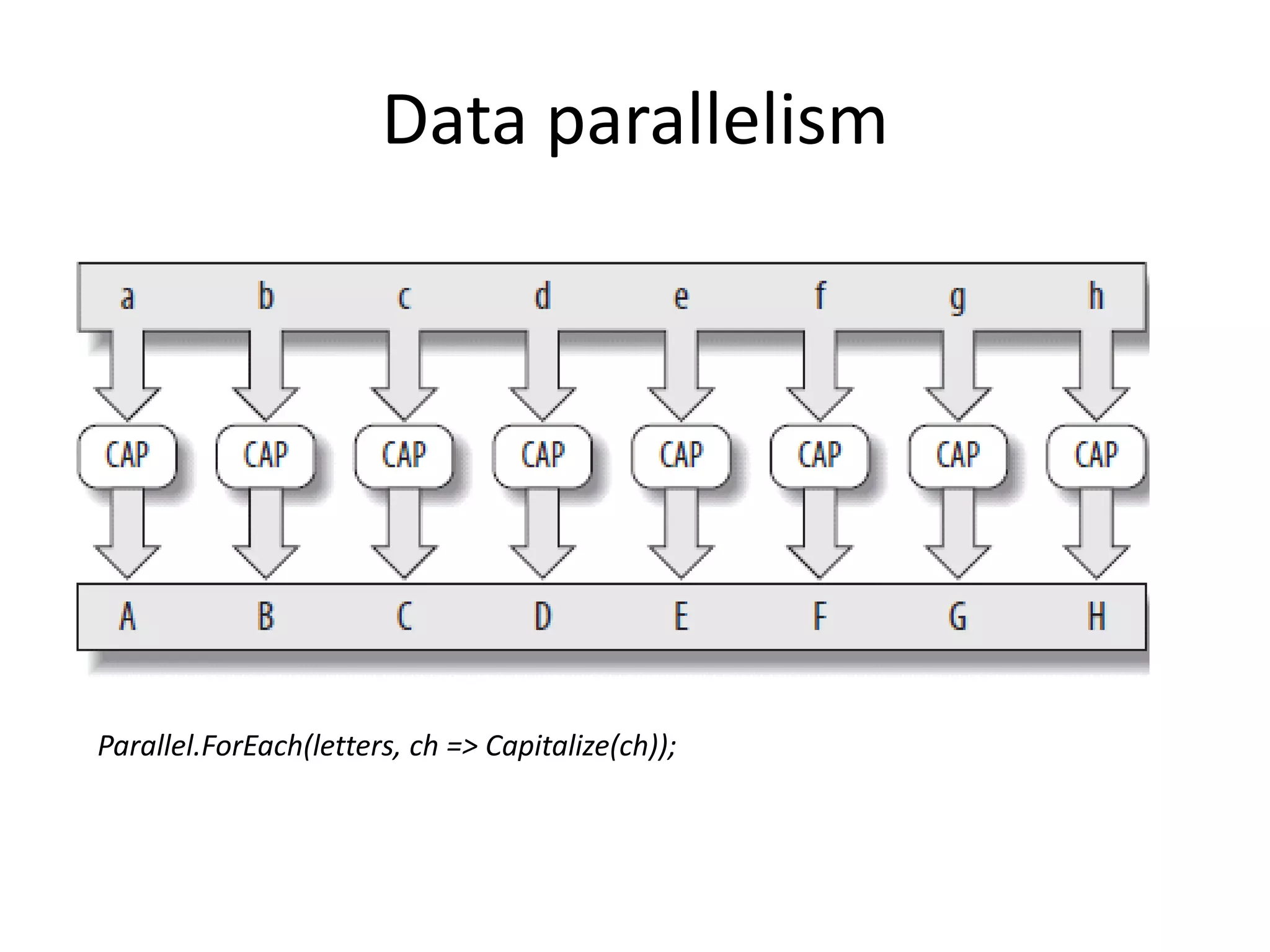

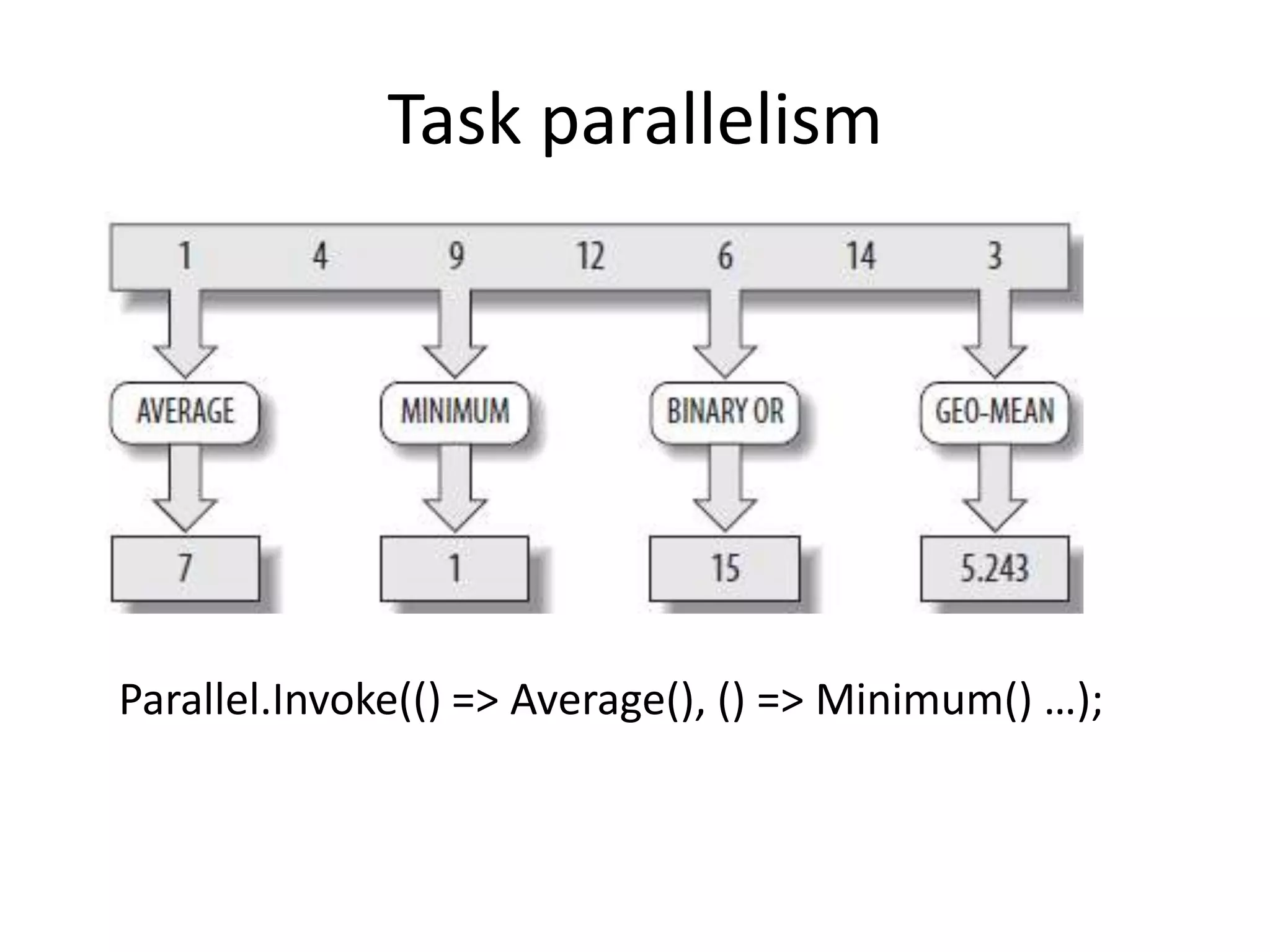

This document discusses patterns of parallel programming. It begins by explaining why parallel programming has become necessary: single-processor performance can no longer keep pace with Moore's Law because of limits such as power consumption and wire delays. It then introduces key terms and measures, including work, span, speedup and parallelism. Next, it gives an overview of common patterns such as pipeline, producer-consumer and Map-Reduce, and warns of dangers such as race conditions, deadlocks and starvation. Finally, it provides references for further reading on parallel programming patterns and approaches.
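The measures named above are commonly related through the work-span model. The sketch below uses the textbook notation, which is our assumption rather than notation taken from the slides: $T_1$ is the work (running time on one processor), $T_\infty$ is the span (length of the longest chain of dependent steps), and $T_P$ is the running time on $P$ processors.

$$
S_P = \frac{T_1}{T_P} \quad \text{(speedup)}, \qquad \text{parallelism} = \frac{T_1}{T_\infty}
$$

$$
T_P \ge \frac{T_1}{P} \quad \text{and} \quad T_P \ge T_\infty \quad \Longrightarrow \quad S_P \le \min\left(P, \frac{T_1}{T_\infty}\right)
$$

For example, if three independent computations each take one time unit (and fork/join overhead is ignored), then $T_1 = 3$ and $T_\infty = 1$, so the parallelism is 3: adding a fourth processor cannot make the computation any faster.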

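![Fork-Join diagram: Fork, then Compute Mean, Compute Median and Compute Mode in parallel, then Join](https://image.slidesharecdn.com/patternsofparallelprogrammingnetusergroup-130311045912-phpapp01/75/Patterns-of-parallel-programming-14-2048.jpg)

Fork-Join:

- Additional work may be started only when specific subsets of the original elements have completed processing.
- All elements should be given the chance to run even if one invocation fails (Ping).

```csharp
using System;
using System.Threading.Tasks;

// Fork: run the three computations in parallel.
// Join: Parallel.Invoke returns only after all of them have finished.
Parallel.Invoke(
    () => ComputeMean(),
    () => ComputeMedian(),
    () => ComputeMode());

// A hand-rolled equivalent built directly on tasks.
static void MyParallelInvoke(params Action[] actions)
{
    var tasks = new Task[actions.Length];
    // Fork: start one task per action.
    for (int i = 0; i < actions.Length; i++)
        tasks[i] = Task.Factory.StartNew(actions[i]);
    // Join: block until every task has completed, including faulted ones.
    Task.WaitAll(tasks);
}
```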
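The second bullet, that every element gets a chance to run even if one invocation fails, follows from how `Task.WaitAll` behaves: it waits for all tasks to complete and only then throws an `AggregateException` collecting the failures. The sketch below illustrates this with the same fork/join shape as `MyParallelInvoke`. The class `ForkJoinFailureDemo`, its `Run` method and the lambdas standing in for `ComputeMean`, `ComputeMedian` and `ComputeMode` are hypothetical, chosen only for illustration.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Hypothetical demo: forks three actions, one of which throws, then joins.
public static class ForkJoinFailureDemo
{
    // Returns the names of the actions that ran to completion (sorted) and
    // the number of exceptions reported at the join point.
    public static (string[] Completed, int FailureCount) Run()
    {
        var completed = new ConcurrentBag<string>();
        Action[] actions =
        {
            () => completed.Add("Mean"),                                 // stands in for ComputeMean
            () => throw new InvalidOperationException("Median failed"), // stands in for a failing ComputeMedian
            () => completed.Add("Mode"),                                 // stands in for ComputeMode
        };

        // Fork: start one task per action, as MyParallelInvoke does.
        var tasks = new Task[actions.Length];
        for (int i = 0; i < actions.Length; i++)
            tasks[i] = Task.Factory.StartNew(actions[i]);

        int failureCount = 0;
        try
        {
            // Join: WaitAll waits for every task, including the faulted one,
            // before throwing an AggregateException.
            Task.WaitAll(tasks);
        }
        catch (AggregateException ex)
        {
            failureCount = ex.InnerExceptions.Count;
        }

        string[] names = completed.ToArray();
        Array.Sort(names);
        return (names, failureCount);
    }

    public static void Main()
    {
        var (completedNames, failures) = Run();
        // Prints "Completed: Mean, Mode; failures: 1"
        Console.WriteLine($"Completed: {string.Join(", ", completedNames)}; failures: {failures}");
    }
}
```

Because the join point sees every task's outcome, the caller can inspect all failures at once instead of losing the results of the tasks that succeeded.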