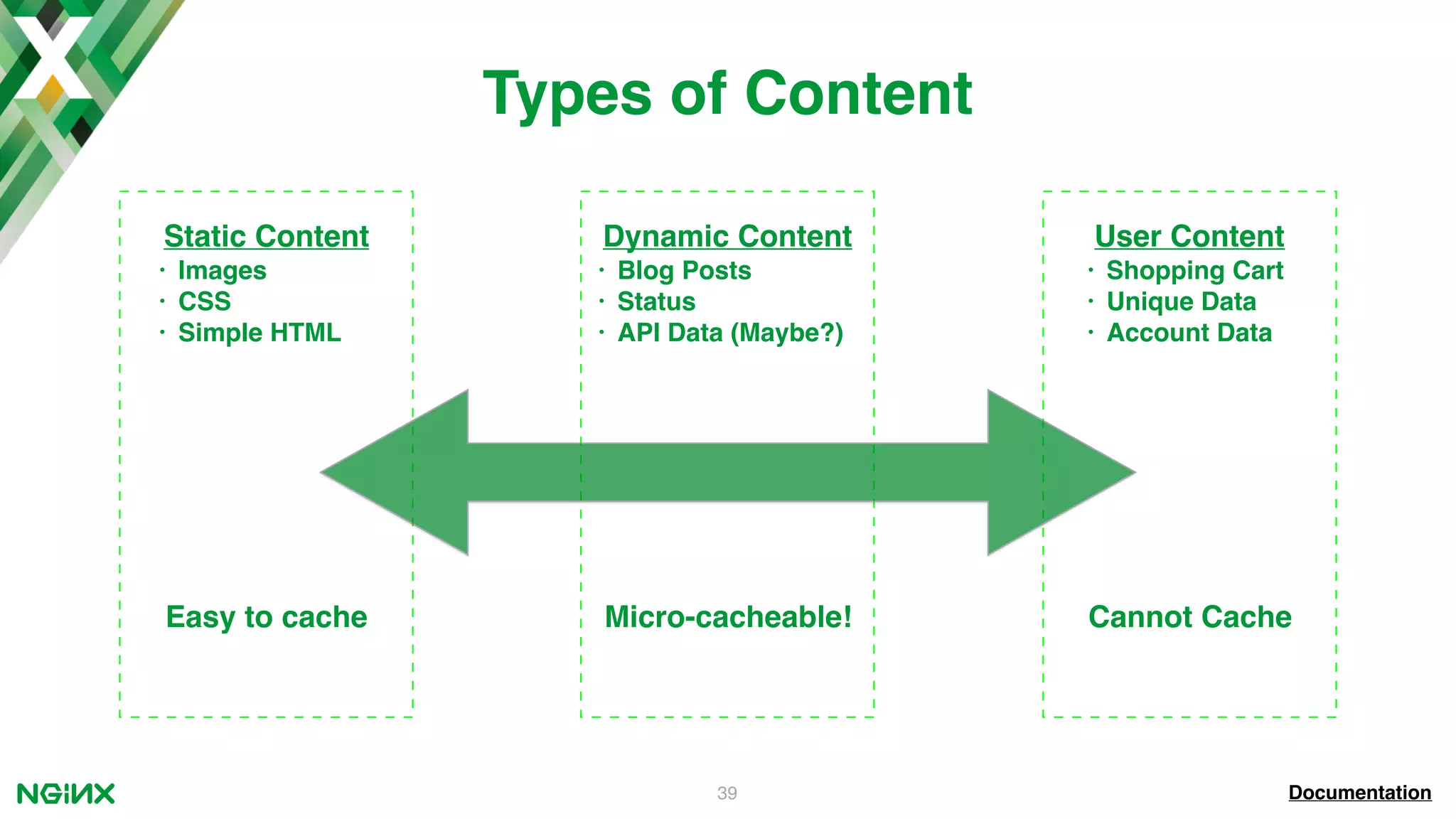

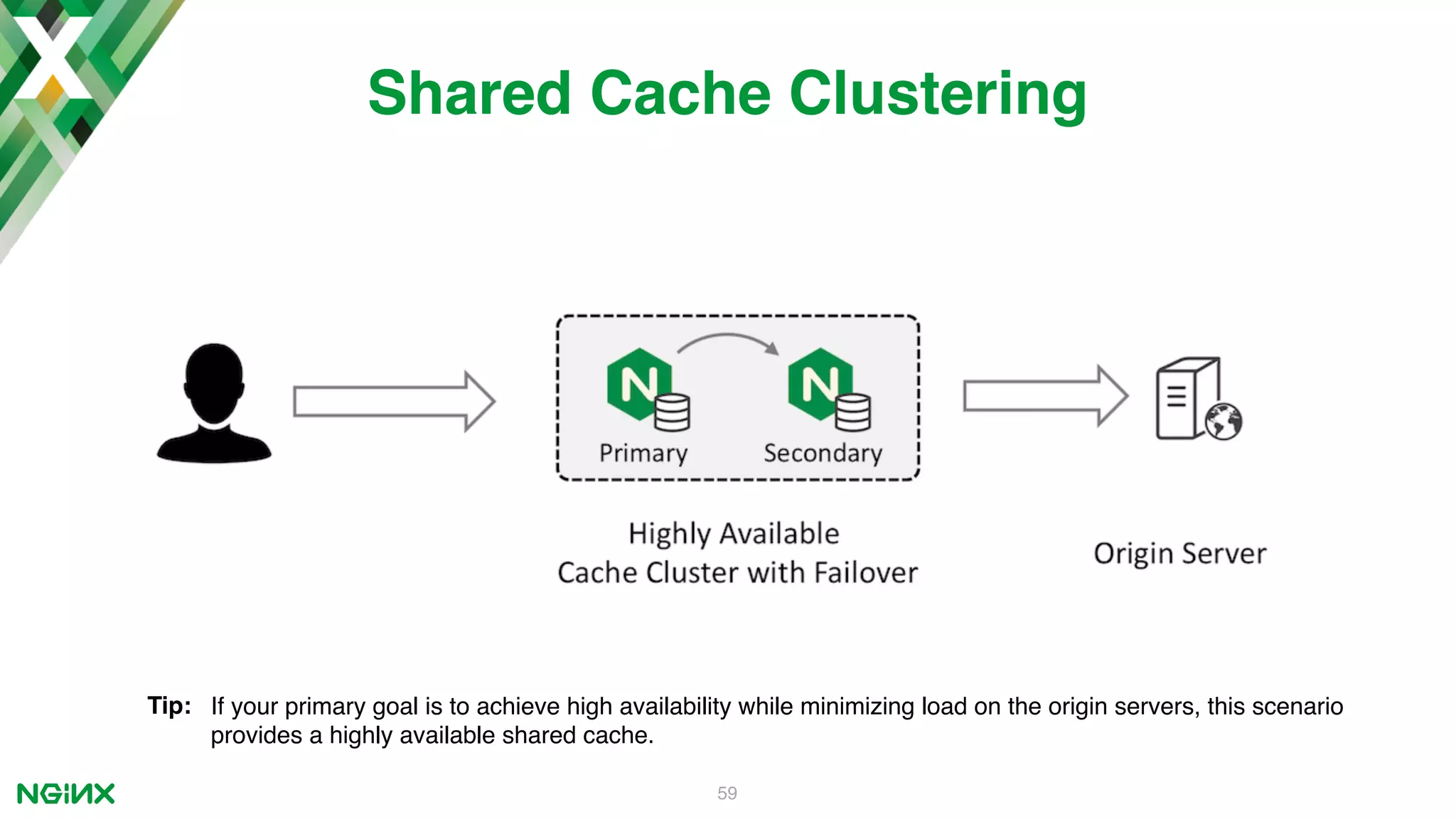

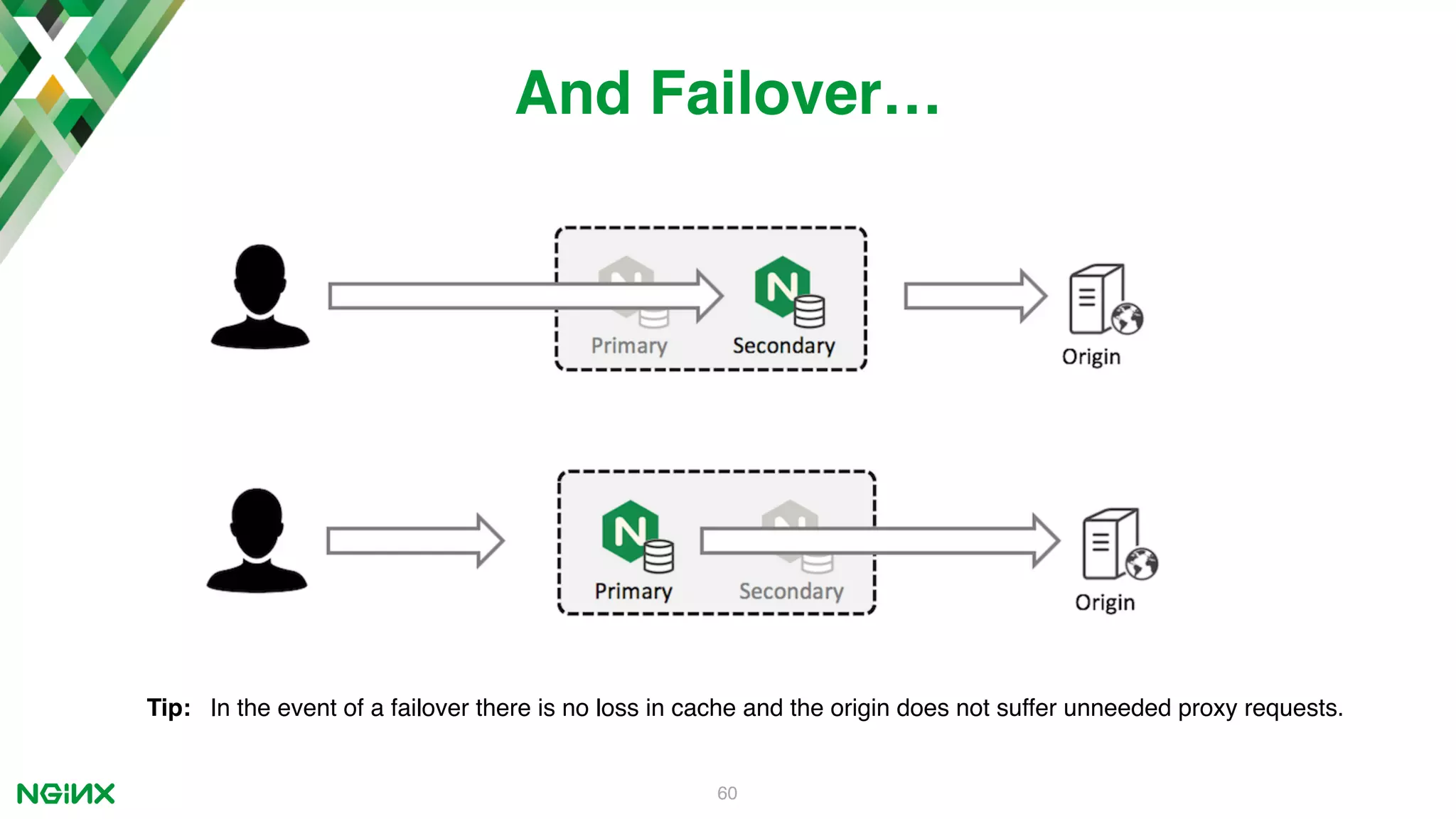

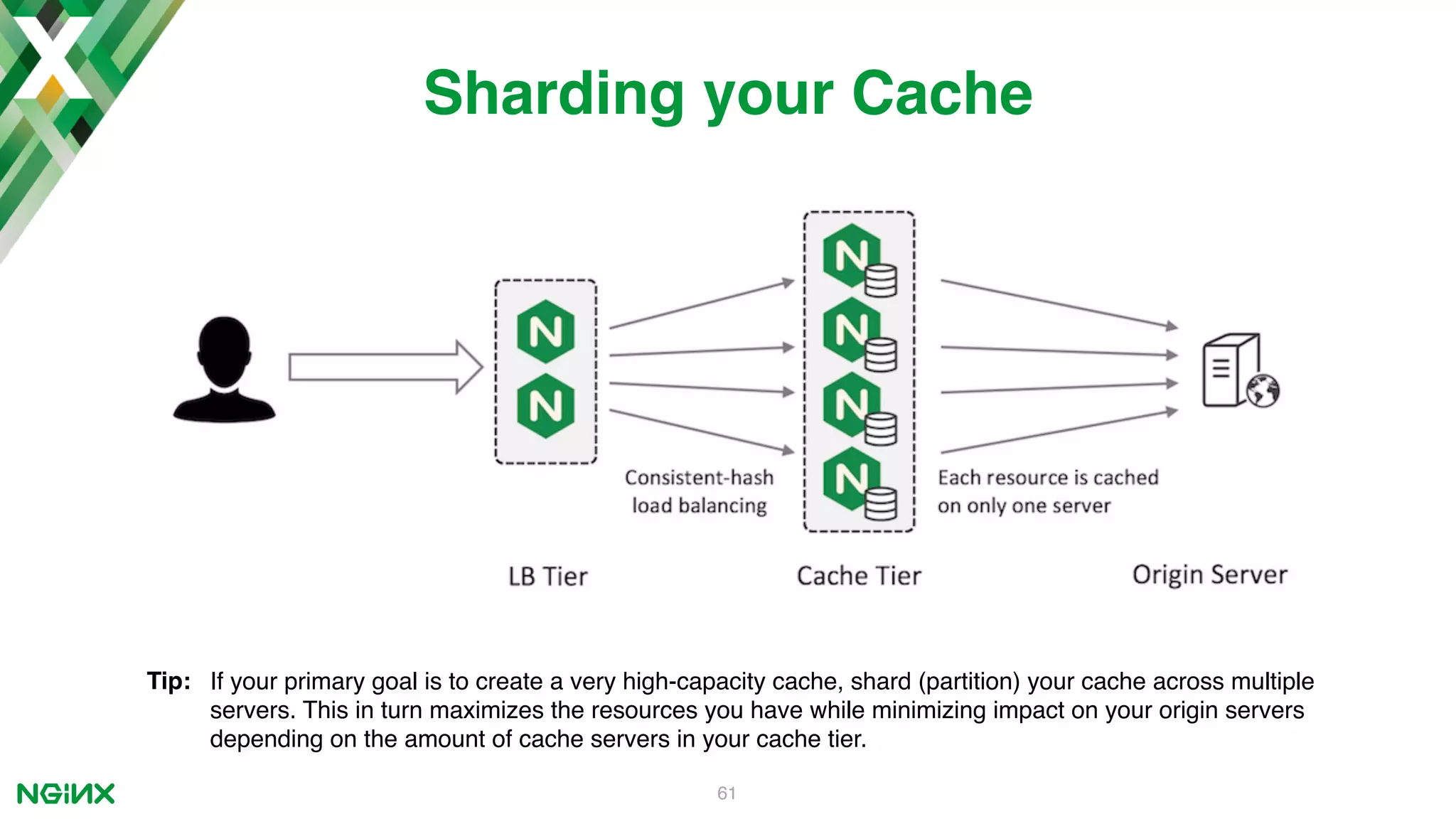

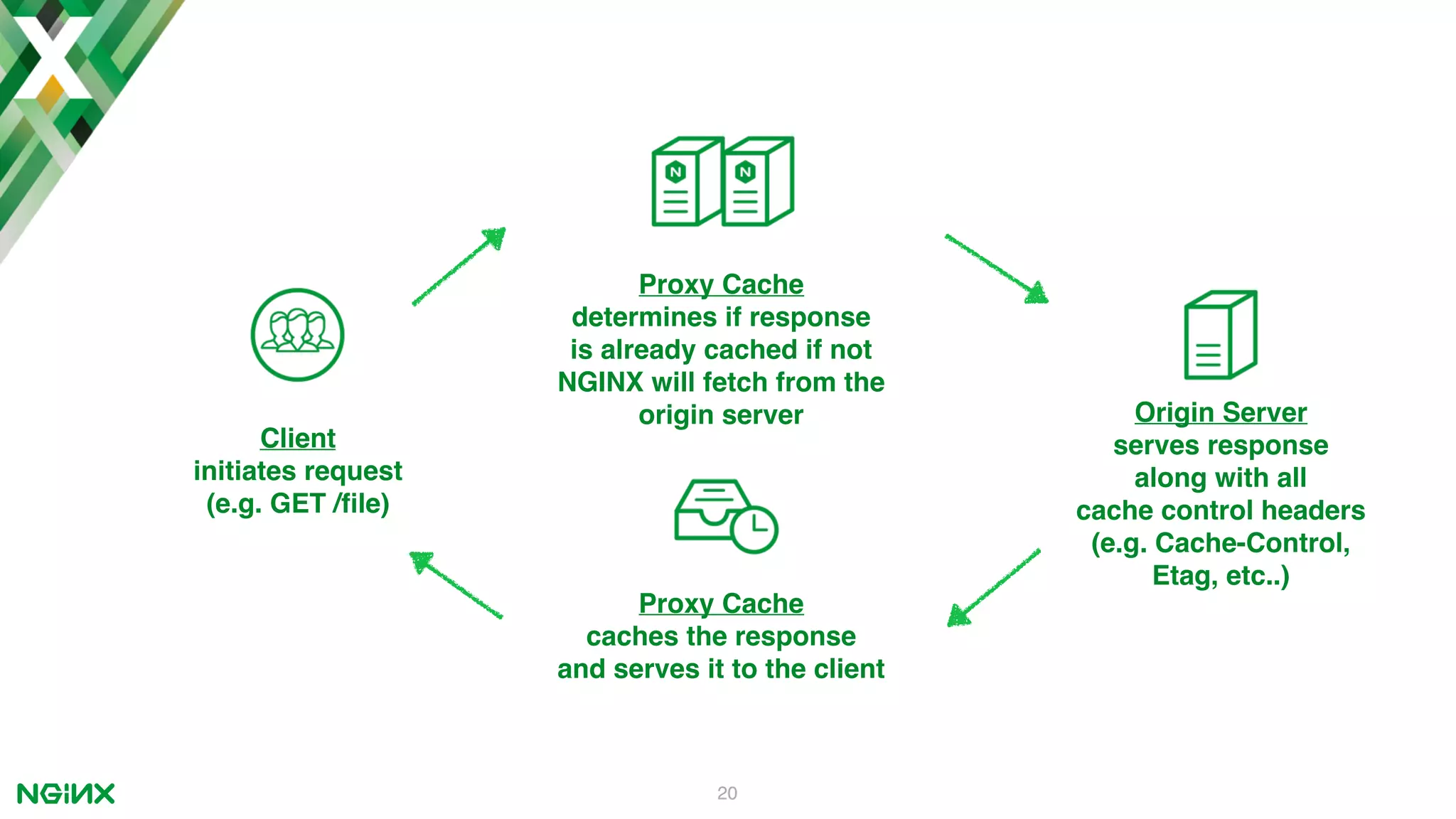

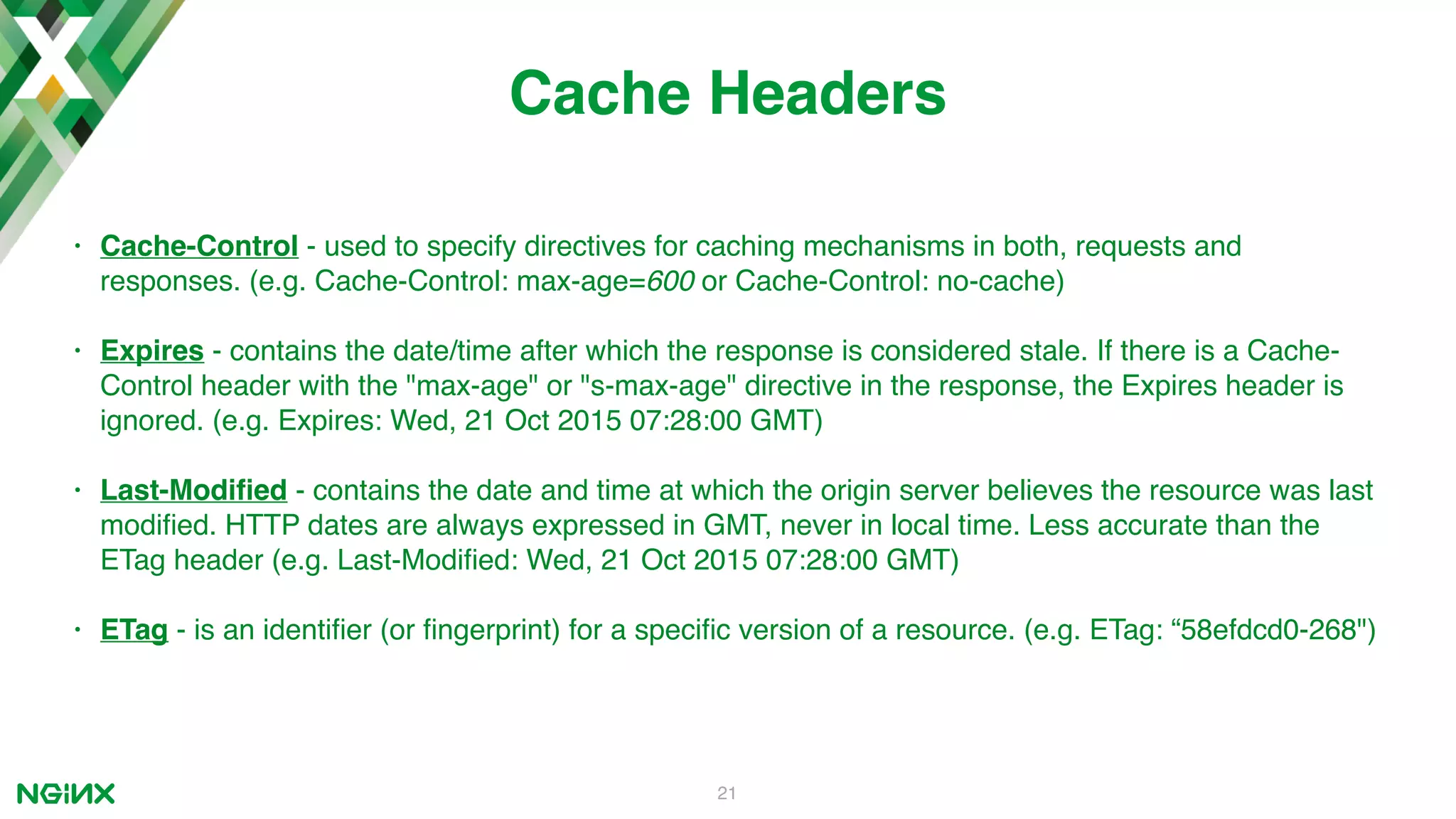

The document is a technical guide to using Nginx as a high-availability content cache. It covers enabling basic and advanced caching, micro-caching, and configuration tips for improved availability. It also explains how cache headers work and describes the architecture needed to manage a cache efficiently.

![9 user nginx; worker_processes auto; error_log /var/log/nginx/error.log notice; pid /var/run/nginx.pid; events { worker_connections 1024; } http { include /etc/nginx/mime.types; default_type application/octet-stream; log_format main '$remote_addr - $remote_user [$time_local] "$request" ' '$status $body_bytes_sent "$http_referer" ' '"$http_user_agent" "$http_x_forwarded_for"'; access_log /var/log/nginx/access.log main; upstream api-backends { server 10.0.1.11:8080; server 10.0.1.12:8080; } server { listen 10.0.1.10:80; server_name example.com; location / { root /usr/share/nginx/html; index index.html index.htm; } location ^~ /api { proxy_pass http://api-backends; } } include /path/to/more/virtual_servers/*.conf; } nginx.org/en/docs/dirindex.html http context server context events context main context stream context not shown… upstream context location context](https://image.slidesharecdn.com/nginxeffectivehacaching-170502202753/75/ITB2017-Nginx-Effective-High-Availability-Content-Caching-9-2048.jpg)
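
The slide's context labels are easier to follow once the configuration is laid out with indentation. The listing below is the same configuration as on the slide, reflowed, with comments marking where each context begins. The comments are added here for illustration; the `stream` context is left out, as on the slide.

```nginx
# main context: directives that sit outside any block
user  nginx;
worker_processes  auto;
error_log  /var/log/nginx/error.log notice;
pid        /var/run/nginx.pid;

events {                        # events context
    worker_connections  1024;
}

http {                          # http context
    include       /etc/nginx/mime.types;
    default_type  application/octet-stream;

    log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                      '$status $body_bytes_sent "$http_referer" '
                      '"$http_user_agent" "$http_x_forwarded_for"';
    access_log  /var/log/nginx/access.log  main;

    upstream api-backends {     # upstream context
        server 10.0.1.11:8080;
        server 10.0.1.12:8080;
    }

    server {                    # server context
        listen       10.0.1.10:80;
        server_name  example.com;

        location / {            # location context
            root   /usr/share/nginx/html;
            index  index.html index.htm;
        }

        location ^~ /api {      # location context
            proxy_pass http://api-backends;
        }
    }

    include /path/to/more/virtual_servers/*.conf;
}
```

Contexts nest: `upstream` and `server` live inside `http`, and `location` lives inside `server`.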

![10 user nginx; worker_processes auto; error_log /var/log/nginx/error.log notice; pid /var/run/nginx.pid; events { worker_connections 1024; } http { include /etc/nginx/mime.types; default_type application/octet-stream; log_format main '$remote_addr - $remote_user [$time_local] "$request" ' '$status $body_bytes_sent "$http_referer" ' '"$http_user_agent" "$http_x_forwarded_for"'; access_log /var/log/nginx/access.log main; upstream api-backends { server 10.0.1.11:8080; server 10.0.1.12:8080; } server { listen 10.0.1.10:80; server_name example.com; location / { root /usr/share/nginx/html; index index.html index.htm; } location ^~ /api { proxy_pass http://api-backends; } } include /path/to/more/virtual_servers/*.conf; } server directive location directive upstream directive events directive main directive nginx.org/en/docs/dirindex.html](https://image.slidesharecdn.com/nginxeffectivehacaching-170502202753/75/ITB2017-Nginx-Effective-High-Availability-Content-Caching-10-2048.jpg)

![11 user nginx; worker_processes auto; error_log /var/log/nginx/error.log notice; pid /var/run/nginx.pid; events { worker_connections 1024; } http { include /etc/nginx/mime.types; default_type application/octet-stream; log_format main '$remote_addr - $remote_user [$time_local] "$request" ' '$status $body_bytes_sent "$http_referer" ' '"$http_user_agent" "$http_x_forwarded_for"'; access_log /var/log/nginx/access.log main; upstream api-backends { server 10.0.1.11:8080; server 10.0.1.12:8080; } server { listen 10.0.1.10:80; server_name example.com; location / { root /usr/share/nginx/html; index index.html index.htm; } location ^~ /api { proxy_pass http://api-backends; } } include /path/to/more/virtual_servers/*.conf; } nginx.org/en/docs/dirindex.html parameter parameter parameter parameter](https://image.slidesharecdn.com/nginxeffectivehacaching-170502202753/75/ITB2017-Nginx-Effective-High-Availability-Content-Caching-11-2048.jpg)
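
The previous two slides distinguish directives from their parameters. The transcript does not say which four parameters the slide highlights, so the lines picked out below are an illustrative choice, taken from the same configuration.

```nginx
# Simple directive: a name, then space-separated parameters, ended by ";".
worker_processes auto;                        # one parameter: auto
error_log /var/log/nginx/error.log notice;    # two parameters: file and level

# Block directive: a name followed by a { } block. The block opens a new
# context that holds further directives.
events {
    worker_connections 1024;                  # one parameter: 1024
}
```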

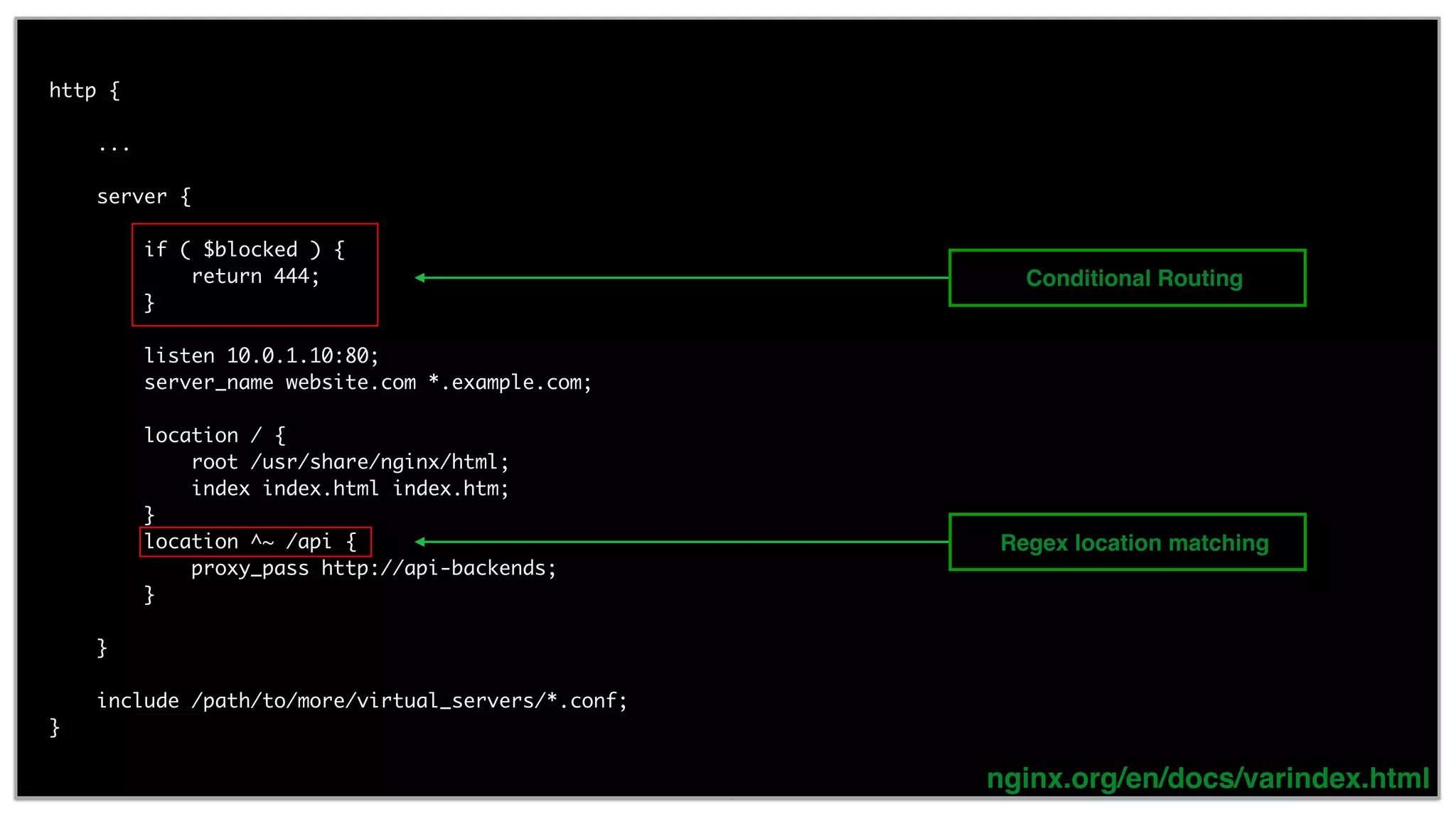

![13 user nginx; worker_processes auto; error_log /var/log/nginx/error.log notice; pid /var/run/nginx.pid; events { worker_connections 1024; } http { include /etc/nginx/mime.types; default_type application/octet-stream; log_format main '$remote_addr - $remote_user [$time_local] "$request" ' '$status $body_bytes_sent "$http_referer" ' '"$http_user_agent" "$http_x_forwarded_for"'; access_log /var/log/nginx/access.log main; upstream api-backends { server 10.0.1.11:8080; server 10.0.1.12:8080; } server { listen 10.0.1.10:80; server_name example.com; location / { root /usr/share/nginx/html; index index.html index.htm; } location ^~ /api { proxy_pass http://api-backends; } } include /path/to/more/virtual_servers/*.conf; } nginx.org/en/docs/varindex.html variables](https://image.slidesharecdn.com/nginxeffectivehacaching-170502202753/75/ITB2017-Nginx-Effective-High-Availability-Content-Caching-13-2048.jpg)
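
The variables on this slide all appear in the `log_format` directive. The fragment below repeats that directive with a comment for each variable; the descriptions follow the variable index the slide points to (nginx.org/en/docs/varindex.html) and are added here for illustration.

```nginx
# Fragment: belongs inside the http context.
# Each $name is replaced with a per-request value when the log line is written.
#
#   $remote_addr            client address
#   $remote_user            user name supplied with Basic authentication
#   $time_local             local time in Common Log Format
#   $request                full original request line
#   $status                 response status code
#   $body_bytes_sent        bytes sent to the client, not counting the response header
#   $http_referer           "Referer" request header
#   $http_user_agent        "User-Agent" request header
#   $http_x_forwarded_for   "X-Forwarded-For" request header
log_format  main  '$remote_addr - $remote_user [$time_local] "$request" '
                  '$status $body_bytes_sent "$http_referer" '
                  '"$http_user_agent" "$http_x_forwarded_for"';

access_log  /var/log/nginx/access.log  main;
```

Any request header can be read this way: `$http_` followed by the header name in lowercase, with dashes replaced by underscores.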

![14 http { include /etc/nginx/mime.types; default_type application/octet-stream; log_format main '$remote_addr - $remote_user [$time_local] "$request" ' '$status $body_bytes_sent "$http_referer" ' '"$http_user_agent" "$http_x_forwarded_for"'; access_log /var/log/nginx/access.log main; map $http_user_agent $dynamic { "~*Mobile" mobile.example.com; default desktop.example.com; } server { listen 10.0.1.10:80; server_name example.com; location / { root /usr/share/nginx/html; index index.html index.htm; } location ^~ /api { proxy_pass http://$dynamic; } } include /path/to/more/virtual_servers/*.conf; } nginx.org/en/docs/varindex.html variable map (dynamic)](https://image.slidesharecdn.com/nginxeffectivehacaching-170502202753/75/ITB2017-Nginx-Effective-High-Availability-Content-Caching-14-2048.jpg)
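
The `map` block on this slide defines a new variable, `$dynamic`, whose value depends on `$http_user_agent`. A reflowed sketch of just that part follows. Note the plain ASCII double quotes around the regex. The `resolver` line is an assumption that is not on the slide: when `proxy_pass` contains a variable and the resulting hostname is not a defined upstream group, nginx resolves the name at request time and needs a resolver to do so. The address `10.0.1.2` is hypothetical.

```nginx
# Fragment: belongs inside the http context.
map $http_user_agent $dynamic {
    "~*Mobile"  mobile.example.com;     # "~*" = case-insensitive regex match
    default     desktop.example.com;    # used when no other entry matches
}

server {
    listen       10.0.1.10:80;
    server_name  example.com;

    # Assumption (not on the slide): DNS server used to resolve the
    # hostname that $dynamic expands to. The address is hypothetical.
    resolver 10.0.1.2;

    location ^~ /api {
        # $dynamic is evaluated per request, when this directive uses it.
        proxy_pass http://$dynamic;
    }
}
```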

![23 proxy_cache_path Syntax: proxy_cache_path path [levels=levels] [use_temp_path=on|off] keys_zone=name:size [inactive=time] [max_size=size] [manager_files=number] [manager_sleep=time] [manager_threshold=time] [loader_files=number] [loader_sleep=time] [loader_threshold=time] [purger=on|off] [purger_files=number] [purger_sleep=time] [purger_threshold=time]; Default: - Context: http Documentation http { proxy_cache_path /tmp/nginx/micro_cache/ levels=1:2 keys_zone=large_cache:10m max_size=300g inactive=14d; ... } Definition: Sets the path and other parameters of a cache. Cache data are stored in files. The file name in a cache is a result of applying the MD5 function to the cache key.](https://image.slidesharecdn.com/nginxeffectivehacaching-170502202753/75/ITB2017-Nginx-Effective-High-Availability-Content-Caching-23-2048.jpg)
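
To make the slide's example easier to read, the sketch below repeats its `proxy_cache_path` line with one comment per parameter. The `upstream` and `server` blocks are assumptions carried over from the earlier slides, and the `proxy_cache` line that attaches the zone to a location is likewise added here for illustration; this slide shows only the `proxy_cache_path` directive.

```nginx
# Fragment: belongs inside the http context.

# /tmp/nginx/micro_cache/   directory where cached responses are stored as files
# levels=1:2                two-level subdirectory hierarchy under that path
# keys_zone=large_cache:10m shared memory zone "large_cache", 10 MB, holding cache keys
# max_size=300g             upper bound on disk use; least recently used entries
#                           are removed when it is exceeded
# inactive=14d              entries not accessed for 14 days are removed,
#                           whether or not they are still fresh
proxy_cache_path /tmp/nginx/micro_cache/ levels=1:2
                 keys_zone=large_cache:10m max_size=300g inactive=14d;

# Assumption: upstream group and server taken from the earlier slides.
upstream api-backends {
    server 10.0.1.11:8080;
    server 10.0.1.12:8080;
}

server {
    listen       10.0.1.10:80;
    server_name  example.com;

    location ^~ /api {
        proxy_pass  http://api-backends;
        proxy_cache large_cache;    # assumption: enables caching using the zone above
    }
}
```

The `keys_zone` size limits how many keys fit in memory, while `max_size` limits how much response data is kept on disk; the two are sized independently.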

![26 proxy_cache_valid Syntax: proxy_cache_valid [code ...] time; Default: - Context: http, server, location Documentation location ~* .(jpg|png|gif|ico)$ { ... proxy_cache_valid any 1d; } Definition: Sets caching time for different response codes.](https://image.slidesharecdn.com/nginxeffectivehacaching-170502202753/75/ITB2017-Nginx-Effective-High-Availability-Content-Caching-26-2048.jpg)
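
A slightly fuller sketch of the slide's `location` block follows. The `proxy_pass` and `proxy_cache` lines fill in the slide's `...` with assumptions (the upstream group and cache zone named on earlier slides), and the per-status lines after the slide's own `any 1d` rule are illustrative values rather than the author's. The pattern escapes the dot (`\.`) so that it matches a literal period before the file extension; that escaping is a choice of this sketch.

```nginx
# Fragment: belongs inside a server block.
location ~* \.(jpg|png|gif|ico)$ {
    proxy_pass  http://api-backends;   # assumption: upstream from the earlier slides
    proxy_cache large_cache;           # assumption: zone from the proxy_cache_path slide

    # As on the slide: cache every response, whatever its status, for one day.
    proxy_cache_valid any 1d;

    # Illustrative alternative (values are assumptions): different lifetimes
    # per status code. Uncomment these and remove the line above to use it.
    # proxy_cache_valid 200 302 1d;
    # proxy_cache_valid 404     1m;
}
```

When only a time is given, with no status codes, nginx caches 200, 301, and 302 responses. Caching headers sent by the upstream, such as `Cache-Control`, `Expires`, and `X-Accel-Expires`, take priority over `proxy_cache_valid`.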