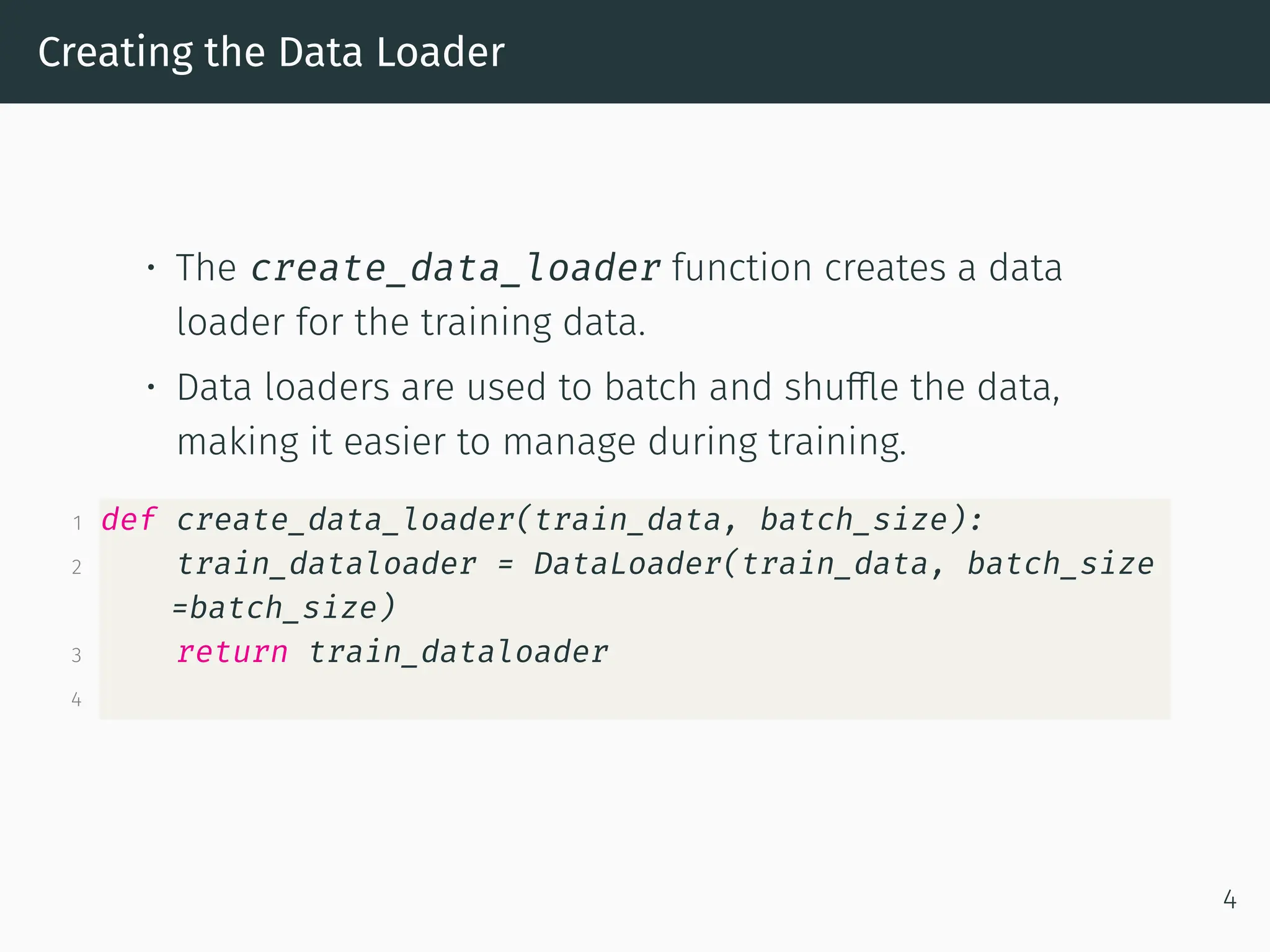

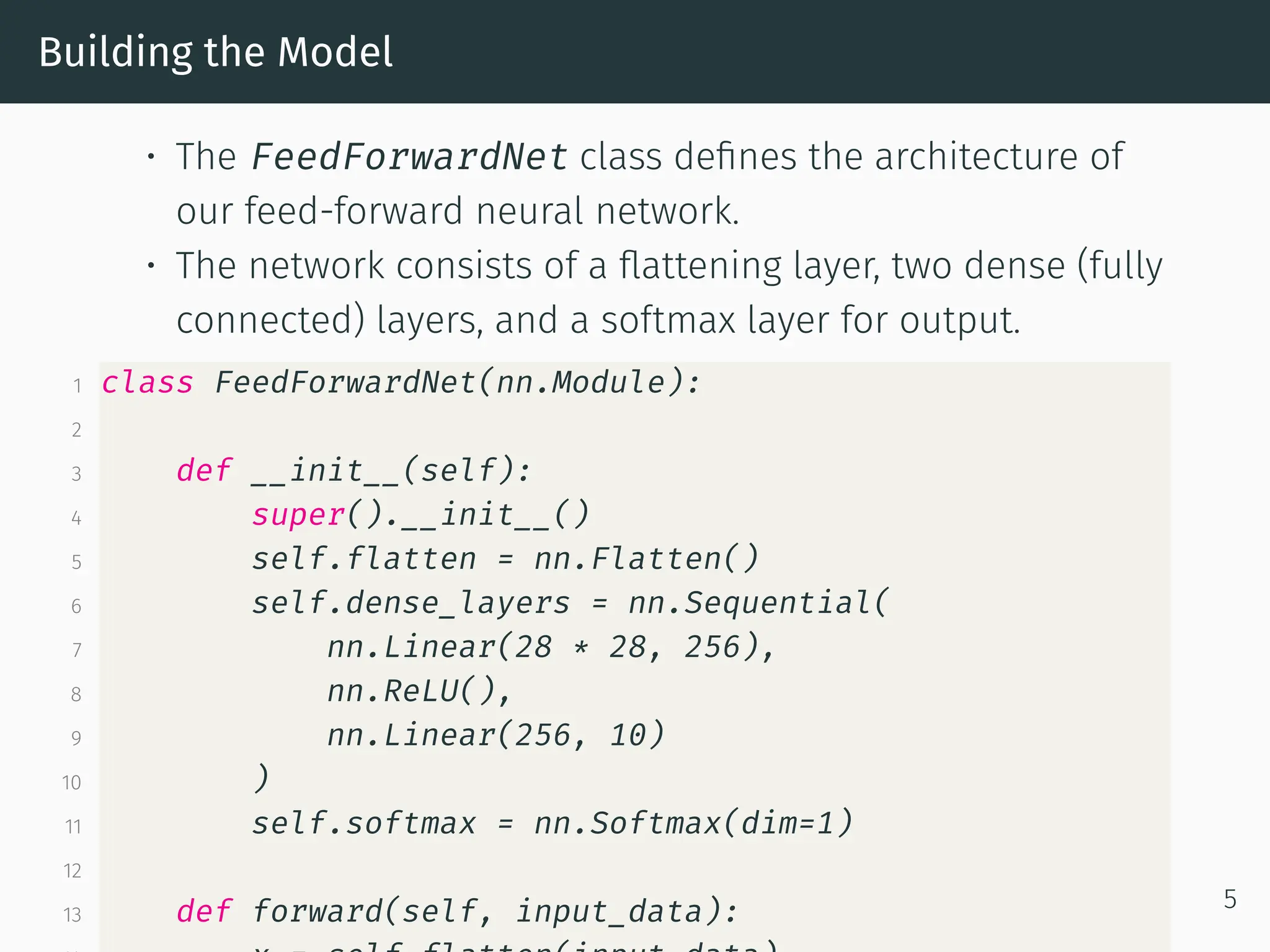

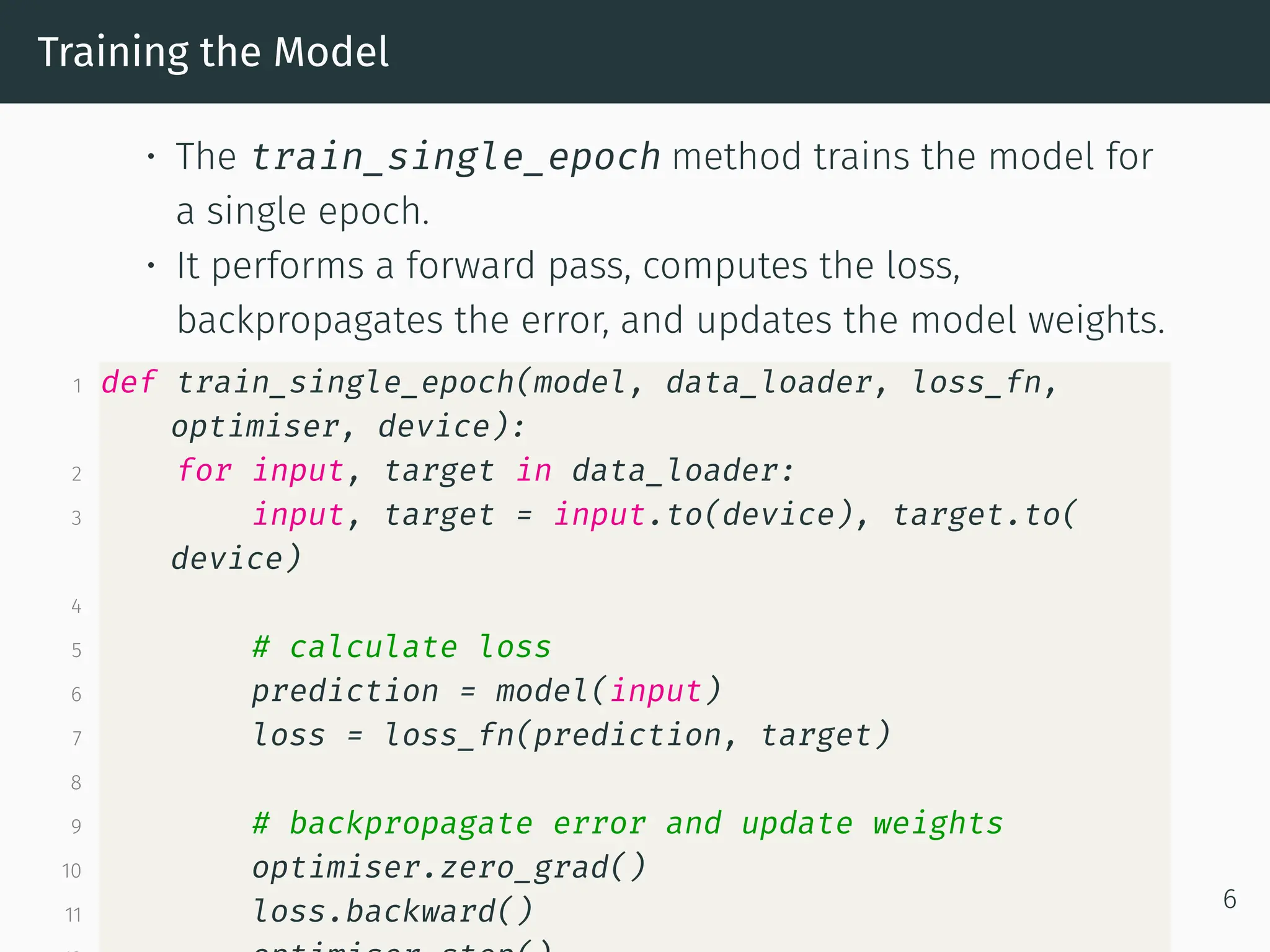

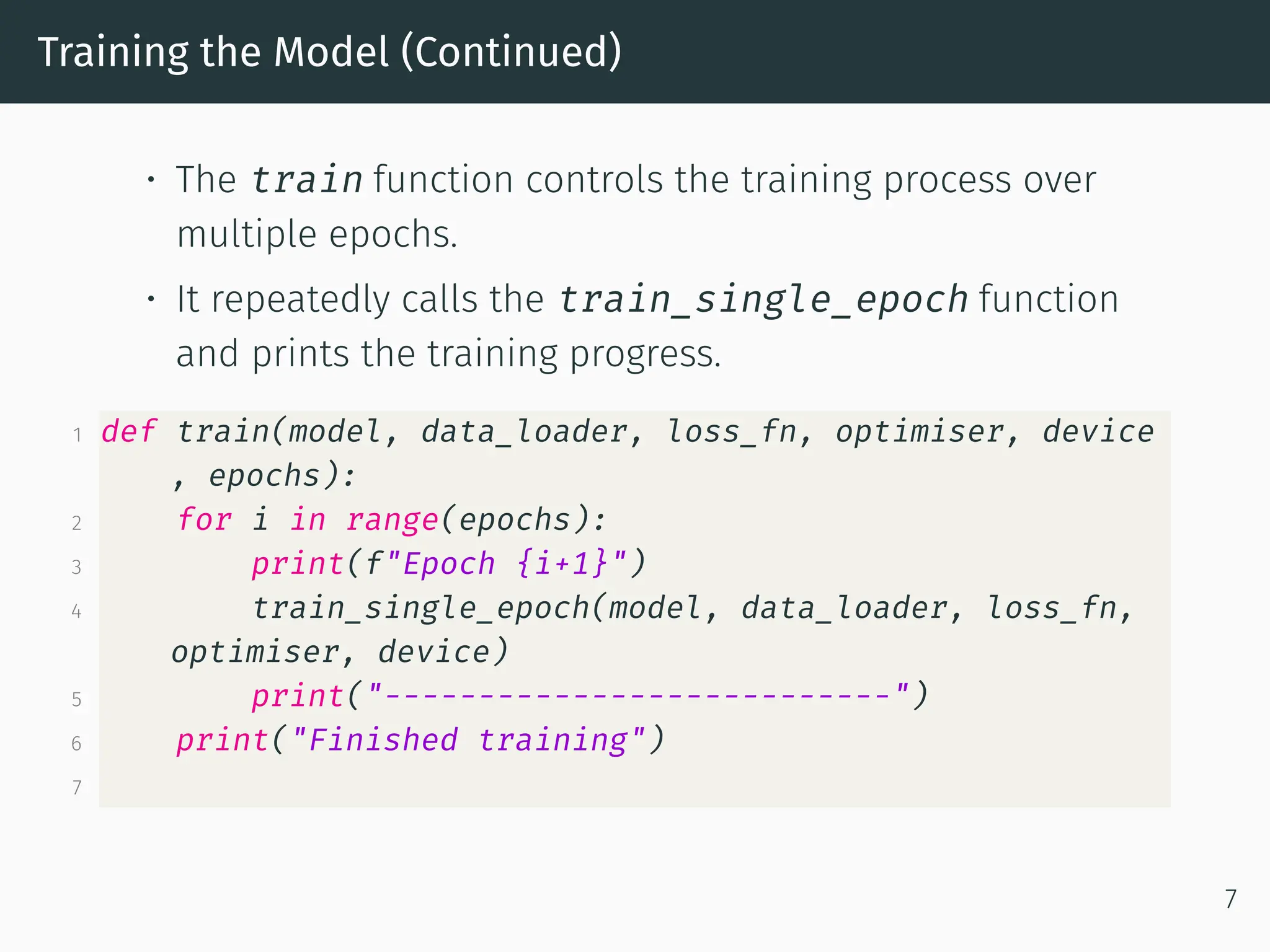

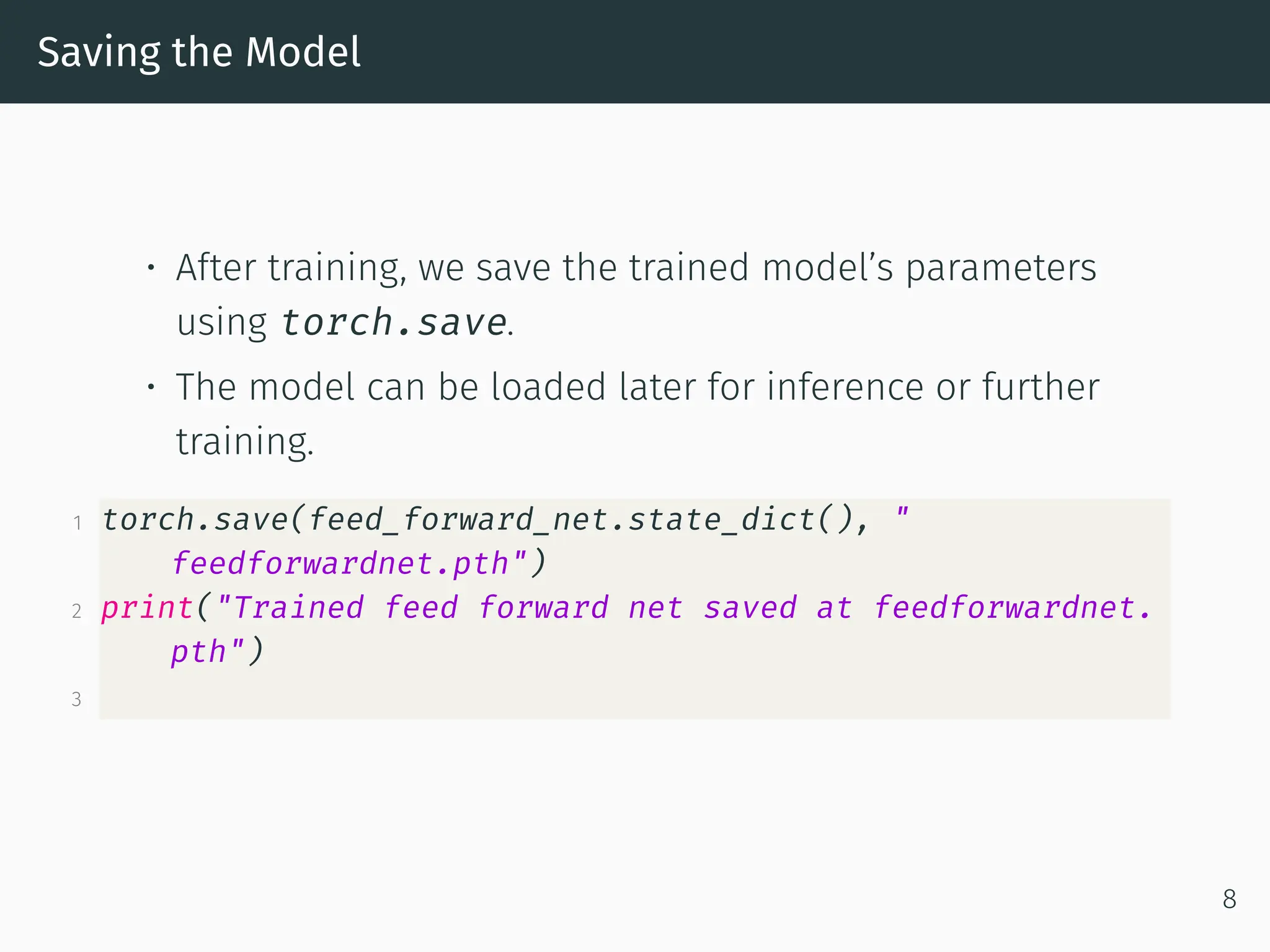

This document walks through implementing and training a feed-forward neural network in PyTorch to classify handwritten digits from the MNIST dataset. It covers downloading the dataset, creating data loaders, building the model, training it, and saving the trained model, with a code snippet for each step.
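The five steps above can be sketched end to end as follows. This is a minimal sketch under stated assumptions, not the code shown in the screenshots: the hidden-layer size (128), the Adam optimizer, learning rate, batch size, epoch count, the `./data` download directory, the output file `mnist_ffn.pt`, and the helper names `get_loaders`, `FeedForwardNet`, `train_one_epoch`, `evaluate`, and `main` are all hypothetical choices made for illustration.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader

# Illustrative hyperparameters (assumptions, not taken from the original)
BATCH_SIZE = 64
LEARNING_RATE = 1e-3
EPOCHS = 5


def get_loaders(root="./data", batch_size=BATCH_SIZE):
    """Step 1 and 2: download MNIST (if missing) and wrap it in DataLoaders."""
    # Imported here so the model/training helpers work without torchvision.
    from torchvision import datasets, transforms

    transform = transforms.Compose([
        transforms.ToTensor(),                       # PIL image -> float tensor in [0, 1], shape (1, 28, 28)
        transforms.Normalize((0.1307,), (0.3081,)),  # commonly used MNIST mean/std
    ])
    train_set = datasets.MNIST(root, train=True, download=True, transform=transform)
    test_set = datasets.MNIST(root, train=False, download=True, transform=transform)
    train_loader = DataLoader(train_set, batch_size=batch_size, shuffle=True)
    test_loader = DataLoader(test_set, batch_size=batch_size, shuffle=False)
    return train_loader, test_loader


class FeedForwardNet(nn.Module):
    """Step 3: a fully connected network, 784 -> hidden -> 10 logits."""

    def __init__(self, input_size=28 * 28, hidden_size=128, num_classes=10):
        super().__init__()
        self.net = nn.Sequential(
            nn.Flatten(),                         # (N, 1, 28, 28) -> (N, 784)
            nn.Linear(input_size, hidden_size),
            nn.ReLU(),
            nn.Linear(hidden_size, num_classes),  # raw logits; CrossEntropyLoss applies log-softmax itself
        )

    def forward(self, x):
        return self.net(x)


def train_one_epoch(model, loader, optimizer, loss_fn, device):
    """Step 4: one pass over the training data; returns the mean loss per sample."""
    model.train()
    total_loss = 0.0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        optimizer.zero_grad()                     # clear gradients from the previous batch
        loss = loss_fn(model(images), labels)
        loss.backward()                           # backpropagate
        optimizer.step()                          # update the weights
        total_loss += loss.item() * images.size(0)
    return total_loss / len(loader.dataset)


@torch.no_grad()
def evaluate(model, loader, device):
    """Fraction of samples whose highest-scoring class matches the label."""
    model.eval()
    correct = 0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        correct += (model(images).argmax(dim=1) == labels).sum().item()
    return correct / len(loader.dataset)


def main(epochs=EPOCHS, path="mnist_ffn.pt"):
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    train_loader, test_loader = get_loaders()
    model = FeedForwardNet().to(device)
    optimizer = torch.optim.Adam(model.parameters(), lr=LEARNING_RATE)
    loss_fn = nn.CrossEntropyLoss()
    for epoch in range(epochs):
        loss = train_one_epoch(model, train_loader, optimizer, loss_fn, device)
        acc = evaluate(model, test_loader, device)
        print(f"epoch {epoch + 1}: train loss {loss:.4f}, test accuracy {acc:.4f}")
    # Step 5: save only the learned parameters (the state_dict)
    torch.save(model.state_dict(), path)
    return model
```

Calling `main()` downloads MNIST into `./data` on first use, trains the network, prints the test accuracy after each epoch, and writes the weights to disk. Saving the `state_dict` rather than the whole model object is the approach the PyTorch documentation recommends; to reload, construct `FeedForwardNet()` and call `model.load_state_dict(torch.load("mnist_ffn.pt"))`.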