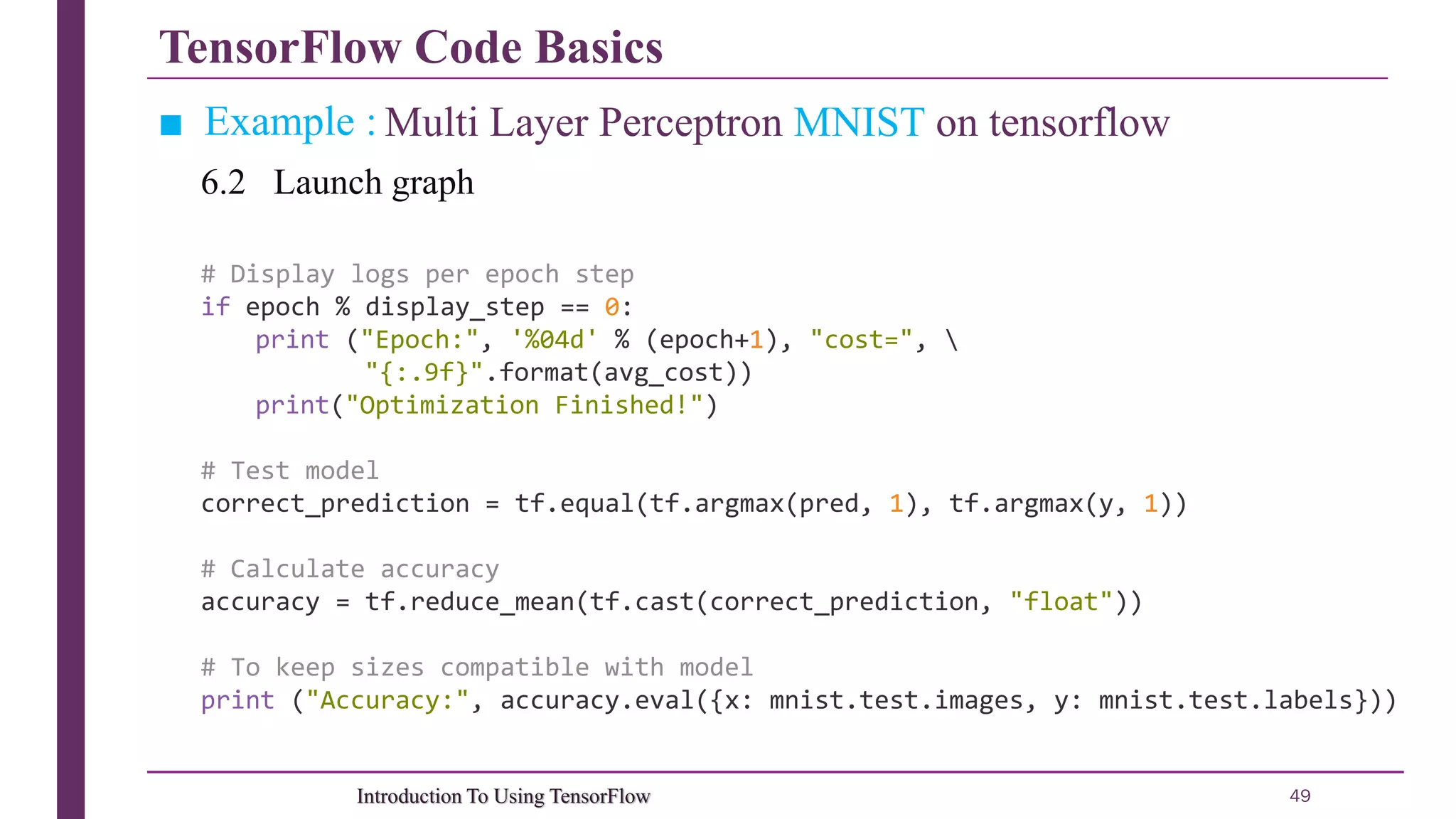

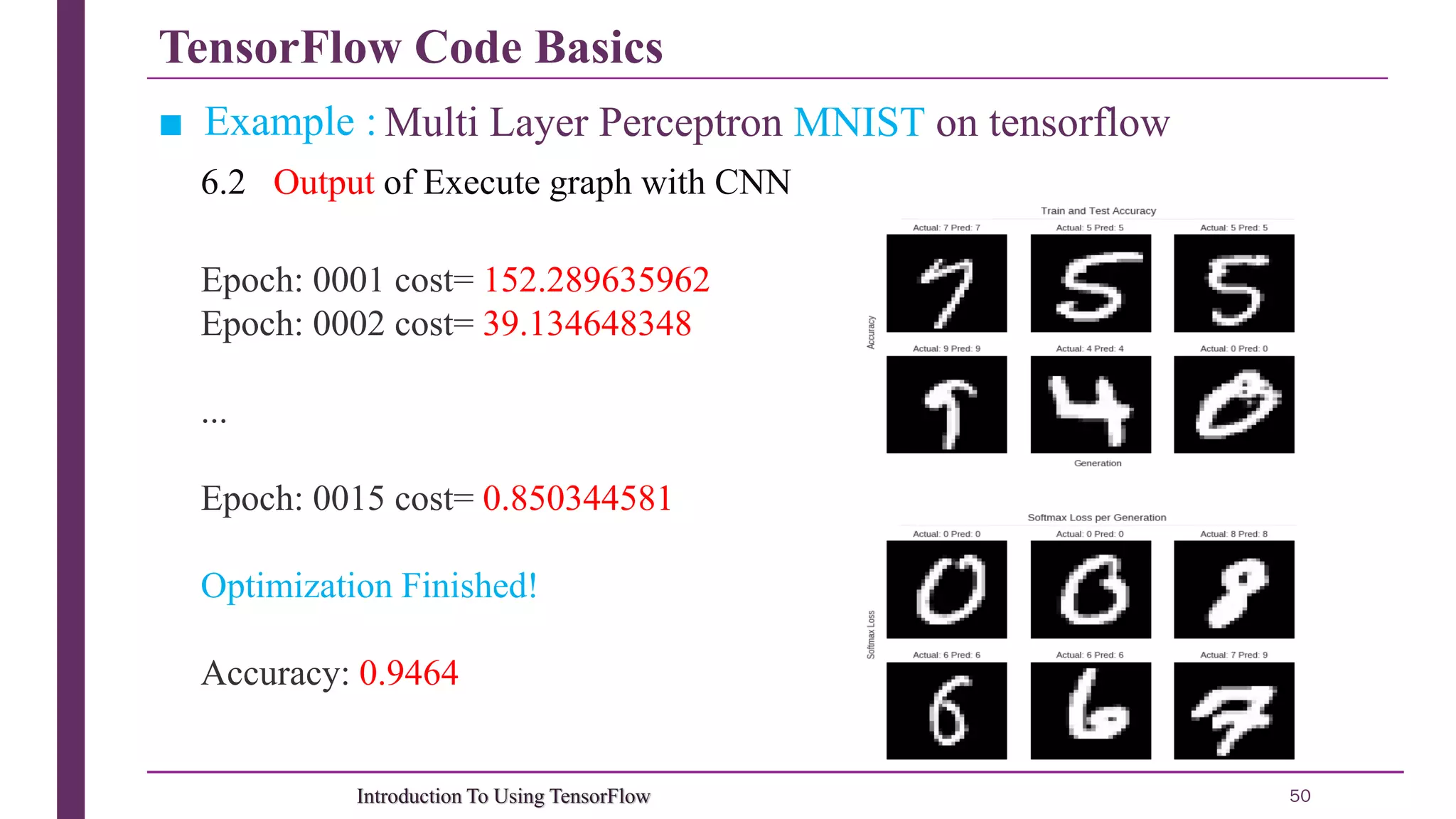

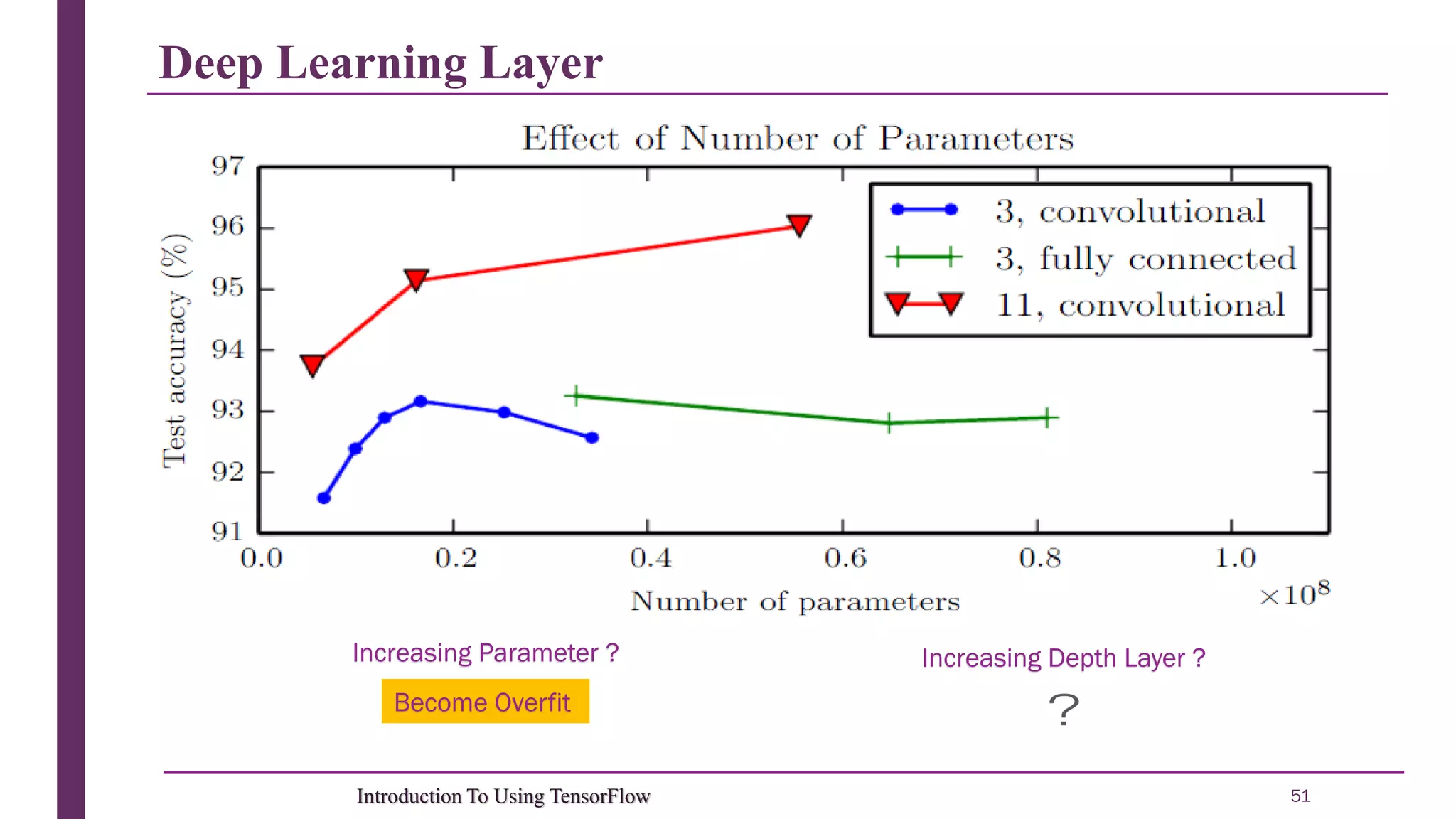

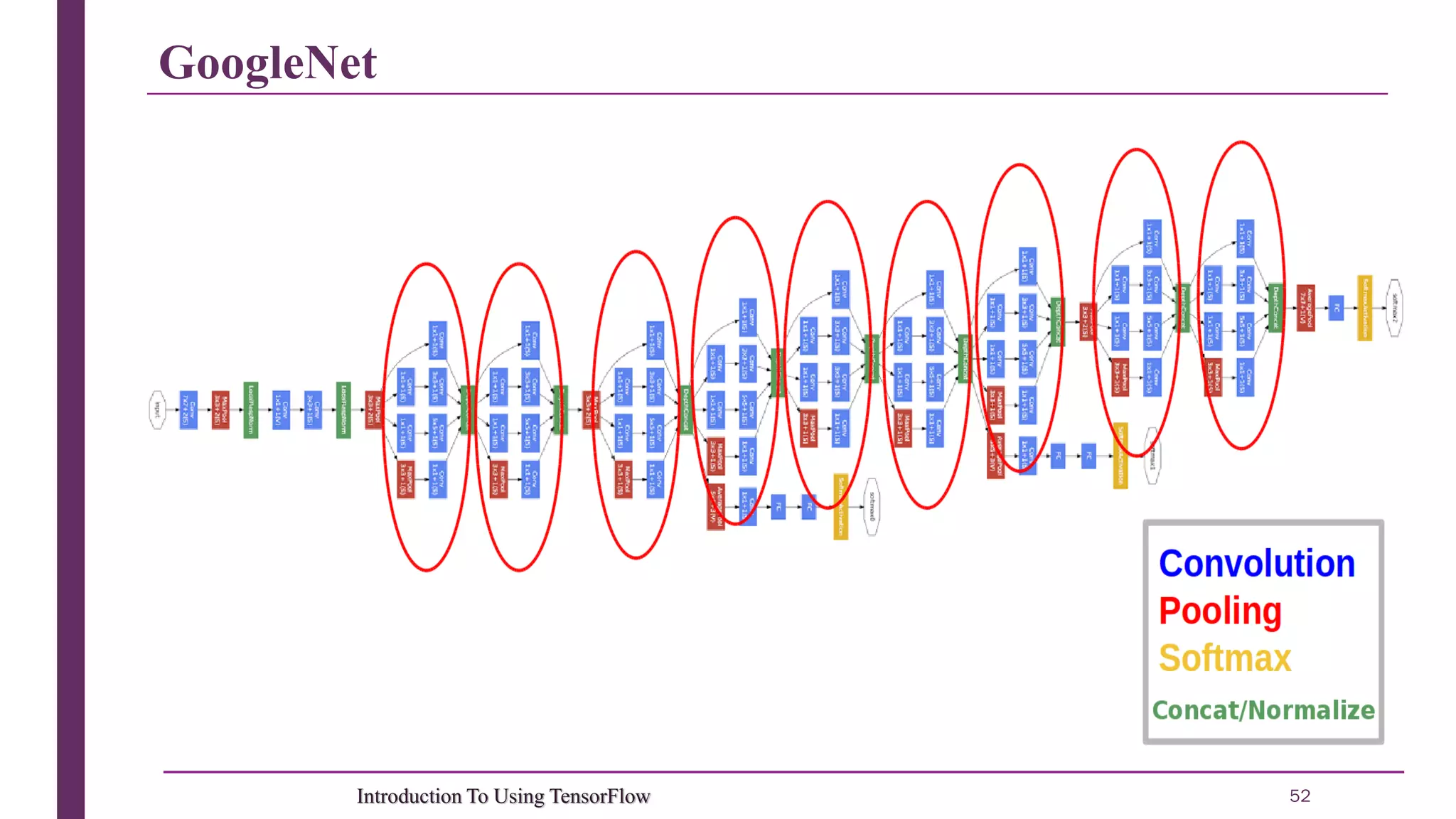

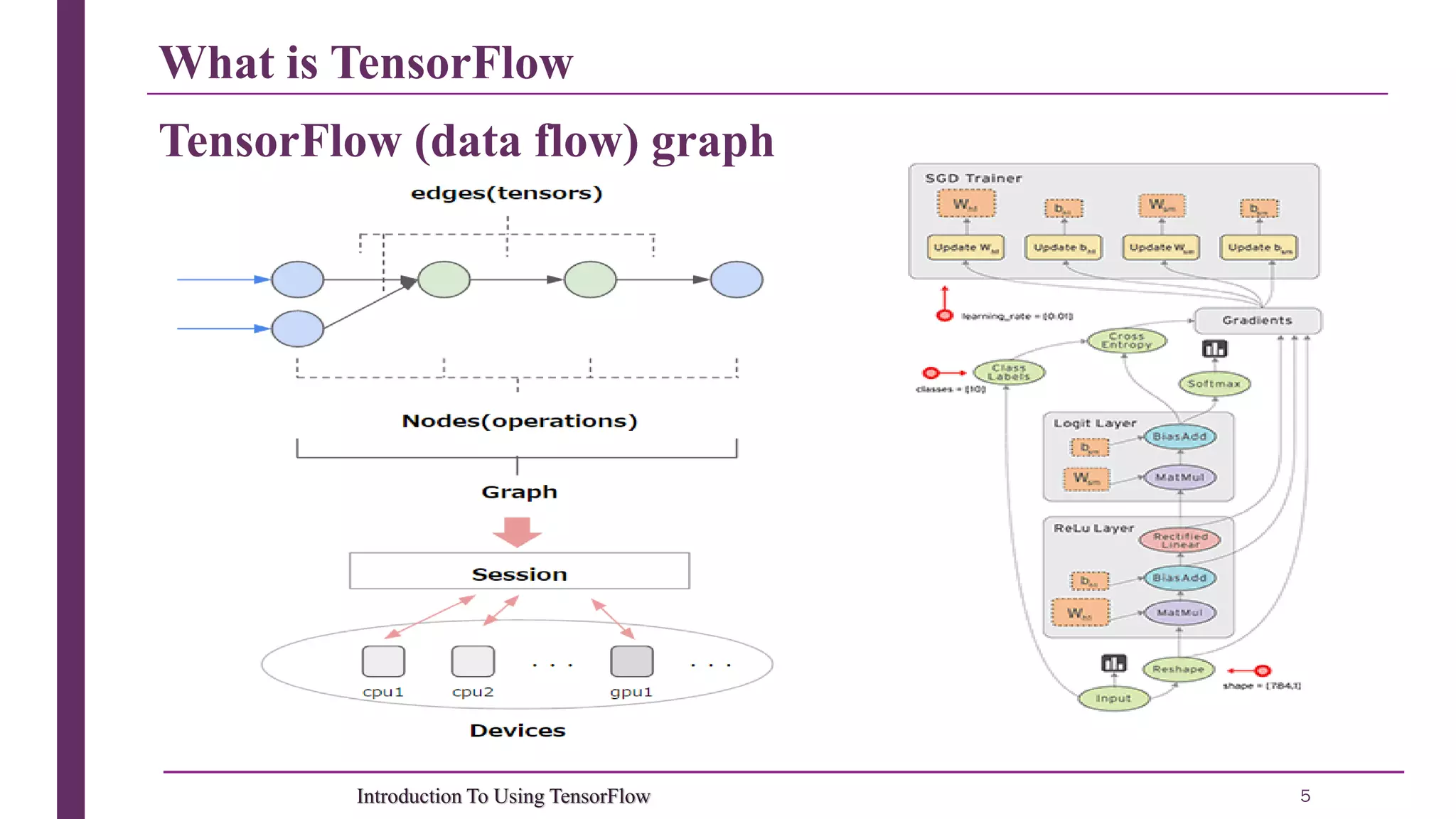

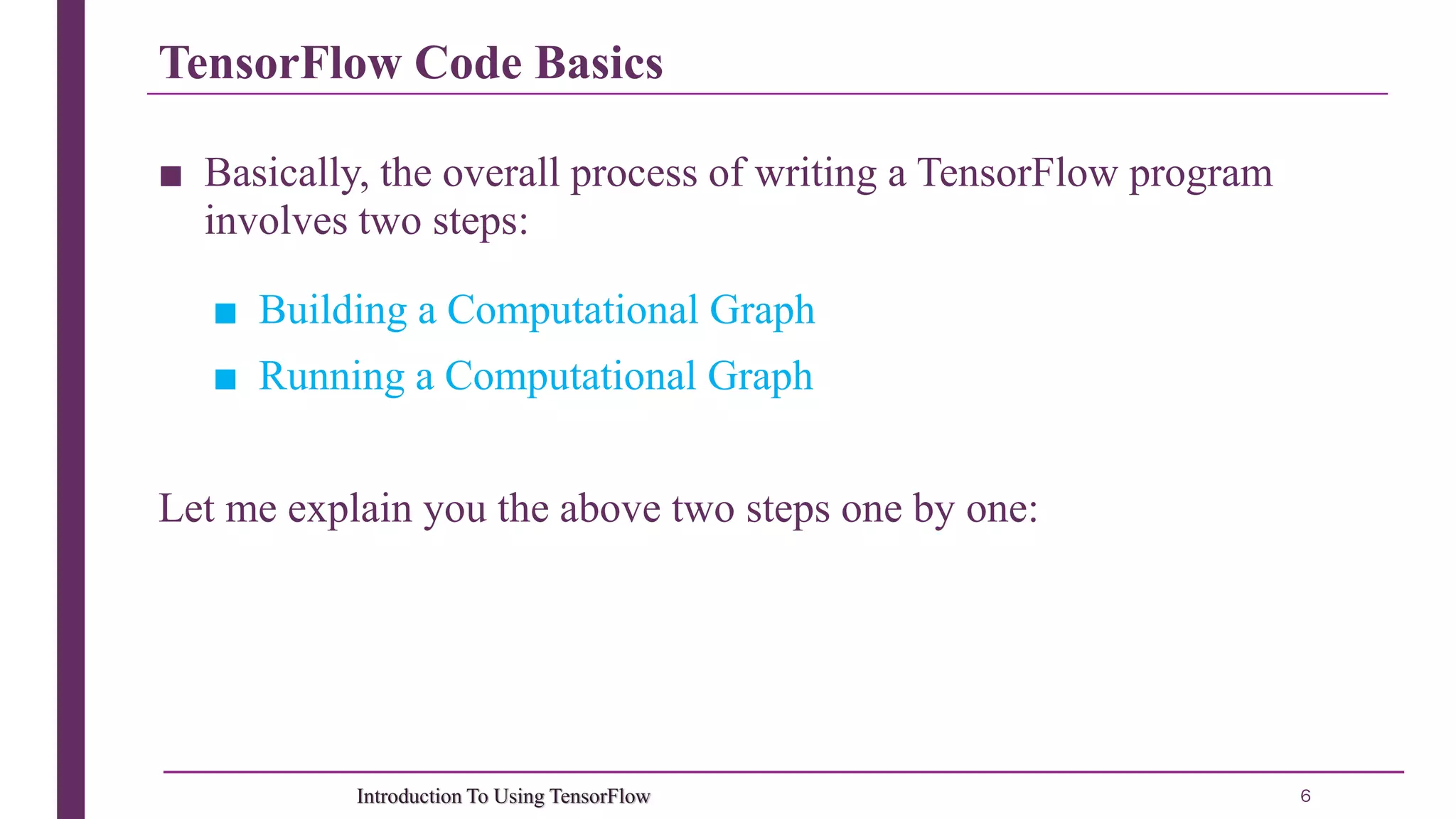

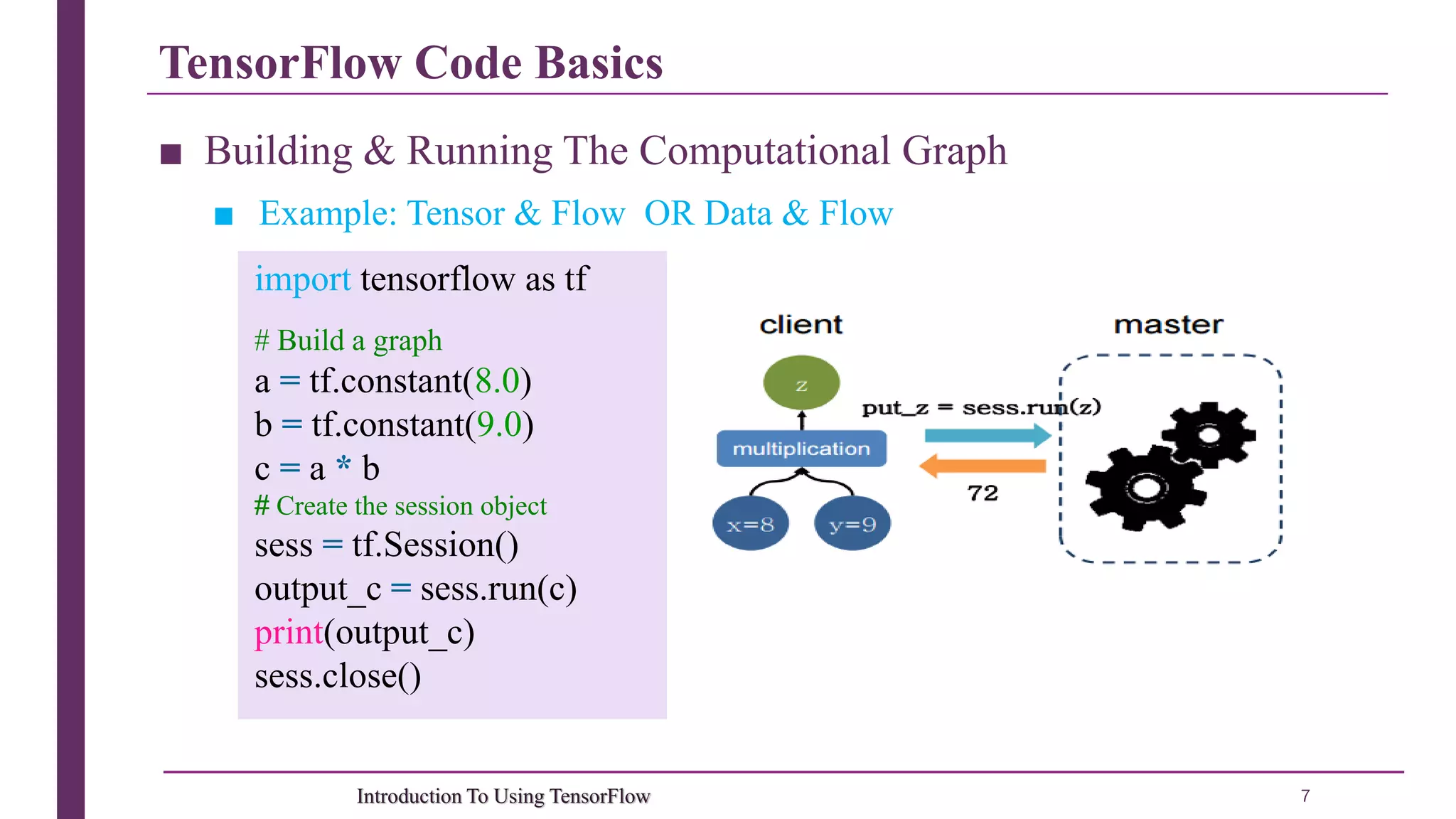

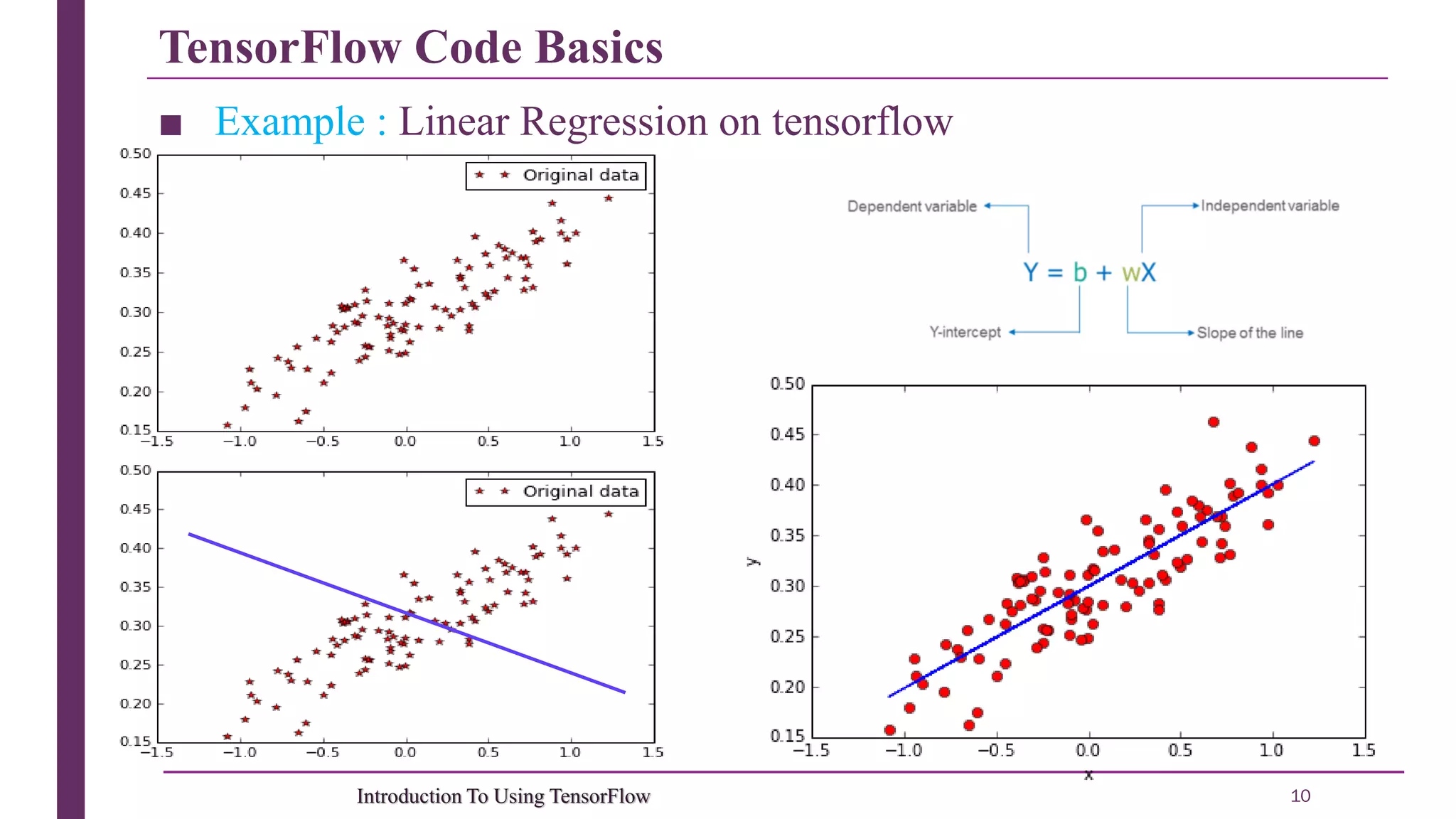

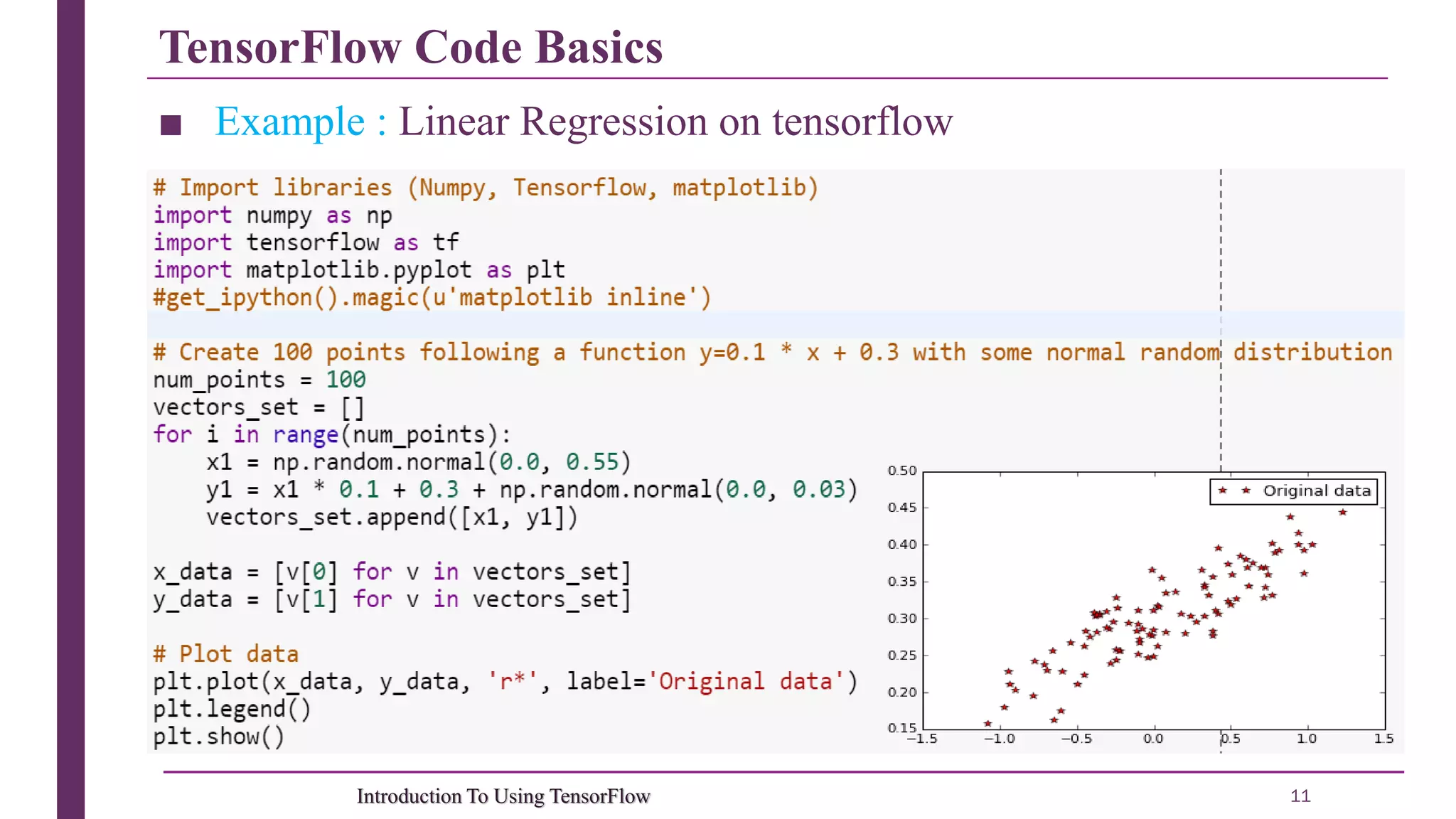

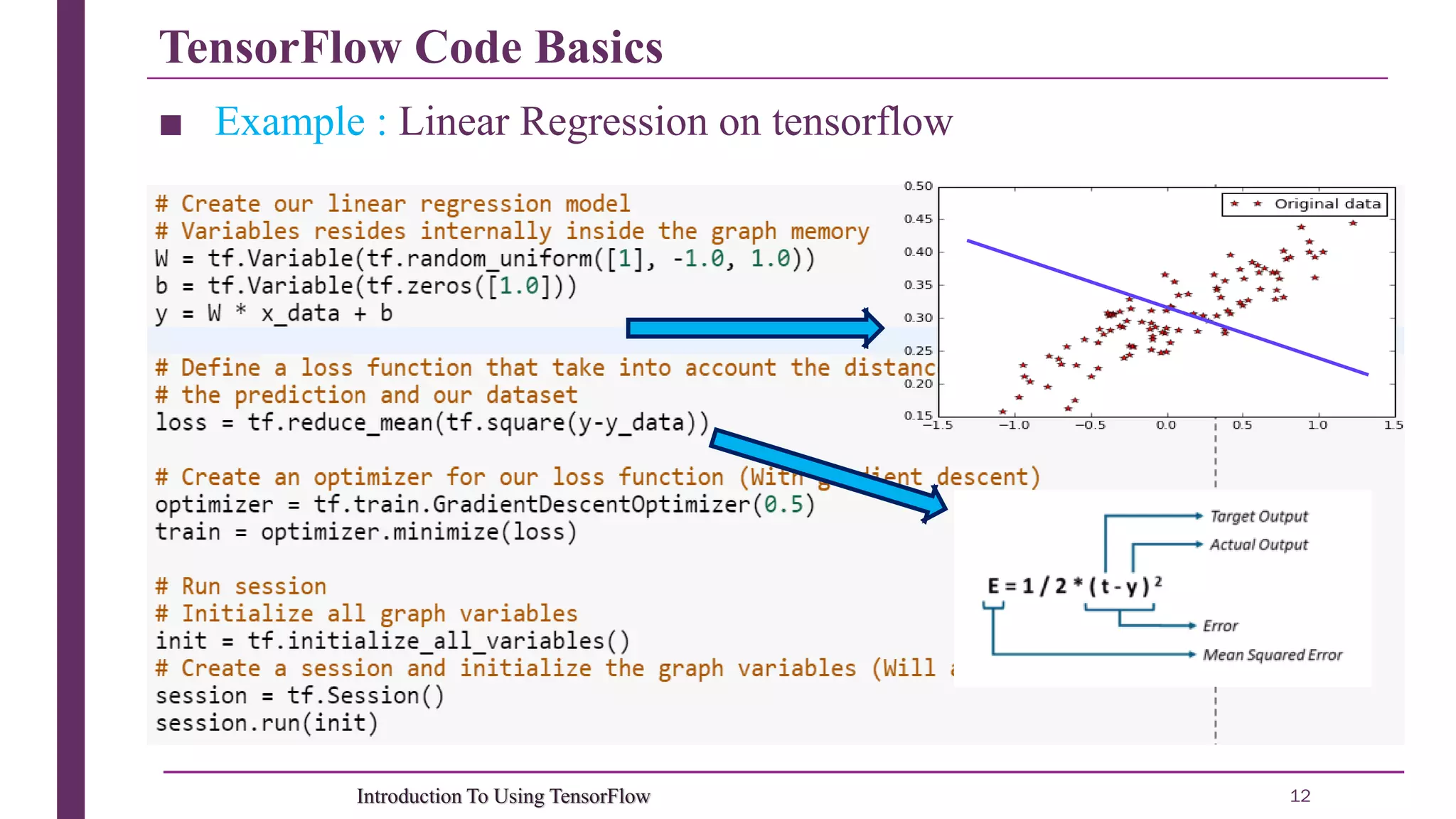

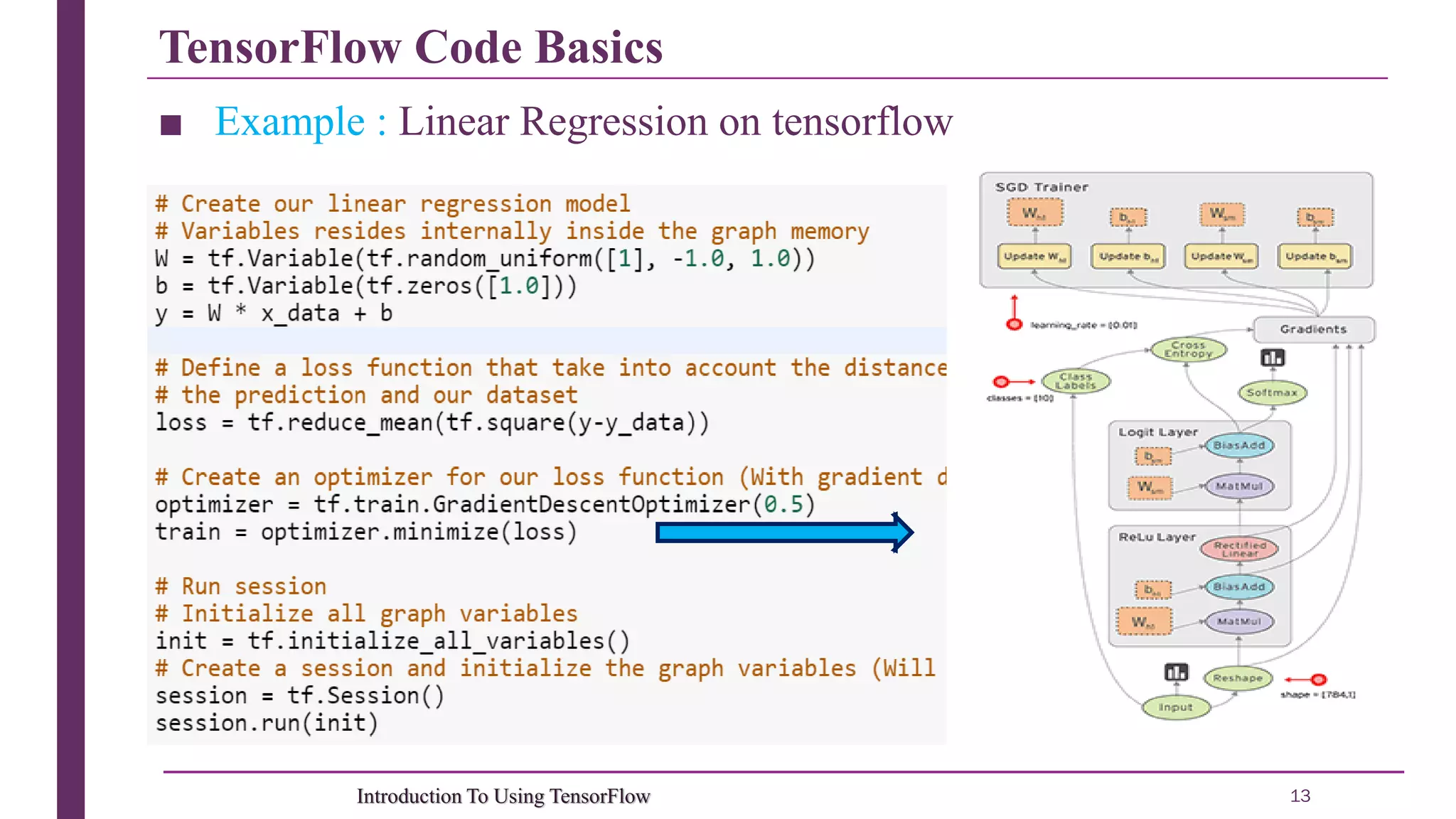

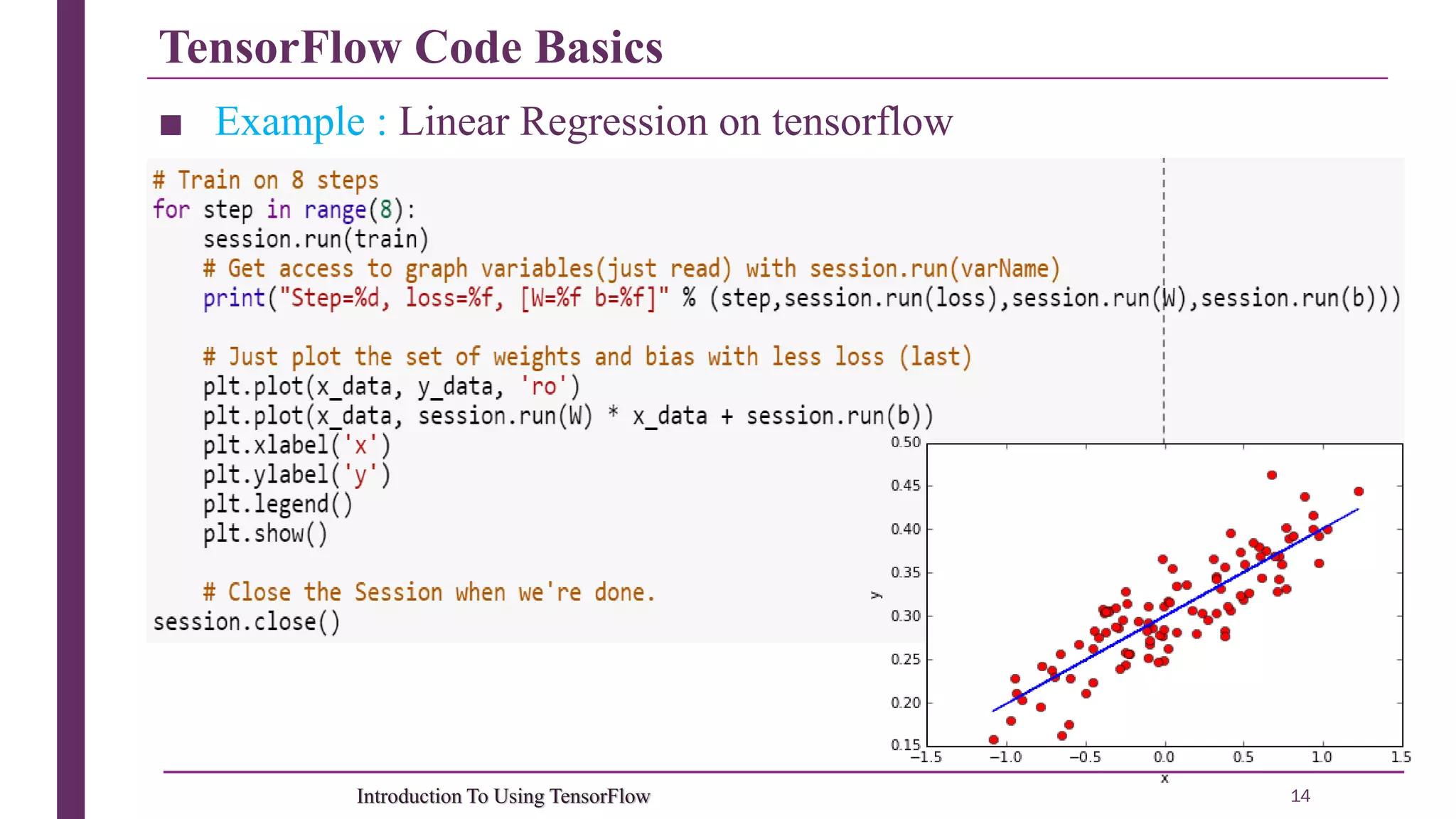

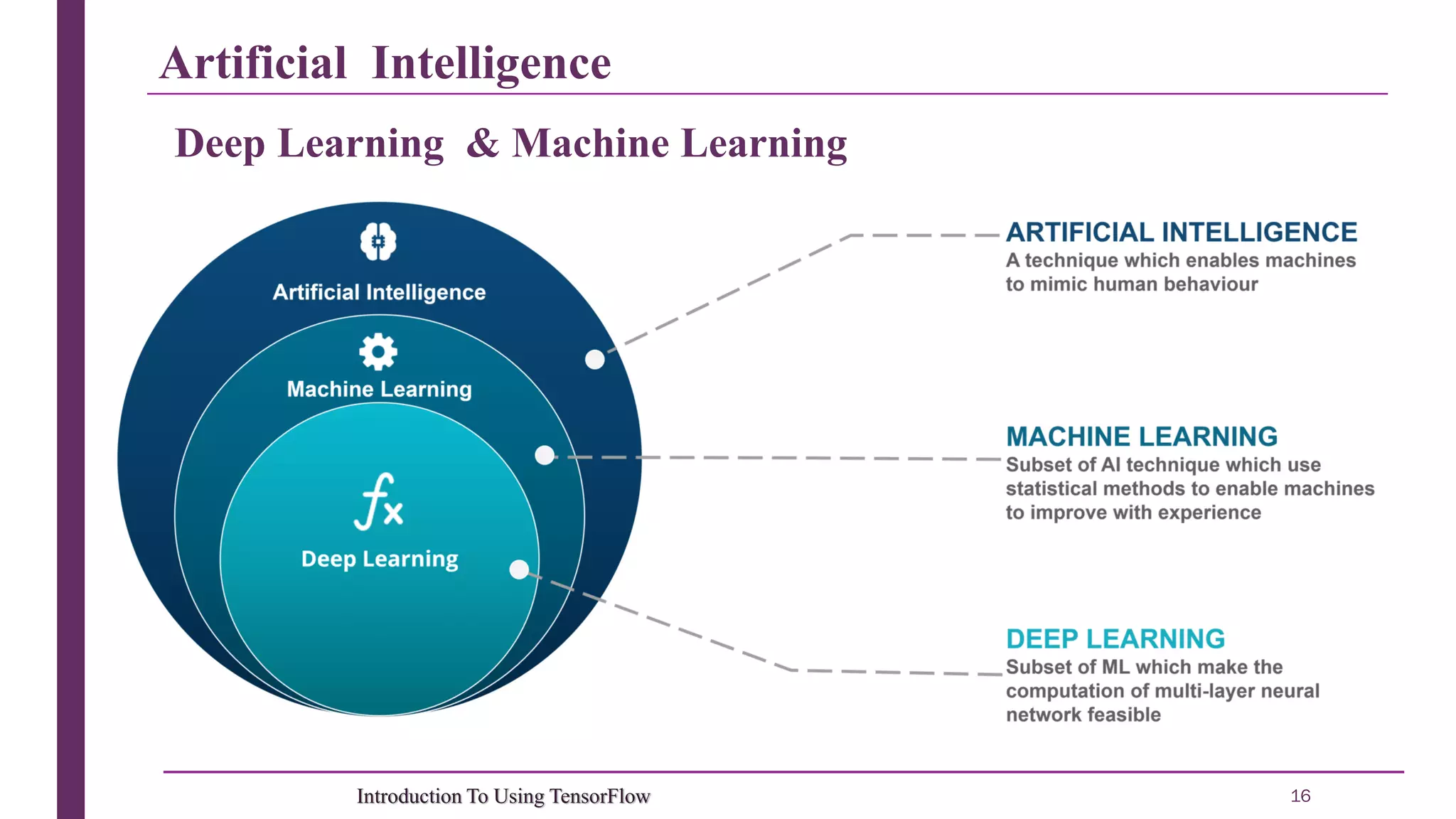

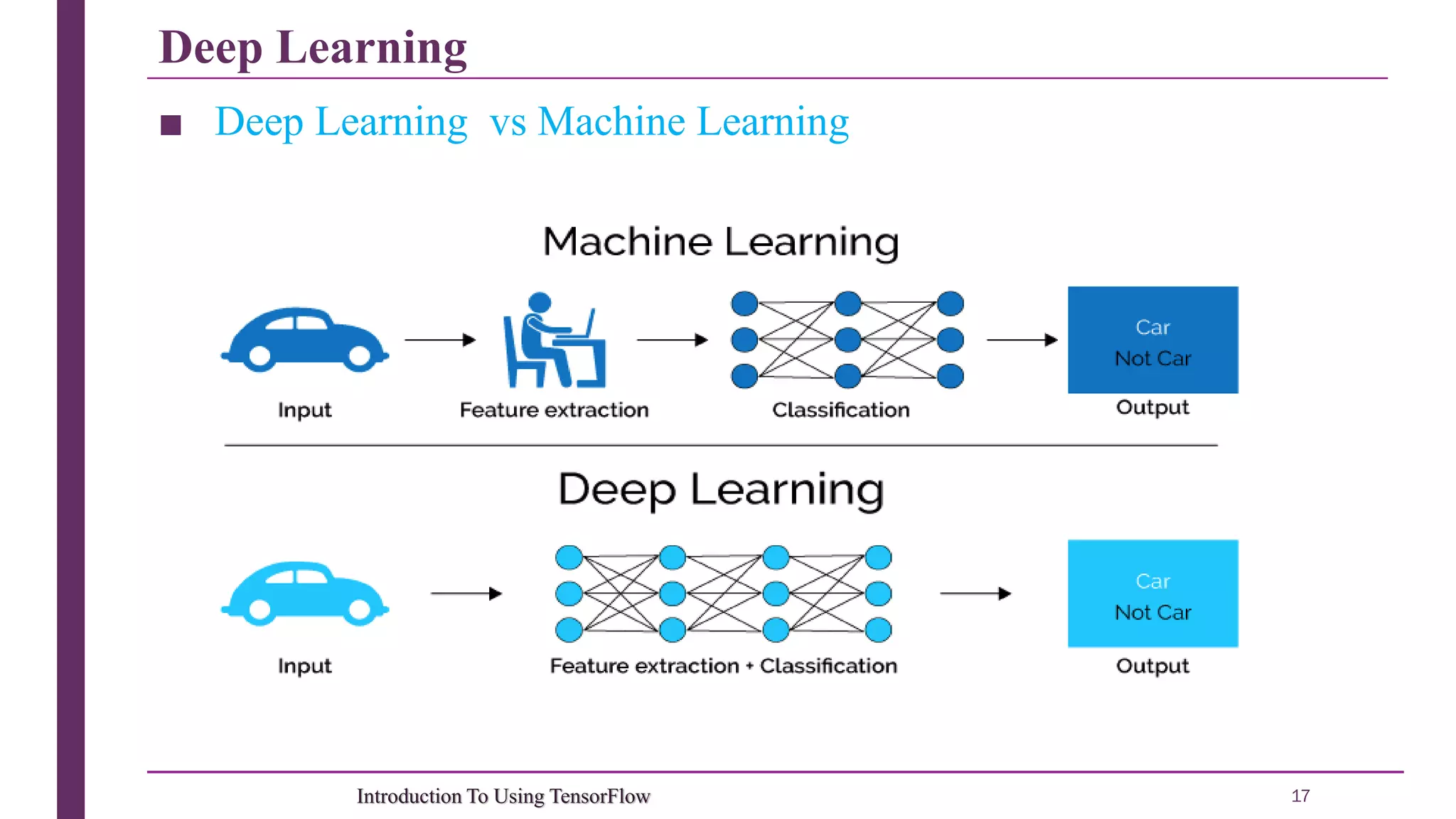

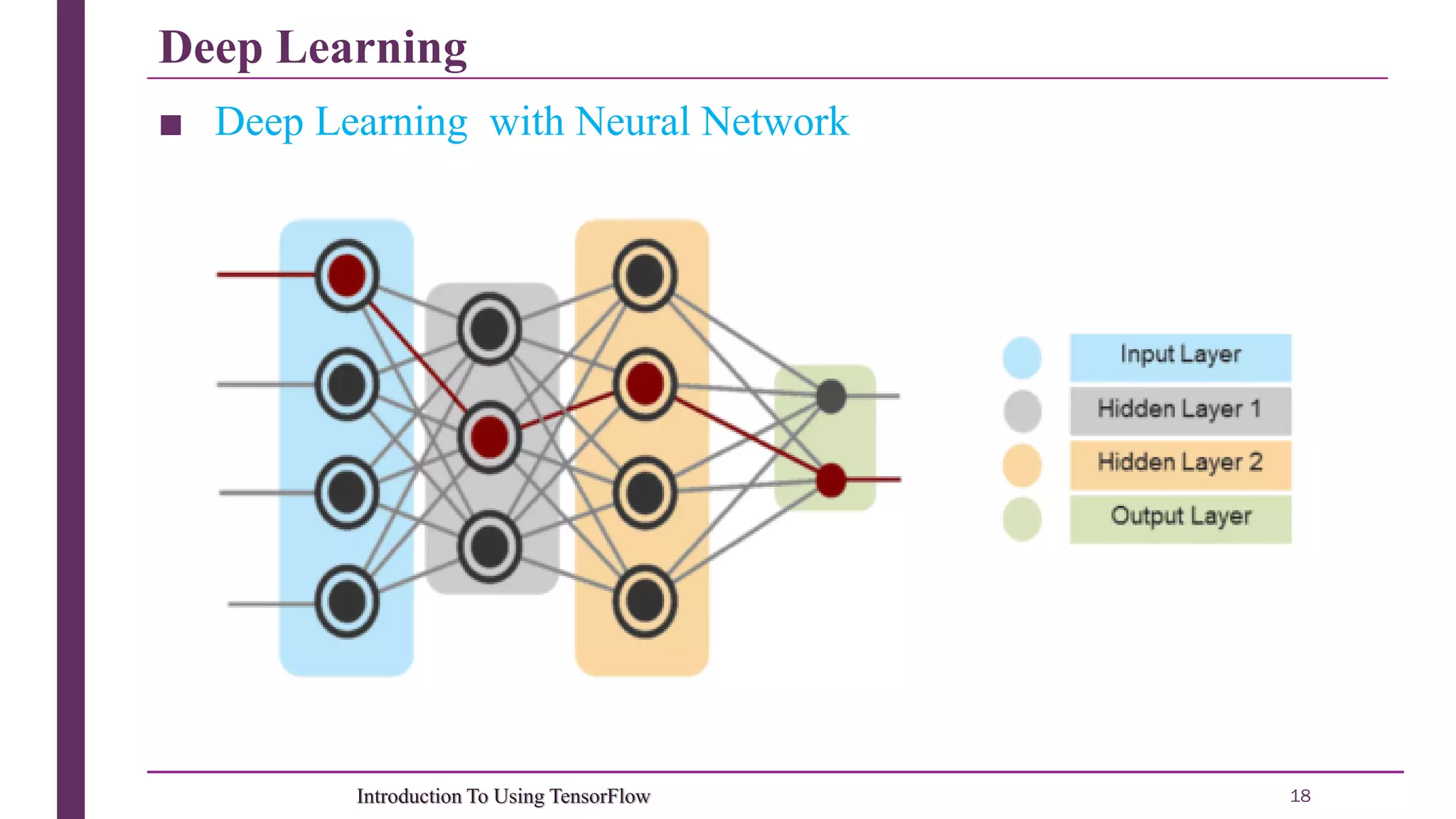

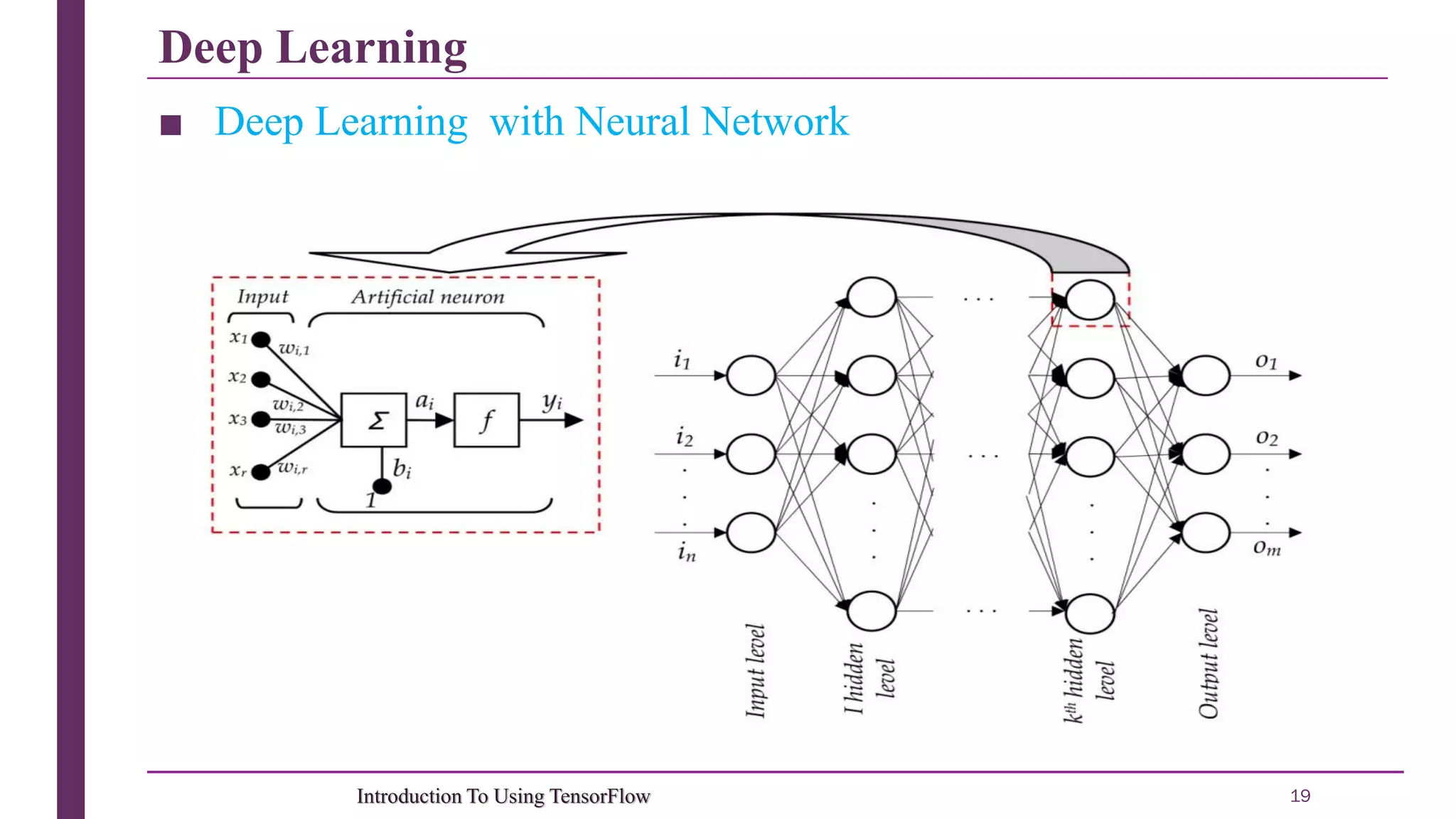

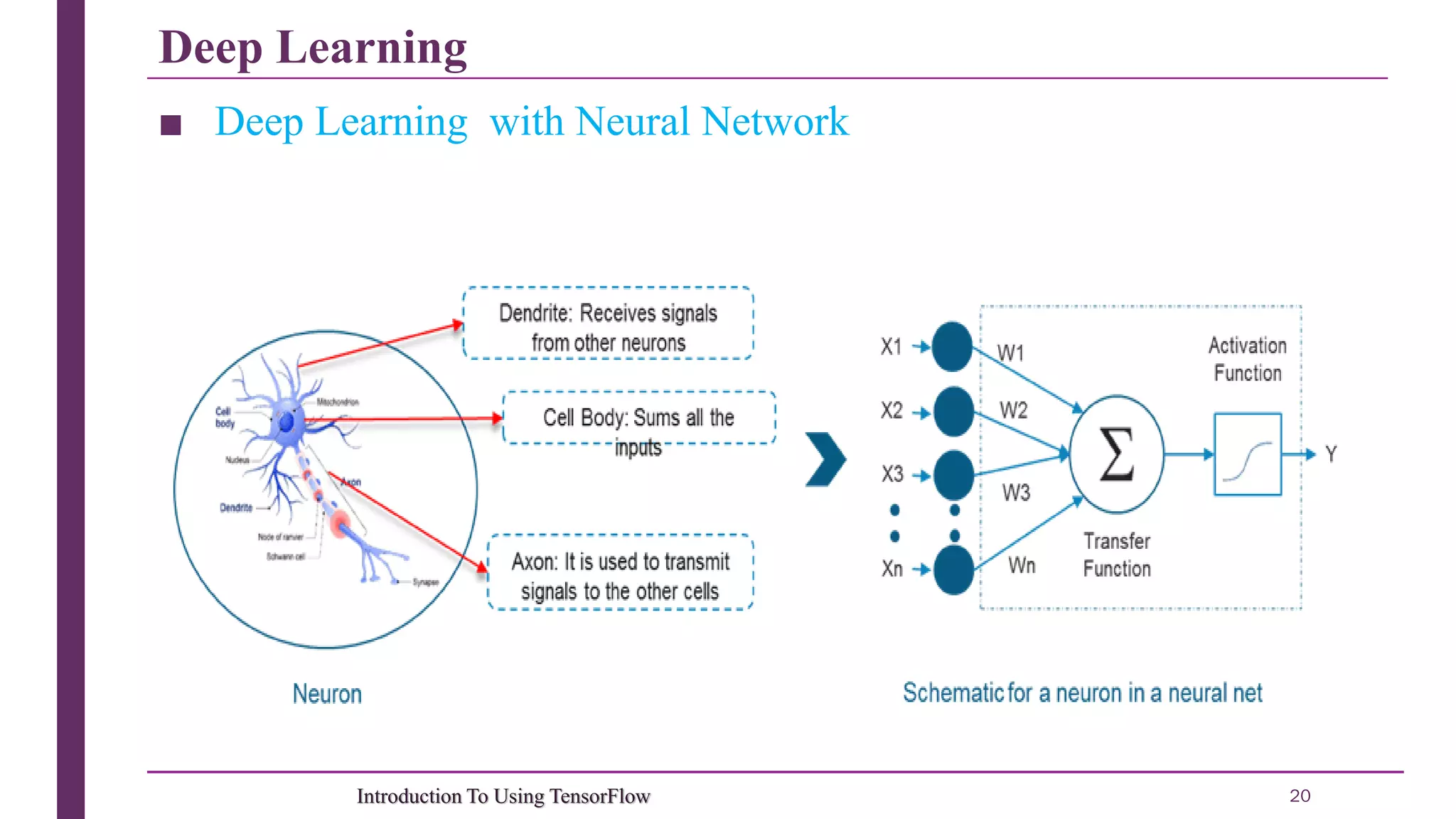

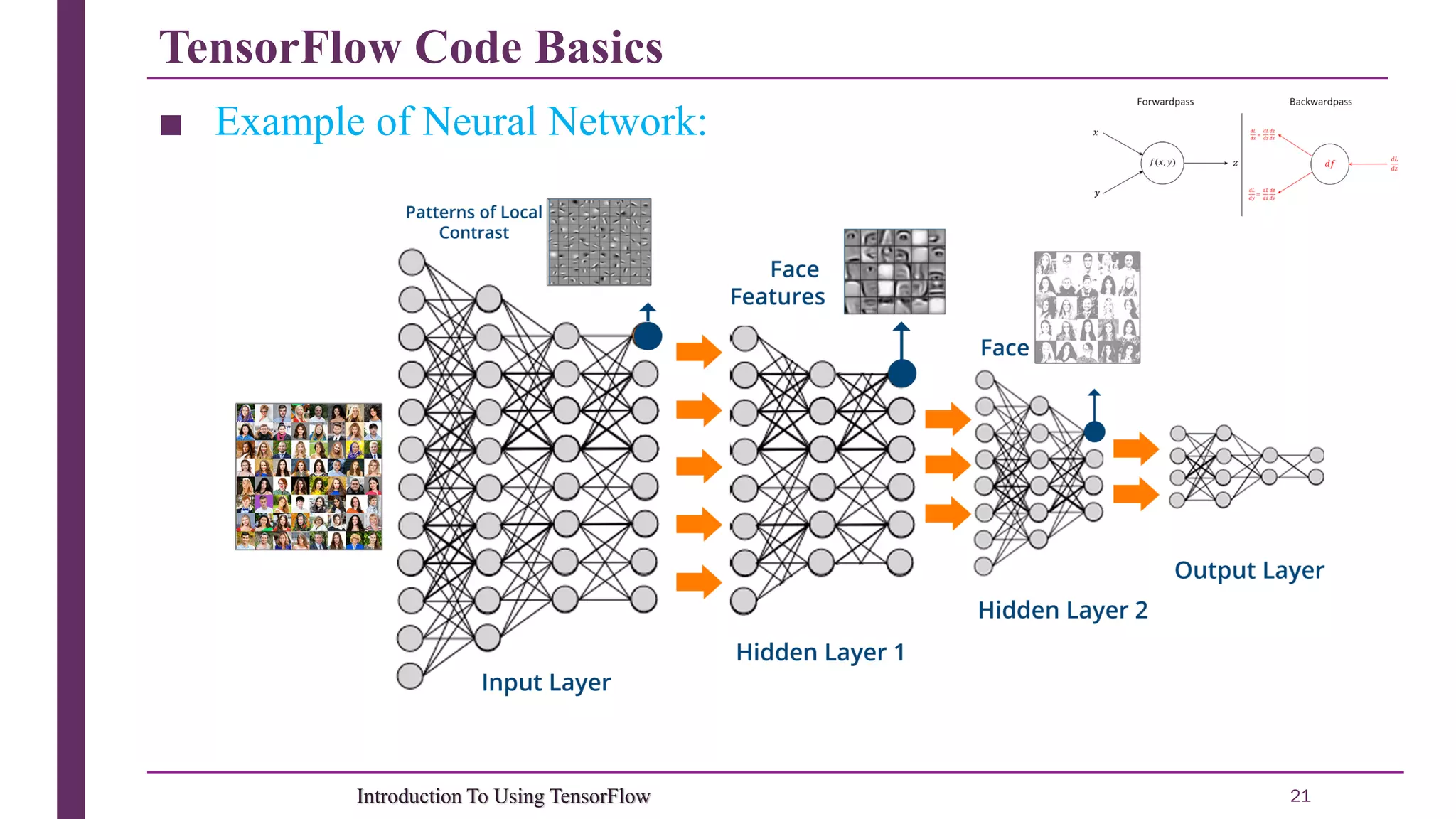

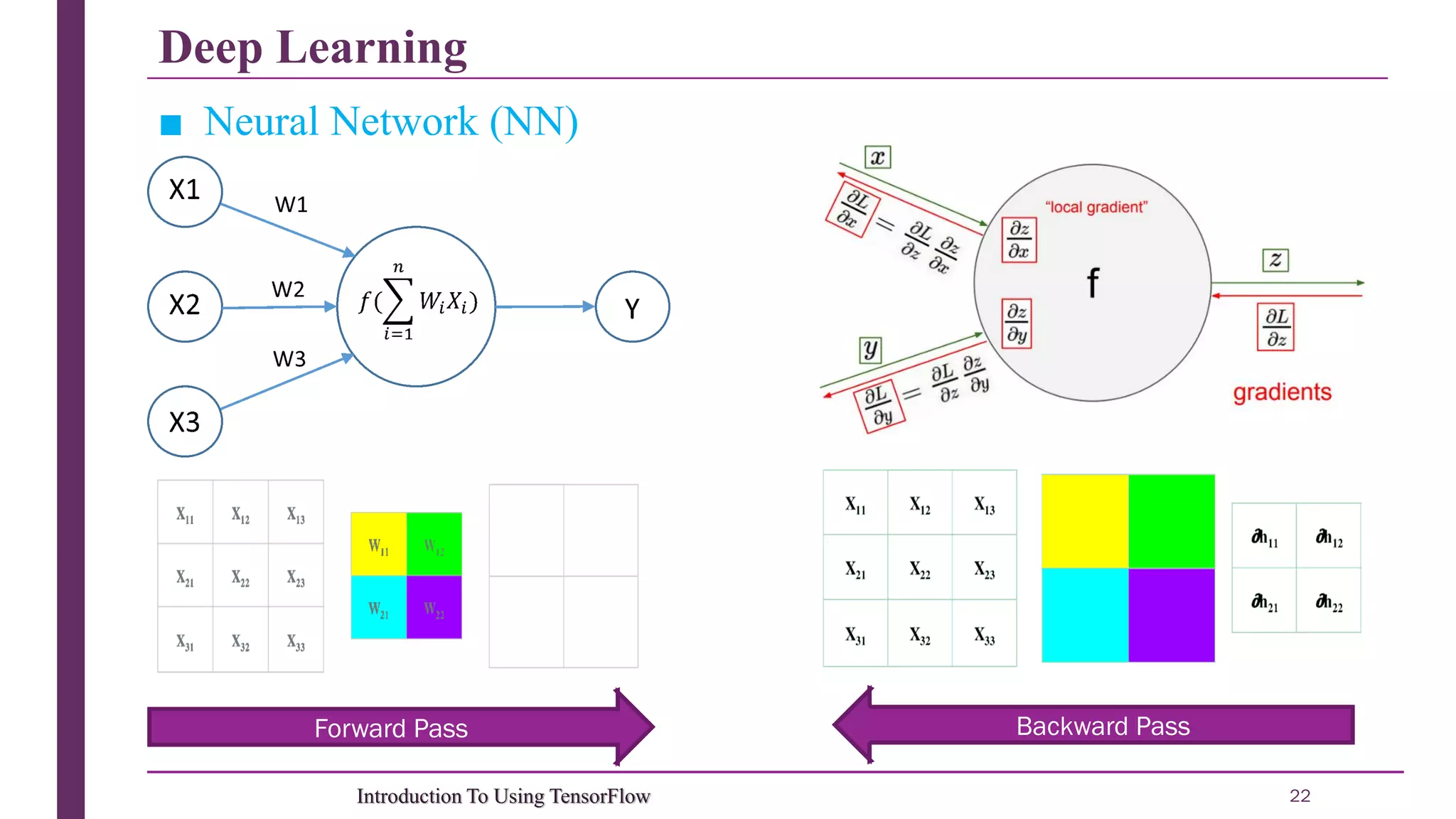

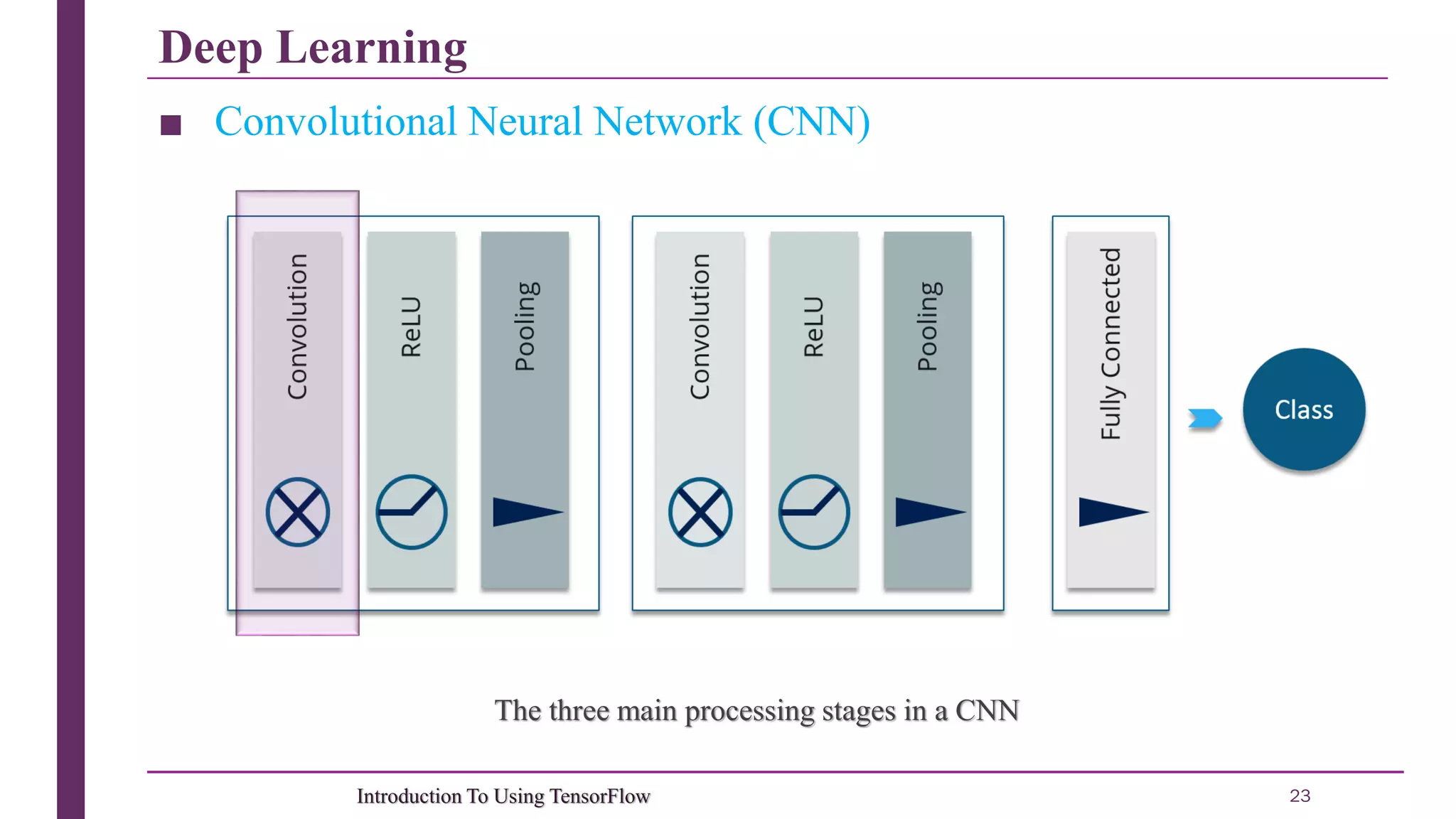

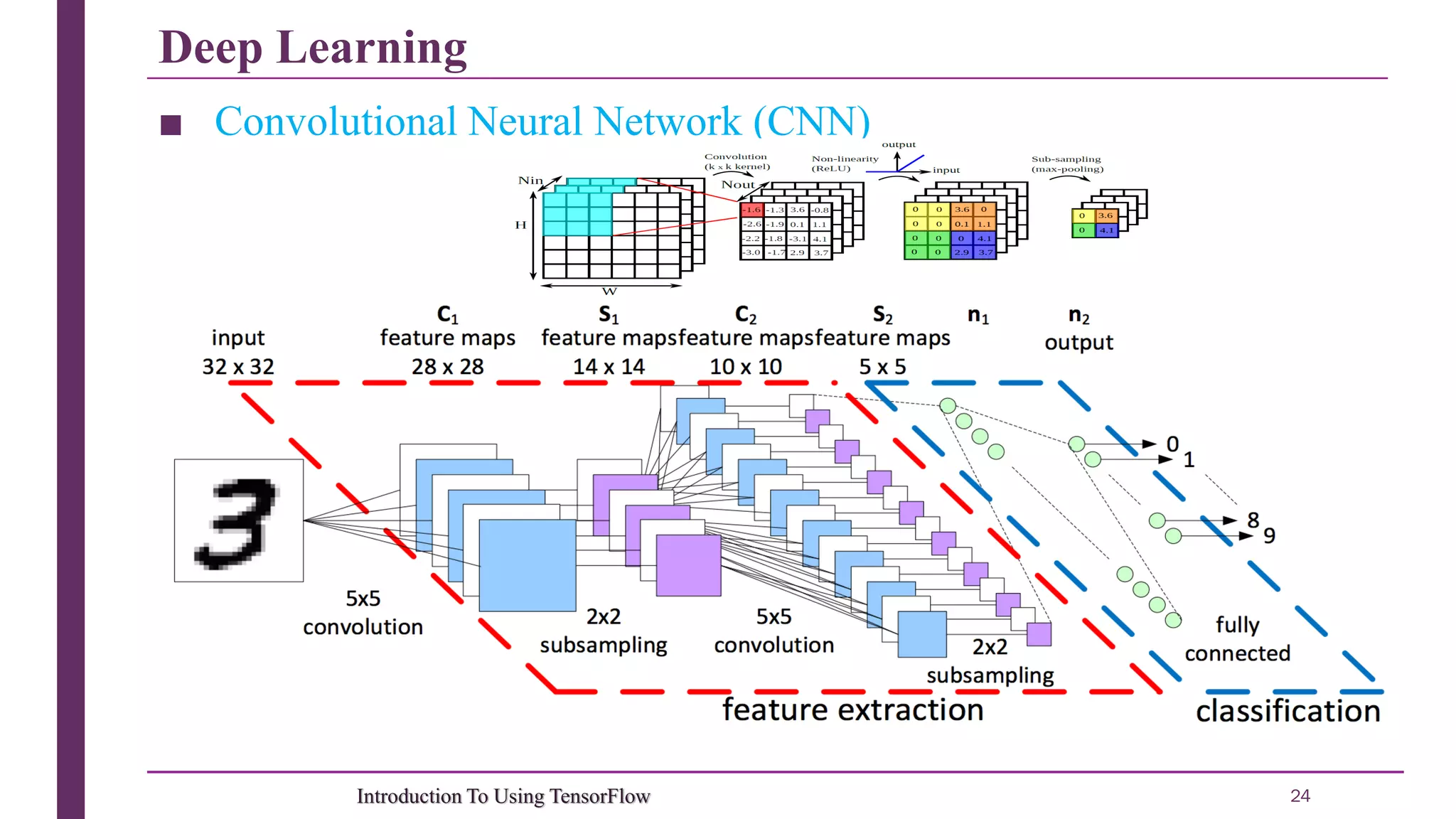

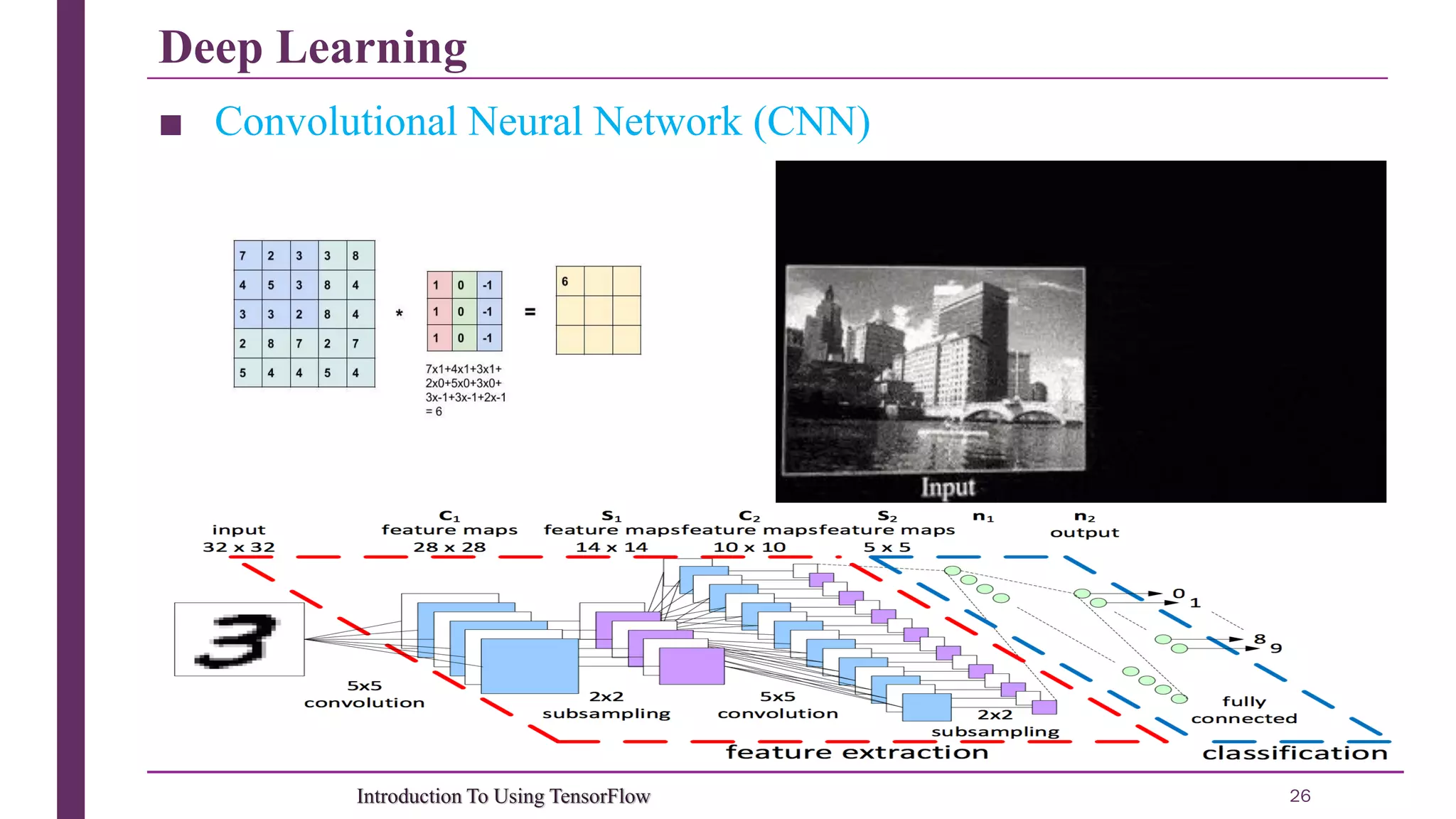

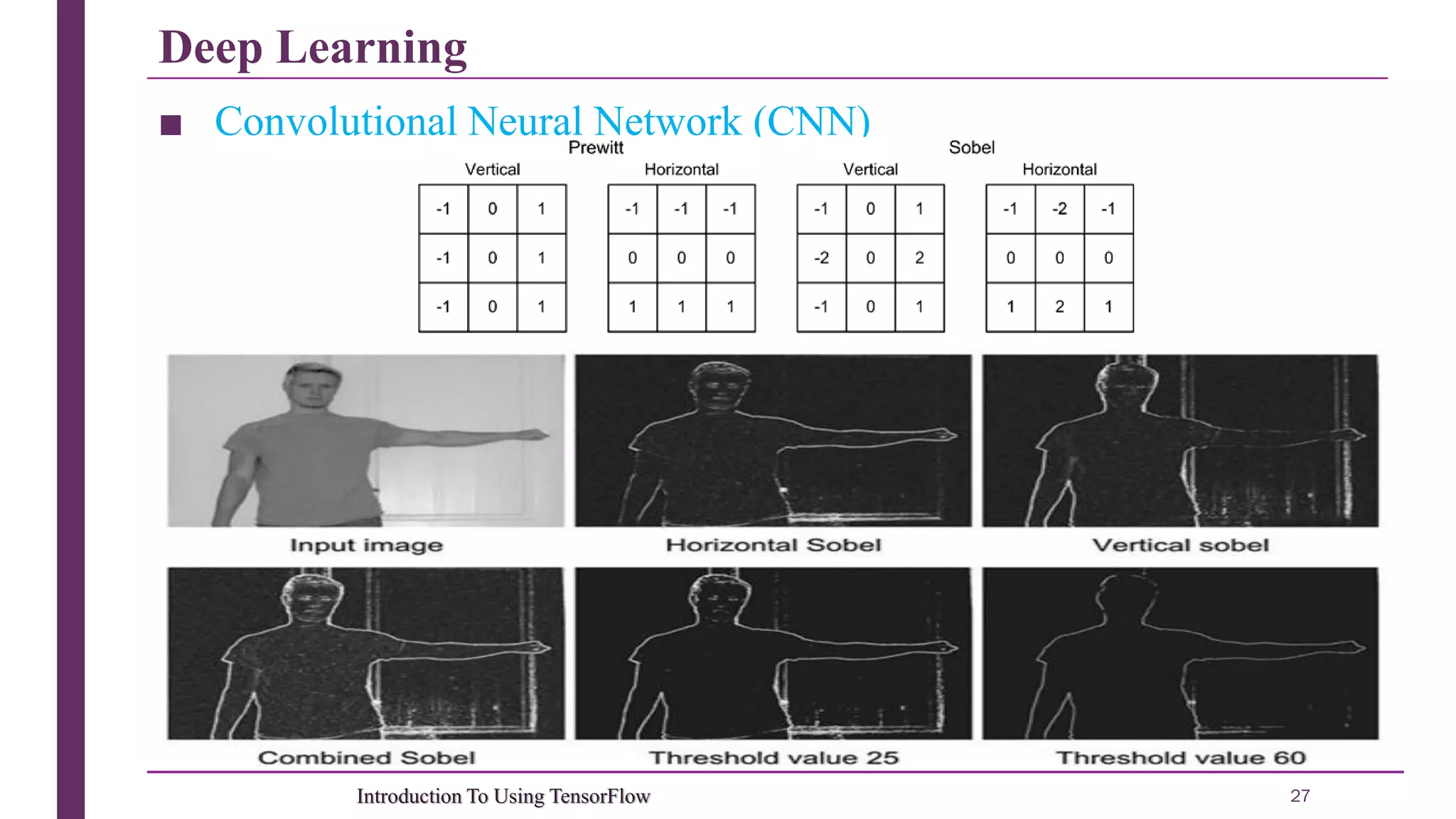

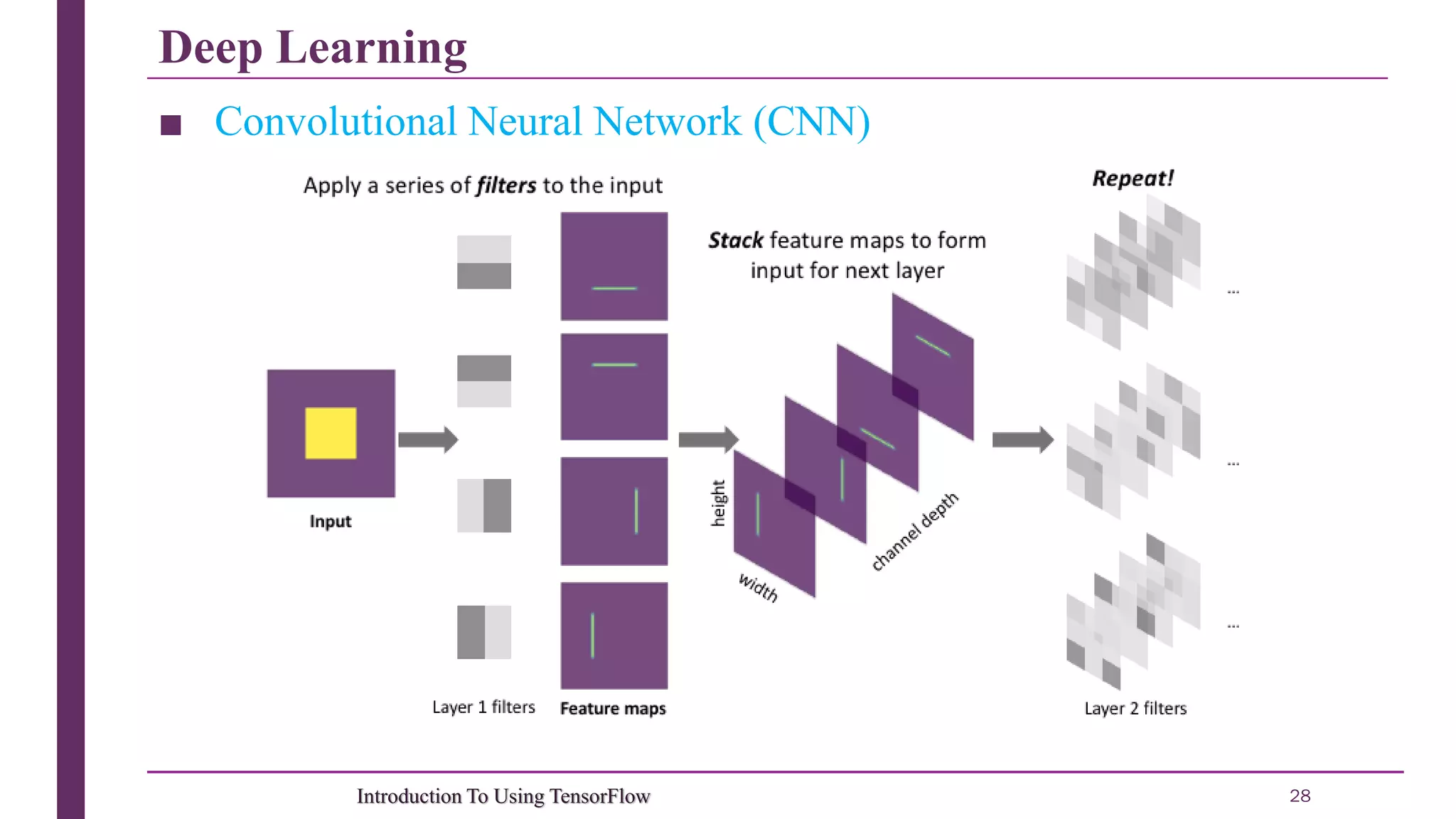

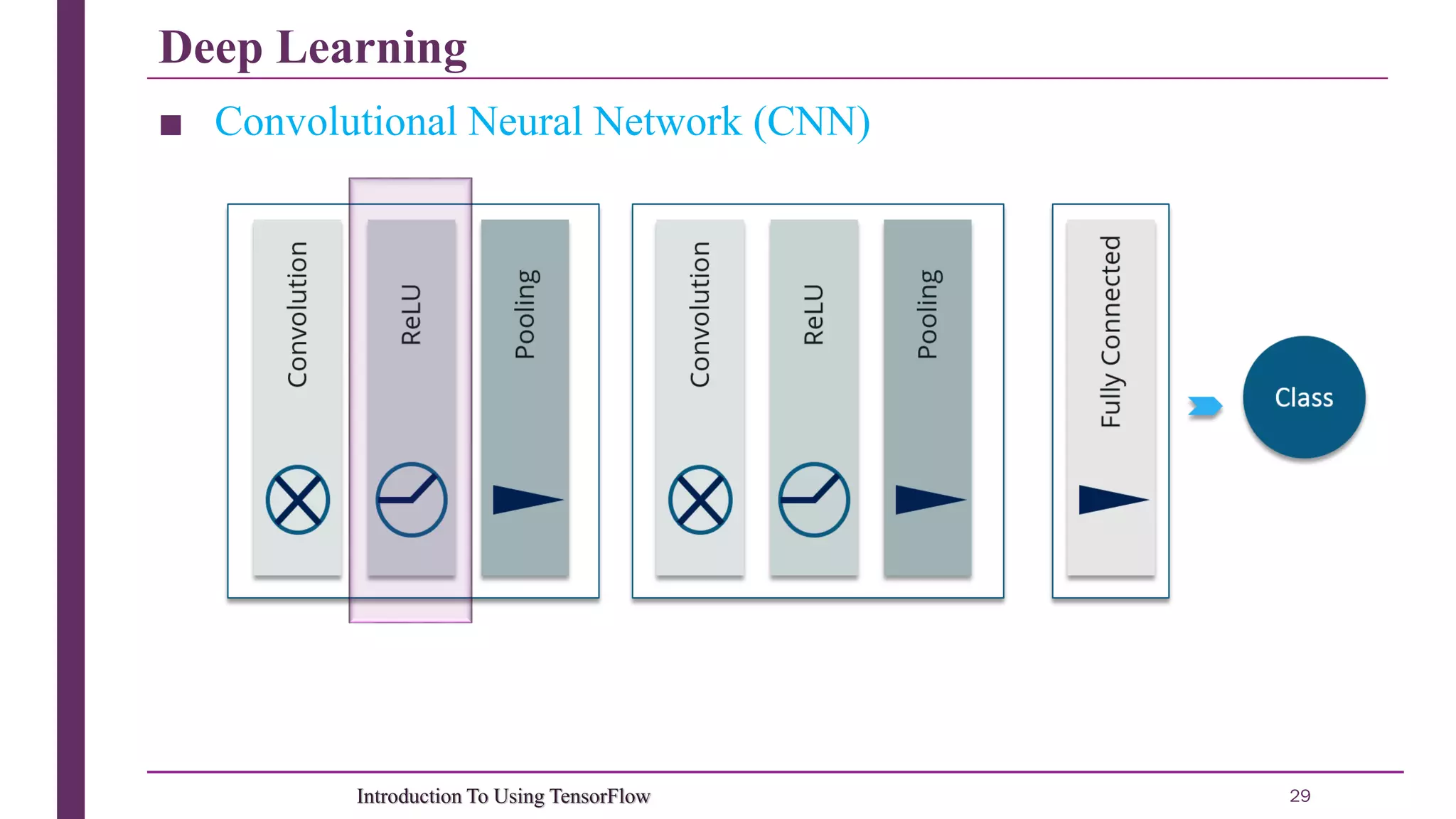

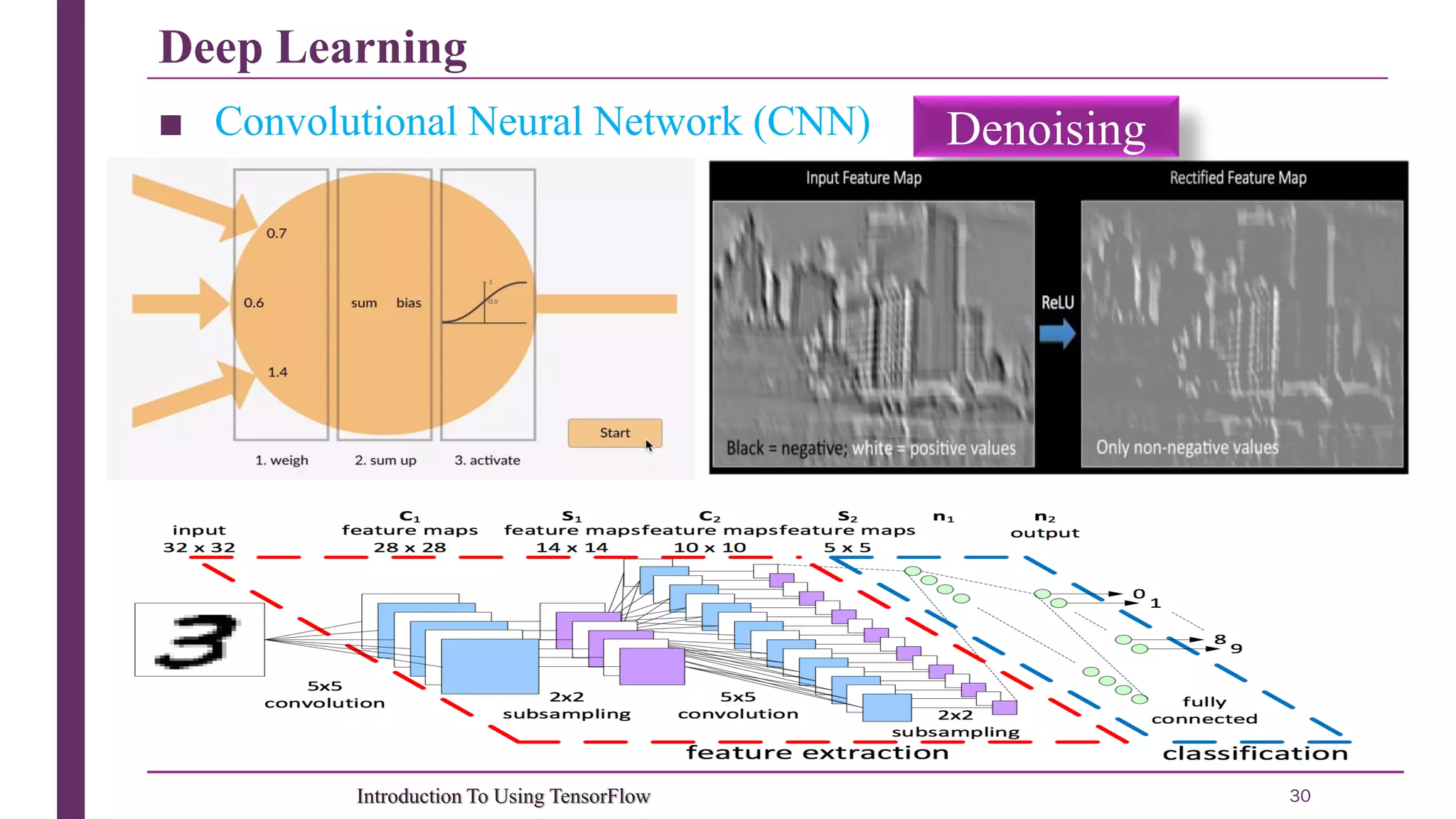

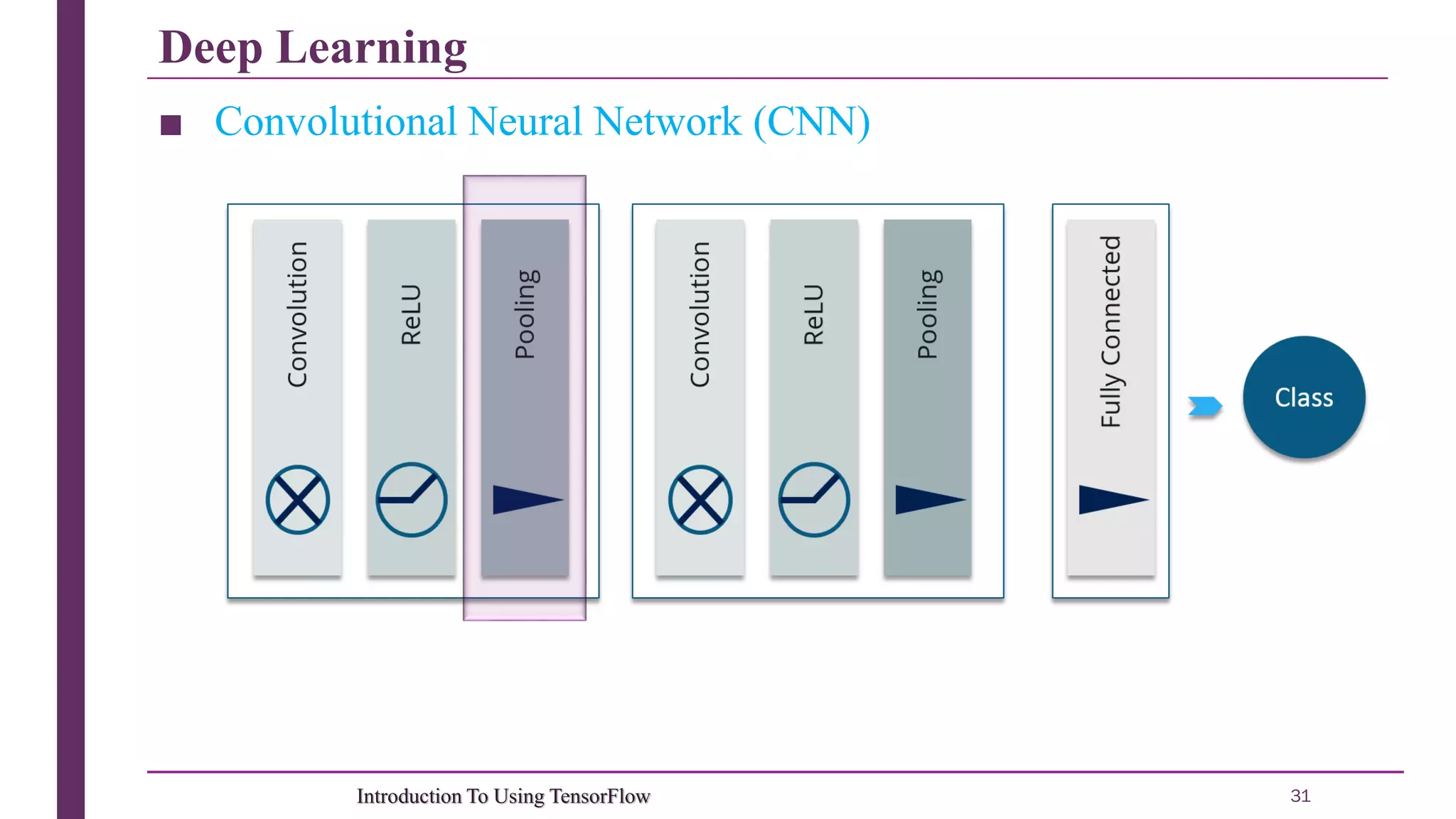

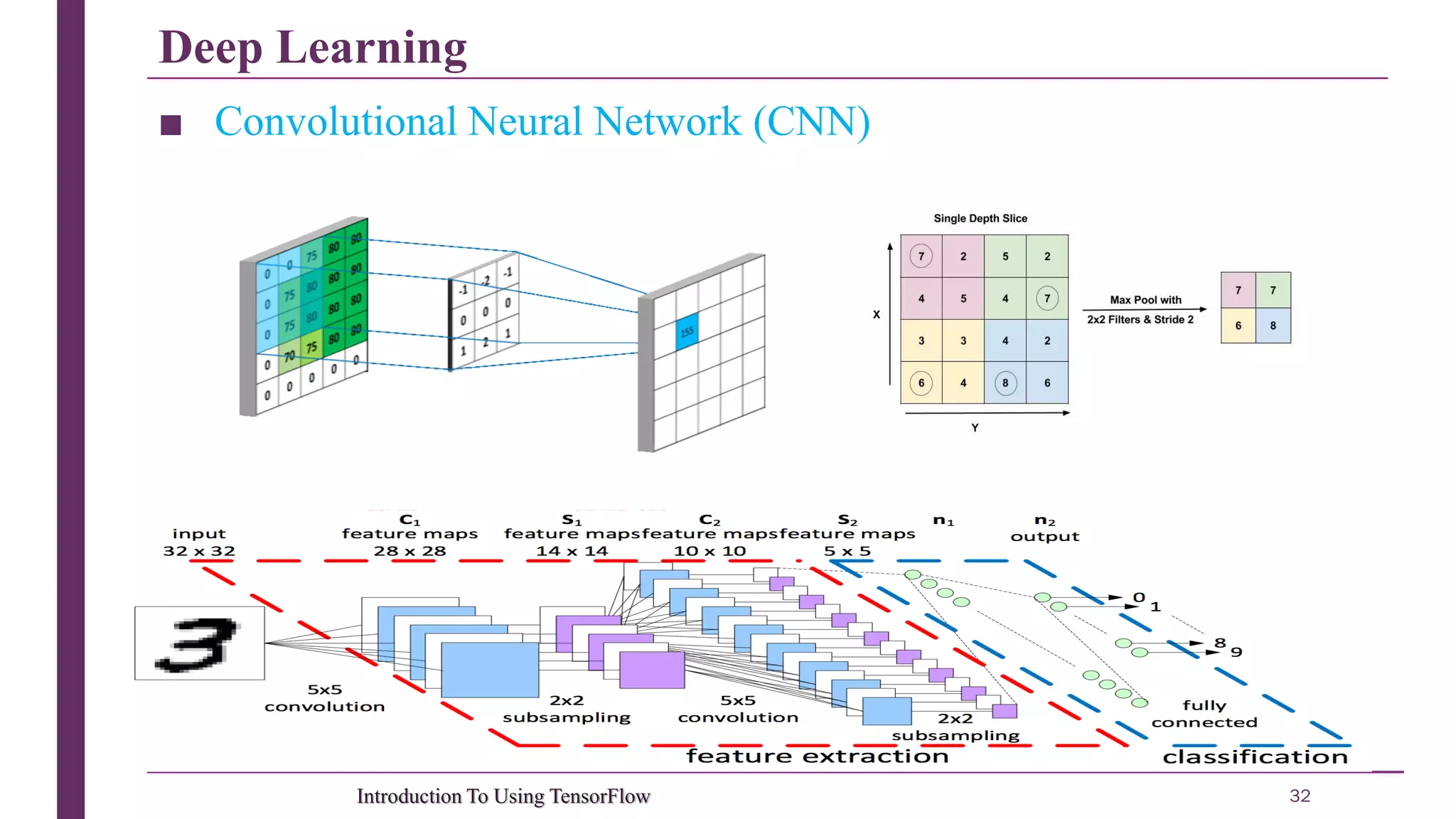

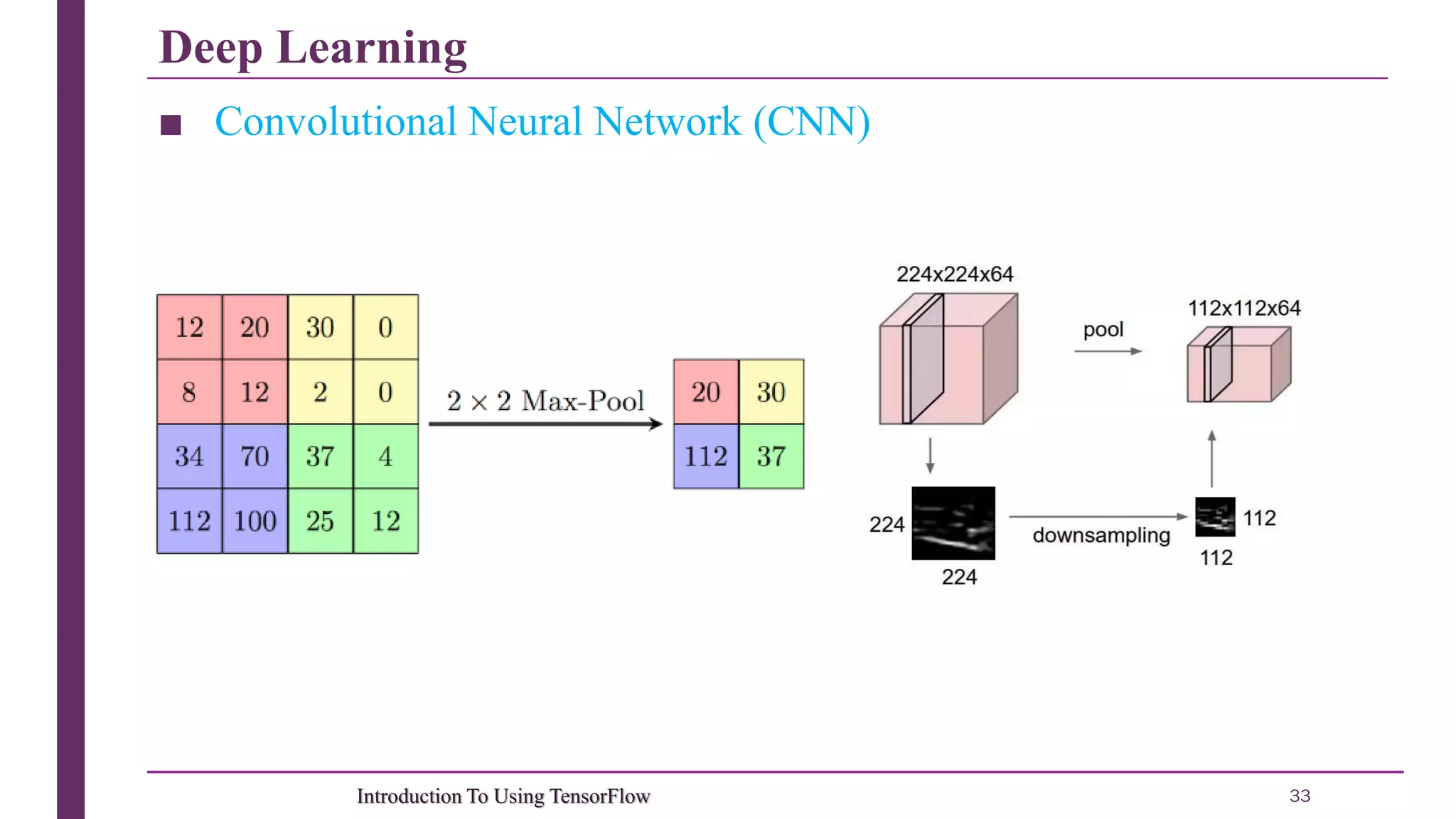

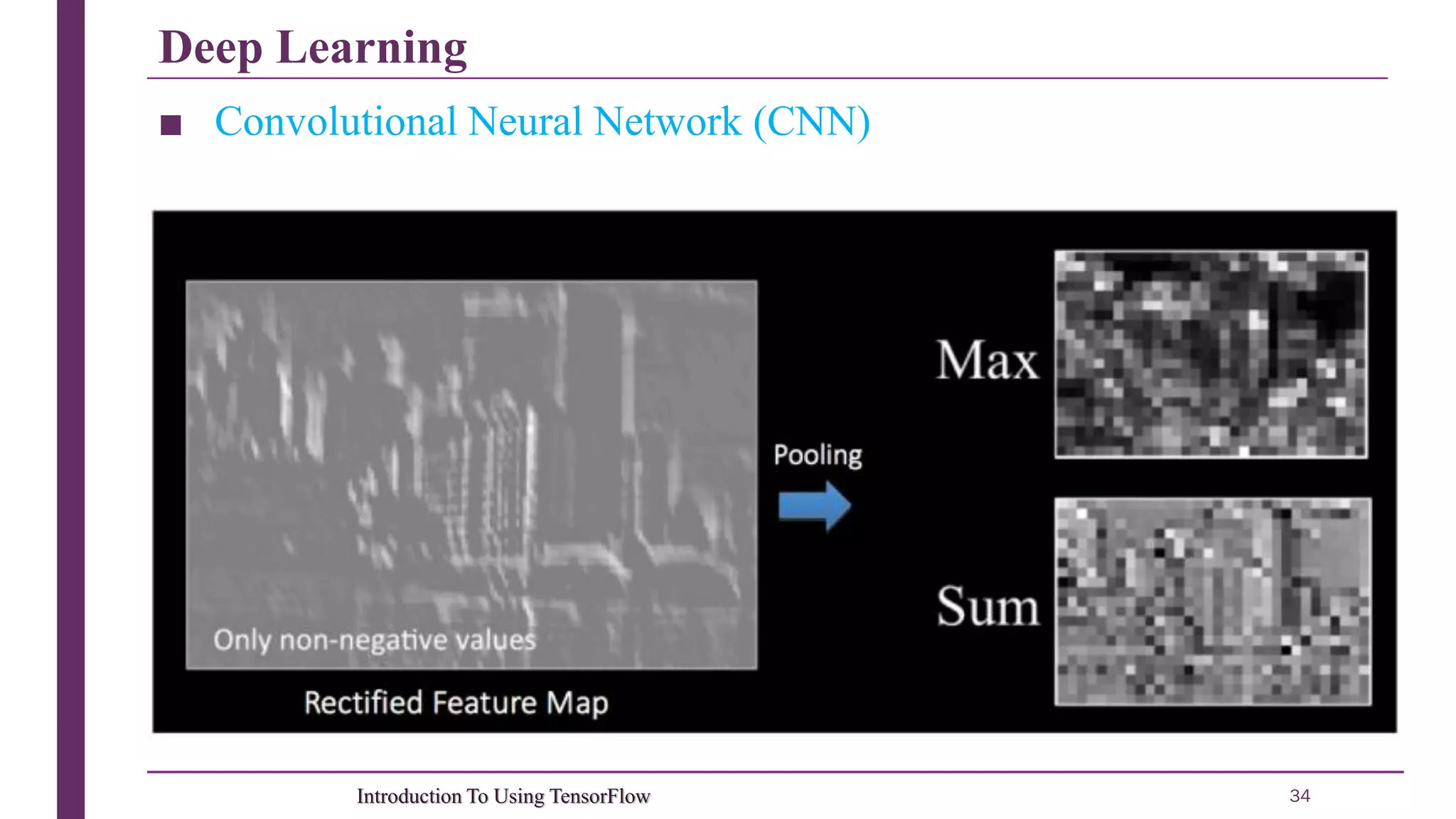

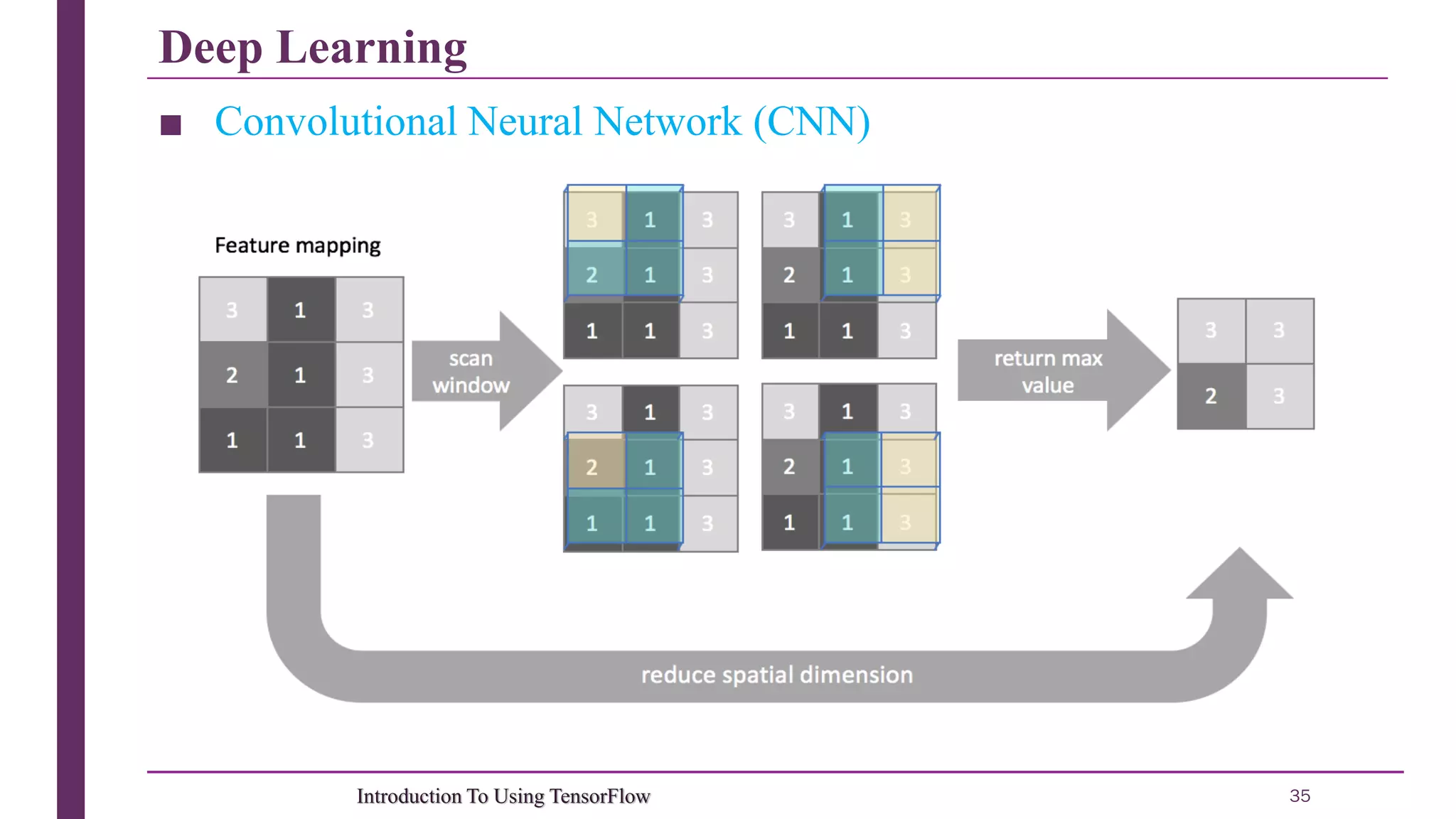

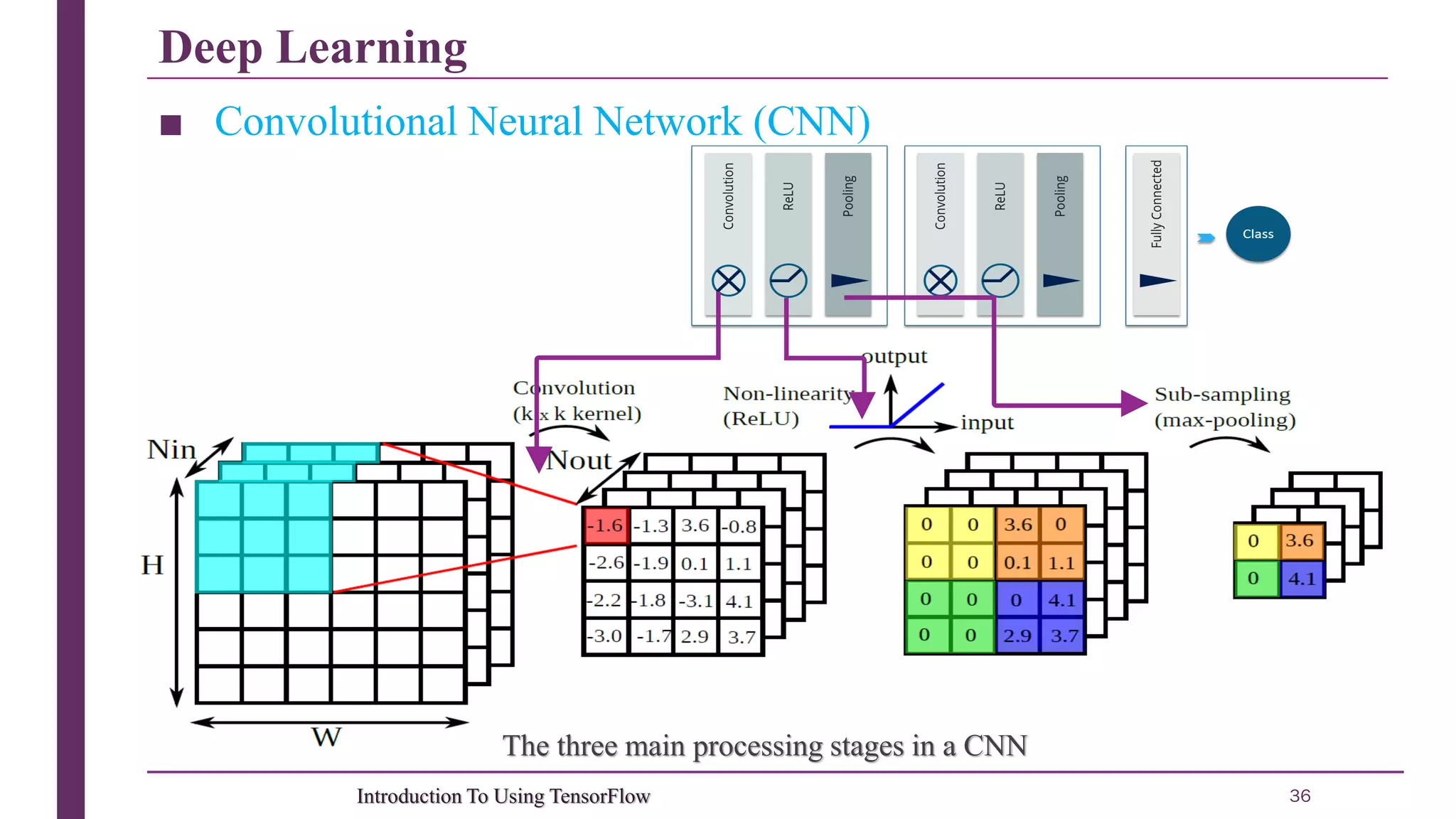

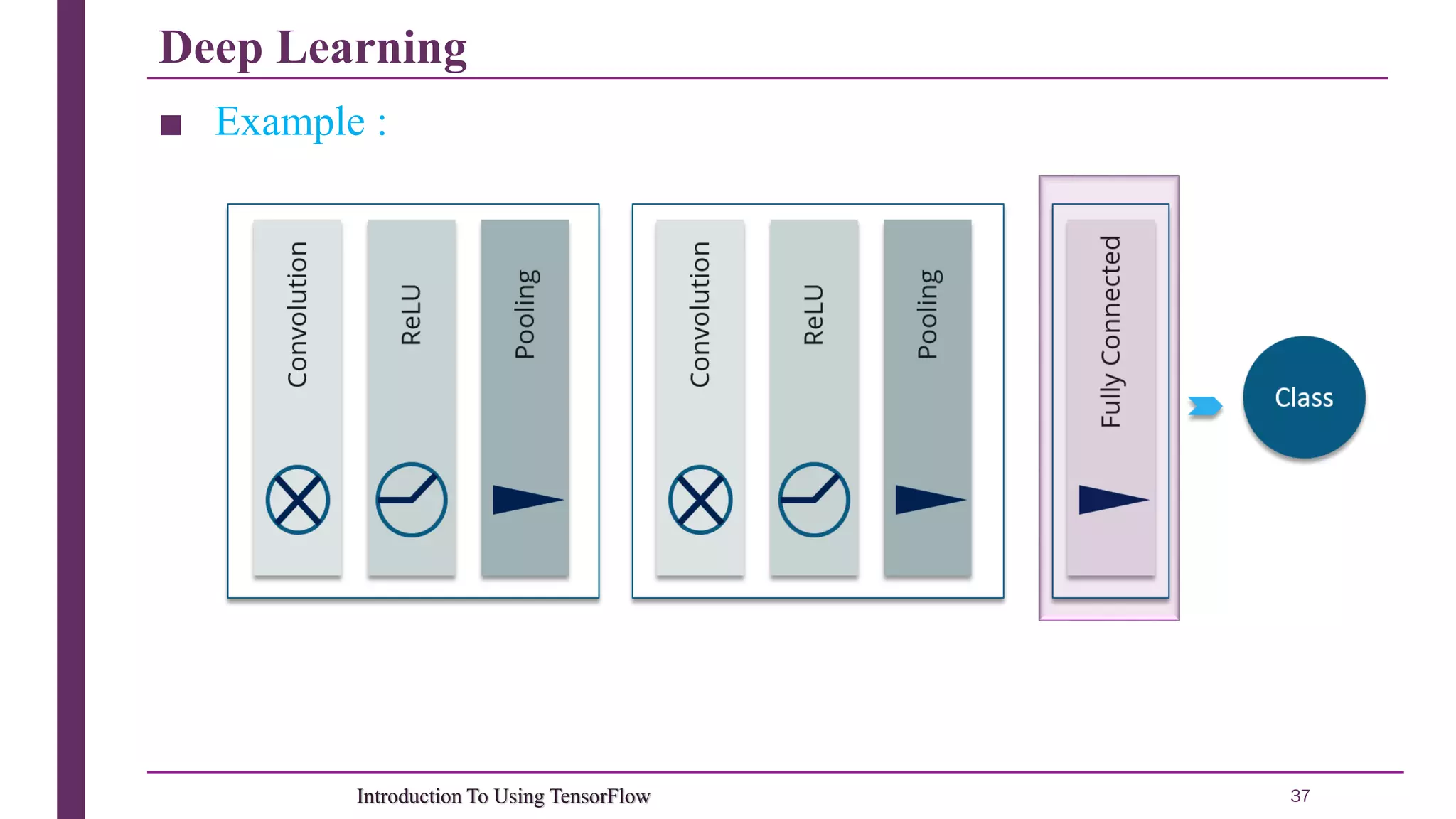

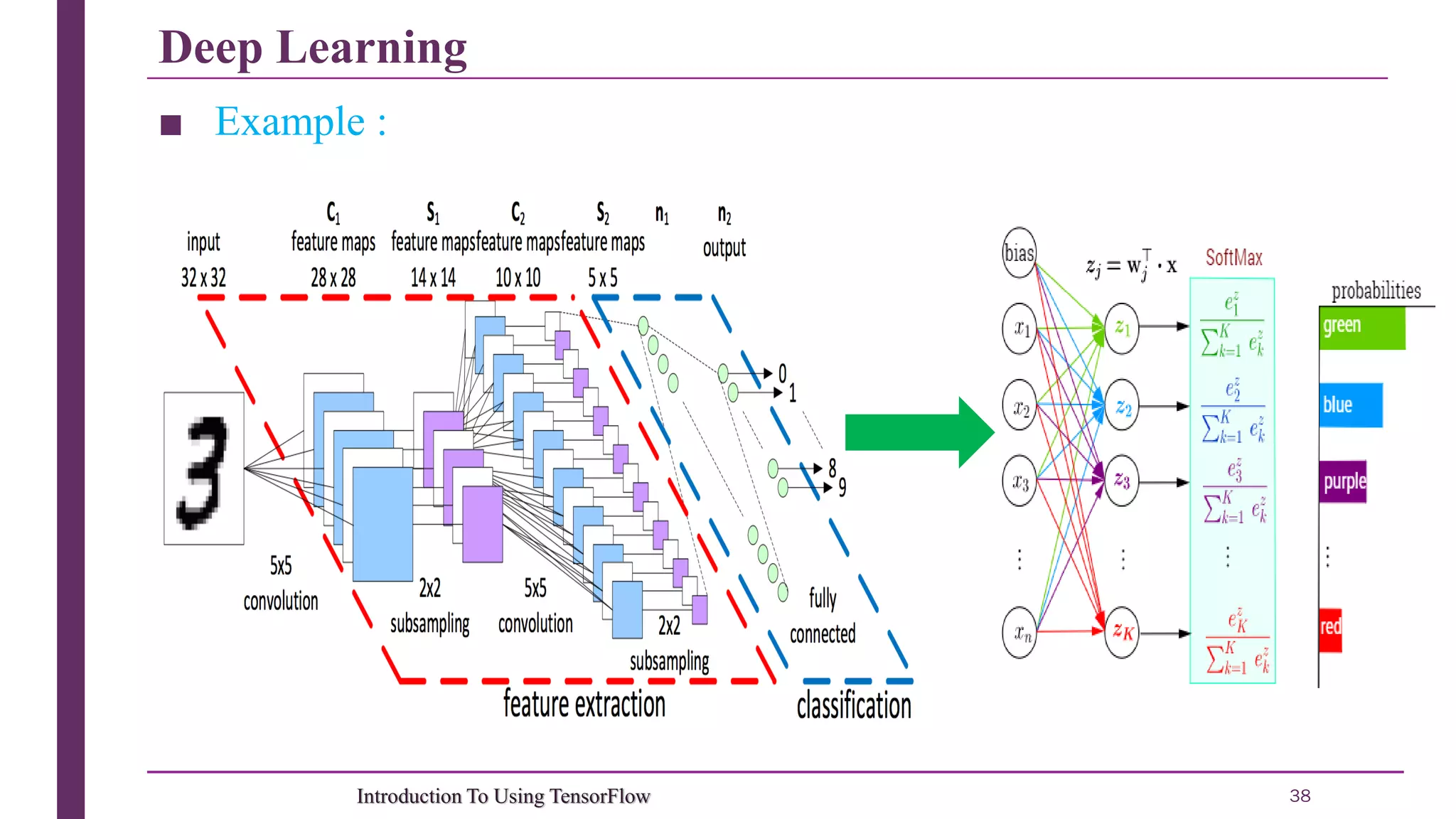

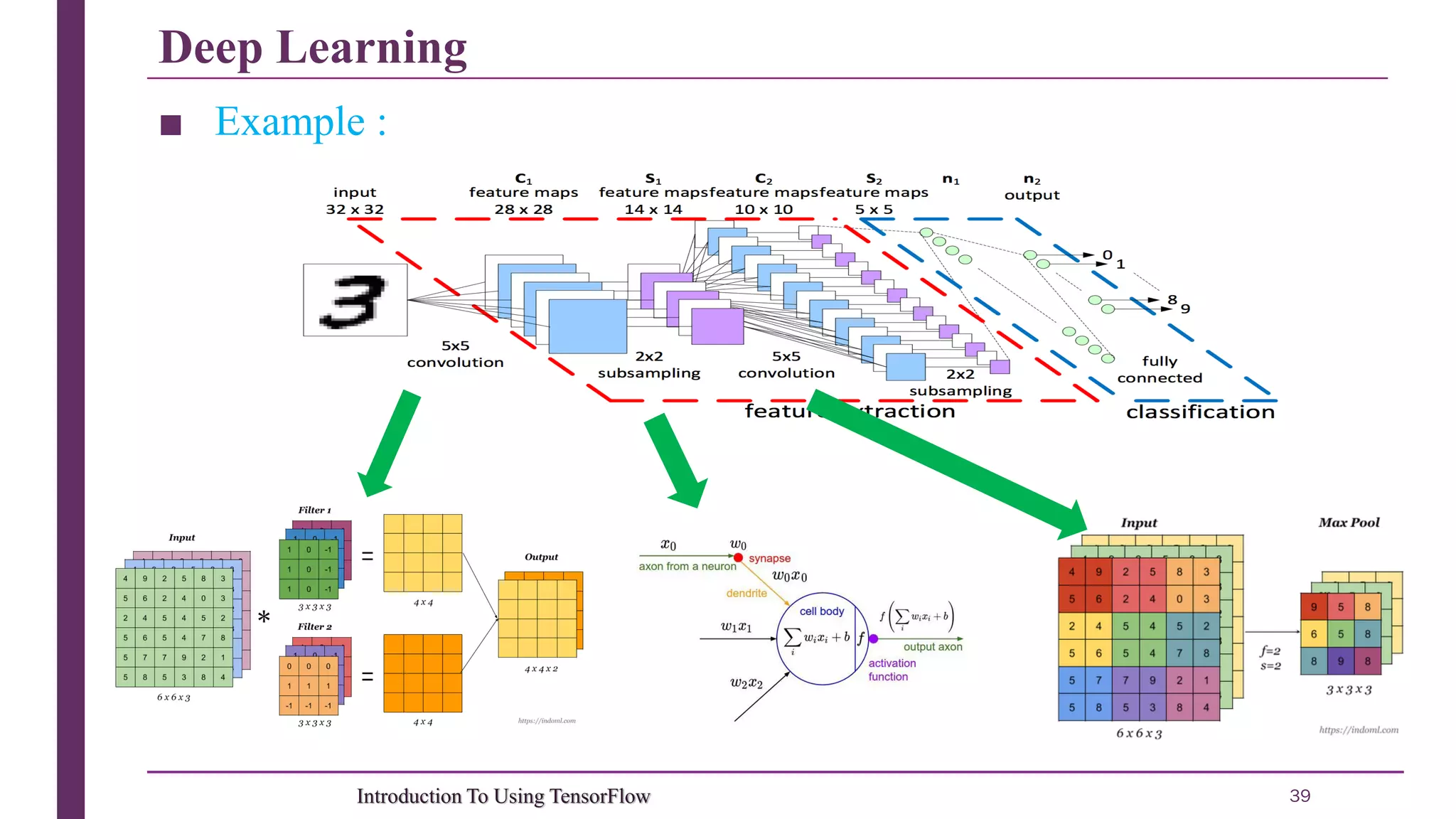

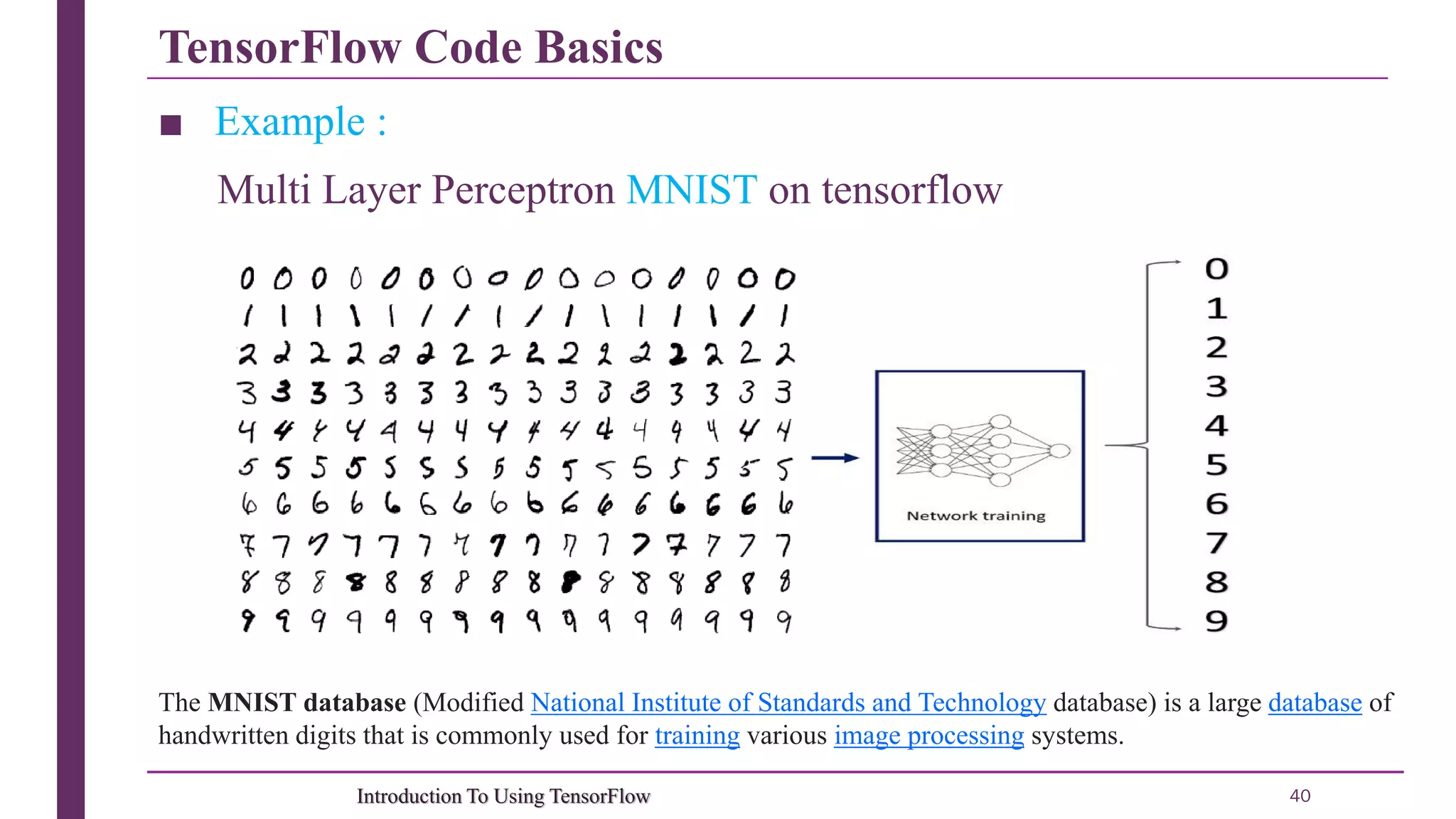

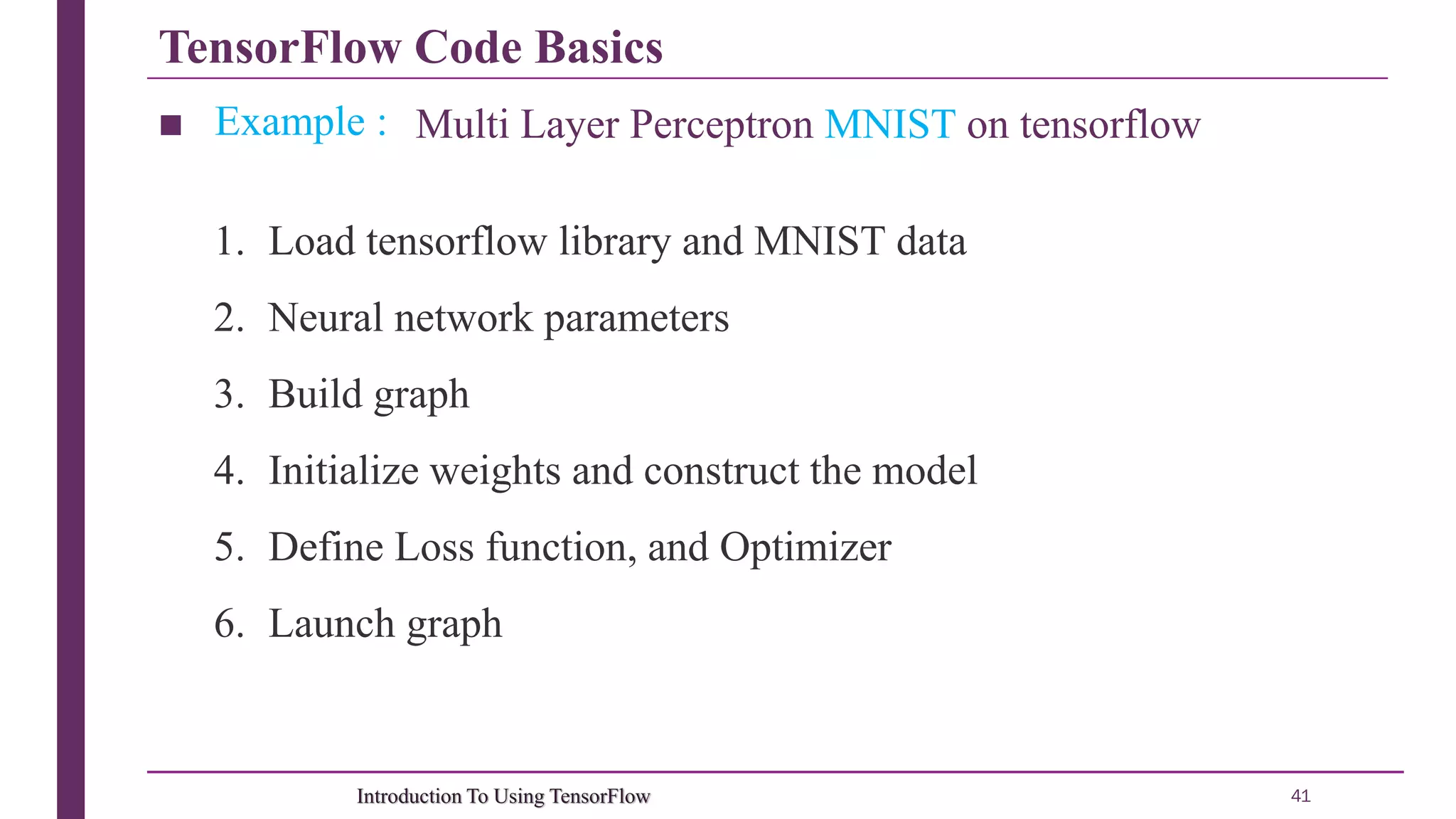

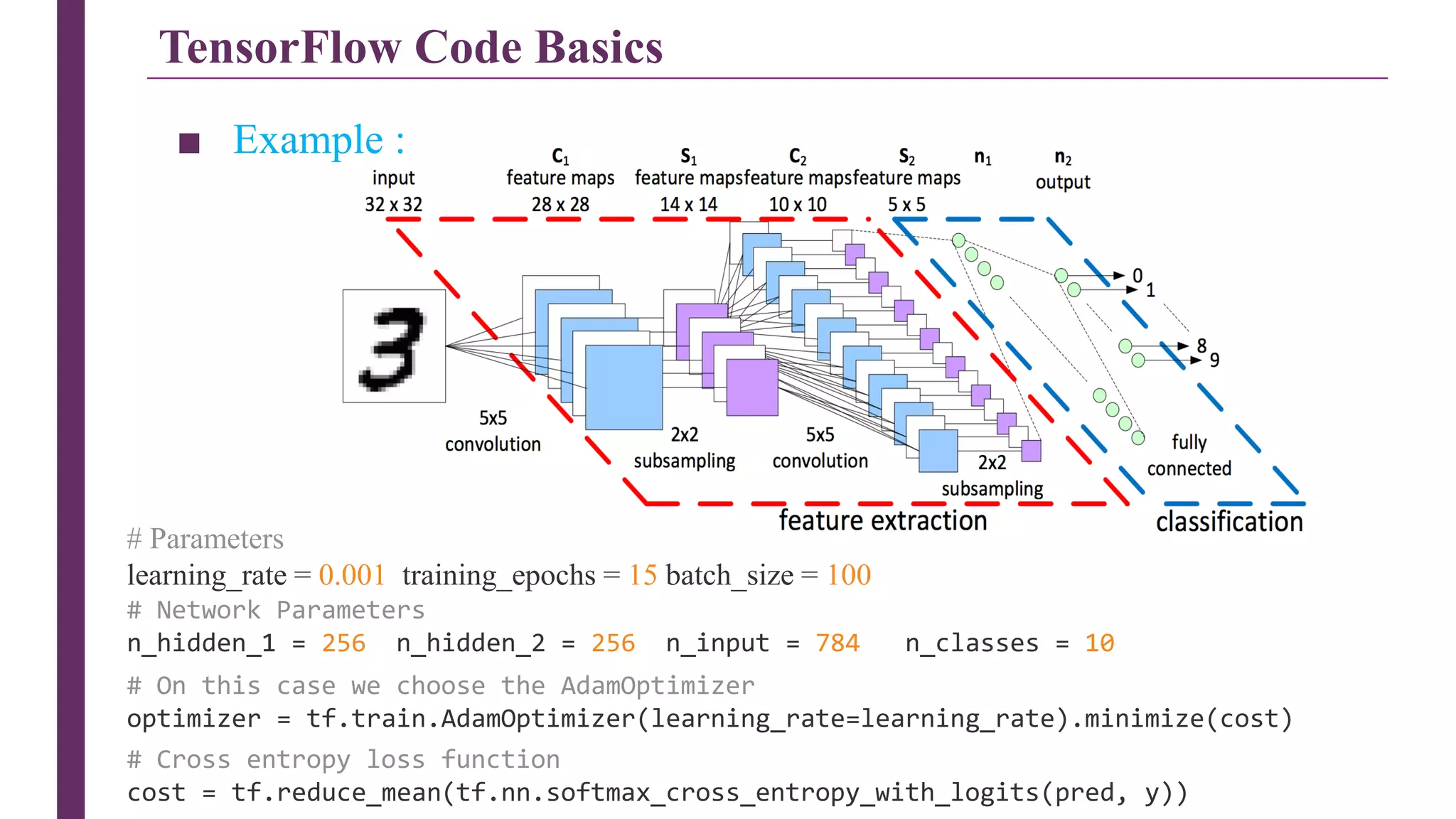

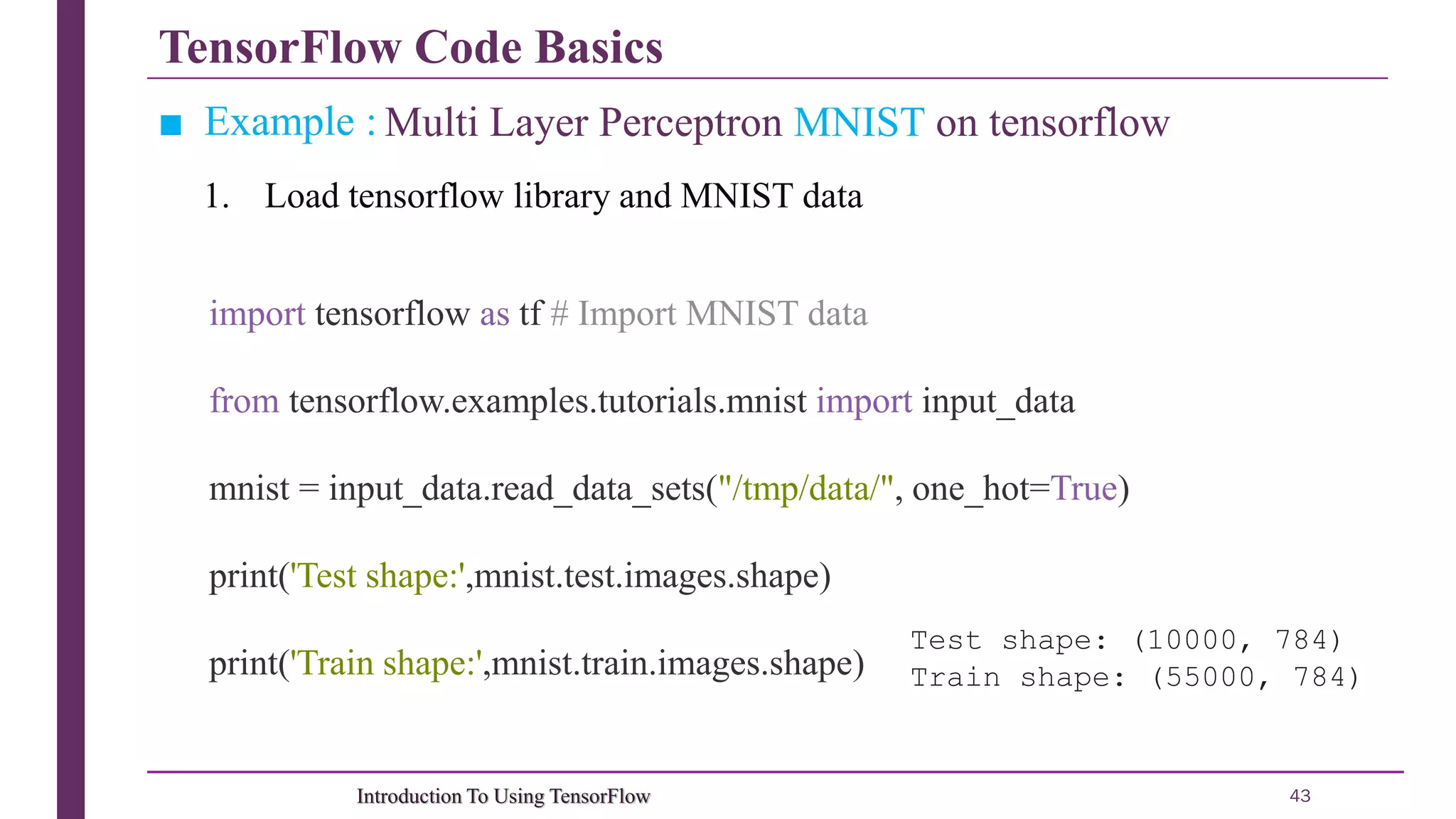

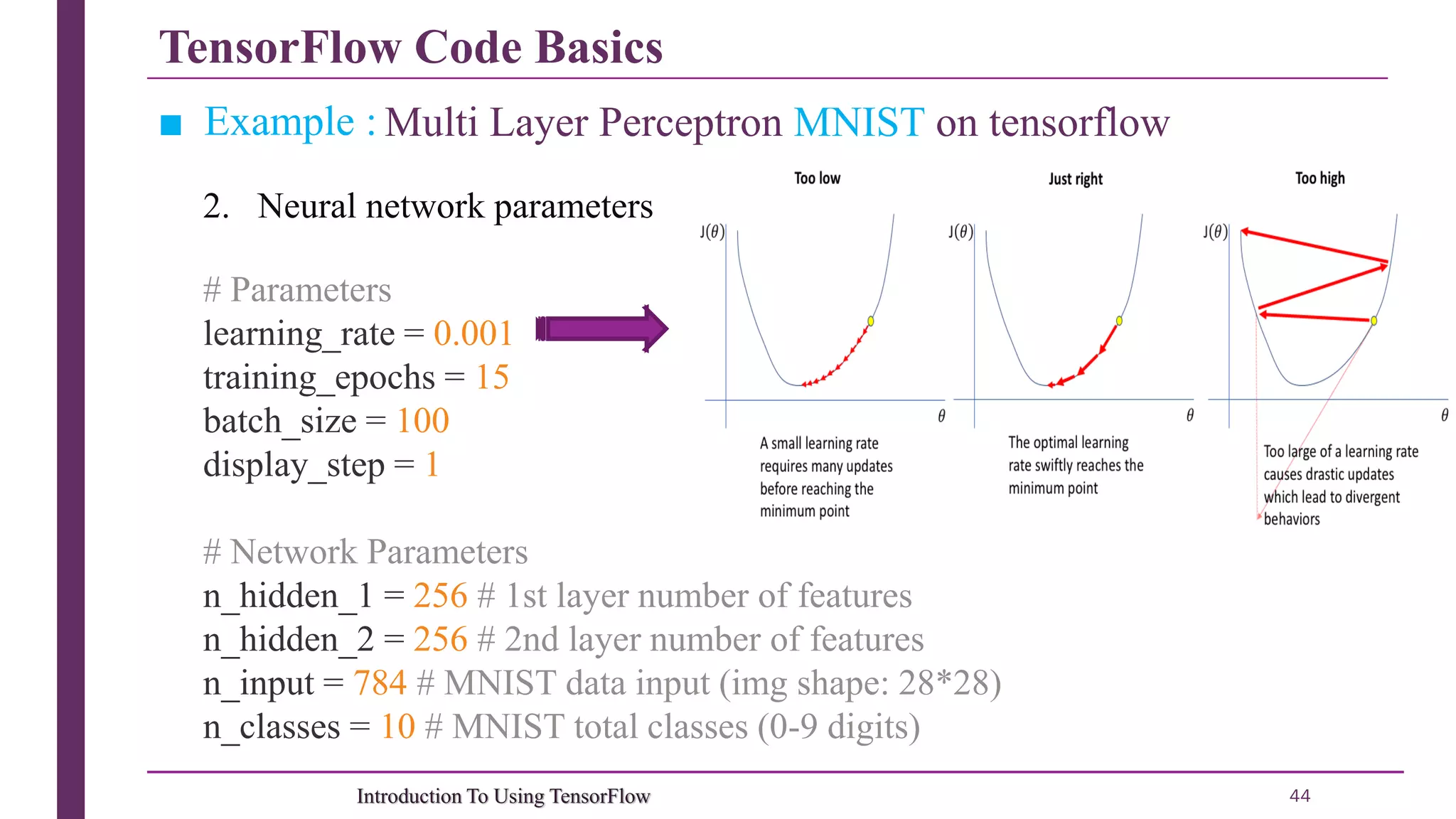

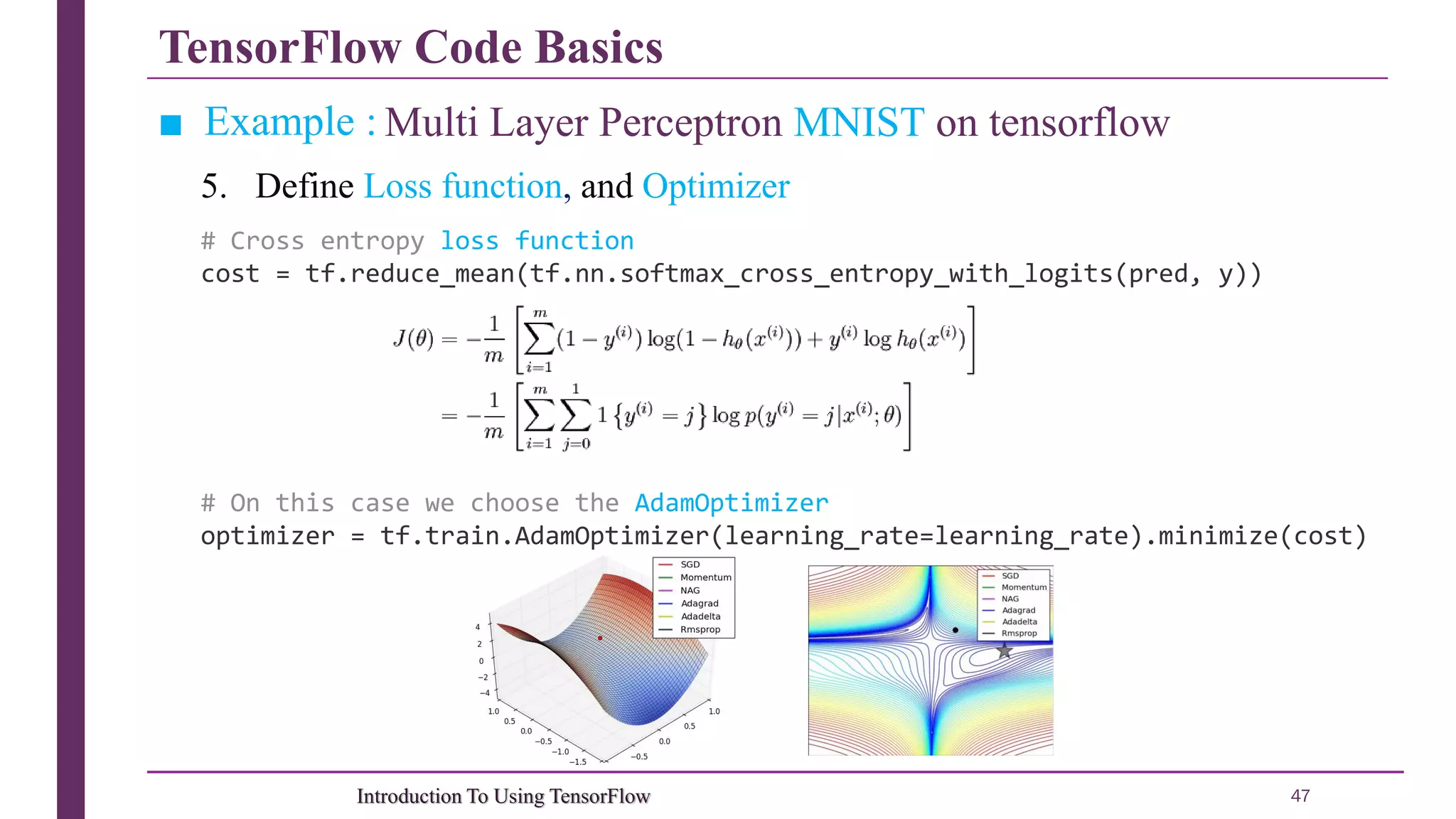

This document is an introduction to using TensorFlow. It begins with an overview of what TensorFlow is, then covers TensorFlow code basics: building a computational graph, running it in a session, and using placeholders, constants, and variables, with a linear regression example. Next it discusses deep learning techniques such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), with examples of CNNs for image classification. It concludes with a multi-layer perceptron (MLP) that classifies MNIST digits in TensorFlow.

![TensorFlow Code Basics, slide 9: building and running a computational graph with placeholders](https://image.slidesharecdn.com/cloudcomputing-presentationcnn1-181231190350/75/Introduction-To-Using-TensorFlow-Deep-Learning-9-2048.jpg)

TensorFlow Code Basics:

- Building and running the computational graph
- Constants, placeholders and variables

```python
# The slides target TensorFlow 1.x; importing compat.v1 and disabling v2
# behavior lets the same graph/session code run under TensorFlow 2.x too.
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

# Creating placeholders: values are supplied only when the graph runs
a = tf.placeholder(tf.float32)
b = tf.placeholder(tf.float32)

# Assigning the element-wise multiplication of a and b to node mul
mul = a * b

# Create a session object
sess = tf.Session()

# Execute mul, feeding [1, 3] for a and [2, 4] for b
output = sess.run(mul, {a: [1, 3], b: [2, 4]})
print('Multiplying a b:', output)
sess.close()
```

Output: `Multiplying a b: [ 2. 12.]`
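The point of this slide is that `mul = a * b` only adds a node to a graph; nothing is multiplied until `sess.run` supplies values for the placeholders. The pure-Python sketch below mimics that build-then-run model so it can be tried without TensorFlow installed. The `Node`, `Placeholder`, `Mul` and `run` names are hypothetical stand-ins for illustration, not TensorFlow's actual implementation.

```python
class Node:
    """Base class for graph nodes: `*` builds a Mul node instead of computing a value."""

    def __mul__(self, other):
        return Mul(self, other)


class Placeholder(Node):
    """A graph input whose value is supplied only at run time, through a feed dict."""


class Mul(Node):
    """A node representing the element-wise product of two input nodes."""

    def __init__(self, left, right):
        self.left = left
        self.right = right


def run(node, feed_dict):
    """Evaluate `node` by recursively evaluating its inputs (hypothetical helper)."""
    if isinstance(node, Placeholder):
        if node not in feed_dict:
            raise ValueError("a placeholder was not fed a value")
        return [float(v) for v in feed_dict[node]]
    if isinstance(node, Mul):
        left = run(node.left, feed_dict)
        right = run(node.right, feed_dict)
        return [x * y for x, y in zip(left, right)]
    raise TypeError(f"unknown node type: {type(node).__name__}")


# Build the graph: no multiplication happens on this line
a = Placeholder()
b = Placeholder()
mul = a * b

# Run the graph, feeding [1, 3] for a and [2, 4] for b
output = run(mul, {a: [1, 3], b: [2, 4]})
print('Multiplying a b:', output)  # Multiplying a b: [2.0, 12.0]
```

Separating graph construction from execution is what lets the same graph be run many times with different feeds, which is exactly how the training loop later in this deck reuses one graph for every mini-batch.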

![Deep Learning, slide 25: example CNN filters learned by Krizhevsky et al.](https://image.slidesharecdn.com/cloudcomputing-presentationcnn1-181231190350/75/Introduction-To-Using-TensorFlow-Deep-Learning-25-2048.jpg)

Convolutional Neural Network (CNN): example filters learned by Krizhevsky et al. Each of the 96 filters shown is of size 11x11x3, and each one is shared by the 55x55 neurons in one depth slice.
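The 55x55 figure follows from the convolution output-size formula (W - F + 2P) / S + 1, and the benefit of sharing each filter across a depth slice shows up in the parameter count. The sketch below assumes a 227x227x3 input, stride 4 and no padding, a setting commonly used to describe AlexNet's first layer; those three values are assumptions, not stated on the slide. Under them, (227 - 11) / 4 + 1 = 55, and sharing cuts the parameter count by a factor of 55 x 55 = 3,025. The function names are hypothetical.

```python
def conv_output_size(input_size, filter_size, stride, padding):
    """Spatial output size of a convolution: (W - F + 2P) / S + 1."""
    span = input_size - filter_size + 2 * padding
    if span % stride != 0:
        raise ValueError("filter does not tile the input evenly with this stride")
    return span // stride + 1


def conv_param_counts(out_size, num_filters, filter_h, filter_w, in_depth):
    """Return (params with sharing, params without sharing); each filter has one bias."""
    per_filter = filter_h * filter_w * in_depth + 1
    shared = num_filters * per_filter
    # Without sharing, every output neuron would own its own weights and bias
    unshared = out_size * out_size * num_filters * per_filter
    return shared, unshared


# Assumed setting: 227x227x3 input, 96 filters of 11x11x3, stride 4, no padding
out = conv_output_size(227, 11, stride=4, padding=0)
shared, unshared = conv_param_counts(out, 96, 11, 11, 3)
print(out)       # 55
print(shared)    # 34944
print(unshared)  # 105705600
```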

![TensorFlow Code Basics, slide 45: multi-layer perceptron for MNIST, building the graph](https://image.slidesharecdn.com/cloudcomputing-presentationcnn1-181231190350/75/Introduction-To-Using-TensorFlow-Deep-Learning-45-2048.jpg)

Example: Multi-Layer Perceptron for MNIST in TensorFlow. Step 3: build the graph.

```python
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

# Layer sizes: 28x28 = 784 input pixels, two hidden layers of 256 units, 10 digit classes
n_input, n_hidden_1, n_hidden_2, n_classes = 784, 256, 256, 10

# Placeholders for a batch of flattened images and their one-hot labels
x = tf.placeholder(tf.float32, [None, n_input])
y = tf.placeholder(tf.float32, [None, n_classes])

# Create model: two ReLU hidden layers followed by a linear output layer
def multilayer_perceptron(x, weights, biases):
    print('x:', x.get_shape(), 'W1:', weights['h1'].get_shape(), 'b1:', biases['b1'].get_shape())
    layer_1 = tf.add(tf.matmul(x, weights['h1']), biases['b1'])
    layer_1 = tf.nn.relu(layer_1)
    print('layer_1:', layer_1.get_shape(), 'W2:', weights['h2'].get_shape(), 'b2:', biases['b2'].get_shape())
    layer_2 = tf.add(tf.matmul(layer_1, weights['h2']), biases['b2'])
    layer_2 = tf.nn.relu(layer_2)
    print('layer_2:', layer_2.get_shape(), 'W3:', weights['out'].get_shape(), 'b3:', biases['out'].get_shape())
    # Output layer: raw class scores (logits), no activation
    out_layer = tf.matmul(layer_2, weights['out']) + biases['out']
    print('out_layer:', out_layer.get_shape())
    return out_layer
```
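`multilayer_perceptron` computes relu(relu(x W1 + b1) W2 + b2) W3 + b3: ReLU on the two hidden layers and a linear output. The pure-Python sketch below traces that forward pass for a single input vector, using tiny made-up weights (2 inputs, 3 and 2 hidden units, 2 classes) instead of the 784-256-256-10 sizes, so the arithmetic can be checked by hand. The `relu` and `dense` helpers are hypothetical.

```python
def relu(v):
    """Element-wise max(0, x)."""
    return [max(0.0, x) for x in v]


def dense(x, W, b):
    """Compute x @ W + b for a row vector x (length n) and W with n rows and len(b) columns."""
    if len(x) != len(W):
        raise ValueError("input length must equal the number of rows in W")
    return [sum(x[i] * W[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def multilayer_perceptron(x, weights, biases):
    layer_1 = relu(dense(x, weights['h1'], biases['b1']))
    layer_2 = relu(dense(layer_1, weights['h2'], biases['b2']))
    # Output layer is linear: raw class scores (logits), no ReLU
    return dense(layer_2, weights['out'], biases['out'])


# Illustrative toy values only: 2 inputs -> 3 hidden -> 2 hidden -> 2 classes
weights = {
    'h1': [[1.0, -1.0, 0.5],
           [0.0, 2.0, -1.0]],
    'h2': [[1.0, 0.0],
           [0.5, 1.0],
           [-1.0, 1.0]],
    'out': [[1.0, -1.0],
            [2.0, 0.0]],
}
biases = {'b1': [0.0, 0.0, 0.0], 'b2': [0.0, -1.0], 'out': [0.5, 0.25]}

print(multilayer_perceptron([1.0, 1.0], weights, biases))  # [2.0, -1.25]
```

Here the first hidden layer's third unit has pre-activation -0.5, which ReLU clips to 0, so it contributes nothing downstream; that clipping is the only non-linearity in the network.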

![TensorFlow Code Basics, slide 46: multi-layer perceptron for MNIST, initializing weights and constructing the model](https://image.slidesharecdn.com/cloudcomputing-presentationcnn1-181231190350/75/Introduction-To-Using-TensorFlow-Deep-Learning-46-2048.jpg)

Example: Multi-Layer Perceptron for MNIST in TensorFlow. Step 4: initialize the weights and construct the model.

```python
# Store each layer's weights and biases, drawn from a standard normal distribution
weights = {
    'h1': tf.Variable(tf.random_normal([n_input, n_hidden_1])),     # 784x256
    'h2': tf.Variable(tf.random_normal([n_hidden_1, n_hidden_2])),  # 256x256
    'out': tf.Variable(tf.random_normal([n_hidden_2, n_classes])),  # 256x10
}
biases = {
    'b1': tf.Variable(tf.random_normal([n_hidden_1])),  # vector of 256
    'b2': tf.Variable(tf.random_normal([n_hidden_2])),  # vector of 256
    'out': tf.Variable(tf.random_normal([n_classes])),  # vector of 10
}

# Construct model
pred = multilayer_perceptron(x, weights, biases)
```
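The shape comments on this slide determine how many trainable values the network has: a layer mapping n inputs to m outputs contributes an n x m weight matrix plus m biases. A short sketch of that arithmetic for the 784-256-256-10 layout follows; `mlp_param_count` is a hypothetical helper name.

```python
def mlp_param_count(layer_sizes):
    """Count weights and biases of a fully connected network.

    layer_sizes lists units per layer, input first, e.g. [784, 256, 256, 10].
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix plus one bias per output unit
    return total


# (784*256 + 256) + (256*256 + 256) + (256*10 + 10)
print(mlp_param_count([784, 256, 256, 10]))  # 269322
```

Roughly three quarters of those parameters (200,960) sit in the first layer, because it connects every one of the 784 pixels to every hidden unit.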

![TensorFlow Code Basics, slide 48: multi-layer perceptron for MNIST, launching the graph](https://image.slidesharecdn.com/cloudcomputing-presentationcnn1-181231190350/75/Introduction-To-Using-TensorFlow-Deep-Learning-48-2048.jpg)

Example: Multi-Layer Perceptron for MNIST in TensorFlow. Step 6.1: launch the graph.

```python
# mnist, optimizer, cost, training_epochs and batch_size are defined in
# earlier steps of this example that are not shown here.

# Initializing the variables (global_variables_initializer replaces the
# deprecated tf.initialize_all_variables)
init = tf.global_variables_initializer()

# Launch the graph
with tf.Session() as sess:
    sess.run(init)

    # Training cycle
    for epoch in range(training_epochs):
        avg_cost = 0.
        total_batch = int(mnist.train.num_examples / batch_size)
        # Loop over all batches
        for i in range(total_batch):
            batch_x, batch_y = mnist.train.next_batch(batch_size)
            # Run optimization op (backprop) and cost op (to get loss value)
            _, c = sess.run([optimizer, cost], feed_dict={x: batch_x, y: batch_y})
            # Compute average loss
            avg_cost += c / total_batch
```
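The loop structure on this slide (an outer loop over epochs, an inner loop over `total_batch` mini-batches, and a running `avg_cost += c / total_batch`) does not depend on TensorFlow. The pure-Python sketch below reproduces the same pattern, assuming a one-variable linear model y = w*x + b trained by mean-squared-error gradient descent on synthetic, noise-free data; the data, learning rate, epoch count and `train_linear` name are all hypothetical. As on the slide, the integer division drops any leftover examples that do not fill a whole batch.

```python
def train_linear(xs, ys, training_epochs, batch_size, learning_rate):
    """Mini-batch gradient descent on y = w*x + b; returns (w, b, per-epoch avg costs)."""
    w, b = 0.0, 0.0
    history = []
    total_batch = len(xs) // batch_size  # leftover examples are skipped
    for epoch in range(training_epochs):
        avg_cost = 0.0
        for i in range(total_batch):
            start = i * batch_size
            batch_x = xs[start:start + batch_size]
            batch_y = ys[start:start + batch_size]
            # Forward pass and mean squared error for this batch
            errors = [w * x + b - y for x, y in zip(batch_x, batch_y)]
            c = sum(e * e for e in errors) / batch_size
            # Gradients of the MSE with respect to w and b, then one descent step
            grad_w = 2.0 * sum(e * x for e, x in zip(errors, batch_x)) / batch_size
            grad_b = 2.0 * sum(errors) / batch_size
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
            # Accumulate this batch's share of the epoch's average loss
            avg_cost += c / total_batch
        history.append(avg_cost)
    return w, b, history


# Synthetic data from y = 2x + 1 with x in [0, 1)
xs = [i / 100 for i in range(100)]
ys = [2.0 * x + 1.0 for x in xs]
w, b, history = train_linear(xs, ys, training_epochs=1000, batch_size=10, learning_rate=0.2)
print(round(w, 3), round(b, 3))
```

Because each batch's cost is divided by `total_batch` before being added, `avg_cost` ends each epoch as the mean of the per-batch losses, which is the number the slide's loop reports per epoch. With this noise-free data, w and b should end up very close to 2 and 1.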