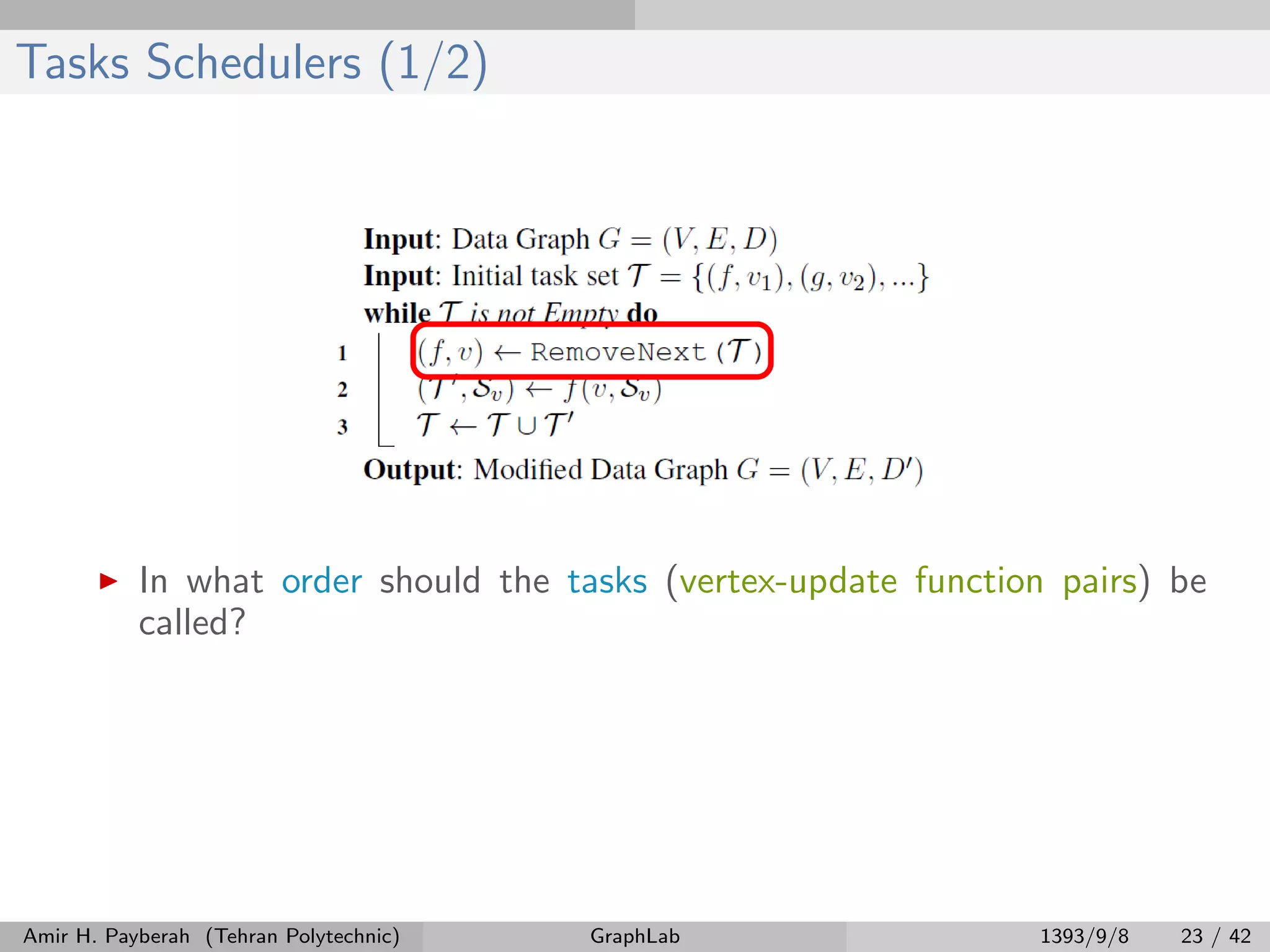

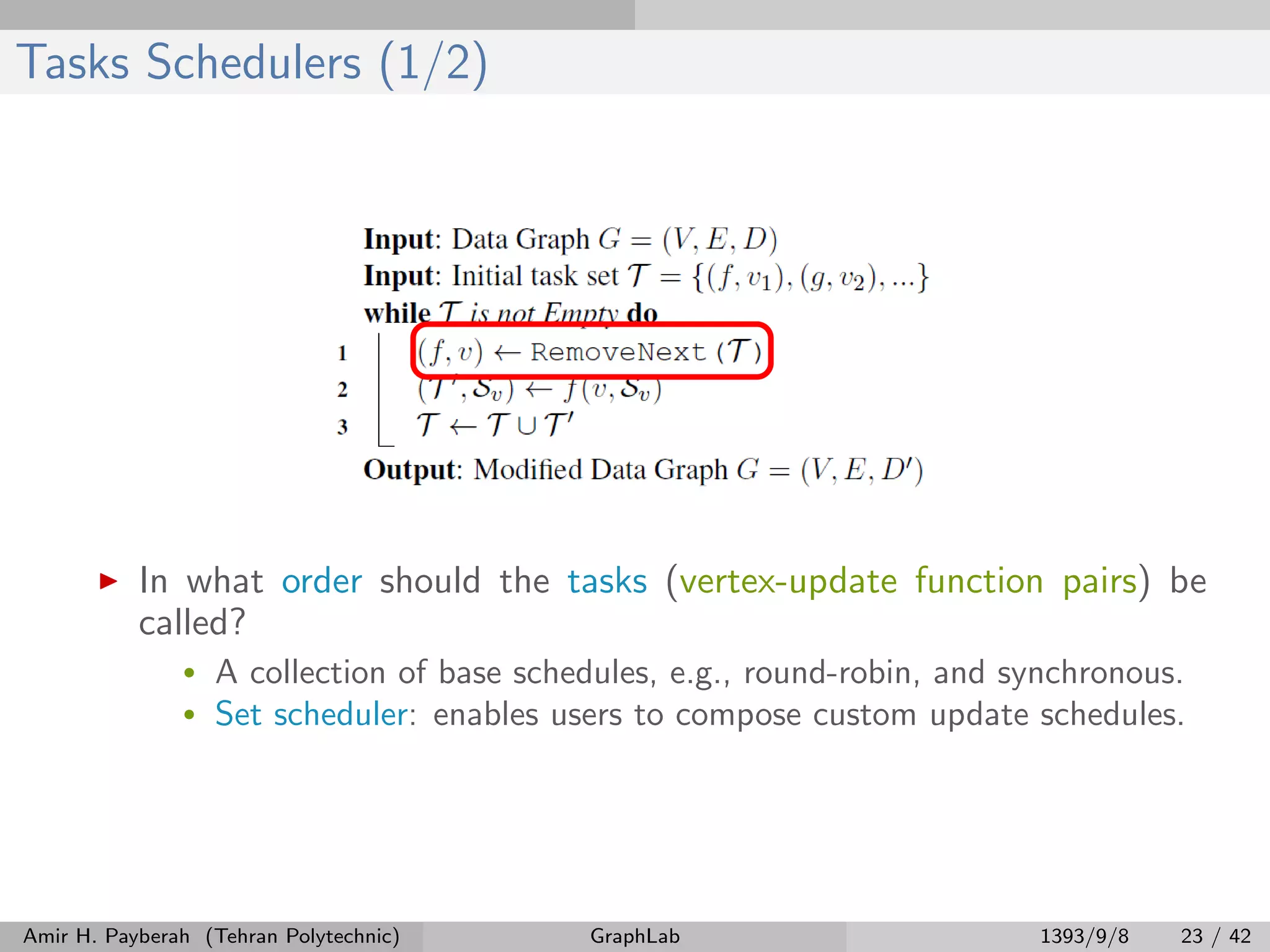

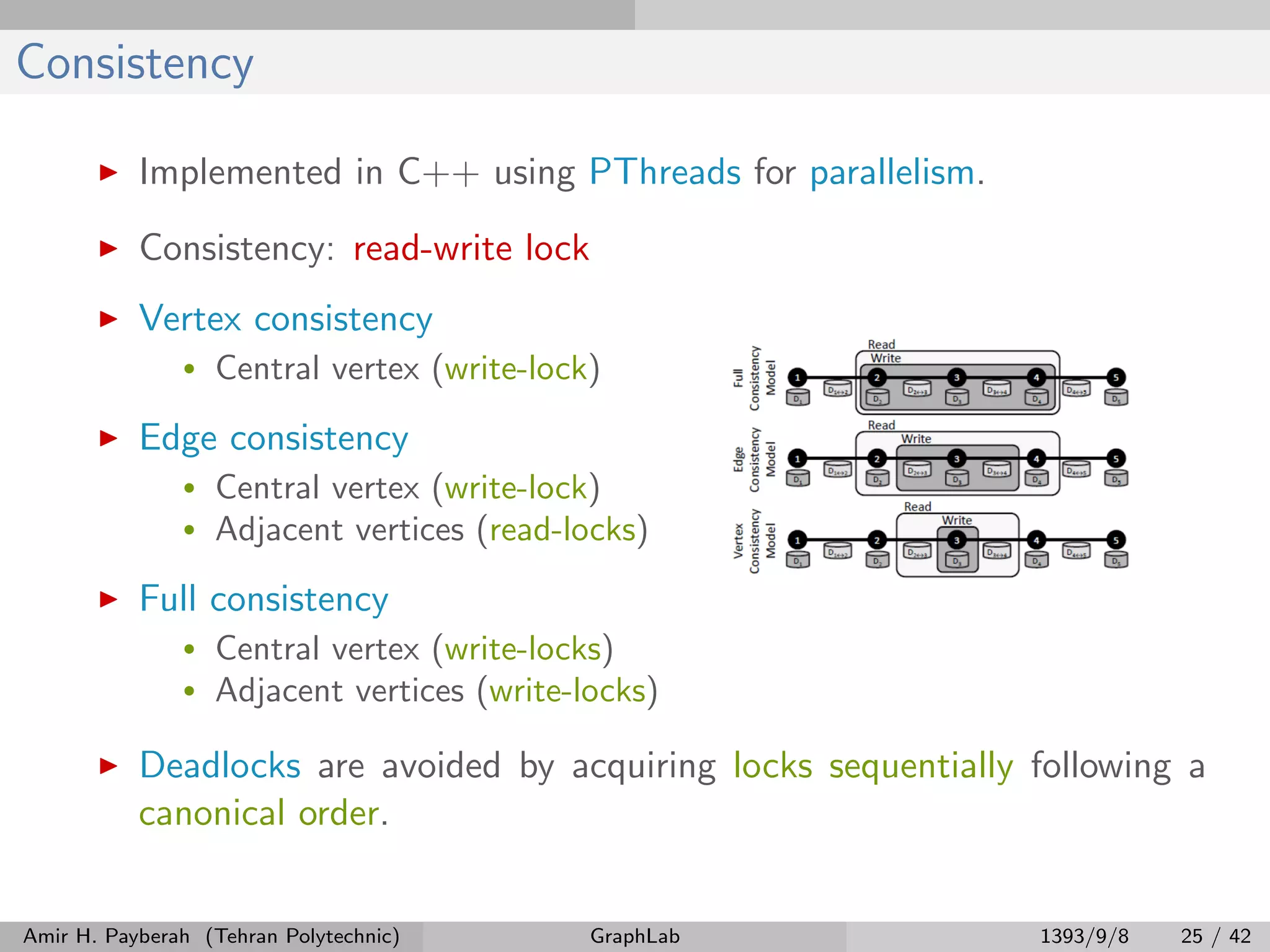

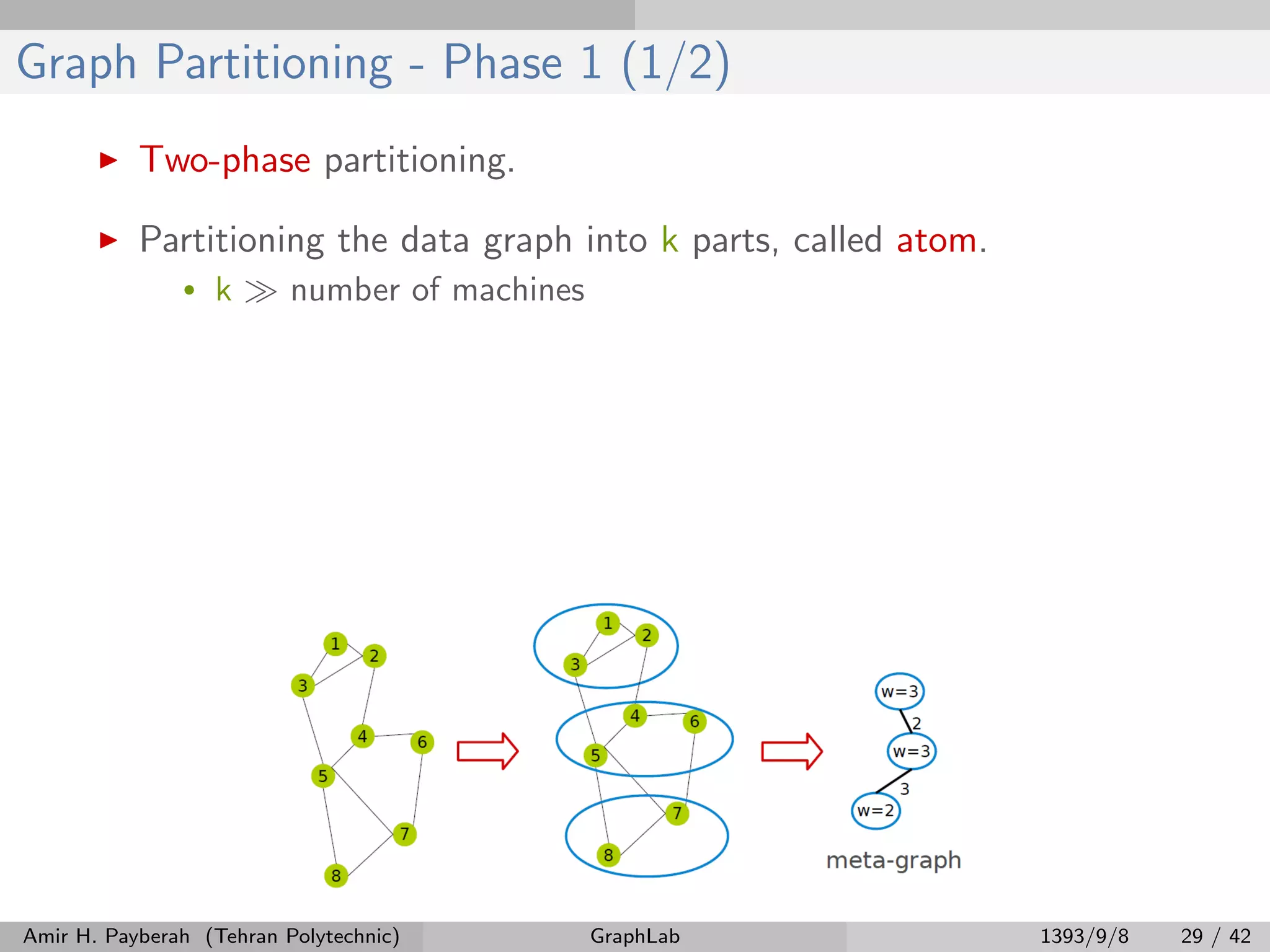

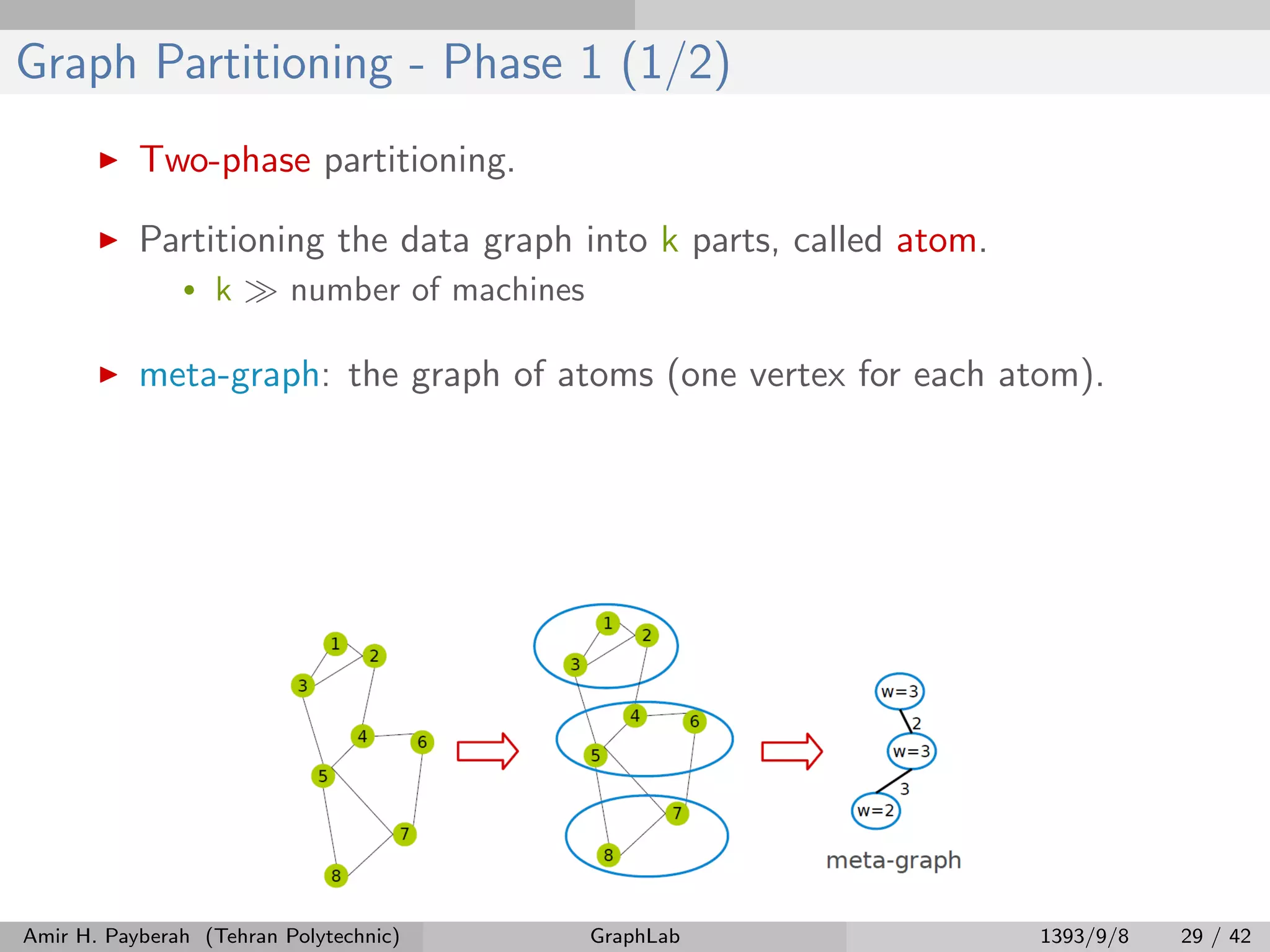

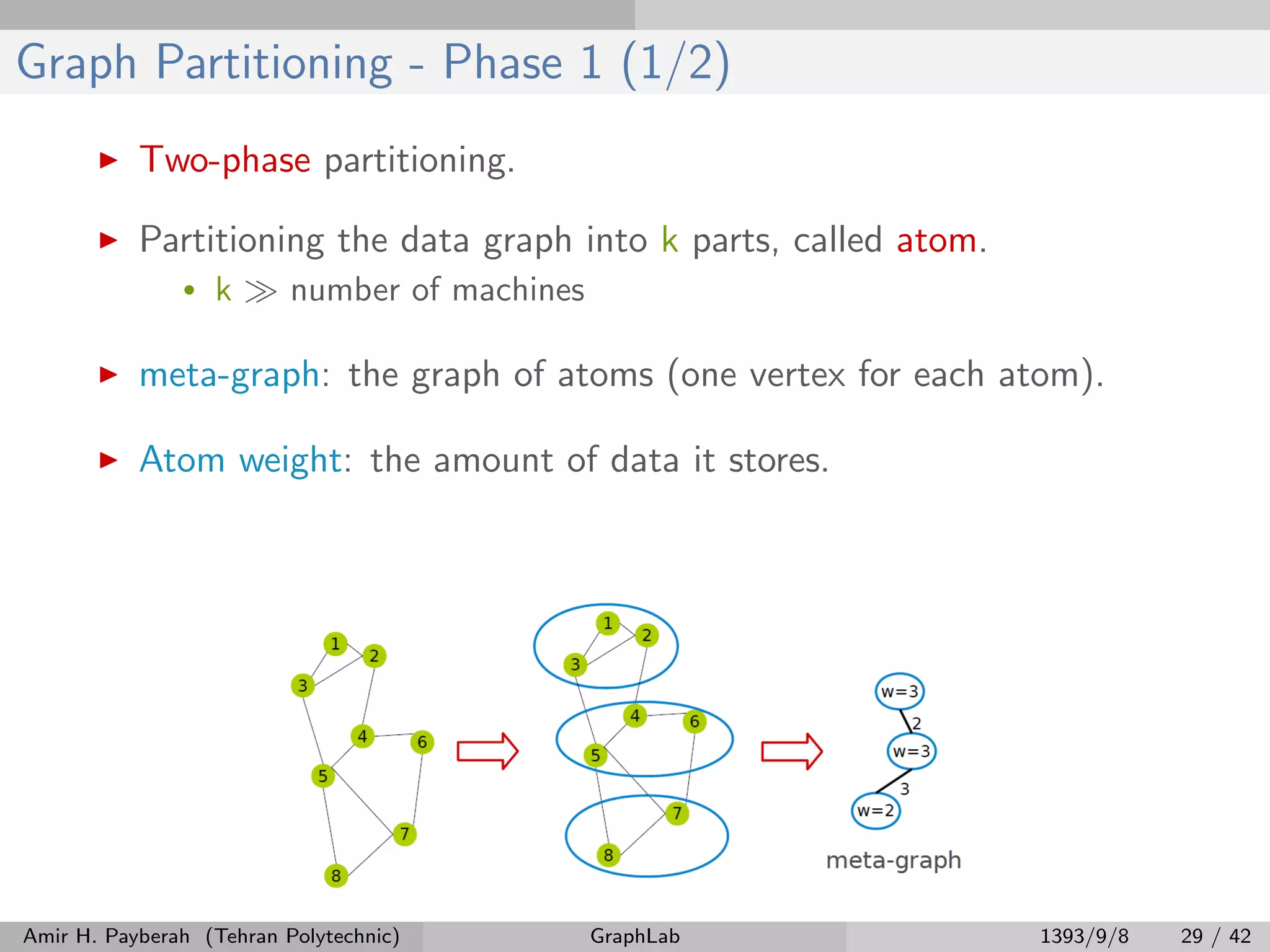

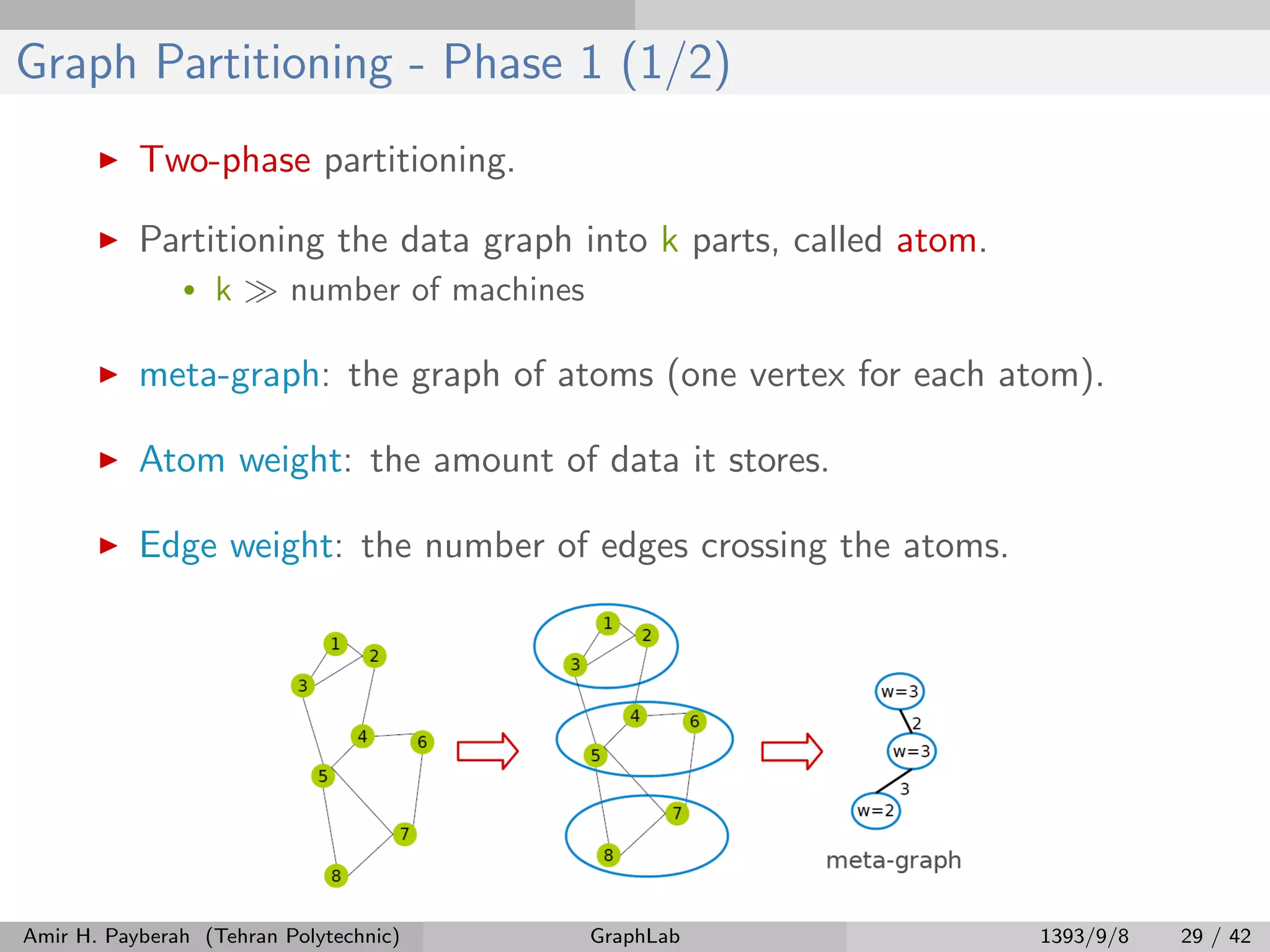

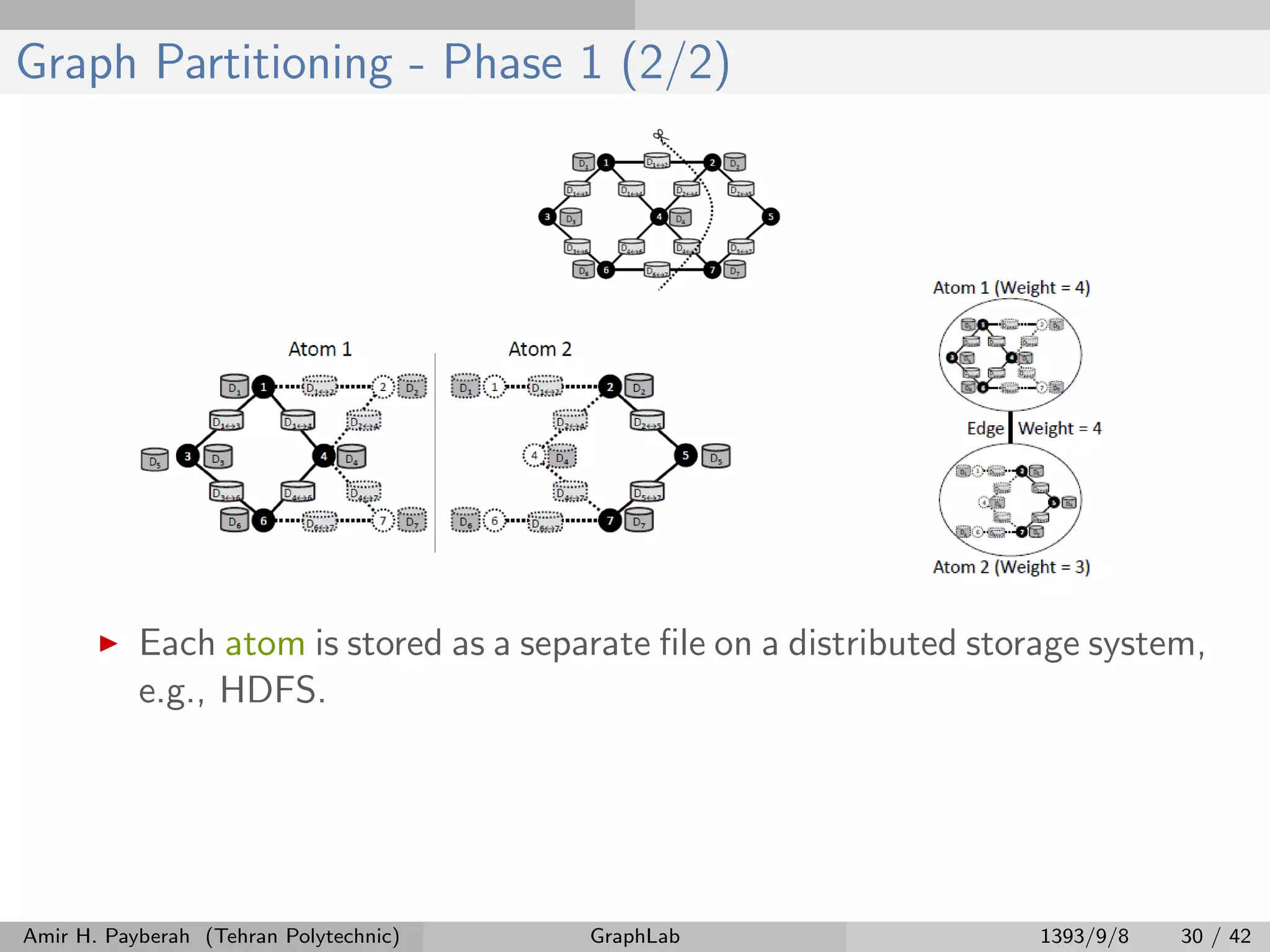

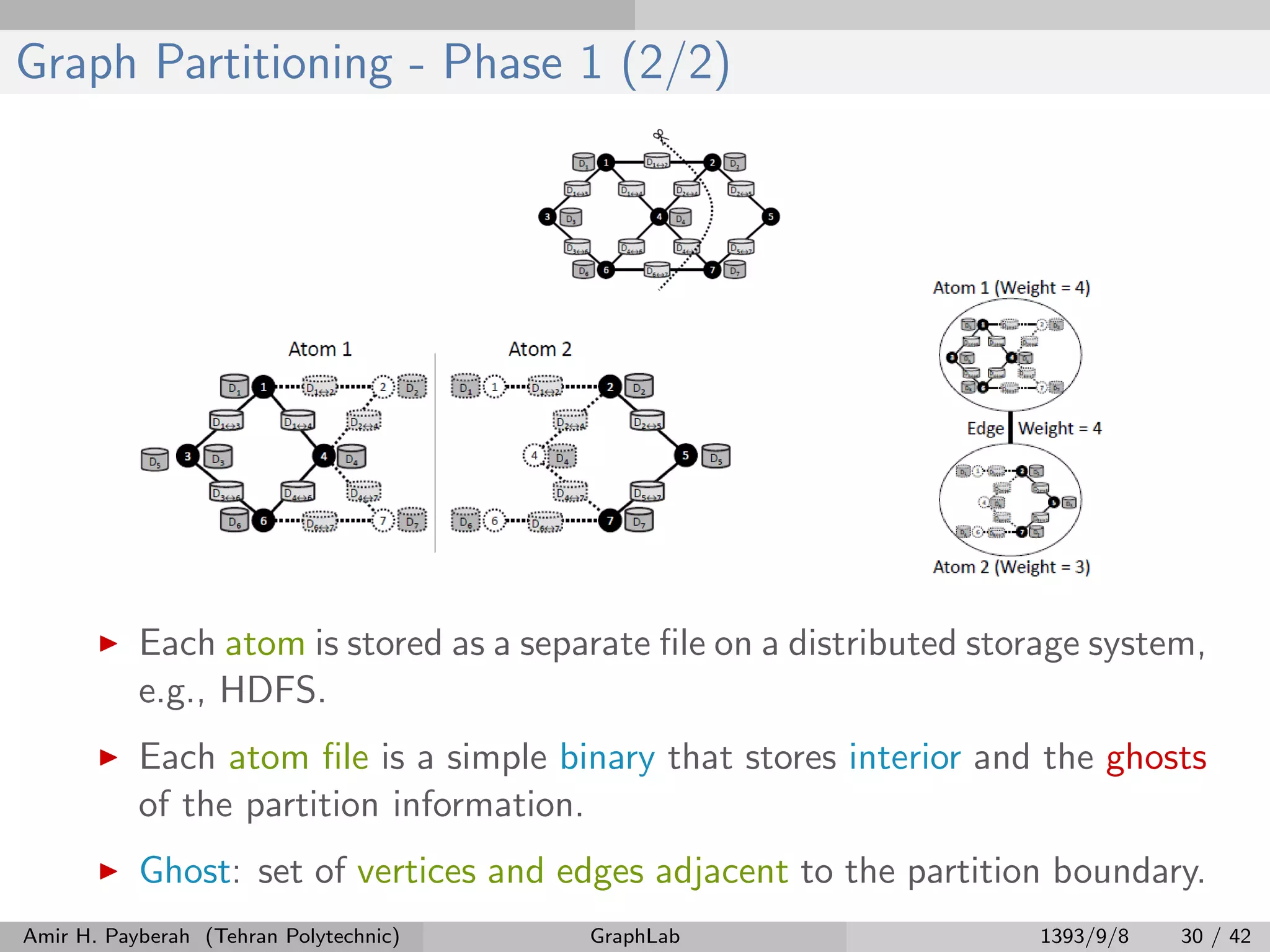

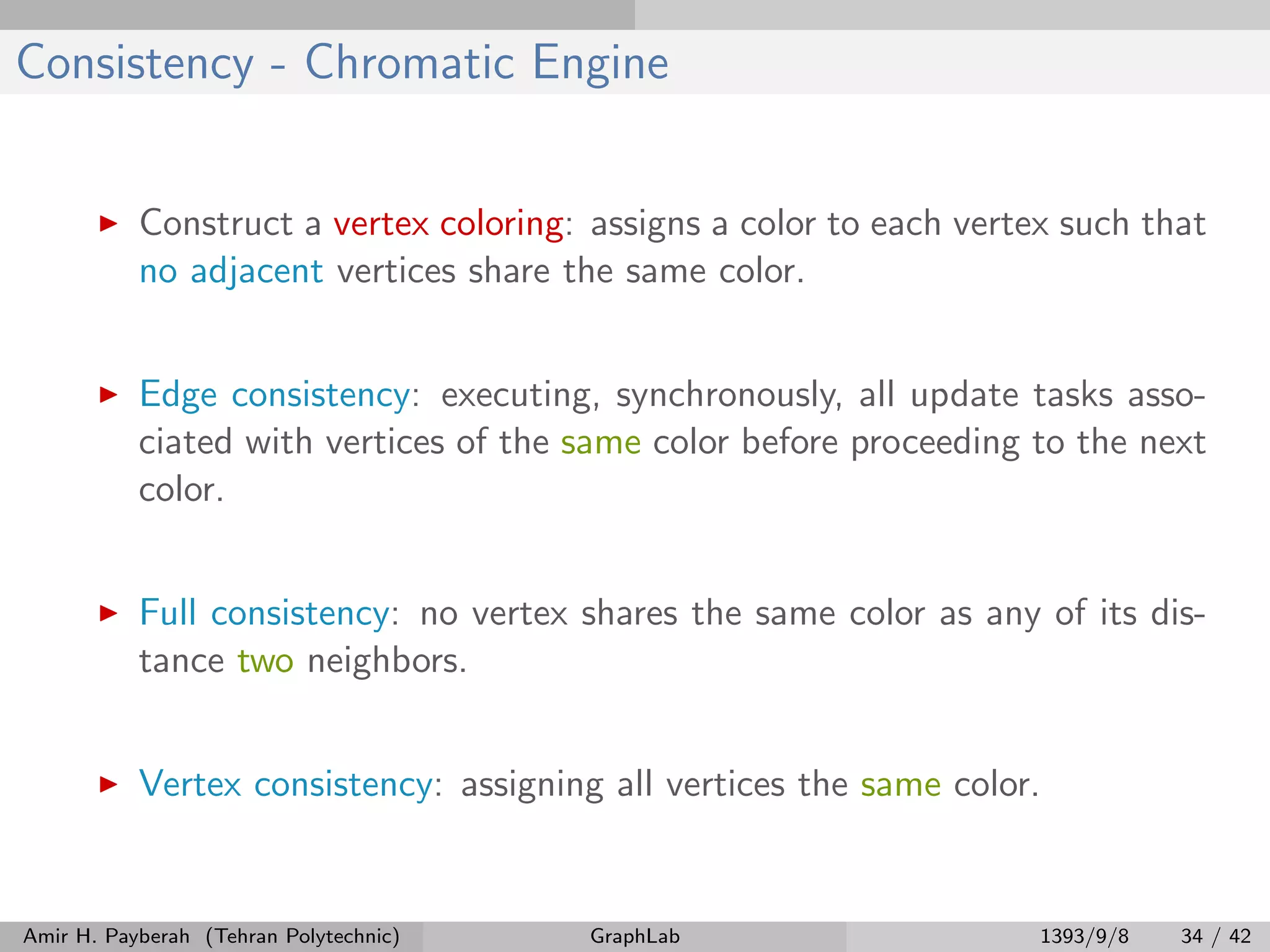

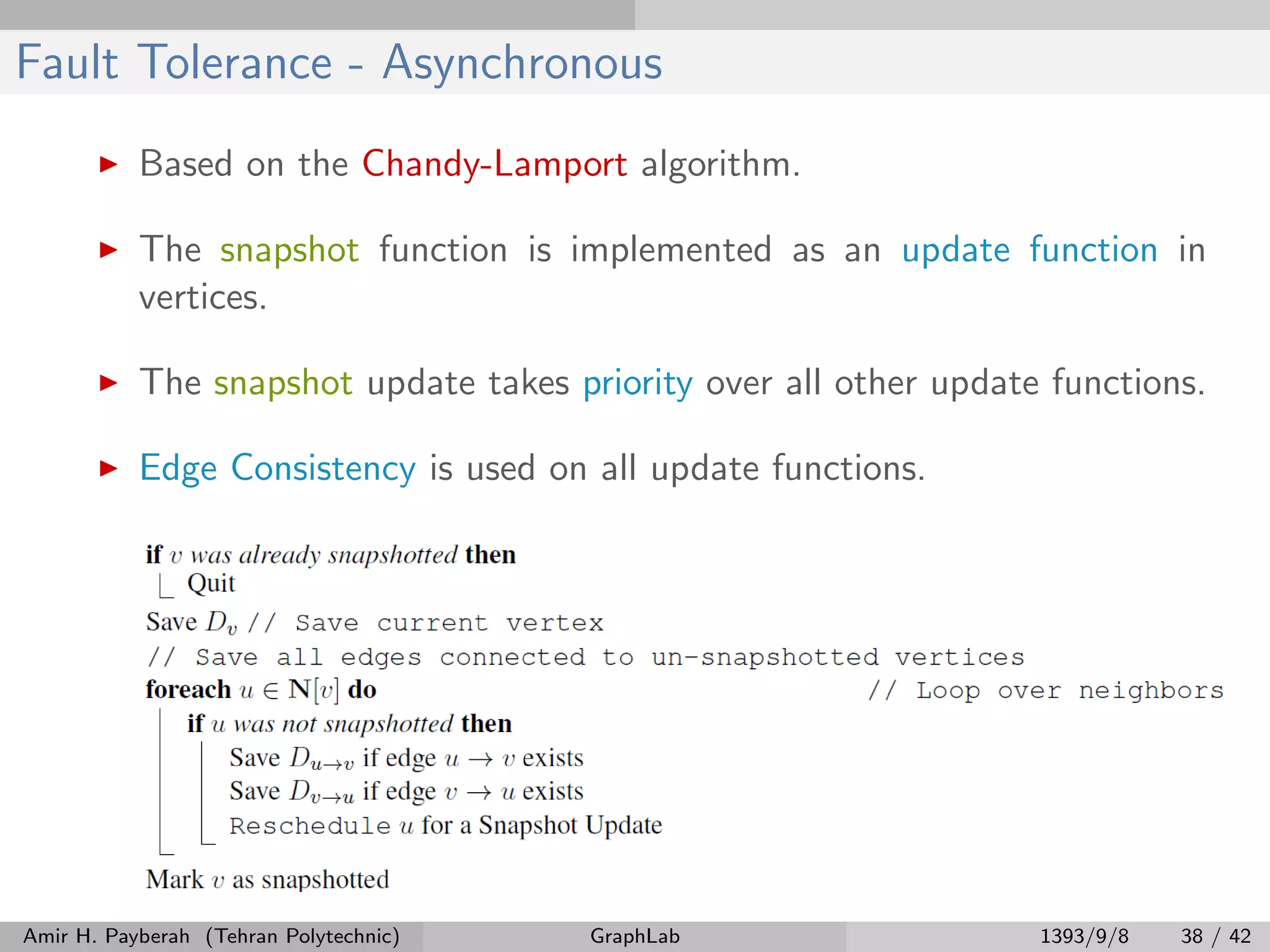

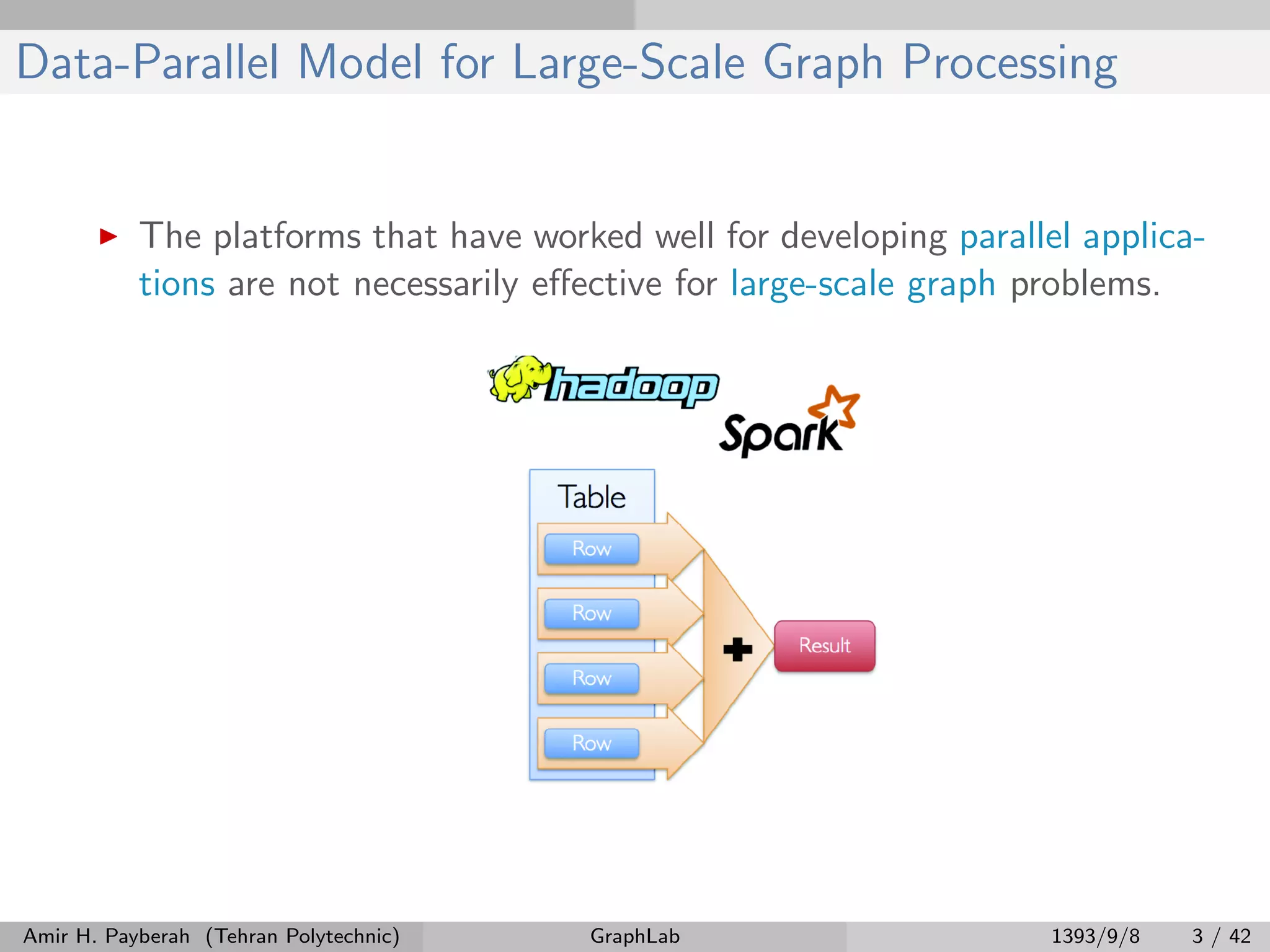

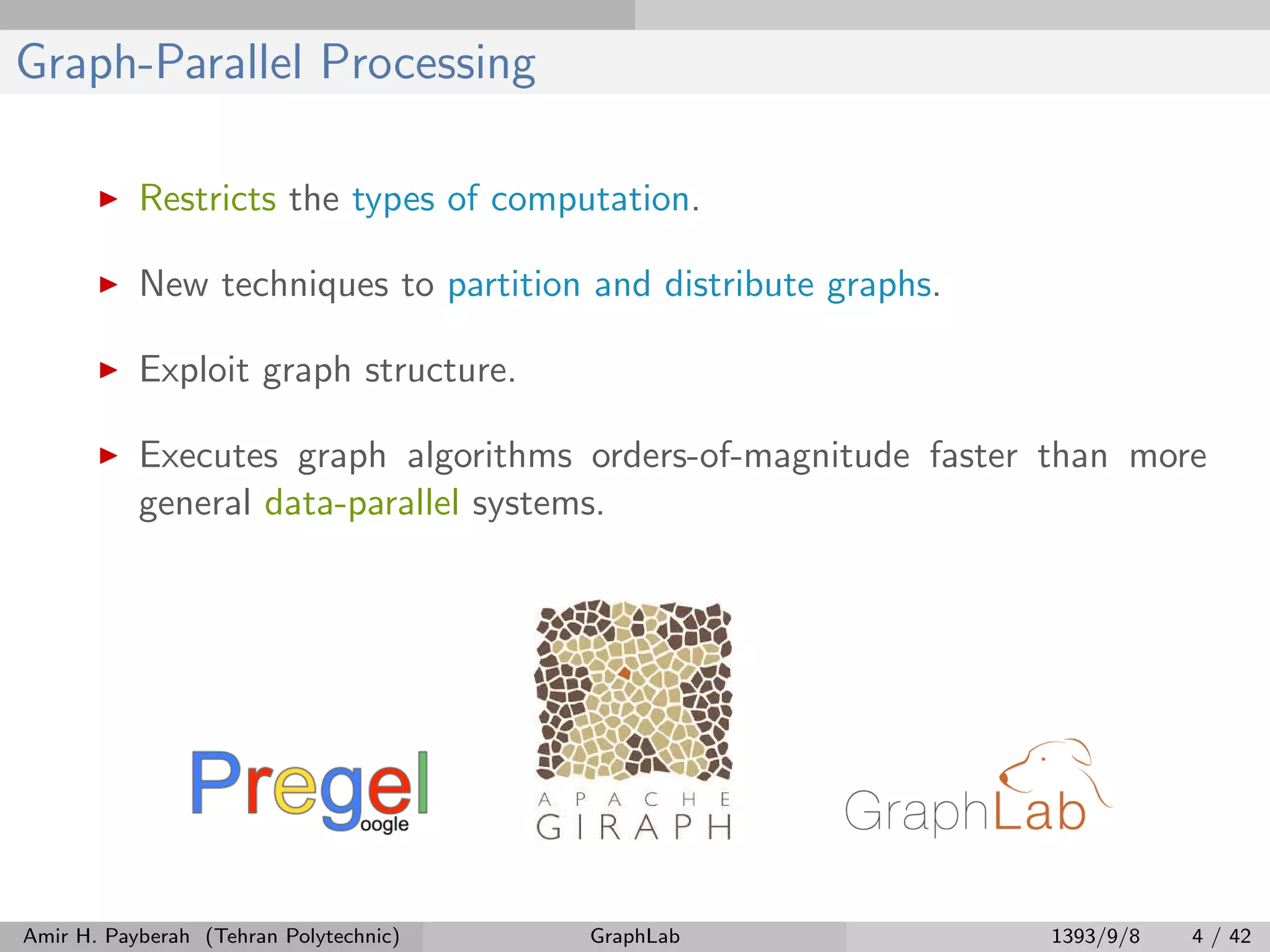

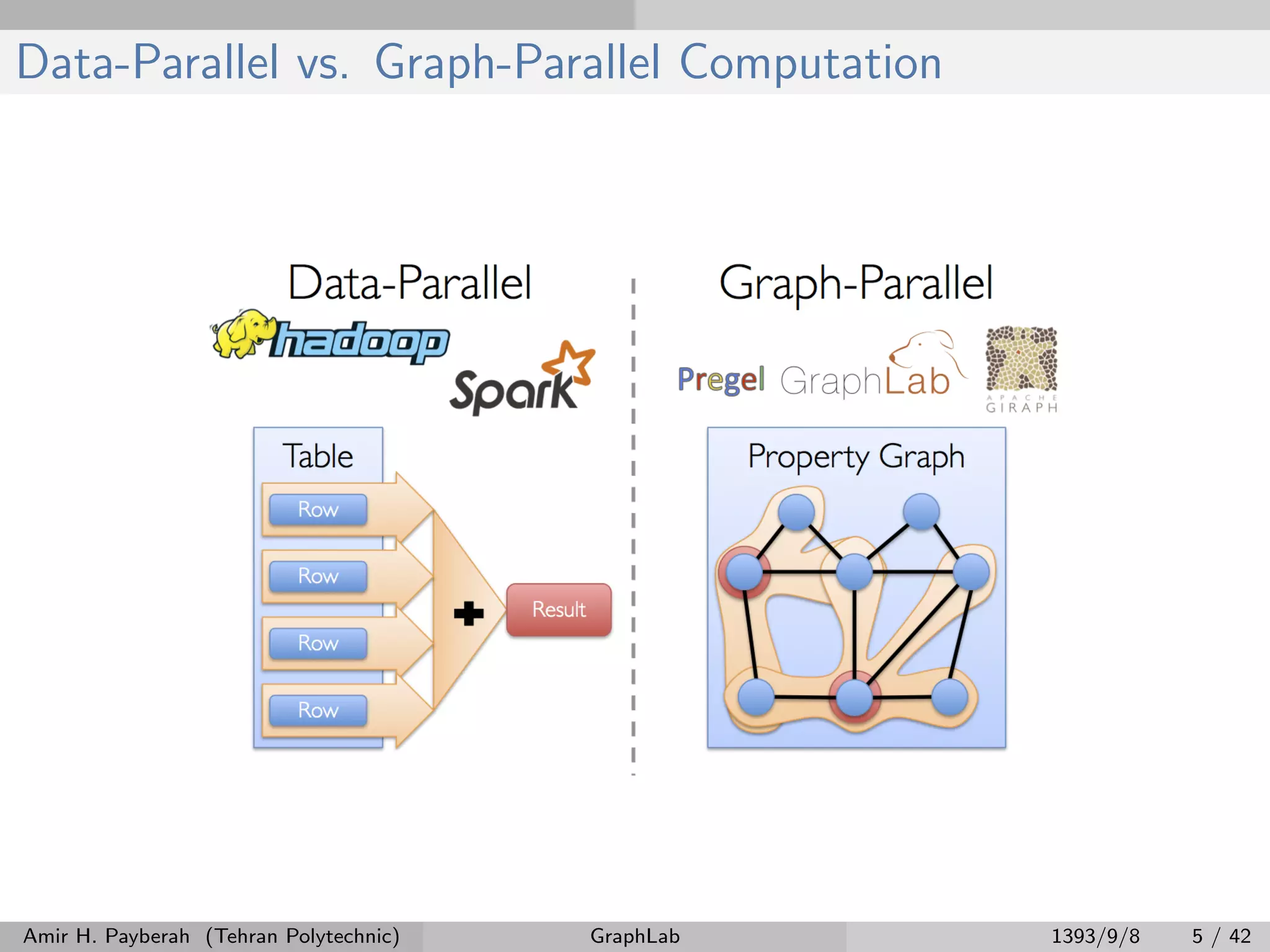

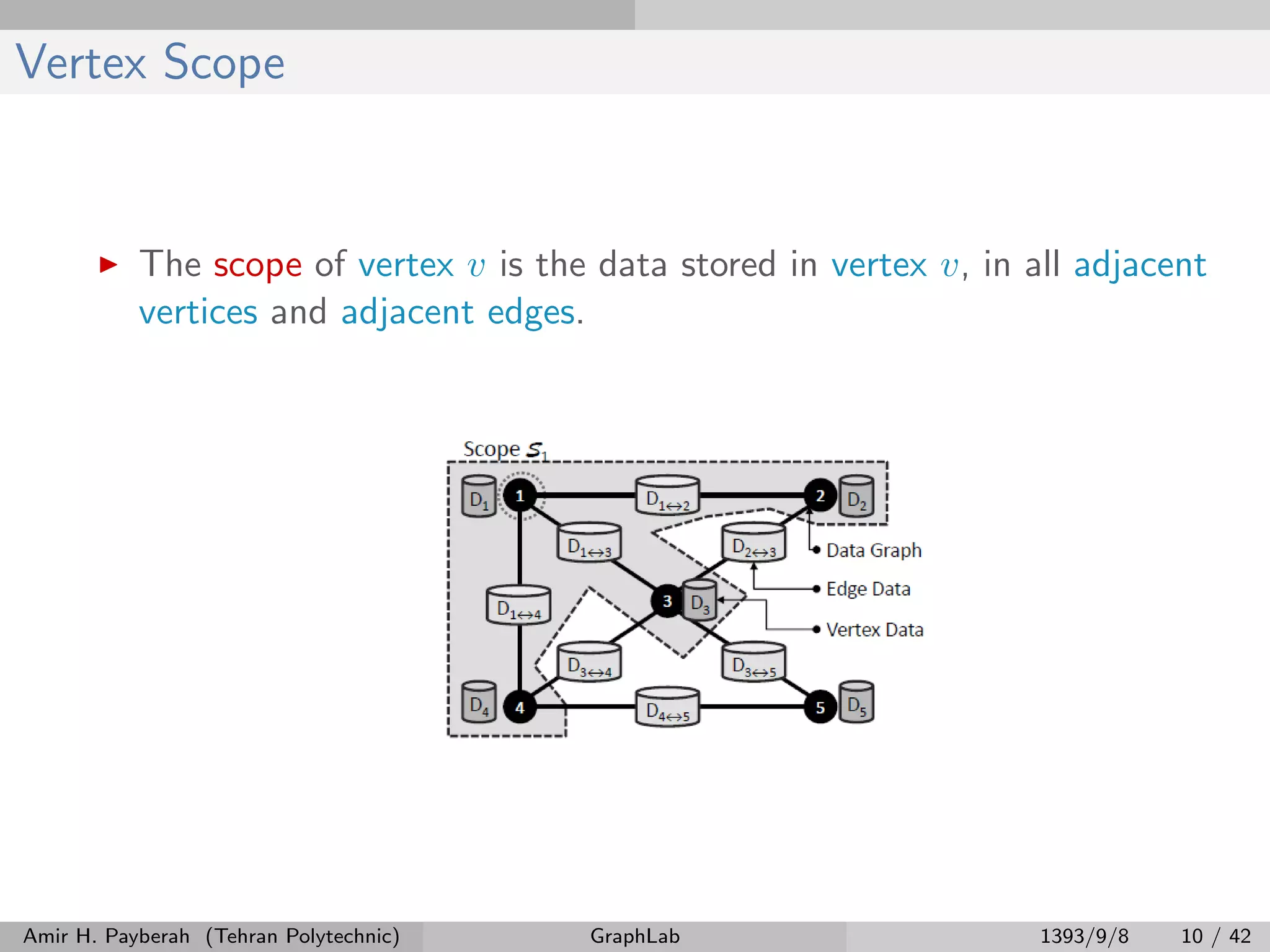

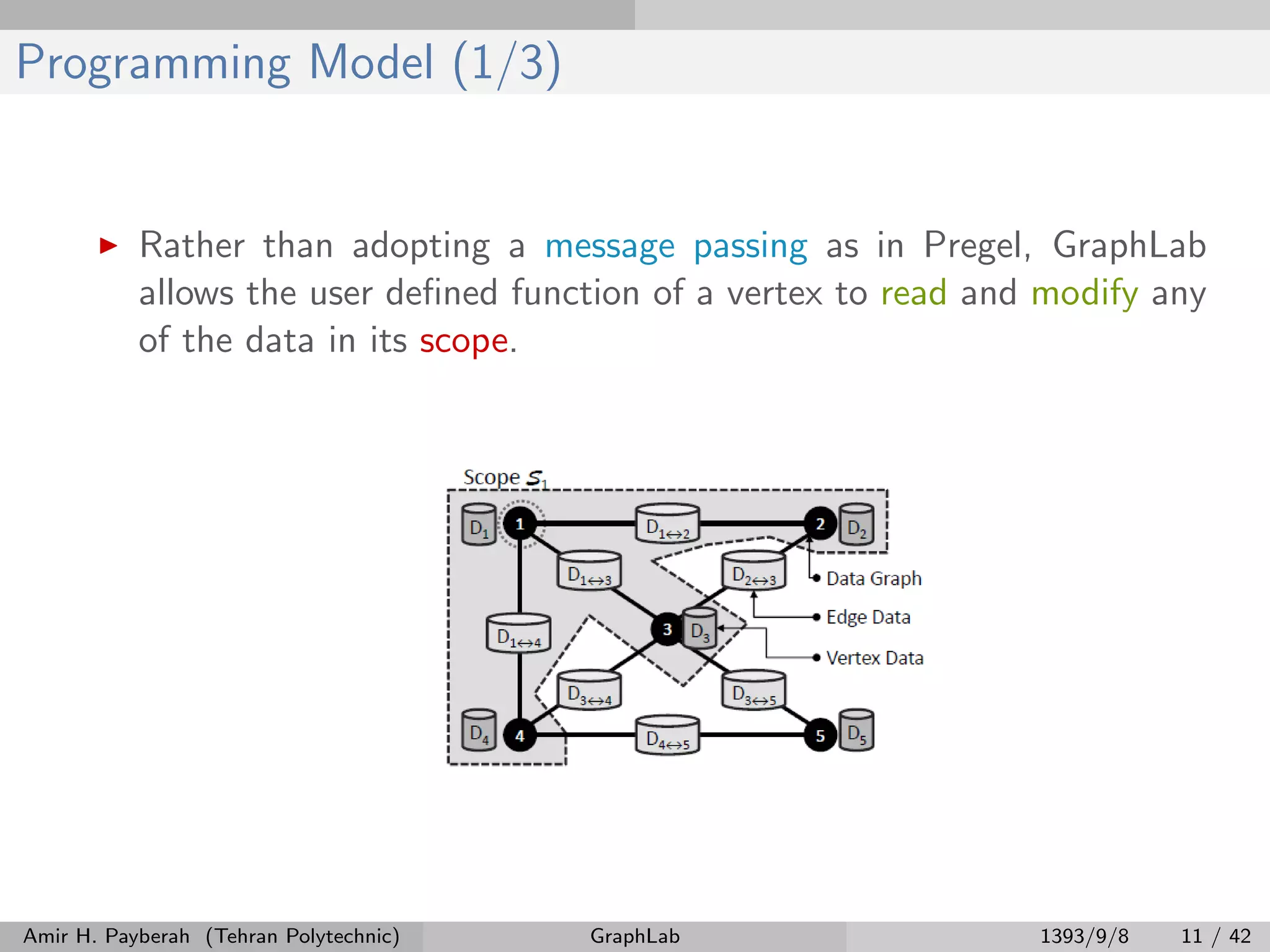

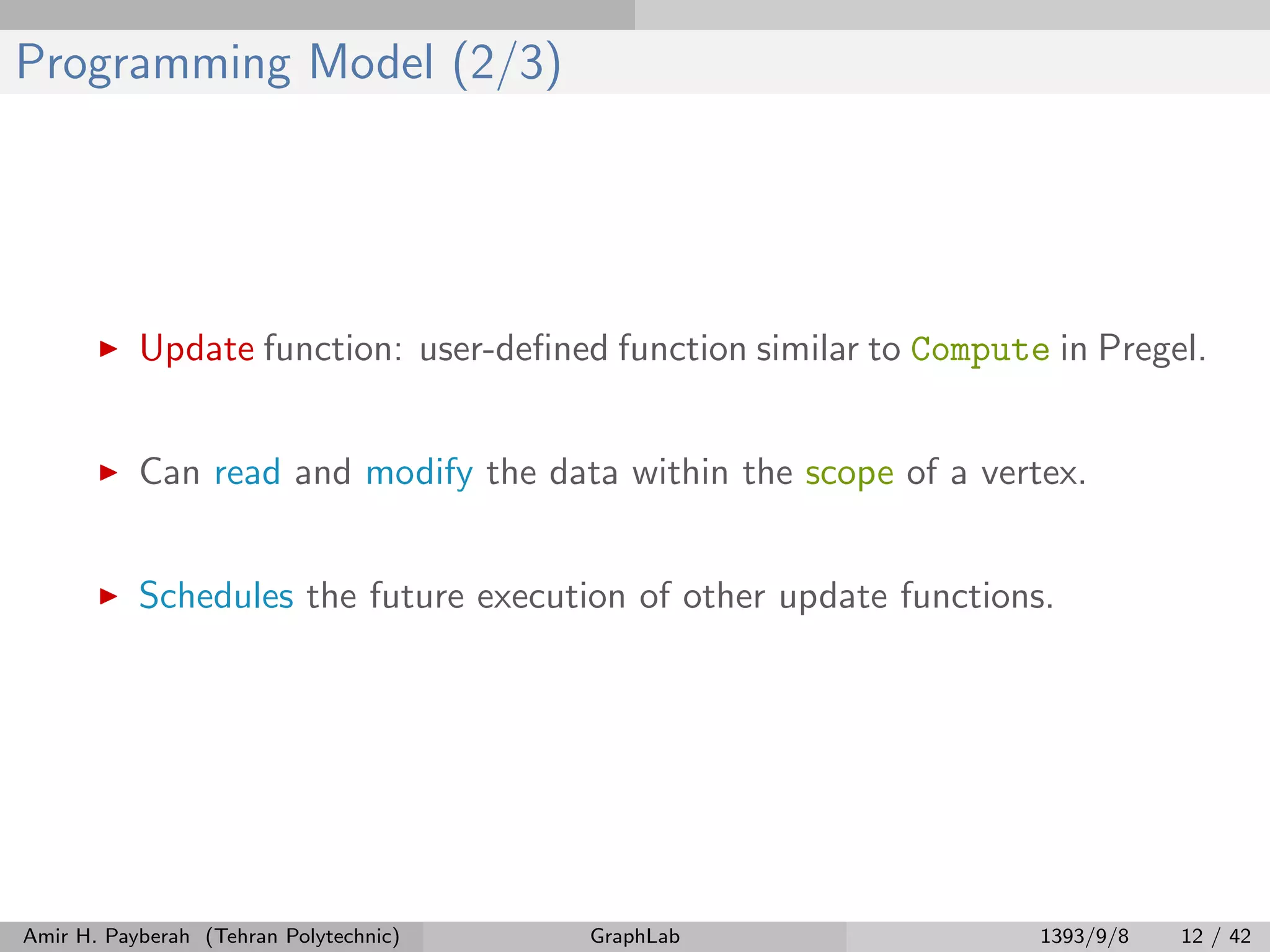

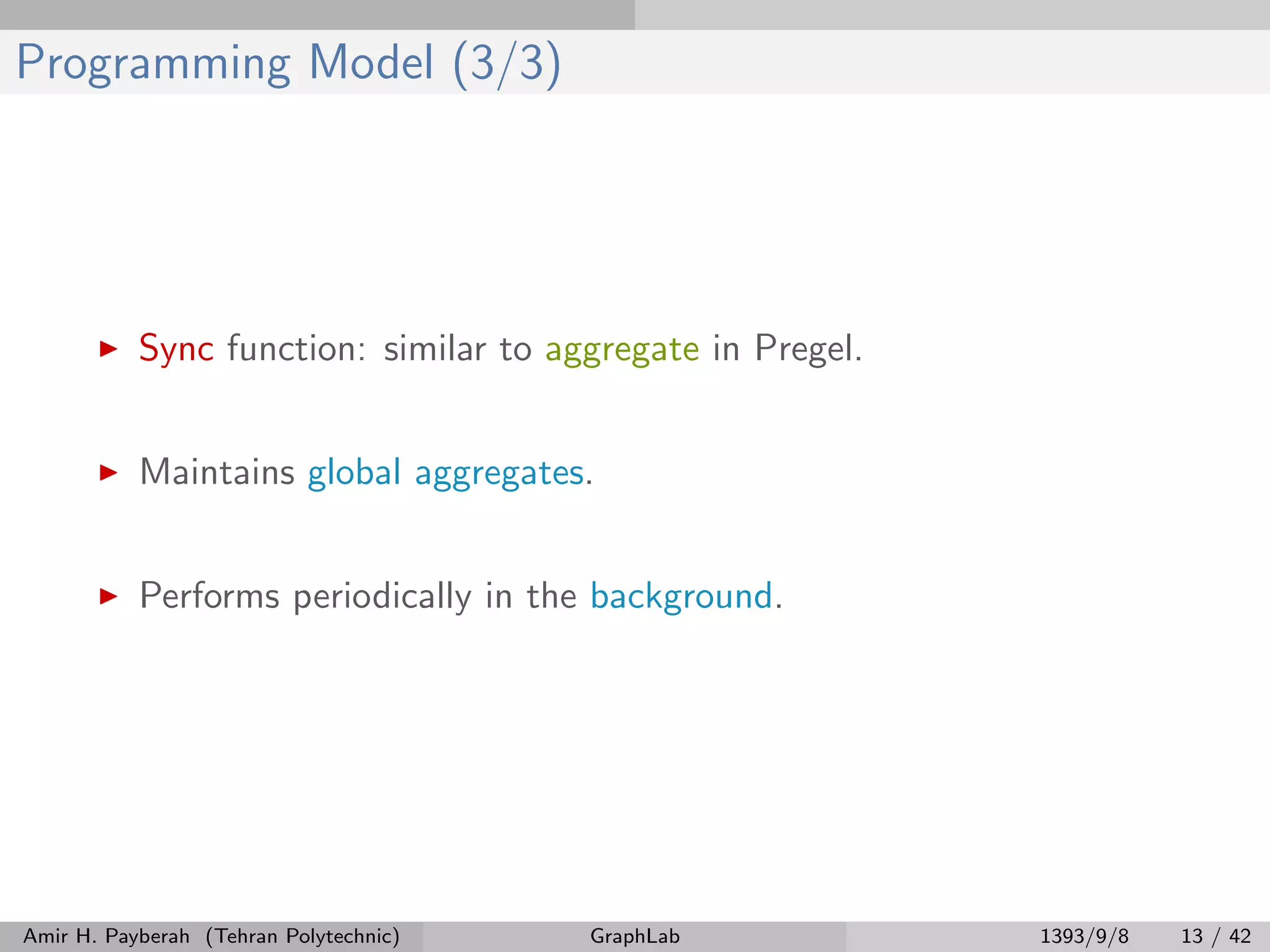

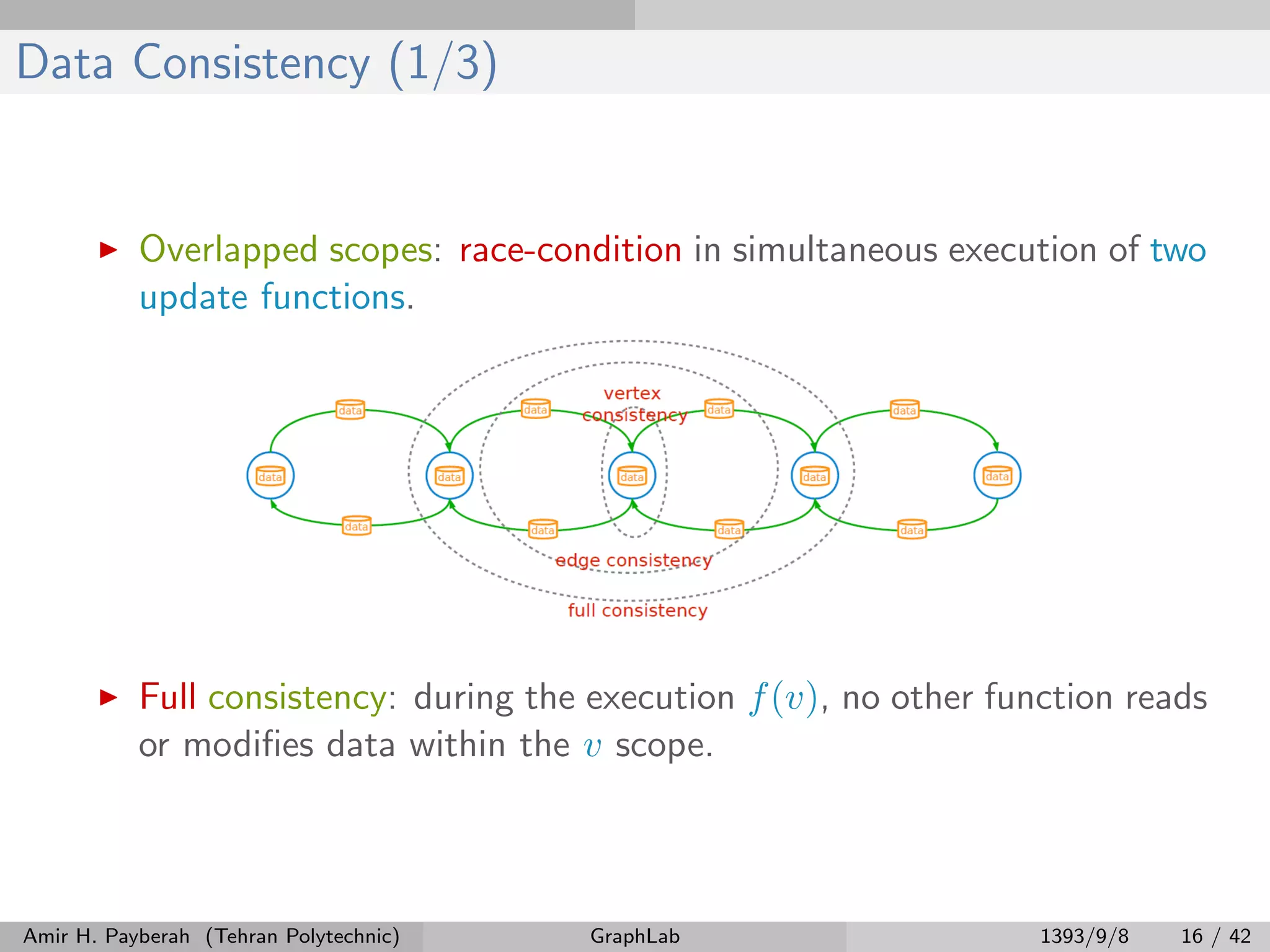

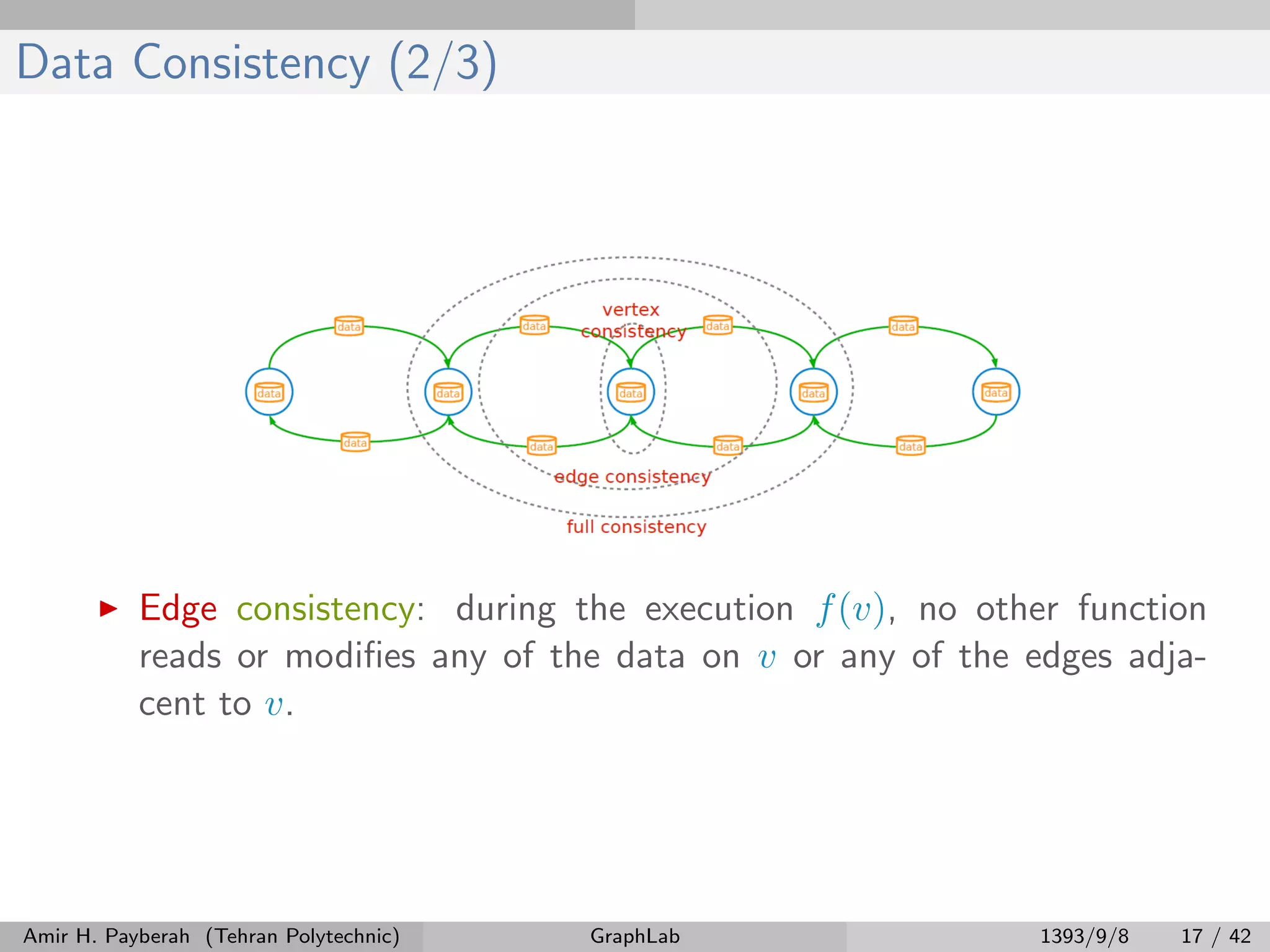

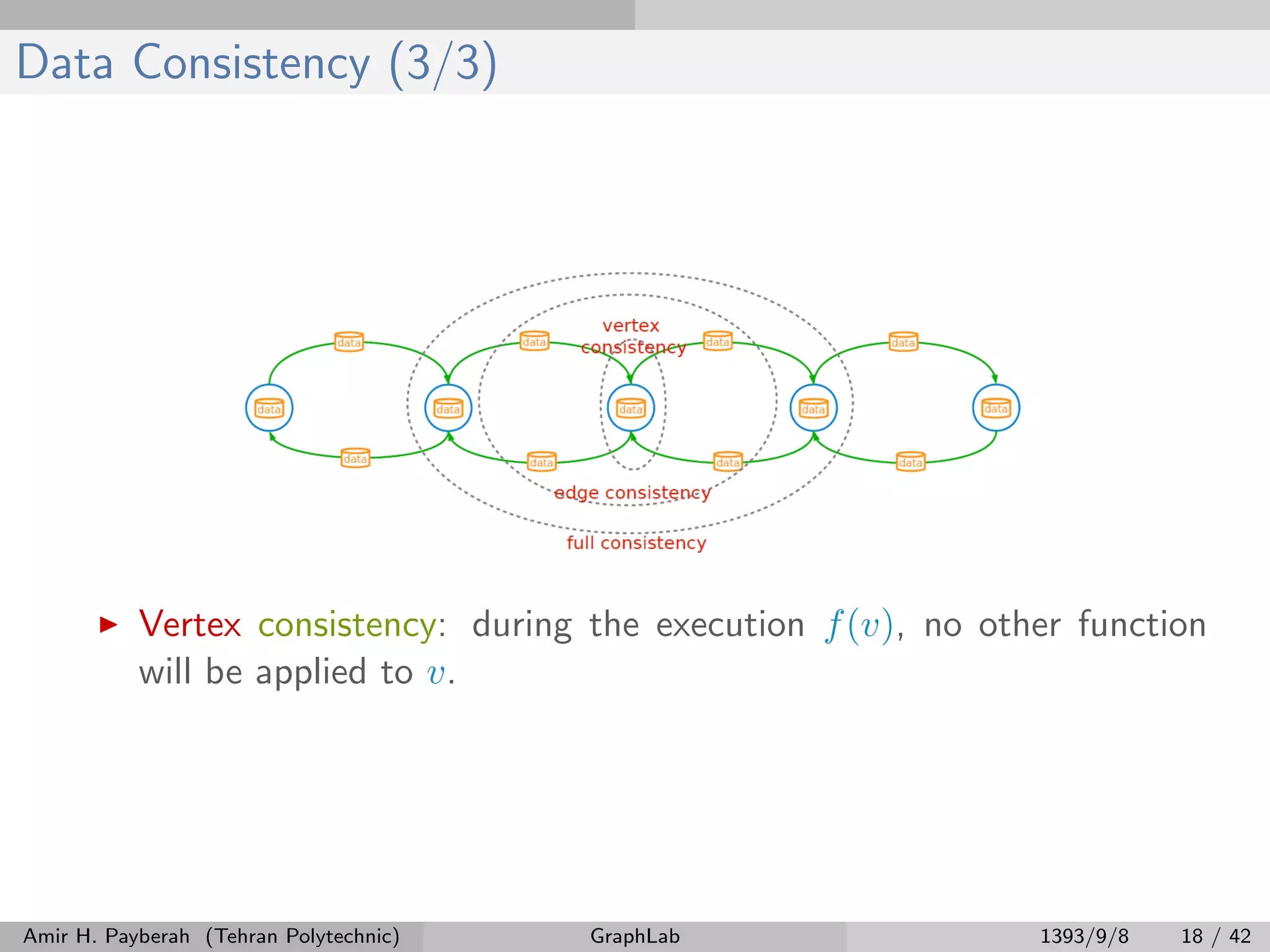

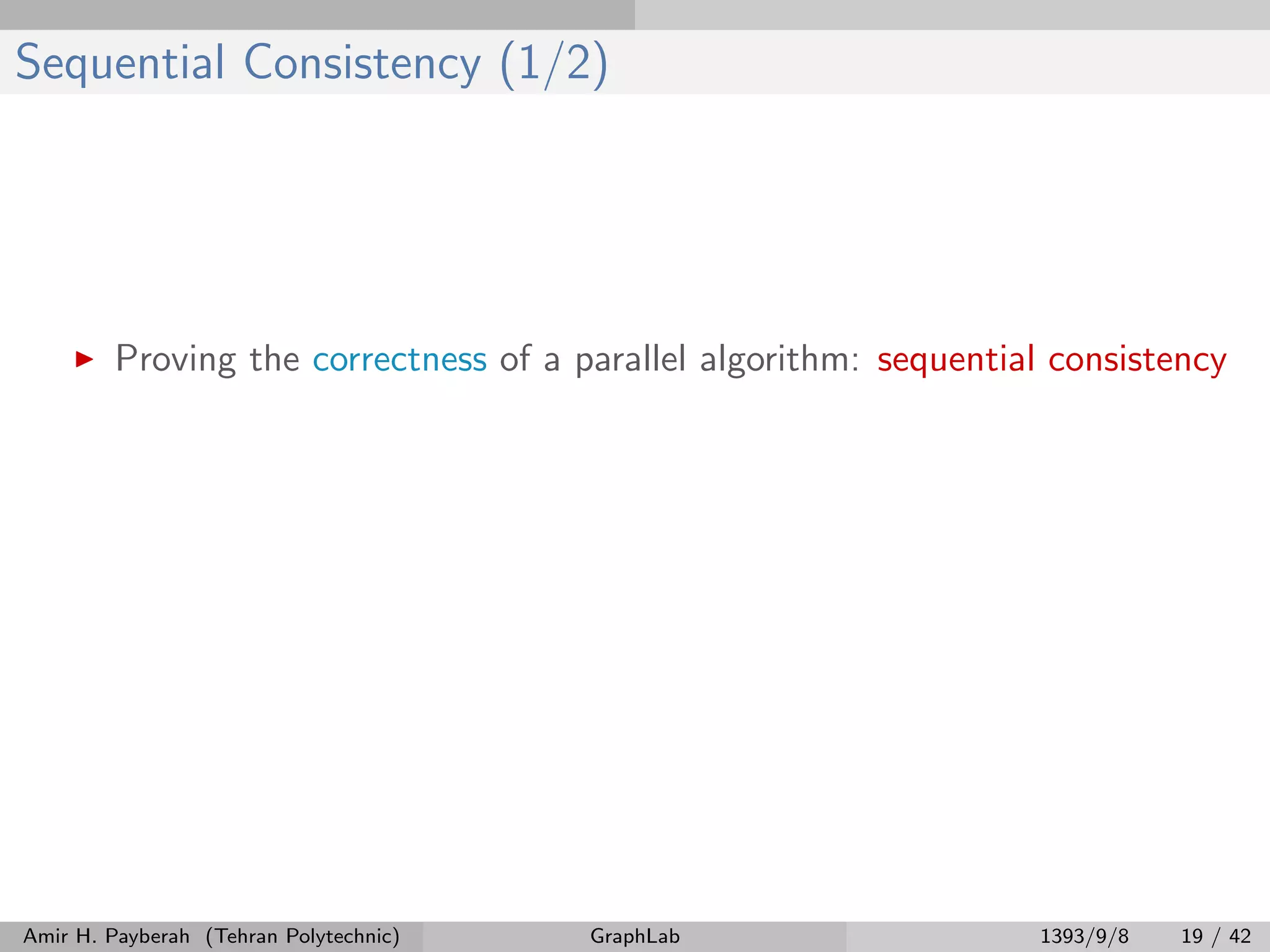

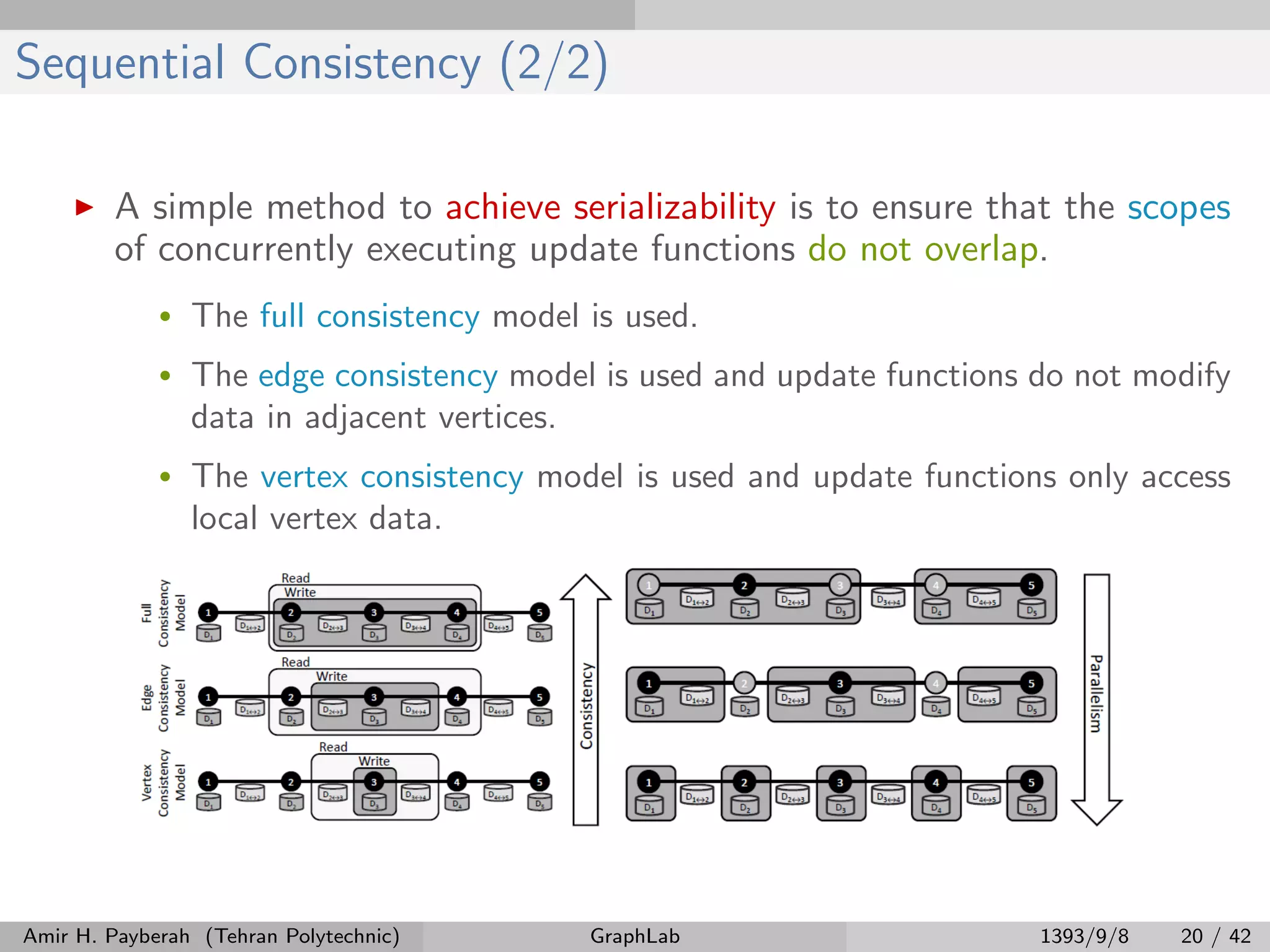

The document describes GraphLab, a framework for parallel machine learning. GraphLab processes large-scale graph problems in parallel and, by exploiting the graph structure, does so more efficiently than general-purpose data-parallel systems. Its vertex-centric programming model lets an update function read and modify the data within a vertex's scope, which addresses limitations of the Pregel model. To keep parallel execution correct, GraphLab provides consistency models, and it relies on graph partitioning and distributed locking to run across multiple machines.

![Example: PageRank GraphLab_PageRank(i) // compute sum over neighbors total = 0 foreach(j in in_neighbors(i)): total = total + R[j] * wji // update the PageRank R[i] = 0.15 + total // trigger neighbors to run again foreach(j in out_neighbors(i)): signal vertex-program on j R[i] = 0.15 + Σ_{j∈Nbrs(i)} w_ji R[j] Amir H. Payberah (Tehran Polytechnic) GraphLab 1393/9/8 15 / 42](https://image.slidesharecdn.com/graphlab-150126091904-conversion-gate02/75/Graph-processing-Graphlab-20-2048.jpg)
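The PageRank vertex program on this slide can be sketched in plain Python to show how signalling drives the computation. This is a single-threaded illustration, not GraphLab's API: the function name `graphlab_style_pagerank`, the FIFO scheduler, the `tol` threshold, and the `initial_rank` parameter are all assumptions added here. The slide signals out-neighbors unconditionally; the sketch signals them only when the rank changed by more than `tol`, so that the loop terminates.

```python
from collections import deque


def graphlab_style_pagerank(in_edges, out_neighbors, tol=1e-6,
                            initial_rank=0.0, max_updates=100_000):
    """Run a signal-driven PageRank on a small in-memory graph.

    in_edges[i]      -> list of (j, w_ji) pairs for edges j -> i
    out_neighbors[i] -> list of vertices j with an edge i -> j
    """
    rank = {v: initial_rank for v in in_edges}
    queue = deque(in_edges)      # every vertex is scheduled once at the start
    scheduled = set(in_edges)    # avoids queueing a vertex twice
    updates = 0

    while queue and updates < max_updates:
        i = queue.popleft()
        scheduled.discard(i)

        # compute the weighted sum over in-neighbors
        total = sum(rank[j] * w_ji for j, w_ji in in_edges[i])

        # update the PageRank: R[i] = 0.15 + sum_j w_ji * R[j]
        new_rank = 0.15 + total
        changed = abs(new_rank - rank[i]) > tol
        rank[i] = new_rank
        updates += 1

        # trigger out-neighbors to run again (assumption: only on real change)
        if changed:
            for j in out_neighbors[i]:
                if j not in scheduled:
                    scheduled.add(j)
                    queue.append(j)
    return rank


if __name__ == "__main__":
    # Hypothetical 3-vertex cycle 0 -> 1 -> 2 -> 0 with w_ji = 0.85 / outdeg(j)
    in_edges = {0: [(2, 0.85)], 1: [(0, 0.85)], 2: [(1, 0.85)]}
    out_nbrs = {0: [1], 1: [2], 2: [0]}
    print(graphlab_style_pagerank(in_edges, out_nbrs))
```

Because a vertex is rescheduled only when one of its in-neighbors changes, work concentrates on the parts of the graph that have not yet converged, which is the point of the signalling step in the slide's pseudocode.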

![Consistency vs. Parallelism [Low, Y., GraphLab: A Distributed Abstraction for Large Scale Machine Learning (Doctoral dissertation, University of California), 2013.]](https://image.slidesharecdn.com/graphlab-150126091904-conversion-gate02/75/Graph-processing-Graphlab-31-2048.jpg)
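The trade-off named on this slide can be made concrete with a small sketch. It assumes the three consistency models usually described for GraphLab (vertex, edge, and full); those names and their exact rules come from the GraphLab literature, not from the text above, and the helper `may_run_concurrently` is hypothetical. The idea is that a stronger model protects more of a vertex's scope and therefore allows fewer pairs of updates to run at the same time.

```python
def may_run_concurrently(adj, u, v, model):
    """Return True if updates on u and v could safely run in parallel.

    adj maps each vertex to the set of its neighbors (undirected view).
    Assumed rules:
      vertex: an update writes only its own vertex data
      edge:   an update also writes its adjacent edges
      full:   an update may write its whole scope, neighbors included
    """
    if u == v:
        return False
    if model == "vertex":
        return True                      # any two distinct vertices
    if model == "edge":
        return v not in adj[u]           # must not share an edge
    if model == "full":
        # scopes must not overlap: not adjacent and no common neighbor
        return v not in adj[u] and not (adj[u] & adj[v])
    raise ValueError(f"unknown consistency model: {model}")


if __name__ == "__main__":
    # Hypothetical path graph 0 - 1 - 2 - 3
    adj = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2}}
    for model in ("vertex", "edge", "full"):
        pairs = [(u, v) for u in adj for v in adj
                 if u < v and may_run_concurrently(adj, u, v, model)]
        print(model, pairs)
```

On the path graph, the number of vertex pairs that may run together shrinks as the model gets stronger, which is the consistency-versus-parallelism tension in miniature.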