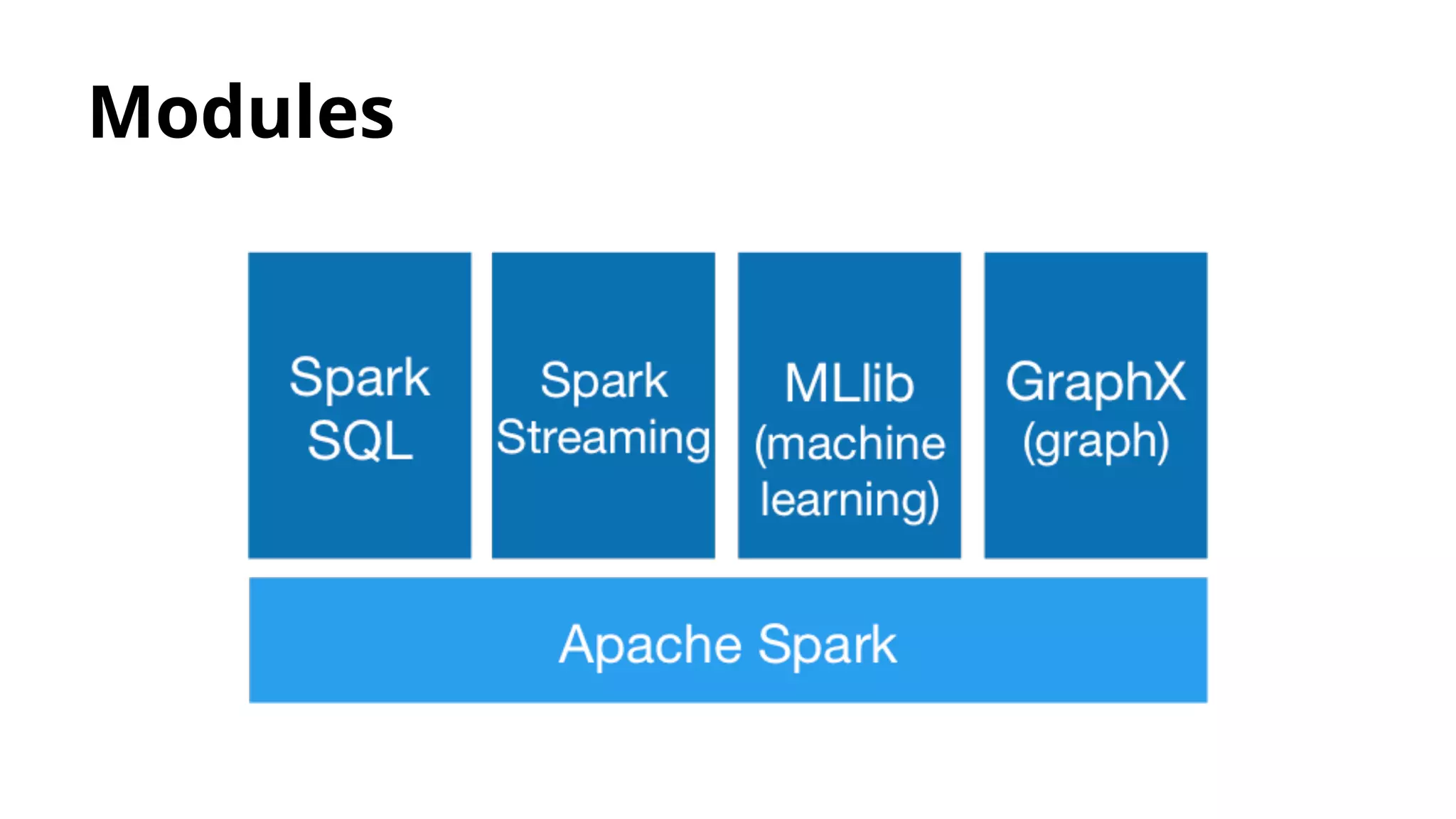

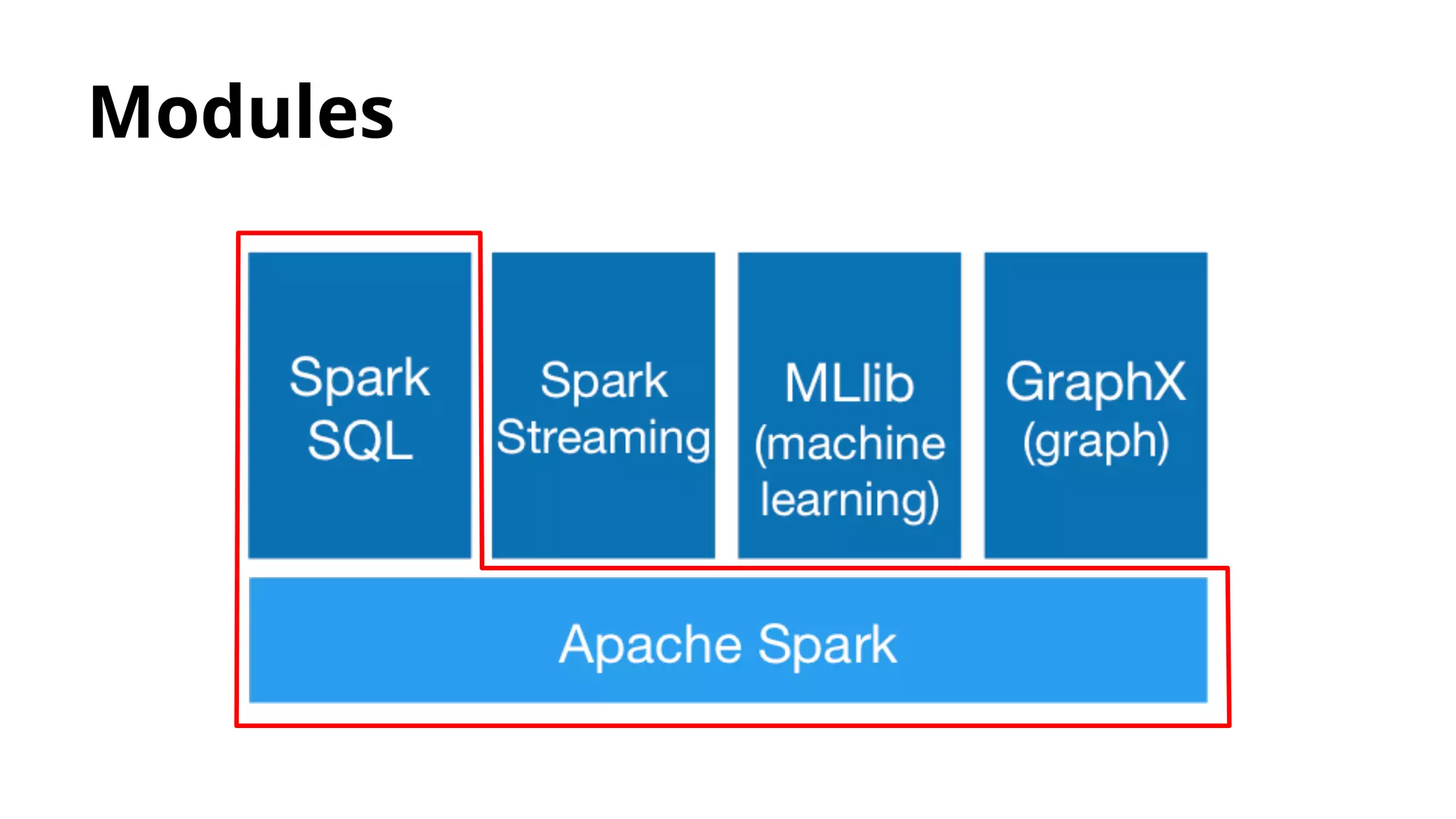

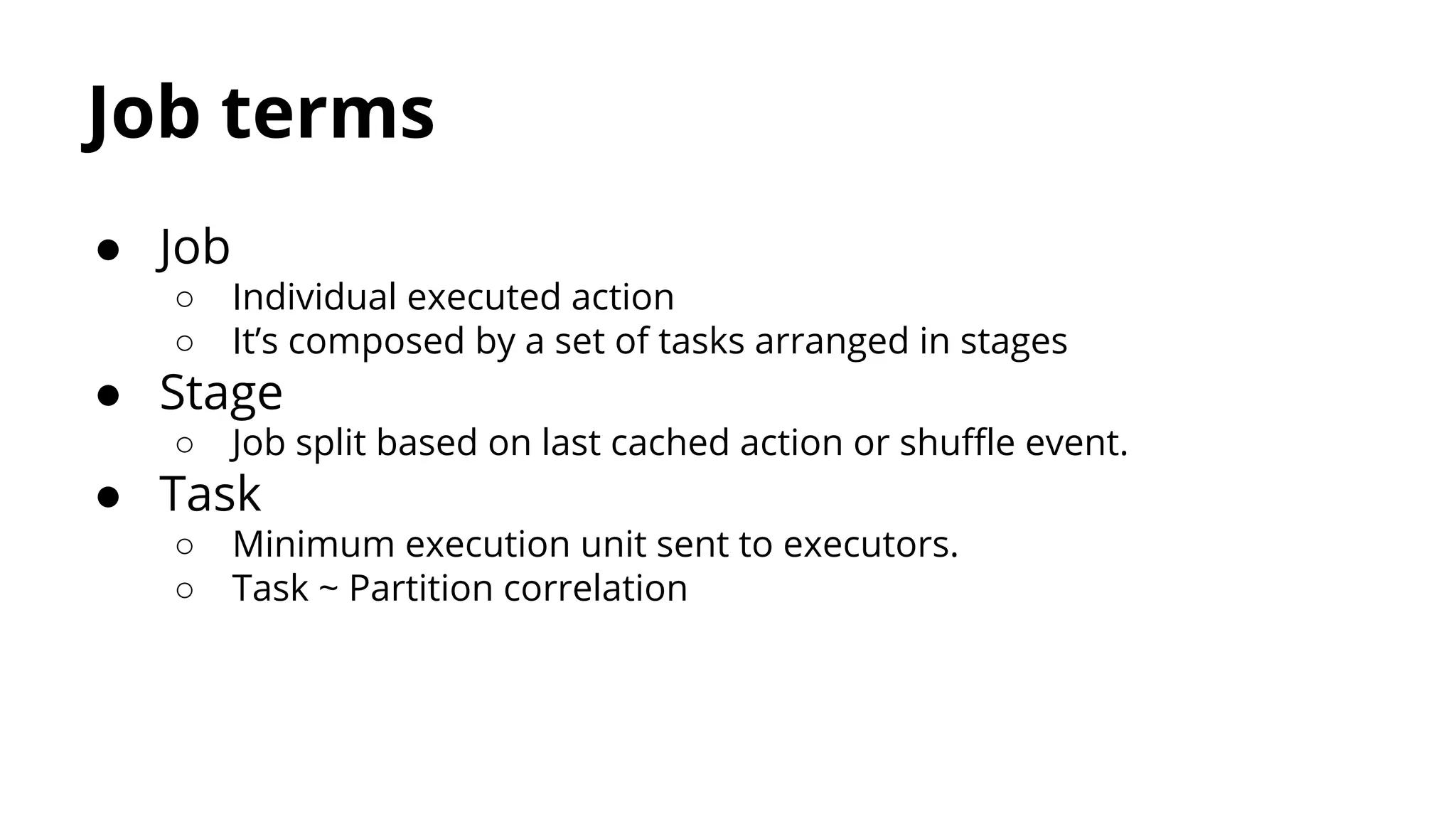

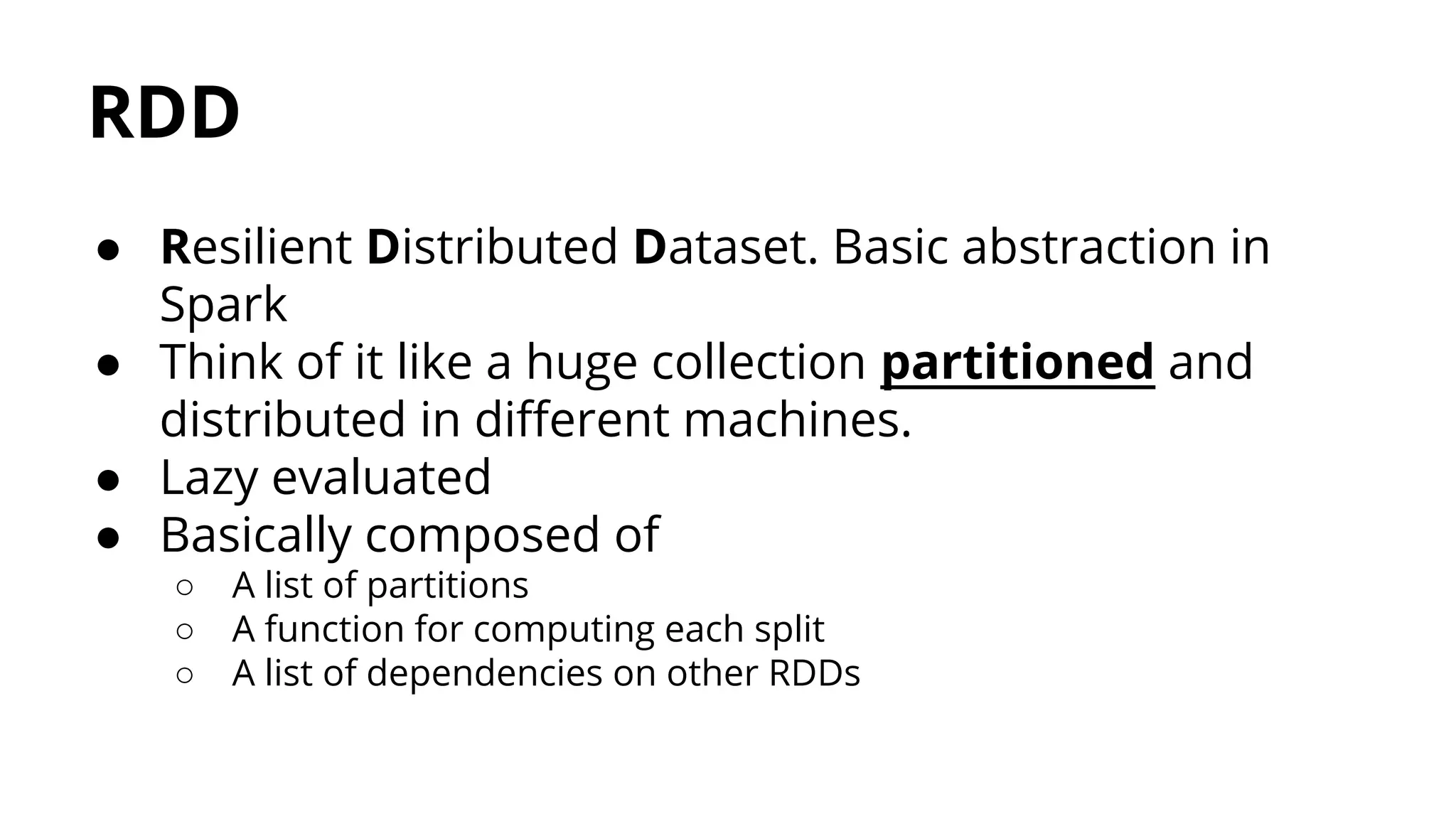

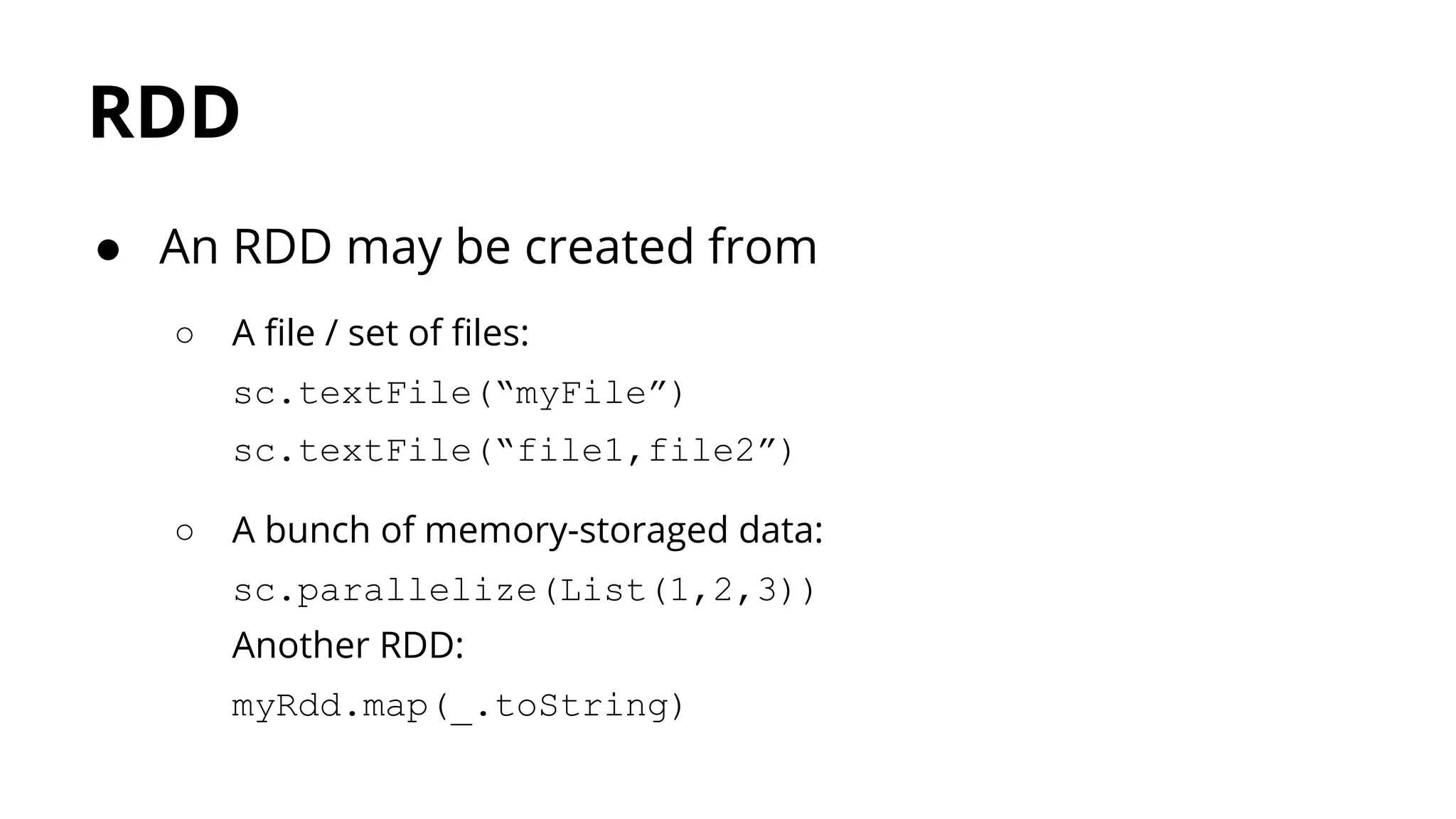

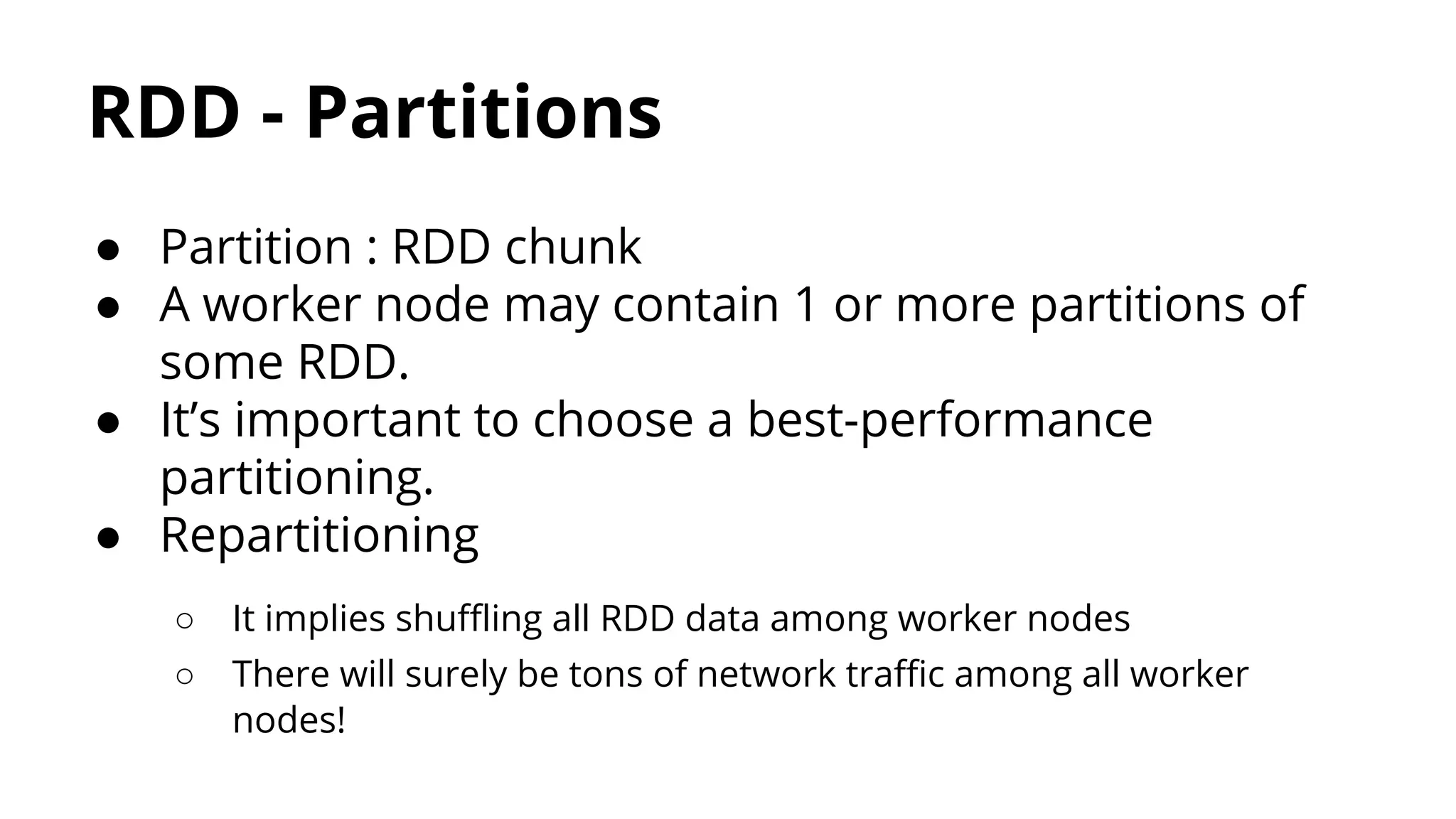

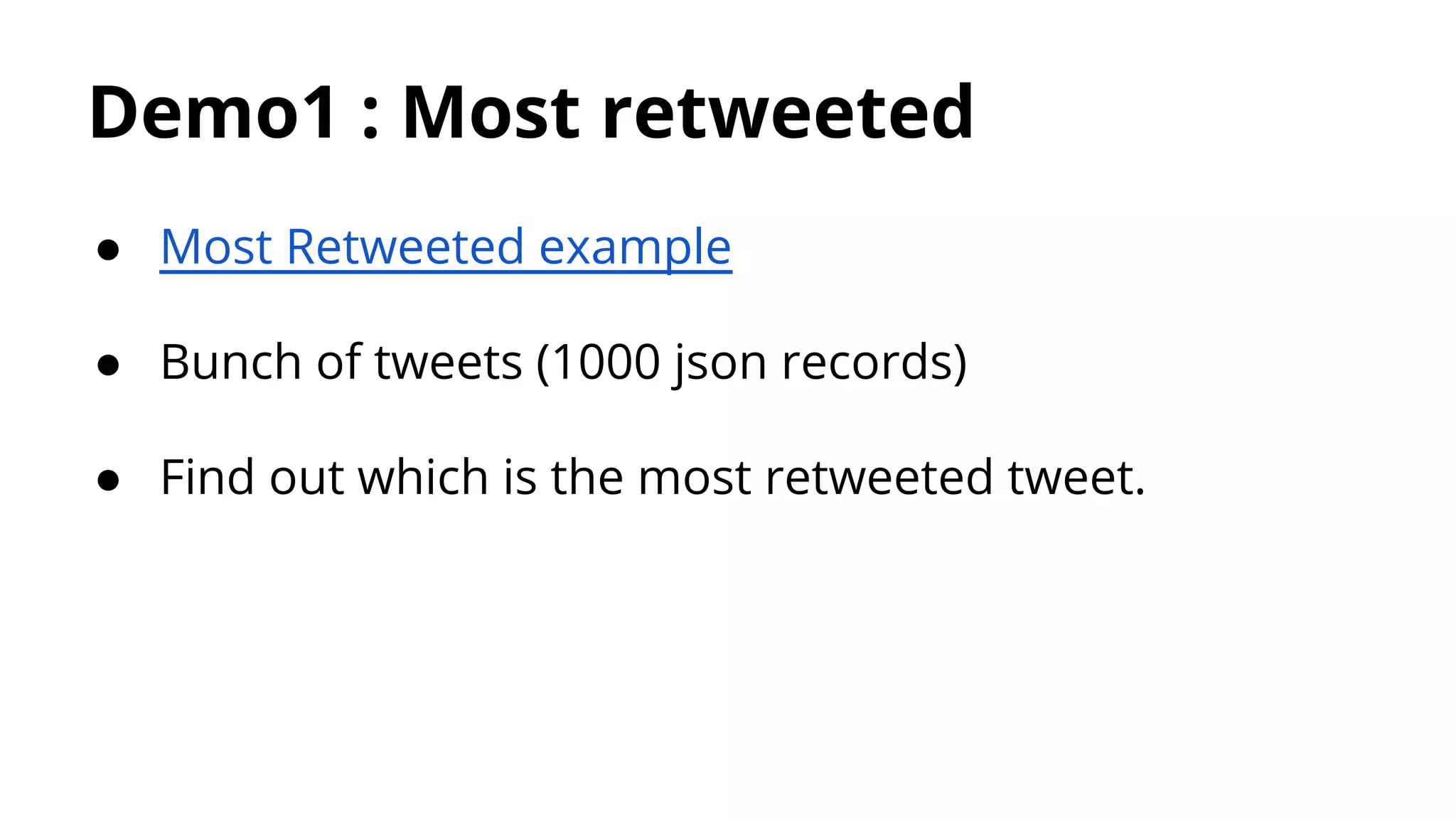

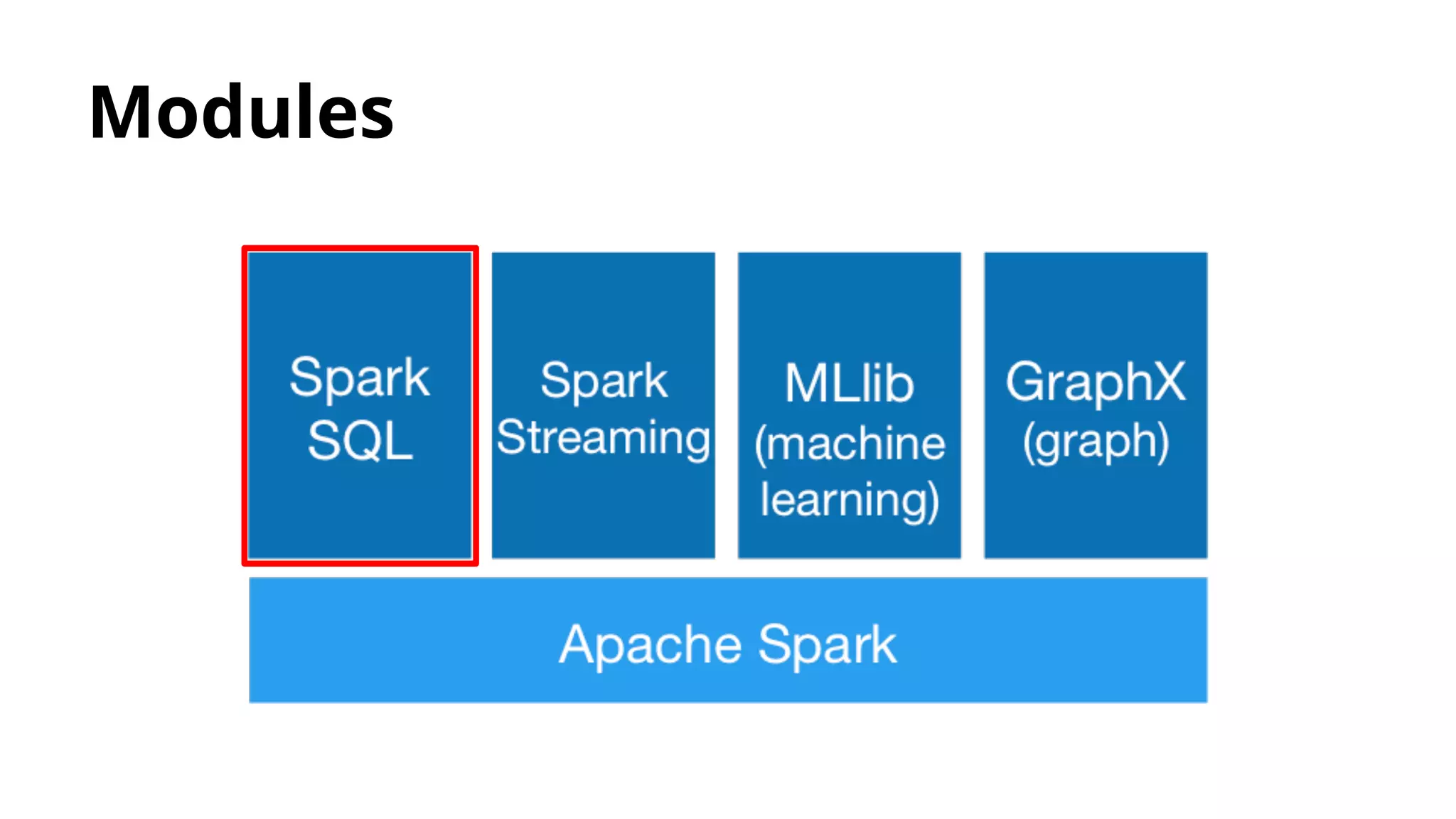

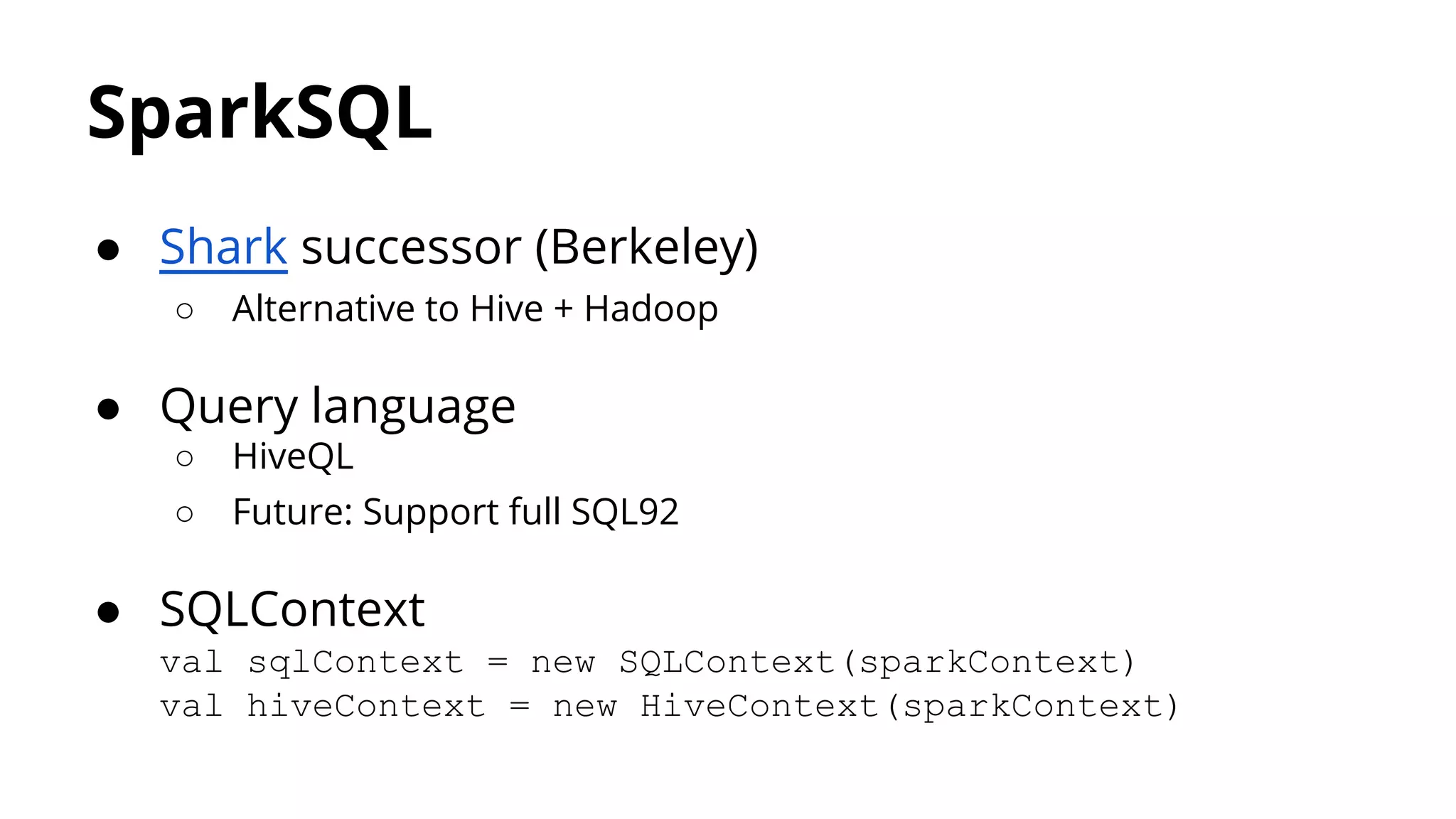

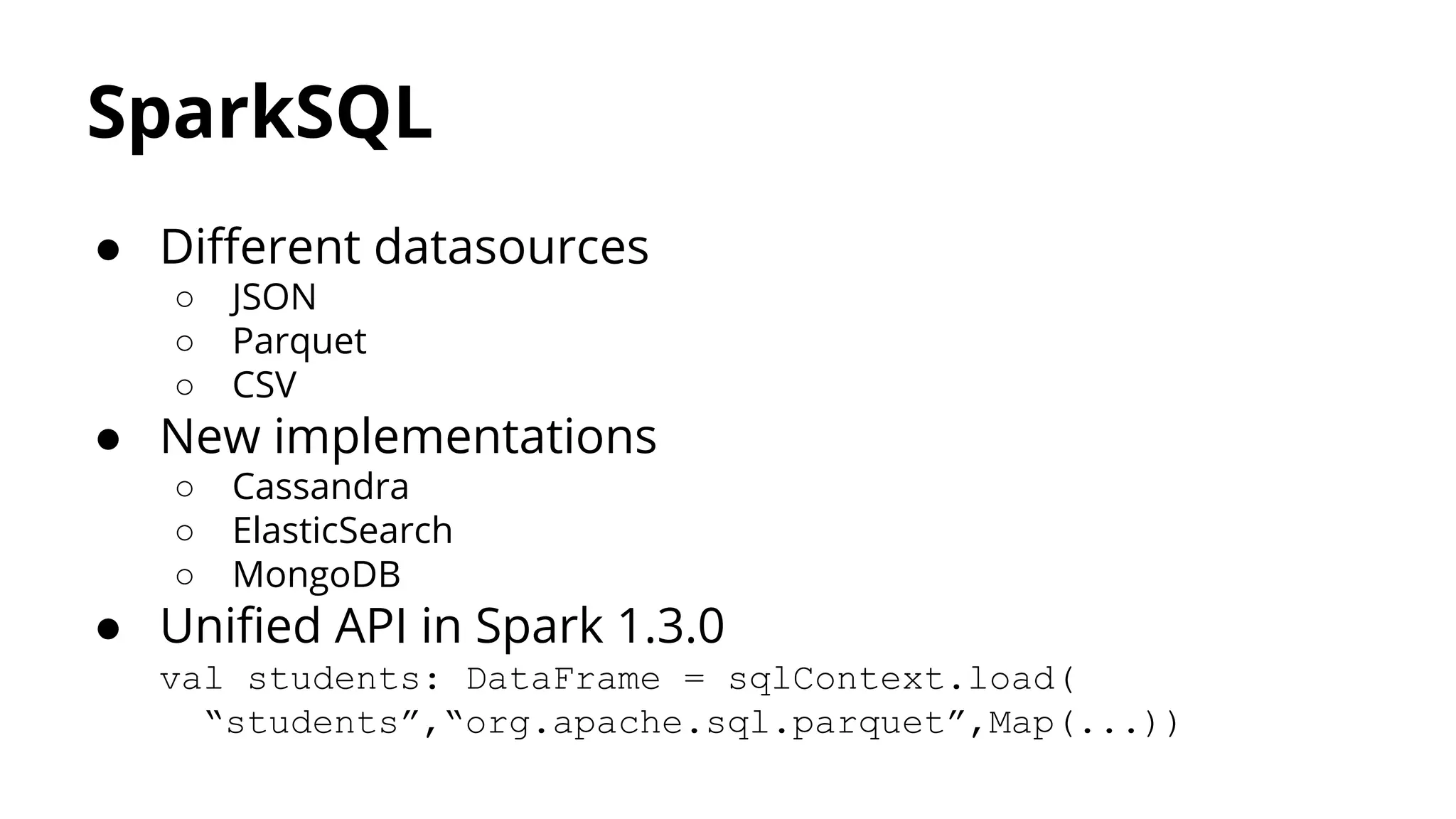

This document discusses Spark, an open-source cluster computing framework. It begins with an introduction to distributed computing problems related to processing large datasets. It then provides an overview of Spark, including its core abstraction of resilient distributed datasets (RDDs) and how Spark builds on the MapReduce model. The rest of the document demonstrates Spark concepts like transformations and actions on RDDs and the use of key-value pairs. It also discusses SparkSQL and shows examples of finding the most retweeted tweet using core Spark and SparkSQL.

![What’s Spark? val mySeq: Seq[Int] = 1 to 5 val action: Seq[Int] => Int = (seq: Seq[Int]) => seq.sum val result: Int = 15](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-3-2048.jpg)

![What’s Spark? val mySeq: Seq[Int] = 1 to Int.MaxValue val action: Seq[Int] => Int = (seq: Seq[Int]) => seq.sum val result: Int = -1073741824](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-4-2048.jpg)
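
Summing `1 to Int.MaxValue` into an `Int` cannot produce the true total: the exact value needs more than 32 bits, so the accumulator wraps around. A small plain-Scala check (a sketch that uses the closed form n(n+1)/2 in `Long` instead of iterating over two billion elements) shows the exact sum and what is left of it after truncation to `Int`:

```scala
// Sum of 1..n via the closed form n(n+1)/2, computed in Long so it does not overflow.
val n: Long = Int.MaxValue.toLong
val exact: Long = n * (n + 1) / 2

// Keep only the low 32 bits, which is effectively what an Int accumulator retains.
val truncated: Int = exact.toInt

println(s"exact = $exact, as Int = $truncated")
```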

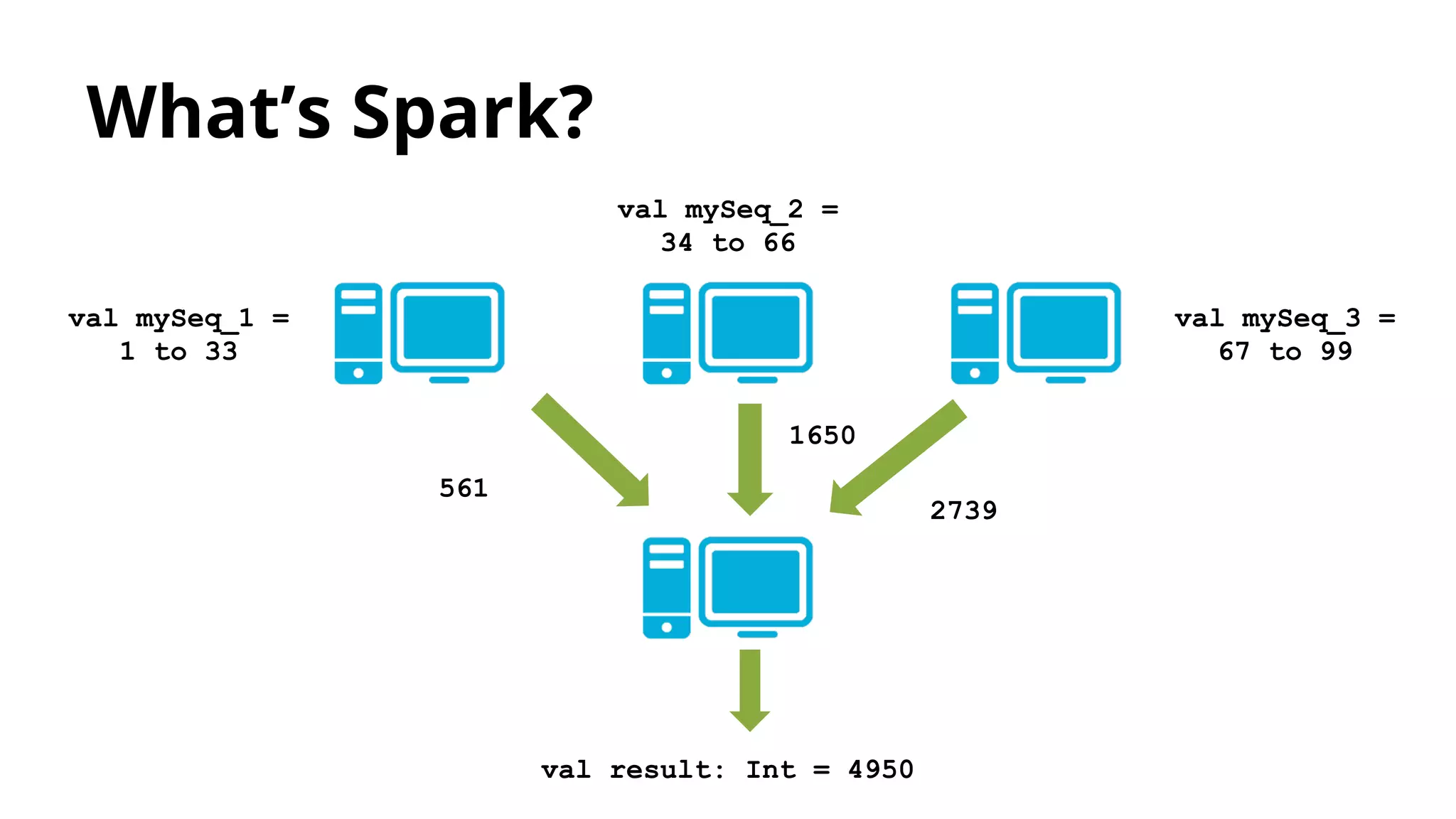

![What’s Spark? val mySeq: Seq[Int] = 1 to 99 val mySeq_1 = 1 to 33 val mySeq_2 = 34 to 66 val mySeq_3 = 67 to 99](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-7-2048.jpg)

![What’s Spark? val action: Seq[Int] => Int = (seq: Seq[Int]) => seq.sum val mySeq_1 = 1 to 33 val mySeq_2 = 34 to 66 val mySeq_3 = 67 to 99 action(mySeq_1) action(mySeq_2) action(mySeq_3)](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-8-2048.jpg)
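
The split-then-combine idea on these slides can be tried locally before involving a cluster. The sketch below is plain Scala with no Spark dependency: `Future`s on the global execution context stand in for workers, each applies the same `action` to its chunk, and the partial sums are combined with `action` again.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration.Duration

// The same action used on the slides: sum a sequence.
val action: Seq[Int] => Int = (seq: Seq[Int]) => seq.sum

// Split 1..99 into three chunks of 33 elements: 1-33, 34-66, 67-99.
val chunks: List[Seq[Int]] = (1 to 99).grouped(33).toList

// Apply the action to every chunk concurrently; local threads play the role of workers.
val partials: List[Int] =
  Await.result(Future.sequence(chunks.map(chunk => Future(action(chunk)))), Duration.Inf)

// Combine the partial results with the same action.
val total: Int = action(partials)
```

Combining the partial sums is only valid because addition is associative; merging per-partition results in a distributed setting relies on the same property.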

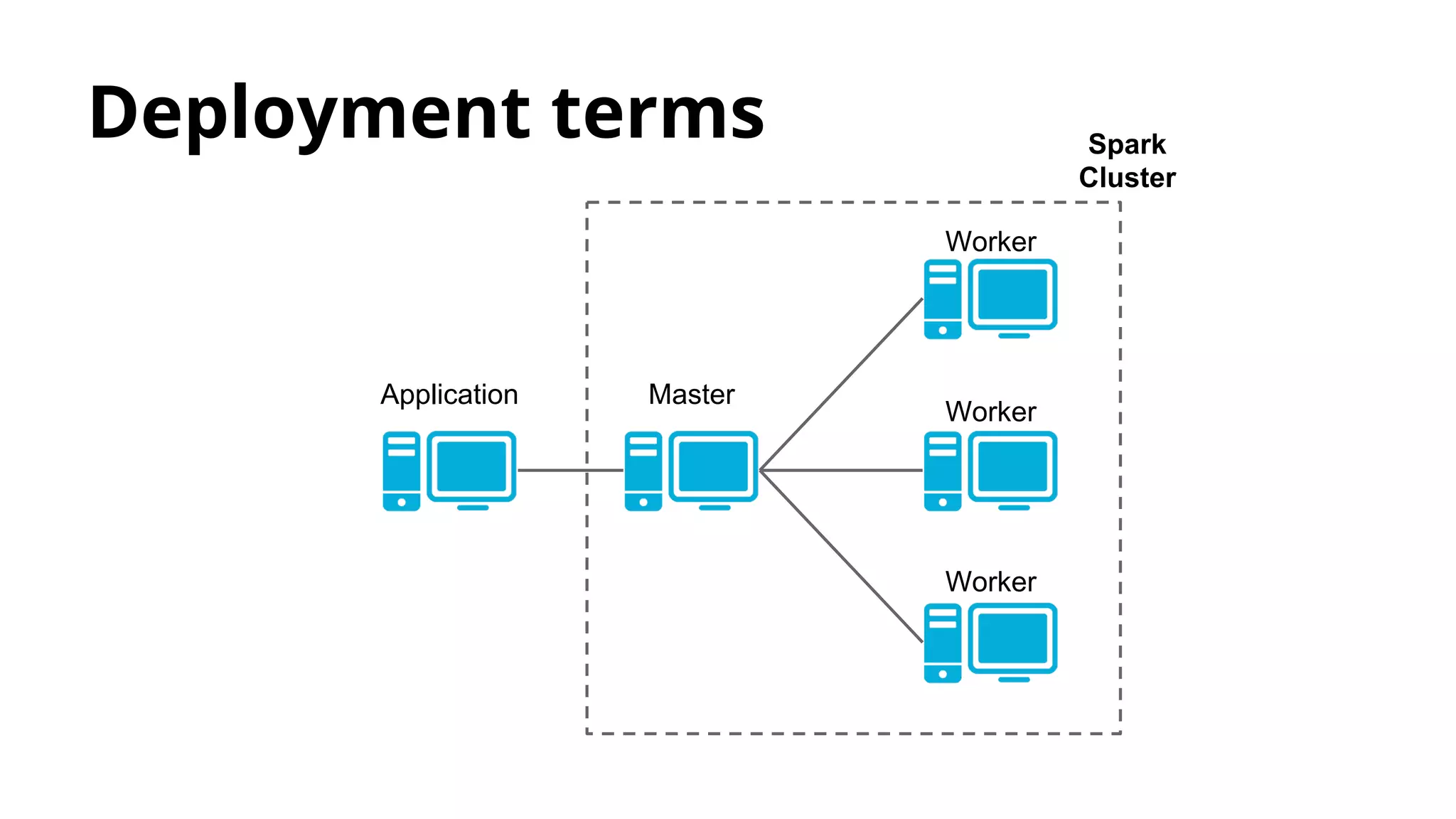

![Deployment types ● Local ○ master="local[N]" (N = number of worker threads, usually the number of cores) ○ The Spark master is launched in the same process and is not accessible from the web. ○ Workers are launched in the same process. ● Standalone ○ master="spark://master-url" ○ The Spark master is launched on a cluster machine. ○ Workers are deployed with the 'start-all.sh' script. ○ The application JAR is submitted to the worker nodes.](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-20-2048.jpg)

![RDD ● Basic example: val myRdd: RDD[String] = sc.parallelize("this is a sample string".split(" ").toList) ● To create a new kind of RDD, you implement: ○ compute (how to produce the data of each partition) ○ getDependencies (the RDD lineage; by default, the dependencies passed to the constructor) ○ getPartitions (how to split the data)](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-29-2048.jpg)

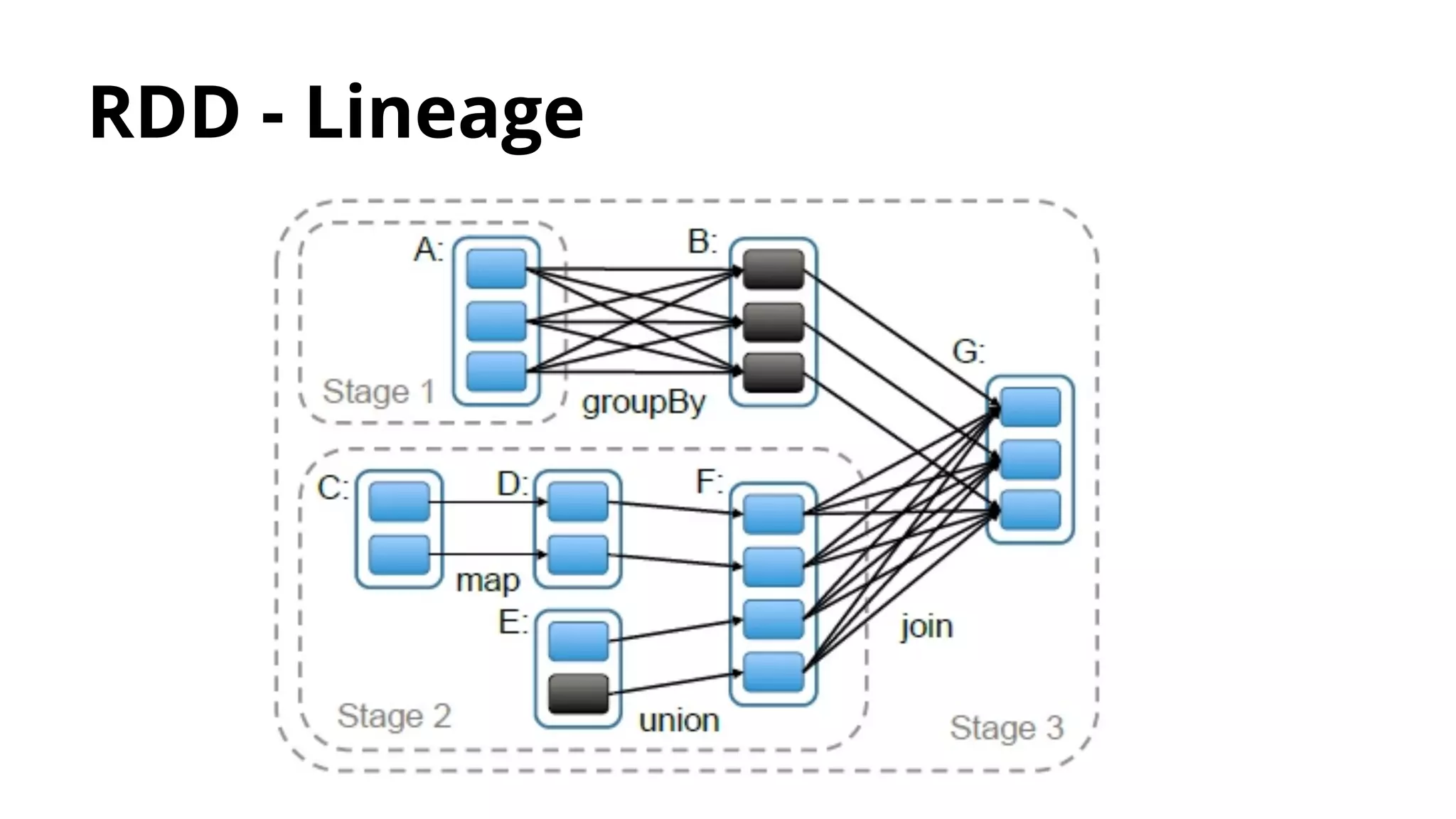

![RDD - Lineage ● An RDD may be built from another one. ● Base RDD: has no parent. ● Ex: val base: RDD[Int] = sc.parallelize(List(1,2,3,4)) val even: RDD[Int] = base.filter(_%2==0) ● DAG (Directed Acyclic Graph): represents the RDD lineage (parent RDDs, transformations, …). ● DAGScheduler: the high-level scheduling layer that splits the DAG of related RDDs into stages and schedules them.](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-32-2048.jpg)
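
The RDD contract (compute, getPartitions, getDependencies) and the lineage idea can be illustrated with a toy model. This is a hypothetical, Spark-free sketch: `MiniRDD` and `ParallelCollection` are invented names, not Spark classes, and partitions are plain `Int` ids (Spark's real `compute` receives a `Partition` and a `TaskContext`). A transformation creates a child that records its parent, and nothing is computed until an action asks for the data.

```scala
// Hypothetical toy model of the RDD contract; MiniRDD is NOT a Spark class.
trait MiniRDD[T] {
  def getPartitions: Seq[Int]              // how the data is split (partition ids)
  def compute(partition: Int): Iterator[T] // how to produce one partition's elements
  def getDependencies: Seq[MiniRDD[_]]     // parent RDDs: the lineage

  // Transformation: returns a child that records its parent and computes lazily.
  def filter(p: T => Boolean): MiniRDD[T] = {
    val parent = this
    new MiniRDD[T] {
      def getPartitions: Seq[Int] = parent.getPartitions
      def compute(partition: Int): Iterator[T] = parent.compute(partition).filter(p)
      def getDependencies: Seq[MiniRDD[_]] = Seq(parent)
    }
  }

  // Action: computes every partition and gathers the results locally.
  def collect(): List[T] = getPartitions.toList.flatMap(i => compute(i).toList)
}

// Hypothetical base RDD built from a local collection: it has no parents.
final class ParallelCollection[T](data: Seq[T], numSlices: Int) extends MiniRDD[T] {
  private val slices: Vector[Seq[T]] = {
    val sliceSize = math.max(1, math.ceil(data.size.toDouble / numSlices).toInt)
    data.grouped(sliceSize).toVector
  }
  def getPartitions: Seq[Int] = slices.indices
  def compute(partition: Int): Iterator[T] = slices(partition).iterator
  def getDependencies: Seq[MiniRDD[_]] = Nil
}

val base: MiniRDD[Int] = new ParallelCollection(List(1, 2, 3, 4), numSlices = 2)
val even: MiniRDD[Int] = base.filter(_ % 2 == 0)
```

Here `base` plays the role of a base RDD (no dependencies), and `even.getDependencies` points back to `base`, which is the one-step lineage shown on the slide.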

![RDD - Transformations ● Most frequently used: ○ map val myRDD: RDD[Int] = sc.parallelize(List(1,2,3,4)) val myStringRDD: RDD[String] = myRDD.map(_.toString) ○ flatMap val myRDD: RDD[Integer] = sc.parallelize(List[Integer](1,null,3,null)) val myNotNullInts: RDD[Int] = myRDD.flatMap(n => Option(n).map(_.intValue))](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-35-2048.jpg)

![RDD - Transformations ● Most frequently used: ○ filter val myRDD: RDD[Int] = sc.parallelize(List(1,2,3,4)) val evenRDD: RDD[Int] = myRDD.filter(_%2==0) ○ union val odd: RDD[Int] = sc.parallelize(List(1,3,5)) val even: RDD[Int] = sc.parallelize(List(2,4,6)) val all: RDD[Int] = odd.union(even)](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-36-2048.jpg)
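
RDD transformations such as map, flatMap, filter and union are lazy: they only record what to compute, and the work happens when an action runs. Scala's `Iterator` behaves in a similar way, so the following plain-Scala analogy (not Spark code; the counter `evaluated` is purely illustrative) makes the laziness visible, and also shows the Option-based null filtering used in the flatMap example:

```scala
// Counts how many source elements the first map has actually processed.
var evaluated = 0

// Building the pipeline only describes work, much like chaining RDD transformations.
val evens: Iterator[Int] =
  Iterator(1, 2, 3, 4)
    .map { n => evaluated += 1; n } // map, instrumented to count calls
    .filter(_ % 2 == 0)             // filter: keep even numbers
val pipeline: Iterator[String] =
  (evens ++ Iterator(6, 8))         // union: append a second source
    .map(_.toString)                // map: Int => String

val before: Int = evaluated                 // nothing has run yet
val result: List[String] = pipeline.toList  // terminal operation, like an action
val after: Int = evaluated                  // every source element went through map

// flatMap with Option drops nulls (Int literals are boxed to Integer via Predef).
val withNulls: List[Integer] = List[Integer](1, null, 3, null)
val notNull: List[Int] = withNulls.flatMap(n => Option(n).map(_.intValue))
```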

![RDD - Actions ● Examples: ○ count val myRdd: RDD[Int] = sc.parallelize(List(1,2,3)) val size: Long = myRdd.count() Counting implies processing the whole RDD. ○ take val myRdd: RDD[Int] = sc.parallelize(List(1,2,3)) val List(1,2) = myRdd.take(2).toList ○ collect val myRdd: RDD[Int] = sc.parallelize(List(1,2,3)) val data: Array[Int] = myRdd.collect() Beware! Calling 'collect' on a large RDD brings all of its data to the driver and can exhaust the driver's memory.](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-38-2048.jpg)
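
The cost difference between these actions can be illustrated with an instrumented `Iterator` (plain Scala, not Spark; `touched` is an illustrative counter): a count-like operation visits every element, while a take-like operation stops as soon as it has enough. Spark's take similarly scans only as many partitions as it needs, whereas count and collect process the whole RDD.

```scala
// Counts how many elements have been produced by the source.
var touched = 0
def data(): Iterator[Int] = Iterator(1, 2, 3).map { n => touched += 1; n }

// count-like: must visit every element to know the size
val size: Int = data().size
val touchedByCount: Int = touched

// take-like: stops as soon as it has two elements
touched = 0
val firstTwo: List[Int] = data().take(2).toList
val touchedByTake: Int = touched

// collect-like: materializes every element in one local collection
val everything: Array[Int] = data().toArray
```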

![Key-Value RDDs ● A particular case of RDD[T] where T = (U,V). ● It allows grouping, combining and aggregating values by key. ● In Scala, you only need to import org.apache.spark.SparkContext._ (required before Spark 1.3; later versions apply the conversion automatically). ● In Java, you must use the JavaPairRDD class.](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-40-2048.jpg)

![Key-Value RDDs ● keyBy: Generates a PairRDD val myRDD: RDD[Int] = sc.parallelize(List(1,2,3,4,5)) val kvRDD: RDD[(String,Int)] = myRDD.keyBy(n => if (n%2==0) "even" else "odd") "odd" -> 1, "even" -> 2, "odd" -> 3, "even" -> 4, "odd" -> 5](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-41-2048.jpg)

![Key-Value RDDs ● keys: Gets the keys of a PairRDD val myRDD: RDD[(String,Int)] = sc.parallelize(List("odd" -> 1, "even" -> 2, "odd" -> 3)) val keysRDD: RDD[String] = myRDD.keys "odd", "even", "odd" ● values: Gets the values of a PairRDD](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-42-2048.jpg)

![Key-Value RDDs ● mapValues: maps the values of a PairRDD, leaving the keys unchanged. val myRDD: RDD[(String,Int)] = sc.parallelize(List("odd" -> 1, "even" -> 2, "odd" -> 3)) val mapRDD: RDD[(String,String)] = myRDD.mapValues(_.toString) "odd" -> "1", "even" -> "2", "odd" -> "3" ● flatMapValues: flatMaps the values of a PairRDD, keeping each key](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-43-2048.jpg)
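
The pair operations on these slides have direct counterparts on an ordinary Scala `Seq[(K, V)]`. The sketch below uses plain collections (no Spark) to show what each one does element by element, including flatMapValues, which the slides name without an example; the variable names and the `Seq(v, v * 10)` expansion are illustrative choices.

```scala
val numbers: Seq[Int] = Seq(1, 2, 3, 4, 5)

// keyBy: compute a key from each element; the key comes first in the pair
val keyed: Seq[(String, Int)] = numbers.map(n => (if (n % 2 == 0) "even" else "odd", n))

// keys / values: project one side of each pair
val keysOnly: Seq[String] = keyed.map(_._1)
val valuesOnly: Seq[Int] = keyed.map(_._2)

// mapValues: transform each value, leave its key untouched
val stringValues: Seq[(String, String)] = keyed.map { case (k, v) => (k, v.toString) }

// flatMapValues: each value may expand to zero or more values, all keeping the same key
val expanded: Seq[(String, Int)] =
  keyed.take(2).flatMap { case (k, v) => Seq(v, v * 10).map(x => (k, x)) }
```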

![Key-Value RDDs ● join: Returns a new RDD that pairs every value of one RDD with every value of the other that shares the same key. val a = sc.parallelize(List("dog", "salmon", "salmon", "rat", "elephant"), 3) val b = a.keyBy(_.length) val c = sc.parallelize(List("dog","cat","gnu","salmon","rabbit","turkey","wolf","bear","bee"), 3) val d = c.keyBy(_.length) b.join(d).collect res0: Array[(Int, (String, String))] = Array((6,(salmon,salmon)), (6,(salmon,rabbit)), (6,(salmon,turkey)), (6,(salmon,salmon)), (6,(salmon,rabbit)), (6,(salmon,turkey)), (3,(dog,dog)), (3,(dog,cat)), (3,(dog,gnu)), (3,(dog,bee)), (3,(rat,dog)), (3,(rat,cat)), (3,(rat,gnu)), (3,(rat,bee)))](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-44-2048.jpg)
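
Conceptually, join is an inner join by key: every left value meets every right value with the same key, and keys present on only one side (such as "elephant", length 8) disappear. The hypothetical helper `localJoin` below models this on local collections with a lookup table built from the right side; Spark instead shuffles both RDDs by key (via cogroup), so the order of results differs from the slide, but the set of pairs is the same.

```scala
// Hypothetical local model of an inner join by key (not Spark's implementation).
def localJoin[K, V, W](left: Seq[(K, V)], right: Seq[(K, W)]): Seq[(K, (V, W))] = {
  // Index the right side: key -> all of its values, in encounter order.
  val rightByKey: Map[K, Seq[W]] =
    right.groupBy(_._1).map { case (k, kvs) => (k, kvs.map(_._2)) }
  // For each left pair, emit one result per matching right value.
  for {
    (k, v) <- left
    w <- rightByKey.getOrElse(k, Seq.empty)
  } yield (k, (v, w))
}

// Same data as the slide, keyed by word length (the equivalent of keyBy(_.length)).
val b = Seq("dog", "salmon", "salmon", "rat", "elephant").map(s => (s.length, s))
val d = Seq("dog", "cat", "gnu", "salmon", "rabbit", "turkey", "wolf", "bear", "bee").map(s => (s.length, s))
val joined: Seq[(Int, (String, String))] = localJoin(b, d)
```

The result has 14 pairs: 2 × 3 for key 6 and 2 × 4 for key 3, matching the slide's output.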

![Key-Value RDDs - combineByKey ● def combineByKey[C](createCombiner: V => C, mergeValue: (C,V) => C, mergeCombiners: (C,C) => C): RDD[(K,C)] ● Think of it as something like (but not exactly) a foldLeft over each partition](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-45-2048.jpg)

![Key-Value RDDs - combineByKey ● It is composed of: ○ createCombiner(V => C): turns an initial value of type V into the type C used for aggregating values. This aggregated value is called the combiner. ○ mergeValue((C,V) => C): defines how to add a V value to an existing combiner C, returning a new combiner. ○ mergeCombiners((C,C) => C): defines how to merge two combiners into a new one.](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-46-2048.jpg)

![Key-Value RDDs - combineByKey ● Example: val a = sc.parallelize(List("dog","cat","gnu","salmon","rabbit","turkey","wolf","bear","bee"), 3) val b = sc.parallelize(List(1,1,2,2,2,1,2,2,2), 3) val c = b.zip(a) val d = c.combineByKey(List(_), (x:List[String], y:String) => y :: x, (x:List[String], y:List[String]) => x ::: y) d.collect res16: Array[(Int, List[String])] = Array((1,List(cat, dog, turkey)), (2,List(gnu, rabbit, salmon, bee, bear, wolf)))](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-47-2048.jpg)
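
The three combineByKey functions act at two levels: createCombiner and mergeValue work inside a single partition, and mergeCombiners merges the per-partition results. The hypothetical helper `localCombineByKey` below models that on explicit local partitions (no Spark). With the slide's data split into 3 partitions of 3 elements, and partition results merged in partition order, it reproduces the output shown above; in a real cluster the merge order is not guaranteed, so list order may vary.

```scala
// Hypothetical local model of combineByKey over explicit partitions (not Spark code).
def localCombineByKey[K, V, C](partitions: Seq[Seq[(K, V)]])(
    createCombiner: V => C,
    mergeValue: (C, V) => C,
    mergeCombiners: (C, C) => C): Map[K, C] = {
  // Within each partition: first value of a key creates a combiner, later ones merge into it.
  val perPartition: Seq[Map[K, C]] = partitions.map { part =>
    part.foldLeft(Map.empty[K, C]) { case (acc, (k, v)) =>
      acc.get(k) match {
        case Some(c) => acc.updated(k, mergeValue(c, v))
        case None    => acc.updated(k, createCombiner(v))
      }
    }
  }
  // Across partitions: merge the combiners of each key, in partition order.
  perPartition.foldLeft(Map.empty[K, C]) { (acc, m) =>
    m.foldLeft(acc) { case (a, (k, c)) =>
      a.updated(k, a.get(k).map(mergeCombiners(_, c)).getOrElse(c))
    }
  }
}

val words = List("dog", "cat", "gnu", "salmon", "rabbit", "turkey", "wolf", "bear", "bee")
val ids   = List(1, 1, 2, 2, 2, 1, 2, 2, 2)
// zip pairs each id with a word (like b.zip(a)); grouped(3) mimics 3 slices of 3 elements.
val partitions: List[List[(Int, String)]] = ids.zip(words).grouped(3).toList

val d: Map[Int, List[String]] =
  localCombineByKey(partitions)(
    (v: String) => List(v),
    (x: List[String], y: String) => y :: x,
    (x: List[String], y: List[String]) => x ::: y)
```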

![Key-Value RDDs - aggregateByKey ● def aggregateByKey[U](zeroValue: U)(seqOp: (U,V) => U, combOp: (U,U) => U): RDD[(K,U)] = combineByKey((v: V) => seqOp(zeroValue,v), seqOp, combOp)](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-48-2048.jpg)
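
aggregateByKey folds each key's values inside a partition with seqOp, starting from zeroValue, and then merges the per-partition accumulators with combOp. A common use is a per-key average, accumulating (sum, count) pairs. The hypothetical helper `localAggregateByKey` and the two hand-made partitions below are a plain-Scala sketch of that pattern, not Spark code:

```scala
// Hypothetical local model of aggregateByKey over explicit partitions (not Spark code).
def localAggregateByKey[K, V, U](partitions: Seq[Seq[(K, V)]], zeroValue: U)(
    seqOp: (U, V) => U,
    combOp: (U, U) => U): Map[K, U] = {
  // Within each partition, fold values per key starting from zeroValue.
  val perPartition: Seq[Map[K, U]] = partitions.map { part =>
    part.foldLeft(Map.empty[K, U]) { case (acc, (k, v)) =>
      acc.updated(k, seqOp(acc.getOrElse(k, zeroValue), v))
    }
  }
  // Across partitions, merge the per-key accumulators with combOp.
  perPartition.foldLeft(Map.empty[K, U]) { (acc, m) =>
    m.foldLeft(acc) { case (a, (k, u)) =>
      a.updated(k, a.get(k).map(combOp(_, u)).getOrElse(u))
    }
  }
}

// Two illustrative partitions of (key, value) pairs.
val partitions = Seq(
  Seq("a" -> 1, "b" -> 4, "a" -> 3),
  Seq("b" -> 6, "a" -> 5)
)

// Accumulate (sum, count) per key, then divide to get the average.
val sumCount: Map[String, (Int, Int)] =
  localAggregateByKey(partitions, (0, 0))(
    (acc, v) => (acc._1 + v, acc._2 + 1),
    (x, y) => (x._1 + y._1, x._2 + y._2))
val averages: Map[String, Double] = sumCount.map { case (k, (s, c)) => (k, s.toDouble / c) }
```

Using a (sum, count) accumulator rather than averaging inside each partition keeps combOp associative, so the final averages do not depend on how the data was partitioned.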

![Key-Value RDDs - groupByKey ● groupByKey: groups all values with the same key into an Iterable of values def groupByKey(): RDD[(K,Iterable[V])] = combineByKey[List[V]]((v: V) => List(v), (list: List[V], v: V) => list :+ v, (l1: List[V], l2: List[V]) => l1 ++ l2) Note: groupByKey actually uses CompactBuffer instead of List, and casts the result to RDD[(K,Iterable[V])].](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-49-2048.jpg)

![SparkSQL - DataFrame ● DataFrame = RDD[org.apache.spark.sql.Row] + Schema ● Each Row holds column values; the schema describes each column's name and type. ● This allows unifying multiple data sources under the same API, e.g. we could join two tables, one stored in MongoDB and another in Elasticsearch.](https://image.slidesharecdn.com/distributedcomputingwithspark-150414042905-conversion-gate01/75/Distributed-computing-with-spark-54-2048.jpg)