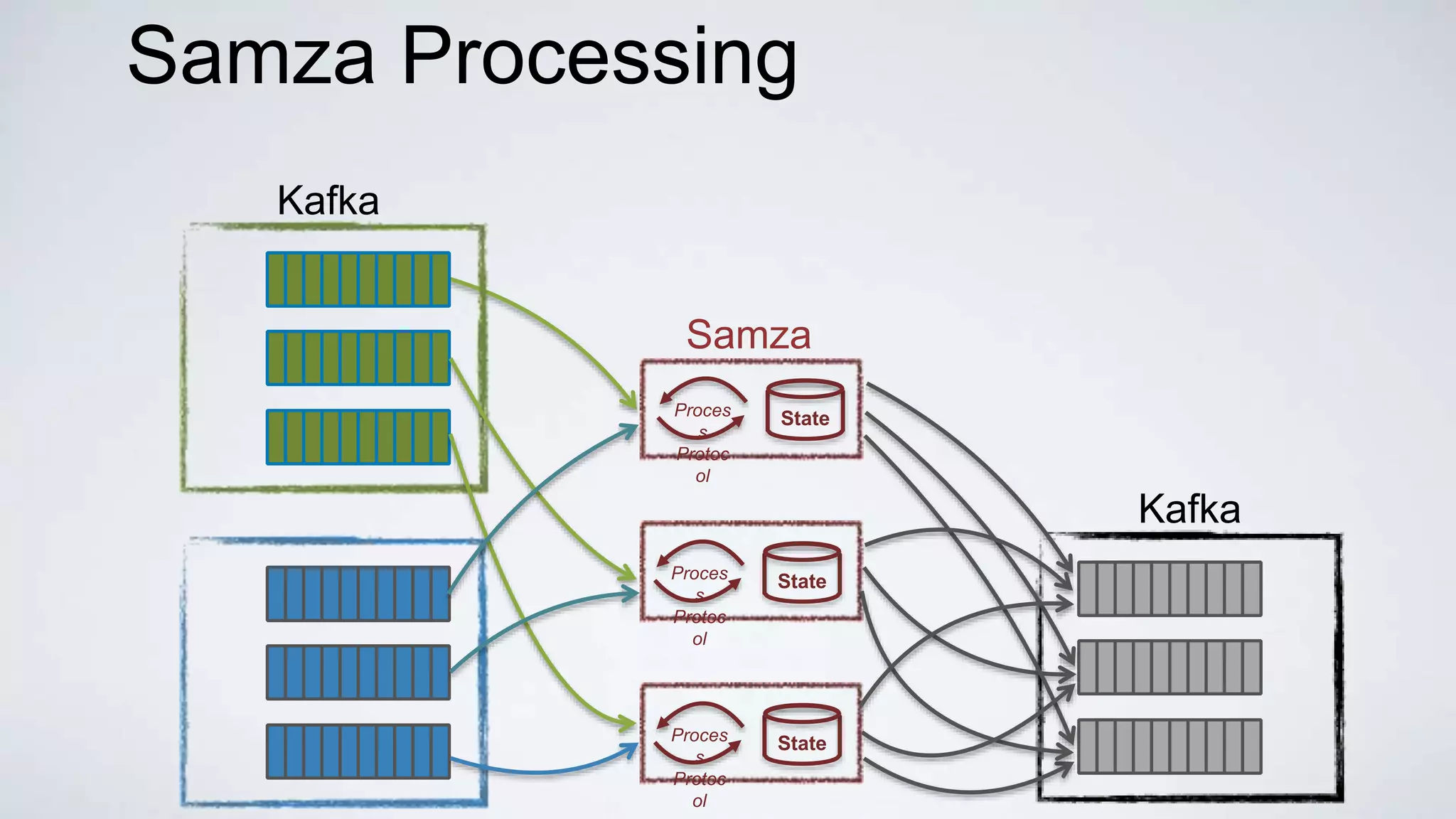

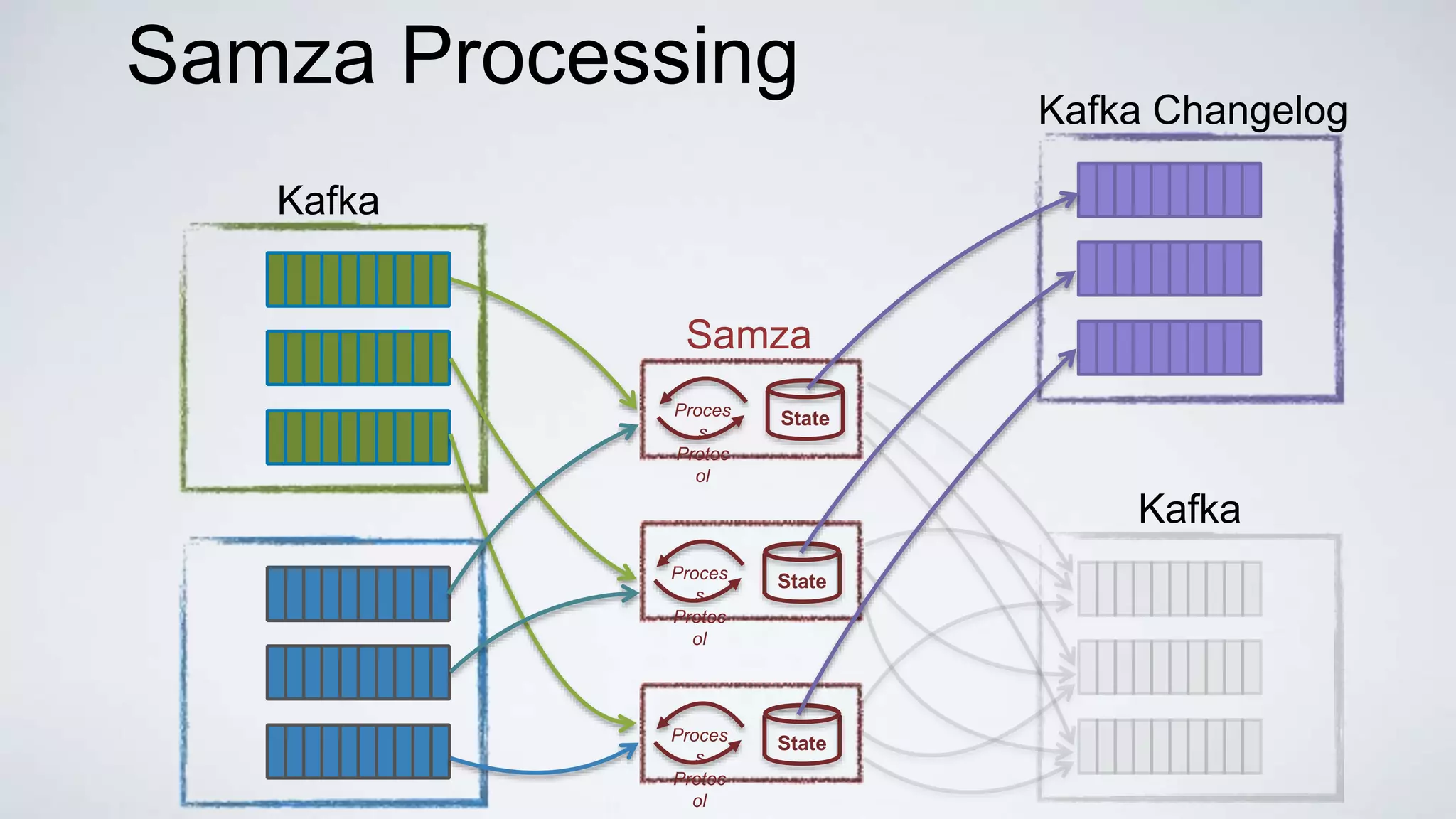

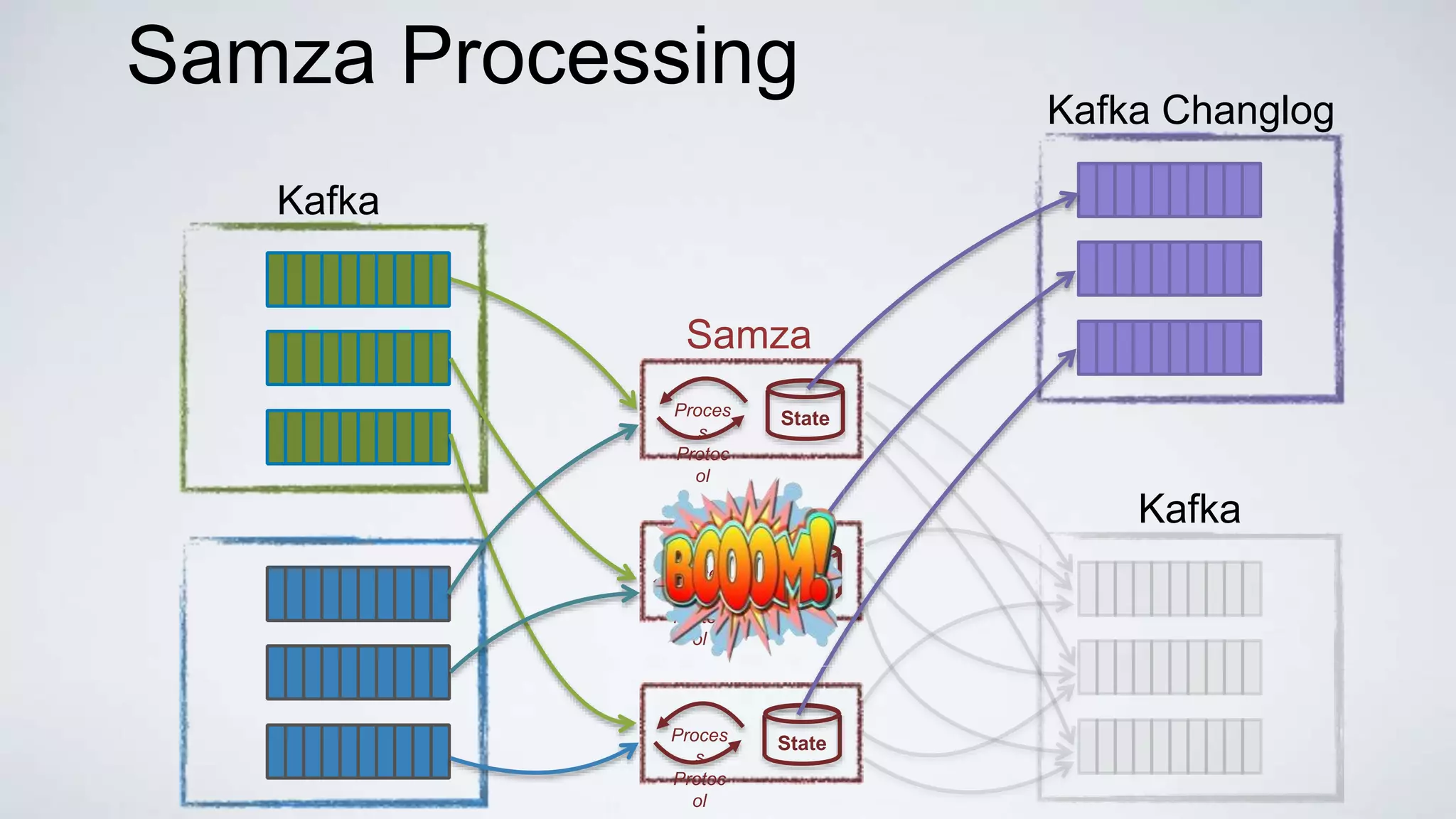

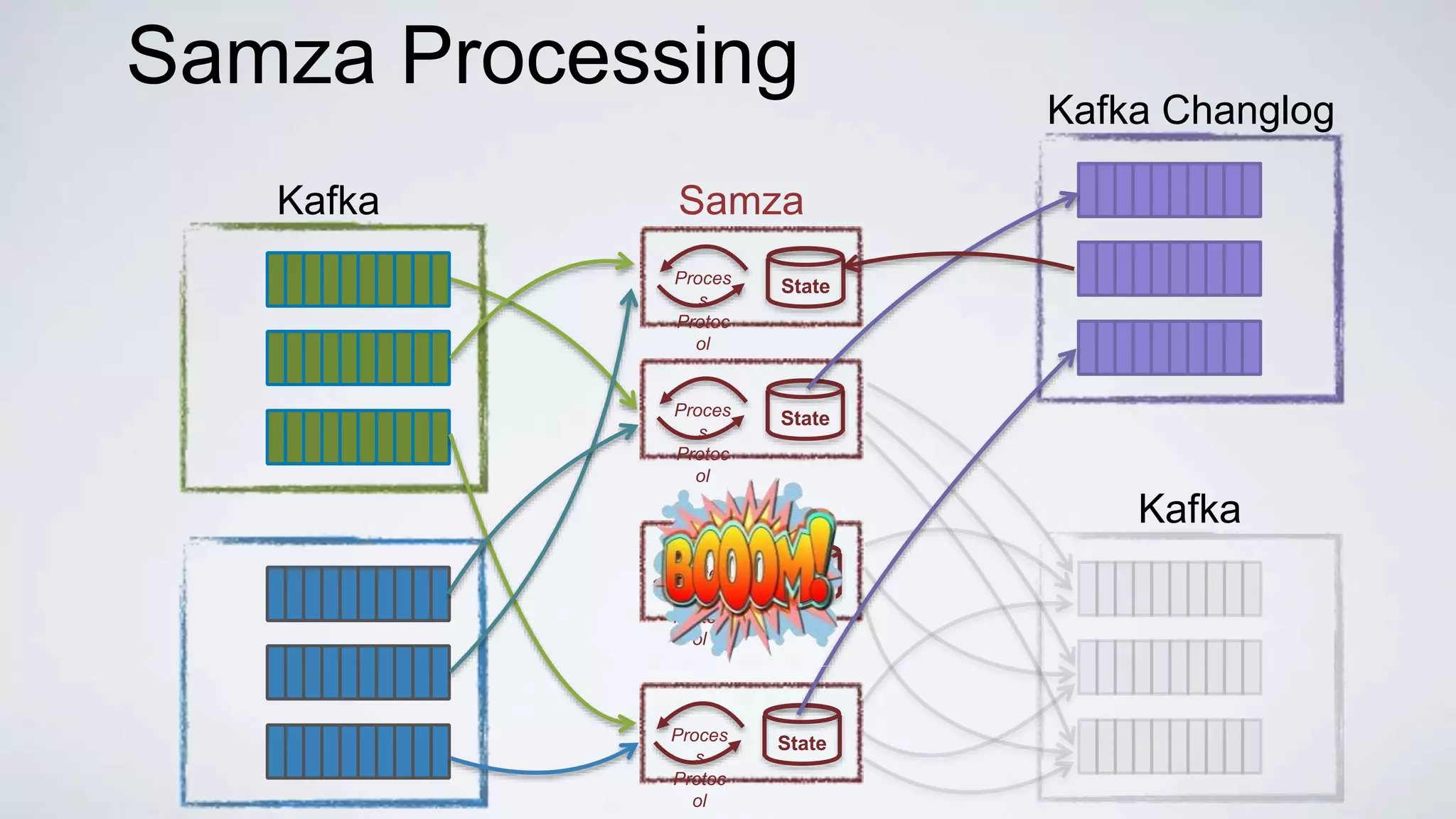

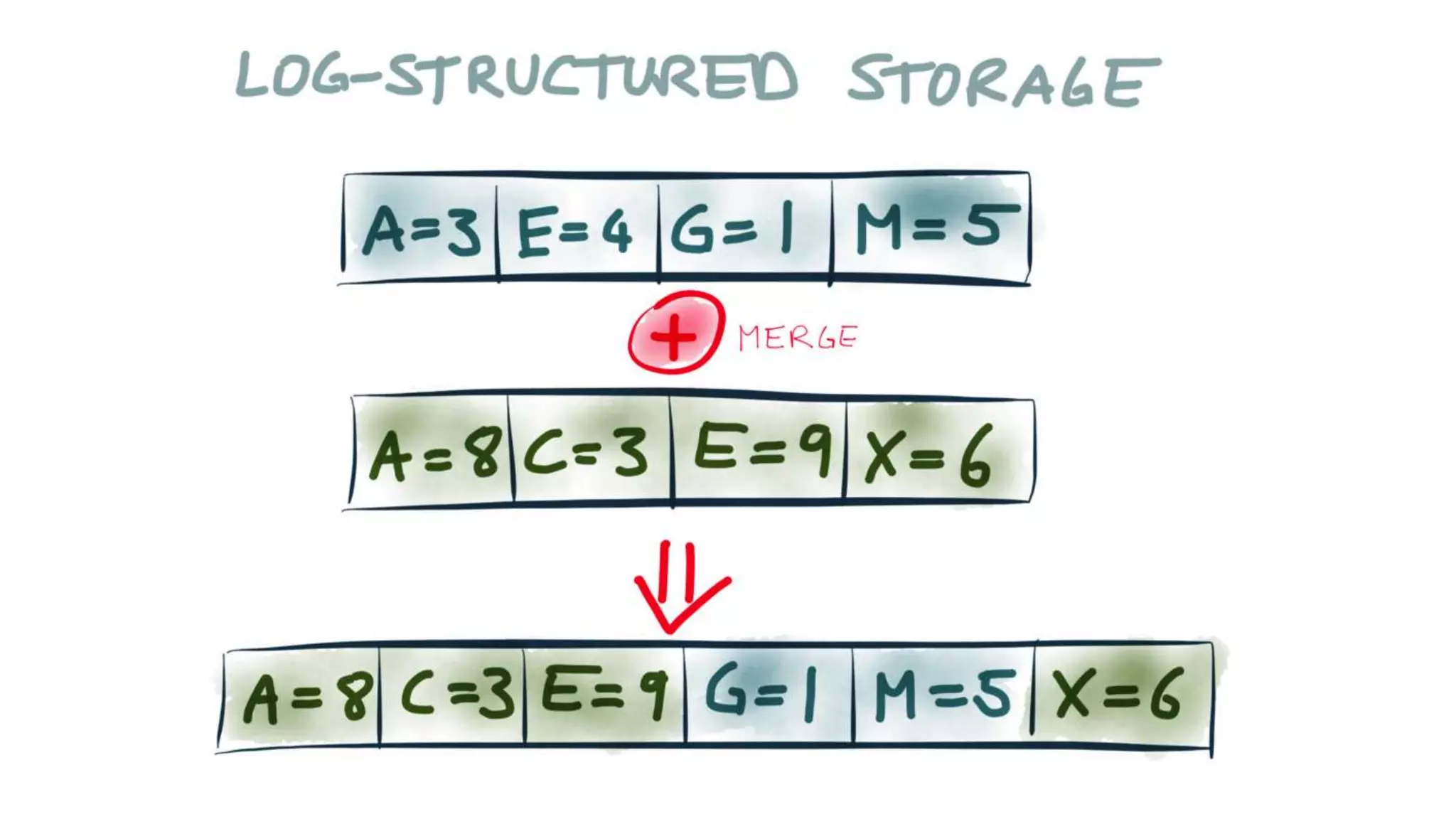

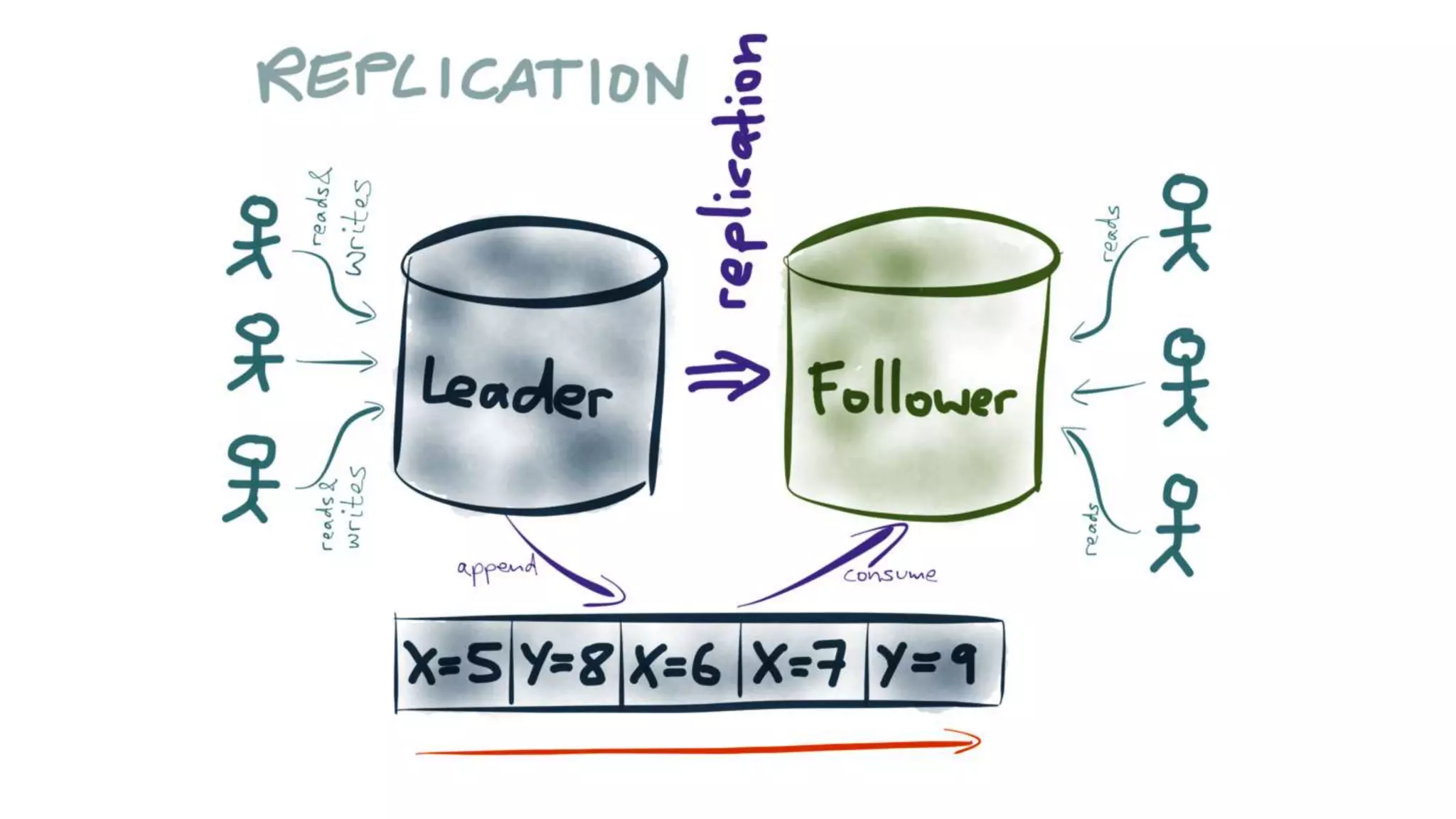

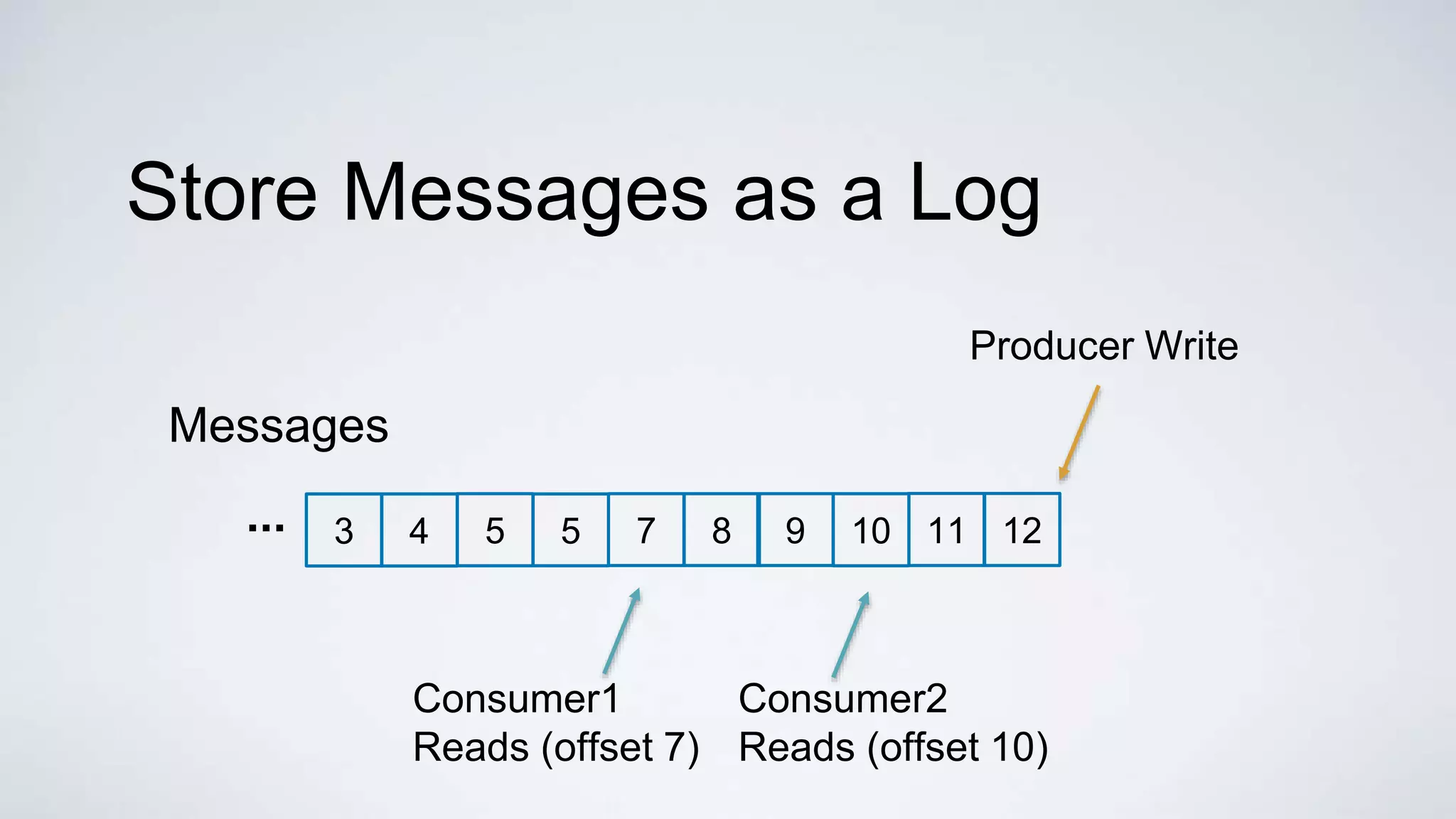

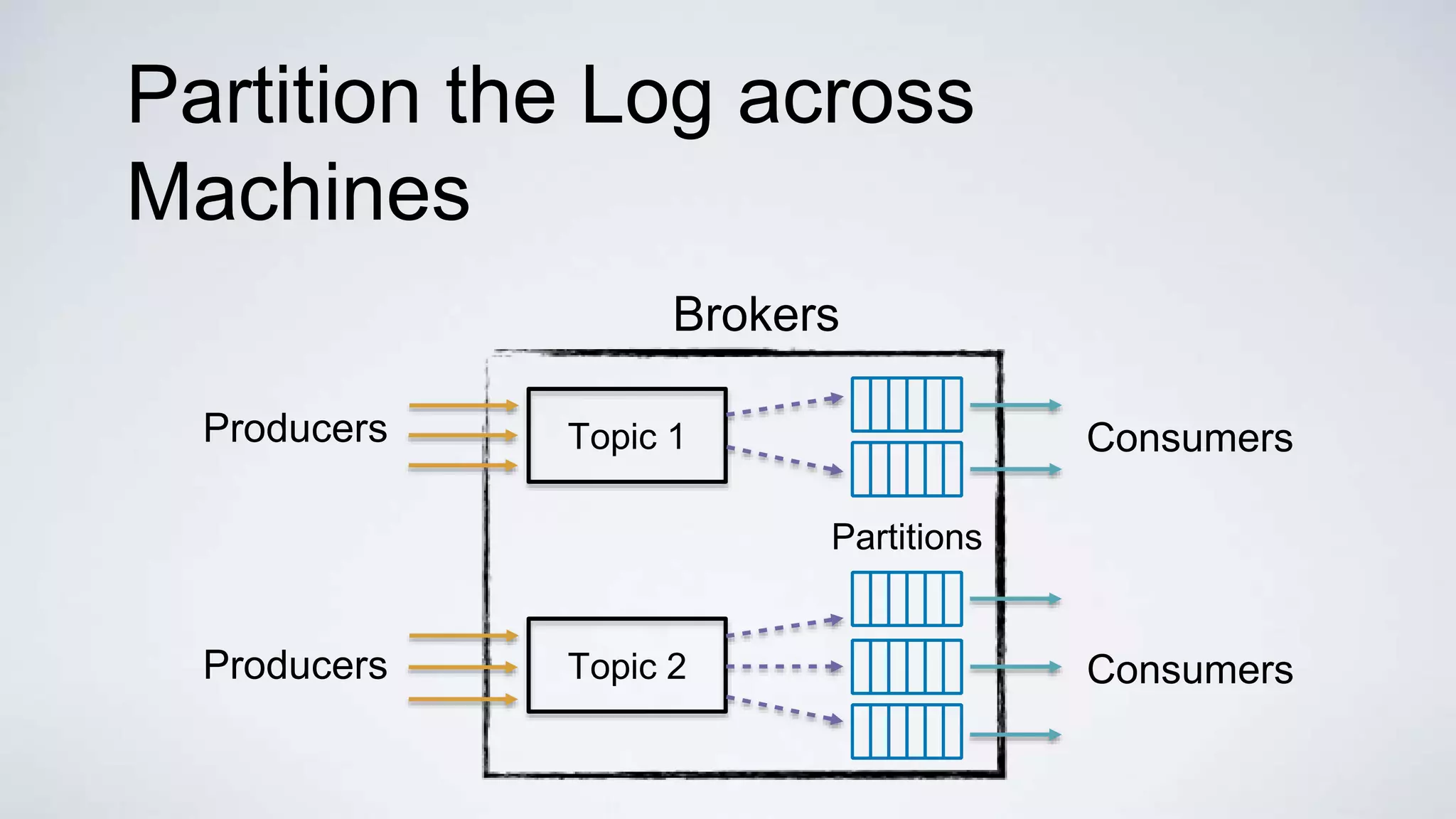

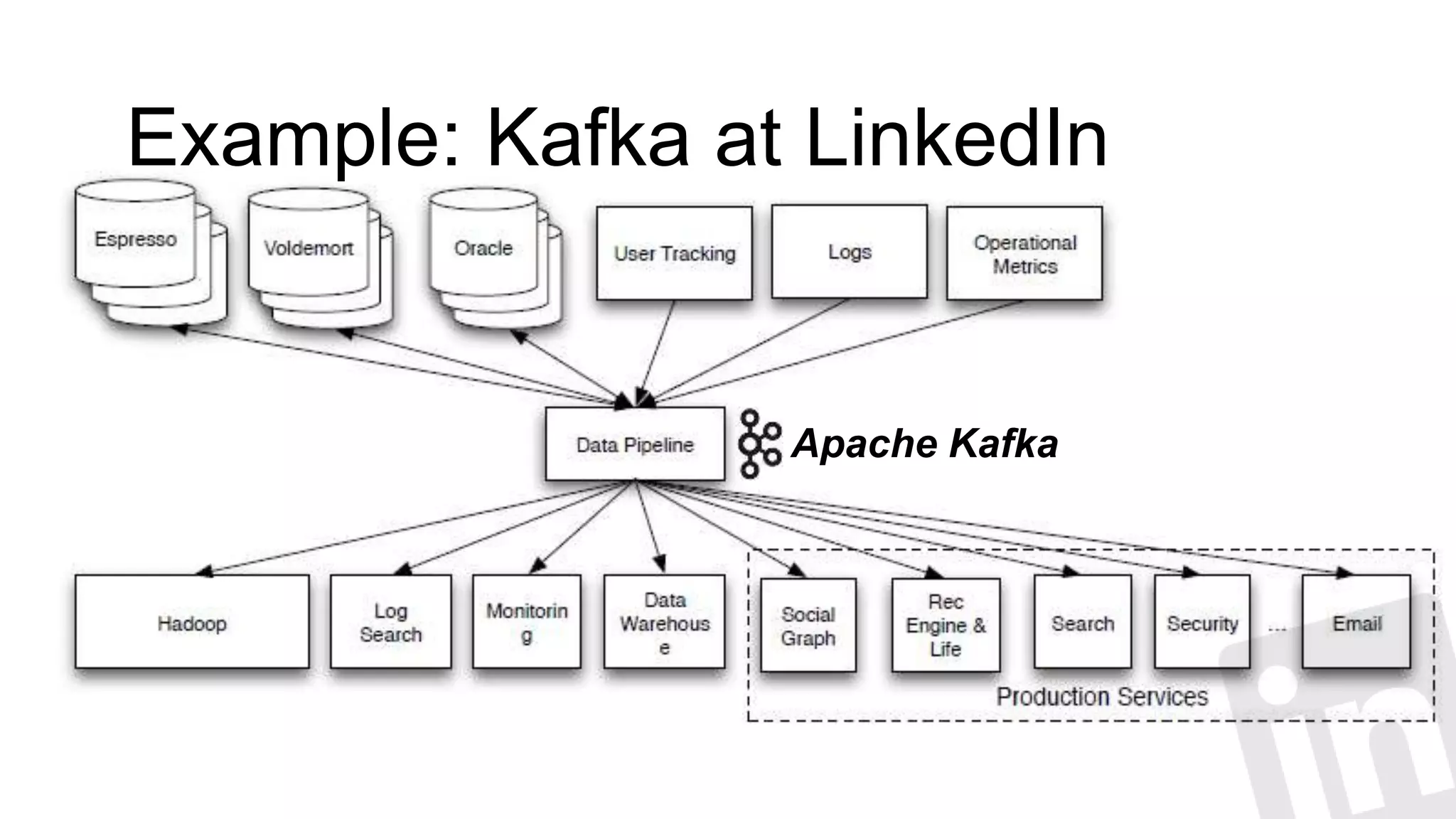

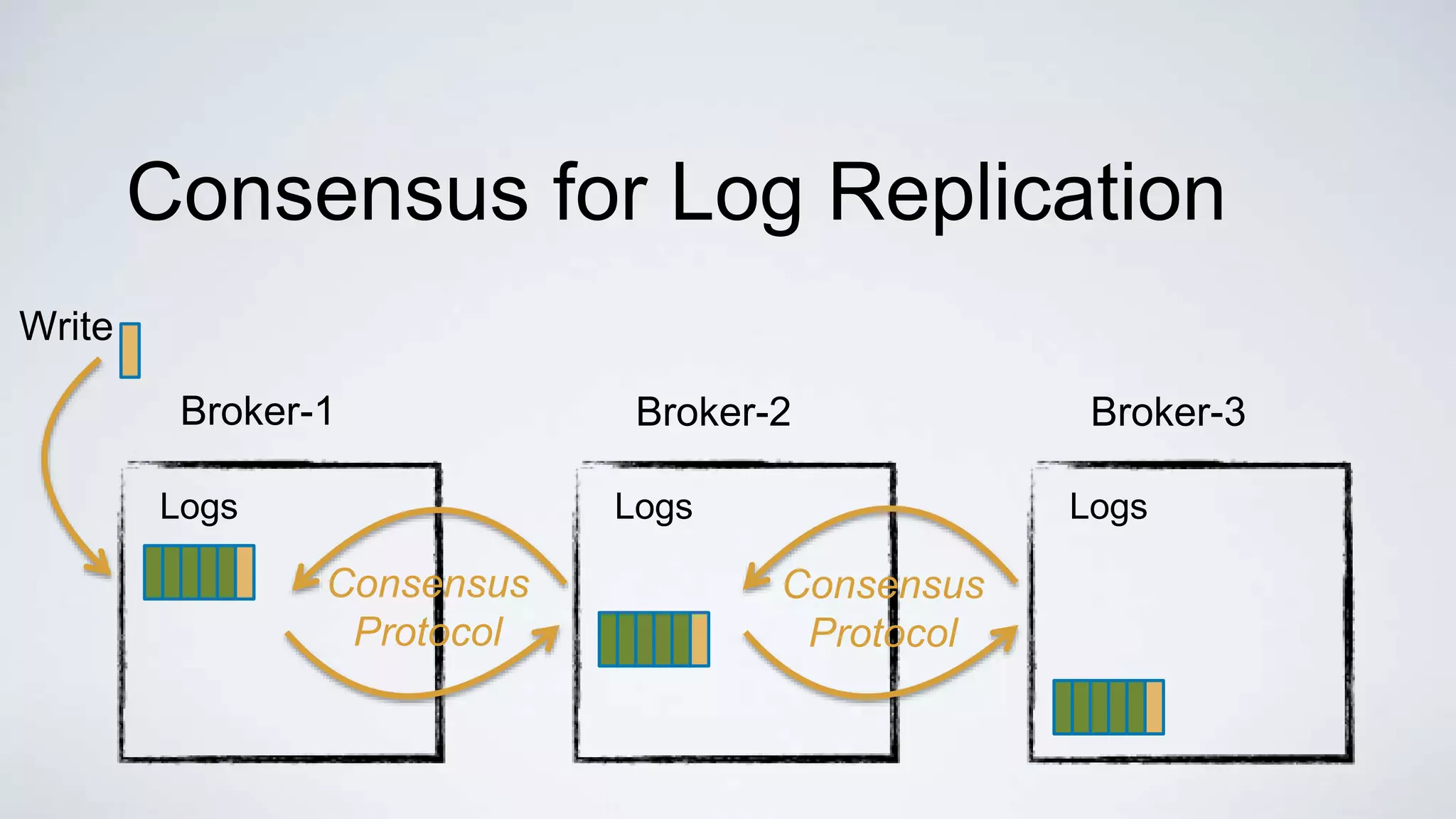

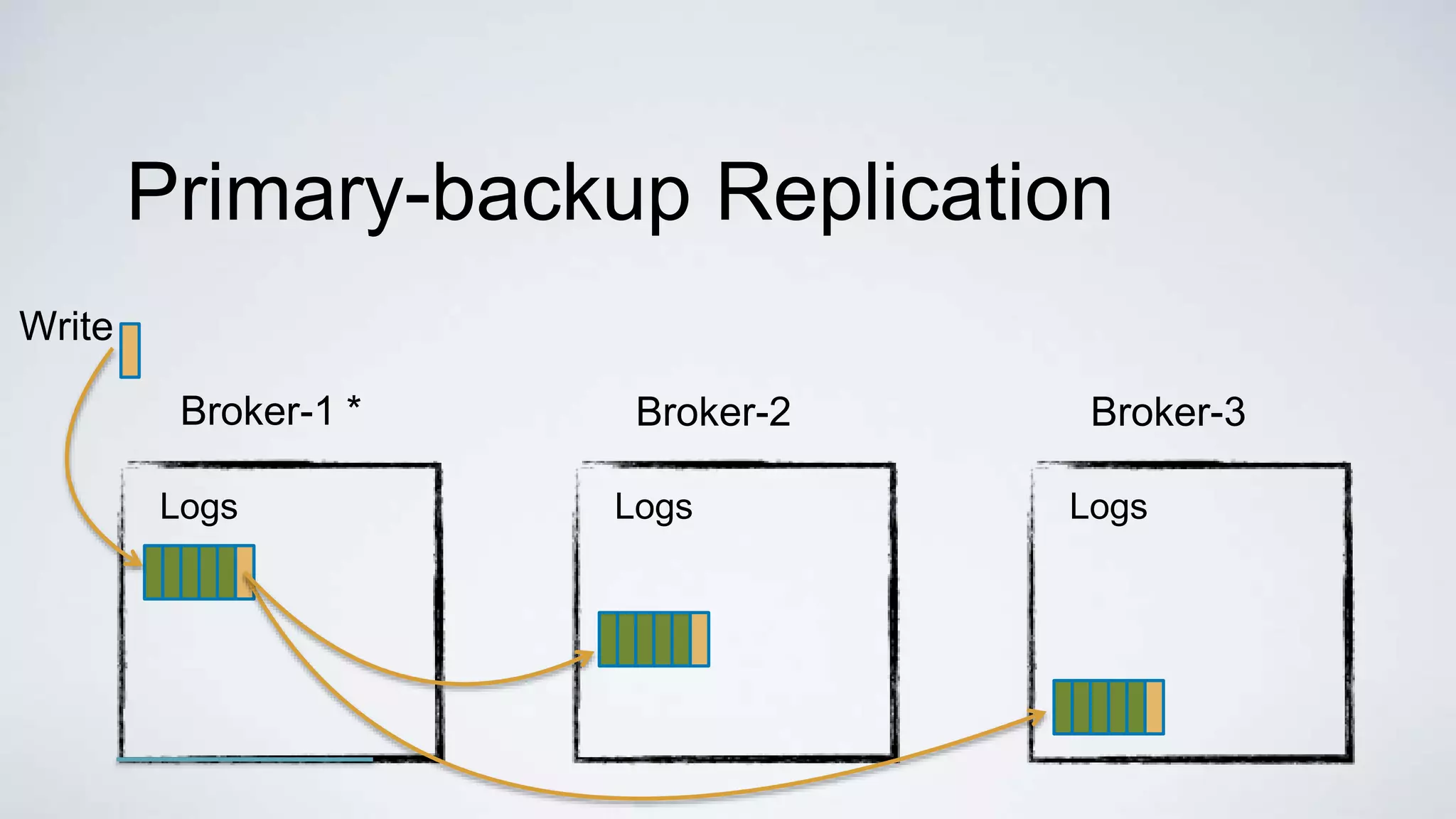

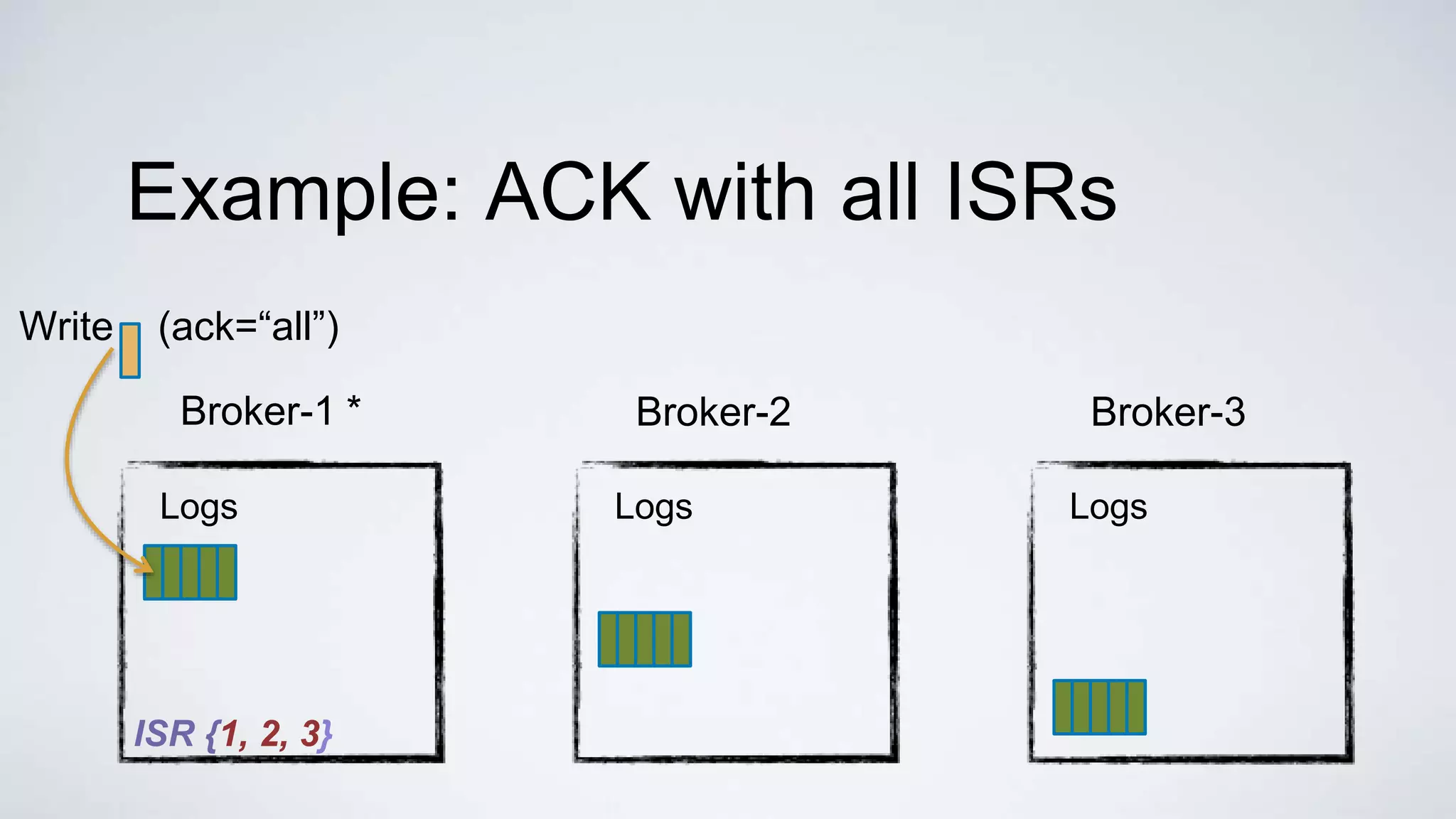

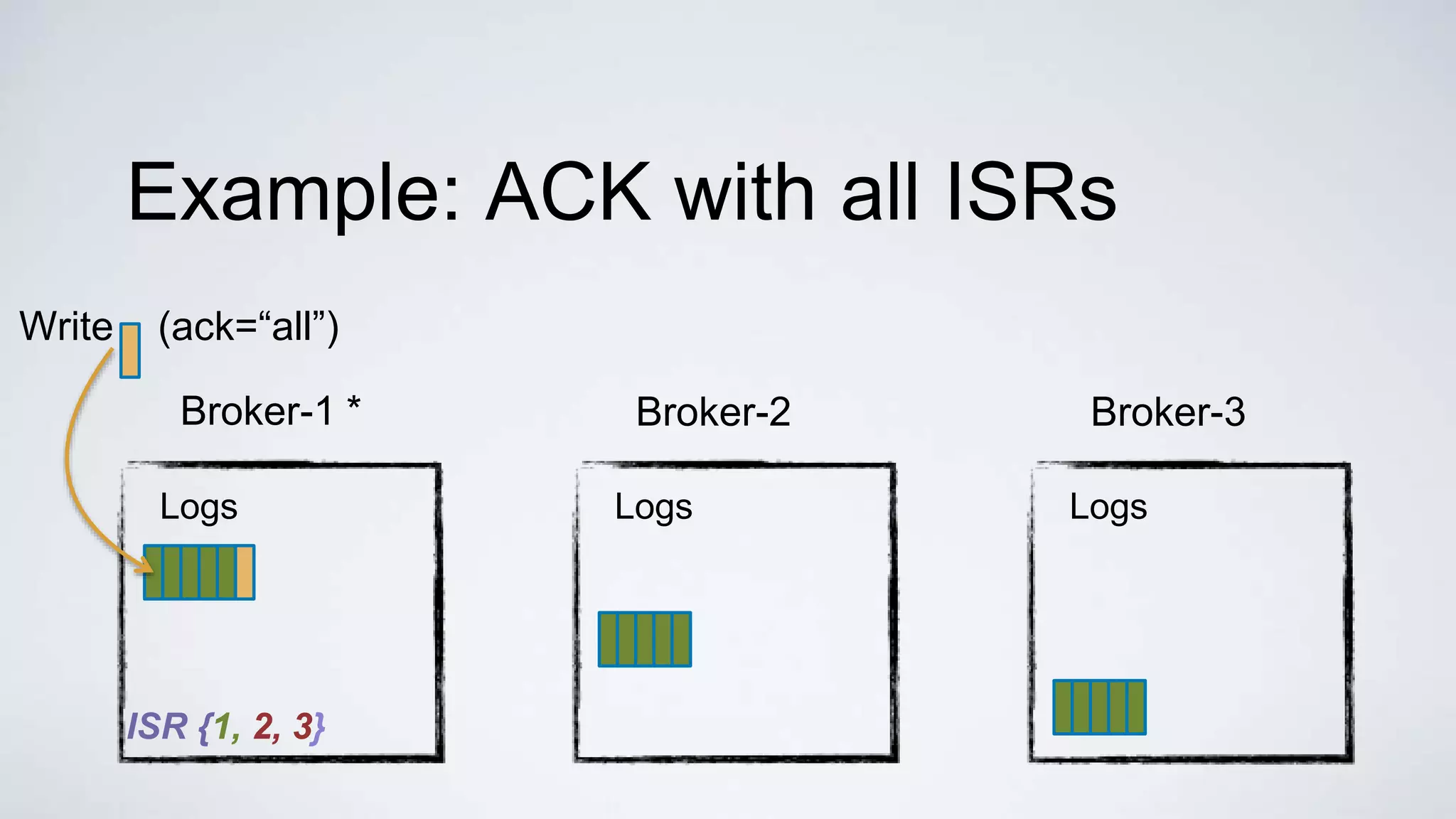

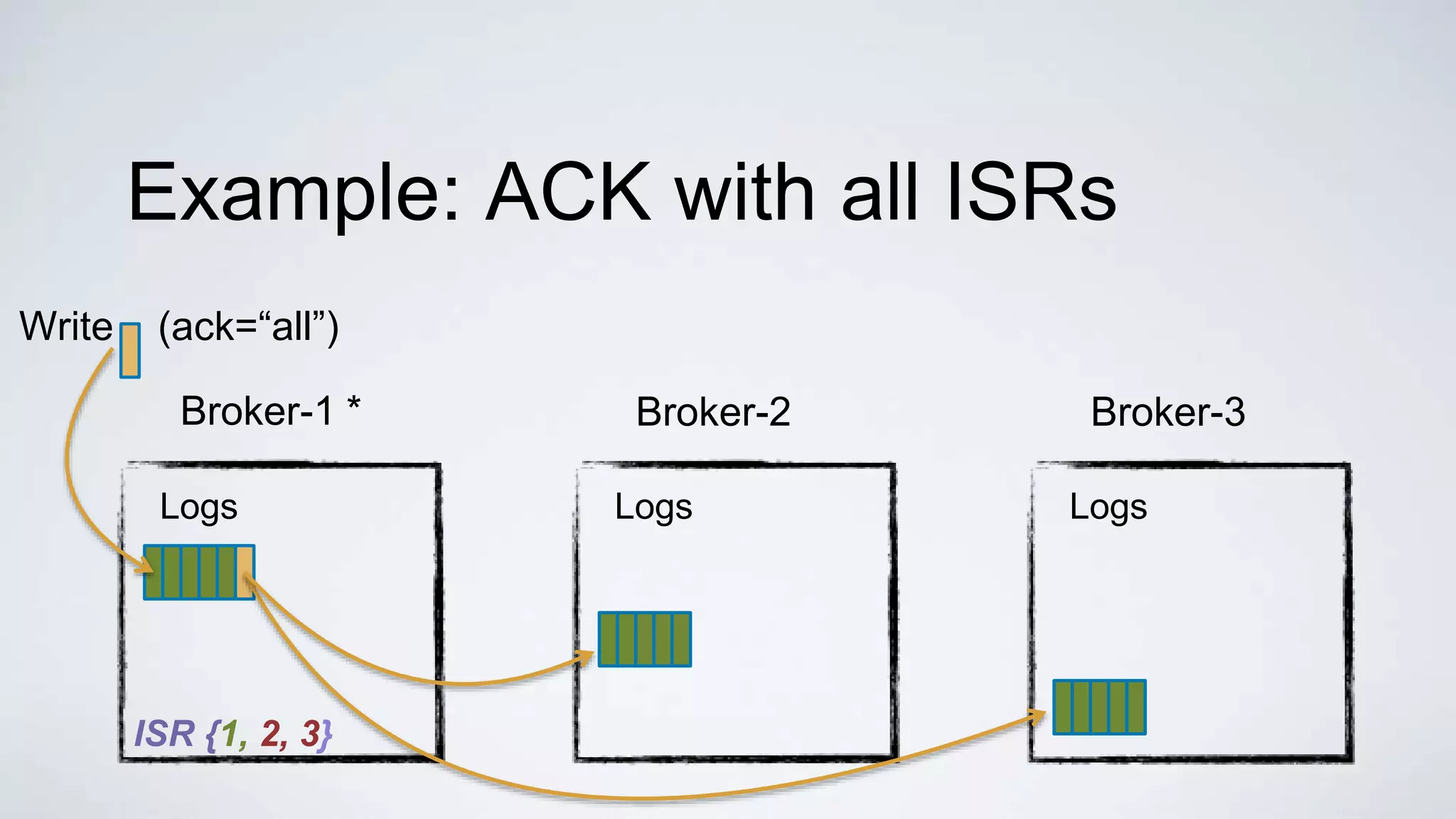

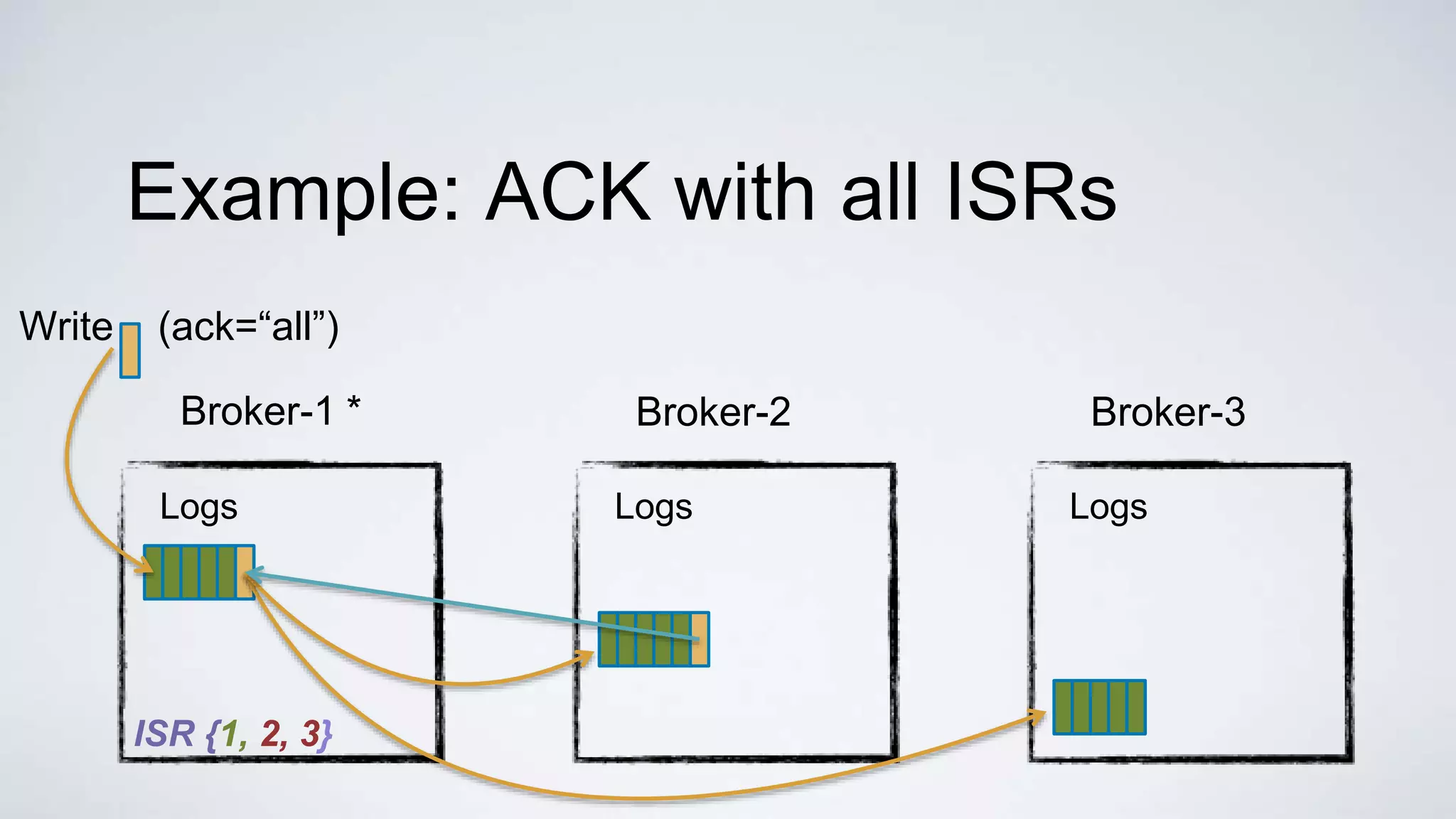

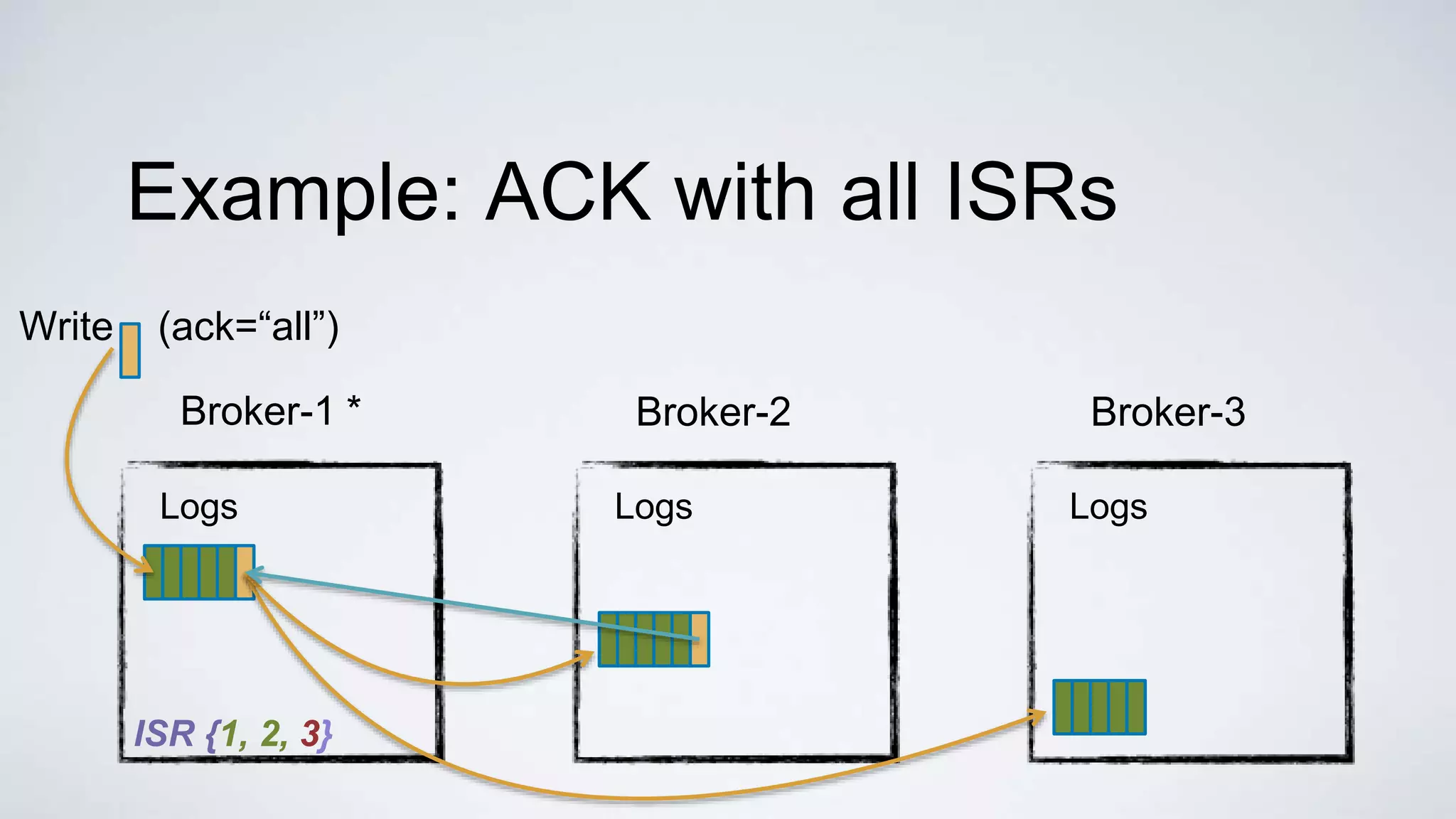

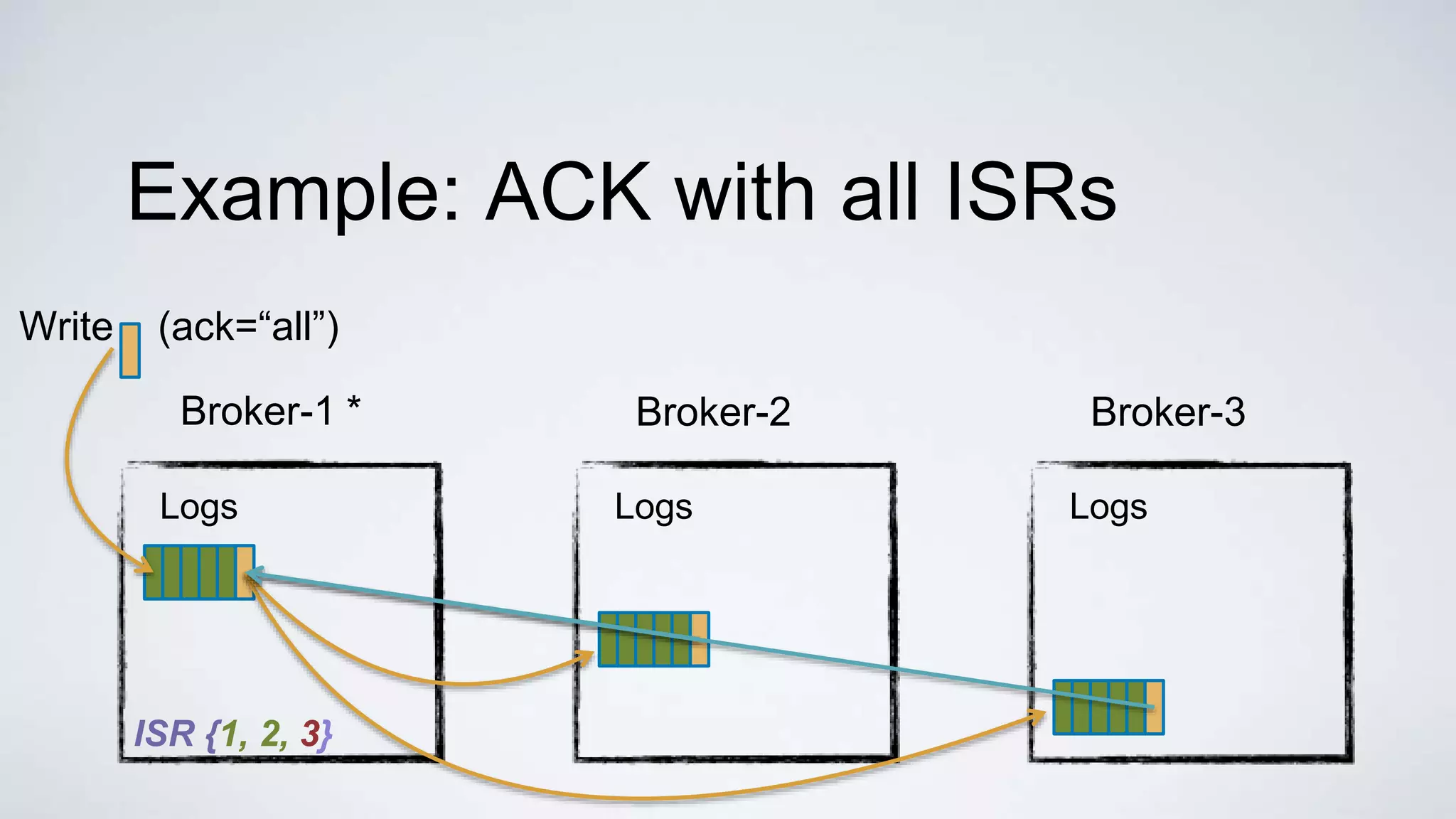

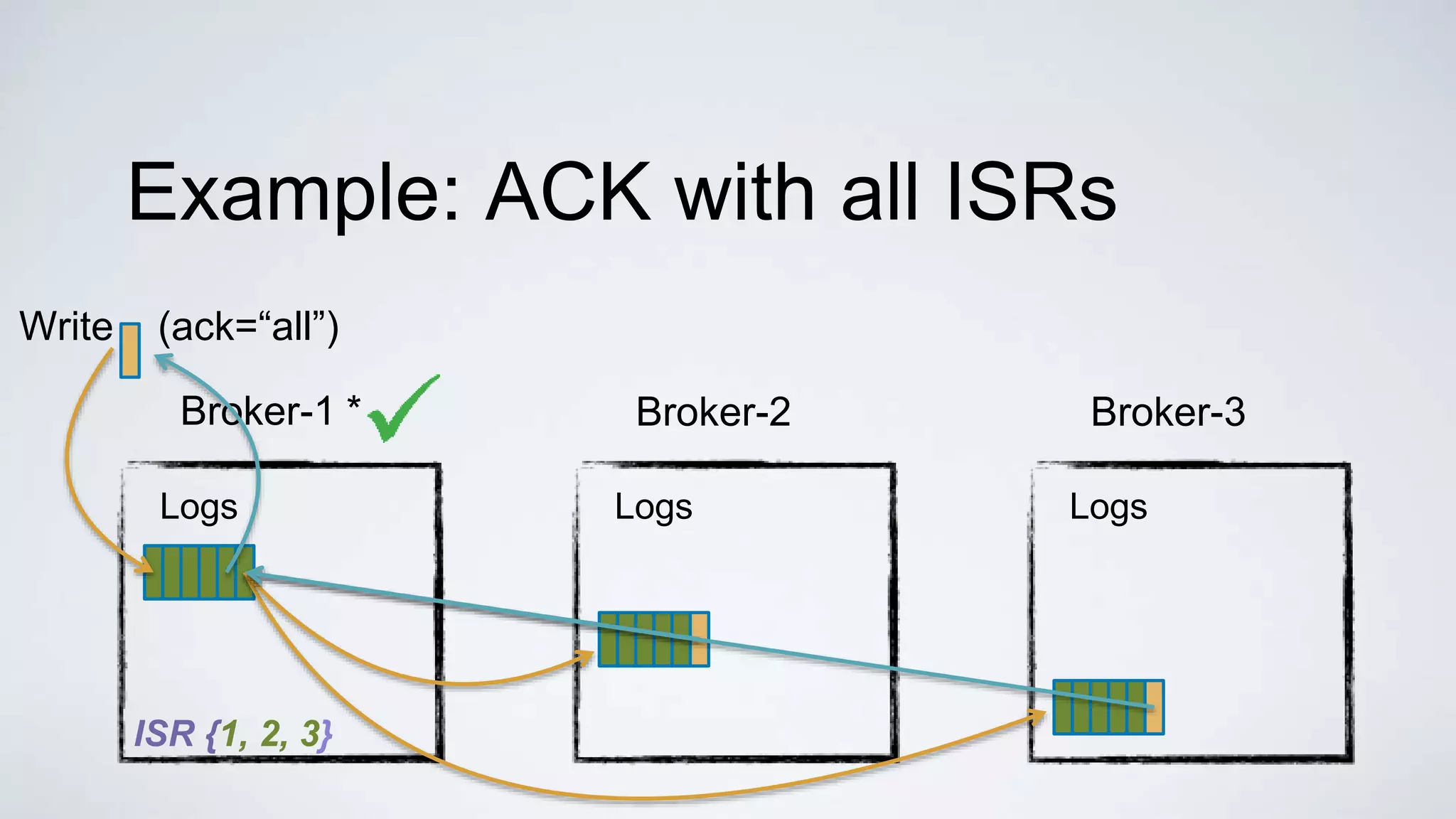

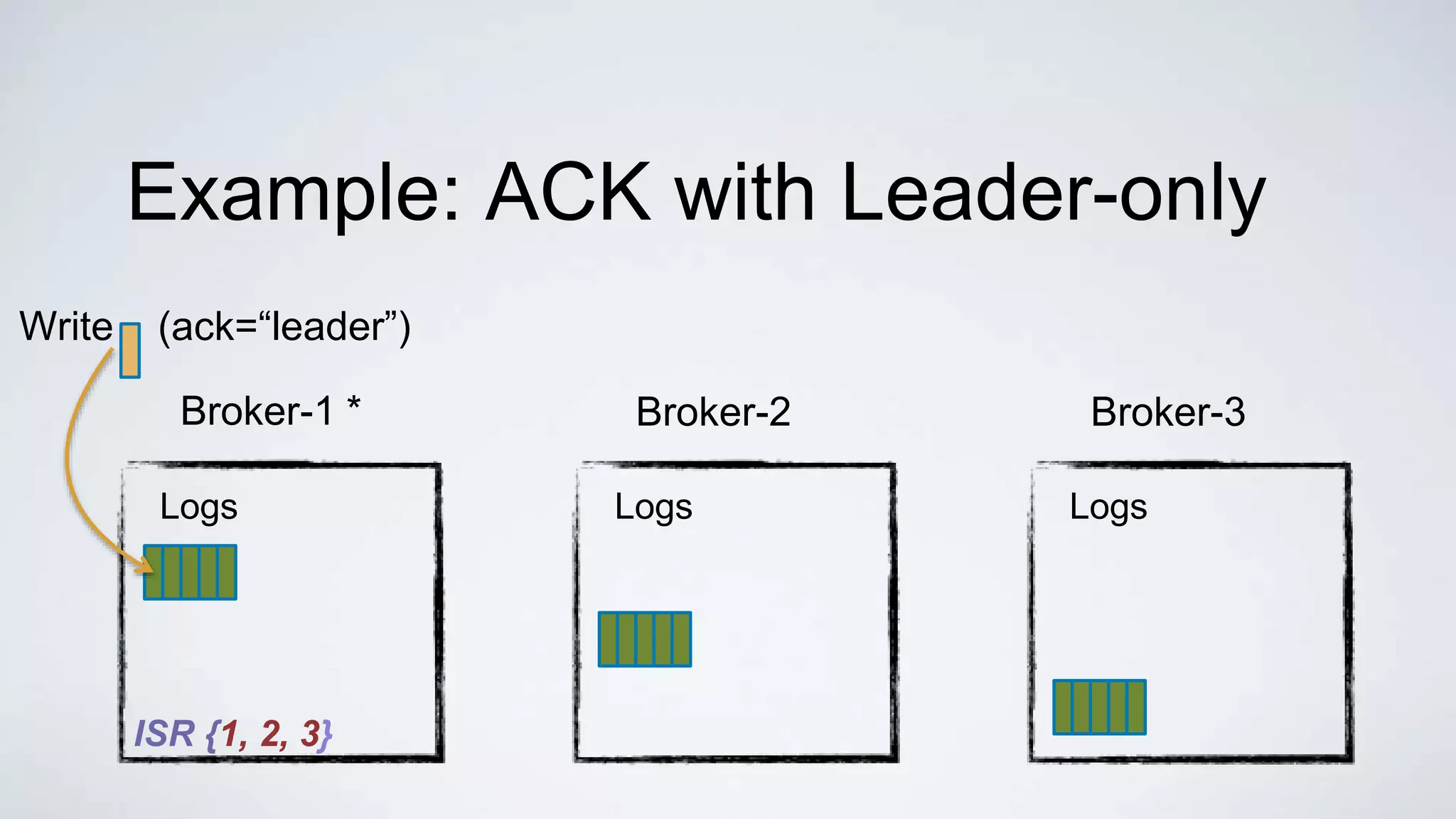

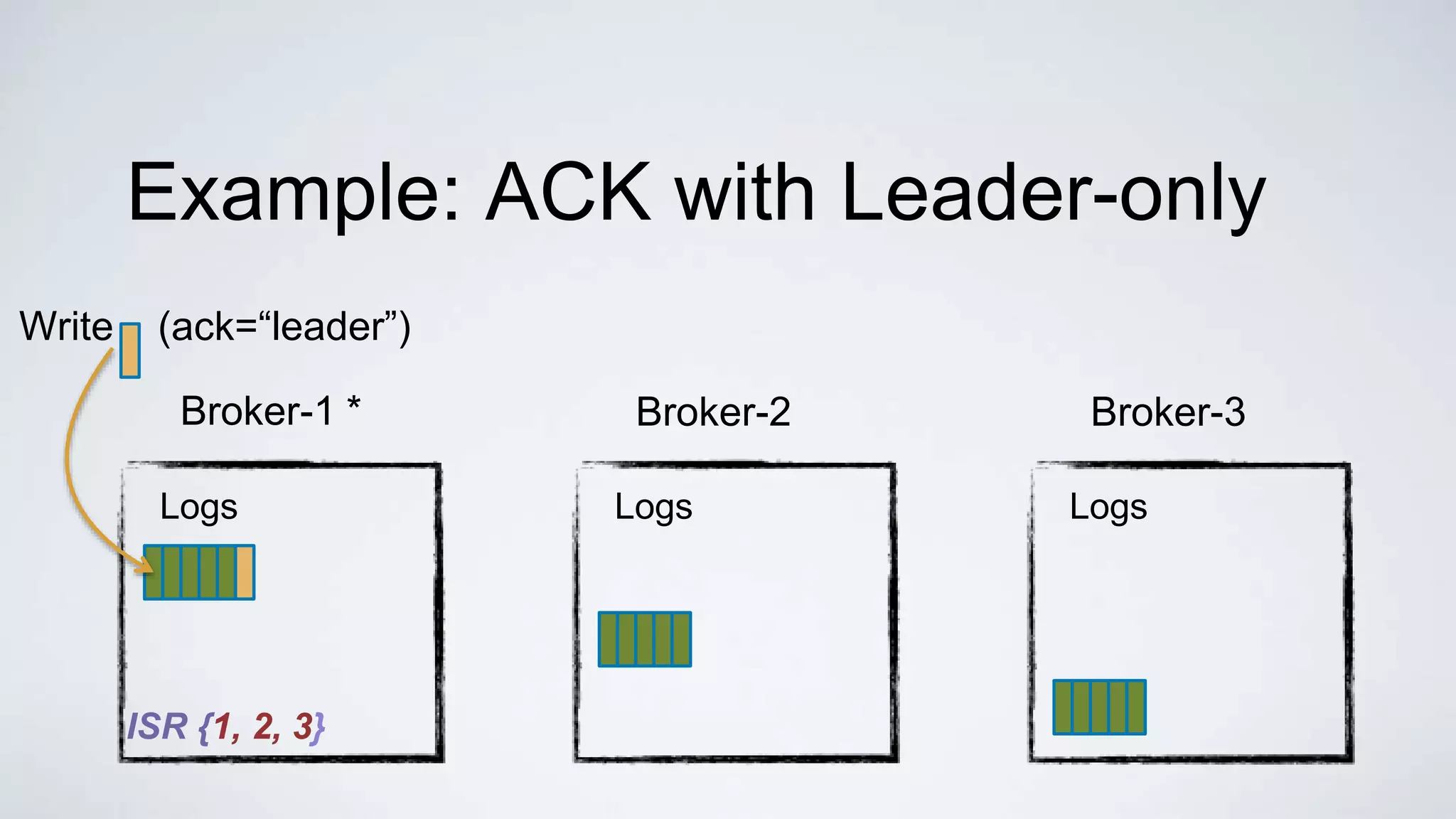

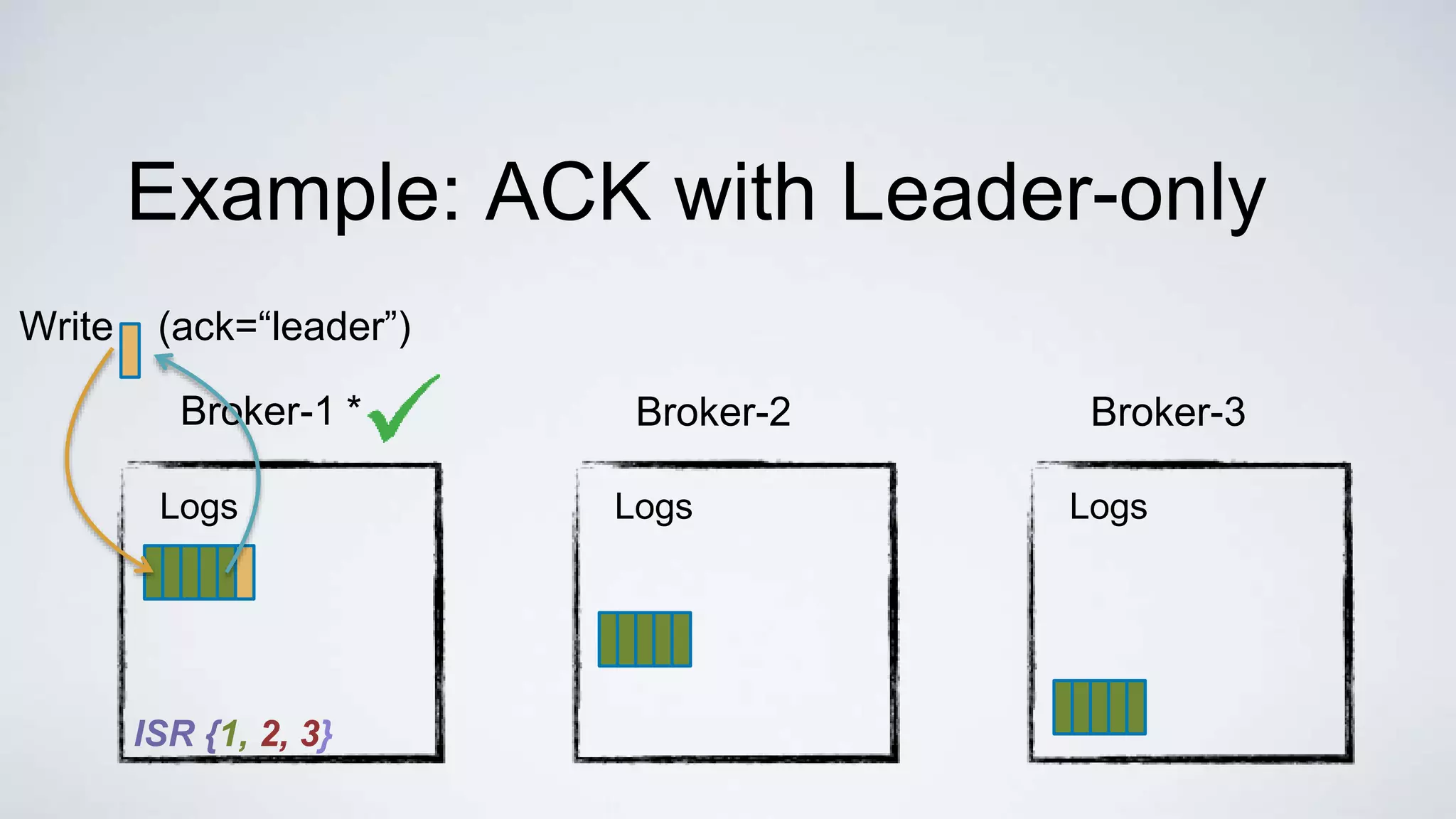

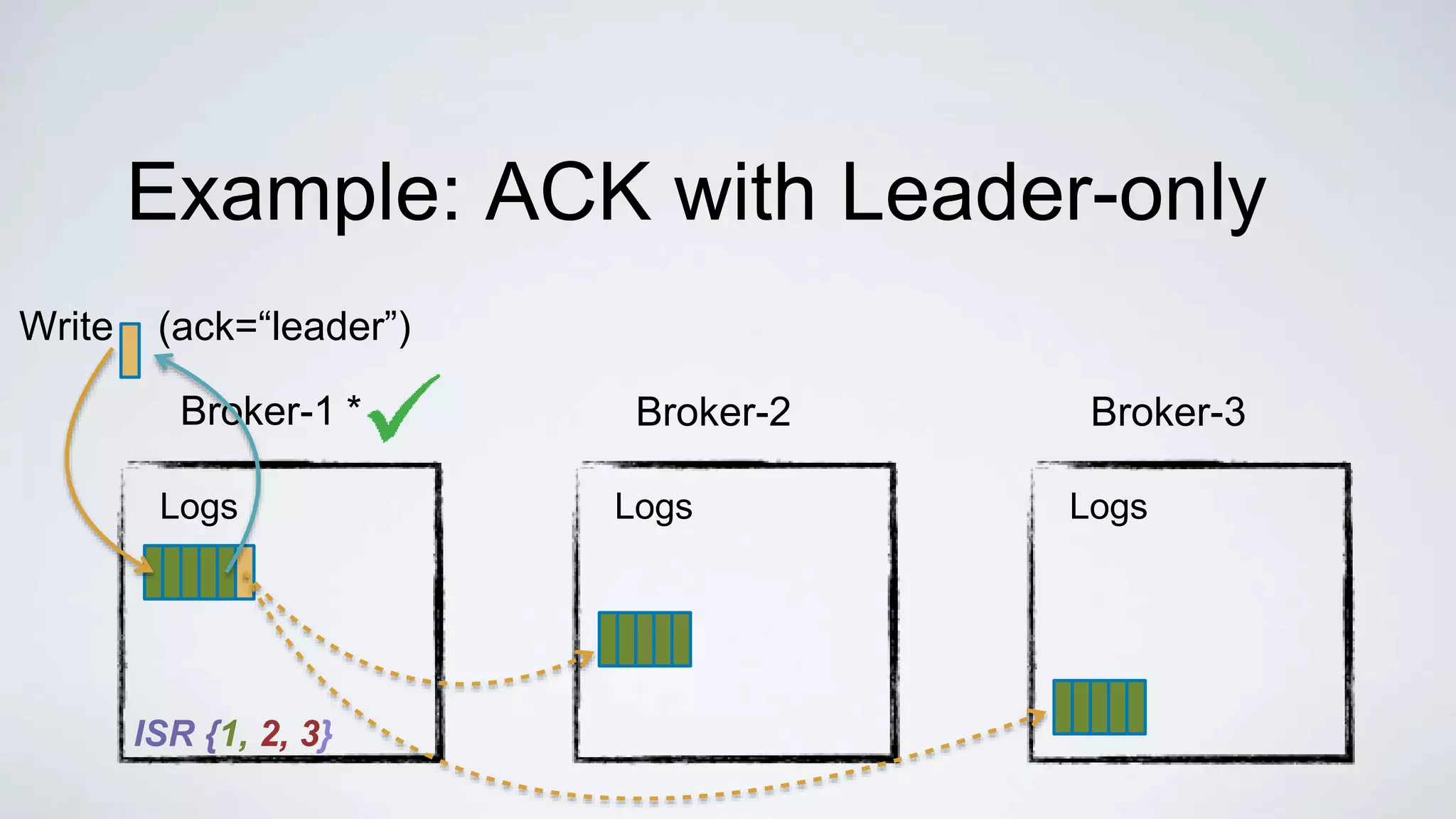

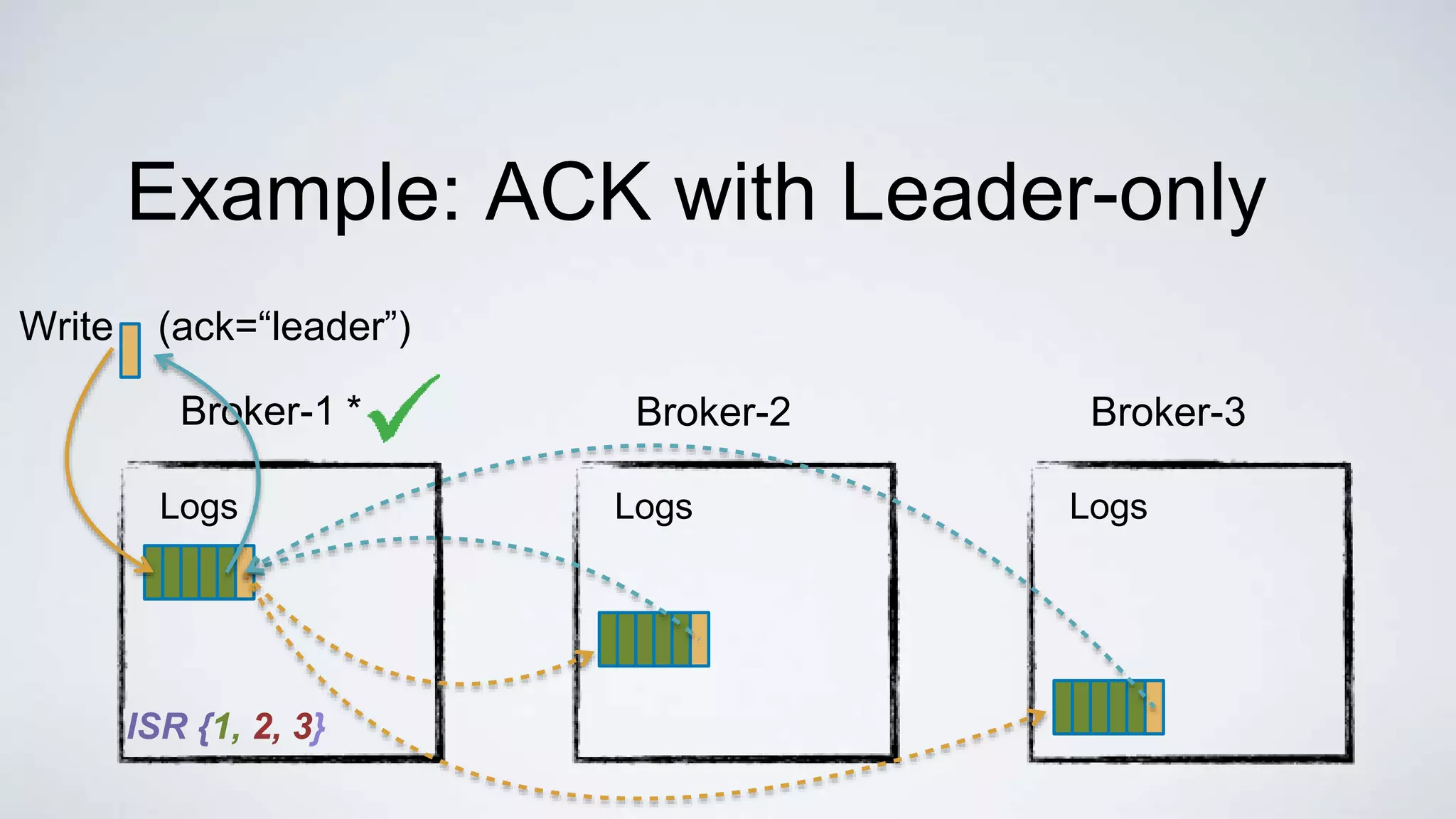

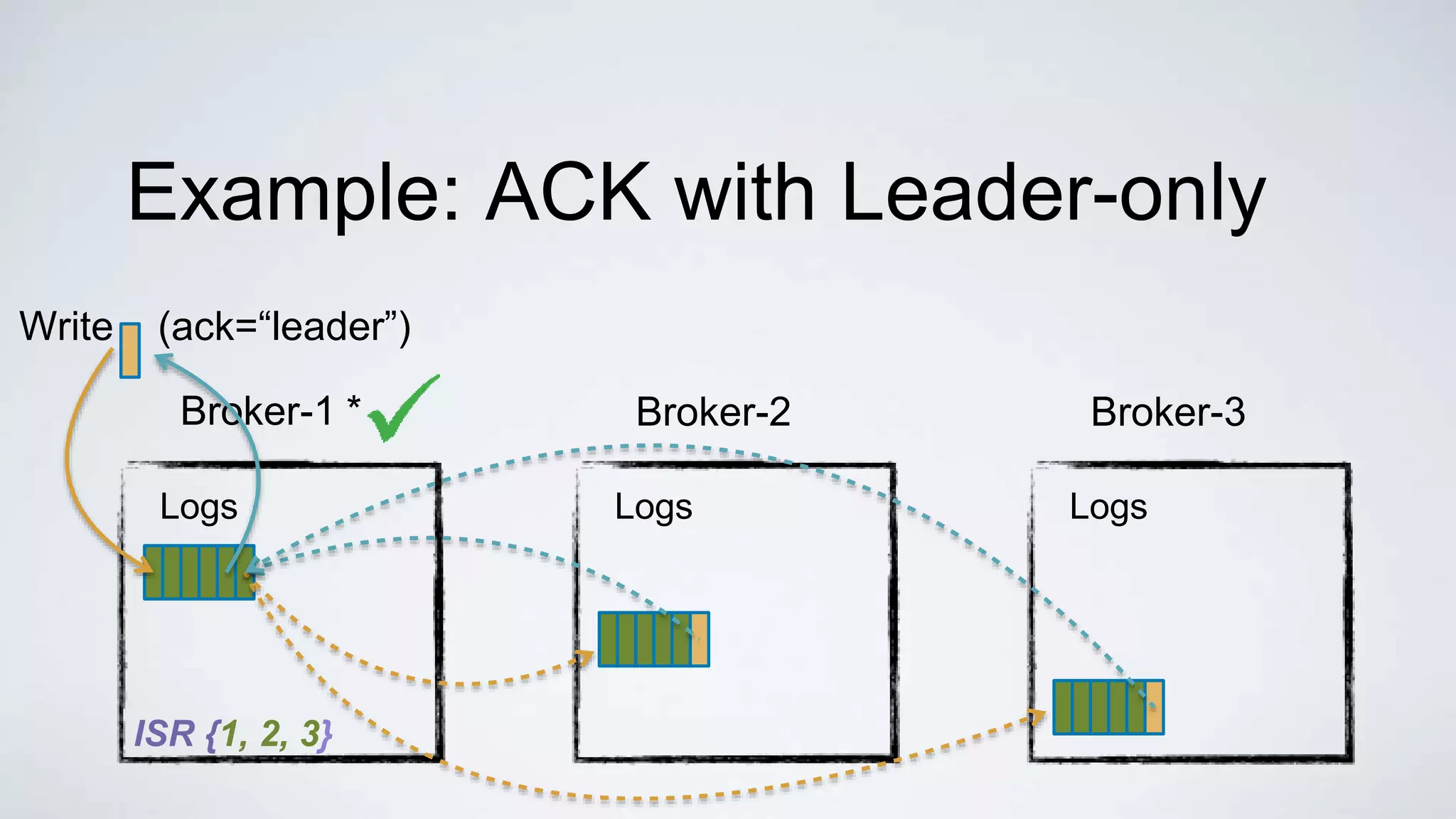

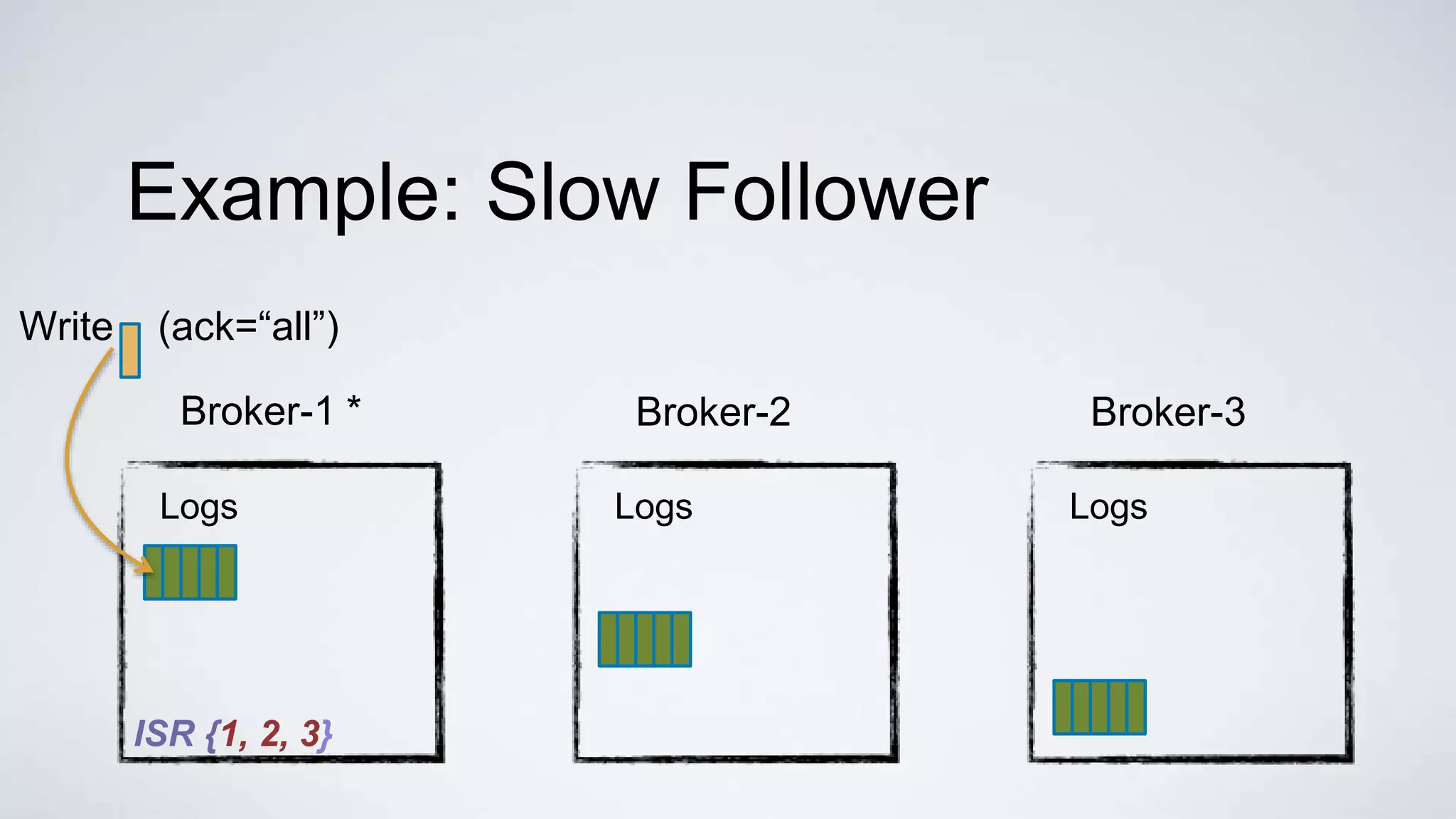

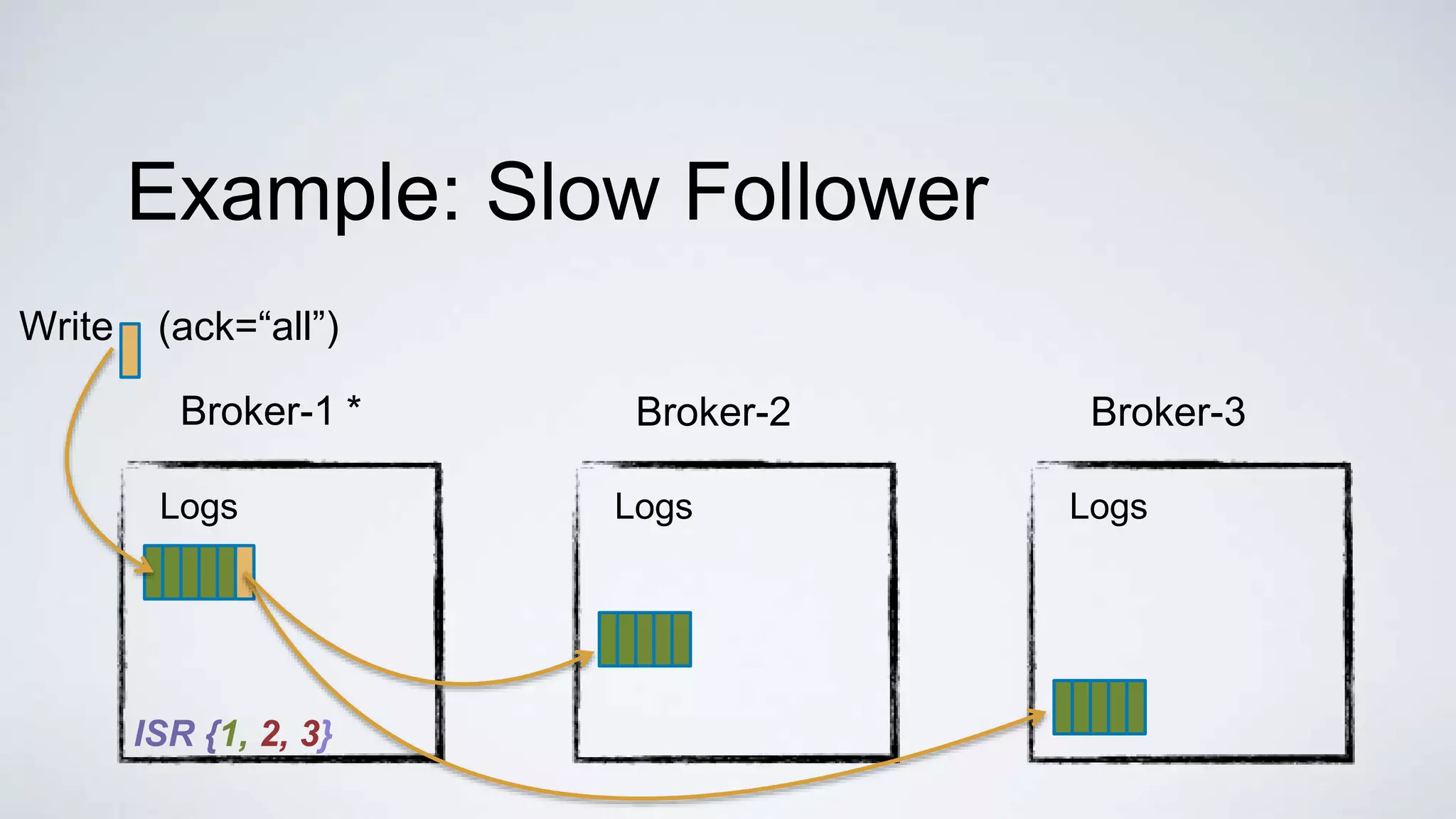

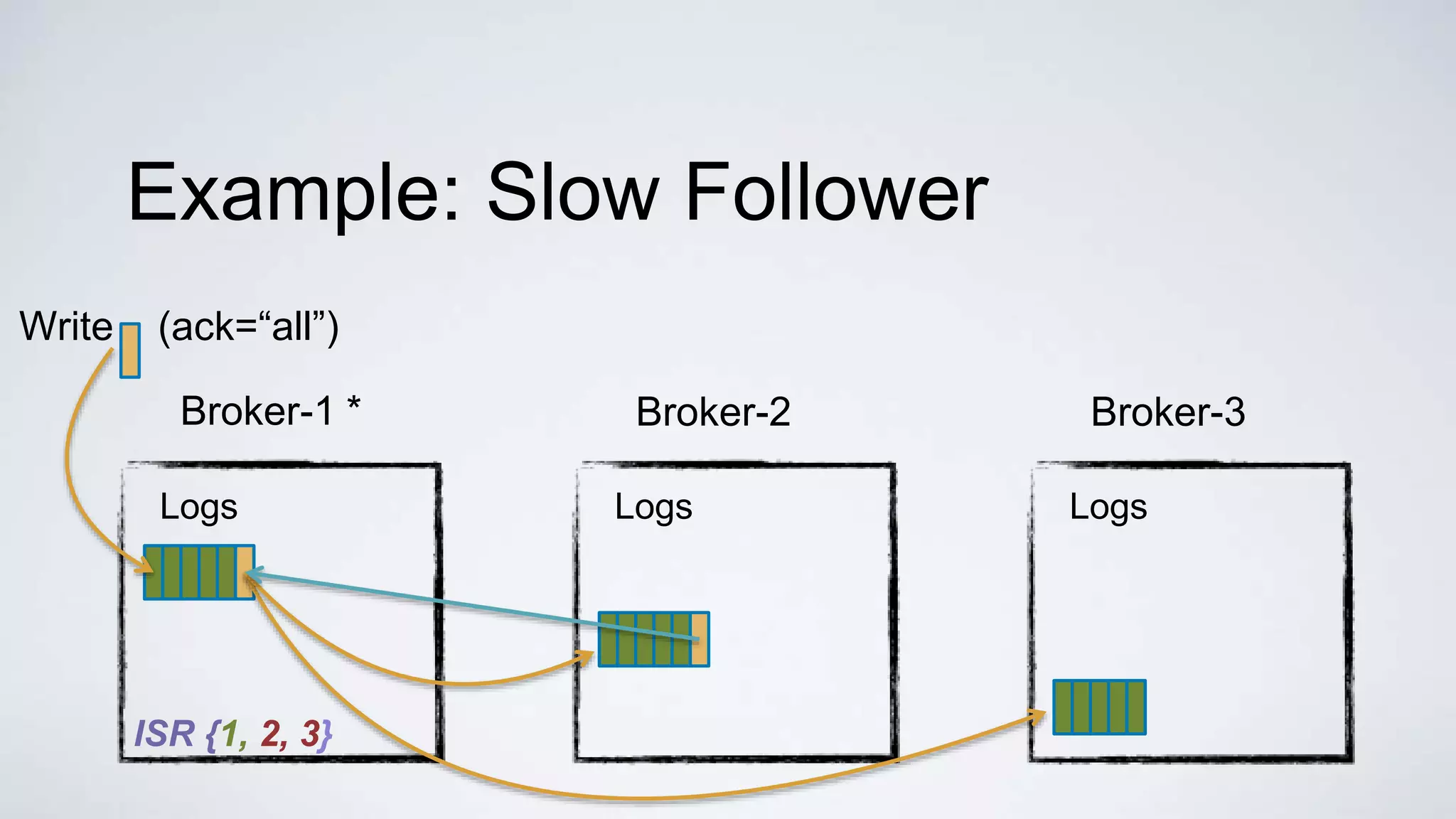

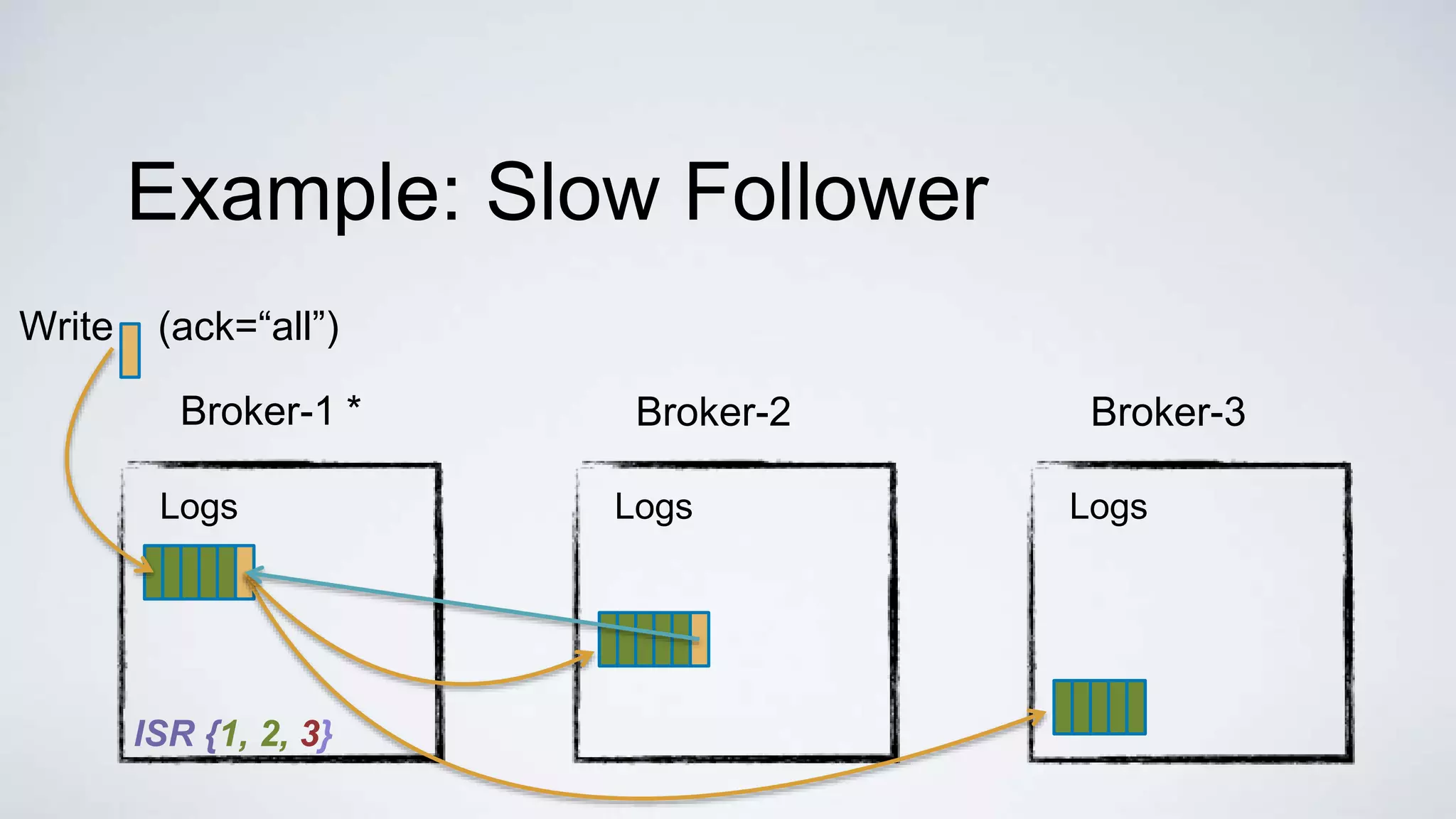

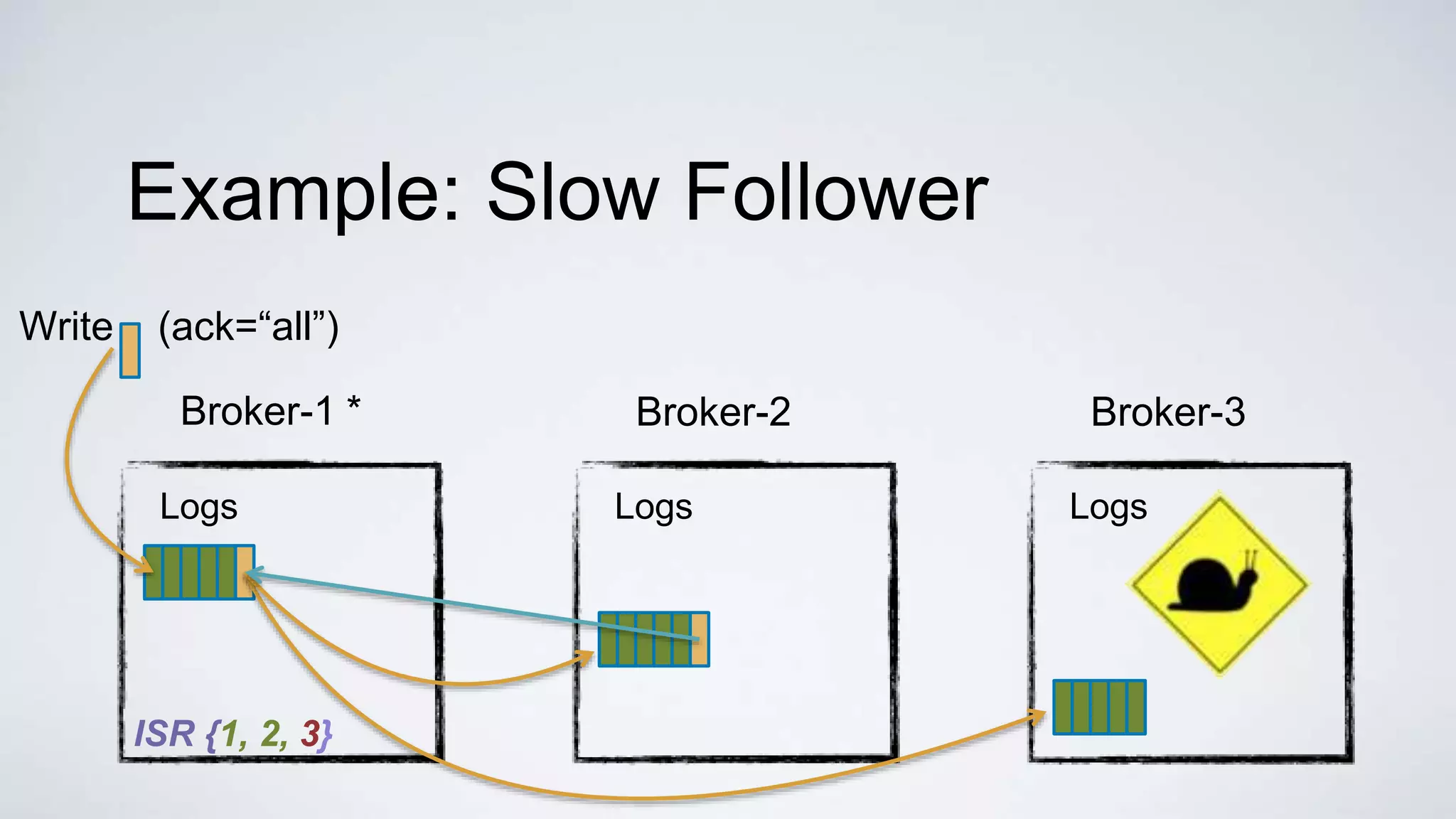

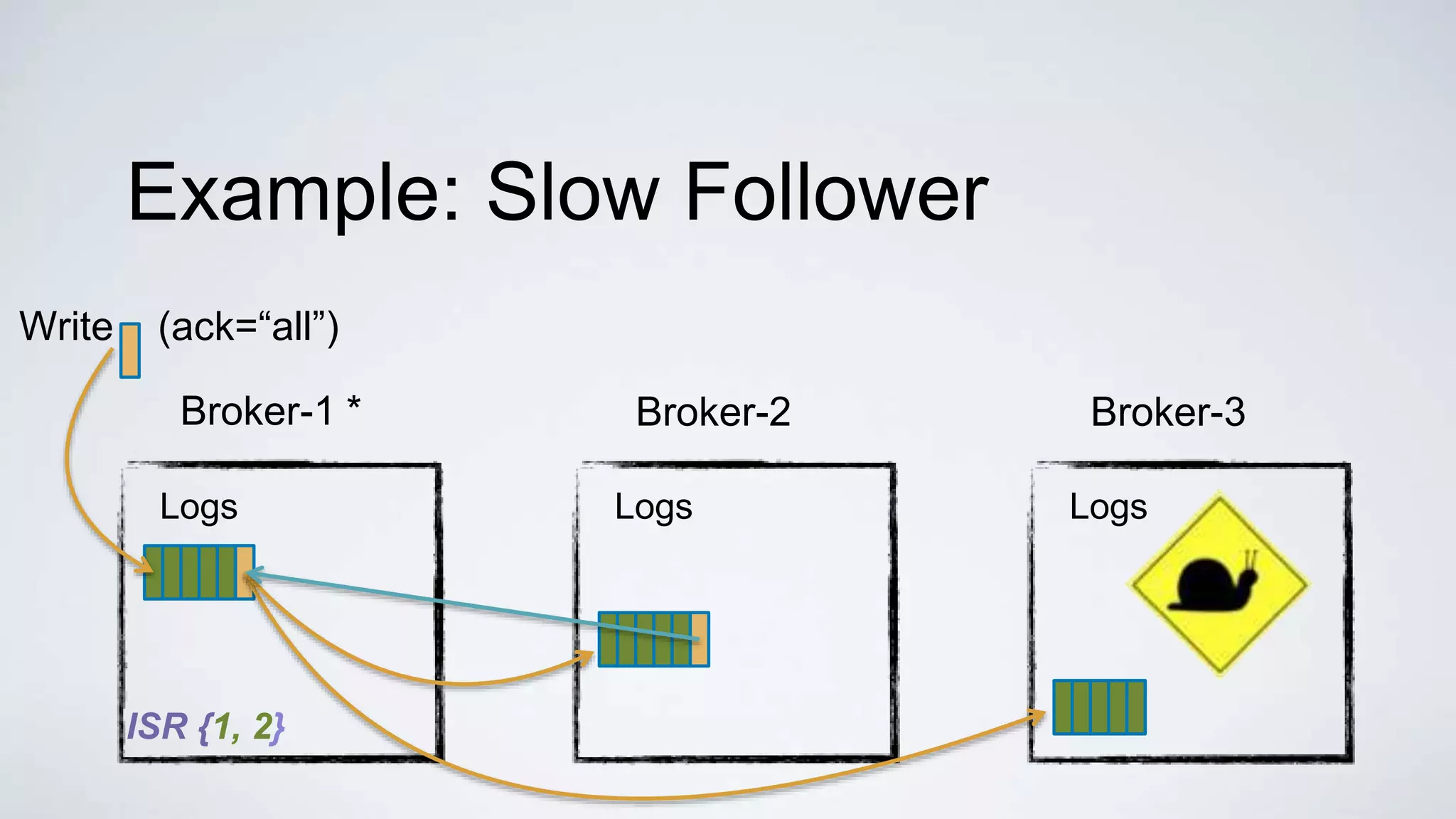

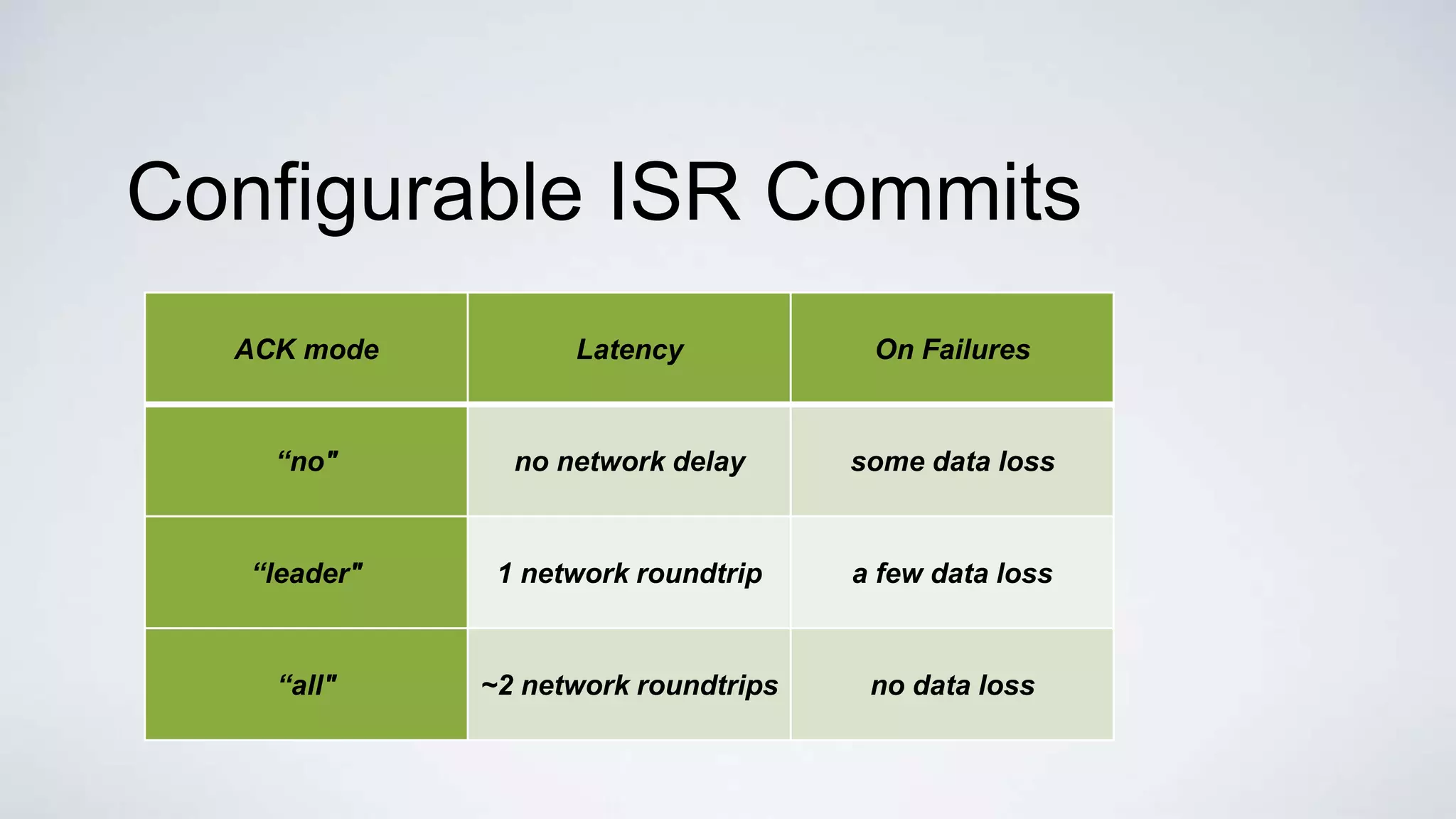

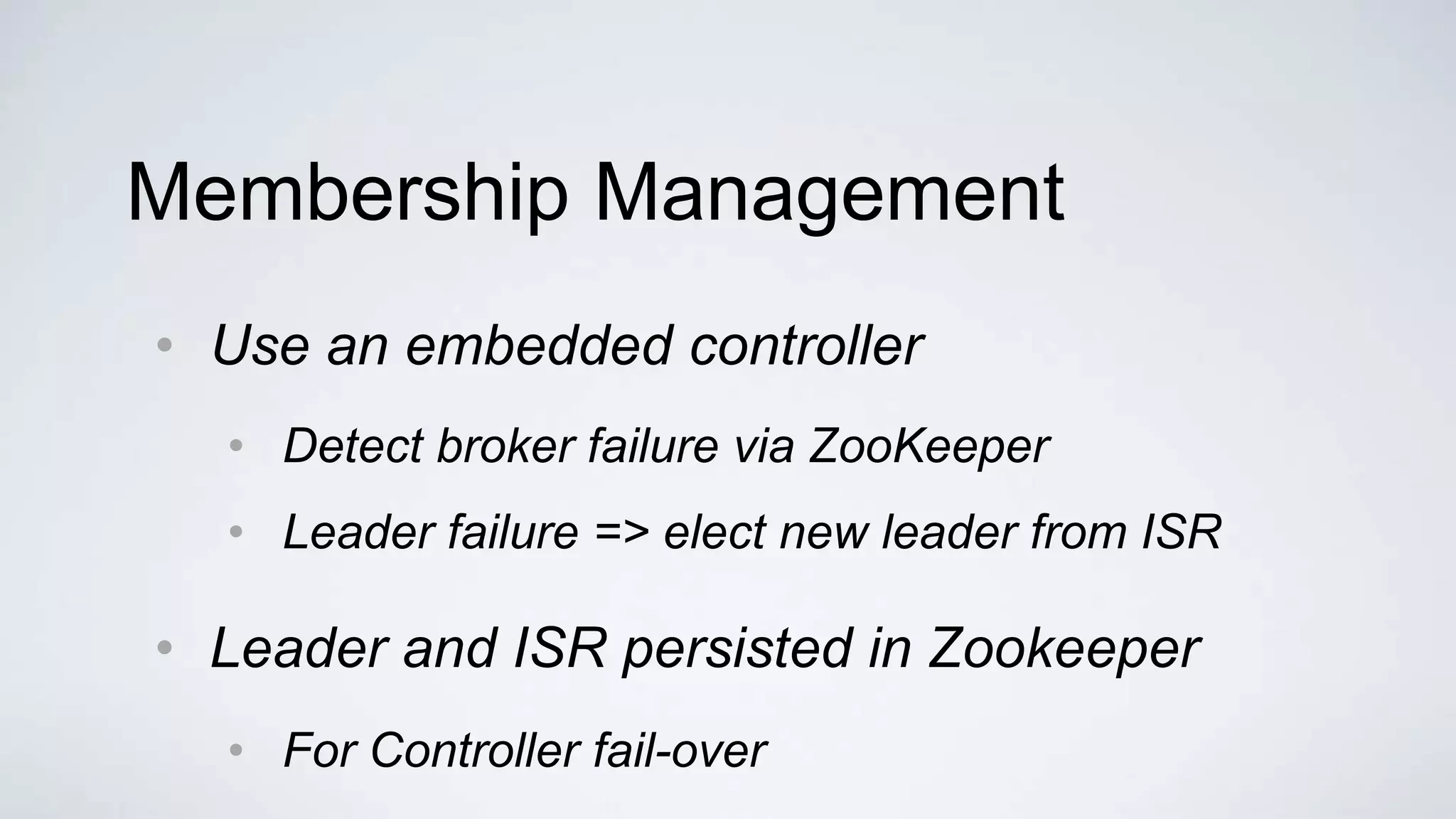

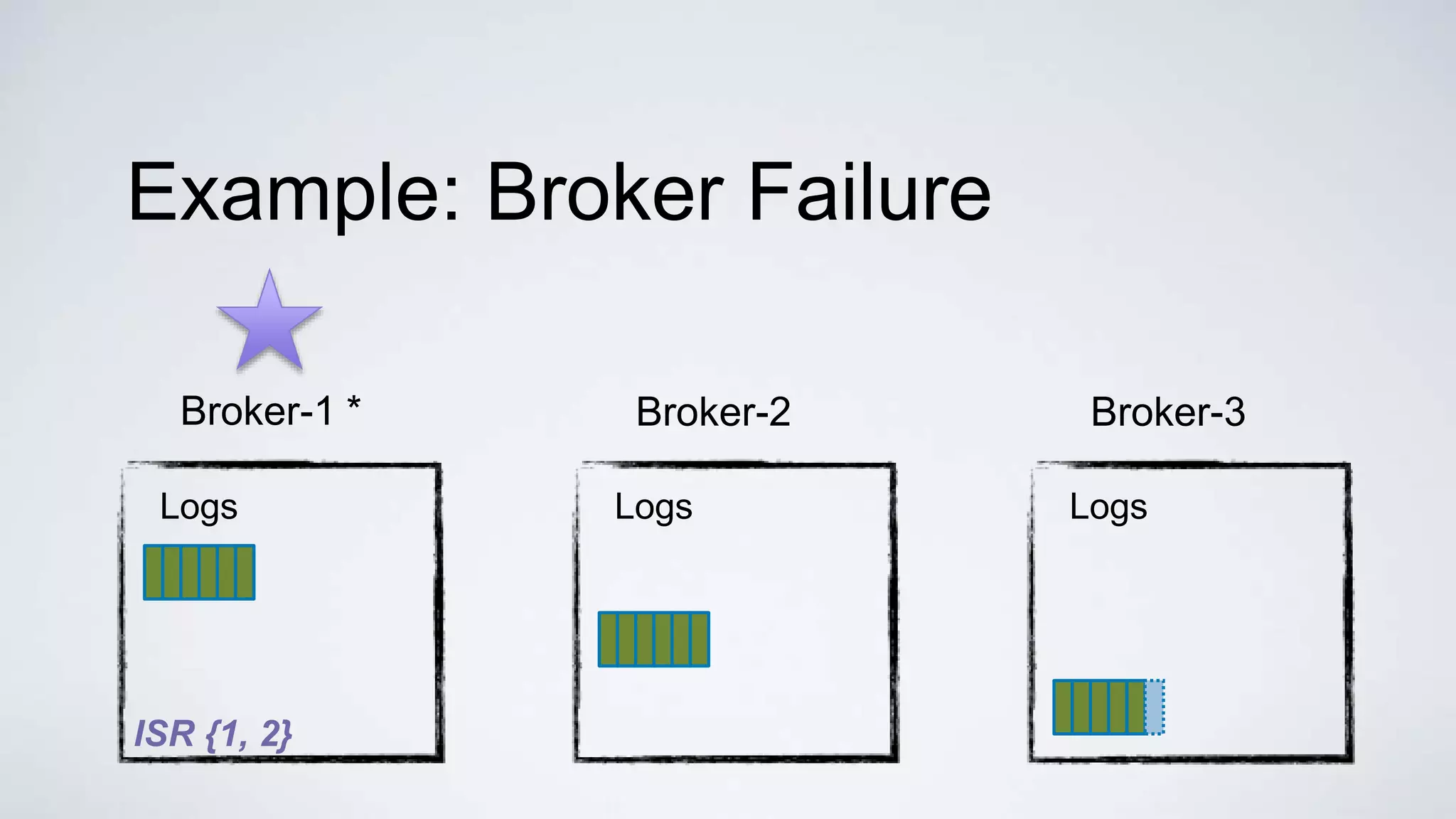

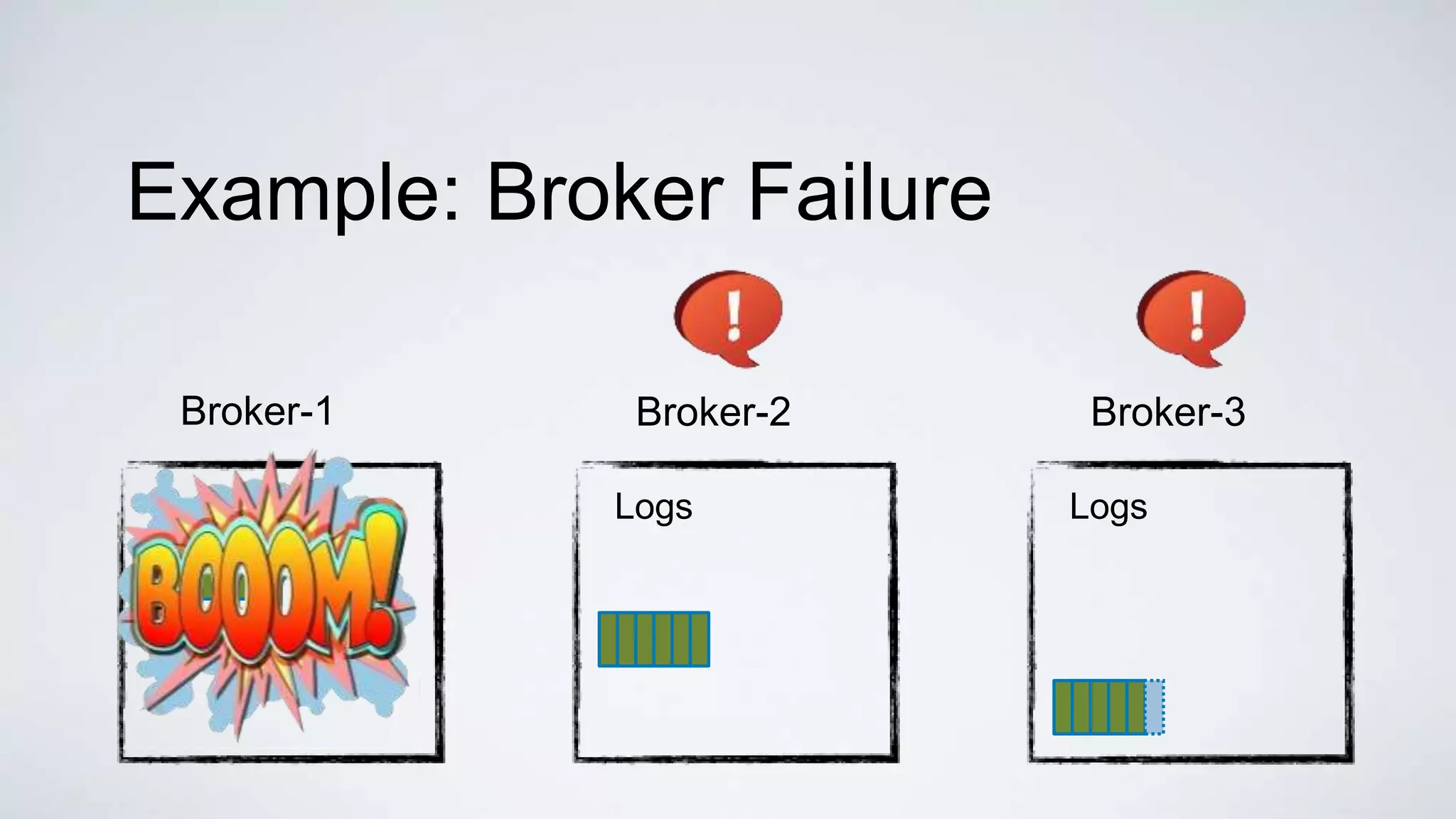

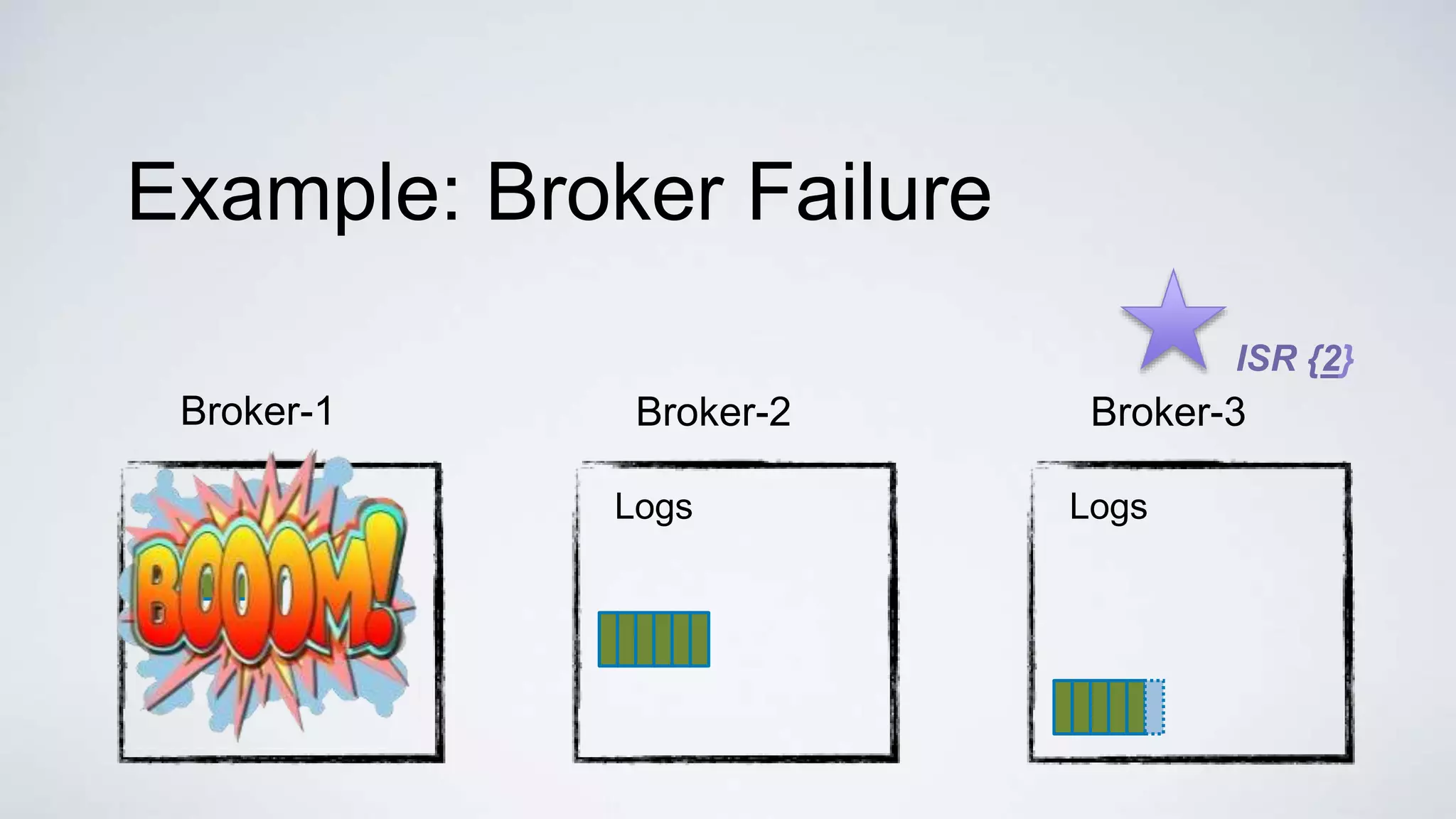

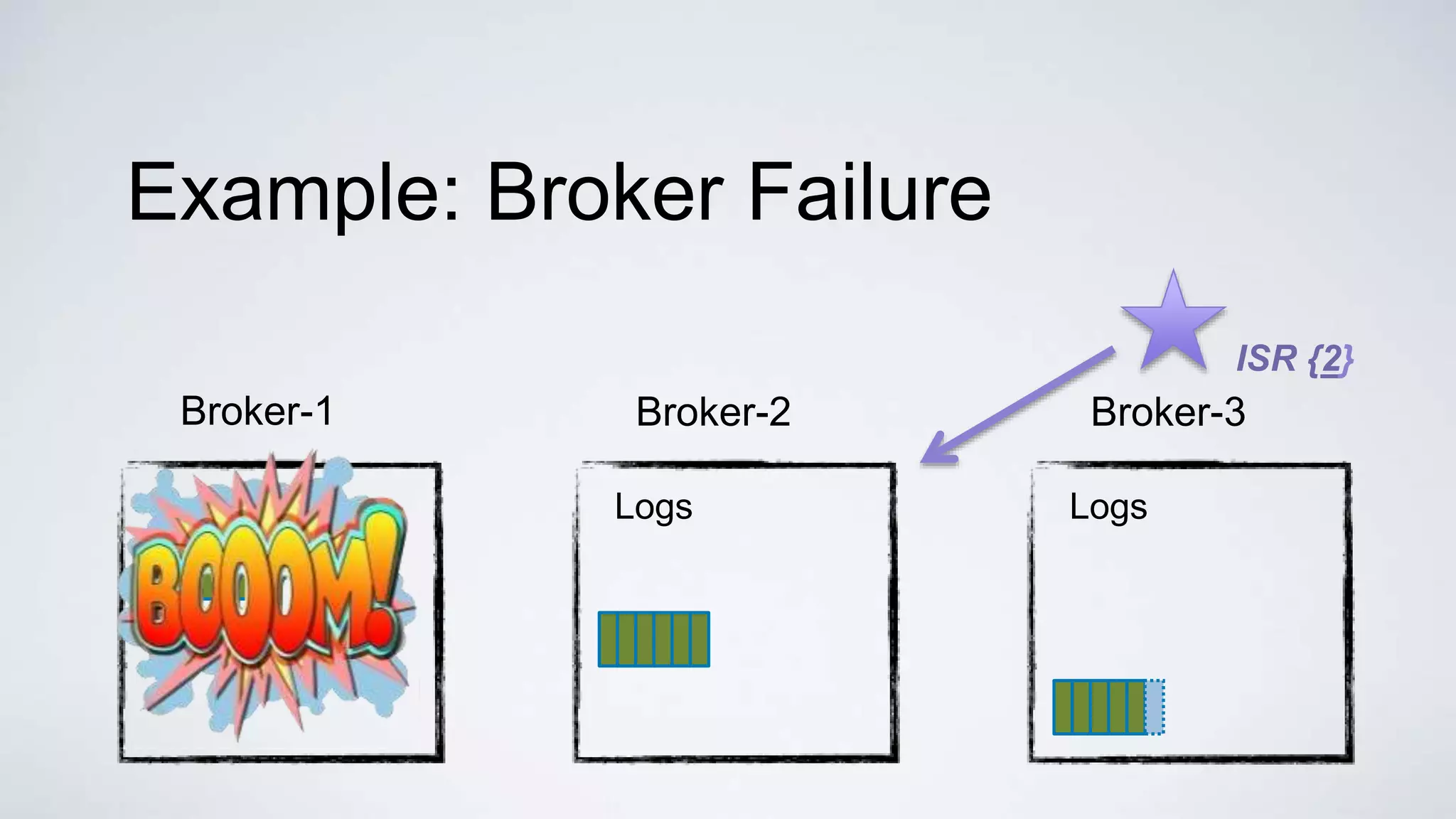

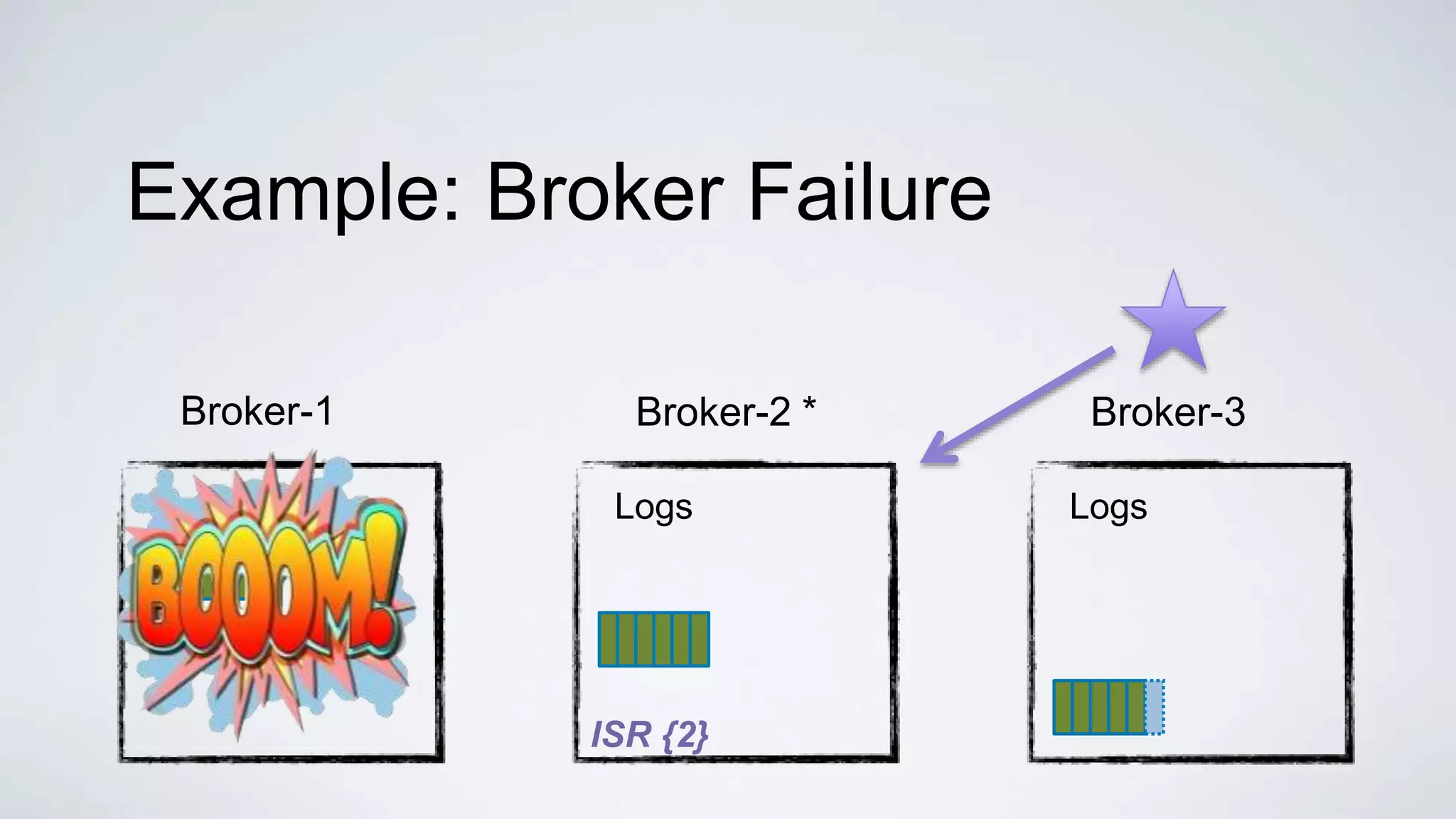

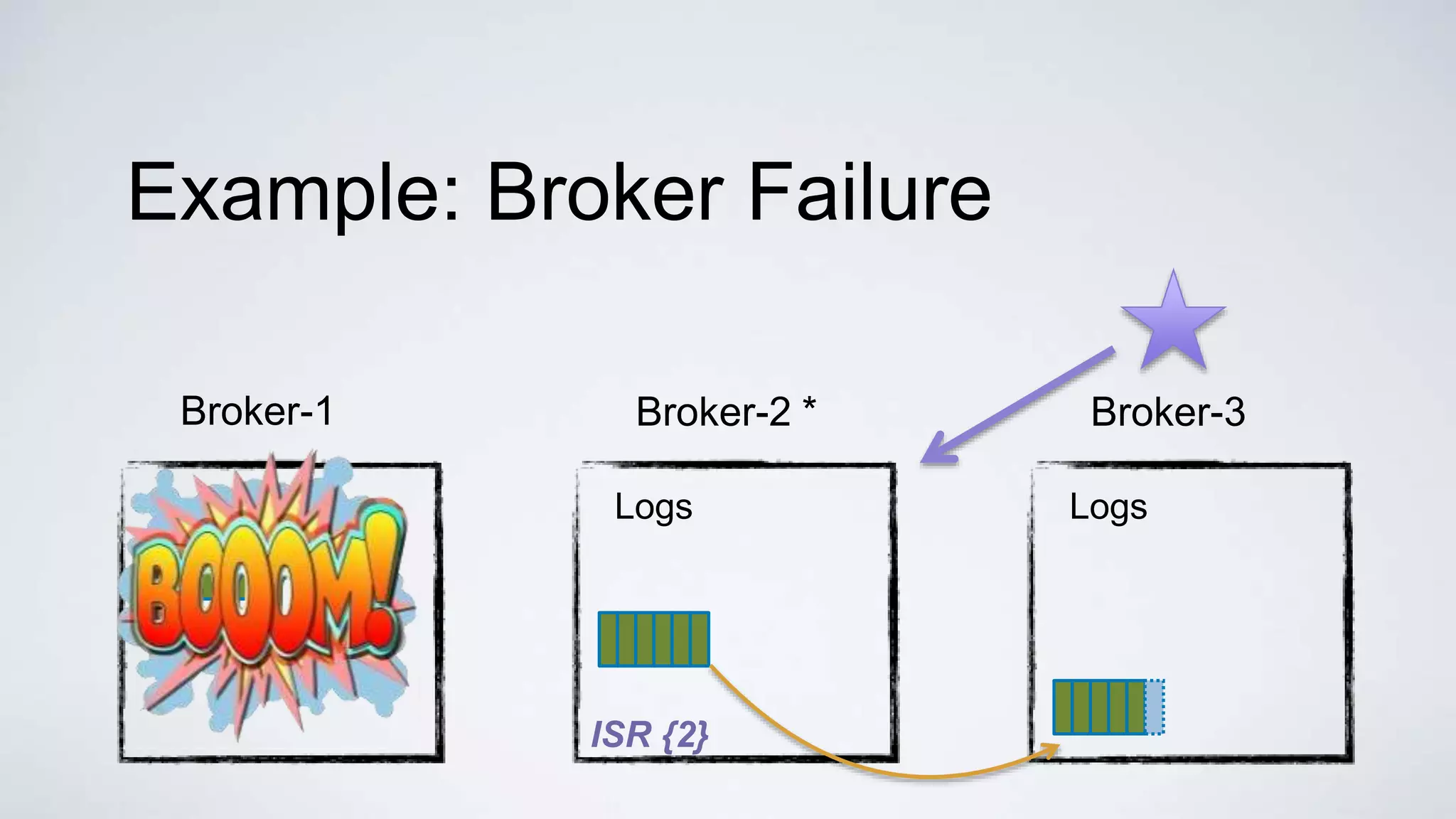

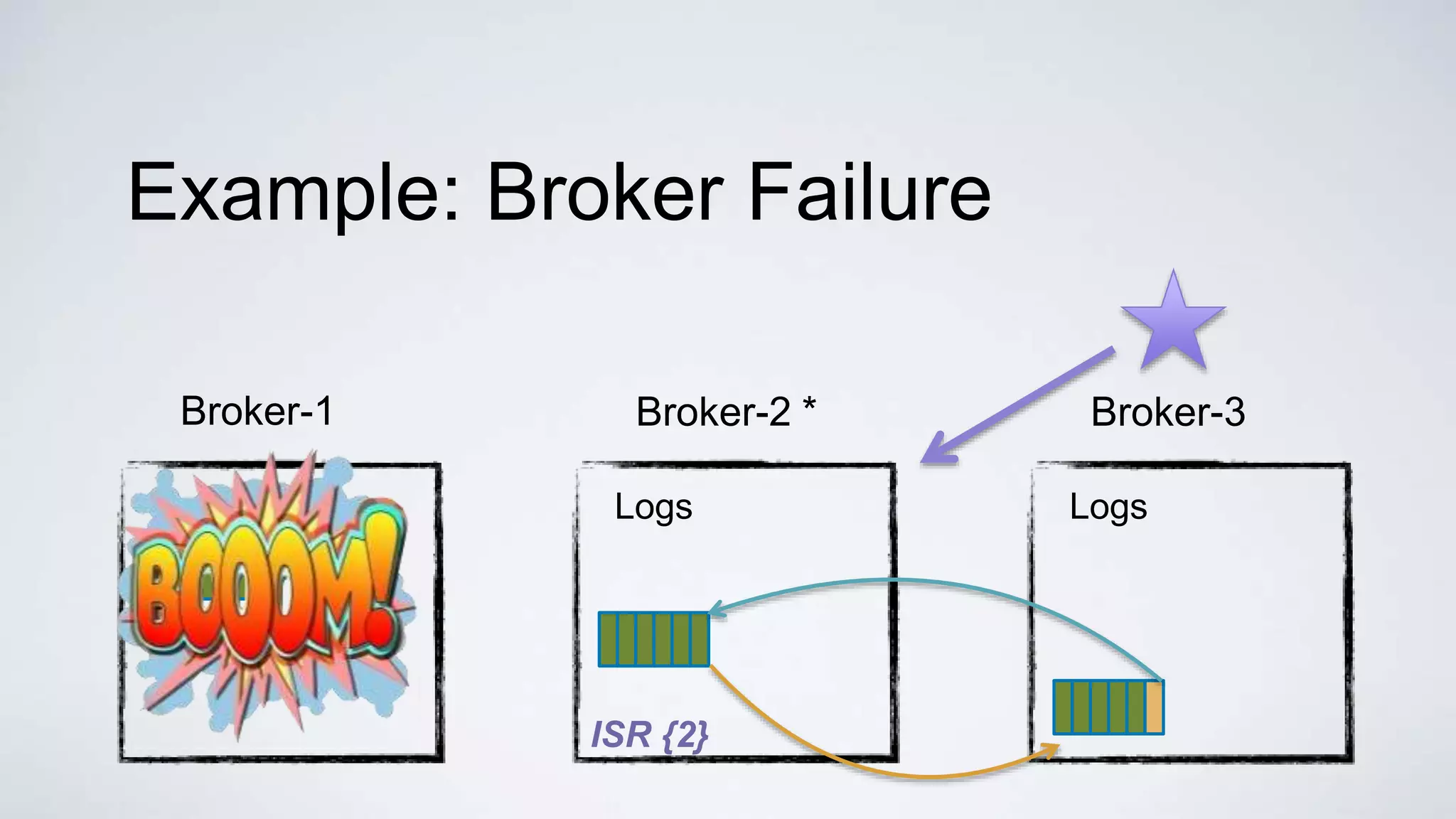

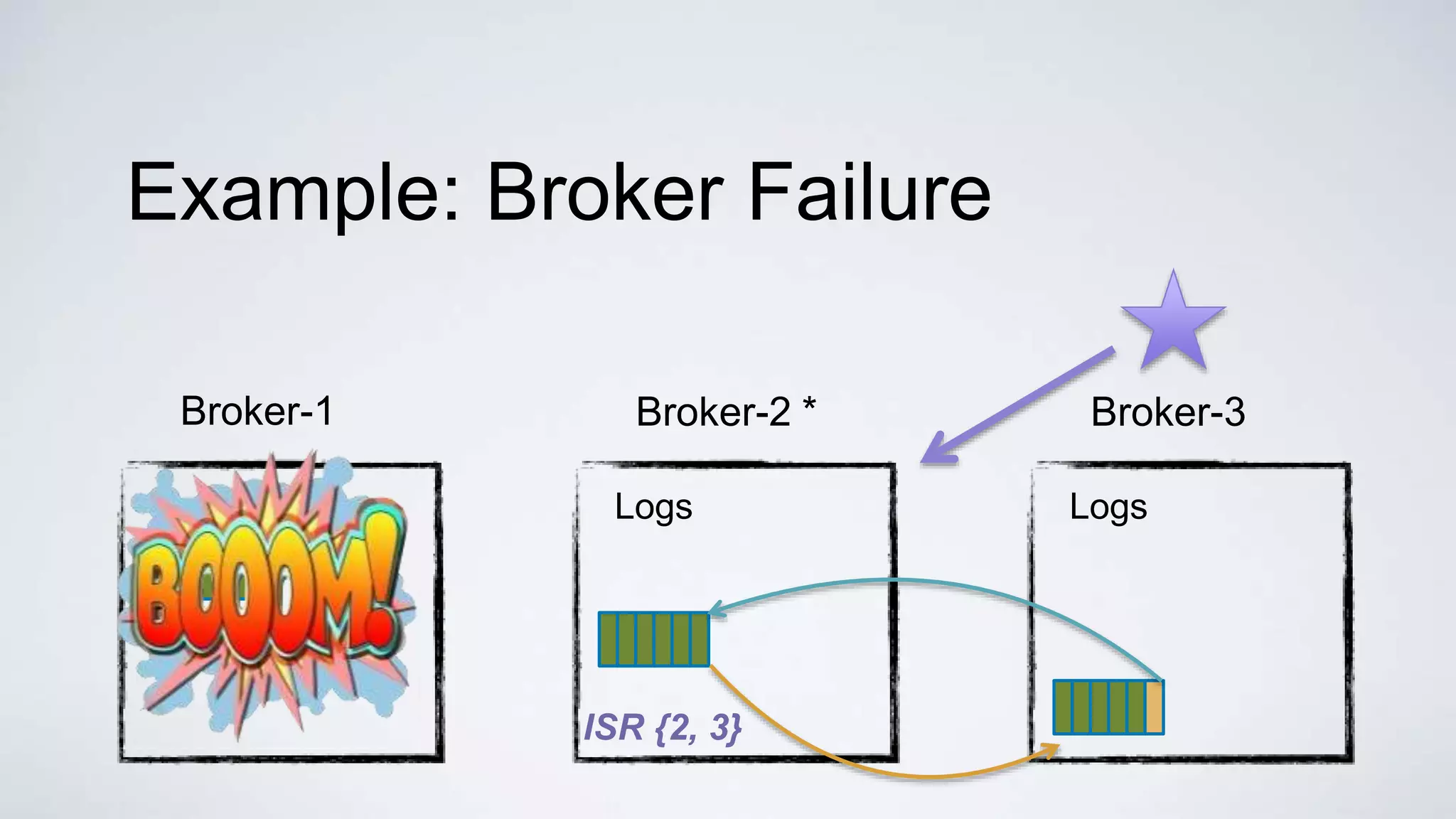

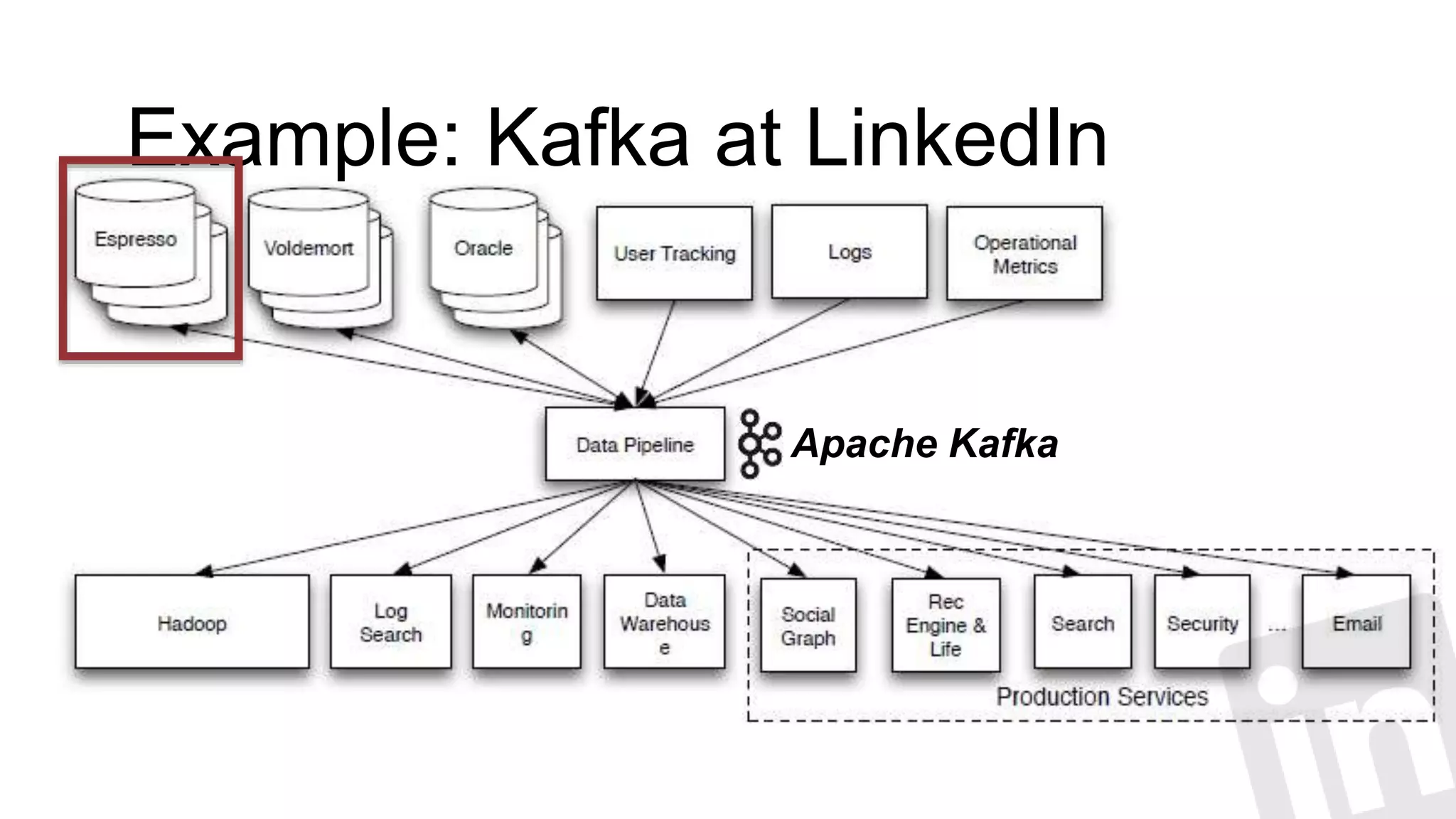

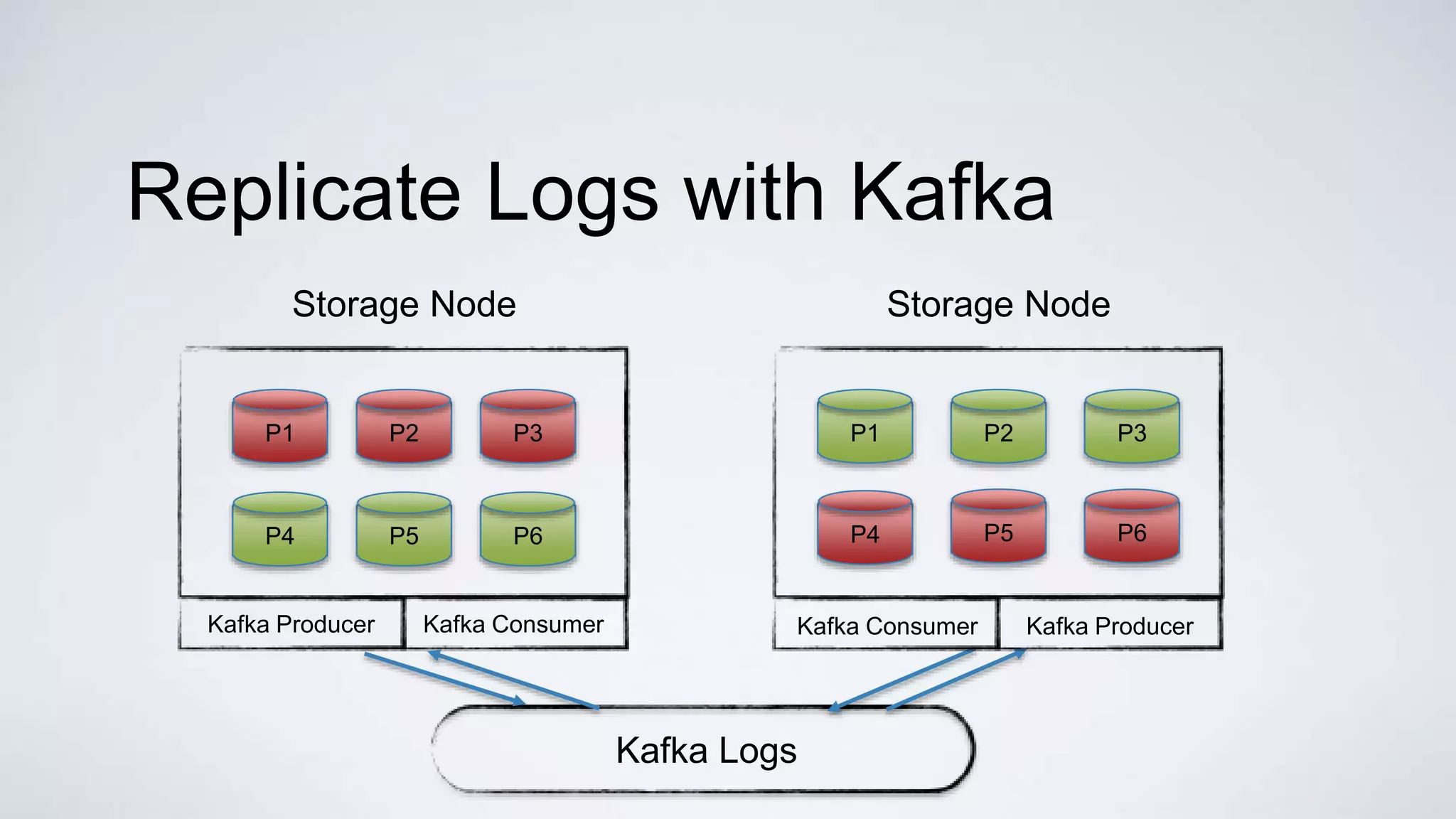

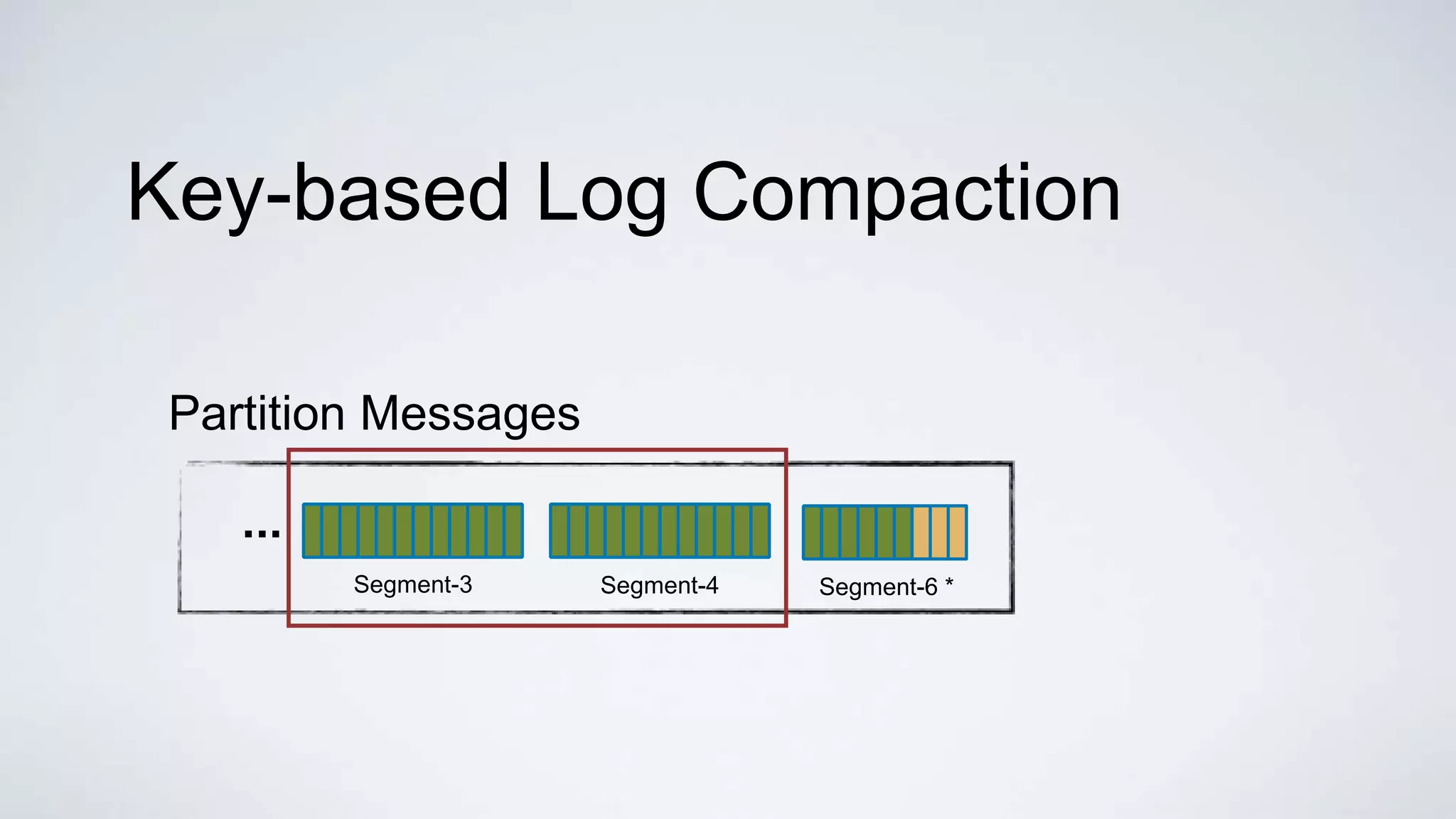

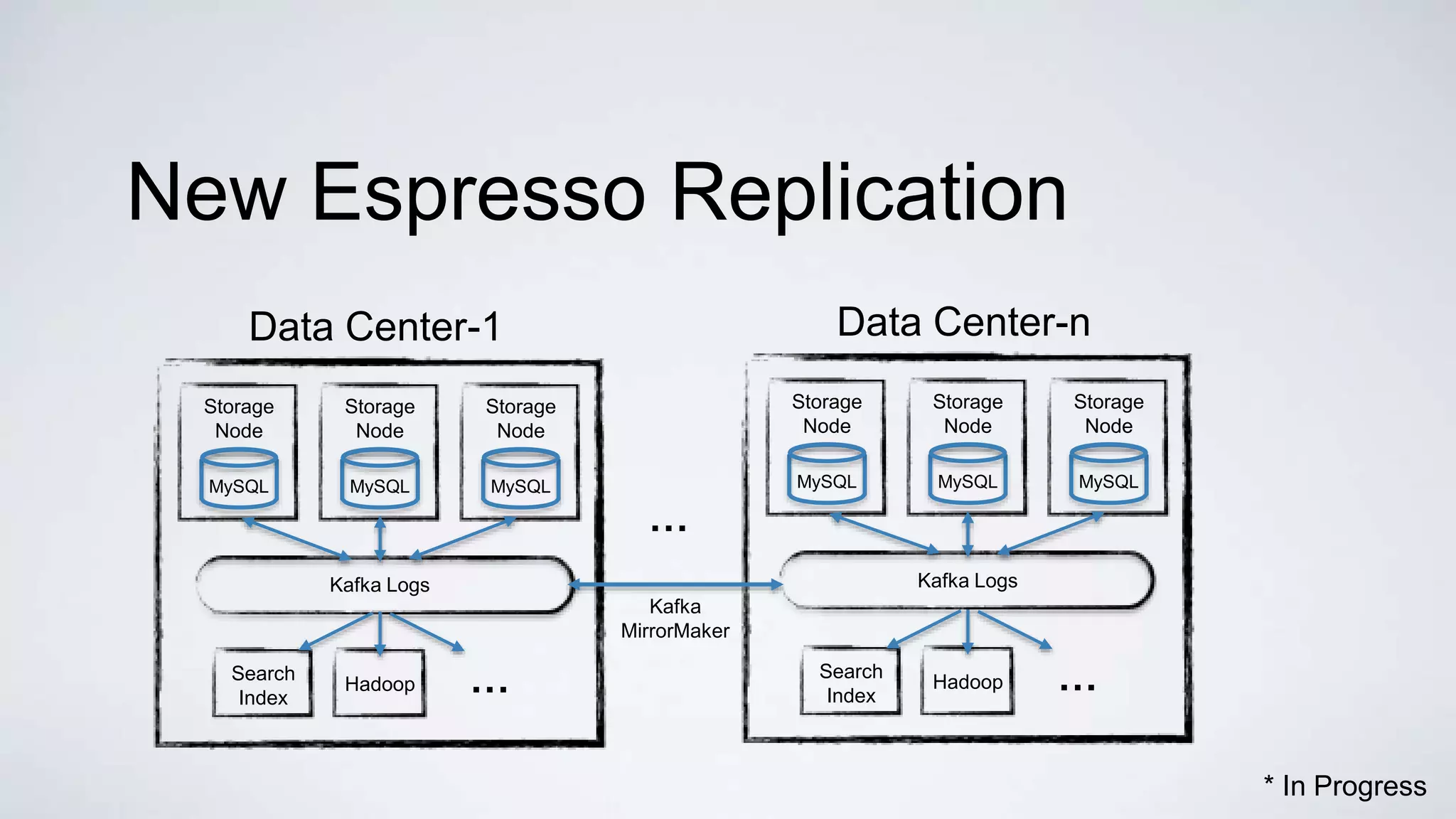

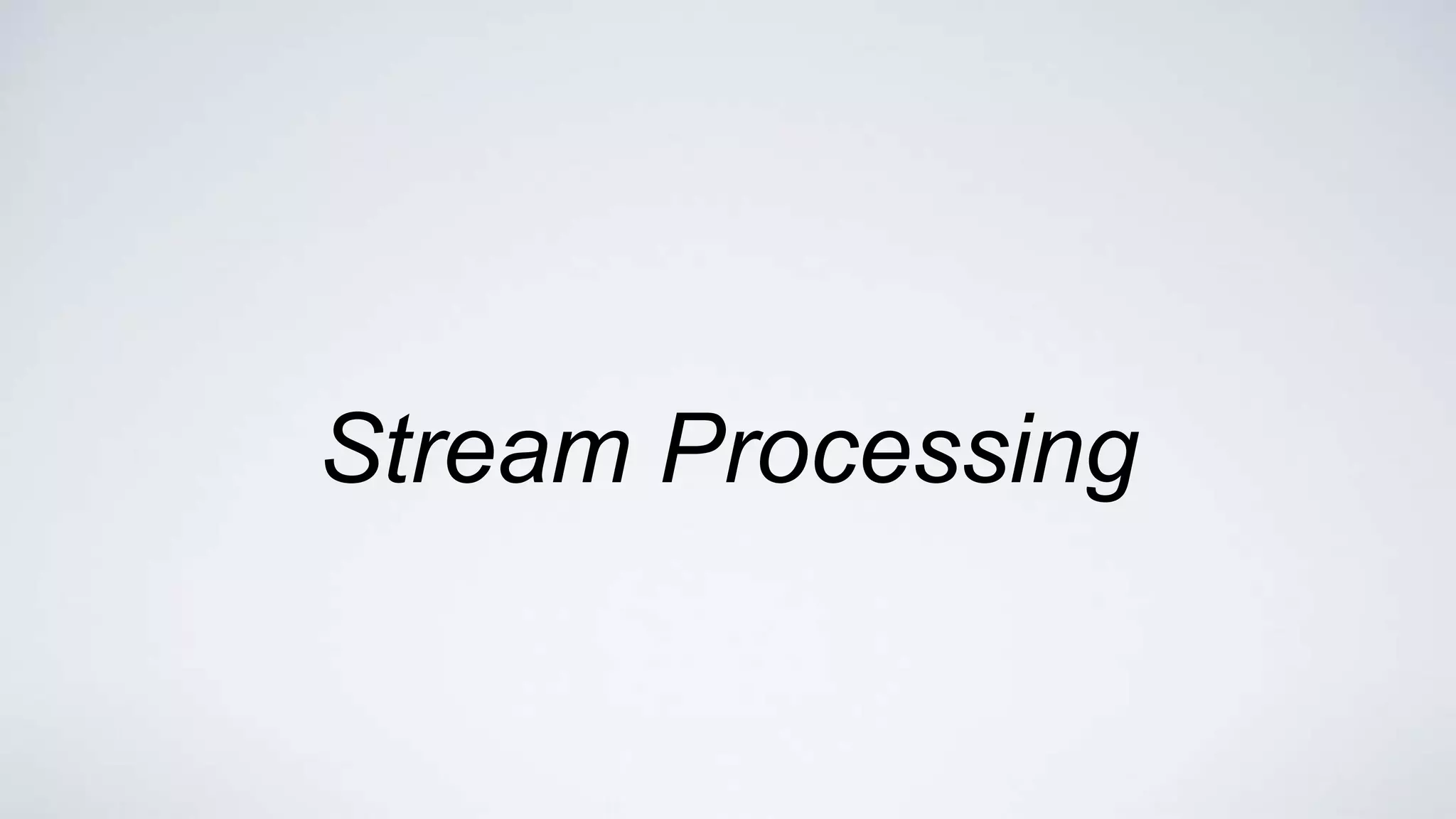

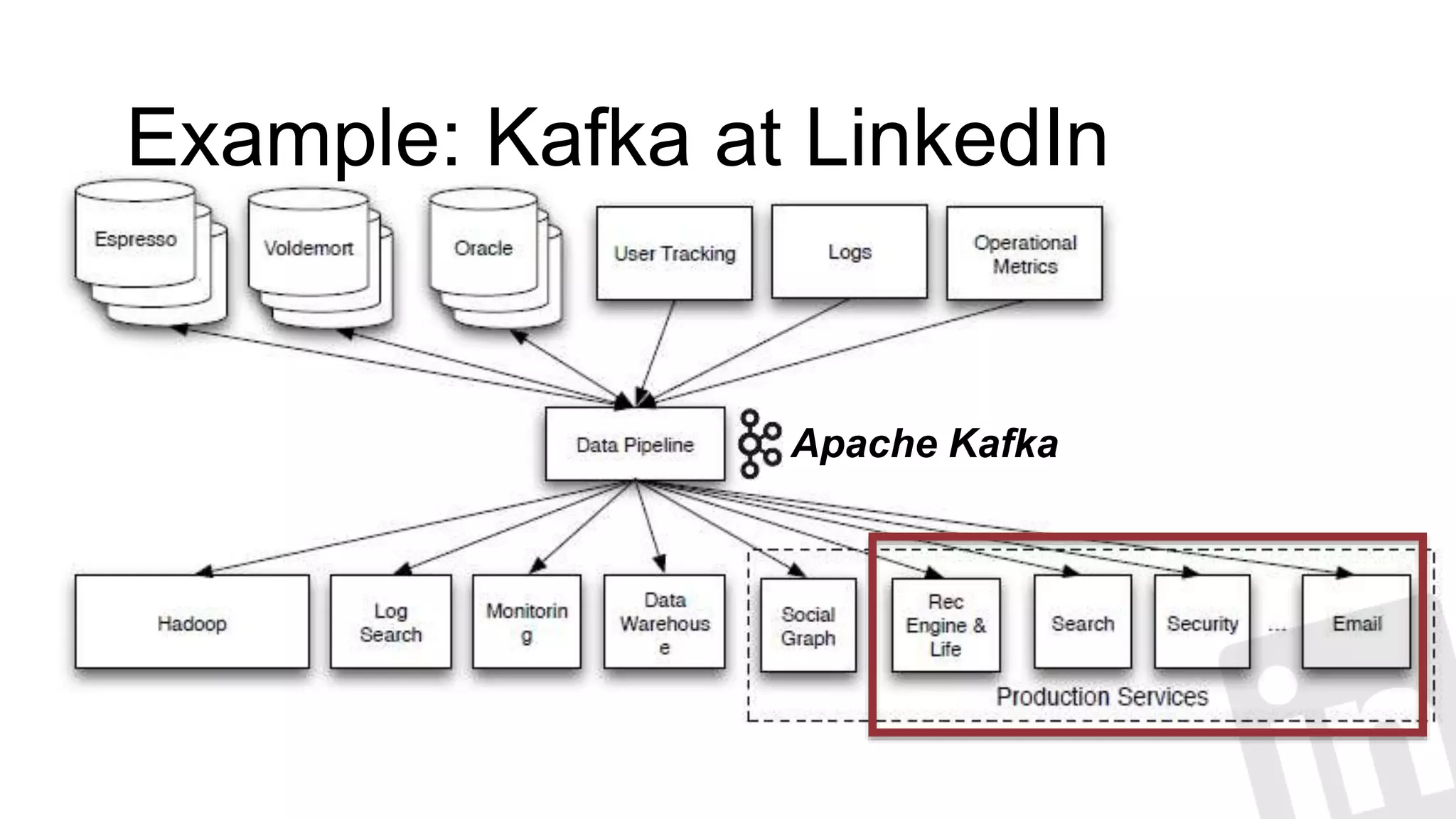

The document discusses building a replicated logging system with Apache Kafka, a distributed messaging system whose log-centric data flow supports centralized data pipelines. It covers log replication, acknowledgment settings, and failure recovery mechanisms, and highlights how Kafka is used at LinkedIn. It concludes that a log-centric data flow helps systems scale and process data in real time.
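To make the link between acknowledgment settings and failure recovery concrete, here is a minimal toy simulation in Python. It does not use Kafka or any Kafka client library. The `Replica` and `Partition` classes, the `demo` function, and the broker names are hypothetical and exist only for illustration. The sketch assumes the commonly documented producer semantics: with `acks=1` the leader acknowledges once the message is in its own log, while with `acks=all` it acknowledges only after the followers have copied the message.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    """One copy of a partition's log (toy model, not a Kafka class)."""
    name: str
    log: list = field(default_factory=list)


class Partition:
    """A replicated log; replicas[0] is treated as the current leader."""

    def __init__(self, replica_names):
        self.replicas = [Replica(name) for name in replica_names]

    @property
    def leader(self):
        return self.replicas[0]

    def sync_followers(self):
        # Each follower copies whatever suffix of the leader's log it is missing.
        for follower in self.replicas[1:]:
            follower.log.extend(self.leader.log[len(follower.log):])

    def produce(self, message, acks):
        """Append to the leader and return the offset once 'acknowledged'."""
        self.leader.log.append(message)
        if acks == "all":
            # Assumption: acks=all waits until every follower has the message.
            self.sync_followers()
        # With acks="1" we return right after the leader's local append.
        return len(self.leader.log) - 1

    def fail_leader(self):
        """Drop the leader; the next replica takes over. Return its log."""
        self.replicas.pop(0)
        return list(self.leader.log)


def demo(acks):
    partition = Partition(["broker-1", "broker-2", "broker-3"])
    partition.produce("m1", acks="all")   # fully replicated
    partition.produce("m2", acks=acks)    # replication depends on acks
    # The leader crashes before any further follower fetch happens.
    return partition.fail_leader()


if __name__ == "__main__":
    print("acks=all ->", demo("all"))
    print("acks=1   ->", demo("1"))
```

In this toy model, a message acknowledged under `acks=1` can disappear if the leader fails before followers fetch it, while a message acknowledged under `acks=all` survives the failover. A real Kafka deployment adds details this sketch leaves out, such as the in-sync replica set and continuous follower fetching.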

![Example: Espresso • A distributed document store • Primary online data serving platform at LI • Member profile, homepage, InMail, etc [SIGMOD 2013]](https://image.slidesharecdn.com/buildingareplicatedloggingsystemwithapachekafka-150907075312-lva1-app6892/75/Building-a-Replicated-Logging-System-with-Apache-Kafka-58-2048.jpg)

![Example: Samza • Data flow streaming on Kafka and YARN • Stateful processing • Re-processing • Failure recovery [CIDR 2015]](https://image.slidesharecdn.com/buildingareplicatedloggingsystemwithapachekafka-150907075312-lva1-app6892/75/Building-a-Replicated-Logging-System-with-Apache-Kafka-71-2048.jpg)