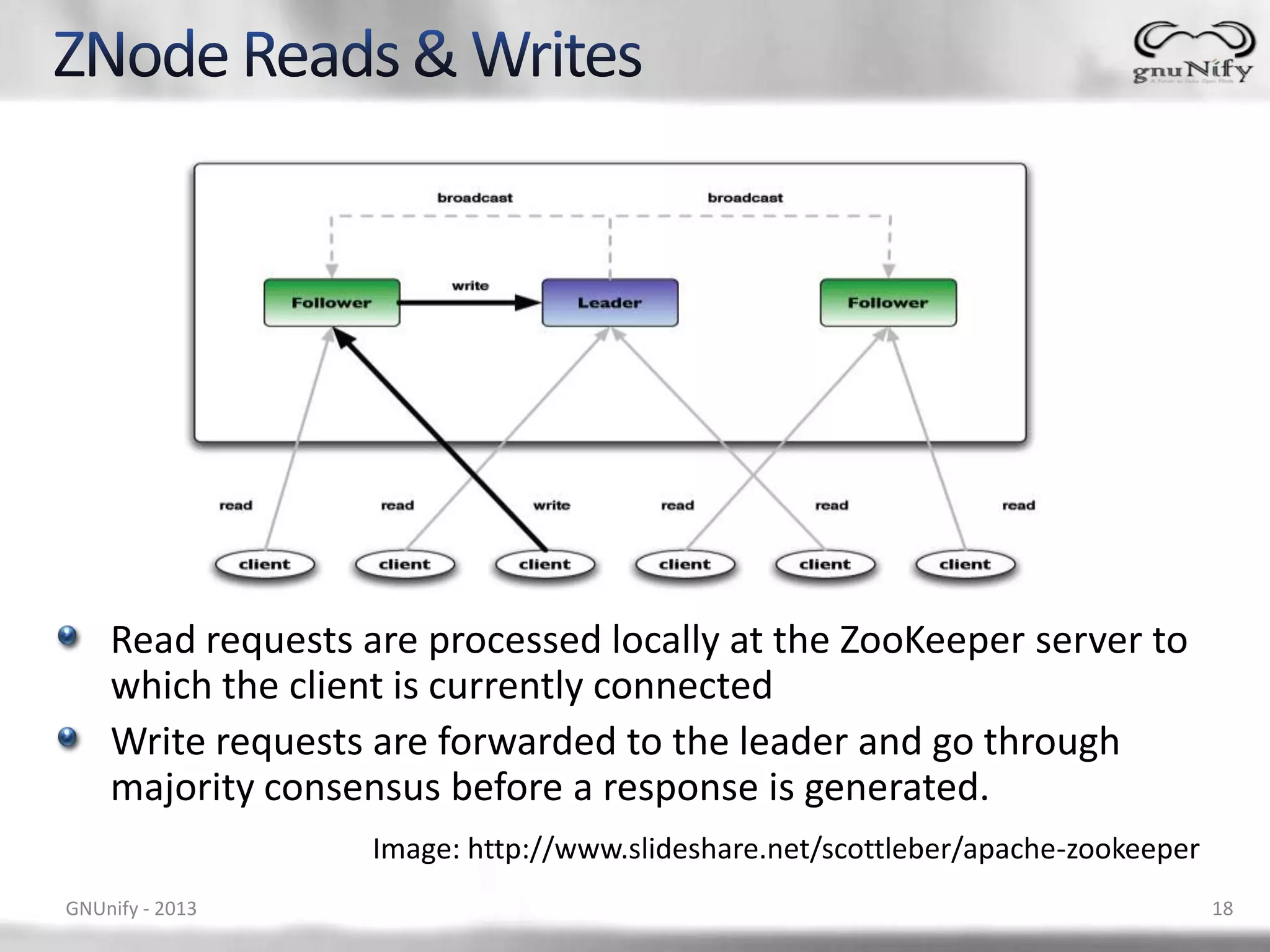

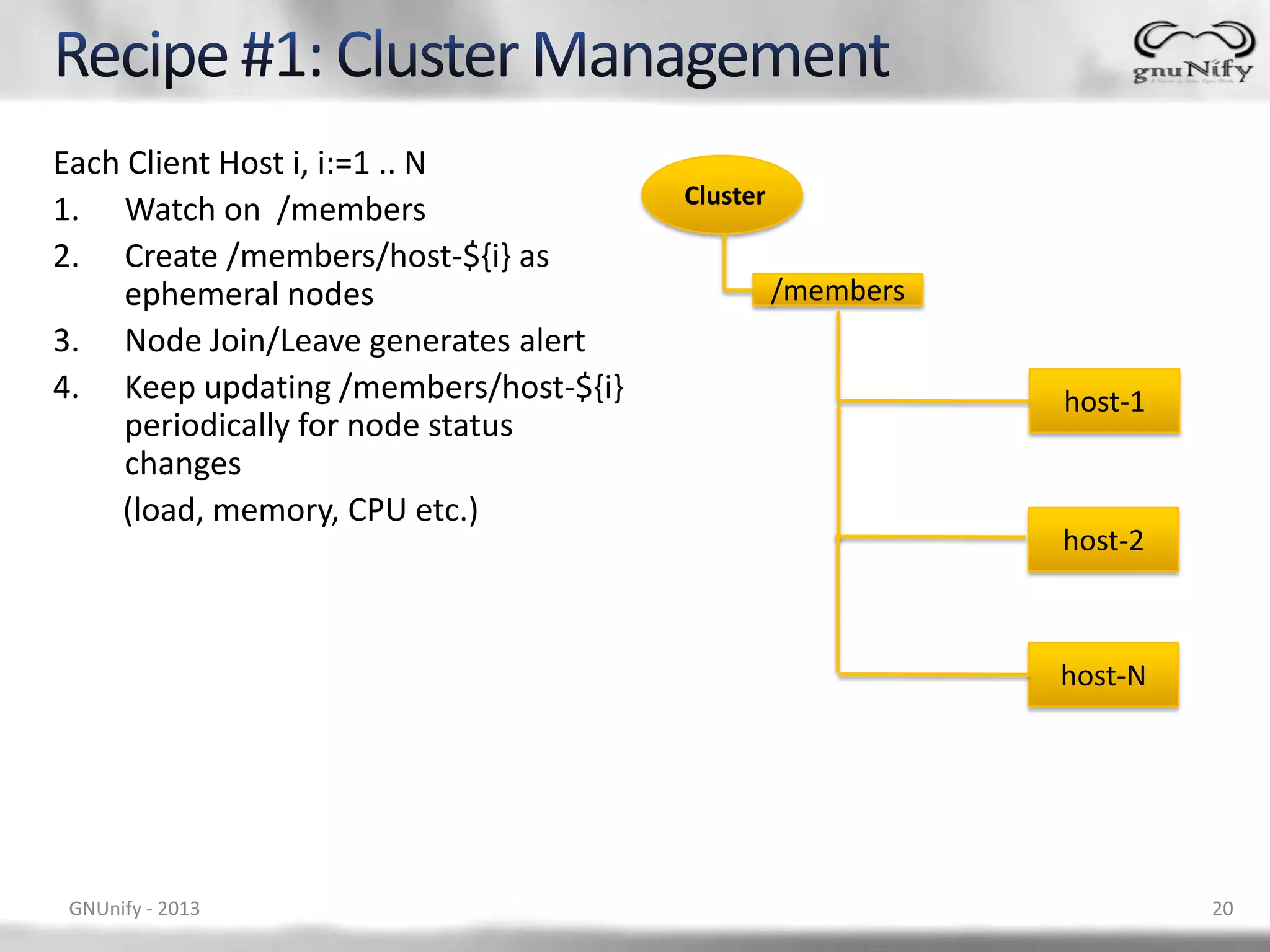

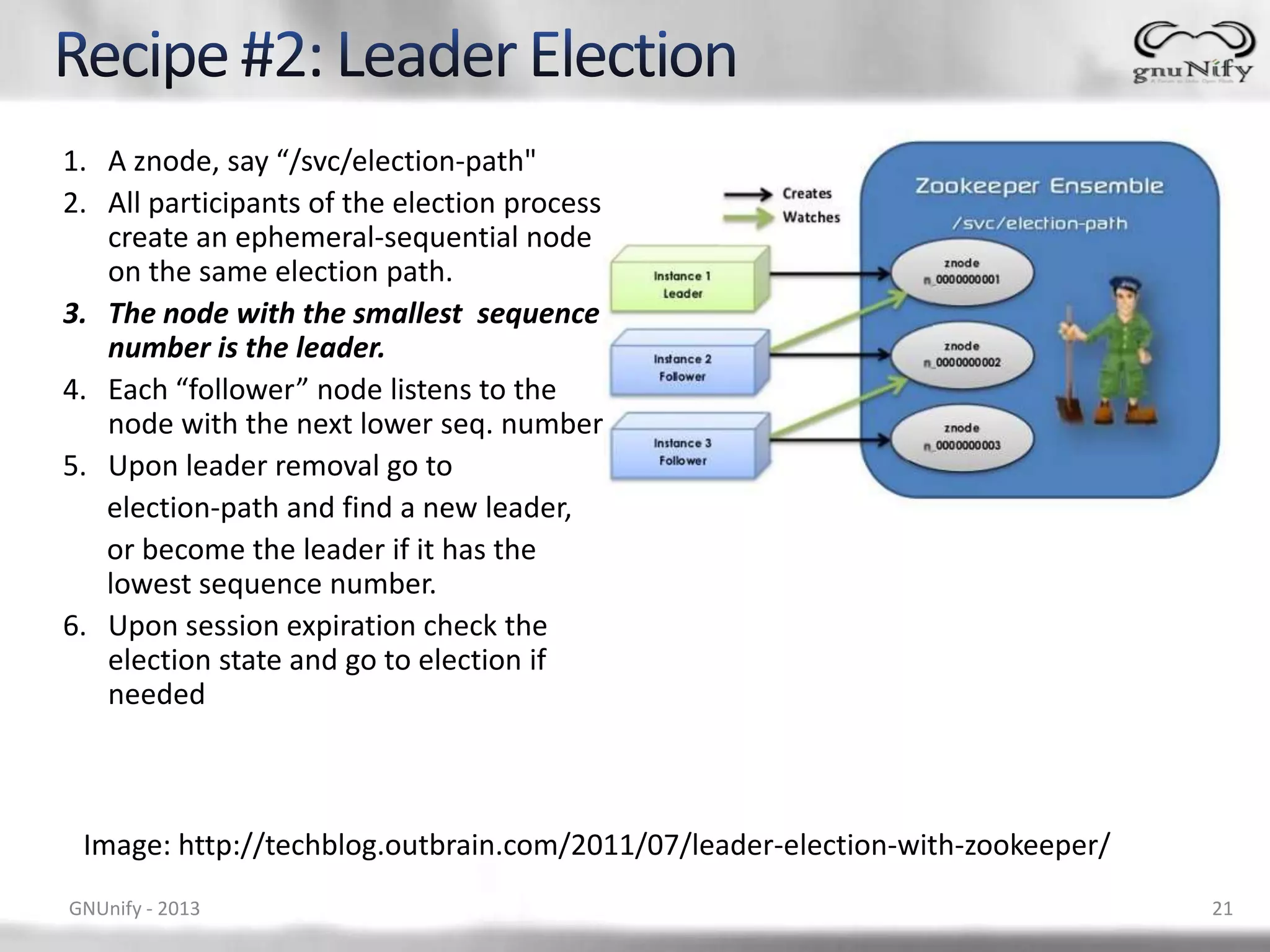

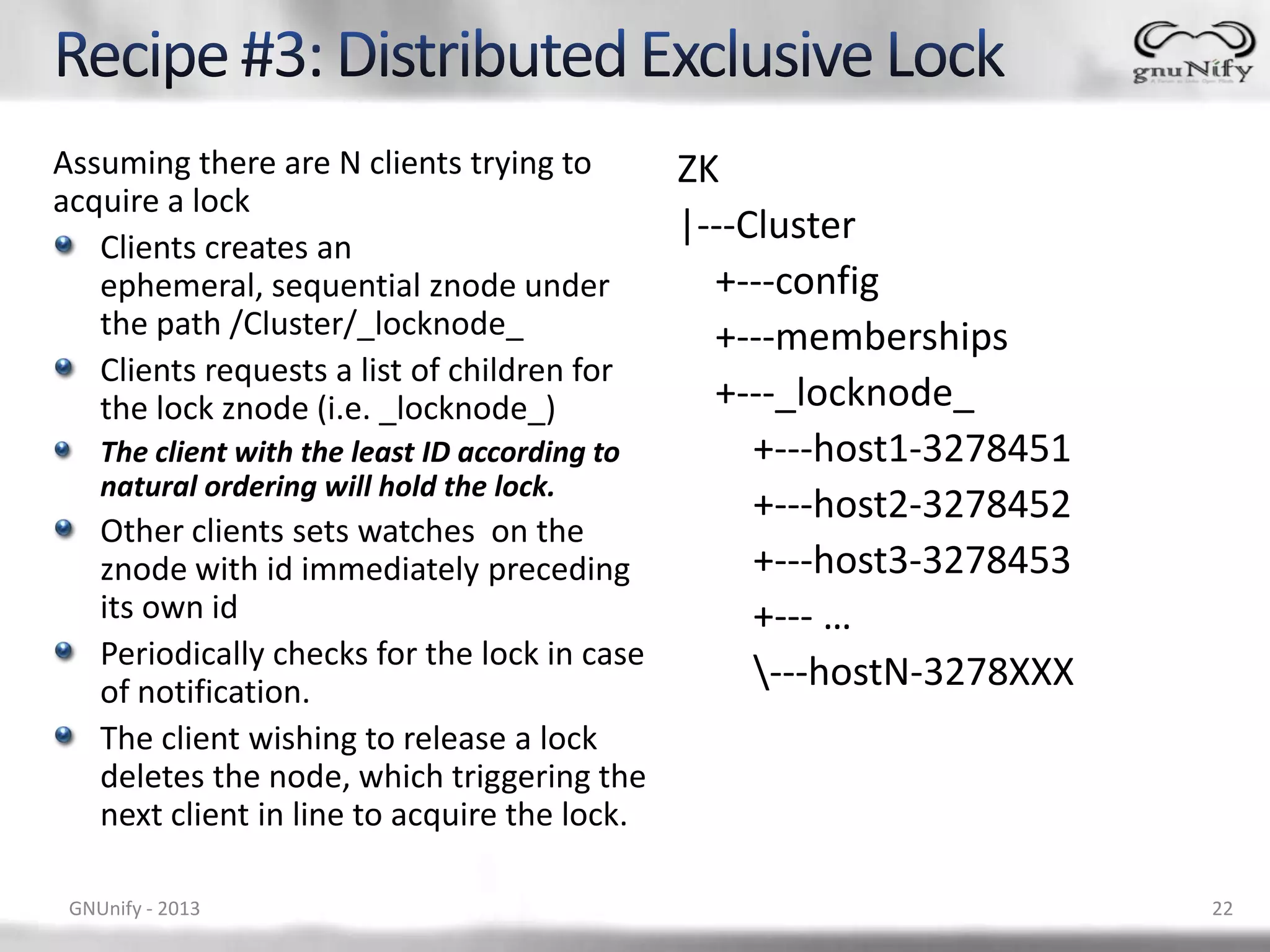

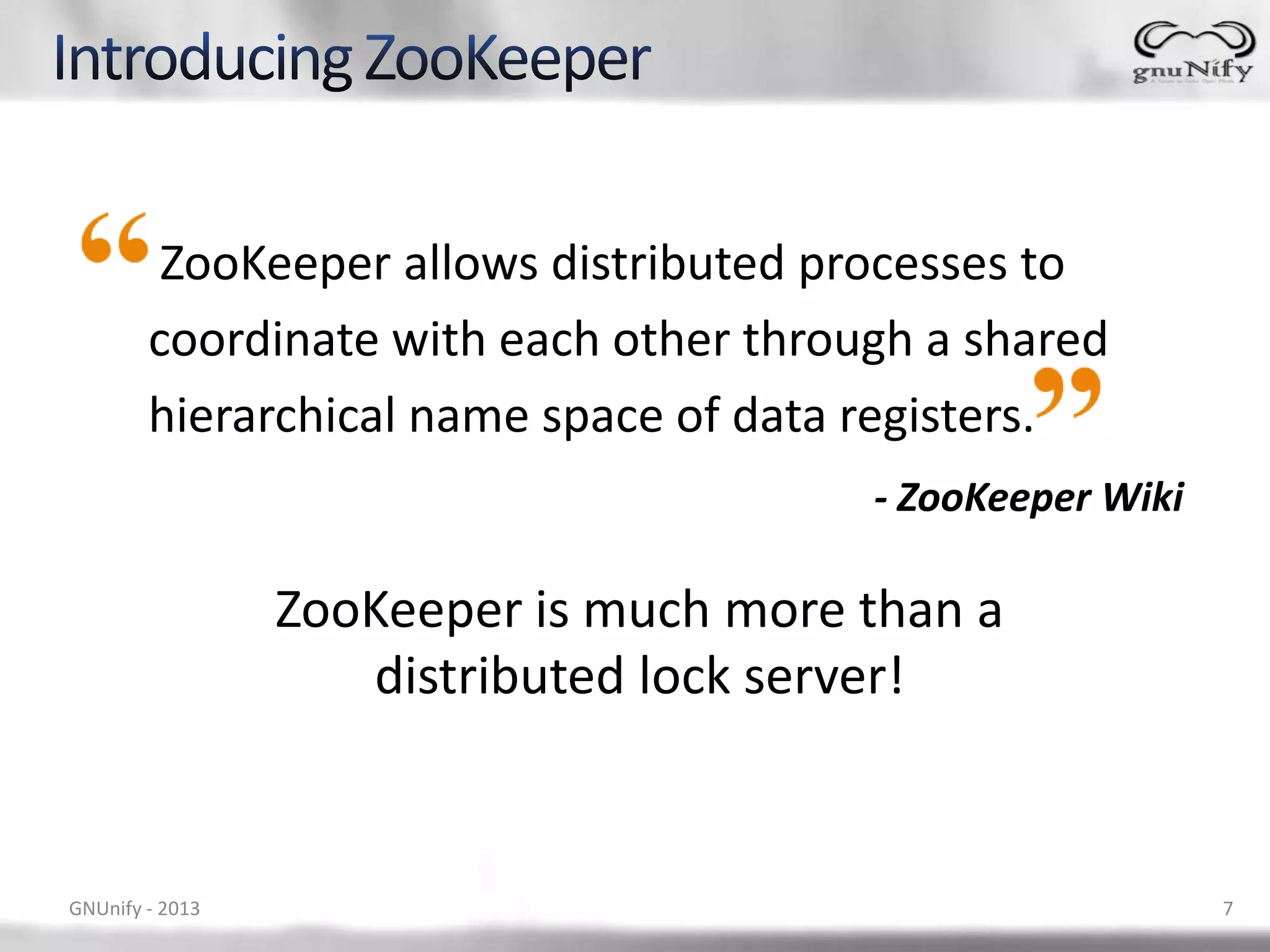

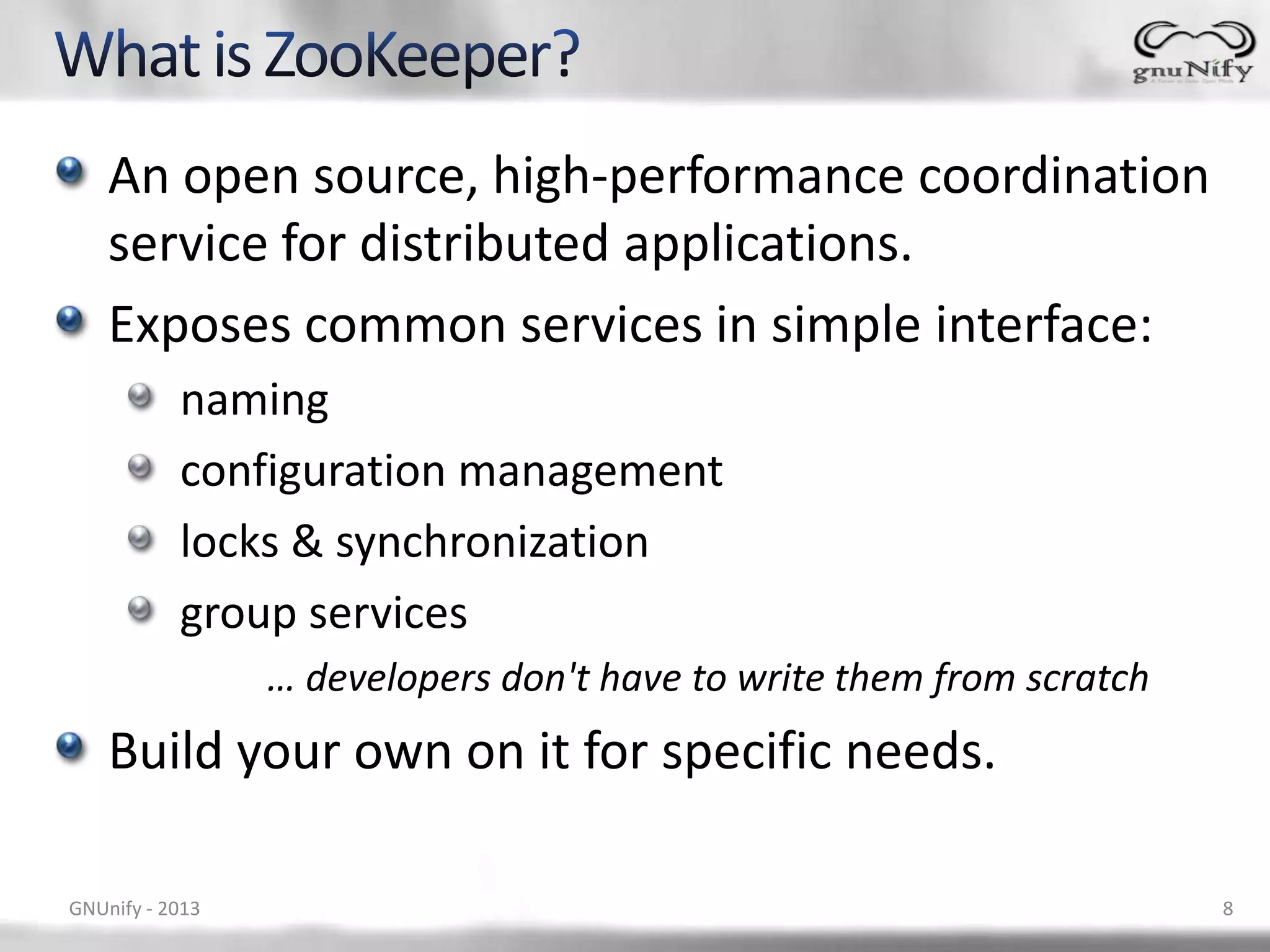

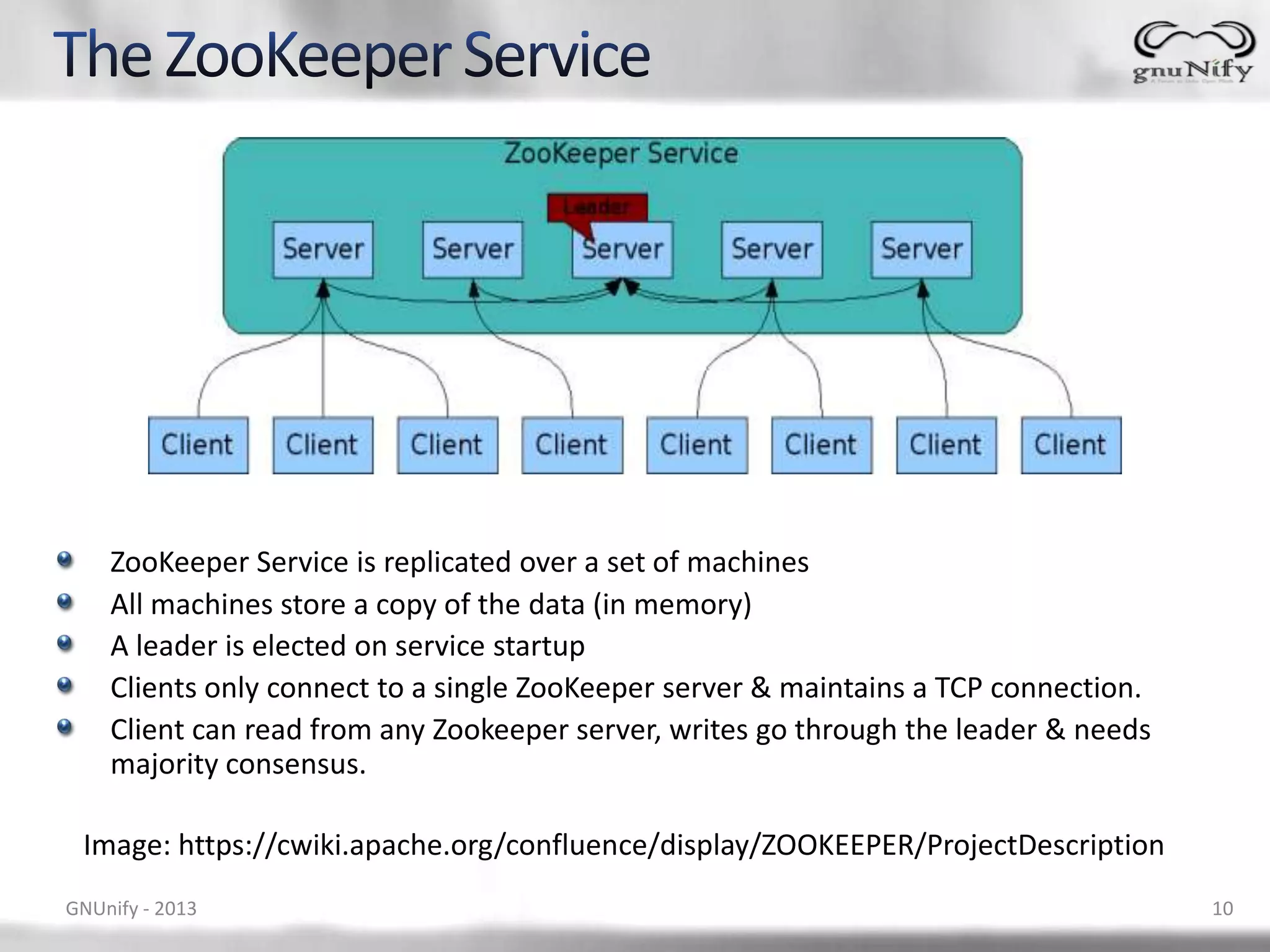

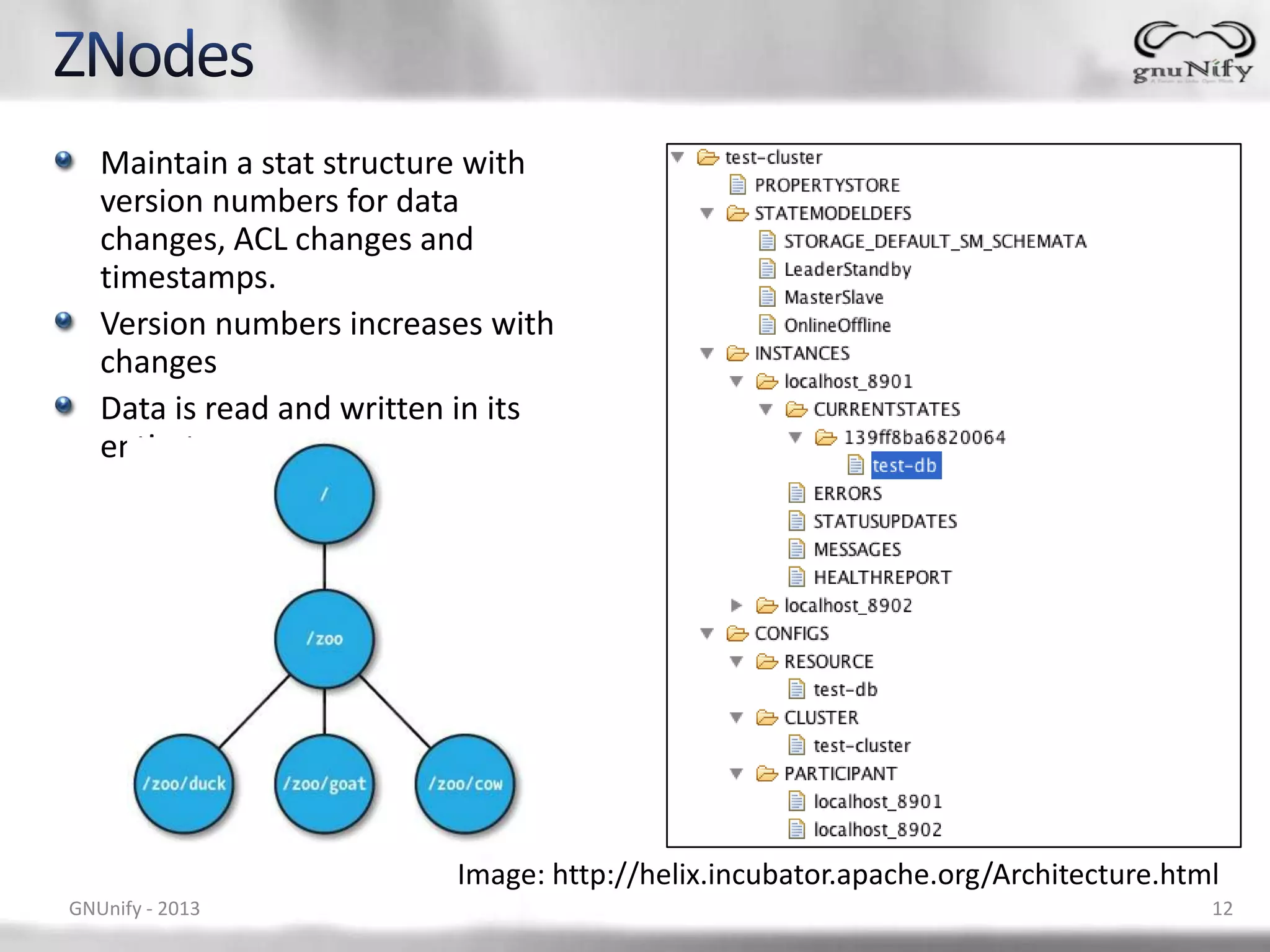

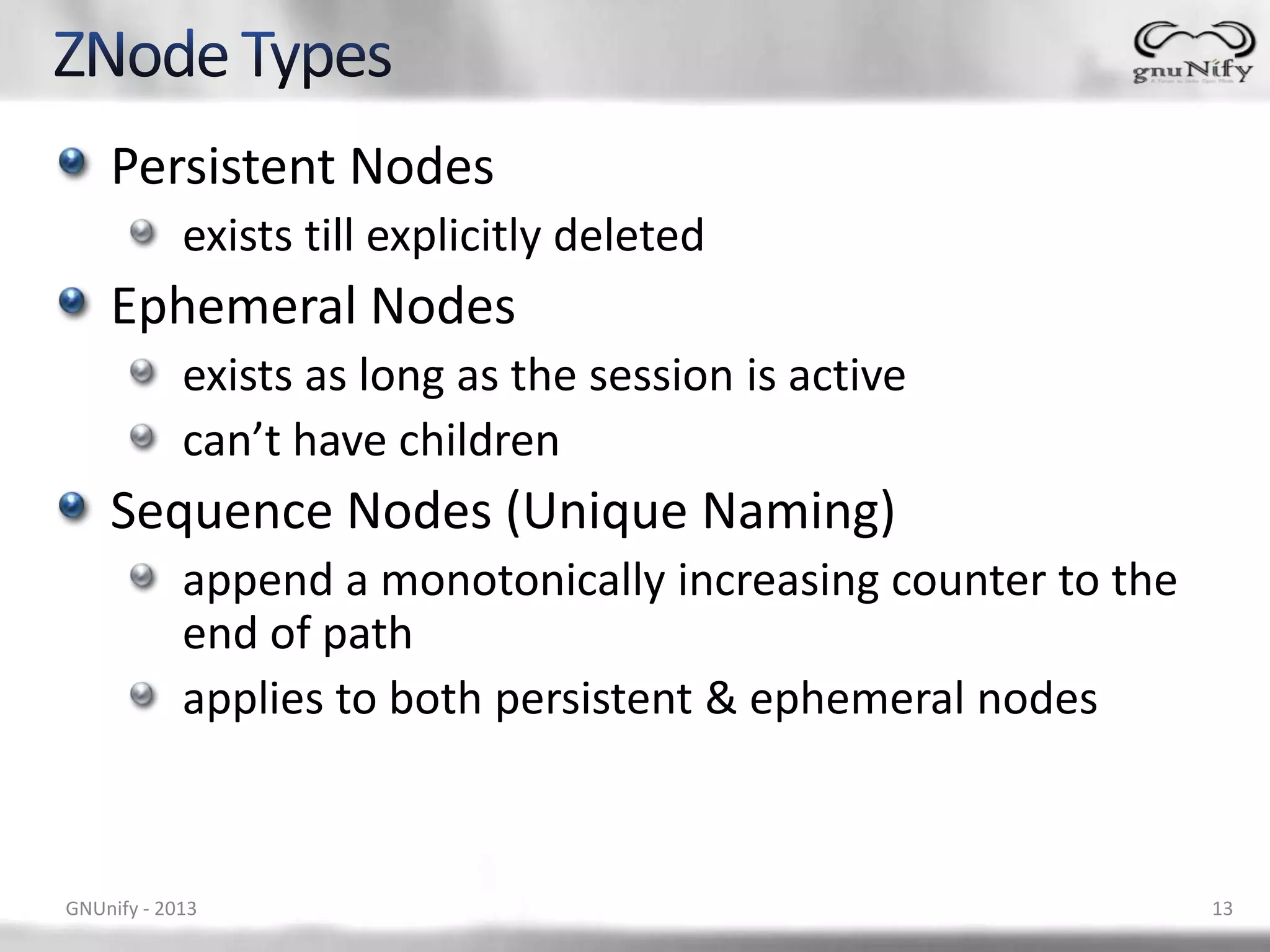

The document discusses Apache ZooKeeper, an open-source coordination service for distributed applications that lets processes coordinate through a shared hierarchical namespace. It covers ZooKeeper's naming, configuration management, and synchronization services, along with its architecture and operation, including znode management, watches, and its consensus mechanism. It also outlines how various companies and projects use ZooKeeper in practice.

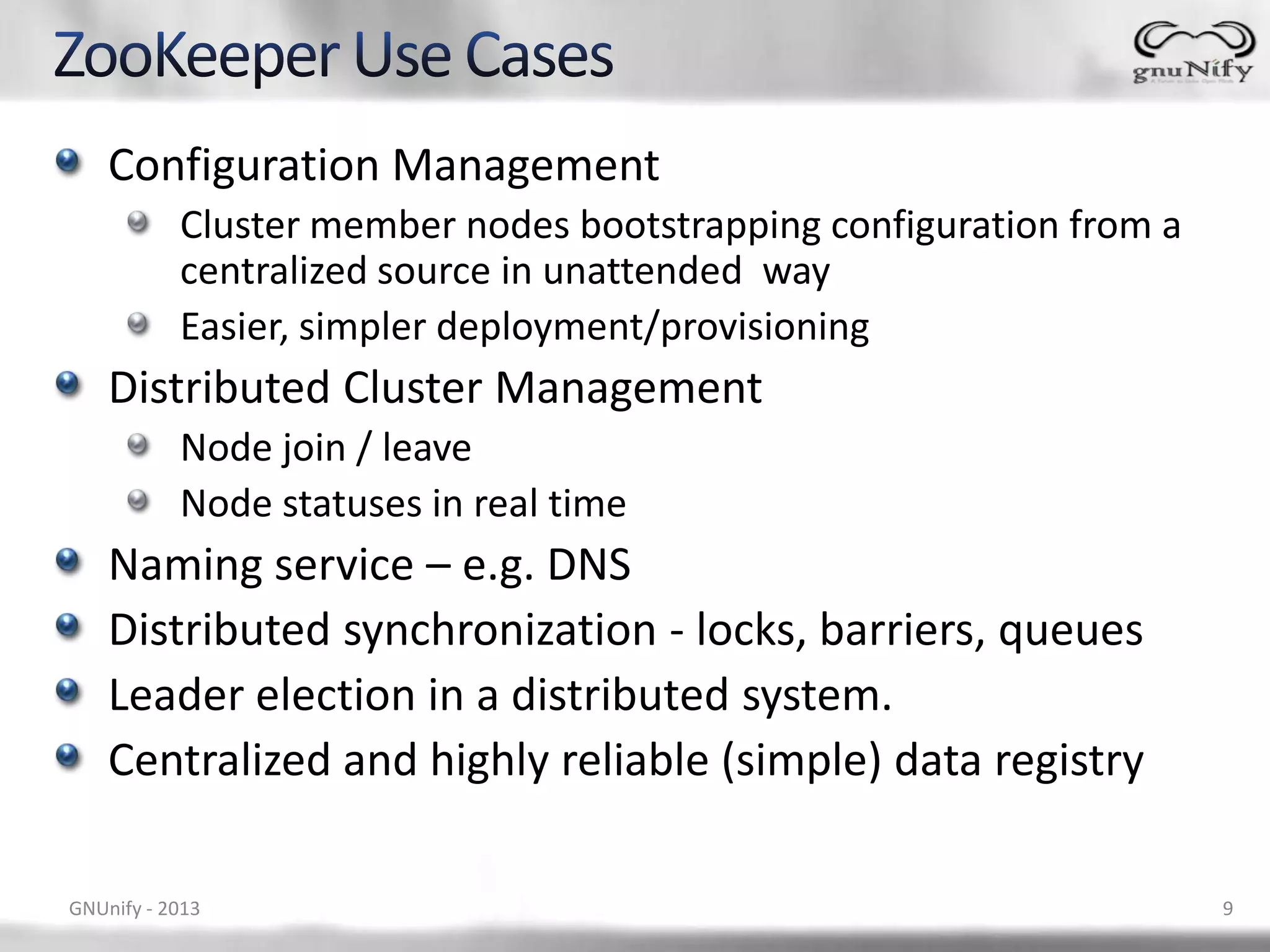

![ZooKeeper hierarchical namespace slide, GNUnify - 2013](https://image.slidesharecdn.com/gnunify13-apachezookeeper-130216065255-phpapp02/75/Introduction-to-Apache-ZooKeeper-11-2048.jpg)

ZooKeeper has a hierarchical namespace. Each node in the namespace is called a ZNode. Every ZNode has data (given as `byte[]`) and can optionally have children:

- parent: "foo"
  - child1: "bar"
  - child2: "spam"
  - child3: "eggs"
    - grandchild1: "42"

ZNode paths are canonical, absolute, and slash-separated. There are no relative references, and names can contain Unicode characters.
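To make the namespace rules concrete, the following is a minimal Python sketch of an in-memory znode tree. It is a toy model, not ZooKeeper's implementation or client API: the names `ZNode`, `split_path`, and `Namespace` are hypothetical. Beyond the slide's rules (absolute, slash-separated, no relative references, Unicode allowed), it assumes that empty components, trailing slashes, and the null character are rejected, and that a create fails when the parent does not exist.

```python
class ZNode:
    """Toy znode (hypothetical): a byte-string payload plus named children."""

    def __init__(self, data=b""):
        self.data = data      # payload, analogous to ZooKeeper's byte[]
        self.children = {}    # child name -> ZNode


def split_path(path):
    """Check that a path is absolute, slash-separated and canonical; return its components."""
    if not path.startswith("/"):
        raise ValueError(f"path must be absolute: {path!r}")
    if path == "/":
        return []  # the root has no components
    if path.endswith("/"):
        raise ValueError(f"trailing slash not allowed: {path!r}")
    parts = path[1:].split("/")
    for part in parts:
        if part == "":
            raise ValueError(f"empty component in {path!r}")
        if part in (".", ".."):
            raise ValueError(f"relative reference in {path!r}")
        if "\x00" in part:
            raise ValueError(f"null character in {path!r}")
    return parts  # any other Unicode characters are accepted


class Namespace:
    """Toy hierarchical namespace rooted at '/' (hypothetical)."""

    def __init__(self):
        self.root = ZNode()

    def _lookup(self, parts):
        # Walk from the root one component at a time.
        node = self.root
        for part in parts:
            if part not in node.children:
                raise LookupError("/" + "/".join(parts))
            node = node.children[part]
        return node

    def create(self, path, data):
        parts = split_path(path)
        if not parts:
            raise ValueError("the root already exists")
        # Assumption: the parent must already exist; nothing is created implicitly.
        parent = self._lookup(parts[:-1])
        if parts[-1] in parent.children:
            raise FileExistsError(path)
        parent.children[parts[-1]] = ZNode(data)

    def get(self, path):
        return self._lookup(split_path(path)).data

    def ls(self, path):
        # Sorted for stable output; ZooKeeper itself does not promise a child order.
        return sorted(self._lookup(split_path(path)).children)


# Rebuild the example tree from the slide.
ns = Namespace()
ns.create("/parent", b"foo")
ns.create("/parent/child1", b"bar")
ns.create("/parent/child2", b"spam")
ns.create("/parent/child3", b"eggs")
ns.create("/parent/child3/grandchild1", b"42")
print(ns.ls("/parent"))                      # ['child1', 'child2', 'child3']
print(ns.get("/parent/child3/grandchild1"))  # b'42'
```

Rejecting `.` and `..` during validation keeps every path canonical, so each znode has exactly one spelling and can be resolved by a single walk down from the root.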

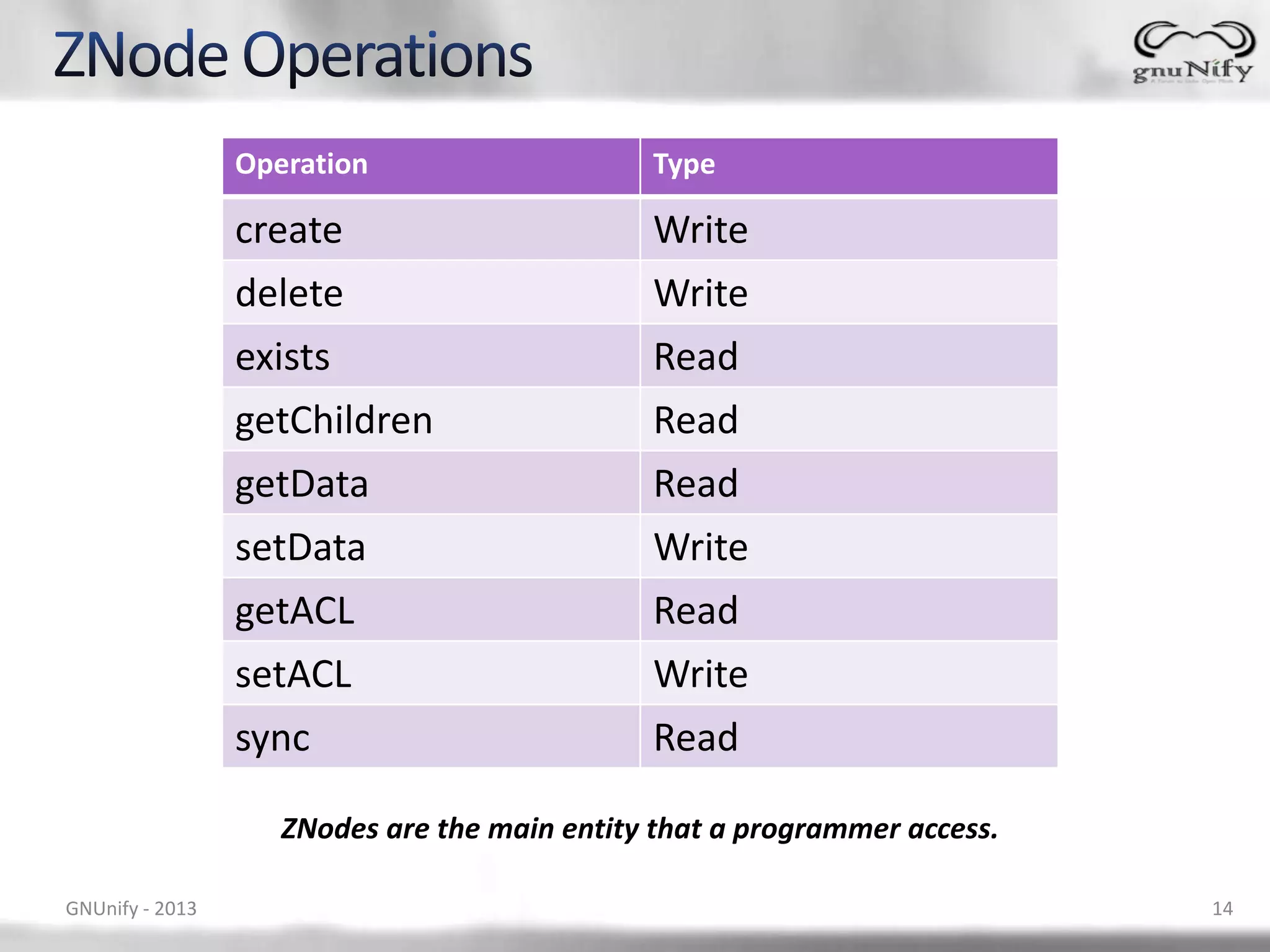

![ZooKeeper command-line client session](https://image.slidesharecdn.com/gnunify13-apachezookeeper-130216065255-phpapp02/75/Introduction-to-Apache-ZooKeeper-15-2048.jpg)

A session with the ZooKeeper command-line client connected to `localhost:2181`:

- `help` prints the usage line `ZooKeeper -server host:port cmd args` and the available commands: `connect host:port`, `get path [watch]`, `ls path [watch]`, `set path data [version]`, `rmr path`, `delquota [-n|-b] path`, `quit`, `printwatches on|off`, `create [-s] [-e] path data acl`, `stat path [watch]`, `close`, `ls2 path [watch]`, `history`, `listquota path`, `setAcl path acl`, `getAcl path`, `sync path`, `redo cmdno`, `addauth scheme auth`, `delete path [version]`, `setquota -n|-b val path`.
- `ls /` returns `[hbase, zookeeper]`.
- `ls2 /zookeeper` returns the child list `[quota]` followed by the znode's stat: `cZxid = 0x0`, `ctime = Tue Jan 01 05:30:00 IST 2013`, `mZxid = 0x0`, `mtime = Tue Jan 01 05:30:00 IST 2013`, `pZxid = 0x0`, `cversion = -1`, `dataVersion = 0`, `aclVersion = 0`, `ephemeralOwner = 0x0`, `dataLength = 0`, `numChildren = 1`.
- `create /test-znode HelloWorld` prints `Created /test-znode`.
- `ls /` now returns `[test-znode, hbase, zookeeper]`.
- `get /test-znode` prints `HelloWorld`.
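The `ls2 /zookeeper` command in the session above ends its output with the znode's stat fields. As a sketch, the Python below parses those `key = value` lines, copied from the slide, into a dictionary; `STAT_OUTPUT` and `parse_stat` are hypothetical names, and the parser assumes the one-field-per-line format shown on the slide. For reference, the `*Zxid` fields are ZooKeeper transaction ids (of the create, of the last data change, and of the last change to the children), the `*version` fields count changes to the children, data, and ACL, and `ephemeralOwner` is the owning session id for an ephemeral znode or `0` for a persistent one.

```python
# Stat lines from the slide's `ls2 /zookeeper` output.
STAT_OUTPUT = """\
cZxid = 0x0
ctime = Tue Jan 01 05:30:00 IST 2013
mZxid = 0x0
mtime = Tue Jan 01 05:30:00 IST 2013
pZxid = 0x0
cversion = -1
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 0
numChildren = 1
"""


def parse_stat(text):
    """Parse 'key = value' lines: hex and decimal values become ints, dates stay strings."""
    stat = {}
    for line in text.splitlines():
        if " = " not in line:
            continue  # skip prompts, child lists such as [quota], blank lines
        key, value = (s.strip() for s in line.split(" = ", 1))
        if value.startswith("0x"):
            stat[key] = int(value, 16)  # zxids and session ids are printed in hex
        else:
            try:
                stat[key] = int(value)  # version counters and sizes
            except ValueError:
                stat[key] = value  # ctime/mtime are human-readable dates
    return stat


stat = parse_stat(STAT_OUTPUT)
# A non-zero ephemeralOwner would mean the znode is tied to a client session.
is_ephemeral = stat["ephemeralOwner"] != 0
print(stat["numChildren"], is_ephemeral)  # 1 False
```

Keeping the timestamps as strings avoids guessing at the CLI's date format and time zone, which differ between installations.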