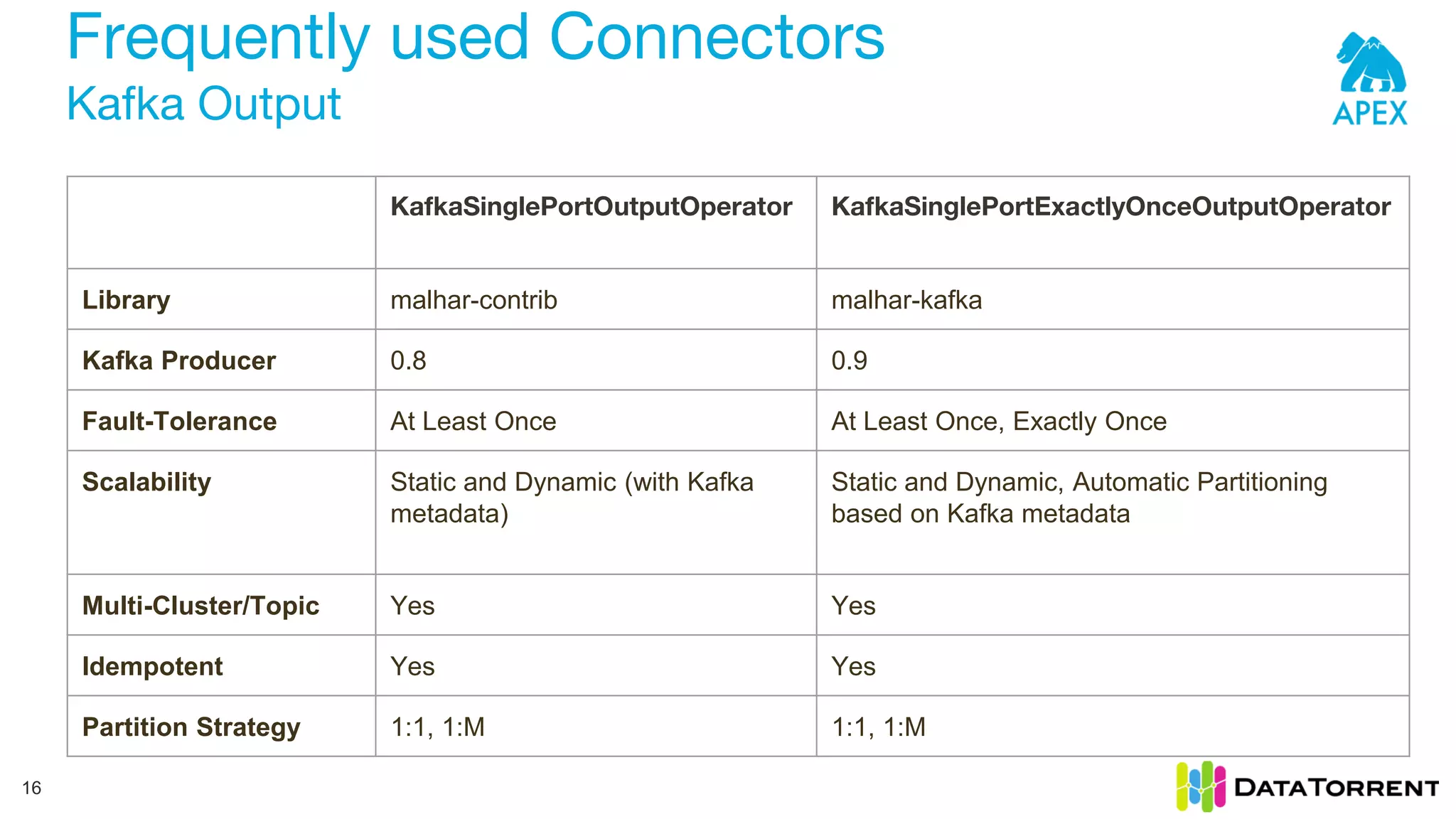

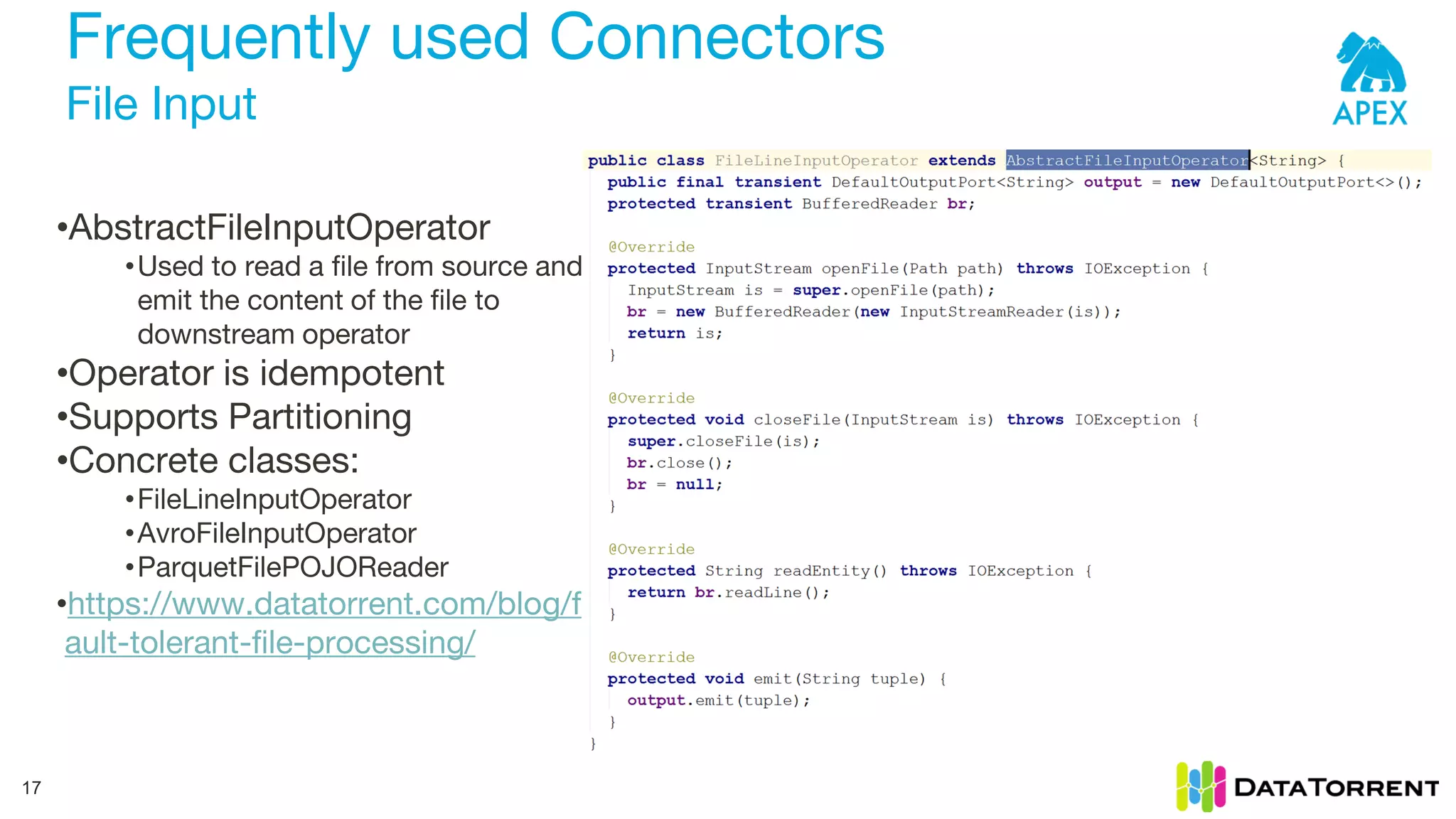

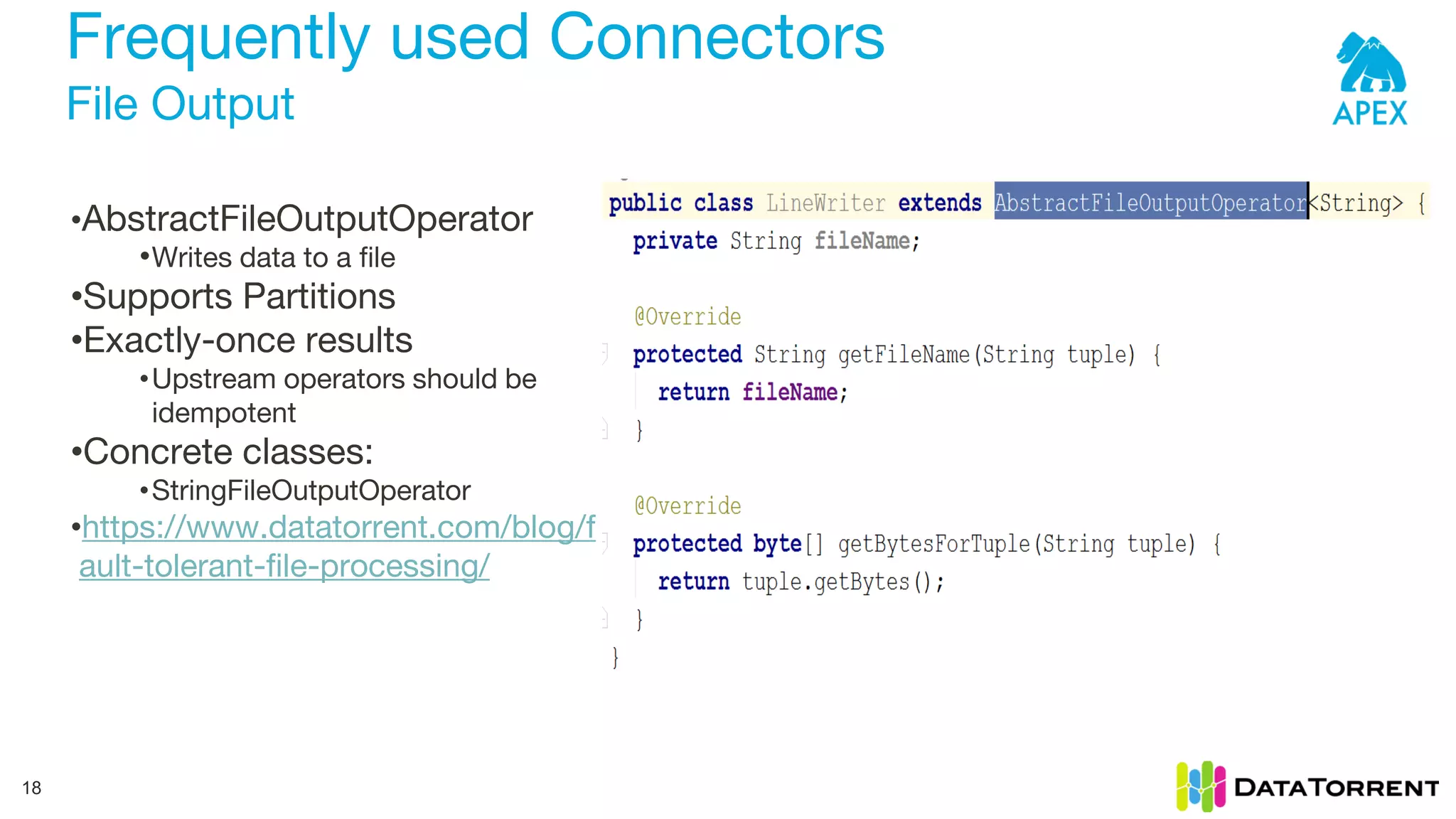

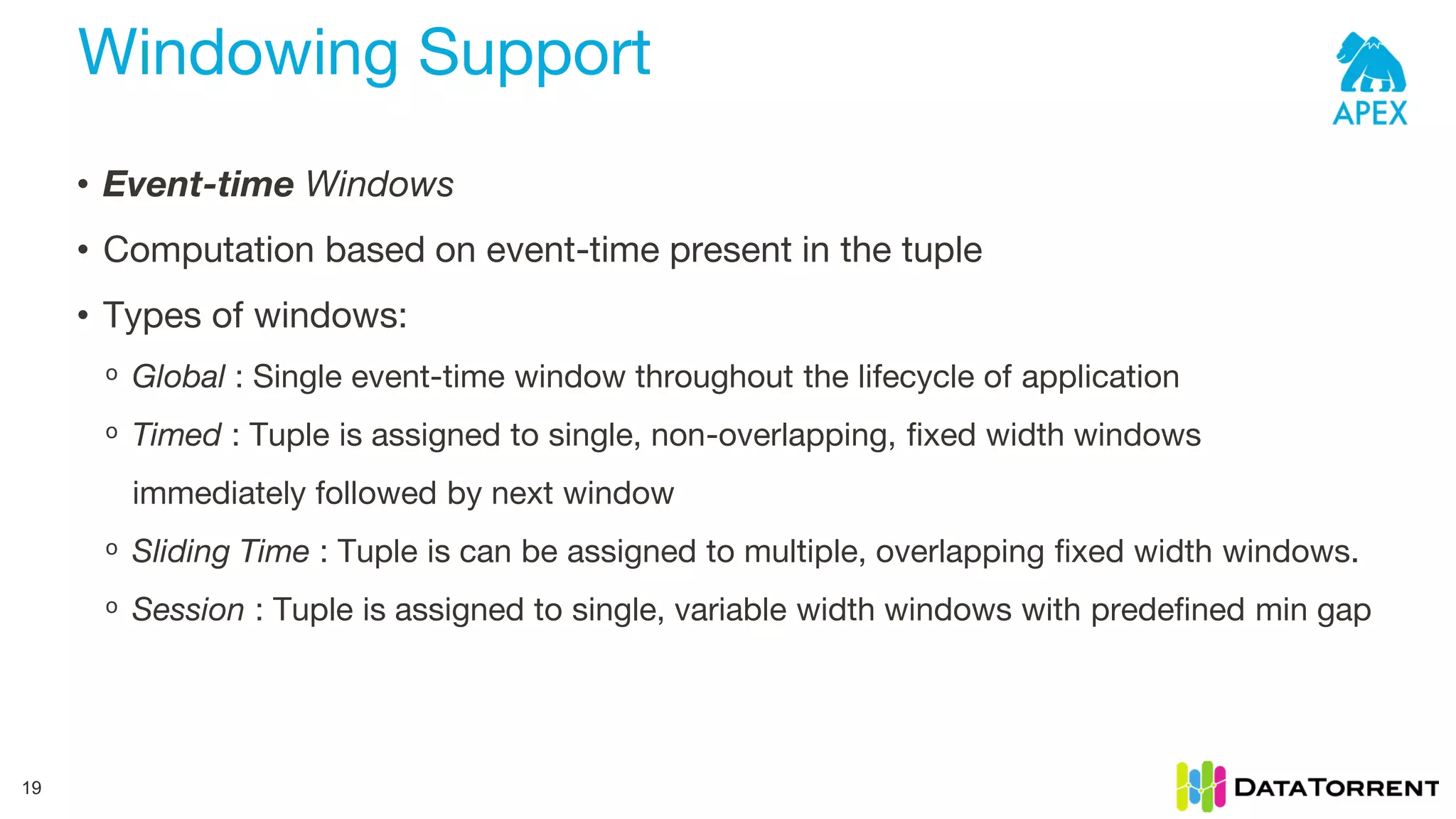

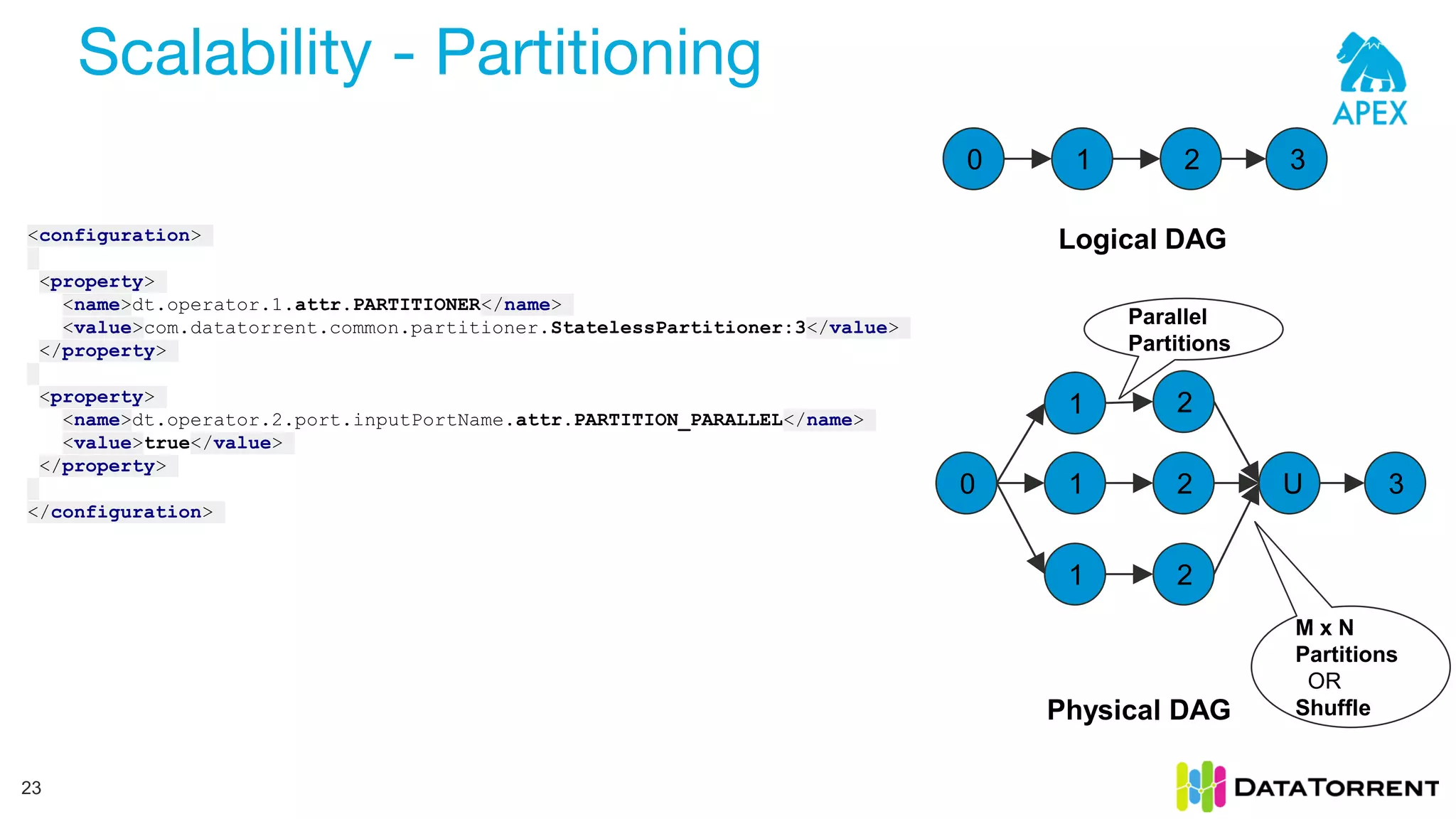

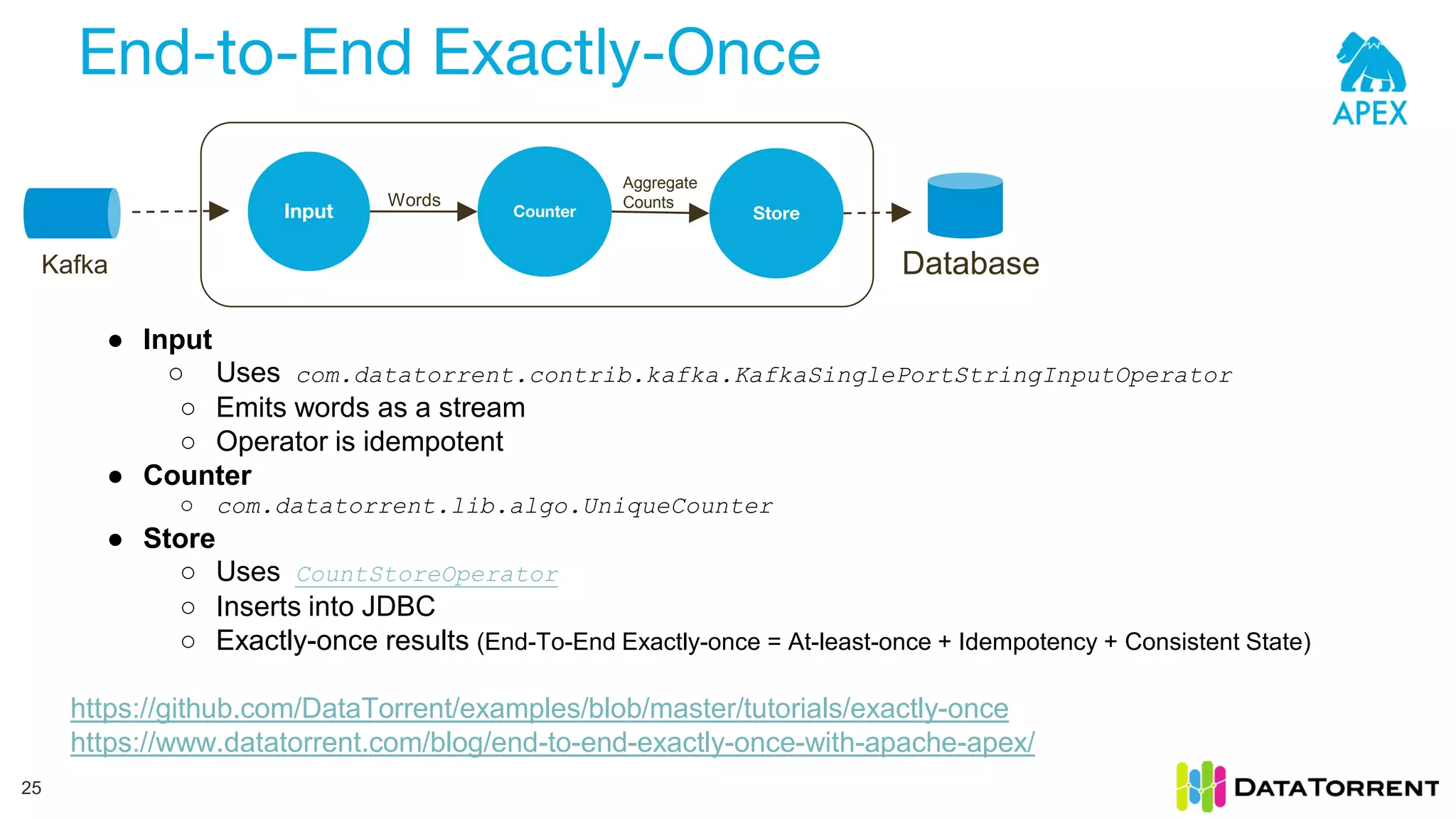

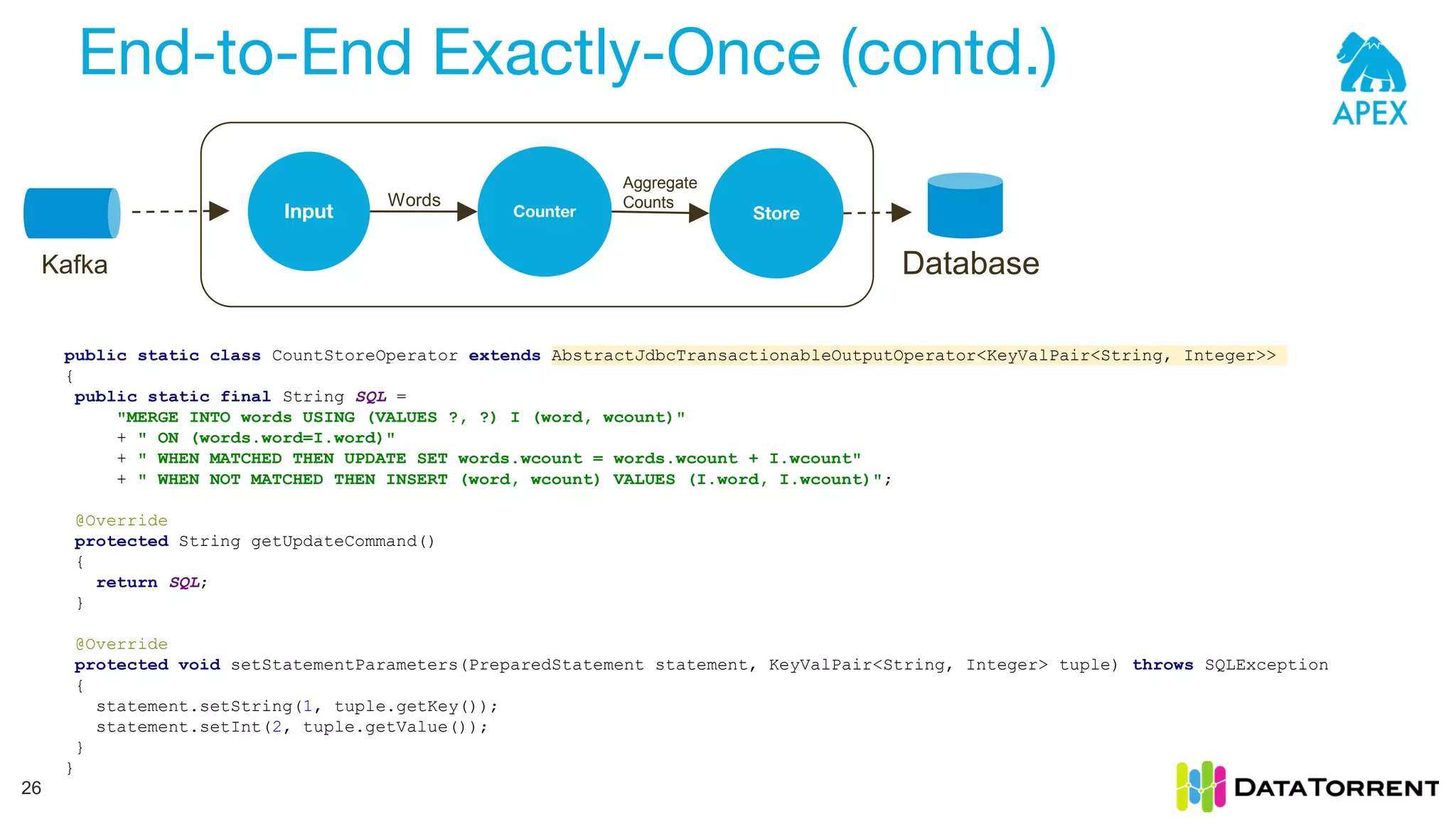

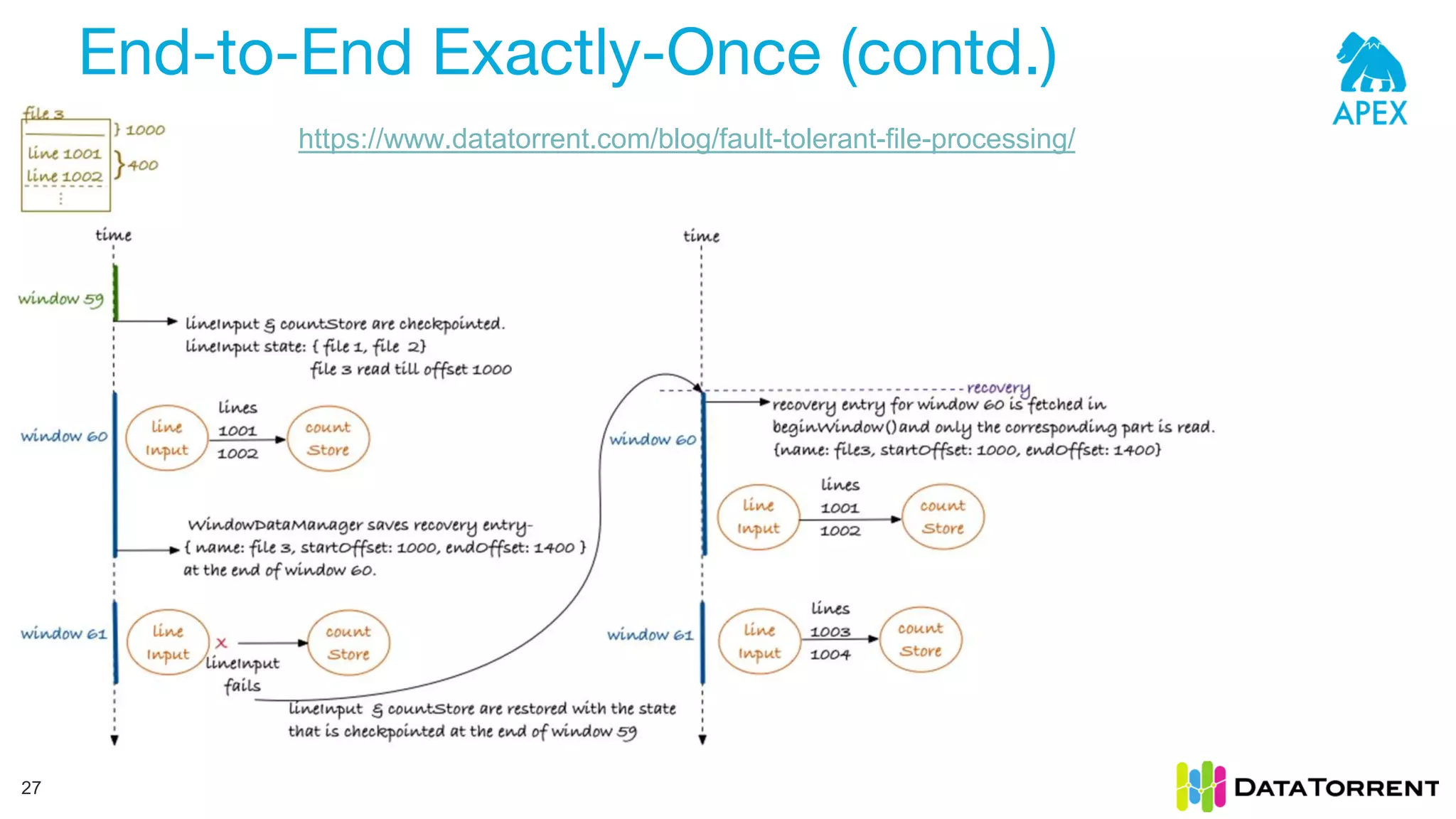

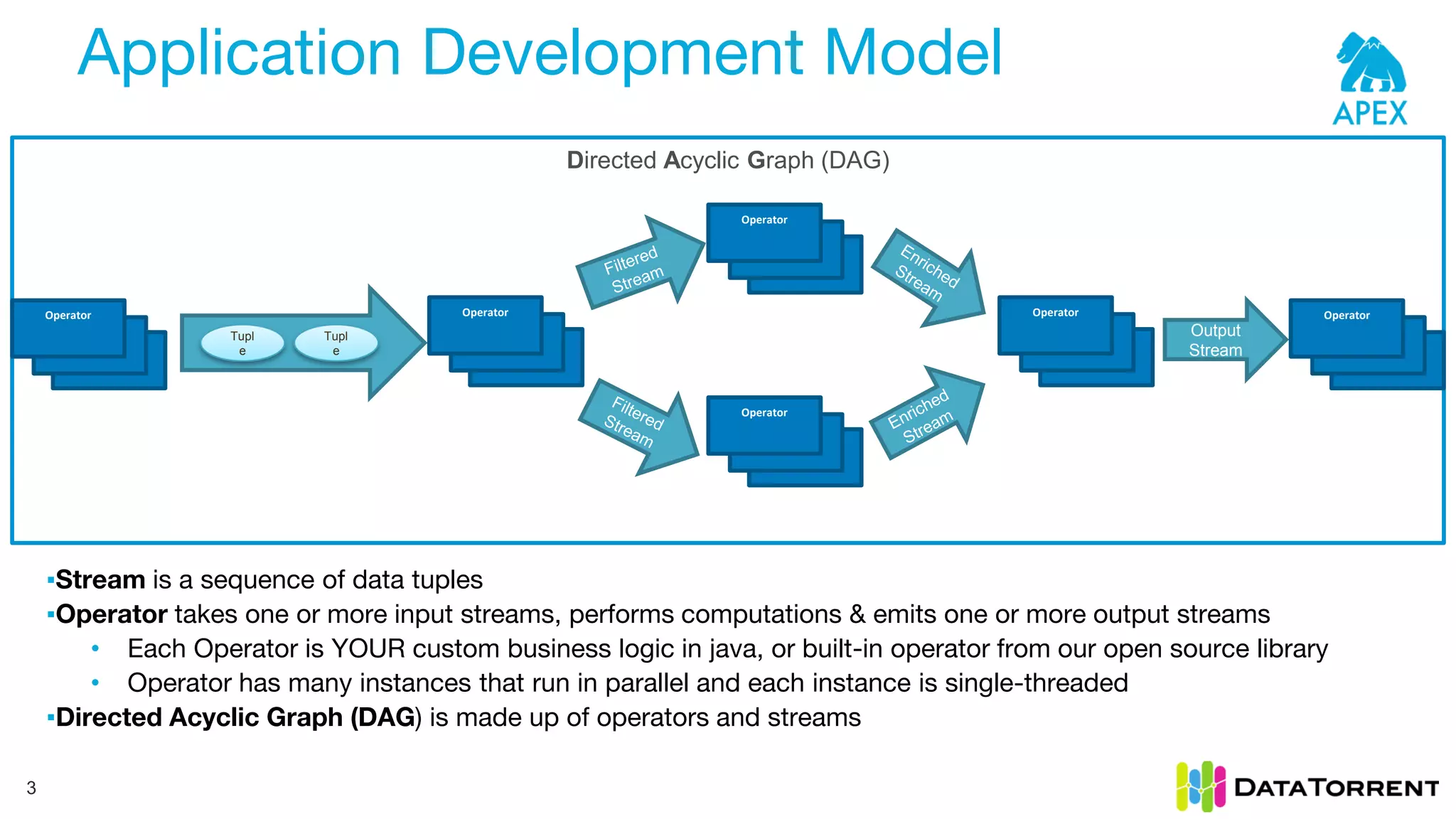

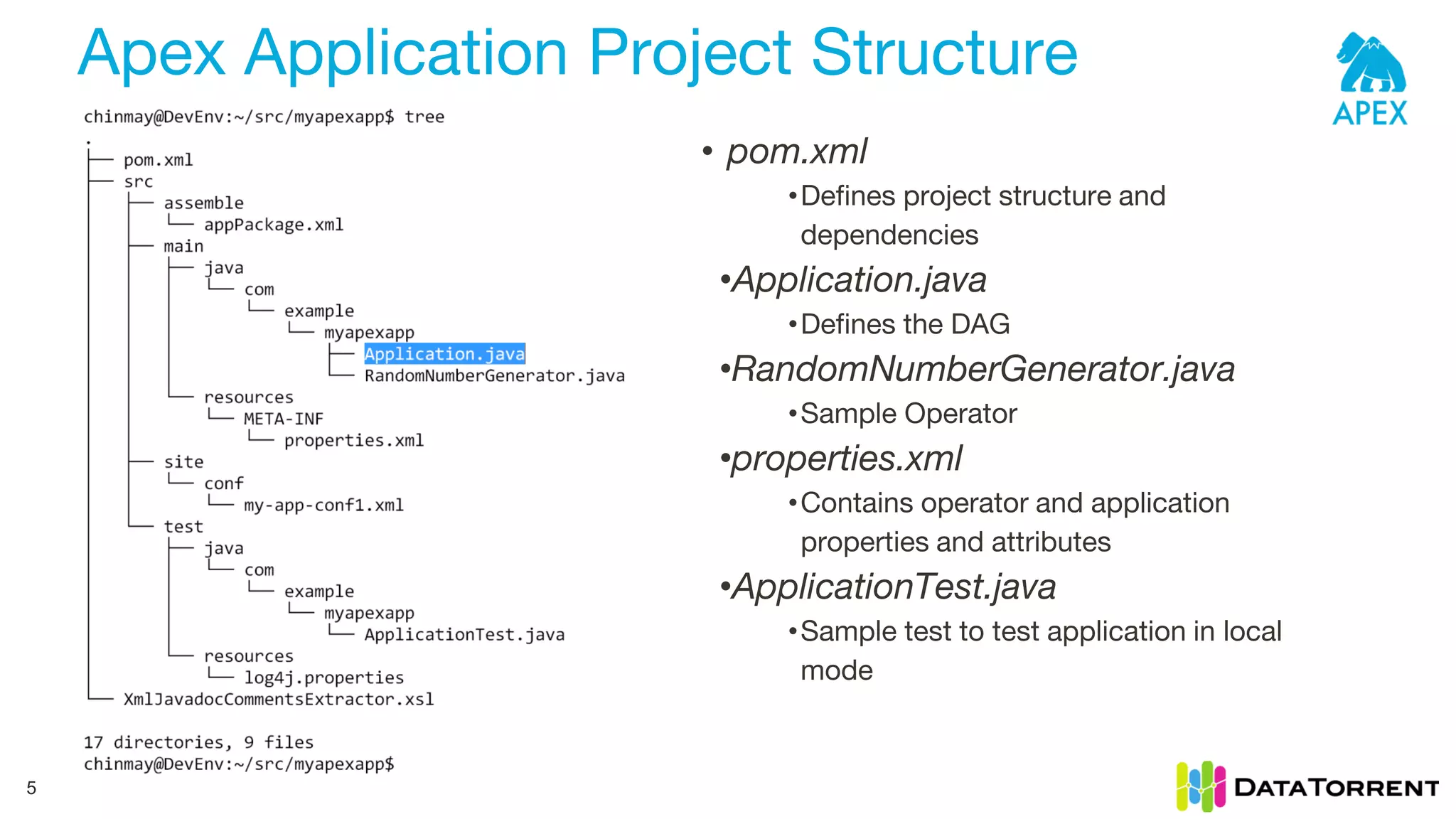

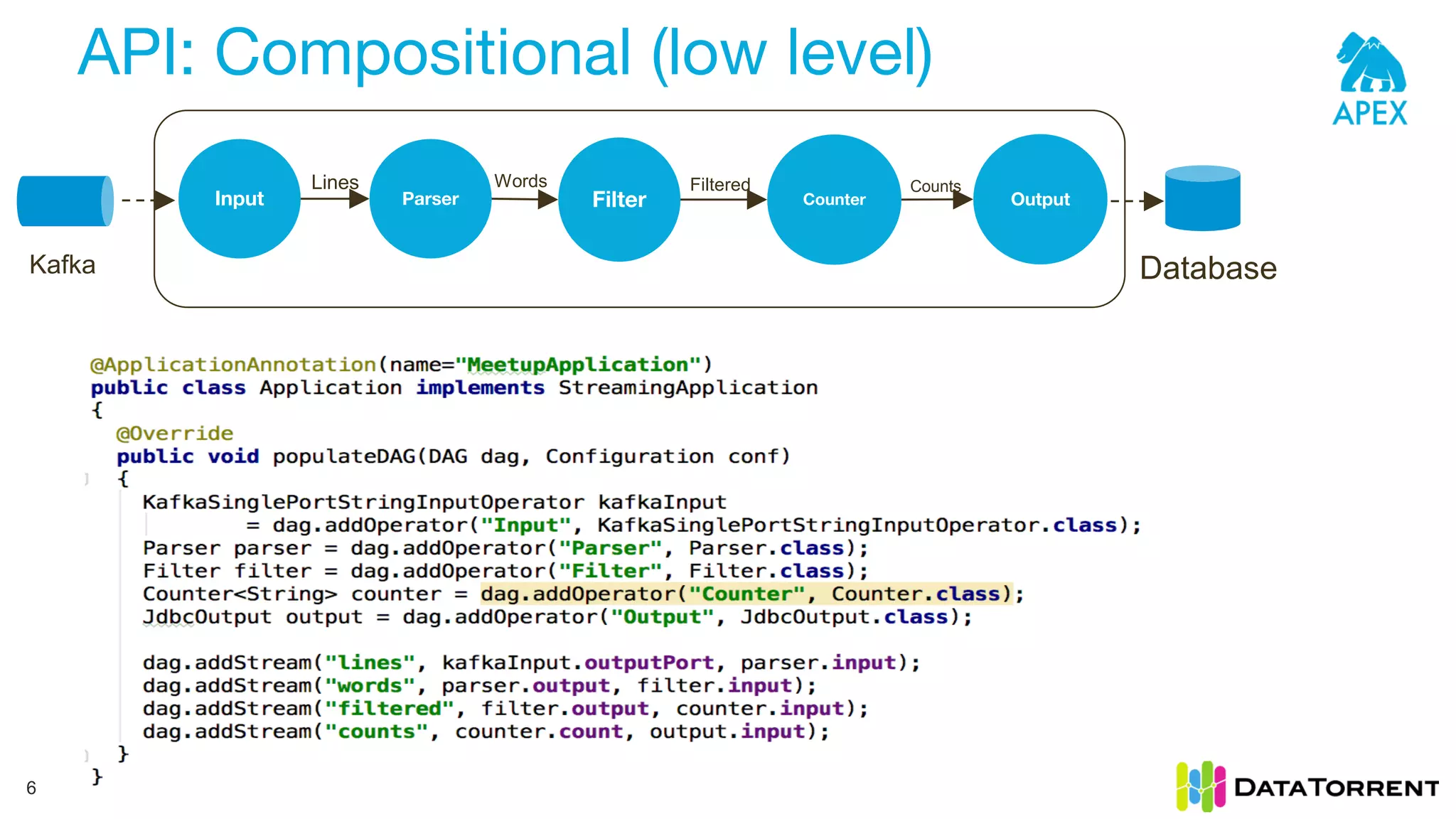

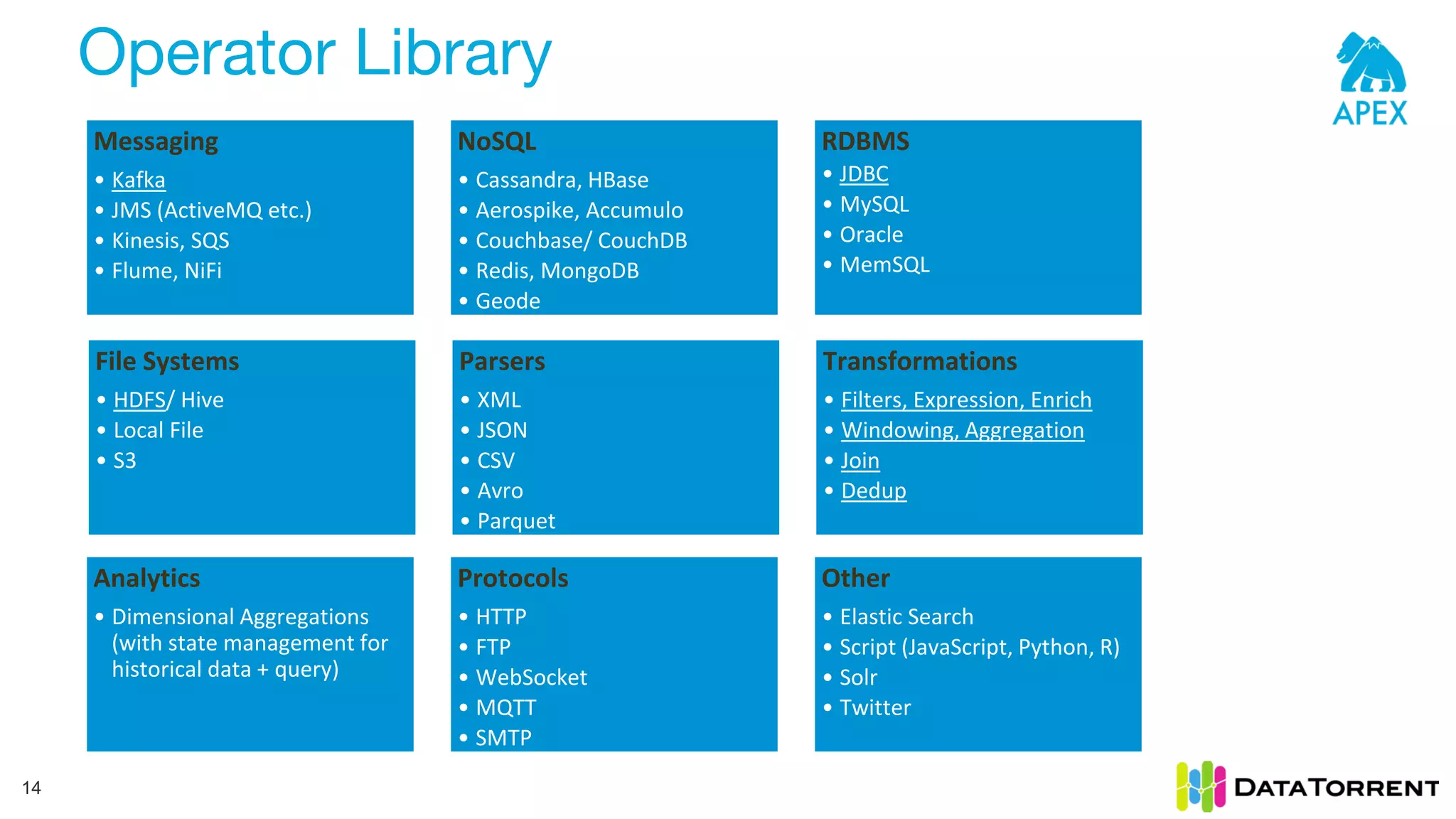

The document gives an overview of building streaming applications with Apache Apex, covering the application development model, project structure, and the APIs for operators and configuration. It emphasizes expressing custom business logic as a directed acyclic graph (DAG) of operators, and details the operator libraries, frequently used connectors, and stateful transformations. It also discusses scalability, exactly-once processing, and examples of how organizations use Apache Apex.
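To make the DAG model concrete, here is a minimal plain-Java sketch under stated assumptions. Real Apex applications implement `StreamingApplication.populateDAG(DAG dag, Configuration conf)` and wire operators with `dag.addOperator(...)` and `dag.addStream(...)`; the `MiniDag` class, its `addOperator`/`addStream` methods and the operators below are hypothetical stand-ins so the example runs without Apex. The `Counter` operator illustrates a stateful transformation: its running per-word counts are its state.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical plain-Java stand-in for an Apex DAG (not the Apex API):
// operators are vertices, streams are directed edges between them.
public class MiniDag {

  /** A minimal operator: handles one tuple and may emit tuples downstream. */
  interface MiniOperator {
    void process(String tuple, Consumer<String> emit);
  }

  private final Map<String, MiniOperator> operators = new LinkedHashMap<>();
  private final Map<String, List<String>> streams = new HashMap<>();

  MiniDag addOperator(String name, MiniOperator op) {
    operators.put(name, op);
    return this;
  }

  /** Adds an edge: every tuple emitted by `from` is delivered to `to`. */
  MiniDag addStream(String from, String to) {
    streams.computeIfAbsent(from, k -> new ArrayList<>()).add(to);
    return this;
  }

  /** Delivers a tuple to an operator and forwards whatever it emits along its streams. */
  void send(String name, String tuple) {
    operators.get(name).process(tuple, out -> {
      for (String next : streams.getOrDefault(name, List.of())) {
        send(next, out);
      }
    });
  }

  /** Stateful operator: its running per-word counts are the operator state. */
  static class Counter implements MiniOperator {
    private final Map<String, Integer> counts = new HashMap<>();

    @Override
    public void process(String word, Consumer<String> emit) {
      int count = counts.merge(word, 1, Integer::sum);
      emit.accept(word + "=" + count);
    }
  }

  /** Builds splitter -> counter -> console and returns the tuples the console received. */
  public static List<String> run(List<String> lines) {
    List<String> console = new ArrayList<>();
    MiniDag dag = new MiniDag()
        .addOperator("splitter", (line, emit) -> {
          for (String word : line.toLowerCase().split("\\s+")) {
            if (!word.isEmpty()) {
              emit.accept(word);
            }
          }
        })
        .addOperator("counter", new Counter())
        .addOperator("console", (tuple, emit) -> console.add(tuple))
        .addStream("splitter", "counter")
        .addStream("counter", "console");
    for (String line : lines) {
      dag.send("splitter", line);
    }
    return console;
  }

  public static void main(String[] args) {
    // prints [apex=1, streams=1, apex=2]
    System.out.println(run(List.of("apex streams", "apex")));
  }
}
```

Because the graph is acyclic, forwarding each emitted tuple depth-first always terminates; Apex itself runs operators in separate containers and moves tuples between them over buffered streams, which this sketch does not model.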

![Creating Apex Application Project (slide 4)](https://image.slidesharecdn.com/bigdataspain2016-streamprocessingapplicationswithapacheapex-161119093004/75/BigDataSpain-2016-Stream-Processing-Applications-with-Apache-Apex-4-2048.jpg)

Creating an Apex application project: generate a new project from the Apex Maven archetype with `mvn archetype:generate -DarchetypeGroupId=org.apache.apex -DarchetypeArtifactId=apex-app-archetype -DarchetypeVersion=LATEST -DgroupId=com.example -Dpackage=com.example.myapexapp -DartifactId=myapexapp -Dversion=1.0-SNAPSHOT`. Maven asks you to confirm the properties (groupId `com.example`, artifactId `myapexapp`, version `1.0-SNAPSHOT`, package `com.example.myapexapp`, archetypeVersion `LATEST`); after you answer `Y`, it reports `project created from Archetype in dir: /media/sf_workspace/src/myapexapp` followed by `BUILD SUCCESS` (total time 13.141 s in the run shown, finished 2016-11-15). Video walkthrough: https://www.youtube.com/watch?v=z-eeh-tjQrc
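Inside a project like this, business logic is written as operators. Apex operators see the stream as a sequence of streaming windows: the engine calls `setup(...)` once (the real callback receives an `OperatorContext`), brackets each window with `beginWindow(long windowId)` and `endWindow()`, and calls `teardown()` at shutdown. The sketch below mimics that lifecycle in plain Java so it runs without Apex. The `MiniOperator` interface, `WindowSum` operator and `drive` method are hypothetical; in the real API, per-tuple handling lives in an input port's `process` method rather than on the operator itself.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: callback names mirror Apex's operator lifecycle,
// but these types are illustrative and not part of the Apex API.
public class WindowLifecycle {

  interface MiniOperator {
    void setup();
    void beginWindow(long windowId);
    void process(int tuple); // in Apex this would be an input port's process()
    void endWindow();
    void teardown();
  }

  /** Sums the tuples of each streaming window and records one result per window. */
  static class WindowSum implements MiniOperator {
    final List<String> emitted = new ArrayList<>();
    private long currentWindow;
    private int sum;

    @Override public void setup() { emitted.clear(); }
    @Override public void beginWindow(long windowId) { currentWindow = windowId; sum = 0; }
    @Override public void process(int tuple) { sum += tuple; }
    @Override public void endWindow() { emitted.add("window " + currentWindow + ": " + sum); }
    @Override public void teardown() { }
  }

  /** Simulates the engine: each inner array holds the tuples of one window. */
  public static List<String> drive(int[][] windows) {
    WindowSum op = new WindowSum();
    op.setup();
    for (int w = 0; w < windows.length; w++) {
      op.beginWindow(w);
      for (int tuple : windows[w]) {
        op.process(tuple);
      }
      op.endWindow();
    }
    op.teardown();
    return op.emitted;
  }

  public static void main(String[] args) {
    // prints [window 0: 6, window 1: 10, window 2: 0]
    System.out.println(drive(new int[][] {{1, 2, 3}, {10}, {}}));
  }
}
```

Resetting state in `beginWindow` and emitting in `endWindow` is the usual pattern for per-window aggregates, since window boundaries are also where Apex checkpoints and recovers operator state.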

![API: Beam (slide 9)](https://image.slidesharecdn.com/bigdataspain2016-streamprocessingapplicationswithapacheapex-161119093004/75/BigDataSpain-2016-Stream-Processing-Applications-with-Apache-Apex-9-2048.jpg)

API: Beam

- The Apex Runner for Apache Beam is now available.
- Build-once, run-anywhere model: Beam streaming applications can be run on the Apex runner.
- In the slide's example `main` method, `PipelineOptionsFactory.fromArgs(args).withValidation().as(Options.class)` parses the options, `options.setRunner(ApexRunner.class)` selects the Apex runner, and `Pipeline.create(options)` creates the pipeline `p`. The pipeline reads input with `p.apply("ReadLines", TextIO.Read.from(options.getInput()))`, chains `.apply(new CountWords())`, `.apply(MapElements.via(new FormatAsTextFn()))` and `.apply("WriteCounts", TextIO.Write.to(options.getOutput()))`, and is then executed with `p.run().waitUntilFinish()`.
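The slide's pipeline uses `CountWords` and `FormatAsTextFn` without defining them; in Beam's WordCount example they split lines into words, count each word, and format each result as `word: count`. The plain-Java sketch below assumes that behavior (including splitting on runs of non-letter characters) to show what the pipeline computes. It is not Beam code: because Beam separates the pipeline definition from the runner, selecting `ApexRunner` changes where this computation executes, not its result.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java approximation of the WordCount steps; names are illustrative, not Beam APIs.
public class CountWordsSketch {

  /** Approximates CountWords: split each line on non-letter runs and count each word. */
  public static Map<String, Long> countWords(List<String> lines) {
    Map<String, Long> counts = new TreeMap<>(); // sorted only to make output deterministic
    for (String line : lines) {
      for (String word : line.split("[^\\p{L}]+")) {
        if (!word.isEmpty()) {
          counts.merge(word, 1L, Long::sum);
        }
      }
    }
    return counts;
  }

  /** Approximates FormatAsTextFn: render each (word, count) pair as "word: count". */
  public static List<String> formatAsText(Map<String, Long> counts) {
    List<String> out = new ArrayList<>();
    for (Map.Entry<String, Long> e : counts.entrySet()) {
      out.add(e.getKey() + ": " + e.getValue());
    }
    return out;
  }

  public static void main(String[] args) {
    List<String> lines = List.of("to be, or not to be", "Apex runs Beam");
    System.out.println(formatAsText(countWords(lines)));
  }
}
```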

![Frequently used Connectors: Kafka Input (slide 15)](https://image.slidesharecdn.com/bigdataspain2016-streamprocessingapplicationswithapacheapex-161119093004/75/BigDataSpain-2016-Stream-Processing-Applications-with-Apache-Apex-15-2048.jpg)

Frequently used connectors: Kafka input

| | KafkaSinglePortInputOperator | KafkaSinglePortByteArrayInputOperator |
|---|---|---|
| Library | malhar-contrib | malhar-kafka |
| Kafka Consumer | 0.8 | 0.9 |
| Emit Type | byte[] | byte[] |
| Fault-Tolerance | At Least Once, Exactly Once | At Least Once, Exactly Once |
| Scalability | Static and Dynamic (with Kafka metadata) | Static and Dynamic (with Kafka metadata) |
| Multi-Cluster/Topic | Yes | Yes |
| Idempotent | Yes | Yes |
| Partition Strategy | 1:1, 1:M | 1:1, 1:M |
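The Partition Strategy row distinguishes 1:1 from 1:M. Reading 1:1 as one operator partition per Kafka partition and 1:M as one operator partition consuming several Kafka partitions (Malhar's Kafka operators name these strategies `ONE_TO_ONE` and `ONE_TO_MANY`), the sketch below computes such an assignment. The local `Strategy` enum, the round-robin spread and the `operatorPartitions` parameter are assumptions for illustration; the real operators can also repartition dynamically from Kafka metadata, which is not modeled here.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of mapping Kafka partitions onto operator partitions.
public class KafkaPartitionSketch {

  /** Local stand-in for the 1:1 and 1:M strategies; not the Malhar enum. */
  public enum Strategy { ONE_TO_ONE, ONE_TO_MANY }

  /**
   * Returns, for each operator partition, the Kafka partition ids it consumes.
   * ONE_TO_ONE ignores operatorPartitions; ONE_TO_MANY spreads Kafka partitions
   * round-robin (an assumption) and never creates idle operator partitions.
   */
  public static List<List<Integer>> assign(int kafkaPartitions, Strategy strategy, int operatorPartitions) {
    if (kafkaPartitions <= 0) {
      throw new IllegalArgumentException("need at least one Kafka partition");
    }
    int n = (strategy == Strategy.ONE_TO_ONE)
        ? kafkaPartitions
        : Math.max(1, Math.min(operatorPartitions, kafkaPartitions));
    List<List<Integer>> assignment = new ArrayList<>();
    for (int i = 0; i < n; i++) {
      assignment.add(new ArrayList<>());
    }
    for (int p = 0; p < kafkaPartitions; p++) {
      assignment.get(p % n).add(p);
    }
    return assignment;
  }

  public static void main(String[] args) {
    System.out.println(assign(4, Strategy.ONE_TO_ONE, 0));  // prints [[0], [1], [2], [3]]
    System.out.println(assign(5, Strategy.ONE_TO_MANY, 2)); // prints [[0, 2, 4], [1, 3]]
  }
}
```

1:1 maximizes parallelism at the cost of one container per Kafka partition, while 1:M trades some parallelism for fewer operator instances when a topic has many low-volume partitions.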