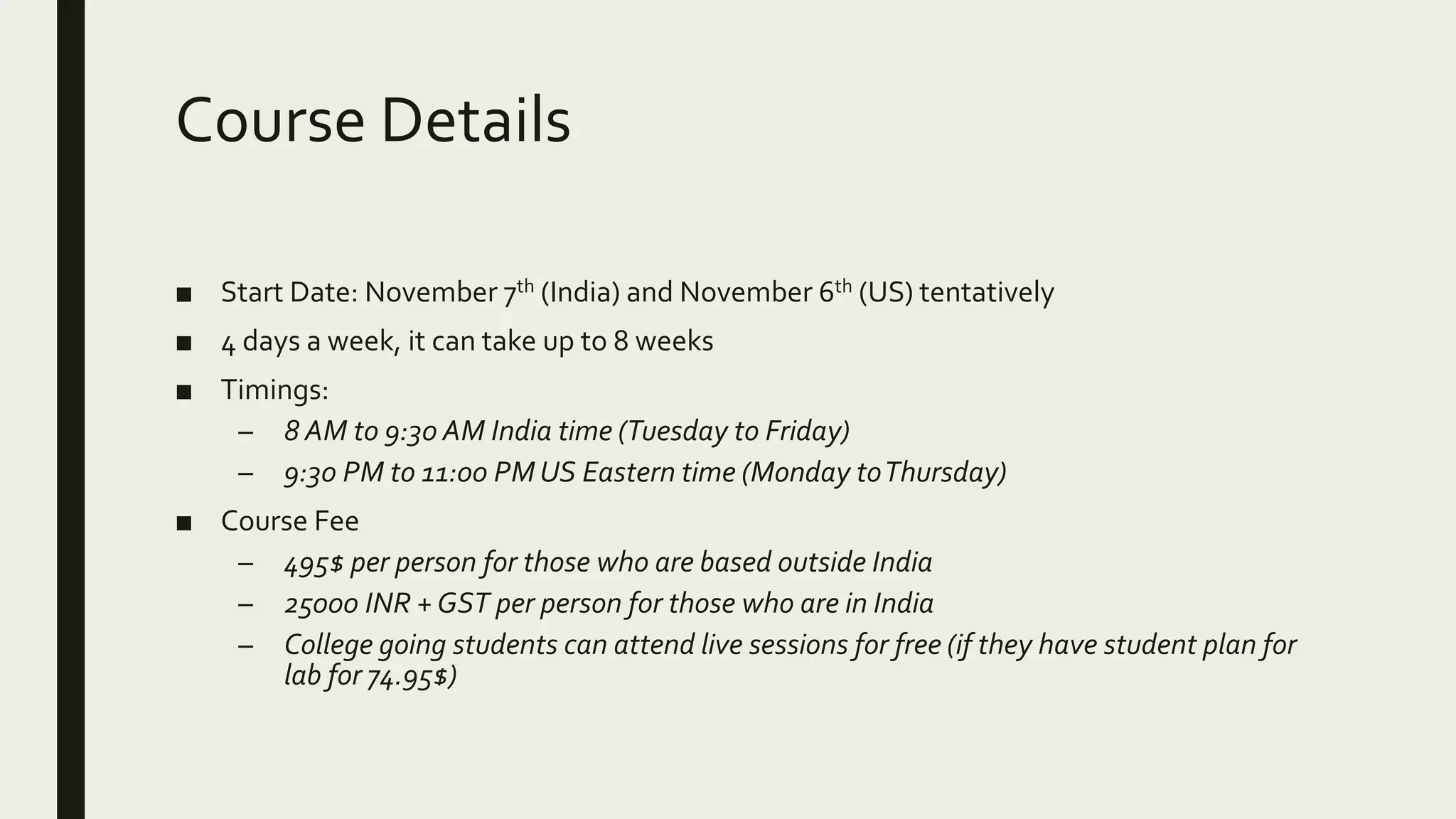

This document outlines the agenda for a comprehensive developer workshop on Spark-based big data certifications offered by ITVersity. Over 4 to 8 weeks, the workshop covers Linux essentials, Spark, Spark SQL, streaming analytics with Spark Streaming, and MLlib. Students learn shell scripting and complete an exercise that uses a shell script to monitor multiple servers. The course fee is $495 (or 25,000 INR) and includes recorded videos, pre-recorded certification courses, and 3-4 months of lab access.