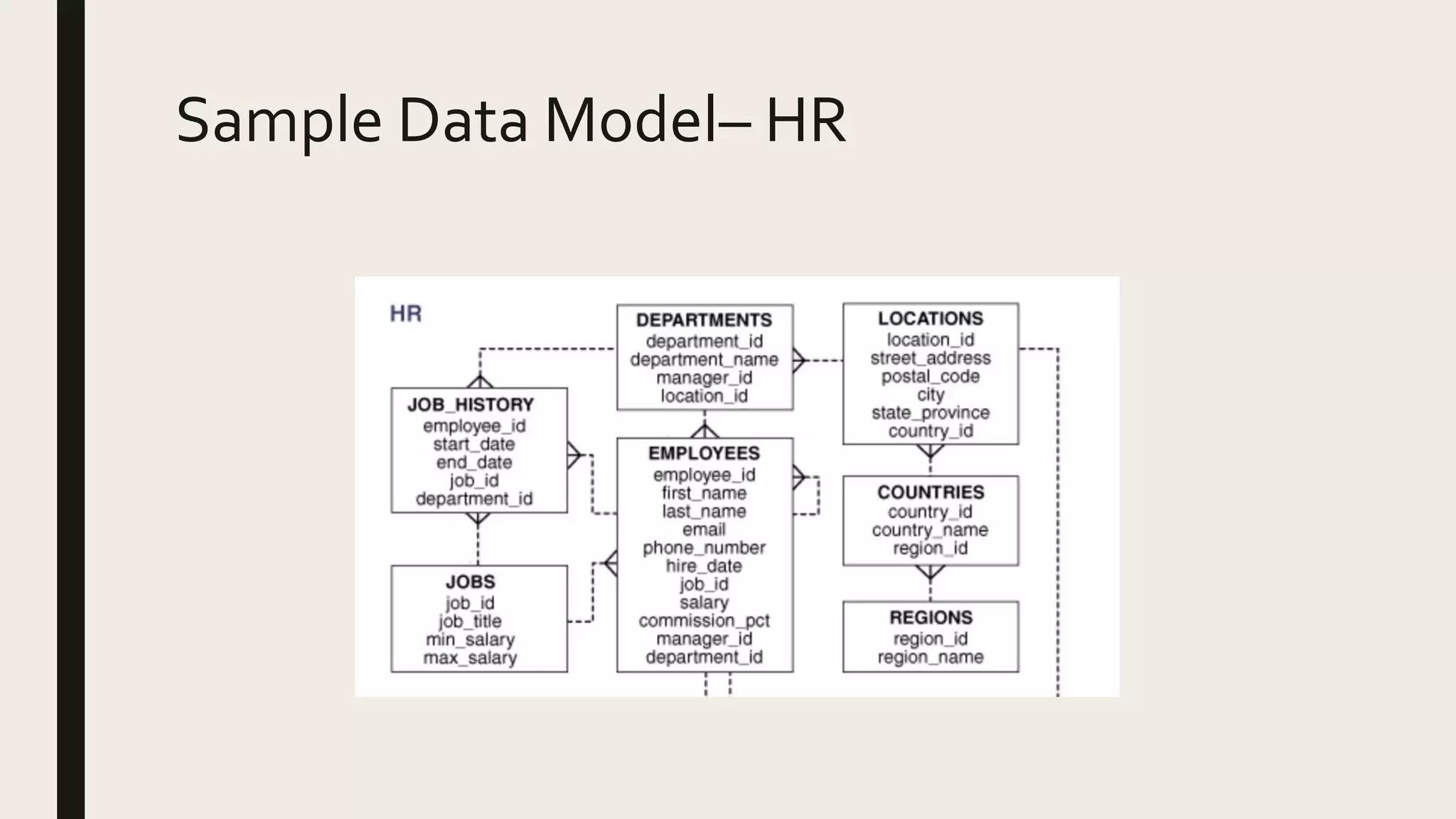

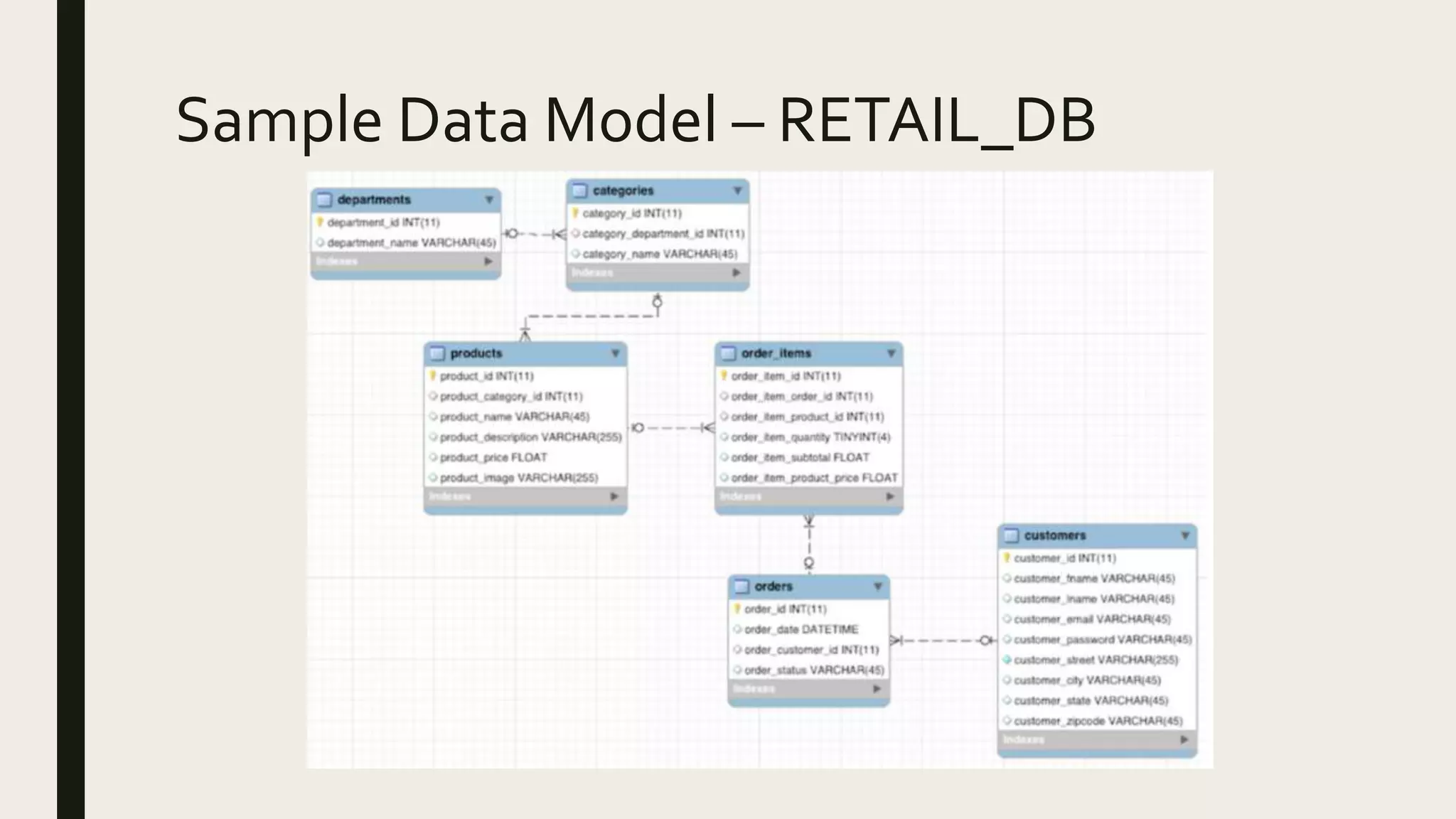

This document provides an agenda and details for a comprehensive developer workshop on Spark-based big data certifications. The workshop will cover topics like introduction to big data, popular certifications, curriculum including Linux, SQL, Python, Spark, and more. It will run for 4 days a week for 8 weeks, with sessions at different times for India and US. The course fee is $495 or 25,000 INR and includes recorded videos, pre-recorded courses, 3-4 months of lab access, and a certification simulator. A separate document provides details on a database essentials course covering SQL using Oracle and application express.