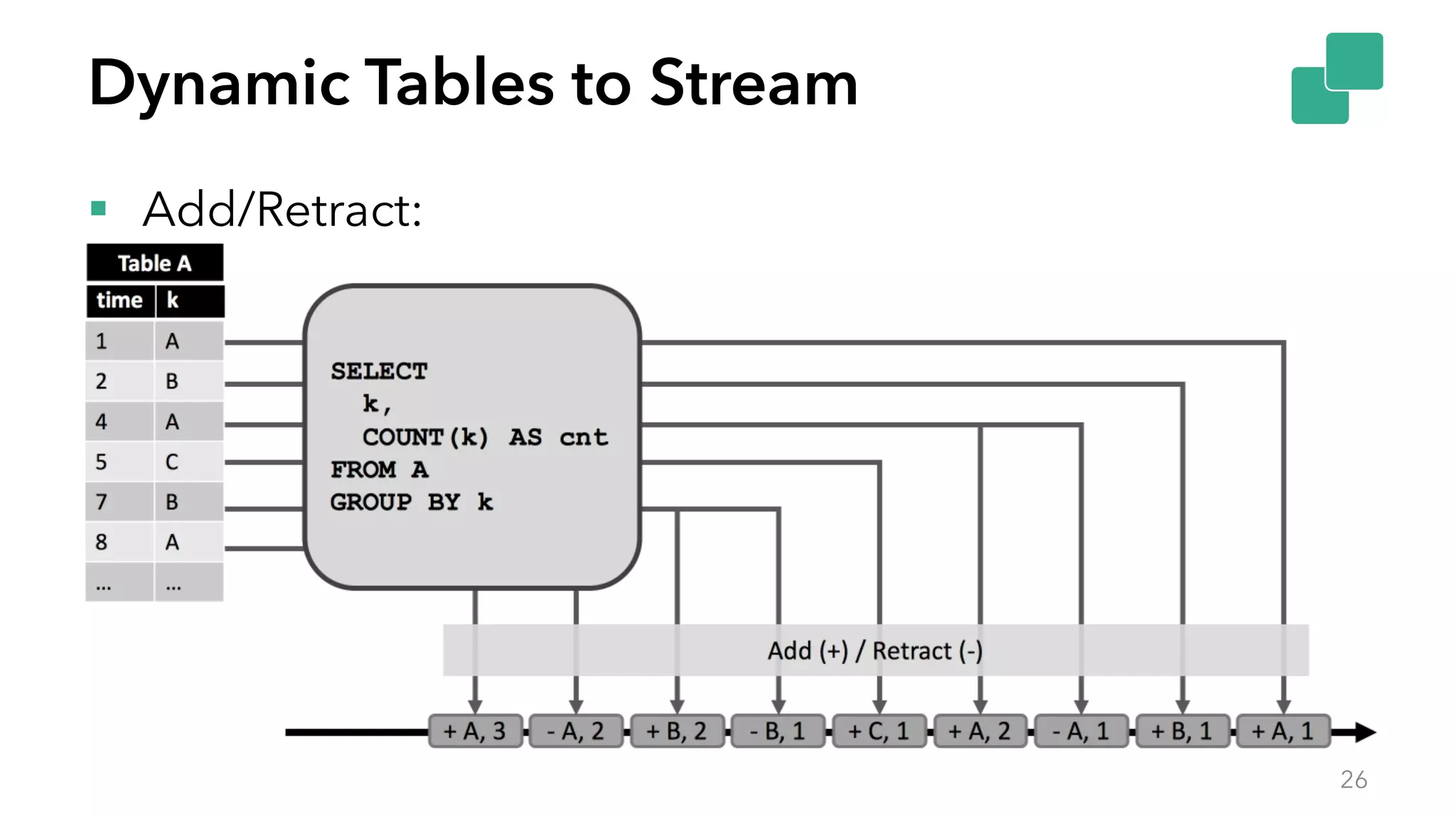

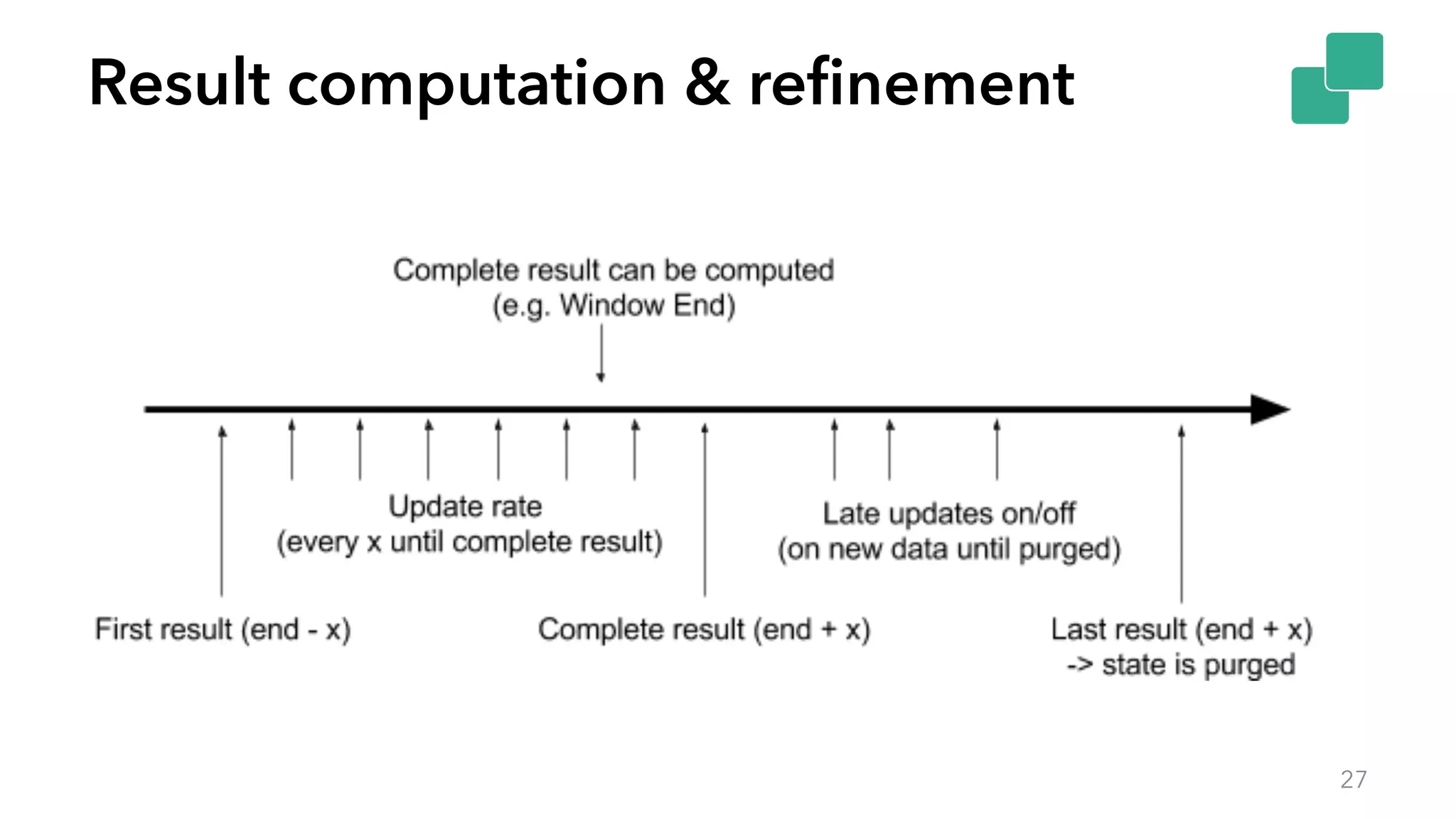

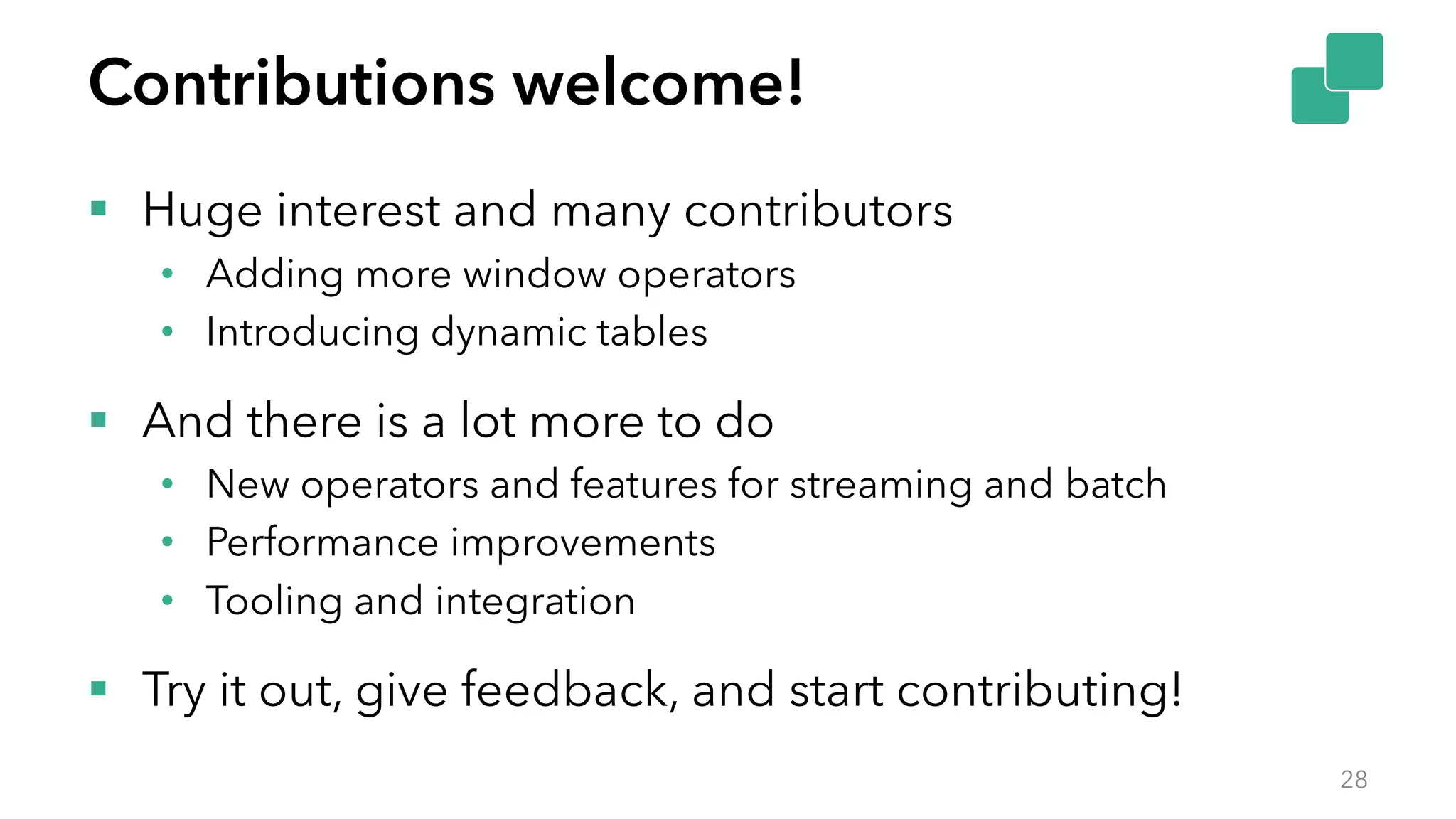

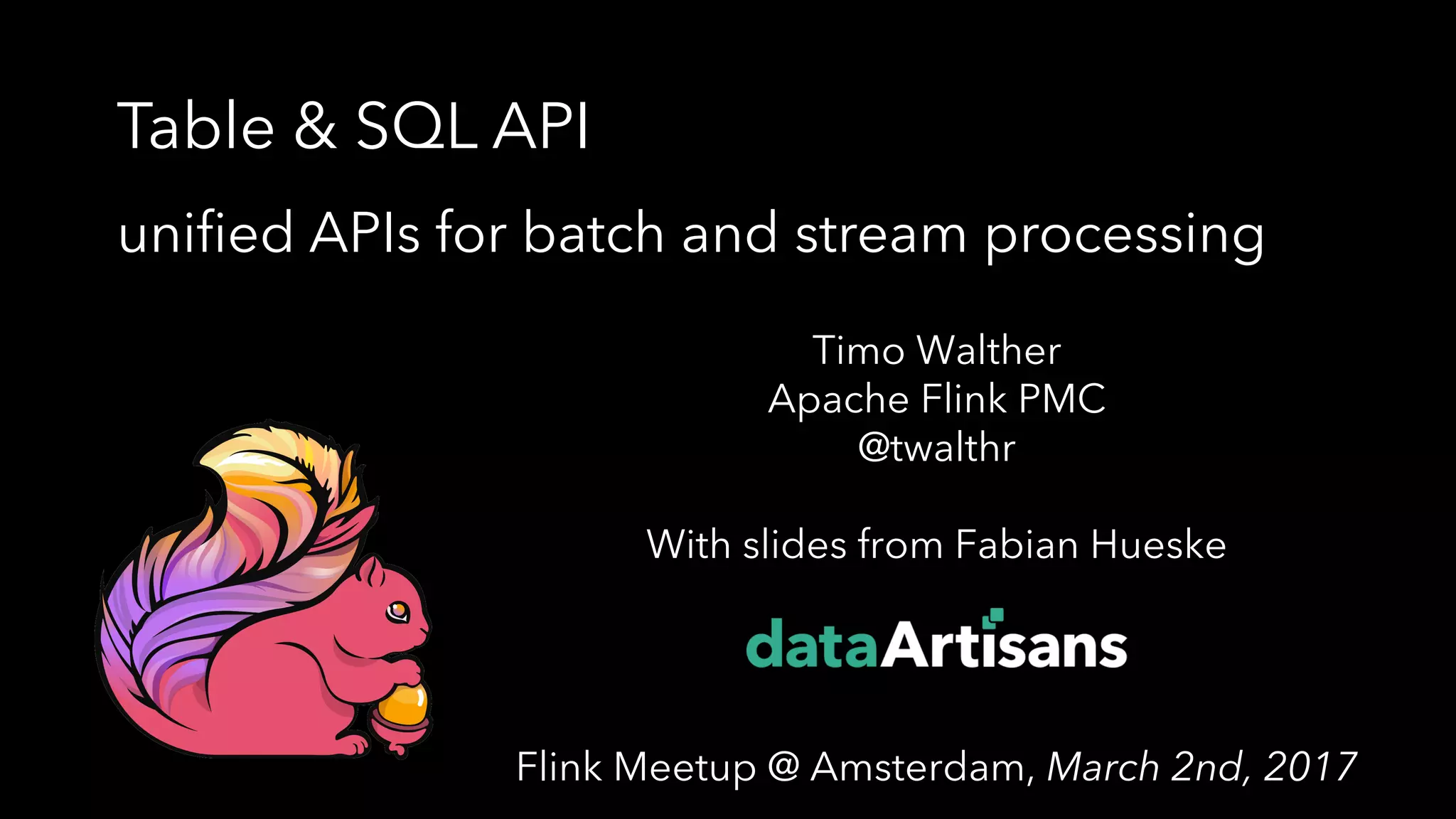

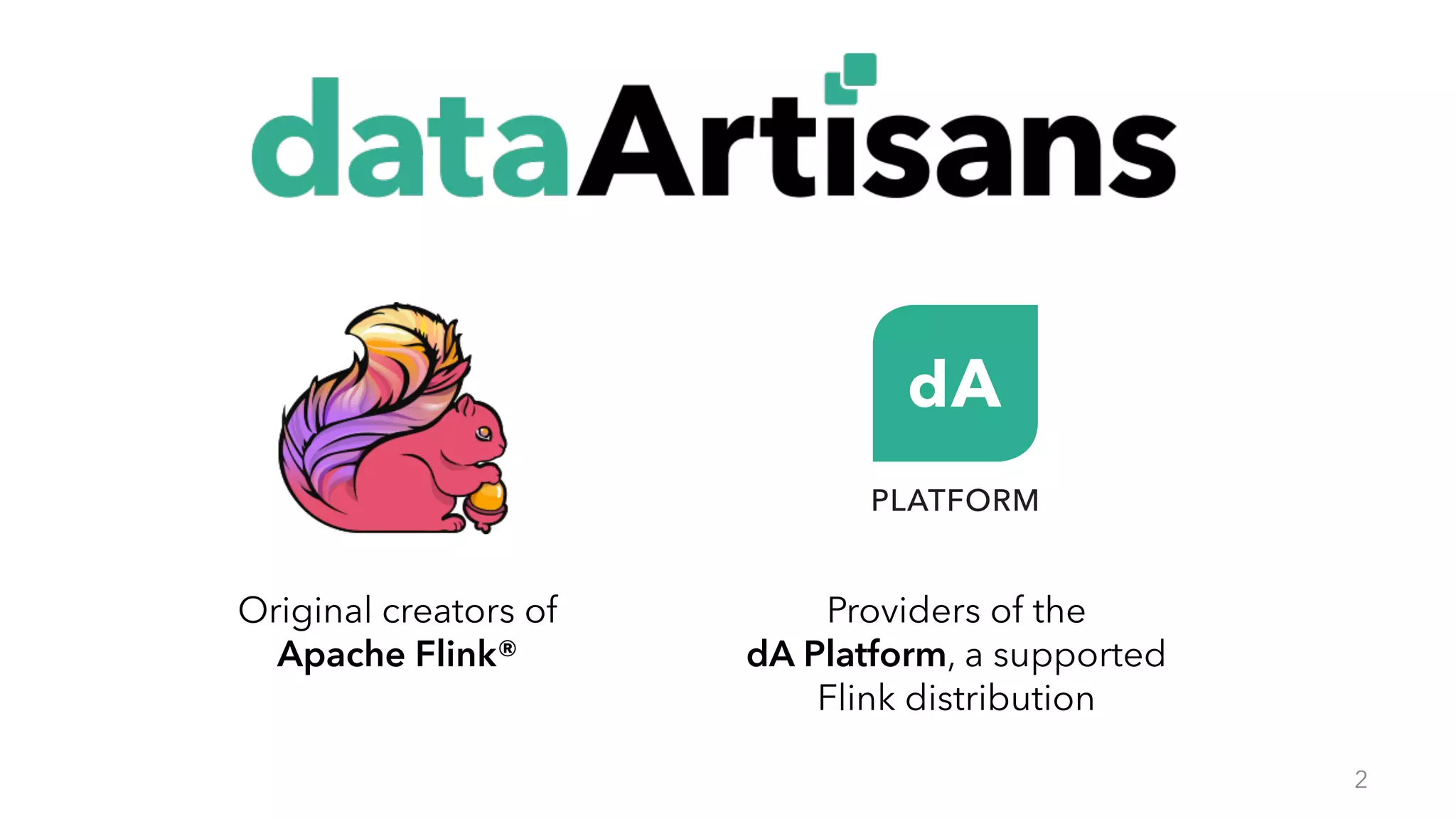

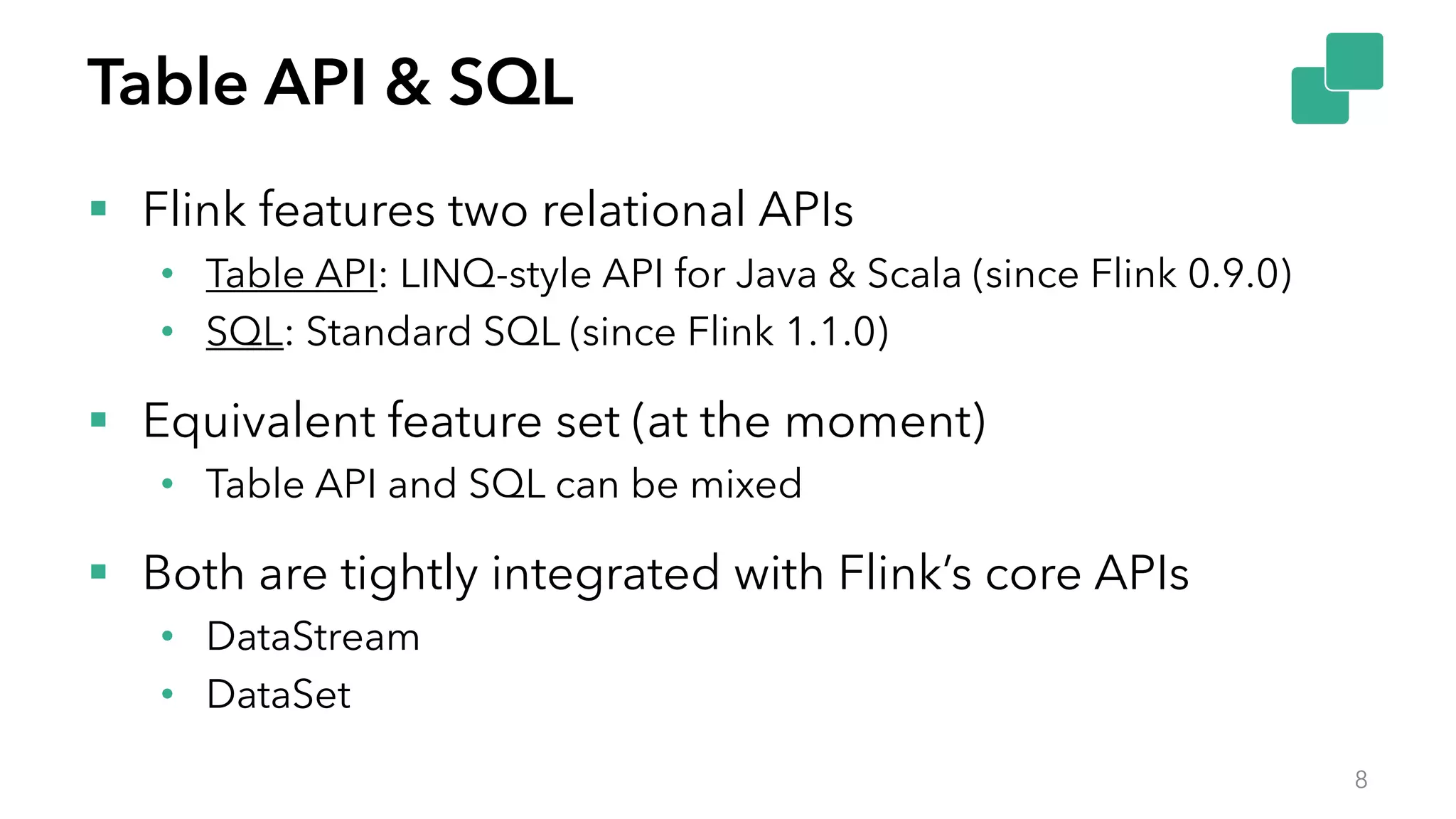

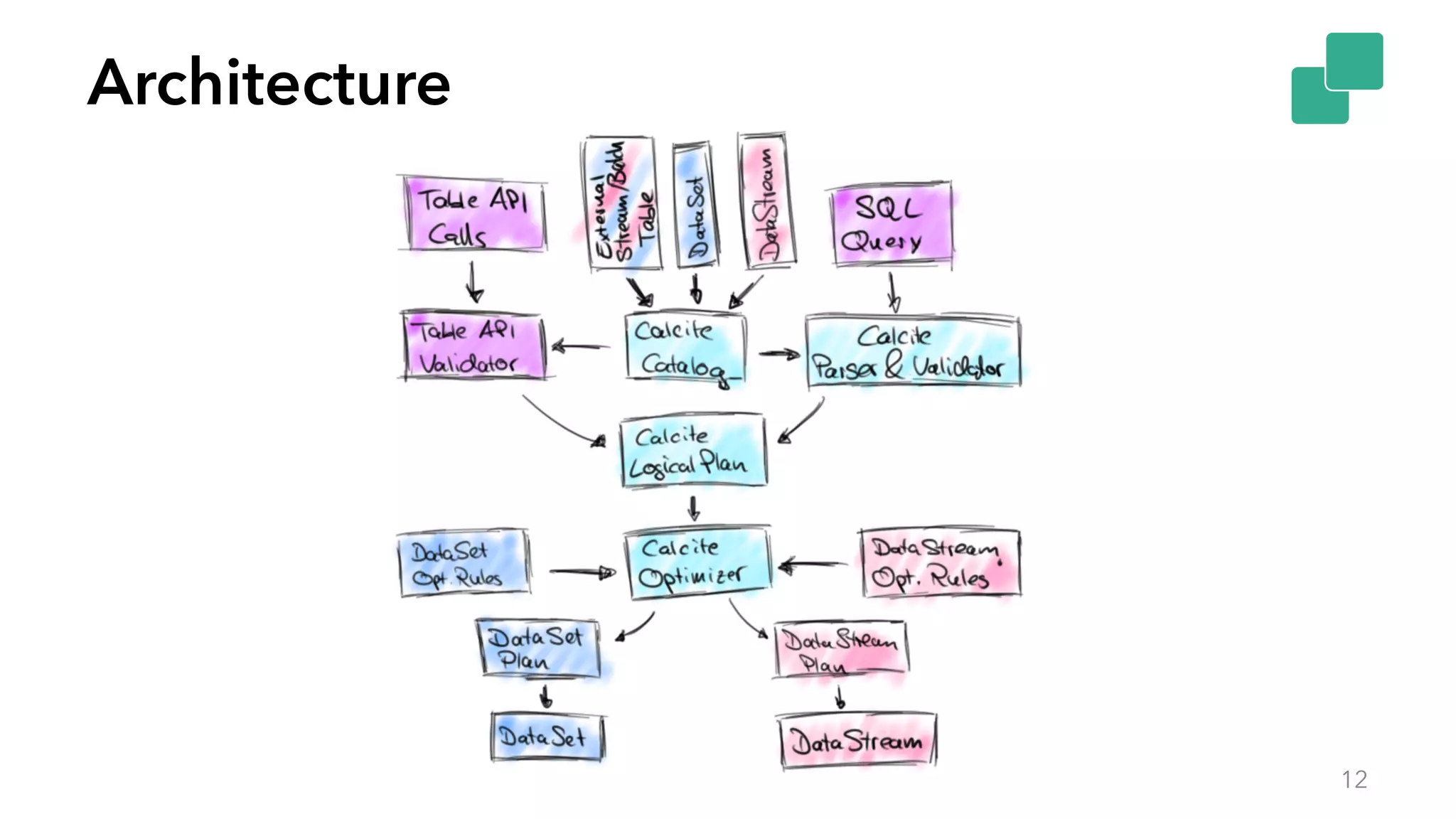

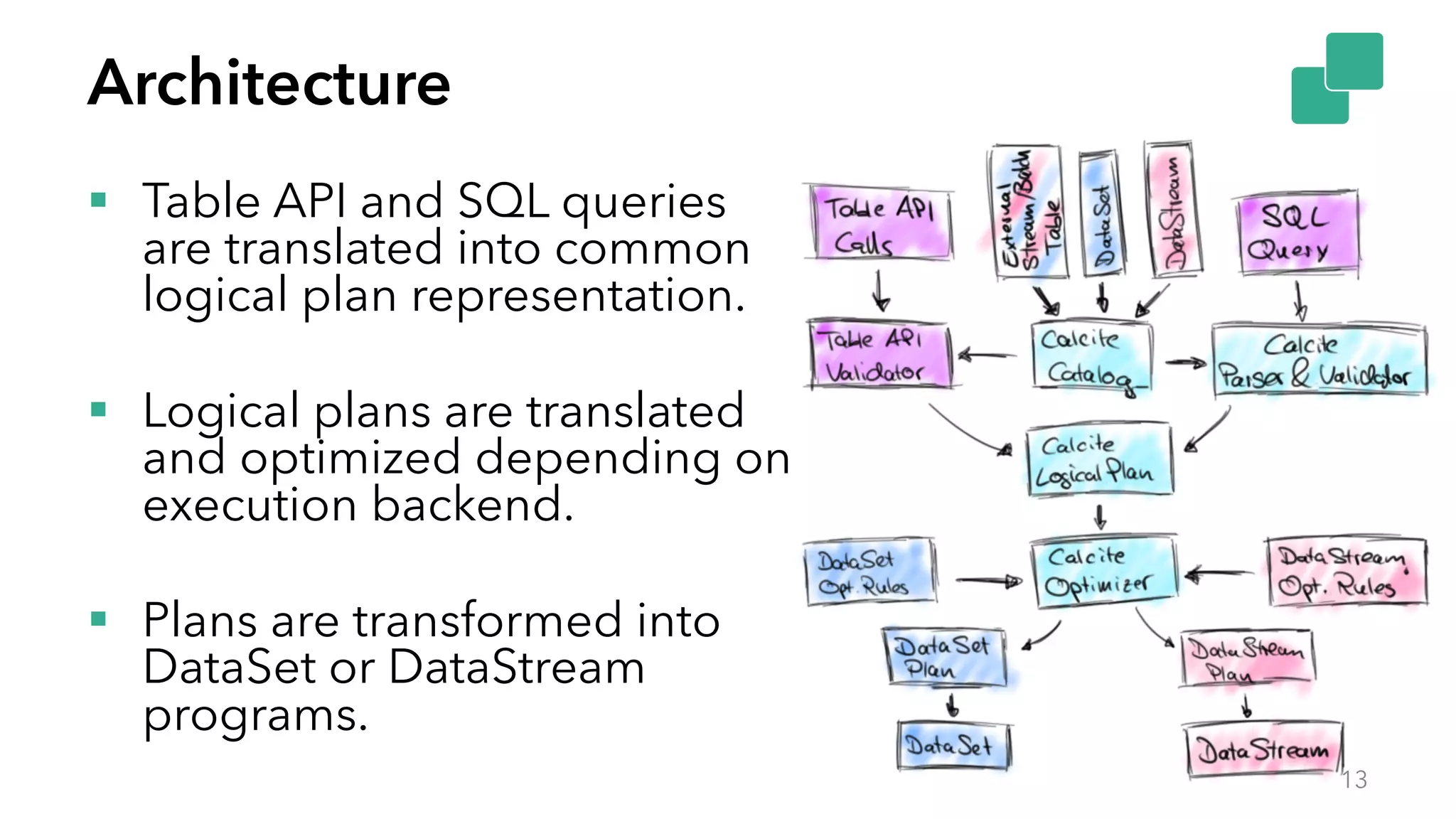

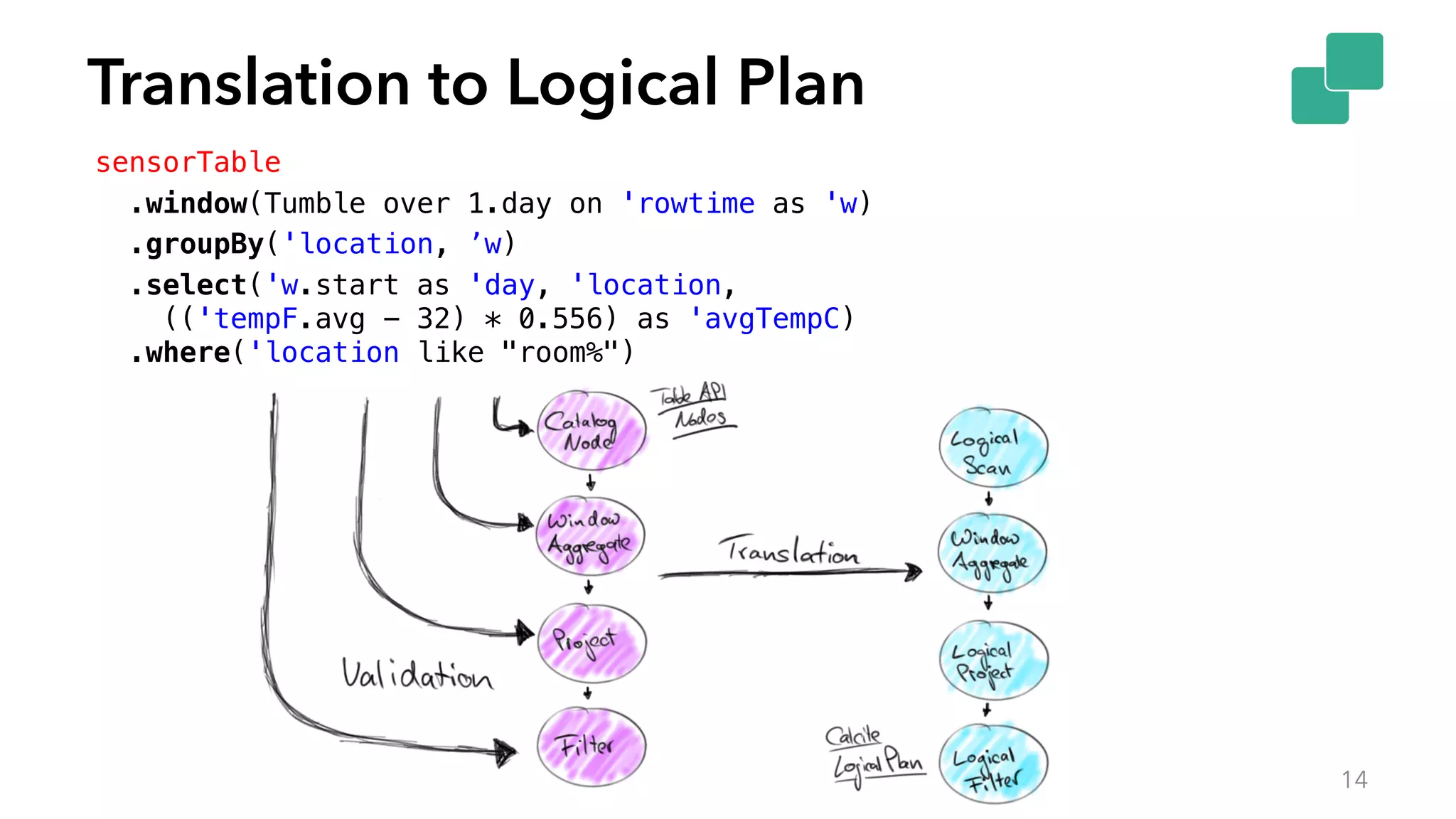

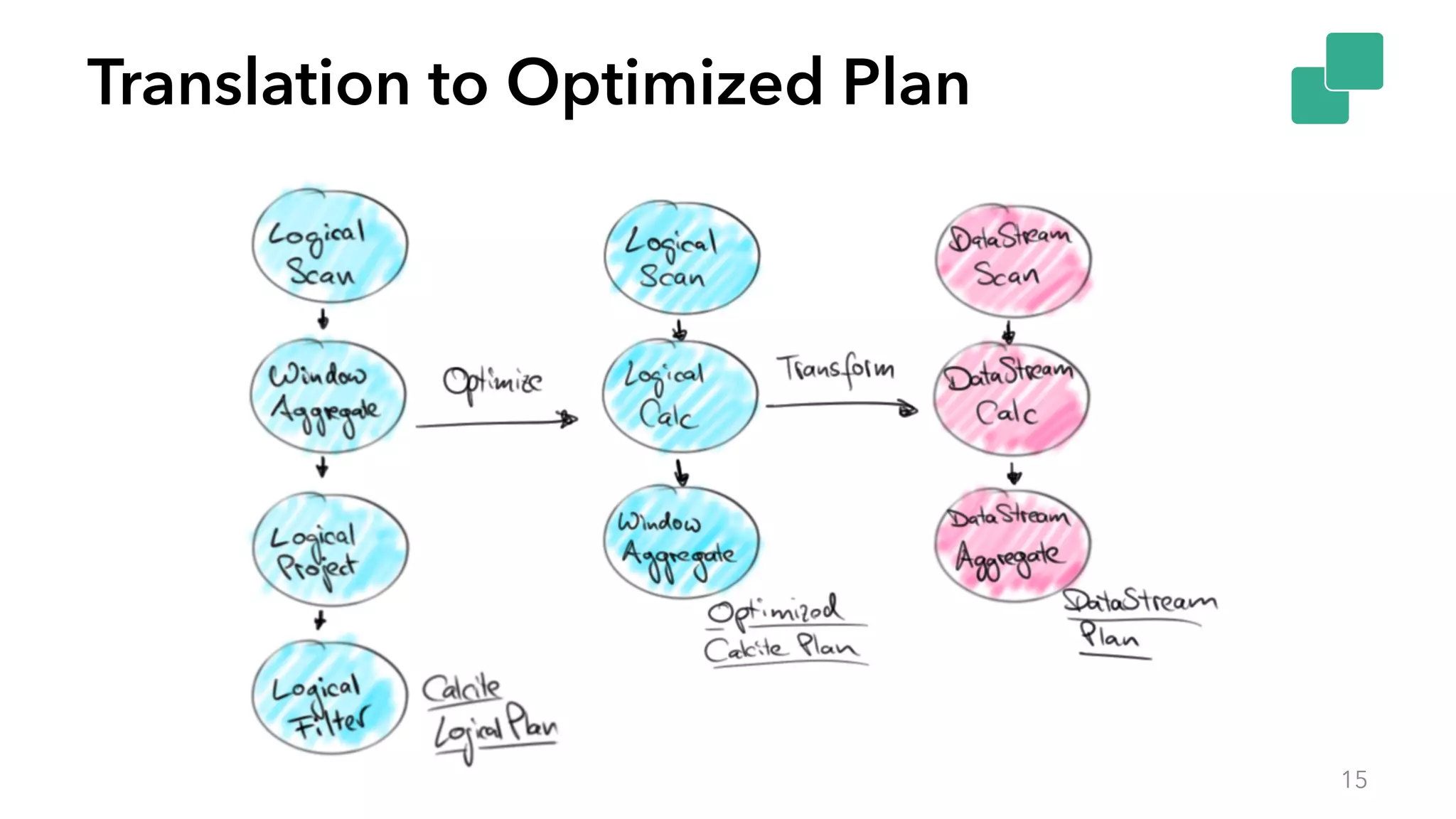

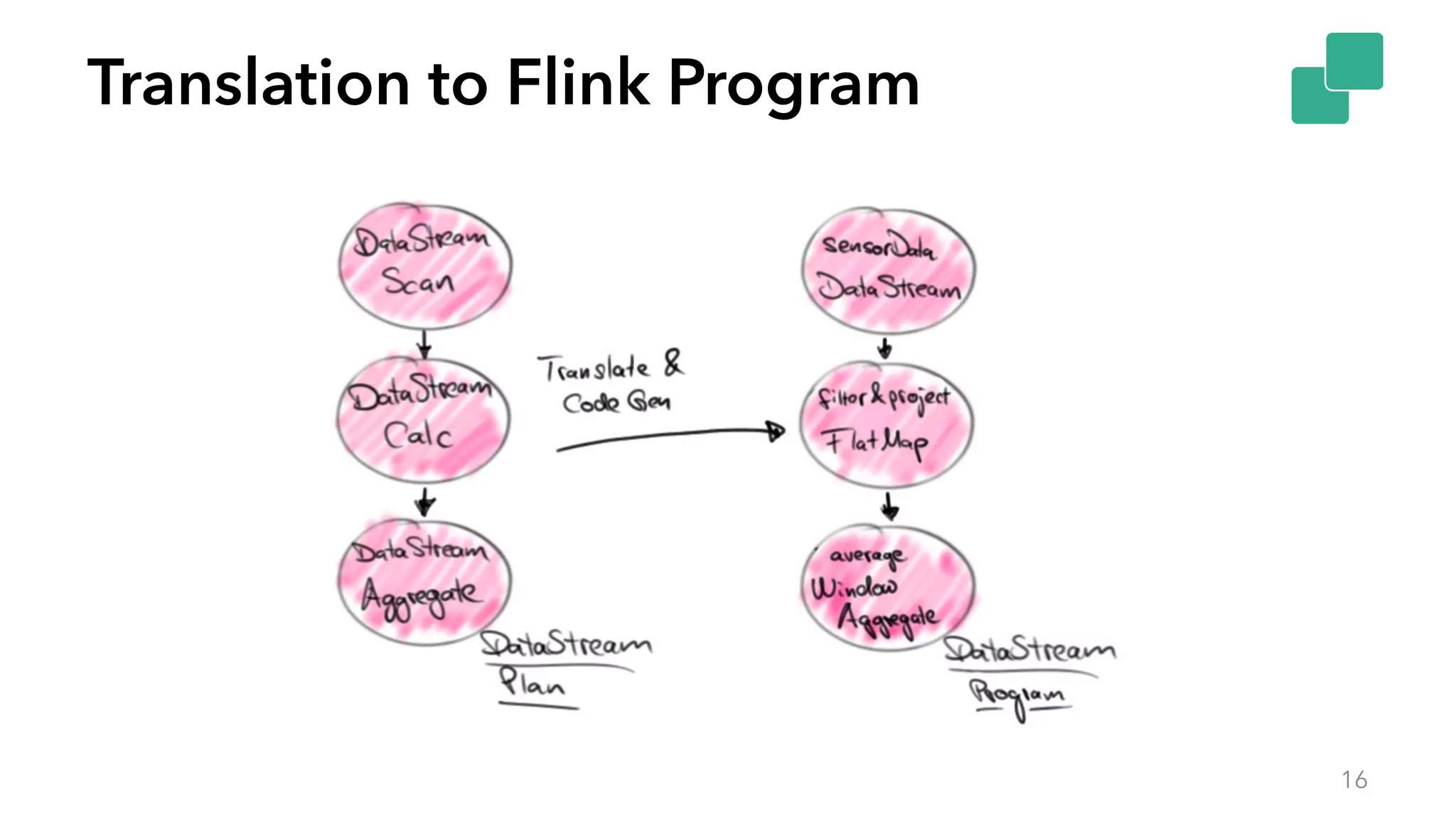

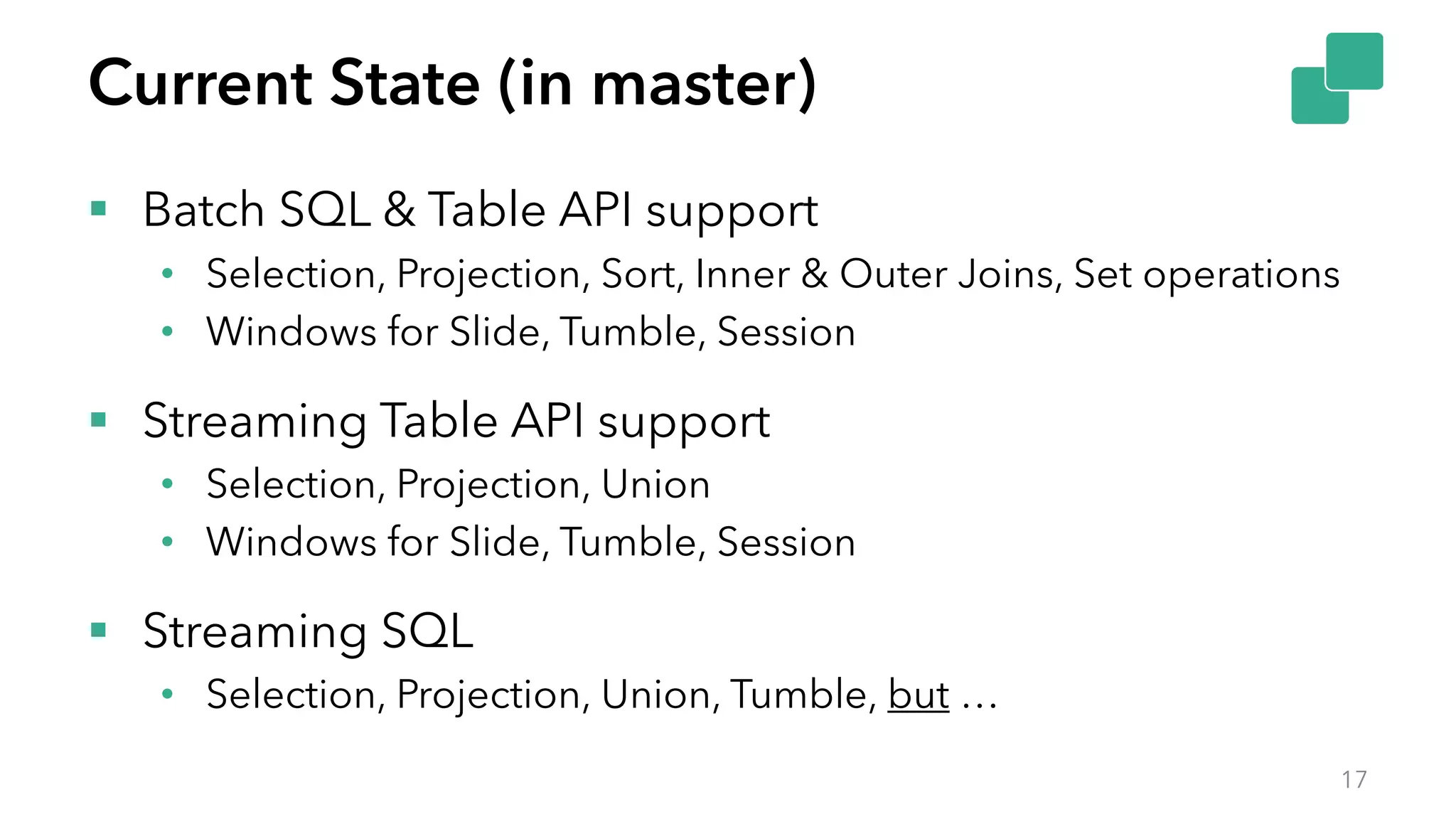

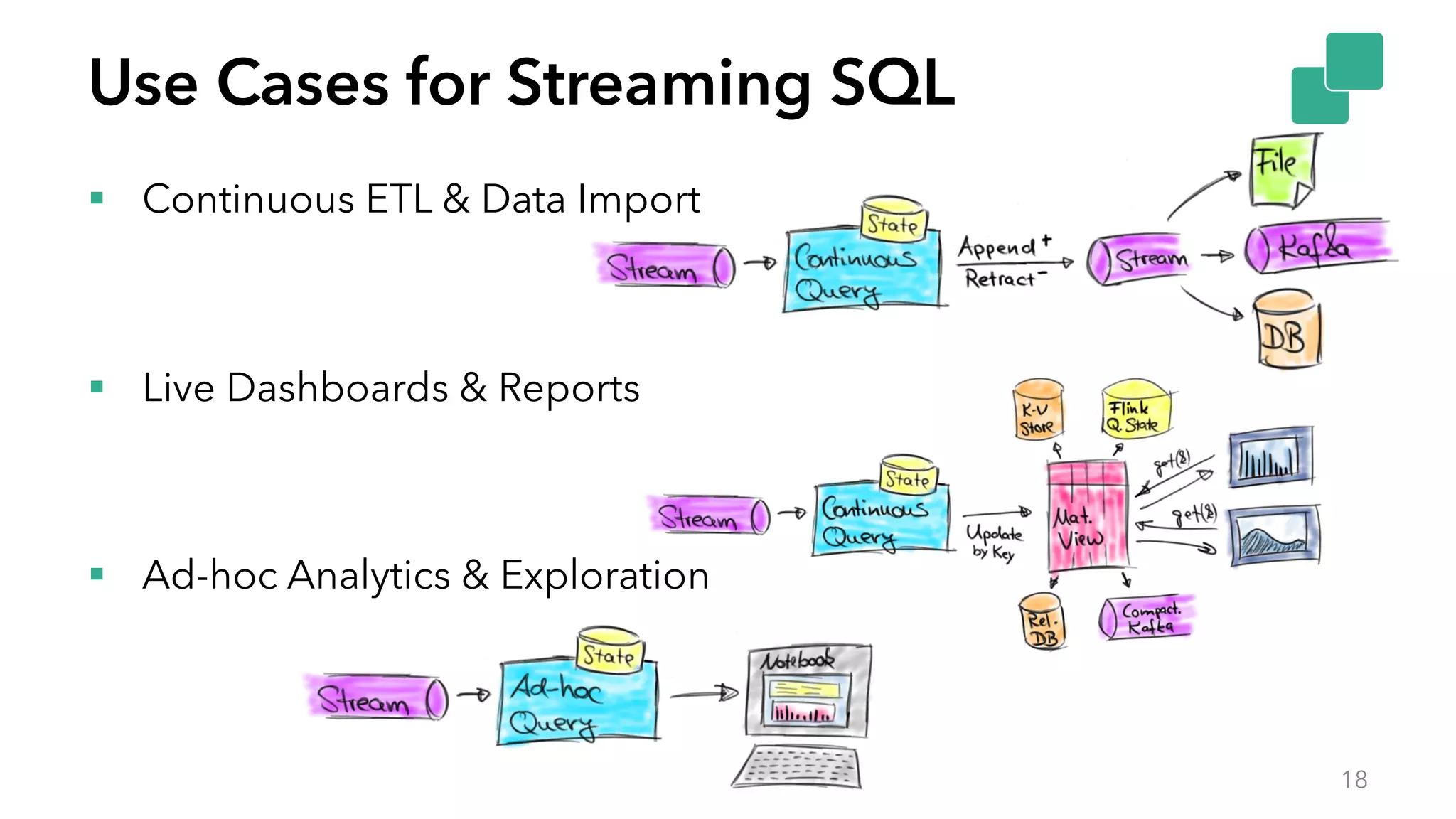

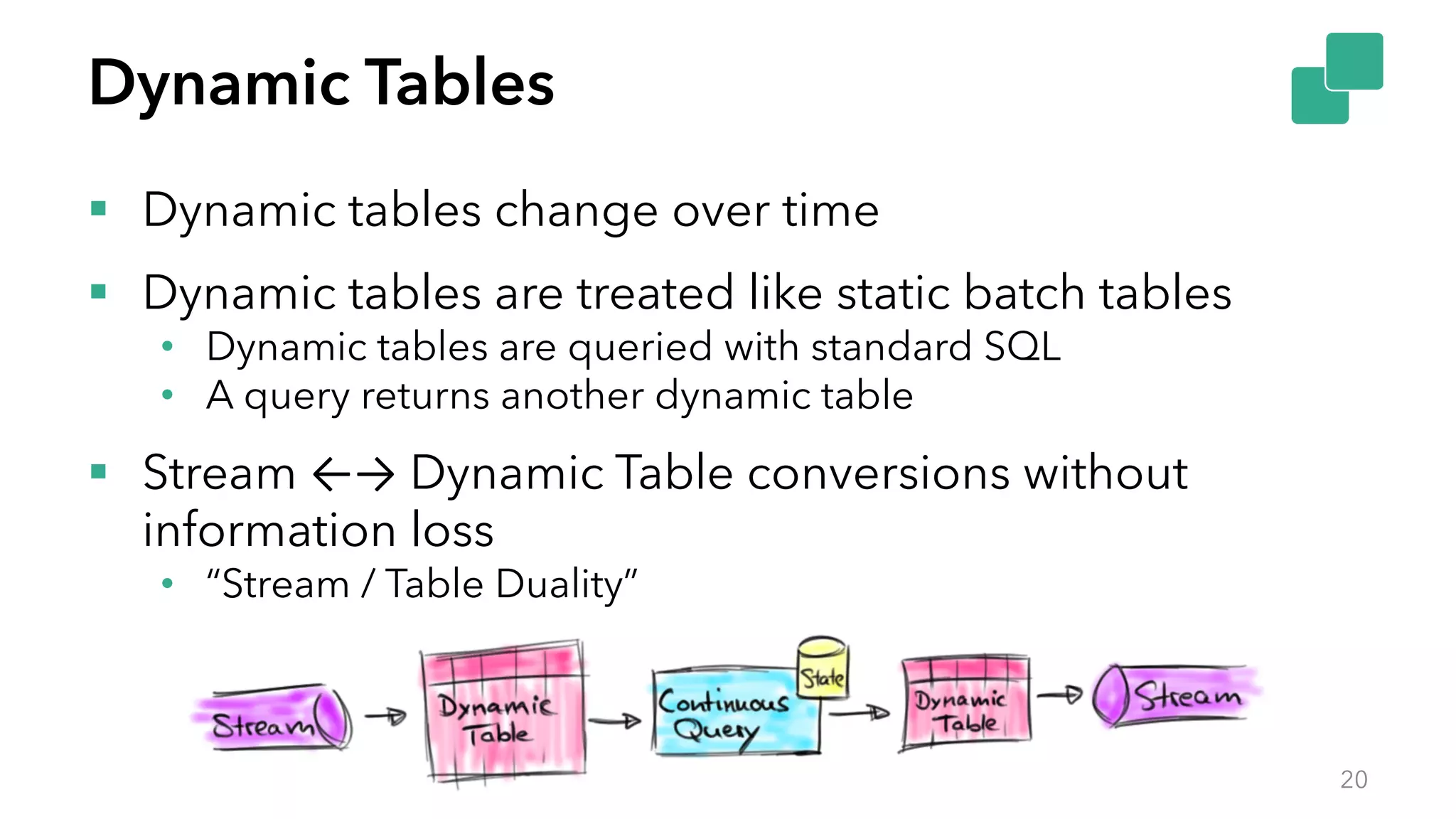

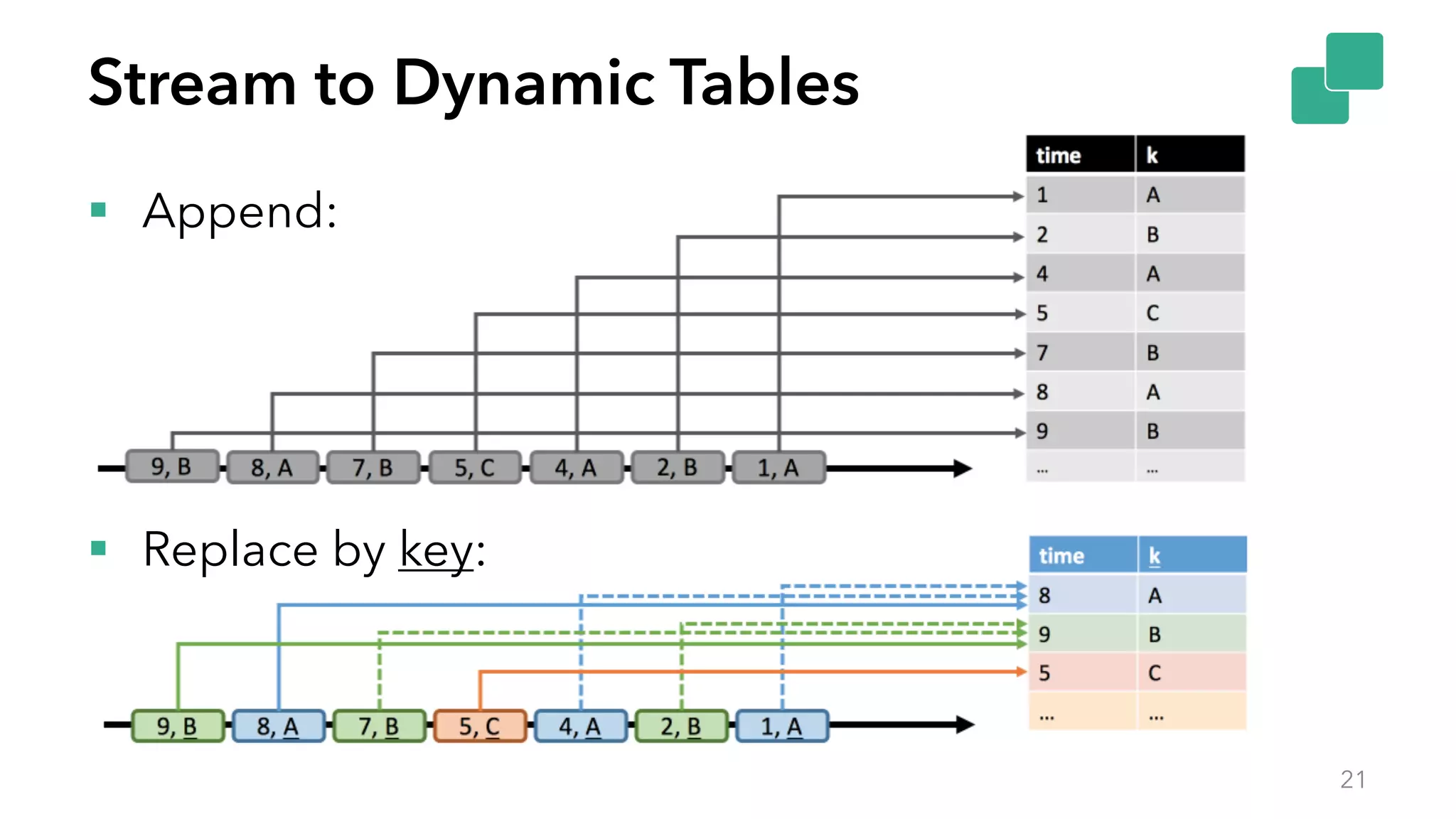

This presentation covers Apache Flink's Table API and SQL, two relational APIs that unify batch and stream processing. It argues that a relational API makes stream processing much easier to use, and it shows how the Table API and SQL express the same query over both bounded (batch) and unbounded (streaming) data. It also introduces dynamic tables, the concept behind continuous queries on streams, and points to ongoing community contributions that extend Flink's relational capabilities.

![Table API Example: val sensorData: DataStream[(String, Long, Double)] = ??? // convert DataStream into Table val sensorTable: Table = sensorData .toTable(tableEnv, 'location, 'time, 'tempF) // define query on Table val avgTempCTable: Table = sensorTable .window(Tumble over 1.day on 'rowtime as 'w) .groupBy('location, 'w) .select('w.start as 'day, 'location, (('tempF.avg - 32) * 0.556) as 'avgTempC) .where('location like "room%")](https://image.slidesharecdn.com/timo-walther-tableapiandsql-170306091814/75/Apache-Flink-s-Table-SQL-API-unified-APIs-for-batch-and-stream-processing-9-2048.jpg)
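To make the semantics of the Table API query concrete, here is a plain-Scala sketch that computes the same result without Flink. It is not Flink's API. It assumes the `Long` field is an epoch-millisecond event timestamp standing in for `'rowtime`, and the names `TumblingAvgSketch`, `windowStart`, and `avgTempCPerDay` are hypothetical. Each reading falls into exactly one 1-day tumbling window; rows are grouped by location and window, averaged, converted to Celsius, and finally filtered on the location prefix.

```scala
// Plain-Scala sketch (no Flink dependency) of what the Table API query computes.
// Assumption: the Long field is an event timestamp in epoch milliseconds and
// stands in for the 'rowtime attribute used by the slide's window.
object TumblingAvgSketch {
  val DayMillis: Long = 24L * 60 * 60 * 1000

  // (location, time, tempF), mirroring DataStream[(String, Long, Double)]
  type Reading = (String, Long, Double)

  // Start of the 1-day tumbling window containing `time` (plays the role of 'w.start).
  def windowStart(time: Long): Long = time - Math.floorMod(time, DayMillis)

  // Returns (day, location, avgTempC), one row per (location, window).
  def avgTempCPerDay(readings: Seq[Reading]): Seq[(Long, String, Double)] =
    readings
      .groupBy { case (location, time, _) => (location, windowStart(time)) }
      .toSeq
      .map { case ((location, day), rows) =>
        val avgF = rows.map(_._3).sum / rows.size // 'tempF.avg
        (day, location, (avgF - 32) * 0.556)      // convert the average to Celsius
      }
      // .where('location like "room%"): keep locations starting with "room"
      .filter { case (_, location, _) => location.startsWith("room") }
      .sortBy { case (day, location, _) => (day, location) }
}
```

For example, two `room1` readings of 50 °F and 86 °F on the same day average to 68 °F, which the query reports as (68 - 32) * 0.556 ≈ 20.0 °C for that day.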

![SQL Example: val sensorData: DataStream[(String, Long, Double)] = ??? // register DataStream tableEnv.registerDataStream("sensorData", sensorData, 'location, 'time, 'tempF) // query registered Table val avgTempCTable: Table = tableEnv.sql(""" SELECT FLOOR(rowtime() TO DAY) AS day, location, AVG((tempF - 32) * 0.556) AS avgTempC FROM sensorData WHERE location LIKE 'room%' GROUP BY location, FLOOR(rowtime() TO DAY) """)](https://image.slidesharecdn.com/timo-walther-tableapiandsql-170306091814/75/Apache-Flink-s-Table-SQL-API-unified-APIs-for-batch-and-stream-processing-10-2048.jpg)
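The SQL query applies `WHERE` before `GROUP BY`, whereas the Table API example filters with `.where` after `.select`. Because `location` is a grouping key, both orders keep exactly the same groups. The plain-Scala sketch below checks this; it is not Flink code, `floorToDay` is a hypothetical stand-in for `FLOOR(rowtime() TO DAY)` that assumes epoch-millisecond UTC timestamps, and `SqlOrderSketch` and its methods are illustrative names.

```scala
// Plain-Scala sketch: filtering on a grouping key before or after aggregation
// yields the same result. Not Flink's API; names are hypothetical.
object SqlOrderSketch {
  val DayMillis: Long = 24L * 60 * 60 * 1000
  type Reading = (String, Long, Double) // (location, time, tempF)

  // Stand-in for FLOOR(rowtime() TO DAY), assuming epoch-millisecond UTC timestamps.
  def floorToDay(time: Long): Long = time - Math.floorMod(time, DayMillis)

  // GROUP BY location, day with AVG((tempF - 32) * 0.556), as in the SQL query.
  private def aggregate(rows: Seq[Reading]): Map[(String, Long), Double] =
    rows
      .groupBy { case (loc, t, _) => (loc, floorToDay(t)) }
      .map { case (key, group) => key -> group.map(r => (r._3 - 32) * 0.556).sum / group.size }

  // SQL order: WHERE first, then GROUP BY and aggregate.
  def filterThenGroup(rows: Seq[Reading]): Map[(String, Long), Double] =
    aggregate(rows.filter(_._1.startsWith("room")))

  // Table API order on the slide: aggregate first, then filter the result rows.
  def groupThenFilter(rows: Seq[Reading]): Map[(String, Long), Double] =
    aggregate(rows).filter { case ((loc, _), _) => loc.startsWith("room") }
}
```

The two examples also differ in where the Celsius conversion happens: SQL averages converted values, `AVG((tempF - 32) * 0.556)`, while the Table API converts the average, `(tempF.avg - 32) * 0.556`. Since the mean is linear, both agree up to floating-point rounding.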

![Architecture: 2 APIs [SQL, Table API] × 2 backends [DataStream, DataSet] = 4 different translation paths?](https://image.slidesharecdn.com/timo-walther-tableapiandsql-170306091814/75/Apache-Flink-s-Table-SQL-API-unified-APIs-for-batch-and-stream-processing-11-2048.jpg)
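Flink avoids separate end-to-end translation paths: both the Table API and SQL are translated into a common logical plan based on Apache Calcite, which is optimized once and then translated into either a DataStream or a DataSet program. The toy model below illustrates only that idea. `SharedPlanSketch`, `Plan`, `TableLike`, `fromSql`, `toStreamProgram`, and `toBatchProgram` are hypothetical names, not Flink or Calcite classes, and the "SQL" front end accepts one fixed query shape without any real parsing.

```scala
// Toy model (hypothetical names, not Flink or Calcite classes): both front ends
// build the same logical plan, and each back end only has to translate that plan.
object SharedPlanSketch {
  sealed trait Plan
  final case class Scan(table: String) extends Plan
  final case class Filter(input: Plan, predicate: String) extends Plan
  final case class Project(input: Plan, fields: List[String]) extends Plan

  // Front end 1: a fluent, Table-API-like builder.
  final case class TableLike(plan: Plan) {
    def where(pred: String): TableLike = TableLike(Filter(plan, pred))
    def select(fields: String*): TableLike = TableLike(Project(plan, fields.toList))
  }

  // Front end 2: a drastically simplified "SQL" for the single shape
  // SELECT fields FROM table WHERE pred (assumption: no real parser).
  def fromSql(fields: List[String], table: String, pred: String): Plan =
    Project(Filter(Scan(table), pred), fields)

  // Back ends: each understands only the shared Plan type.
  def toStreamProgram(p: Plan): String = p match {
    case Scan(t)          => s"stream($t)"
    case Filter(in, pred) => s"${toStreamProgram(in)}.filter($pred)"
    case Project(in, fs)  => s"${toStreamProgram(in)}.map(${fs.mkString(", ")})"
  }

  def toBatchProgram(p: Plan): String = p match {
    case Scan(t)          => s"dataset($t)"
    case Filter(in, pred) => s"${toBatchProgram(in)}.filter($pred)"
    case Project(in, fs)  => s"${toBatchProgram(in)}.map(${fs.mkString(", ")})"
  }
}
```

In general, m front ends and n back ends need m + n translators around a shared plan instead of m × n end-to-end paths. With two of each the counts happen to coincide, so the main gain here is one shared optimizer and identical semantics for both APIs.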

![Querying Dynamic Tables: Dynamic tables change over time (A[t]: table A at time t). Dynamic tables are queried with regular SQL; the result of a query changes as the input table changes (q(A[t]): evaluate query q on table A at time t). The query result is continuously updated as t progresses, similar to maintaining a materialized view, where t is the current event time.](https://image.slidesharecdn.com/timo-walther-tableapiandsql-170306091814/75/Apache-Flink-s-Table-SQL-API-unified-APIs-for-batch-and-stream-processing-22-2048.jpg)
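The materialized-view analogy can be sketched with a continuous `SELECT location, COUNT(*) FROM A GROUP BY location` over an append-only dynamic table A. This is a minimal plain-Scala sketch, not Flink's implementation. It assumes rows arrive in event-time order, so after the i-th arrival the table content A[t] is the first i rows. `DynamicTableSketch`, `evaluate`, `update`, and `run` are hypothetical names. The point is that updating the previous result with each new row always matches re-evaluating q(A[t]) from scratch.

```scala
// Sketch of a continuous query on an append-only dynamic table:
// q = SELECT location, COUNT(*) FROM A GROUP BY location.
// Assumption: rows arrive in event-time order, so A[t_i] = the first i rows.
object DynamicTableSketch {
  // Full re-evaluation: q(A[t]) on a snapshot of the table.
  def evaluate(snapshot: Seq[String]): Map[String, Long] =
    snapshot.groupBy(identity).map { case (loc, rows) => loc -> rows.size.toLong }

  // Incremental maintenance, like refreshing a materialized view:
  // apply one appended row to the previous result and report the changed result row.
  def update(result: Map[String, Long], row: String): (Map[String, Long], (String, Long)) = {
    val newCount = result.getOrElse(row, 0L) + 1
    (result.updated(row, newCount), row -> newCount)
  }

  // Run the continuous query over the input and collect every emitted change.
  def run(input: Seq[String]): (Map[String, Long], Seq[(String, Long)]) =
    input.foldLeft((Map.empty[String, Long], Vector.empty[(String, Long)])) {
      case ((result, changes), row) =>
        val (next, change) = update(result, row)
        (next, changes :+ change)
    }
}
```

For the input `room1, room2, room1`, the query emits `(room1, 1)`, `(room2, 1)`, and then `(room1, 2)`. The last change updates an existing result row instead of appending a new one, which is why a continuous query's result is a table that changes over time rather than a plain append-only stream.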