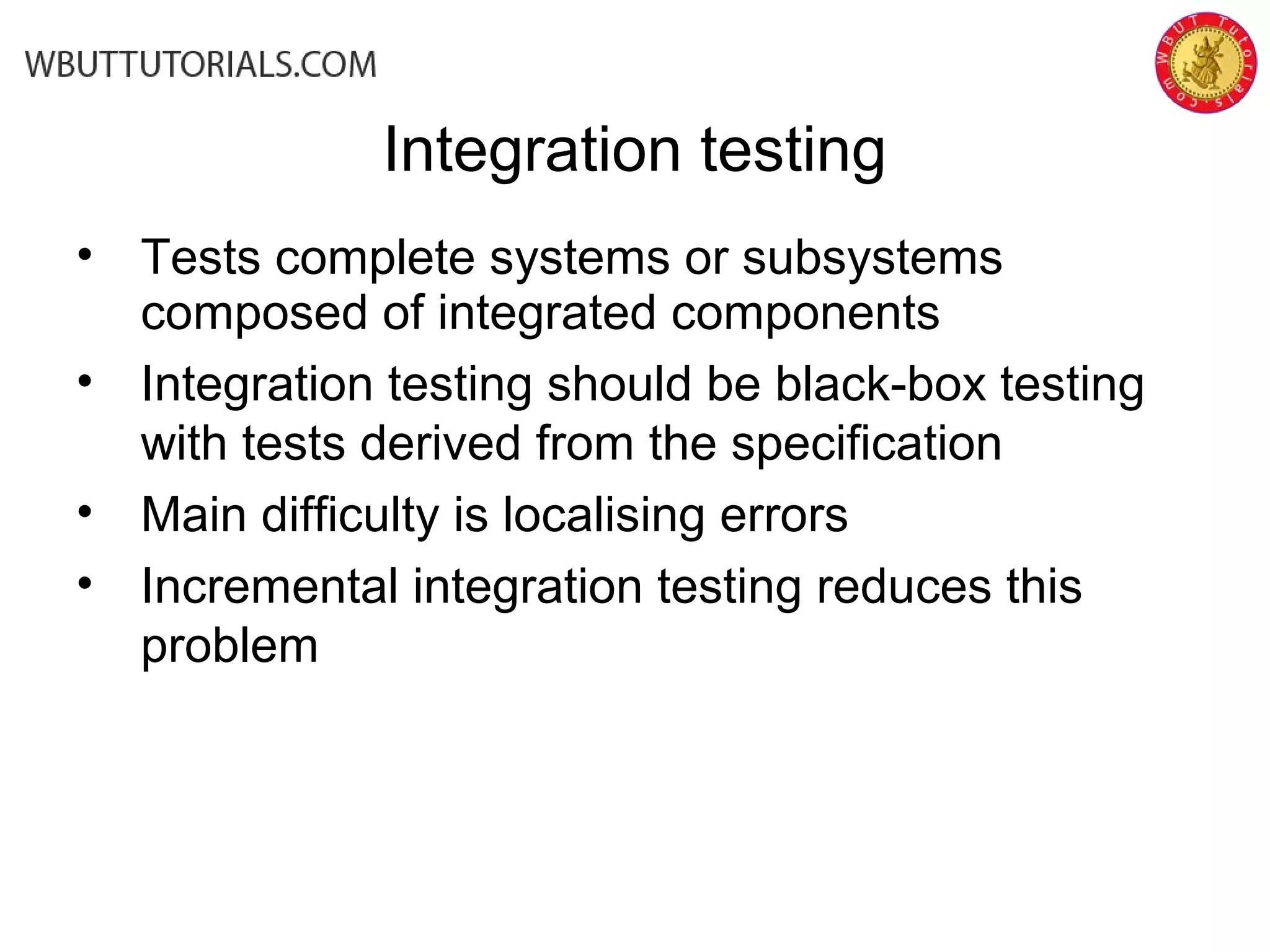

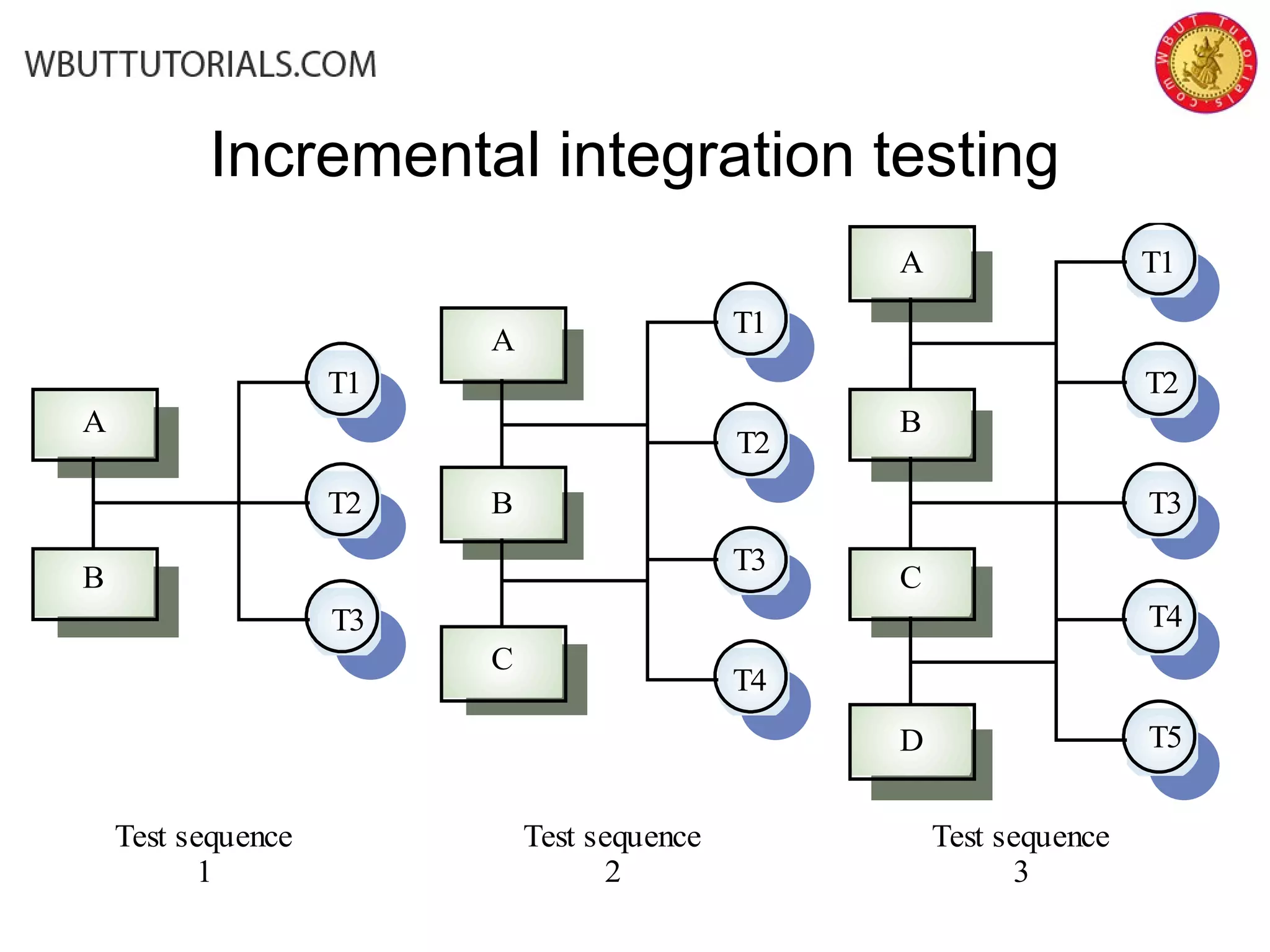

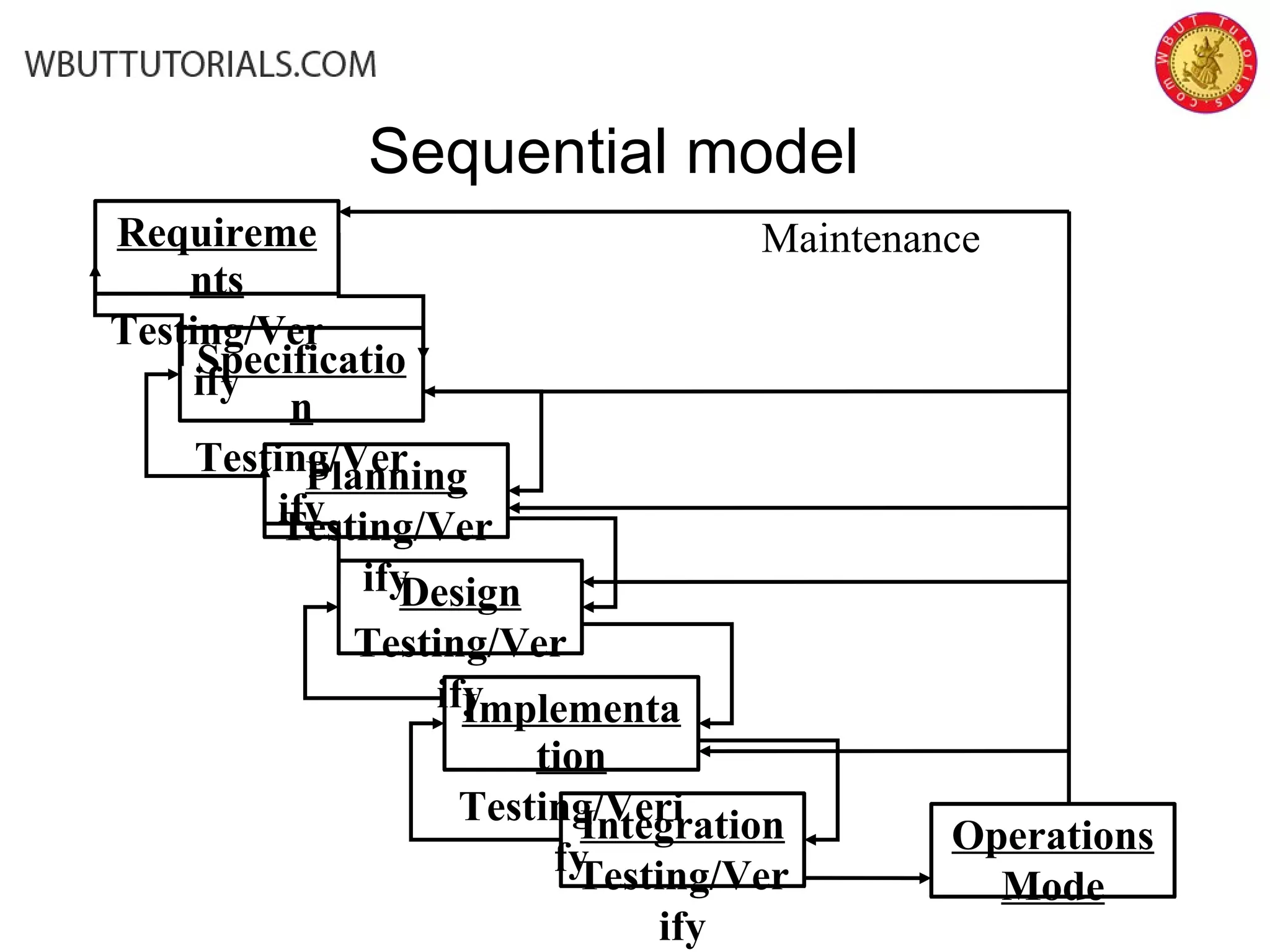

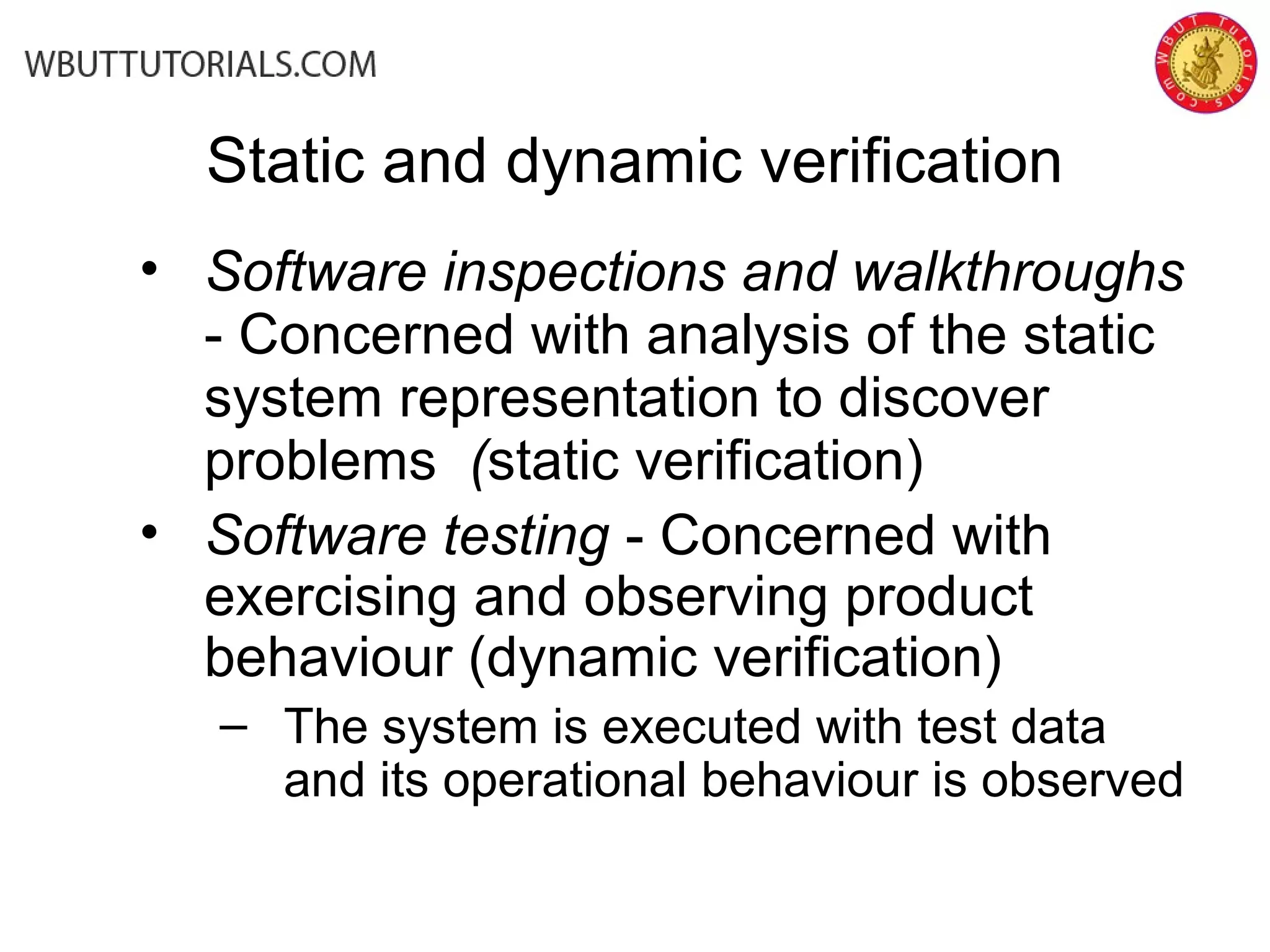

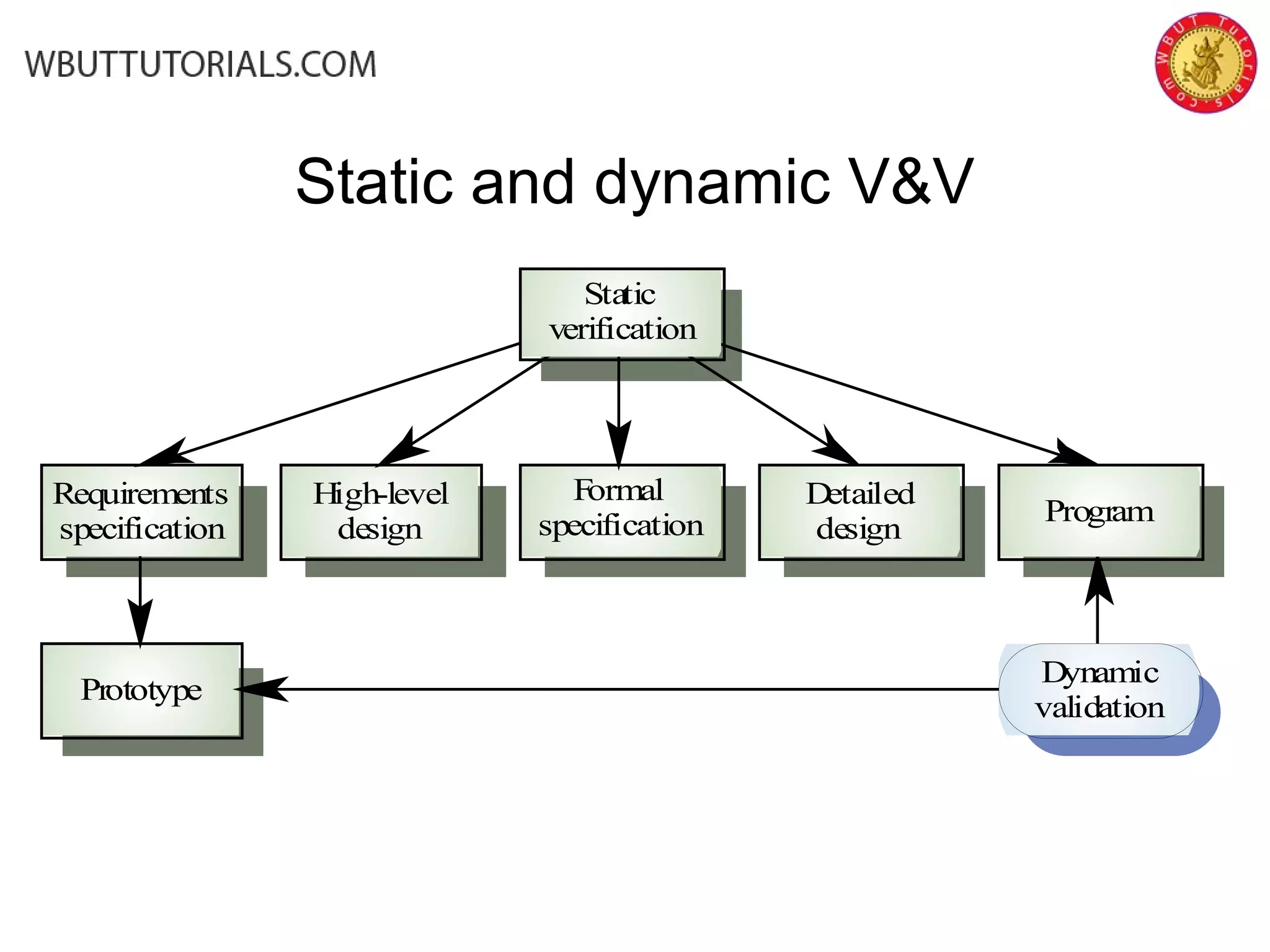

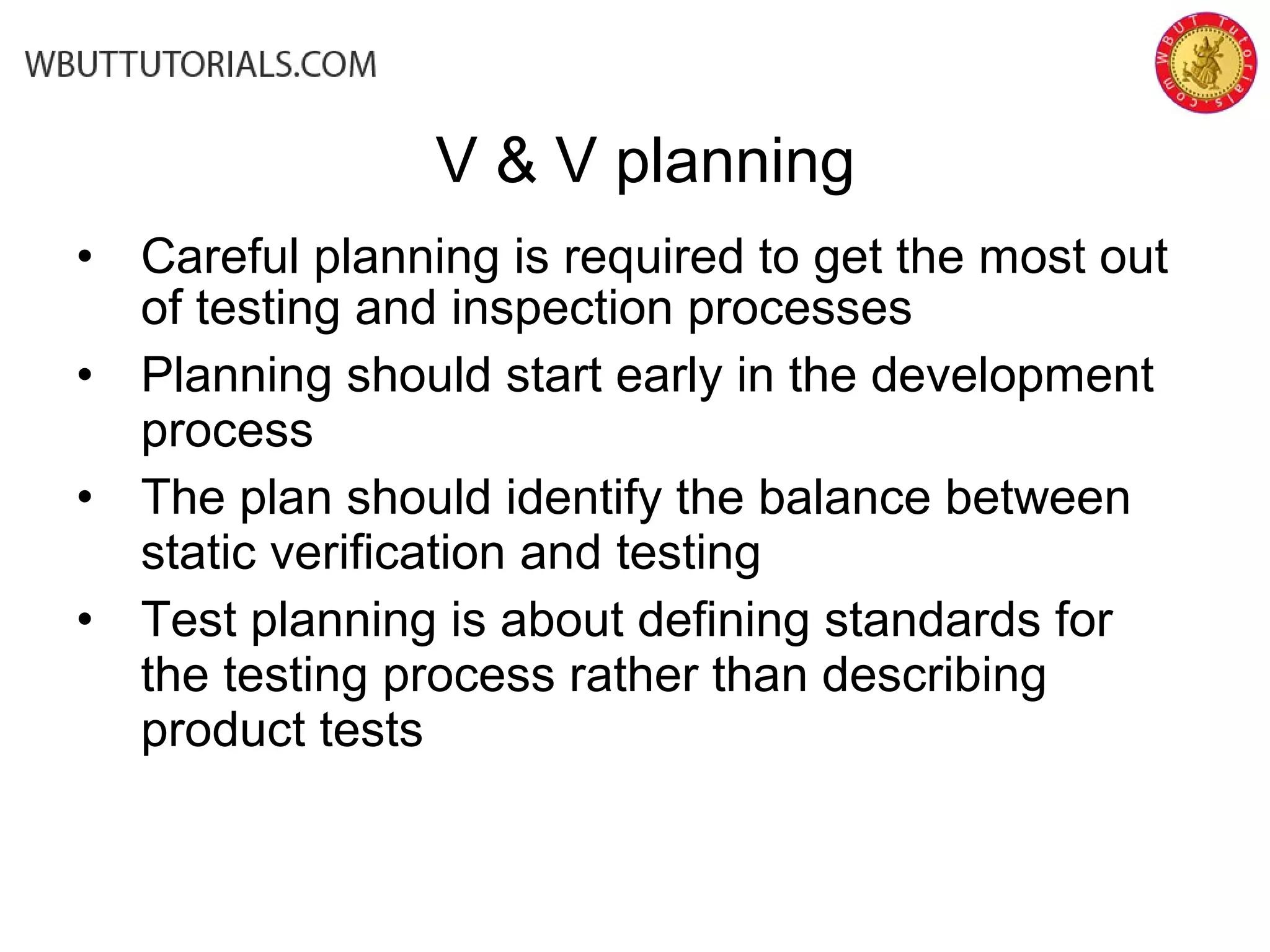

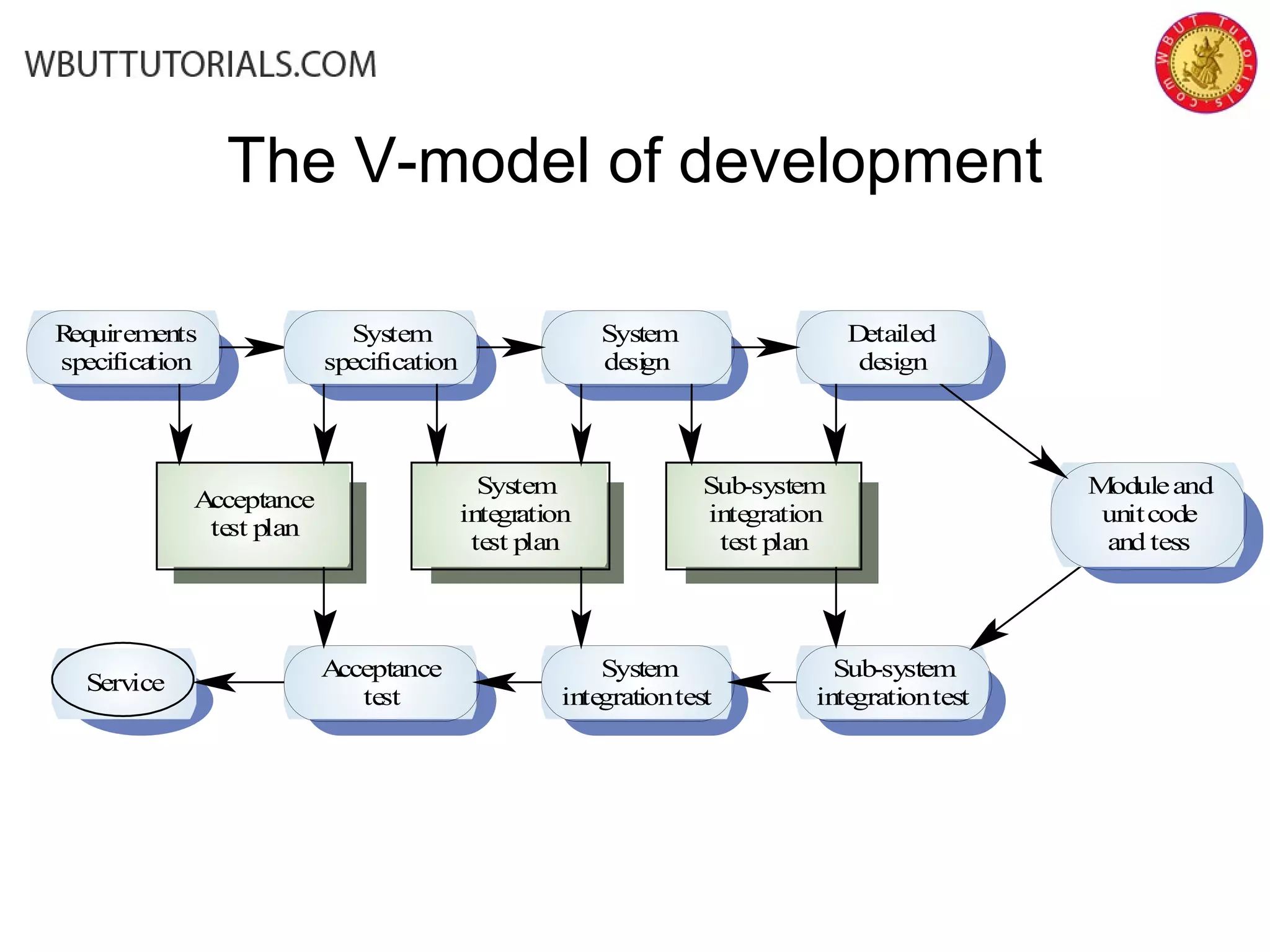

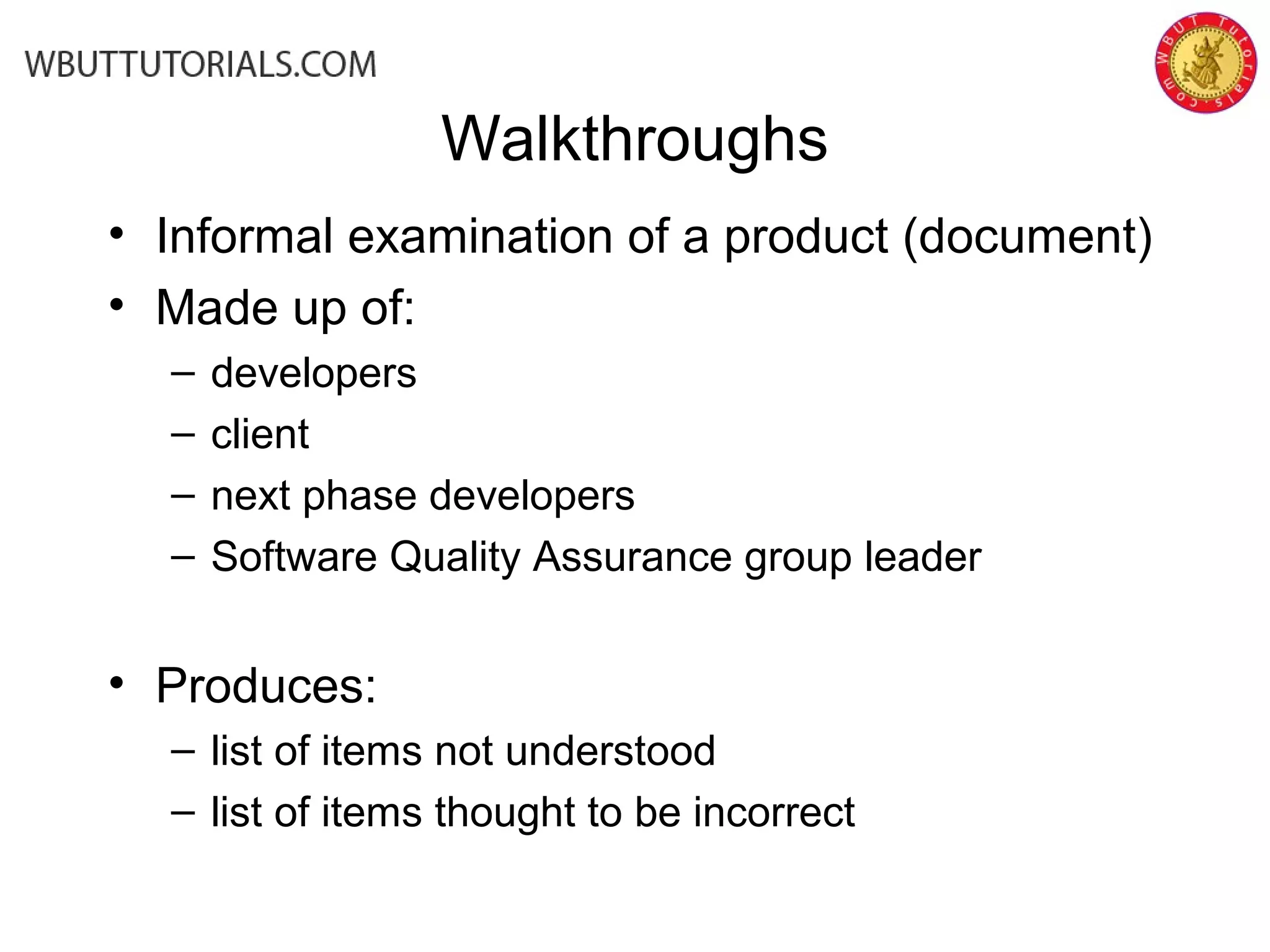

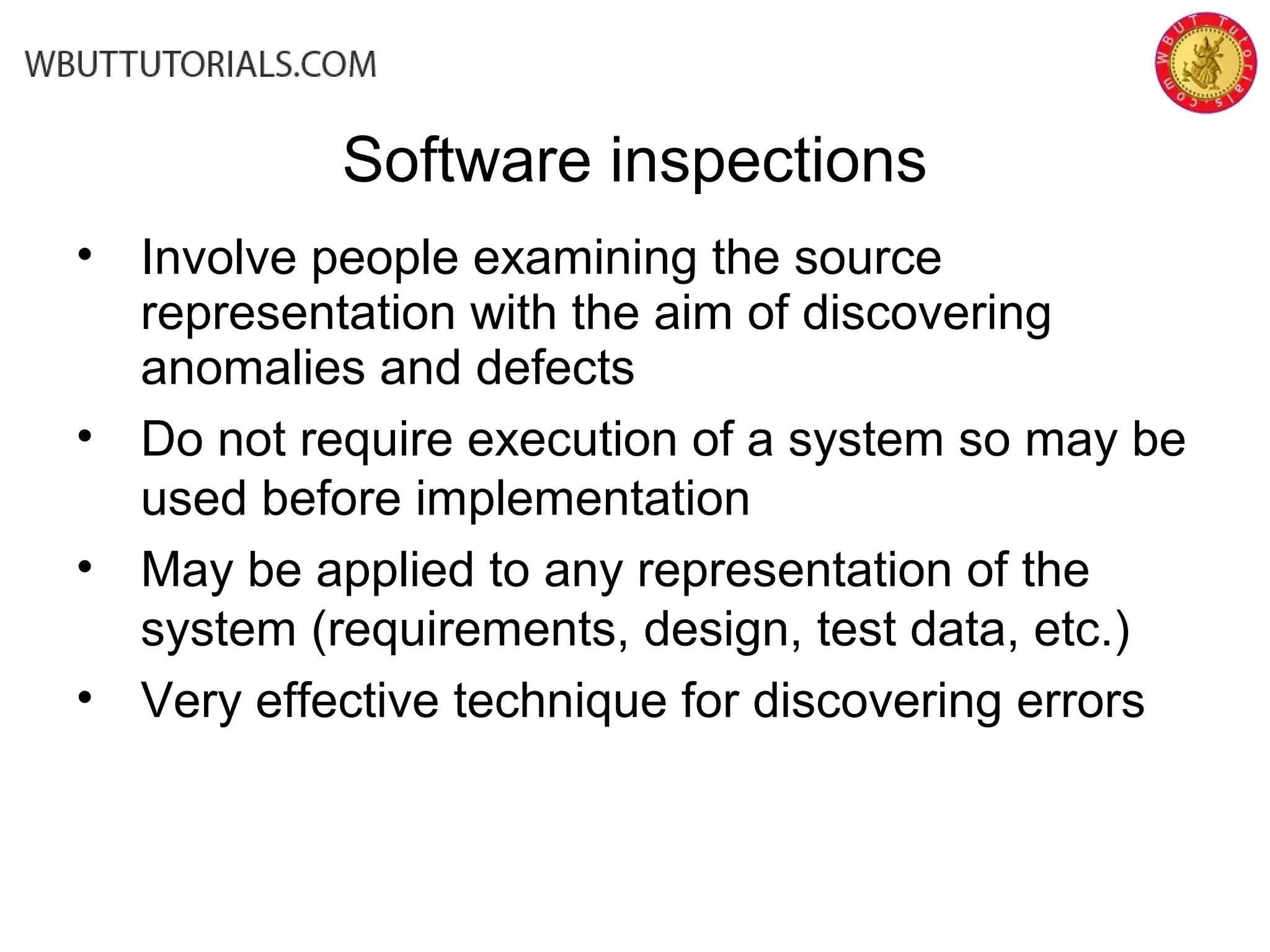

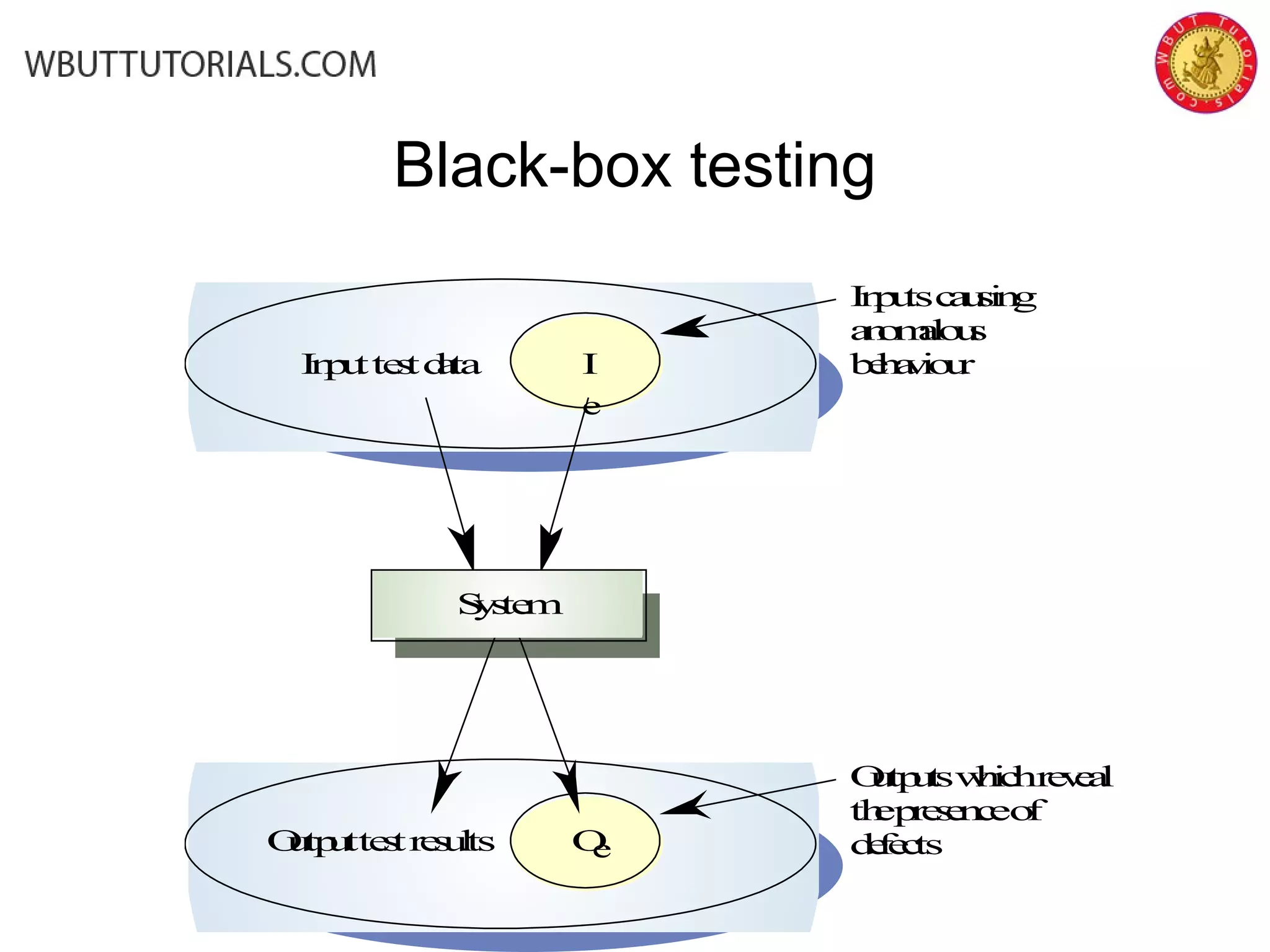

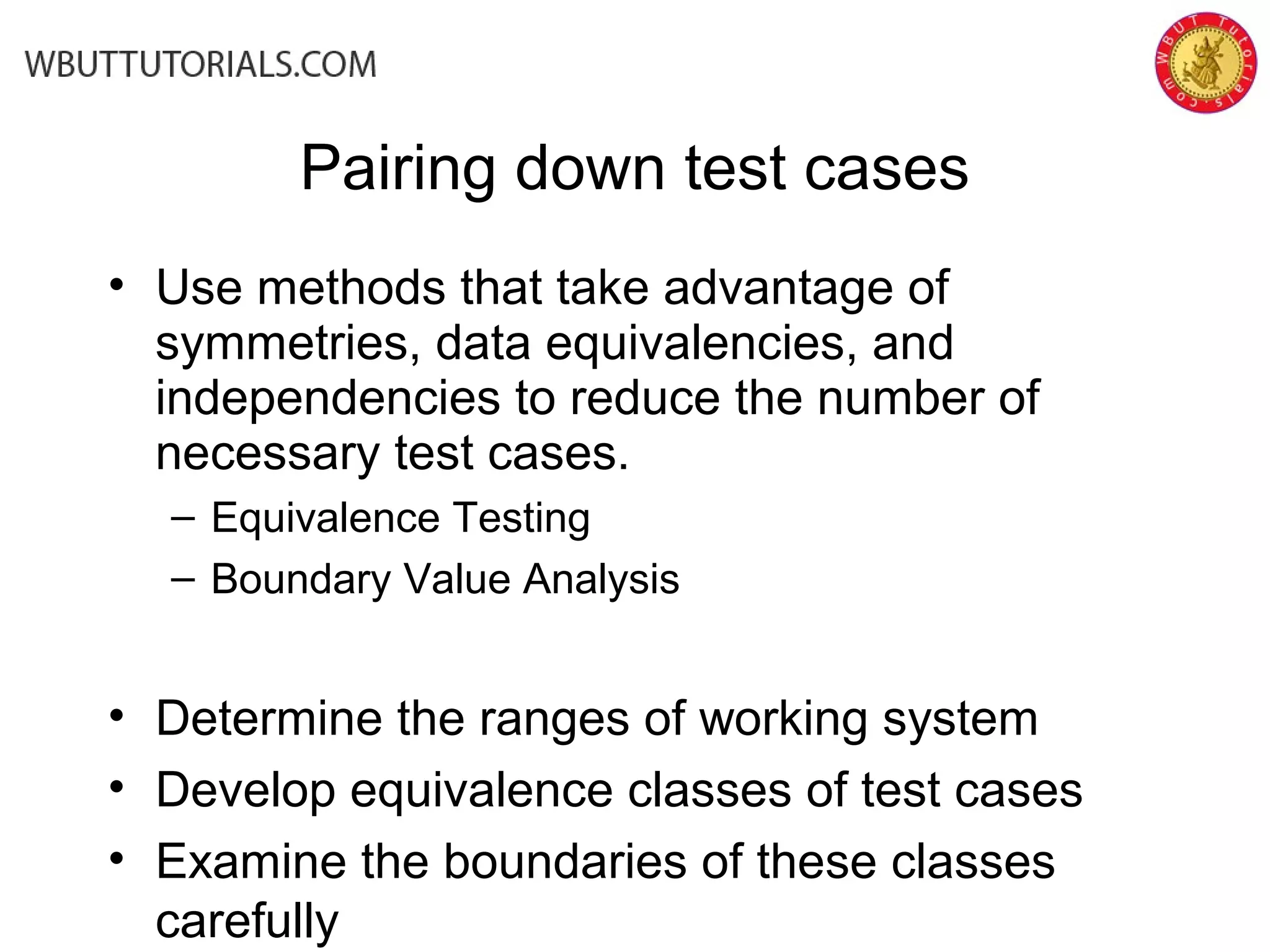

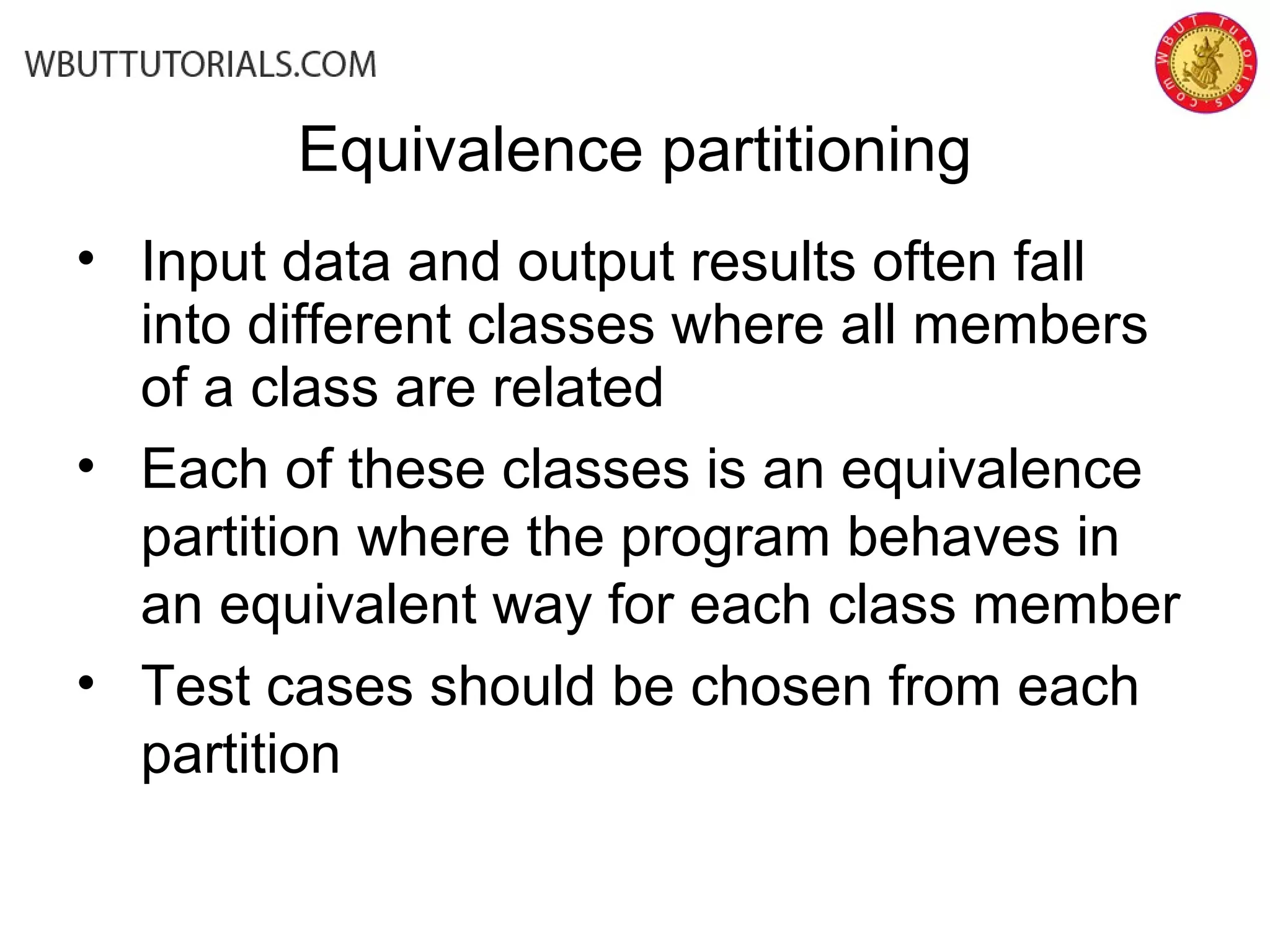

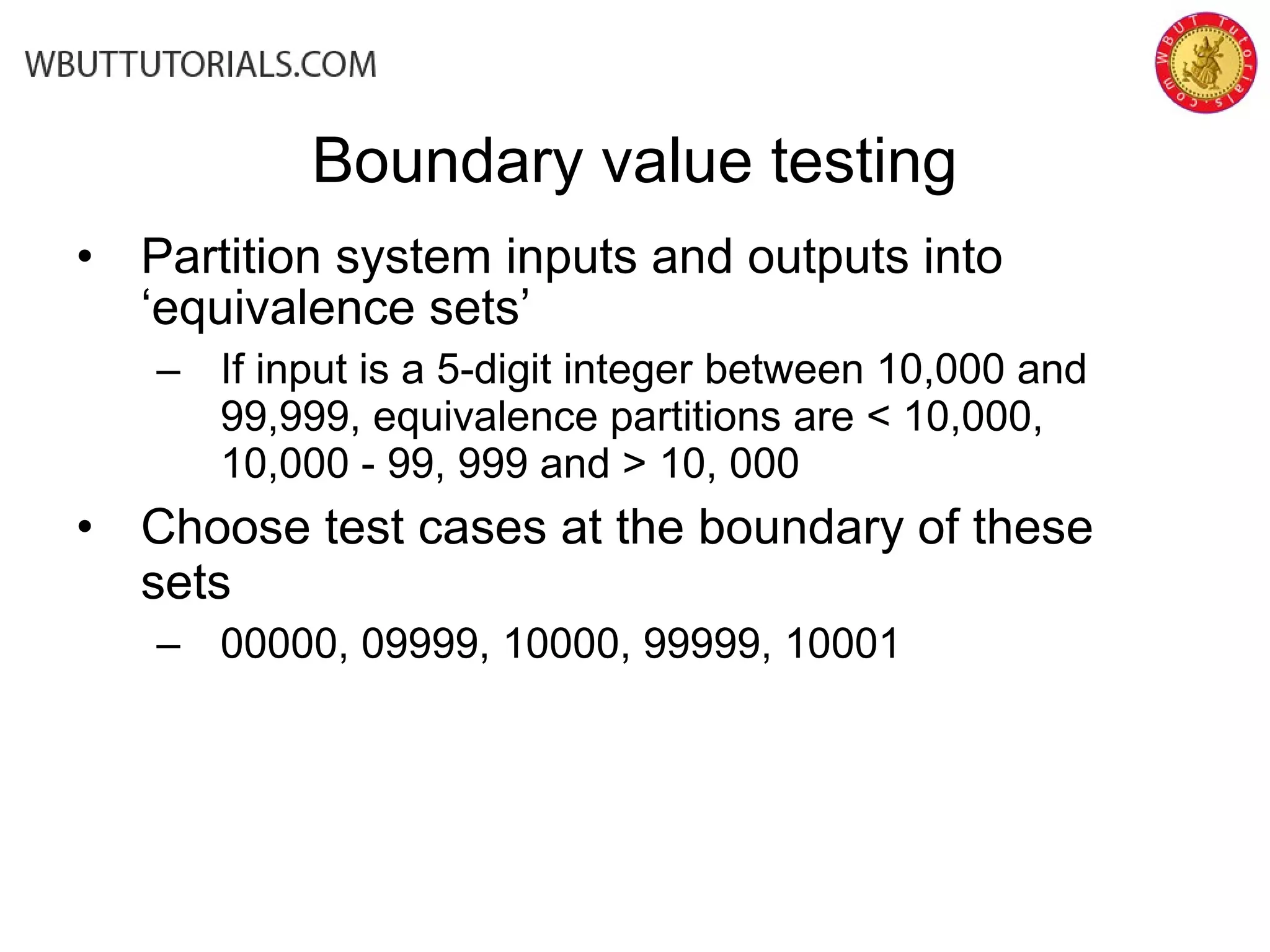

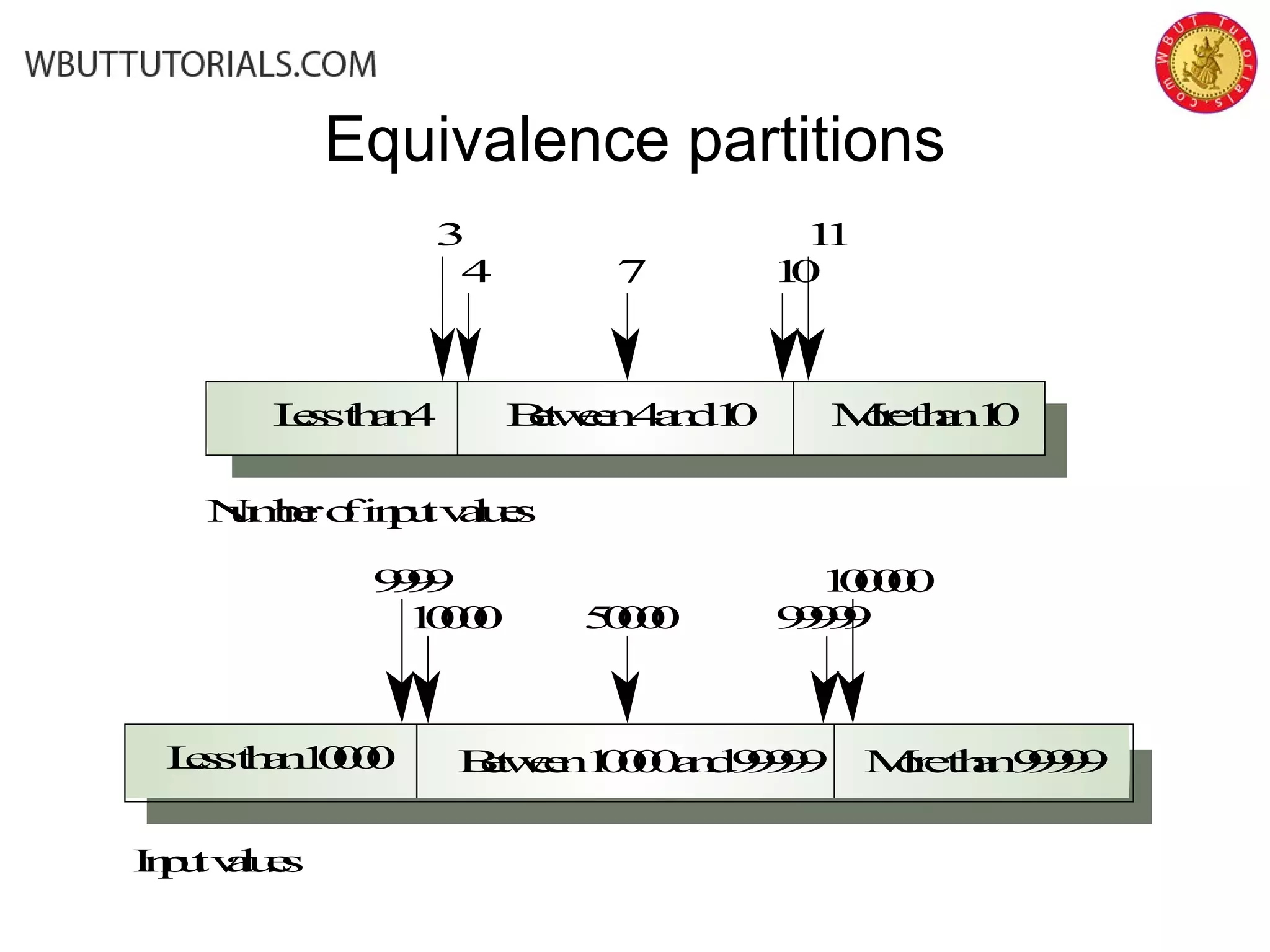

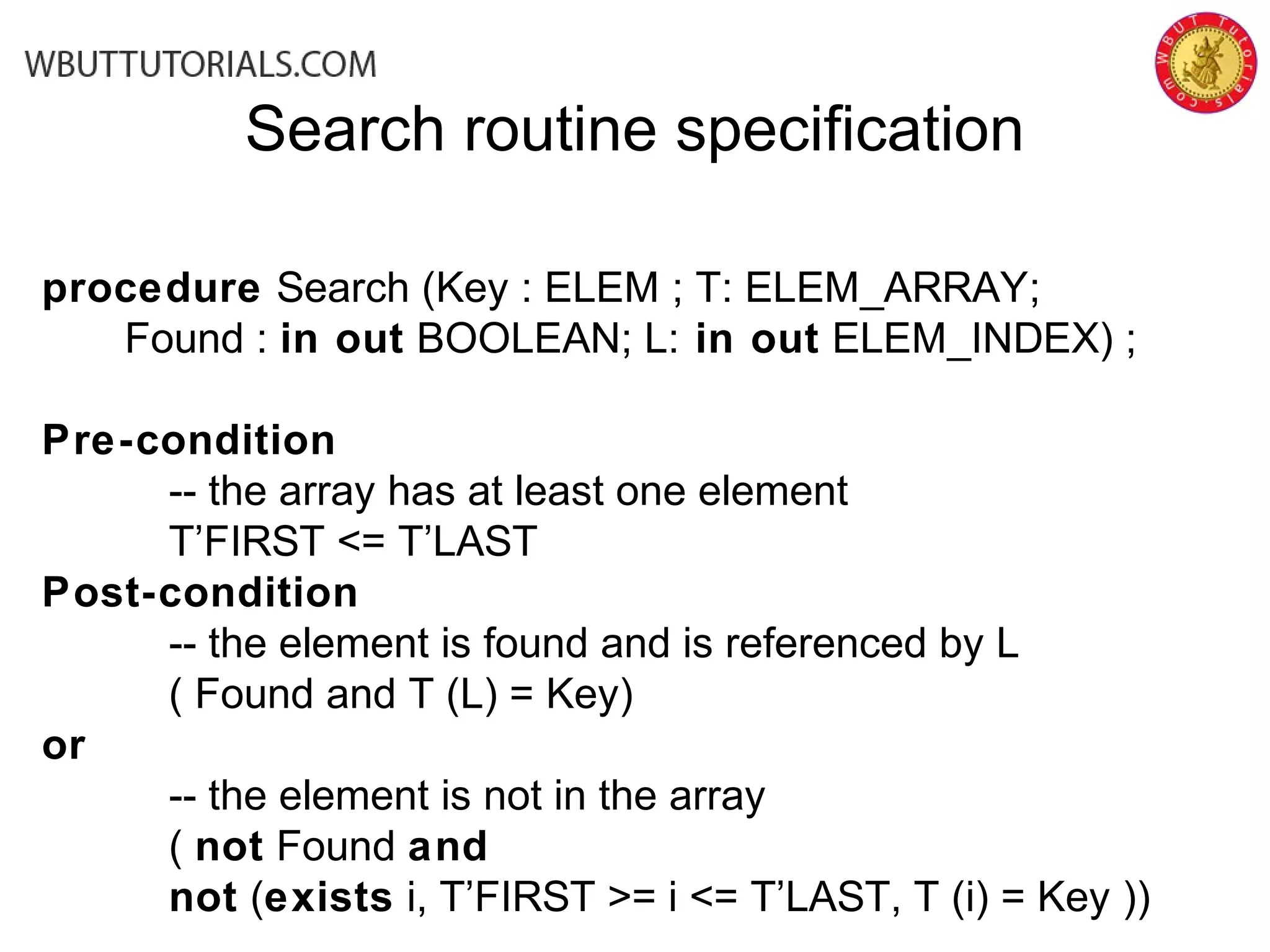

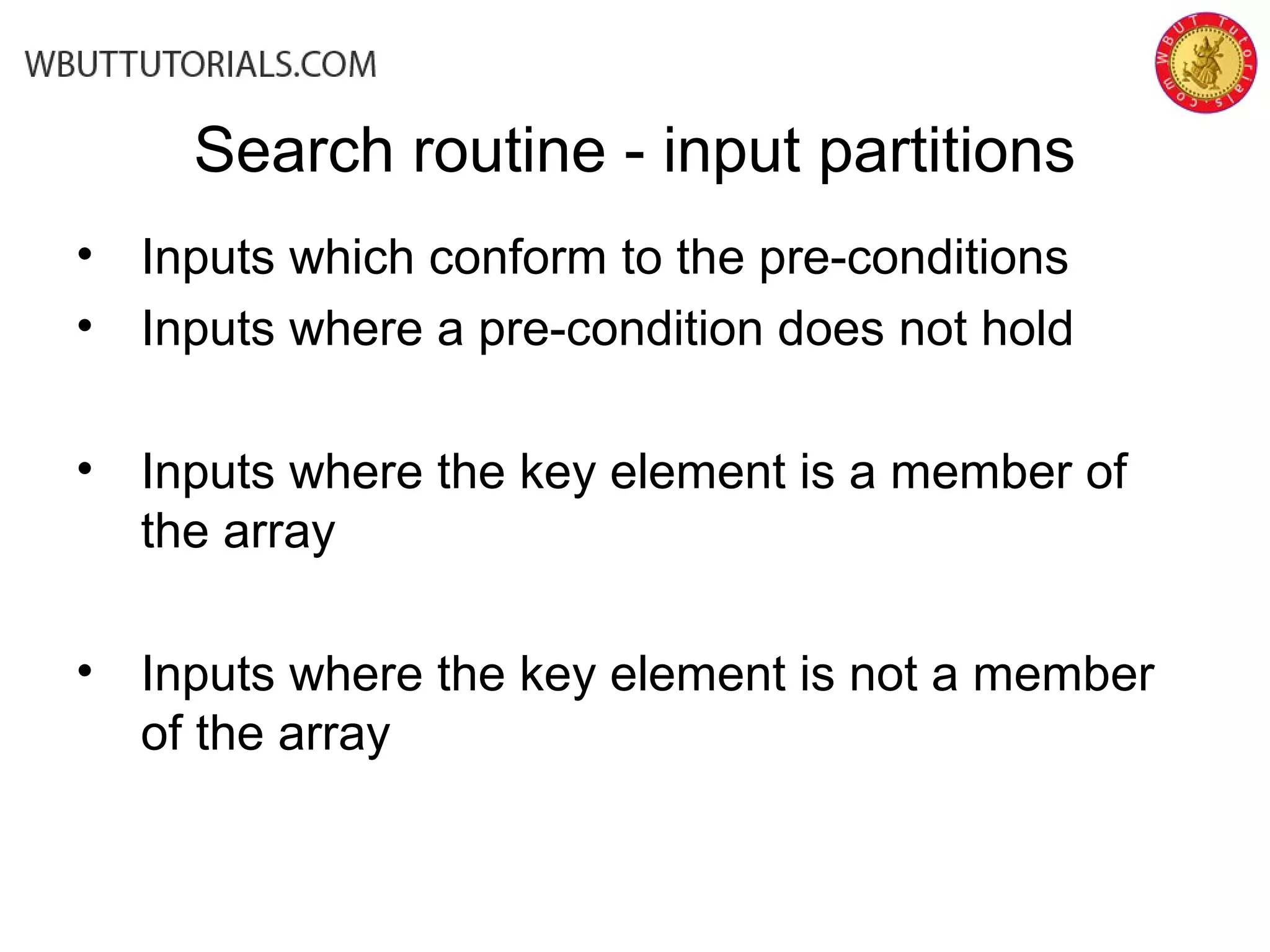

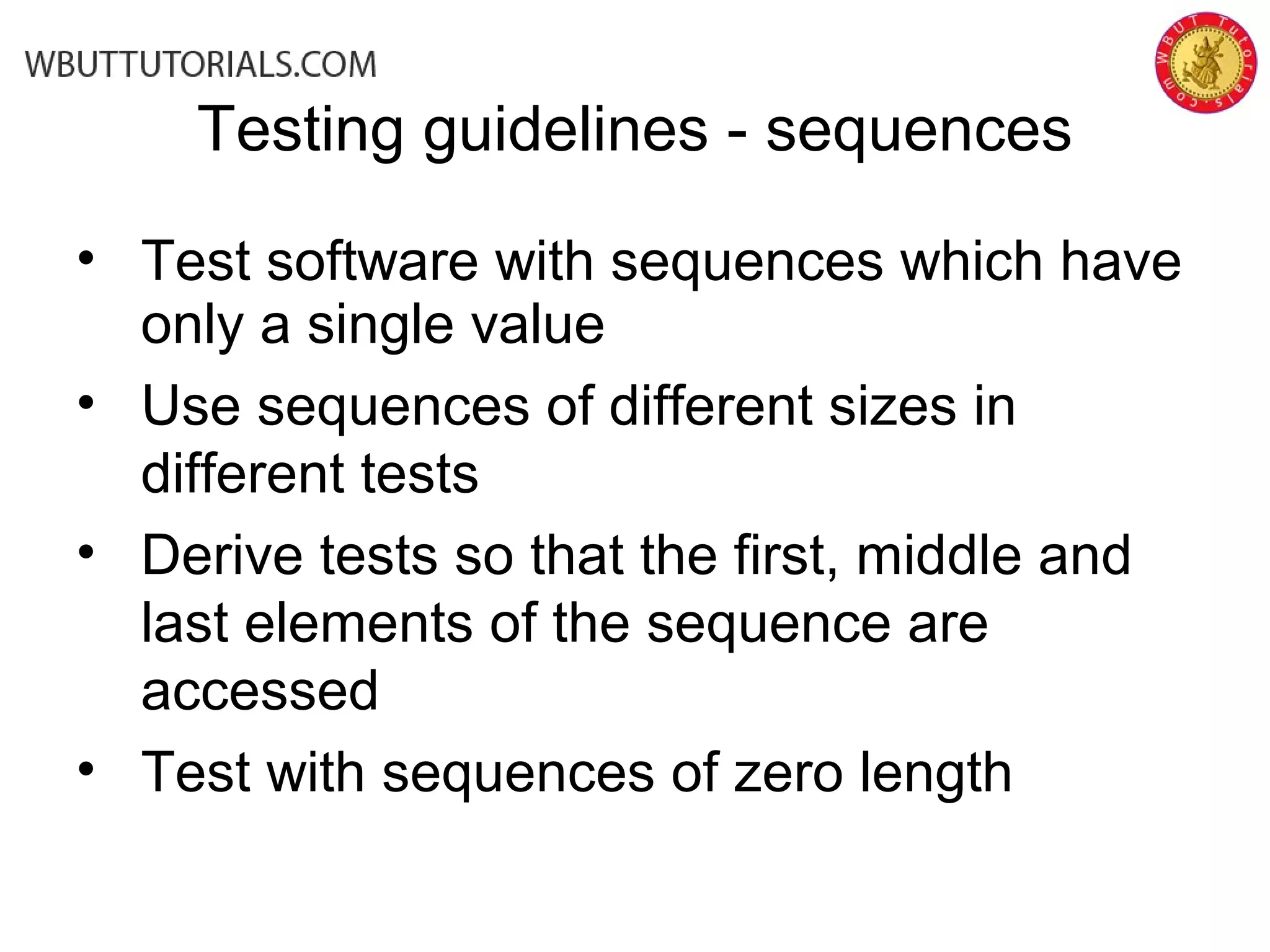

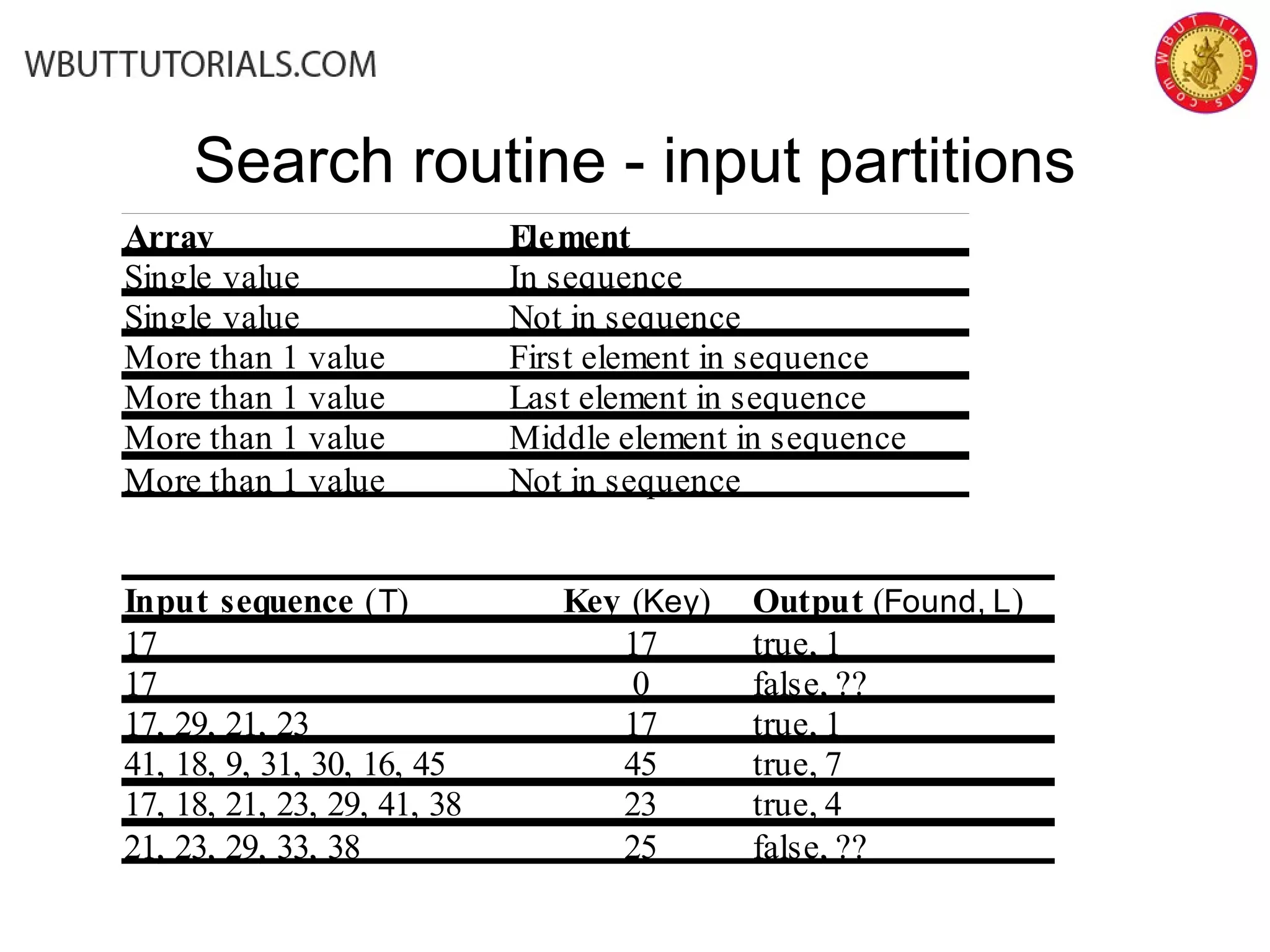

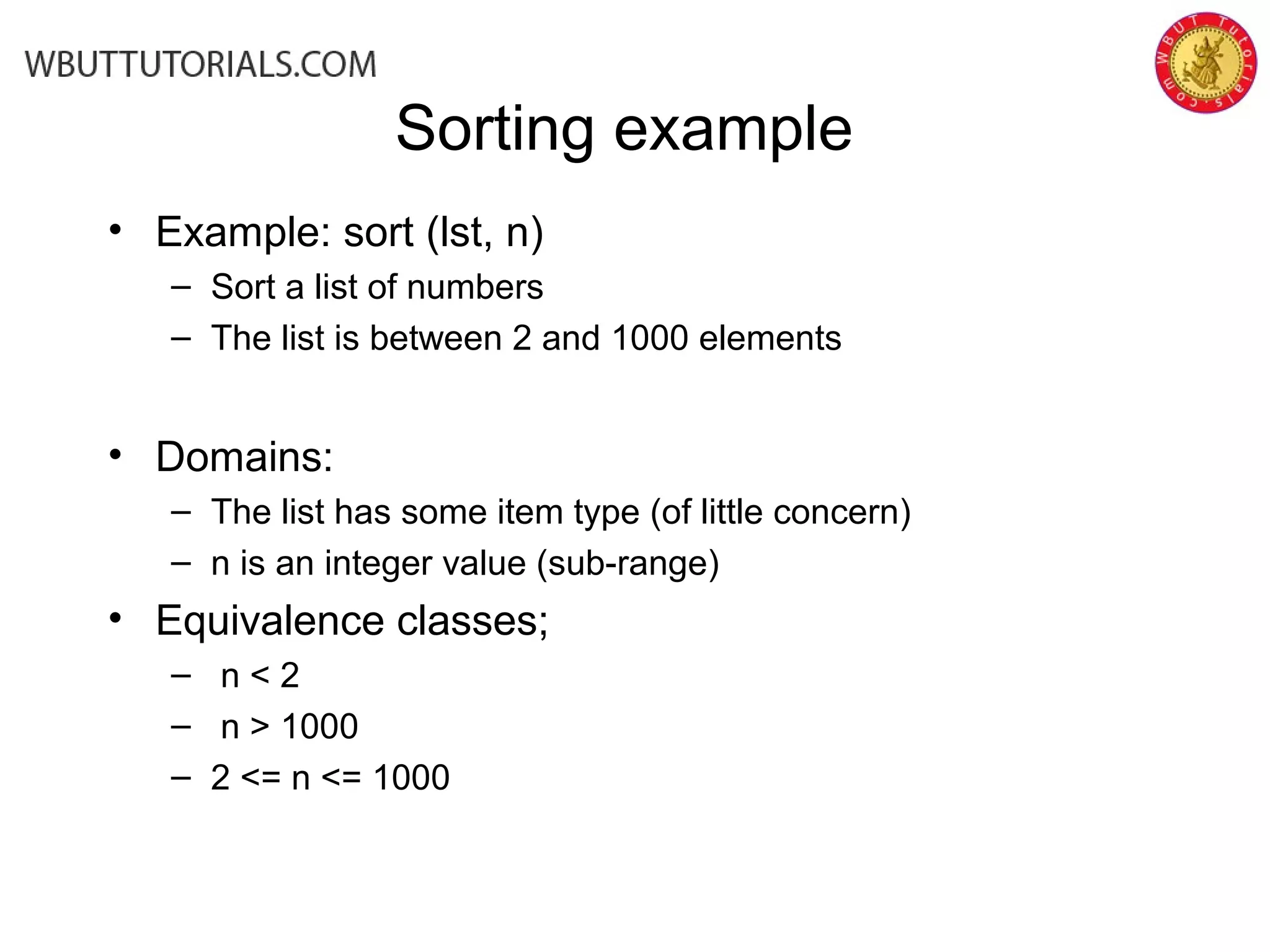

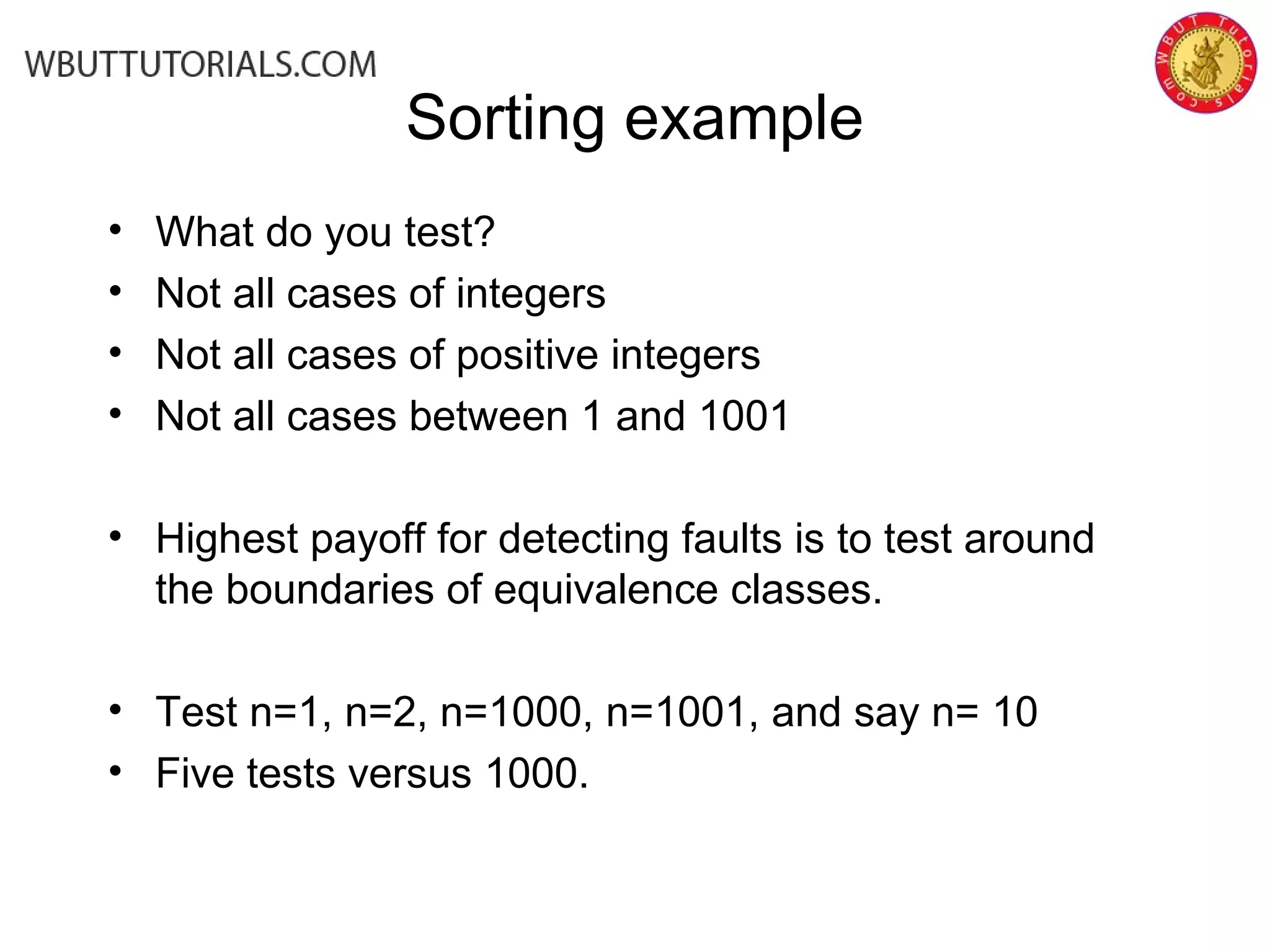

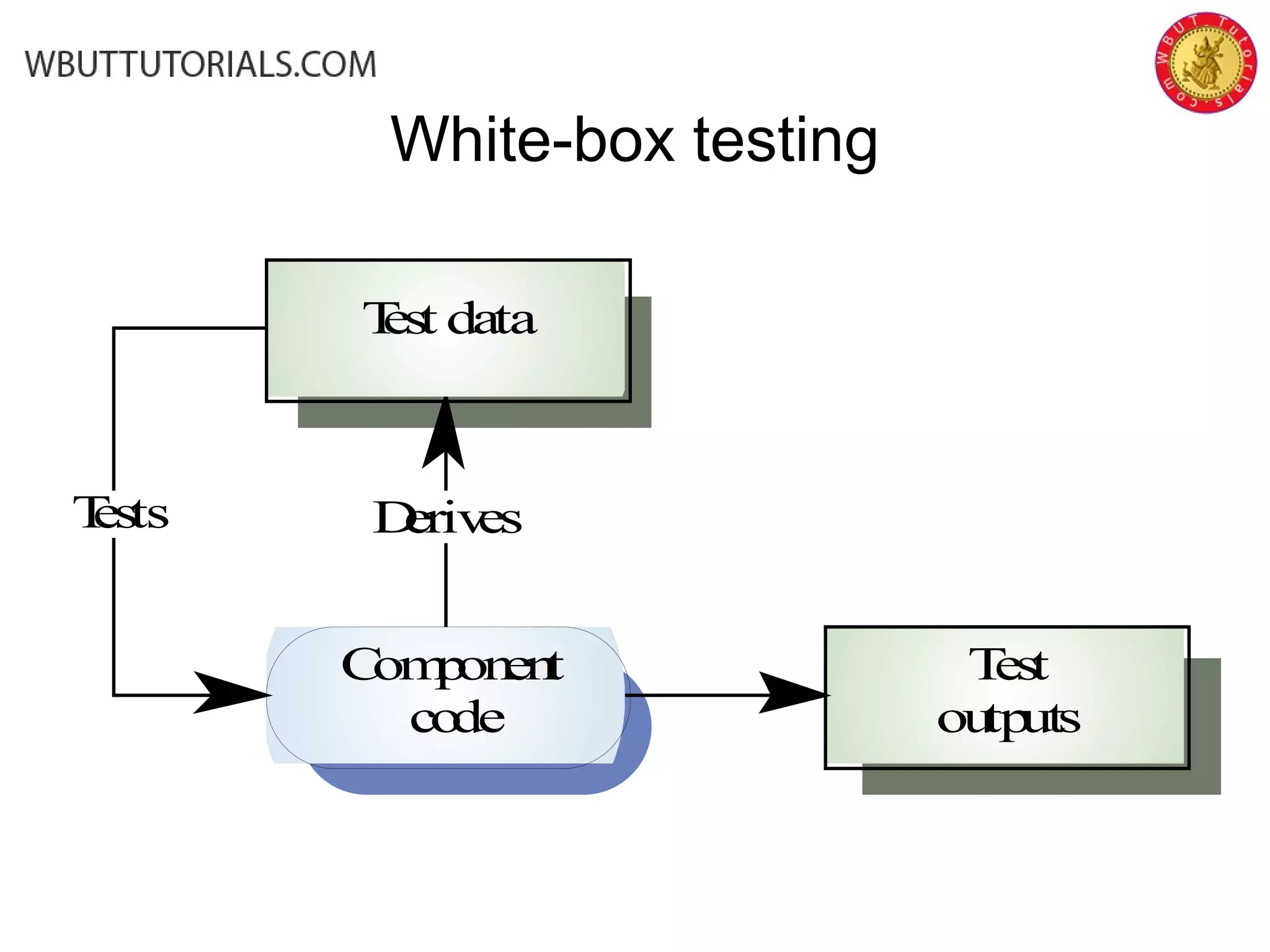

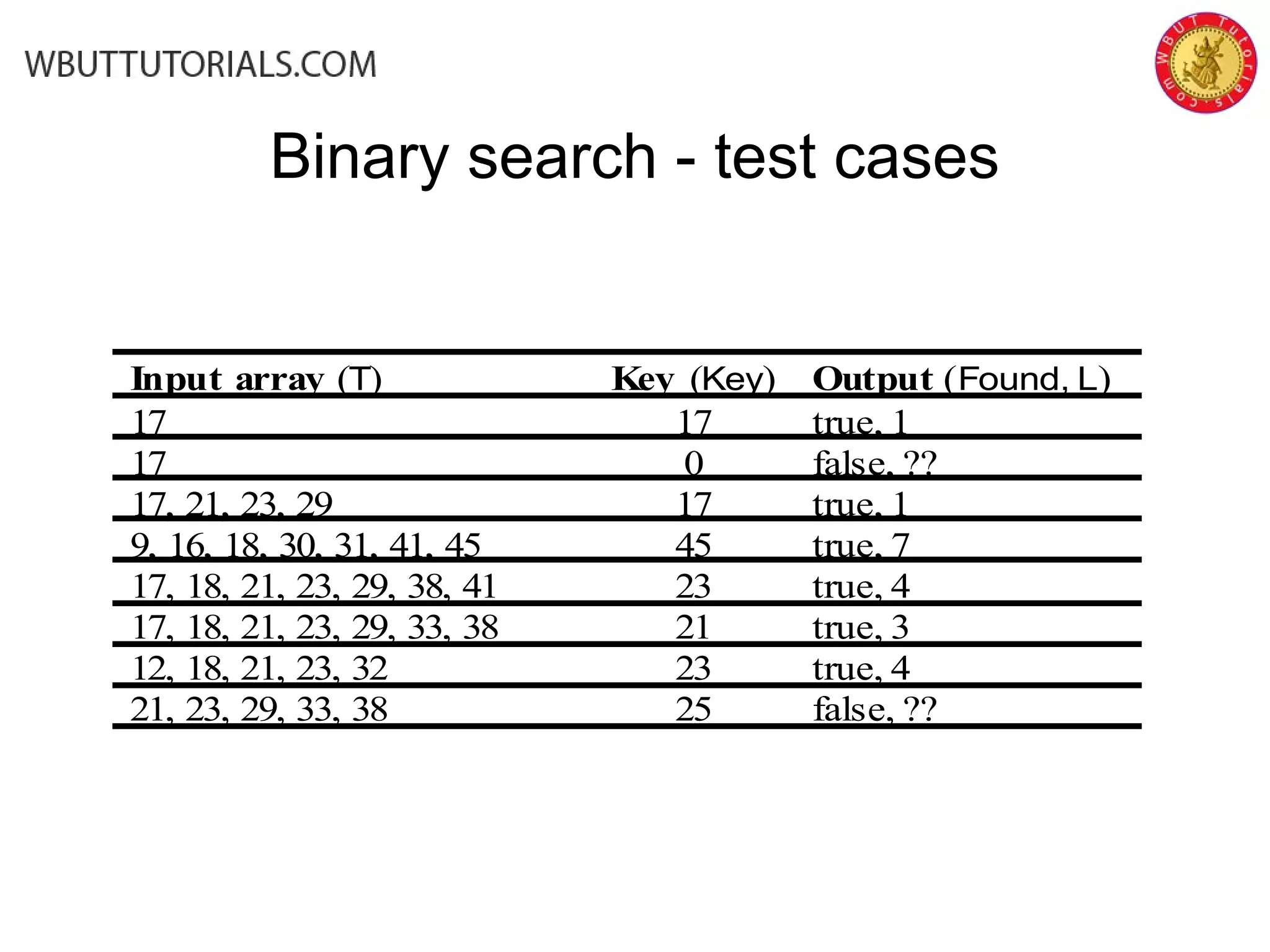

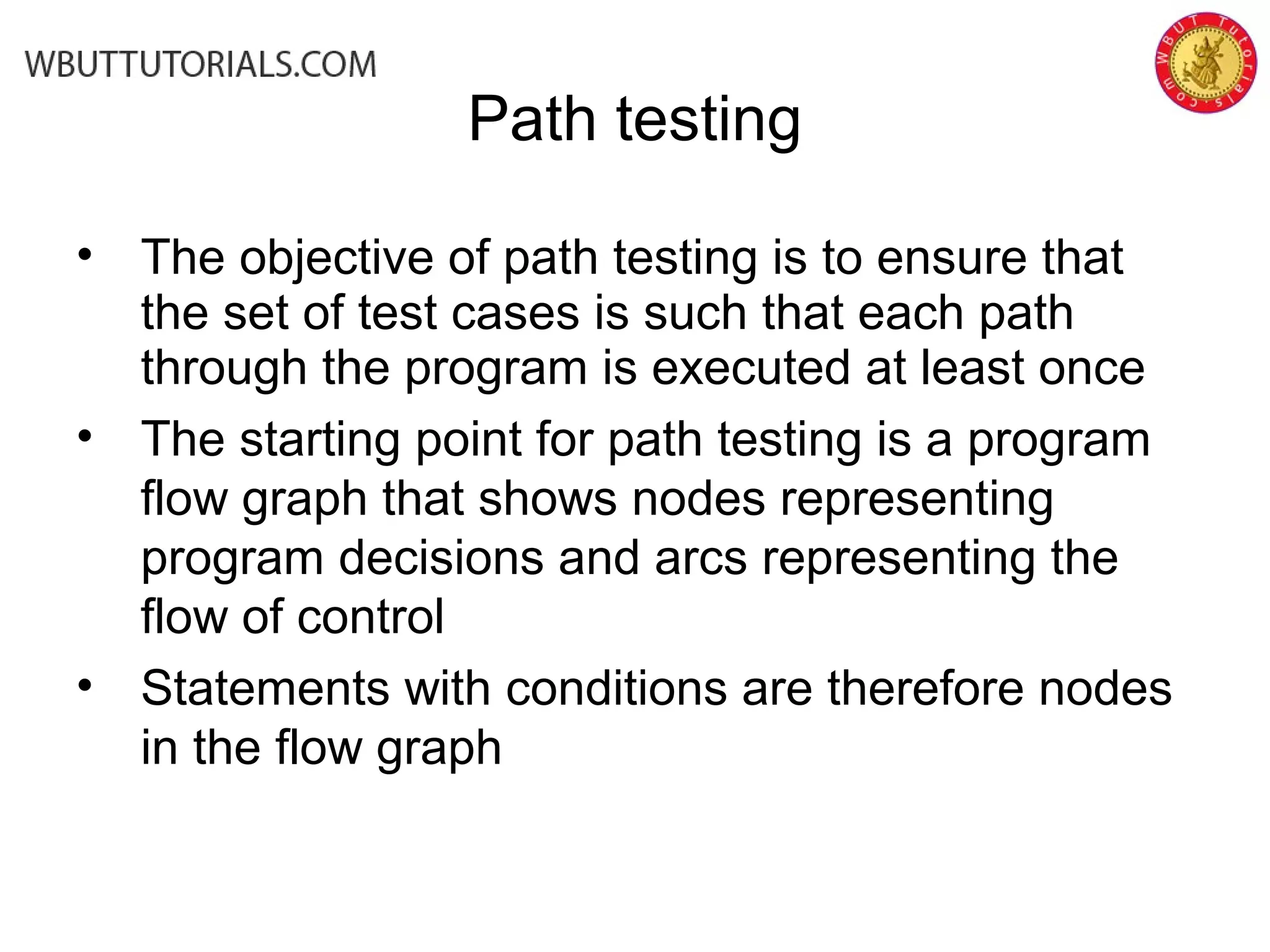

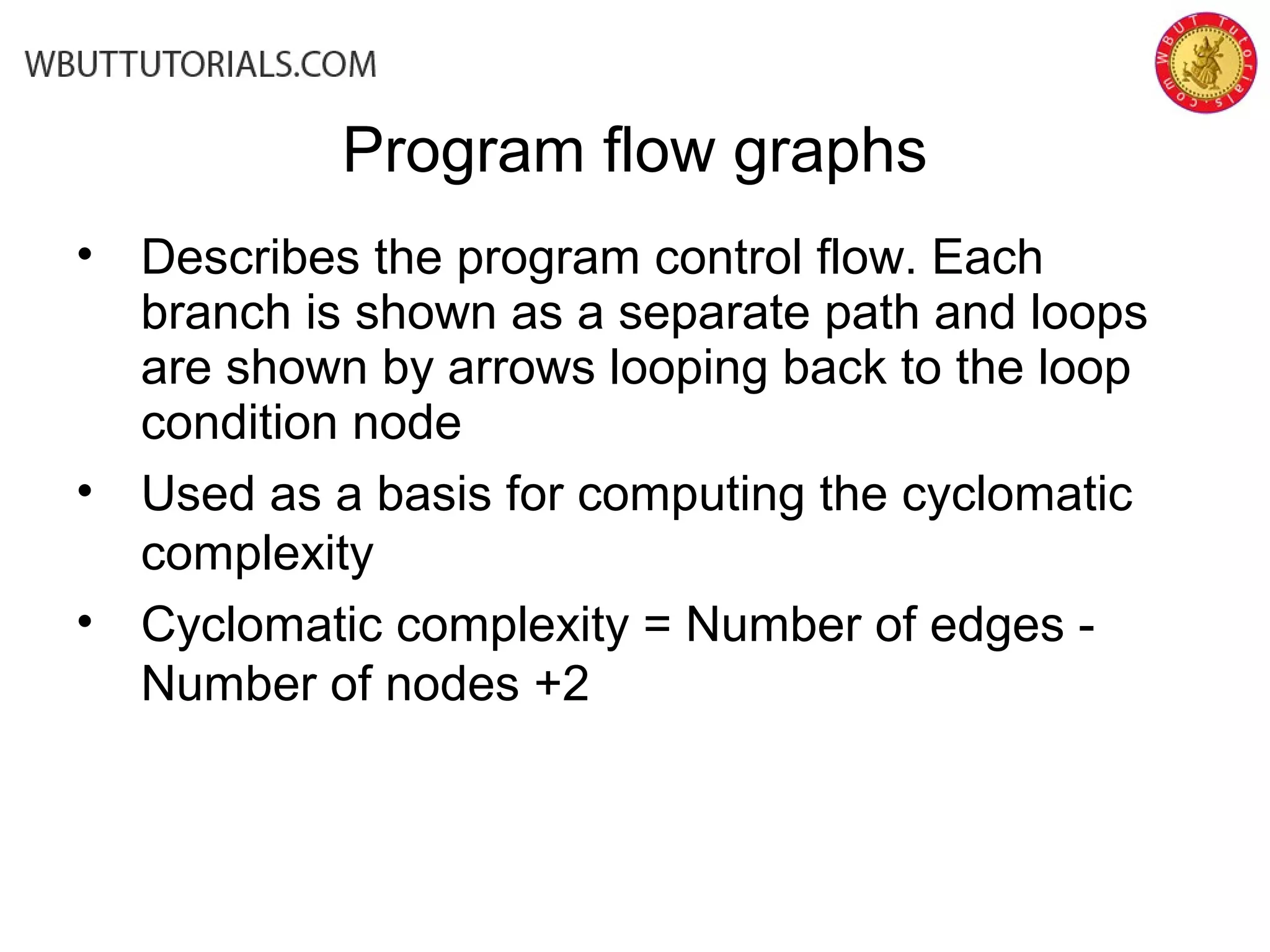

This document surveys software testing techniques. It begins with the goal of verification and validation: establishing confidence that software is fit for its intended use. It then covers the testing phases, from component testing to integration testing, and discusses both static verification methods (inspections and walkthroughs) and dynamic ones (testing). It details test case development techniques such as equivalence partitioning and boundary value analysis. Finally, it covers white-box (structural) testing methods, which derive test cases from a program's internal structure.

![Execution-based testing • “Program testing can be a very effective way to show the presence of bugs but is hopelessly inadequate for showing their absence” [Dijkstra] • Fault: a “bug”, an incorrect piece of code • Failure: result of a fault • Error: mistake made by the programmer/developer](https://image.slidesharecdn.com/software-testing-and-analysis-140806021053-phpapp01/75/Software-testing-and-analysis-25-2048.jpg)

![White box testing - binary search example int search ( int key, int [] elemArray) { int bottom = 0; int top = elemArray.length - 1; int mid; int result = -1; while ( bottom <= top ) { mid = (top + bottom) / 2; if (elemArray [mid] == key) { result = mid; return result; } // if part else { if (elemArray [mid] < key) bottom = mid + 1; else top = mid - 1; } } //while loop return result; } // search](https://image.slidesharecdn.com/software-testing-and-analysis-140806021053-phpapp01/75/Software-testing-and-analysis-52-2048.jpg)
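The routine on this slide can be exercised directly. Below is a minimal runnable sketch of it, together with a handful of test inputs chosen in the spirit of equivalence partitioning and boundary value analysis: empty array, single element, key at the first, middle, and last position, and key absent. The class name `BinarySearchDemo`, the array contents, and the midpoint rewrite are assumptions made for illustration and are not taken from the slides.

```java
// Illustrative sketch: the slide's binary search with partition-based test inputs.
// Class name and test data are assumptions, not part of the original deck.
class BinarySearchDemo {

    // Returns the index of key in the sorted array elemArray, or -1 if absent.
    static int search(int key, int[] elemArray) {
        int bottom = 0;
        int top = elemArray.length - 1;
        while (bottom <= top) {
            // Equivalent to (top + bottom) / 2, written to avoid int overflow.
            int mid = bottom + (top - bottom) / 2;
            if (elemArray[mid] == key) {
                return mid;
            } else if (elemArray[mid] < key) {
                bottom = mid + 1;   // key can only be in the upper half
            } else {
                top = mid - 1;      // key can only be in the lower half
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        int[] empty = {};
        int[] single = {17};
        int[] several = {17, 21, 23, 29, 41};

        System.out.println(search(17, empty));    // empty array: -1
        System.out.println(search(17, single));   // single element, present: 0
        System.out.println(search(5, single));    // single element, absent: -1
        System.out.println(search(17, several));  // first element: 0
        System.out.println(search(23, several));  // middle element: 2
        System.out.println(search(41, several));  // last element: 4
        System.out.println(search(25, several));  // absent, between elements: -1
    }
}
```

Each call covers a different input partition, so a fault that only shows up at an array boundary (for example an off-by-one in `top`) is more likely to produce a visible failure.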

![Binary search flow graph: nodes 1 to 9, with edges labelled "while bottom <= top", "bottom > top", "if (elemArray[mid] == key)" and "if (elemArray[mid] < key)"](https://image.slidesharecdn.com/software-testing-and-analysis-140806021053-phpapp01/75/Software-testing-and-analysis-59-2048.jpg)
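Flow graphs like this one are commonly used in structural testing to count the independent paths through a routine, using the cyclomatic complexity V(G) = E - N + 2 for a connected graph with E edges and N nodes. The sketch below applies that formula to an edge list derived by hand from the binary search code. The node numbering and the edge list are assumptions and need not match the numbering on the slide; the class and method names are likewise hypothetical.

```java
import java.util.HashSet;
import java.util.Set;

// Illustrative sketch: cyclomatic complexity of a flow graph given as an edge list.
class CyclomaticComplexityDemo {

    // Assumed node numbering (not necessarily the slide's):
    // 1 initialisation, 2 while test, 3 equality test, 4 return mid,
    // 5 less-than test, 6 bottom = mid + 1, 7 top = mid - 1,
    // 8 return -1 after the loop, 9 exit.
    static final int[][] SEARCH_EDGES = {
        {1, 2}, {2, 3}, {2, 8}, {3, 4}, {3, 5}, {4, 9},
        {5, 6}, {5, 7}, {6, 2}, {7, 2}, {8, 9}
    };

    // V(G) = E - N + 2, assuming a connected graph with no duplicate edges.
    static int cyclomaticComplexity(int[][] edges) {
        Set<Integer> nodes = new HashSet<>();
        for (int[] edge : edges) {
            nodes.add(edge[0]);
            nodes.add(edge[1]);
        }
        return edges.length - nodes.size() + 2;
    }

    public static void main(String[] args) {
        // 11 edges, 9 nodes: 11 - 9 + 2 = 4
        System.out.println(cyclomaticComplexity(SEARCH_EDGES)); // prints 4
    }
}
```

The result agrees with the shortcut of counting decisions and adding one: the routine has three (the `while` test and the two `if` tests), so four independent paths are enough to execute every statement and every branch outcome at least once.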