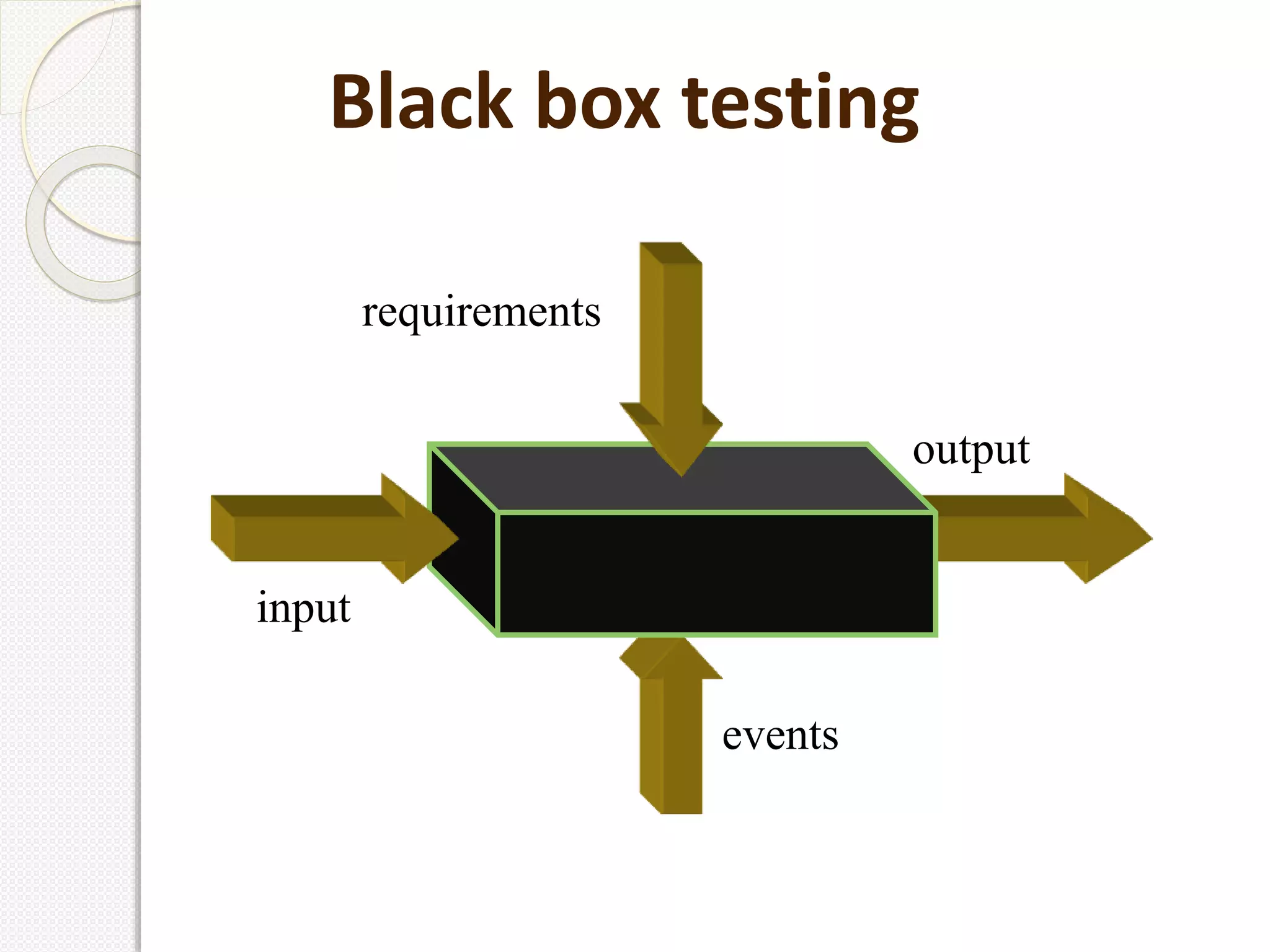

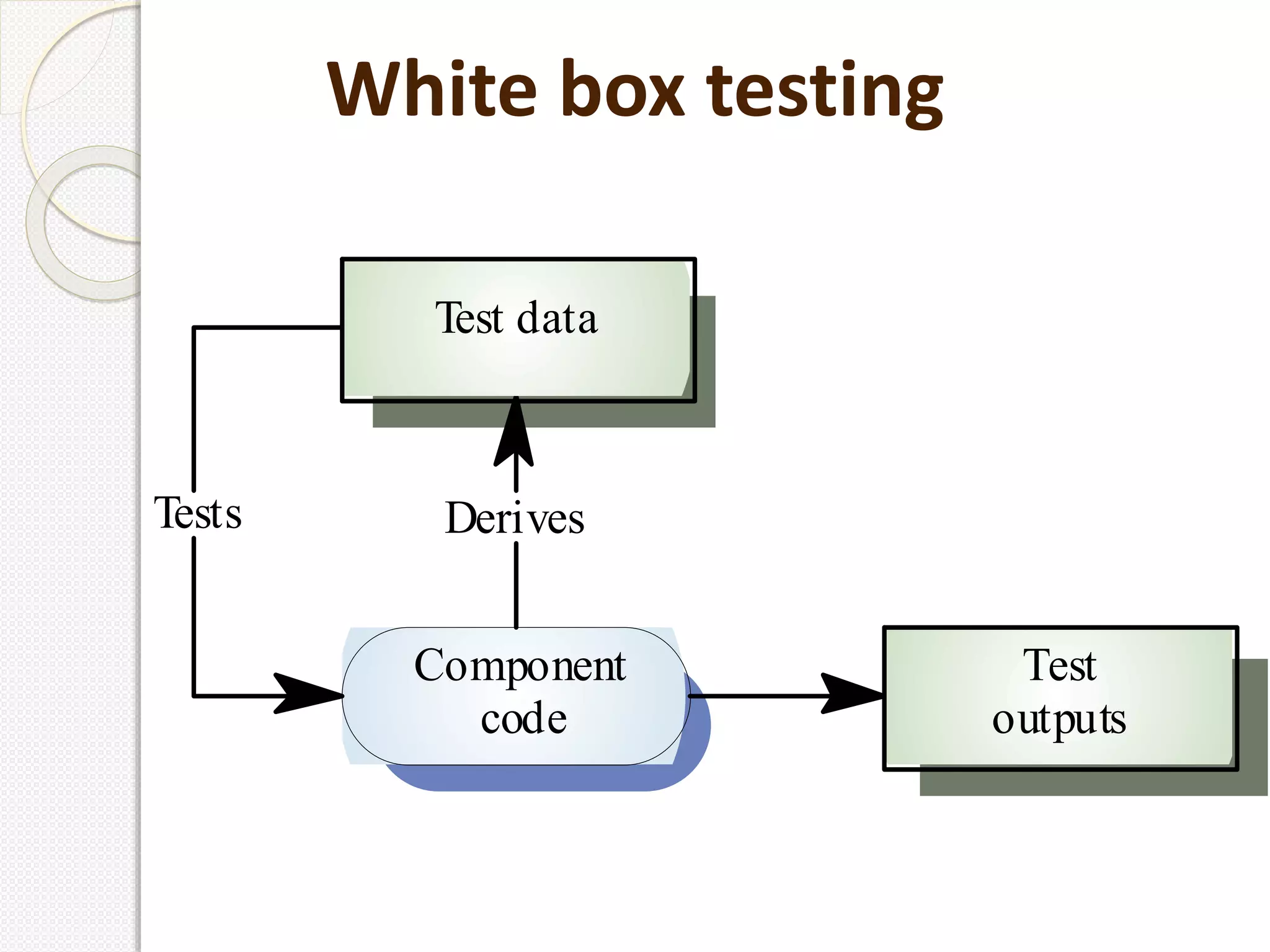

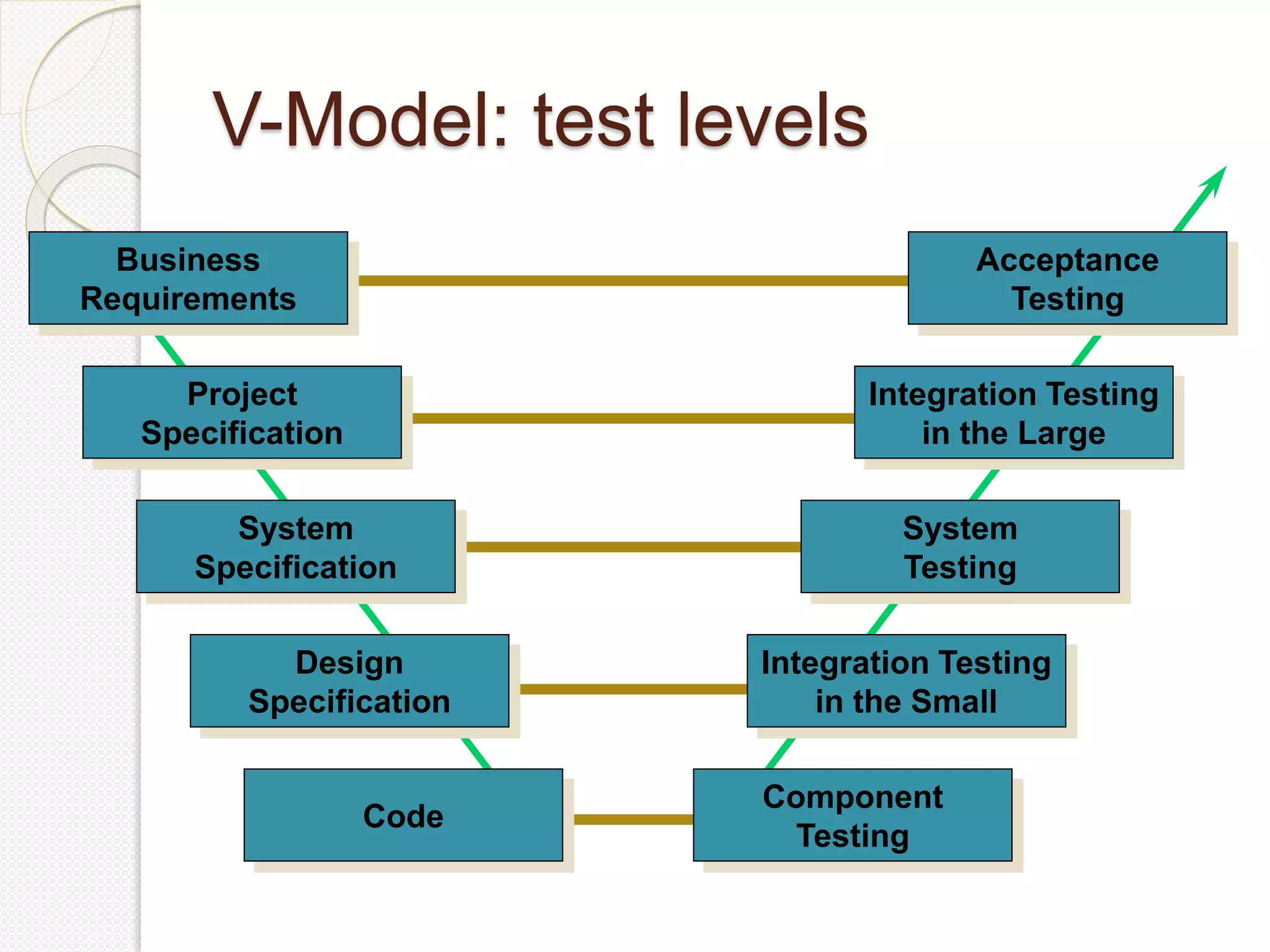

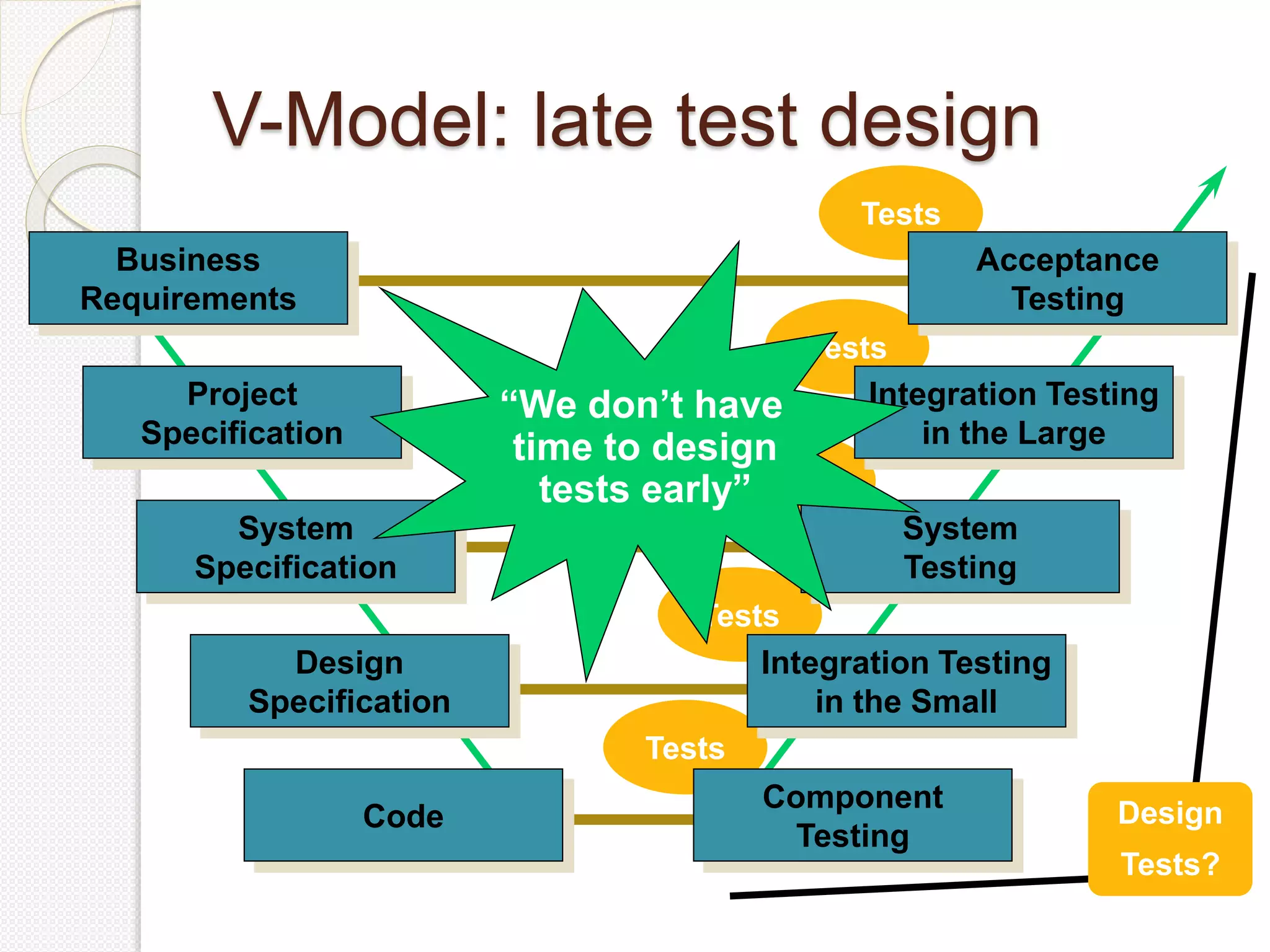

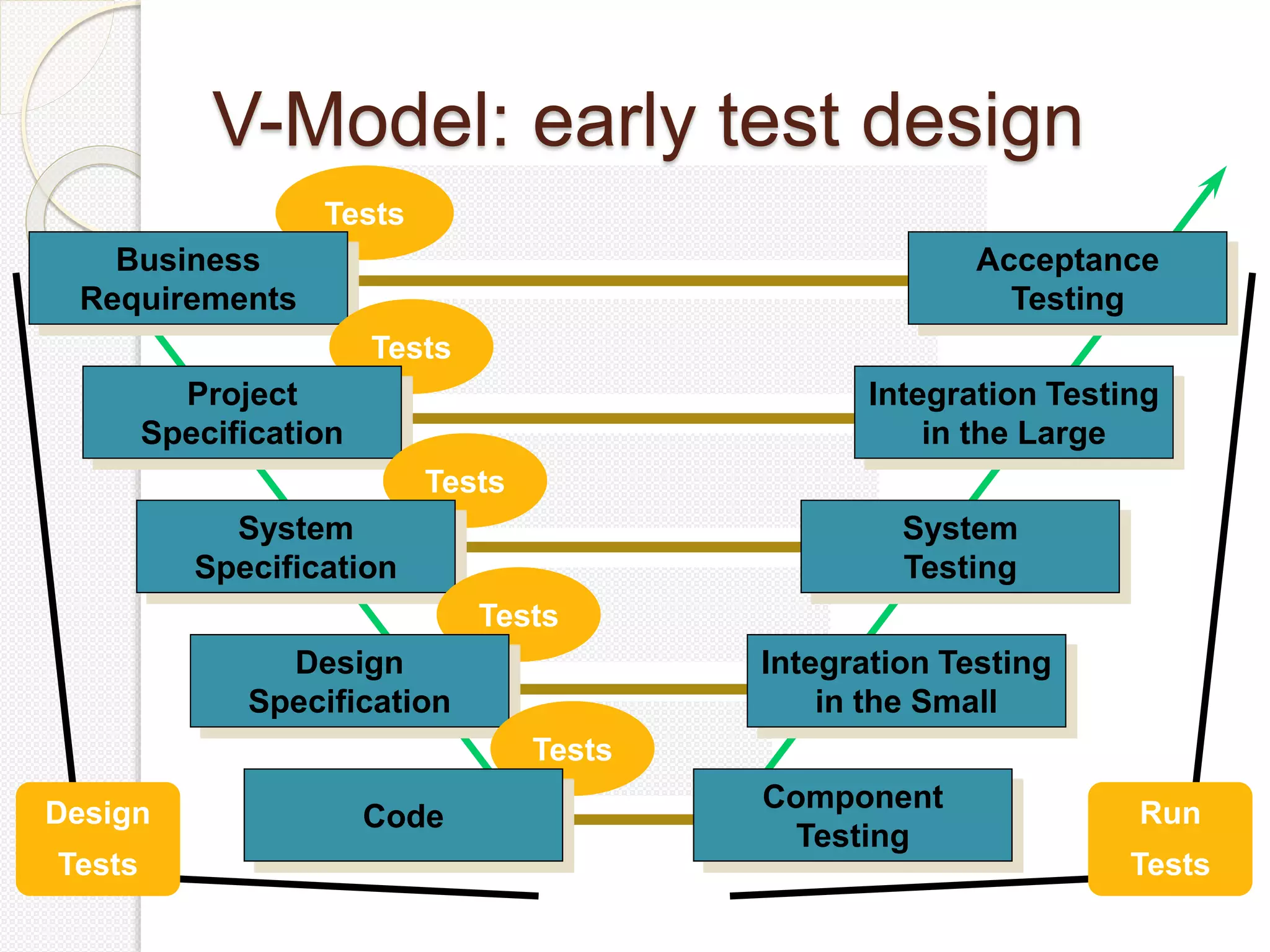

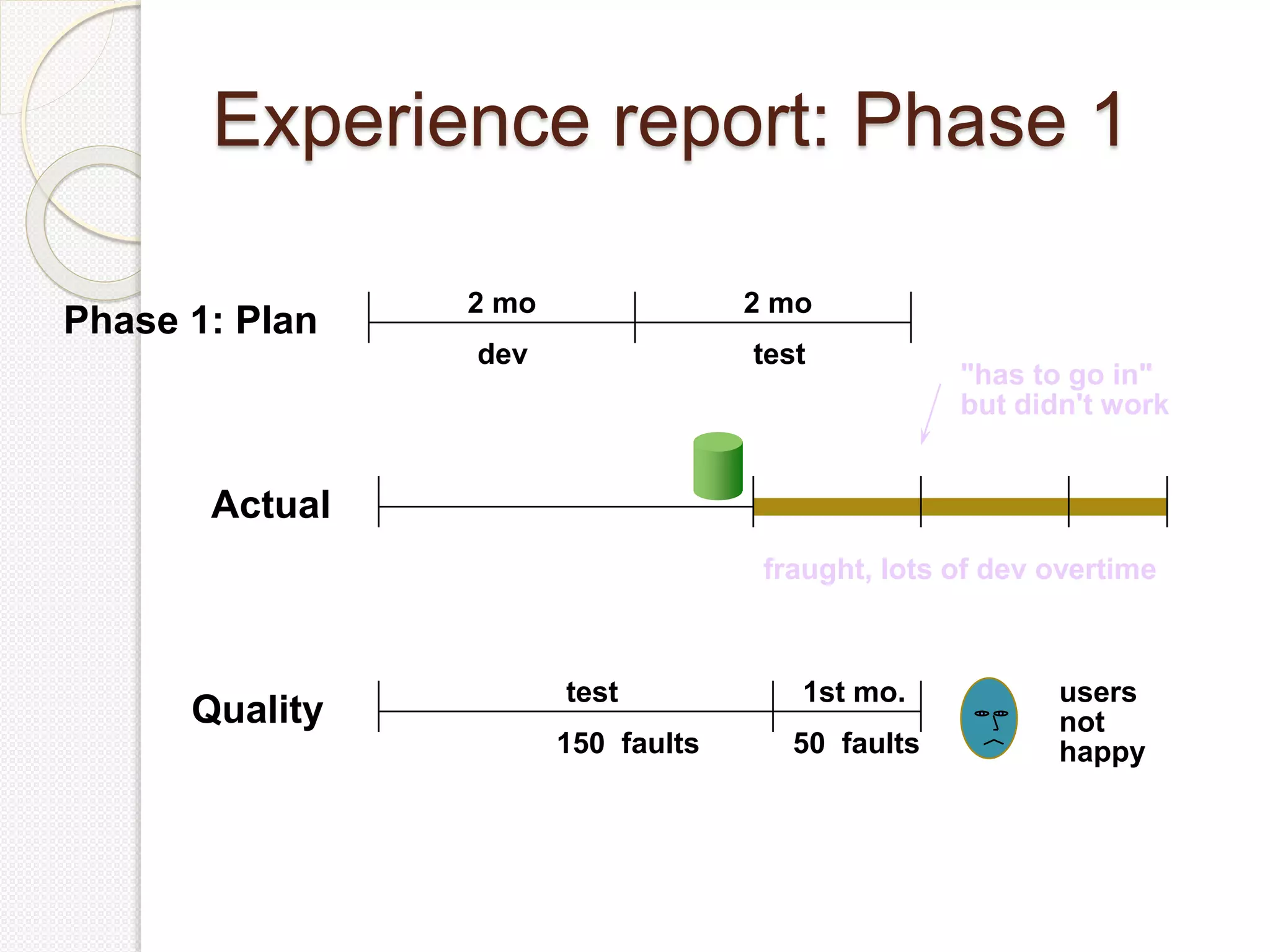

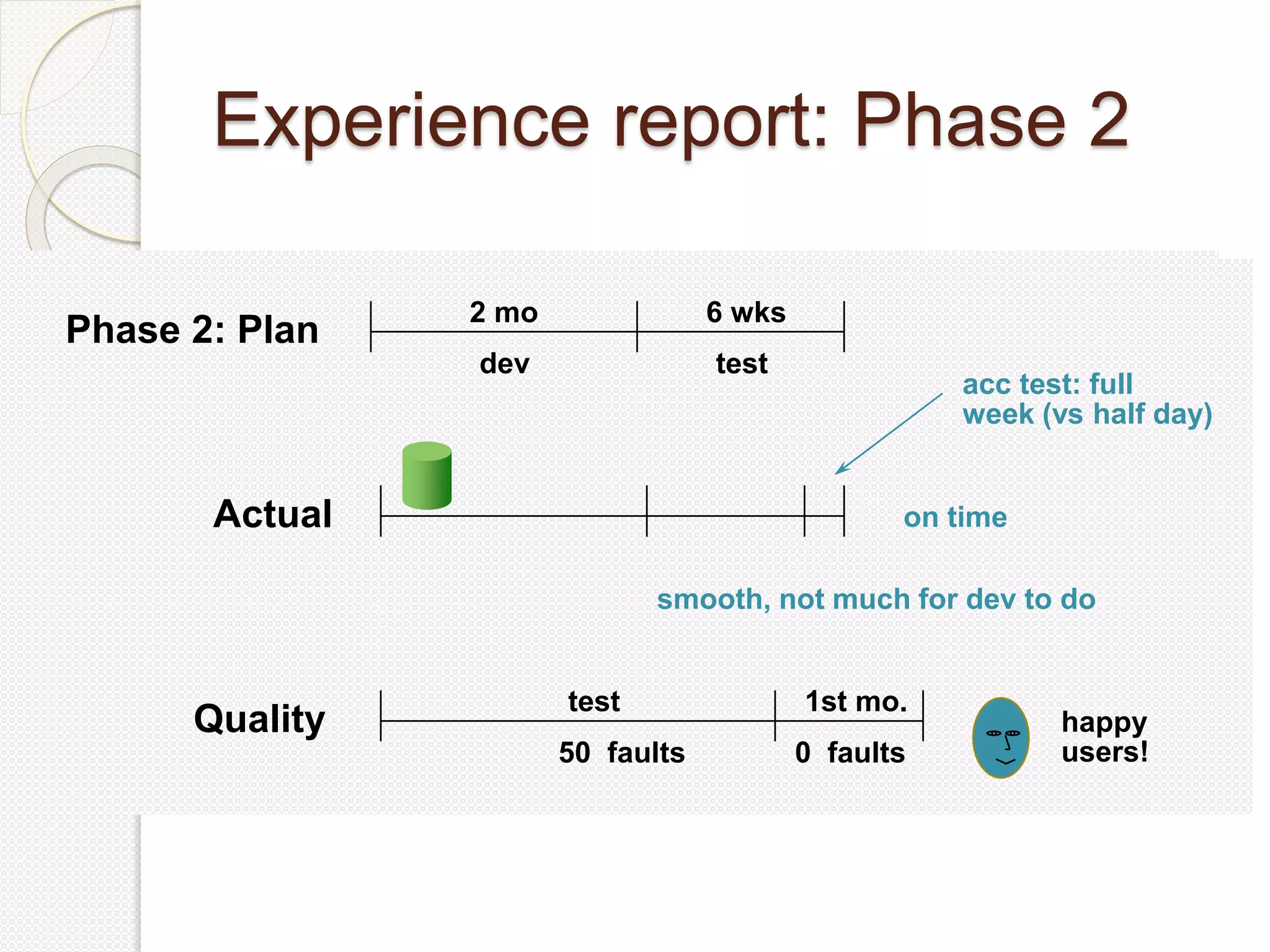

The document discusses software testing, which it defines as the process of verifying and validating that a software application meets its requirements and behaves as expected. Testing serves three main purposes: verification, validation, and defect finding. Verification checks that the software conforms to its technical specifications, while validation checks that it satisfies the business requirements. Defect finding identifies discrepancies between expected and actual results. The document also covers testing methodologies, such as black-box testing (designing tests from the requirements without looking at the code) and white-box testing (designing tests from the code's internal structure), and testing levels, such as unit, integration, and system testing.
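To make defect finding at the unit level concrete, here is a minimal sketch of a black-box unit test written with Python's standard `unittest` module. The function `apply_discount` and its requirement (reduce a price by a percentage between 0 and 100) are hypothetical and exist only for illustration. Each test derives an expected result from that requirement and compares it with the actual result. A failing assertion would signal a defect.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical unit under test: reduce `price` by `percent` percent."""
    if not 0 <= percent <= 100:
        # Requirement (assumed): percentages outside 0..100 are invalid input.
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Black-box tests: written from the requirement, not from the code."""

    def test_typical_discount(self):
        # Expected result comes from the requirement; actual from the code.
        self.assertEqual(apply_discount(100.0, 10), 90.0)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(59.99, 0), 59.99)

    def test_out_of_range_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


if __name__ == "__main__":
    unittest.main()
```

Because these cases are chosen from the stated requirement alone, they illustrate black-box testing. A white-box approach would instead pick inputs to exercise each branch of `apply_discount`, for example both sides of the range check.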