This document discusses a reduced complexity maximum likelihood decoding algorithm for non-binary low-density parity-check (NB-LDPC) codes, highlighting their advantages over binary codes in error correction performance, but also their challenges related to decoding complexity. The proposed improvements include message compression techniques that reduce the number of messages exchanged between nodes, thereby alleviating throughput limitations and hardware requirements. The conclusion suggests that optimizing LDPC codes can lead to reduced power consumption and improved efficiency in decoding architectures.

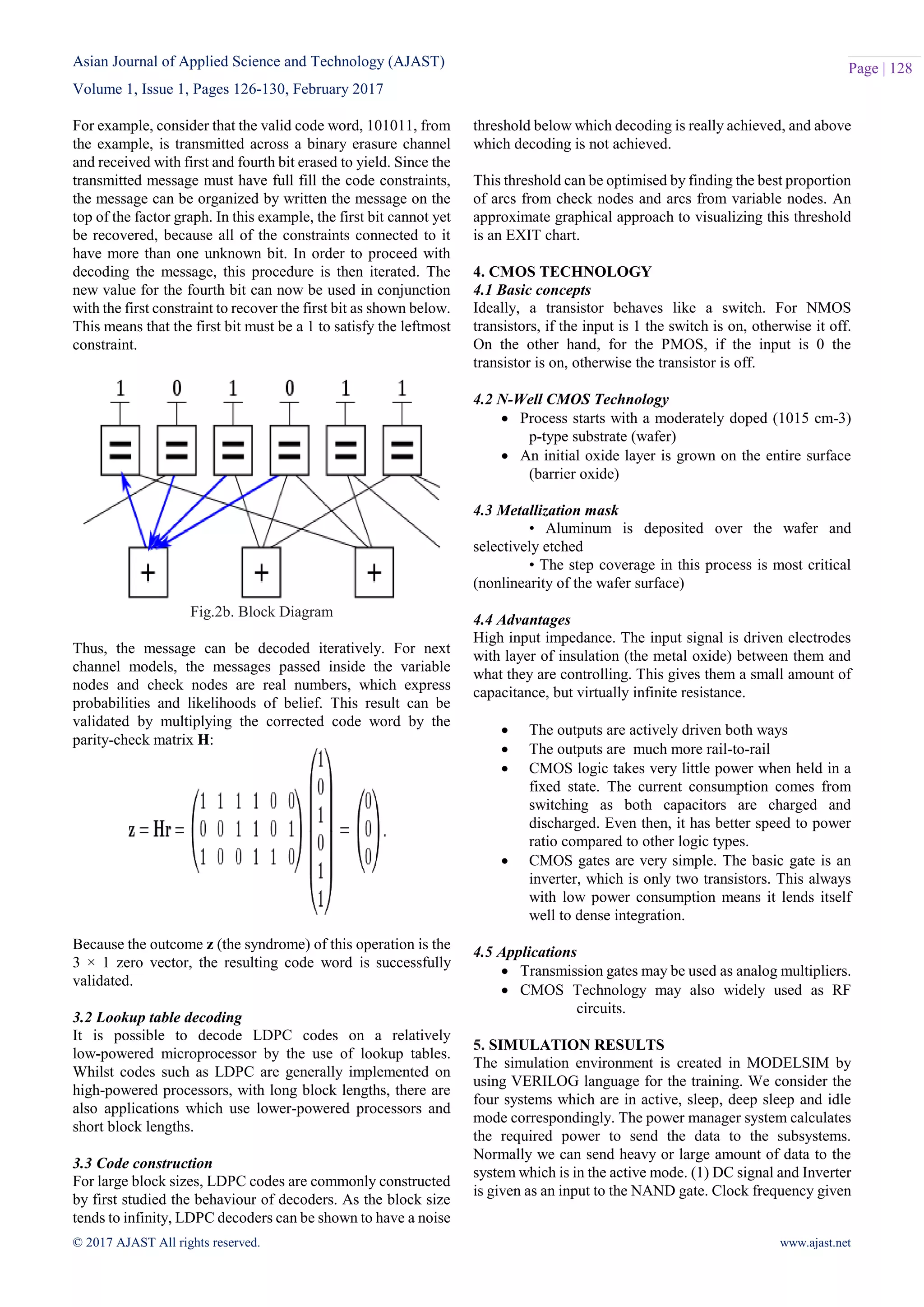

Asian Journal of Applied Science and Technology (AJAST), Volume 1, Issue 1, Pages 126-130, February 2017. © 2017 AJAST. All rights reserved. www.ajast.net

2.2 Project Description

Non-binary low-density parity-check (NB-LDPC) codes are a promising class of linear block codes defined over Galois fields $\mathrm{GF}(q = 2^p)$ with $p > 1$. NB-LDPC codes have numerous advantages over their binary counterparts. These include better error-correction performance for short and medium codeword lengths, higher burst-error correction capability, and improved performance in the error-floor region.

2.3 T-MM Decoding Algorithm with Compressed Messages

An NB-LDPC code is defined by a sparse parity-check matrix $H$, where each nonzero element $h_{m,n}$ belongs to $\mathrm{GF}(q = 2^p)$. Another common way to characterize NB-LDPC codes is by means of a Tanner graph, which has two kinds of nodes. Variable nodes (VNs) represent the $N$ columns of $H$, and check nodes (CNs) represent the $M$ rows of $H$. $\mathcal{N}(m)$ denotes the set of VNs connected to CN $m$, and $\mathcal{M}(n)$ denotes the set of CNs connected to VN $n$. The cardinalities of these sets are the CN degree $d_c$ and the VN degree $d_v$, respectively.

2.4 T-MM Algorithm with Reduced Set of Messages

In this section, we introduce a novel method that reduces the number of messages exchanged between the CN and the VN compared with the proposal in [11]. First, we define the reduced set of compressed messages sent from the CN to the VN. We also define an approximation used to obtain the remaining values in the VN. Second, the performance of the method is analyzed. Third, we present a technique that generates the most reliable values of the set $I(a)$ without building a complete trellis structure.

2.5 Reduction of the CN-to-VN Message

The sets $I(a)$ and $P(a)$ are required to generate the messages $R_{m,n}(a)$ at the VN processor, as shown in (3). Reducing the cardinality of $I(a)$ therefore also reduces the cardinality of $P(a)$. Our proposal is to keep the $L$ most reliable values of $I(a)$ and the corresponding values of $P(a)$ and $E(a)$, where $L < q - 1$. Let $\bar{a}$ denote the complementary set of symbols whose values are not kept. We propose to set $E^*(\bar{a}) = m_1(\bar{a})$, so the cardinality of the set $E^*(a)$ remains $q - 1$. Table I lists the number of bits of each set exchanged from the CN to the VN processors and compares it with the proposal in [11]. In that table, $w$ is the number of bits used to quantize the reliabilities.

Fig. 1. Mean value of each reliability.

2.6 Conclusion

T-EMS, T-MM, and OMO-TMM have two main drawbacks:

1) They exchange a large number of messages between the CN and the VN ($q \times d_c$ reliabilities). This causes wiring congestion and limits the maximum achievable throughput.

2) Their hardware implementations require a large number of storage elements, which account for most of the decoder's area.

To overcome these drawbacks of T-EMS and T-MM, the proposal in [11] introduces a message-compression technique. It reduces both the wiring congestion between the CN and the VN and the number of storage elements in the derived architectures. The messages at the output of the CN are reduced to four elementary sets: the intrinsic information, the extrinsic information, the path coordinates, and the hard-decision symbols.

3. PROPOSED SYSTEM

The block diagram of the proposed CN is shown in Fig. 2a. The CN inputs are the messages $Q_{m,n}$, which come from the VN processor, and the tentative hard-decision symbols $z$. Both inputs are used to compute the normal-to-delta-domain transformation (N → Δ block in Fig. 2b). The CN requires $d_c$ such transformation networks. Following the approach proposed in [19], each network needs $q \times \log_2 q$ $w$-bit multiplexers, where $w$ is the number of bits of the data path. The symbols $z$ are also used to obtain the syndrome $\beta$ by adding all $d_c$ tentative hard-decision symbols. This operation requires $w \times (d_c - 1)$ XOR gates.

Fig. 2a. Proposed check node block diagram.

The syndrome $\beta$ is used to generate the new hard-decision symbols $z^*$. These are sent to the VN, which uses them to generate the messages $R^*_{m,n}$ according to (4). The $z^*$ symbols are generated with GF($q$) adders, which require $d_c \times w$ XOR gates.

3.1 Decoding

As with other codes, optimally decoding an LDPC code on the binary symmetric channel is an NP-complete problem. In practice, iterative belief-propagation techniques yield good approximations. In contrast, belief propagation on the binary erasure channel is particularly simple, because it reduces to iterative constraint satisfaction.
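The small sketch below illustrates the Tanner-graph description in Section 2.3. The 4 × 6 matrix is a hypothetical toy example over GF(4), not a code from this paper. Symbols 0-3 encode field elements as 2-bit polynomials, and the primitive polynomial $x^2 + x + 1$ is an assumed field representation. The helper names `check_sets`, `var_sets`, `gf_mul`, and `syndrome` are illustrative only.

```python
# Hypothetical toy parity-check matrix over GF(4); 0 means "no edge" in the Tanner graph.
H = [
    [1, 2, 0, 3, 0, 0],
    [0, 1, 3, 0, 2, 0],
    [2, 0, 1, 0, 0, 3],
    [0, 0, 0, 1, 3, 2],
]


def check_sets(H):
    """N(m): indices of the VNs connected to each CN m (nonzero columns of row m)."""
    return [[n for n, h in enumerate(row) if h != 0] for row in H]


def var_sets(H):
    """M(n): indices of the CNs connected to each VN n (nonzero rows of column n)."""
    return [[m for m in range(len(H)) if H[m][n] != 0] for n in range(len(H[0]))]


def gf_mul(a, b, p=2, poly=0b111):
    """Multiply two GF(2^p) elements: shift-and-XOR, reducing by poly (assumed x^2 + x + 1)."""
    result = 0
    while b:
        if b & 1:
            result ^= a  # field addition is bitwise XOR
        b >>= 1
        a <<= 1
        if a & (1 << p):  # degree reached p: reduce modulo the field polynomial
            a ^= poly
    return result


def syndrome(H, c):
    """Per-CN check sum_n h_{m,n} * c_n over GF(q); all zeros means c is a codeword."""
    out = []
    for row in H:
        s = 0
        for h, cn in zip(row, c):
            s ^= gf_mul(h, cn)
        out.append(s)
    return out


dc = {len(s) for s in check_sets(H)}  # {3}: every CN has degree d_c = 3
dv = {len(s) for s in var_sets(H)}    # {2}: every VN has degree d_v = 2
```

Every row has three nonzero entries and every column has two, so this toy code is regular with $d_c = 3$ and $d_v = 2$.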
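The following sketch illustrates the CN-to-VN message reduction of Section 2.5. It is a minimal example, not the authors' architecture, and it rests on several assumptions:

- As in min-max decoding, a smaller reliability value means a more reliable symbol.
- $I$, $P$, $E$, and $m_1$ are given as dictionaries indexed by the $q - 1$ nonzero symbols $a$.
- $m_1$ is computed elsewhere in the CN, since its definition is not part of this section.

The function names are hypothetical.

```python
def compress_cn_message(I, P, E, L):
    """Keep the L most reliable entries of I(a) with the matching P(a) and E(a).

    Assumption: a smaller value of I(a) means a more reliable symbol a.
    Ties are broken by symbol index so the result is deterministic.
    """
    if not 0 < L < len(I):
        raise ValueError("L must satisfy 0 < L < q - 1")
    kept = sorted(I, key=lambda a: (I[a], a))[:L]
    return {a: (I[a], P[a], E[a]) for a in kept}


def expand_extrinsic(reduced, m1):
    """Rebuild E*(a) for all q - 1 symbols at the VN side.

    Kept symbols reuse their E(a); the complementary set a_bar uses E*(a_bar) = m1(a_bar).
    """
    return {a: reduced[a][2] if a in reduced else m1[a] for a in m1}


# Toy values for q = 8 (symbols a = 1..7); all numbers are illustrative only.
I = {1: 5, 2: 1, 3: 7, 4: 0, 5: 3, 6: 9, 7: 2}
P = {a: a % 3 for a in I}  # placeholder path coordinates
E = {a: 10 + a for a in I}
m1 = {a: 20 + a for a in I}

reduced = compress_cn_message(I, P, E, L=3)
E_star = expand_extrinsic(reduced, m1)
```

Only $L$ triples travel from the CN to the VN instead of $q - 1$. Even so, $E^*$ still covers all $q - 1$ symbols, which is the property stated in Section 2.5.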
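The next sketch illustrates the normal-to-delta-domain transformation described in Section 3. It assumes the definition used in the trellis-based EMS/min-max literature, $\Delta Q_{m,n}(\eta) = Q_{m,n}(\eta + z_n)$, where $+$ is GF($2^p$) addition (bitwise XOR). This section does not state the formula explicitly. The second function builds the same permutation as $\log_2 q$ stages of $q$ two-input multiplexers, which matches the $q \times \log_2 q$ multiplexer count quoted in the text. The function names are hypothetical.

```python
def to_delta(Q, z):
    """Direct form: dQ[eta] = Q[eta + z] in GF(2^p), i.e. Q[eta XOR z]."""
    return [Q[eta ^ z] for eta in range(len(Q))]


def to_delta_network(Q, z):
    """Same permutation built from log2(q) stages of q 2:1 multiplexers.

    Stage k uses bit k of z as the select line: when the bit is 1, output eta
    takes input eta XOR 2^k; otherwise it passes input eta straight through.
    Returns the permuted vector and the number of multiplexers used.
    """
    q = len(Q)
    if q < 2 or q & (q - 1):
        raise ValueError("q must be a power of two")
    p = q.bit_length() - 1
    out, mux_count = list(Q), 0
    for k in range(p):
        sel = (z >> k) & 1
        out = [out[eta ^ (sel << k)] for eta in range(q)]
        mux_count += q  # one 2:1 multiplexer per output of this stage
    return out, mux_count


Q = [10, 11, 12, 13, 14, 15, 16, 17]  # toy reliabilities for q = 8
delta, muxes = to_delta_network(Q, z=5)
```

Position 0 of the delta-domain vector holds the reliability of the hard-decision symbol $z_n$. Because XOR is its own inverse, applying the same network a second time returns the vector to the normal domain.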
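The syndrome and hard-decision updates in Section 3 reduce to XOR operations, because addition in GF($2^p$) is bitwise XOR. The sketch below assumes $z^*_n = \beta + z_n$. Since every element is its own additive inverse, this equals the GF sum of the other $d_c - 1$ symbols. The paper's equation (4) is not reproduced here. The gate-count helper simply evaluates the expressions $w \times (d_c - 1)$ and $d_c \times w$ given in the text. All names are hypothetical.

```python
from functools import reduce
from operator import xor


def syndrome_beta(z):
    """beta: GF(2^p) sum of all d_c tentative hard-decision symbols (bitwise XOR)."""
    return reduce(xor, z, 0)


def new_hard_decisions(z):
    """Assumed update z*_n = beta + z_n, i.e. the sum of all symbols except z_n."""
    beta = syndrome_beta(z)
    return beta, [beta ^ zn for zn in z]


def xor_gate_counts(dc, w):
    """XOR-gate estimates quoted in the text: d_c - 1 adders for beta, d_c adders for z*."""
    return {"beta": w * (dc - 1), "z_star": dc * w}


z = [3, 5, 6, 1]  # toy hard decisions in GF(8), d_c = 4
beta, z_star = new_hard_decisions(z)
```

Sharing $\beta$ means each $z^*_n$ costs one adder instead of $d_c - 2$. This is why the text counts $d_c$ adders for $z^*$ rather than a full sum per output.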
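Section 3.1 remarks that belief propagation on the binary erasure channel reduces to iterative constraint satisfaction. A peeling decoder for a binary code illustrates this. It is a standard textbook procedure rather than the decoder proposed in this paper, and the (7,4) Hamming parity-check matrix serves only as a small example. Any check with exactly one erased bit determines that bit, and the process repeats until no check can make progress. The function name `peel_decode` is illustrative.

```python
def peel_decode(H, y):
    """Iterative erasure decoding on the BEC.

    H: binary parity-check matrix (list of 0/1 rows).
    y: received word with 0/1 for known bits and None for erasures.
    Returns the word with every recoverable erasure filled in.
    """
    x = list(y)
    progress = True
    while progress and None in x:
        progress = False
        for row in H:
            idx = [n for n, h in enumerate(row) if h]
            erased = [n for n in idx if x[n] is None]
            if len(erased) == 1:
                # Each check sums to 0 mod 2, so the single unknown bit
                # equals the XOR of the known bits in that check.
                x[erased[0]] = sum(x[n] for n in idx if x[n] is not None) % 2
                progress = True
    return x


H7 = [
    [1, 1, 0, 1, 1, 0, 0],
    [1, 0, 1, 1, 0, 1, 0],
    [0, 1, 1, 1, 0, 0, 1],
]
codeword = [1, 0, 1, 1, 0, 1, 0]
decoded = peel_decode(H7, [None, 0, None, 1, 0, 1, 0])
stuck = peel_decode(H7, [None, None, None, None, 0, 1, 0])
```

The second call stalls because every check touching the erased positions sees at least two erasures (a stopping set). This is the known limitation of this simple decoder.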

Fig. 9. Simulation result for eye diagram.

6. CONCLUSION AND FUTURE ENHANCEMENT

Low complexity is a prominent requirement of iterative decoders. We have proposed a design that meets this requirement by implementing the min-sum operator with an improved architecture. Complexity is reduced because both the number of registers and the memory utilization decrease. The NB-LDPC decoder is implemented in CMOS technology. The T-MM algorithm is used to reduce the complexity of the CN architecture, which in turn reduces the power consumption. In the future, LDPC codes can be used to reduce power consumption. The post-synthesis throughput of the other works is reduced by the same percentage.

REFERENCES

[1] F. Cai and X. Zhang, “Relaxed min-max decoder architectures for nonbinary low-density parity-check codes,” IEEE Trans. Very Large Scale Integr. (VLSI) Syst., vol. 21, no. 11, pp. 2010–2023, Nov. 2013.

[2] J. O. Lacruz, F. García-Herrero, M. J. Canet, and J. Valls, “Reduced-complexity non-binary LDPC decoder for high-order Galois fields based on trellis min-max algorithm,” IEEE Trans. Very Large Scale Integr. (VLSI) Syst., 2016.

[3] J. O. Lacruz, F. García-Herrero, D. Declercq, and J. Valls, “Simplified trellis min-max decoder architecture for nonbinary low-density parity-check codes,” IEEE Trans. Very Large Scale Integr. (VLSI) Syst., vol. 23, no. 9, pp. 1783–1792, Sep. 2015.

[4] E. Li, D. Declercq, and K. Gunnam, “Trellis-based extended min-sum algorithm for non-binary LDPC codes and its hardware structure,” IEEE Trans. Commun., vol. 61, no. 7, pp. 2600–2611, Jul. 2013.

[5] J. Lin, J. Sha, Z. Wang, and L. Li, “Efficient decoder design for nonbinary quasicyclic LDPC codes,” IEEE Trans. Circuits Syst. I, Reg. Papers, vol. 57, no. 5, pp. 1071–1082, May 2010.

[6] M. M. Mansour and N. R. Shanbhag, “High-throughput LDPC decoders,” IEEE Trans. Very Large Scale Integr. (VLSI) Syst., vol. 11, no. 6, pp. 976–996, Dec. 2003.

[7] V. Savin, “Min-max decoding for non-binary LDPC codes,” in Proc. IEEE Int. Symp. Inf. Theory, Jul. 2008, pp. 960–964.

[8] Y.-L. Ueng, K.-H. Liao, H.-C. Chou, and C.-J. Yang, “A high-throughput trellis-based layered decoding architecture for non-binary LDPC codes using max-log-QSPA,” IEEE Trans. Signal Process., vol. 61, no. 11, pp. 2940–2951, Jun. 2013.

[9] H. Wymeersch, H. Steendam, and M. Moeneclaey, “Log-domain decoding of LDPC codes over GF(q),” in Proc. IEEE Int. Conf. Commun., vol. 2, Jun. 2004, pp. 772–776.

[10] B. Zhou, J. Kang, S. Song, S. Lin, K. Abdel-Ghaffar, and M. Xu, “Construction of non-binary quasi-cyclic LDPC codes by arrays and array dispersions,” IEEE Trans. Commun., vol. 57, no. 6, pp. 1652–1662, Jun. 2009.