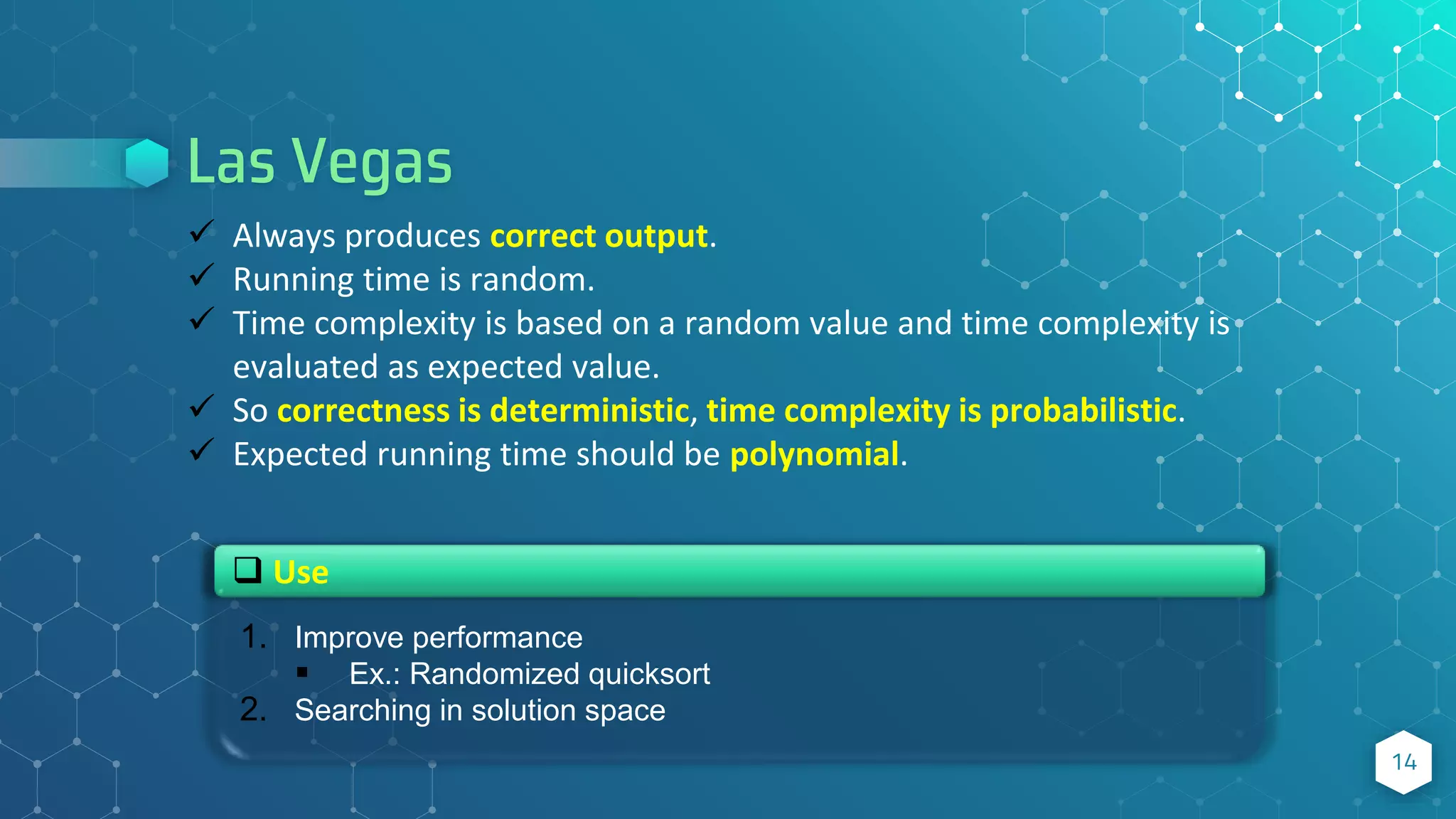

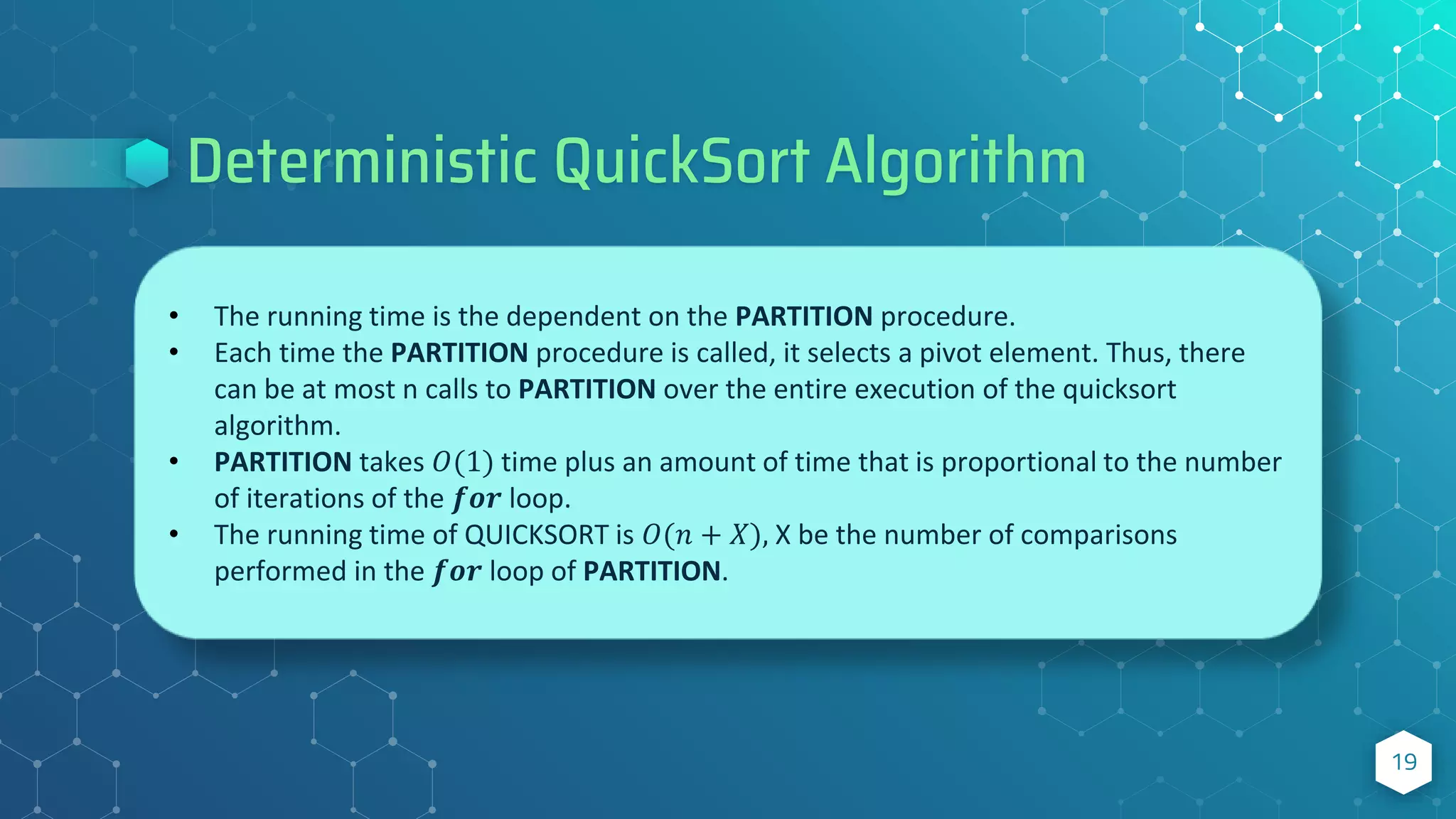

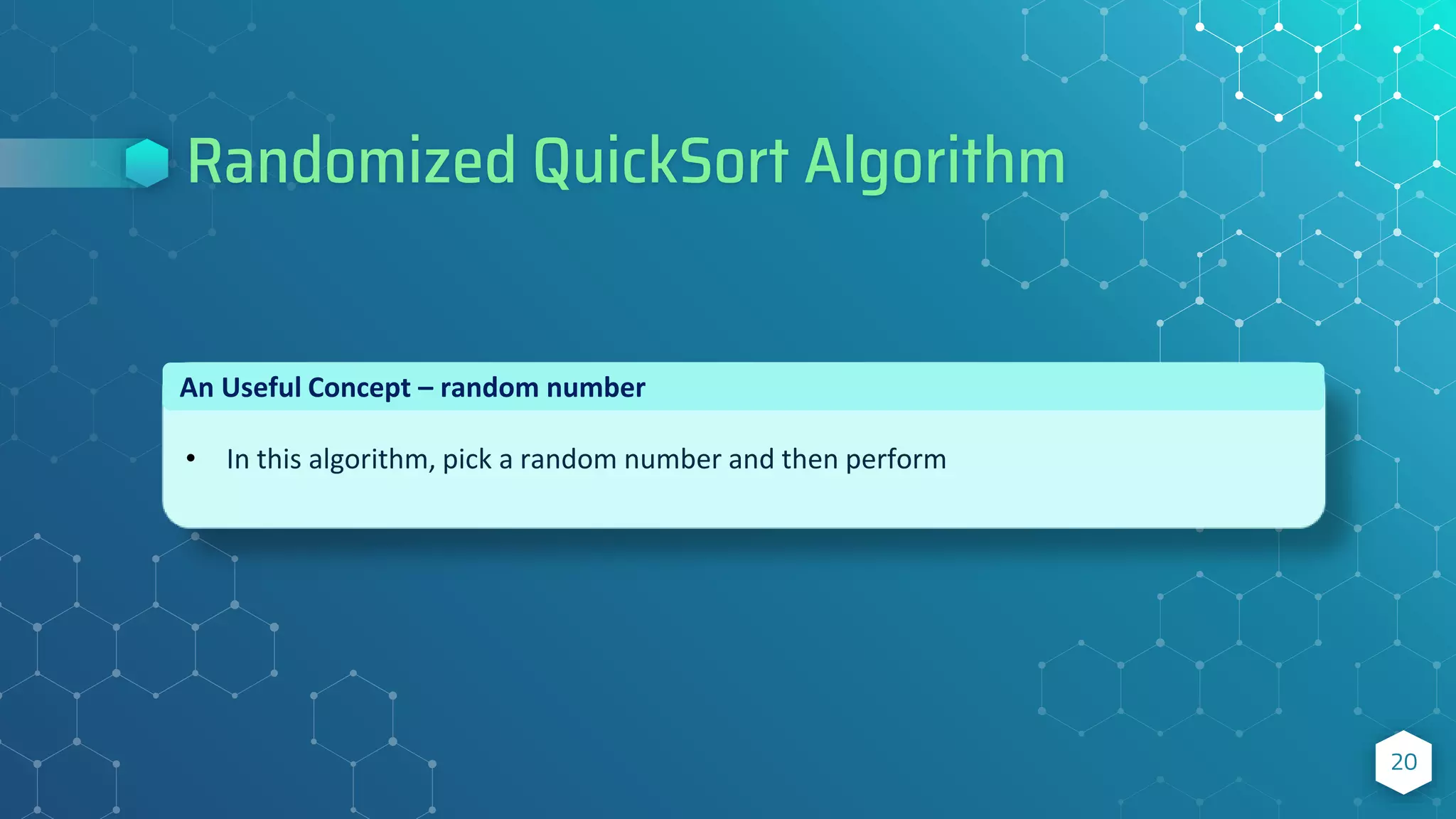

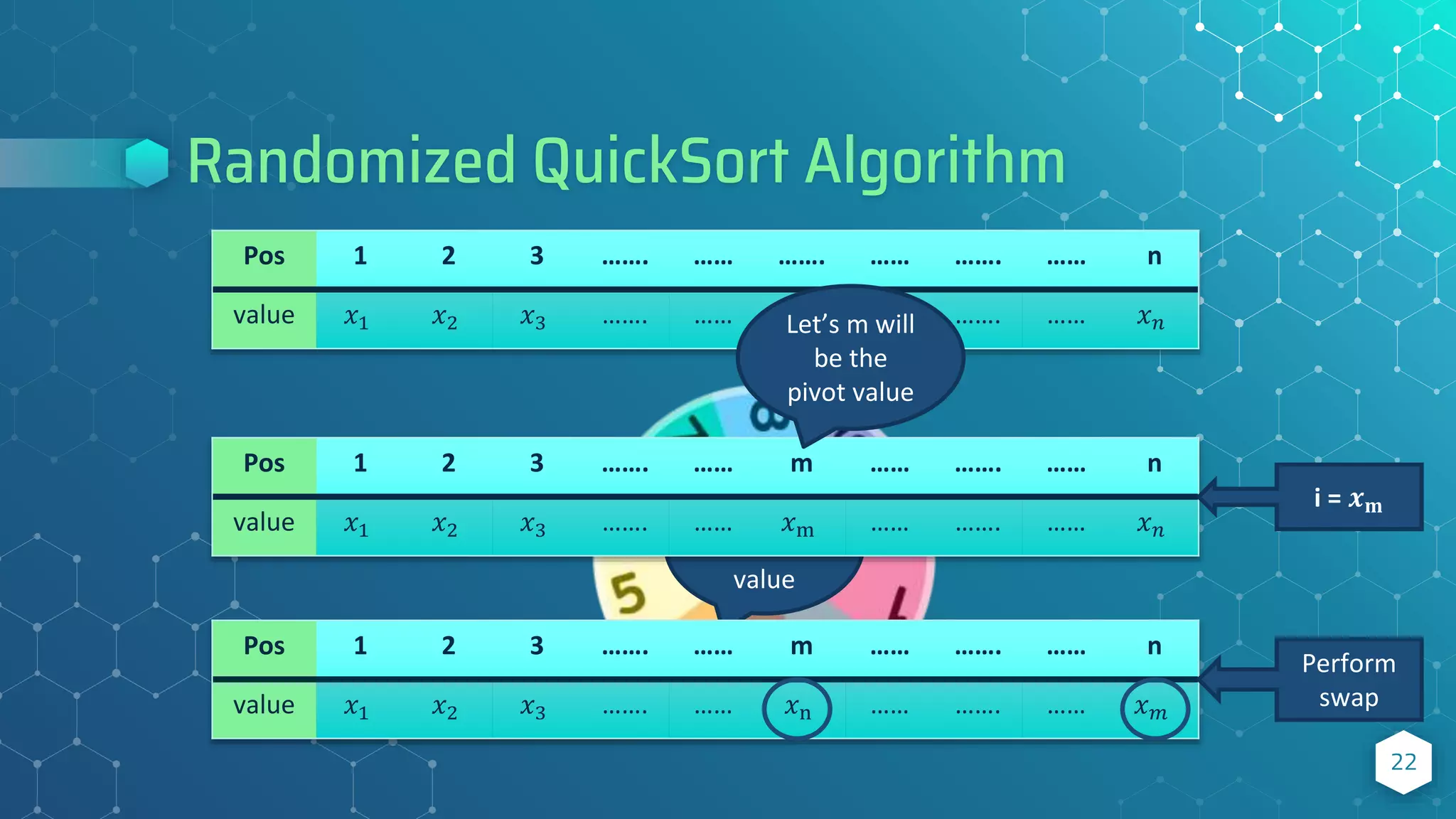

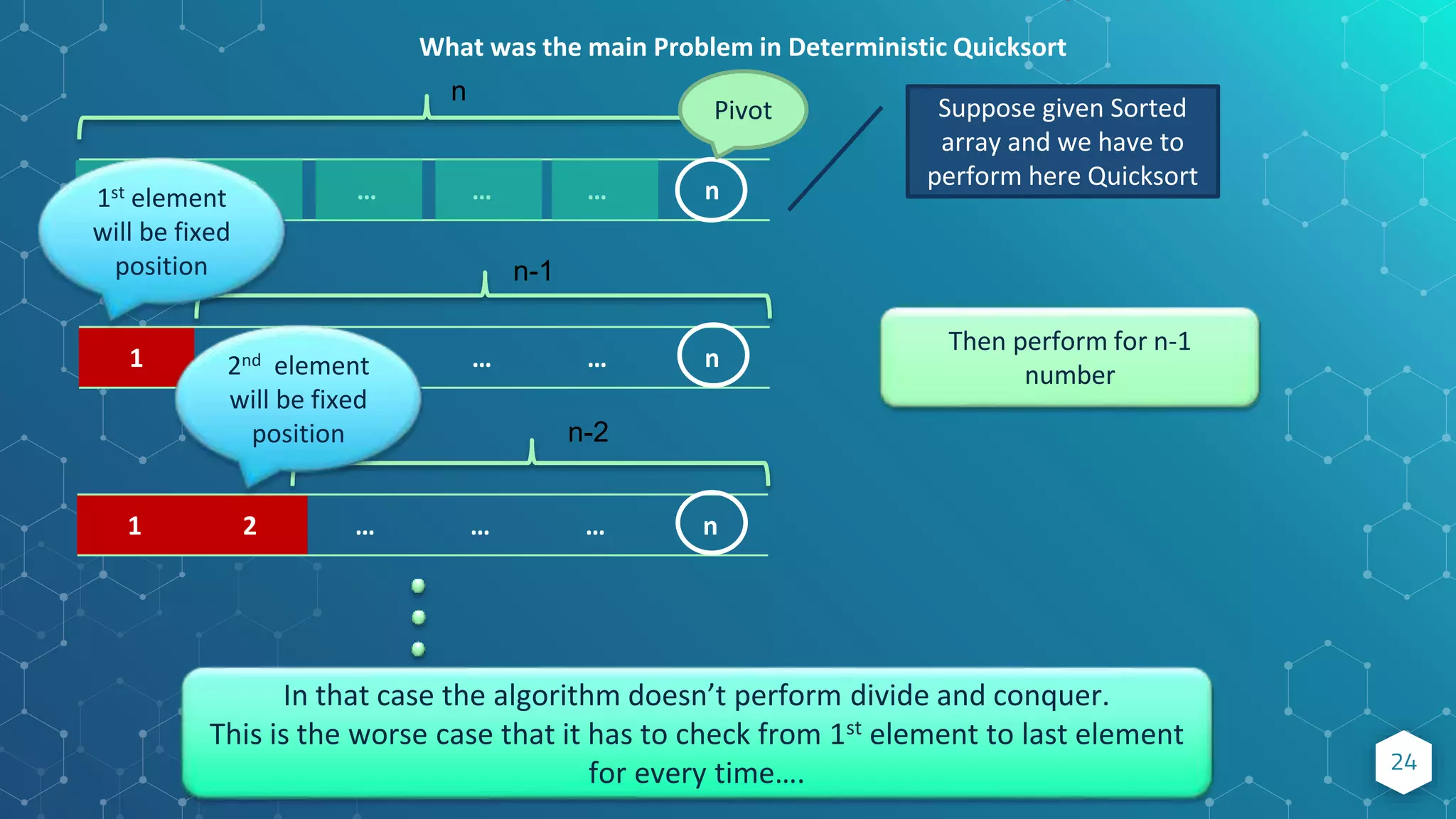

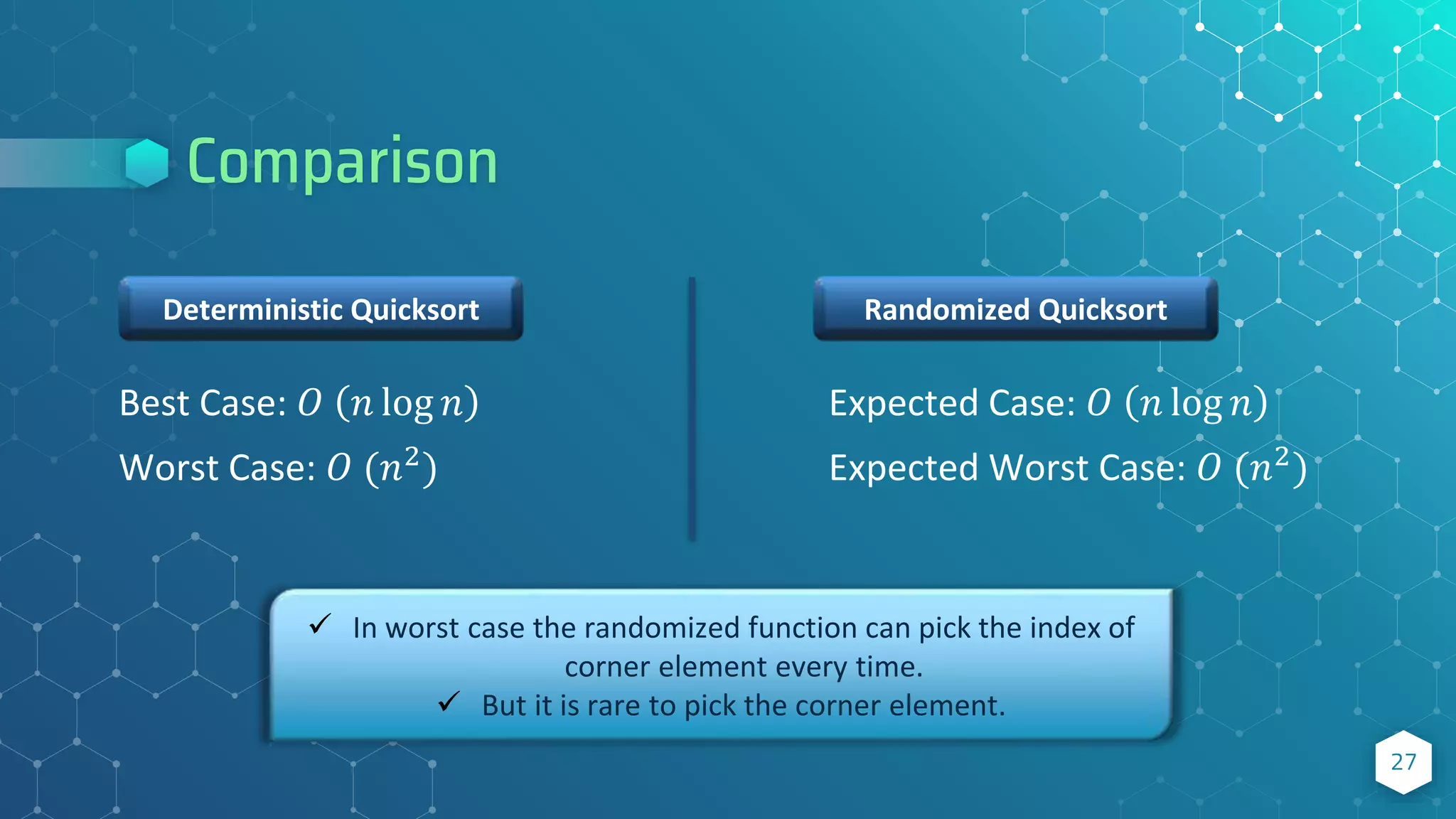

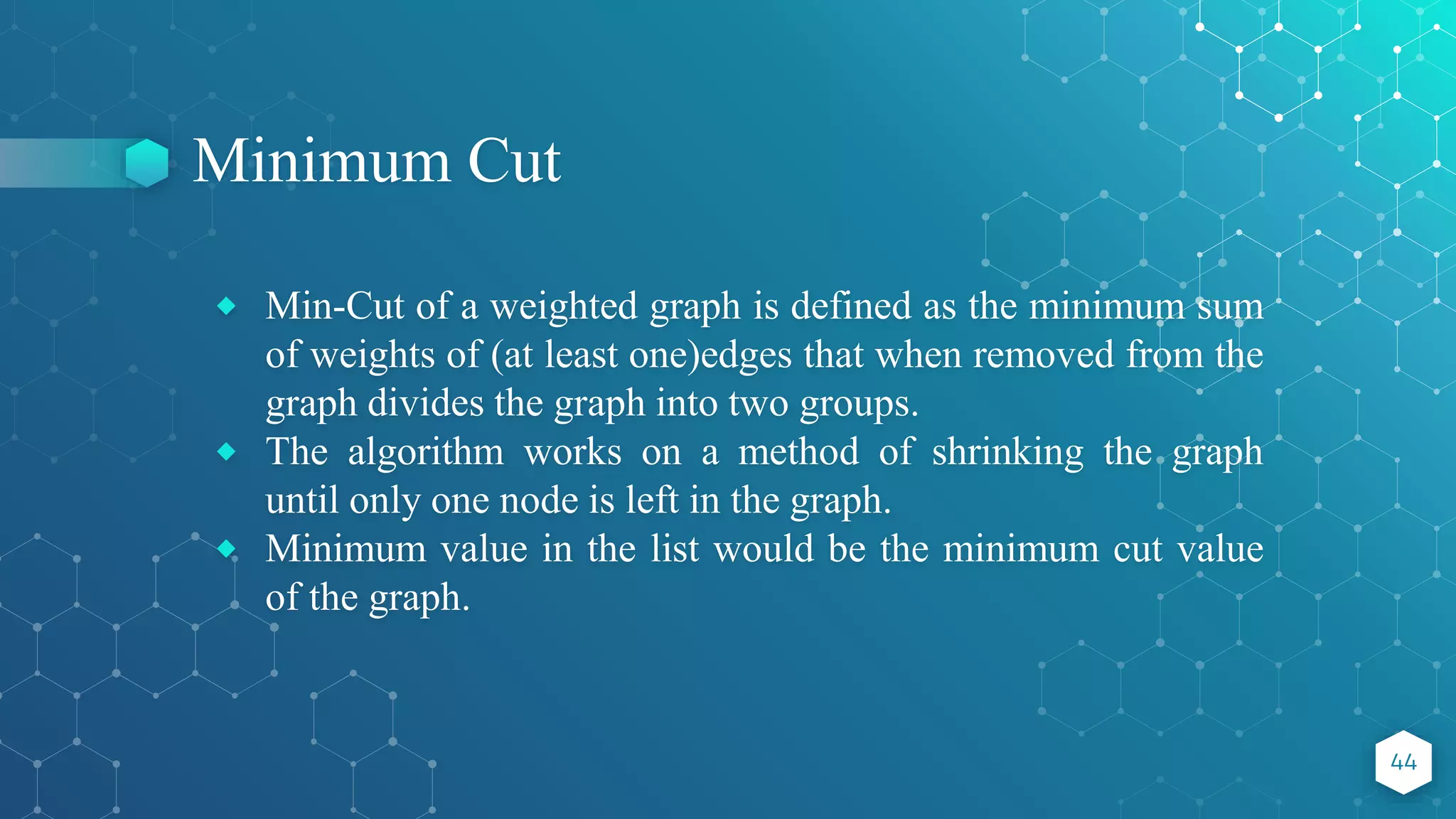

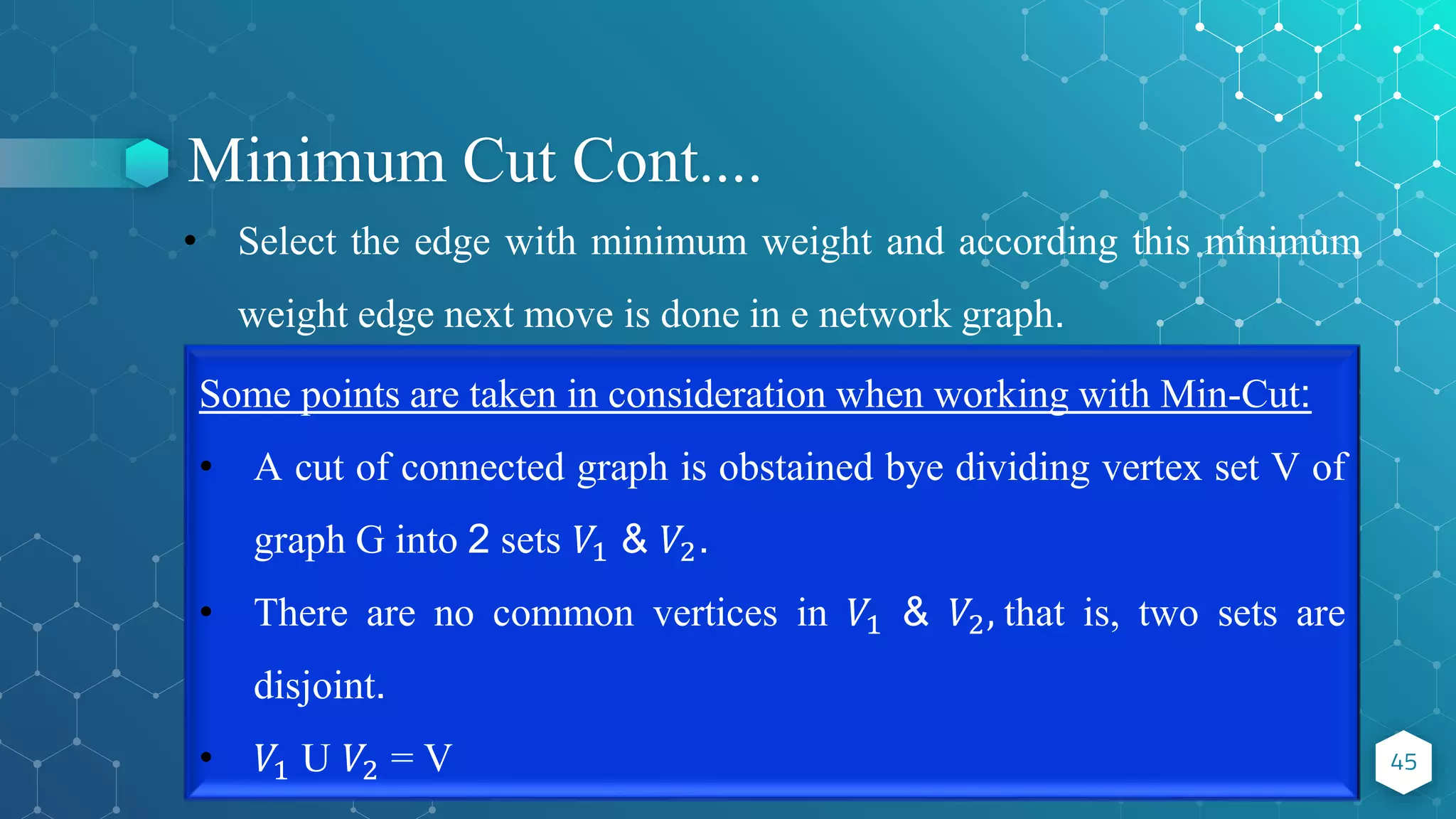

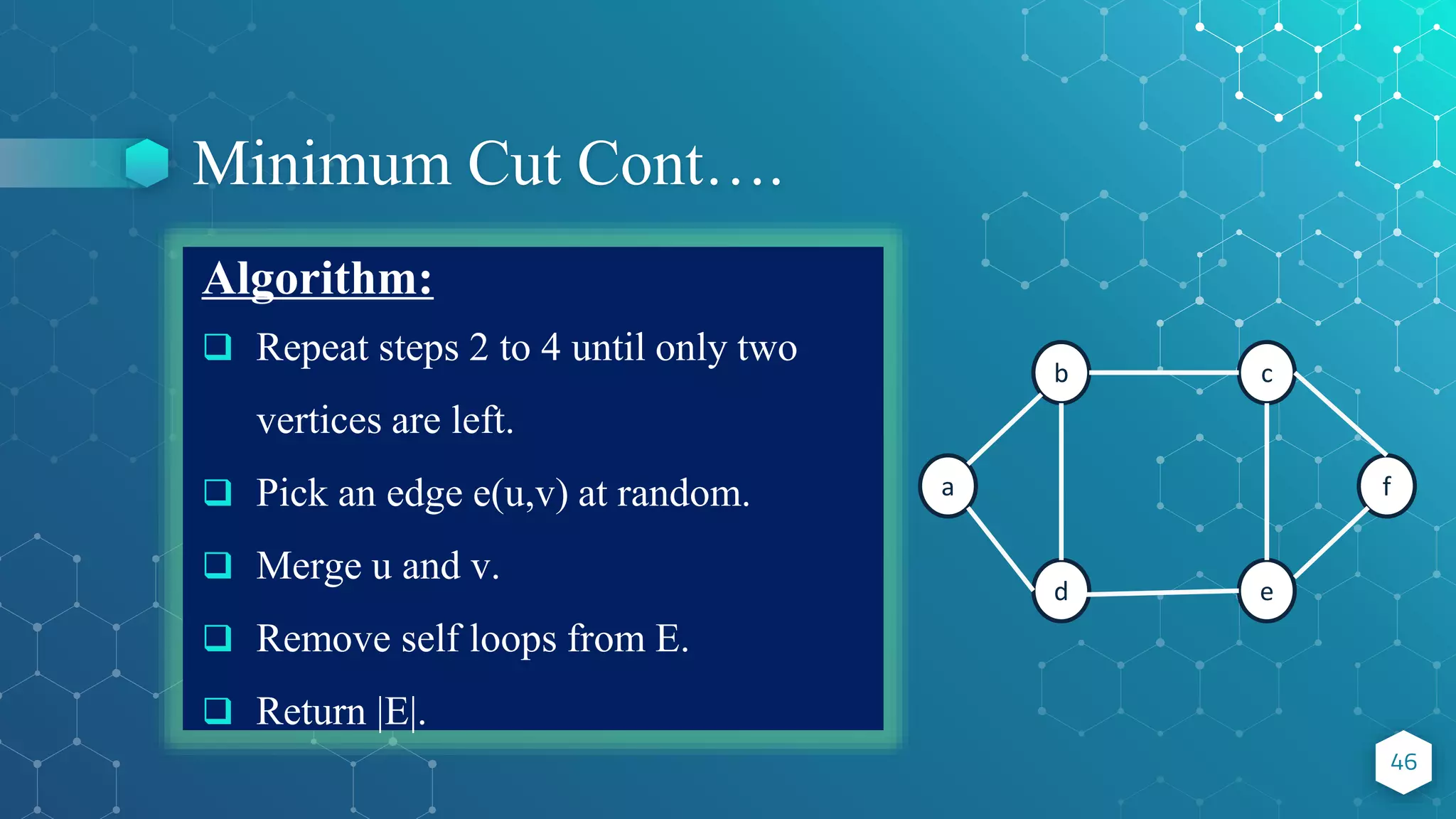

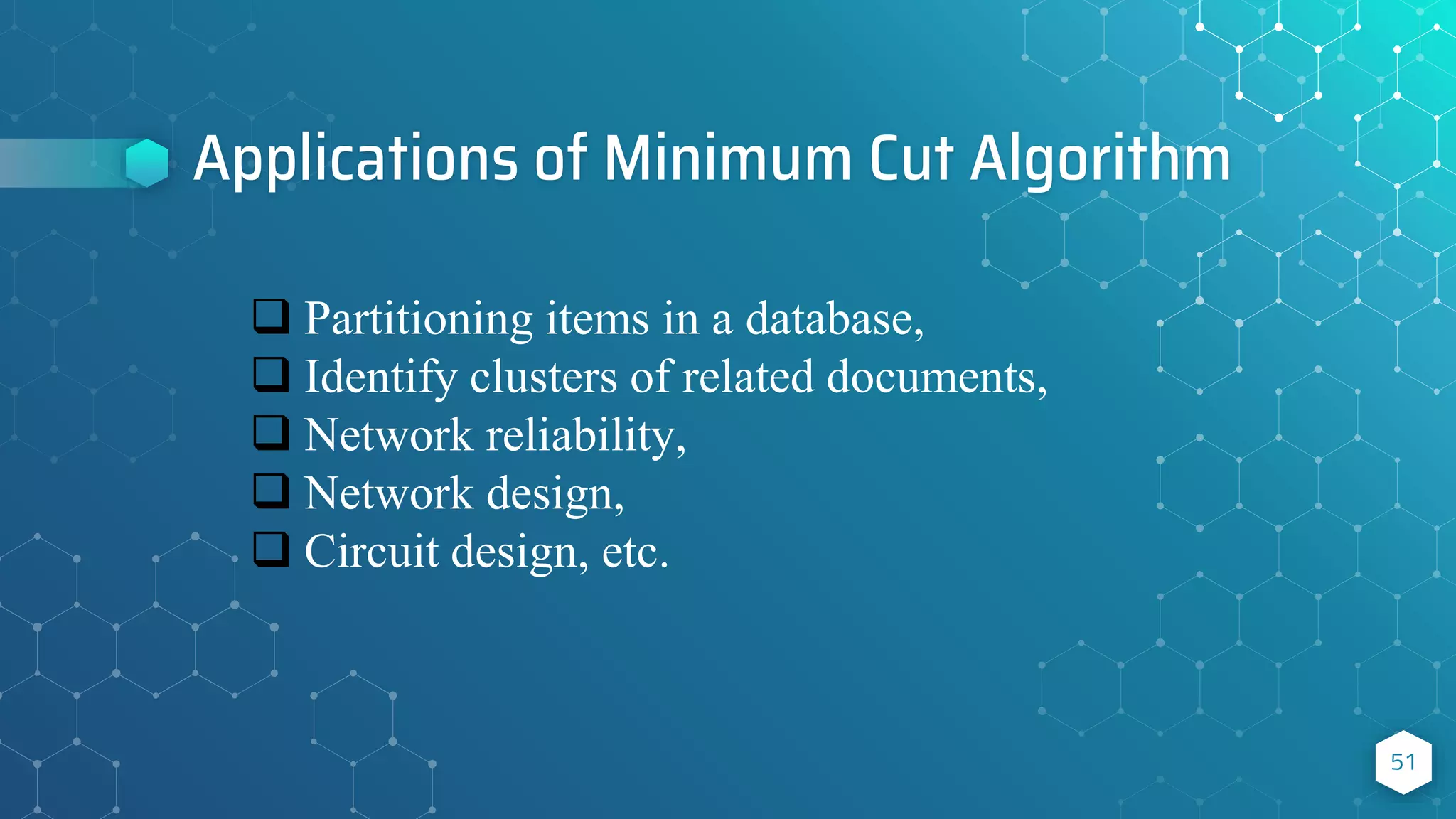

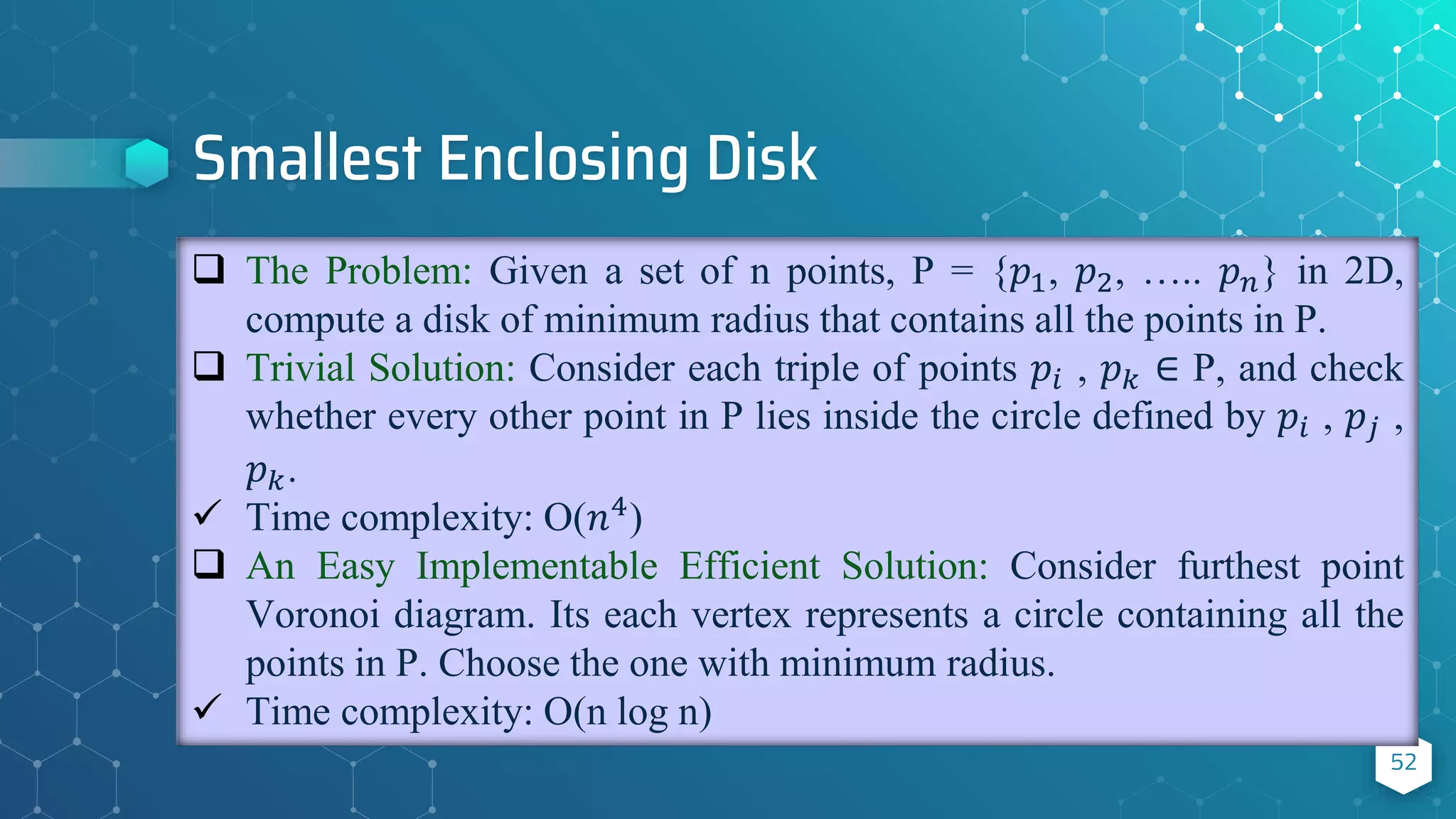

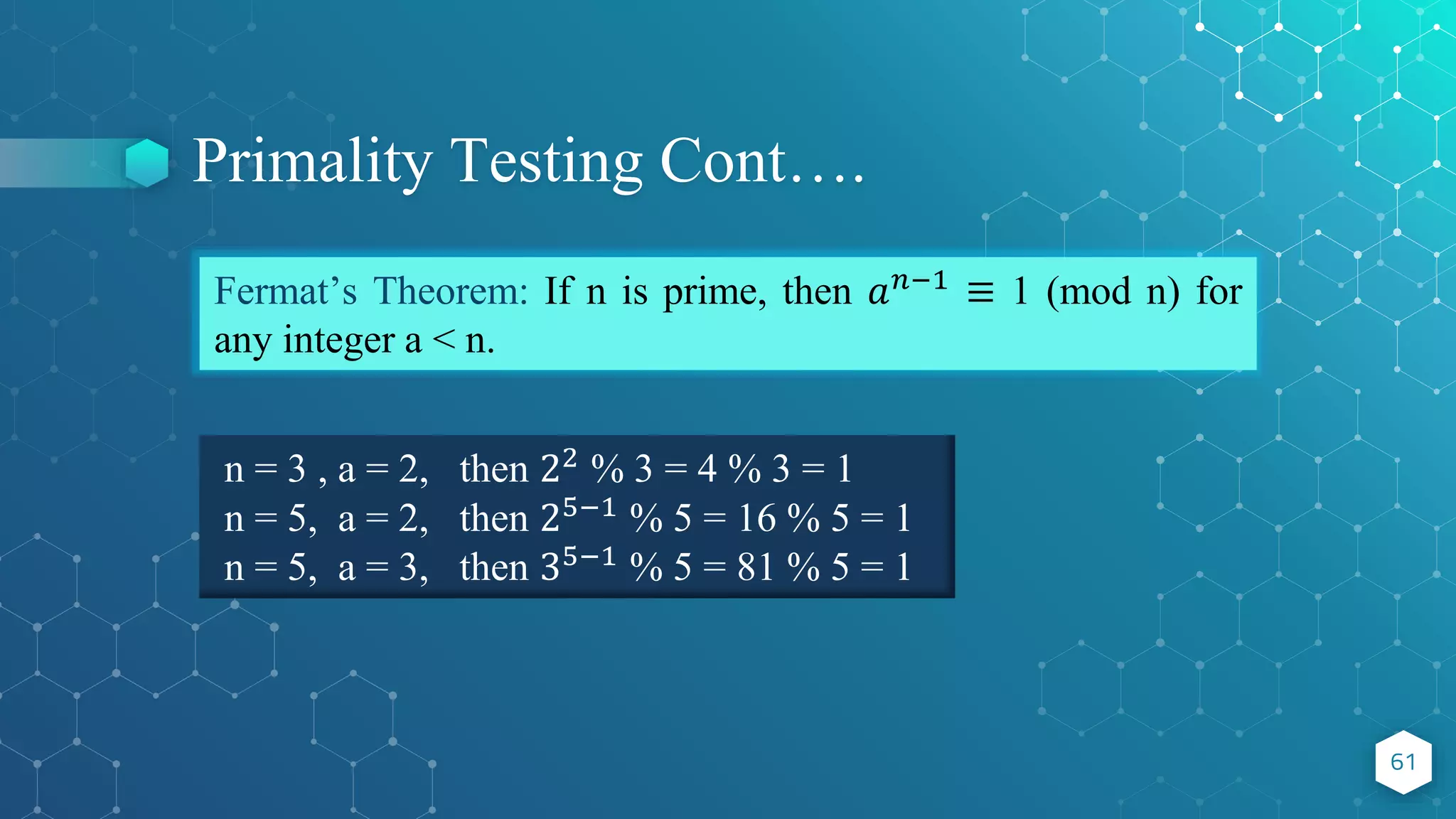

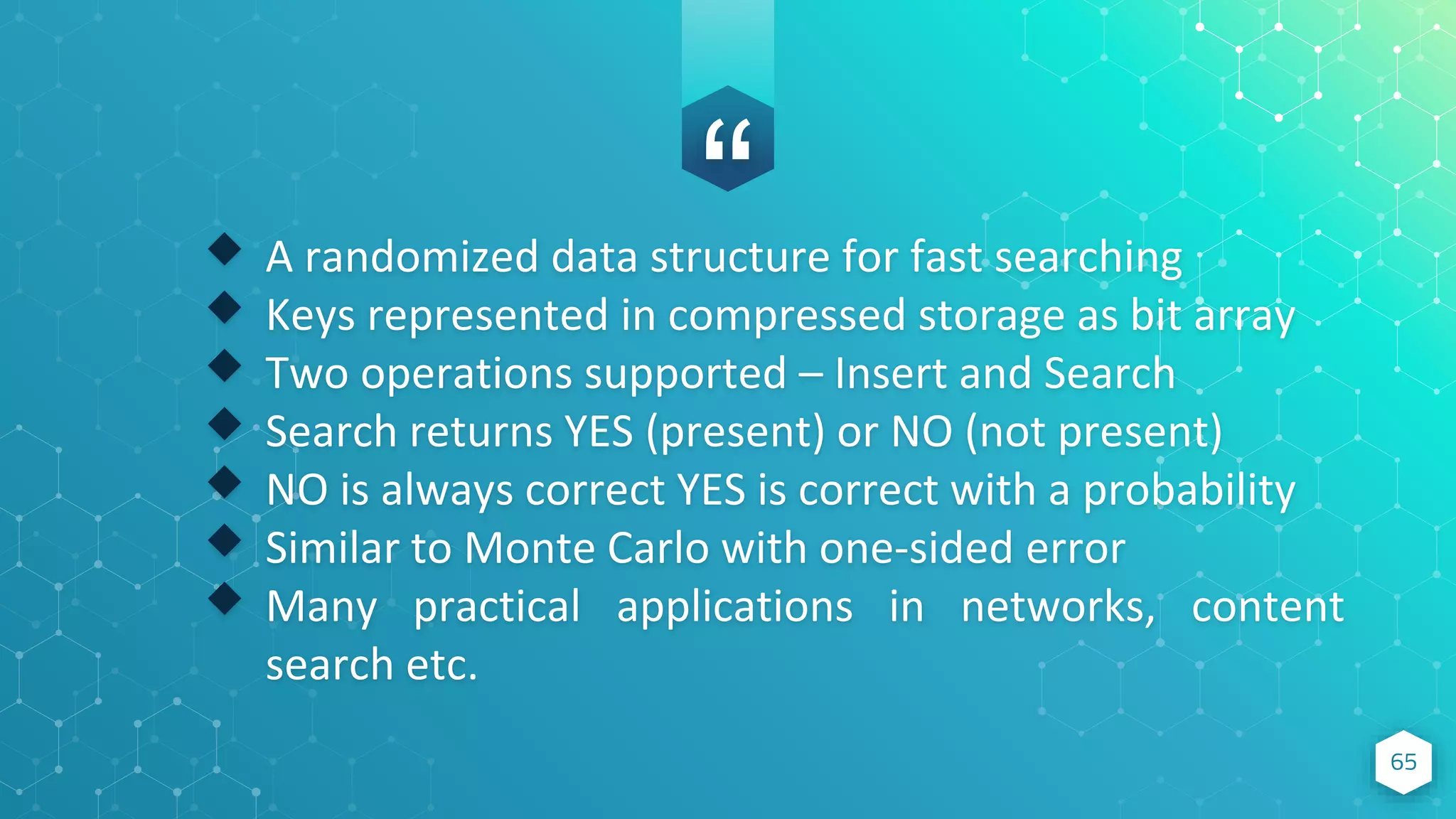

This document discusses randomized algorithms. It compares deterministic and non-deterministic algorithms and outlines the two main types of randomized algorithms, Las Vegas and Monte Carlo. It highlights the efficiency and performance advantages of randomization through examples such as randomized quicksort and the minimum cut problem, and it covers the applications and theoretical foundations of these algorithms.

![Divide and Conquer. The design of Quicksort is based on the divide-and-conquer paradigm. Divide: Partition the array A[p..r] into two subarrays A[p..q-1] and A[q+1..r] such that A[x] <= A[q] for all x in [p..q-1] and A[x] > A[q] for all x in [q+1..r]. Conquer: Recursively sort A[p..q-1] and A[q+1..r]. Combine: nothing to do here.](https://image.slidesharecdn.com/randomizedalgorithm-211022054611/75/Randomized-Algorithm-Advanced-Algorithm-16-2048.jpg)

![Deterministic QuickSort Algorithm. PARTITION(A, p, r): x := A[r]; i := p-1; for j = p to r-1 { if A[j] <= x then { i := i+1; swap(A[i], A[j]); } } swap(A[i+1], A[r]); return i+1;](https://image.slidesharecdn.com/randomizedalgorithm-211022054611/75/Randomized-Algorithm-Advanced-Algorithm-18-2048.jpg)

![Randomized QuickSort Algorithm. Randomized-Quicksort(A, p, r): if p < r then { q := Randomized-Partition(A, p, r); Randomized-Quicksort(A, p, q-1); Randomized-Quicksort(A, q+1, r); } Randomized-Partition(A, p, r): i := Random(p, r); swap(A[i], A[r]); return Partition(A, p, r); Almost the same as Partition in deterministic QuickSort, but now the pivot is not the rightmost/leftmost element; it is an element of A[p..r] chosen uniformly at random.](https://image.slidesharecdn.com/randomizedalgorithm-211022054611/75/Randomized-Algorithm-Advanced-Algorithm-21-2048.jpg)
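The pseudocode on the two slides above can be sketched in Python as follows. This is a minimal illustration, not the author's implementation: it uses 0-based indices, and the optional `rng` parameter is an assumption added here so that a seeded generator can be passed in for reproducible runs.

```python
import random


def partition(a, p, r):
    """Lomuto partition of a[p..r] around the pivot a[r]; returns the pivot's final index."""
    x = a[r]
    i = p - 1
    for j in range(p, r):
        if a[j] <= x:
            i += 1
            a[i], a[j] = a[j], a[i]
    a[i + 1], a[r] = a[r], a[i + 1]
    return i + 1


def randomized_partition(a, p, r, rng=random):
    """Move a uniformly random element of a[p..r] to position r, then partition."""
    i = rng.randint(p, r)  # randint is inclusive at both ends
    a[i], a[r] = a[r], a[i]
    return partition(a, p, r)


def randomized_quicksort(a, p=0, r=None, rng=random):
    """Sort a[p..r] in place; with no bounds given, sort the whole list."""
    if r is None:
        r = len(a) - 1
    if p < r:
        q = randomized_partition(a, p, r, rng)
        randomized_quicksort(a, p, q - 1, rng)
        randomized_quicksort(a, q + 1, r, rng)
```

Because the pivot is drawn uniformly at random, no fixed input can force the worst case on every run; the output is always correctly sorted and only the running time varies, which is what makes this a Las Vegas algorithm.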

![What happens in the case of Randomized Quicksort: starting from the array 1 2 3 4 5 6 with bounds p and r, pick a random position i (here the element 4), perform Swap(A[i], A[r]) to get 1 2 3 6 5 4, and then run the Partition function with the pivot at position r.](https://image.slidesharecdn.com/randomizedalgorithm-211022054611/75/Randomized-Algorithm-Advanced-Algorithm-25-2048.jpg)

![What happens in the case of Randomized Quicksort (continued): Partition runs on 1 2 3 6 5 4 with pivot x = 4, starting from i = p-1. For the elements 1, 2 and 3 the test A[j] <= x succeeds and i advances; for 6 and 5 the answer to A[j] <= x is No. The final swap exchanges 6 and 4, giving 1 2 3 4 5 6.](https://image.slidesharecdn.com/randomizedalgorithm-211022054611/75/Randomized-Algorithm-Advanced-Algorithm-26-2048.jpg)

![Smallest Enclosing Circle. Applications: facility location problem (1-center problem). Best deterministic algorithm [Nimrod Megiddo, 1983]: O(n) time complexity, but complex, uses advanced geometry. Randomized Las Vegas algorithm [Emo Welzl, 1991]: expected O(n) time complexity, very simple, uses elementary geometry. The algorithm is recursive. Based on a linear programming algorithm of Raimund Seidel.](https://image.slidesharecdn.com/randomizedalgorithm-211022054611/75/Randomized-Algorithm-Advanced-Algorithm-31-2048.jpg)

![Algorithm. Input: an array of n points P[ ]. Output: the smallest distance between two points in the given array. P[ ] = {0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17}](https://image.slidesharecdn.com/randomizedalgorithm-211022054611/75/Randomized-Algorithm-Advanced-Algorithm-39-2048.jpg)

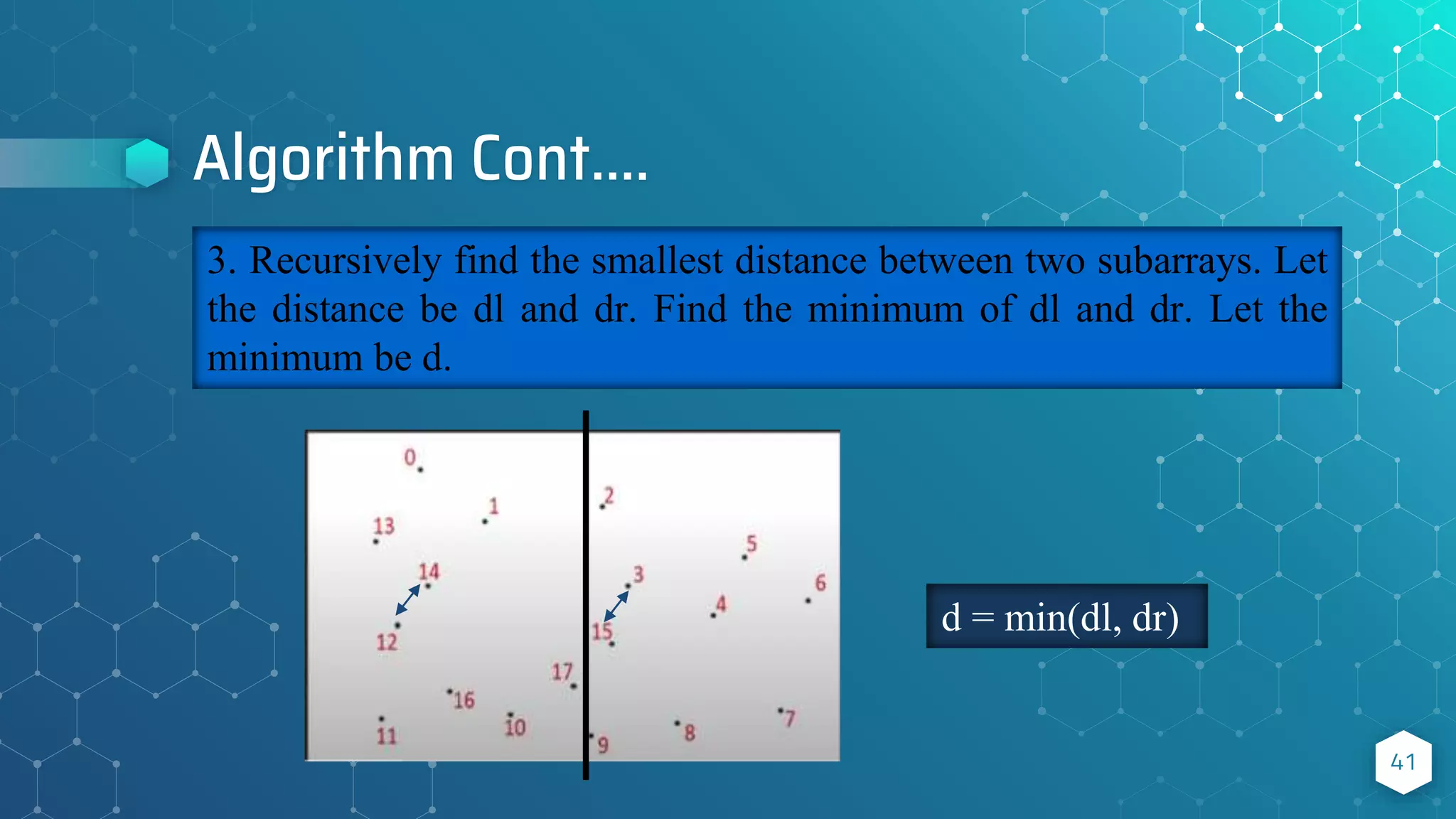

![Algorithm (continued). As a preprocessing step, first sort the array by x-coordinate: P[ ] = {13, 12, 11, 0, 14, 16, 1, 10, 17, 9, 2, 15, 3, 8, 4, 5, 7, 6}. 1. Find the middle point in the sorted array. We can take P[n/2] as the middle point. 2. Divide the array into two halves. The first subarray contains points from P[0] to P[n/2] and the second subarray contains points from P[n/2+1] to P[n-1]. PL = {13, 12, 11, 0, 14, 16, 1, 10, 17}, PR = {9, 2, 15, 3, 8, 4, 5, 7, 6}](https://image.slidesharecdn.com/randomizedalgorithm-211022054611/75/Randomized-Algorithm-Advanced-Algorithm-40-2048.jpg)

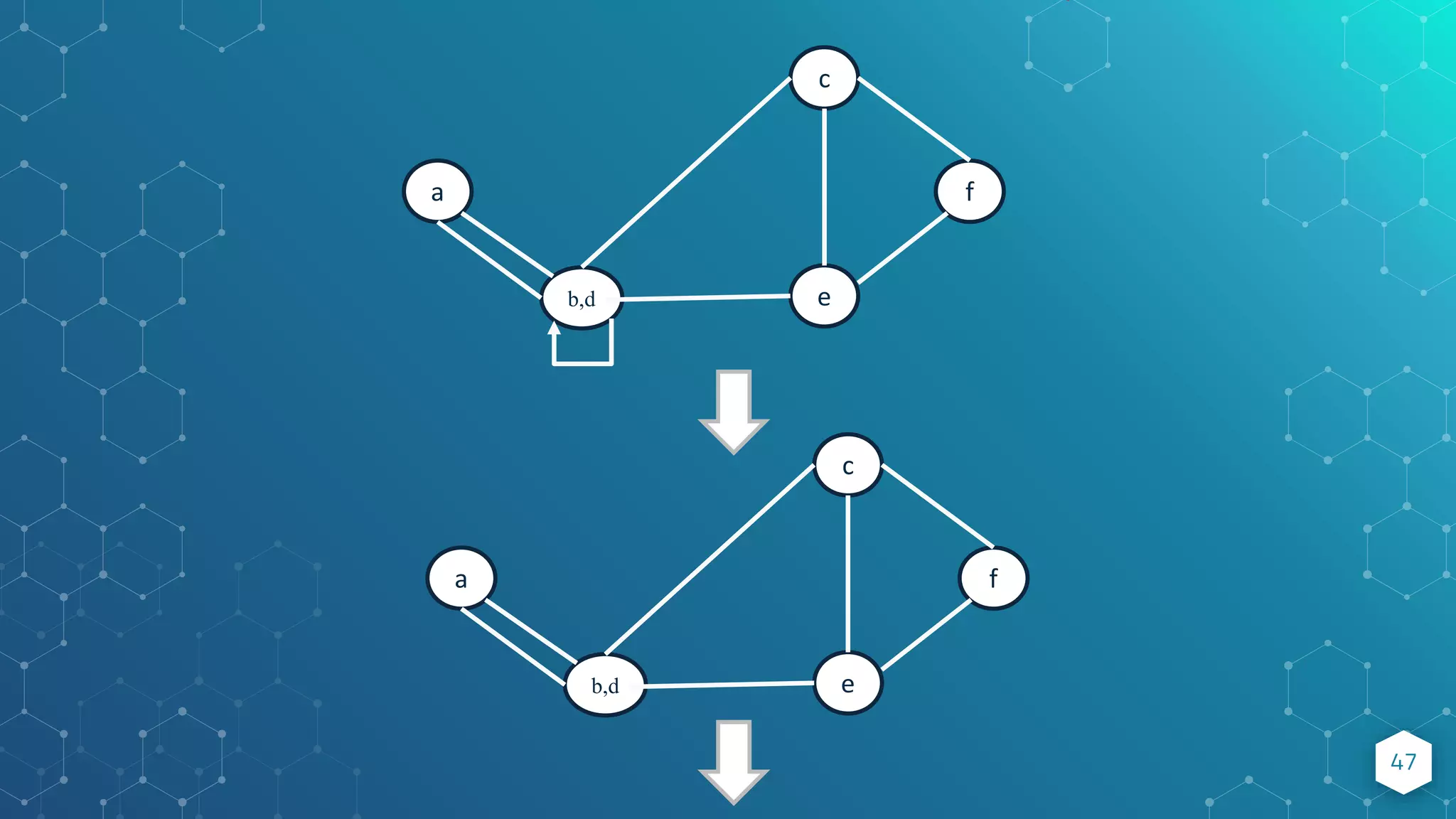

![Minimum Cut. Problem definition: Given a connected graph G=(V,E) on n vertices and m edges, compute the smallest set of edges whose removal makes G disconnected. Best deterministic algorithm [Stoer and Wagner, 1997]: O(mn) time complexity. Randomized Monte Carlo algorithm [Karger, 1993]: O(m log n) time complexity. Error probability: n^{-c} for any c that we desire.](https://image.slidesharecdn.com/randomizedalgorithm-211022054611/75/Randomized-Algorithm-Advanced-Algorithm-50-2048.jpg)
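To make the Monte Carlo idea concrete, here is a minimal Python sketch of the basic random edge-contraction approach. It is not the O(m log n) algorithm quoted on the slide; it is the simpler repeated-contraction scheme, written under the assumption that vertices are numbered `0..n-1` and edges are given as pairs. The function names and the `c` and `seed` parameters are illustrative.

```python
import math
import random


def contract_once(n, edges, rng):
    """One contraction run: merge along randomly ordered edges until two
    super-vertices remain, then count the edges crossing between them."""
    parent = list(range(n))

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    order = list(edges)
    rng.shuffle(order)
    components = n
    for u, v in order:
        if components <= 2:
            break
        ru, rv = find(u), find(v)
        if ru != rv:          # edges inside a super-vertex are self-loops; skip them
            parent[ru] = rv
            components -= 1
    return sum(1 for u, v in edges if find(u) != find(v))


def karger_min_cut(n, edges, c=2, seed=None):
    """Repeat the contraction run and keep the smallest cut found.

    A single run finds a minimum cut with probability at least 2 / (n (n - 1)),
    so about c * C(n, 2) * ln(n) runs push the error probability below n^{-c}.
    """
    rng = random.Random(seed)
    trials = max(1, math.ceil(c * n * (n - 1) / 2 * math.log(n)))
    return min(contract_once(n, edges, rng) for _ in range(trials))
```

Every run returns the size of some real cut, so the answer is never too small; the only possible error is reporting a cut that is larger than the minimum, and more trials make that less likely.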

![Primality Testing. Applications: RSA cryptosystem, algebraic algorithms. Best deterministic algorithm [Agrawal, Kayal and Saxena]: O(n^6) time complexity. Randomized Monte Carlo algorithm [Rabin, 1980]: O(k n^2) time complexity. Error probability: 2^{-k} for any k that we desire. For k = 50, this probability is about 10^{-15}.](https://image.slidesharecdn.com/randomizedalgorithm-211022054611/75/Randomized-Algorithm-Advanced-Algorithm-63-2048.jpg)
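A minimal Python sketch of a Miller-Rabin style test illustrates the trade-off on the slide above: `k` independent random rounds, with the error probability shrinking as `k` grows. The function name, the default `k = 50`, and the initial trial division by small primes are choices made here for illustration, not details taken from the slides.

```python
import random

_SMALL_PRIMES = (2, 3, 5, 7, 11, 13, 17, 19, 23, 29)


def is_probable_prime(n, k=50, rng=random):
    """Return False if n is definitely composite, True if n is probably prime."""
    if n < 2:
        return False
    for p in _SMALL_PRIMES:
        if n % p == 0:
            return n == p
    # Write n - 1 as d * 2^s with d odd.
    d, s = n - 1, 0
    while d % 2 == 0:
        d //= 2
        s += 1
    for _ in range(k):
        a = rng.randrange(2, n - 1)   # random base in [2, n - 2]
        x = pow(a, d, n)
        if x == 1 or x == n - 1:
            continue
        for _ in range(s - 1):
            x = pow(x, 2, n)
            if x == n - 1:
                break
        else:
            return False              # a is a witness: n is composite
    return True
```

A prime is never rejected, so the error is one-sided: a composite passes a single round with probability at most 1/4, which is consistent with the 2^{-k} bound quoted on the slide.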

![Bloom Filter Operation. A bit array A[0..m-1] of size m; initially all bits are set to 0. A set of k random and independent hash functions h_0, h_1, …, h_{k-1}, each producing a hash value between 0 and m-1. Insert key x: compute y_i = h_i(x) for i = 0, 1, …, k-1, then set A[y_i] = 1 for i = 0, 1, …, k-1. (y_0, y_1, y_2, …, y_{k-1}) is called the signature of x. Search for a key x: compute y_i = h_i(x) for i = 0, 1, …, k-1; answer YES if A[y_i] = 1 for all i, NO otherwise.](https://image.slidesharecdn.com/randomizedalgorithm-211022054611/75/Randomized-Algorithm-Advanced-Algorithm-66-2048.jpg)
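The insert and search operations described on the slide above can be sketched in Python. The slide assumes k random, independent hash functions; this sketch stands in for them by salting SHA-256 with the index `i`, which is an assumption made here for simplicity. The class and method names are illustrative.

```python
import hashlib


class BloomFilter:
    def __init__(self, m, k):
        self.m = m                    # number of bits in the array A[0..m-1]
        self.k = k                    # number of hash functions
        self.bits = bytearray(m)      # one byte per bit, all initially 0

    def signature(self, key):
        """Return (y_0, ..., y_{k-1}), where y_i stands in for h_i(key)."""
        ys = []
        for i in range(self.k):
            digest = hashlib.sha256(f"{i}:{key}".encode("utf-8")).digest()
            ys.append(int.from_bytes(digest[:8], "big") % self.m)
        return ys

    def insert(self, key):
        for y in self.signature(key):
            self.bits[y] = 1

    def search(self, key):
        """YES (True) if every signature bit is set, NO (False) otherwise."""
        return all(self.bits[y] for y in self.signature(key))
```

A NO answer is always correct, because an inserted key has all of its signature bits set. A YES answer can be a false positive when other keys happen to have set the same bits, which is the one-sided error a Bloom filter accepts in exchange for compact storage.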