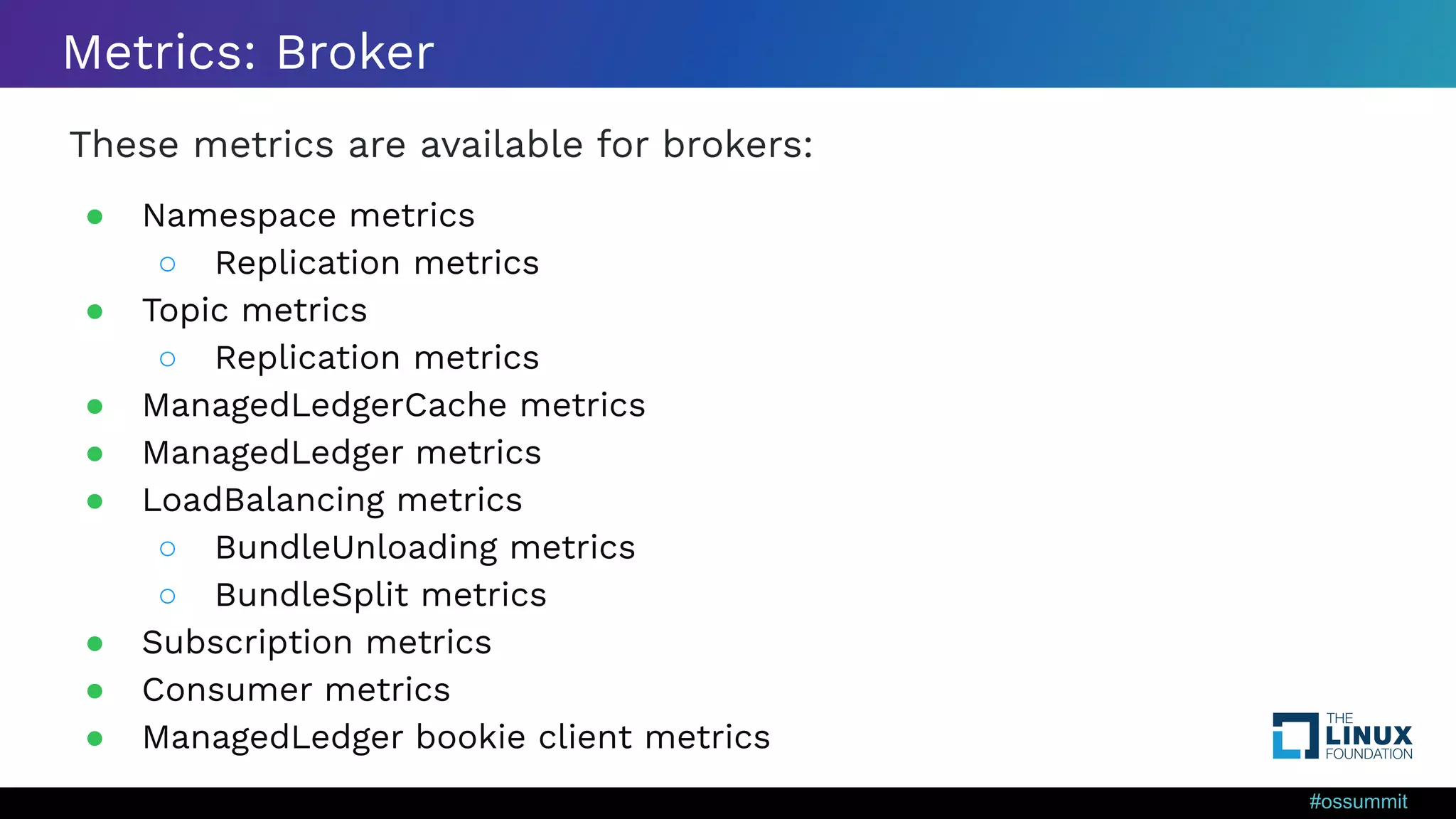

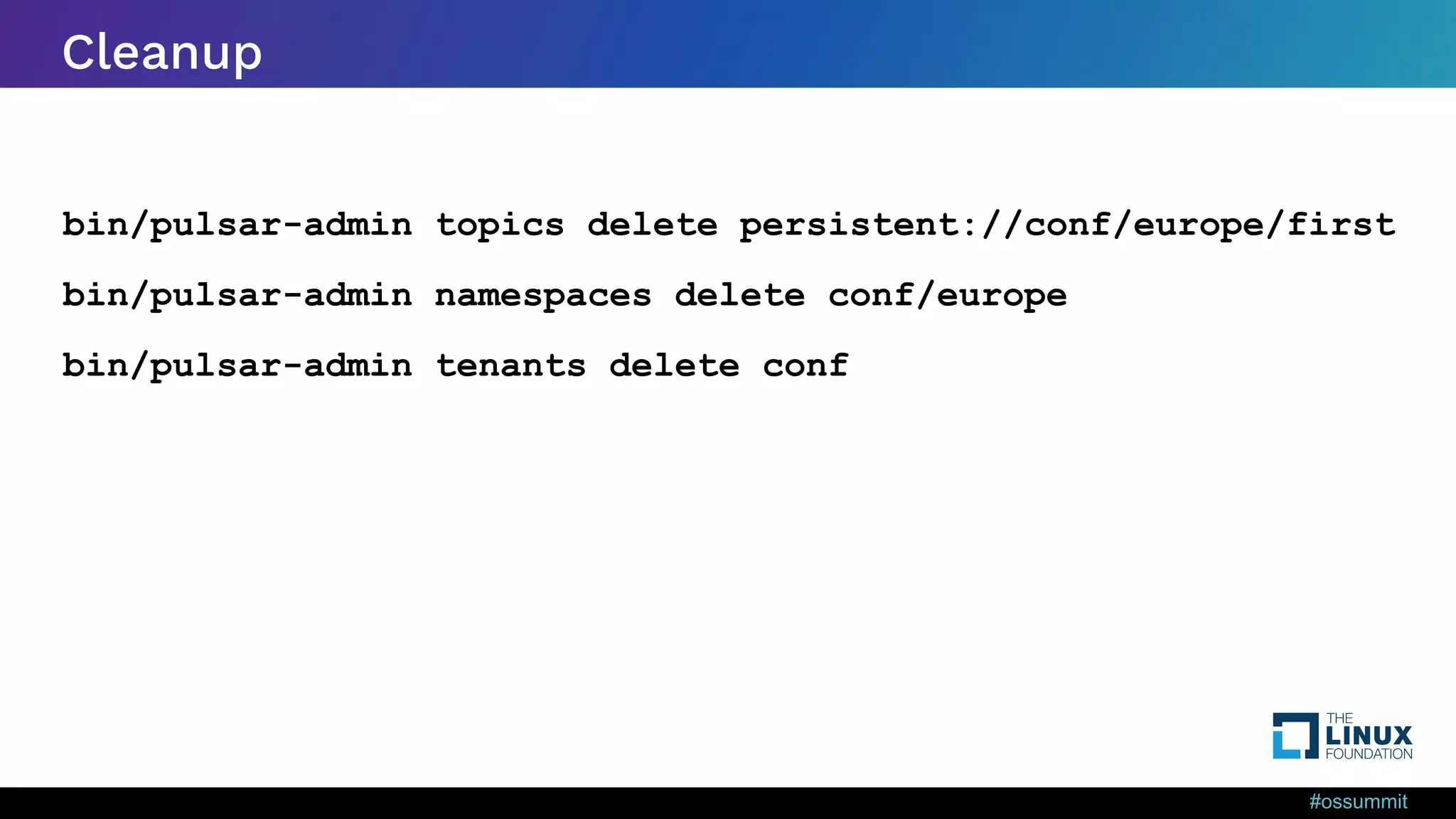

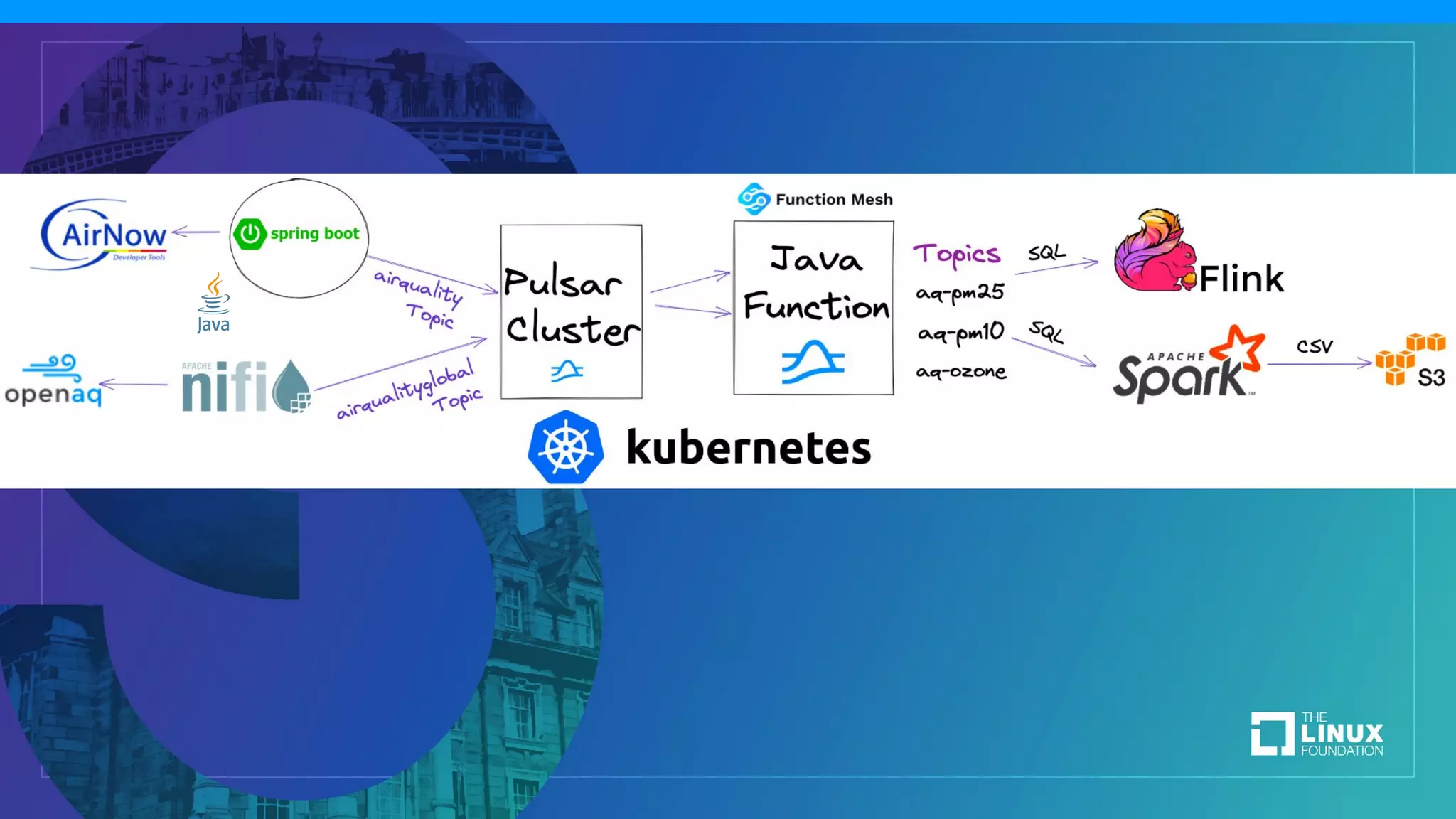

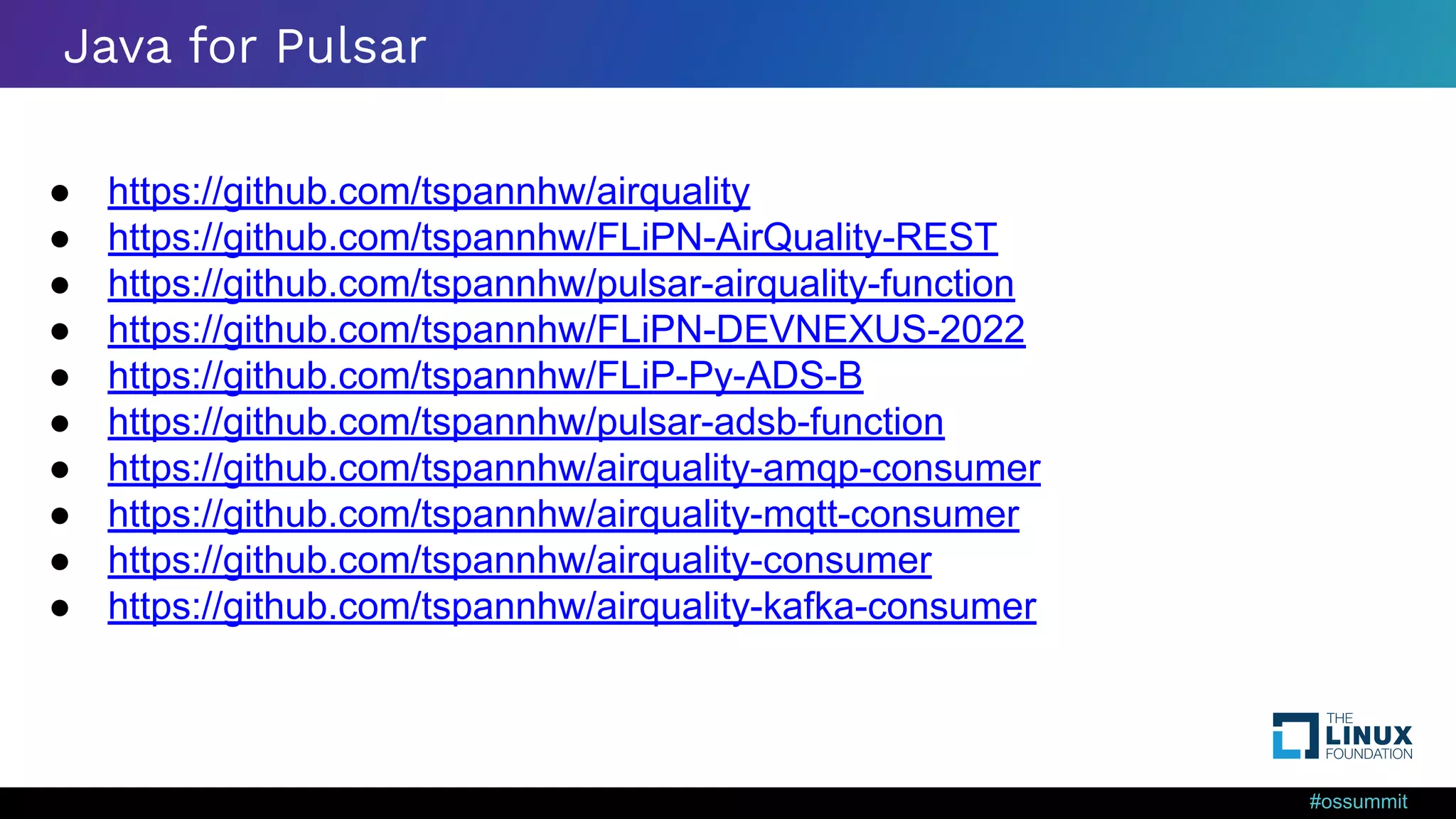

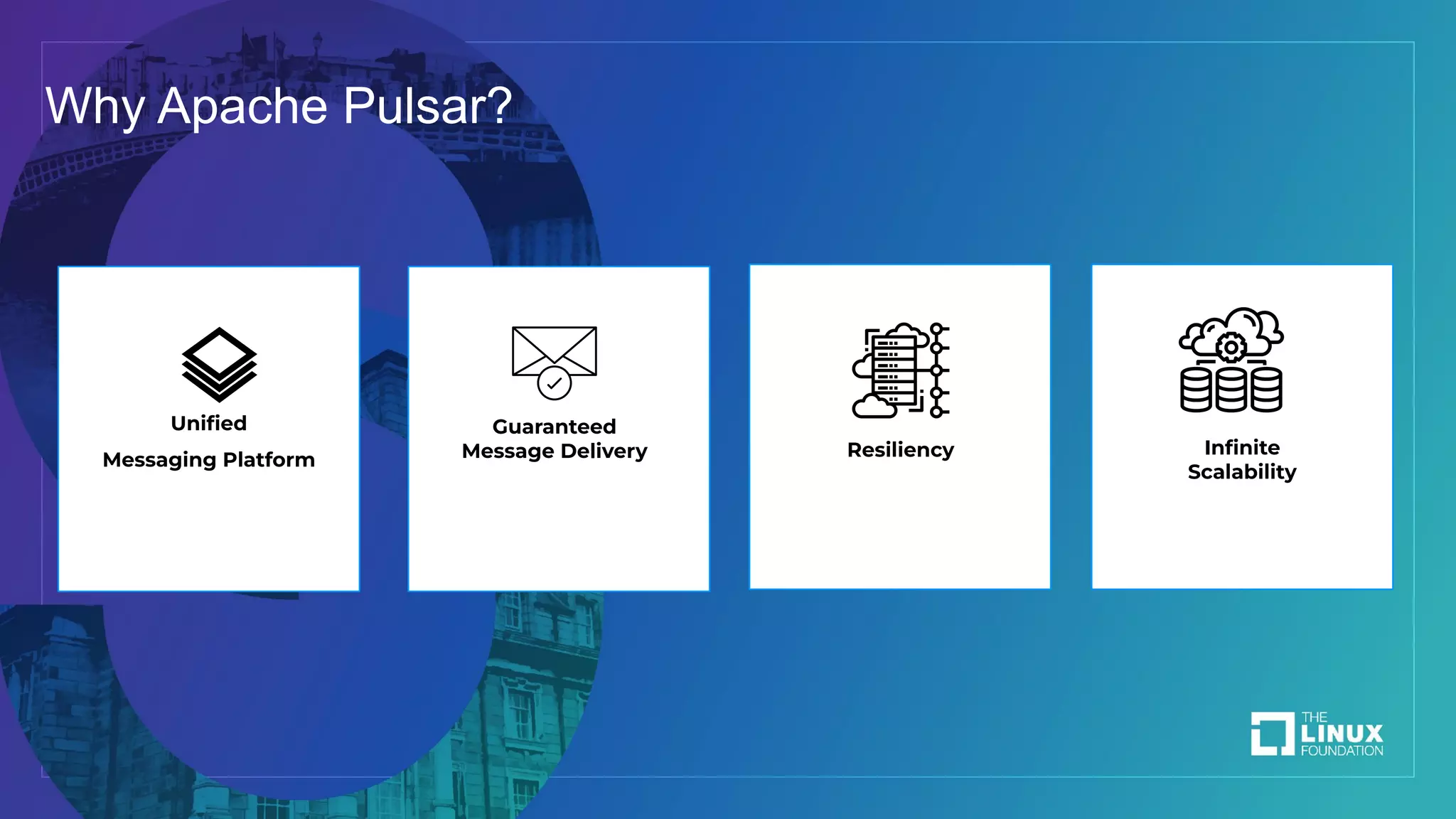

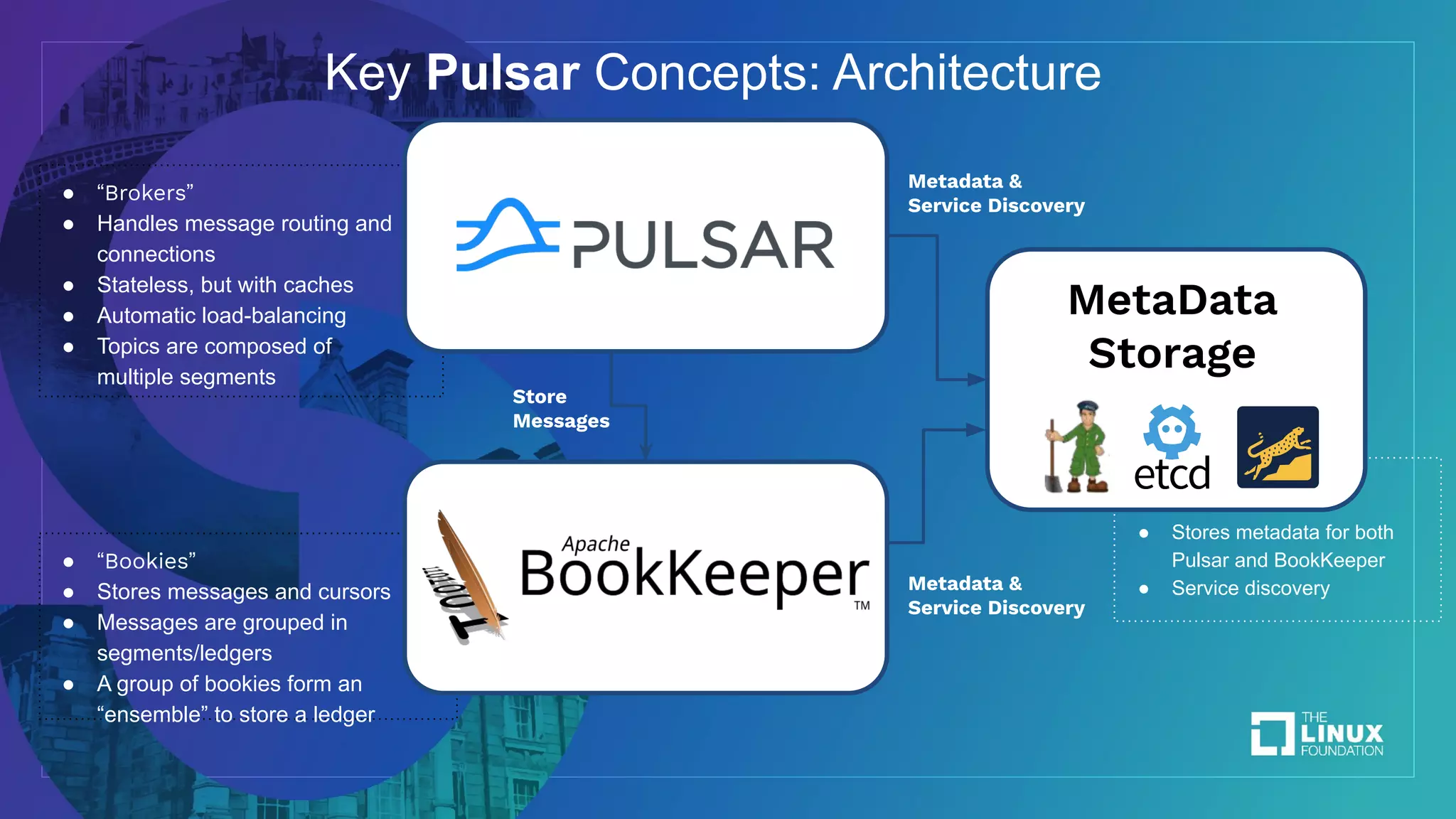

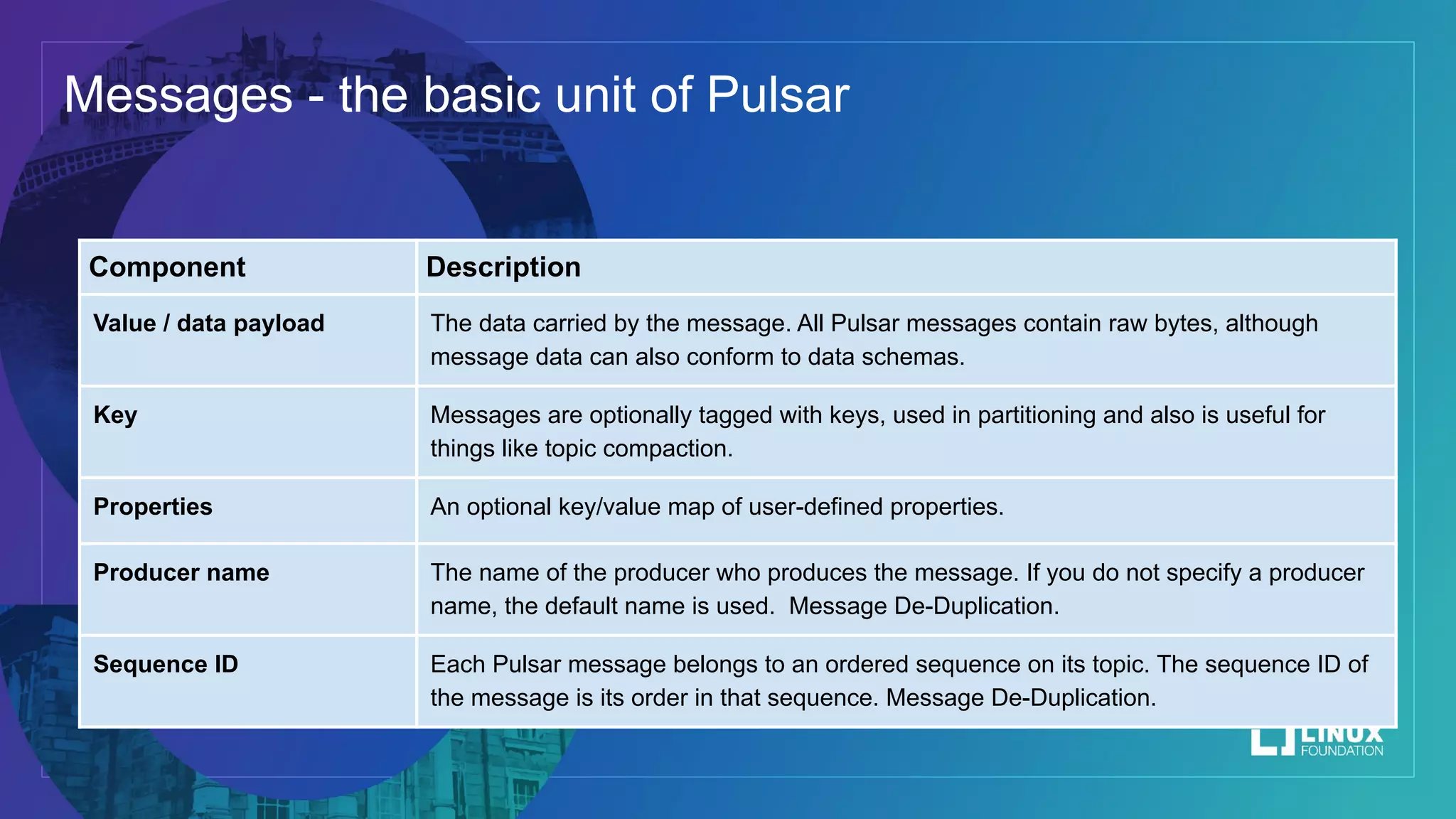

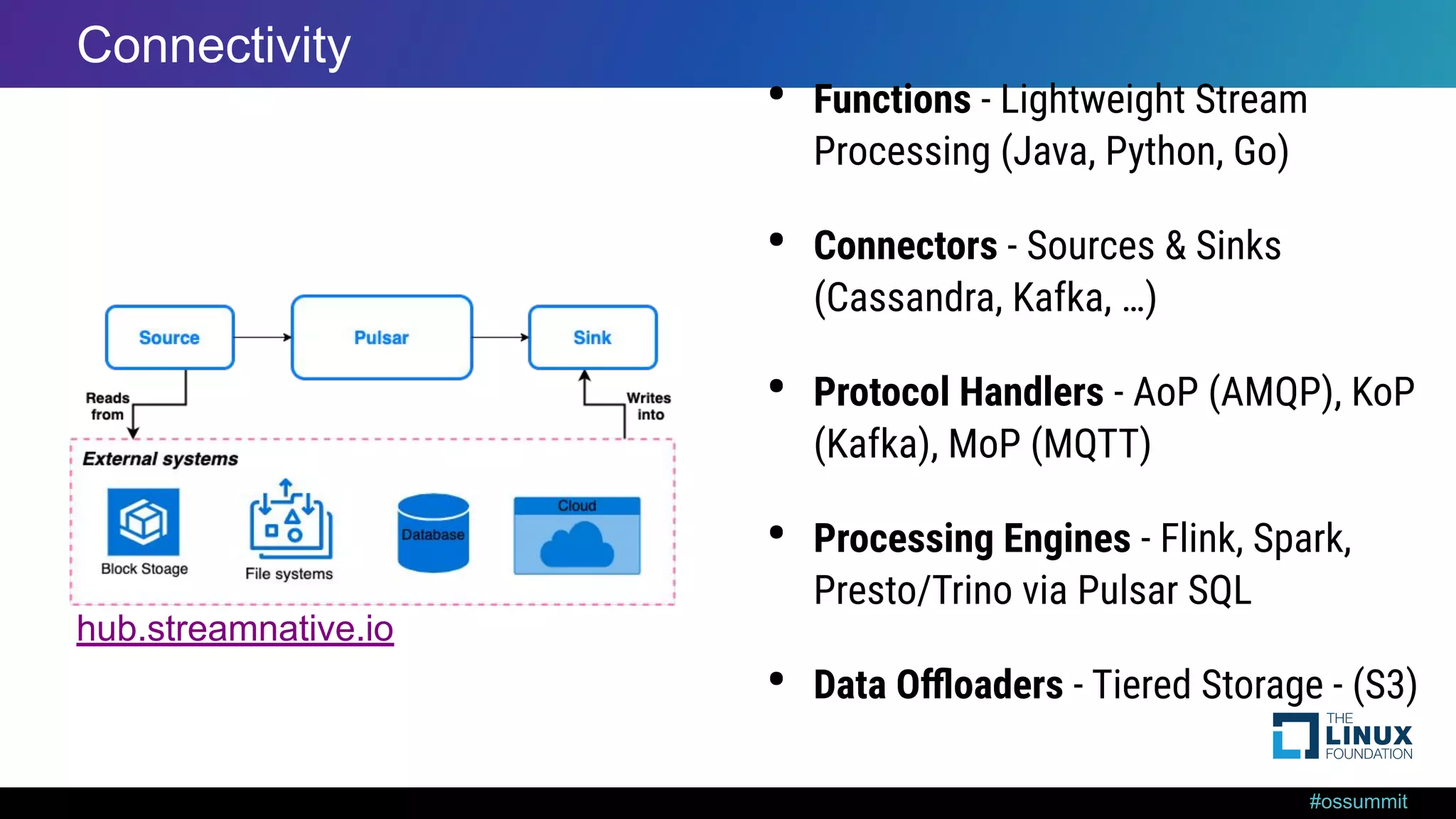

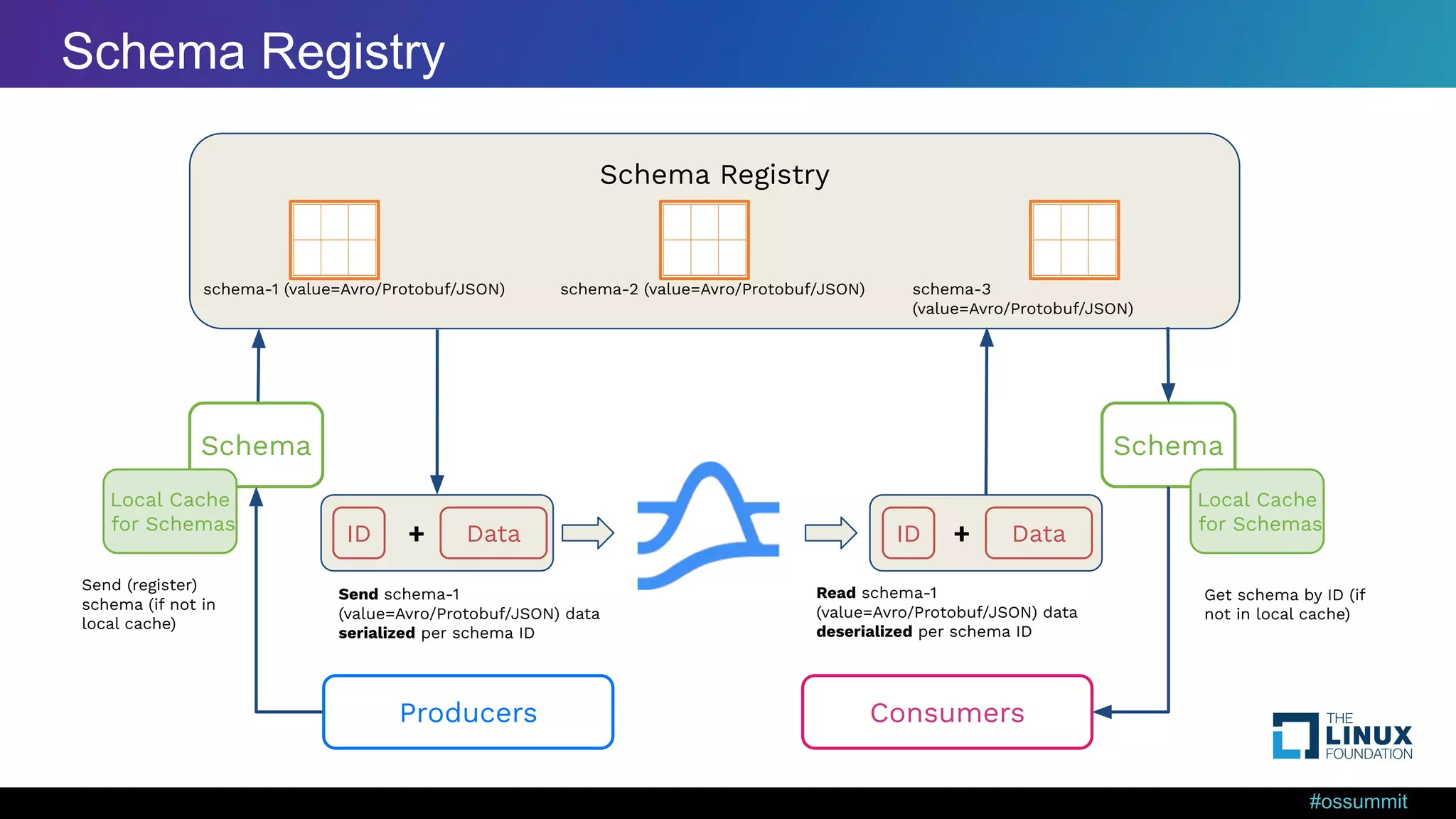

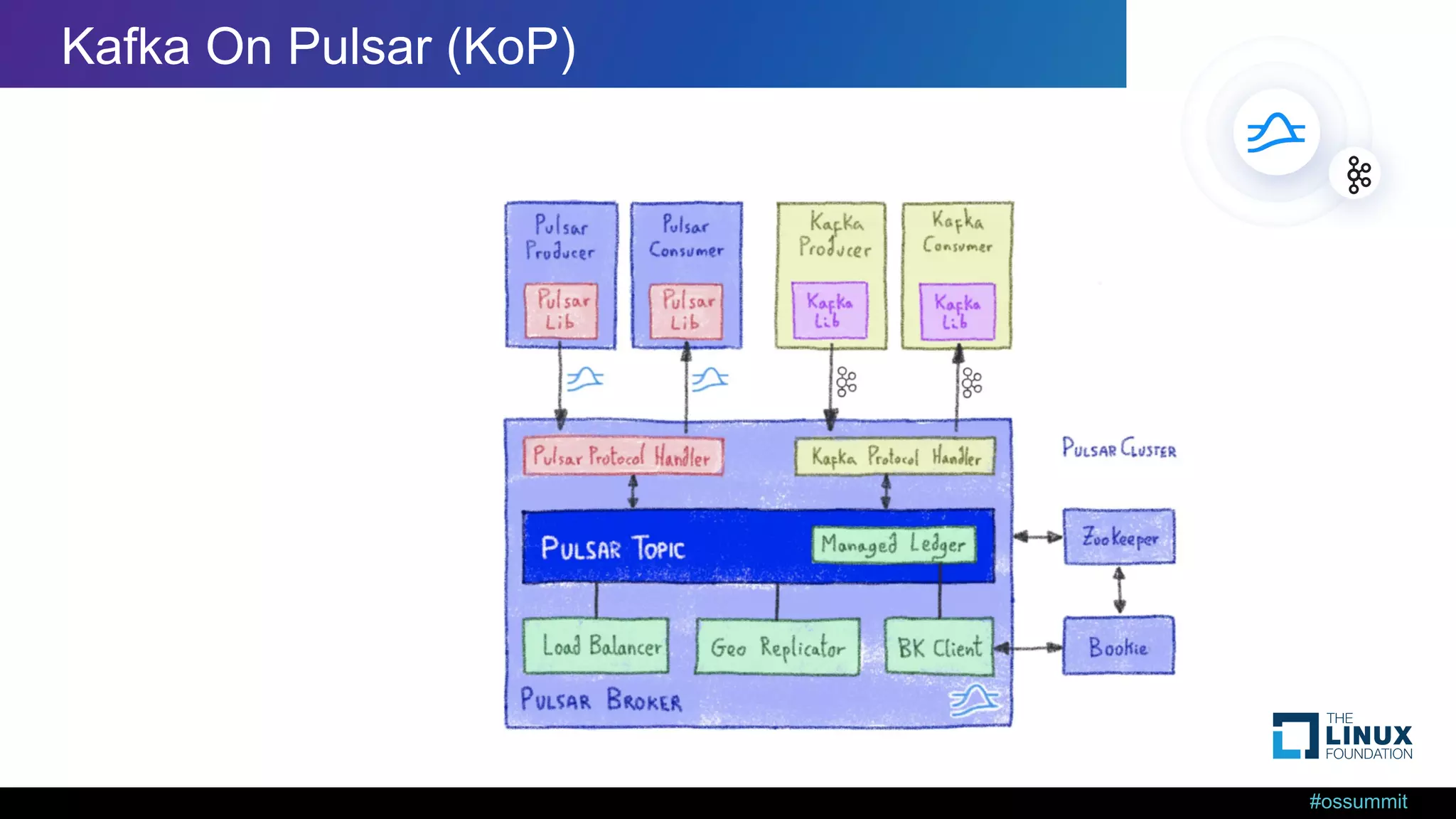

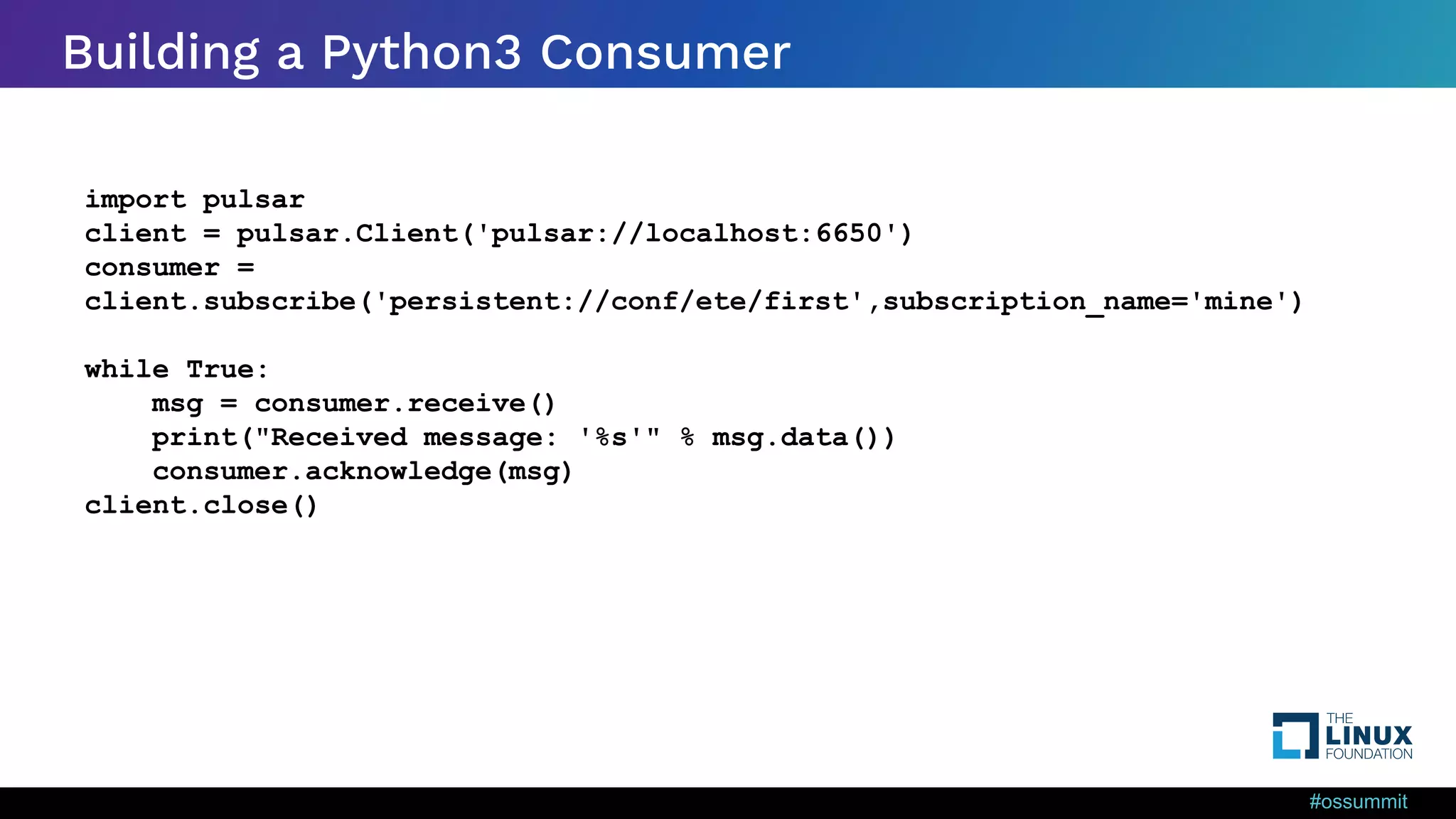

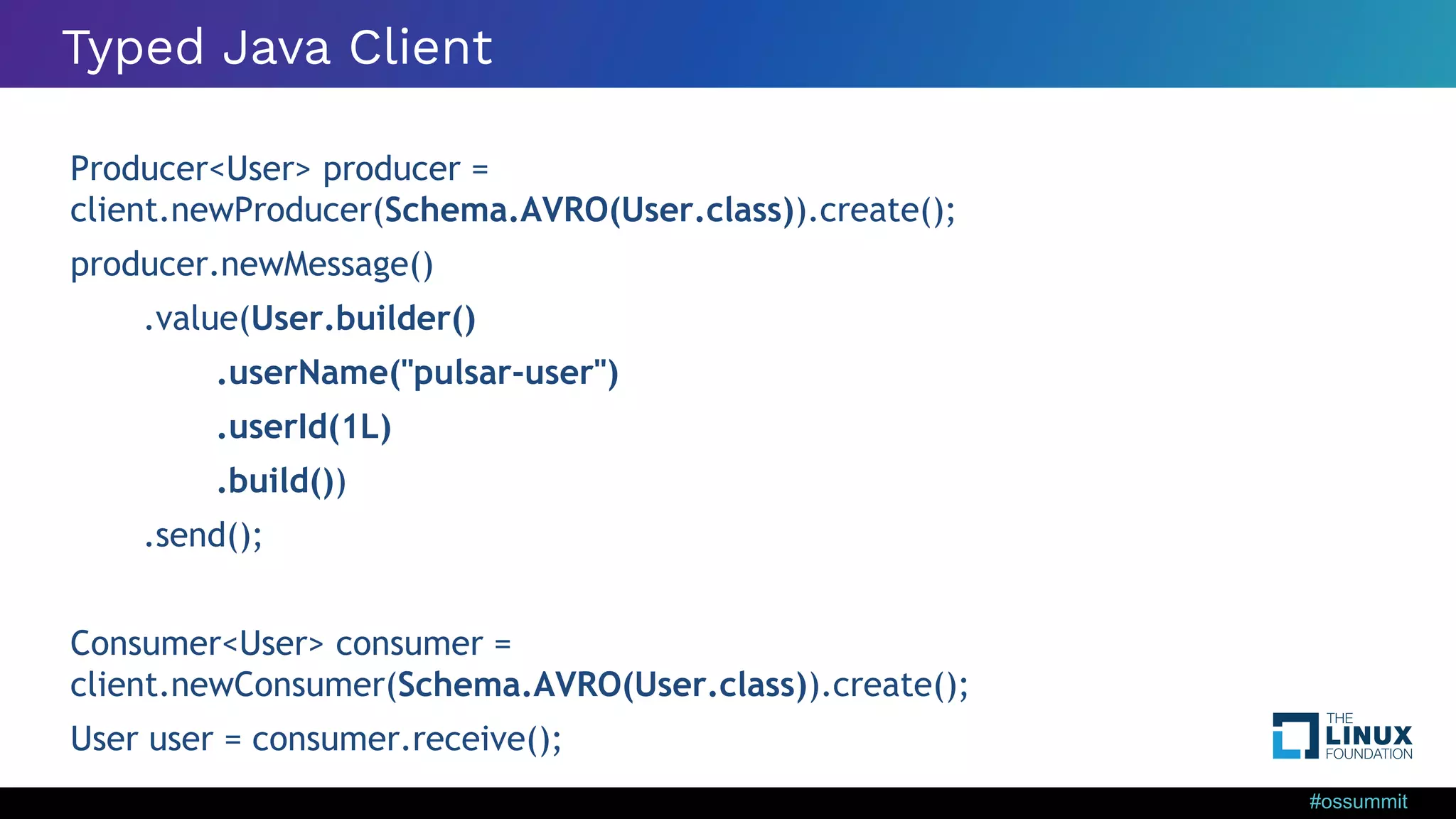

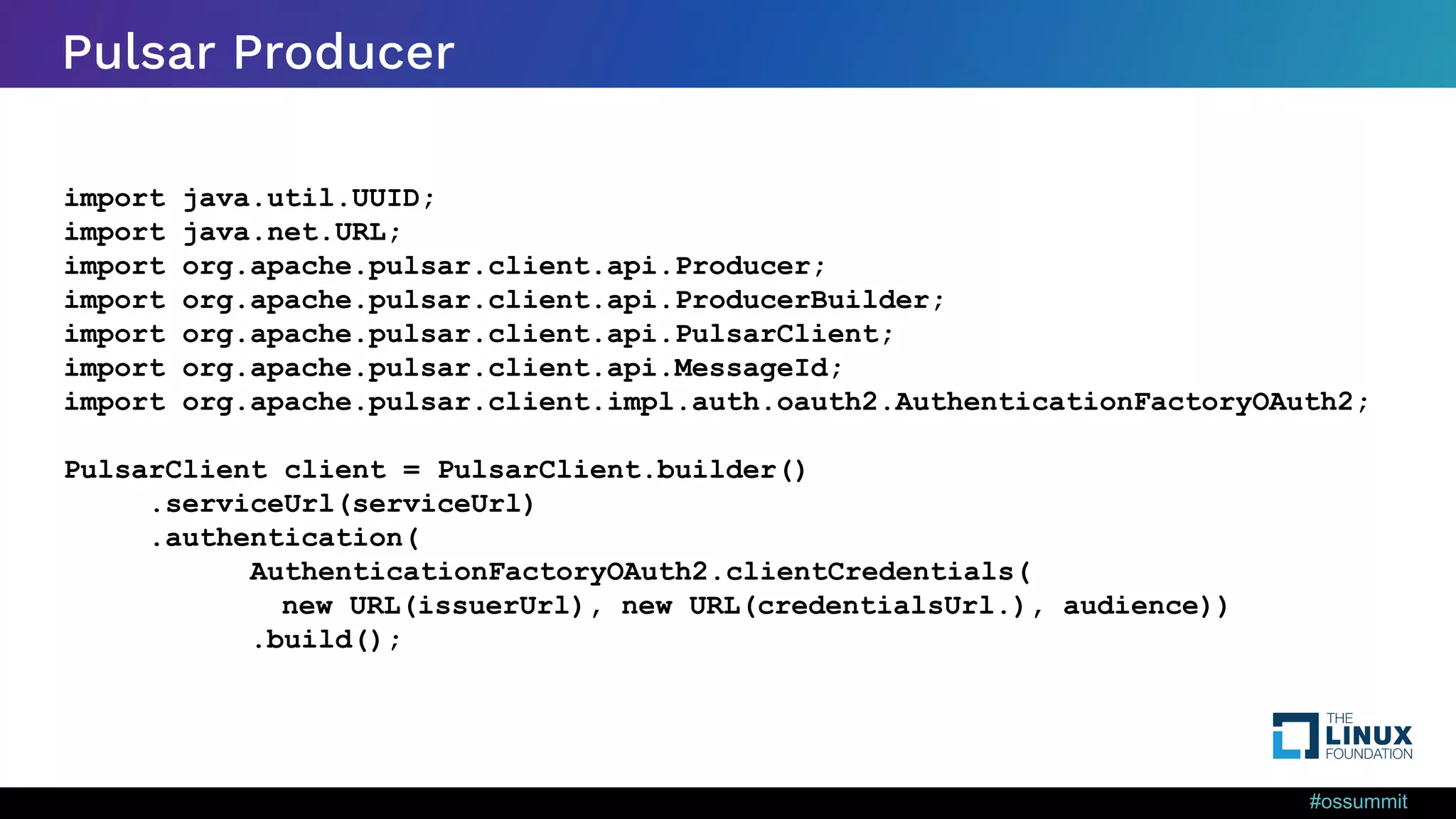

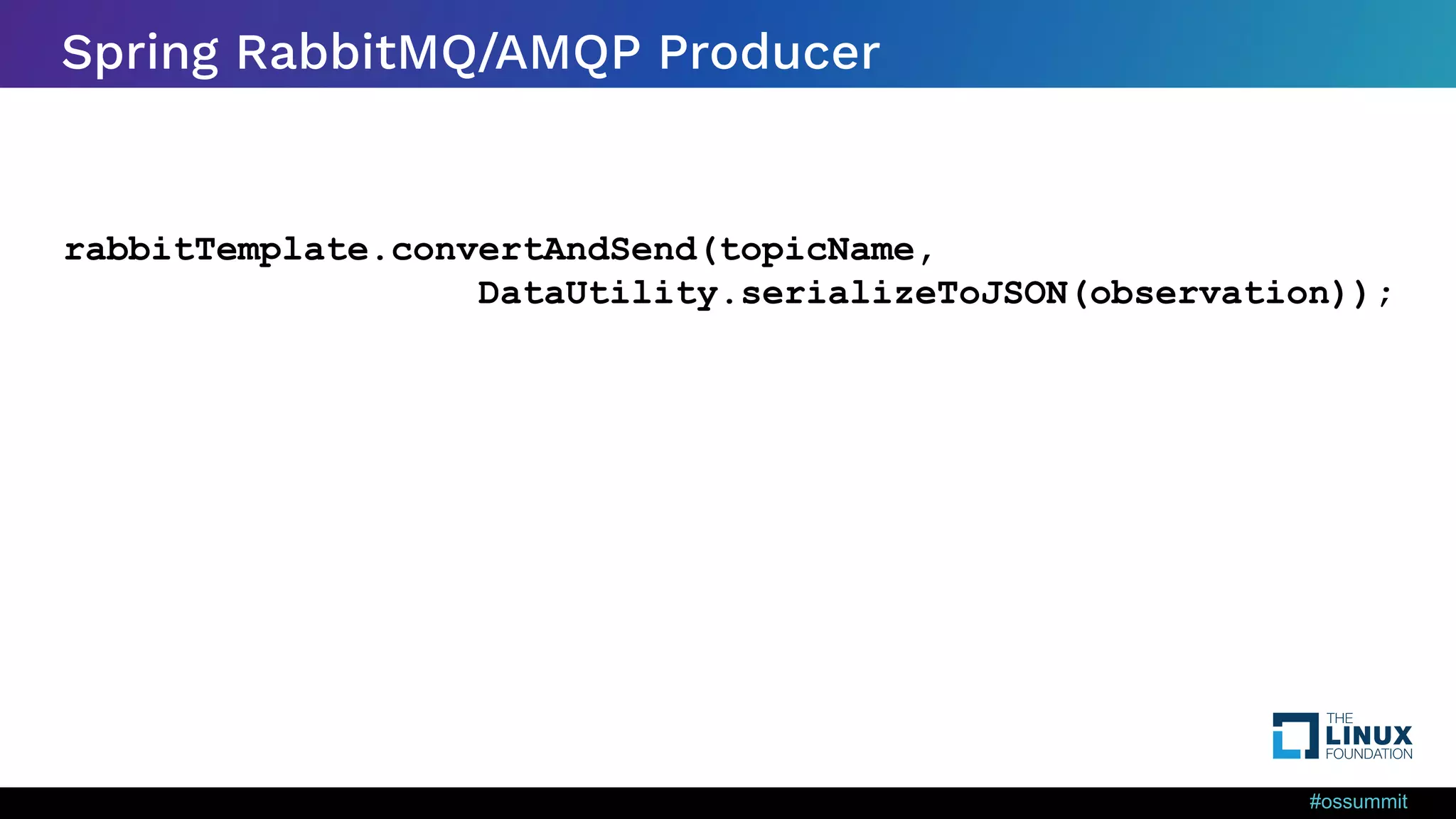

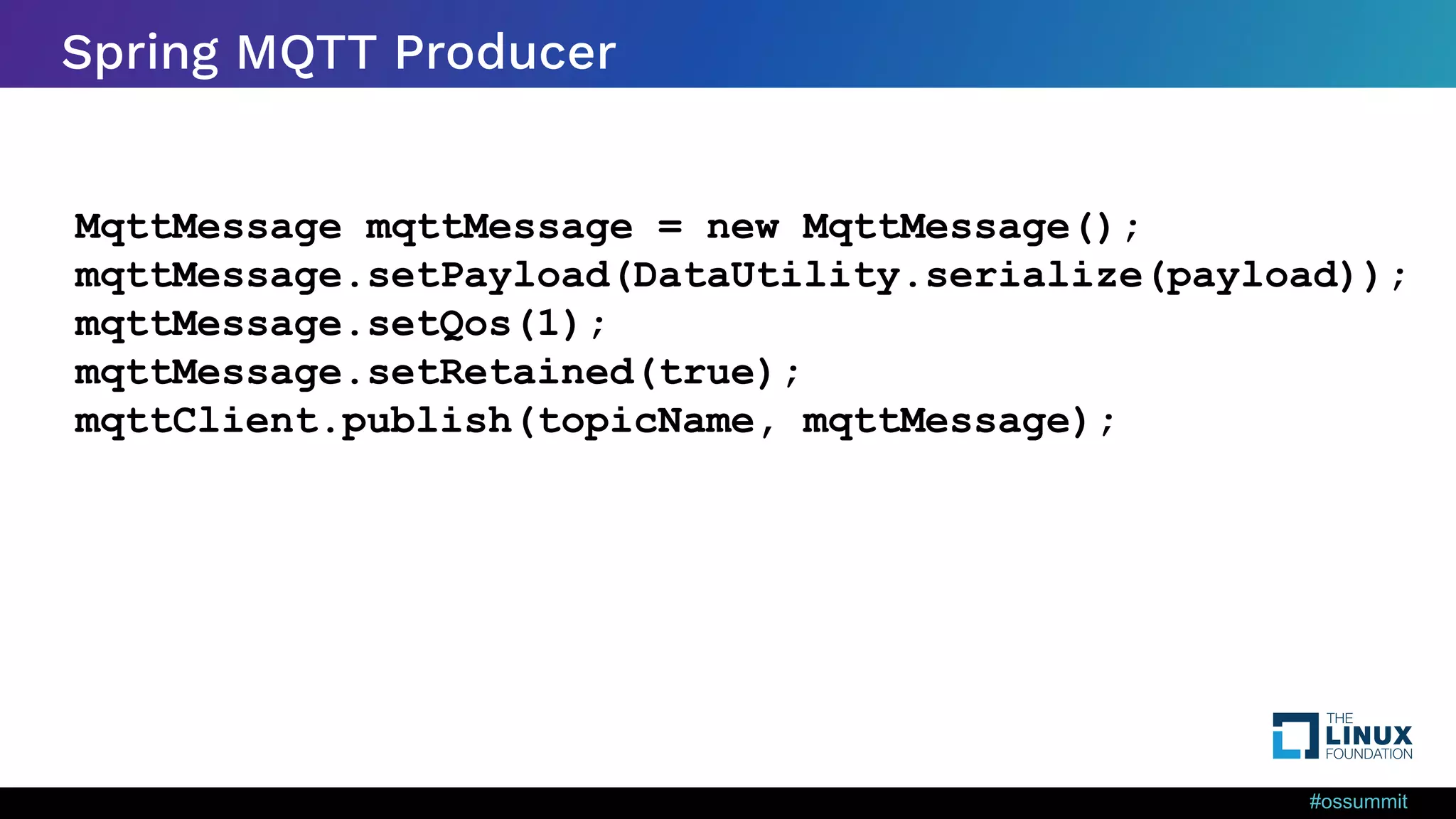

This deck walks through building streaming applications with Apache Pulsar: its architecture, key concepts, and core components such as brokers and bookies. It explains where Pulsar fits for microservices, asynchronous communication, and real-time applications, and shows how to implement producers and consumers in several programming languages. It also covers monitoring, metrics, and cleanup within the Pulsar ecosystem.

![#ossummit Install Python 3 Pulsar Client pip3 install 'pulsar-client[all]==2.10.1' Includes AARCH64, ARM, M2, INTEL, … For Python on Pulsar on Pi https://github.com/tspannhw/PulsarOnRaspberryPi https://pulsar.apache.org/docs/en/client-libraries-python/ https://pypi.org/project/pulsar-client/2.10.0/#files](https://image.slidesharecdn.com/ossdeepdiveintobuildingstreamingapplicationswithapachepulsar-220916124224-daada9e0/75/OSS-EU-Deep-Dive-into-Building-Streaming-Applications-with-Apache-Pulsar-25-2048.jpg)
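Once the client is installed, a quick smoke test is to publish a single message. The following is a minimal sketch, not code from the slides: the helper names `encode_reading` and `send_reading`, the service URL, and the topic name are all assumptions chosen for illustration.

```python
import json


def encode_reading(name, value):
    """Serialize one sensor reading as UTF-8 JSON bytes (Pulsar payloads are bytes)."""
    return json.dumps({name: str(value)}).encode("utf-8")


def send_reading(service_url, topic, name, value):
    """Connect, publish one message, and shut down cleanly.

    The import is local so this module still loads where pulsar-client
    is not installed. service_url and topic are supplied by the caller.
    """
    import pulsar  # provided by the pulsar-client package

    client = pulsar.Client(service_url)
    try:
        producer = client.create_producer(topic)
        producer.send(encode_reading(name, value))
    finally:
        client.close()  # also closes producers created from this client


# Example call (assumed local broker and topic, needs a reachable Pulsar):
# send_reading("pulsar://localhost:6650",
#              "persistent://public/default/my-topic", "gasKO", 42.5)
```

Keeping the encoding step separate from the network call makes the payload format easy to check without a running broker.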

![#ossummit MQTT from Python pip3 install paho-mqtt import paho.mqtt.client as mqtt client = mqtt.Client("rpi4iot") row = { } row['gasKO'] = str(readings) json_string = json.dumps(row) json_string = json_string.strip() client.connect("pulsar-server.com", 1883, 180) client.publish("persistent://public/default/mqtt-2", payload=json_string,qos=0,retain=True) https://www.slideshare.net/bunkertor/data-minutes-2-apache-pulsar-with-mqtt-for-edge-computing-lightning-2022 MQTT](https://image.slidesharecdn.com/ossdeepdiveintobuildingstreamingapplicationswithapachepulsar-220916124224-daada9e0/75/OSS-EU-Deep-Dive-into-Building-Streaming-Applications-with-Apache-Pulsar-31-2048.jpg)
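The slide's MQTT snippet omits its imports and leaves `readings` undefined. Below is one way it could be filled out, as a sketch only. It assumes an MQTT protocol handler (for example MoP) is enabled on the Pulsar broker; the helper names `publish_args` and `publish_reading` are hypothetical. The client constructor is branched because paho-mqtt 2.x changed its signature compared with the 1.x form shown on the slide.

```python
import json


def publish_args(topic, readings):
    """Build the keyword arguments for paho's Client.publish()."""
    payload = json.dumps({"gasKO": str(readings)})
    return {"topic": topic, "payload": payload, "qos": 0, "retain": True}


def publish_reading(host, topic, readings, port=1883, keepalive=180):
    """Publish one retained JSON reading over MQTT and wait until it is sent."""
    import paho.mqtt.client as mqtt  # pip3 install paho-mqtt

    # paho-mqtt 2.x requires a callback API version as the first argument;
    # 1.x takes the client id directly, as on the slide.
    if hasattr(mqtt, "CallbackAPIVersion"):
        client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2, client_id="rpi4iot")
    else:
        client = mqtt.Client("rpi4iot")

    client.connect(host, port, keepalive)
    client.loop_start()  # background network loop so the publish is flushed
    try:
        info = client.publish(**publish_args(topic, readings))
        info.wait_for_publish()
    finally:
        client.disconnect()
        client.loop_stop()


# Example call, using the host and topic from the slide:
# publish_reading("pulsar-server.com", "persistent://public/default/mqtt-2", 412)
```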

![#ossummit Kafka from Python pip3 install kafka-python from kafka import KafkaProducer from kafka.errors import KafkaError row = { } row['gasKO'] = str(readings) json_string = json.dumps(row) json_string = json_string.strip() producer = KafkaProducer(bootstrap_servers='pulsar1:9092',retries=3) producer.send('topic-kafka-1', json.dumps(row).encode('utf-8')) producer.flush() https://github.com/streamnative/kop https://docs.streamnative.io/platform/v1.0.0/concepts/kop-concepts Apache Kafka](https://image.slidesharecdn.com/ossdeepdiveintobuildingstreamingapplicationswithapachepulsar-220916124224-daada9e0/75/OSS-EU-Deep-Dive-into-Building-Streaming-Applications-with-Apache-Pulsar-33-2048.jpg)
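The Kafka snippet on the slide serializes the row twice and never checks whether the send succeeded. A slightly tightened variant might look like the sketch below. It assumes Kafka-on-Pulsar (KoP, linked on the slide) is listening on the given bootstrap address; `to_json_bytes` and `send_row` are hypothetical helper names.

```python
import json


def to_json_bytes(row):
    """Value serializer: dict -> UTF-8 JSON bytes."""
    return json.dumps(row).encode("utf-8")


def send_row(bootstrap_servers, topic, row, timeout_s=10):
    """Send one row through the Kafka protocol and return (partition, offset)."""
    from kafka import KafkaProducer  # pip3 install kafka-python

    producer = KafkaProducer(
        bootstrap_servers=bootstrap_servers,
        retries=3,
        value_serializer=to_json_bytes,  # applied to every value passed to send()
    )
    try:
        future = producer.send(topic, row)
        # Block until the broker confirms; raises a KafkaError subclass on failure.
        metadata = future.get(timeout=timeout_s)
        return metadata.partition, metadata.offset
    finally:
        producer.close()


# Example call, using the address and topic from the slide:
# send_row("pulsar1:9092", "topic-kafka-1", {"gasKO": "412"})
```

Registering the serializer on the producer means callers pass plain dictionaries and the encoding happens in one place.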

![#ossummit Pulsar Simple Producer String pulsarKey = UUID.randomUUID().toString(); String OS = System.getProperty("os.name").toLowerCase(); ProducerBuilder<byte[]> producerBuilder = client.newProducer().topic(topic) .producerName("demo"); Producer<byte[]> producer = producerBuilder.create(); MessageId msgID = producer.newMessage().key(pulsarKey).value("msg".getBytes()) .property("device",OS).send(); producer.close(); client.close();](https://image.slidesharecdn.com/ossdeepdiveintobuildingstreamingapplicationswithapachepulsar-220916124224-daada9e0/75/OSS-EU-Deep-Dive-into-Building-Streaming-Applications-with-Apache-Pulsar-42-2048.jpg)
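For comparison with the Python snippets elsewhere in the deck, the same keyed send can be sketched with the Python client. This is an illustrative translation, not the author's code: `send_keyed` is a hypothetical helper, and `platform.system()` is only an approximate analog of Java's `os.name` property.

```python
import platform
import uuid


def send_keyed(producer, body):
    """Send one message with a random key and a 'device' property.

    `producer` is expected to be a pulsar.Producer, or any object with a
    compatible send() method. Returns the generated key.
    """
    key = str(uuid.uuid4())
    os_name = platform.system().lower()  # e.g. "linux" or "darwin"
    producer.send(body, partition_key=key, properties={"device": os_name})
    return key


# Example wiring (assumed local broker, needs pulsar-client installed):
# import pulsar
# client = pulsar.Client("pulsar://localhost:6650")
# producer = client.create_producer(topic, producer_name="demo")
# send_keyed(producer, b"msg")
# client.close()
```

In the Python client the message key is passed as `partition_key`, which plays the role of `.key(...)` in the Java builder.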

![#ossummit import org.apache.pulsar.client.impl.schema.JSONSchema; import org.apache.pulsar.functions.api.*; public class AirQualityFunction implements Function<byte[], Void> { @Override public Void process(byte[] input, Context context) { context.getLogger().debug("File:" + new String(input)); context.newOutputMessage("topicname", JSONSchema.of(Observation.class)) .key(UUID.randomUUID().toString()) .property("prop1", "value1") .value(observation) .send(); } } Your Code Here Pulsar Function SDK](https://image.slidesharecdn.com/ossdeepdiveintobuildingstreamingapplicationswithapachepulsar-220916124224-daada9e0/75/OSS-EU-Deep-Dive-into-Building-Streaming-Applications-with-Apache-Pulsar-44-2048.jpg)
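The same pattern (log the input, then publish a derived message to another topic from inside the function) can be sketched with the Python Functions SDK. This is an illustration under assumptions: the output topic name is hypothetical, the input is assumed to be a JSON document, and the `ImportError` fallback exists only so the logic can be exercised without the client installed.

```python
import json

try:
    from pulsar import Function  # base class shipped with pulsar-client
except ImportError:  # allows unit-testing the logic without the client
    Function = object


class AirQualityFunction(Function):
    # Hypothetical output topic, stands in for "topicname" on the slide.
    OUTPUT_TOPIC = "persistent://public/default/aq-observations"

    def process(self, input, context):
        # Input may arrive as bytes or str depending on the configured serde.
        text = input.decode("utf-8") if isinstance(input, bytes) else input
        context.get_logger().debug("File: %s", text)

        observation = json.loads(text)  # assumes the payload is JSON
        context.publish(
            self.OUTPUT_TOPIC,
            json.dumps(observation),
            properties={"prop1": "value1"},
        )
        return None  # mirrors the Void return type of the Java version
```

Because the function publishes explicitly through `context.publish`, it does not need to return a value.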

![#ossummit Setting Subscription Type Java Consumer<byte[]> consumer = pulsarClient.newConsumer() .topic(topic) .subscriptionName("subscriptionName") .subscriptionType(SubscriptionType.Shared) .subscribe();](https://image.slidesharecdn.com/ossdeepdiveintobuildingstreamingapplicationswithapachepulsar-220916124224-daada9e0/75/OSS-EU-Deep-Dive-into-Building-Streaming-Applications-with-Apache-Pulsar-45-2048.jpg)

![#ossummit Subscribing to a Topic and Setting Subscription Name Java Consumer<byte[]> consumer = pulsarClient.newConsumer() .topic(topic) .subscriptionName("subscriptionName") .subscribe();](https://image.slidesharecdn.com/ossdeepdiveintobuildingstreamingapplicationswithapachepulsar-220916124224-daada9e0/75/OSS-EU-Deep-Dive-into-Building-Streaming-Applications-with-Apache-Pulsar-46-2048.jpg)
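The two consumer slides differ only in the subscription type: when none is set, the client uses an exclusive subscription. A Python sketch of both variants, followed by a simple receive-and-acknowledge loop, is shown below. The helper names `open_consumer` and `consume` are hypothetical, and the loop is one possible error-handling policy rather than anything prescribed by the deck.

```python
def open_consumer(client, topic, subscription, shared=False):
    """Subscribe to a topic; Exclusive is the default type, Shared is opt-in."""
    import pulsar  # provided by the pulsar-client package

    consumer_type = (
        pulsar.ConsumerType.Shared if shared else pulsar.ConsumerType.Exclusive
    )
    return client.subscribe(topic, subscription, consumer_type=consumer_type)


def consume(consumer, handler, max_messages):
    """Receive up to max_messages, acking successes and nacking failures.

    Returns the number of messages handled successfully.
    """
    handled = 0
    for _ in range(max_messages):
        msg = consumer.receive()  # blocks until a message arrives
        try:
            handler(msg.data())
            consumer.acknowledge(msg)
            handled += 1
        except Exception:
            # Ask the broker to redeliver this message later.
            consumer.negative_acknowledge(msg)
    return handled


# Example wiring (assumed local broker):
# import pulsar
# client = pulsar.Client("pulsar://localhost:6650")
# consumer = open_consumer(client, topic, "subscriptionName", shared=True)
# consume(consumer, print, max_messages=10)
# client.close()
```

With a shared subscription, several consumers using the same subscription name split the messages between them, so acknowledging each message individually, as the loop does, matters more than with a single exclusive consumer.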