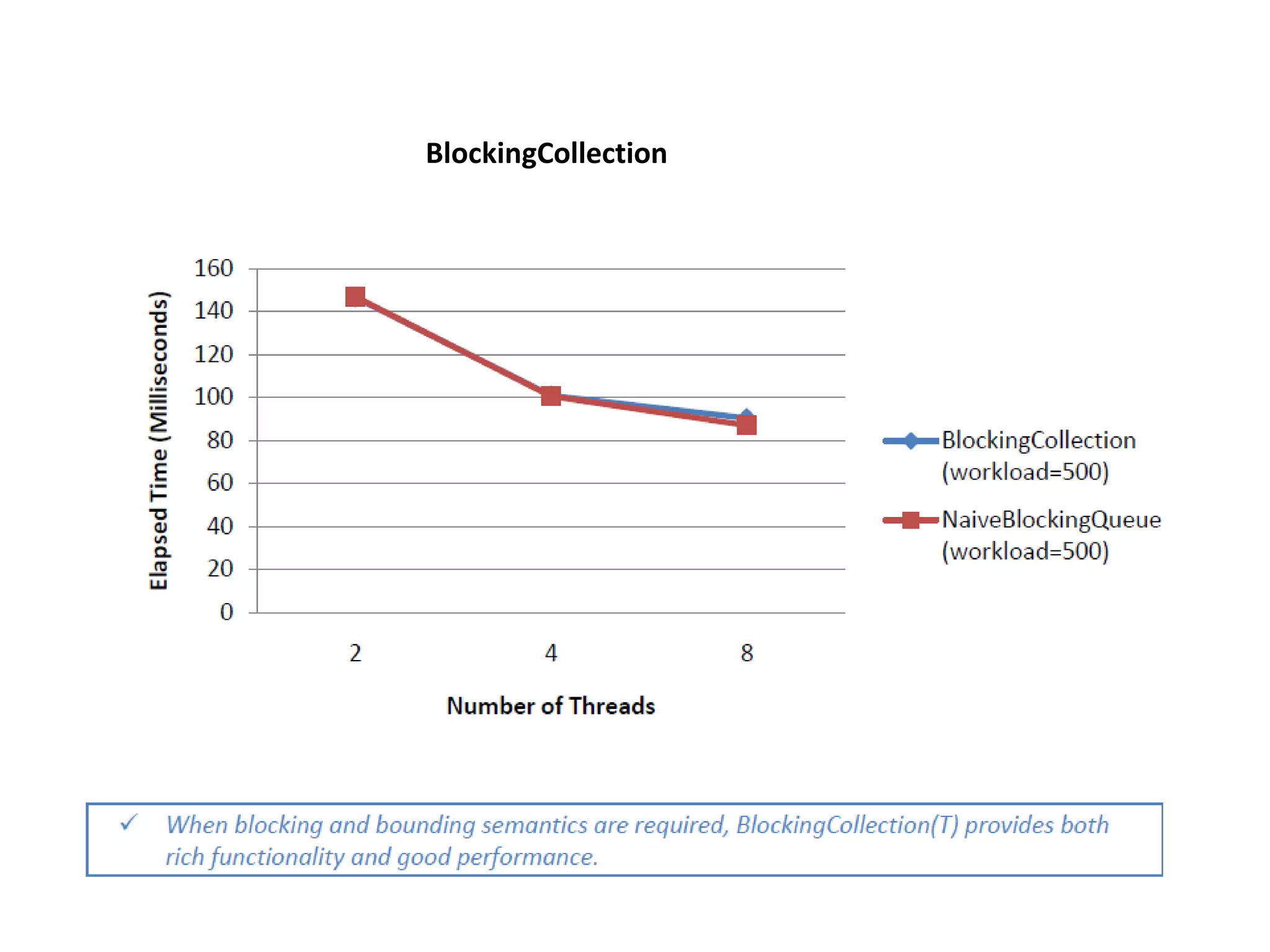

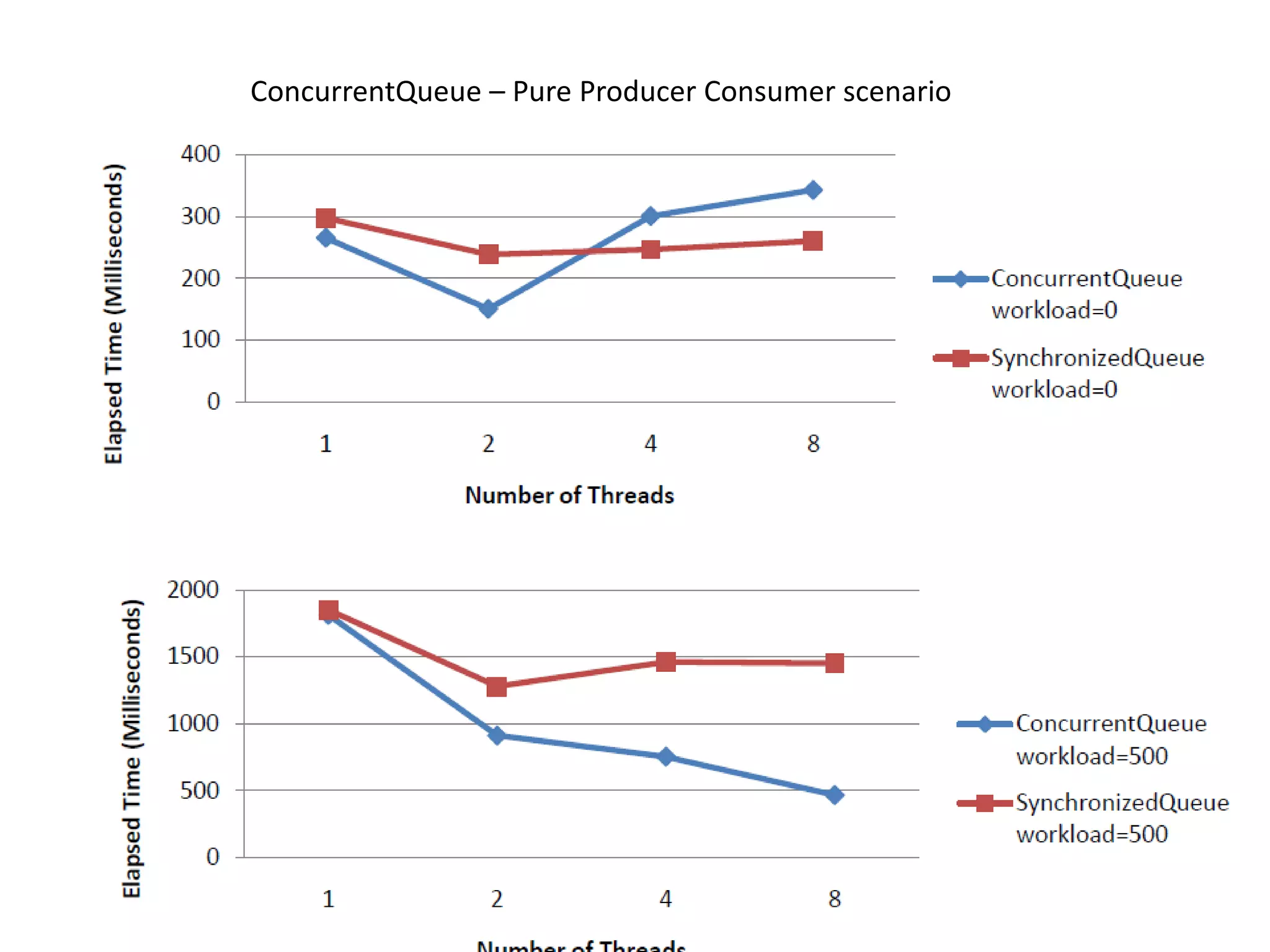

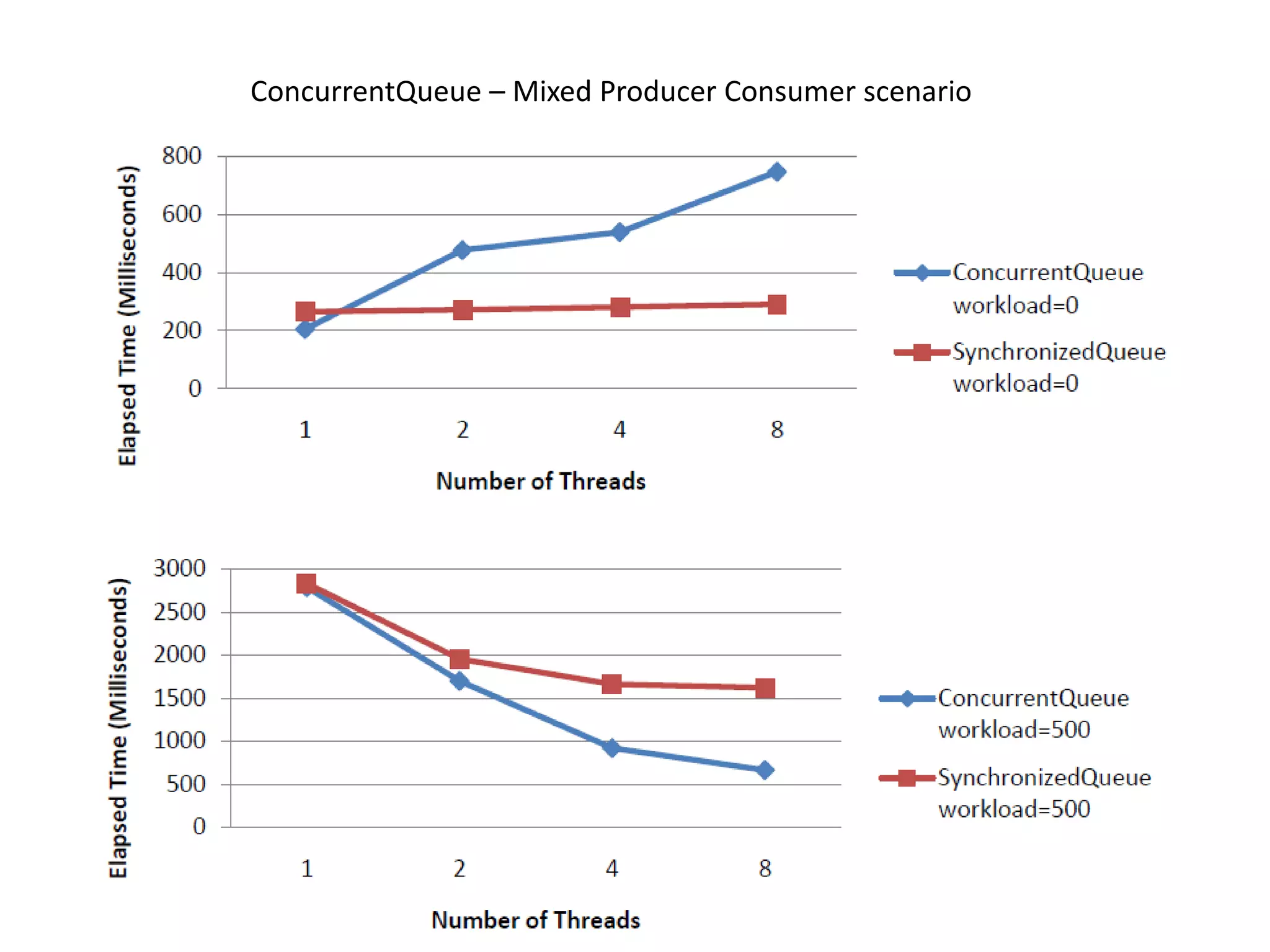

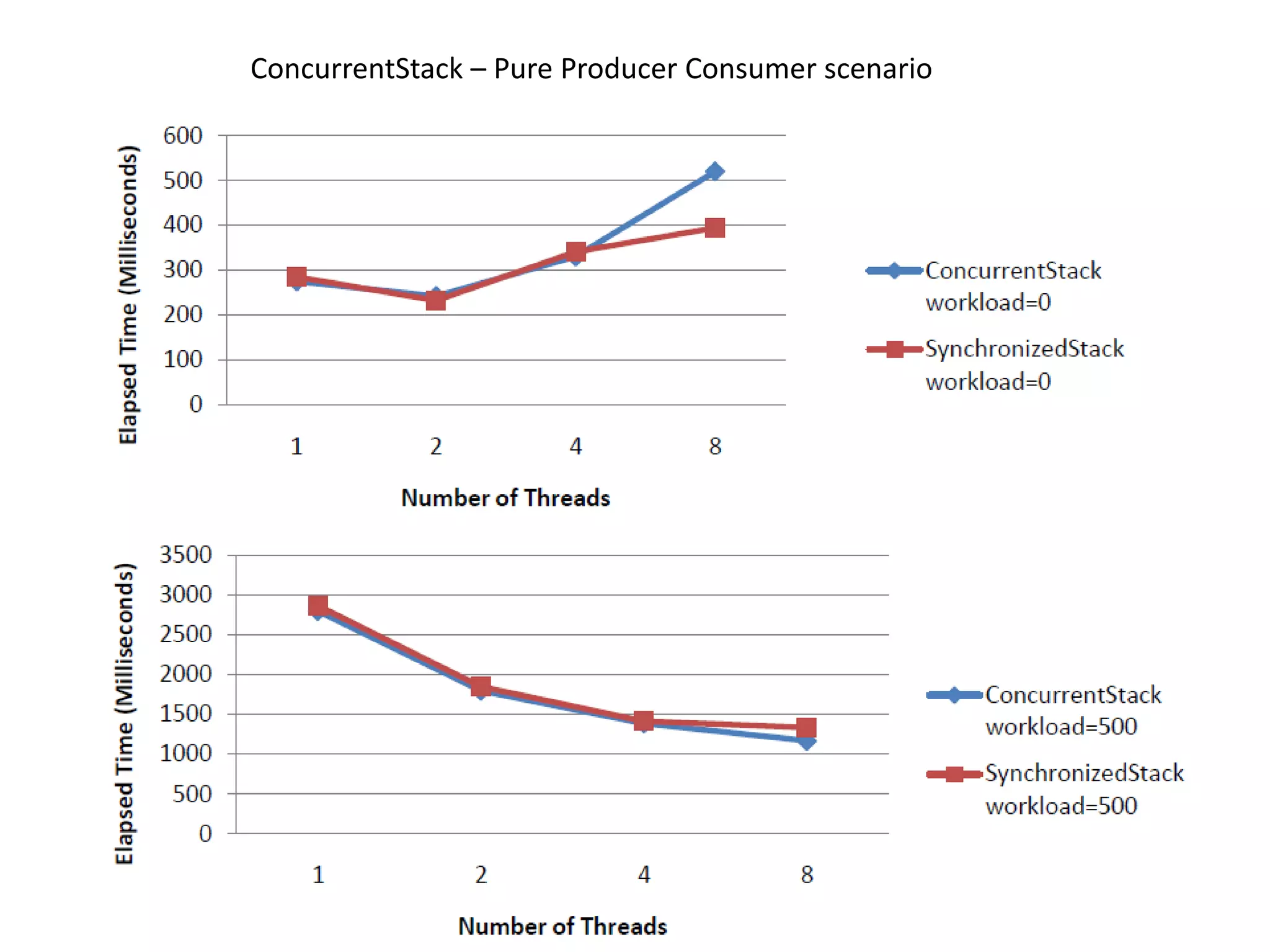

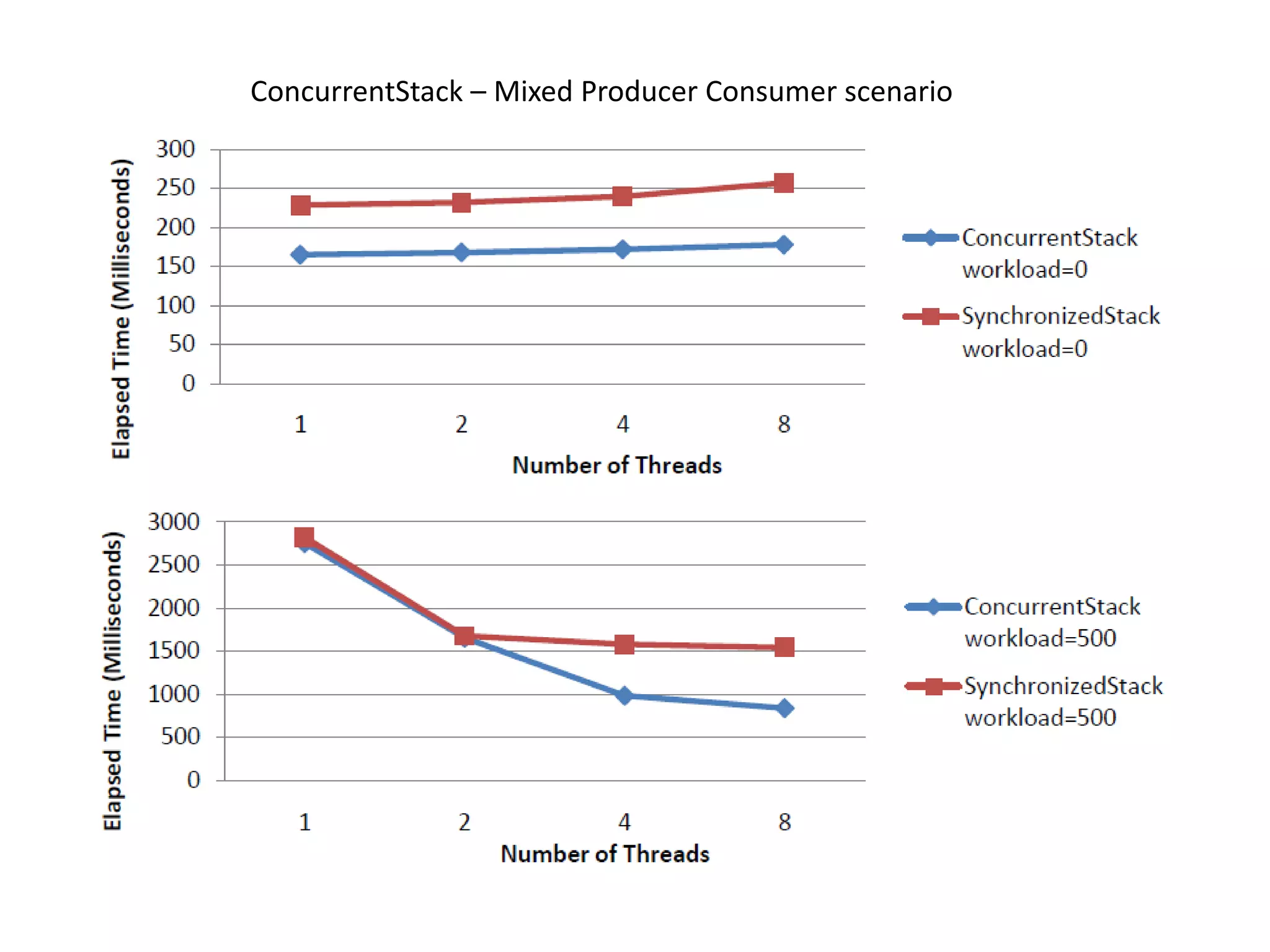

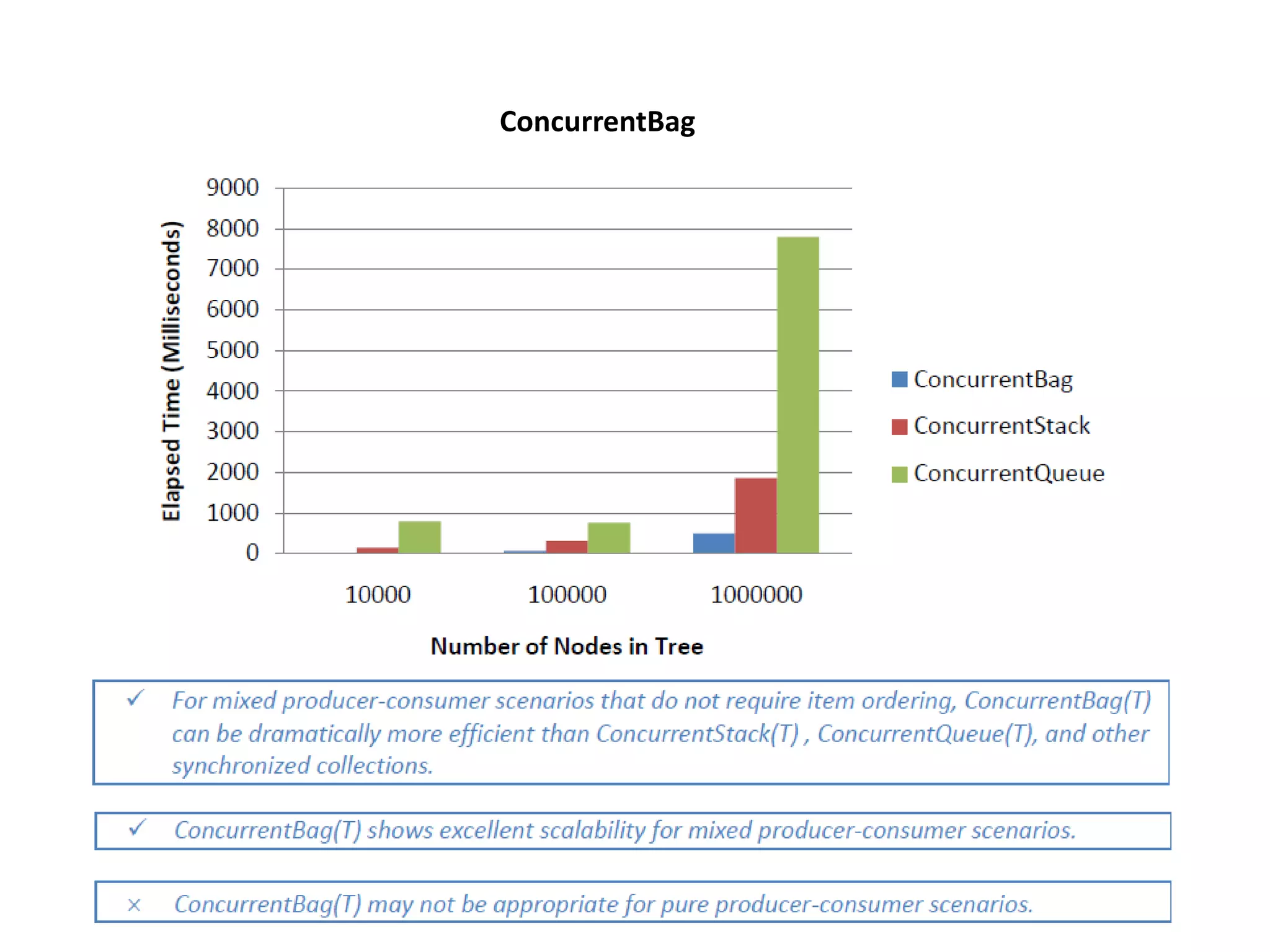

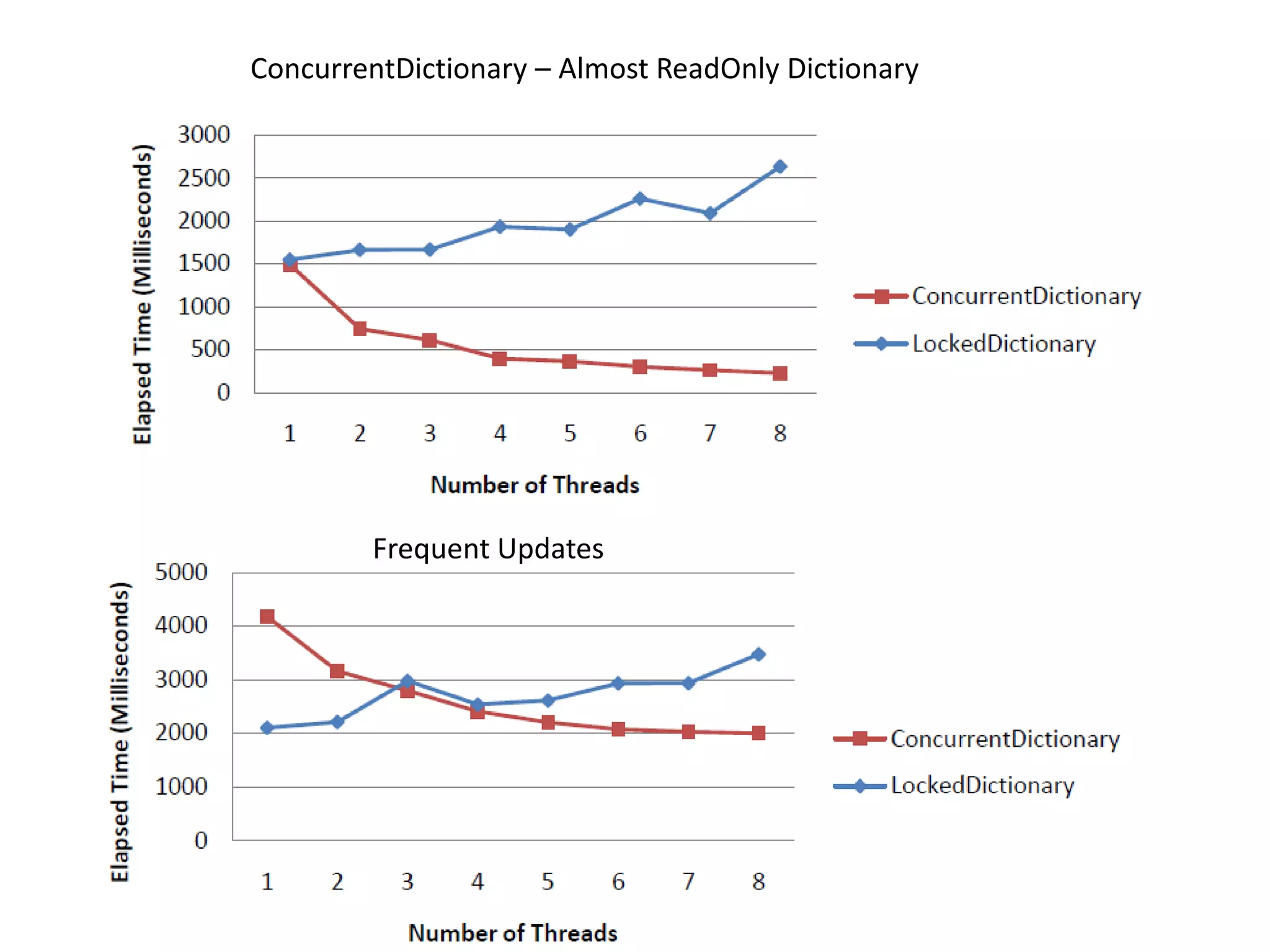

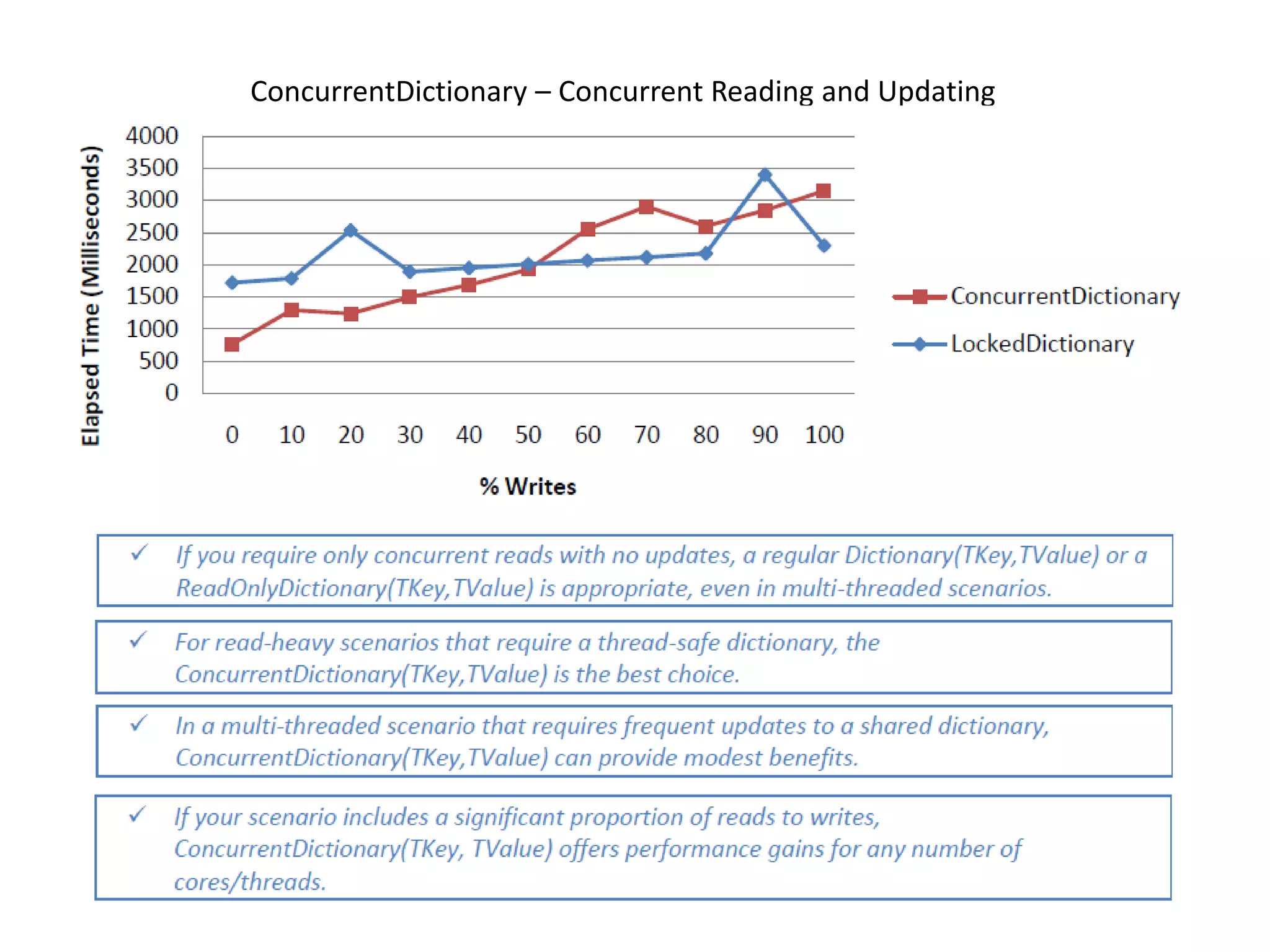

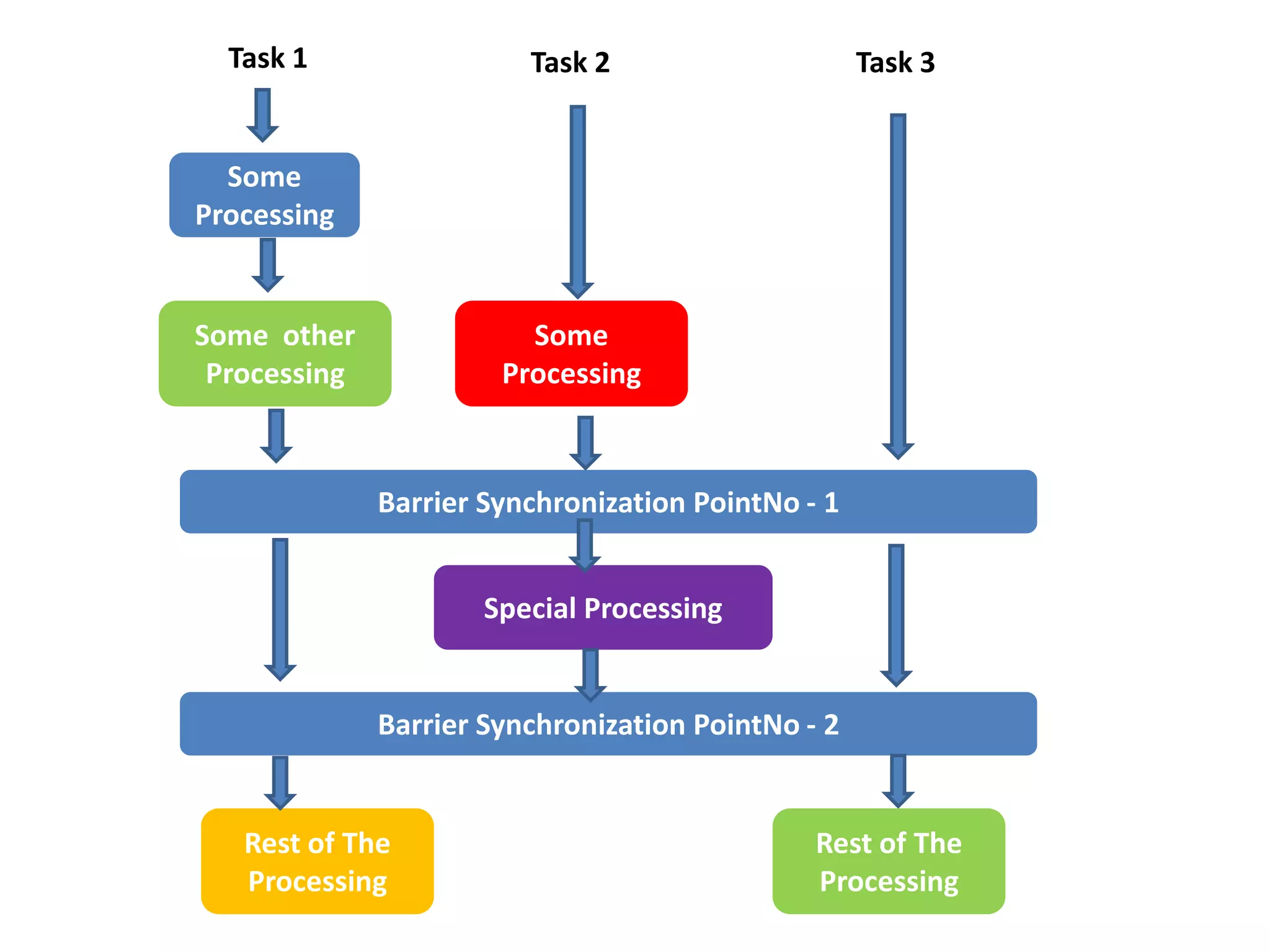

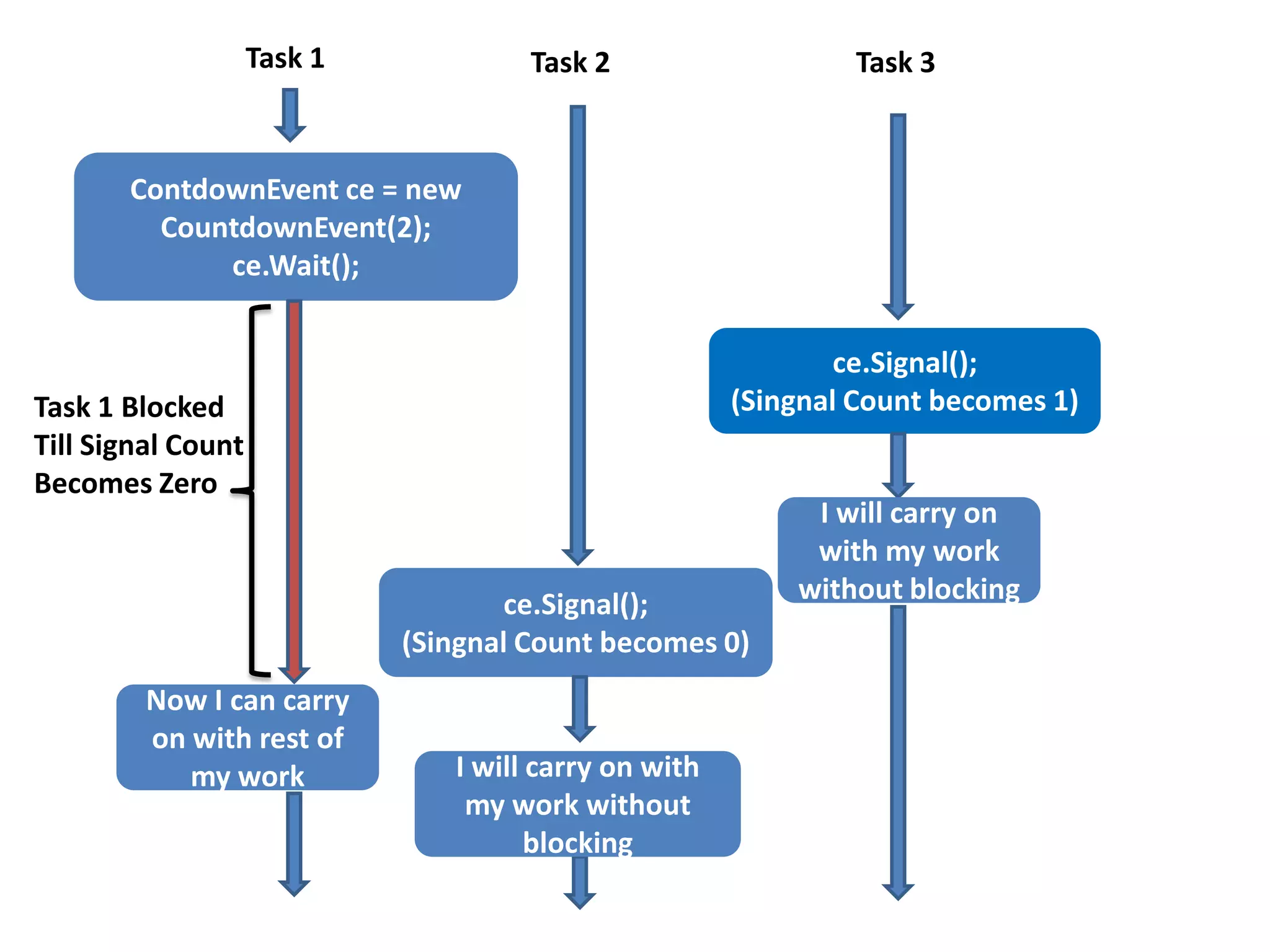

The document discusses several topics in multi-core programming with .NET:

1. Concurrent collections such as `ConcurrentQueue<T>` and `ConcurrentDictionary<TKey, TValue>`, which multiple threads can access at the same time without external locking.
2. Synchronization primitives such as `CountdownEvent`, `Barrier`, and `SemaphoreSlim` for coordinating threads.
3. Performance techniques such as lazy initialization, limiting allocations, and optimizing for CPU caches.
4. Guidelines for using locks correctly and avoiding common multithreading bugs.
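As a rough illustration of the concurrent collections, the sketch below (C# top-level statements, assuming .NET 5 or later) has many threads enqueue into a `ConcurrentQueue<int>`, then drains it from several parallel consumers while tallying results in a `ConcurrentDictionary<string, int>`, with no explicit `lock` anywhere. The variable names and the even/odd workload are illustrative assumptions, not taken from the slides.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Producers: 1,000 enqueues issued from many threads at once, with no explicit lock.
var queue = new ConcurrentQueue<int>();
Parallel.For(0, 1000, i => queue.Enqueue(i));

// Consumers: four parallel loops drain the queue. TryDequeue hands each item
// to exactly one caller and returns false once the queue is empty.
var counts = new ConcurrentDictionary<string, int>();
Parallel.For(0, 4, consumer =>
{
    while (queue.TryDequeue(out int item))
    {
        string key = item % 2 == 0 ? "even" : "odd";
        // AddOrUpdate retries if another thread changed the value in between,
        // so no increment is lost (the update delegate itself may run more than once).
        counts.AddOrUpdate(key, 1, (k, old) => old + 1);
    }
});

int evens = counts["even"], odds = counts["odd"];
Console.WriteLine($"even = {evens}, odd = {odds}"); // even = 500, odd = 500
```

`AddOrUpdate` is used instead of reading and then writing through the indexer, because a separate read followed by a write is two operations and can lose updates when threads race on the same key.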
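Two of the synchronization primitives often appear together: `SemaphoreSlim` caps how many threads may enter a section at once, and `CountdownEvent` lets a coordinator wait until a known number of workers have finished. The following is a minimal sketch under assumed values (8 workers, a limit of 3, a 20 ms sleep standing in for real work); `inside` and `peak` are hypothetical counters added only to observe the limit.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

const int workers = 8;
const int maxConcurrent = 3;

using var gate = new SemaphoreSlim(maxConcurrent); // admits at most 3 workers at a time
using var done = new CountdownEvent(workers);      // reaches zero after 8 Signal() calls
var sync = new object();                           // guards the two diagnostic counters
int inside = 0, peak = 0;

for (int i = 0; i < workers; i++)
{
    Task.Run(() =>
    {
        gate.Wait();
        try
        {
            lock (sync) { inside++; peak = Math.Max(peak, inside); }
            Thread.Sleep(20);                      // simulated work
            lock (sync) { inside--; }
        }
        finally
        {
            gate.Release();                        // let the next waiting worker in
            done.Signal();                         // count this worker as finished
        }
    });
}

done.Wait();                                       // block until all 8 workers have signaled
Console.WriteLine($"peak concurrency = {peak} (limit {maxConcurrent})");
```

Releasing the semaphore and signaling the countdown inside `finally` keeps both counts correct even if the work throws; otherwise a single failed worker would leave `done.Wait()` blocked forever.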
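`Barrier` coordinates threads that work in phases: no participant starts phase n+1 until every participant has finished phase n. The sketch below assumes 3 participants and 4 phases; `progress` and `completedPhases` are hypothetical bookkeeping used only to show that each phase completes once for all threads.

```csharp
using System;
using System.Threading;

const int participants = 3;
const int phases = 4;
int[] progress = new int[participants];
int completedPhases = 0;

// The post-phase action runs once per phase, after all participants have
// arrived and before any of them is released into the next phase.
using var barrier = new Barrier(participants, _ => completedPhases++);

var threads = new Thread[participants];
for (int p = 0; p < participants; p++)
{
    int id = p;                           // copy the loop variable for the closure
    threads[id] = new Thread(() =>
    {
        for (int phase = 0; phase < phases; phase++)
        {
            progress[id]++;               // this participant's share of the phase
            barrier.SignalAndWait();      // wait until every participant finishes this phase
        }
    });
    threads[id].Start();
}
foreach (var t in threads) t.Join();

Console.WriteLine($"phases completed = {completedPhases}, progress = {string.Join(",", progress)}");
```

Dedicated `Thread` objects are used here rather than thread-pool tasks so that all three participants are guaranteed to be running at once; blocking pool threads in `SignalAndWait` can stall until the pool injects more threads.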
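Lazy initialization, one of the performance techniques listed, defers an expensive computation until first use and, in its thread-safe form, guarantees it happens only once. A minimal sketch with `Lazy<T>` follows; the `settings` data, the 50 ms delay, and the `factoryCalls` counter are hypothetical, and the allocation and cache techniques from the same point are not illustrated here.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

int factoryCalls = 0;

// ExecutionAndPublication: the factory runs at most once; threads that read
// .Value while it is running block until the single result is published.
var settings = new Lazy<string[]>(() =>
{
    Interlocked.Increment(ref factoryCalls);
    Thread.Sleep(50);                     // stand-in for an expensive load
    return new[] { "alpha", "beta" };     // hypothetical data
}, LazyThreadSafetyMode.ExecutionAndPublication);

Console.WriteLine($"created before first use: {settings.IsValueCreated}");

// Eight parallel readers all touch .Value at roughly the same moment.
var seen = new string[8][];
Parallel.For(0, seen.Length, i => seen[i] = settings.Value);

bool sameInstance = Array.TrueForAll(seen, s => ReferenceEquals(s, seen[0]));
Console.WriteLine($"factory calls = {factoryCalls}, same instance everywhere = {sameInstance}");
```

If running the factory more than once is acceptable, `LazyThreadSafetyMode.PublicationOnly` avoids blocking readers, at the cost of possibly discarding duplicate results.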
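Two widely recommended lock guidelines are to lock on dedicated objects (not `this`, a `Type`, or a string, which unrelated code could also lock) and to acquire multiple locks in one fixed global order so that two threads cannot each hold one lock while waiting for the other. The sketch below illustrates both with two hypothetical account balances; the transfer workload is an assumption, not an example from the slides.

```csharp
using System;
using System.Threading.Tasks;

// Two hypothetical accounts, each guarded by its own dedicated lock object.
decimal[] balances = { 1000m, 1000m };
object[] locks = { new object(), new object() };

void Transfer(int from, int to, decimal amount)
{
    // Always acquire locks in ascending index order. If one thread locked
    // 0 then 1 while another locked 1 then 0, each could wait on the other forever.
    int first = Math.Min(from, to), second = Math.Max(from, to);
    lock (locks[first])
    {
        lock (locks[second])
        {
            // Keep the critical section short: no I/O or callbacks while holding locks.
            balances[from] -= amount;
            balances[to] += amount;
        }
    }
}

// Half the transfers go 0 -> 1 and half go 1 -> 0, all running in parallel.
Parallel.For(0, 10_000, i =>
{
    if (i % 2 == 0) Transfer(0, 1, 1m);
    else Transfer(1, 0, 1m);
});

Console.WriteLine($"balances: {balances[0]}, {balances[1]}");
```

Because the transfers cancel out and every update happens under both locks, both balances end where they started; removing the ordering step would reintroduce the risk of deadlock under opposite-direction transfers.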