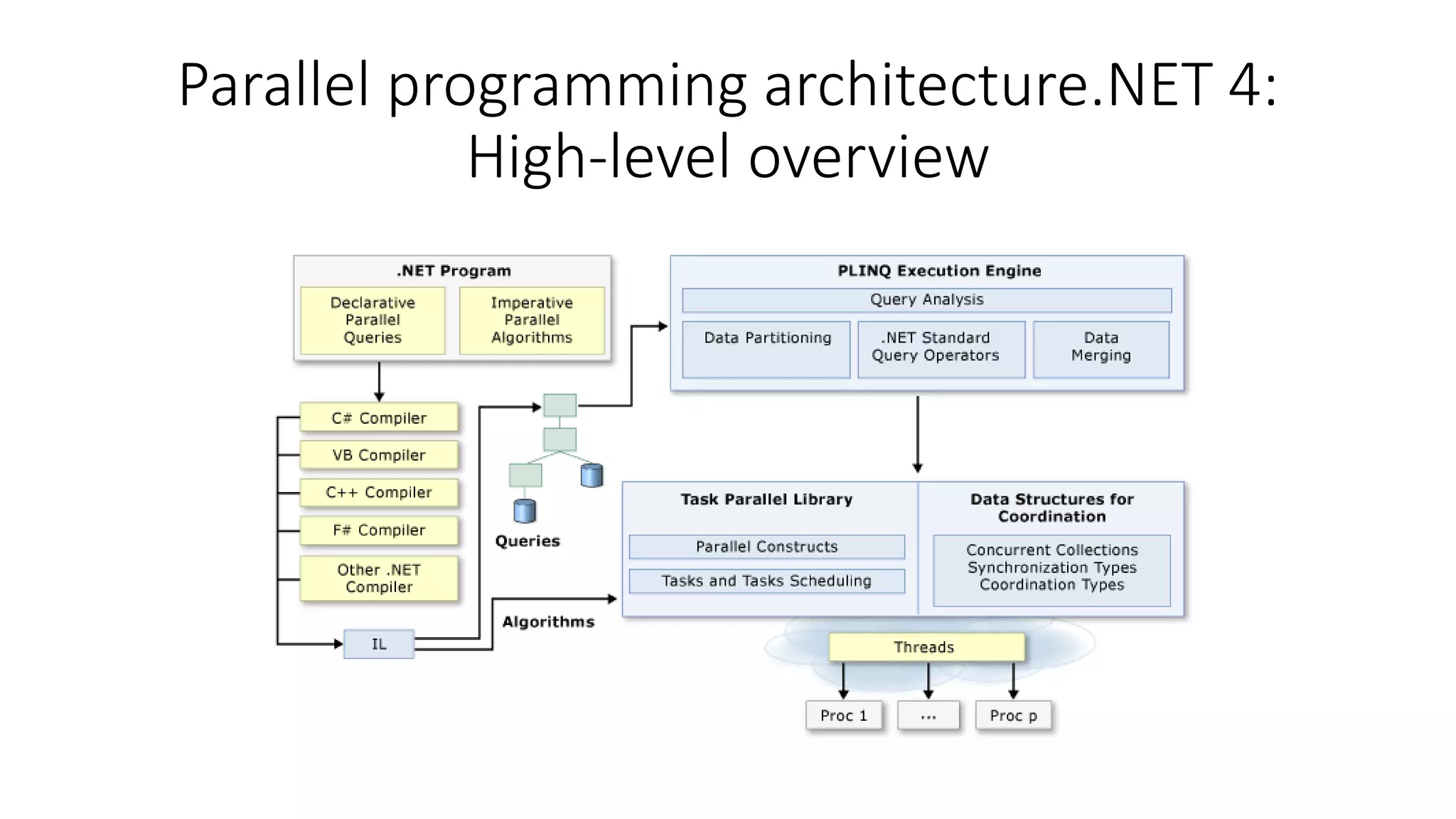

This document discusses parallel programming in .NET and gives an overview of the Task Parallel Library (TPL) and Parallel LINQ (PLINQ). It notes that multicore processors have been common for years, yet many developers still write single-threaded programs. The TPL dynamically scales the degree of concurrency to use the available cores and takes care of partitioning the work. PLINQ can speed up some queries by partitioning the data source into segments and processing those segments in parallel on multiple cores. A task represents an asynchronous operation and uses system resources more efficiently than a dedicated thread, because tasks are queued to the thread pool rather than each getting a thread of its own. The document gives examples of implicit task creation, where Parallel.Invoke runs several delegates in parallel, and of explicit task creation with Task.Run.
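The three approaches summarized above can be sketched side by side in a small console program. This is a minimal illustration, assuming a recent .NET runtime (C# 7.0 or later for the `1_000` digit separators); `TplSketch` and `SumOfSquares` are hypothetical names introduced only for this example and do not come from the original document.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

class TplSketch
{
    // Hypothetical CPU-bound helper, used only to give the tasks some work.
    public static long SumOfSquares(int count)
    {
        long total = 0;
        for (int i = 1; i <= count; i++)
        {
            total += (long)i * i;
        }
        return total;
    }

    static void Main()
    {
        // Implicit task creation: Parallel.Invoke runs each delegate, possibly
        // in parallel, and returns only after all of them have completed.
        long a = 0, b = 0;
        Parallel.Invoke(
            () => a = SumOfSquares(1_000),
            () => b = SumOfSquares(2_000));
        Console.WriteLine($"Parallel.Invoke: {a}, {b}");

        // Explicit task creation: Task.Run queues the work to the thread pool
        // and immediately returns a Task<long> representing the pending result.
        Task<long> t1 = Task.Run(() => SumOfSquares(1_000));
        Task<long> t2 = Task.Run(() => SumOfSquares(2_000));
        Task.WaitAll(t1, t2);
        Console.WriteLine($"Task.Run: {t1.Result}, {t2.Result}");

        // PLINQ: AsParallel() lets the runtime partition the source sequence
        // into segments processed on multiple cores; Sum merges the partial results.
        long evenSquares = Enumerable.Range(1, 1_000_000)
            .AsParallel()
            .Where(n => n % 2 == 0)
            .Select(n => (long)n * n)
            .Sum();
        Console.WriteLine($"PLINQ: {evenSquares}");
    }
}
```

Note the design difference: Parallel.Invoke blocks the caller until every delegate has finished, whereas Task.Run returns at once with a task that can be waited on or awaited later. Calling AsParallel() is a request rather than a guarantee; PLINQ may still run a query sequentially if it judges that parallelizing would not pay off, so any speedup depends on the workload.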