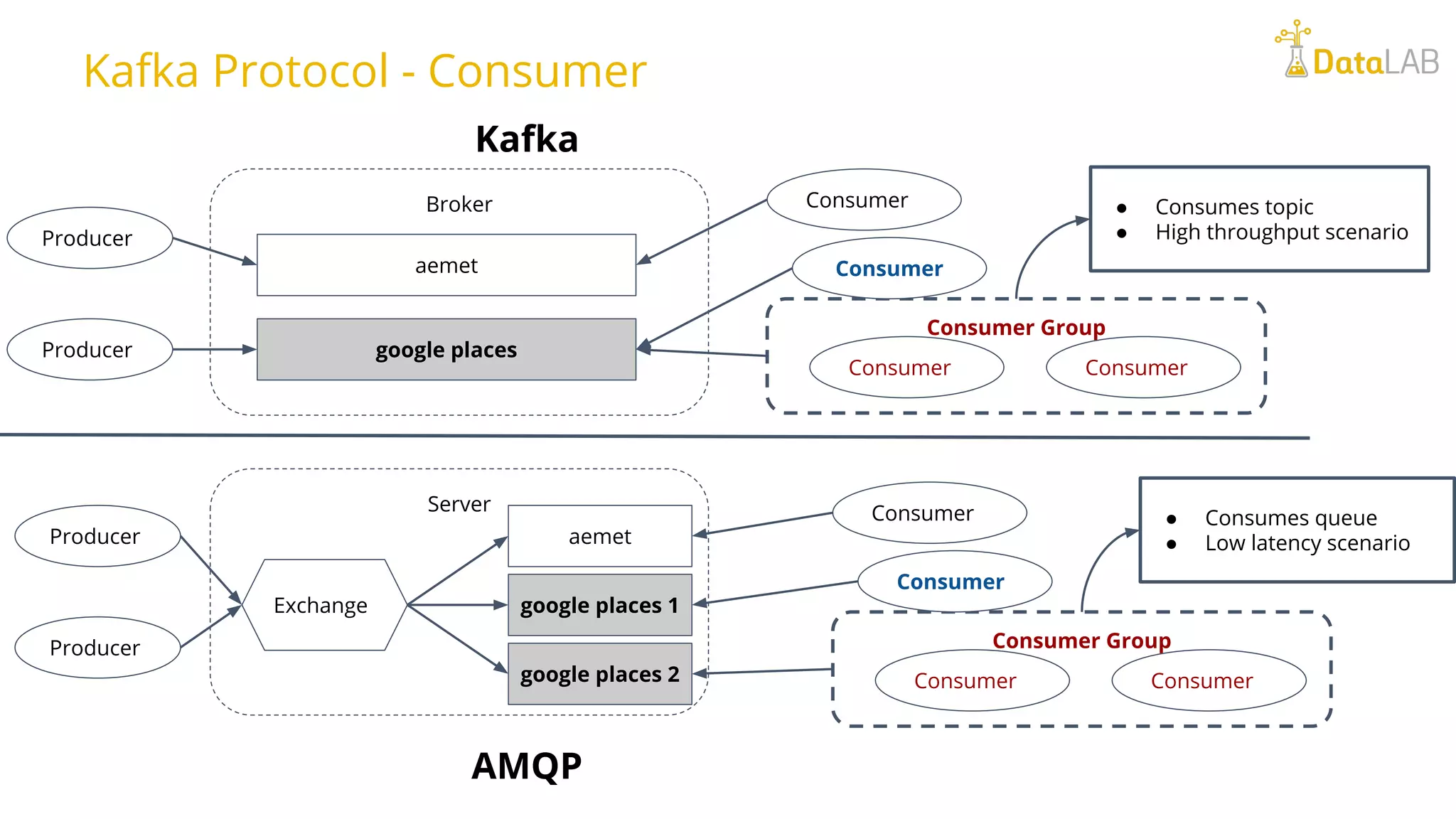

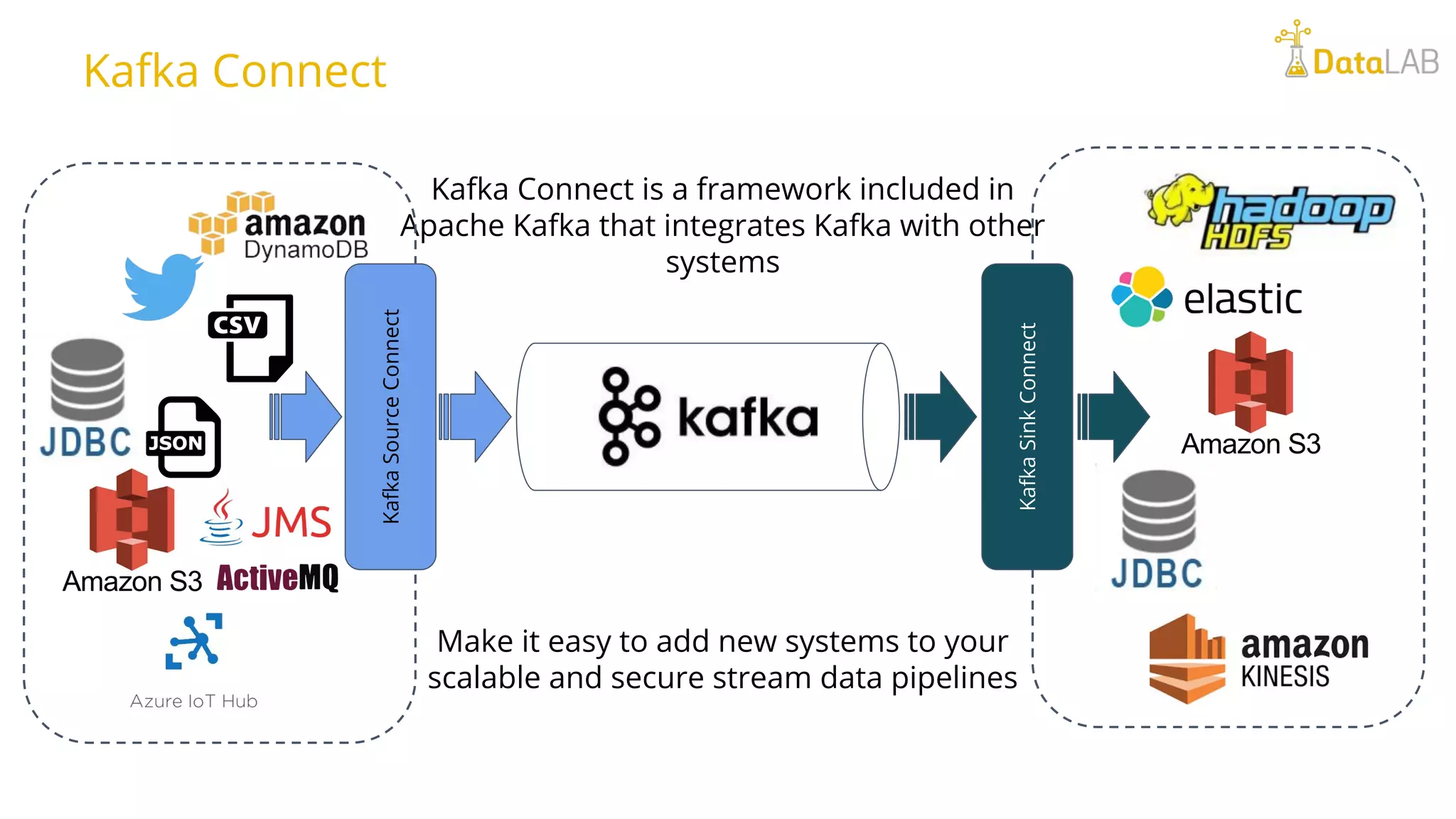

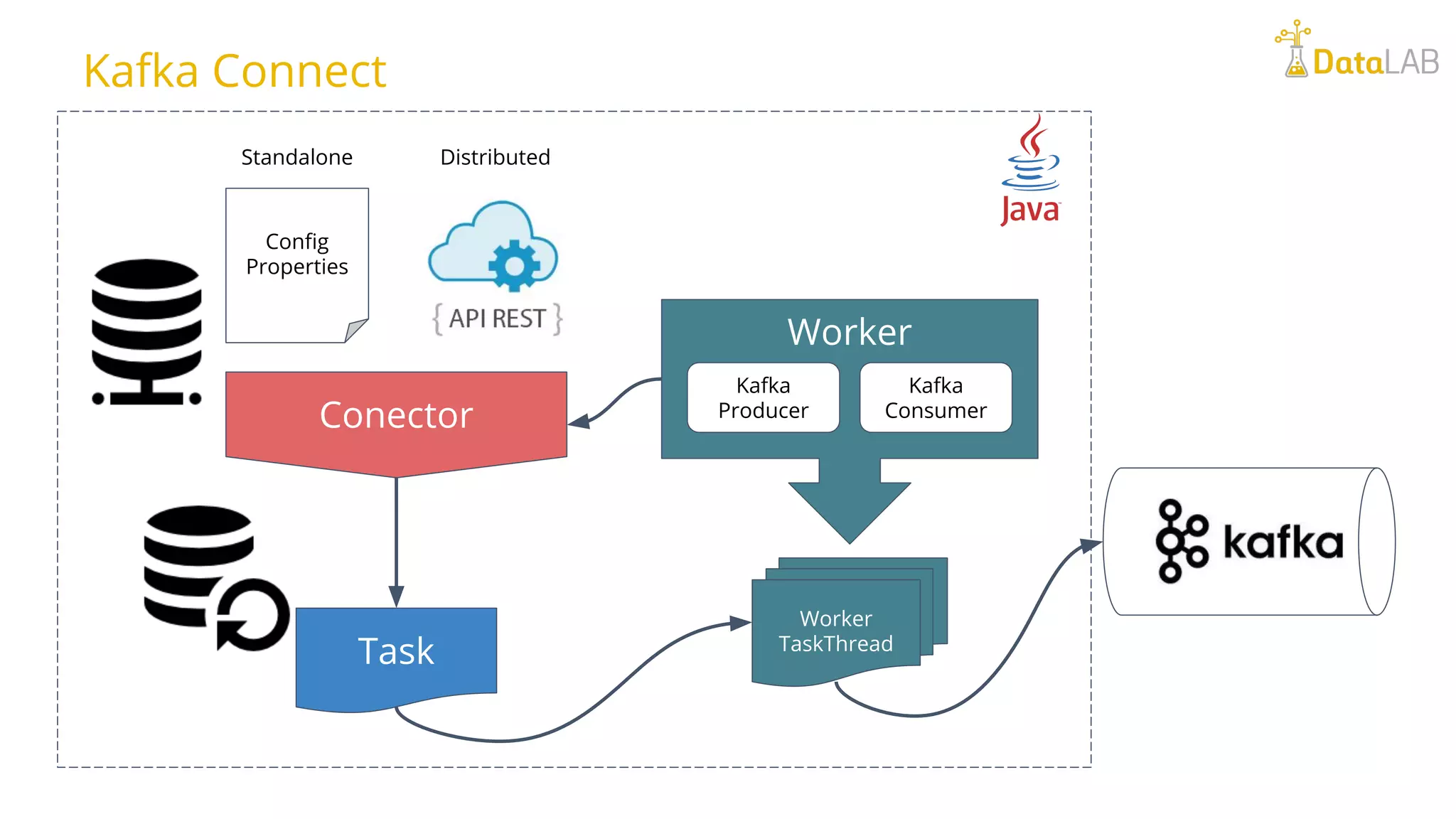

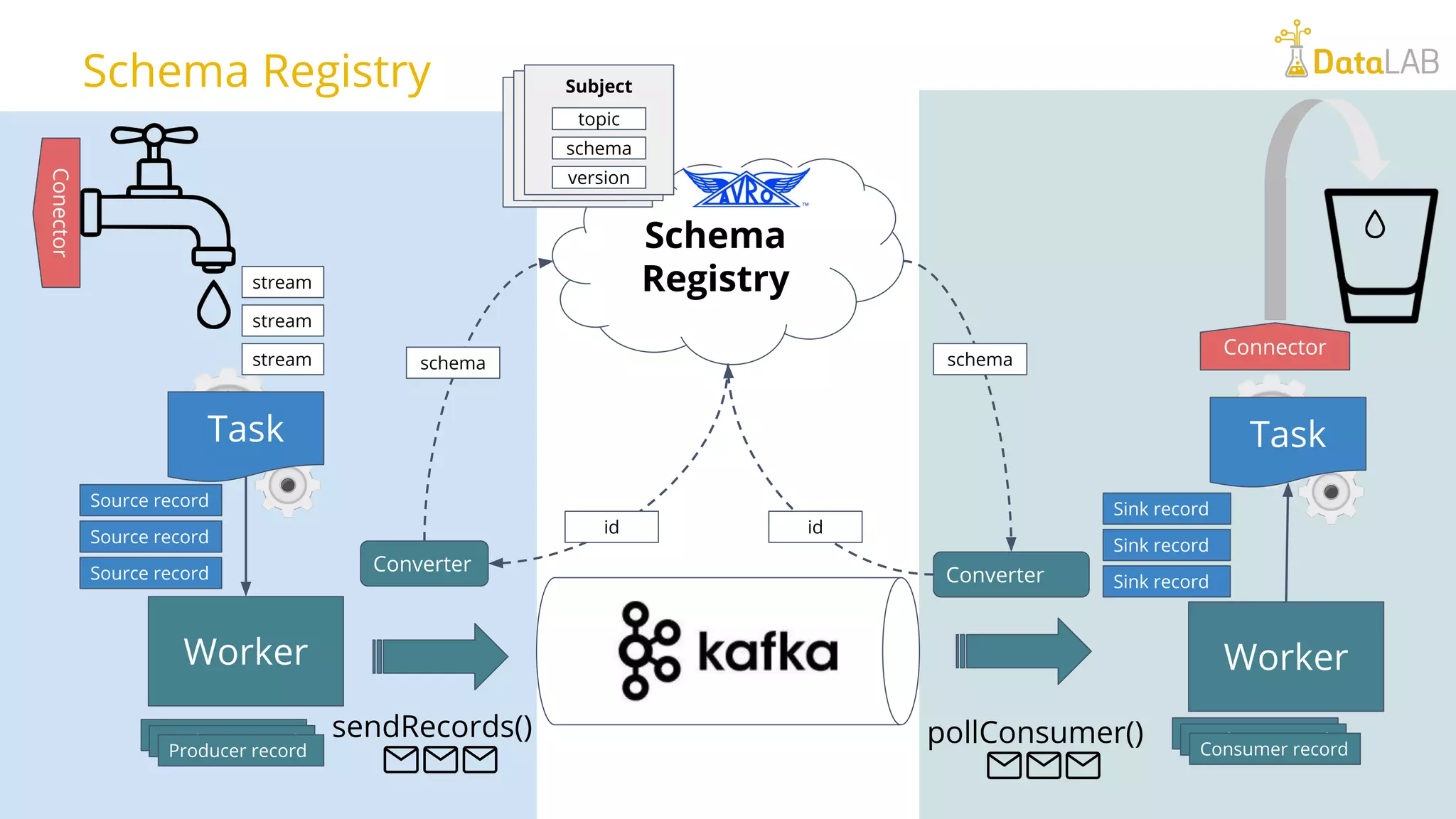

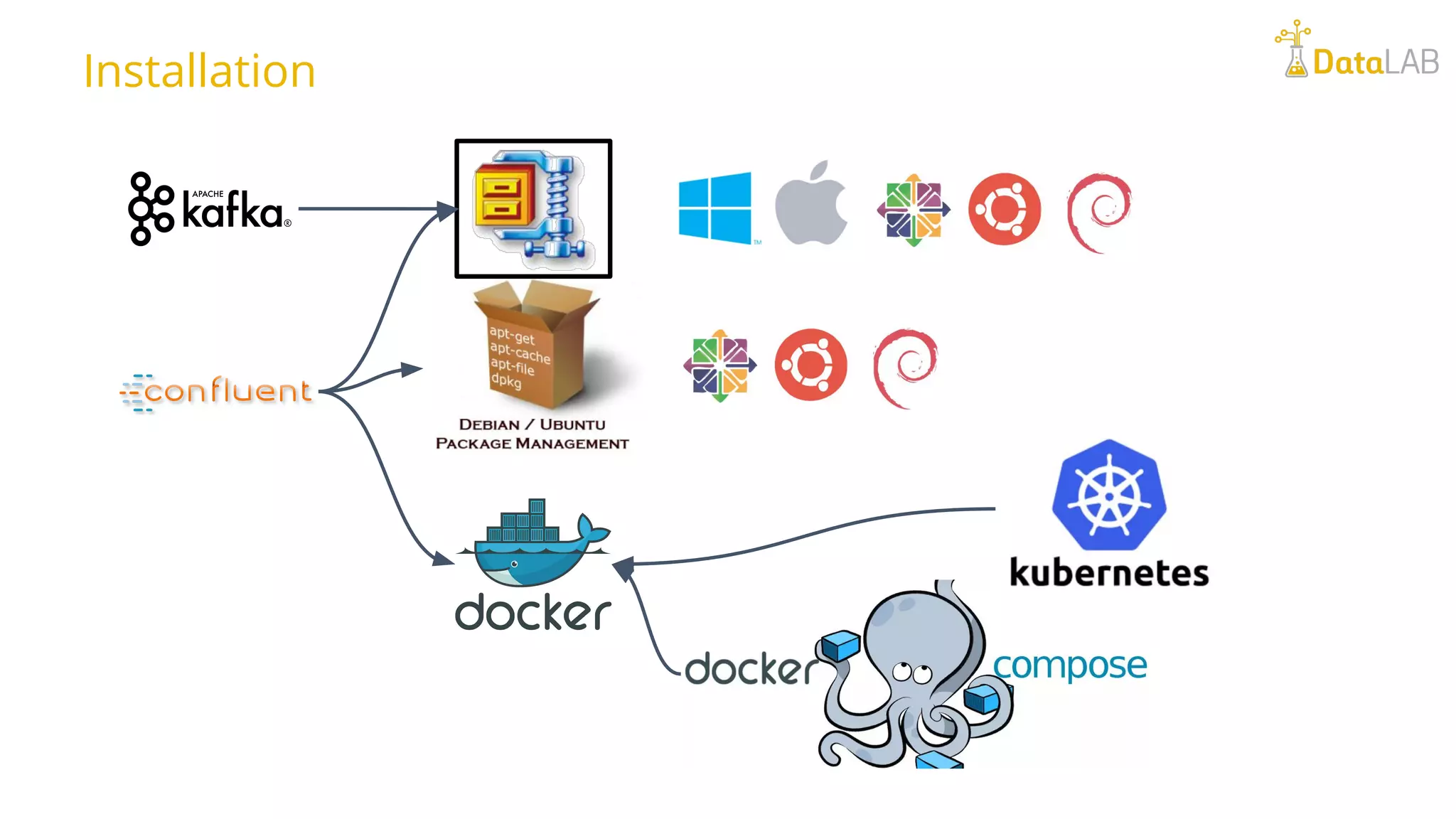

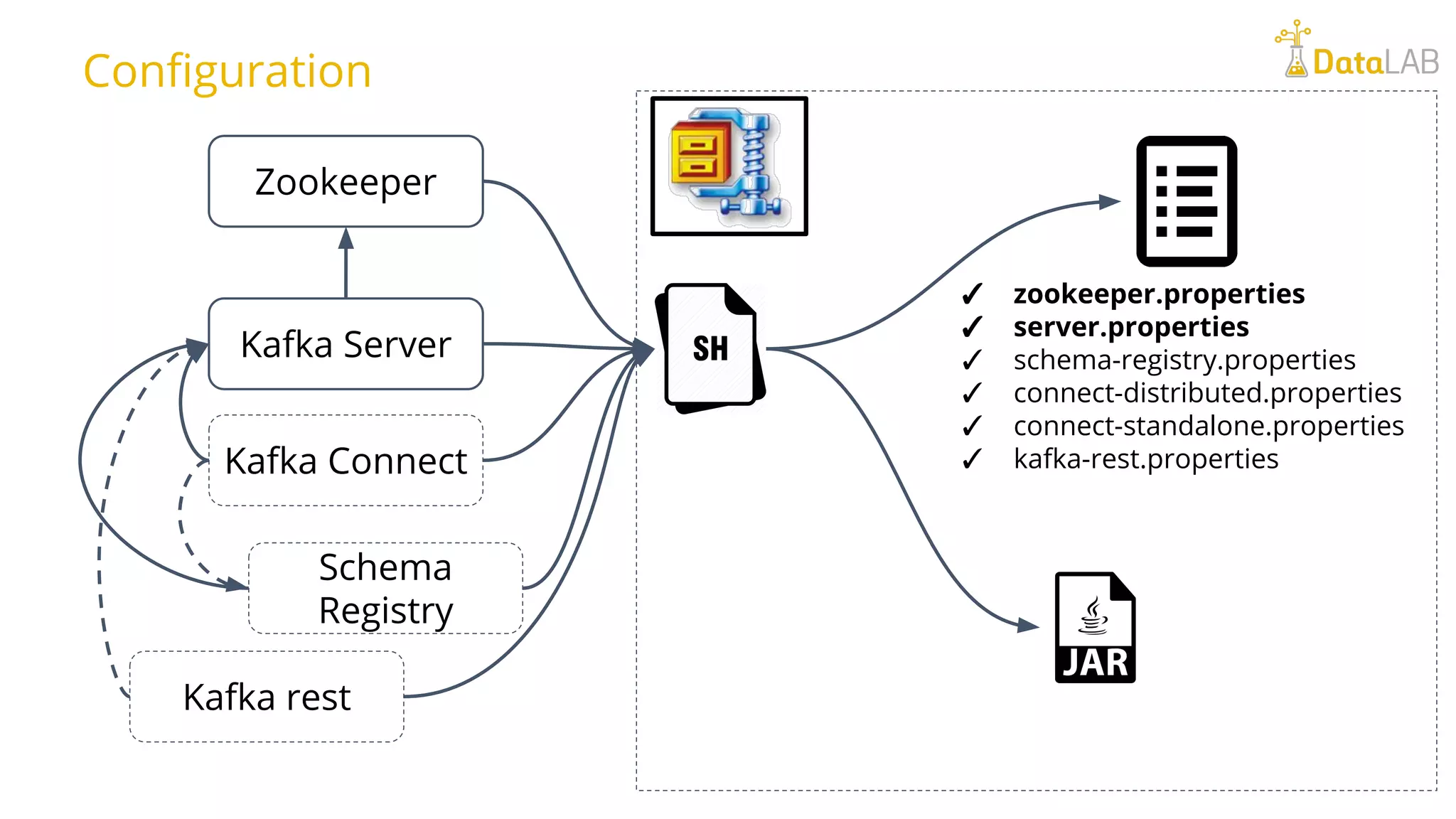

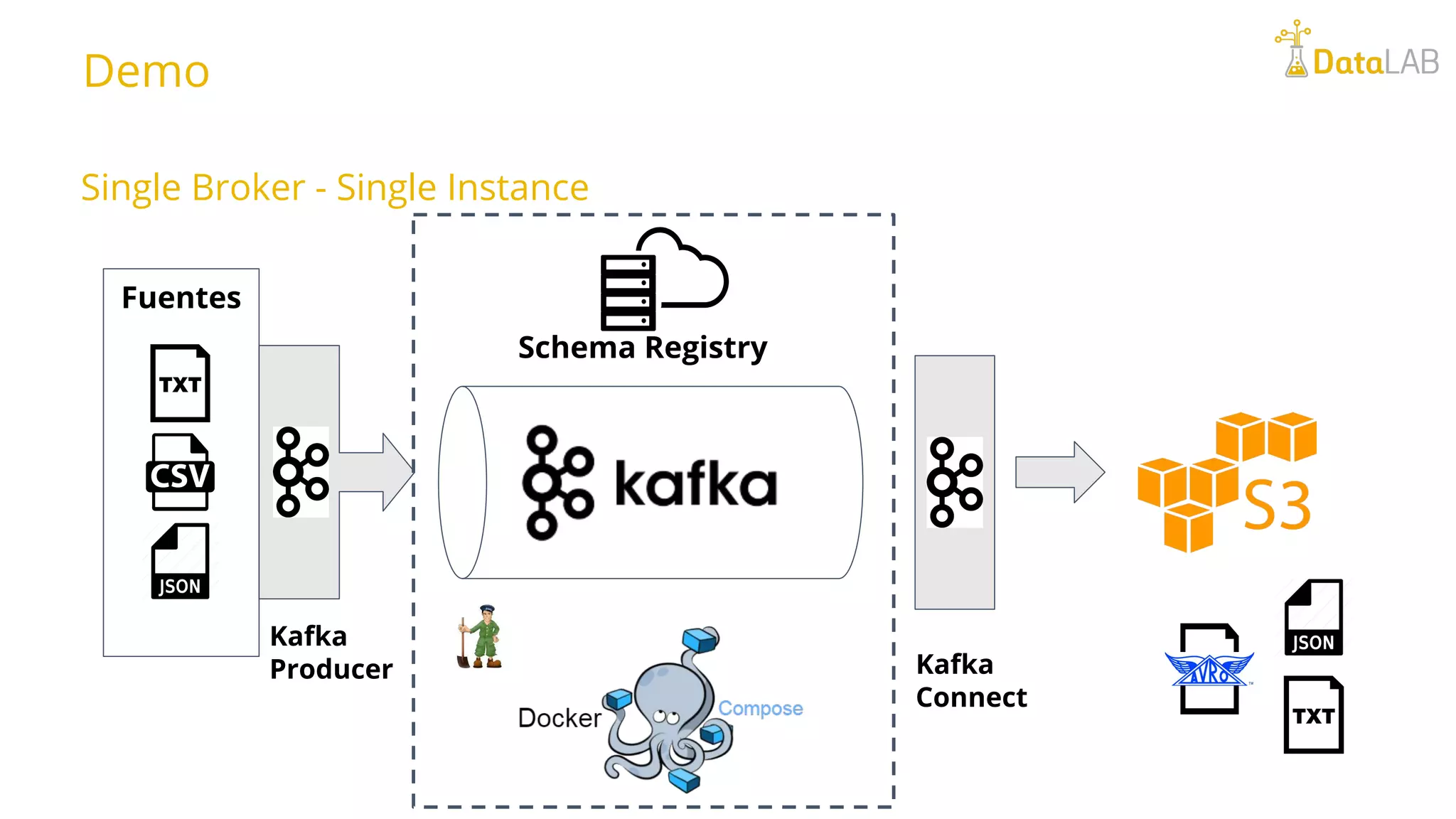

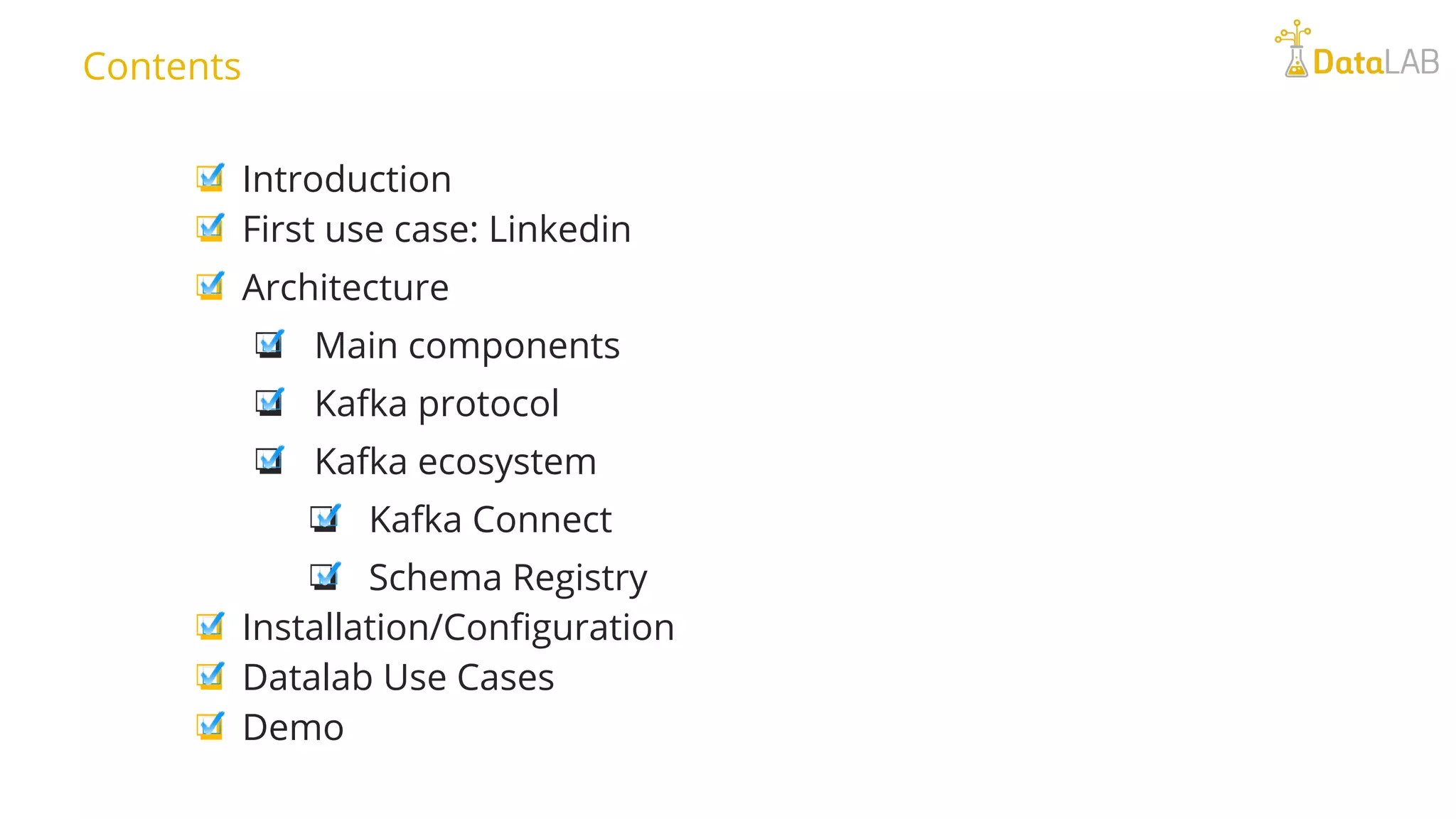

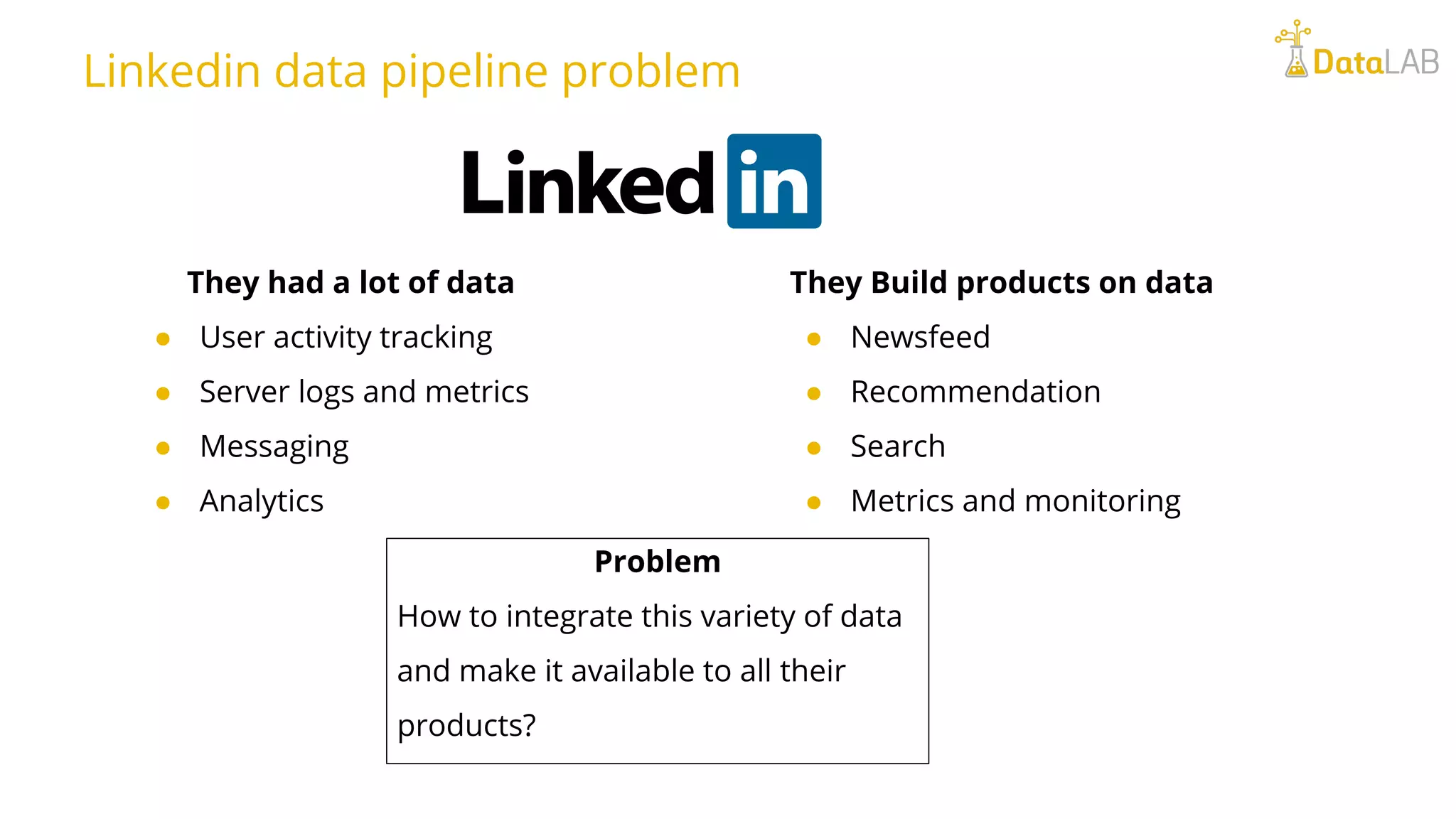

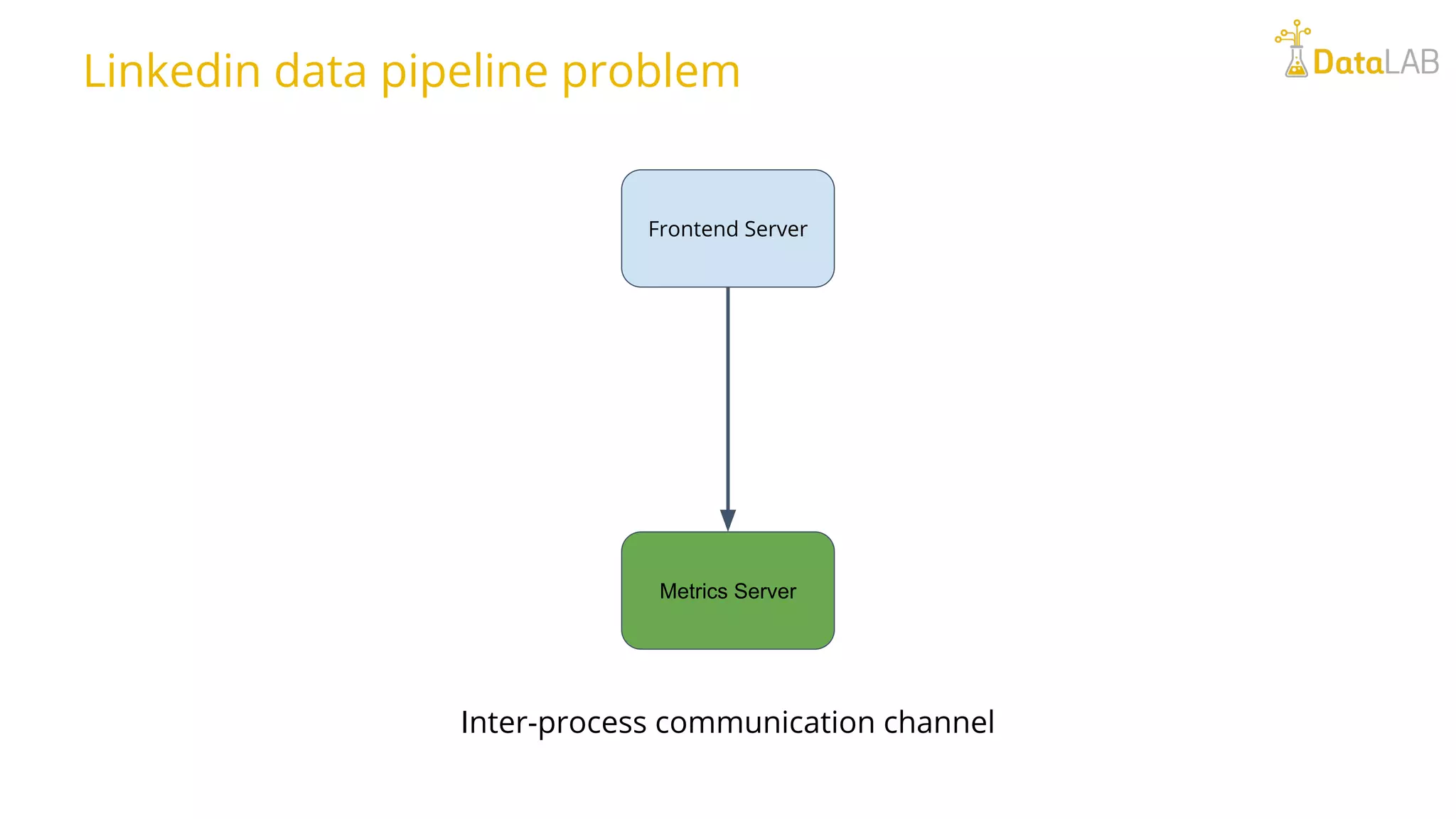

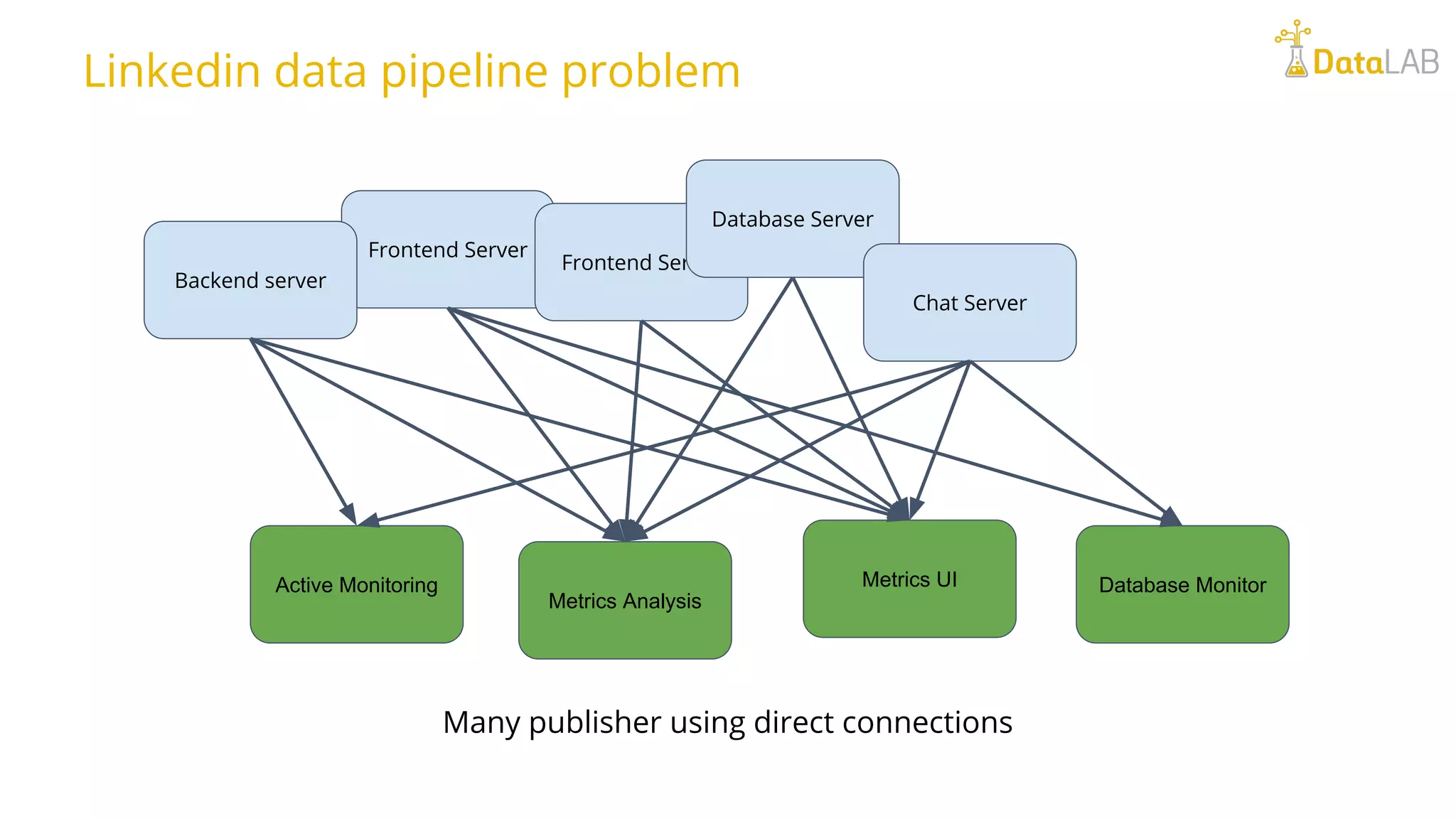

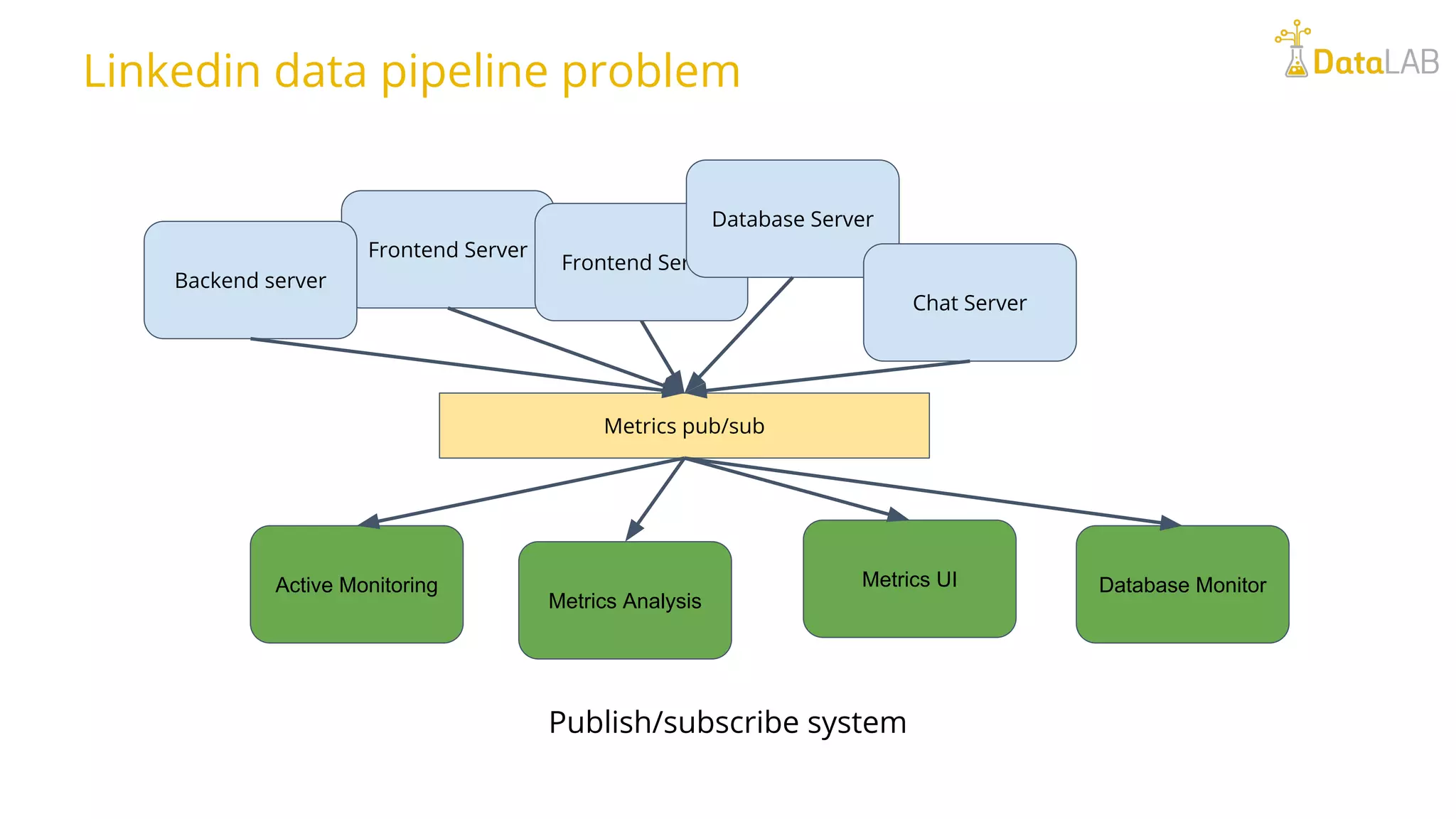

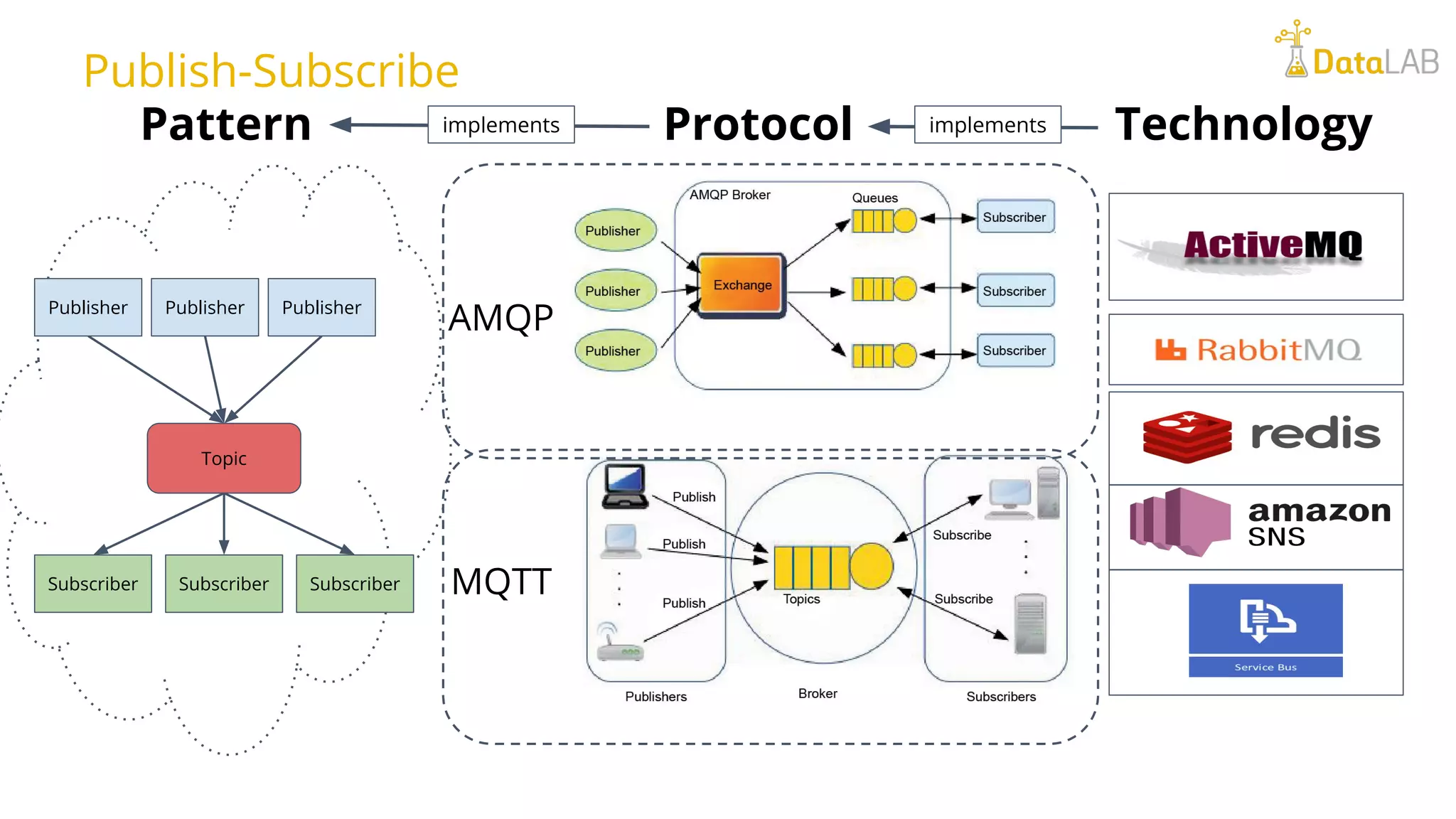

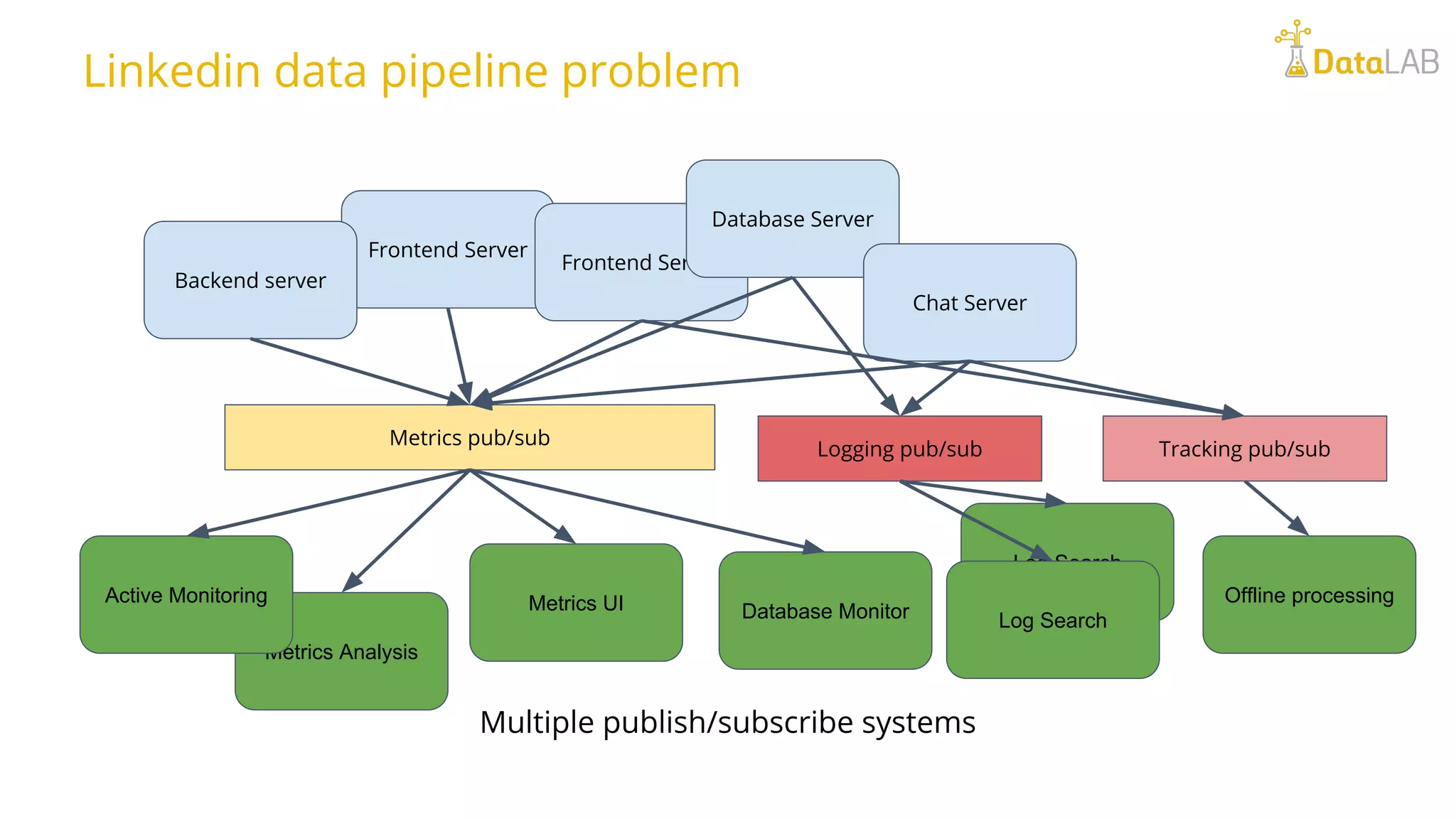

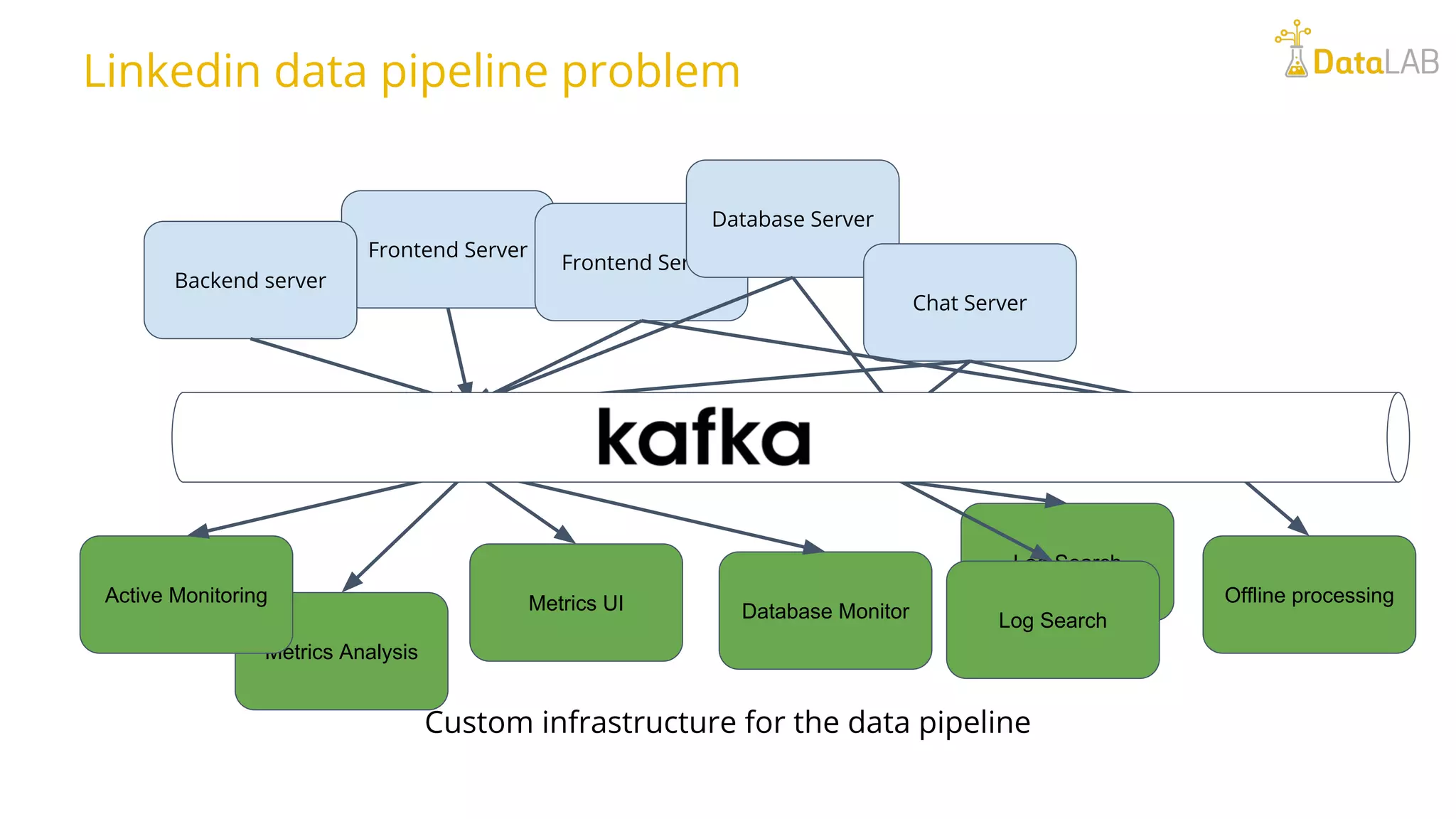

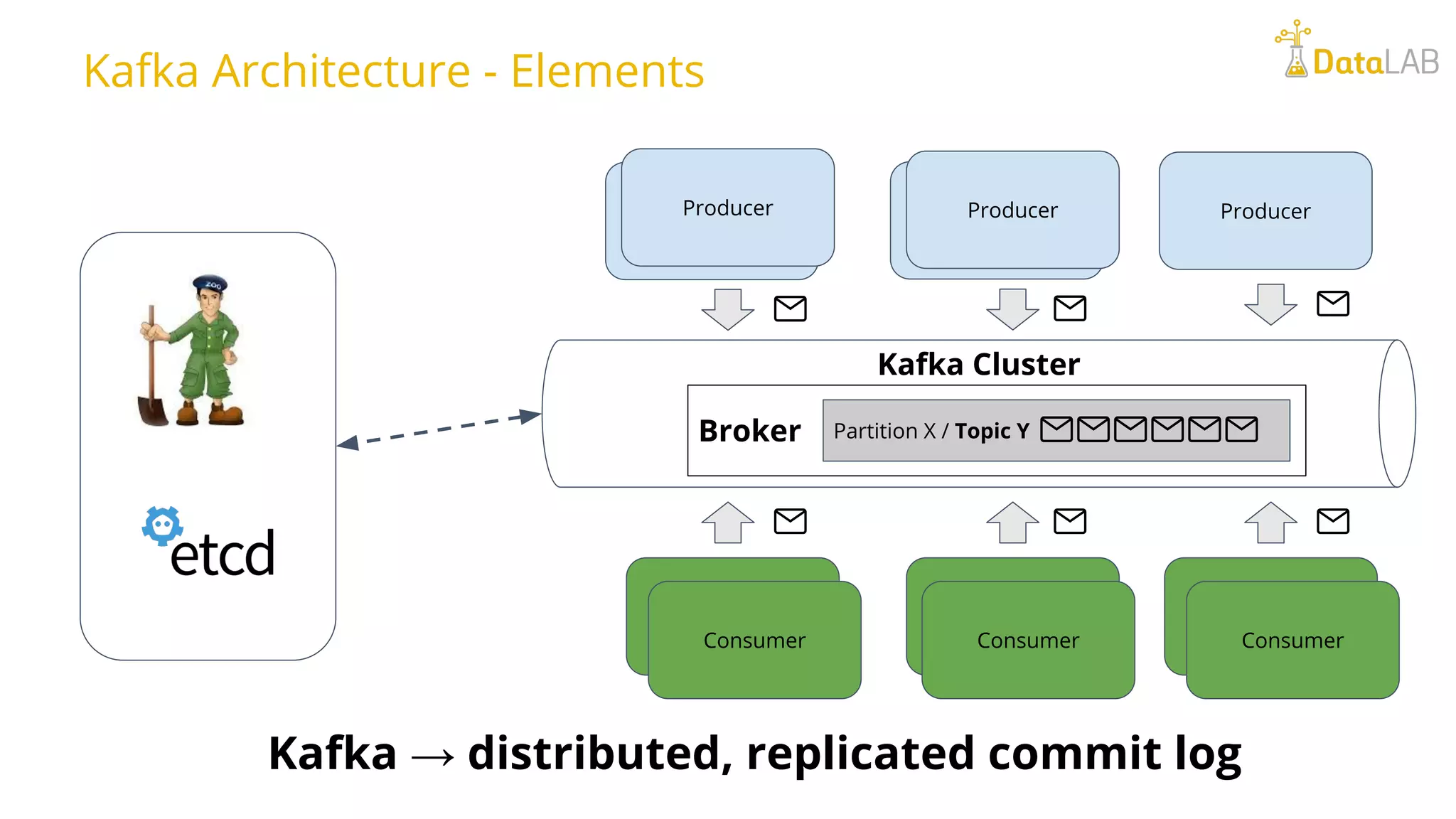

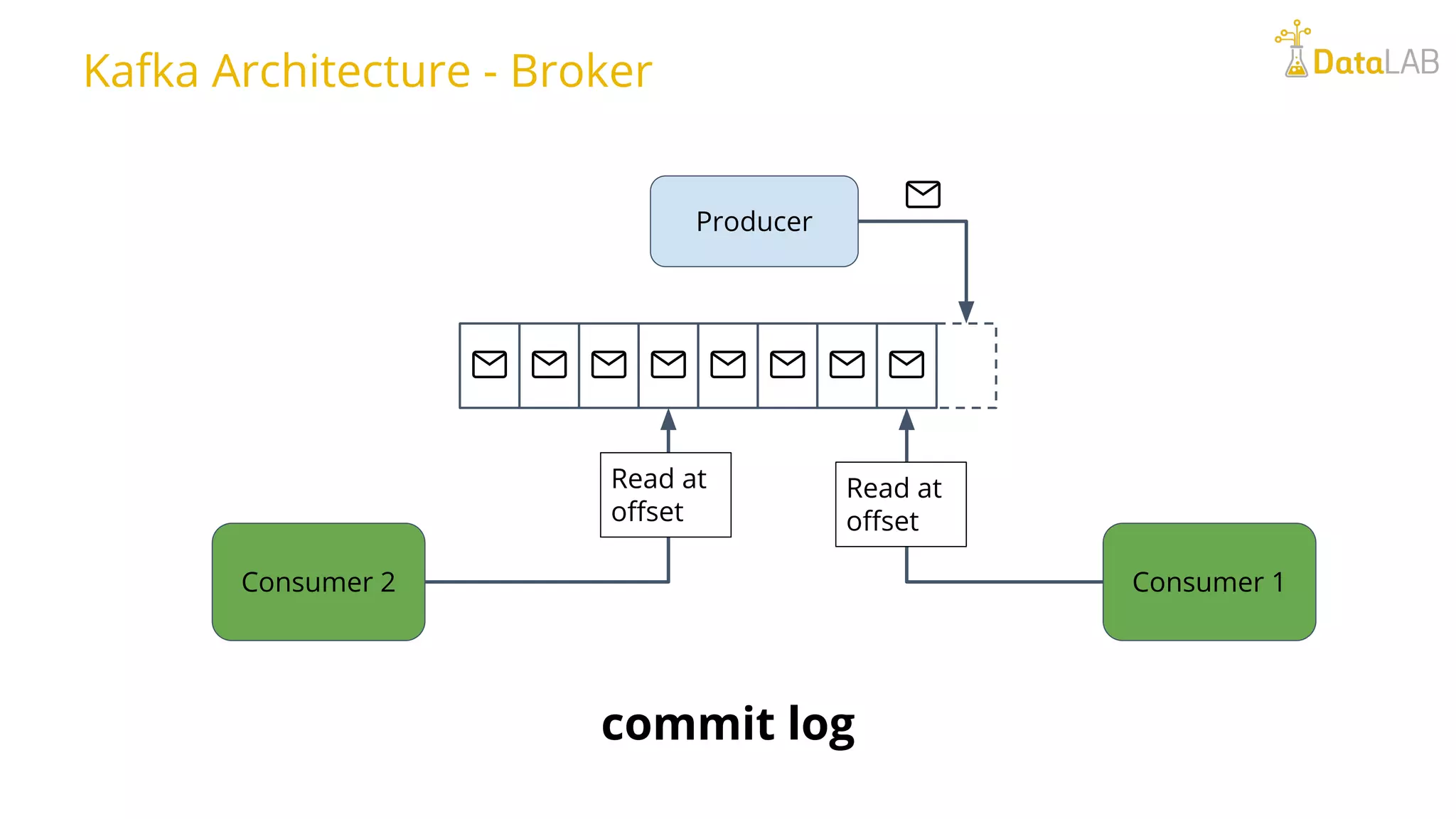

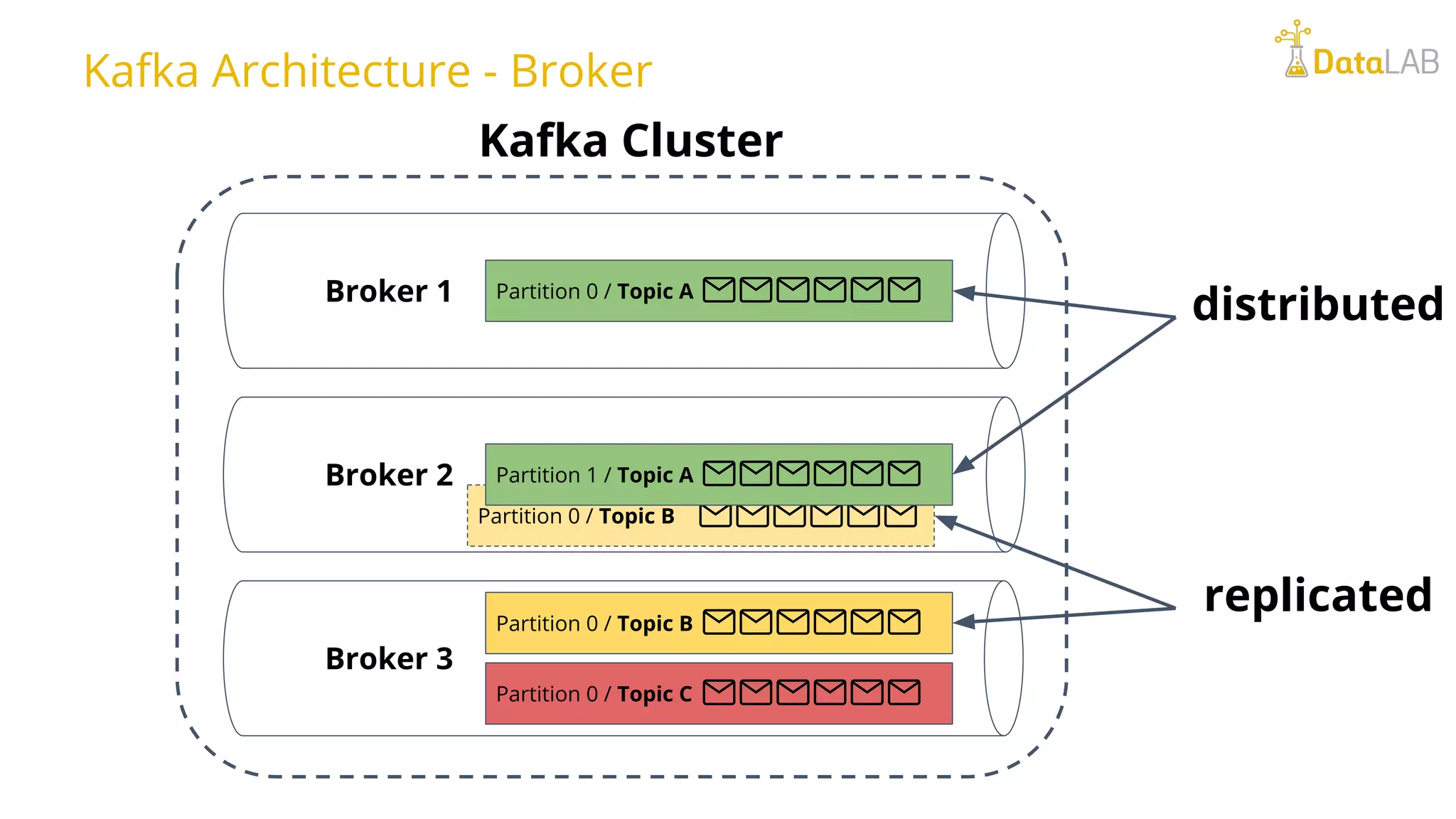

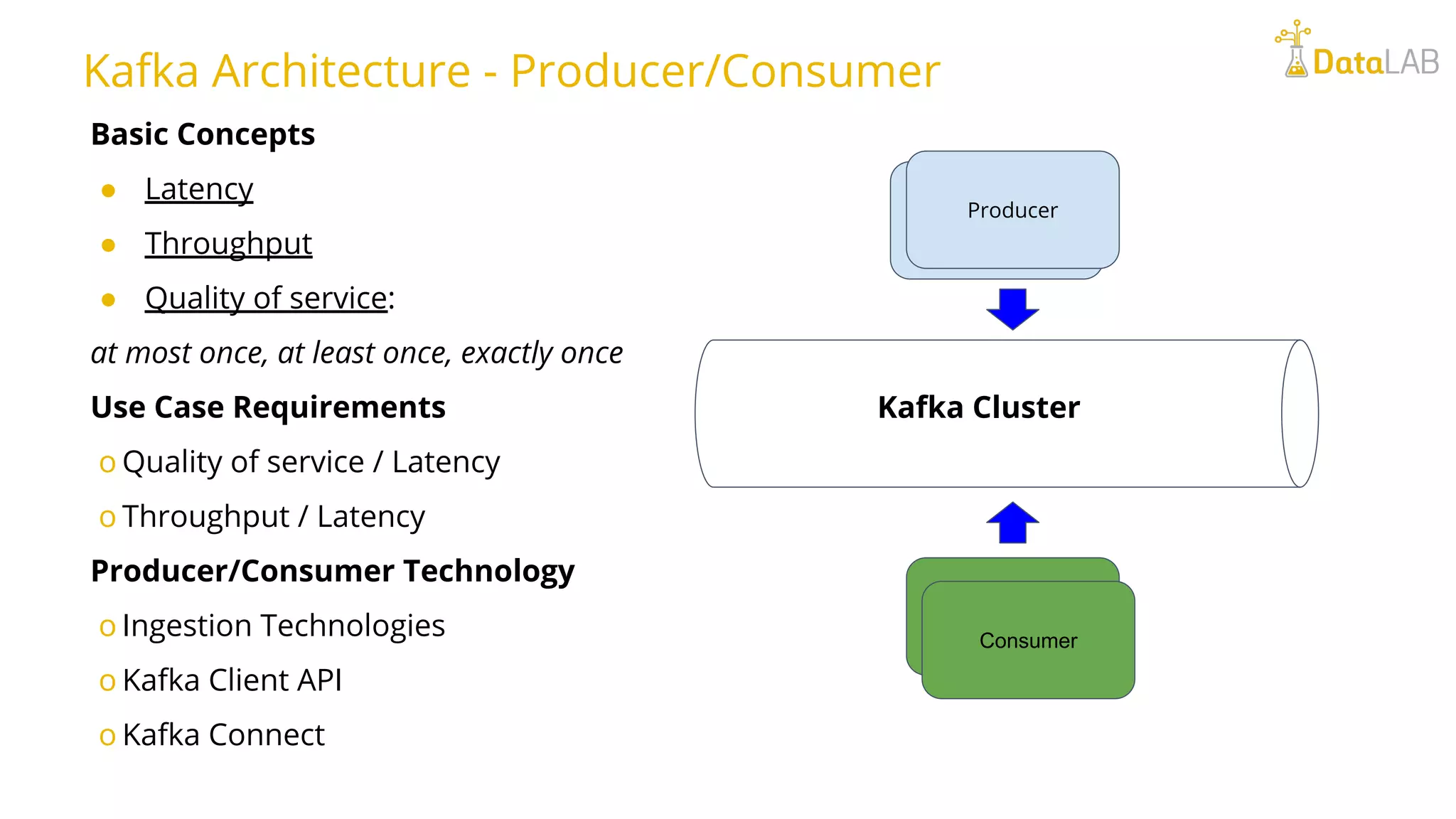

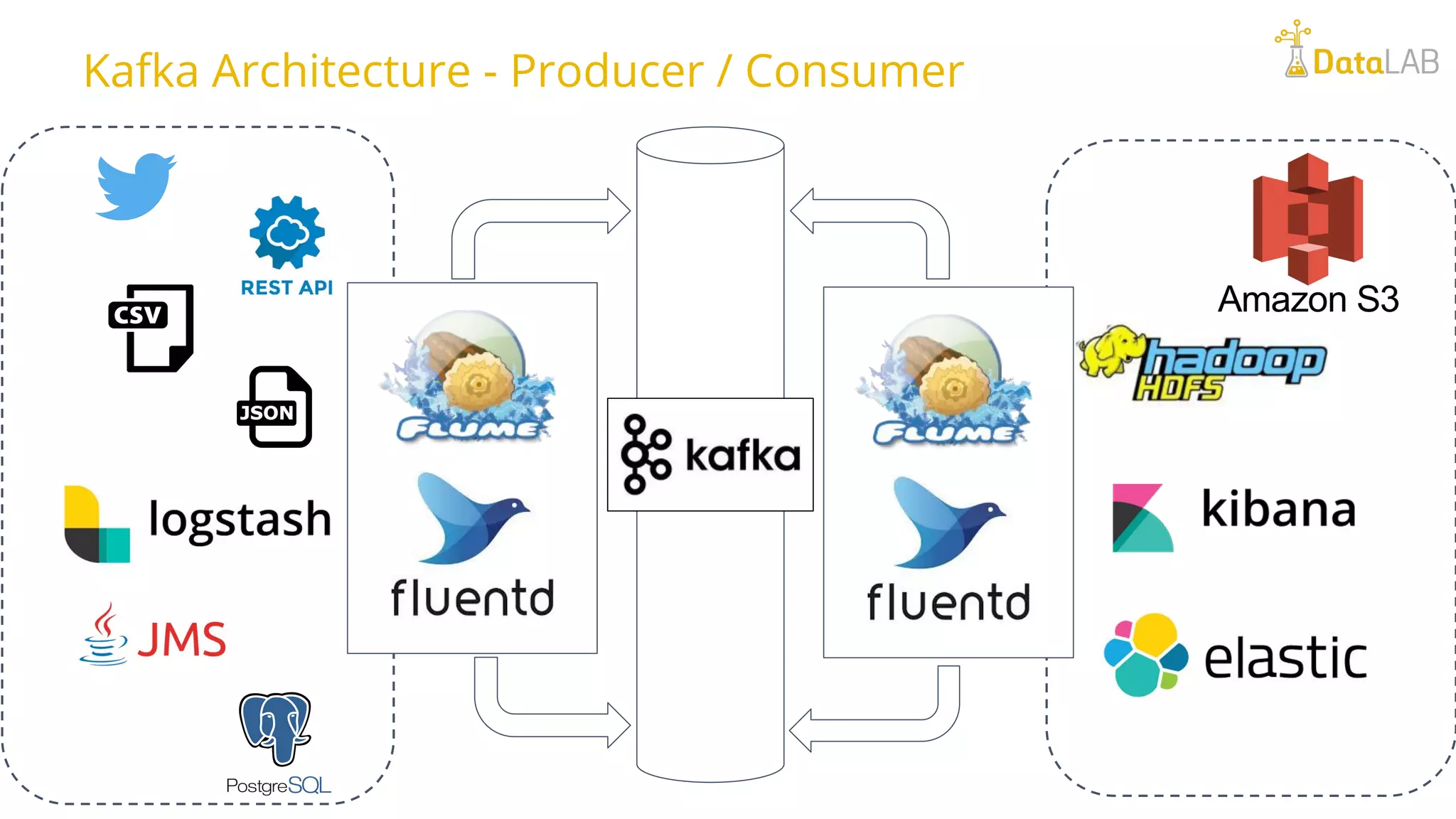

This document gives an overview of Apache Kafka: its main components, architecture, and ecosystem. It describes how LinkedIn used Kafka to solve its data pipeline problem by decoupling systems and allowing for horizontal scaling. The key elements of Kafka are producers, which publish data to topics; the Kafka cluster, which stores streams of records in a distributed, replicated commit log; and consumers, which subscribe to topics. Kafka Connect and the Schema Registry are also introduced as part of the Kafka ecosystem.
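The producer, commit log, and consumer roles described above can be illustrated with a small in-memory model. This is only a sketch under stated assumptions, not Kafka's implementation: `ToyTopic` and `ToyConsumer` are hypothetical names, replication and networking are left out, and `zlib.crc32` stands in for the hash used by Kafka's real partitioner.

```python
import zlib
from collections import defaultdict


class ToyTopic:
    """Hypothetical in-memory stand-in for a topic: one append-only log per partition."""

    def __init__(self, name, num_partitions=2):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def append(self, key, value):
        # Assumption: crc32 as the key hash, so equal keys map to the same partition.
        partition = zlib.crc32(key) % len(self.partitions)
        log = self.partitions[partition]
        log.append((key, value))
        return partition, len(log) - 1  # offset of the record just appended

    def read(self, partition, offset):
        # Reading never removes records; the log is only ever appended to.
        return self.partitions[partition][offset:]


class ToyConsumer:
    """Hypothetical consumer that tracks its own read offset per partition."""

    def __init__(self, topic):
        self.topic = topic
        self.offsets = defaultdict(int)

    def poll(self):
        records = []
        for partition in range(len(self.topic.partitions)):
            batch = self.topic.read(partition, self.offsets[partition])
            self.offsets[partition] += len(batch)
            records.extend(batch)
        return records
```

Because each consumer keeps its own offsets and the log is never mutated by reads, two consumers can read the same records independently, which is the decoupling the overview refers to.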

![Kafka Producer (Kafka Protocol - Producer): a Producer Record (Topic, optional Partition, optional Key, Value) is passed to Producer.send(record); the broker holding Topic A / Partition 0 returns metadata or an exception](https://image.slidesharecdn.com/slideshareapachekafka-180528132146/75/Introduction-to-Apache-Kafka-27-2048.jpg)

![Kafka Producer (Kafka Protocol - Producer): a Producer Record (Topic, optional Partition, optional Key, Value) passes through the Serializer and the Partitioner into a buffer of batches per topic and partition (Topic A / Partition 0, Topic B / Partition 0, Topic B / Partition 1); the Sender Thread sends the batches; on failure the send is retried or ends in an exception; on commit it returns metadata (Topic, Partition, Offset)](https://image.slidesharecdn.com/slideshareapachekafka-180528132146/75/Introduction-to-Apache-Kafka-28-2048.jpg)
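The producer path shown in the slide (serializer, partitioner, per-partition batches, sender with retry) can be sketched roughly as follows. This is a simplified illustration, not the Kafka client: `ToyProducer`, `flush`, and the `broker_append` callback are hypothetical names, the sender runs synchronously rather than on a background thread, and `zlib.crc32` is assumed in place of the real partitioner's hash.

```python
import zlib
from collections import defaultdict
from itertools import count


class ToyProducer:
    """Hypothetical model of the slide's flow; not the real Kafka producer API."""

    def __init__(self, partitions_per_topic, max_retries=2):
        self.partitions_per_topic = partitions_per_topic  # e.g. {"A": 1, "B": 2}
        self.max_retries = max_retries
        self.batches = defaultdict(list)  # (topic, partition) -> buffered records
        self._round_robin = count()

    def send(self, topic, value, key=None, partition=None):
        # Serializer step: turn key and value into bytes.
        key_bytes = None if key is None else key.encode("utf-8")
        value_bytes = value.encode("utf-8")
        # Partitioner step: explicit partition wins, then key hash, then round robin.
        if partition is None:
            n = self.partitions_per_topic[topic]
            if key_bytes is not None:
                partition = zlib.crc32(key_bytes) % n  # assumption: crc32 as the hash
            else:
                partition = next(self._round_robin) % n
        # Buffer step: records accumulate in one batch per topic/partition.
        self.batches[(topic, partition)].append((key_bytes, value_bytes))
        return topic, partition

    def flush(self, broker_append):
        """Sender step: ship each batch, retrying on ConnectionError."""
        results = []
        for (topic, partition), batch in list(self.batches.items()):
            for attempt in range(self.max_retries + 1):
                try:
                    base_offset = broker_append(topic, partition, batch)
                except ConnectionError as exc:
                    if attempt == self.max_retries:
                        # Retries exhausted: report the exception for this batch.
                        results.append((topic, partition, exc))
                else:
                    # Committed: report metadata (topic, partition, base offset).
                    results.append((topic, partition, base_offset))
                    break
            del self.batches[(topic, partition)]
        return results
```

Each entry returned by `flush` carries either the base offset of a committed batch or the exception that ended the retries, mirroring the metadata/exception outcome in the diagram.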