Main Objective: Implement matrix addition using sequential, OpenMP, and MPI models on a 4-core system. Compare performance, scalability, and efficiency across approaches. Learning Outcomes: Sequential Execution – establish baseline time & complexity. OpenMP (Shared Memory) – multi-threading, synchronization, and overhead analysis. MPI Point-to-Point – message passing with Send/Recv, handle deadlocks & partitioning. MPI Collective – use Scatter/Gather/Bcast/Reduce for simpler, scalable communication. Goal: Build hands-on understanding of trade-offs in parallel programming models.

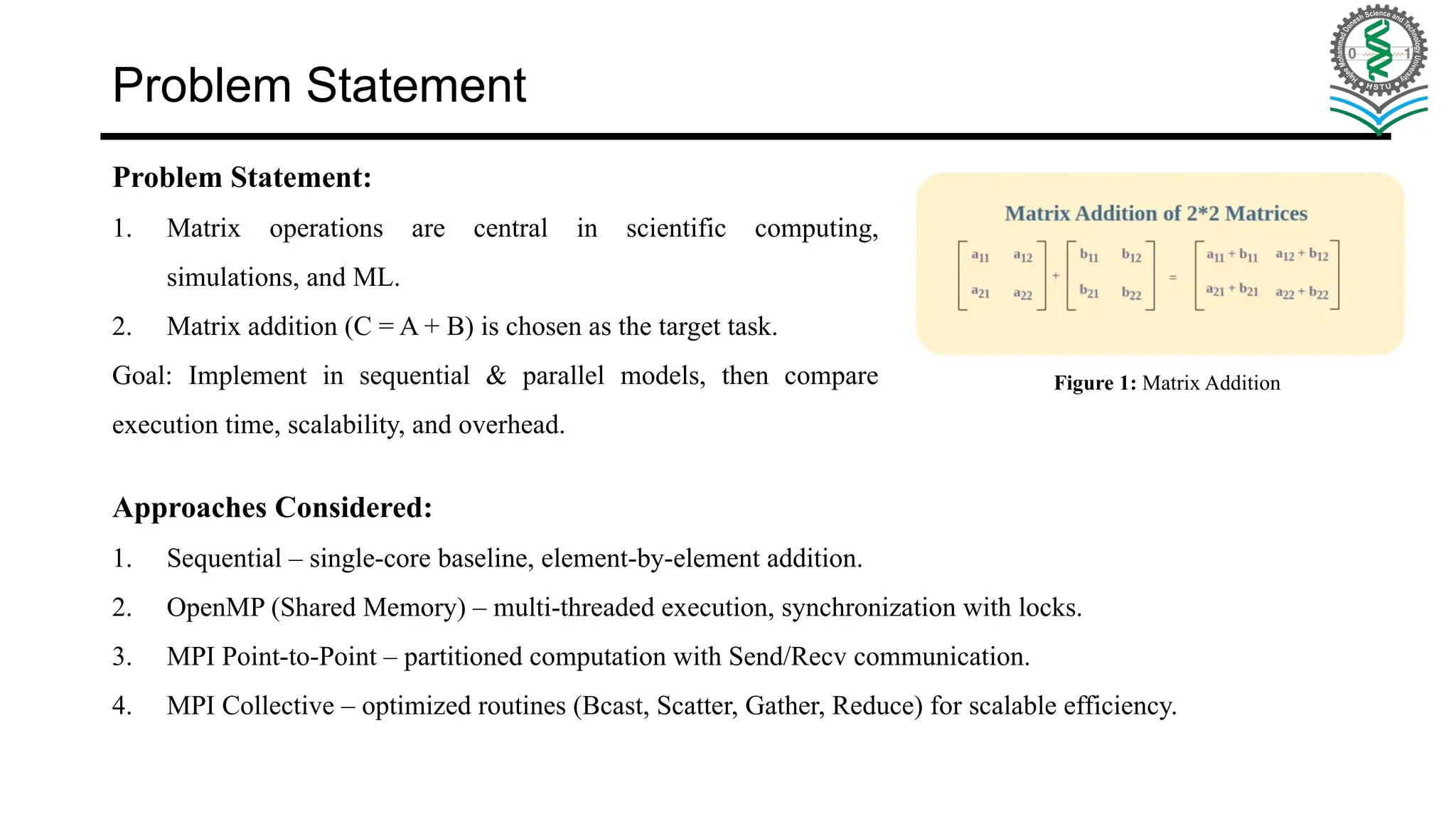

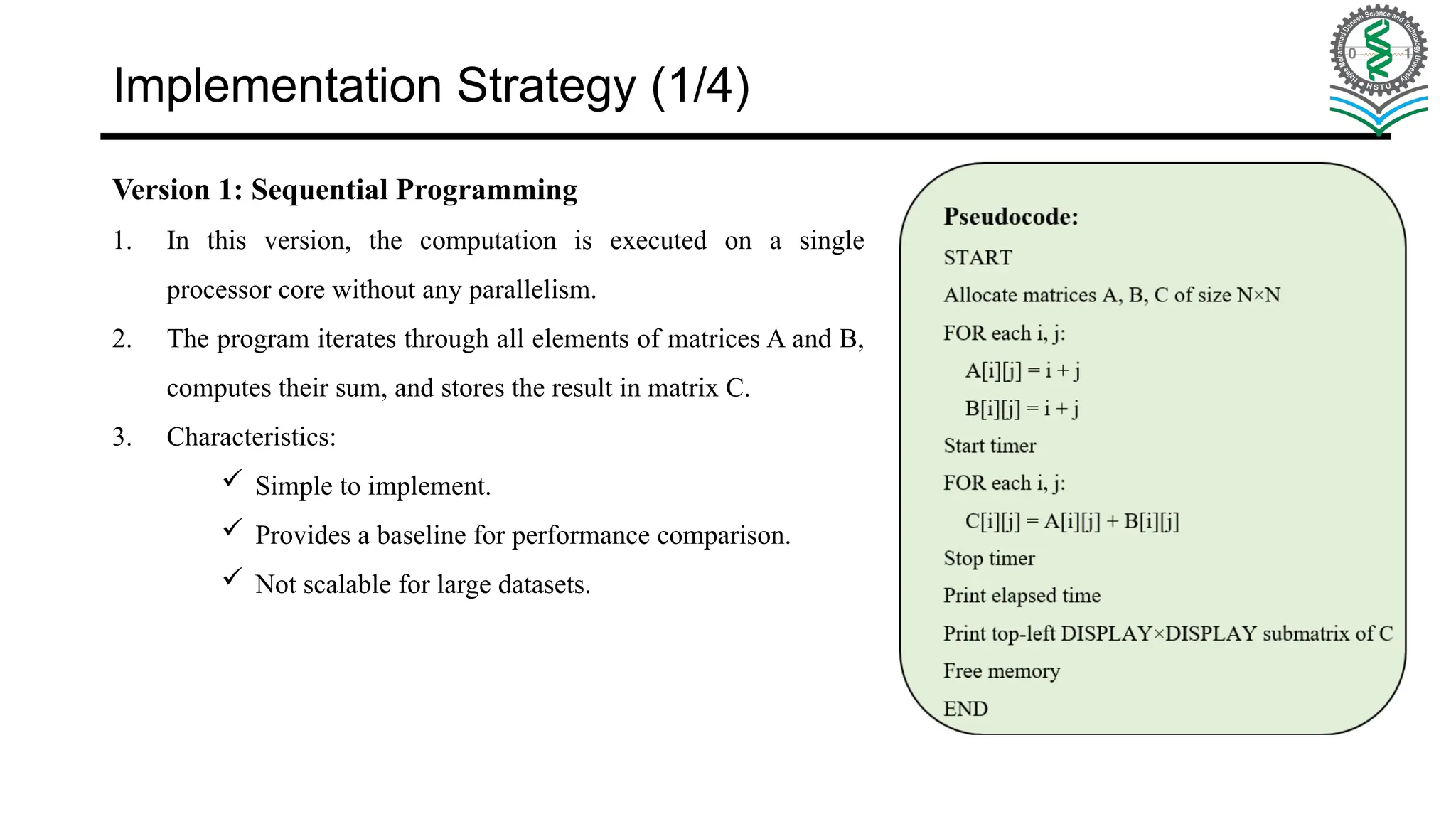

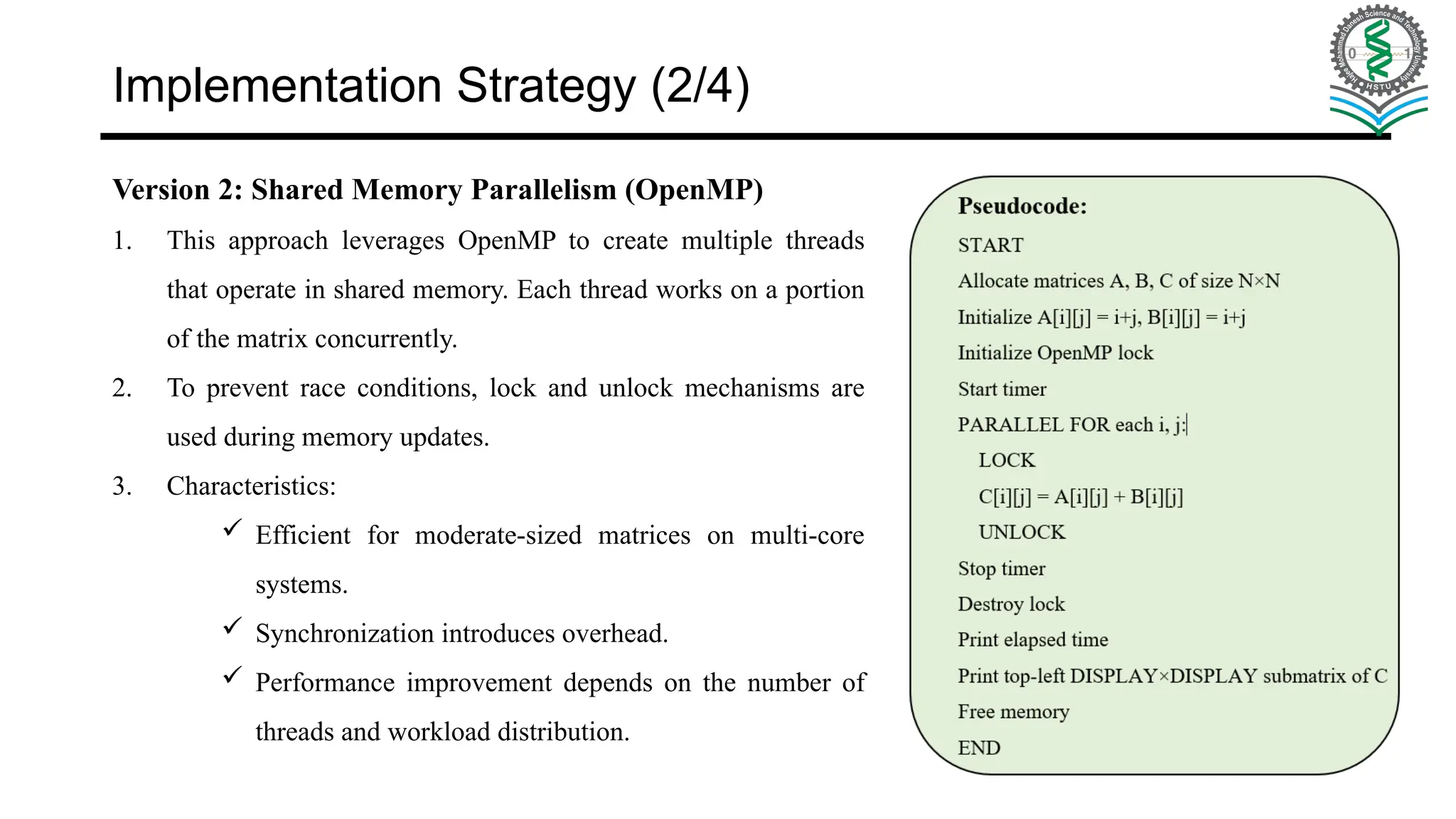

![References [1] M. J. Quinn, Parallel Programming in C with MPI and OpenMP. New York, NY, USA: McGraw-Hill, 2004. [2] W. Gropp, E. Lusk, and A. Skjellum, Using MPI: Portable Parallel Programming with the Message Passing Interface, 3rd ed. Cambridge, MA, USA: MIT Press, 2014. [3] OpenMP Architecture Review Board, OpenMP Application Programming Interface, Version 5.2, Nov. 2023. [Online]. Available: https://www.openmp.org [4] Message Passing Interface Forum, MPI: A Message-Passing Interface Standard, Version 4.0, June 2021. [Online]. Available: https://www.mpi-forum.org [5] B. Wilkinson and M. Allen, Parallel Programming: Techniques and Applications Using Networked Workstations and Parallel Computers, 2nd ed. Upper Saddle River, NJ, USA: Pearson, 2005. [6] I. Foster, Designing and Building Parallel Programs: Concepts and Tools for Parallel Software Engineering. Reading, MA, USA: Addison-Wesley, 1995. [7] Lawrence Livermore National Laboratory (LLNL), MPI Tutorial. [Online]. Available: https://hpc-tutorials.llnl.gov/mpi/](https://image.slidesharecdn.com/exploringparallelprogrammingmodelsformatrixcomputation-251026112224-d83ad471/75/Exploring-Parallel-Programming-Models-for-Matrix-Computation-pptx-22-2048.jpg)