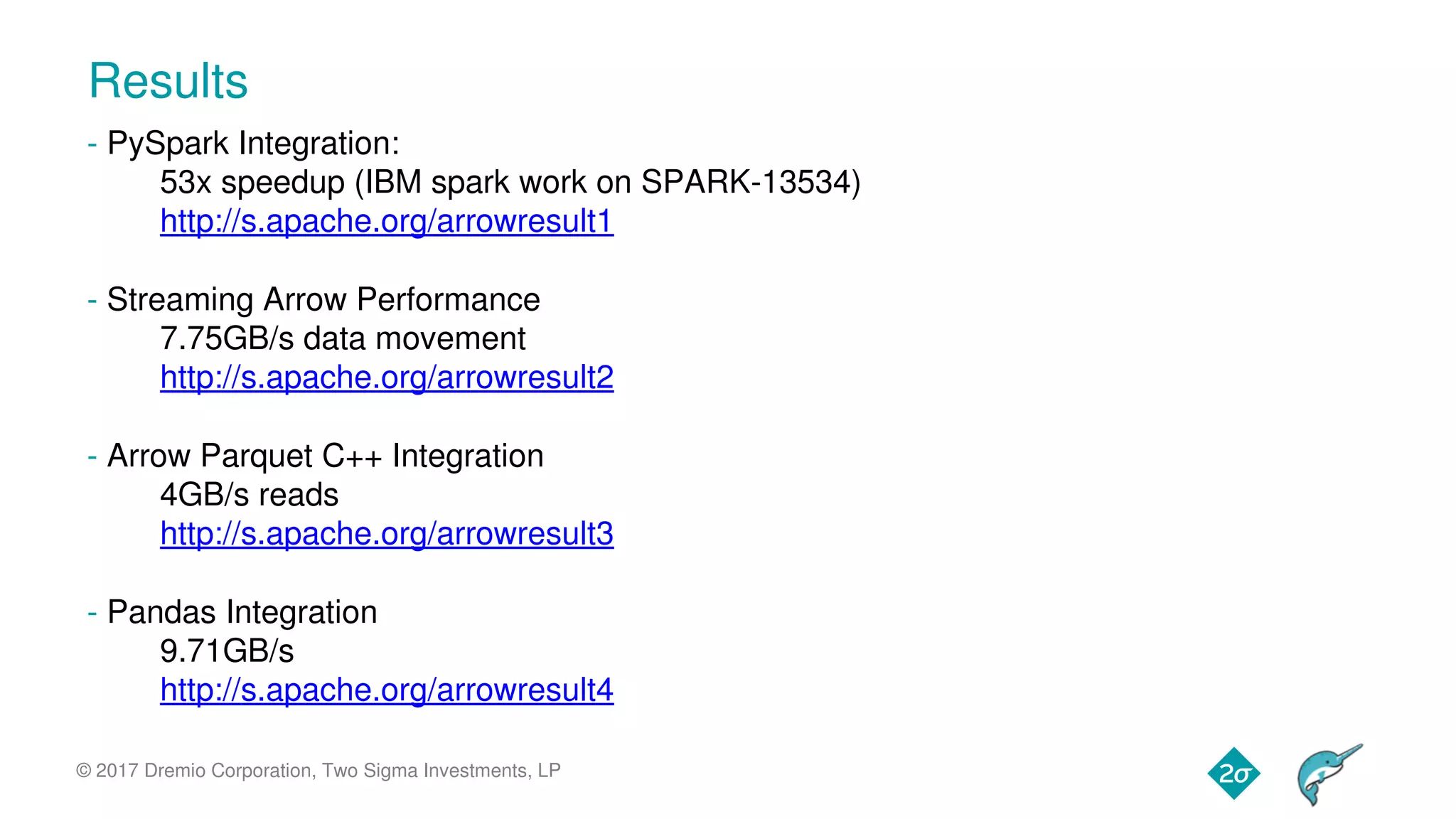

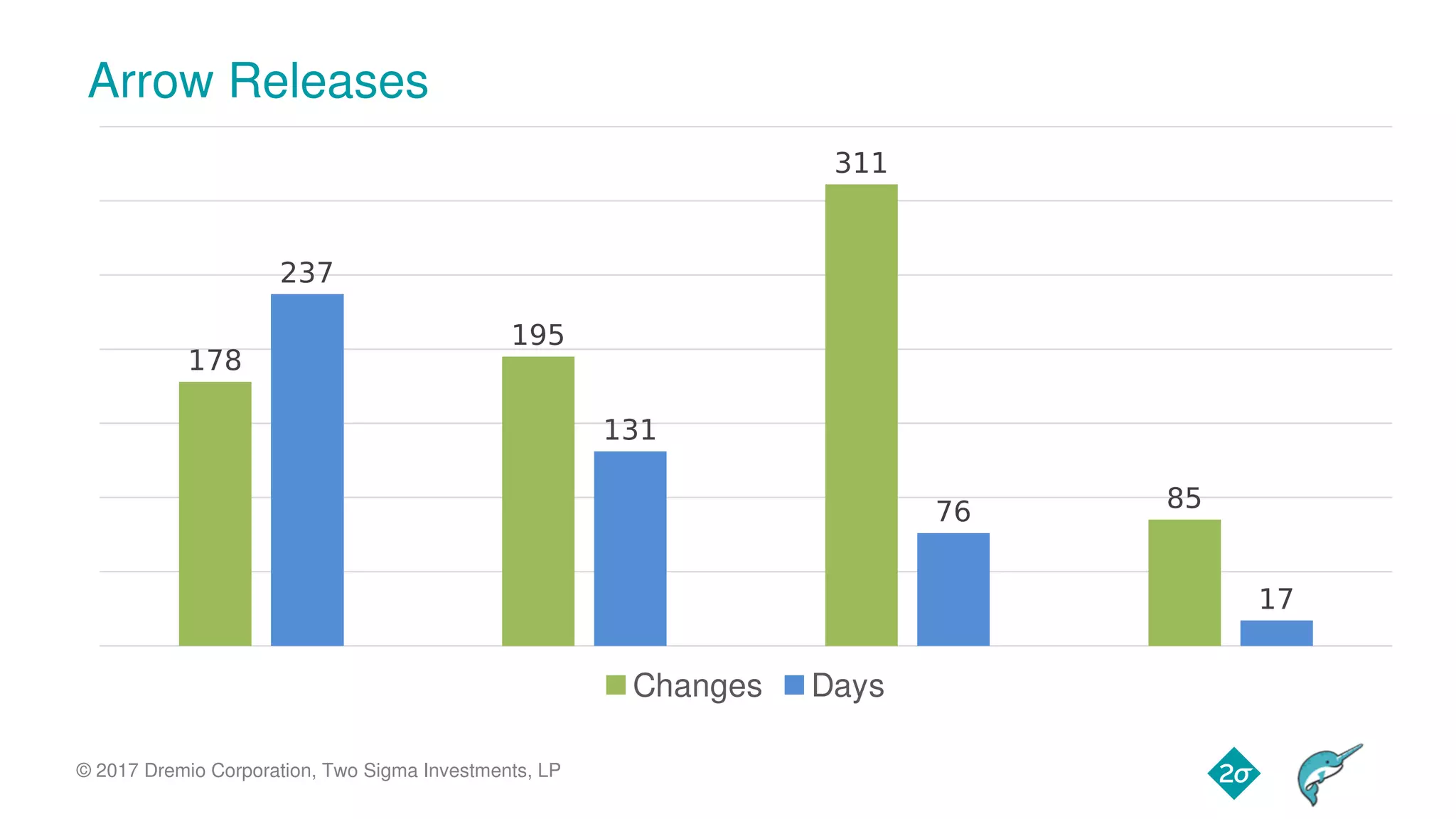

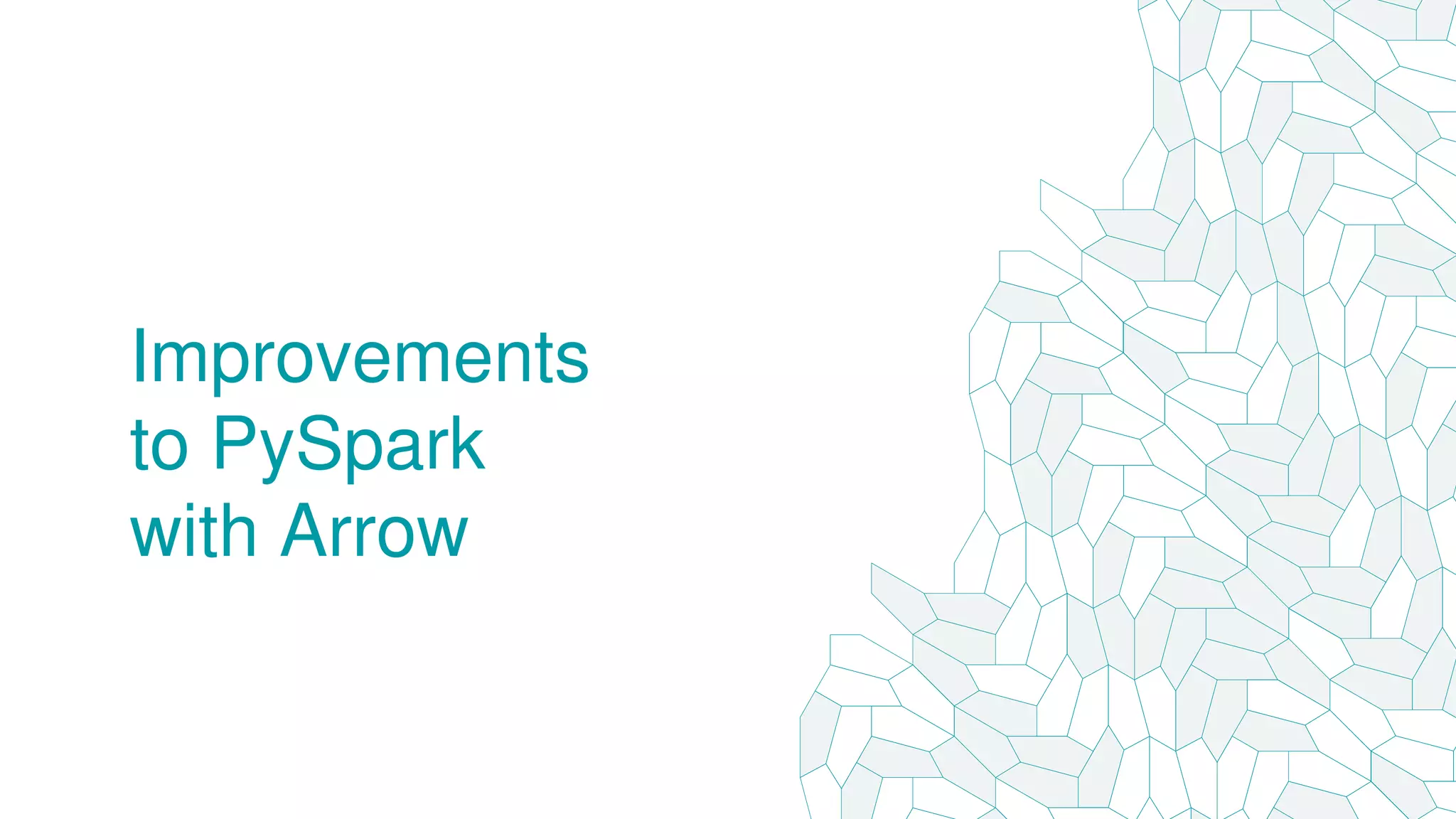

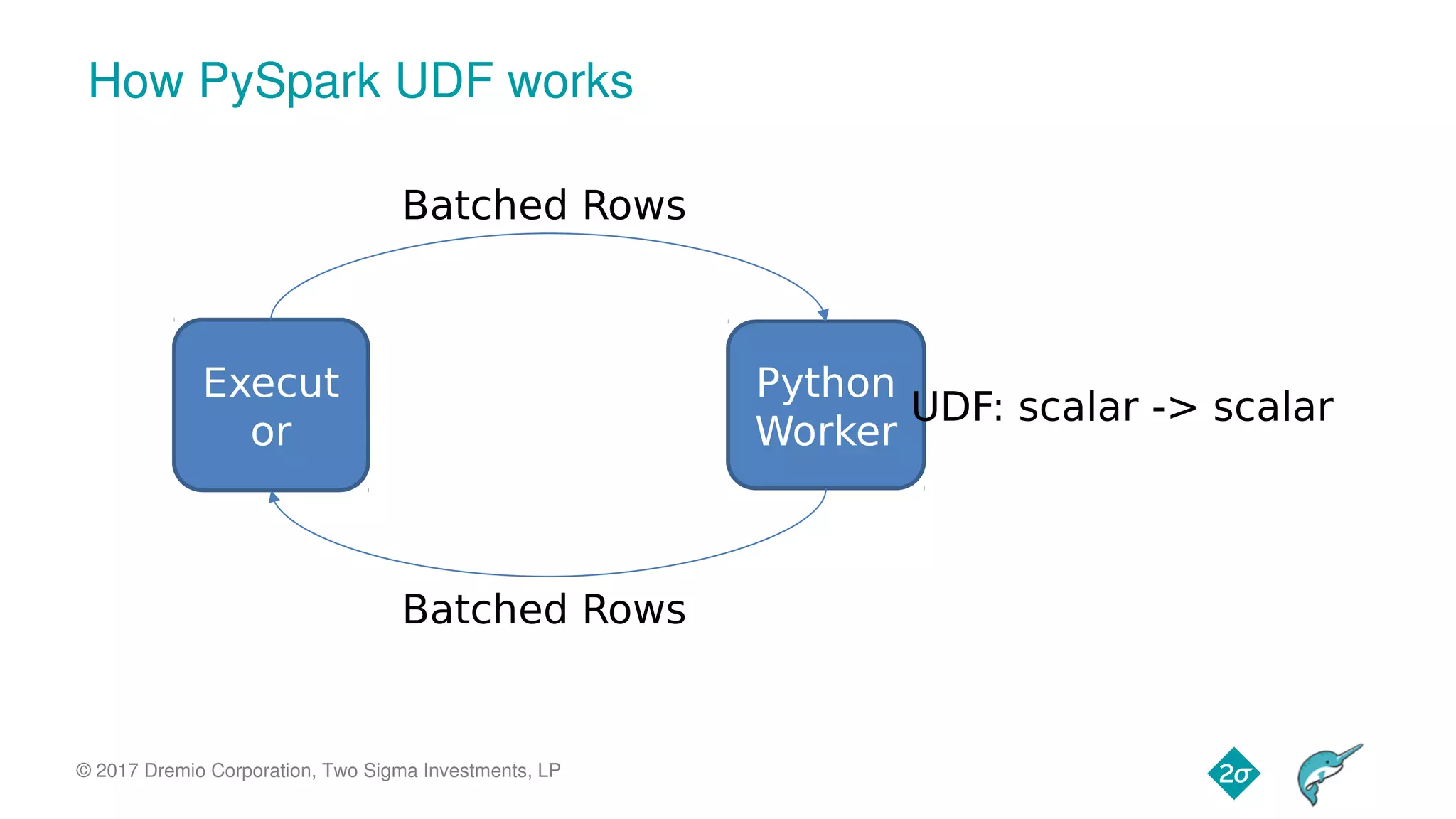

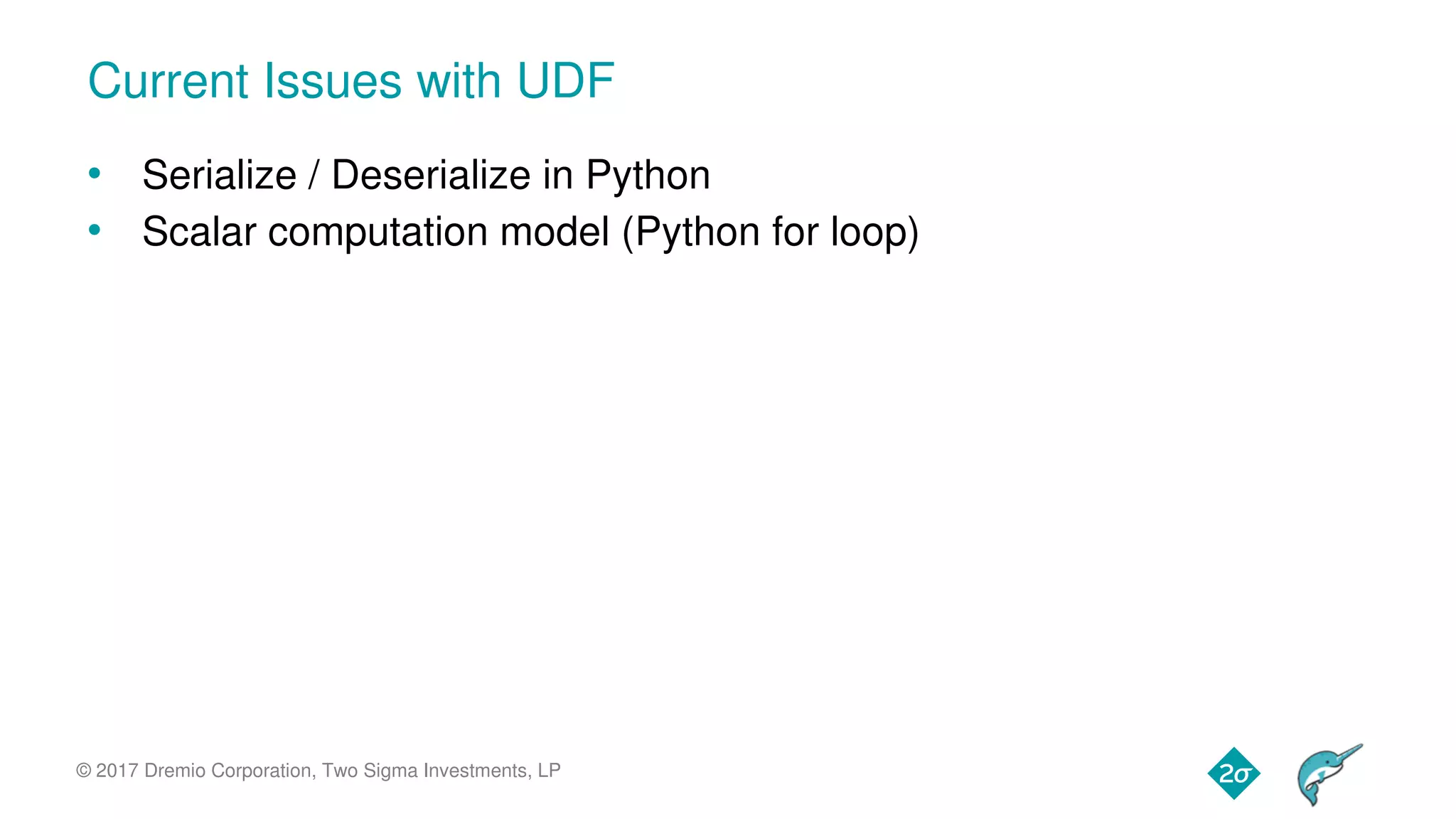

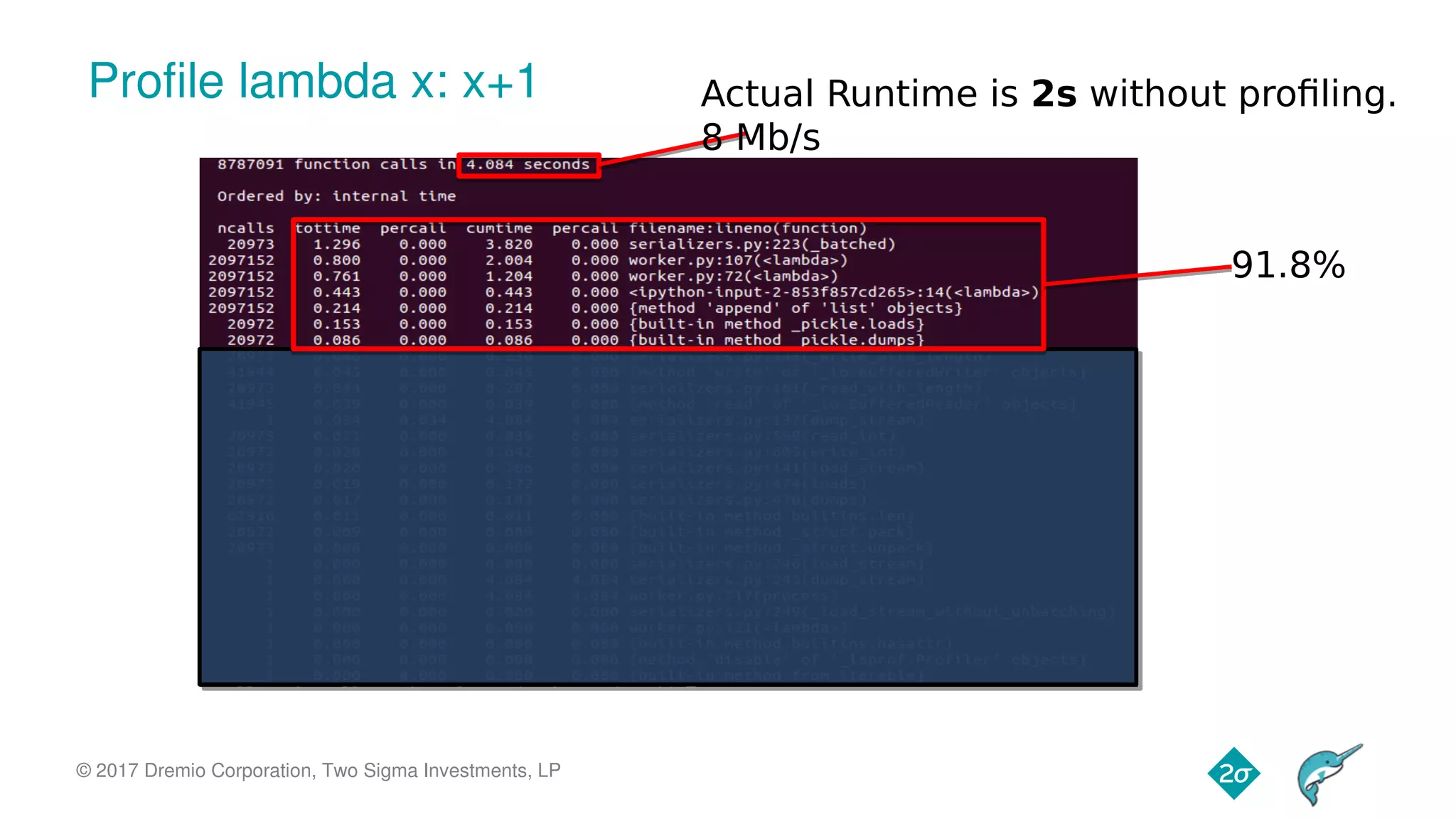

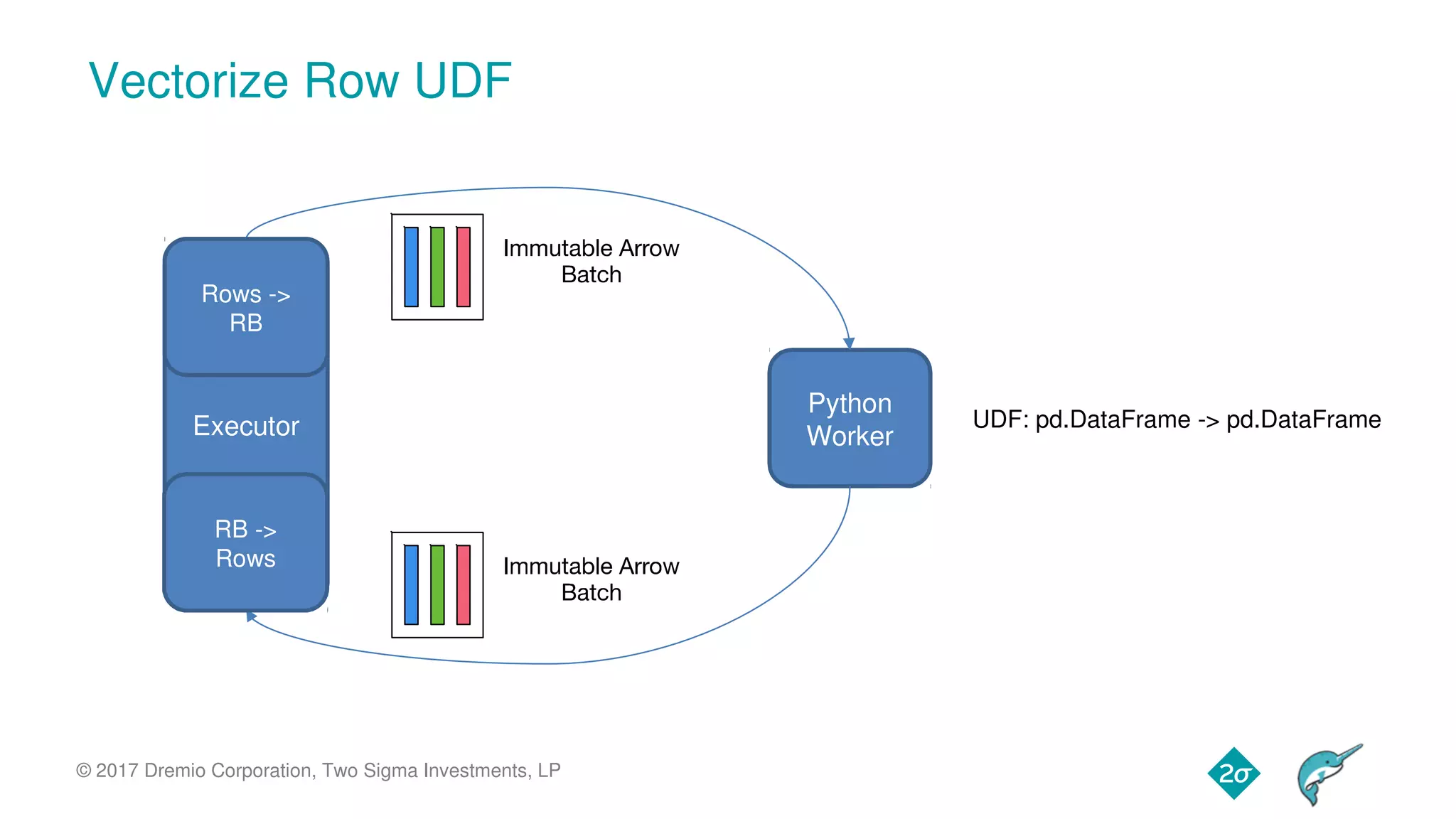

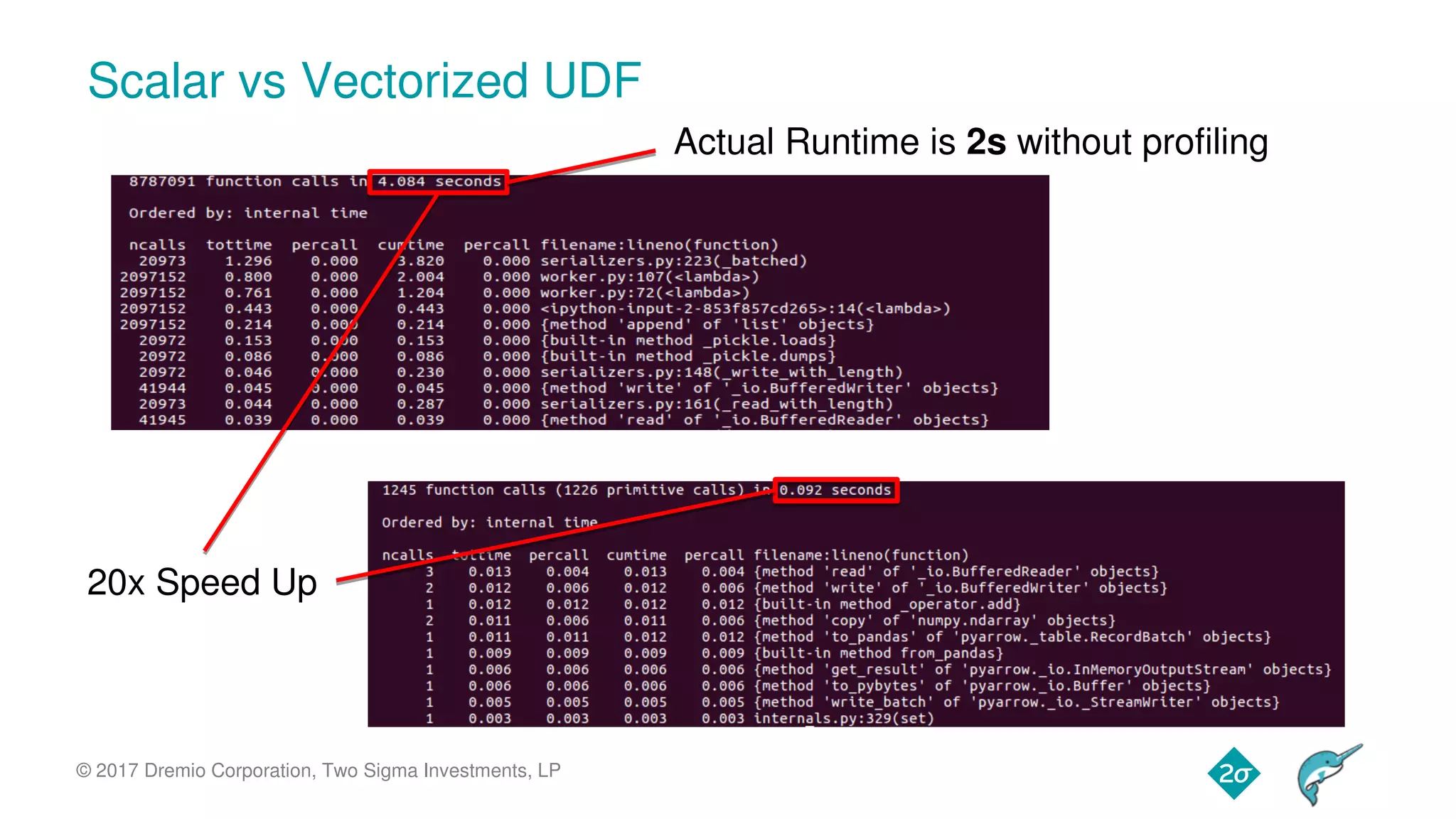

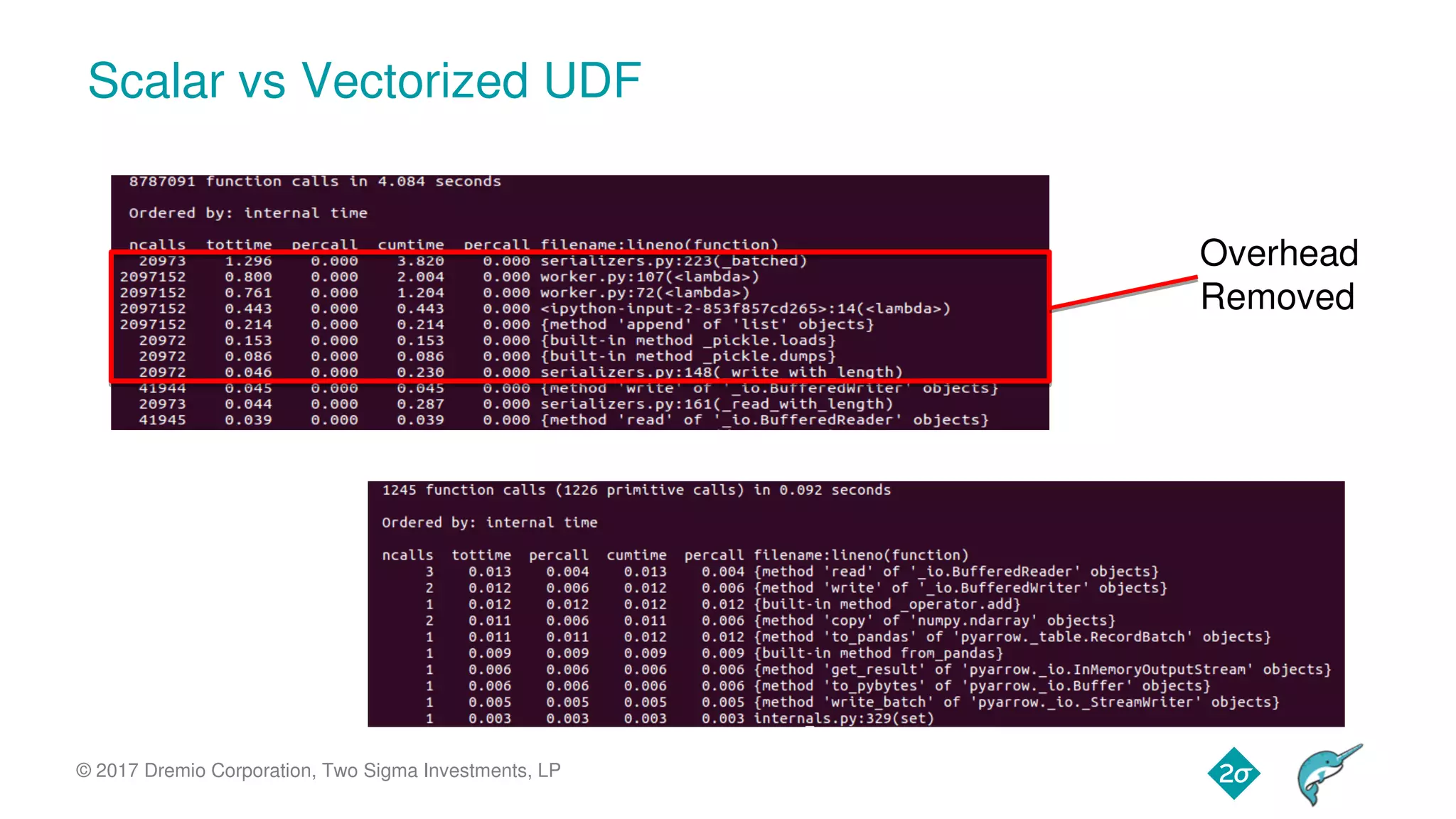

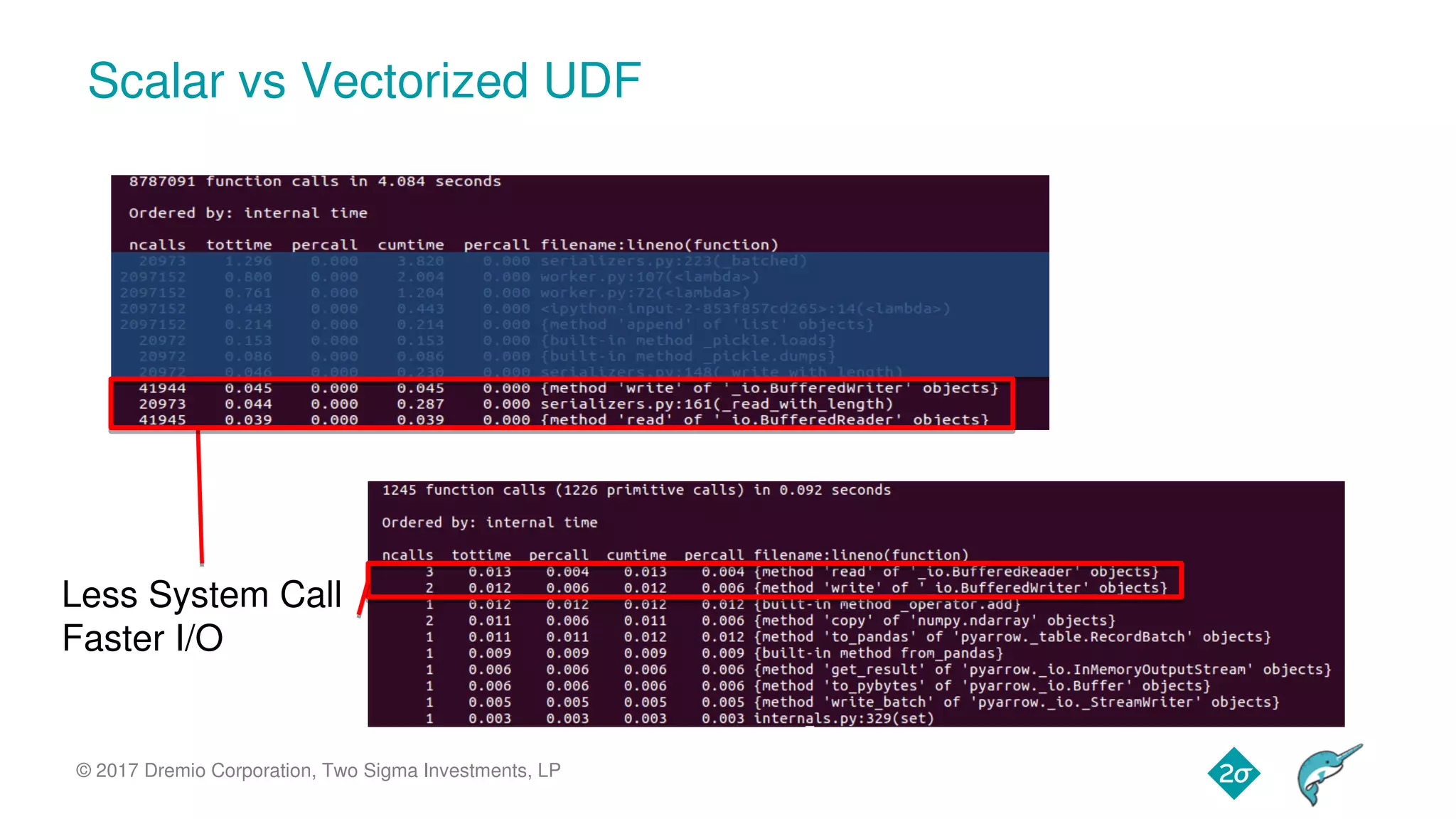

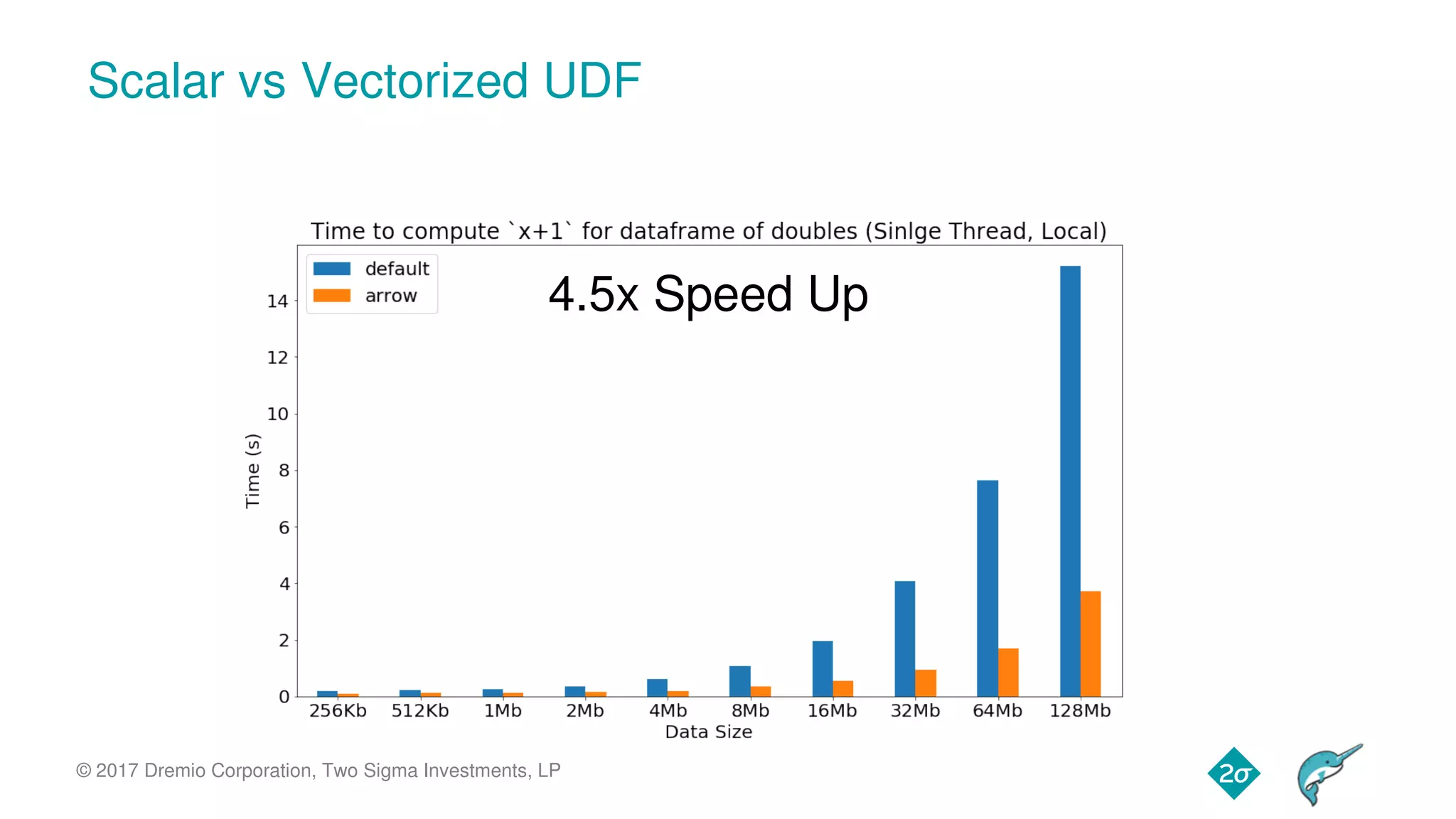

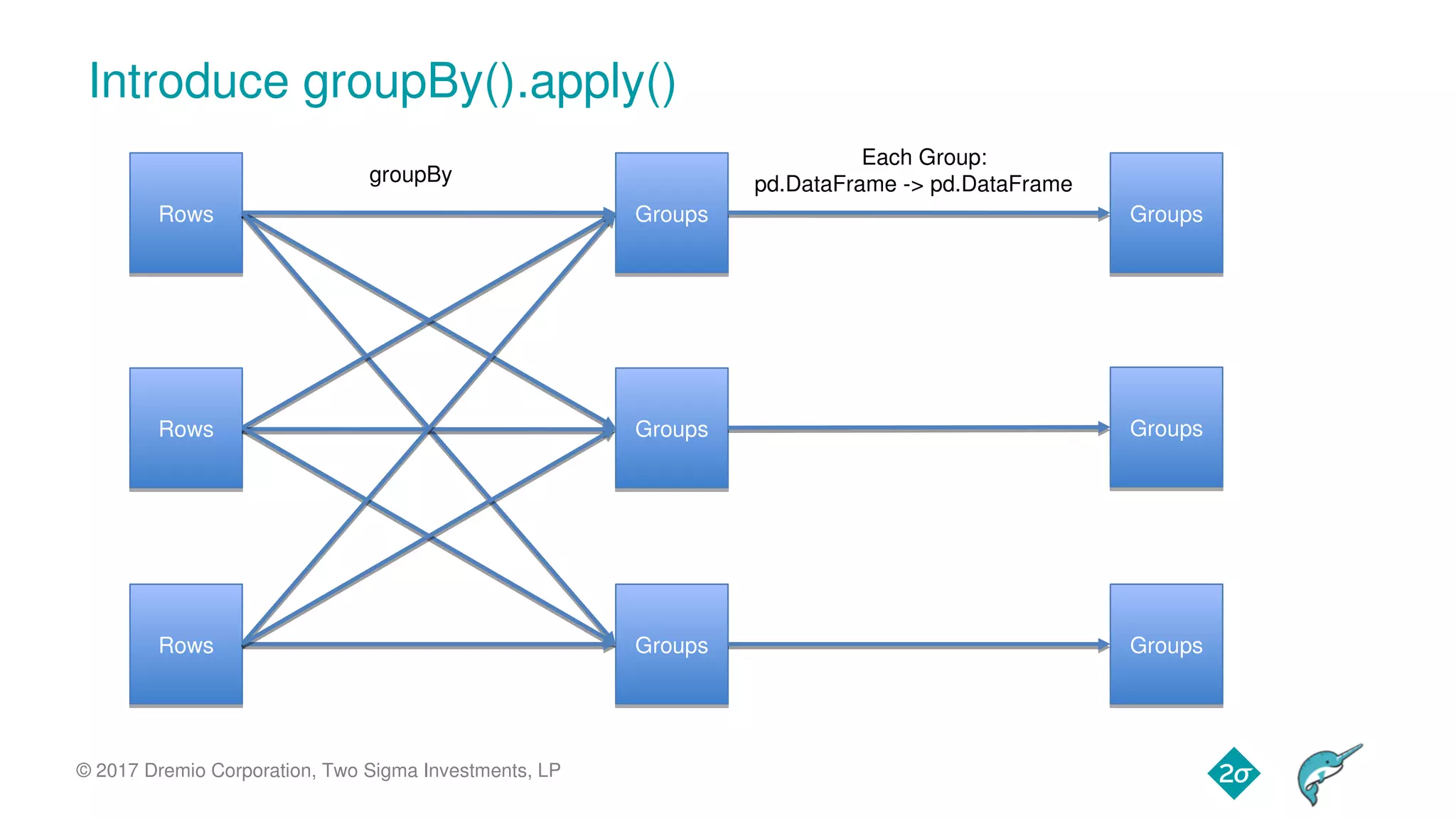

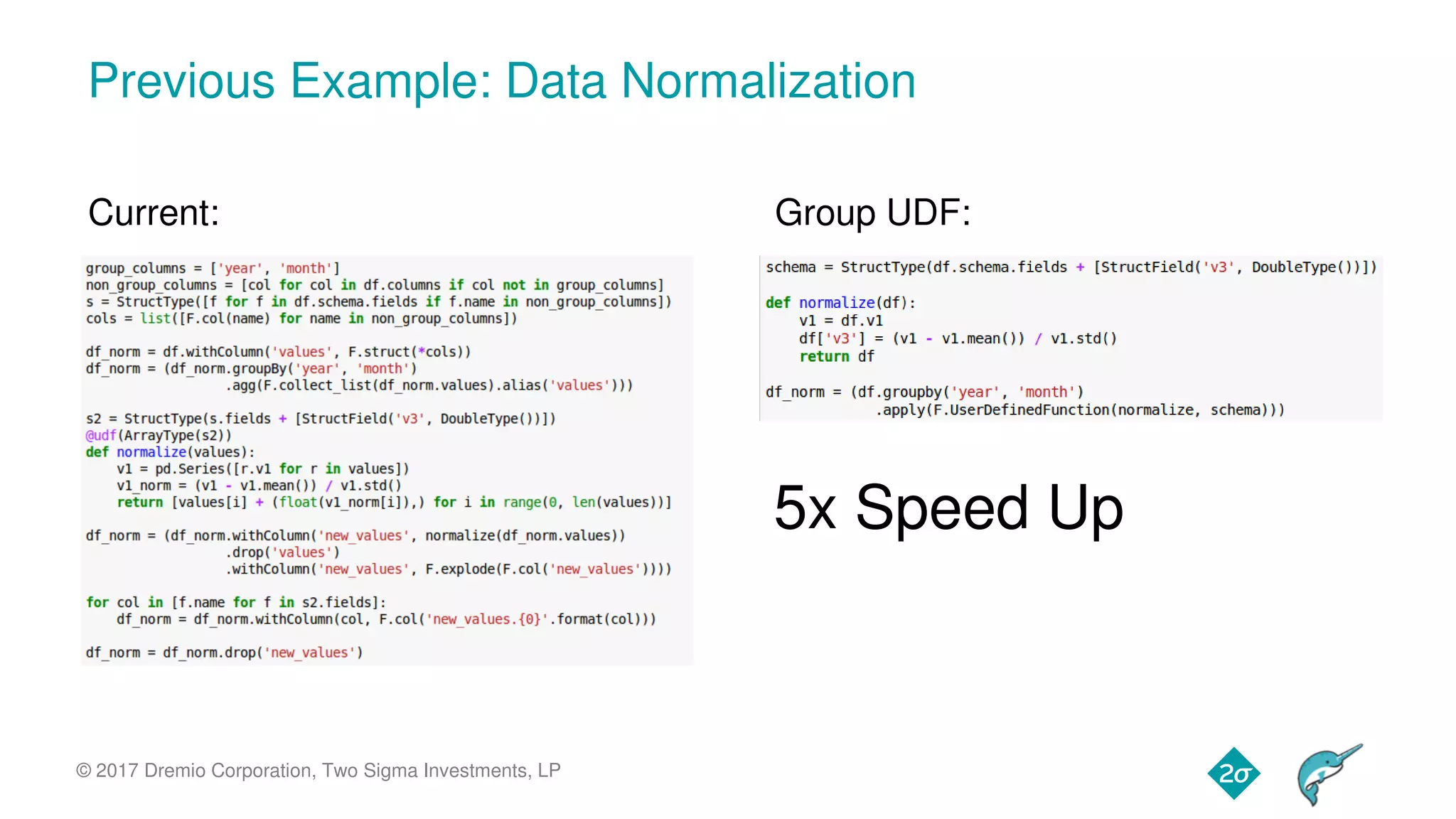

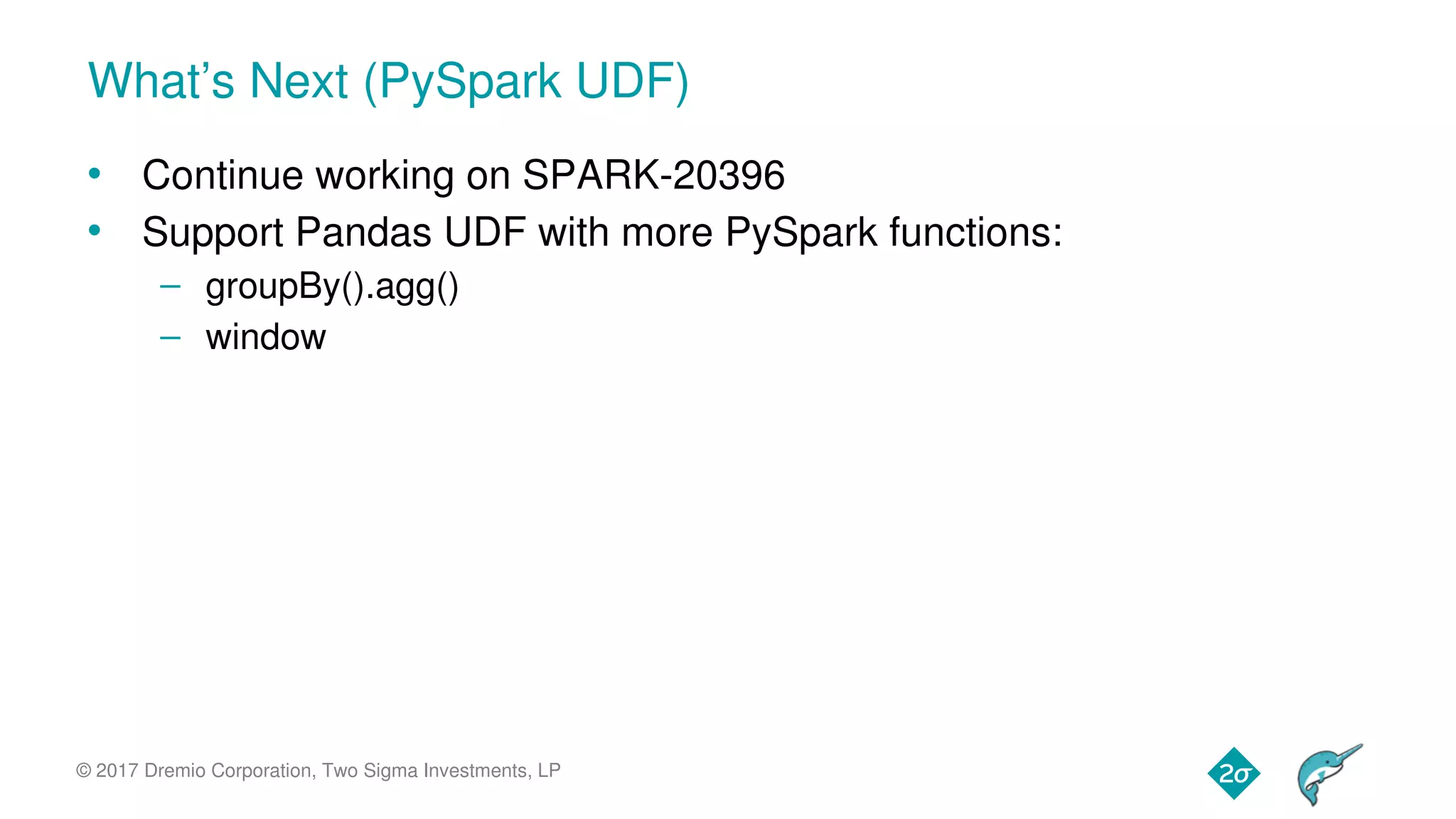

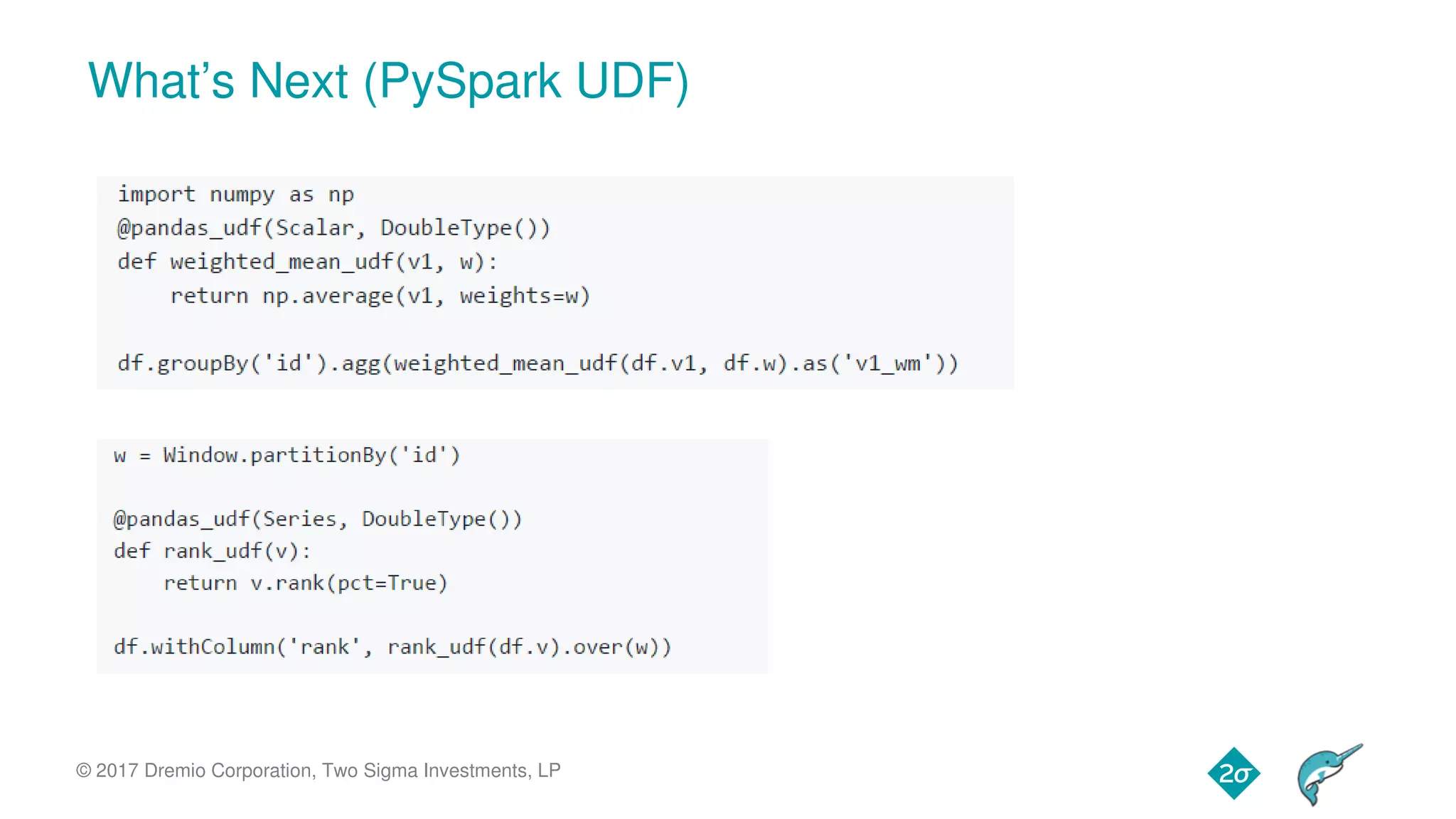

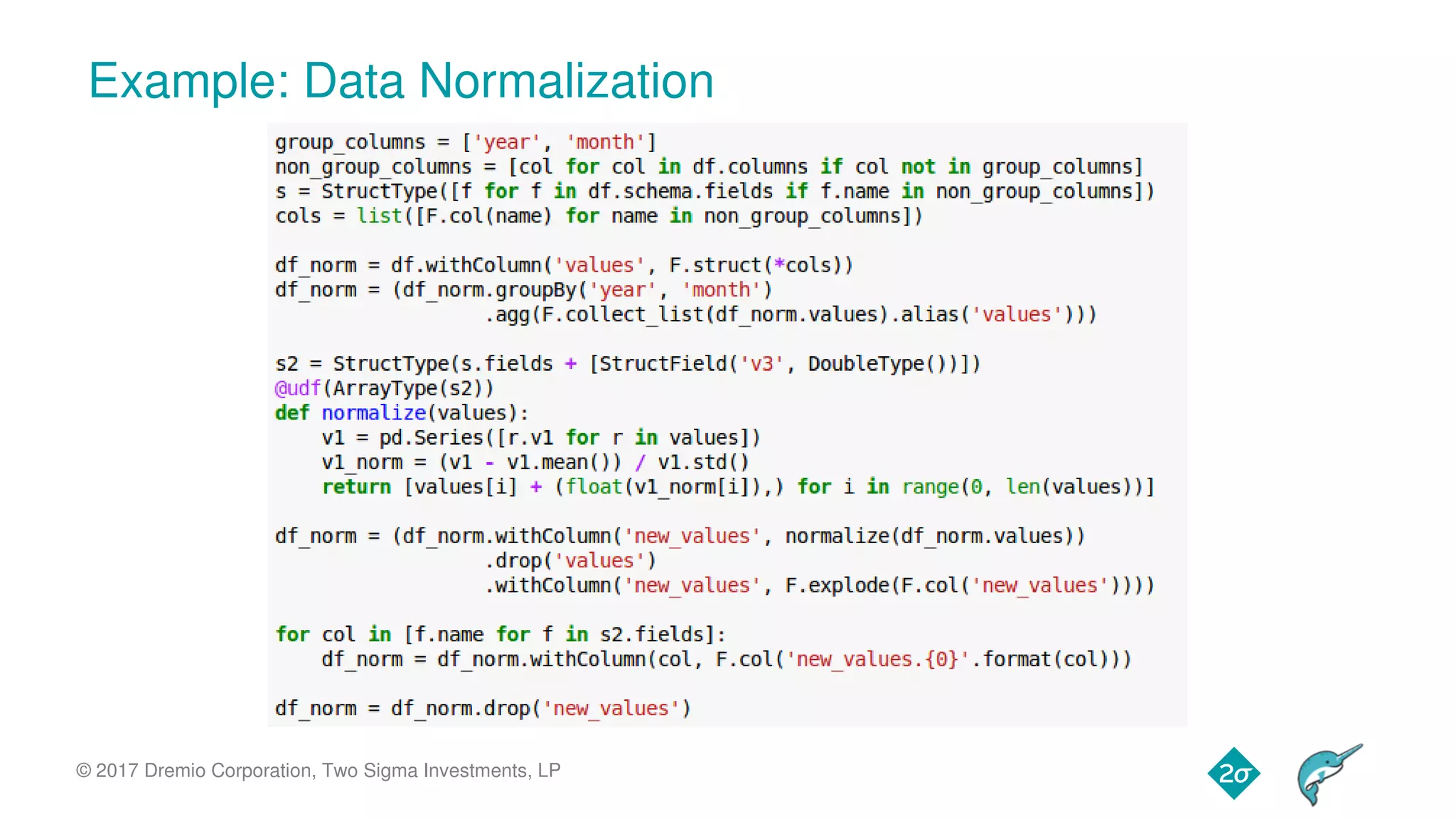

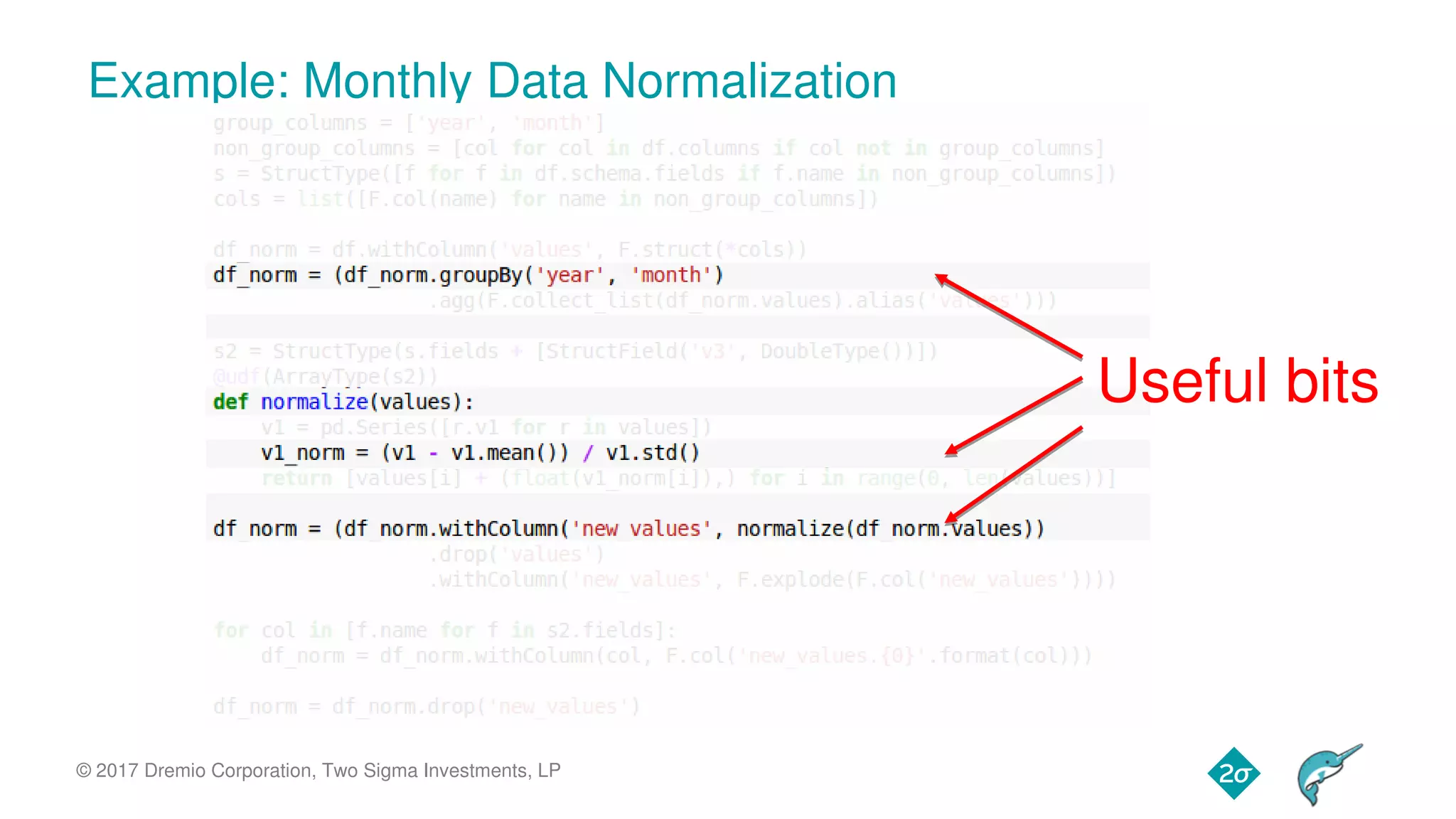

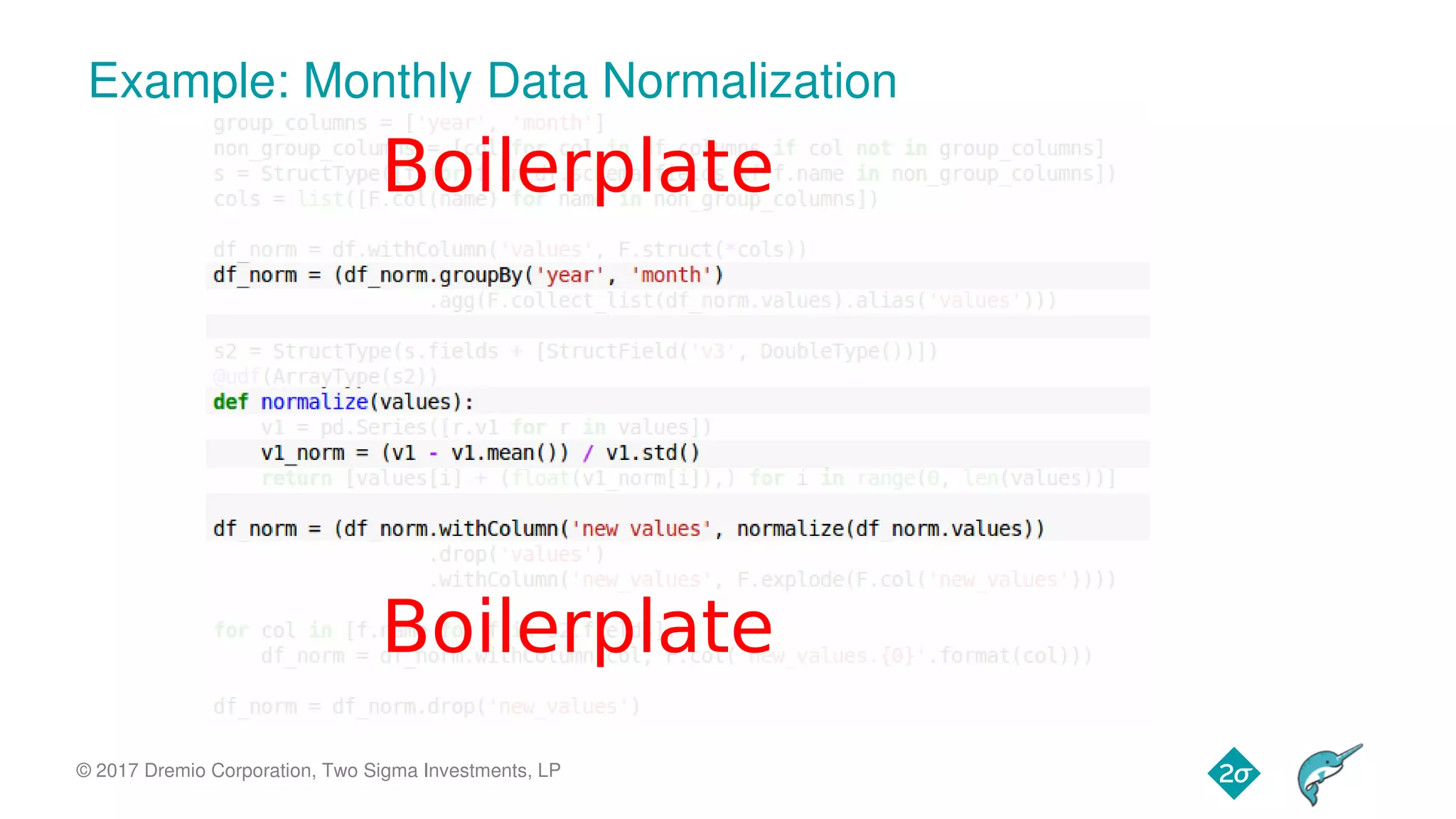

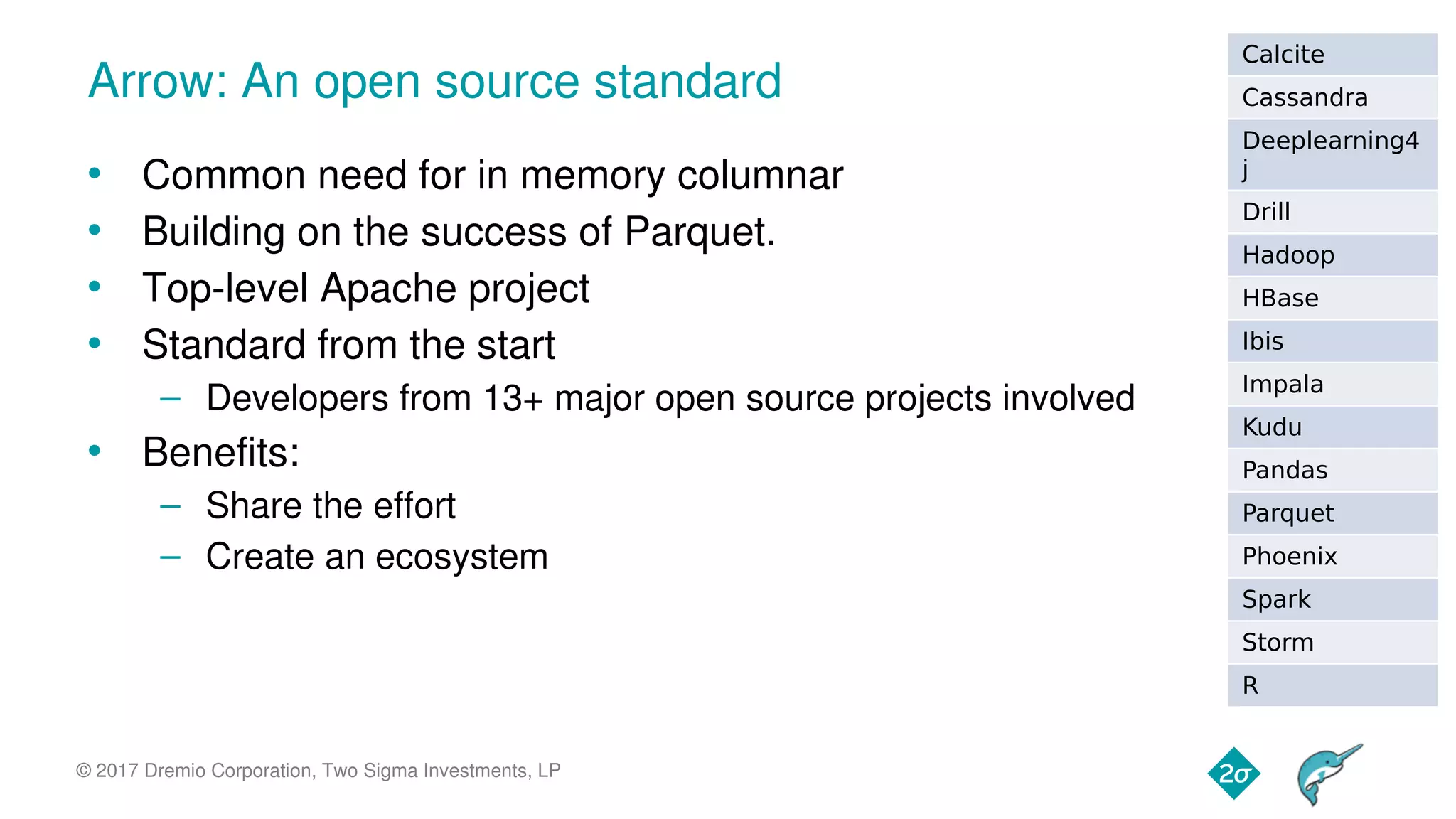

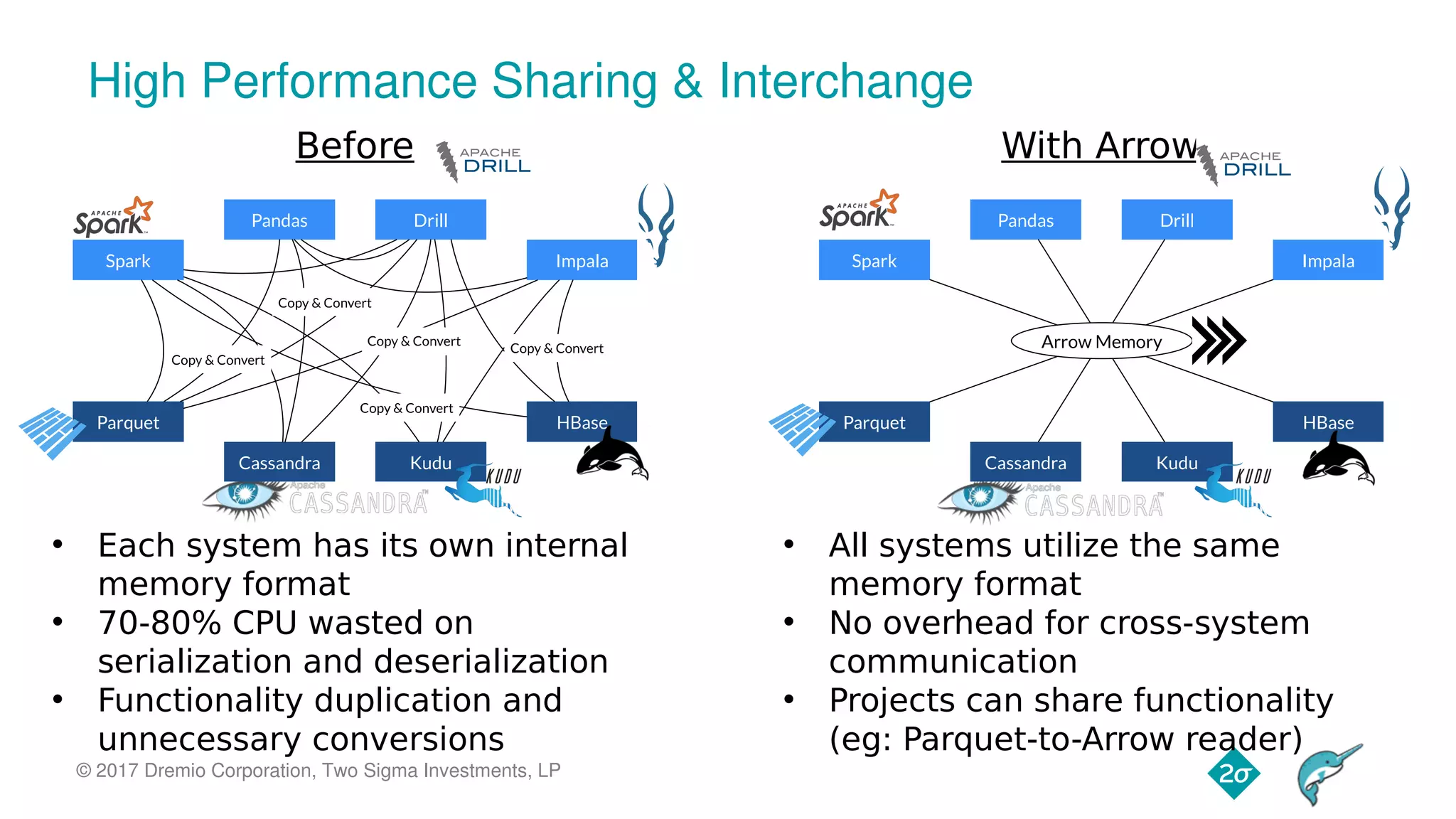

This presentation discusses improving Python and Spark performance and interoperability with Apache Arrow. It highlights the performance limitations of PySpark user-defined functions (UDFs) and introduces Apache Arrow, a standardized columnar in-memory format, as a way to exchange data between systems more efficiently. The future roadmap includes enhancements for grouping functions and further integration between Spark and Arrow to optimize UDFs.

![© 2017 Dremio Corporation, Two Sigma Investments, LP. Columnar data: persons = [{ name: 'Joe', age: 18, phones: ['555-111-1111', '555-222-2222'] }, { name: 'Jack', age: 37, phones: ['555-333-3333'] }]](https://image.slidesharecdn.com/acfrogbgbmxapx82mxuhzjc-sl8fulqgdezkqz0uwtwijgvvcpzty9bqr-iiwmxex3rgws4wof4bamlcek6lujemvgaw1ufkmllh-180504154726/75/Improving-Python-and-Spark-Performance-and-Interoperability-with-Apache-Arrow-20-2048.jpg)
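The `persons` example on this slide can be pivoted from rows into columns with a few lines of plain Python. This is only a minimal sketch of the idea, not Arrow's implementation: the helper name `to_columns` is hypothetical, and it assumes every record carries the same fields.

```python
# Row-oriented records, as written on the slide.
persons = [
    {"name": "Joe", "age": 18, "phones": ["555-111-1111", "555-222-2222"]},
    {"name": "Jack", "age": 37, "phones": ["555-333-3333"]},
]


def to_columns(records):
    """Pivot a list of dicts into one list per field (hypothetical helper).

    Assumes every record has the same set of fields.
    """
    columns = {}
    for record in records:
        for field, value in record.items():
            columns.setdefault(field, []).append(value)
    return columns


columns = to_columns(persons)

# All values of one field now sit next to each other, so a scan over
# "age" never has to touch names or phone numbers.
print(columns["name"])
print(columns["age"])
print(columns["phones"])
```

Keeping each field in its own sequence is what lets a columnar engine read or vectorize over a single field without deserializing whole records.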

![© 2017 Dremio Corporation, Two Sigma Investments, LP. Record Batch Construction. Stream: Schema Negotiation, Dictionary Batch, Record Batch (repeated). Record batch layout: data header (describes offsets into data); name (bitmap), name (offset), name (data); age (bitmap), age (data); phones (bitmap), phones (list offset), phones (offset), phones (data). Example record: { name: 'Joe', age: 18, phones: ['555-111-1111', '555-222-2222'] }. Each box (vector) is contiguous memory. The entire record batch is contiguous on wire.](https://image.slidesharecdn.com/acfrogbgbmxapx82mxuhzjc-sl8fulqgdezkqz0uwtwijgvvcpzty9bqr-iiwmxex3rgws4wof4bamlcek6lujemvgaw1ufkmllh-180504154726/75/Improving-Python-and-Spark-Performance-and-Interoperability-with-Apache-Arrow-21-2048.jpg)
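The bitmap, offset, and data vectors named on this slide can be illustrated in plain Python. The sketch below is a simplification under stated assumptions: the helpers `encode_strings`, `encode_list_offsets`, and `decode_string` are hypothetical, the validity bitmap is held in a Python integer (bit `i` set means value `i` is present), and the padding and alignment that real Arrow buffers use are ignored.

```python
def encode_strings(values):
    """Encode optional strings as (validity bitmap, offsets, data bytes).

    Hypothetical helper: bit i of the bitmap is set when values[i] is not
    None, and offsets[i]:offsets[i + 1] delimits value i inside data.
    """
    bitmap = 0
    offsets = [0]
    data = bytearray()
    for i, value in enumerate(values):
        if value is not None:
            bitmap |= 1 << i
            data.extend(value.encode("utf-8"))
        offsets.append(len(data))
    return bitmap, offsets, bytes(data)


def encode_list_offsets(lists):
    """Return offsets marking where each inner list starts in the flat child."""
    offsets = [0]
    for item in lists:
        offsets.append(offsets[-1] + len(item))
    return offsets


def decode_string(offsets, data, i):
    """Read value i back out of an (offsets, data) pair."""
    return data[offsets[i]:offsets[i + 1]].decode("utf-8")


names = ["Joe", "Jack"]
phones = [["555-111-1111", "555-222-2222"], ["555-333-3333"]]

# name (bitmap), name (offset), name (data)
name_bitmap, name_offsets, name_data = encode_strings(names)

# phones (list offset) points into the flattened list of phone strings,
# which is itself stored as phones (offset) plus phones (data).
phone_list_offsets = encode_list_offsets(phones)
flat_phones = [number for entry in phones for number in entry]
_, phone_offsets, phone_data = encode_strings(flat_phones)

print(name_offsets, name_data)
print(phone_list_offsets, phone_offsets)
print(decode_string(phone_offsets, phone_data, 2))
```

Because every vector is one contiguous buffer, a reader can locate any value with offset arithmetic alone, which is the property the slide points to when it says each box is contiguous memory.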