This document provides an overview of setting up a Resource Description Framework (RDF) environment for IBM DB2 databases. It describes RDF store tables that contain metadata and data, access control methods for RDF stores, and how to create default and optimized RDF stores. It also discusses the central view of RDF stores in DB2 and how to set up an RDF environment to use DB2 RDF commands and APIs.

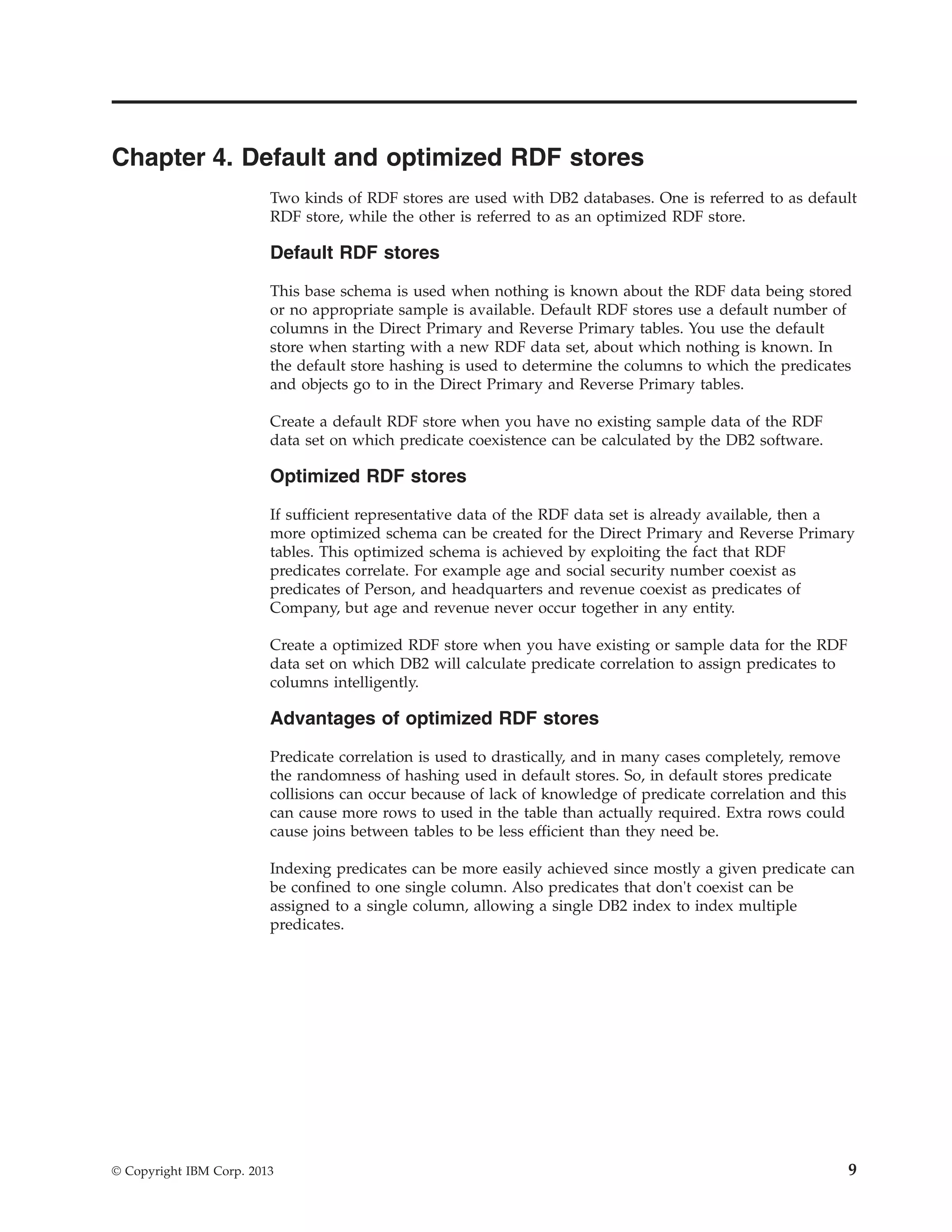

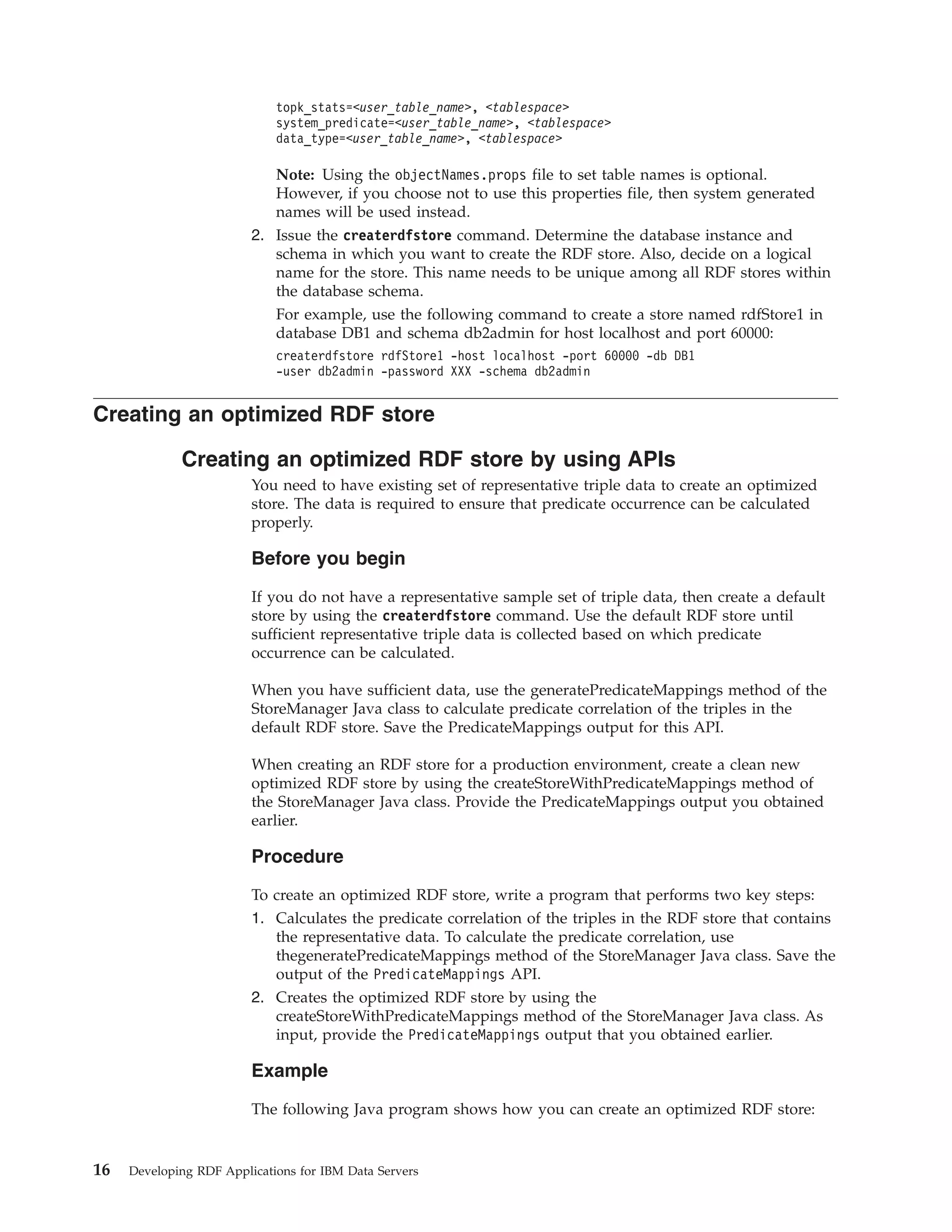

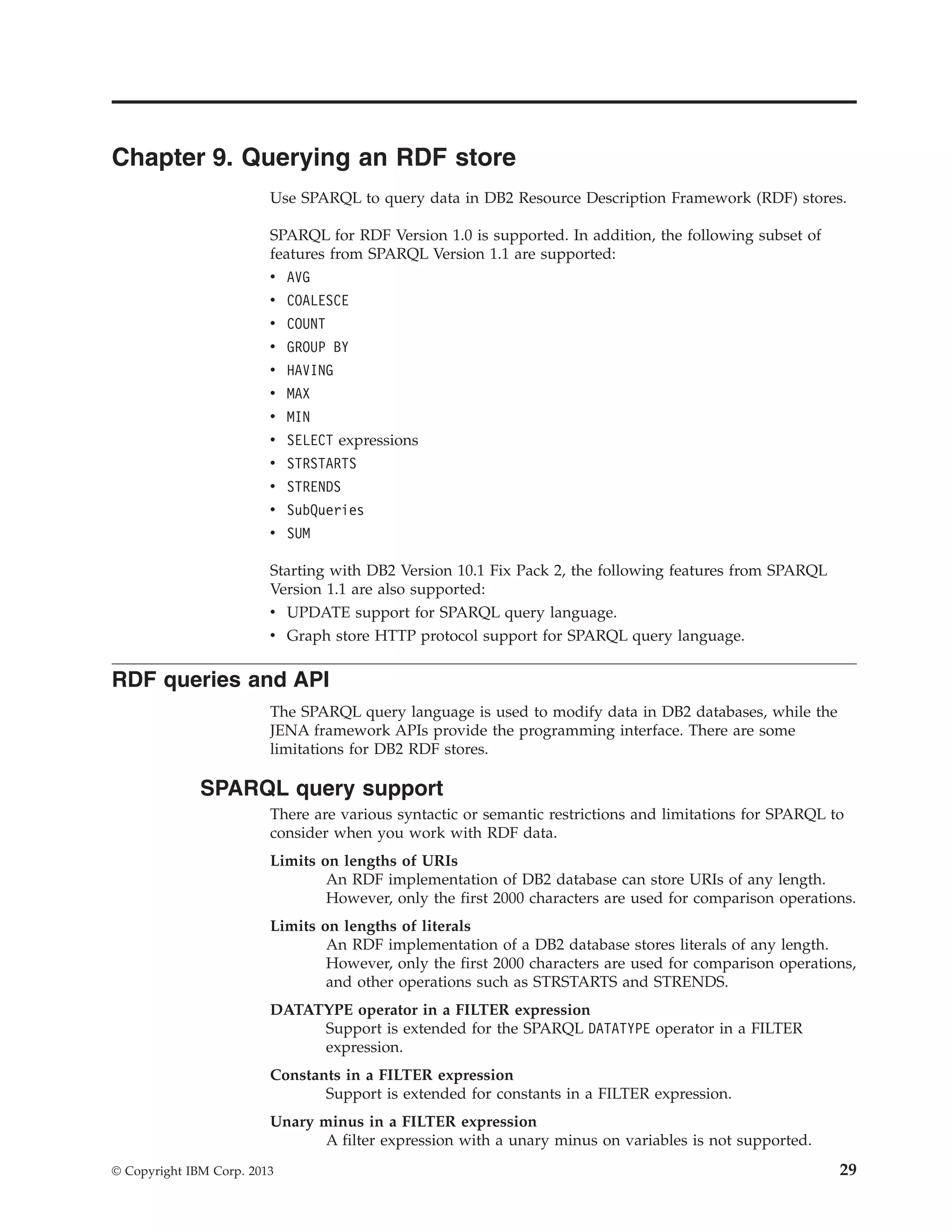

![import java.io.BufferedInputStream; import java.io.BufferedOutputStream; import java.io.FileInputStream; import java.io.FileOutputStream; import java.io.IOException; import java.sql.Connection; import java.sql.DriverManager; import java.sql.SQLException; import com.ibm.rdf.store.StoreManager; /** * This program demonstrates how to create an optimized RDF store * by using the predicate correlation information from an existing * store. You can also create optimized RDF stores for production * environments after a collecting enough representative data cycle * on a default store. */ public class CreateOptimizedStore { public static void main(String[] args) throws SQLException, IOException { String currentStoreName = "sample"; String currentStoreSchema = "db2admin"; Connection currentStoreConn = null; /* * Connect to the "currentStore" and generate the predicate * correlation for the triples in it. */ try { Class.forName("com.ibm.db2.jcc.DB2Driver"); currentStoreConn = DriverManager.getConnection( "jdbc:db2://localhost:50000/dbrdf", "db2admin", "db2admin"); currentStoreConn.setAutoCommit(false); } catch (ClassNotFoundException e1) { e1.printStackTrace(); } /* Specify the file on disk where the predicate * correlation will be stored. */ String file = "/predicateMappings.nq"; BufferedOutputStream predicateMappings = new BufferedOutputStream(new FileOutputStream(file)); StoreManager.generatePredicateMappings(currentStoreConn, currentStoreSchema, currentStoreName, predicateMappings); predicateMappings.close(); /** * Create an optimized RDF store by using the previously * generated predicate correlation information. */ String newOptimizedStoreName = "production"; String newStoreSchema = "db2admin"; Connection newOptimizedStoreConn = DriverManager.getConnection( "jdbc:db2://localhost:50000/dbrdf", "db2admin","db2admin"); BufferedInputStream inputPredicateMappings = new BufferedInputStream( Chapter 7. Creating an RDF store 17](https://image.slidesharecdn.com/ibmdb210-161212075648/75/Ibm-db2-10-5-for-linux-unix-and-windows-developing-rdf-applications-for-ibm-data-servers-23-2048.jpg)

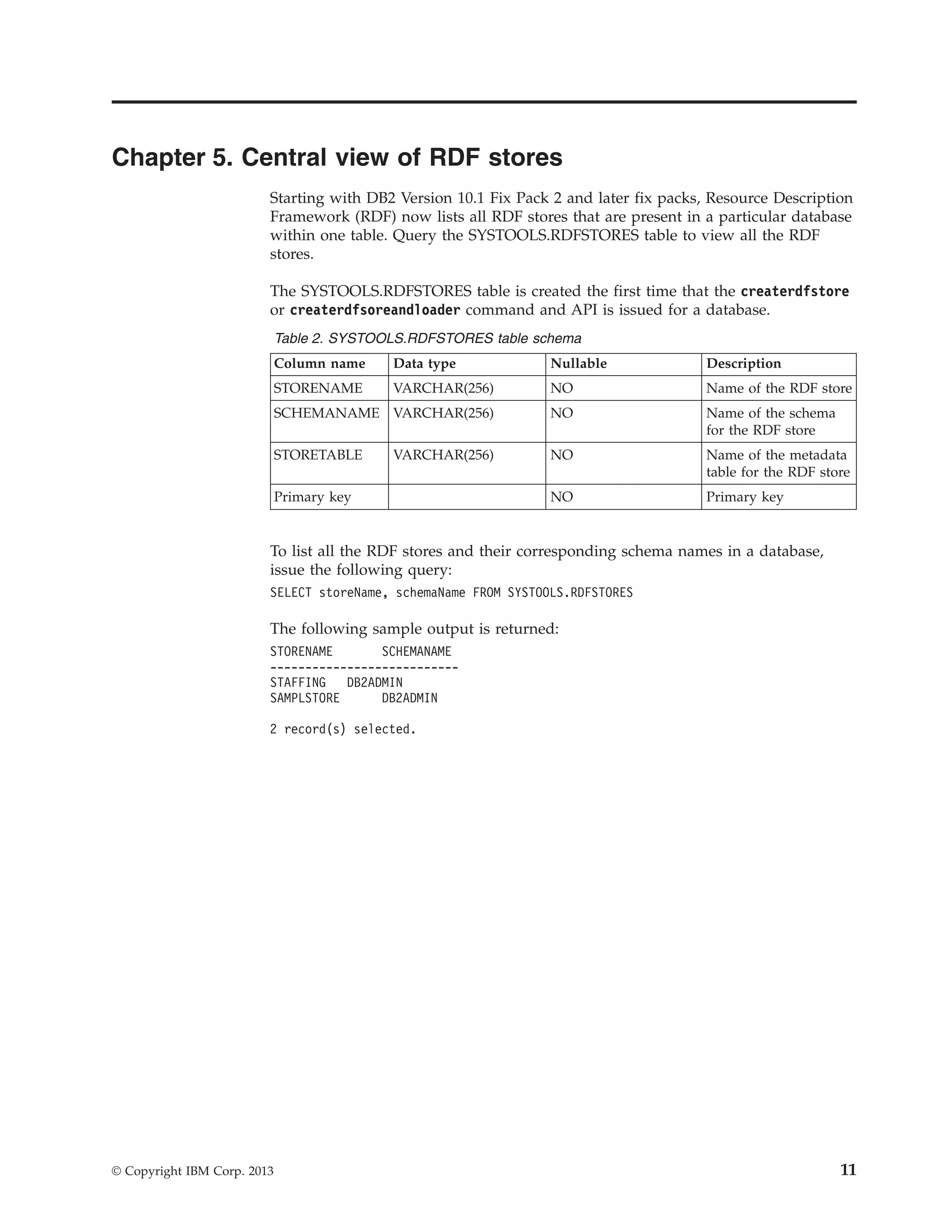

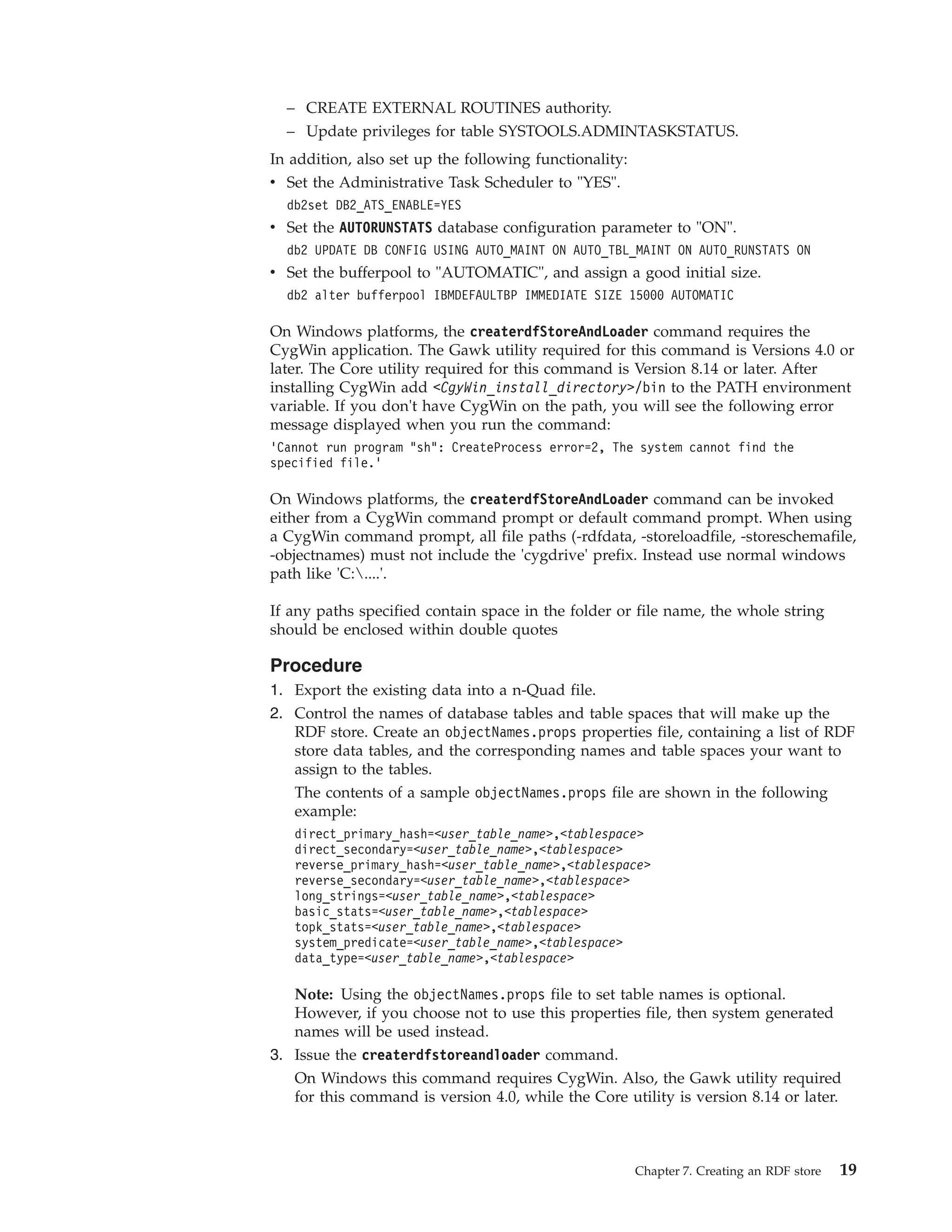

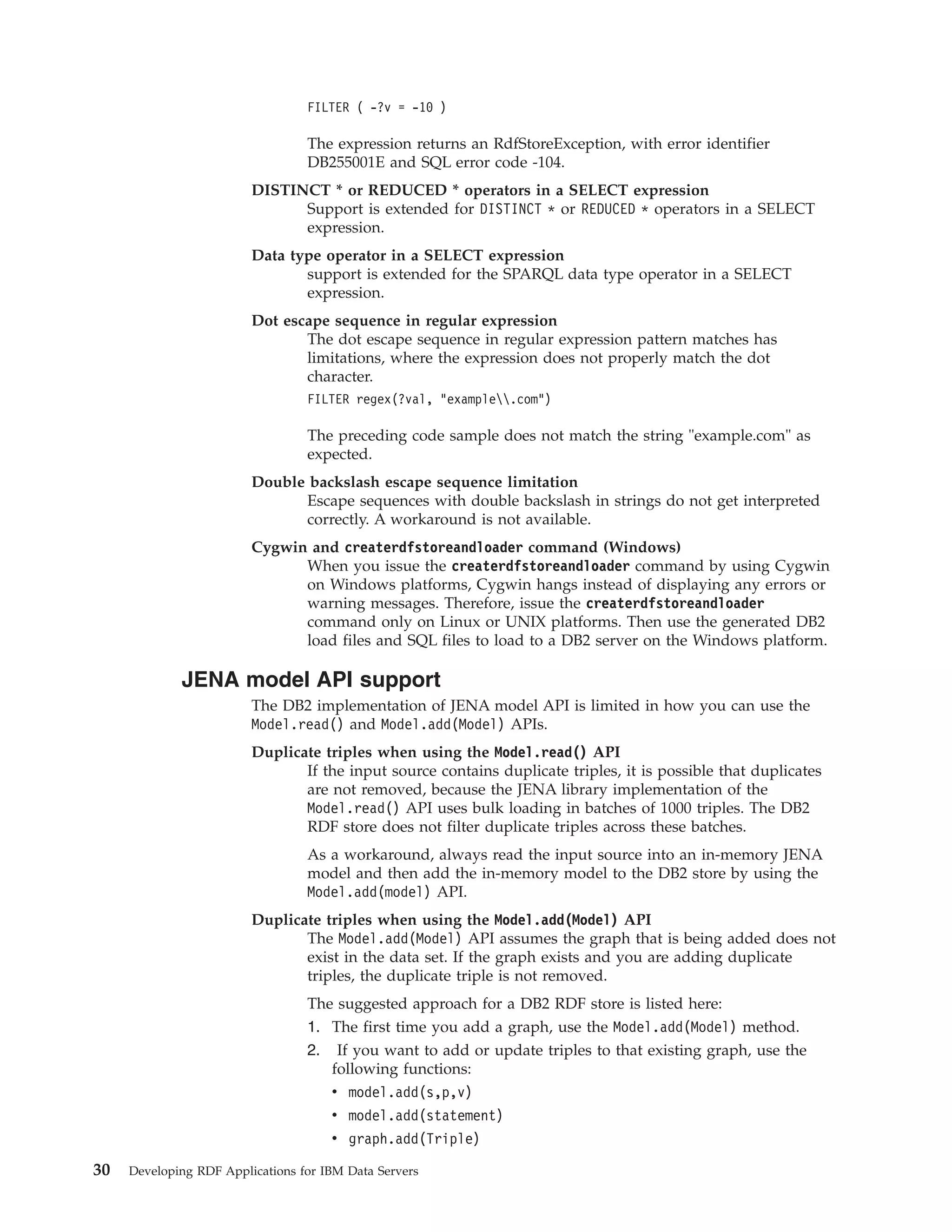

![import com.ibm.rdf.store.StoreManager; public class CreateStoreGraphAccessControl { /* Access to the graph is controlled based on the * following two RDF predicates. */ private final static String APPID = "http://myapp.net/xmlns/APPID"; private final static String CONTEXTID = "http://myapp.net/xmlns/CONTEXTID"; /** * The DB2 data type for these predicates is assigned. */ private final static String APPID_DATATYPE = "VARCHAR(60)"; private final static String CONTEXTID_DATATYPE = "VARCHAR(60)"; /* * Create a java.util.properties file that lists these two * properties and their data types, where * propertyName is the RDF predicate and * propertyValue is the data type for the RDF predicate. */ private static Properties filterPredicateProps = new Properties(); static { filterPredicateProps.setProperty(APPID, APPID_DATATYPE); filterPredicateProps.setProperty(CONTEXTID, CONTEXTID_DATATYPE); } public static void main(String[] args) throws SQLException { Connection conn = null; // Get a connection to the DB2 database. try { Class.forName("com.ibm.db2.jcc.DB2Driver"); conn = DriverManager.getConnection( "jdbc:db2://localhost:50000/dbrdf", "db2admin", "db2admin"); } catch (ClassNotFoundException e1) { e1.printStackTrace(); } /* * Create the store with the access control predicates. */ StoreManager.createStore(conn, "db2admin", "SampleAccessControl", null, filterPredicateProps); } } Chapter 7. Creating an RDF store 21](https://image.slidesharecdn.com/ibmdb210-161212075648/75/Ibm-db2-10-5-for-linux-unix-and-windows-developing-rdf-applications-for-ibm-data-servers-27-2048.jpg)

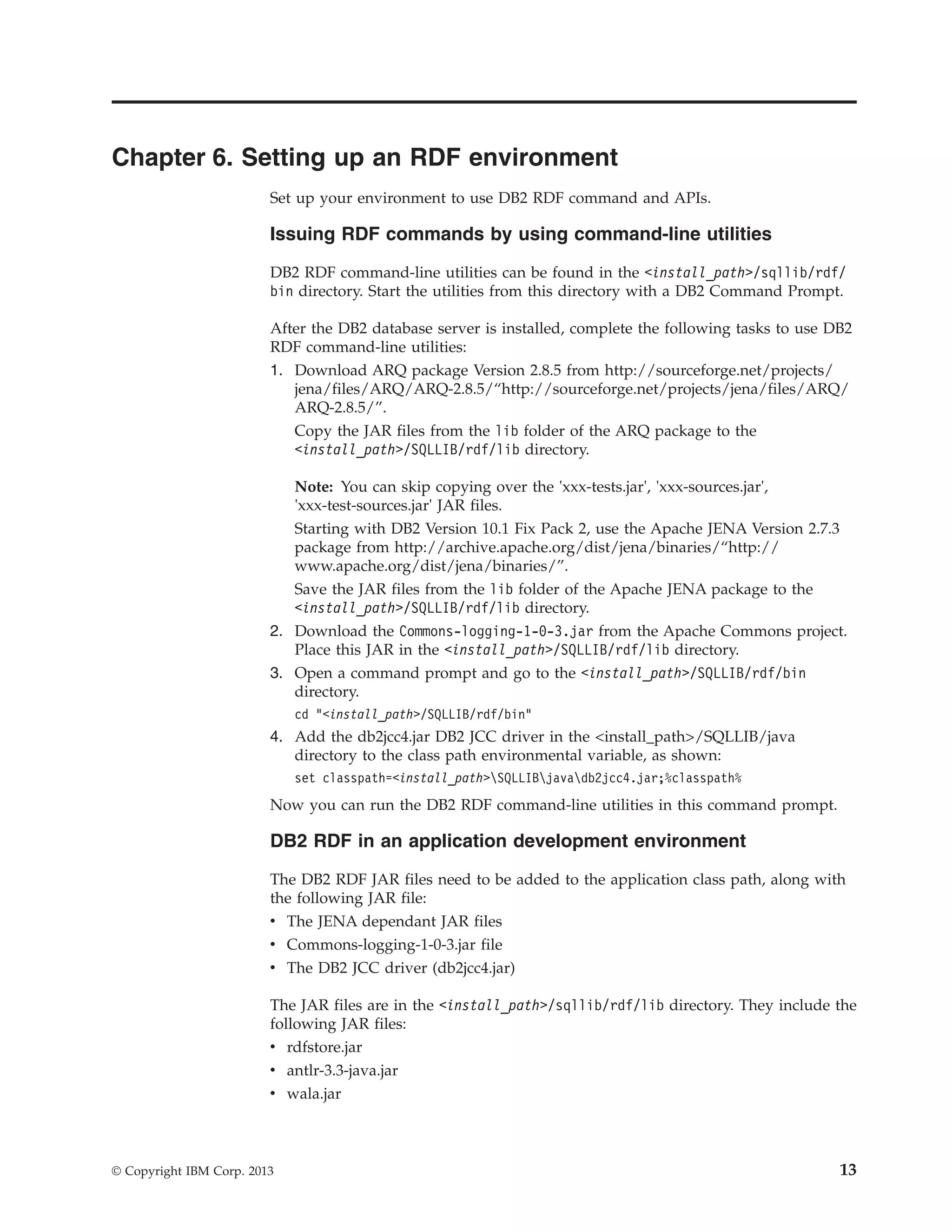

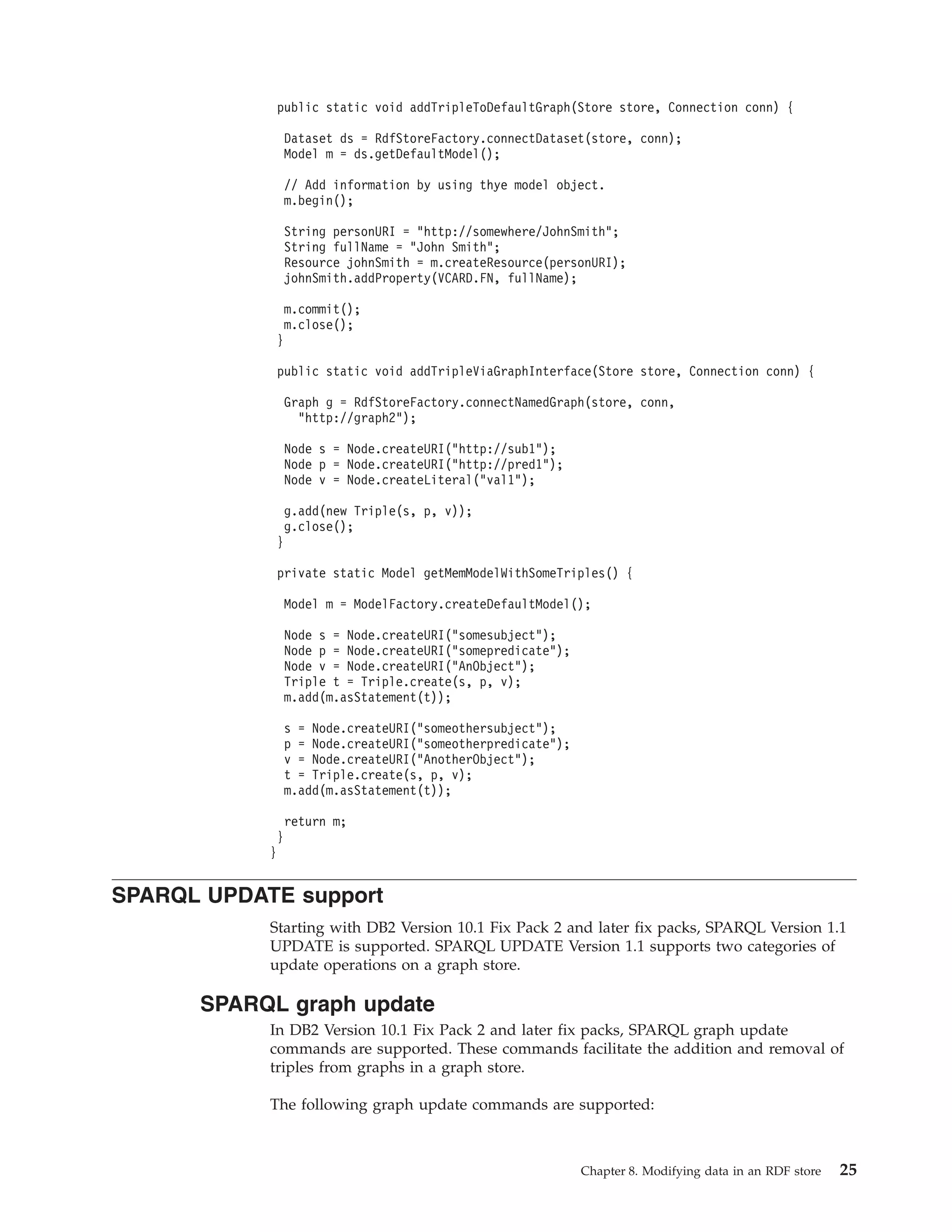

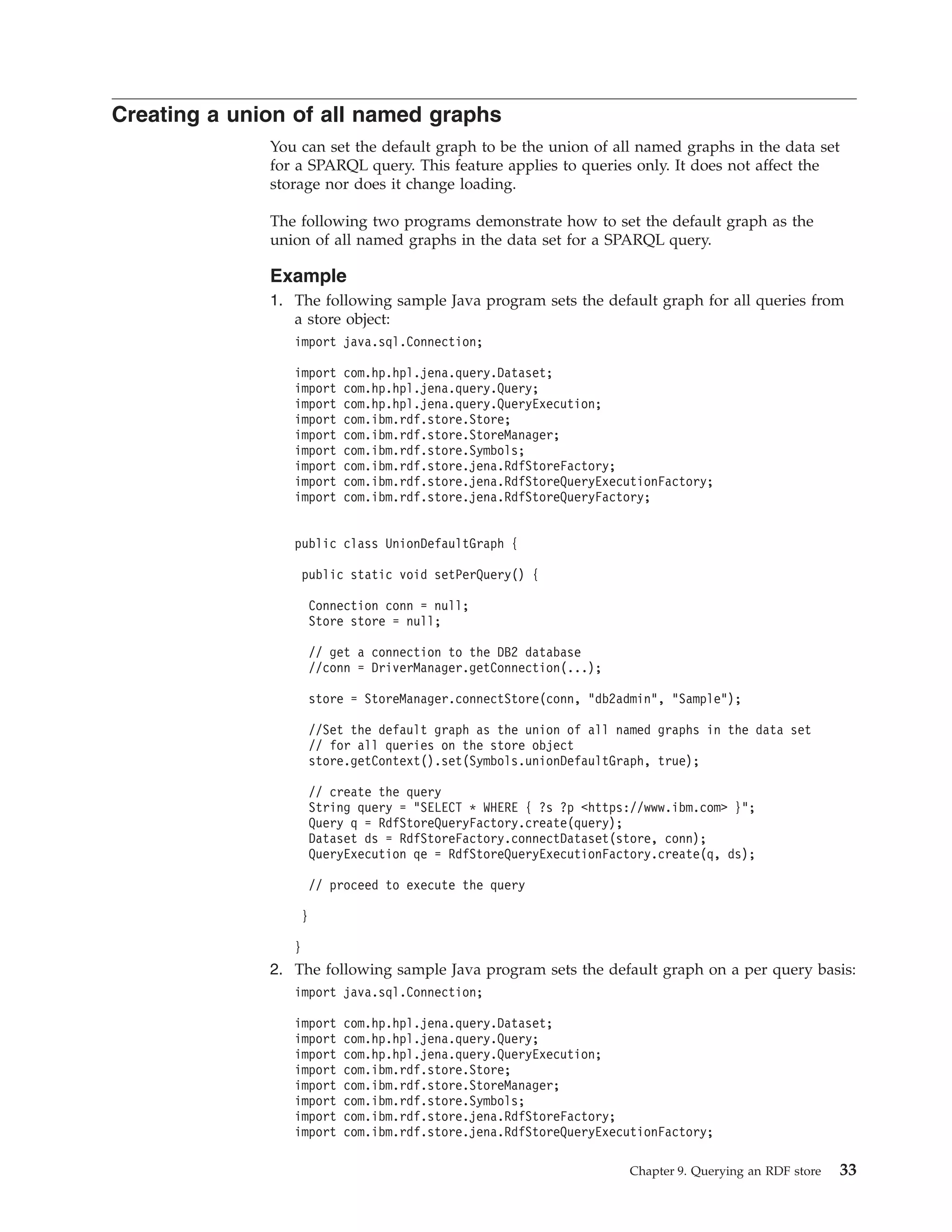

![Chapter 8. Modifying data in an RDF store Data in an RDF store can be modified either by using JENA APIs or SPARQL Update operations. Modifying data in an RDF store Work with the data in an RDF store using JENA APIs. Procedure To modify data in an RDF store, you can use the following sample program. Modify triples and graphs in an RDF store by using JENA APIs as shown in the following example. Example The following program demonstrates how to modify triples and graphs in an RDF store by using JENA APIs: import java.sql.Connection; import java.sql.DriverManager; import java.sql.SQLException; import com.hp.hpl.jena.graph.Graph; import com.hp.hpl.jena.graph.Node; import com.hp.hpl.jena.graph.Triple; import com.hp.hpl.jena.query.Dataset; import com.hp.hpl.jena.rdf.model.Model; import com.hp.hpl.jena.rdf.model.ModelFactory; import com.hp.hpl.jena.rdf.model.Resource; import com.hp.hpl.jena.vocabulary.VCARD; import com.ibm.rdf.store.Store; import com.ibm.rdf.store.StoreManager; import com.ibm.rdf.store.jena.RdfStoreFactory; public class RDFStoreSampleInsert { public static void main(String[] args) throws SQLException { Connection conn = null; Store store = null; String storeName = "sample"; String schema = "db2admin"; try { Class.forName("com.ibm.db2.jcc.DB2Driver"); conn = DriverManager.getConnection( "jdbc:db2://localhost:50000/dbrdf", "db2admin", "db2admin"); conn.setAutoCommit(false); } catch (ClassNotFoundException e1) { e1.printStackTrace(); } // Create a store or dataset. store = StoreManager.createStore(conn, schema, storeName, null); © Copyright IBM Corp. 2013 23](https://image.slidesharecdn.com/ibmdb210-161212075648/75/Ibm-db2-10-5-for-linux-unix-and-windows-developing-rdf-applications-for-ibm-data-servers-29-2048.jpg)

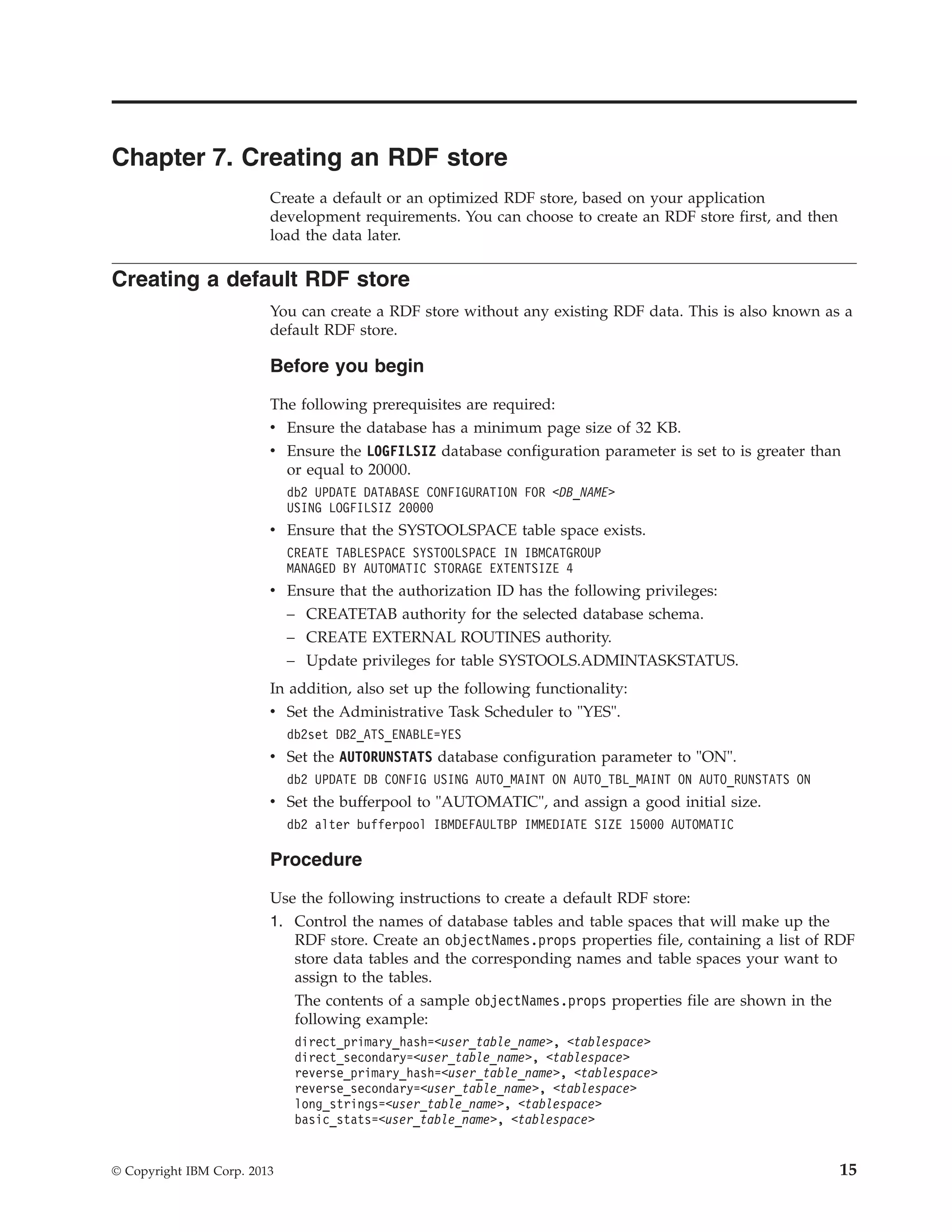

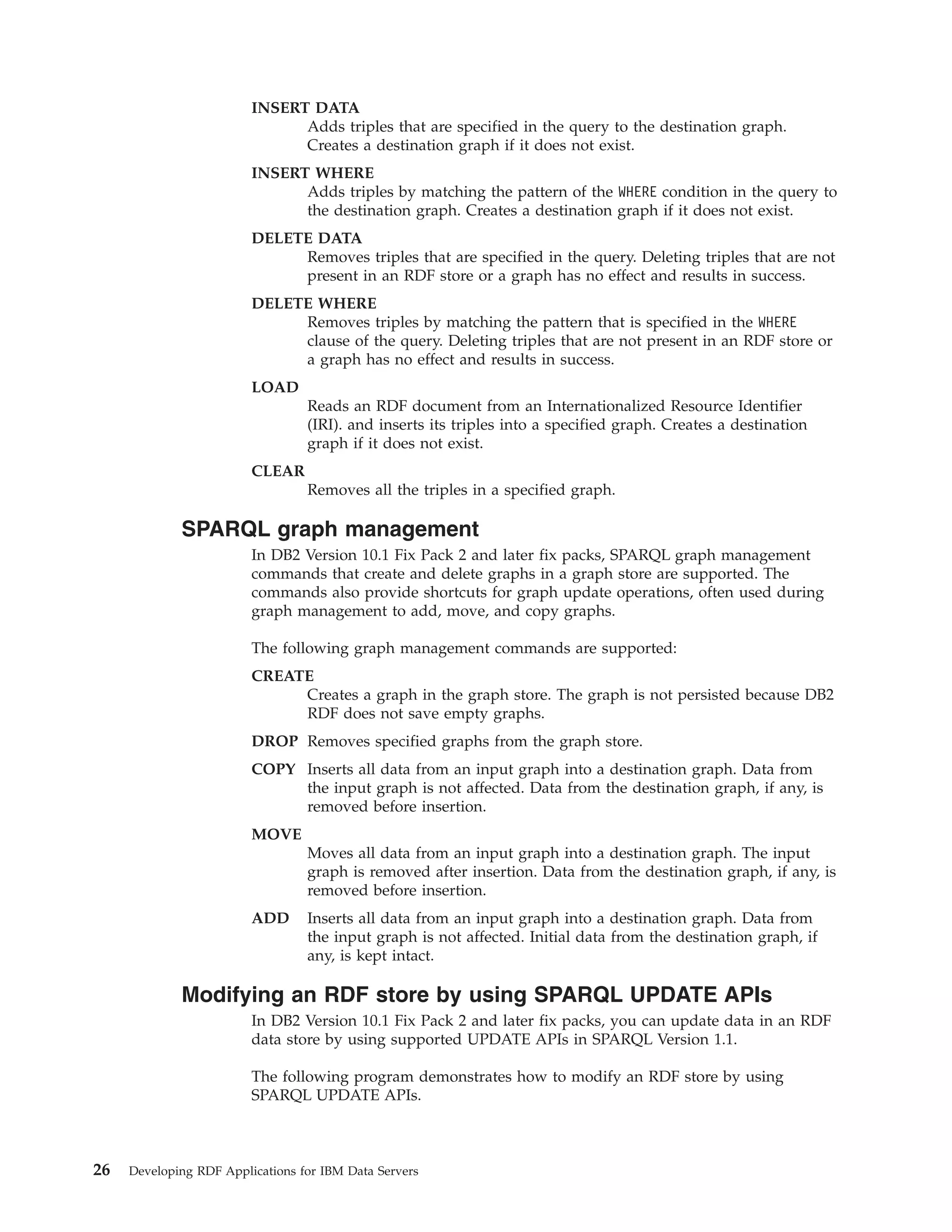

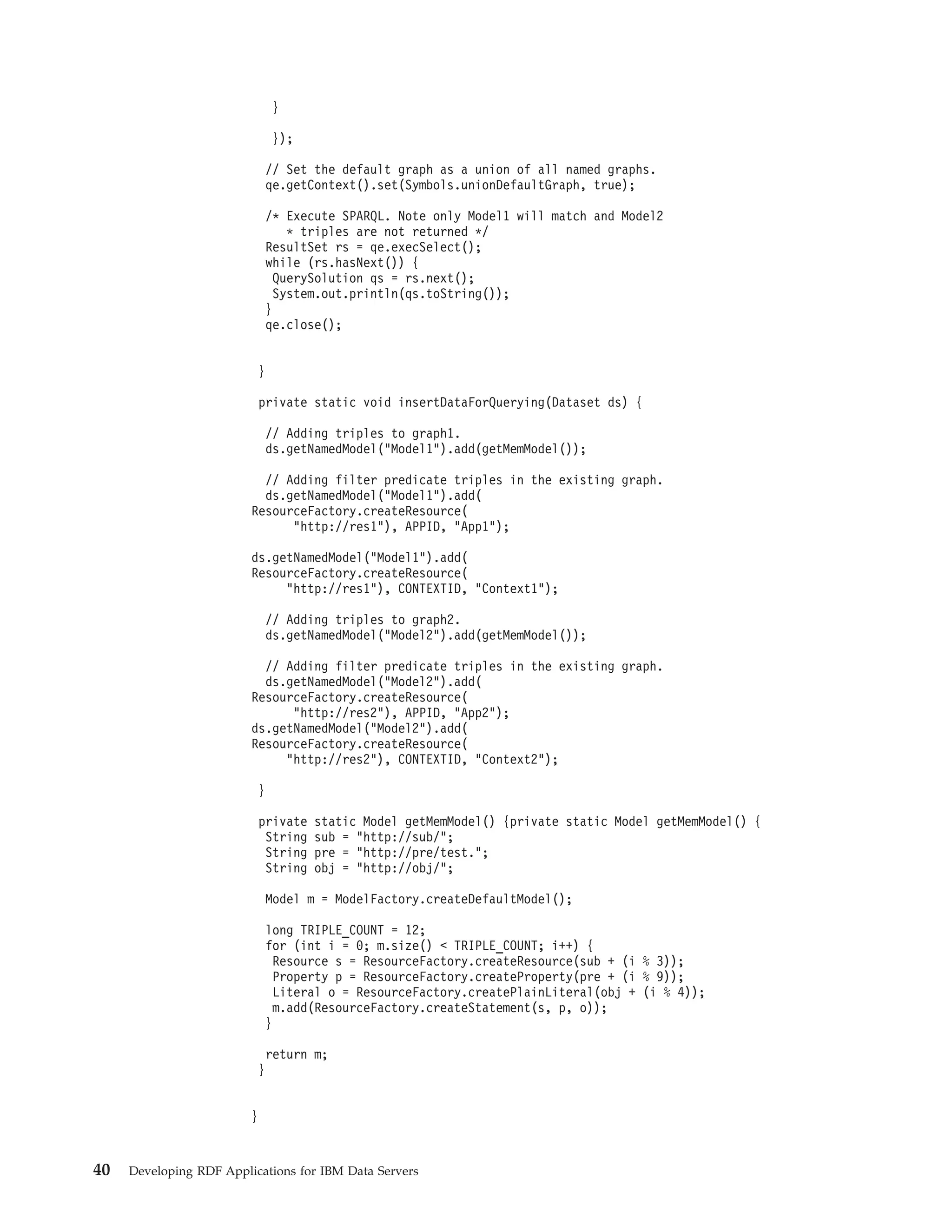

![import java.sql.Connection; import java.sql.DriverManager; import java.sql.SQLException; import com.hp.hpl.jena.graph.Node; import com.hp.hpl.jena.query.Dataset; import com.hp.hpl.jena.sparql.core.Quad; import com.hp.hpl.jena.sparql.modify.request.QuadDataAcc; import com.hp.hpl.jena.sparql.modify.request.UpdateDataInsert; import com.hp.hpl.jena.sparql.util.NodeFactory; import com.hp.hpl.jena.update.UpdateAction; import com.ibm.rdf.store.Store; import com.ibm.rdf.store.StoreManager; import com.ibm.rdf.store.jena.RdfStoreFactory; /** * Sample program for using SPARQL Updates */ public class RDFStoreUpdateSample { public static void main(String[] args) throws SQLException // Create the connection Connection conn = null; Store store = null; String storeName = "staffing"; String schema = "db2admin"; try { Class.forName("com.ibm.db2.jcc.DB2Driver"); conn = DriverManager.getConnection( "jdbc:db2://localhost:50000/RDFDB", "db2admin", "db2admin"); conn.setAutoCommit(false); } catch (ClassNotFoundException e1) { e1.printStackTrace(); } // Connect to the store store = StoreManager.connectStore(conn, schema, storeName); // Create the dataset Dataset ds = RdfStoreFactory.connectDataset(store, conn); // Update dataset by parsing the SPARQL UPDATE statement // updateByParsingSPARQLUpdates(ds); // Update dataset by building Update objects // updateByBuildingUpdateObjects(ds); ds.close(); conn.commit(); } /** * Update by Parsing SPARQL Update * * @param ds * @param graphNode */ private static void updateByParsingSPARQLUpdates(Dataset ds) { String update = "INSERT DATA { GRAPH <http://example/bookStore> { <http://example/book1> <http://example.org/ns#price> 100 } }"; //Execute update via UpdateAction UpdateAction.parseExecute(update, ds); } /** * Update by creating Update objects * * @param ds * @param graphNode */ private static void updateByBuildingUpdateObjects(Dataset ds) { // Graph node Node graphNode = NodeFactory.parseNode("http://example/book2>"); Node p = NodeFactory.parseNode("<http://example.org/ns#price>"); Chapter 8. Modifying data in an RDF store 27](https://image.slidesharecdn.com/ibmdb210-161212075648/75/Ibm-db2-10-5-for-linux-unix-and-windows-developing-rdf-applications-for-ibm-data-servers-33-2048.jpg)

![v Resource.addXX() Remember: If you are adding a triple that exists, an error message is returned. In DB2 Version 10.1 Fix Pack 2 and later fix packs, both of the preceding limitations are now removed in the DB2 product implementation of the JENA API. Issuing SPARQL queries You can query data stored in an RDF store. Example The following program demonstrates how to query data in an RDF store by using the SPARQL query language. import java.io.IOException; import java.sql.Connection; import java.sql.DriverManager; import java.sql.SQLException; import com.hp.hpl.jena.query.Dataset; import com.hp.hpl.jena.query.Query; import com.hp.hpl.jena.query.QueryExecution; import com.hp.hpl.jena.query.QuerySolution; import com.hp.hpl.jena.query.ResultSet; import com.hp.hpl.jena.rdf.model.Model; import com.ibm.rdf.store.Store; import com.ibm.rdf.store.StoreManager; import com.ibm.rdf.store.exception.RdfStoreException; import com.ibm.rdf.store.jena.RdfStoreFactory; import com.ibm.rdf.store.jena.RdfStoreQueryExecutionFactory; import com.ibm.rdf.store.jena.RdfStoreQueryFactory; public class RDFStoreSampleQuery { public static void main(String[] args) throws SQLException, IOException { Connection conn = null; Store store = null; String storeName = "sample"; String schema = "db2admin"; // Get a connection to the DB2 database. try { Class.forName("com.ibm.db2.jcc.DB2Driver"); conn = DriverManager.getConnection( "jdbc:db2://localhost:50000/dbrdf", "db2admin", "db2admin"); } catch (ClassNotFoundException e1) { e1.printStackTrace(); } try { /* Connect to required RDF store in the specified schema. */ store = StoreManager.connectStore(conn, schema, storeName); /* This is going to be our SPARQL query i.e. select triples in the default graph where object is <ibm.com> */ String query = "SELECT * WHERE { ?s ?p Chapter 9. Querying an RDF store 31](https://image.slidesharecdn.com/ibmdb210-161212075648/75/Ibm-db2-10-5-for-linux-unix-and-windows-developing-rdf-applications-for-ibm-data-servers-37-2048.jpg)

![import com.hp.hpl.jena.rdf.model.Resource; import com.hp.hpl.jena.rdf.model.ResourceFactory; import com.hp.hpl.jena.sparql.core.describe.DescribeHandler; import com.hp.hpl.jena.sparql.core.describe.DescribeHandlerFactory; import com.hp.hpl.jena.sparql.core.describe.DescribeHandlerRegistry; import com.hp.hpl.jena.sparql.util.Context; import com.ibm.rdf.store.Store; import com.ibm.rdf.store.StoreManager; import com.ibm.rdf.store.jena.RdfStoreFactory; import com.ibm.rdf.store.jena.RdfStoreQueryExecutionFactory; import com.ibm.rdf.store.jena.RdfStoreQueryFactory; public class DescribeTest { /** * @param args * @throws ClassNotFoundException * @throws SQLException */ public static void main(String[] args) throws ClassNotFoundException, SQLException { if (args.length != 5) { System.err.print("Invalid arguments.n"); printUsage(); System.exit(0); } /* Note: ensure that the DB2 default describe handler is also removed. * Use the ARQ API’s to remove the default registered describe handlers. * If you don’t do this, every resource runs through multiple describe * handlers, causing unnecessarily high overhead. */ /* * Now Register a new DescribeHandler (MyDescribeHandler) */ DescribeHandlerRegistry.get().add(new DescribeHandlerFactory() { public DescribeHandler create() { return new MyDescribeHandler(); } }); /* * Creating database connection and store object. */ Store store = null; Connection conn = null; Class.forName("com.ibm.db2.jcc.DB2Driver"); String datasetName = args[0]; String url = args[1]; String schema = args[2]; String username = args[3]; String passwd = args[4]; conn = DriverManager.getConnection(url, username, passwd); if (StoreManager.checkStoreExists(conn, schema, datasetName)) { store = StoreManager.connectStore(conn, schema, datasetName); } else { store = StoreManager.createStore(conn, schema, datasetName, null); } /* * Creating dataset with test data. Chapter 9. Querying an RDF store 35](https://image.slidesharecdn.com/ibmdb210-161212075648/75/Ibm-db2-10-5-for-linux-unix-and-windows-developing-rdf-applications-for-ibm-data-servers-41-2048.jpg)

![*/ Dataset ds = RdfStoreFactory.connectDataset(store, conn); ds.getDefaultModel().removeAll(); ds.getDefaultModel().add(getInputData()); /* * Executing a DESCRIBE SPARQL query. */ String sparql = "DESCRIBE <http://example.com/x>"; Query query = RdfStoreQueryFactory.create(sparql); QueryExecution qe = RdfStoreQueryExecutionFactory.create(query, ds); Model m = qe.execDescribe(); m.write(System.out, "N-TRIPLES"); qe.close(); conn.close(); } private static void printUsage() { System.out.println("Correct usage: "); System.out.println("java DescribeTest <DATASET_NAME>"); System.out.println(" <URL> <SCHEMA> <USERNAME> <PASSWORD>"); } // Creating input data. private static Model getInputData() { Model input = ModelFactory.createDefaultModel(); Resource iris[] = { ResourceFactory.createResource("http://example.com/w"), ResourceFactory.createResource("http://example.com/x"), ResourceFactory.createResource("http://example.com/y"), ResourceFactory.createResource("http://example.com/z") }; Property p = ResourceFactory.createProperty("http://example.com/p"); Node o = Node.createAnon(new AnonId("AnonID")); for (int i = 0; i < iris.length - 1; i++) { input.add(input.asStatement(Triple.create(iris[i].asNode(), p .asNode(), iris[i + 1].asNode()))); } input.add(input.asStatement(Triple.create(iris[iris.length - 1] .asNode(), p.asNode(), o))); return input; } } /* * Sample implementation of DescribeHandler. */ class MyDescribeHandler implements DescribeHandler { /* * Set to keep track of all unique resource which are * required to DESCRIBE. */ private Set <Resource> resources; private Model accumulator; 36 Developing RDF Applications for IBM Data Servers](https://image.slidesharecdn.com/ibmdb210-161212075648/75/Ibm-db2-10-5-for-linux-unix-and-windows-developing-rdf-applications-for-ibm-data-servers-42-2048.jpg)

![Determine the values of RDF predicates based on RDF graphs that contain triples. The values determine which graphs are accessed. Decide whether the access control check is for a single value or any value in a set of values. If the access control check is for a single value, create a QueryFilterPredicateEquals object that returns this value. If the access control check is for any one value in a set of values, create a QueryFilterPredicateMember object that returns set of values. Repeat this process for each access control filter predicate. Set the objects into the QueryExecution context for the SPARQL query that is issued. Example The following Java program demonstrates how graph access is controlled by using the RDF store SQL generator. import java.sql.Connection; import java.sql.DriverManager; import java.sql.SQLException; import java.util.ArrayList; import java.util.Iterator; import java.util.List; import com.hp.hpl.jena.query.Dataset; import com.hp.hpl.jena.query.QueryExecution; import com.hp.hpl.jena.query.QuerySolution; import com.hp.hpl.jena.query.ResultSet; import com.hp.hpl.jena.rdf.model.Literal; import com.hp.hpl.jena.rdf.model.Model; import com.hp.hpl.jena.rdf.model.ModelFactory; import com.hp.hpl.jena.rdf.model.Property; import com.hp.hpl.jena.rdf.model.Resource; import com.hp.hpl.jena.rdf.model.ResourceFactory; import com.ibm.rdf.store.Store; import com.ibm.rdf.store.StoreManager; import com.ibm.rdf.store.Symbols; import com.ibm.rdf.store.jena.RdfStoreFactory; import com.ibm.rdf.store.jena.RdfStoreQueryExecutionFactory; import com.ibm.rdf.store.query.filter.QueryFilterPredicate; import com.ibm.rdf.store.query.filter.QueryFilterPredicateEquals; import com.ibm.rdf.store.query.filter.QueryFilterPredicateMember; import com.ibm.rdf.store.query.filter.QueryFilterPredicateProvider; public class QueryStoreGraphAccessControl { /* Property objects for the two RDF predicates based on whose triples * access to the graph is controlled. */ private final static Property APPID = ModelFactory.createDefaultModel() .createProperty("http://myapp.net/xmlns/APPID"); private final static Property CONTEXTID = ModelFactory.createDefaultModel() .createProperty("http://myapp.net/xmlns/CONTEXTID"); public static void main(String[] args) throws SQLException { Connection conn = null; Store store = null; 38 Developing RDF Applications for IBM Data Servers](https://image.slidesharecdn.com/ibmdb210-161212075648/75/Ibm-db2-10-5-for-linux-unix-and-windows-developing-rdf-applications-for-ibm-data-servers-44-2048.jpg)

![Chapter 10. Setting up SPARQL Version 1.1 Graph Store Protocol and SPARQL over HTTP In DB2 Version 10.1 Fix Pack 2 and later fix packs, DB2 RDF supports the SPARQL Version 1.1 graph store HTTP protocol. This protocol requires Apache JENA Fuseki Version 0.2.4. You need to set up the Fuseki environment to use the SPARQL REST API. Before you begin To set up the Fuseki environment: 1. Download the jena-fuseki-0.2.4-distribution.zip file from http://archive.apache.org/dist/jena/binaries/“http://archive.apache.org/dist/ jena/binaries/”. 2. Extract the file on your local system. Procedure 1. Open a command prompt window and go to the <Fuseki install dir>/jena-fuseki-0.2.4 directory. 2. Open the config.ttl file and add db2rdf as a prefix @prefix : <#> . @prefix fuseki: <http://jena.apache.org/fuseki#> . @prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> . @prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> . @prefix tdb: <http://jena.hpl.hp.com/2008/tdb#> . @prefix ja: <http://jena.hpl.hp.com/2005/11/Assembler#> . @prefix db2rdf: <http://rdfstore.ibm.com/IM/fuseki/configuration#> 3. Add the DB2 RDF service to the config.ttl file. Add this service to the section of the file where all the other services are registered. fuseki:services ( <#service1> <#service2> <#serviceDB2RDF_staffing> ) . You can register multiple services. Each service queries different DB2 RDF data sets. 4. Add the following configuration to the config.ttl file to initialize the RDF namespace. This configuration registers the assembler that creates the DB2Dataset. The configuration also registers the DB2QueryEngine and DB2UpdateEngine engines. # DB2 [] ja:loadClass "com.ibm.rdf.store.jena.DB2" . db2rdf:DB2Dataset rdfs:subClassOf ja:RDFDataset . 5. Add details about the DB2 RDF service to the end of config.ttl file. # Service DB2 Staffing store <#serviceDB2RDF_staffing> rdf:type fuseki:Service ; rdfs:label "SPARQL against DB2 RDF store" ; fuseki:name "staffing" ; fuseki:serviceQuery "sparql" ; fuseki:serviceQuery "query" ; fuseki:serviceUpdate "update" ; fuseki:serviceUpload "upload" ; © Copyright IBM Corp. 2013 41](https://image.slidesharecdn.com/ibmdb210-161212075648/75/Ibm-db2-10-5-for-linux-unix-and-windows-developing-rdf-applications-for-ibm-data-servers-47-2048.jpg)