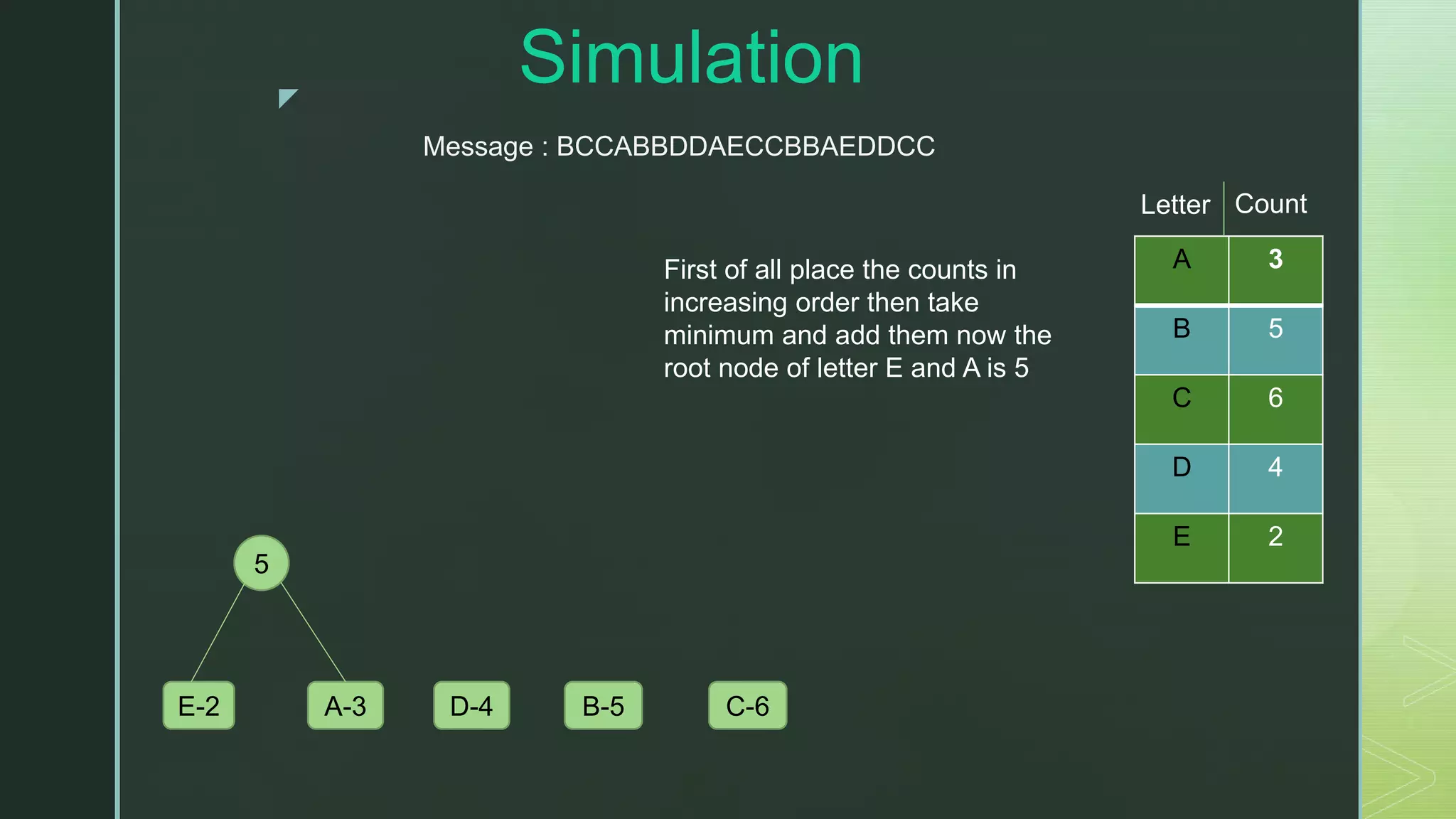

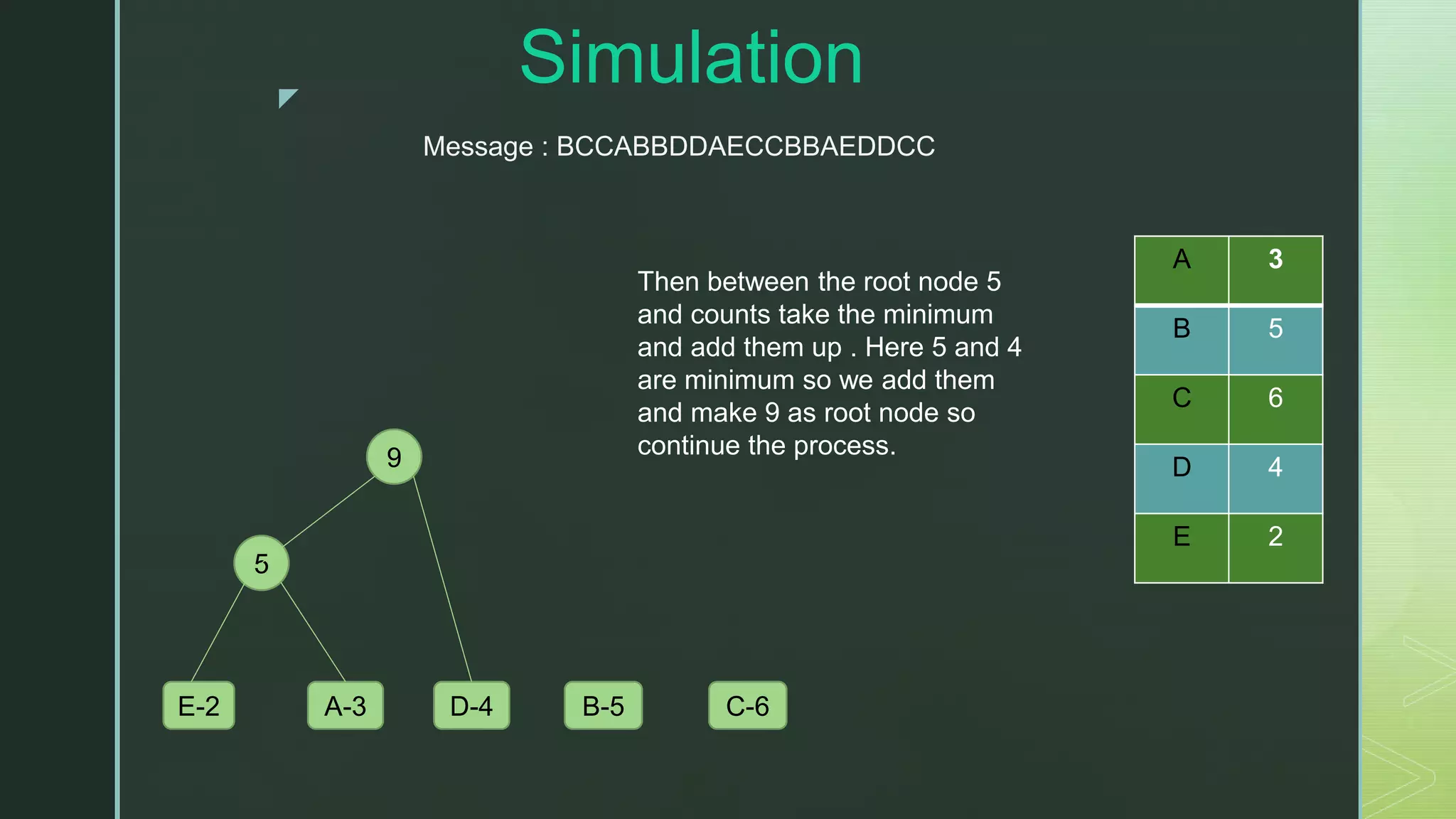

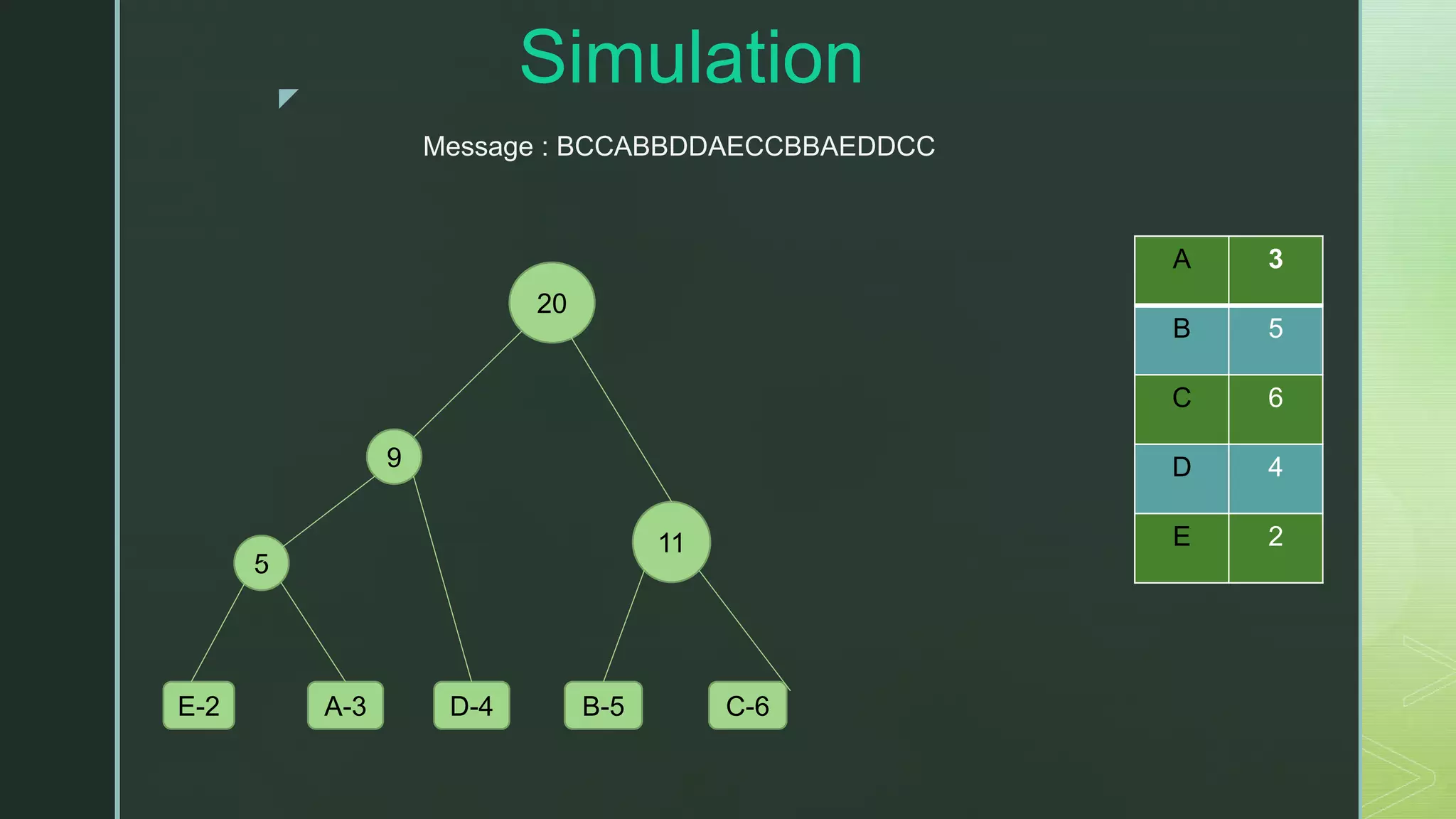

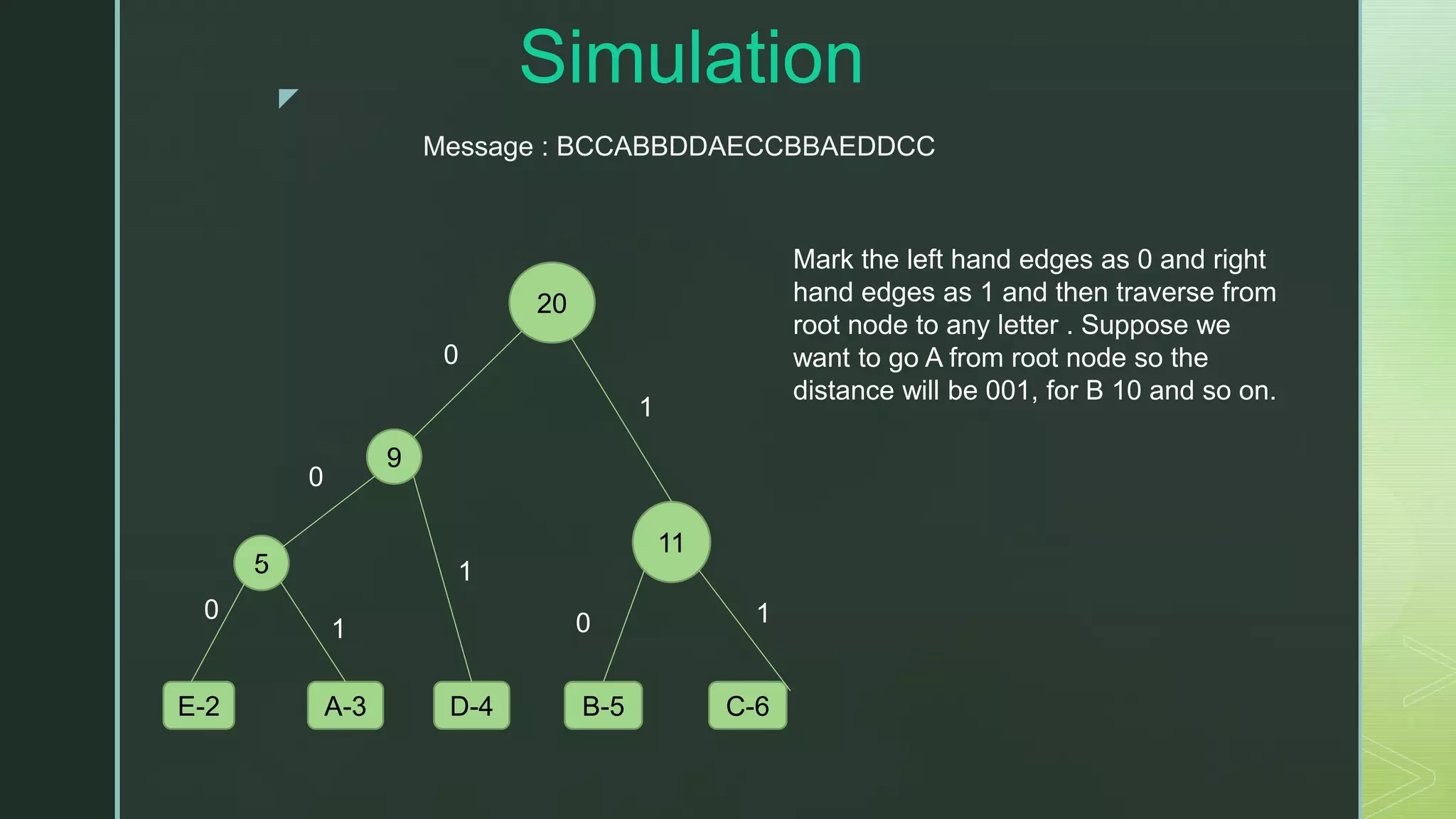

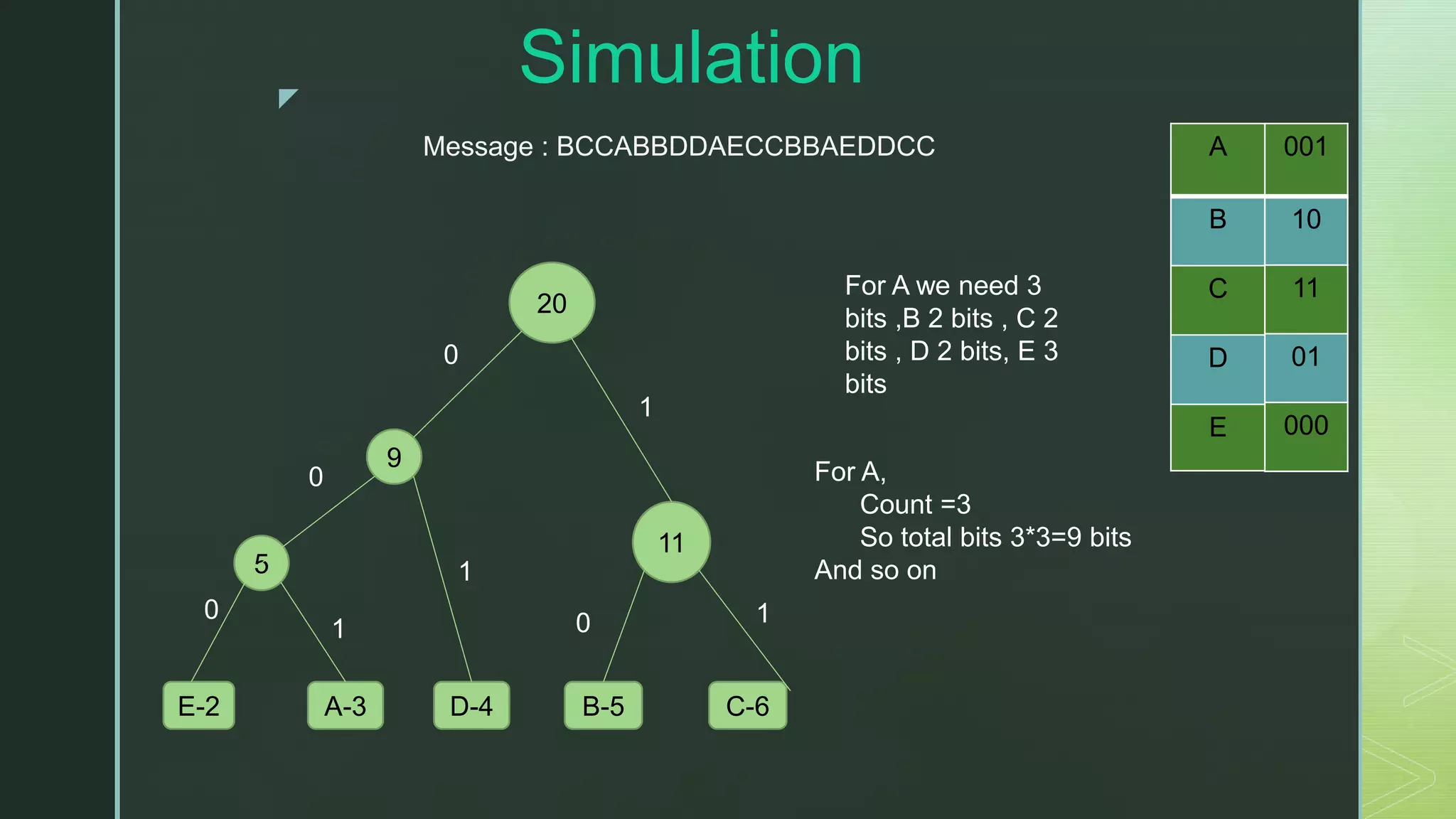

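Huffman coding is a lossless data compression algorithm that assigns variable-length binary codes to characters based on their frequencies: more frequent characters get shorter codes. It builds a binary tree, the Huffman tree, by repeatedly merging the two lowest-frequency nodes into a new internal node whose frequency is the sum of its children's frequencies. Each character ends up as a leaf, and the root holds the total frequency of the message. A character's code is the path from the root to its leaf, with each branch contributing one bit (for example, 0 for a left branch and 1 for a right branch). Because no leaf lies on the path to another leaf, no code is a prefix of any other, so the encoded bit stream can be decoded unambiguously. To encode a message, the code of each character is written out in order.

The simulation above builds the Huffman tree for a sample message and assigns a code to each character, compressing the data from 160 bits to 45 bits. Building the tree with a priority queue takes O(n log n) time for n distinct characters. Huffman coding is commonly used in file compression, multimedia, and communication applications, providing efficient compression at the cost of slower encoding and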
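The construction and encoding steps described above can be sketched in a few lines of Python. This is a minimal illustration, not the code behind the simulation: it assumes a heap-based priority queue (Python's `heapq`) for the repeated "merge the two lowest-frequency nodes" step, which is what gives the O(n log n) bound, since each of the n − 1 merges costs O(log n). The function names `build_tree`, `assign_codes`, `encode`, and `decode`, and the sample string `"abracadabra"`, are hypothetical choices for illustration only.

```python
import heapq
from collections import Counter
from itertools import count


class Node:
    """Huffman tree node: leaves carry a symbol, internal nodes carry two children."""

    def __init__(self, freq, symbol=None, left=None, right=None):
        self.freq = freq
        self.symbol = symbol
        self.left = left
        self.right = right


def build_tree(message):
    """Repeatedly merge the two lowest-frequency nodes until only the root remains."""
    freqs = Counter(message)
    if not freqs:
        raise ValueError("cannot build a Huffman tree for an empty message")
    tiebreak = count()  # unique sequence number so heapq never compares Node objects
    heap = [(f, next(tiebreak), Node(f, symbol=s)) for s, f in freqs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        f1, _, a = heapq.heappop(heap)  # lowest frequency
        f2, _, b = heapq.heappop(heap)  # second lowest frequency
        merged = Node(f1 + f2, left=a, right=b)  # internal node: sum of children
        heapq.heappush(heap, (f1 + f2, next(tiebreak), merged))
    return heap[0][2]  # root.freq equals len(message)


def assign_codes(node, prefix="", codes=None):
    """Label left branches 0 and right branches 1; a leaf's code is its root-to-leaf path."""
    if codes is None:
        codes = {}
    if node.left is None:  # leaf
        codes[node.symbol] = prefix or "0"  # one distinct symbol still needs 1 bit
    else:
        assign_codes(node.left, prefix + "0", codes)
        assign_codes(node.right, prefix + "1", codes)
    return codes


def encode(message, codes):
    """Concatenate the code of each character in order."""
    return "".join(codes[ch] for ch in message)


def decode(bits, root):
    """Walk from the root (0 = left, 1 = right) and emit a symbol at each leaf."""
    if root.left is None:  # degenerate tree: only one distinct symbol
        return root.symbol * len(bits)
    out, node = [], root
    for bit in bits:
        node = node.left if bit == "0" else node.right
        if node.left is None:  # reached a leaf
            out.append(node.symbol)
            node = root
    return "".join(out)


if __name__ == "__main__":
    message = "abracadabra"  # hypothetical sample, not the simulation's message
    root = build_tree(message)
    codes = assign_codes(root)
    bits = encode(message, codes)
    print(codes)
    print(f"{8 * len(message)} bits -> {len(bits)} bits")  # 88 bits -> 23 bits
    assert decode(bits, root) == message
```

Here `"abracadabra"` (11 characters, 88 bits at 8 bits each) encodes to 23 bits. The integer tiebreaker in each heap entry is a design choice: it keeps the heap ordering well defined when two nodes have equal frequency, so `heapq` never tries to compare `Node` objects directly.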