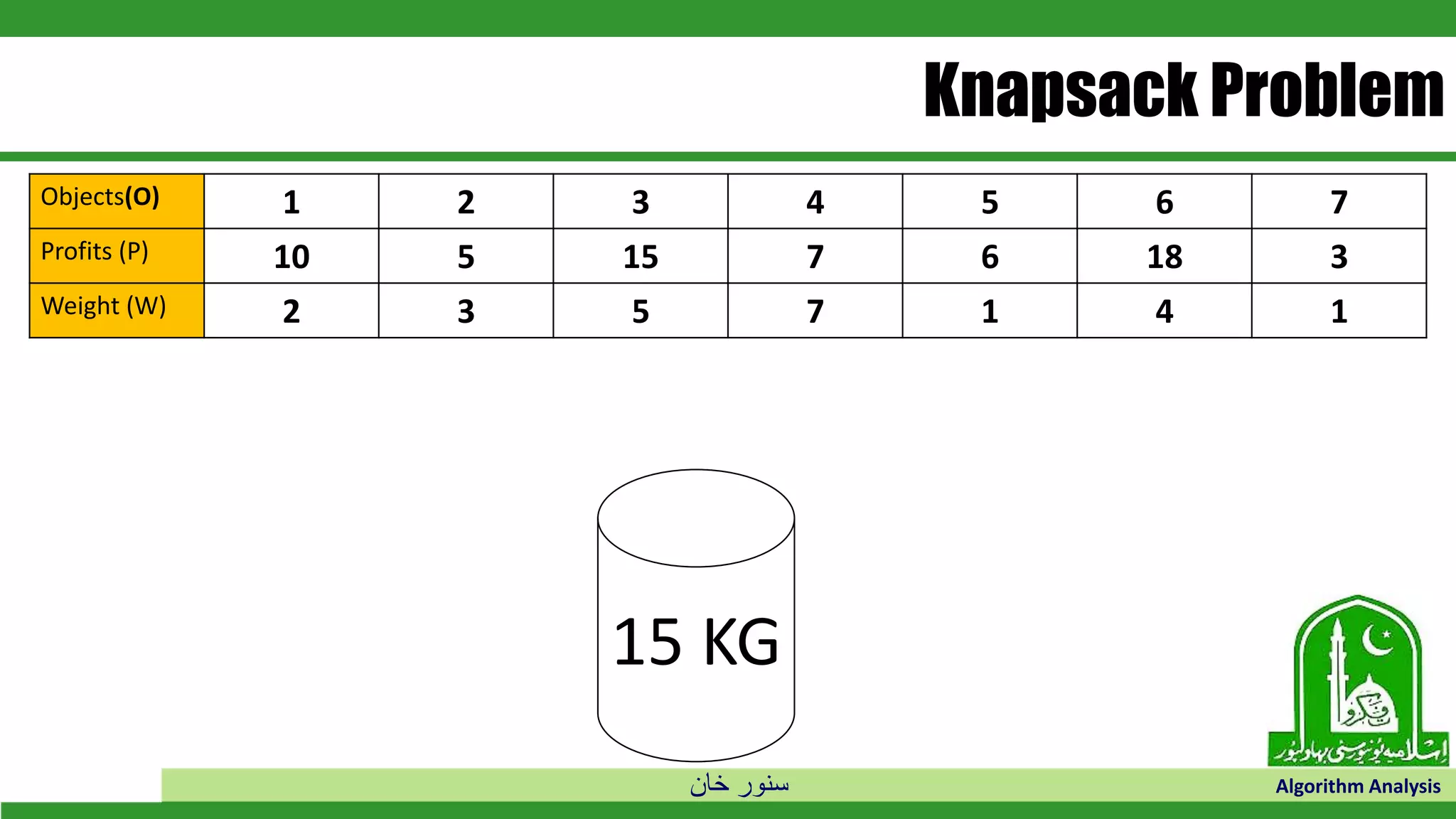

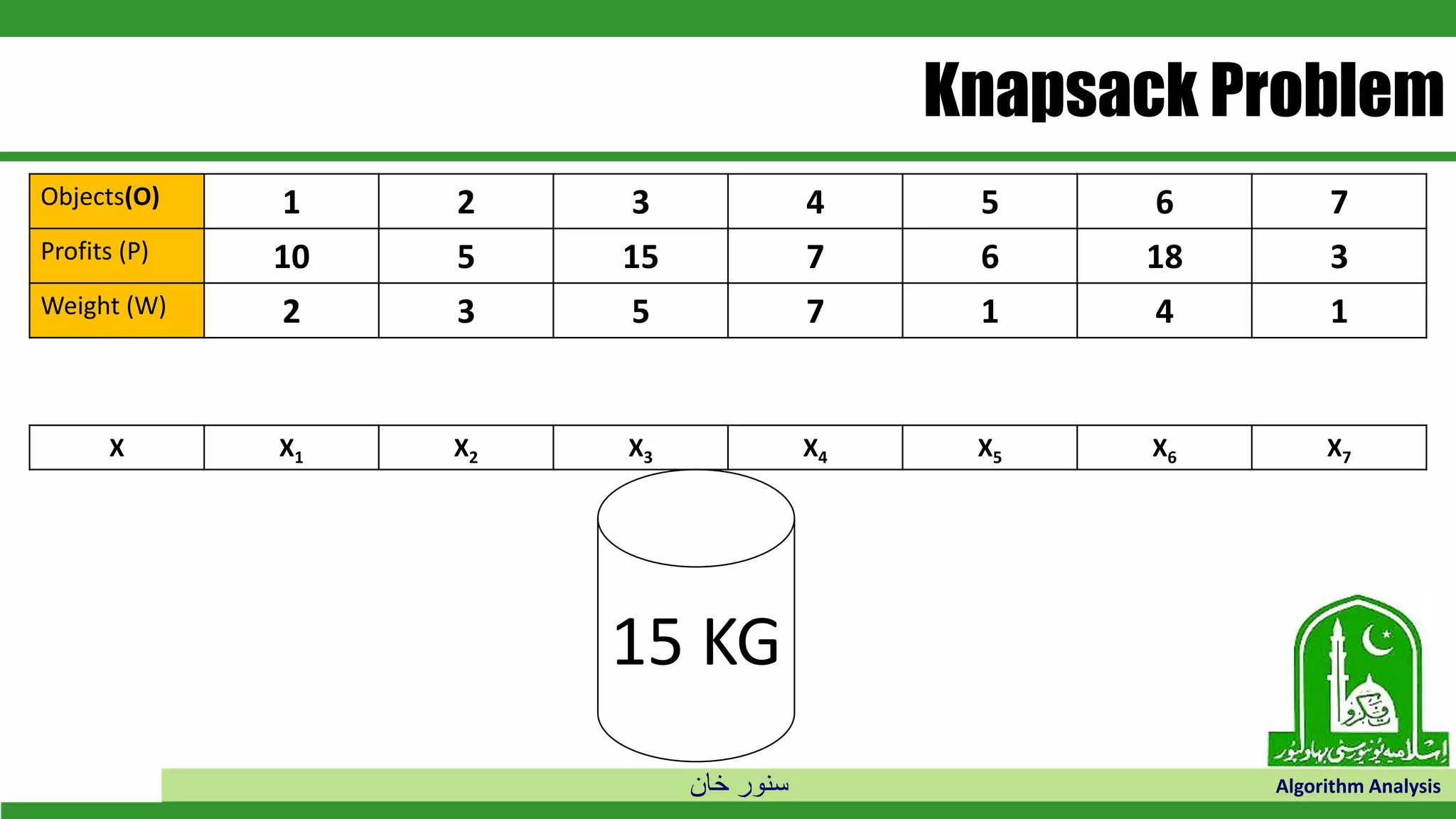

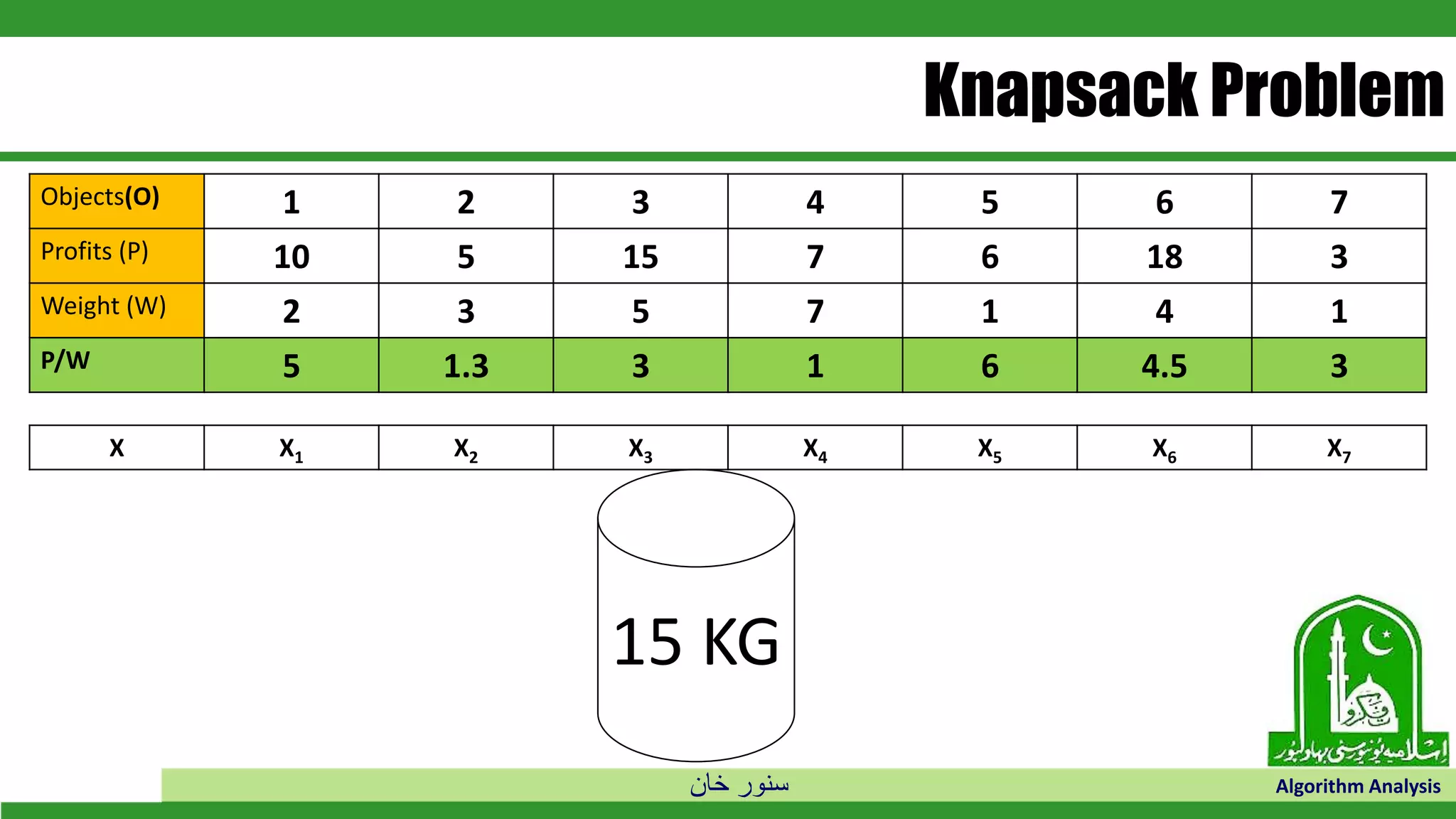

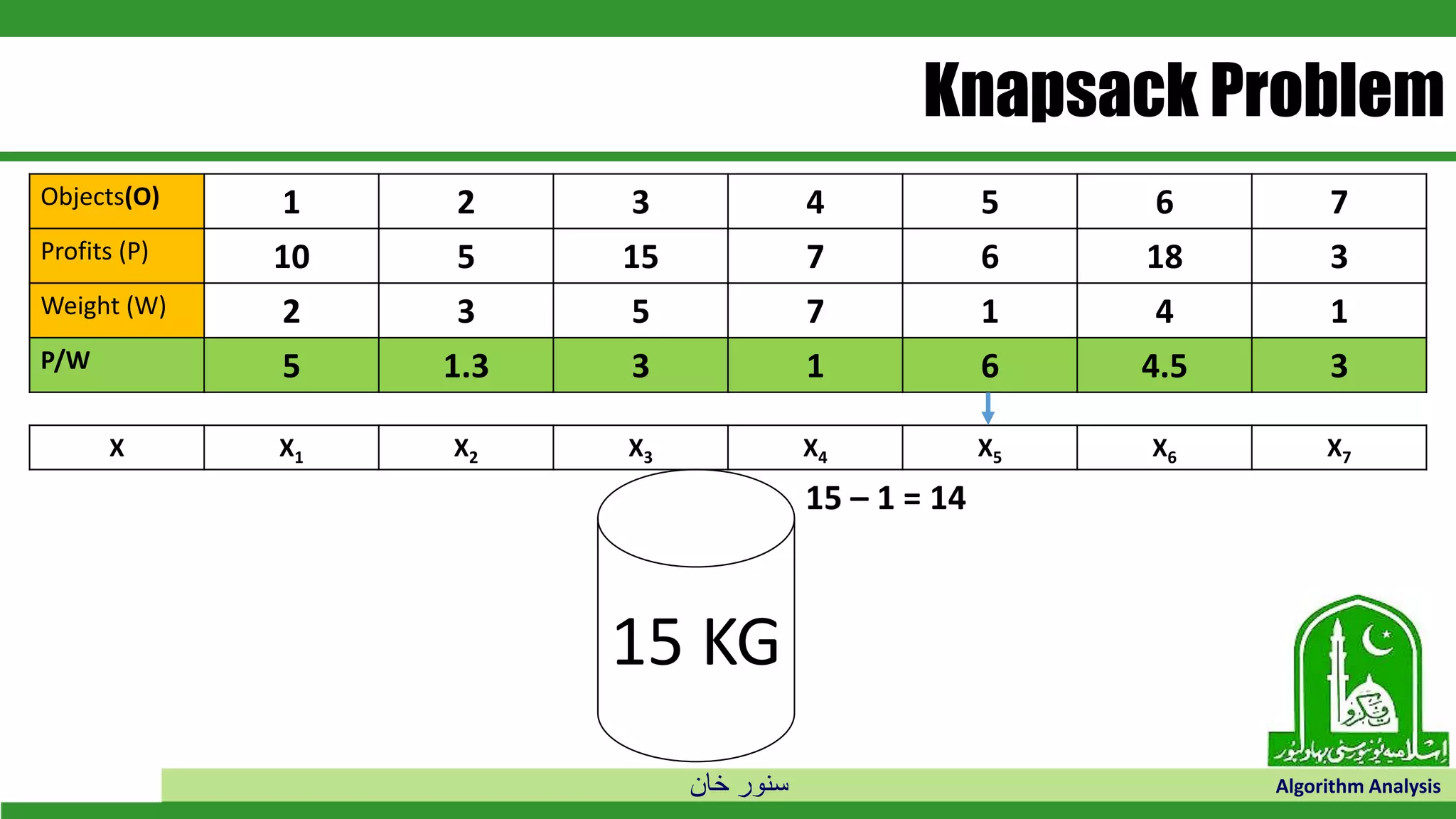

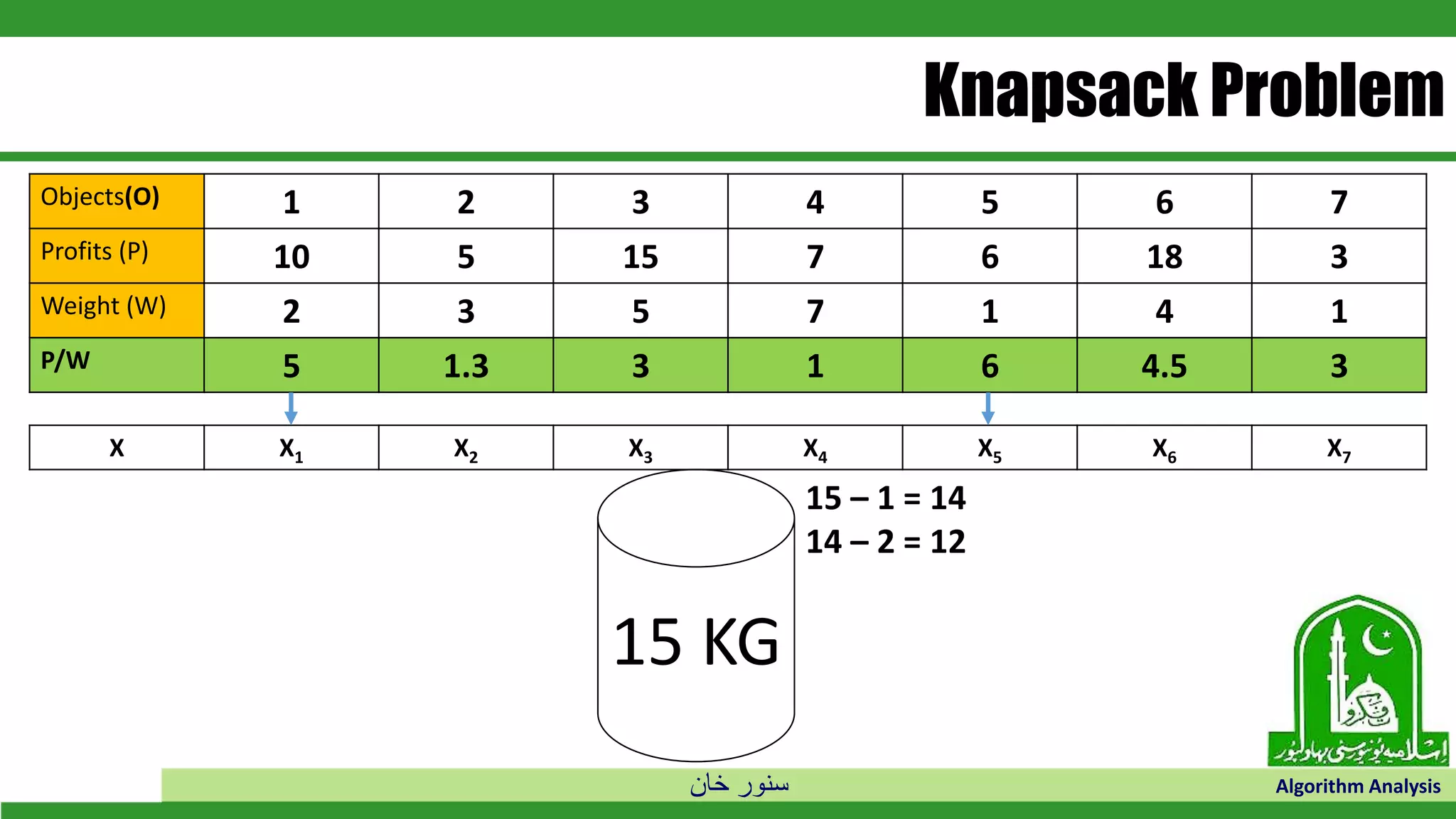

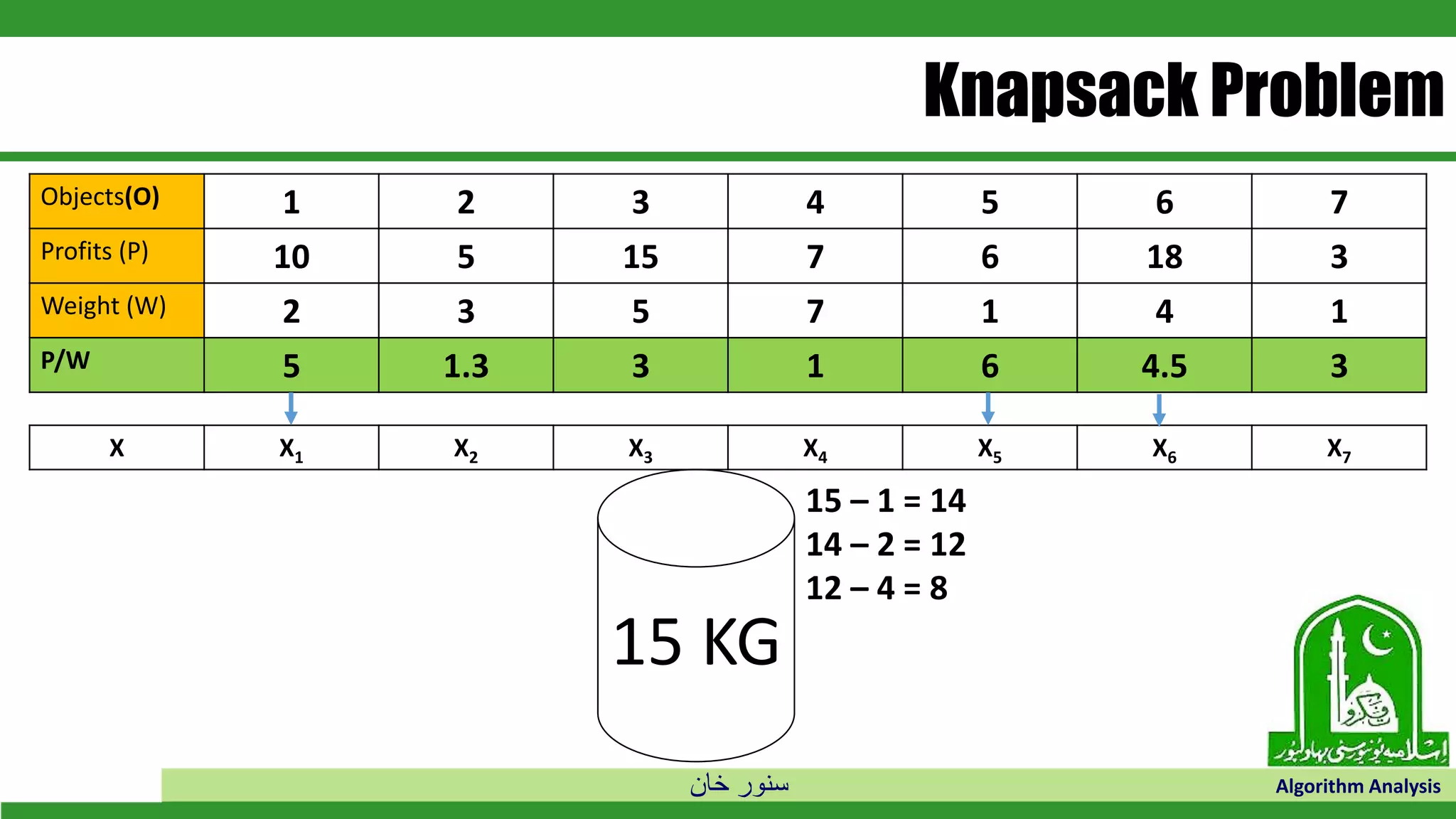

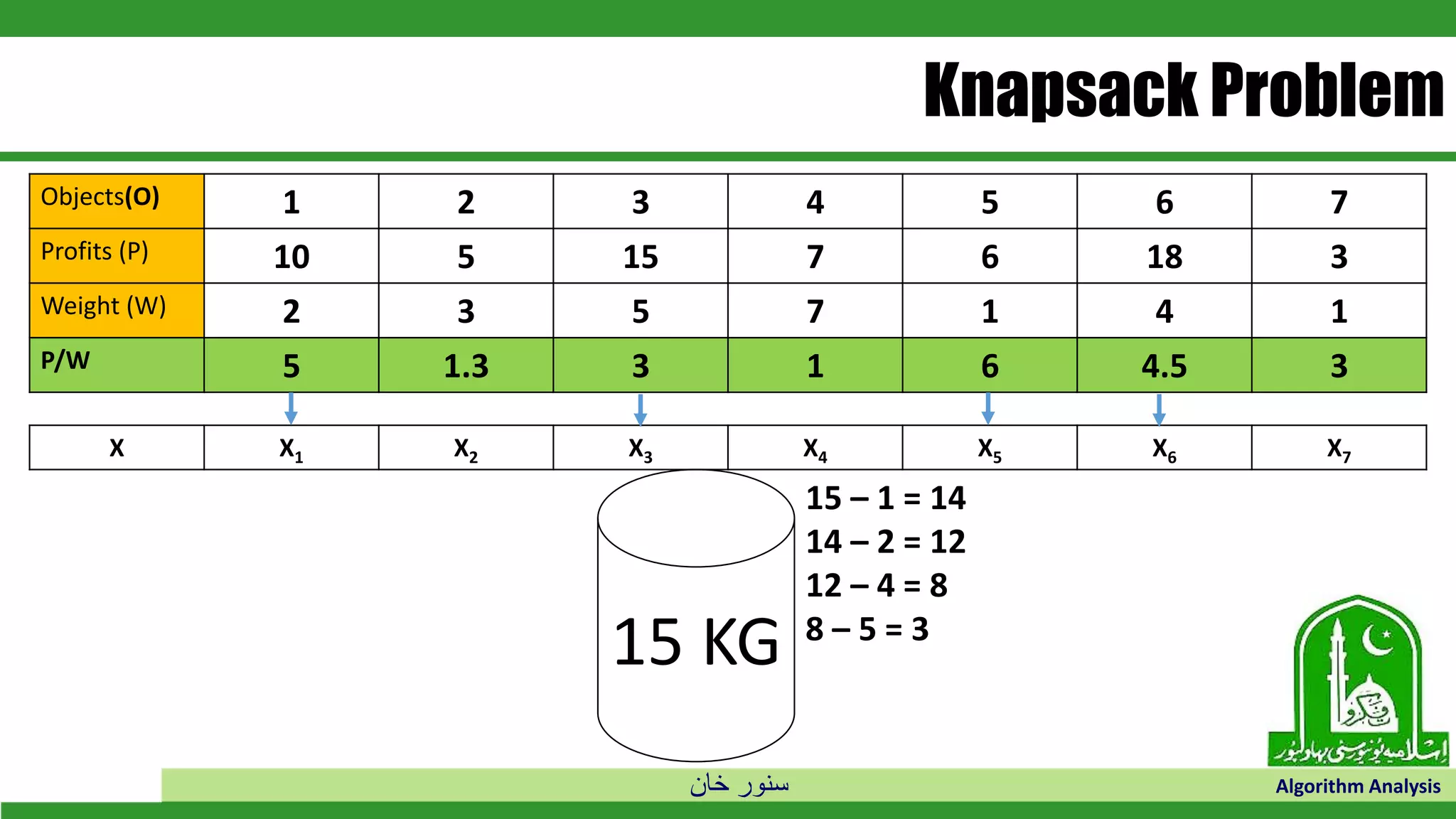

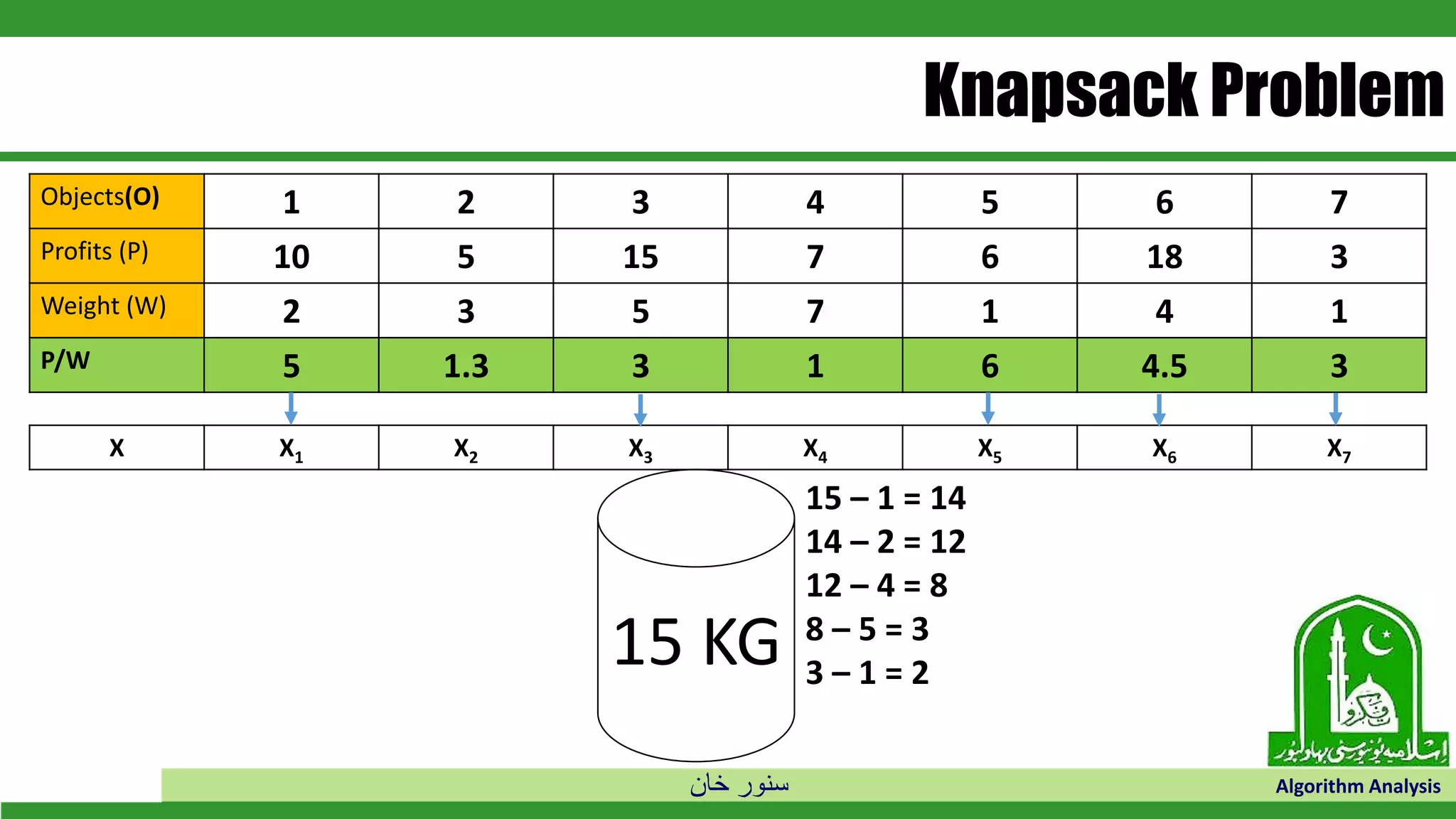

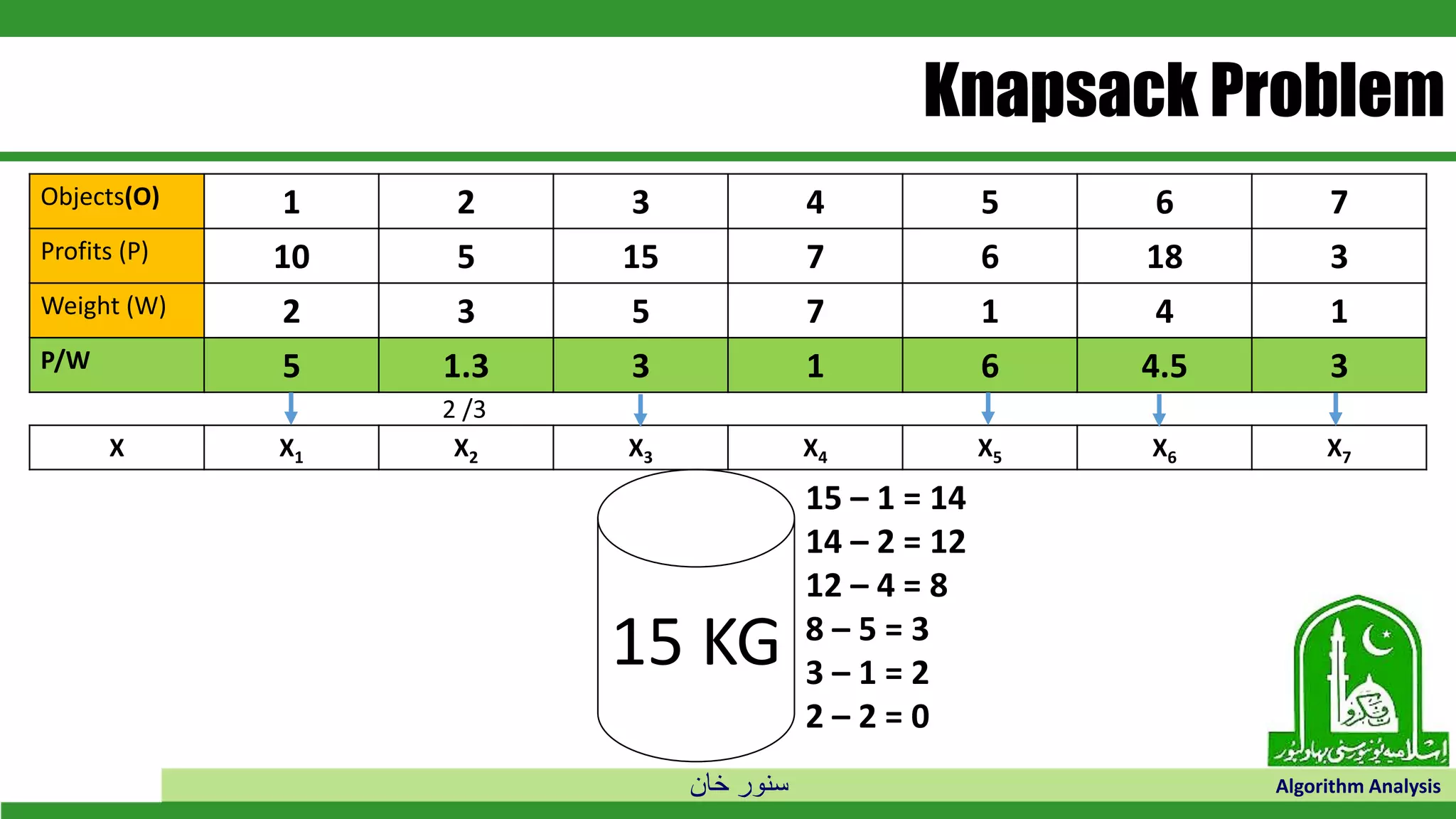

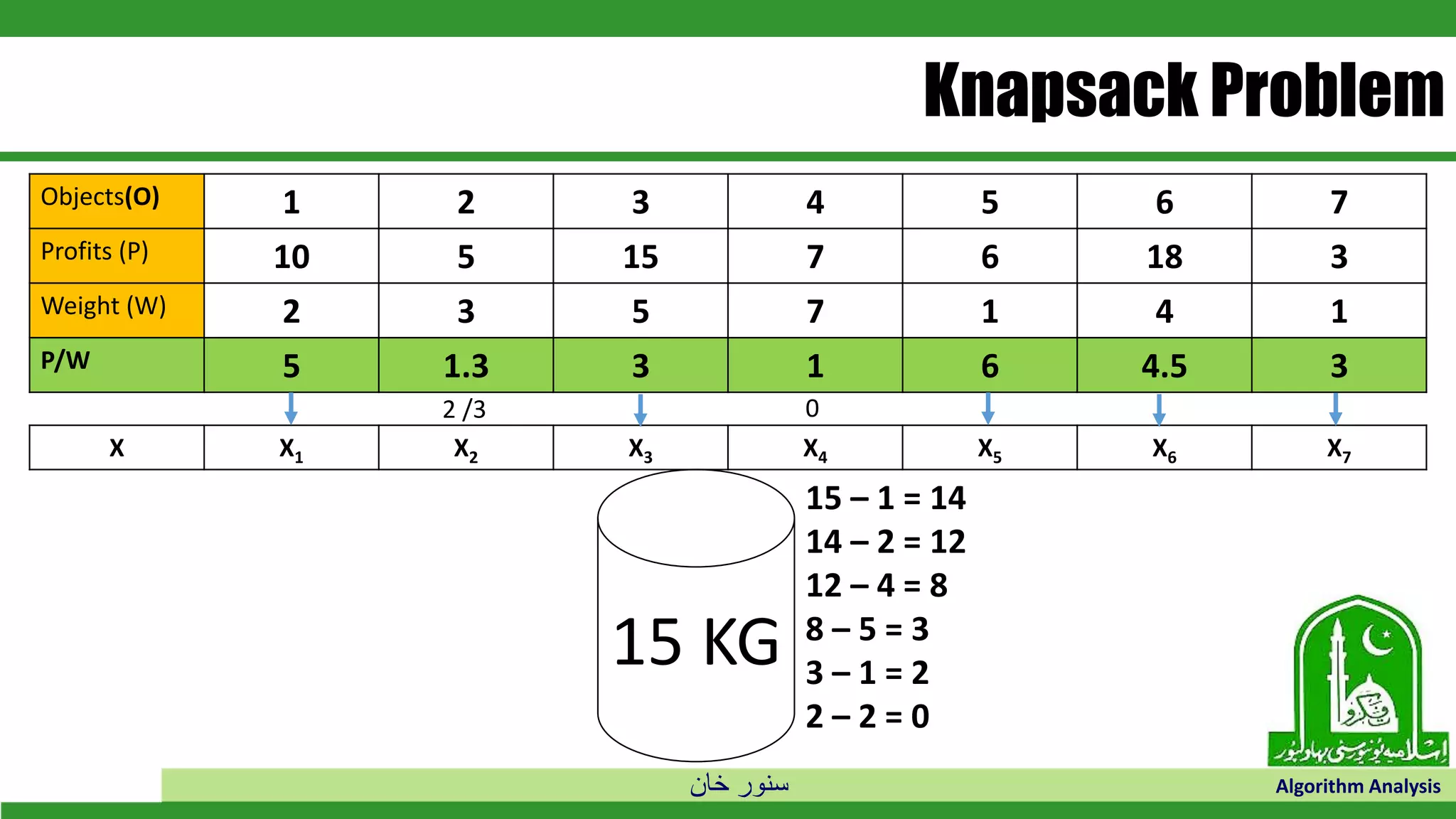

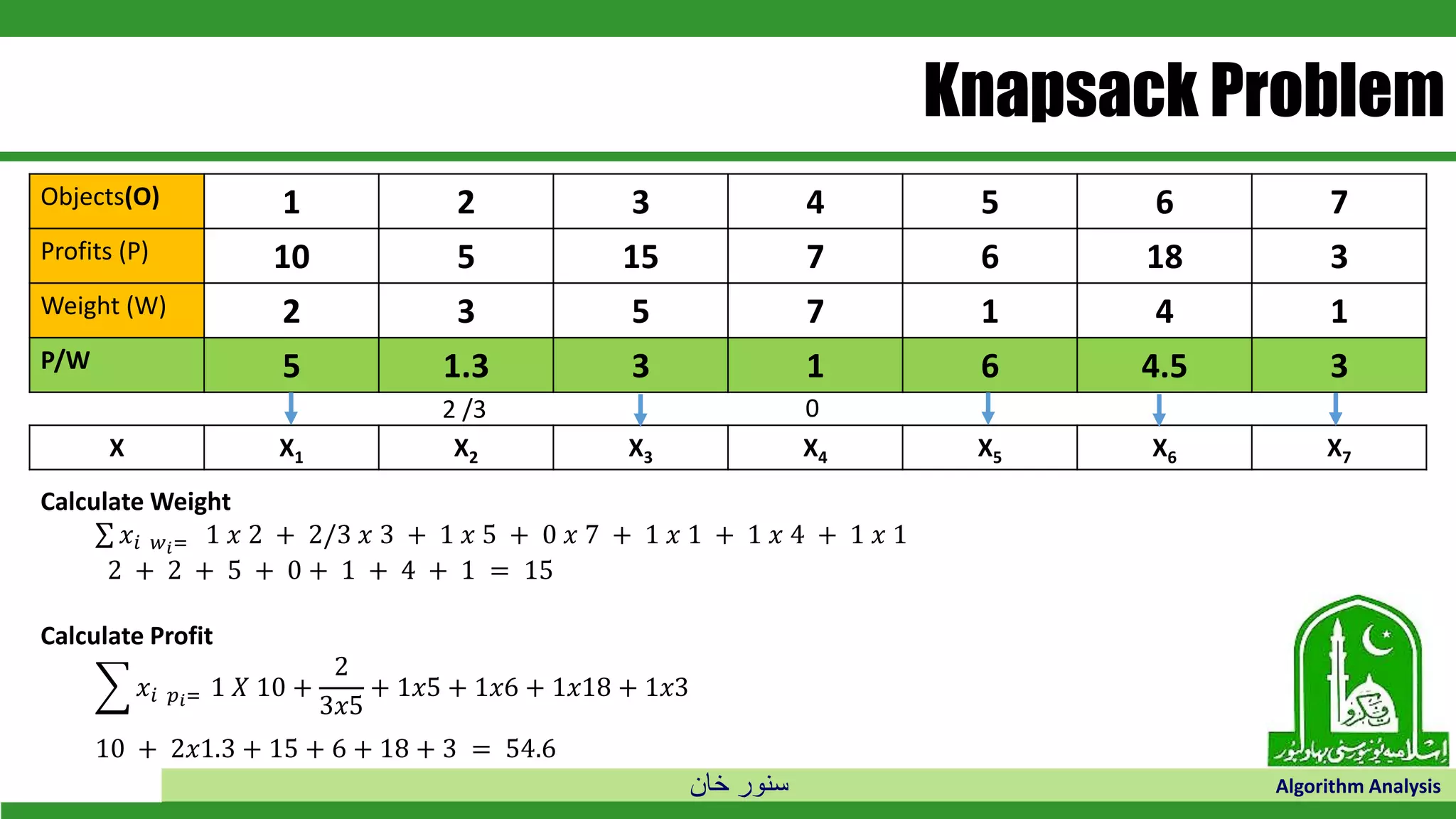

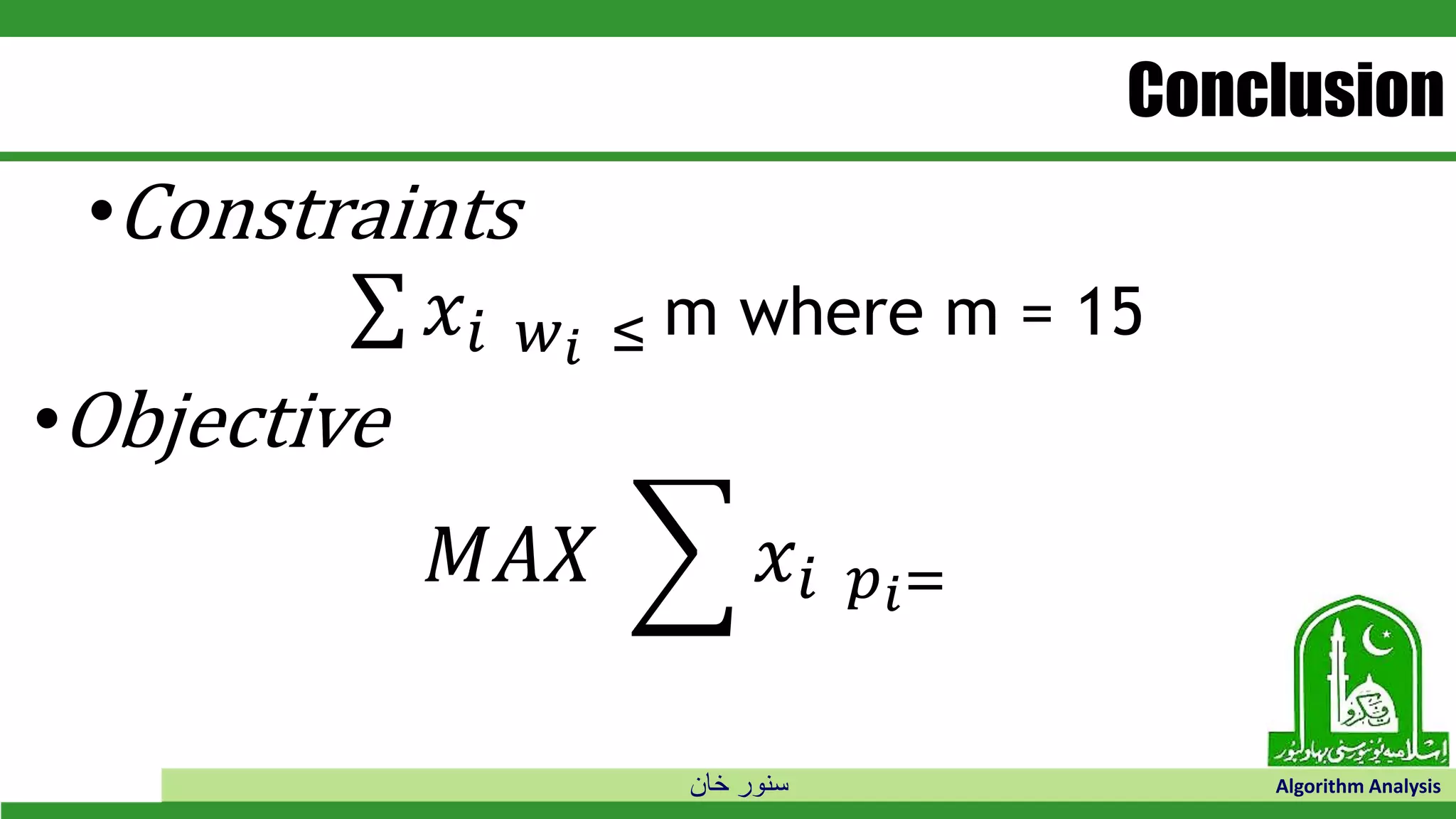

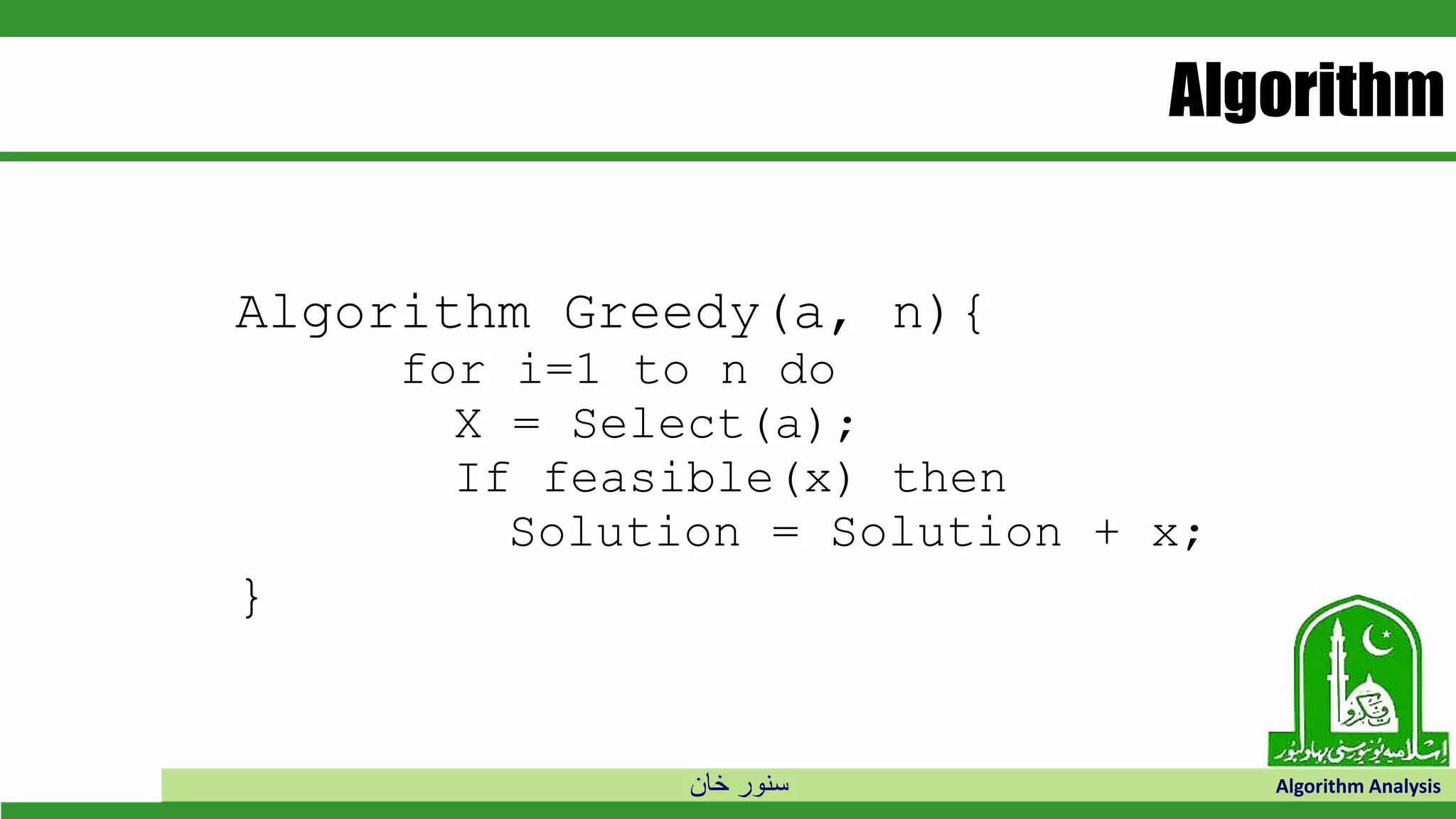

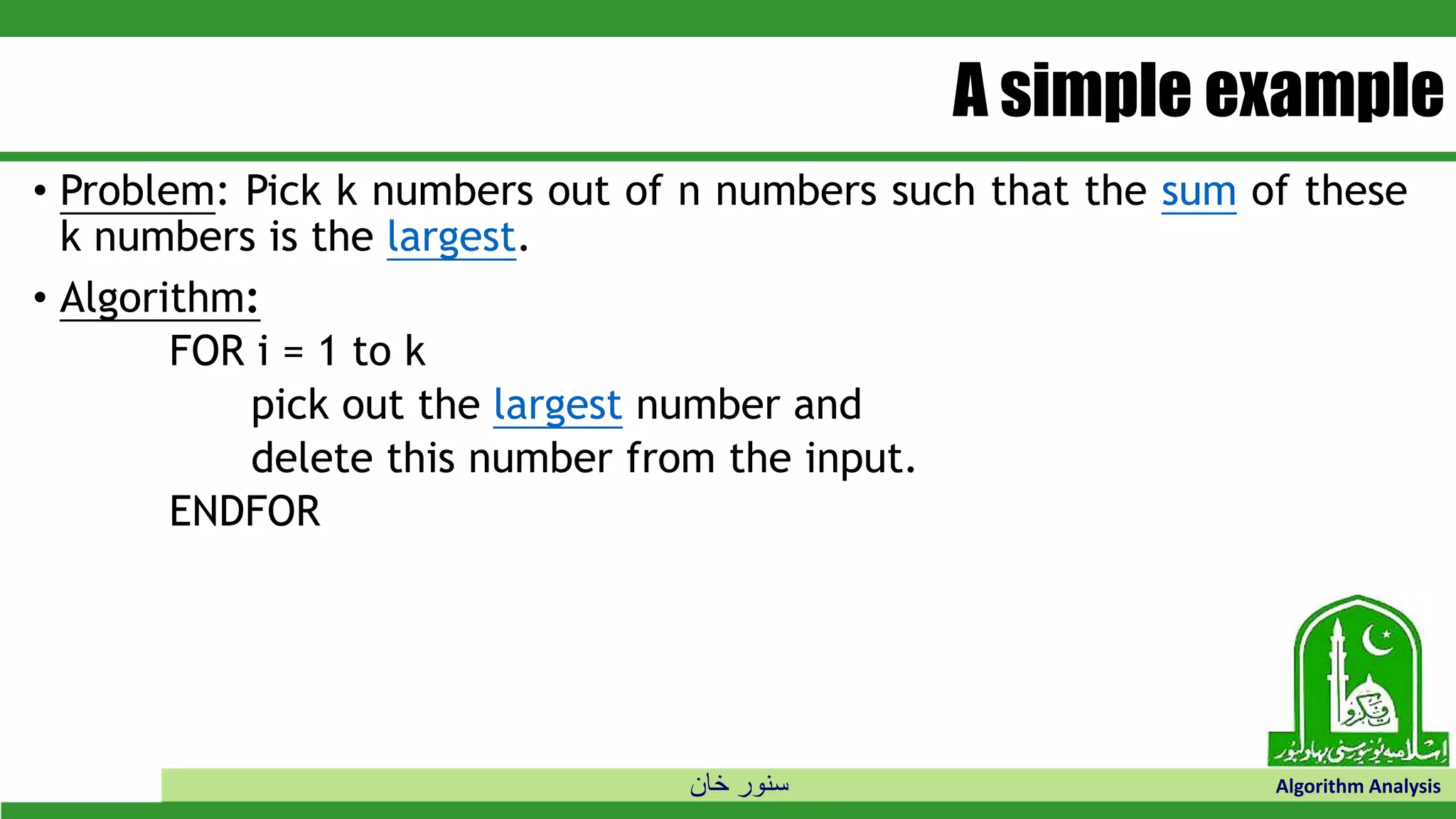

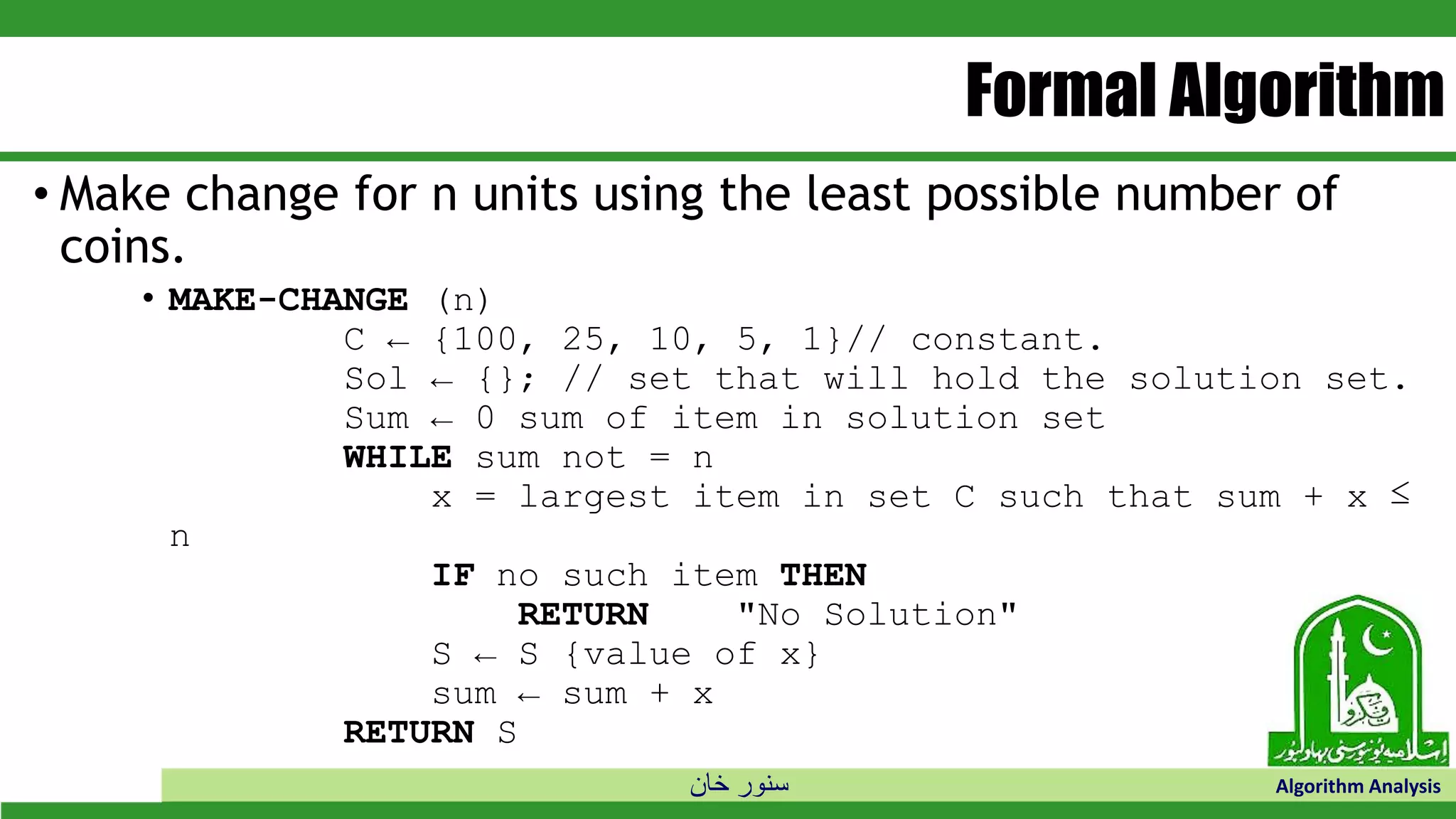

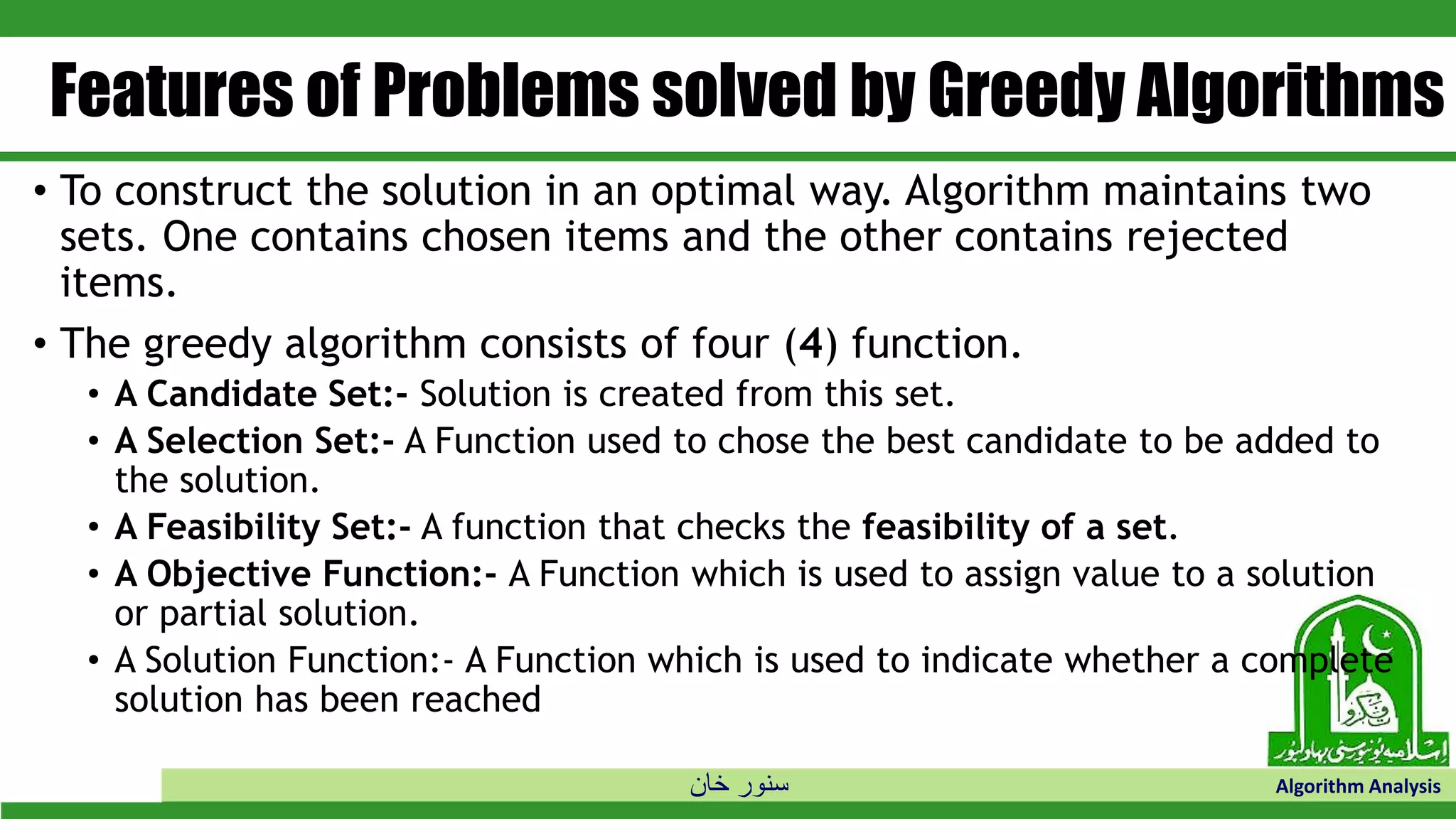

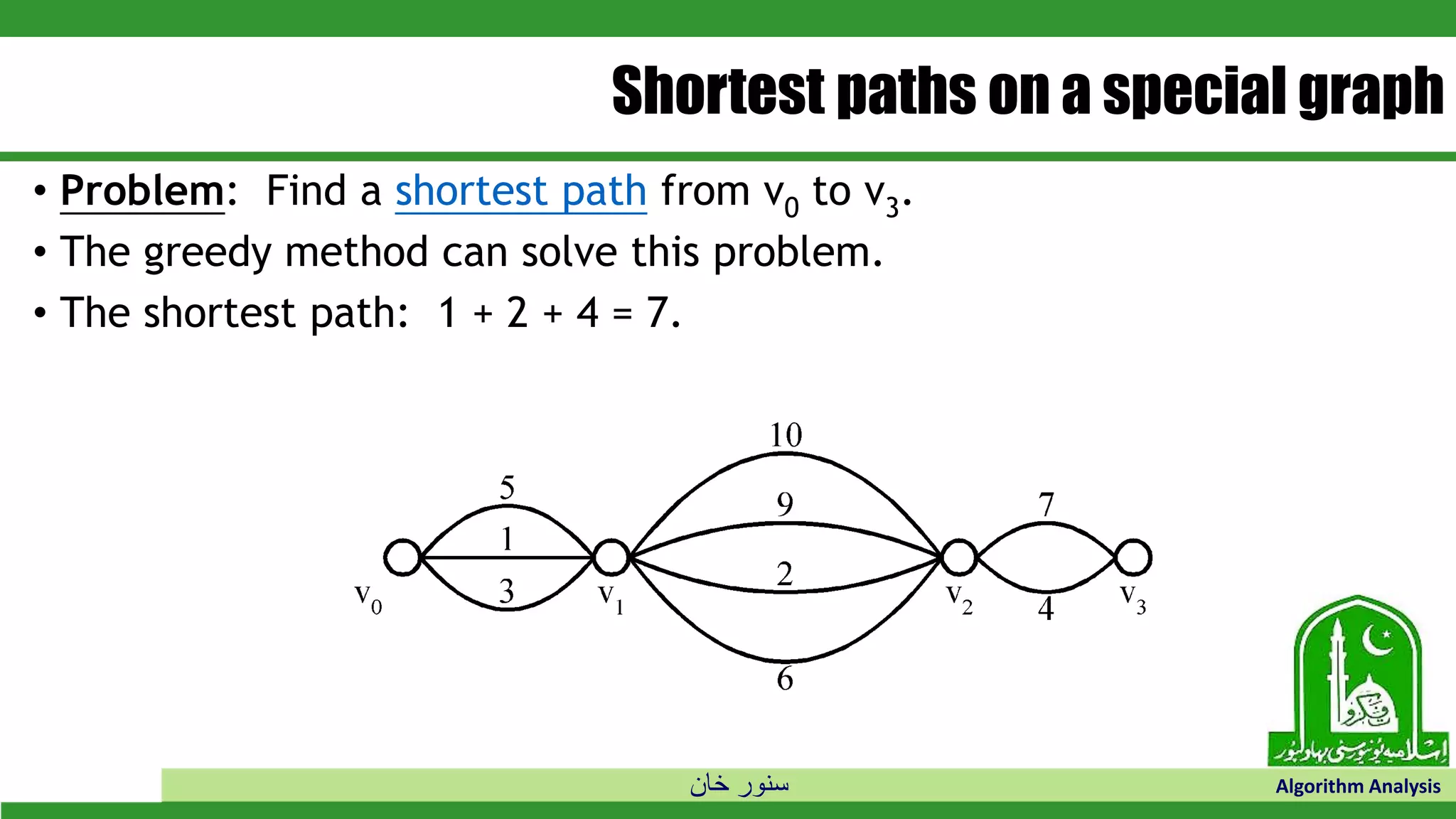

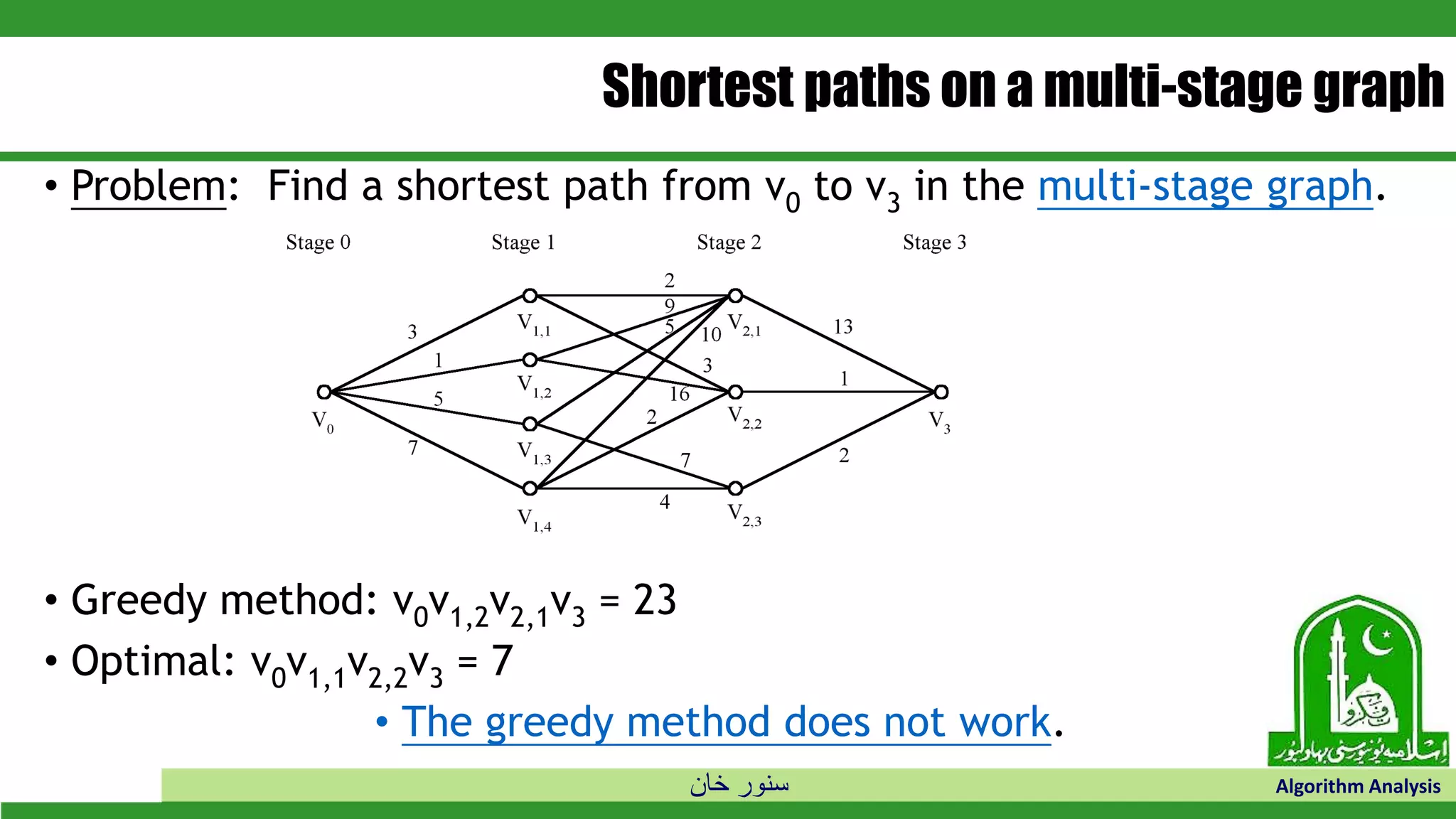

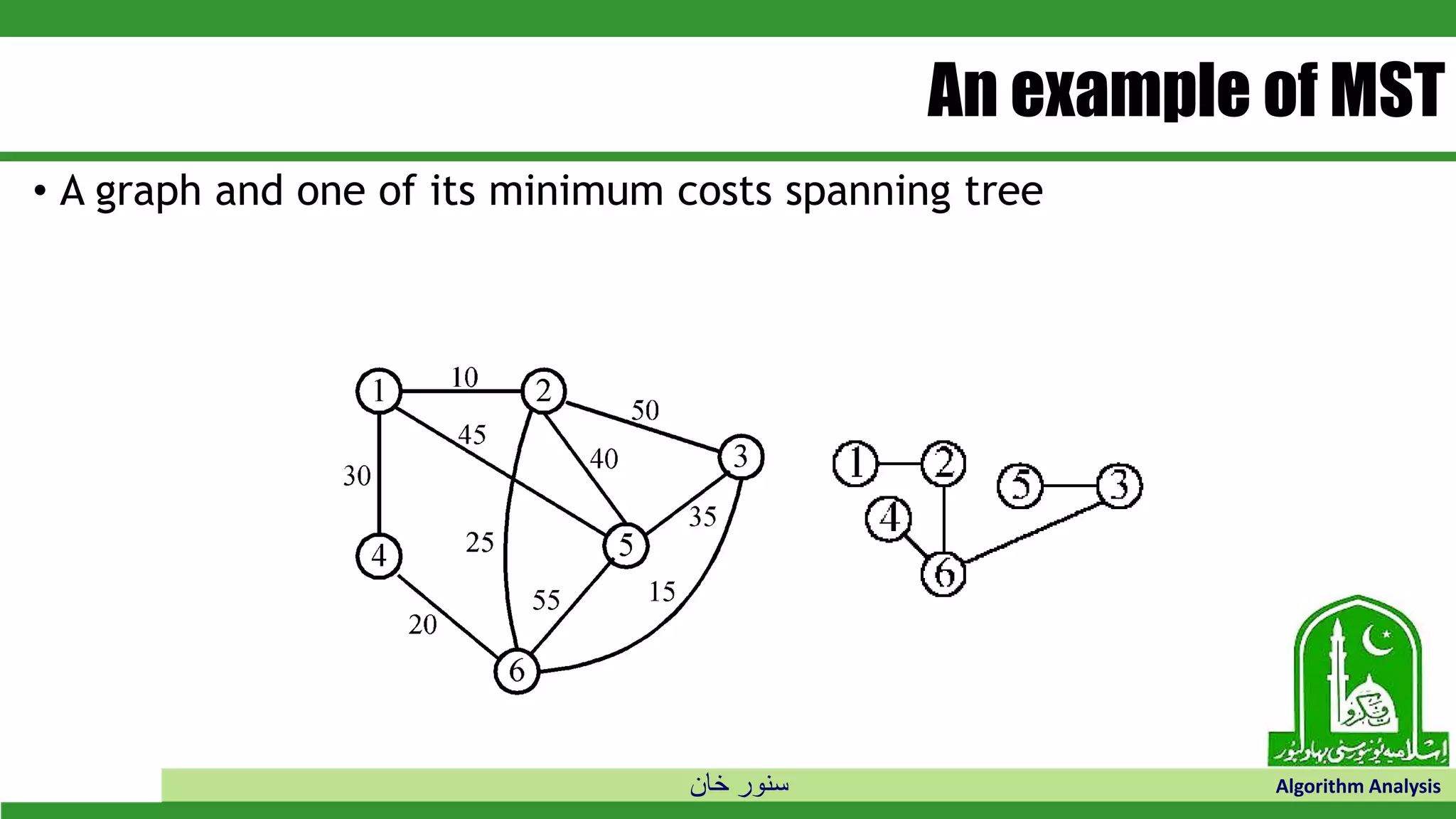

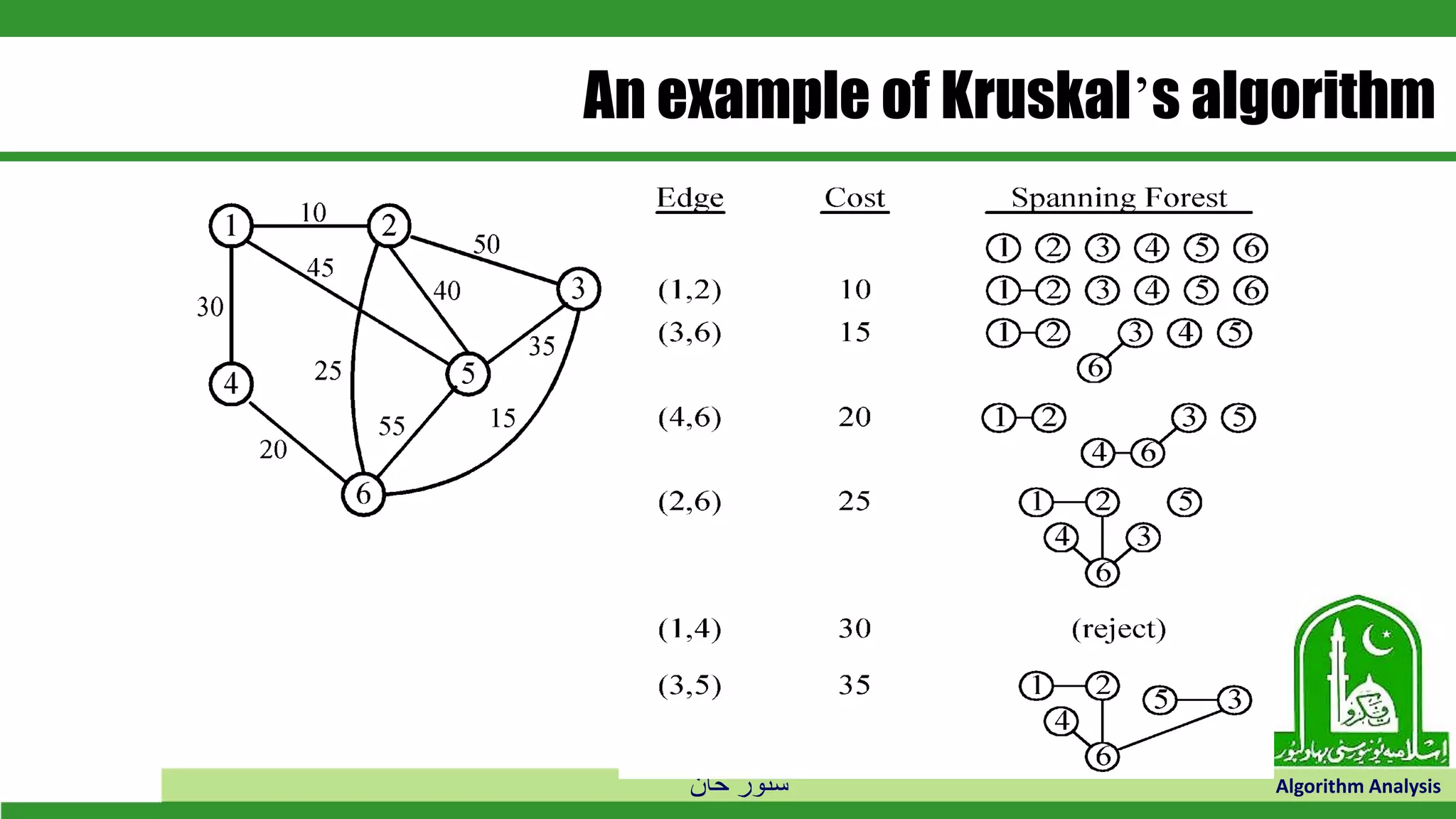

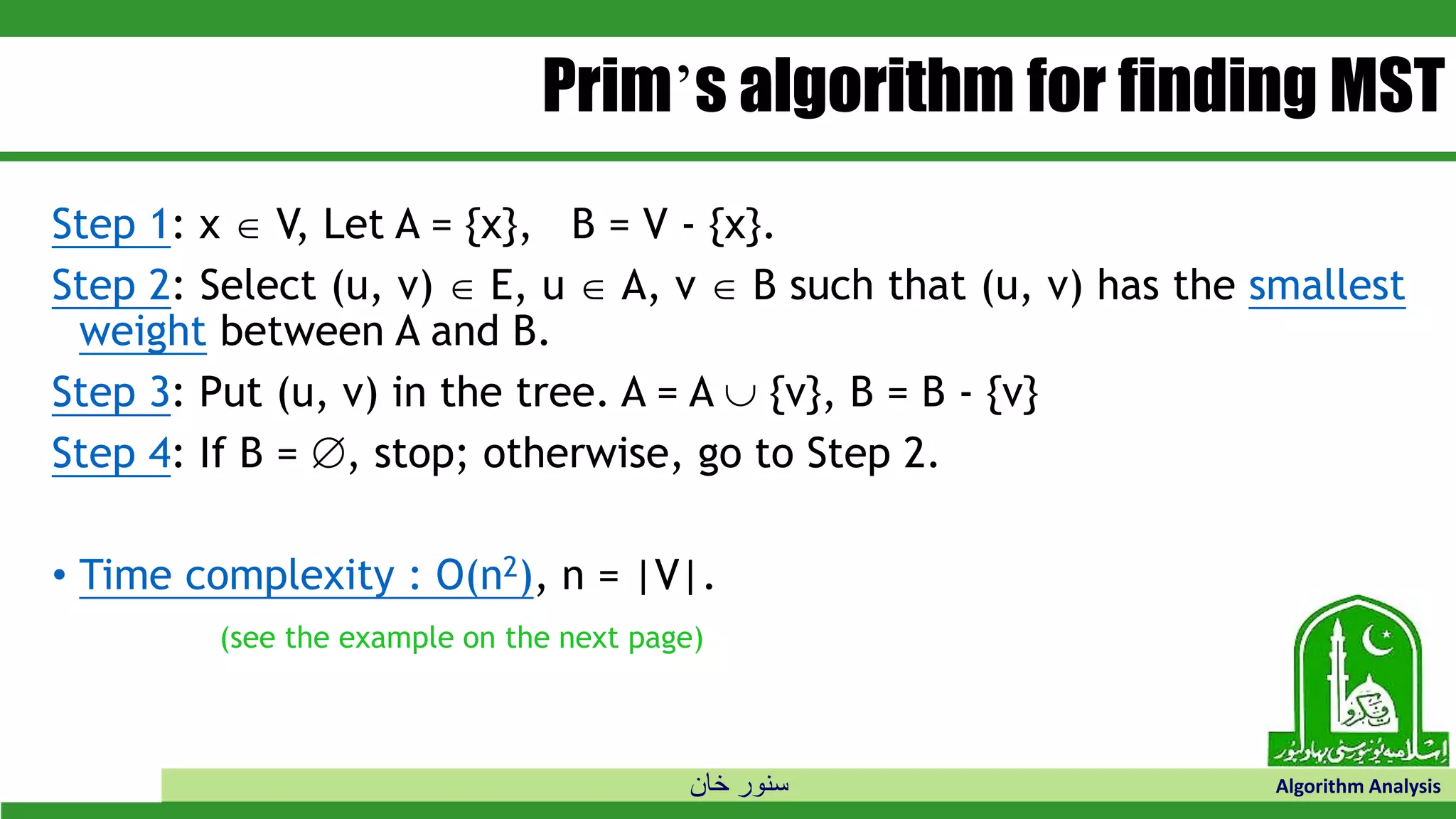

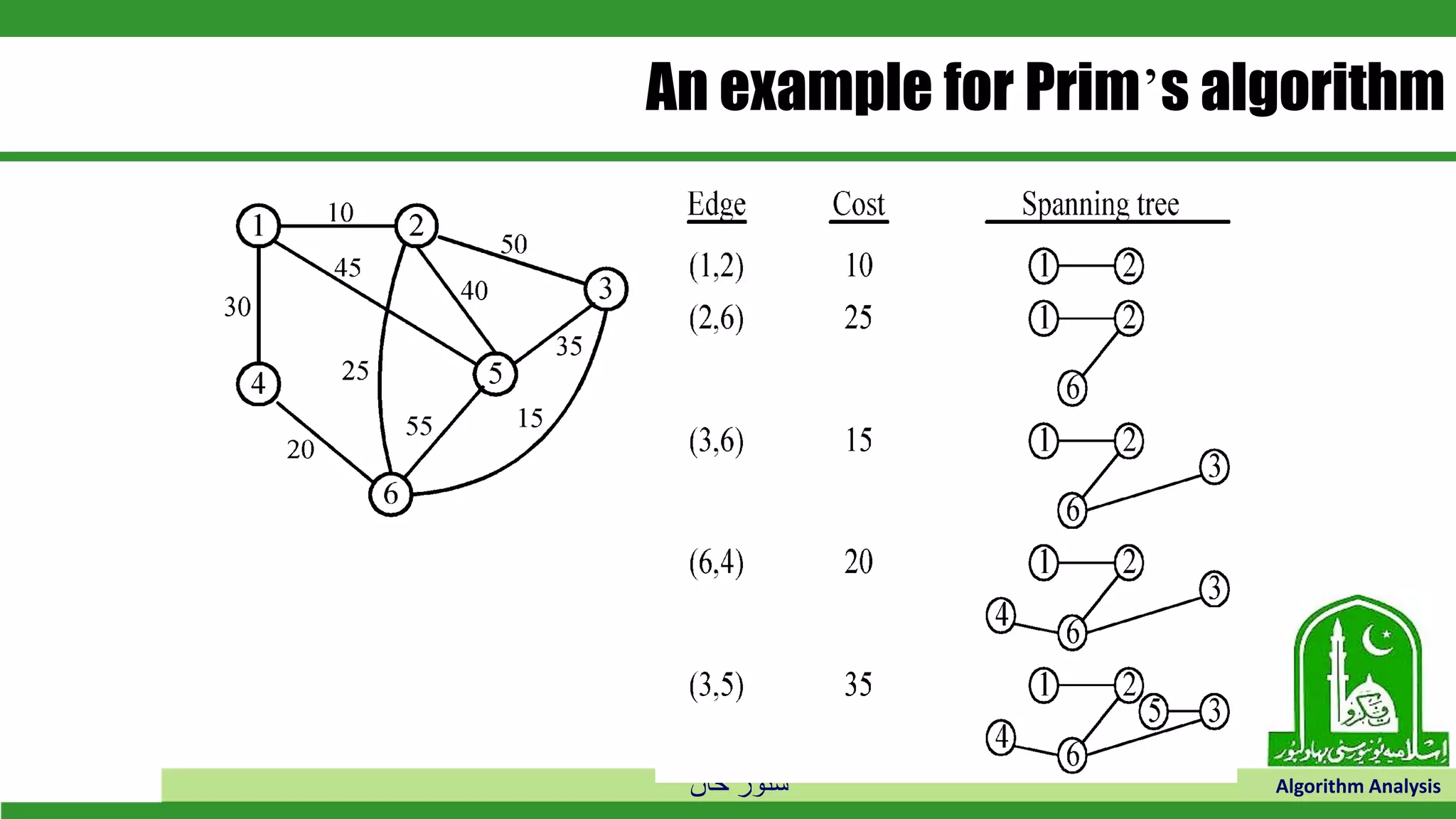

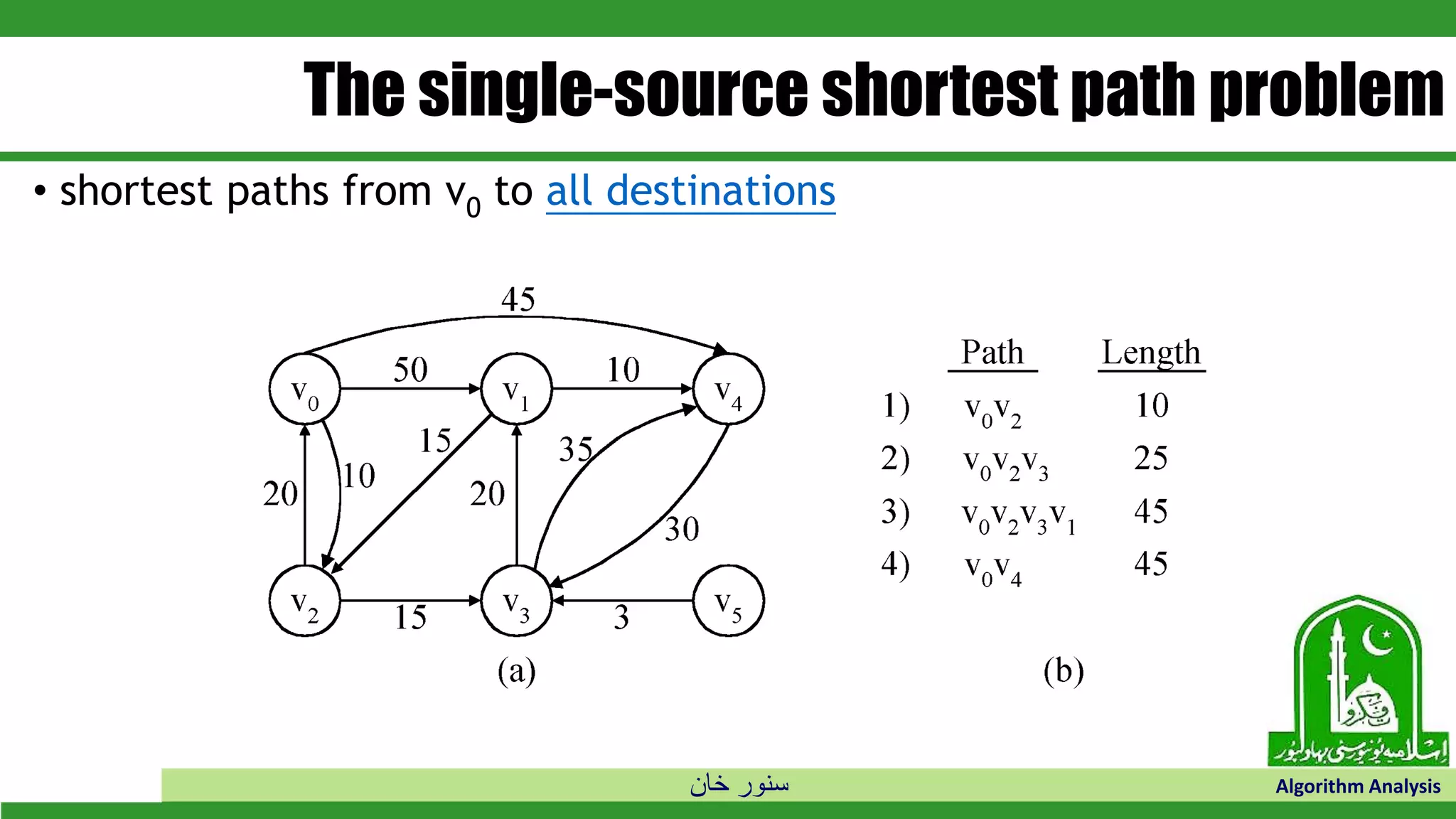

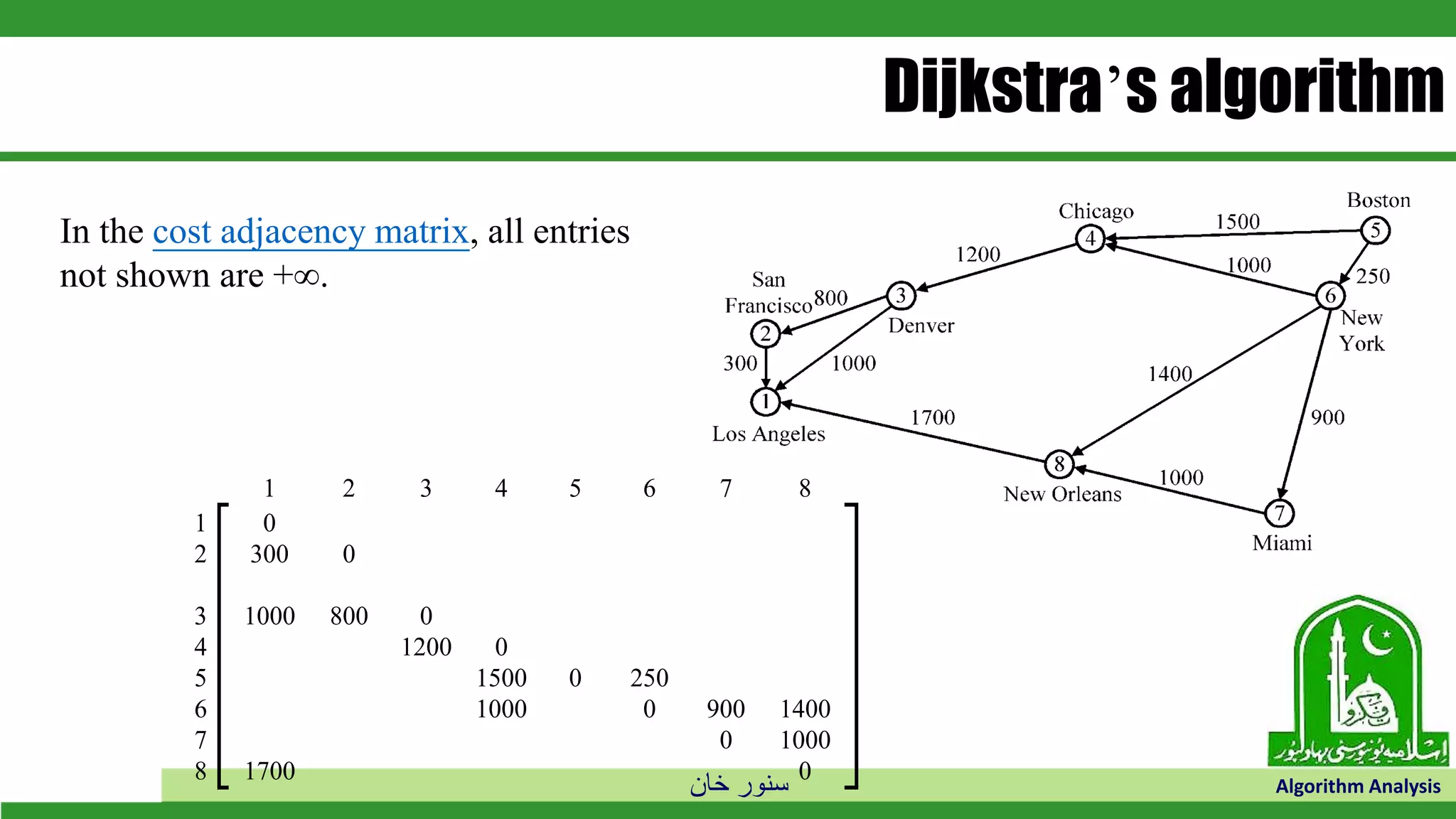

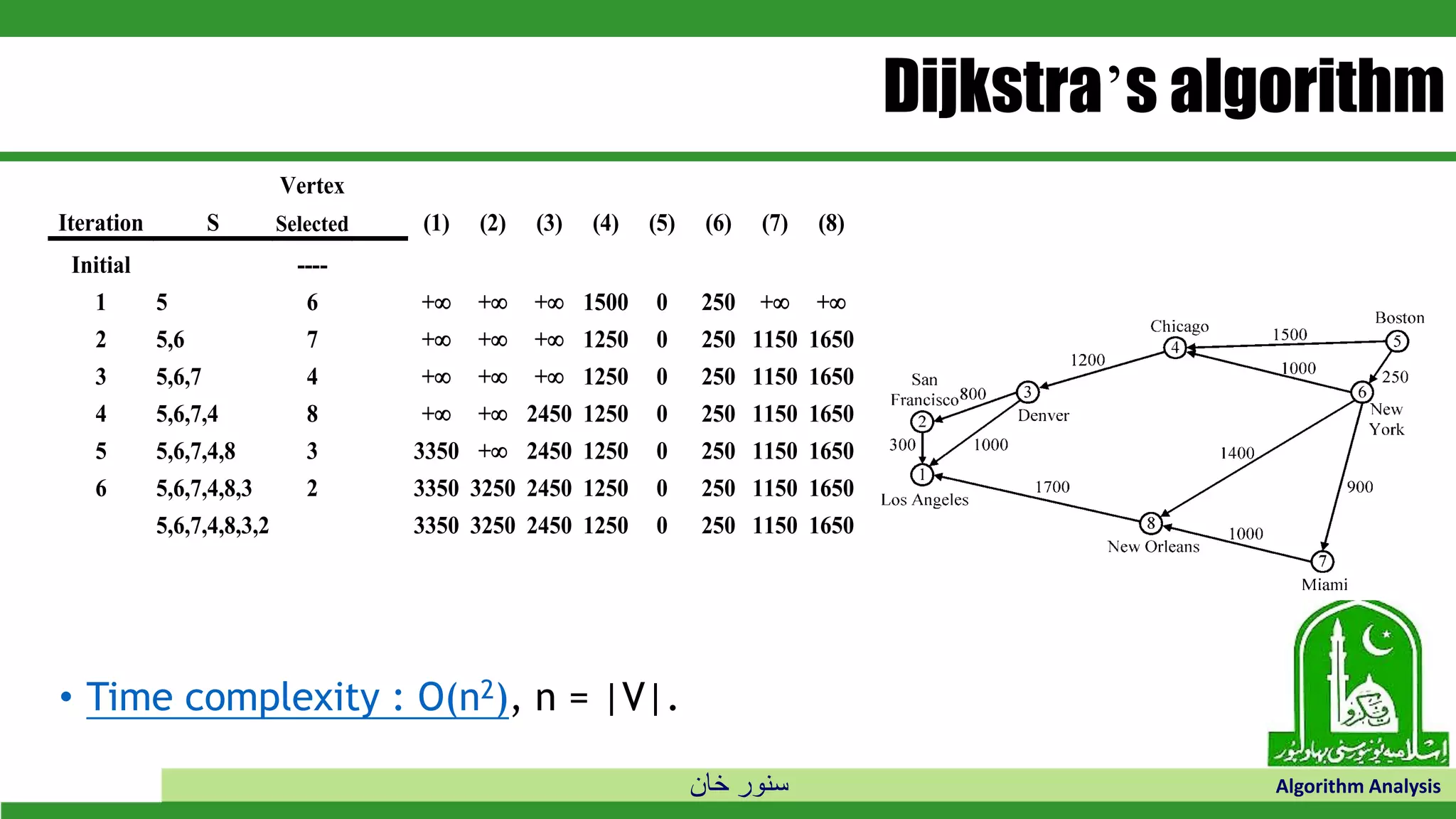

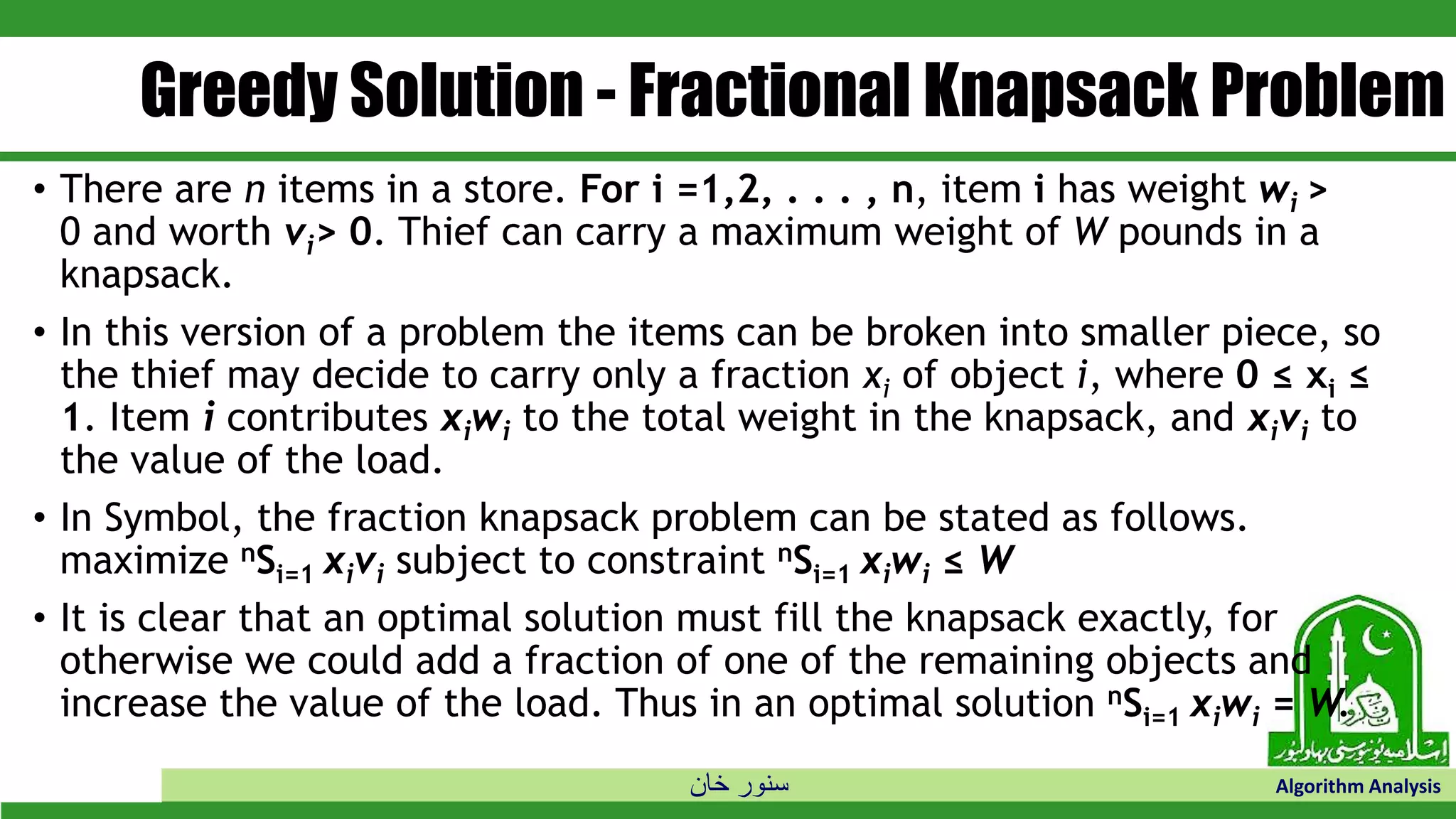

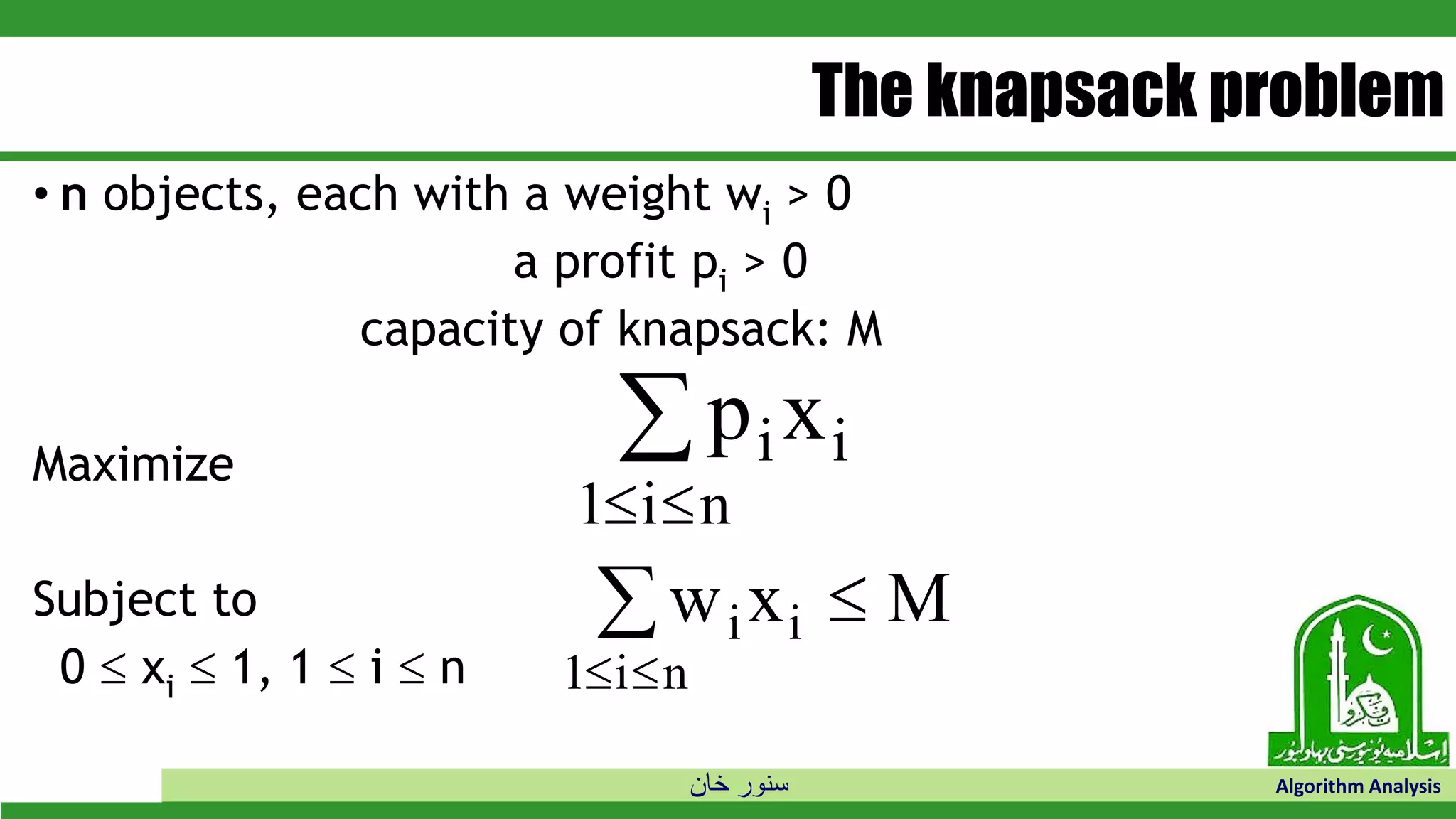

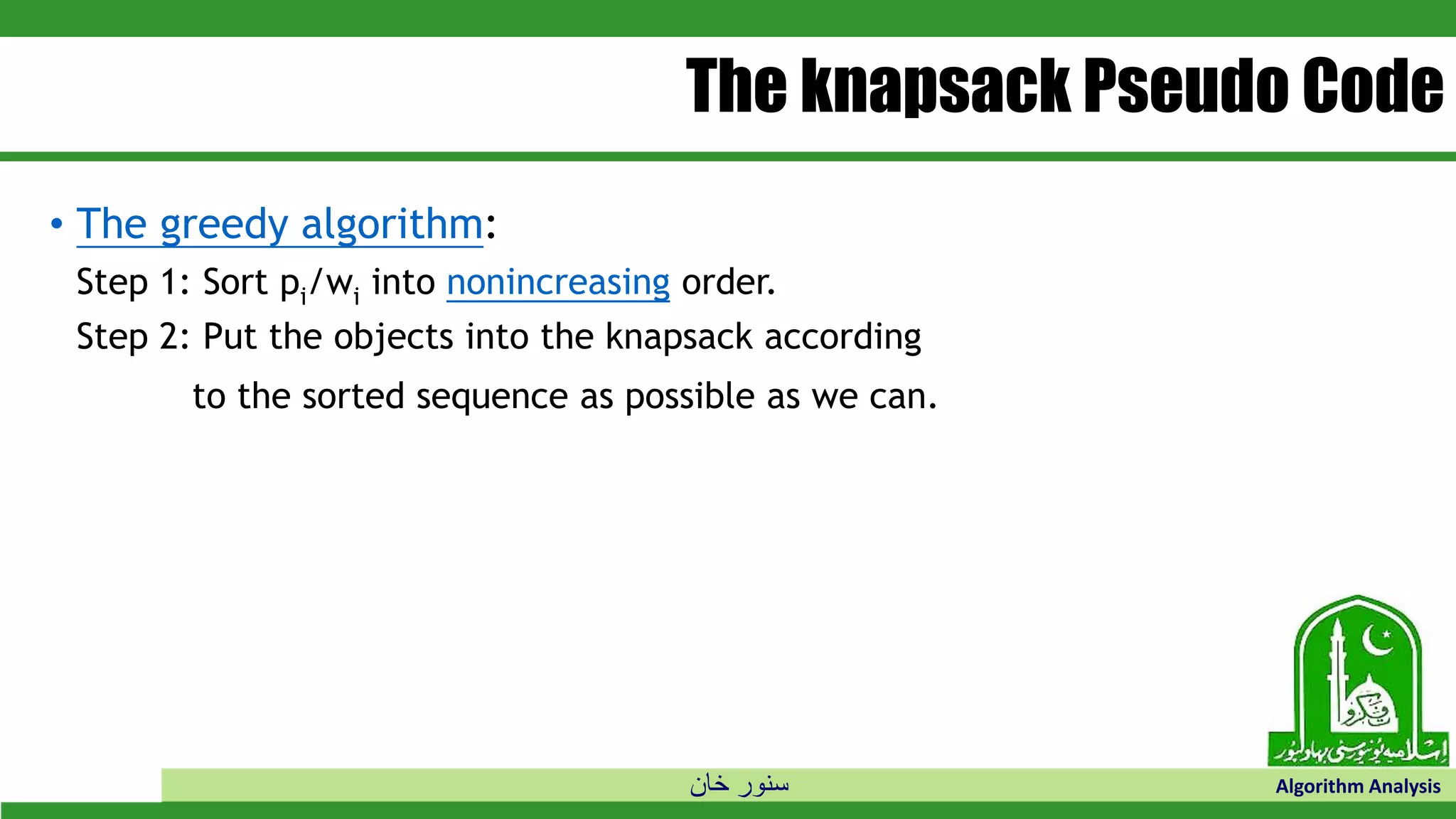

The document discusses greedy algorithms and how they apply to optimization problems. It gives examples of problems that a greedy approach solves optimally, such as the fractional knapsack problem and making change with standard coin denominations. However, it notes that other problems, such as the 0-1 knapsack problem and shortest paths in multistage graphs, cannot be solved optimally by a greedy algorithm. The document also describes greedy algorithms for minimum spanning trees, single-source shortest paths, and the fractional knapsack problem.
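As a minimal sketch of the greedy choice for making change, assuming coin denominations are given as a list of positive integers (the function names `greedy_change` and `optimal_change_count` are hypothetical): the greedy rule "always take the largest coin that still fits" is optimal for canonical systems such as US coins, but a non-canonical set such as {1, 3, 4} shows it can fail. A small dynamic-programming count is included only for comparison.

```python
def greedy_change(amount, coins):
    """Greedy change-making: repeatedly take the largest coin that still fits."""
    result = []
    for coin in sorted(coins, reverse=True):
        count, amount = divmod(amount, coin)  # how many of this coin fit
        result.extend([coin] * count)
    if amount != 0:
        raise ValueError("cannot make exact change with these coins")
    return result


def optimal_change_count(amount, coins):
    """Dynamic-programming minimum coin count, used here only as a reference."""
    INF = float("inf")
    best = [0] + [INF] * amount  # best[a] = fewest coins summing to a
    for a in range(1, amount + 1):
        for coin in coins:
            if coin <= a and best[a - coin] + 1 < best[a]:
                best[a] = best[a - coin] + 1
    return best[amount]


print(greedy_change(63, [1, 5, 10, 25]))  # [25, 25, 10, 1, 1, 1]
# Non-canonical coins: greedy uses 4 + 1 + 1 (3 coins), optimum is 3 + 3 (2 coins).
print(len(greedy_change(6, [1, 3, 4])), optimal_change_count(6, [1, 3, 4]))  # 3 2
```

The gap between the two results on {1, 3, 4} is the same kind of failure the document describes for 0-1 knapsack: a locally best choice can rule out the globally best solution.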

![خان سنور Algorithm Analysis. Algorithm Greedy-fractional-knapsack (w, v, W): FOR i = 1 to n do x[i] = 0; weight = 0; while weight < W do i = best remaining item; IF weight + w[i] ≤ W then x[i] = 1, weight = weight + w[i]; else x[i] = (W - weight) / w[i], weight = W; return x](https://image.slidesharecdn.com/greedyalgorithm-190528012359/75/Greedy-algorithm-35-2048.jpg)
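The Greedy-fractional-knapsack pseudocode on the slide can be sketched in Python as follows. This assumes all weights are positive and interprets "best remaining item" as the item with the largest value-to-weight ratio `v[i] / w[i]`, the usual greedy choice for fractional knapsack, although the slide does not spell it out.

```python
def greedy_fractional_knapsack(w, v, W):
    """Return x, where x[i] is the fraction (0..1) of item i put in the knapsack.

    Assumes every weight w[i] is positive.
    """
    n = len(w)
    x = [0.0] * n
    weight = 0.0
    # "Best remaining item" = highest value-to-weight ratio, so visit items in that order.
    order = sorted(range(n), key=lambda i: v[i] / w[i], reverse=True)
    for i in order:
        if weight >= W:
            break  # knapsack is full
        if weight + w[i] <= W:
            x[i] = 1.0  # the whole item fits
            weight += w[i]
        else:
            x[i] = (W - weight) / w[i]  # take only the fraction that fits
            weight = W
    return x


w, v = [10, 20, 30], [60, 100, 120]
x = greedy_fractional_knapsack(w, v, 50)
print(x)  # items 0 and 1 whole, two thirds of item 2
print(sum(xi * vi for xi, vi in zip(x, v)))  # total value 240 (up to float rounding)
```

Iterating over a pre-sorted order costs O(n log n) and also ends the loop once every item has been taken, a case the slide's `while weight < W` loop does not handle when the total weight of all items is below W.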