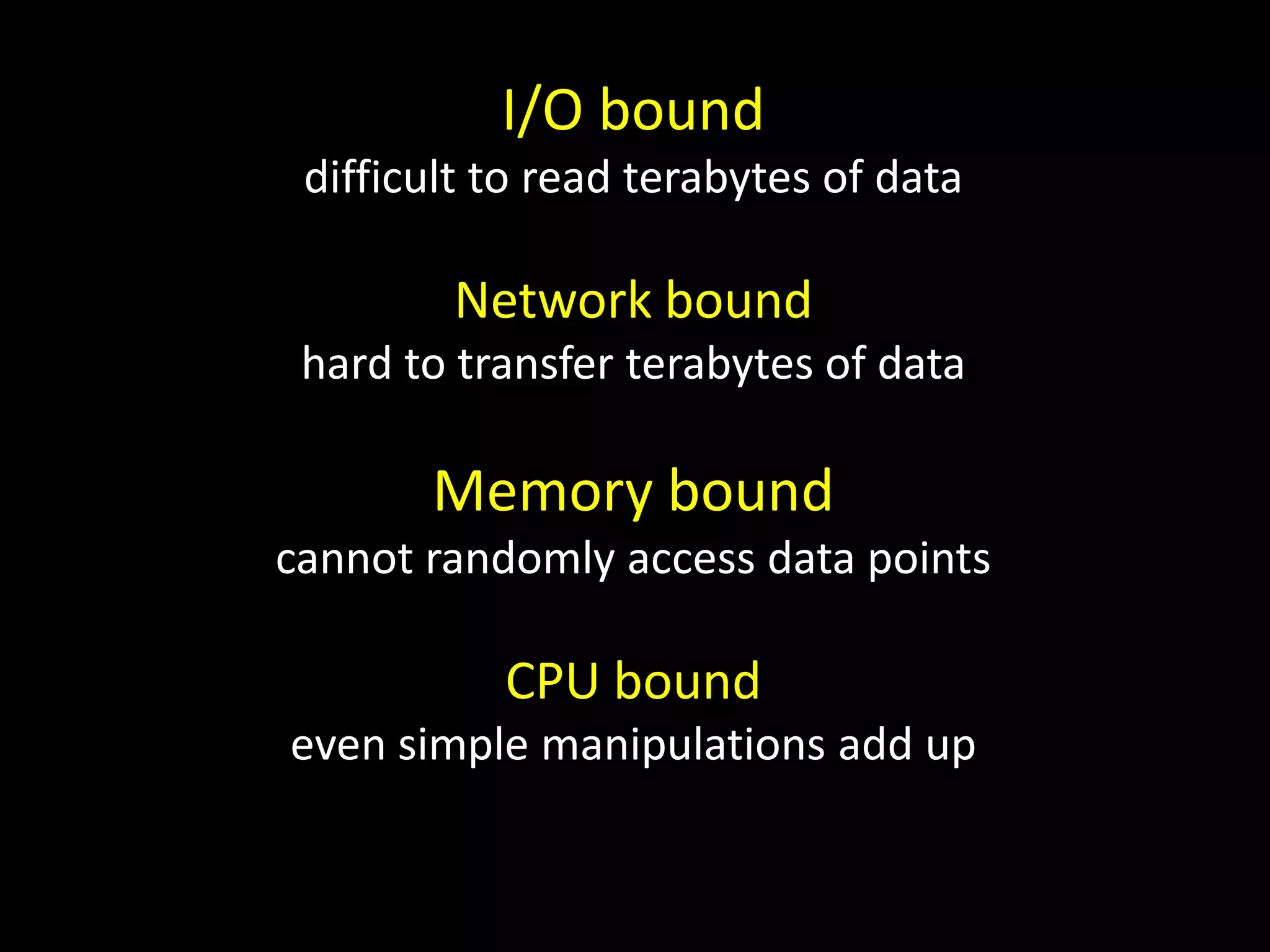

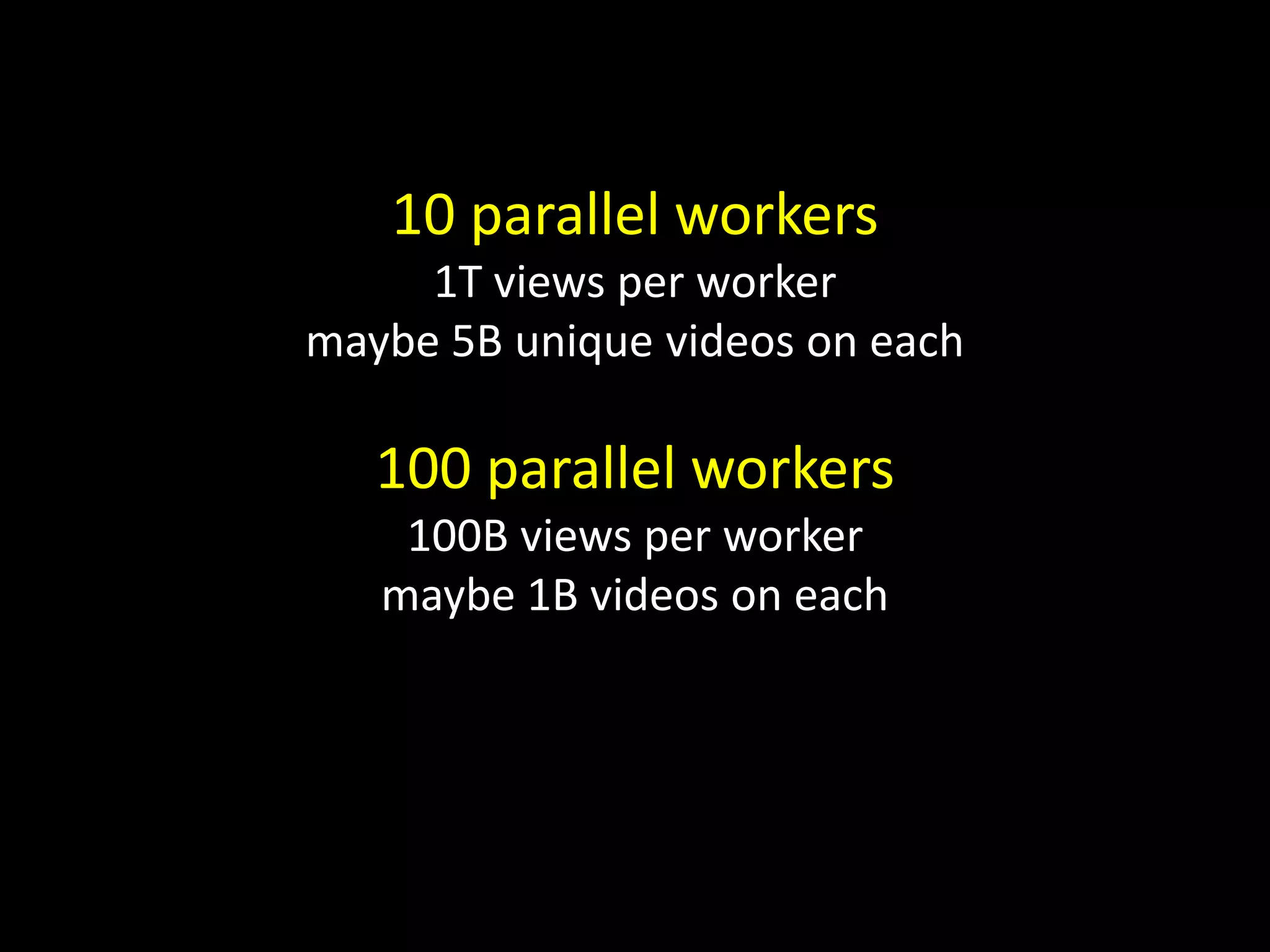

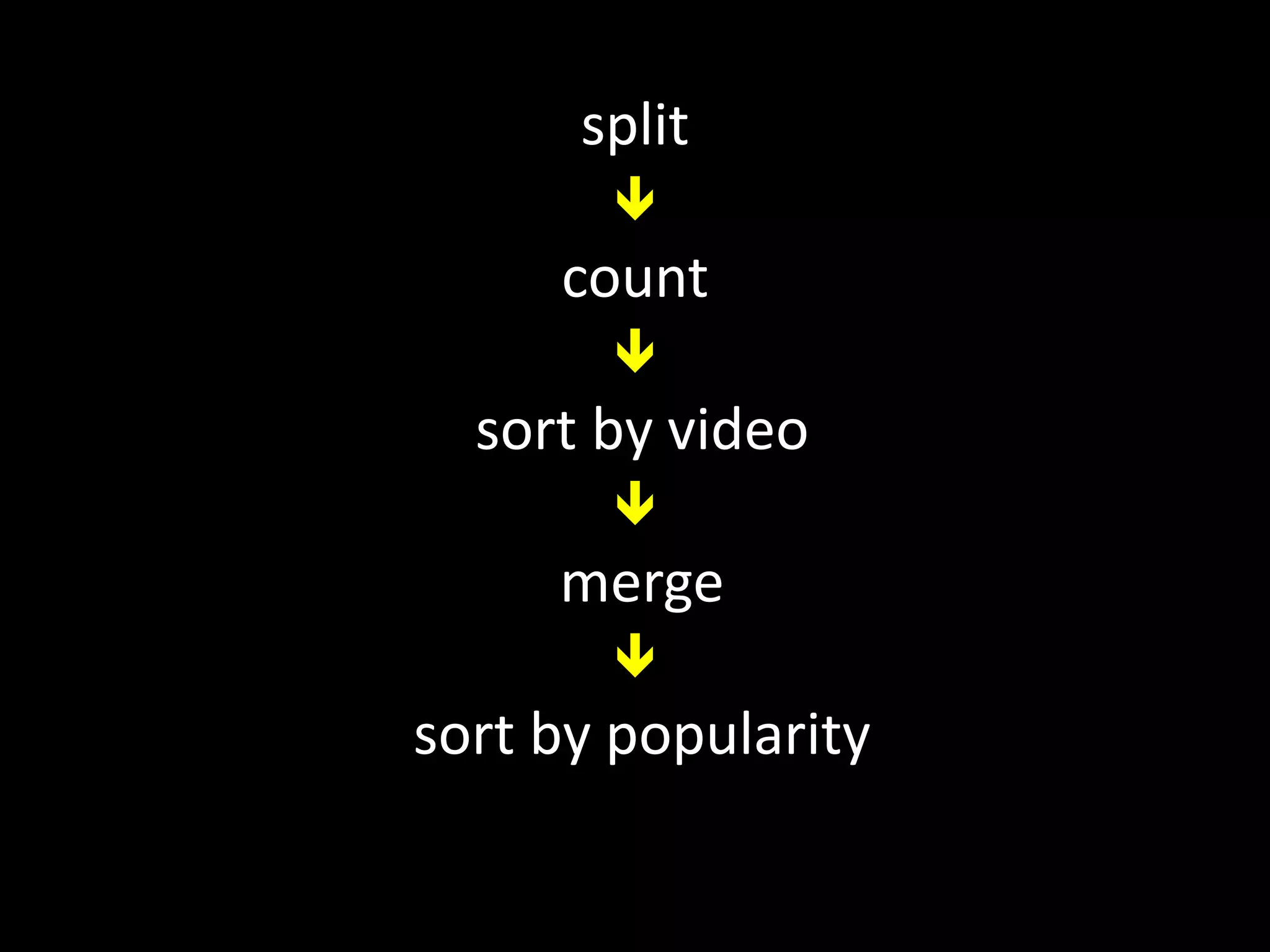

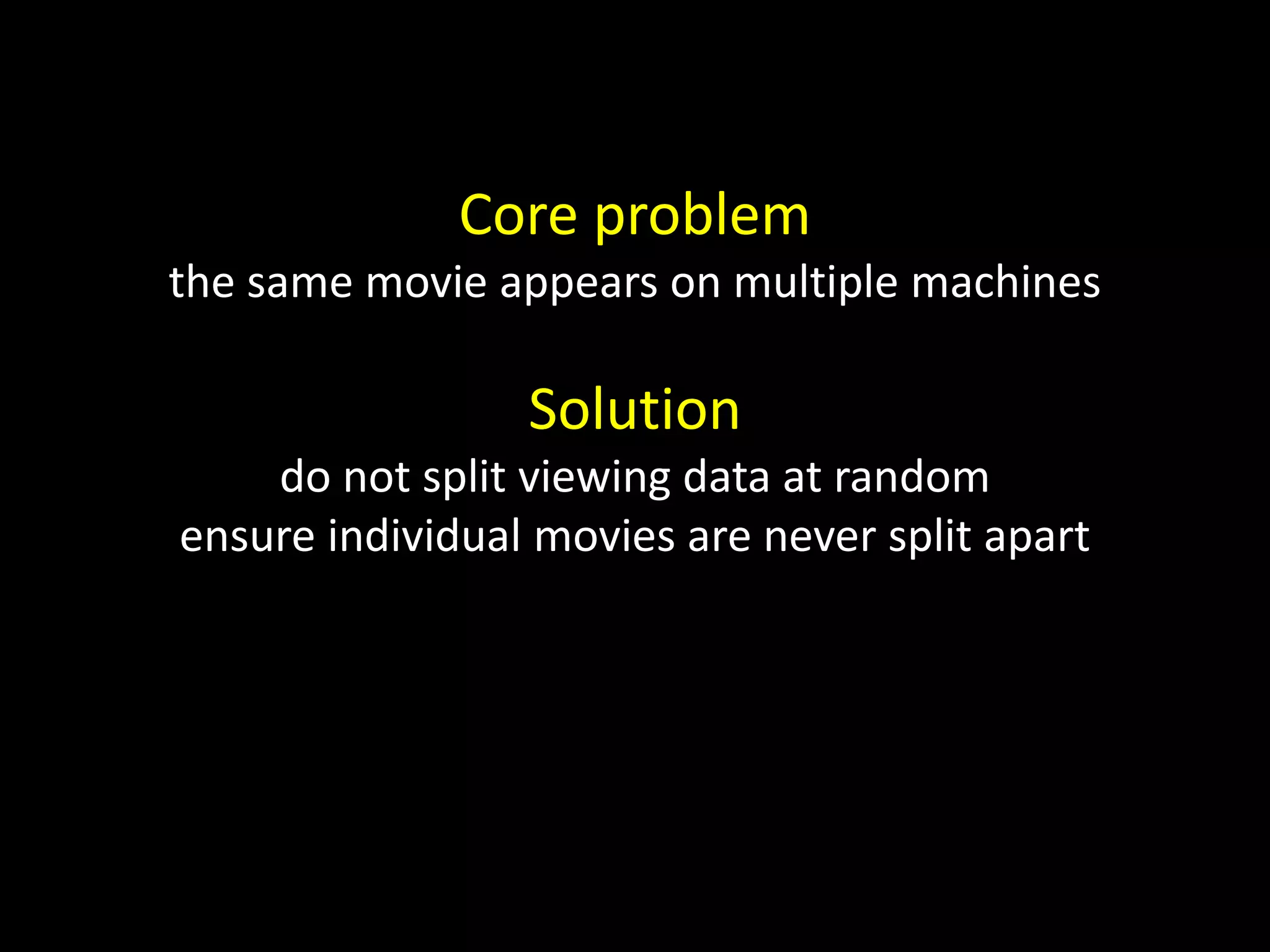

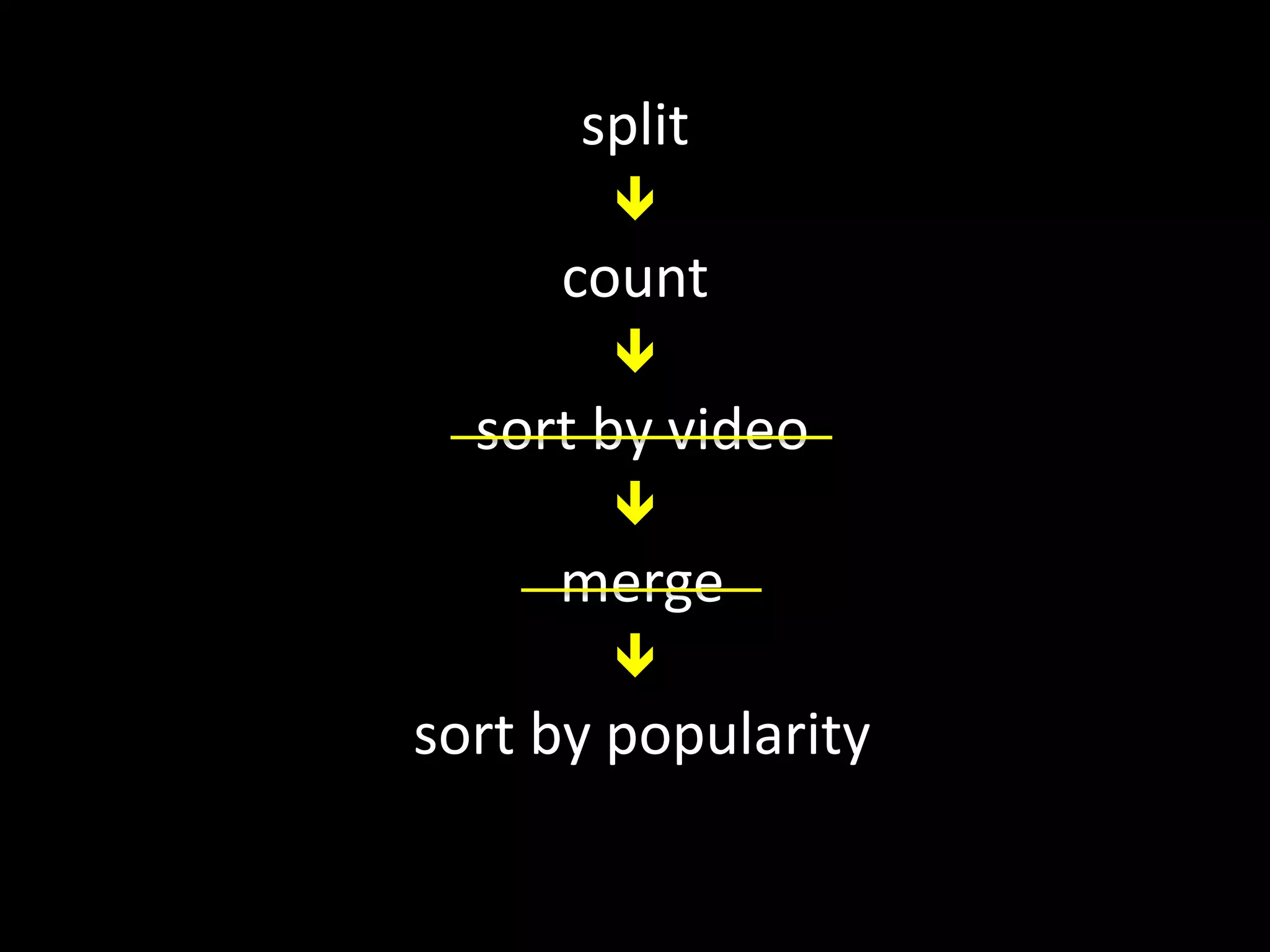

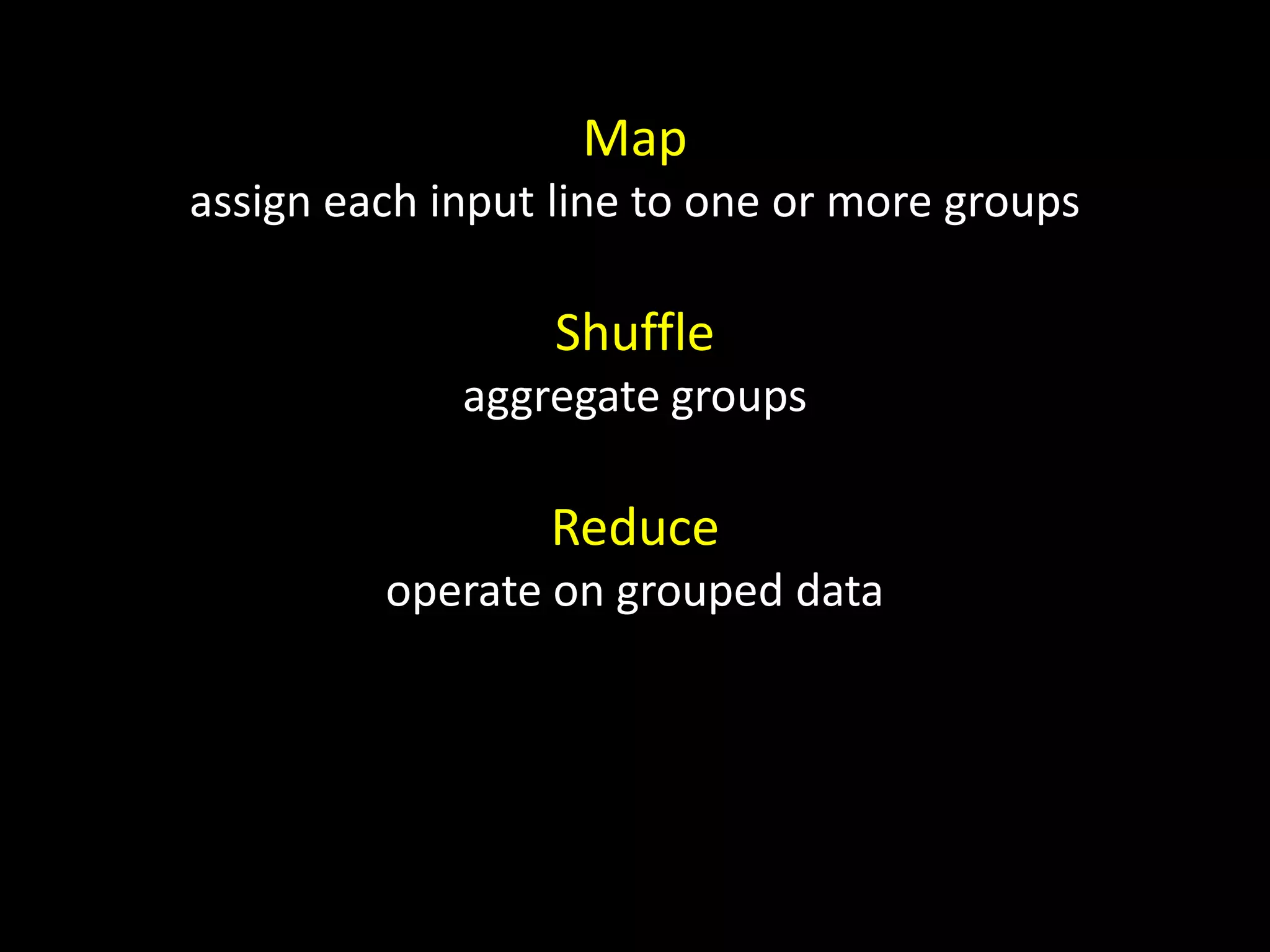

This document discusses techniques for counting and analyzing large datasets at scale. It introduces the bottlenecks that arise when dealing with terabytes of data: a job can be I/O bound, network bound, memory bound, or CPU bound. MapReduce is presented as a framework that distributes work across multiple machines in three steps: map each input record to key-value pairs, shuffle the pairs so that all values sharing a key are grouped together, and reduce each group to its output. The approach rests on the insight that many tasks are easier when performed on grouped data.

![Map: assign each input line to one or more groups, v → [(k1, v1), …, (km, vm)]. Shuffle: aggregate groups. Reduce: operate on grouped data, (k, [v1, …, vn]) → [w1, …, wp].](https://image.slidesharecdn.com/mapreduce1-130218190738-phpapp01/75/Computational-Social-Science-Lecture-03-Counting-at-Scale-Part-I-22-2048.jpg)
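
The three steps on the slide can be sketched in a few lines of Python. This is a minimal single-process illustration, not a distributed implementation: word counting is an assumed example task, and the function names (`map_line`, `shuffle`, `reduce_group`, `map_reduce`) are hypothetical, chosen only to mirror the map, shuffle, and reduce signatures shown above.

```python
from collections import defaultdict
from itertools import chain


def map_line(line):
    """Map: turn one input line into a list of (key, value) pairs.

    Here each word becomes a key with the value 1 (assumed example task).
    """
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: collect all values that share a key into one list."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_group(key, values):
    """Reduce: turn one group (k, [v1, ..., vn]) into a list of outputs."""
    return [(key, sum(values))]


def map_reduce(lines):
    """Run map, shuffle, and reduce in sequence on an iterable of lines."""
    mapped = chain.from_iterable(map_line(line) for line in lines)
    grouped = shuffle(mapped)
    reduced = chain.from_iterable(
        reduce_group(key, values) for key, values in grouped.items()
    )
    return dict(reduced)


counts = map_reduce(["the quick brown fox", "the lazy dog", "The end"])
print(counts)
```

In a real cluster the map and reduce calls would run on different machines and the shuffle would move data over the network; the sketch keeps only the data flow. Because each group is reduced independently of the others, the reduce step is the part that parallelizes naturally by key.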