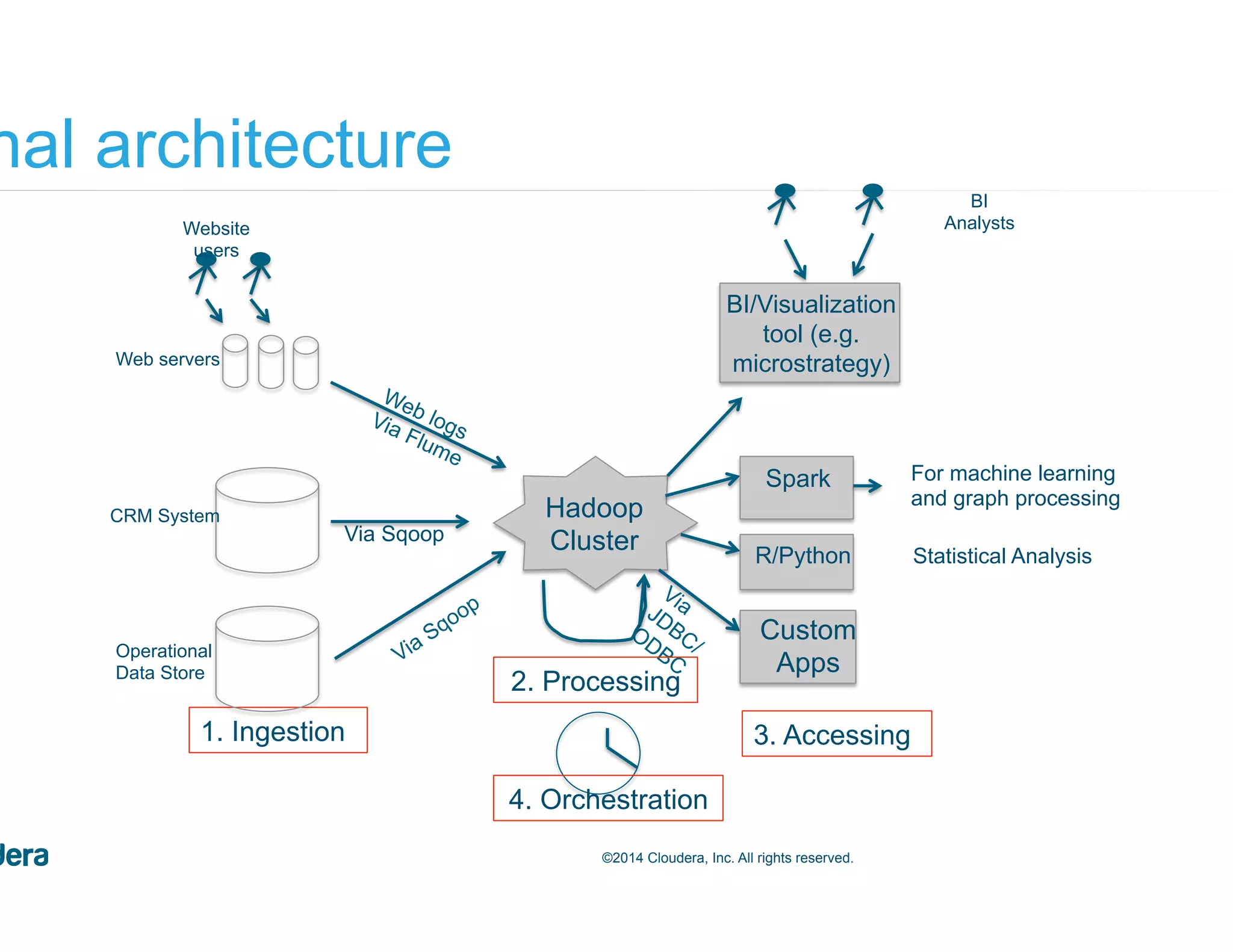

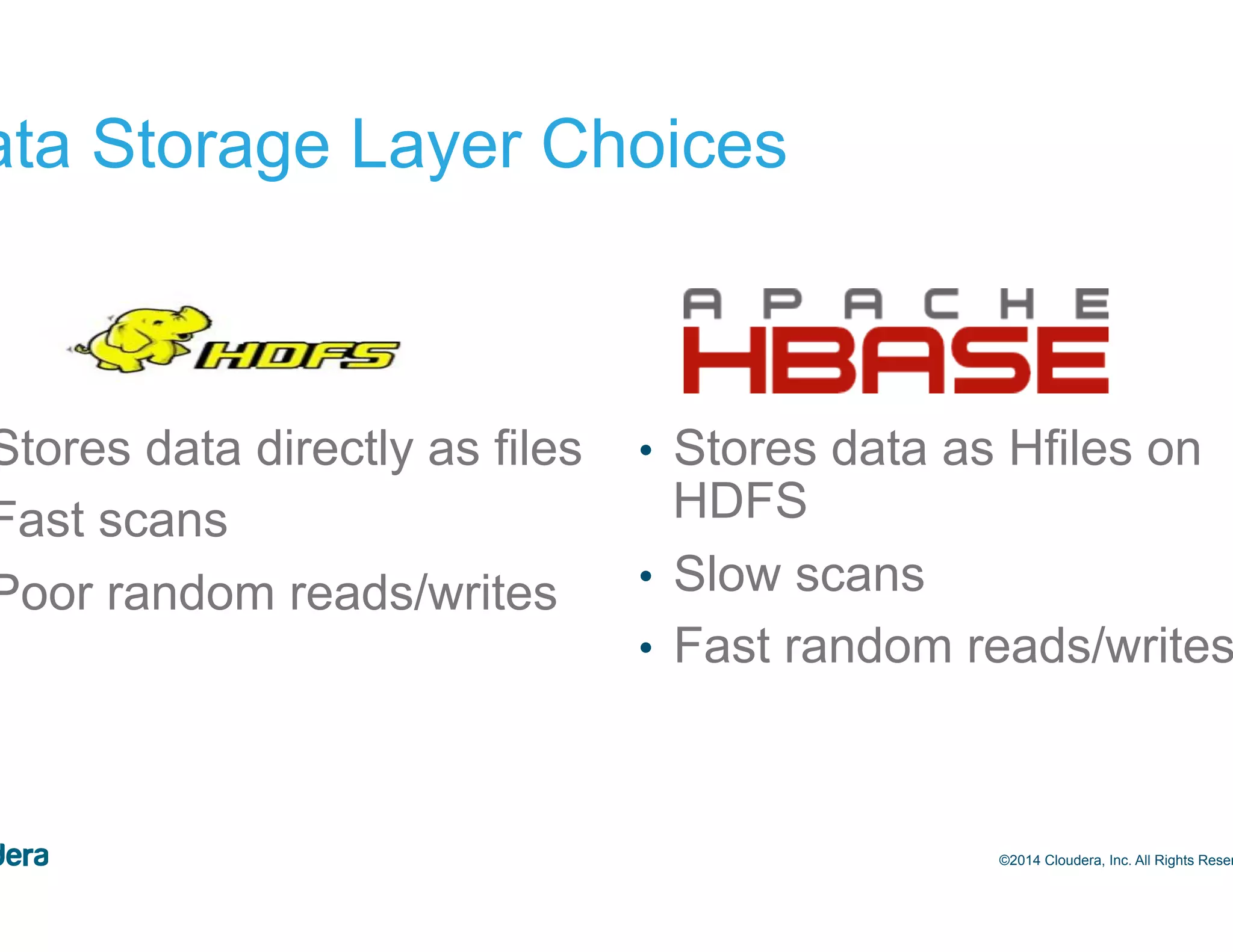

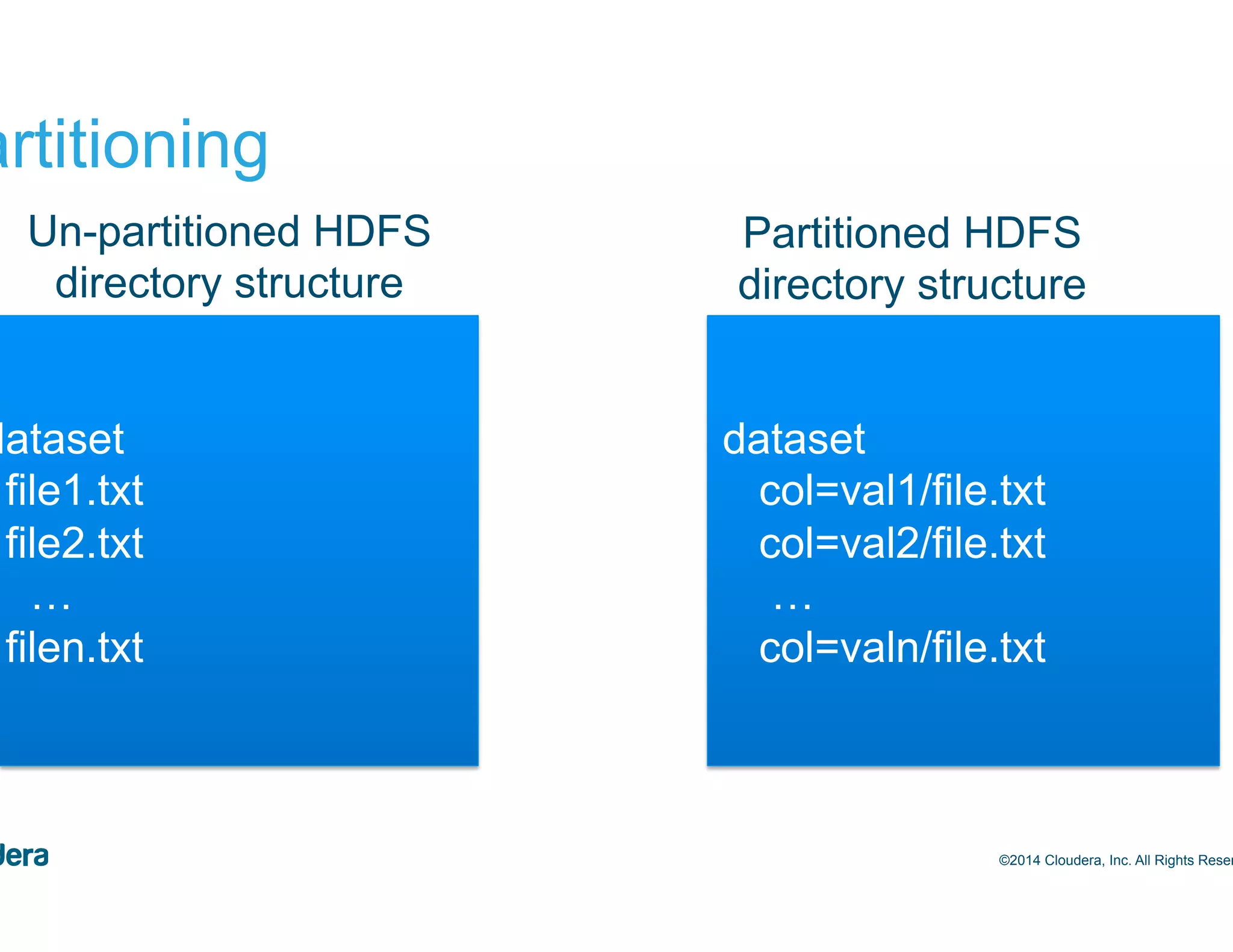

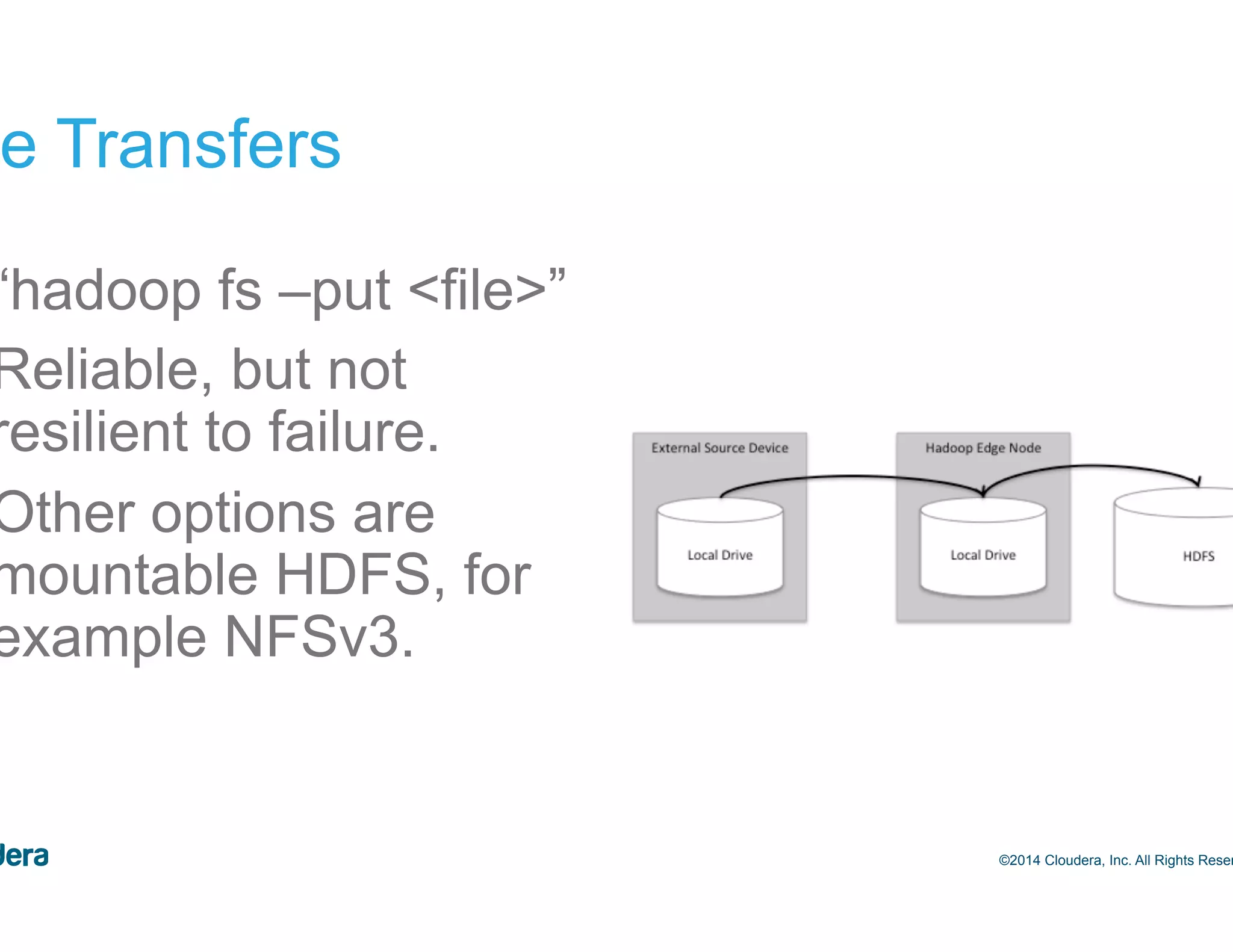

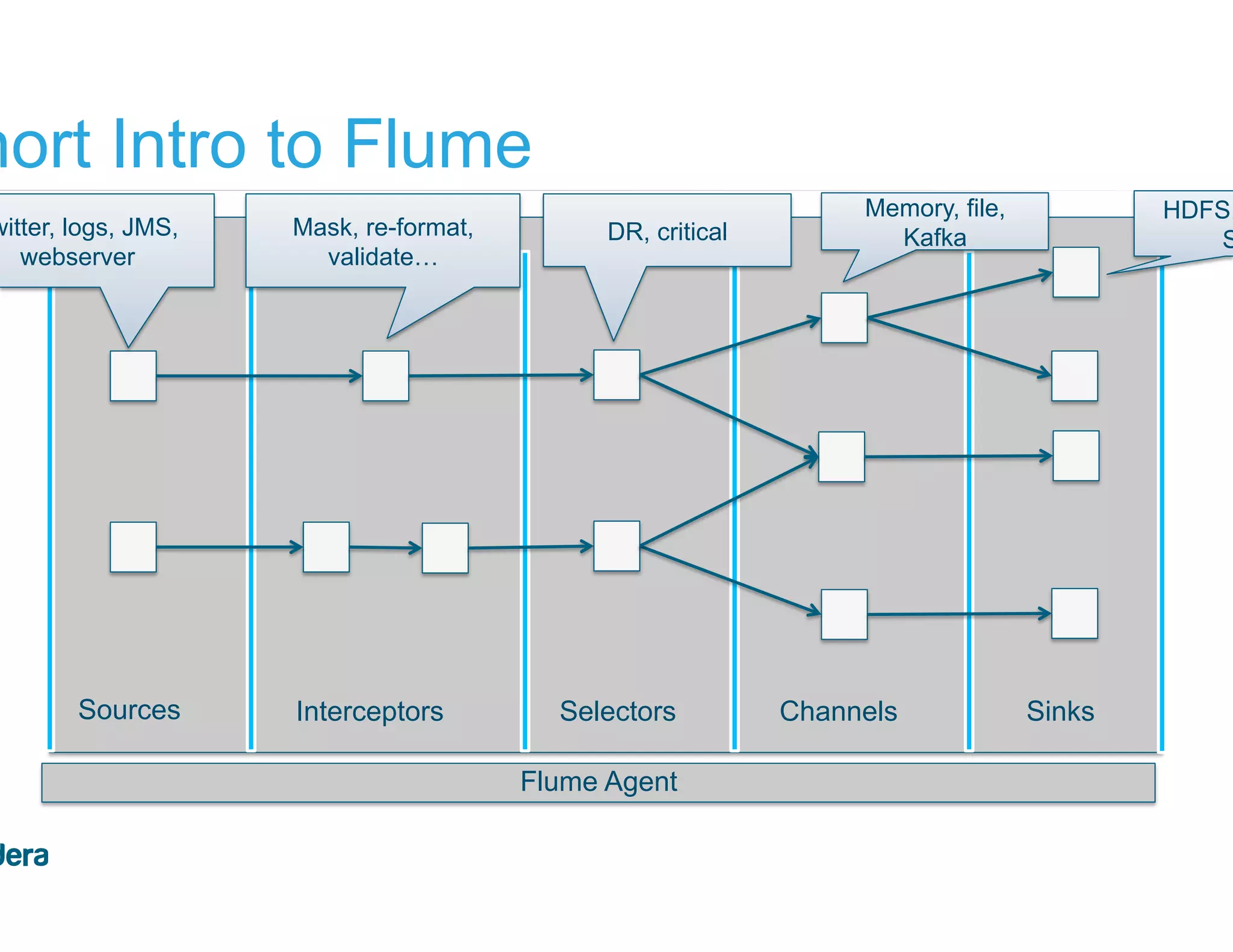

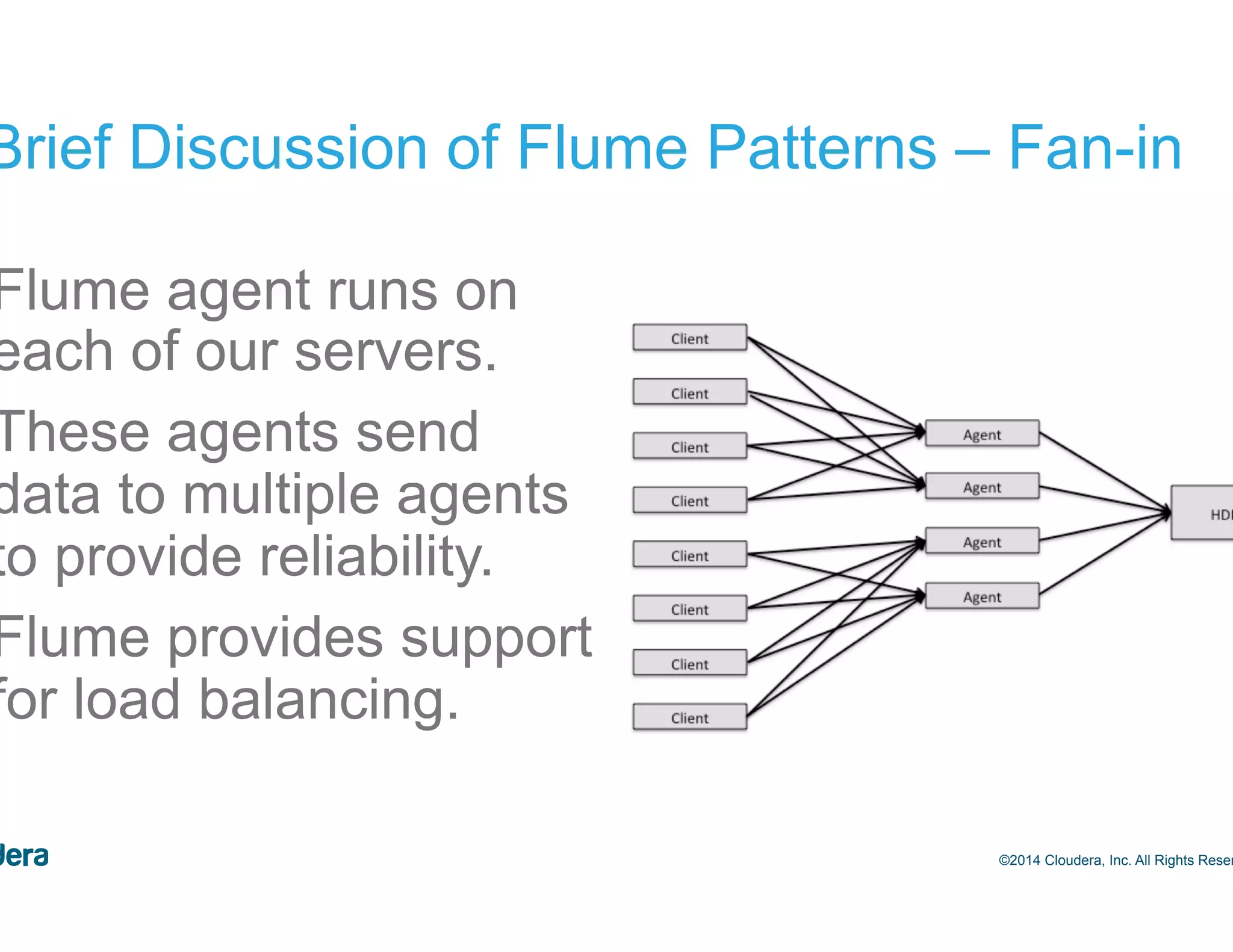

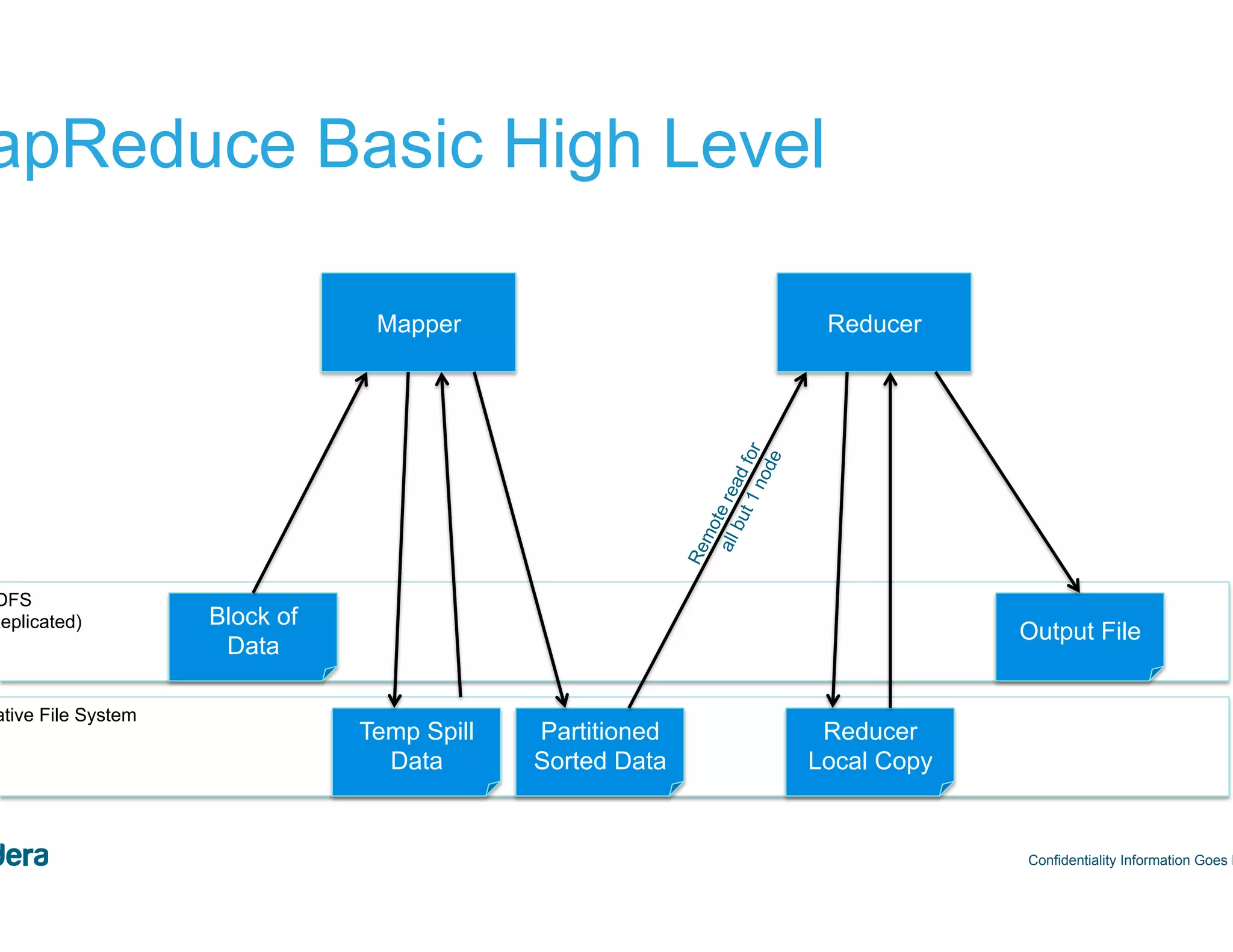

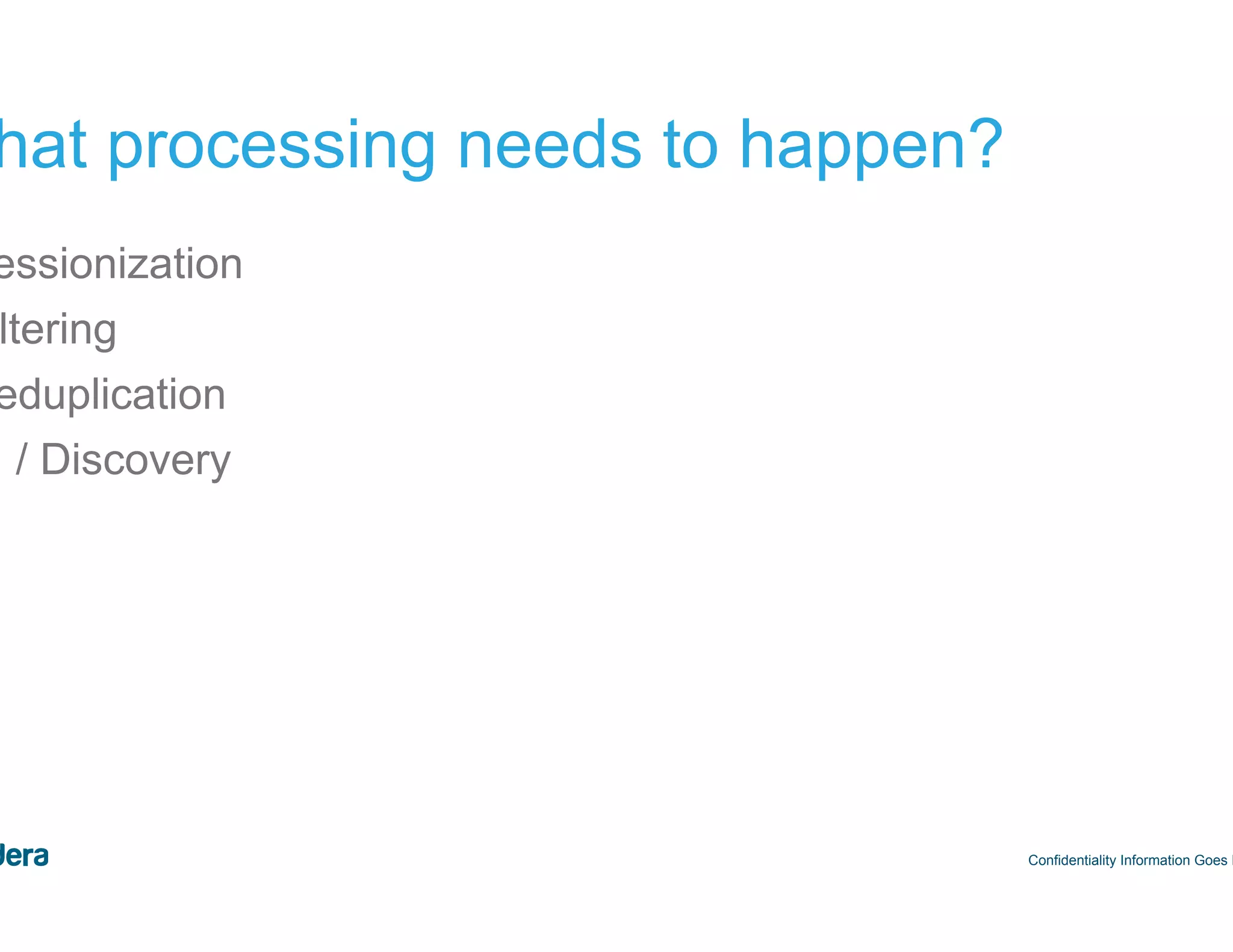

This document discusses architectural considerations for analyzing clickstream data with Hadoop. It covers the choice of storage layer (HDFS versus HBase), data formats such as Avro and Parquet, partitioning strategies, and data ingestion with tools such as Flume and Kafka. It also discusses processing engines such as MapReduce, Spark, and Impala, and how they can be used to sessionize data and perform other analytics.

![7 Web Logs – Combined Log Format ©2014 Cloudera, Inc. All Rights Reserved. 244.157.45.12 - - [17/Oct/2014:21:08:30 ] "GET /seatposts HTTP/1.0" 200 4463 "http://bestcyclingreviews.com/top_online_shops" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/36.0.1944.0 Safari/537.36" 244.157.45.12 - - [17/Oct/2014:21:59:59 ] "GET /Store/cart.jsp?productID=1023 HTTP/1.0" 200 3757 "http://www.casualcyclist.com" "Mozilla/5.0 (Linux; U; Android 2.3.5; en-us; HTC Vision Build/GRI40) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1"](https://image.slidesharecdn.com/applicationarchitectureswithhadoop-londonhug-141117081334-conversion-gate02/75/Application-Architectures-with-Hadoop-UK-Hadoop-User-Group-7-2048.jpg)
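The two records on this slide can be split into named fields with a regular expression. The following is a minimal sketch, not the deck's own code: the field names and the `parse_line` helper are assumptions made for illustration. The slide's timestamps carry no timezone offset, so the sketch parses only the date and time portion.

```python
import re
from datetime import datetime

# Combined Log Format:
# host ident authuser [timestamp] "request" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<ts>[^\]]+)\] '
    r'"(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return a dict of fields, or None if the line does not match (hypothetical helper)."""
    match = LOG_PATTERN.match(line.strip())
    if match is None:
        return None
    rec = match.groupdict()
    # Keep only the date/time token; any timezone offset after it is ignored here.
    rec["ts"] = datetime.strptime(rec["ts"].split()[0], "%d/%b/%Y:%H:%M:%S")
    rec["status"] = int(rec["status"])
    rec["size"] = None if rec["size"] == "-" else int(rec["size"])
    parts = rec["request"].split()
    rec["method"], rec["path"], rec["protocol"] = (
        parts if len(parts) == 3 else (None, None, None)
    )
    return rec


sample = (
    '244.157.45.12 - - [17/Oct/2014:21:08:30 ] "GET /seatposts HTTP/1.0" 200 4463 '
    '"http://bestcyclingreviews.com/top_online_shops" '
    '"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/36.0.1944.0 Safari/537.36"'
)
record = parse_line(sample)
print(record["ip"], record["ts"], record["path"], record["status"])
```

Returning `None` for unparseable lines lets a later filtering step decide what to do with them instead of failing the whole job.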

![8 Clickstream Analytics ©2014 Cloudera, Inc. All Rights Reserved. 244.157.45.12 - - [17/Oct/2014:21:08:30 ] "GET /seatposts HTTP/1.0" 200 4463 "http://bestcyclingreviews.com/top_online_shops" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/36.0.1944.0 Safari/537.36"](https://image.slidesharecdn.com/applicationarchitectureswithhadoop-londonhug-141117081334-conversion-gate02/75/Application-Architectures-with-Hadoop-UK-Hadoop-User-Group-8-2048.jpg)

![48 #1 – Which clicks are from same user? ©2014 Cloudera, Inc. All Rights Reserved. 244.157.45.12 - - [17/Oct/2014:21:08:30 ] "GET /seatposts HTTP/1.0" 200 4463 "http://bestcyclingreviews.com/top_online_shops" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/36.0.1944.0 Safari/537.36" 244.157.45.12 - - [17/Oct/2014:21:59:59 ] "GET /Store/cart.jsp?productID=1023 HTTP/1.0" 200 3757 "http://www.casualcyclist.com" "Mozilla/5.0 (Linux; U; Android 2.3.5; en-us; HTC Vision Build/GRI40) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1"](https://image.slidesharecdn.com/applicationarchitectureswithhadoop-londonhug-141117081334-conversion-gate02/75/Application-Architectures-with-Hadoop-UK-Hadoop-User-Group-48-2048.jpg)

![49 #2 – Which clicks part of the same session? ©2014 Cloudera, Inc. All Rights Reserved. 244.157.45.12 - - [17/Oct/2014:21:08:30 ] "GET /seatposts HTTP/1.0" 200 4463 "http://bestcyclingreviews.com/top_online_shops" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/36.0.1944.0 Safari/537.36" 244.157.45.12 - - [17/Oct/2014:21:59:59 ] "GET /Store/cart.jsp?productID=1023 HTTP/1.0" 200 3757 "http://www.casualcyclist.com" "Mozilla/5.0 (Linux; U; Android 2.3.5; en-us; HTC Vision Build/GRI40) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1" > 30 mins apart = different sessions](https://image.slidesharecdn.com/applicationarchitectureswithhadoop-londonhug-141117081334-conversion-gate02/75/Application-Architectures-with-Hadoop-UK-Hadoop-User-Group-49-2048.jpg)
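The rule on this slide (clicks from the same user more than 30 minutes apart belong to different sessions) can be sketched in plain Python. This is an illustration under stated assumptions, not the deck's implementation: the IP address stands in for the user key, and the `sessionize` function and the session ID format are hypothetical. On a cluster, the same logic would run per user key inside MapReduce or Spark.

```python
from datetime import datetime, timedelta
from itertools import groupby
from operator import itemgetter

SESSION_TIMEOUT = timedelta(minutes=30)  # threshold taken from the slide


def sessionize(clicks):
    """Assign a session ID to each (user_key, timestamp) click.

    A new session starts whenever the gap since the same user's previous
    click exceeds SESSION_TIMEOUT.
    """
    result = []
    # Sort by user, then by time, so each user's clicks are contiguous and ordered.
    ordered = sorted(clicks, key=itemgetter(0, 1))
    for user, group in groupby(ordered, key=itemgetter(0)):
        session_no = 0
        prev_ts = None
        for _, ts in group:
            if prev_ts is not None and ts - prev_ts > SESSION_TIMEOUT:
                session_no += 1
            result.append((user, ts, f"{user}-{session_no}"))
            prev_ts = ts
    return result


# The two clicks from the slide, deliberately out of order.
clicks = [
    ("244.157.45.12", datetime(2014, 10, 17, 21, 59, 59)),
    ("244.157.45.12", datetime(2014, 10, 17, 21, 8, 30)),
]
sessions = sessionize(clicks)
for user, ts, session_id in sessions:
    print(user, ts, session_id)
```

The two clicks are 51 minutes and 29 seconds apart, so they receive different session IDs.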

![51 Filtering – filter out incomplete records ©2014 Cloudera, Inc. All Rights Reserved. 244.157.45.12 - - [17/Oct/2014:21:08:30 ] "GET /seatposts HTTP/1.0" 200 4463 "http://bestcyclingreviews.com/top_online_shops" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/36.0.1944.0 Safari/537.36" 244.157.45.12 - - [17/Oct/2014:21:59:59 ] "GET /Store/cart.jsp?productID=1023 HTTP/1.0" 200 3757 "http://www.casualcyclist.com" "Mozilla/5.0 (Linux; U…](https://image.slidesharecdn.com/applicationarchitectureswithhadoop-londonhug-141117081334-conversion-gate02/75/Application-Architectures-with-Hadoop-UK-Hadoop-User-Group-51-2048.jpg)

![52 Filtering – filter out records from bots/spiders ©2014 Cloudera, Inc. All Rights Reserved. 244.157.45.12 - - [17/Oct/2014:21:08:30 ] "GET /seatposts HTTP/1.0" 200 4463 "http://bestcyclingreviews.com/top_online_shops" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/36.0.1944.0 Safari/537.36" 209.85.238.11 - - [17/Oct/2014:21:59:59 ] "GET /Store/cart.jsp?productID=1023 HTTP/1.0" 200 3757 "http://www.casualcyclist.com" "Mozilla/5.0 (Linux; U; Android 2.3.5; en-us; HTC Vision Build/GRI40) AppleWebKit/533.1 (KHTML, like Gecko) Version/4.0 Mobile Safari/533.1" Google spider IP address](https://image.slidesharecdn.com/applicationarchitectureswithhadoop-londonhug-141117081334-conversion-gate02/75/Application-Architectures-with-Hadoop-UK-Hadoop-User-Group-52-2048.jpg)
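The two filtering slides (incomplete records, then bot and spider traffic) can be sketched as simple predicates over parsed records. Everything named here is an assumption for illustration: the record layout, the `REQUIRED_FIELDS` tuple, and the `KNOWN_BOT_IPS` set, which contains only the address the slide labels as a Google spider. A real deployment would maintain a much larger list and might also inspect the user-agent string.

```python
# Fields a record must carry to be usable downstream (assumed, not from the deck).
REQUIRED_FIELDS = ("ip", "ts", "request", "status")

# Only the address the slide labels as a Google spider; a real list would be larger.
KNOWN_BOT_IPS = {"209.85.238.11"}


def is_complete(rec):
    """True when every required field is present and non-empty."""
    return all(rec.get(field) not in (None, "", "-") for field in REQUIRED_FIELDS)


def is_bot(rec):
    """True when the client address is on the known-crawler list."""
    return rec.get("ip") in KNOWN_BOT_IPS


def clean(records):
    """Keep only complete records that do not come from known bots."""
    return [rec for rec in records if is_complete(rec) and not is_bot(rec)]


records = [
    {"ip": "244.157.45.12", "ts": "17/Oct/2014:21:08:30",
     "request": "GET /seatposts HTTP/1.0", "status": 200},
    {"ip": "209.85.238.11", "ts": "17/Oct/2014:21:59:59",
     "request": "GET /Store/cart.jsp?productID=1023 HTTP/1.0", "status": 200},
    {"ip": "244.157.45.12", "ts": "17/Oct/2014:21:59:59",
     "request": "", "status": 200},  # incomplete: empty request
]
kept = clean(records)
print(len(kept), kept[0]["ip"])
```

Keeping the two checks as separate predicates makes it easy to count how many records each rule drops.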

![54 Deduplication – remove duplicate records ©2014 Cloudera, Inc. All Rights Reserved. 244.157.45.12 - - [17/Oct/2014:21:08:30 ] "GET /seatposts HTTP/1.0" 200 4463 "http://bestcyclingreviews.com/top_online_shops" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/36.0.1944.0 Safari/537.36" 244.157.45.12 - - [17/Oct/2014:21:08:30 ] "GET /seatposts HTTP/1.0" 200 4463 "http://bestcyclingreviews.com/top_online_shops" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_9_2) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/36.0.1944.0 Safari/537.36"](https://image.slidesharecdn.com/applicationarchitectureswithhadoop-londonhug-141117081334-conversion-gate02/75/Application-Architectures-with-Hadoop-UK-Hadoop-User-Group-54-2048.jpg)
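Removing exact duplicates, as on this slide, amounts to keeping the first occurrence of each distinct log line. The sketch below assumes that two records are duplicates only when their full text is identical after trimming surrounding whitespace; the `deduplicate` function is hypothetical, and a distributed job would typically achieve the same result by grouping on the whole record.

```python
def deduplicate(lines):
    """Return the lines with exact duplicates removed, preserving first-seen order."""
    seen = set()
    unique = []
    for line in lines:
        key = line.strip()  # ignore leading/trailing whitespace when comparing
        if key not in seen:
            seen.add(key)
            unique.append(line)
    return unique


log_lines = [
    '244.157.45.12 - - [17/Oct/2014:21:08:30 ] "GET /seatposts HTTP/1.0" 200 4463',
    '244.157.45.12 - - [17/Oct/2014:21:08:30 ] "GET /seatposts HTTP/1.0" 200 4463',
    '244.157.45.12 - - [17/Oct/2014:21:59:59 ] "GET /Store/cart.jsp?productID=1023 HTTP/1.0" 200 3757',
]
unique_lines = deduplicate(log_lines)
print(len(unique_lines))
```

Comparing the whole line is the strictest definition of a duplicate; a looser key (for example IP, timestamp, and request) would also collapse records that differ only in less relevant fields.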