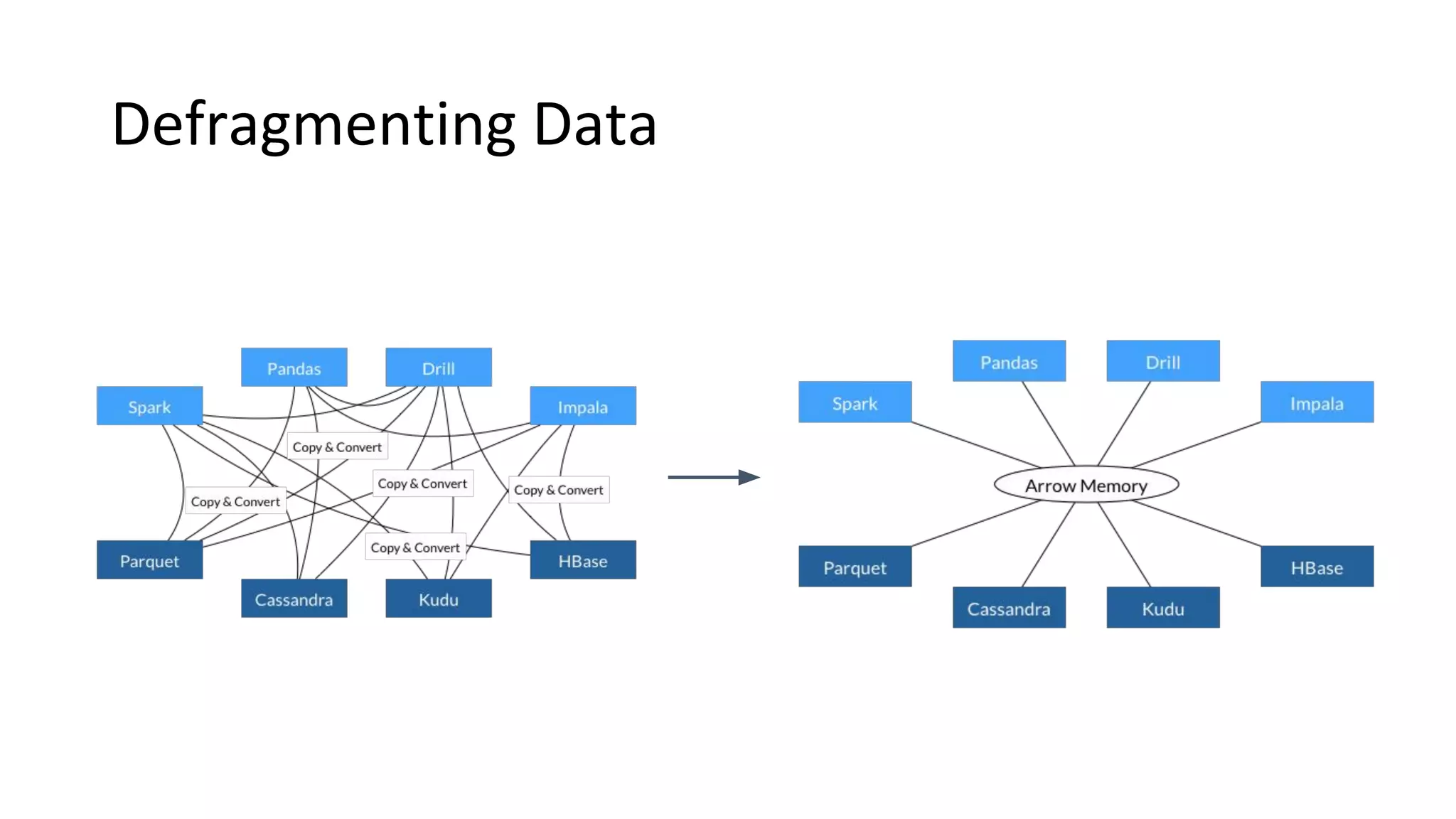

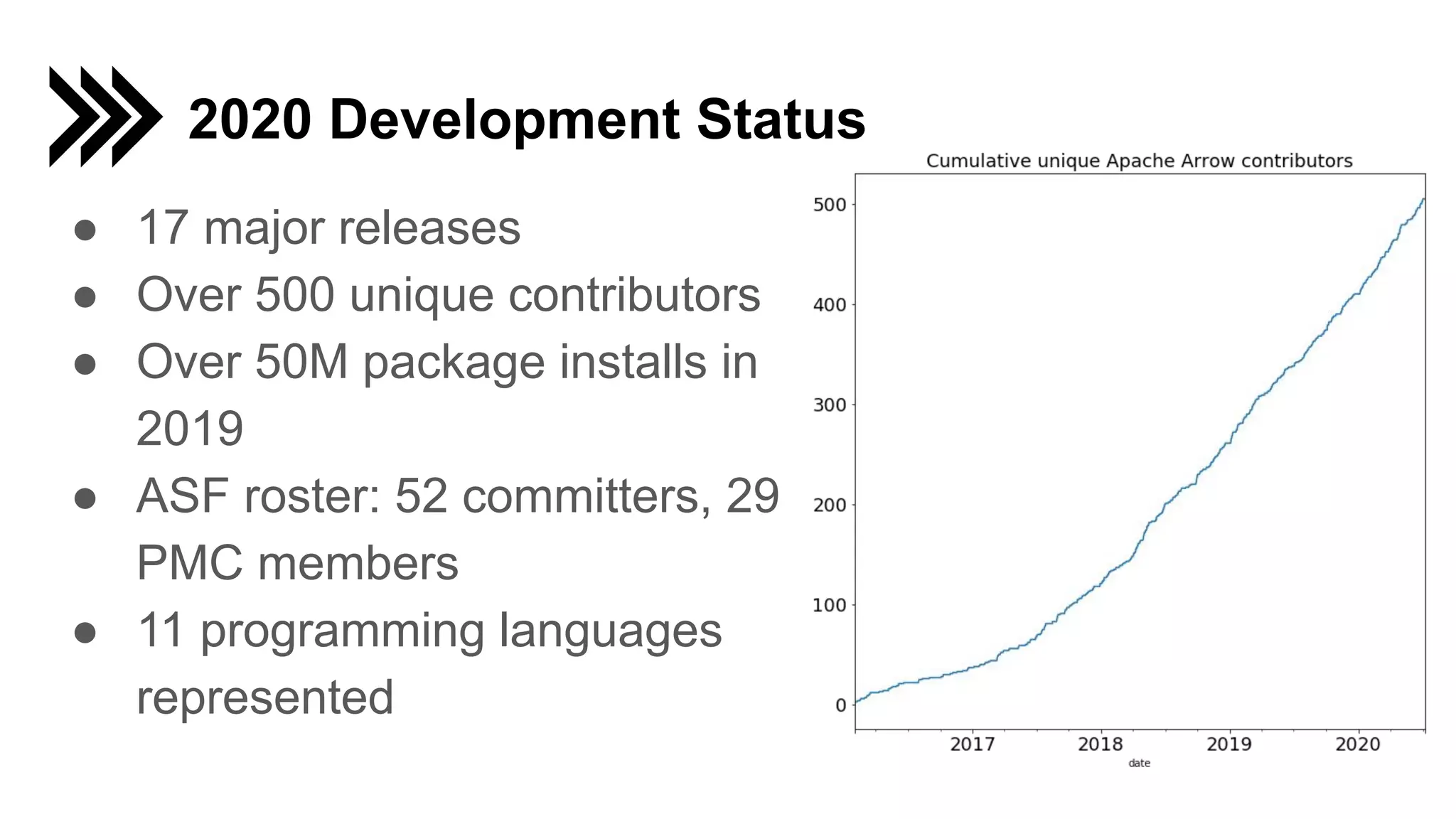

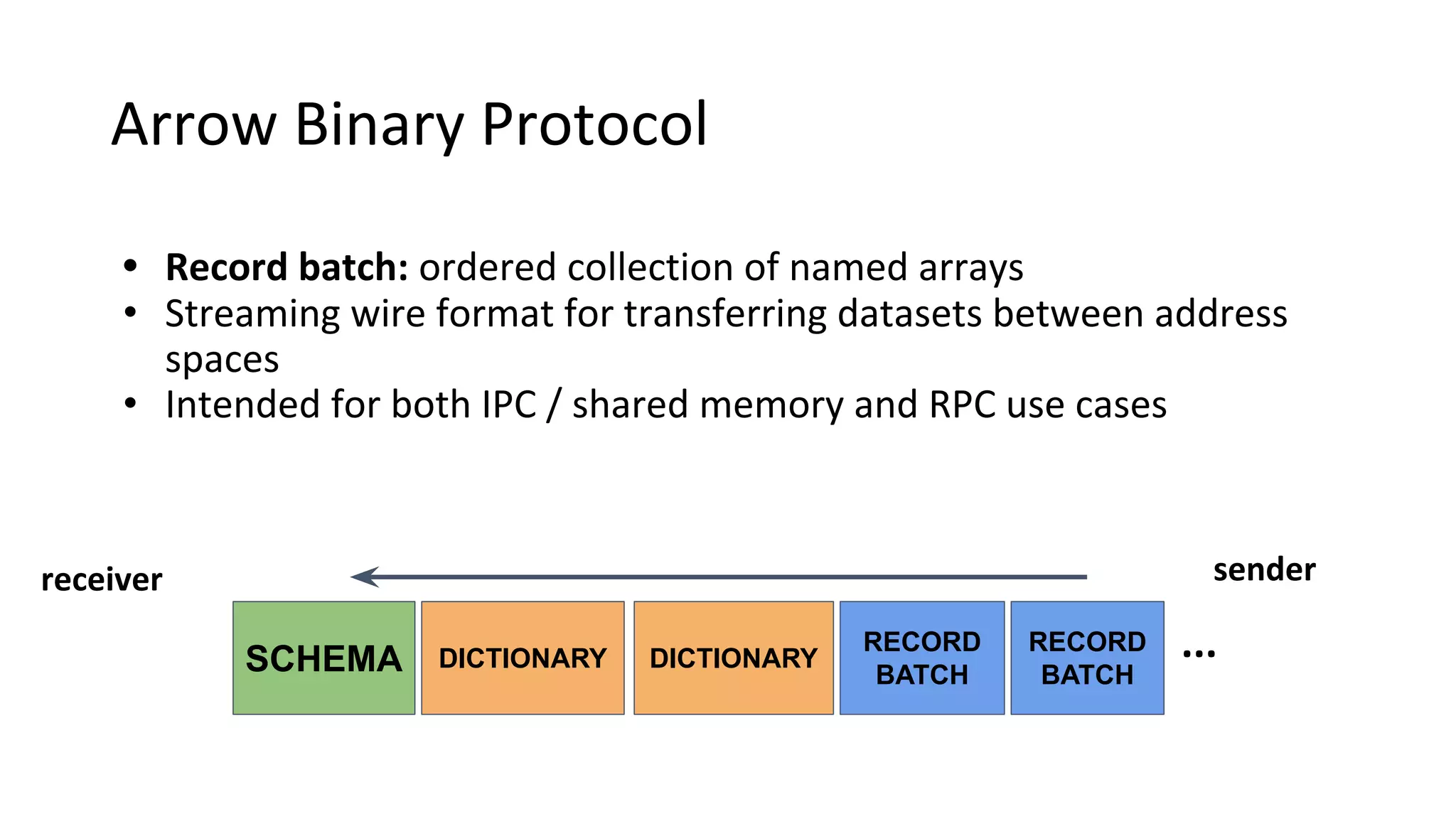

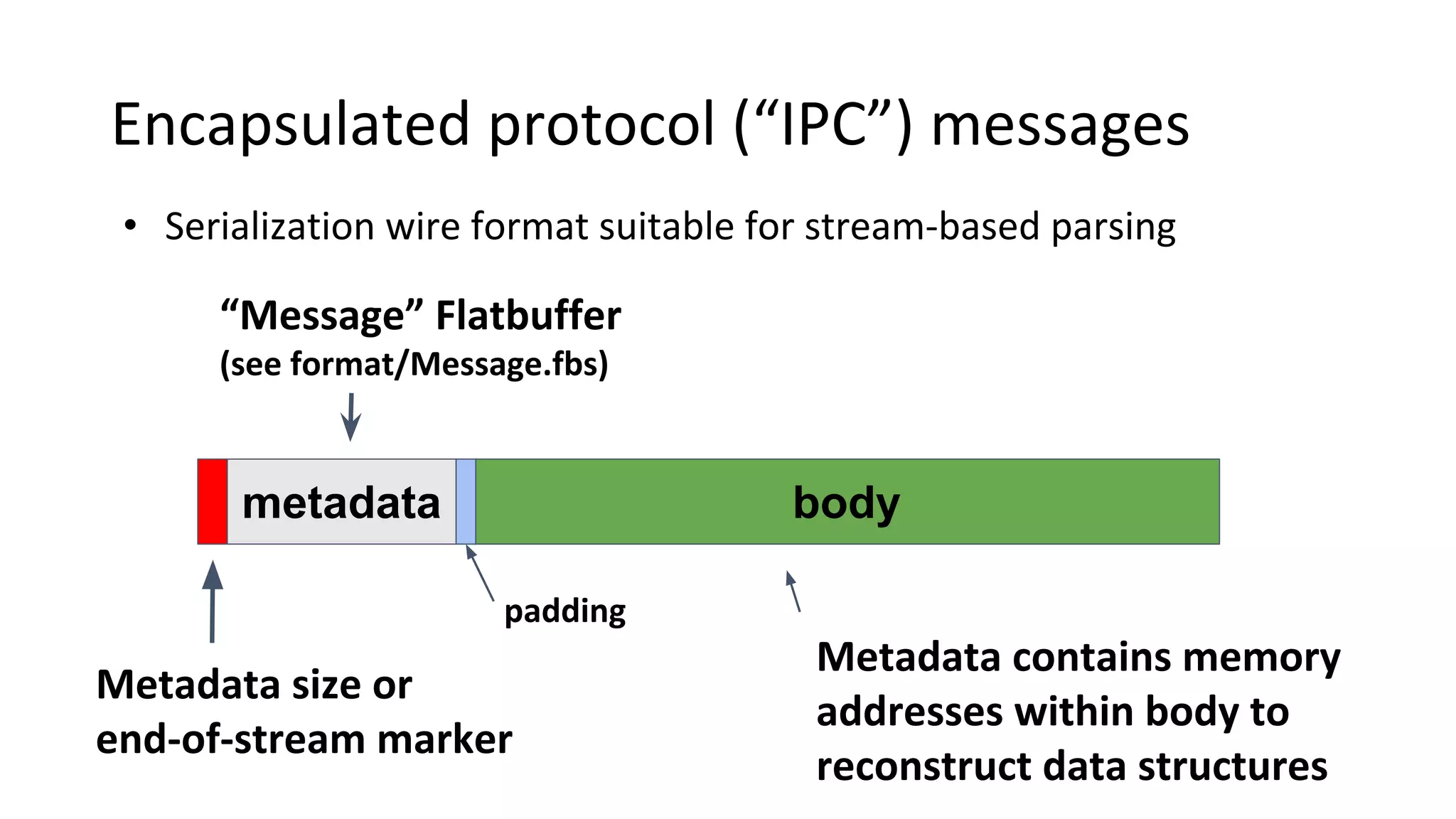

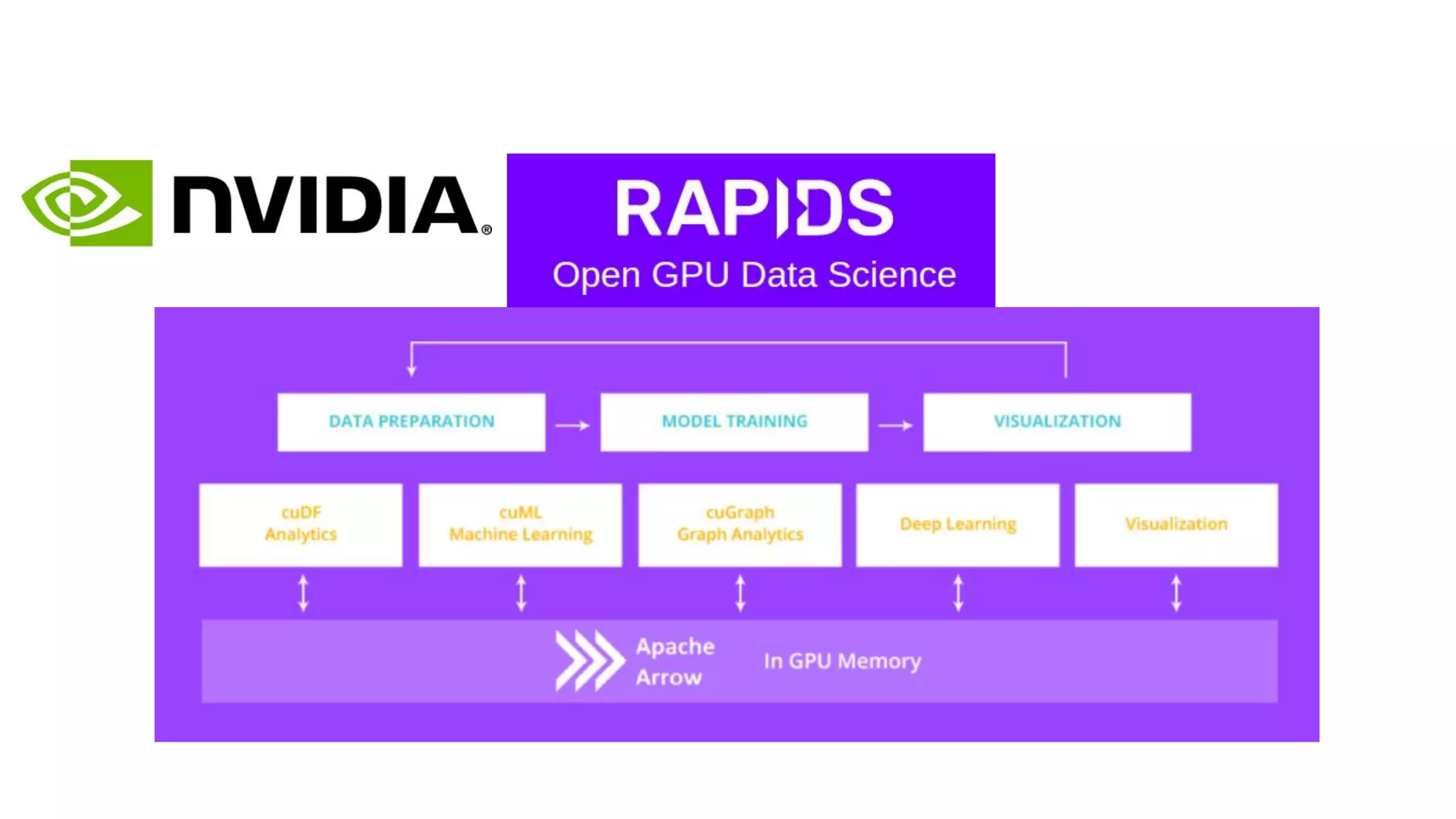

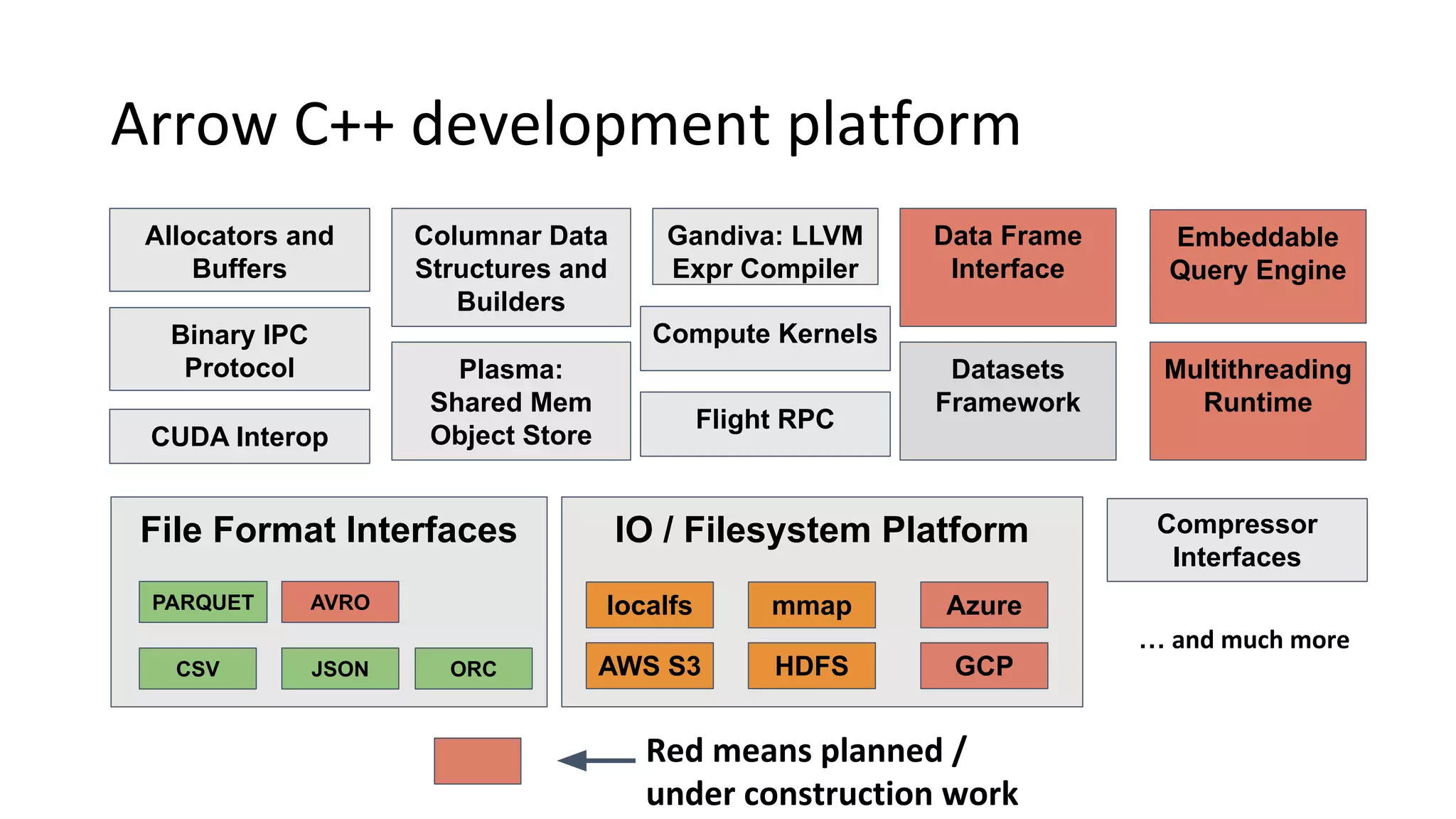

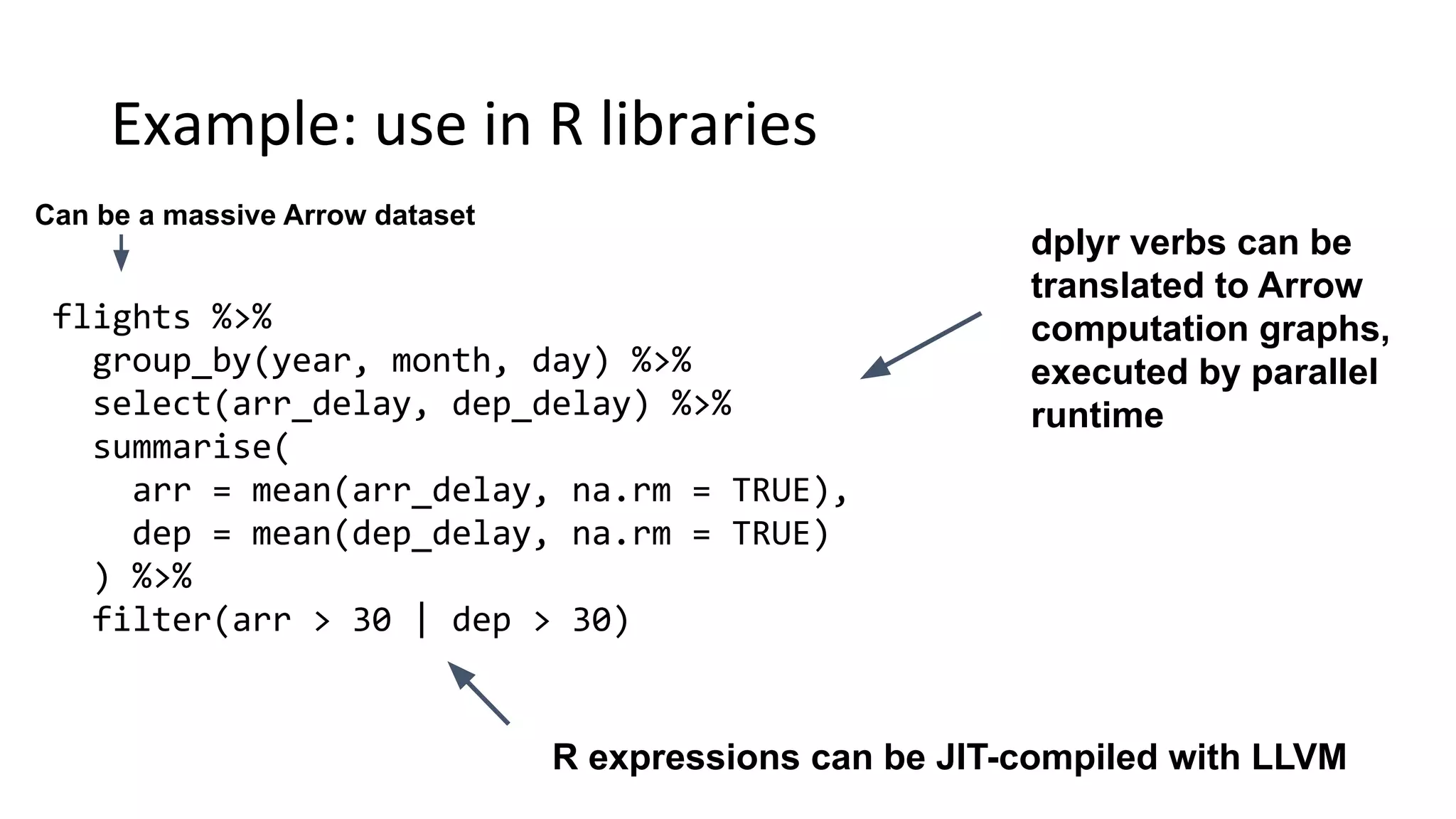

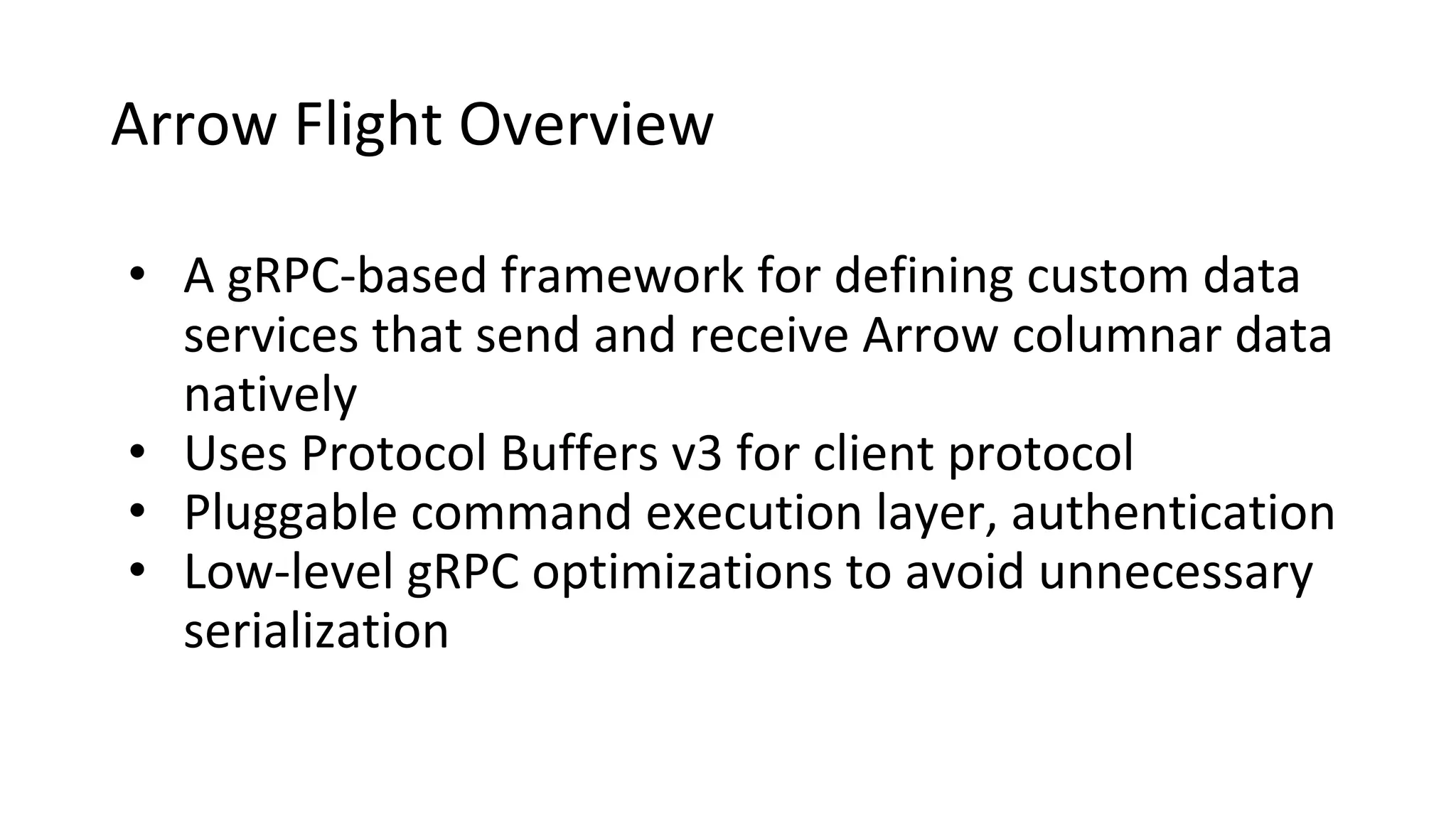

Wes McKinney gave a talk on Apache Arrow and the future of data frames. He discussed how Arrow aims to standardize columnar data formats and reduce inefficiencies in data processing. Arrow defines an efficient binary format for transferring data between systems and programming languages. As more tools support Arrow natively, processing data directly in Arrow format becomes more efficient than converting between each tool's own data structures. Arrow is gaining adoption in popular data tools such as Spark, BigQuery, and InfluxDB to improve performance.
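
The idea of sharing columnar data without conversion can be illustrated with a minimal sketch. It uses only the Python standard library and is not Arrow's actual format or API (in Python, that is provided by the `pyarrow` package). The names `rows`, `columns`, and `temp_view` are hypothetical. Each column is stored as one contiguous typed buffer, and a consumer reads that buffer through a `memoryview` instead of copying or re-encoding it.

```python
from array import array

# Row-oriented layout: every record is a separate Python object.
rows = [
    {"id": 1, "temp": 20.5},
    {"id": 2, "temp": 21.0},
    {"id": 3, "temp": 19.5},
]

# Column-oriented layout: one contiguous, typed buffer per column.
columns = {
    "id": array("q", (r["id"] for r in rows)),      # signed 64-bit integers
    "temp": array("d", (r["temp"] for r in rows)),  # 64-bit floats
}

# A consumer can look at the column's memory without copying it.
temp_view = memoryview(columns["temp"])
mean_temp = sum(temp_view) / len(temp_view)

# The view and the array share the same memory, so a write made through
# the array is visible through the view with no conversion step.
columns["temp"][0] = 22.5
shares_memory = temp_view[0] == 22.5
```

Arrow applies the same principle across process and language boundaries by specifying the buffer layout precisely, so that tools agreeing on the format can hand data to one another without a serialization step.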