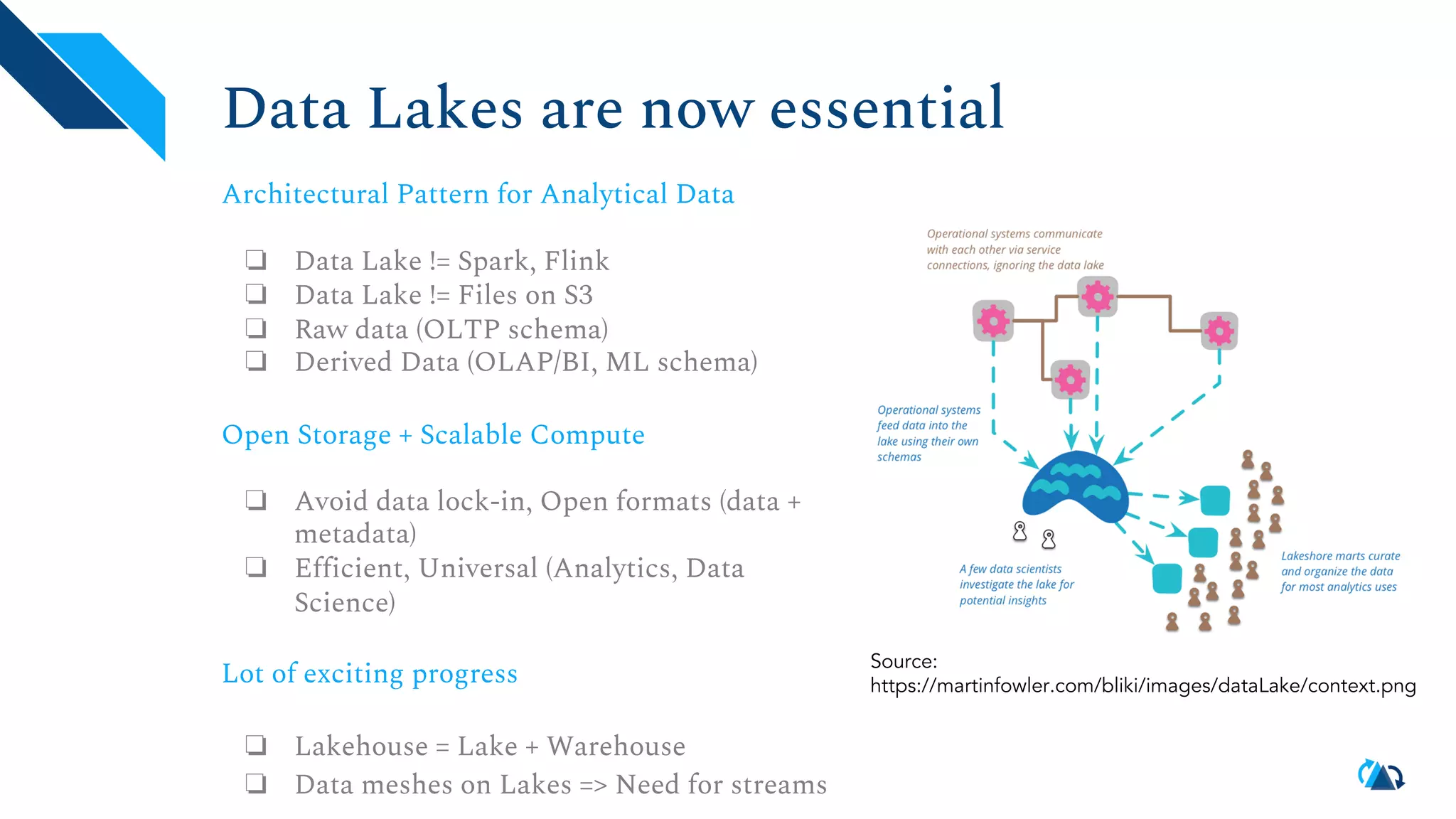

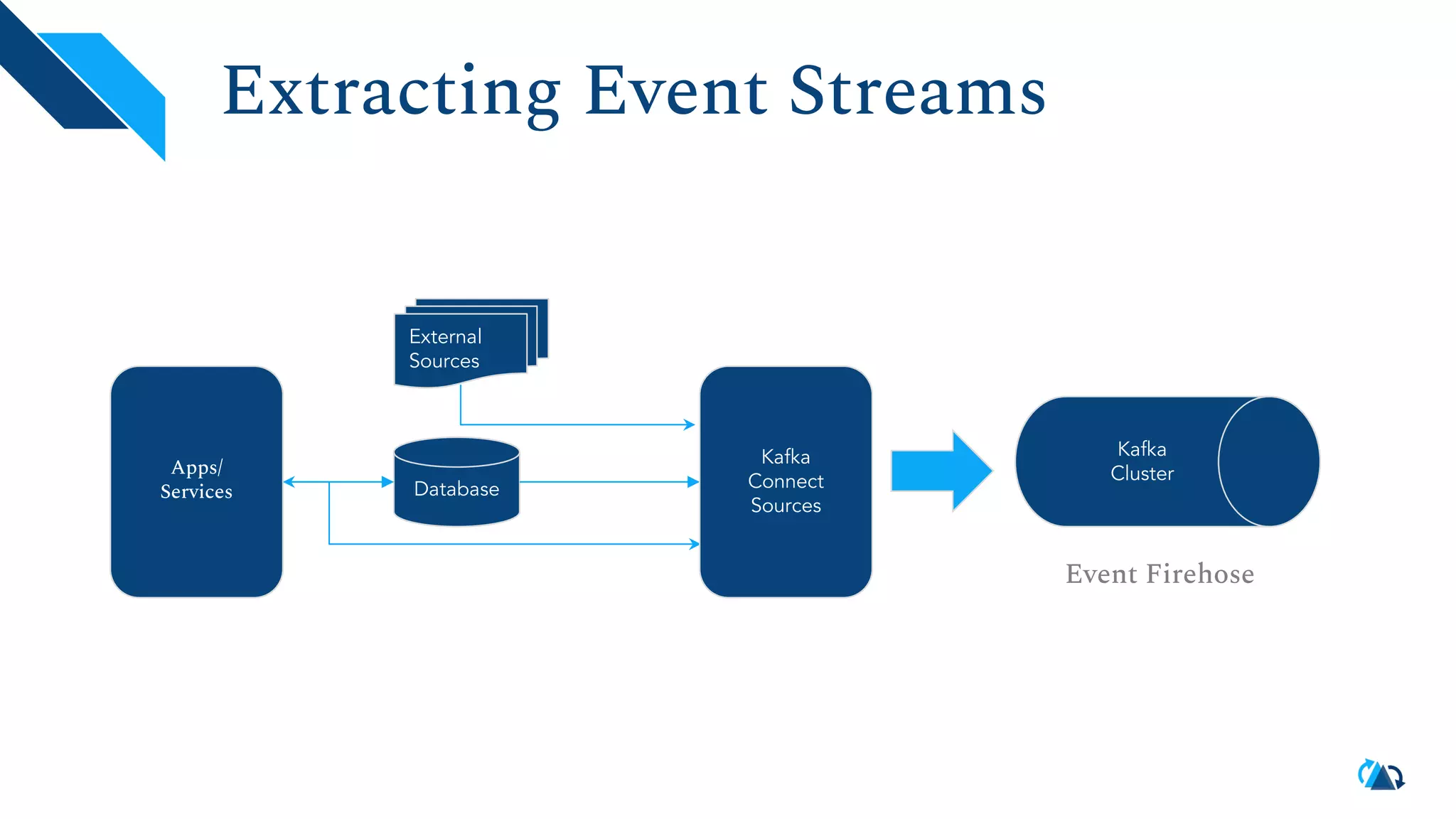

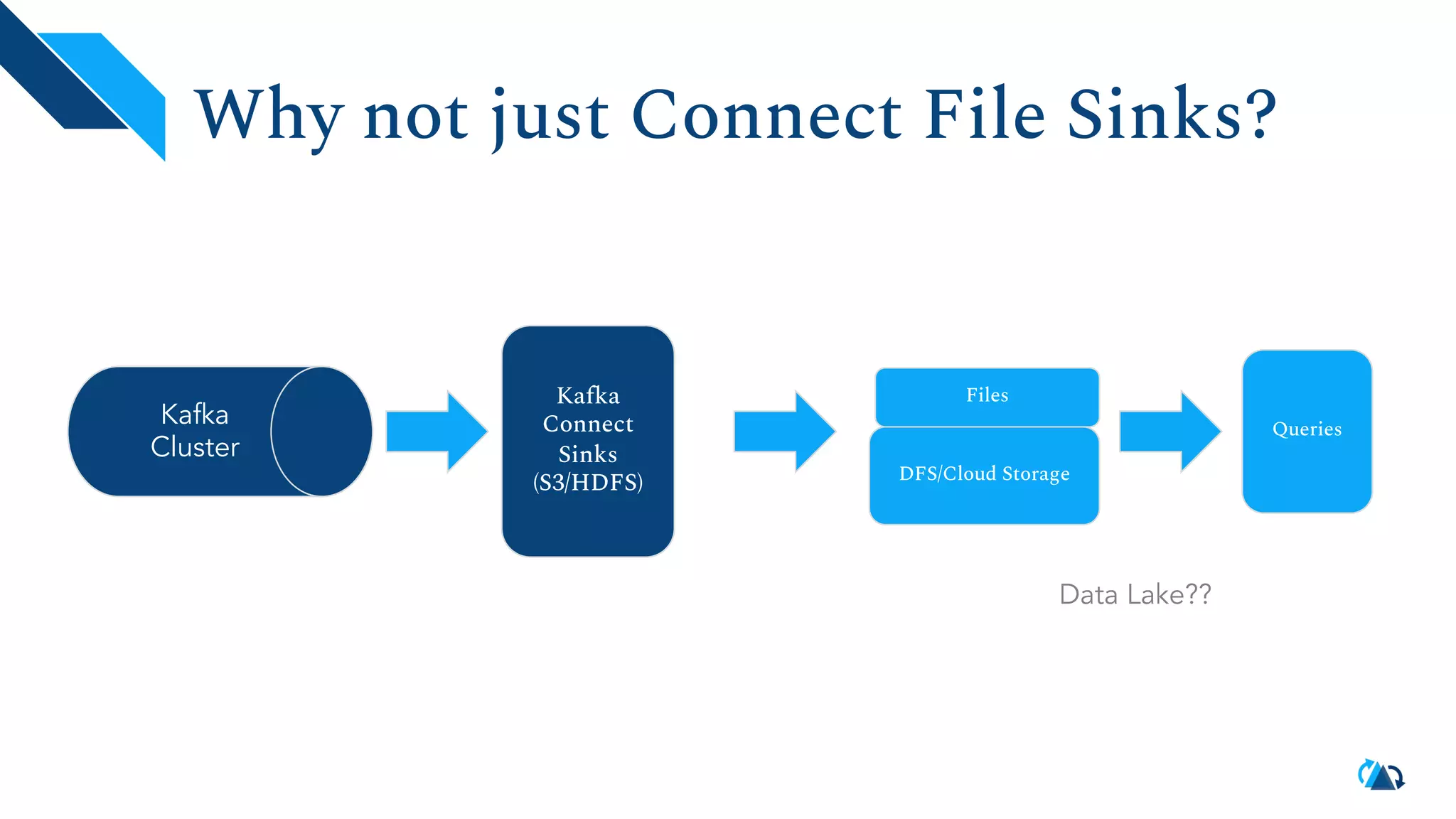

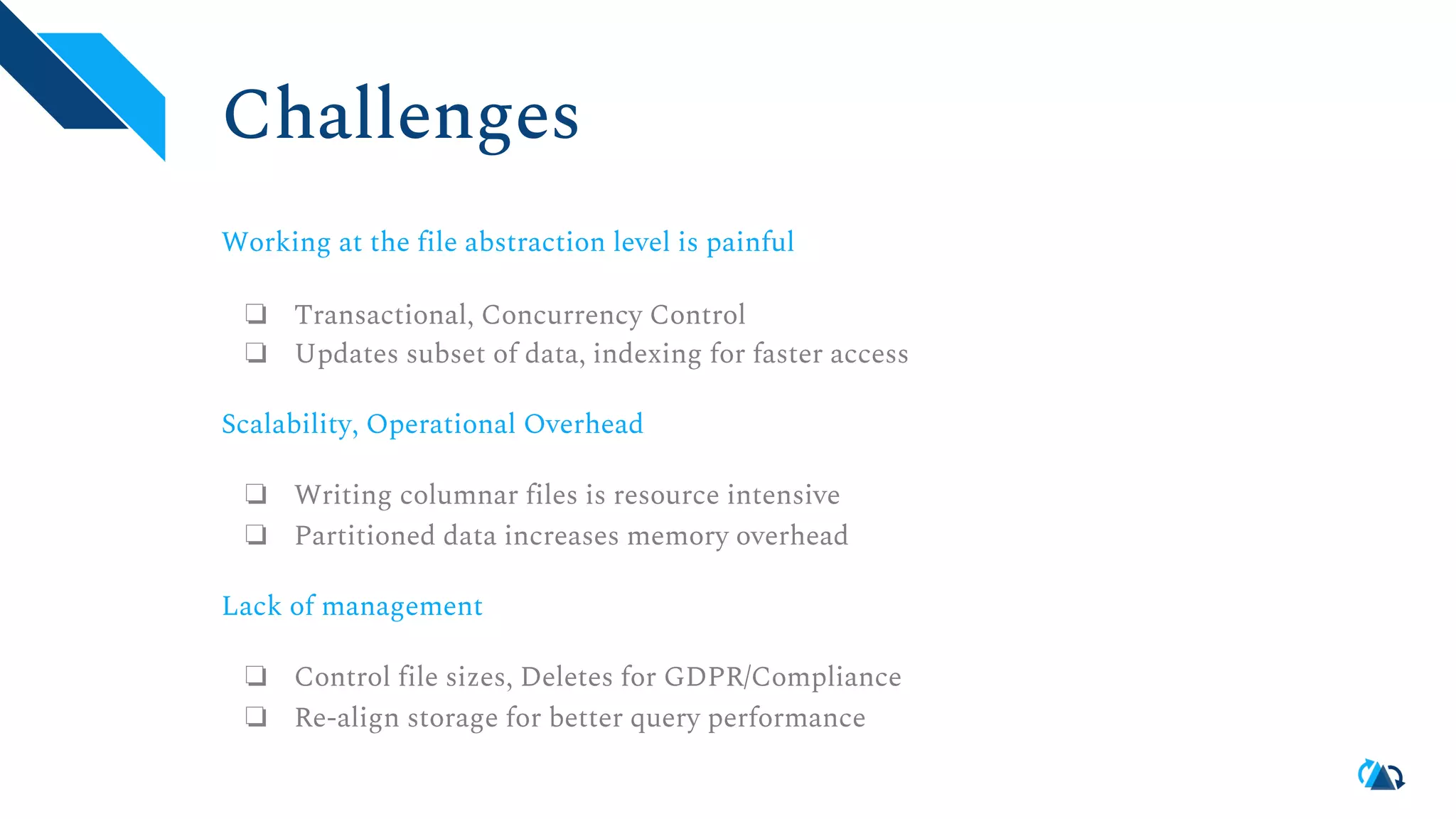

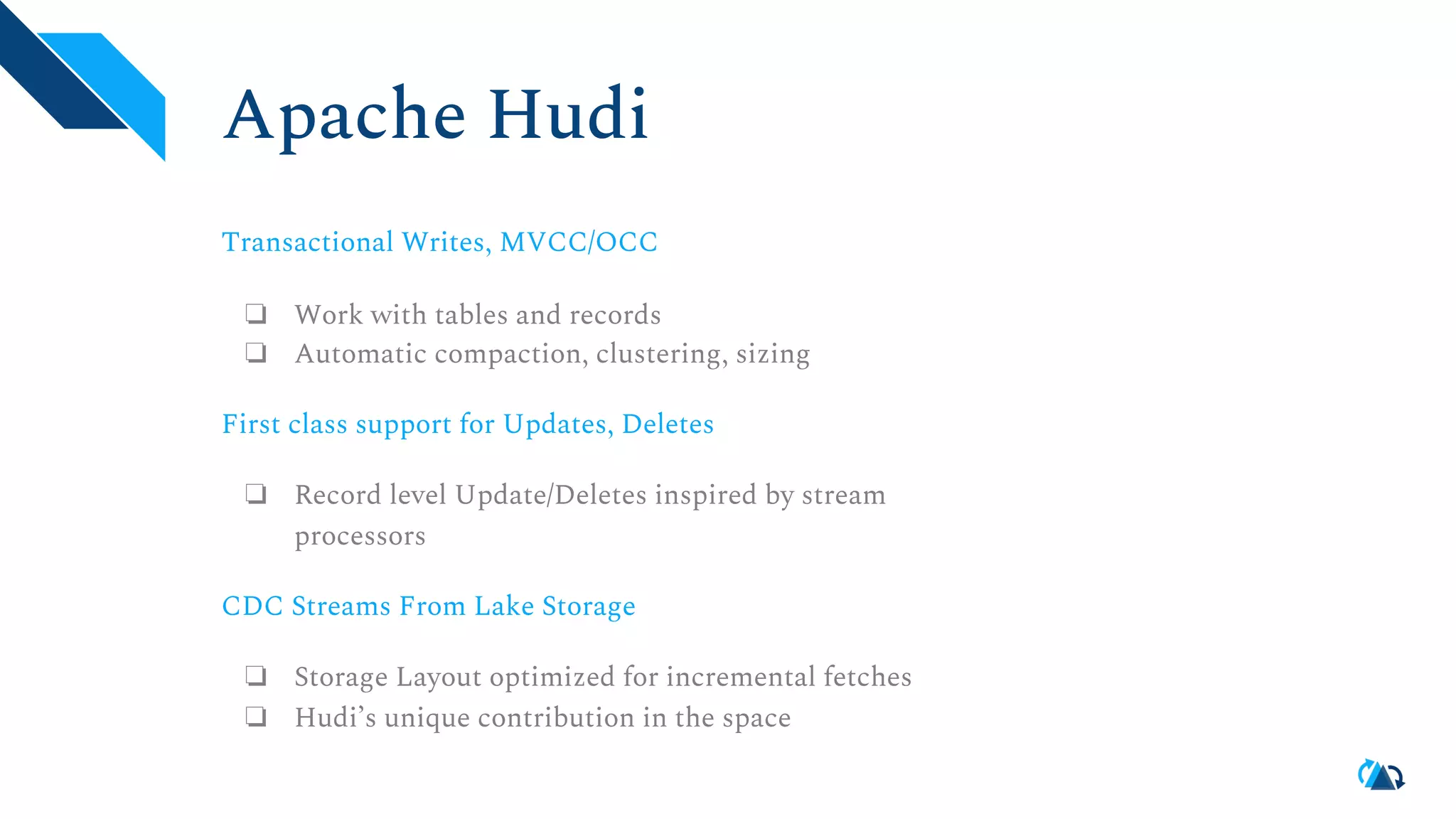

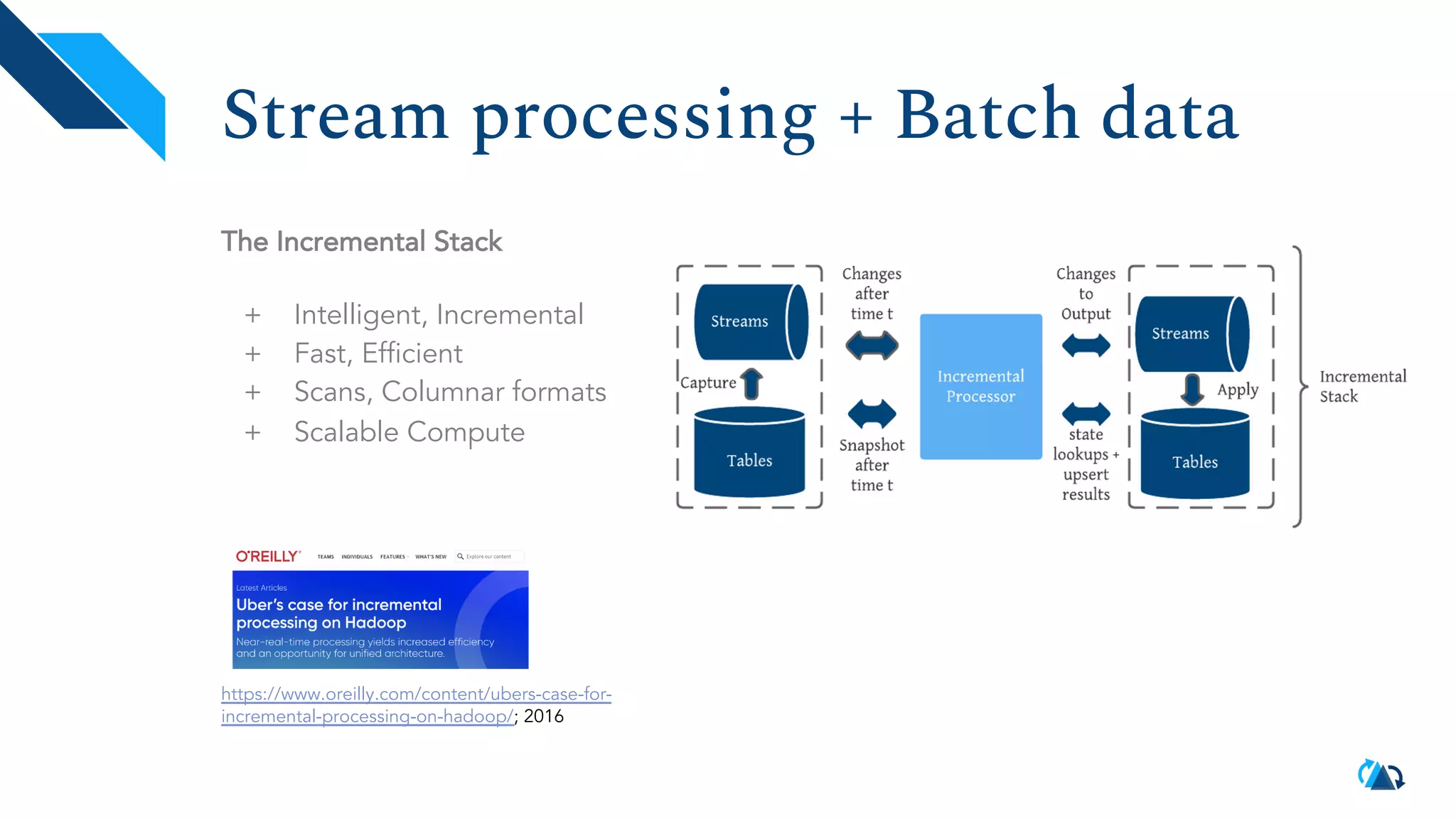

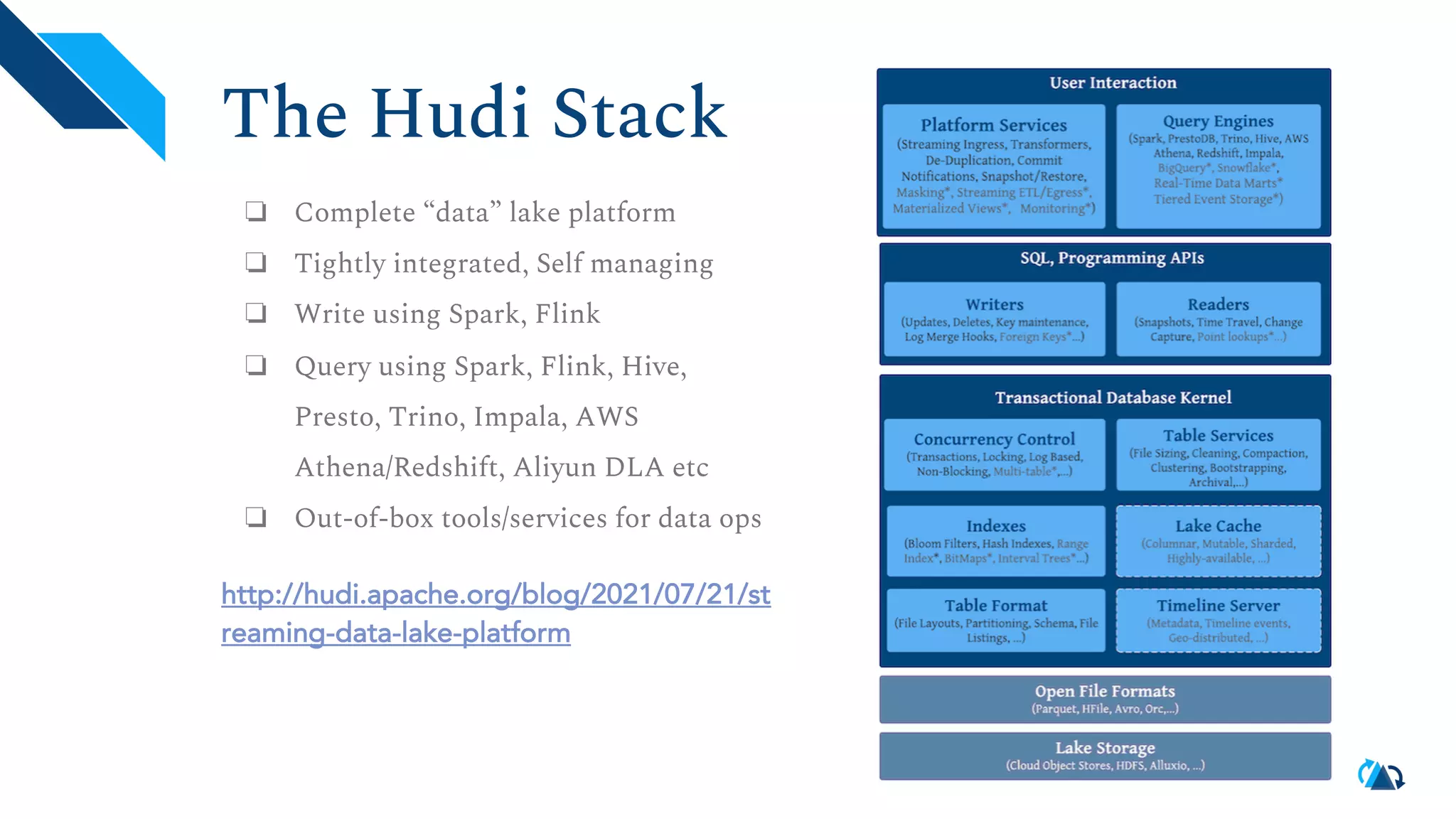

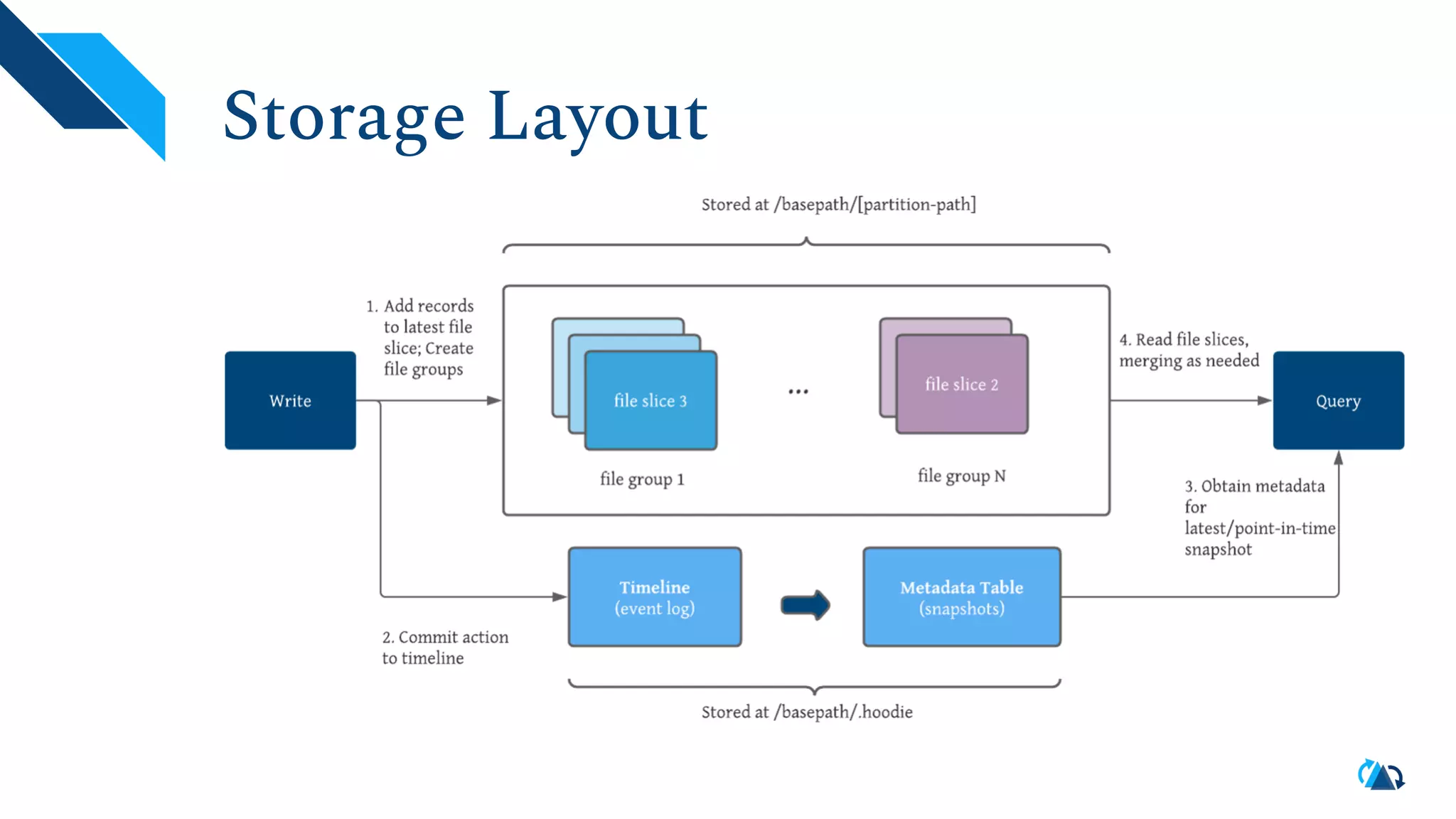

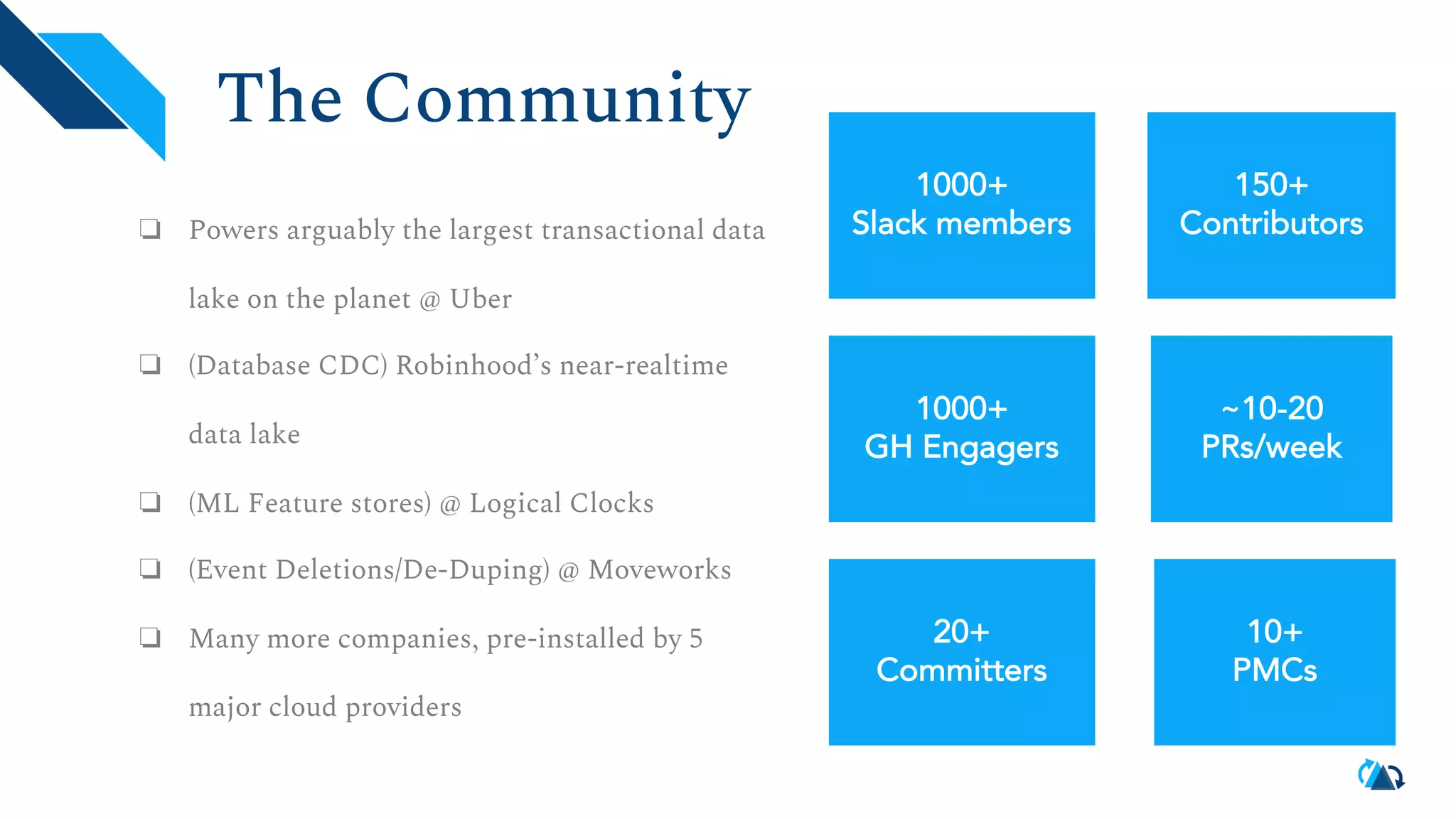

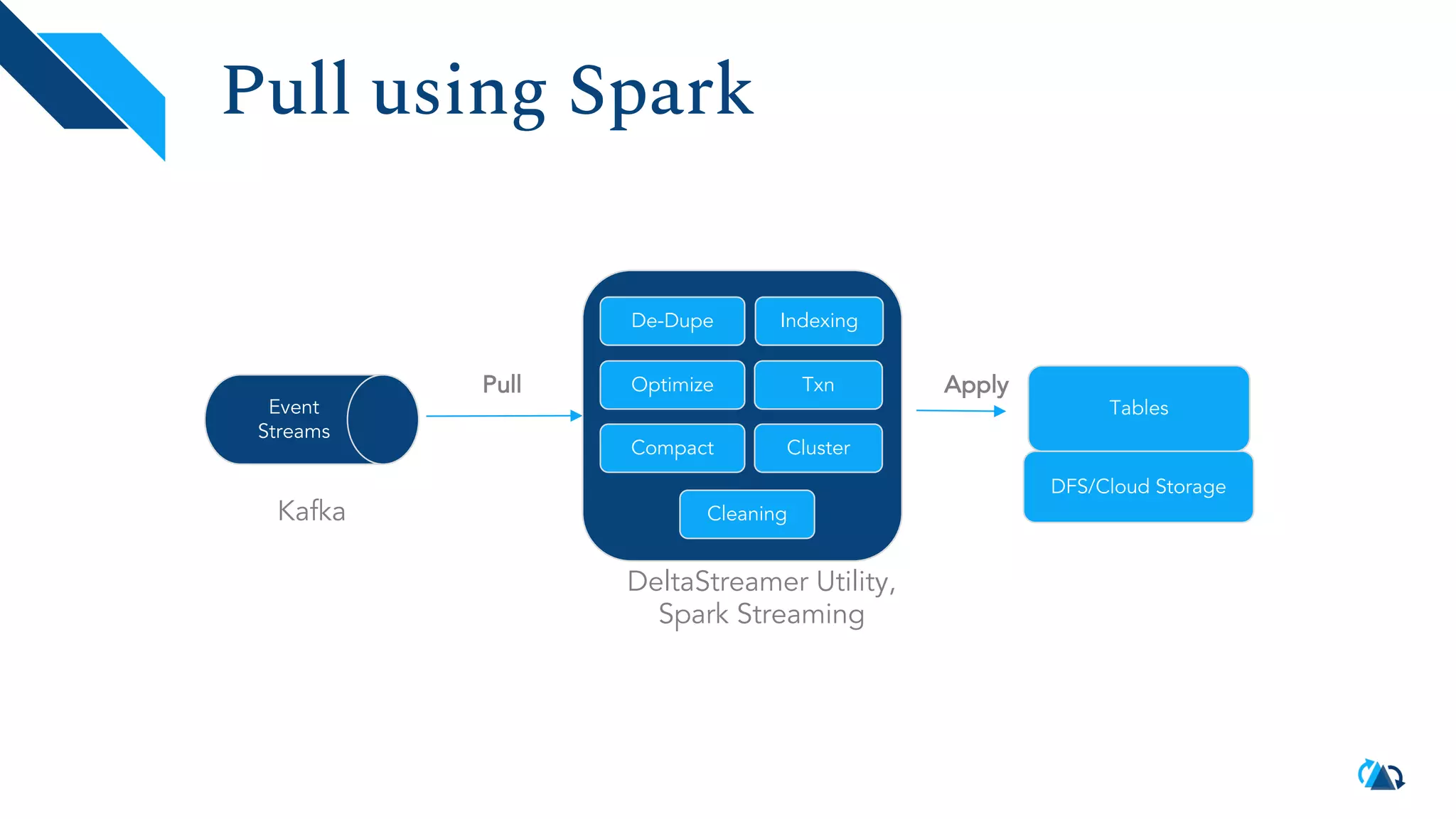

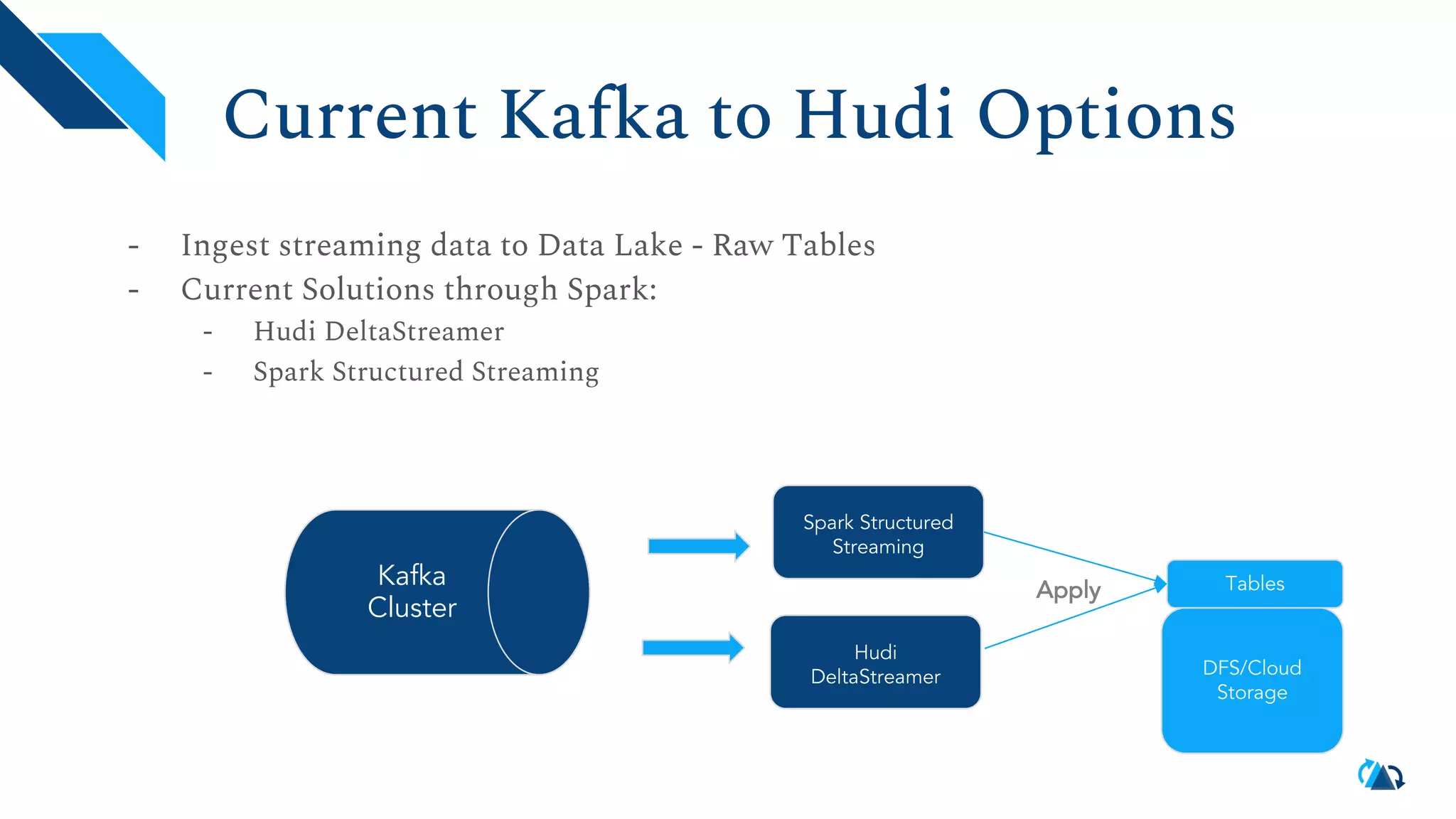

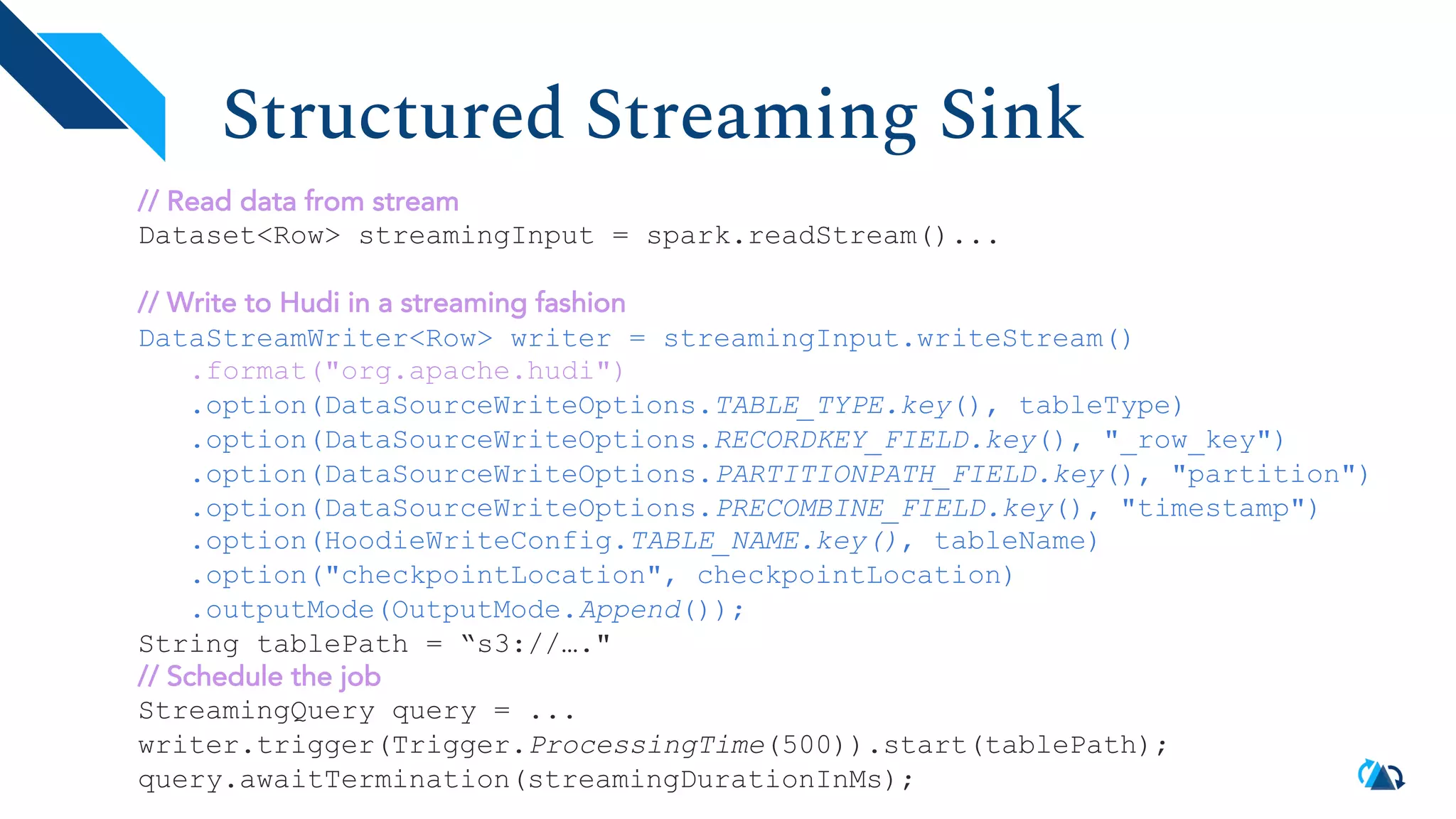

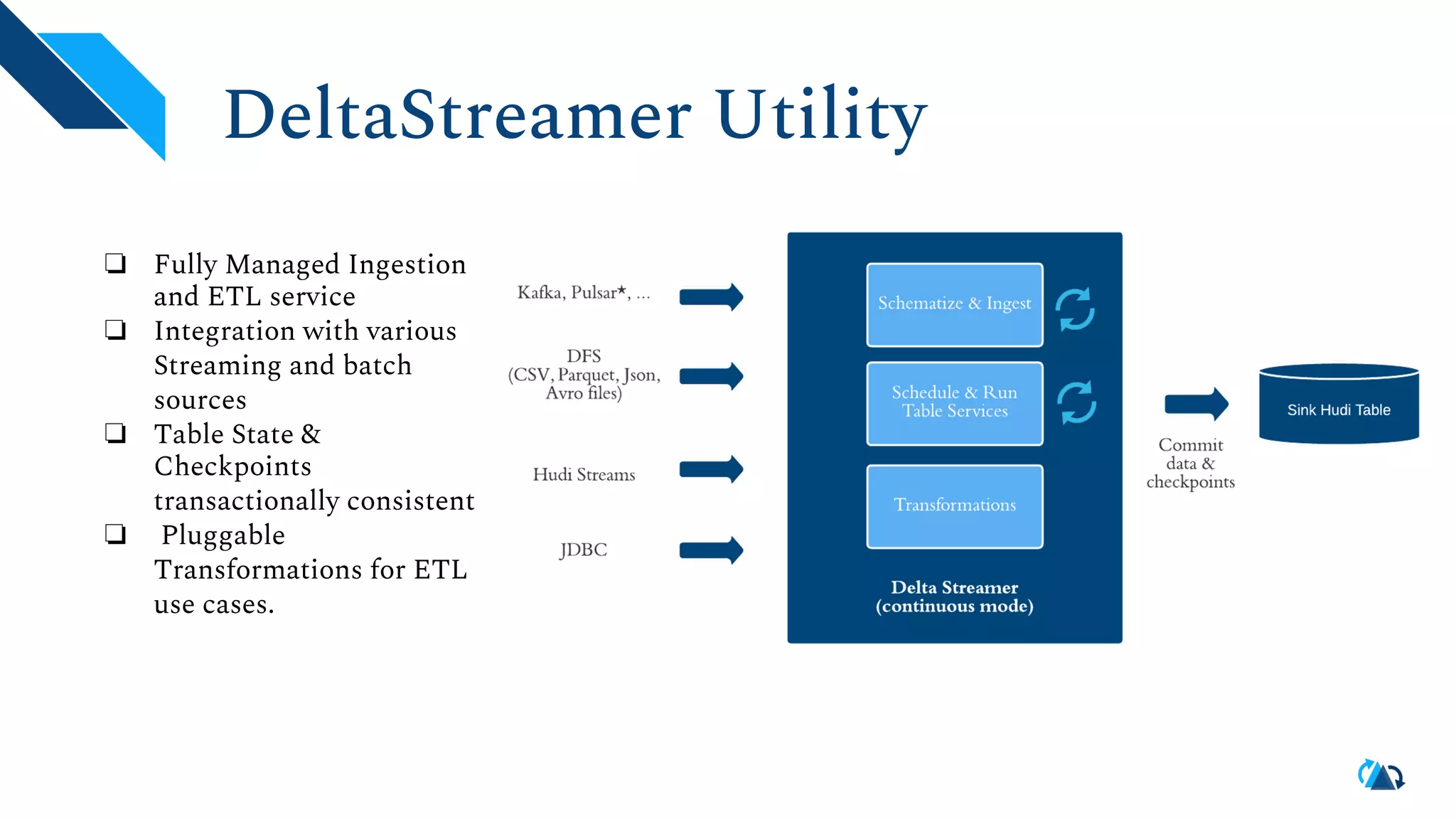

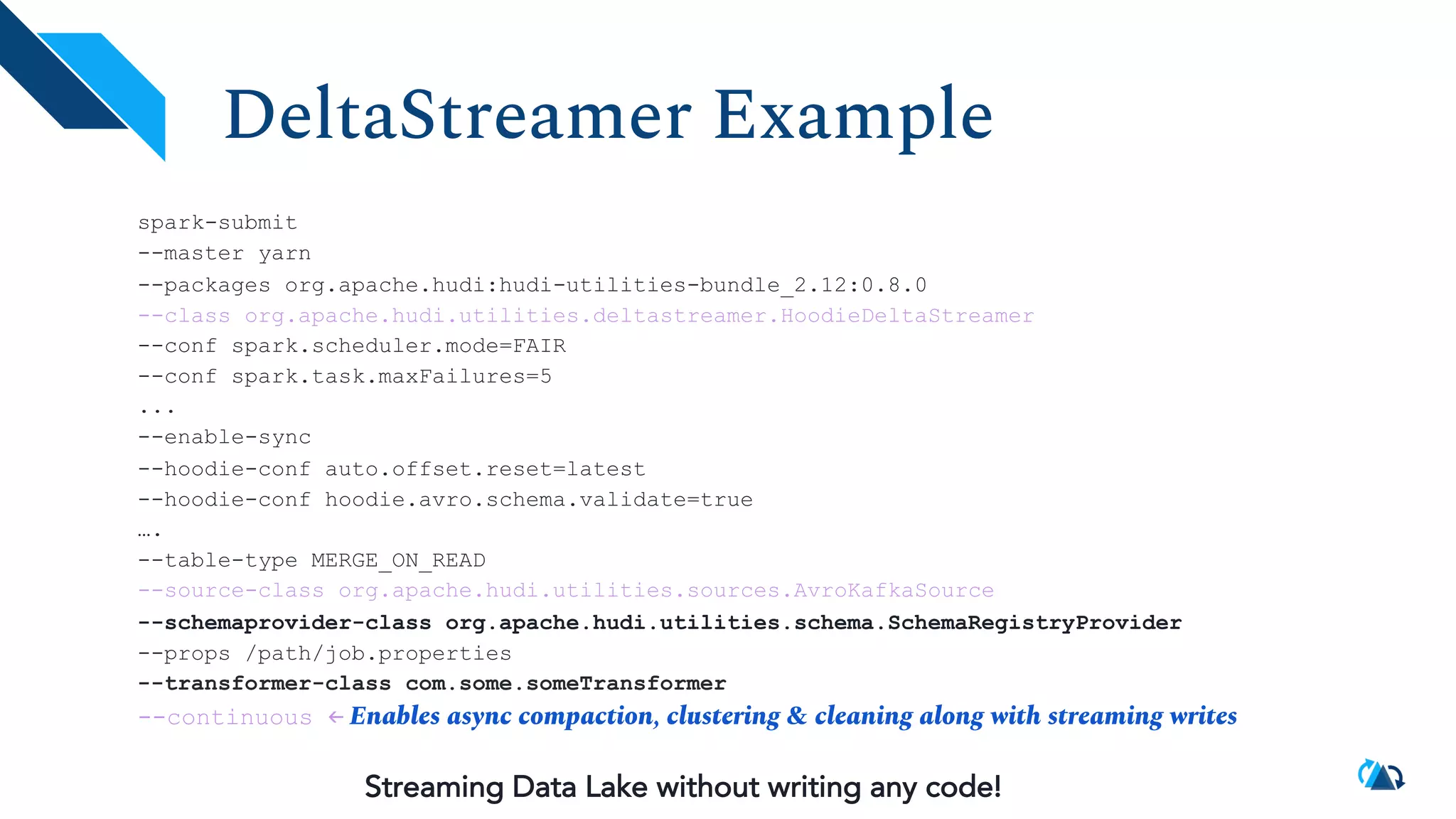

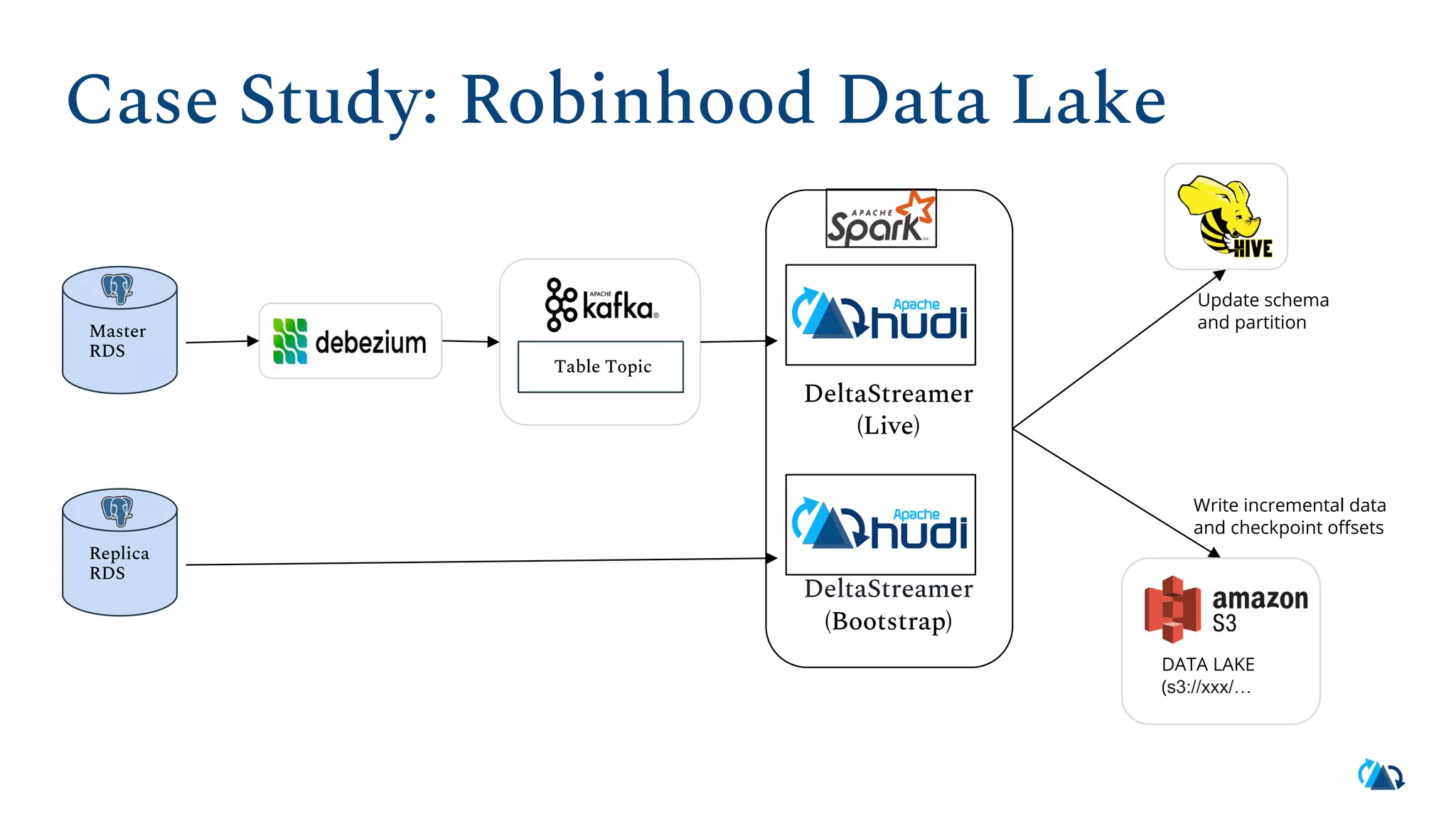

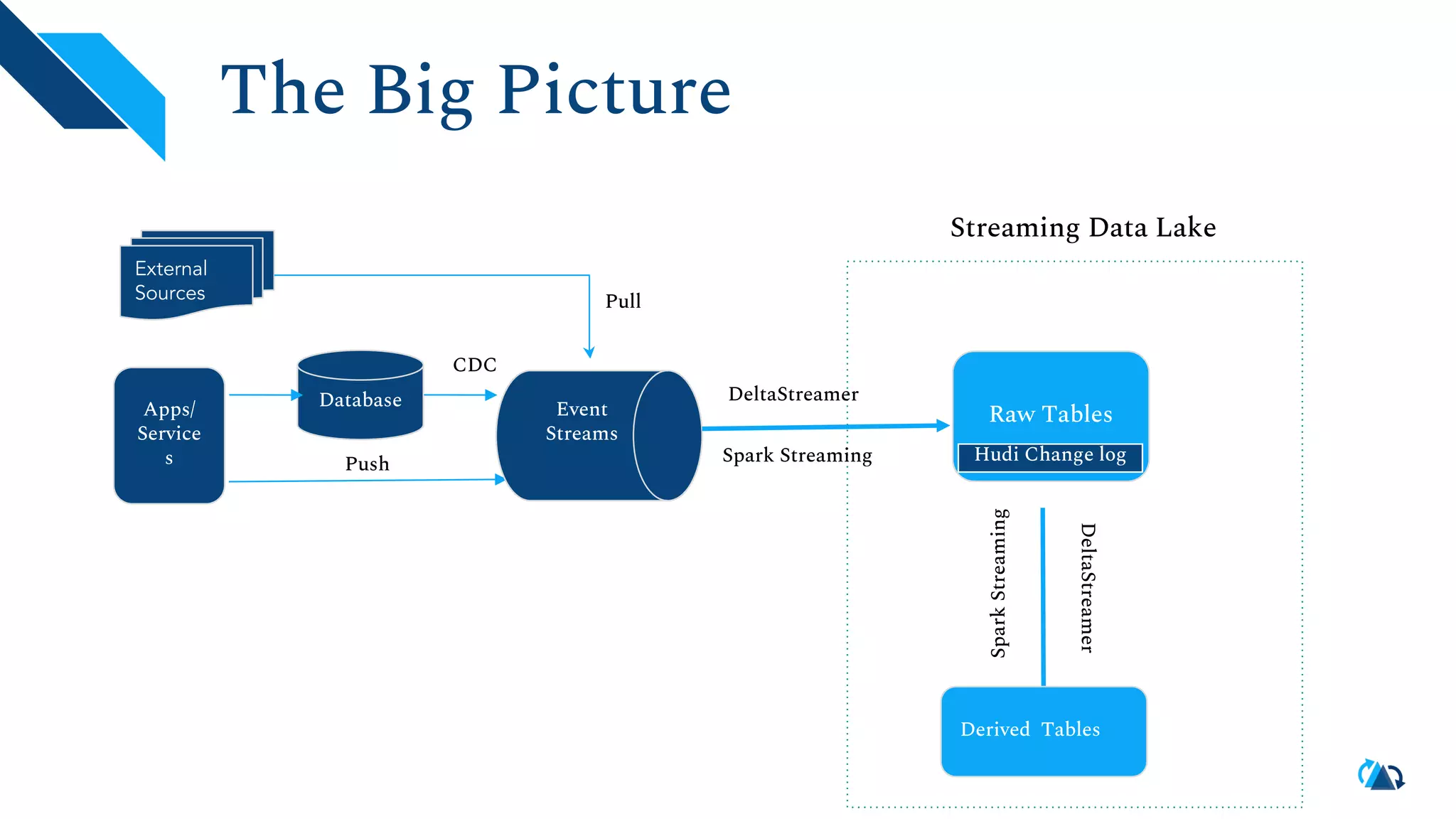

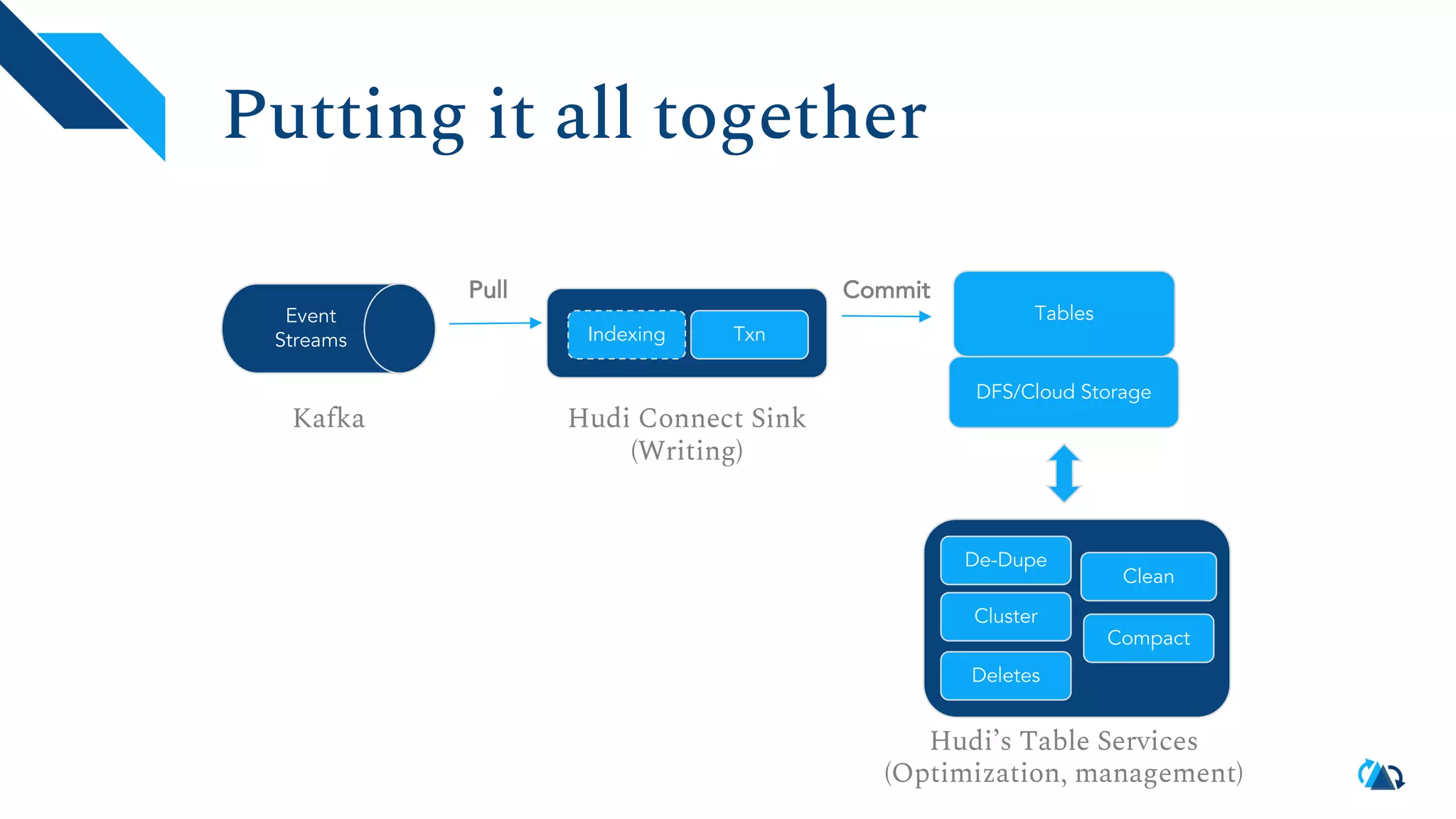

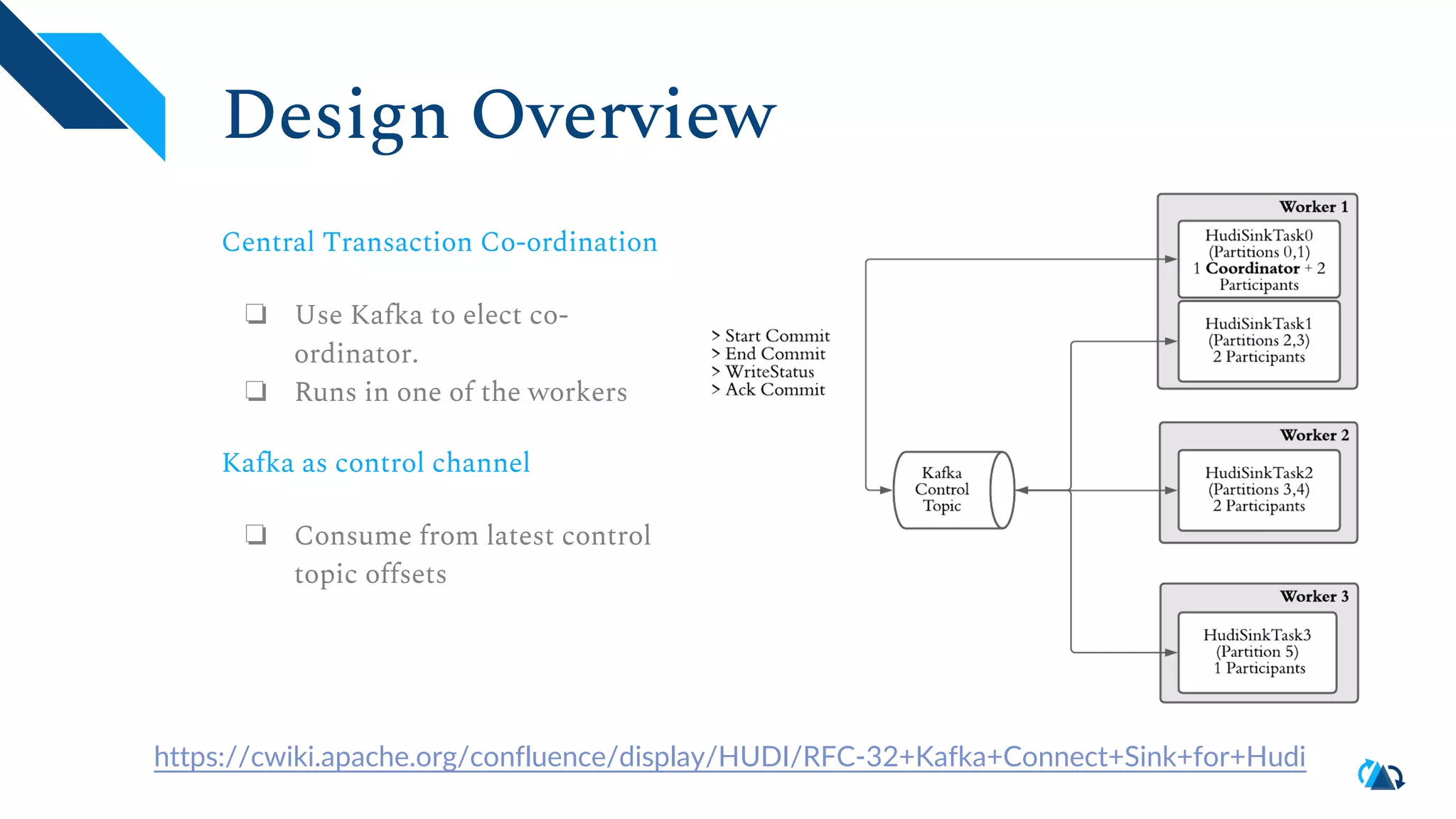

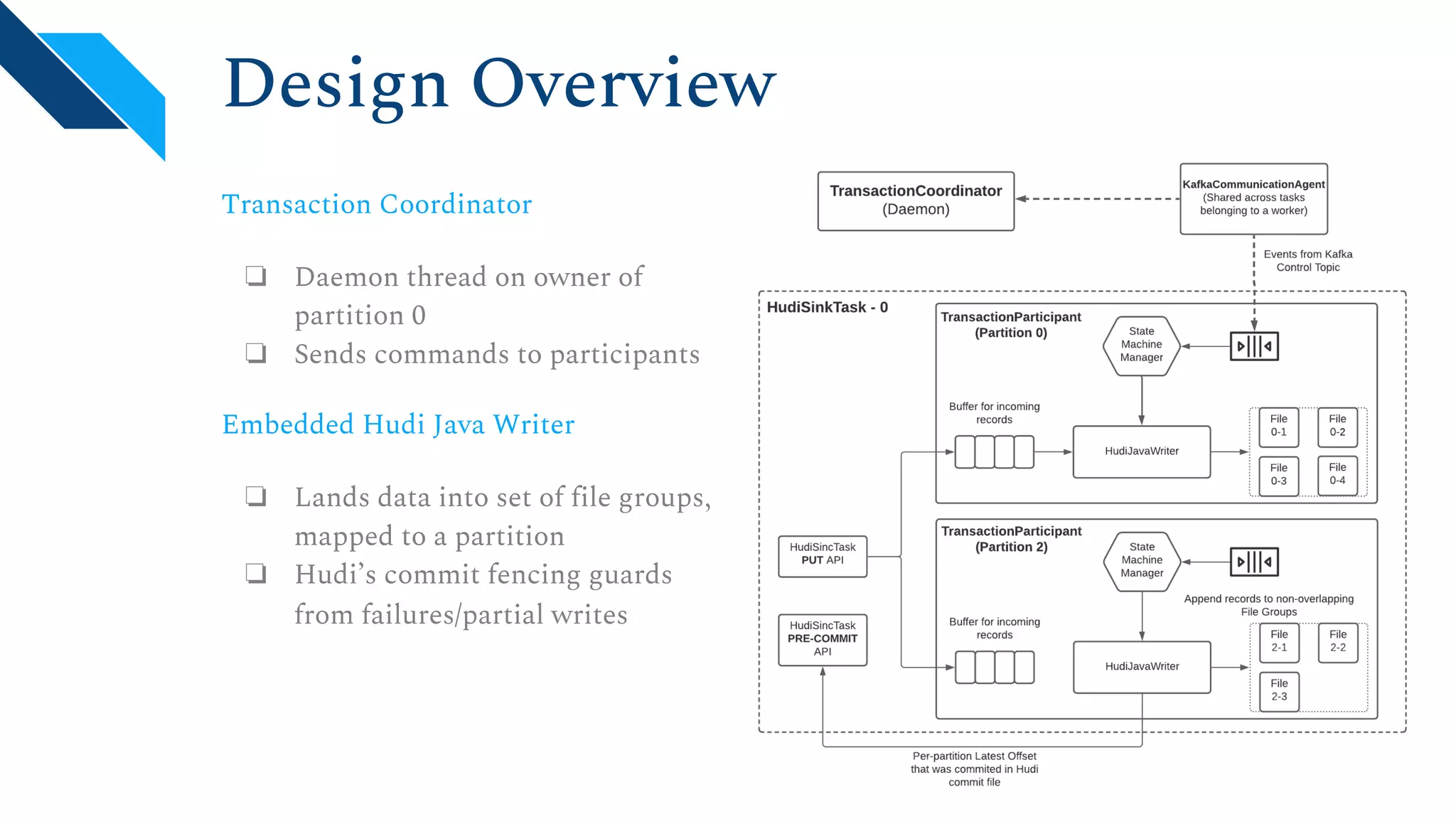

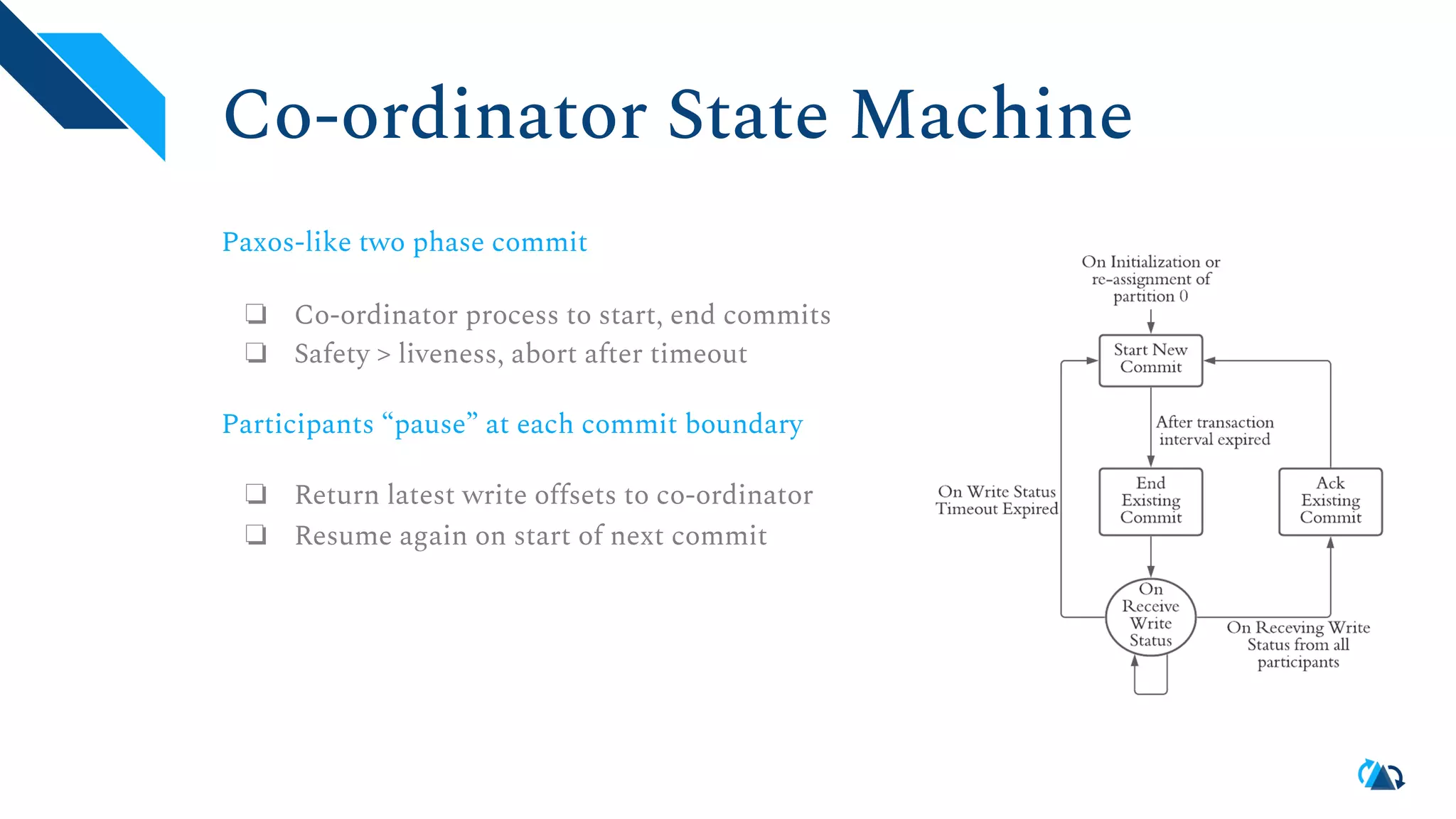

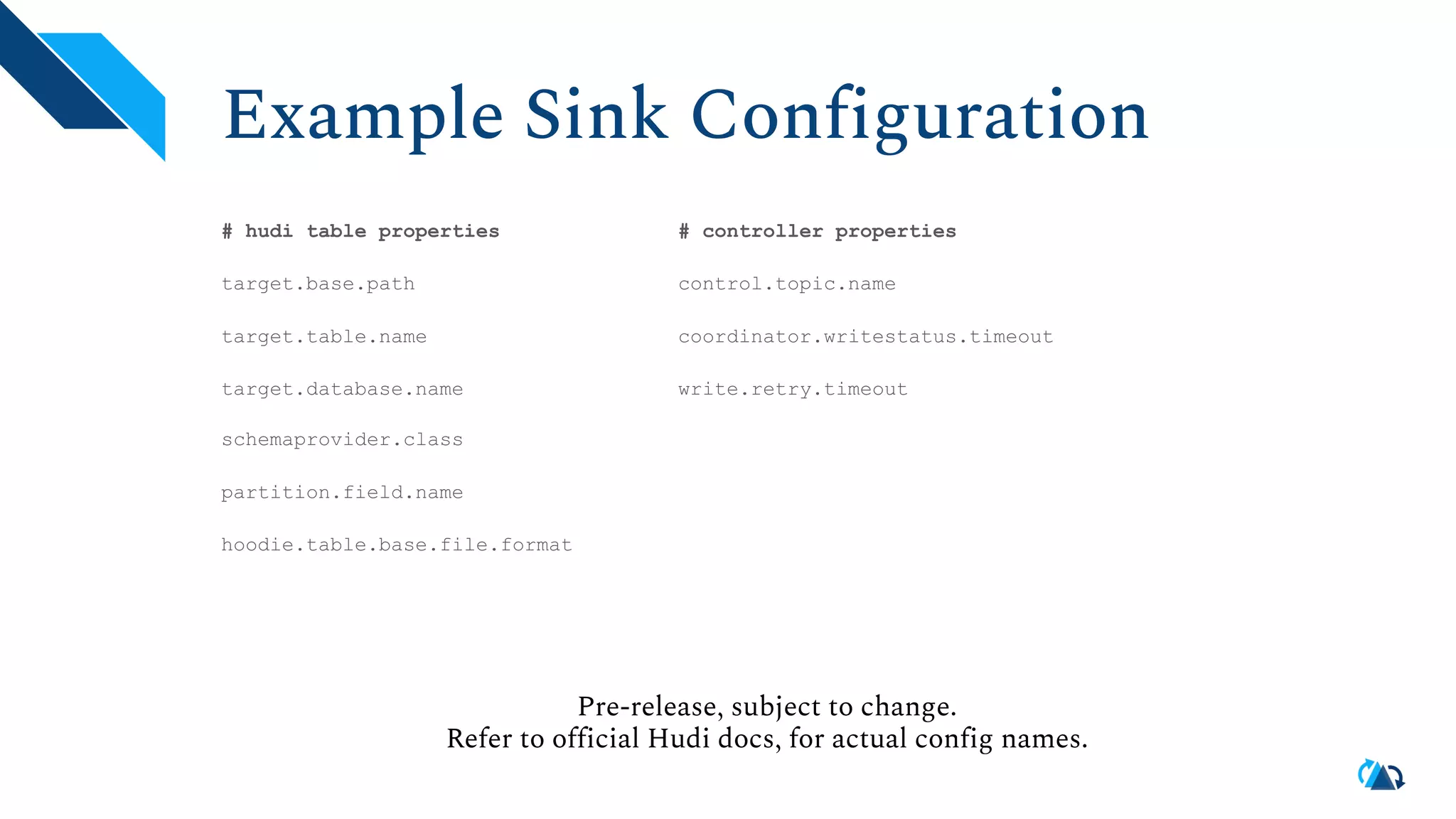

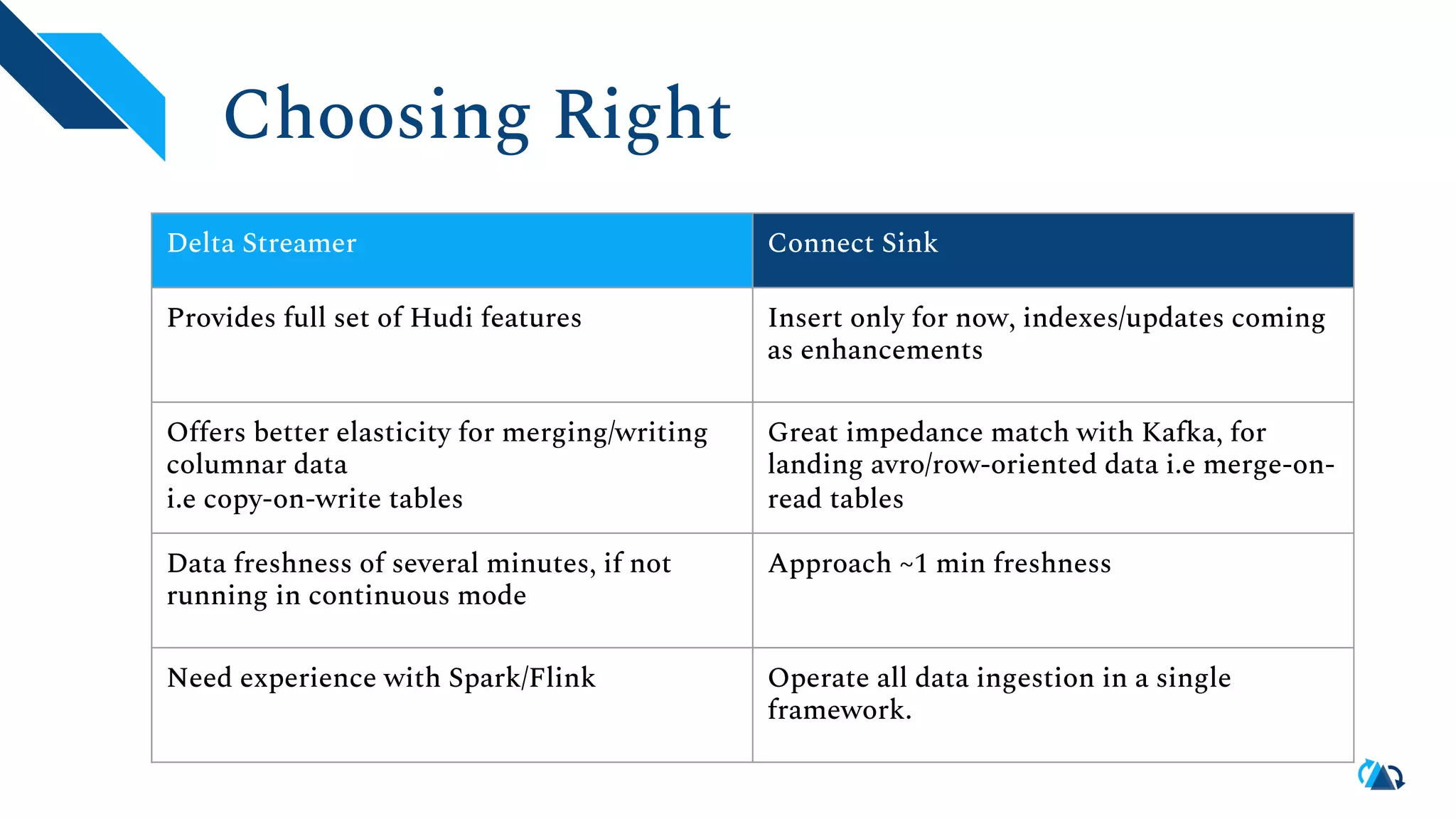

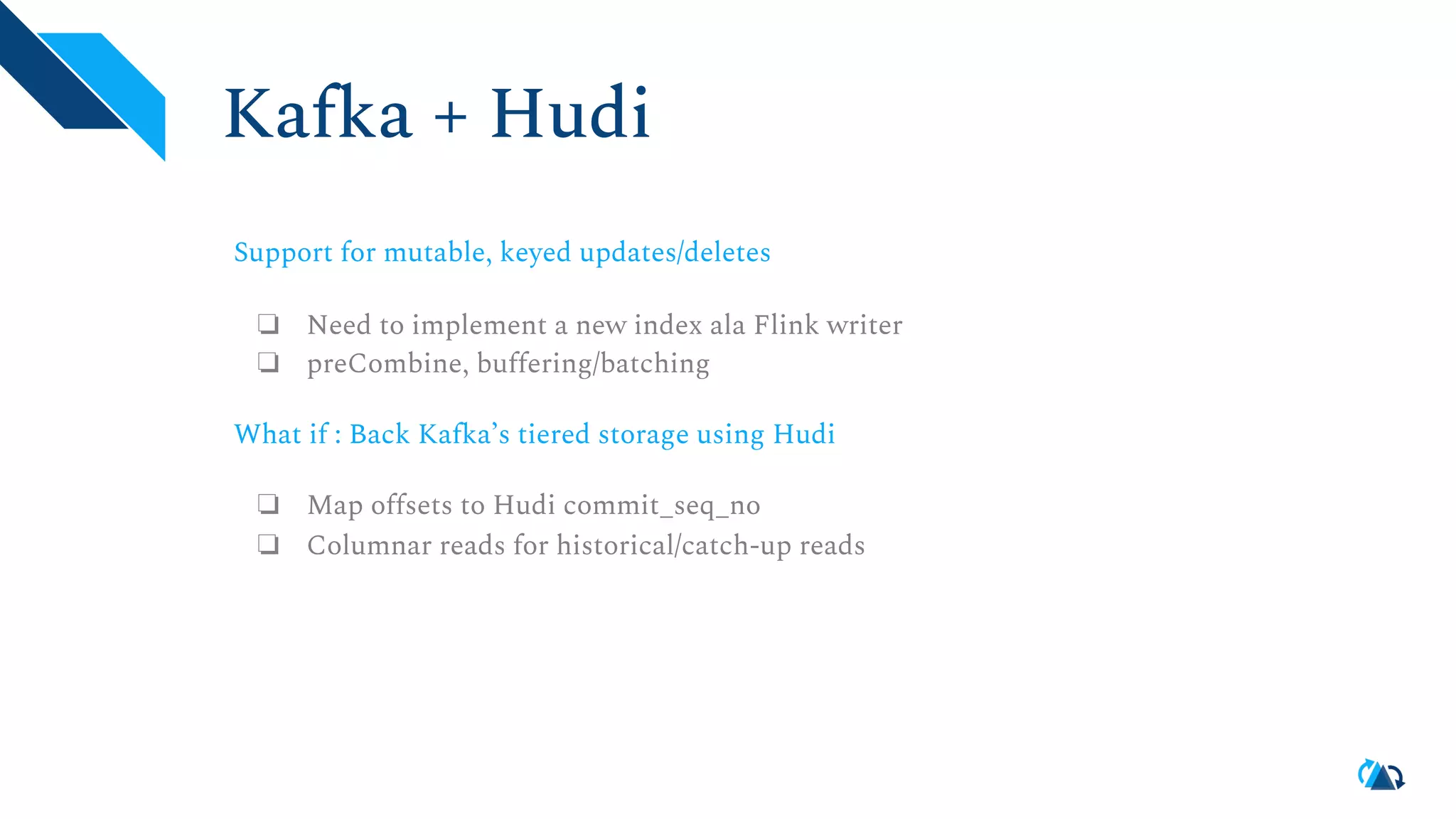

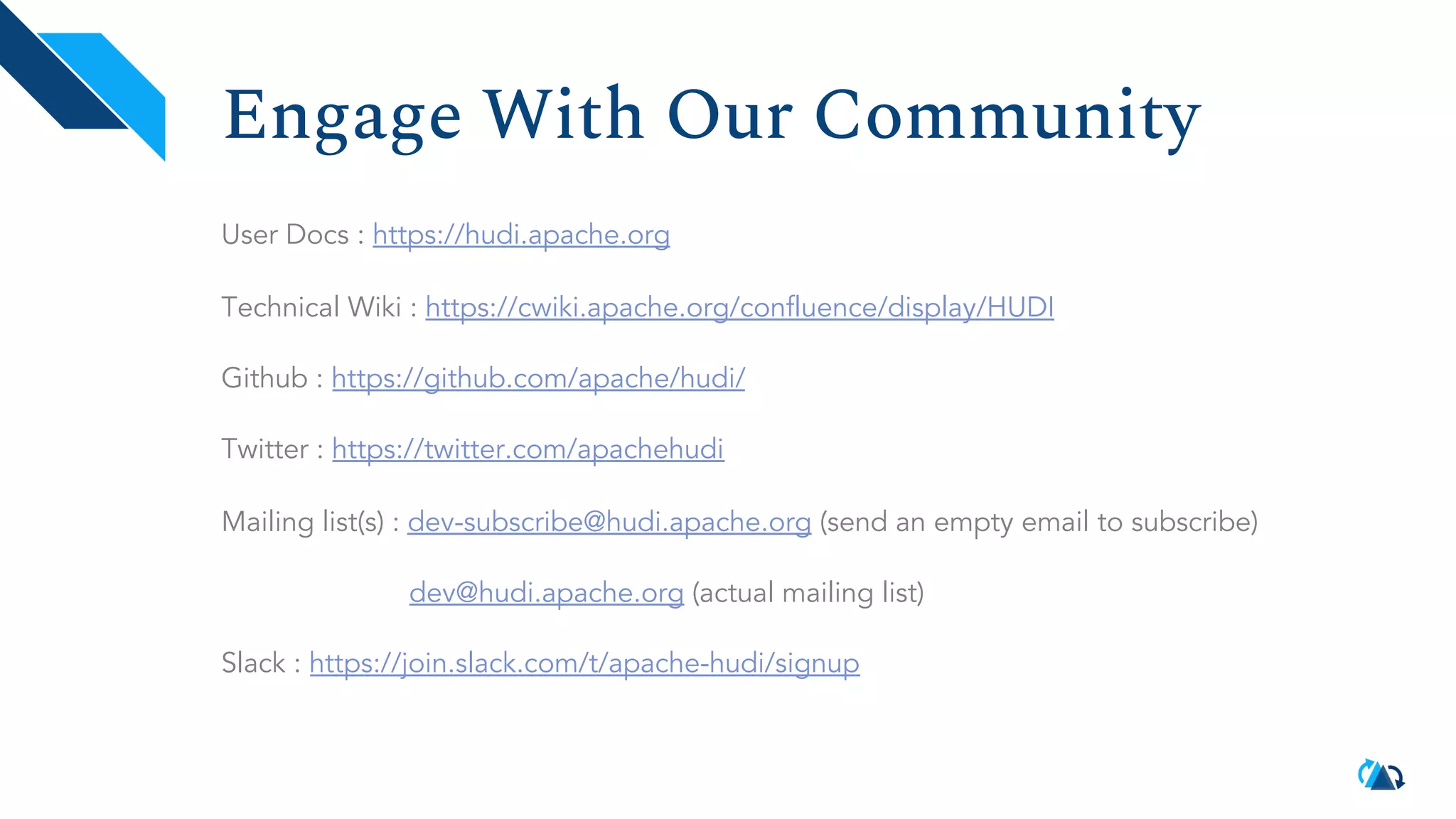

The document discusses the architecture and implementation of streaming data lakes built with Kafka Connect and Apache Hudi. It covers why data lakes matter for modern analytics, how Hudi manages data streams and transactional writes and what advantages that brings, and a case study of Robinhood's data lake. It also presents key features, usage examples, and community channels to help teams adopt Hudi and integrate it into their streaming infrastructure.