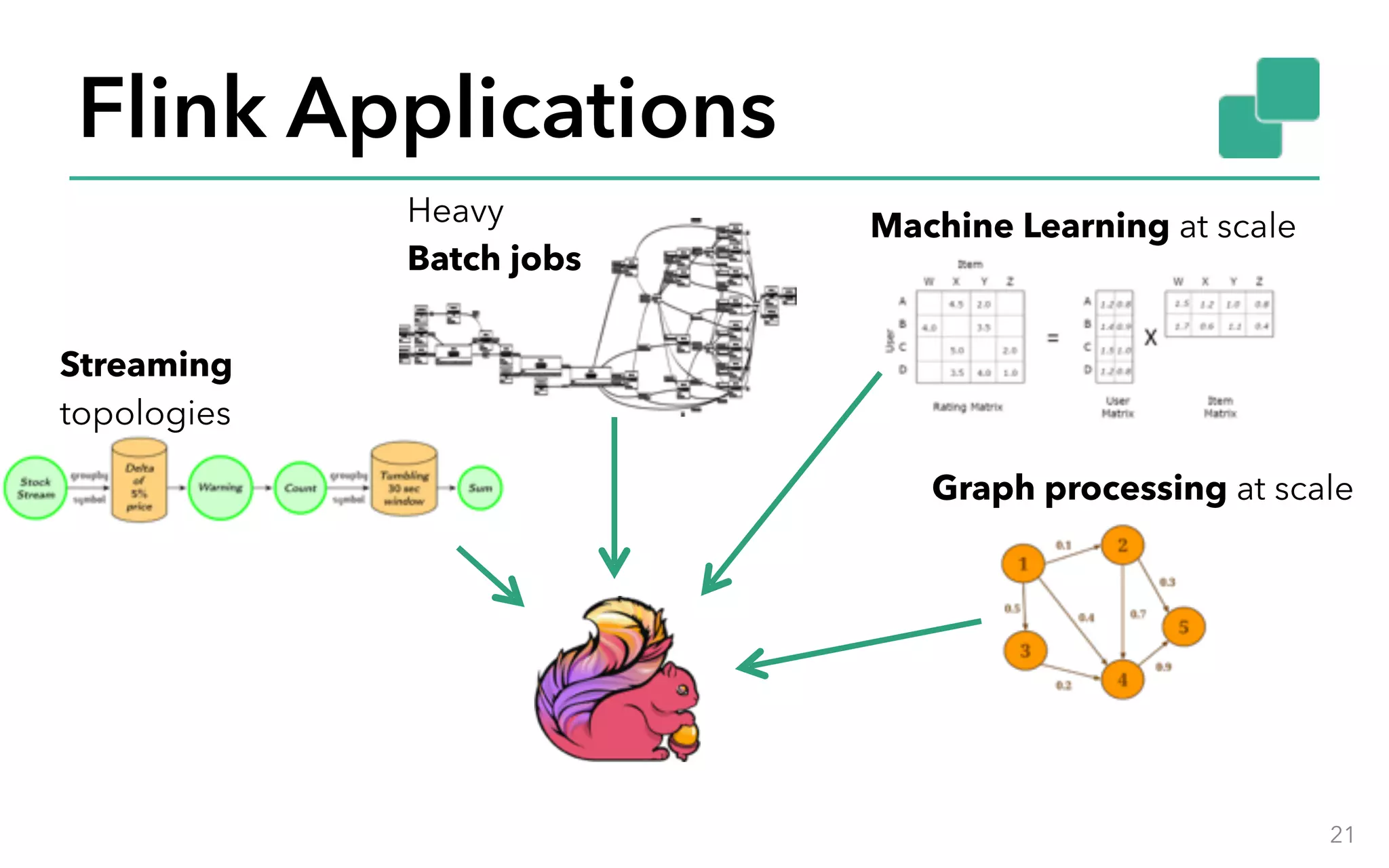

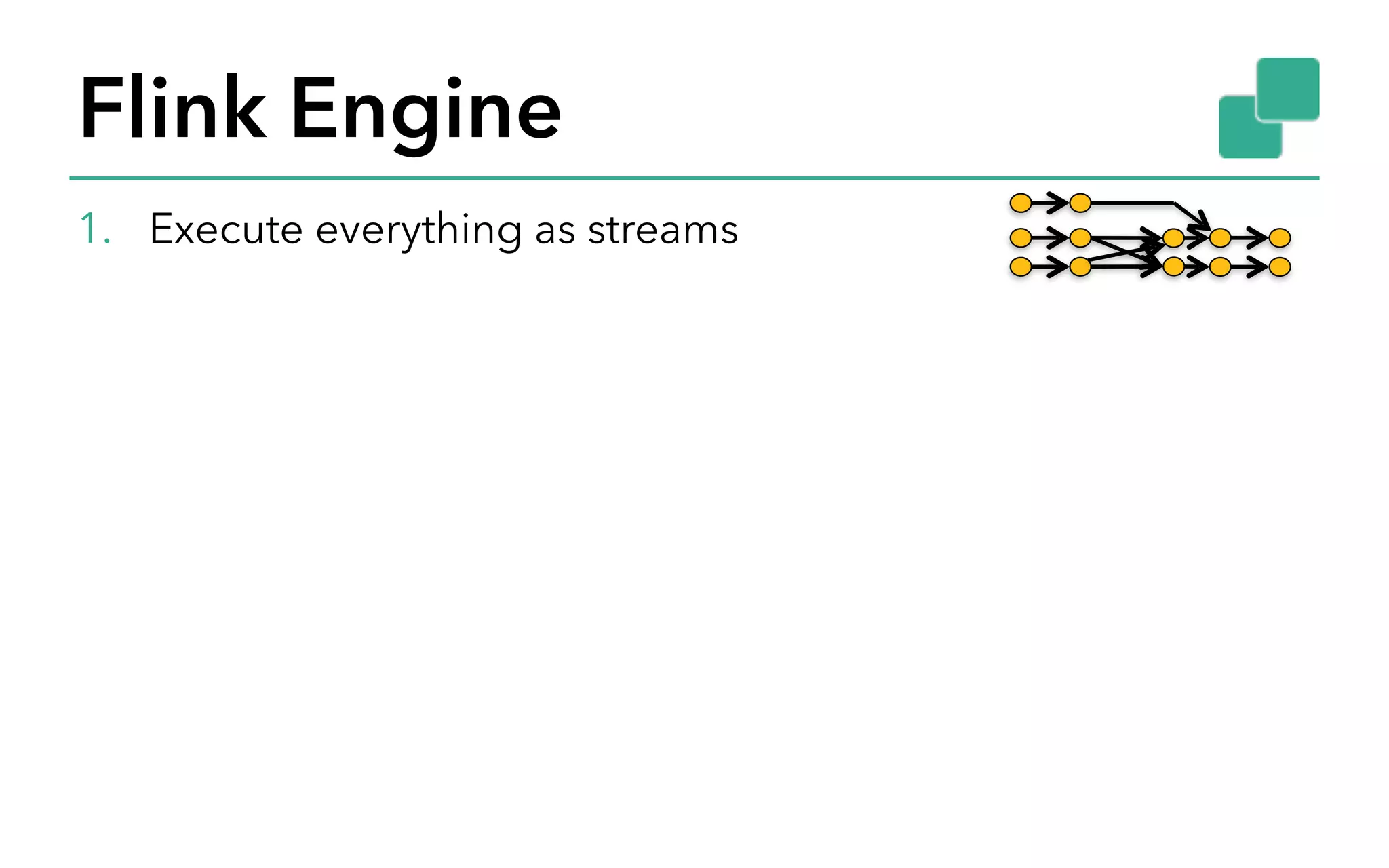

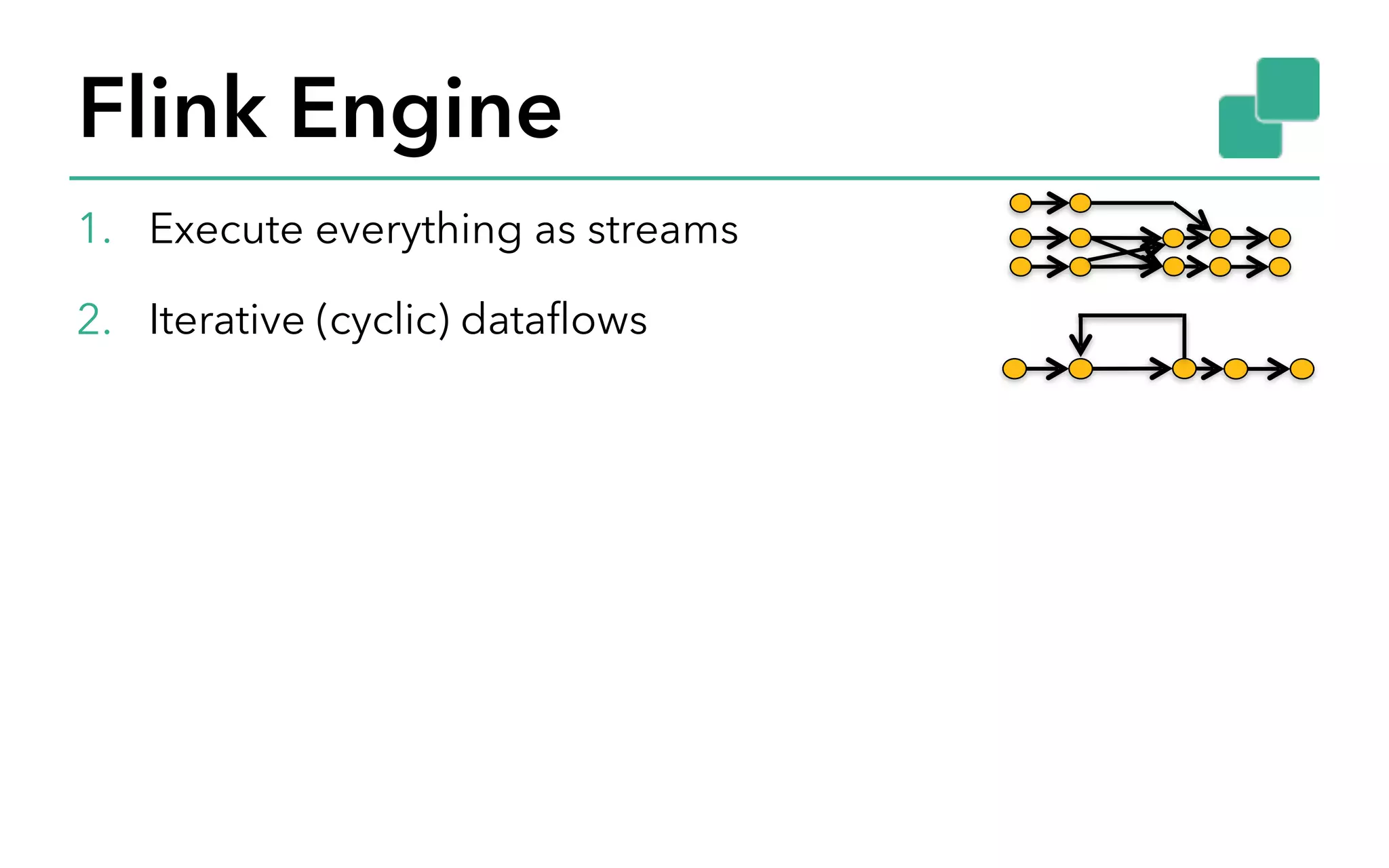

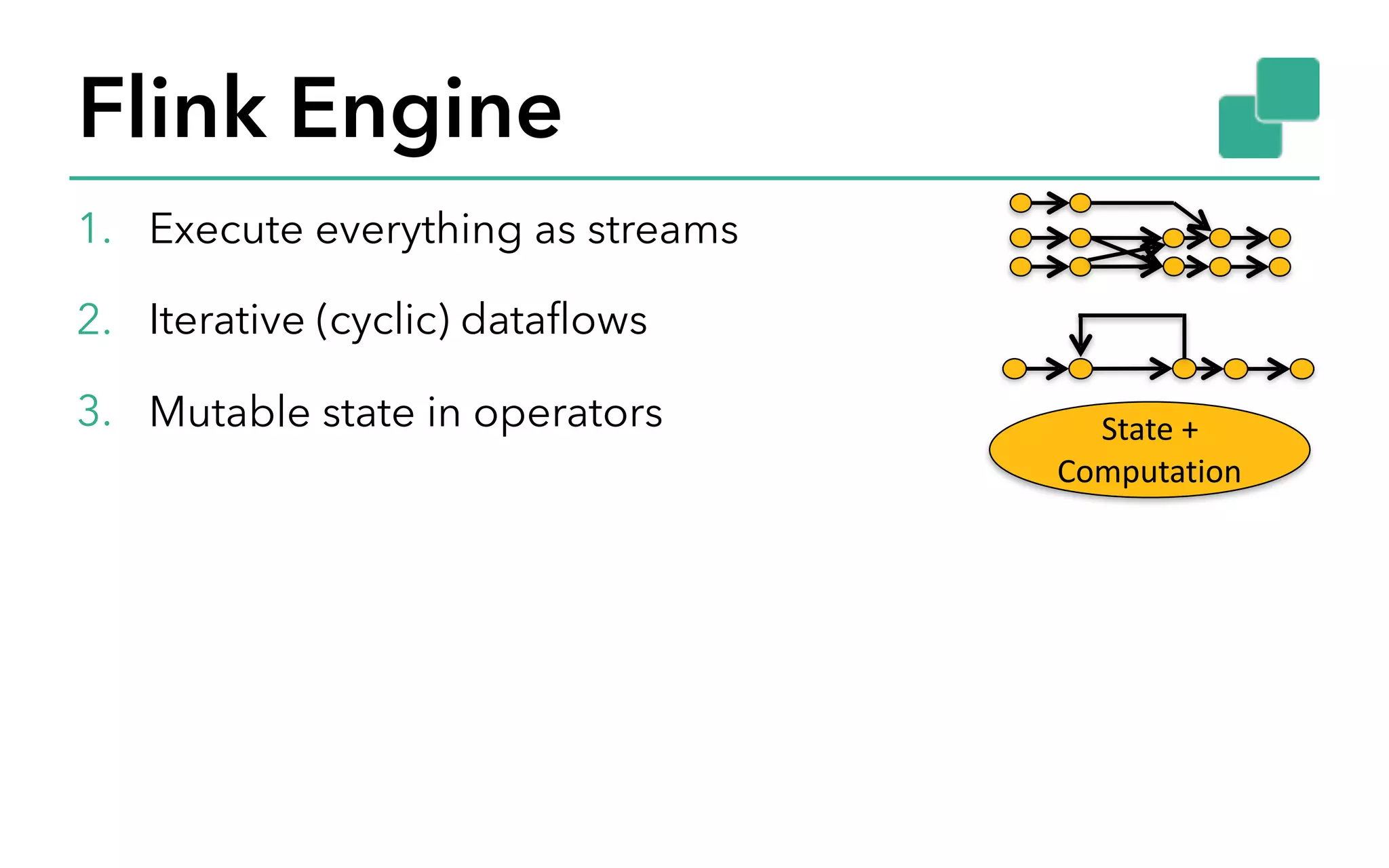

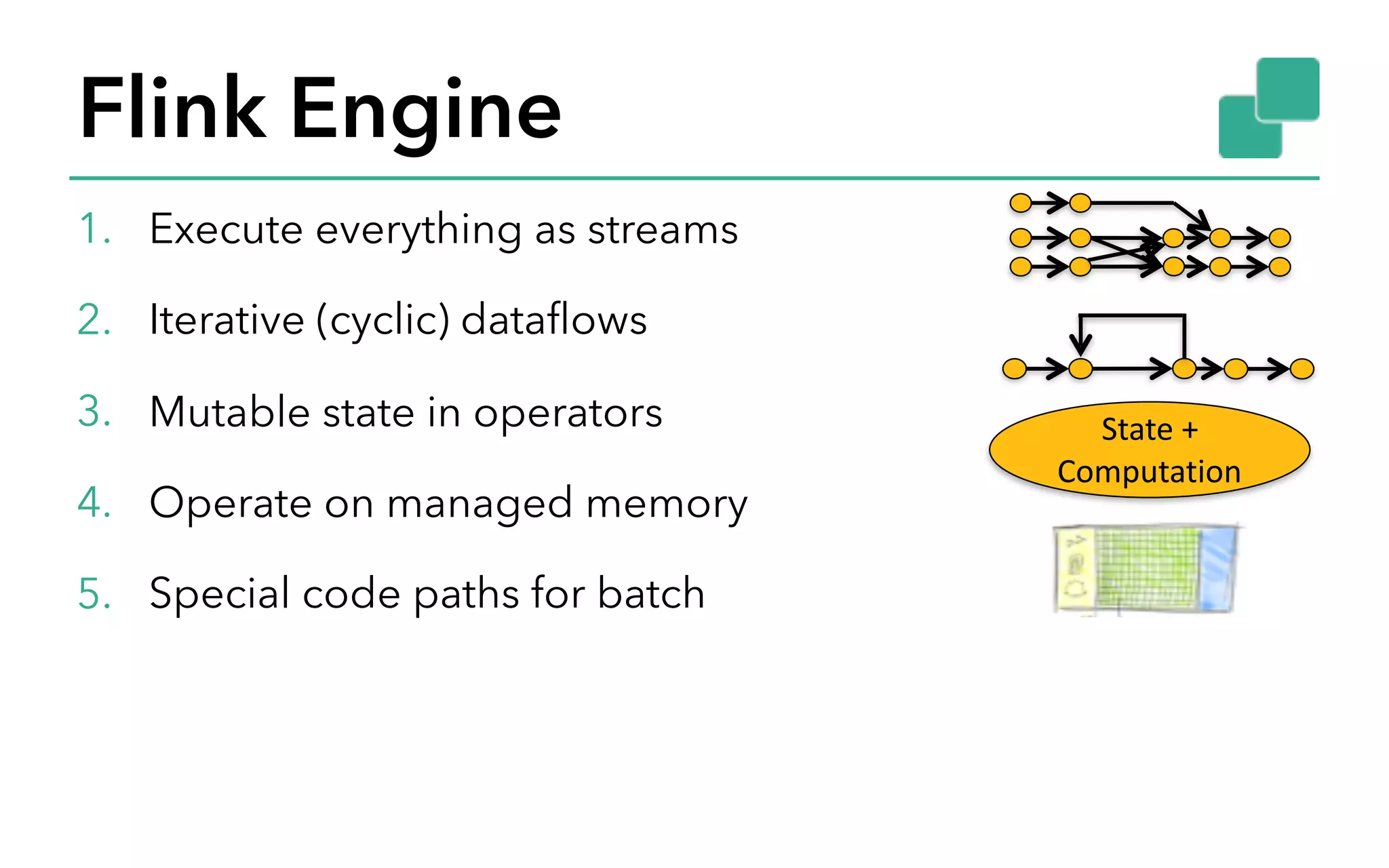

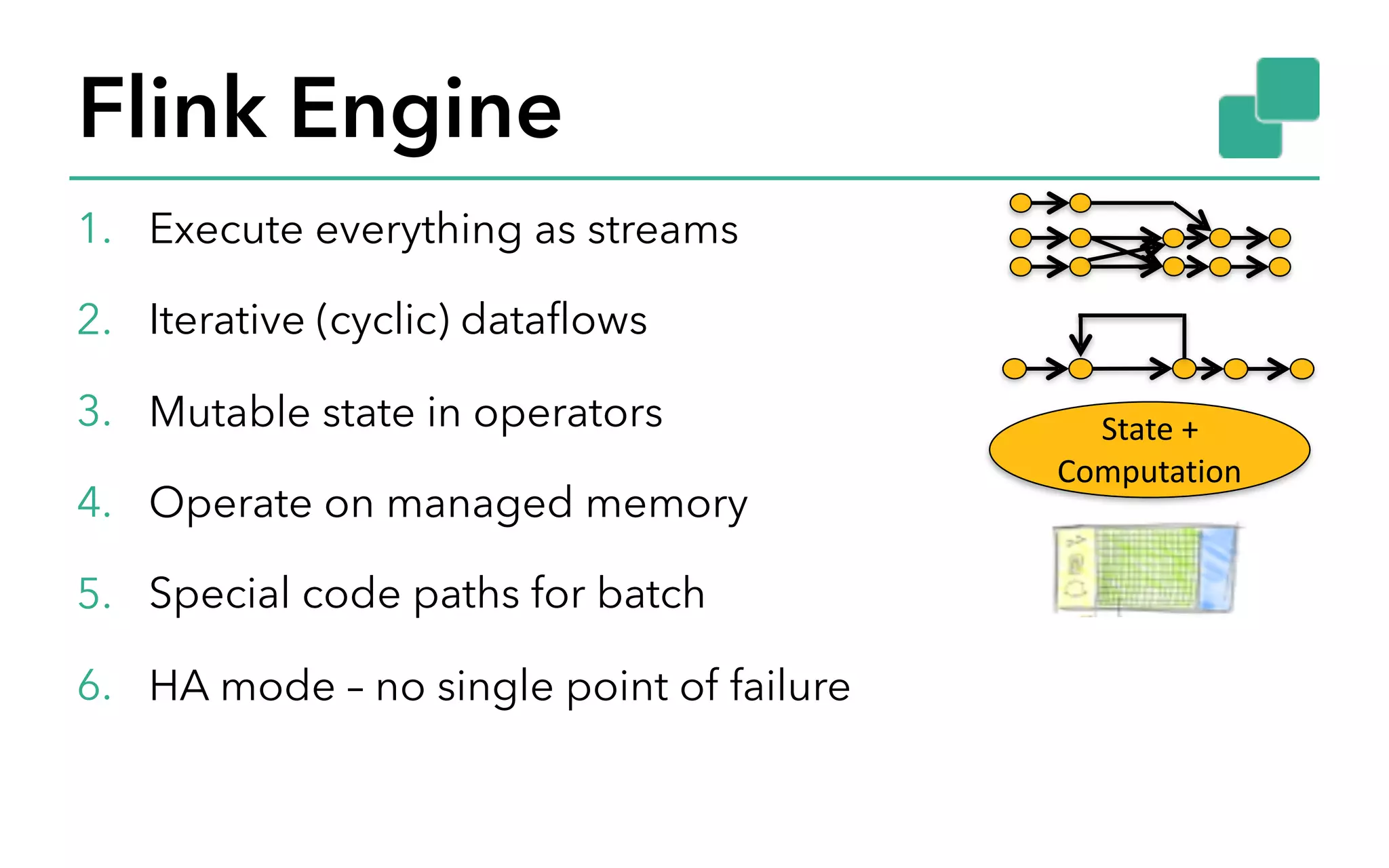

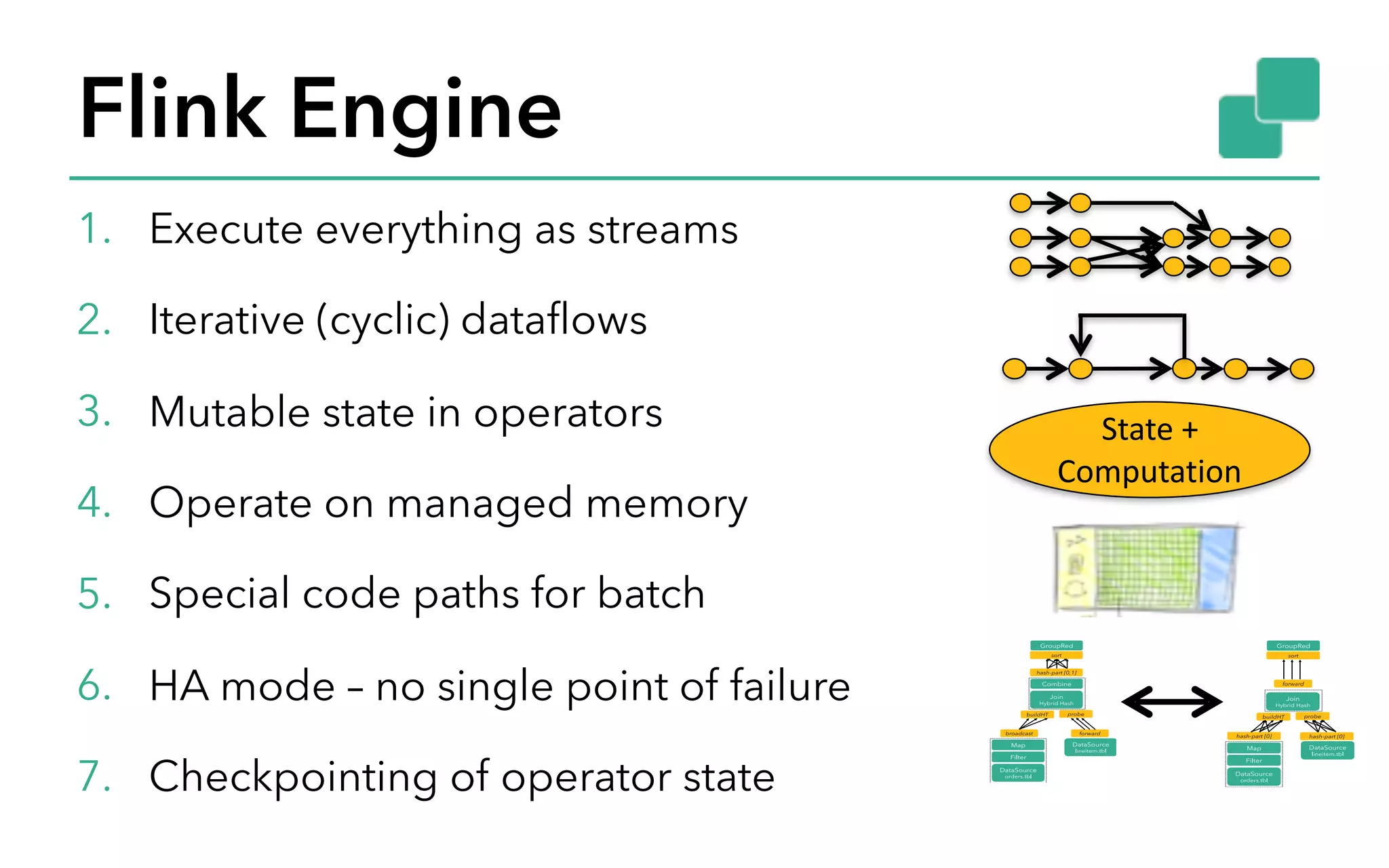

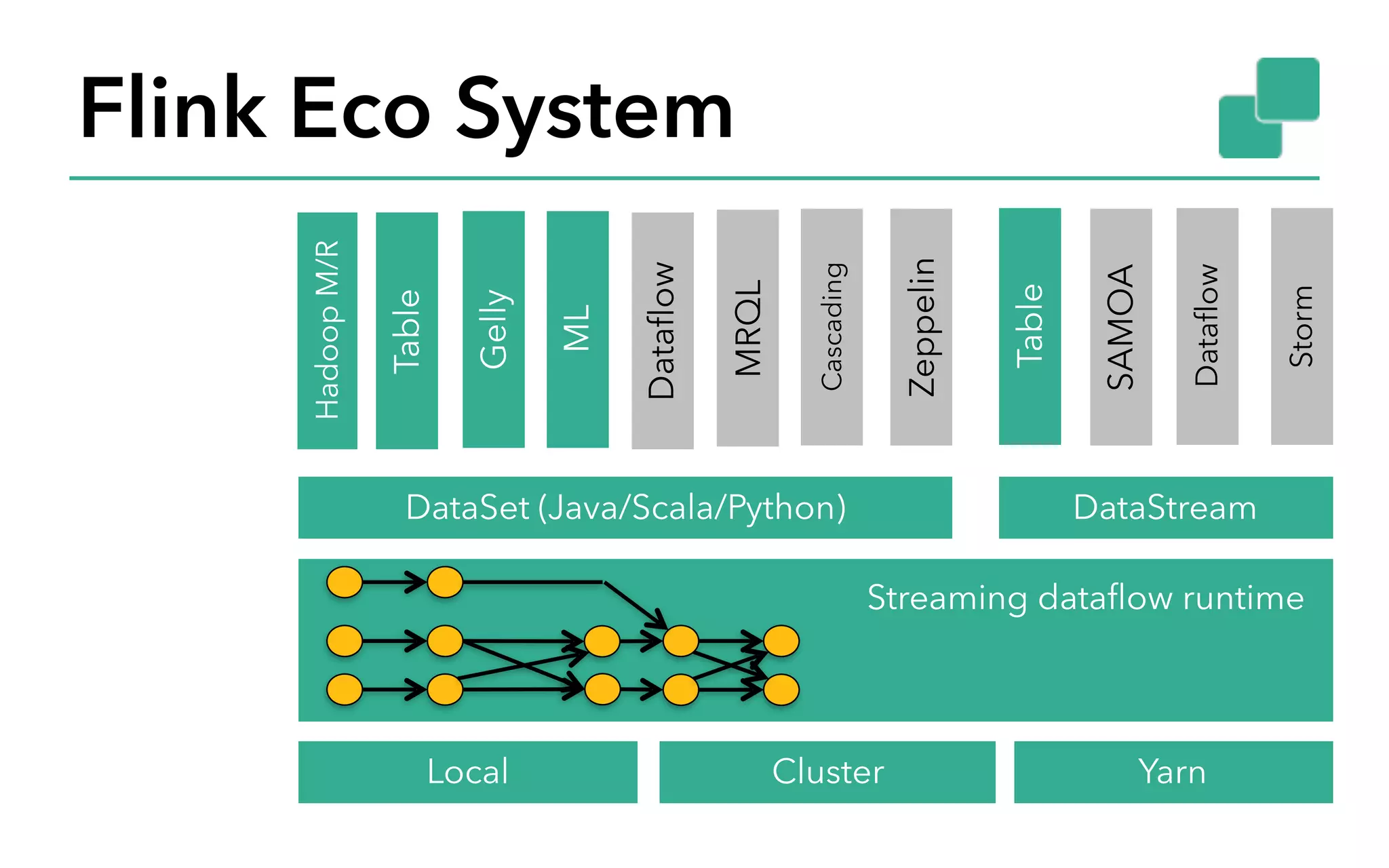

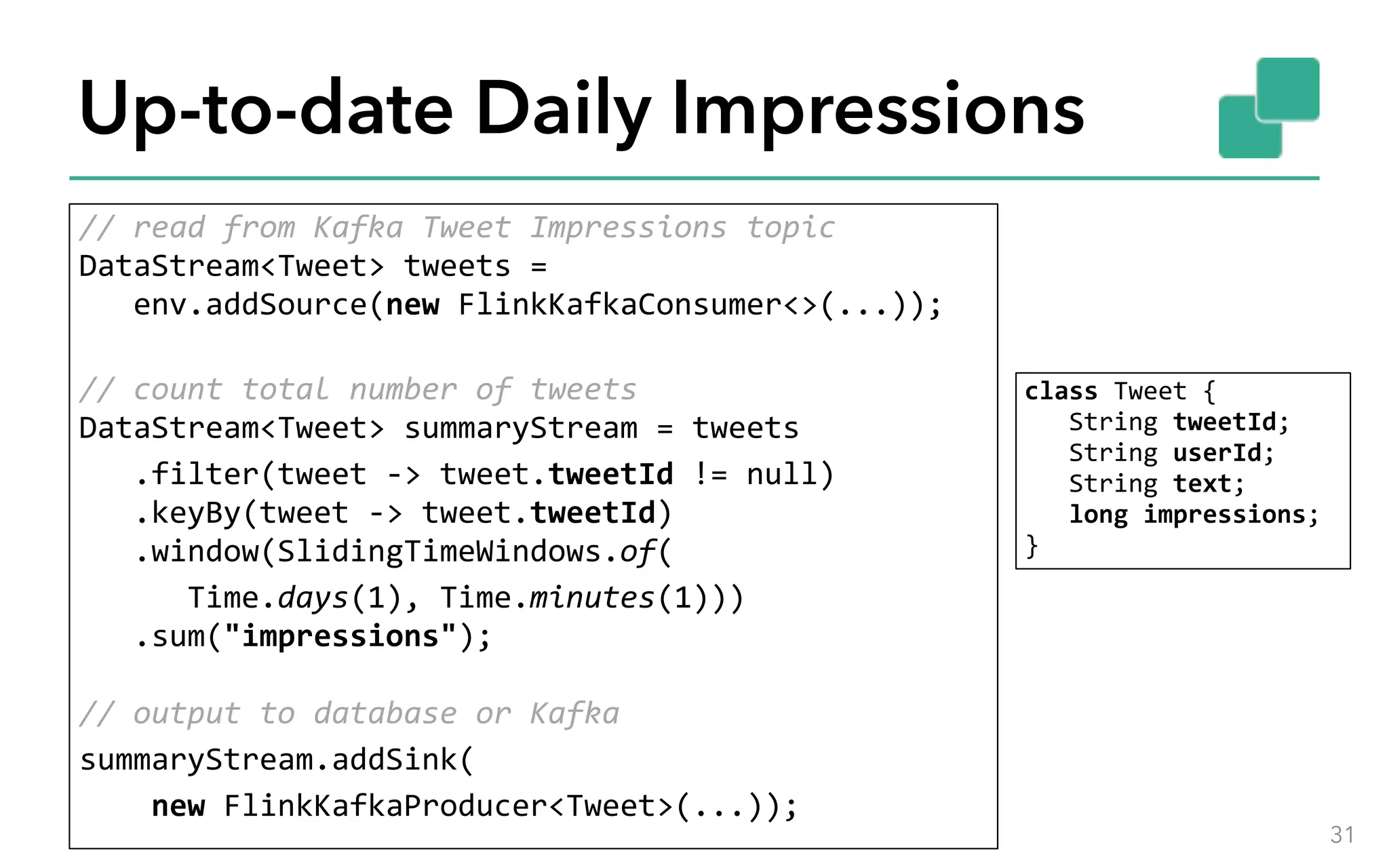

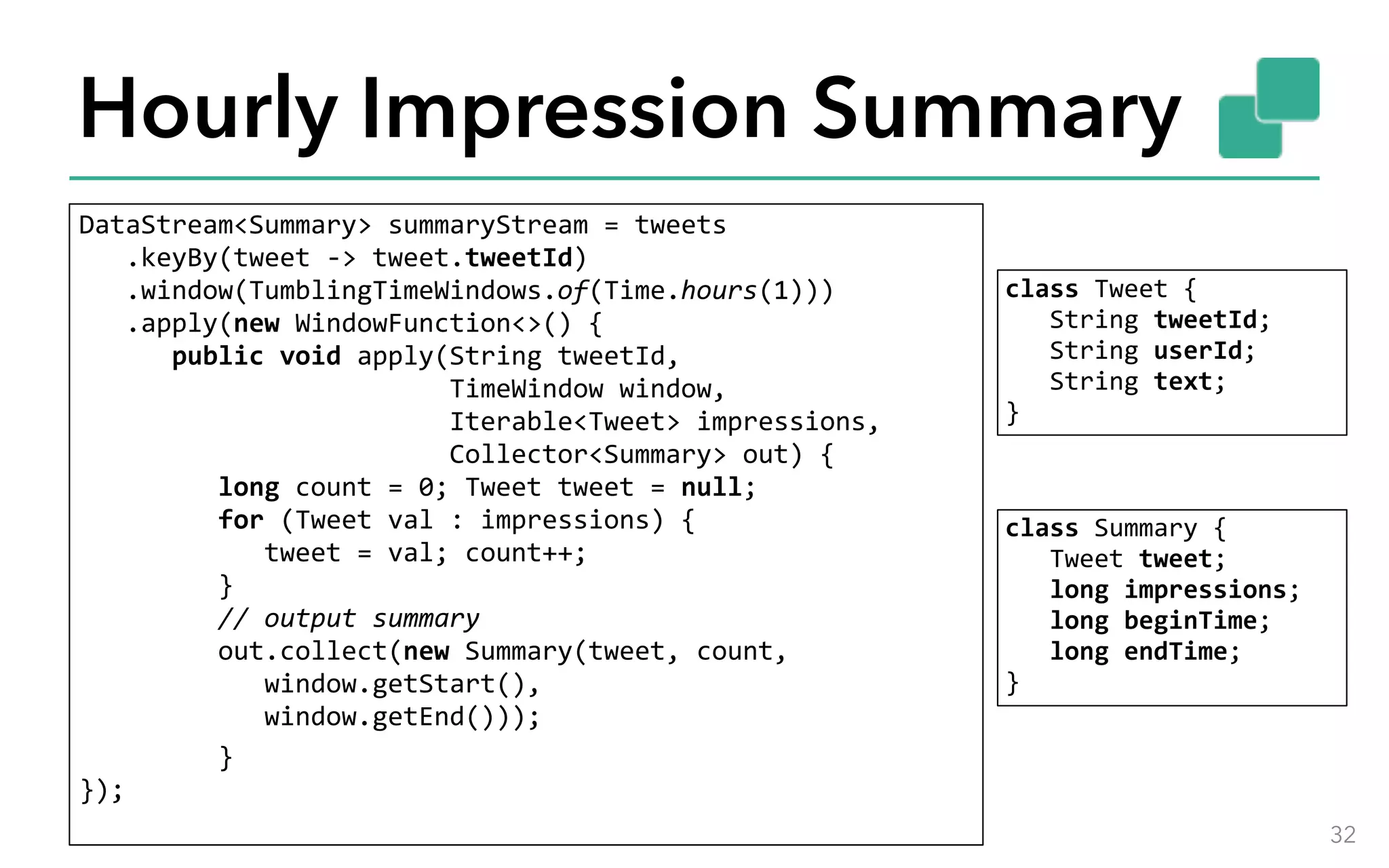

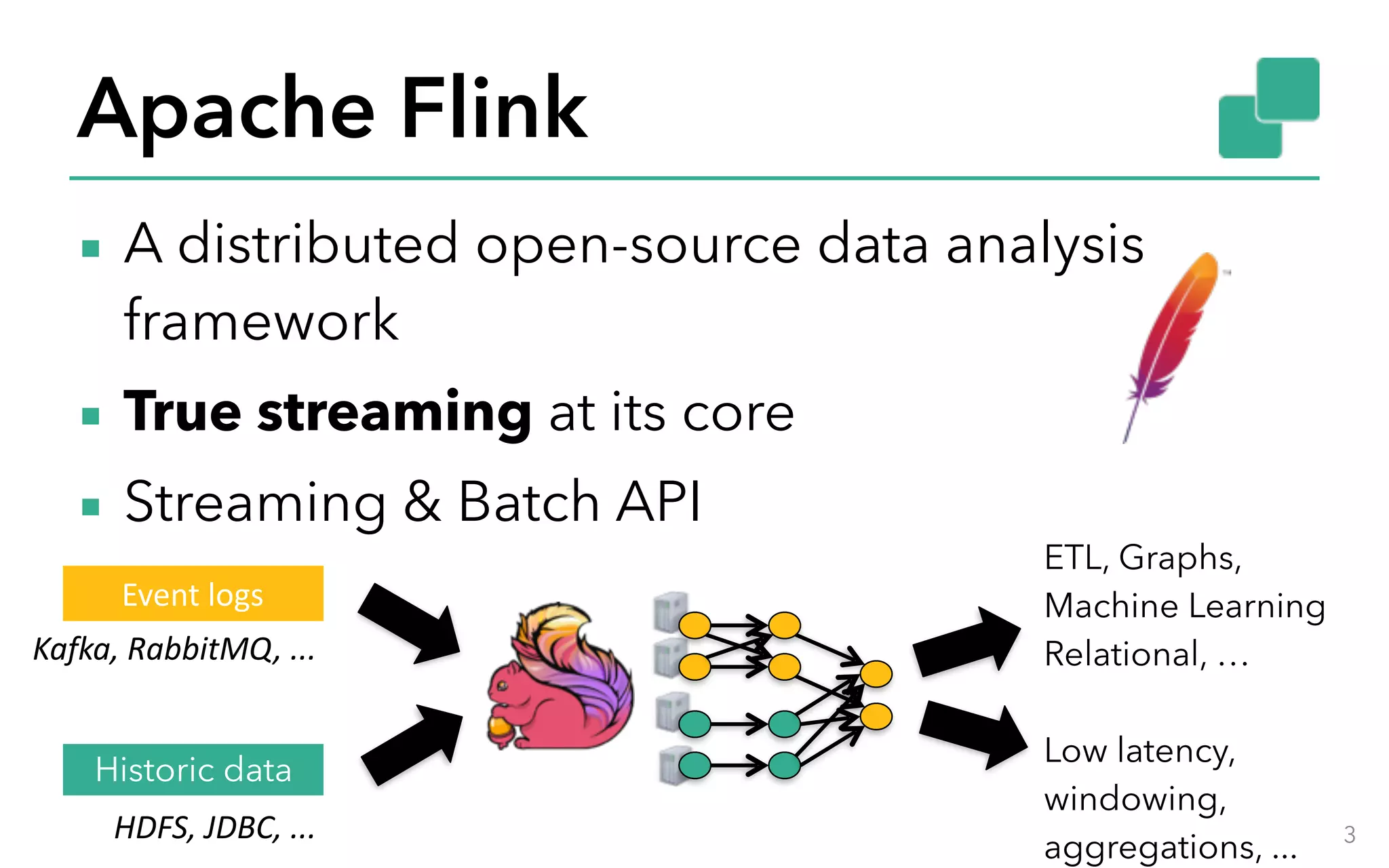

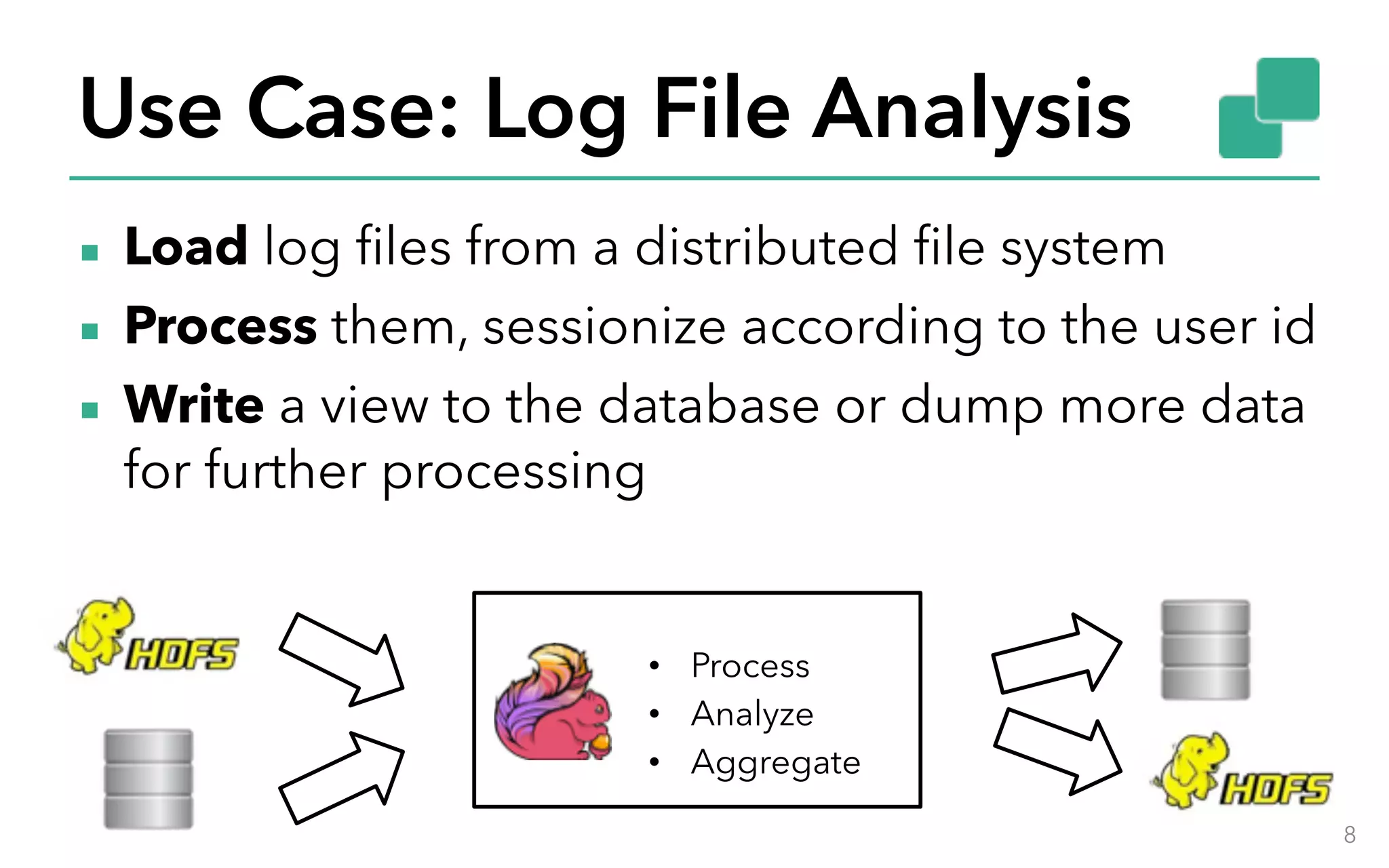

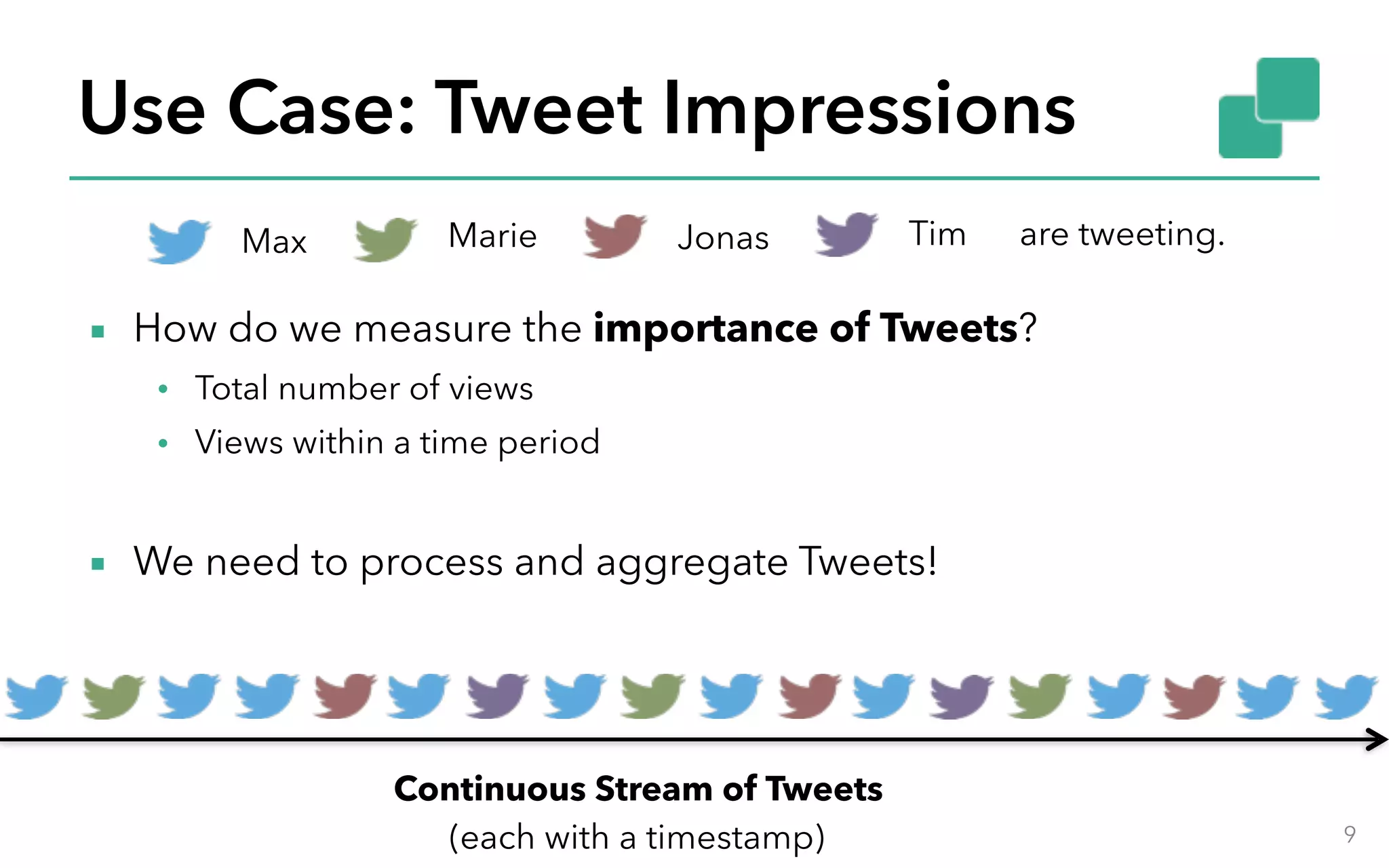

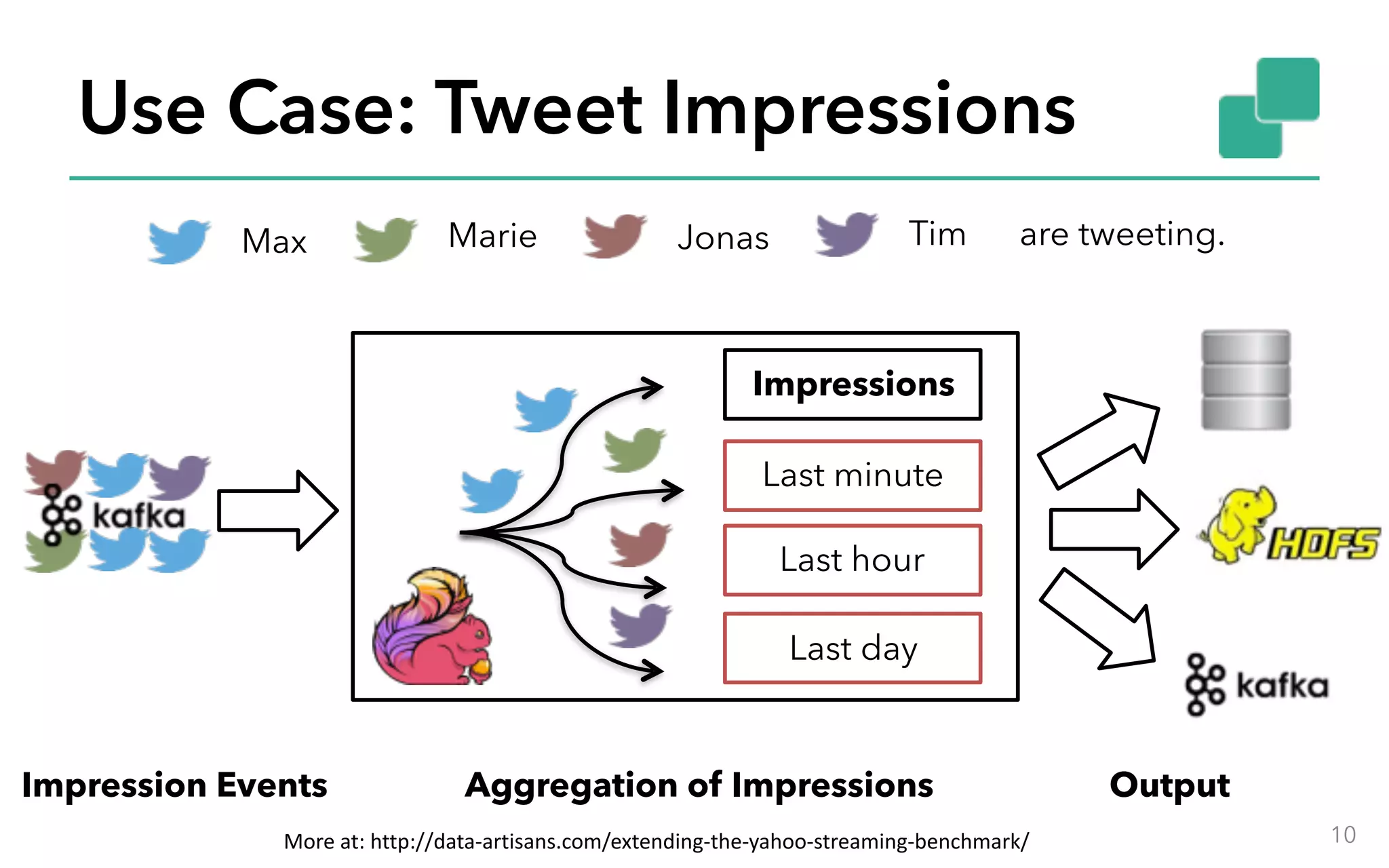

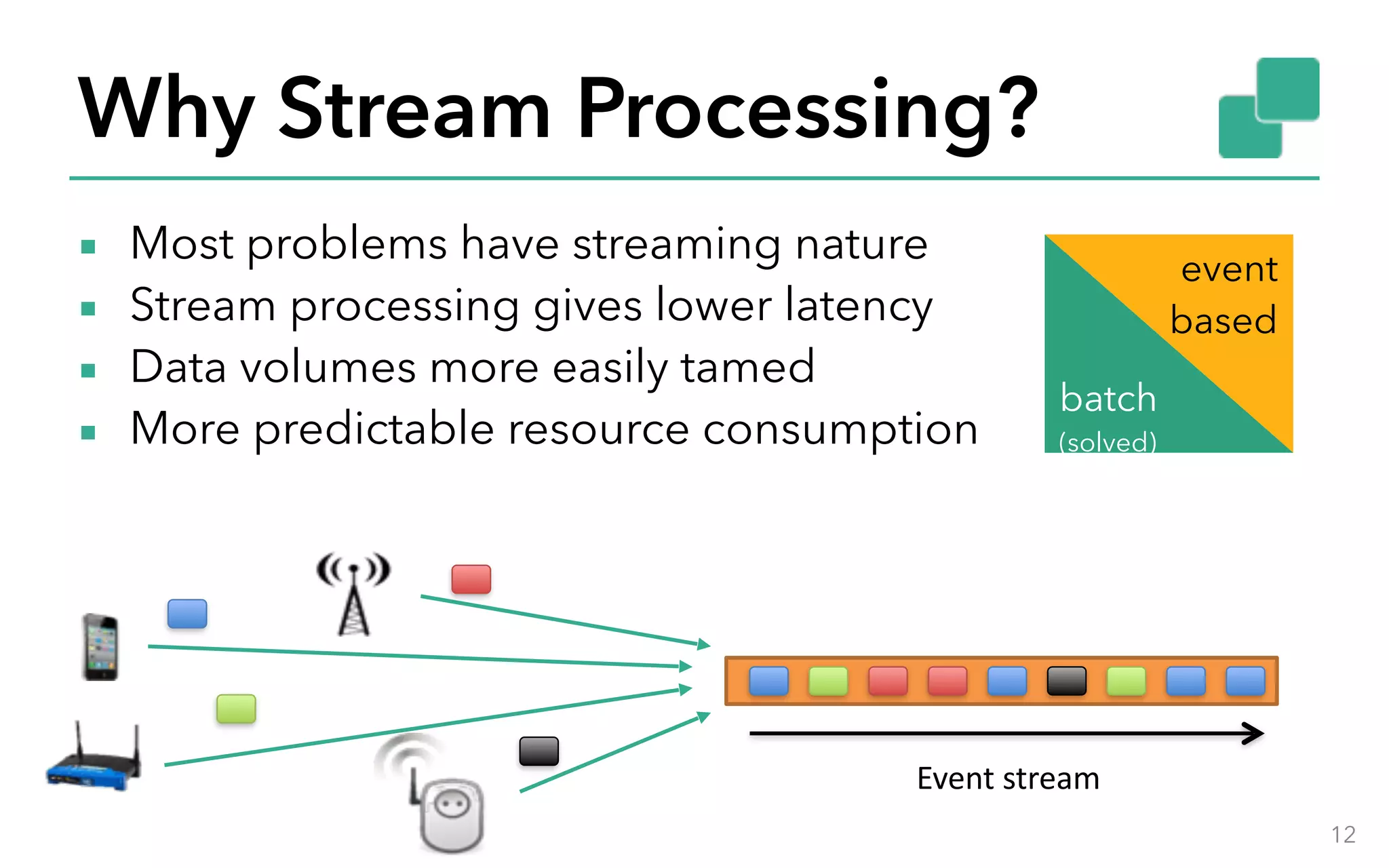

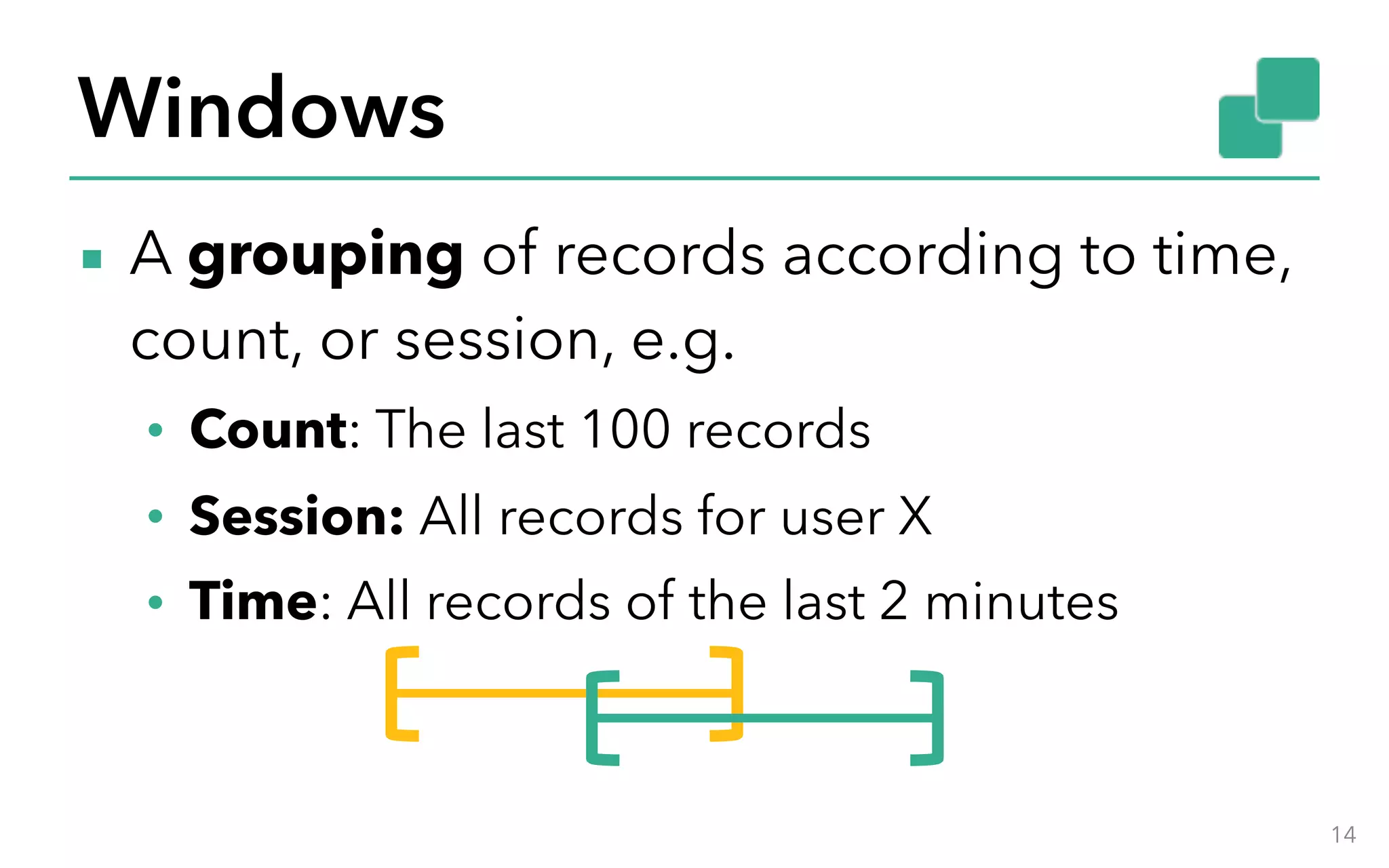

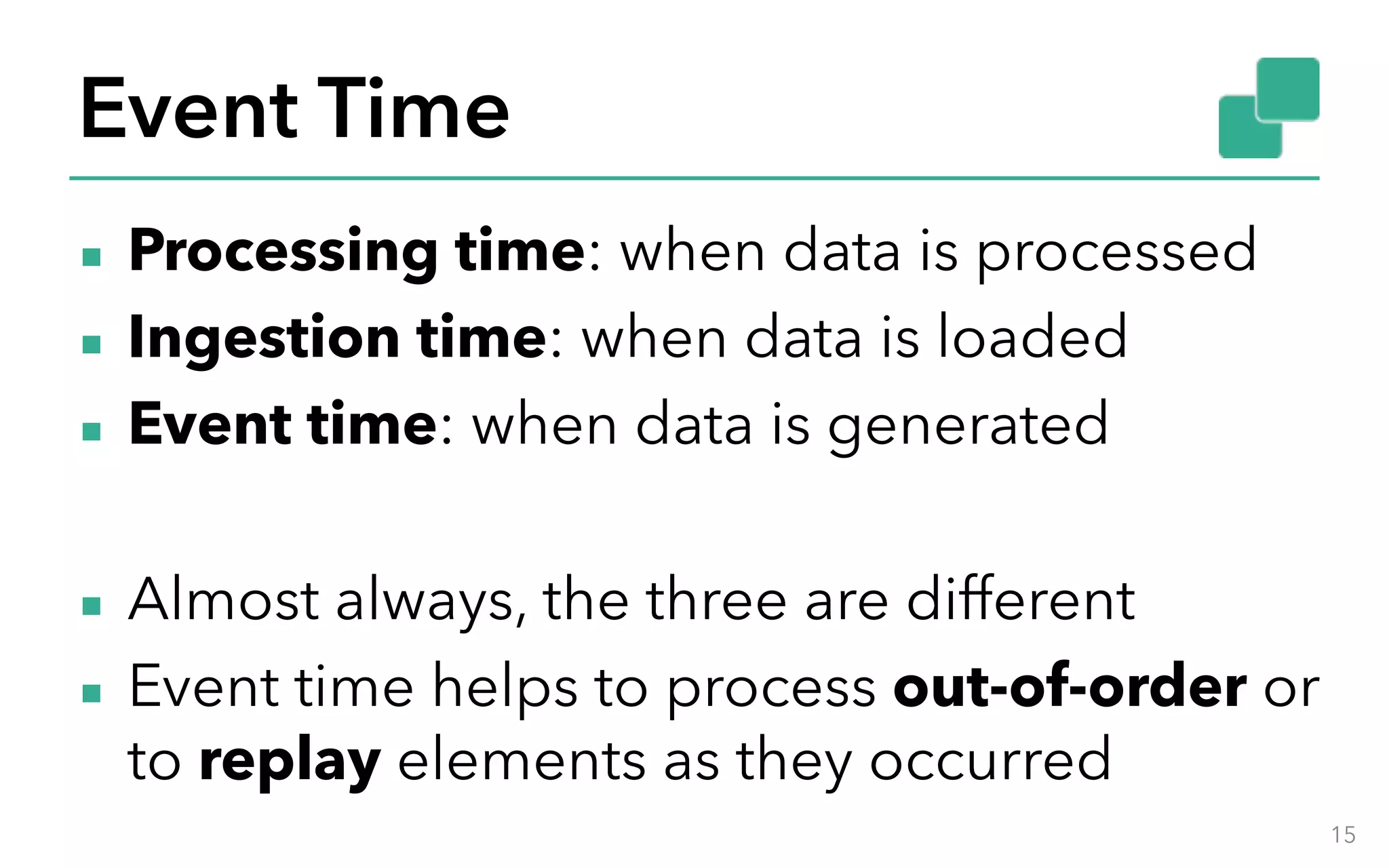

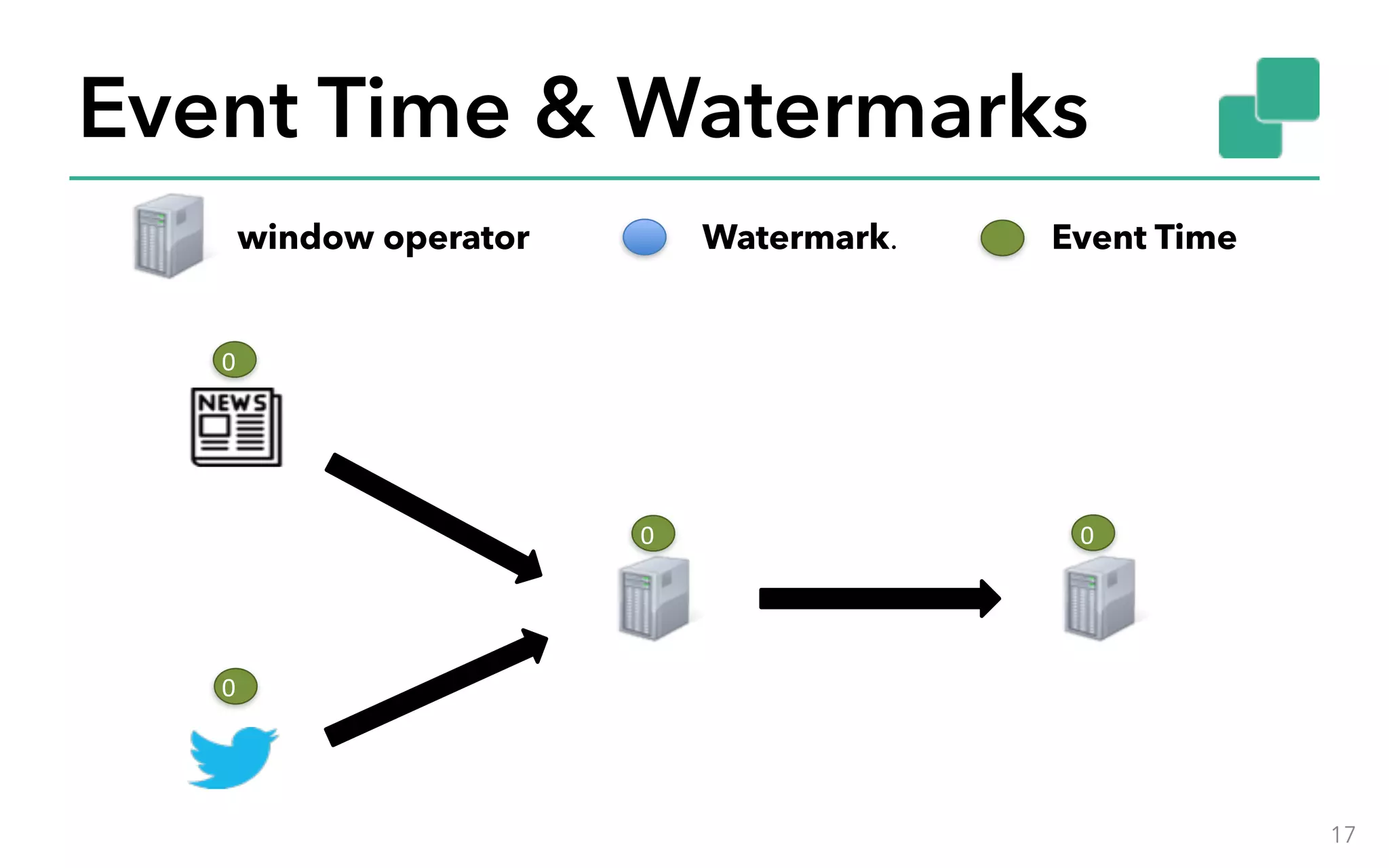

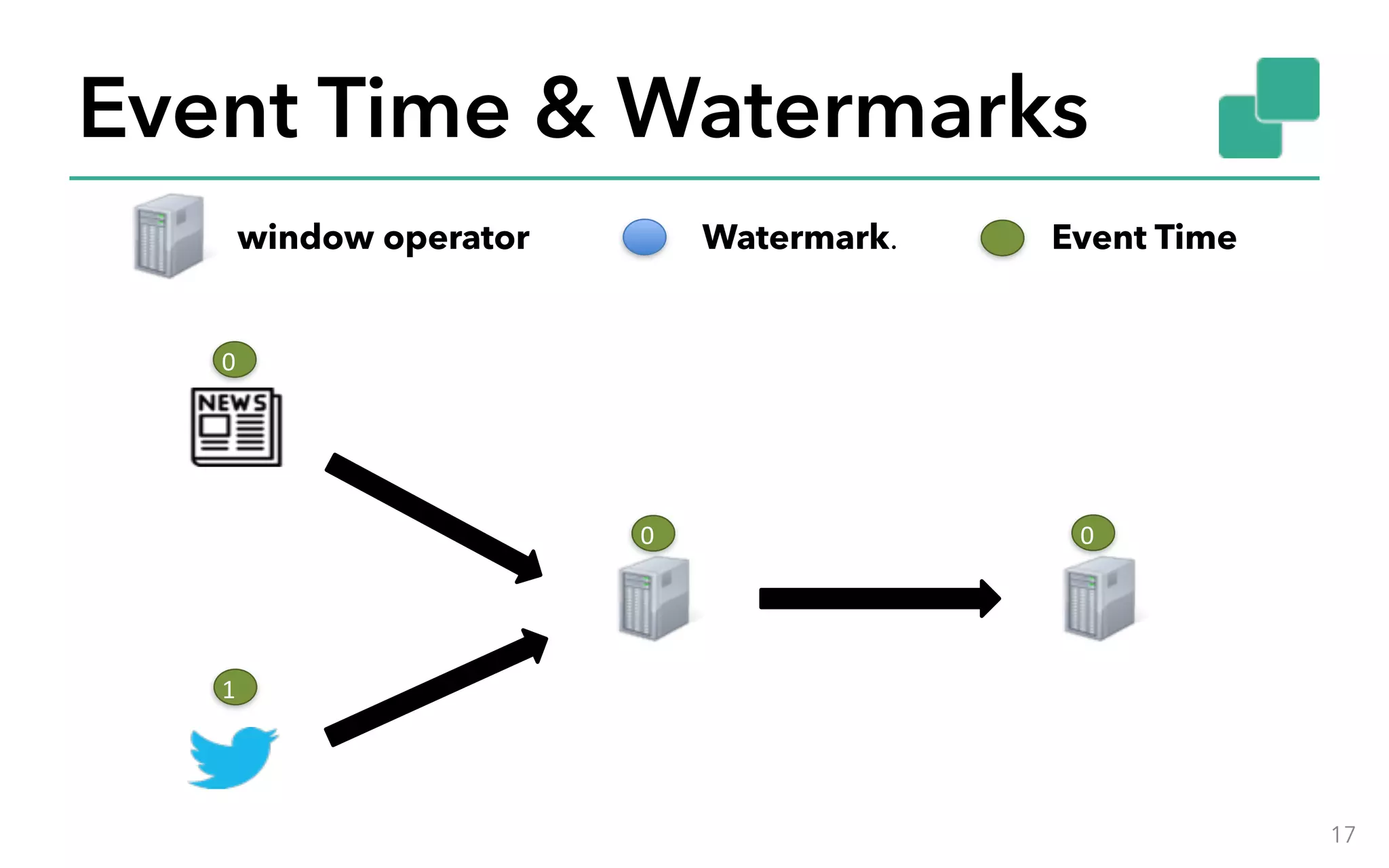

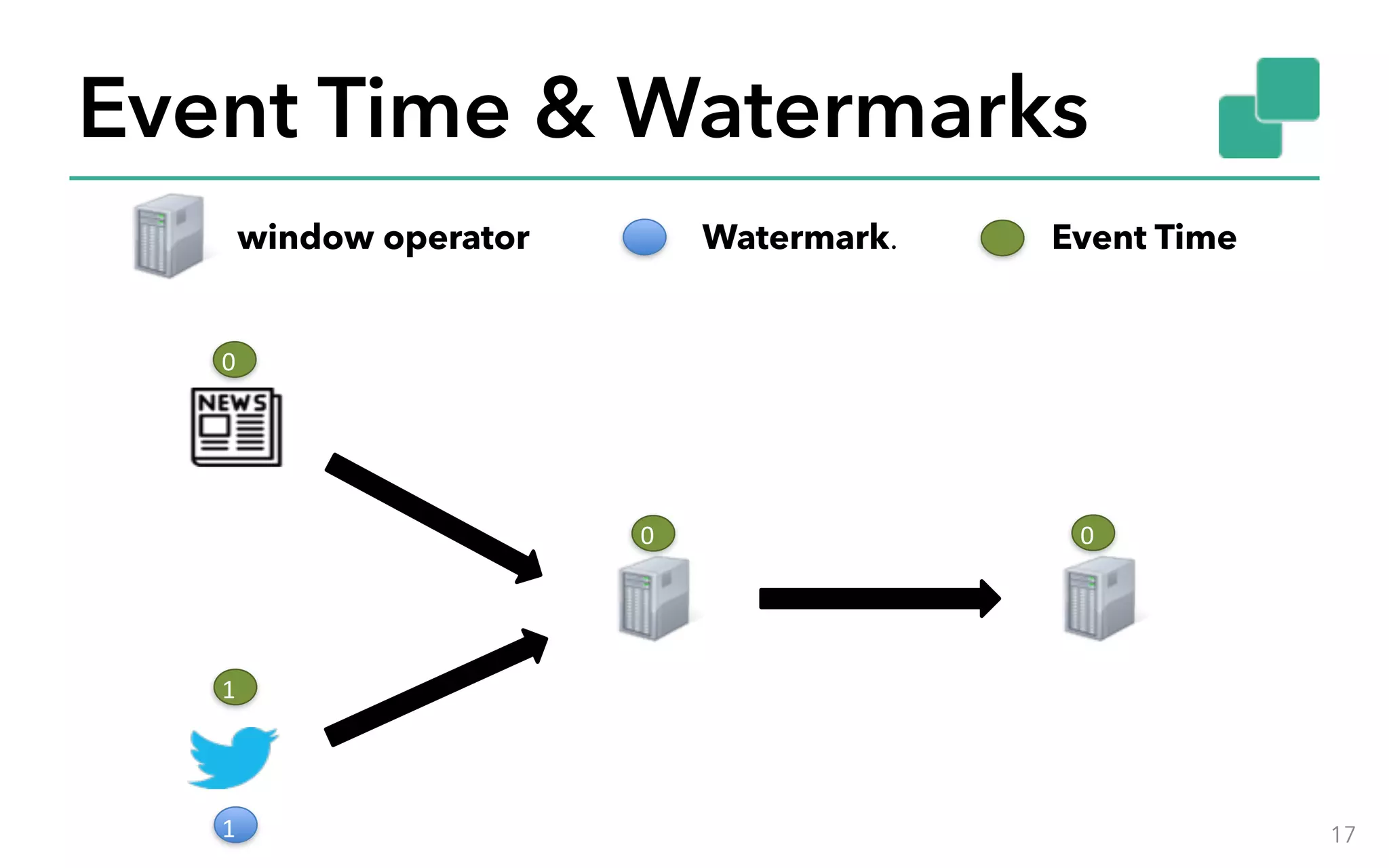

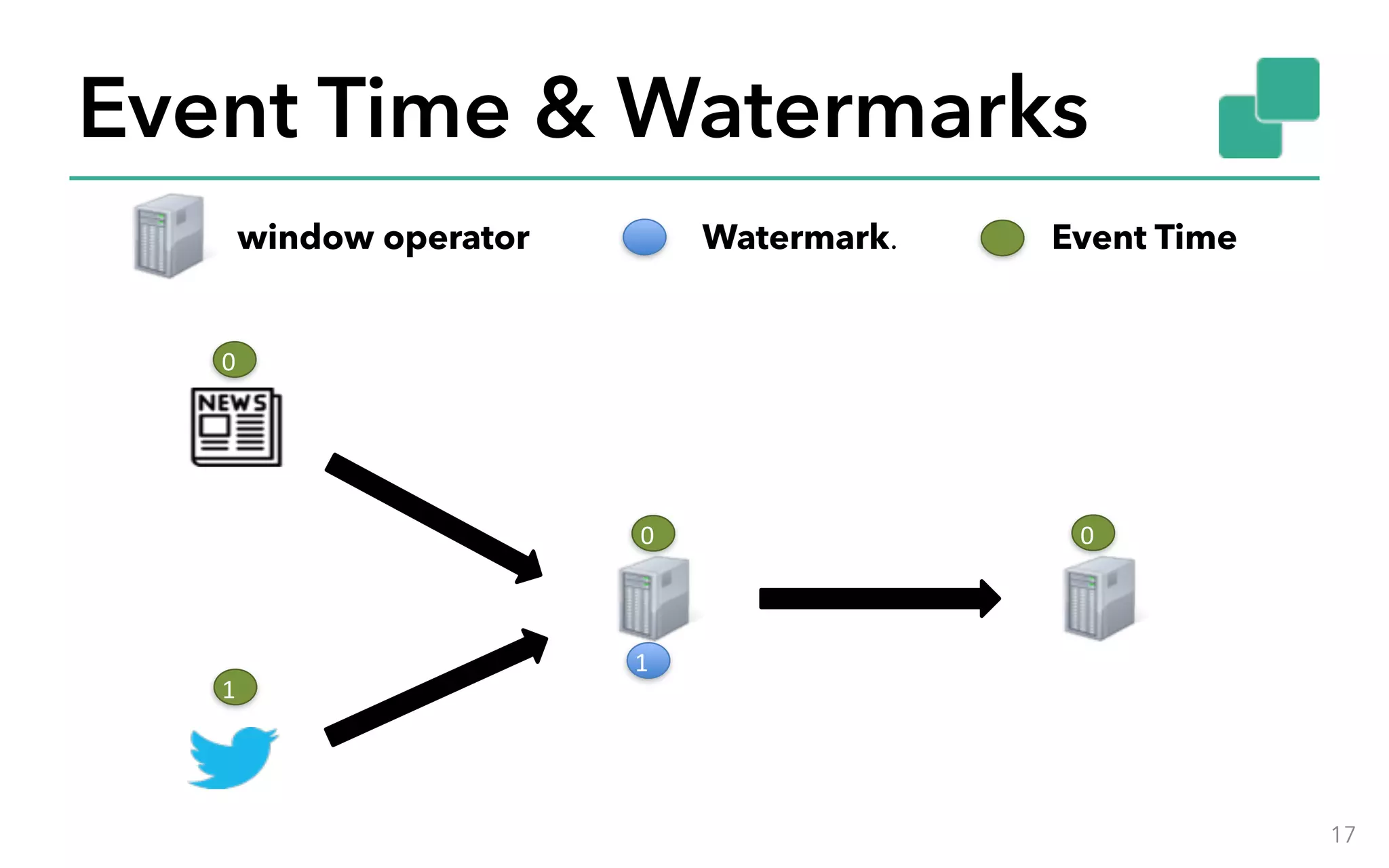

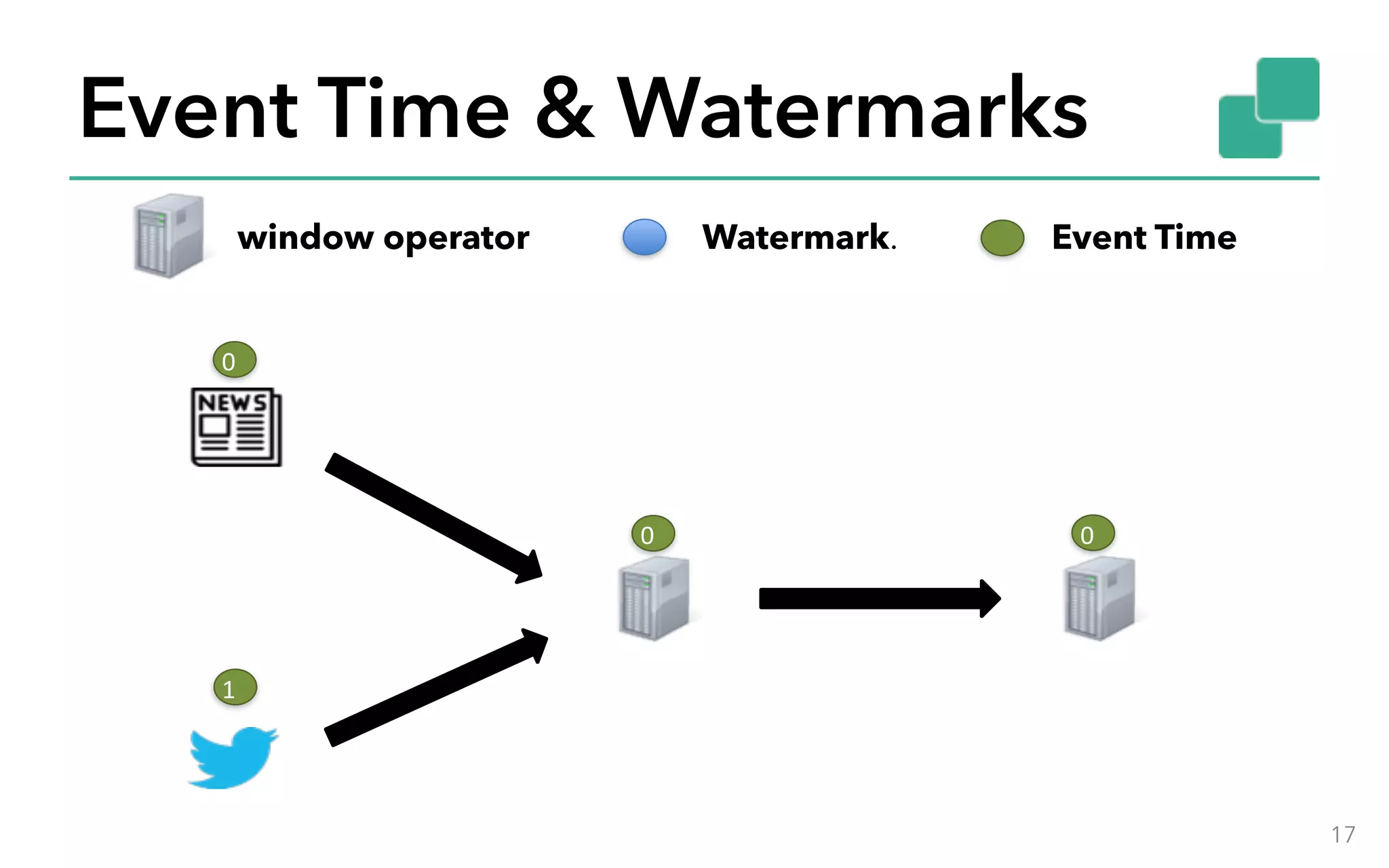

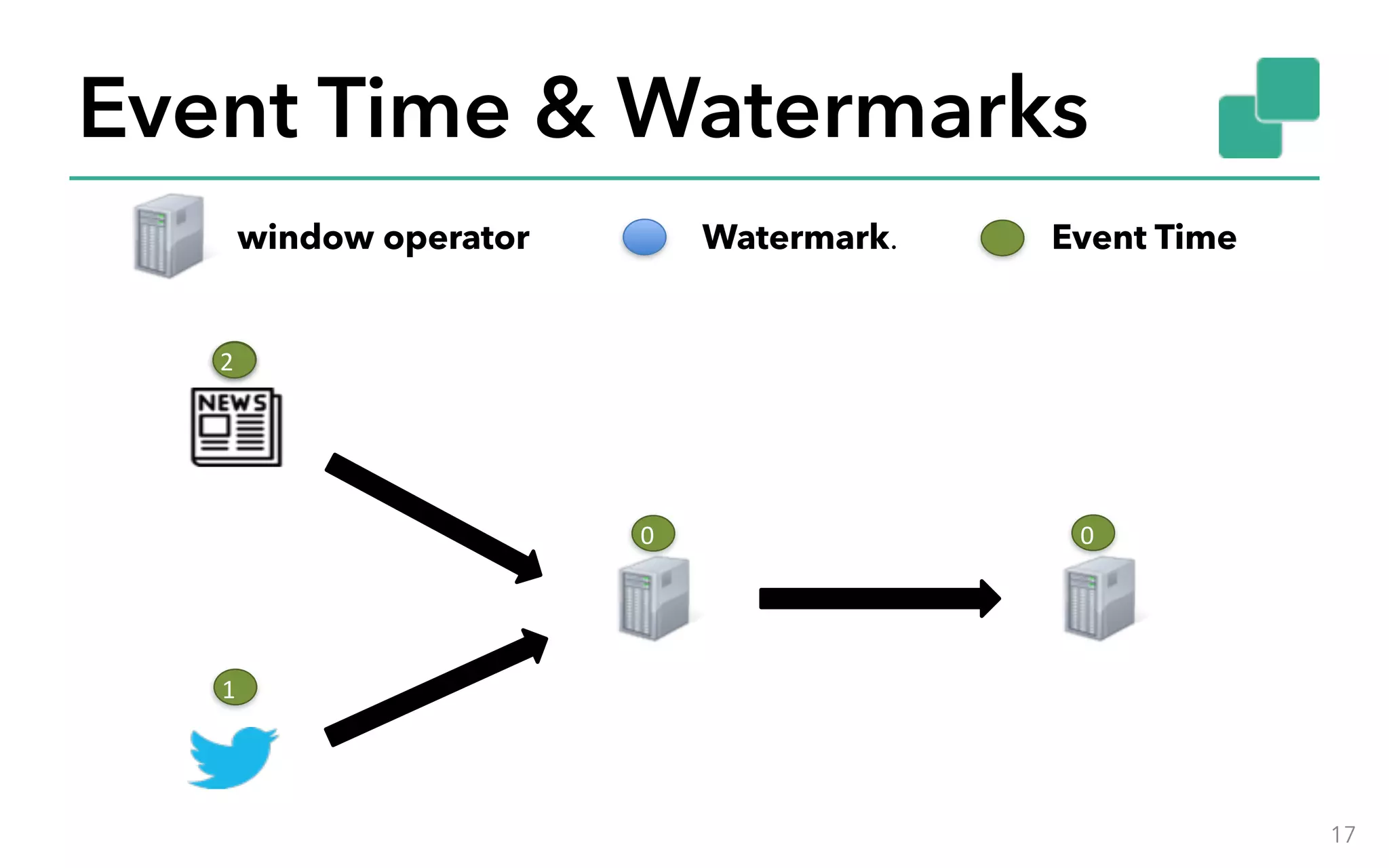

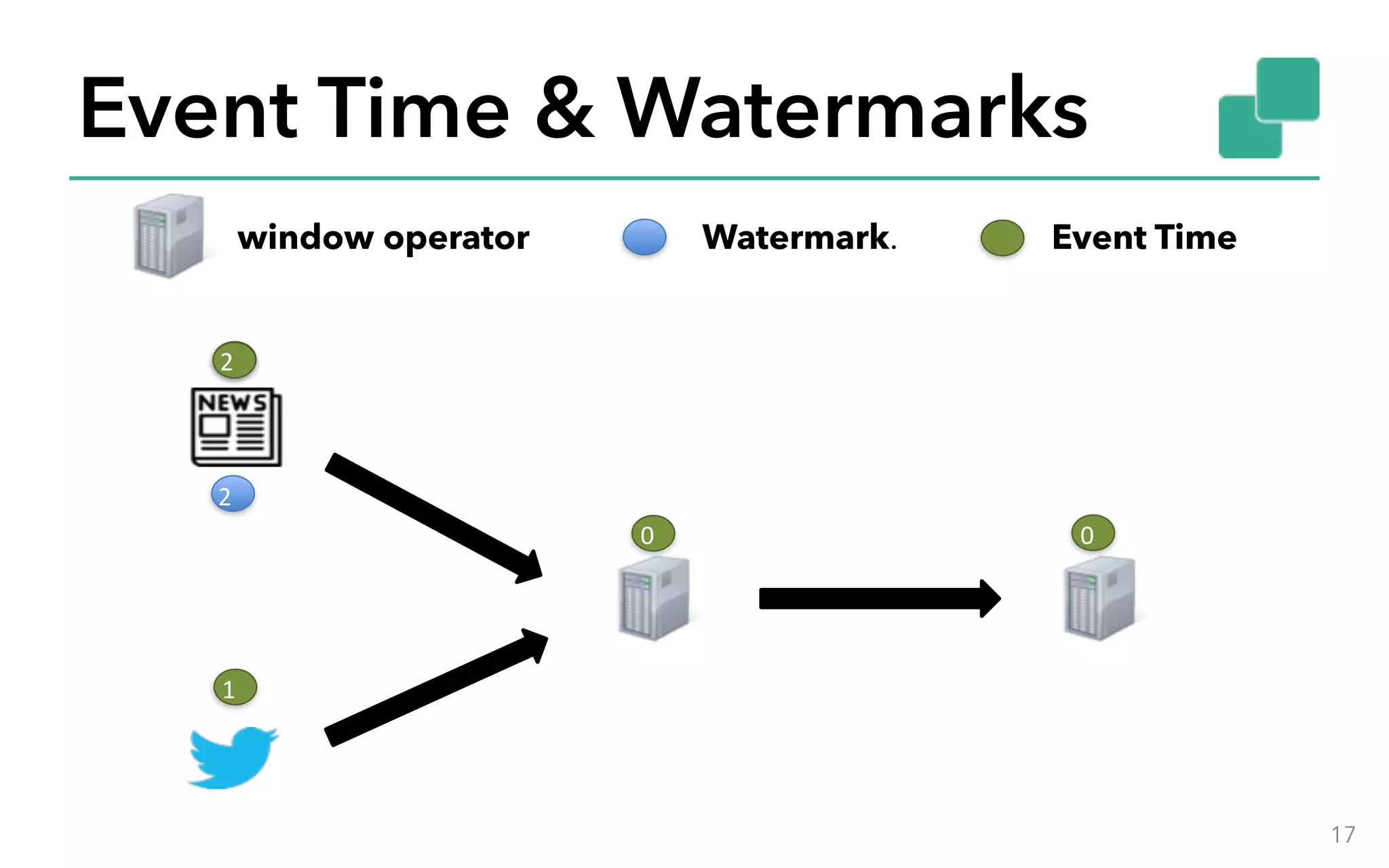

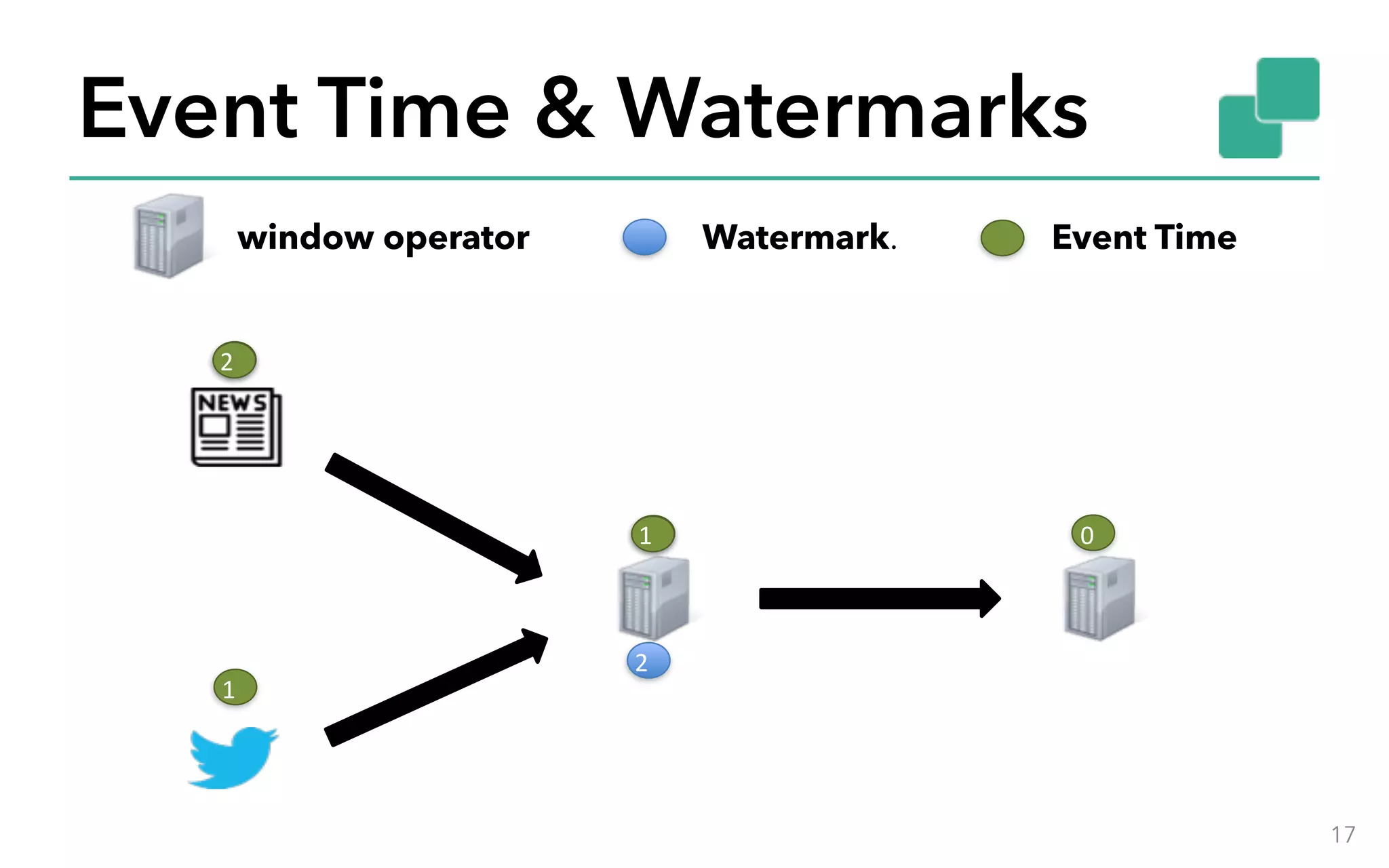

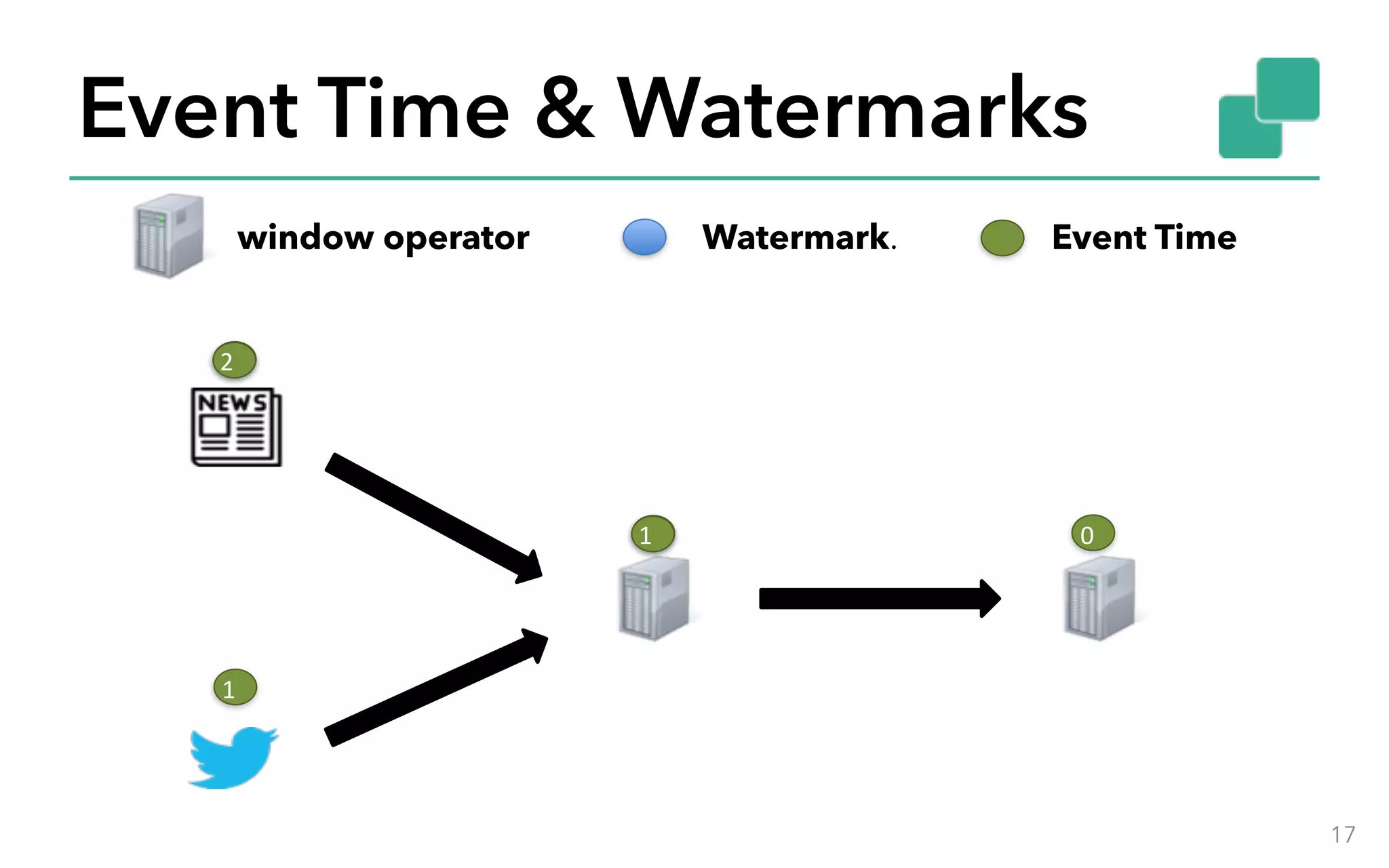

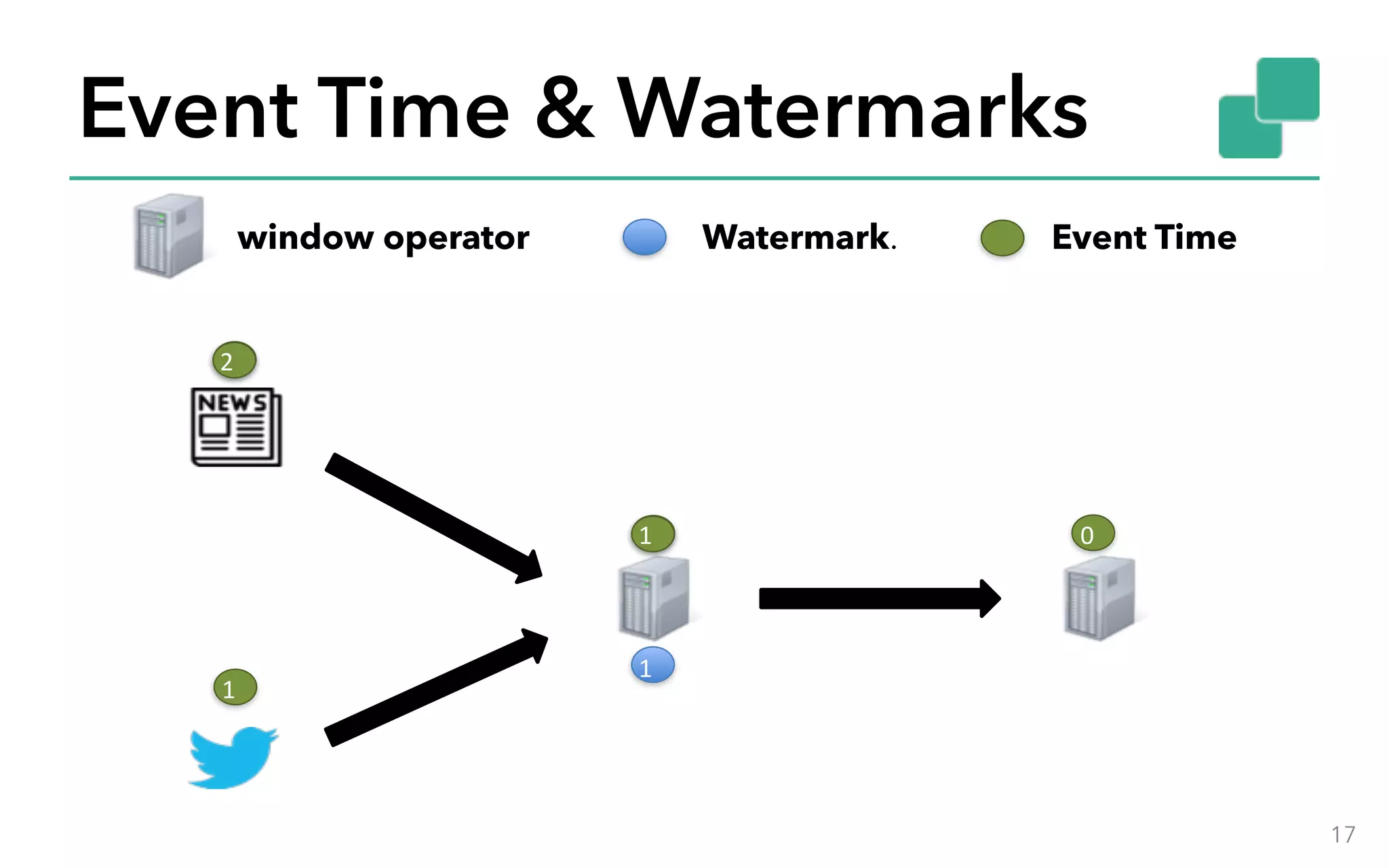

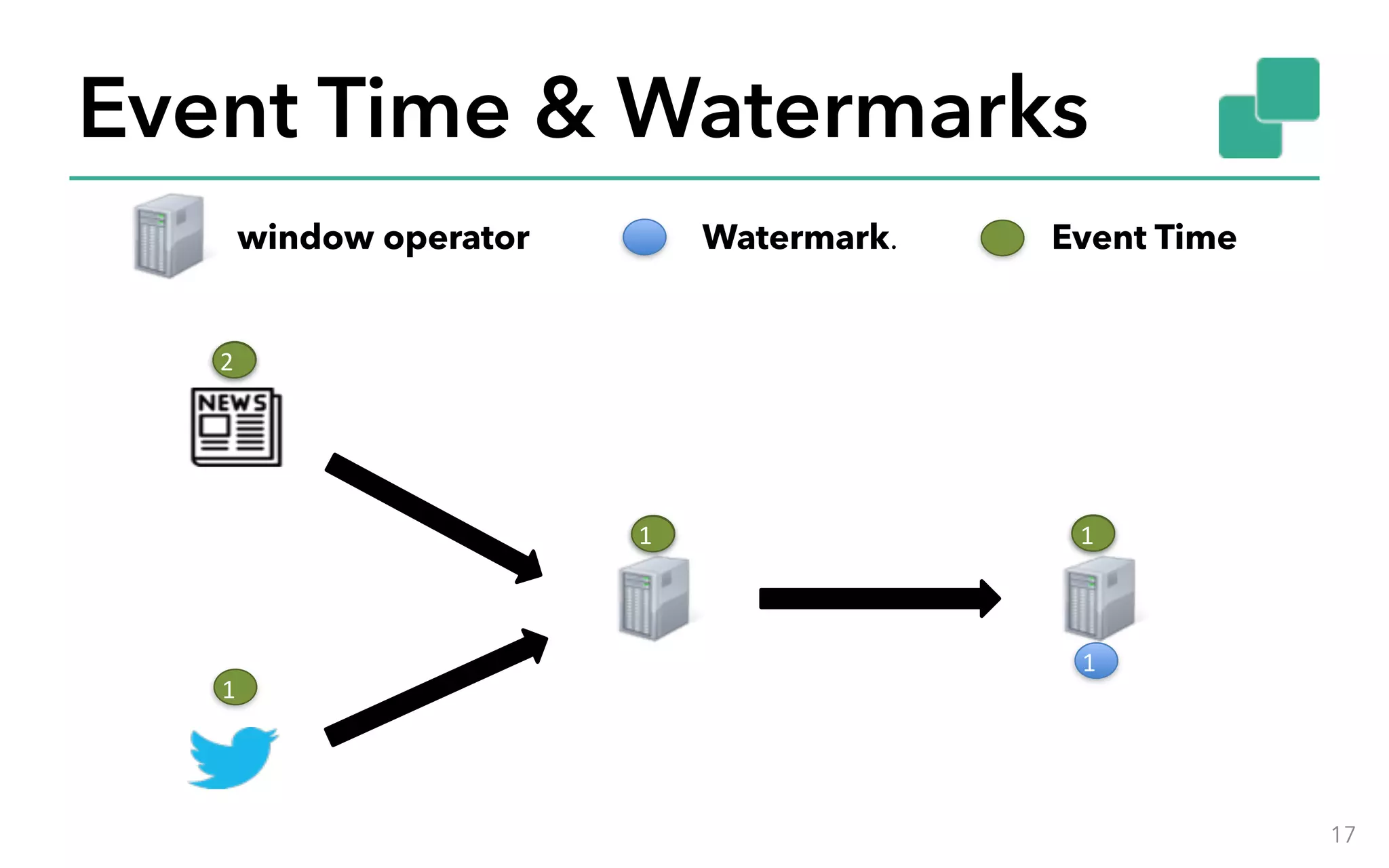

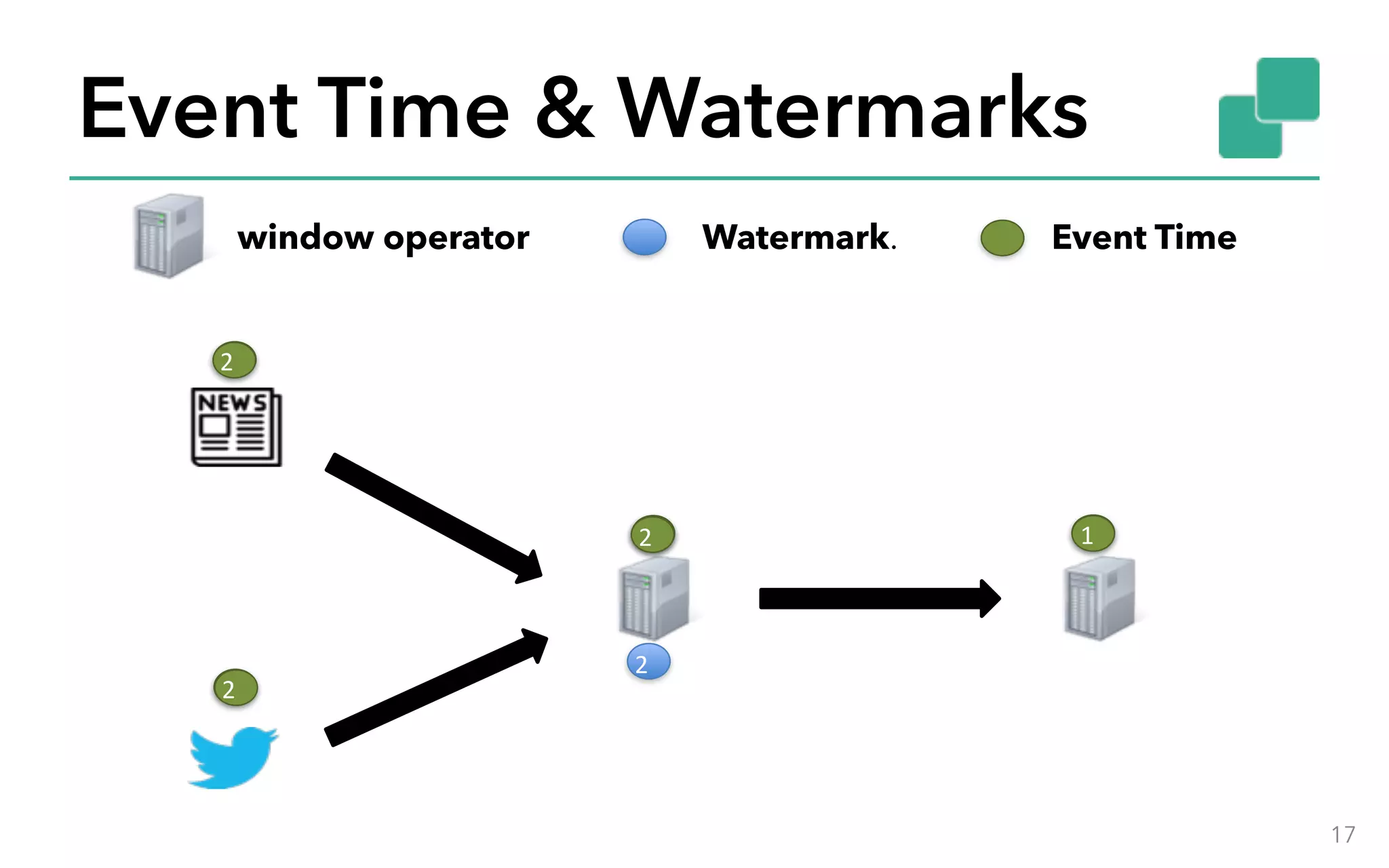

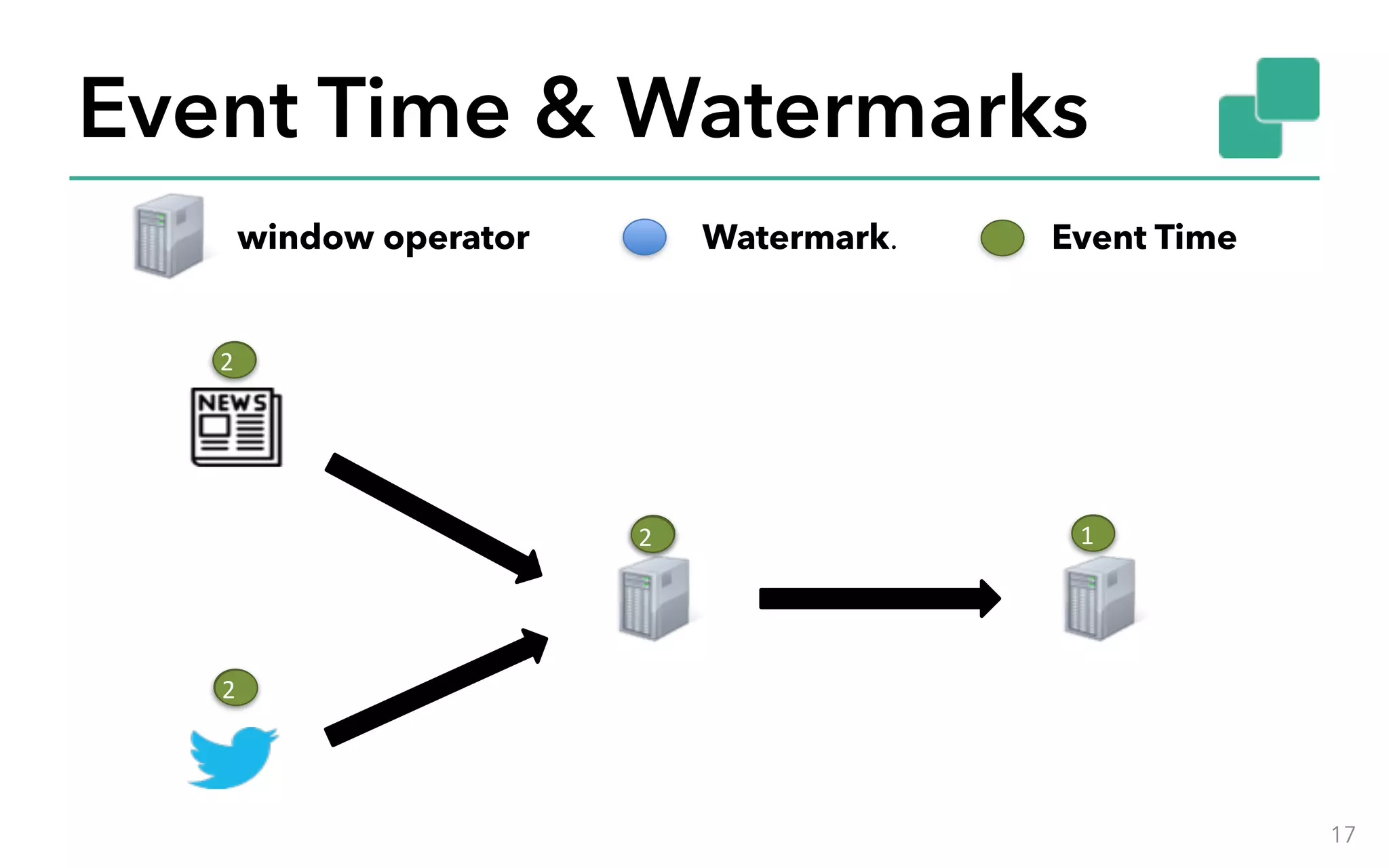

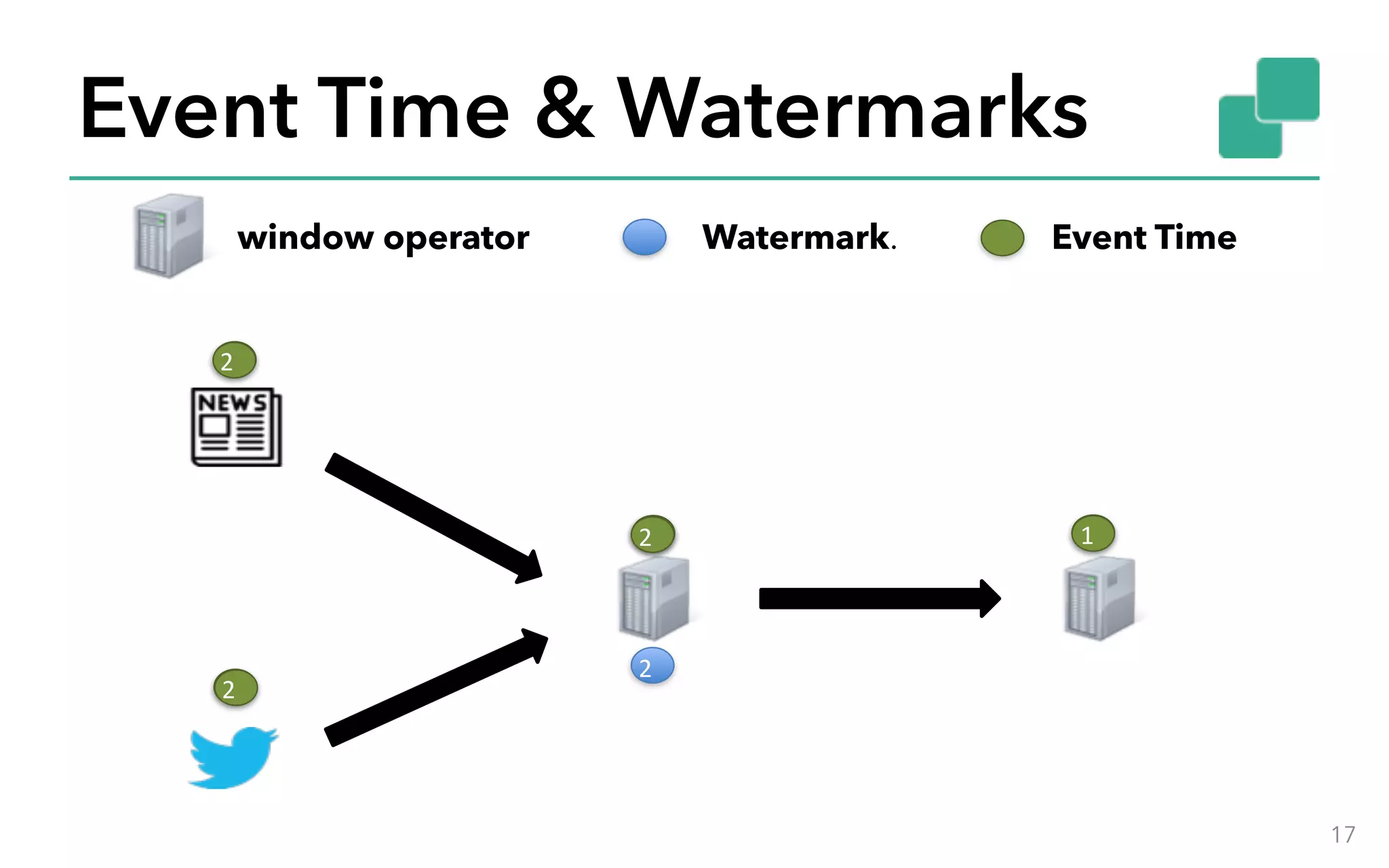

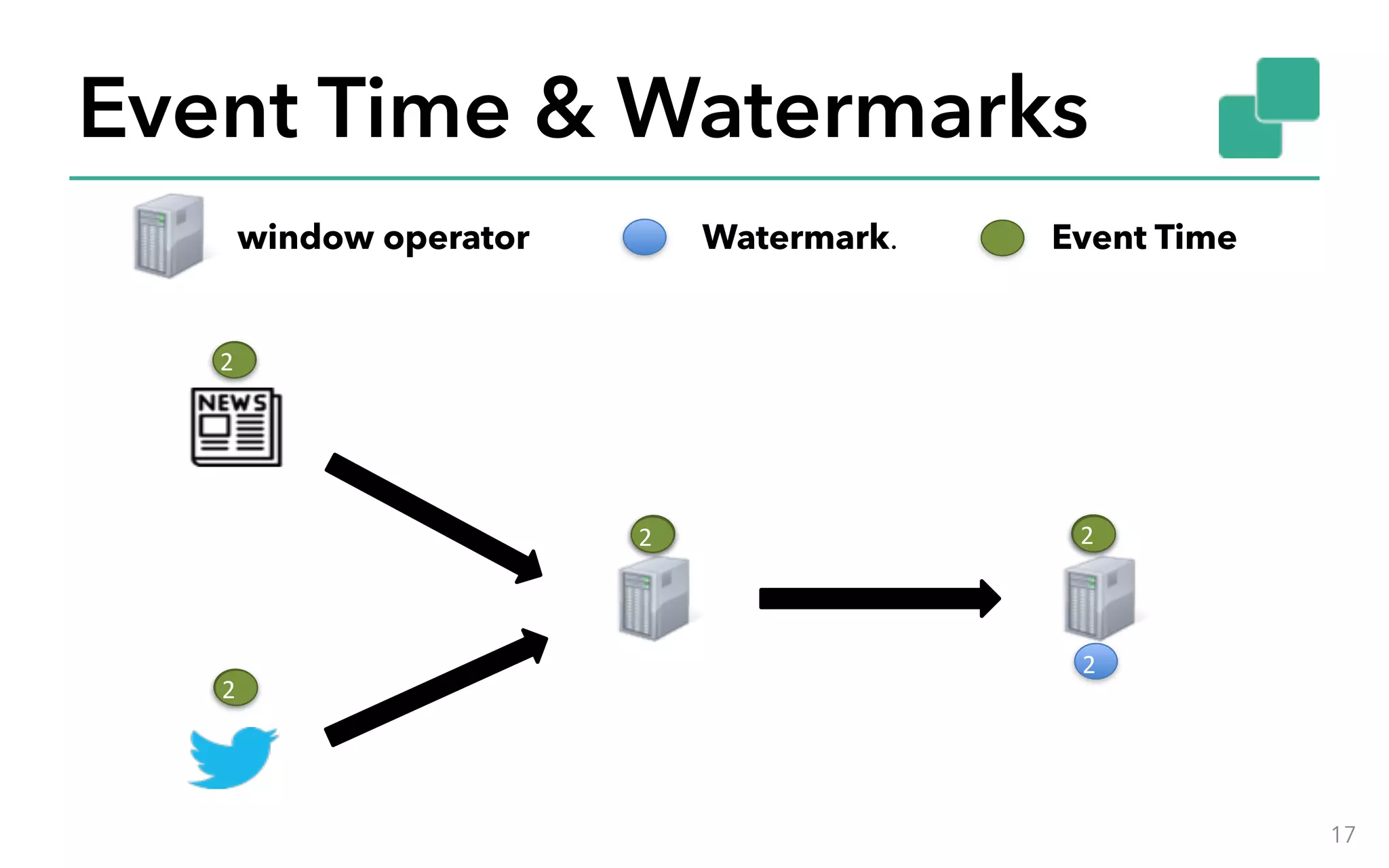

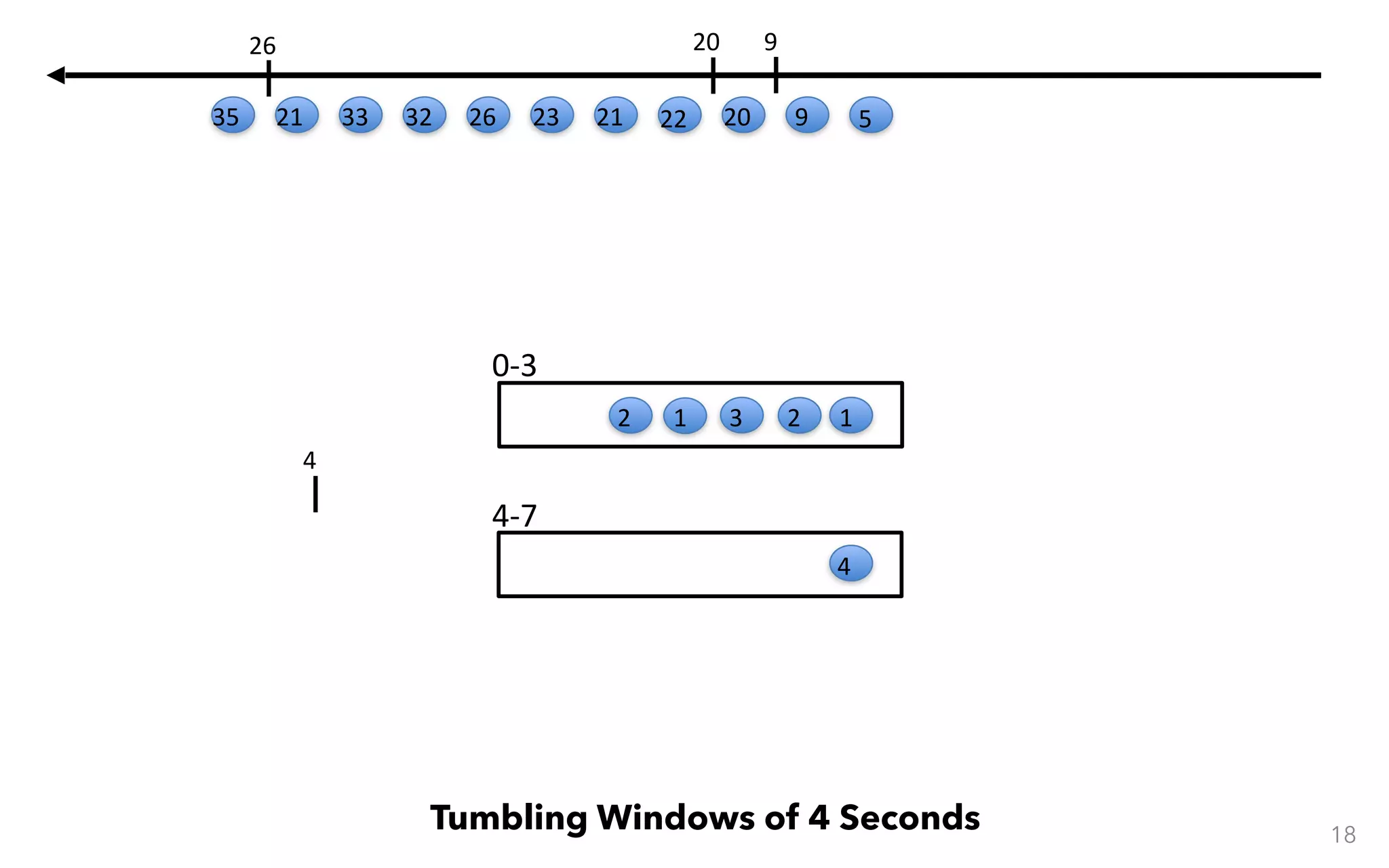

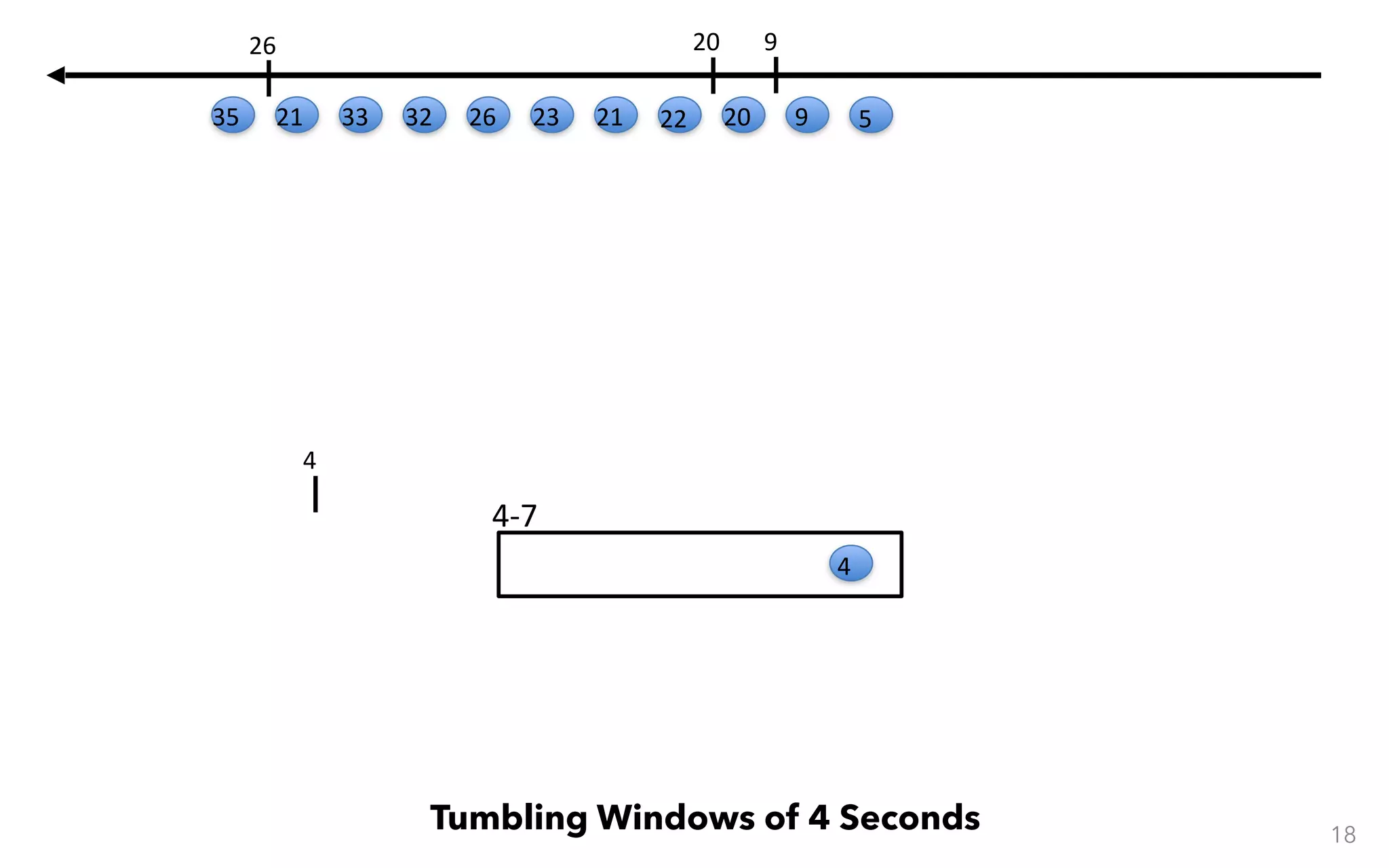

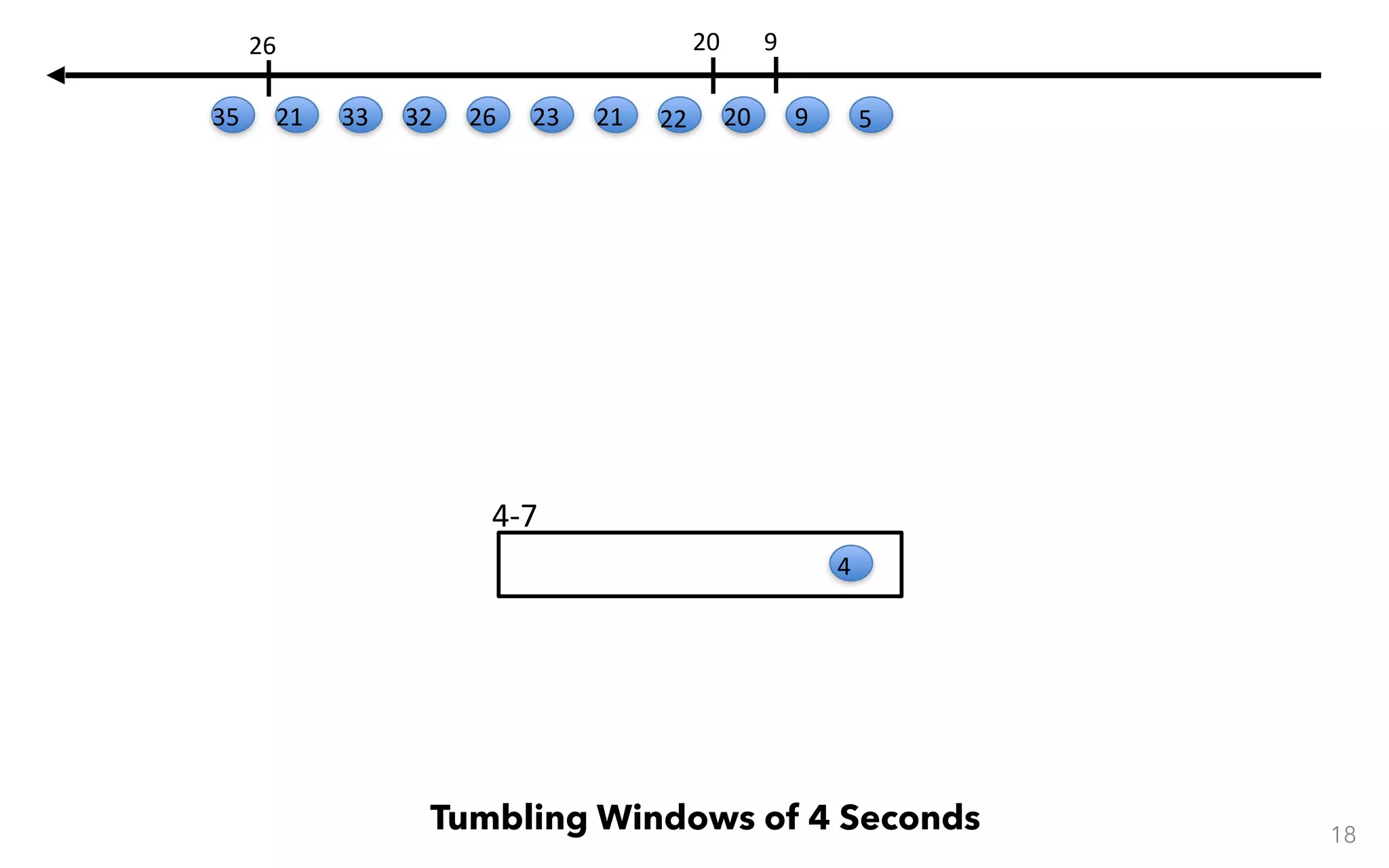

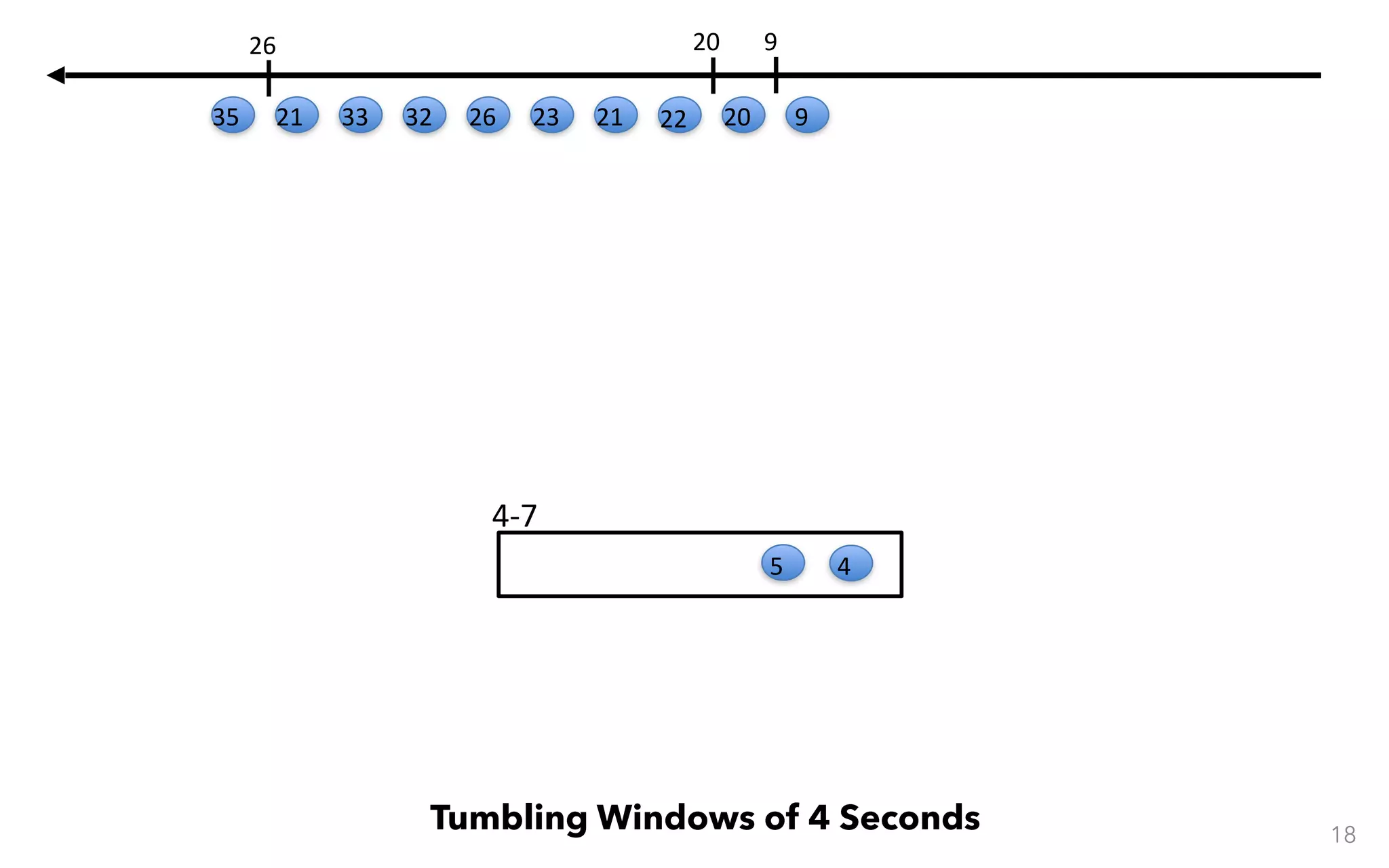

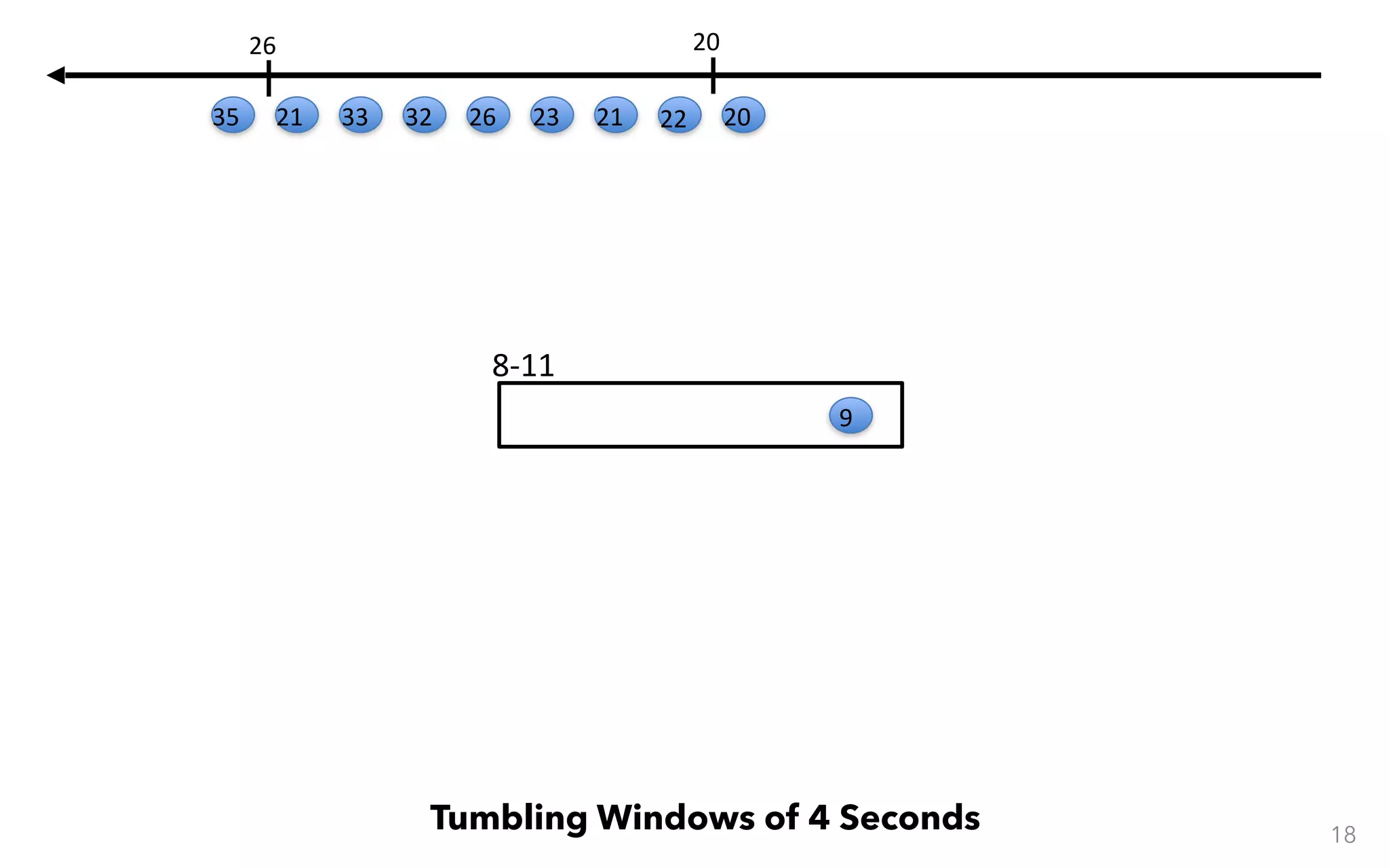

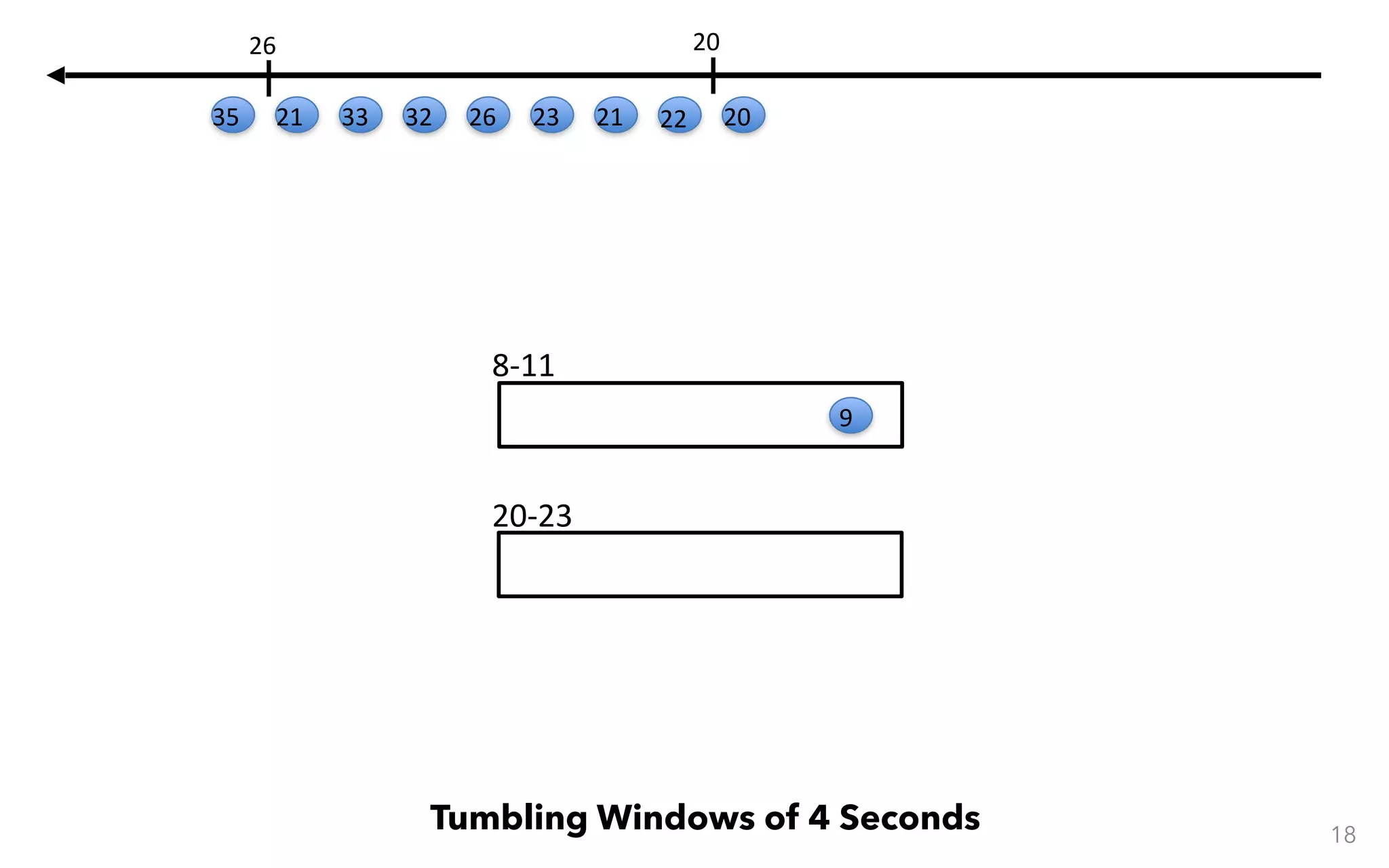

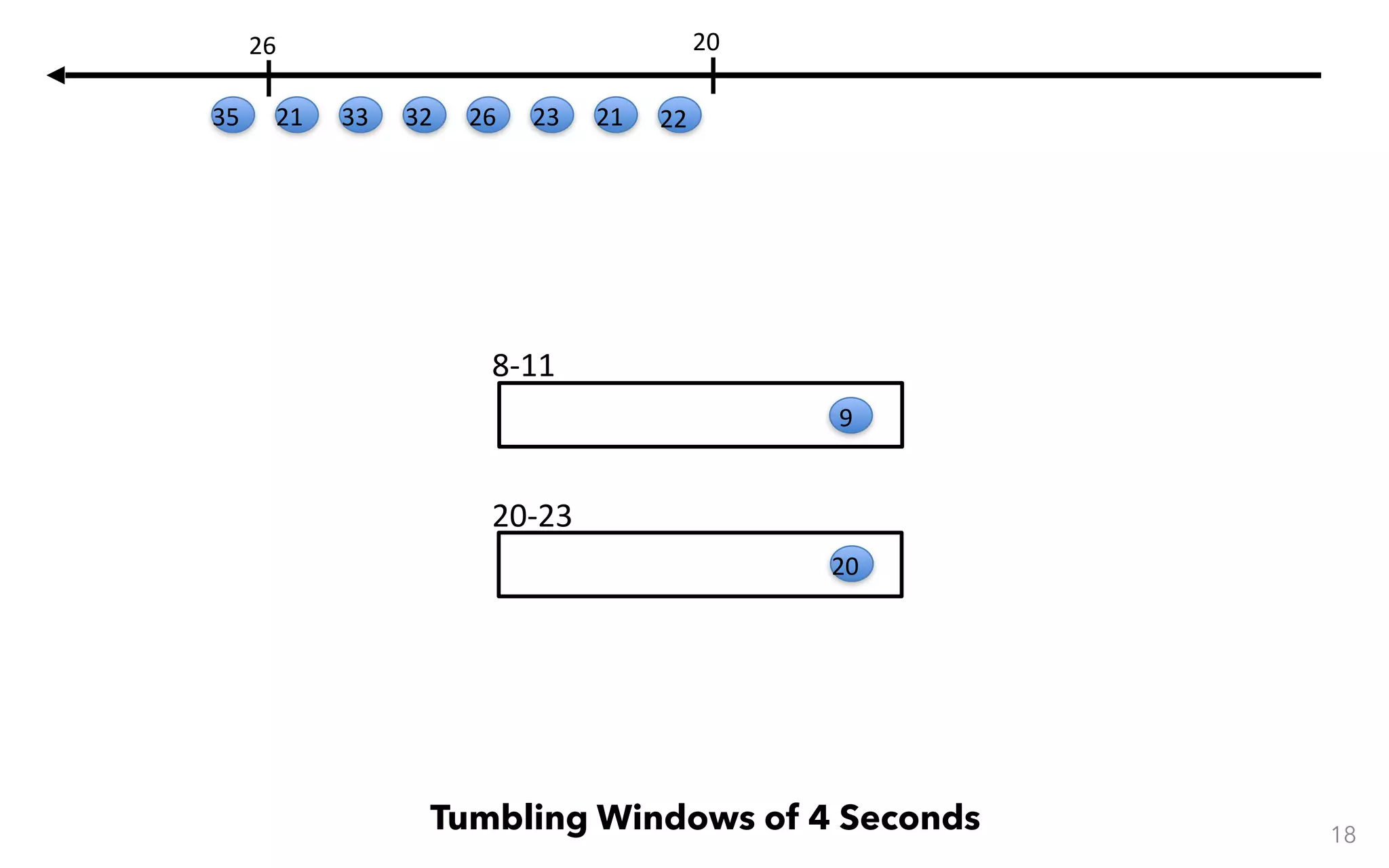

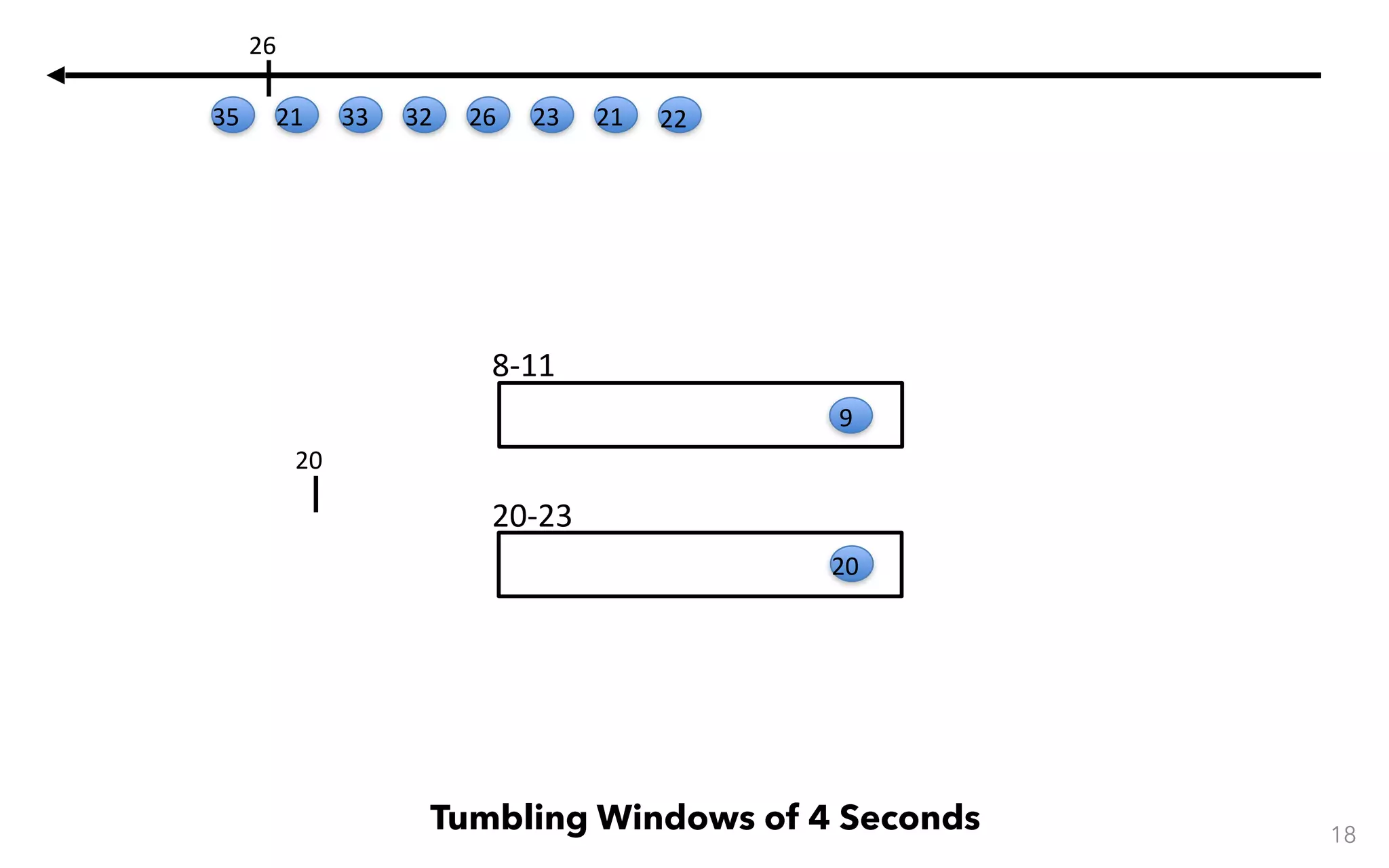

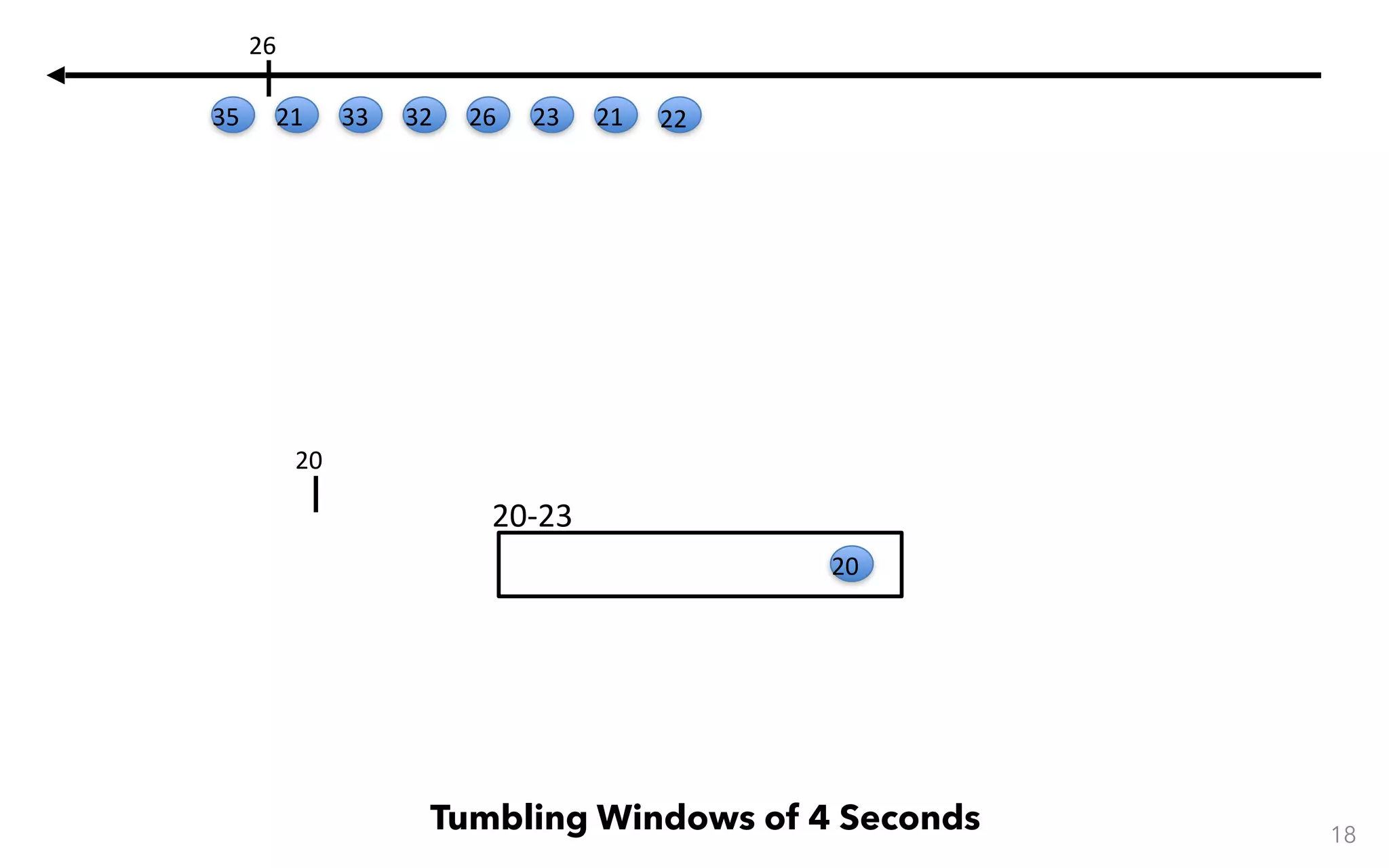

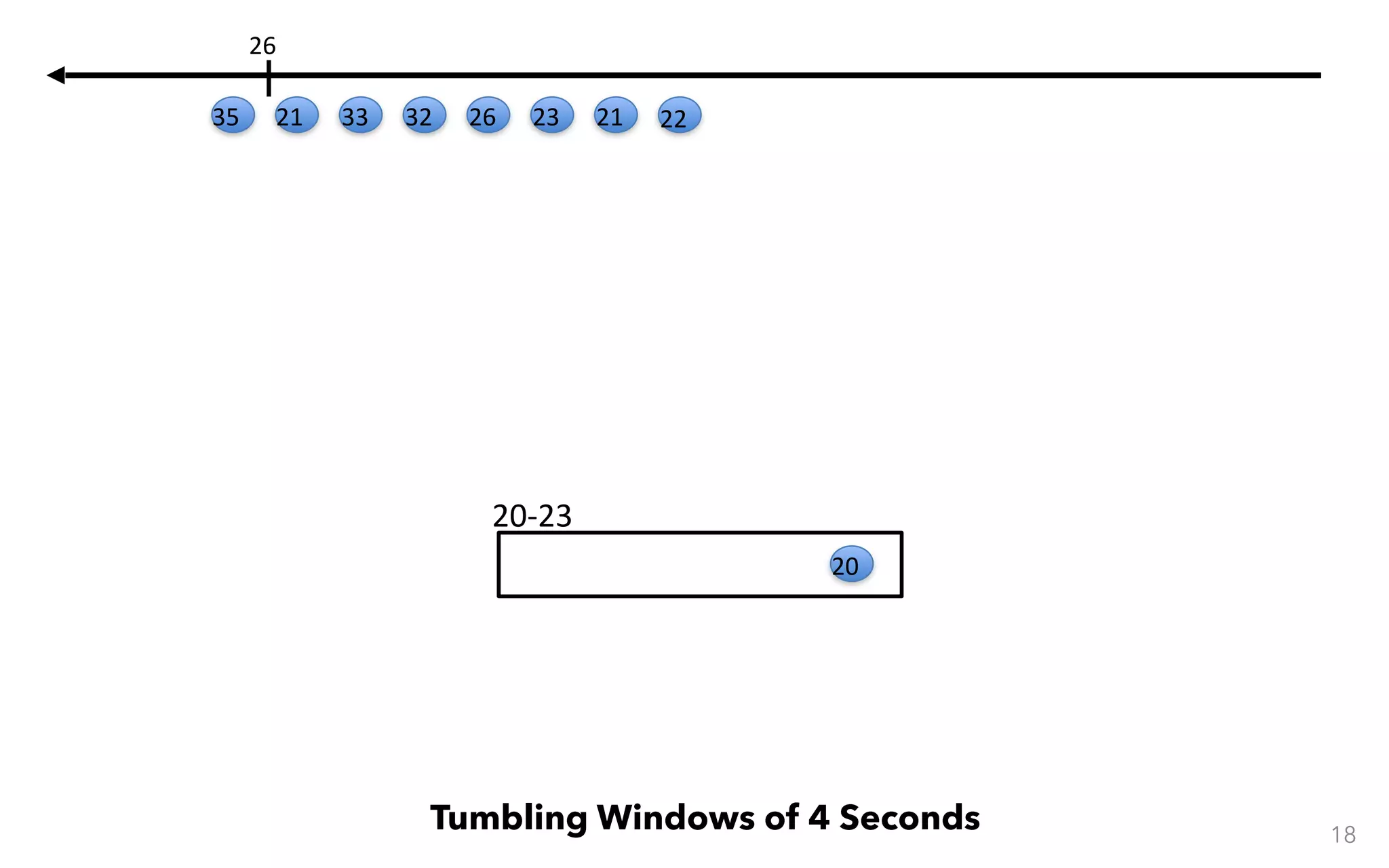

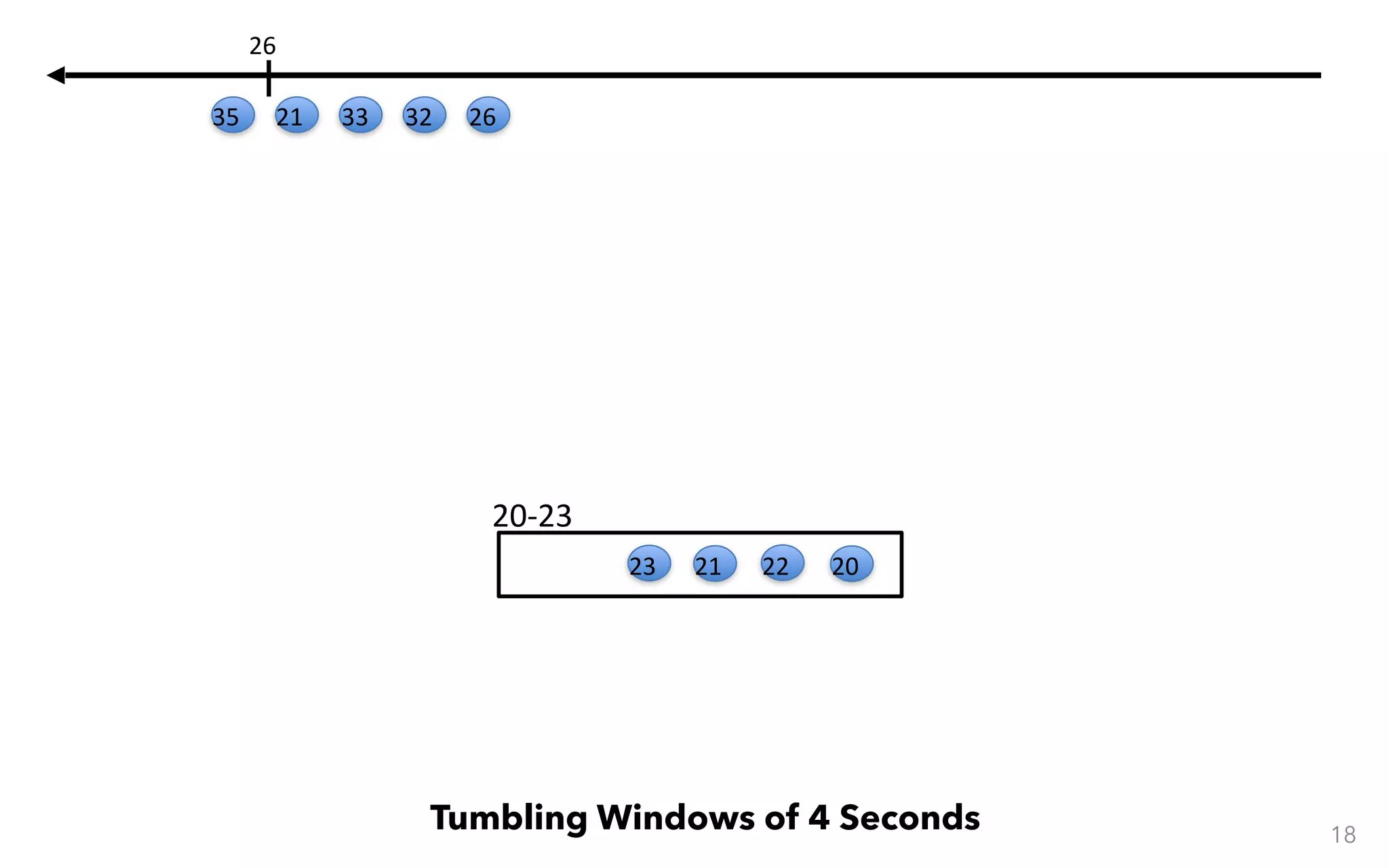

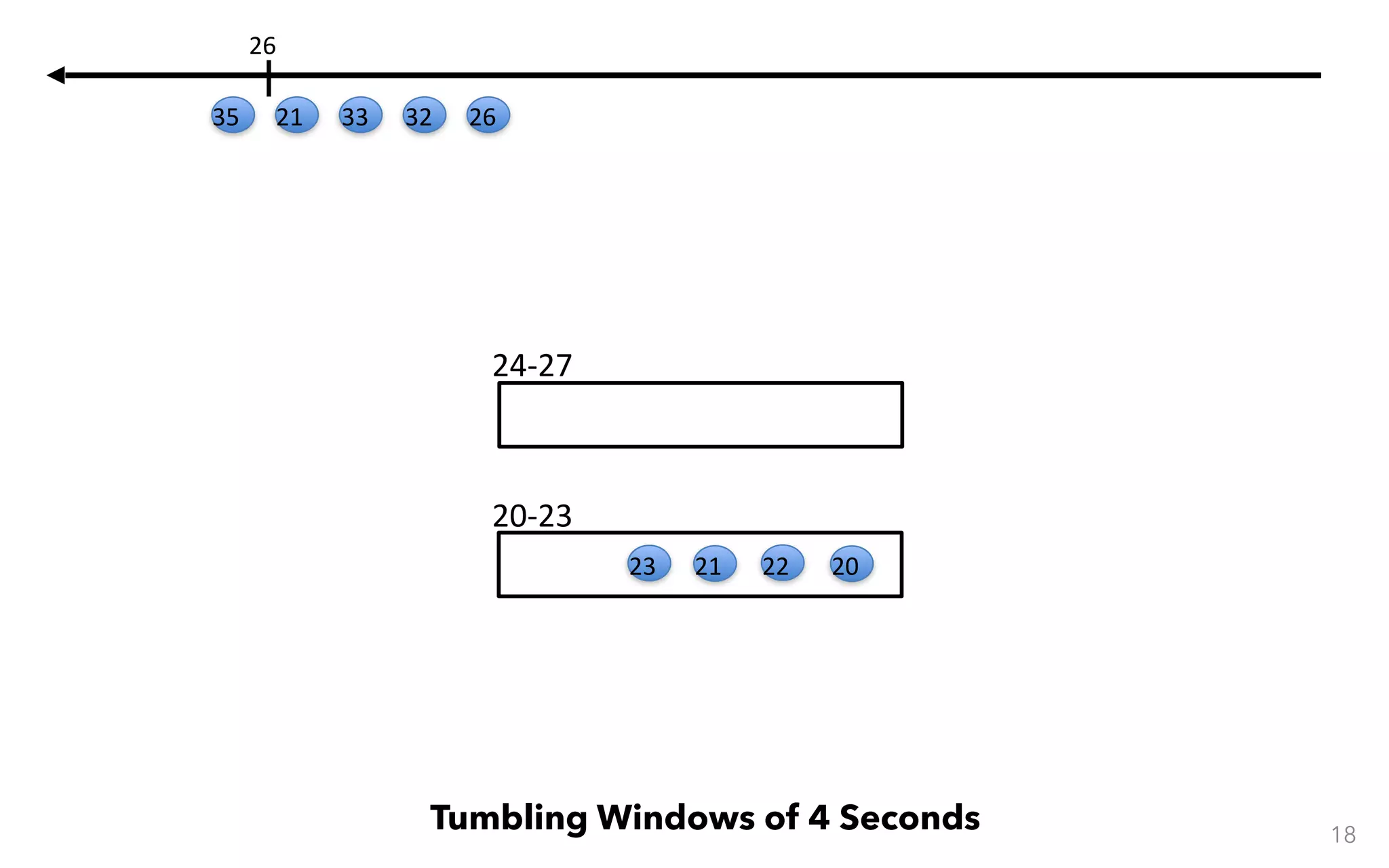

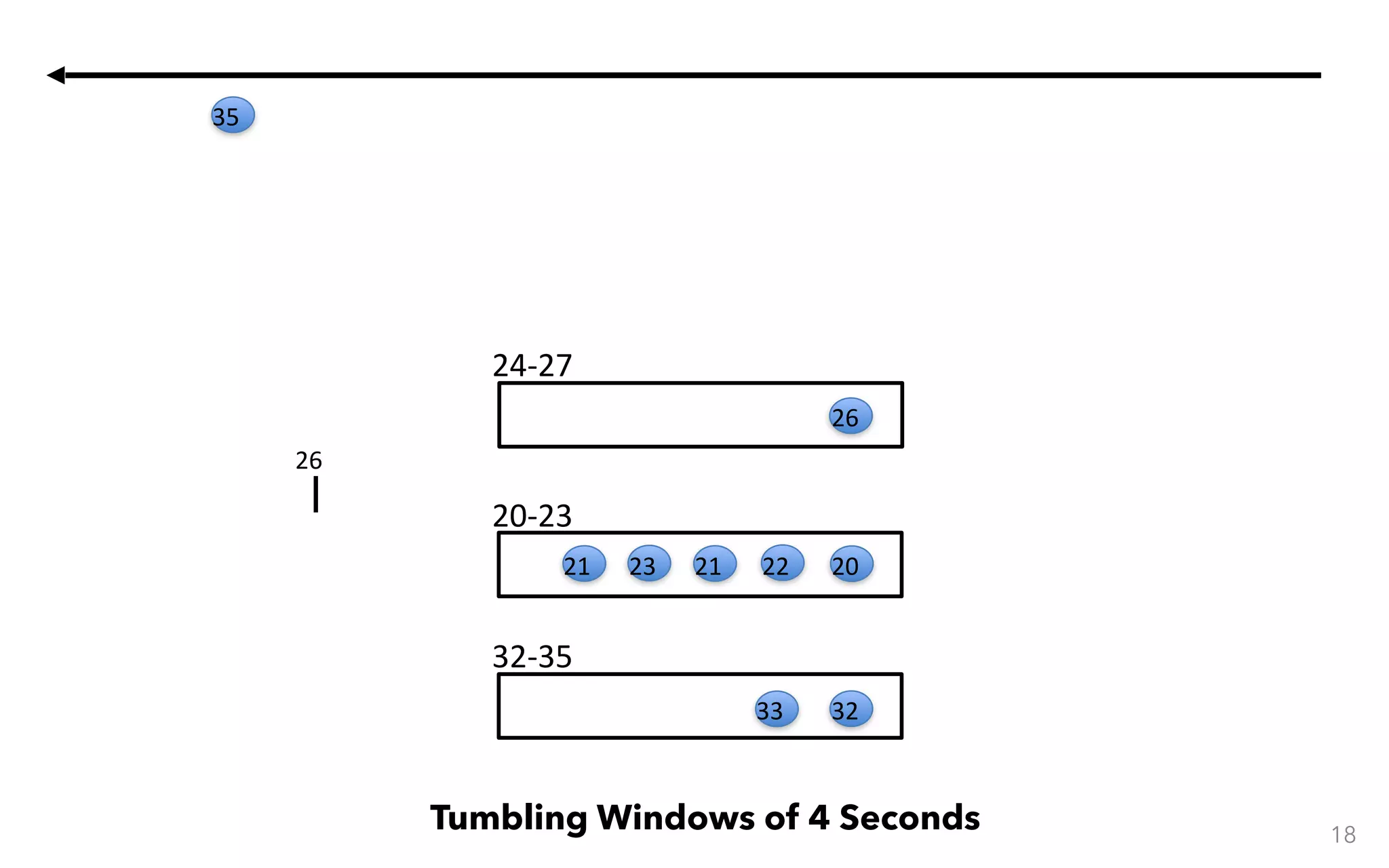

This document provides an overview of Apache Flink, a distributed open-source data analysis framework focused on stream processing. It covers the foundational concepts of streaming, the architecture of the Flink engine, and practical use cases such as log file analysis and counting tweet impressions. It also highlights Flink's low latency, fault tolerance, and growing community support.

![From Program to Execution](https://image.slidesharecdn.com/streamprocessingwithapacheflinkmaximilianmichels-180117140151/75/Stream-processing-with-Apache-Flink-Maximilian-Michels-Data-Artisans-72-2048.jpg)

Slide: From Program to Execution. The example program defines `case class Path(from: Long, to: Long)` and computes `tc = edges.iterate(10) { paths: DataSet[Path] => ... }`. In each iteration it joins `paths` with `edges` where `"to"` equals `"from"`, emits `Path(path.from, edge.to)` for every match, unions the result with `paths`, and applies `distinct()`.

The slide traces the program through three stages: Pre-flight (Client), Master, and Workers. The component labels shown are: cost-based optimizer, type extraction stack, task scheduling, recovery metadata, memory manager, out-of-core algorithms, batch & streaming, and state & checkpoints. The program becomes a dataflow graph, illustrated with the operators DataSource `orders.tbl`, Filter, Map, DataSource `lineitem.tbl`, Join (Hybrid Hash, with buildHT and probe sides, hash-partitioned on field 0), and GroupRed (sort, forward). The arrows between stages are labeled "deploy operators" and "track intermediate results".
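The iteration in the slide's example program can be tried out without a Flink cluster. The following is a minimal sketch that mimics the same logic on plain Scala collections, assuming an in-memory `Set[Path]` in place of Flink's `DataSet[Path]`. The names `TransitiveClosureSketch`, `step`, and `transitiveClosure` are hypothetical and not part of Flink; only `Path` and the join/union/distinct logic come from the slide.

```scala
// Plain-Scala sketch of the slide's bounded transitive-closure iteration.
// Assumption: a Set stands in for Flink's DataSet, so "distinct" is implicit.
final case class Path(from: Long, to: Long)

object TransitiveClosureSketch {

  // One iteration step: join paths with edges on path.to == edge.from,
  // emit the extended path, then union with the paths already known.
  def step(paths: Set[Path], edges: Set[Path]): Set[Path] = {
    val edgesByFrom: Map[Long, Set[Path]] = edges.groupBy(_.from)
    val extended: Set[Path] = for {
      path <- paths
      edge <- edgesByFrom.getOrElse(path.to, Set.empty[Path])
    } yield Path(path.from, edge.to)
    extended ++ paths
  }

  // Run a fixed number of steps, starting from the edges themselves,
  // analogous to iterate(10) on the slide.
  def transitiveClosure(edges: Set[Path], maxIterations: Int): Set[Path] =
    (1 to maxIterations).foldLeft(edges)((paths, _) => step(paths, edges))

  def main(args: Array[String]): Unit = {
    val edges = Set(Path(1L, 2L), Path(2L, 3L), Path(3L, 4L))
    val closure = transitiveClosure(edges, maxIterations = 10)
    closure.toSeq.sortBy(p => (p.from, p.to)).foreach(println)
  }
}
```

For the three-edge chain in `main`, the closure contains six paths, including `Path(1,4)`. Unlike this sketch, which runs every step eagerly in one process, Flink first translates the program into a dataflow graph that is optimized and then deployed to the workers, as the slide shows.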