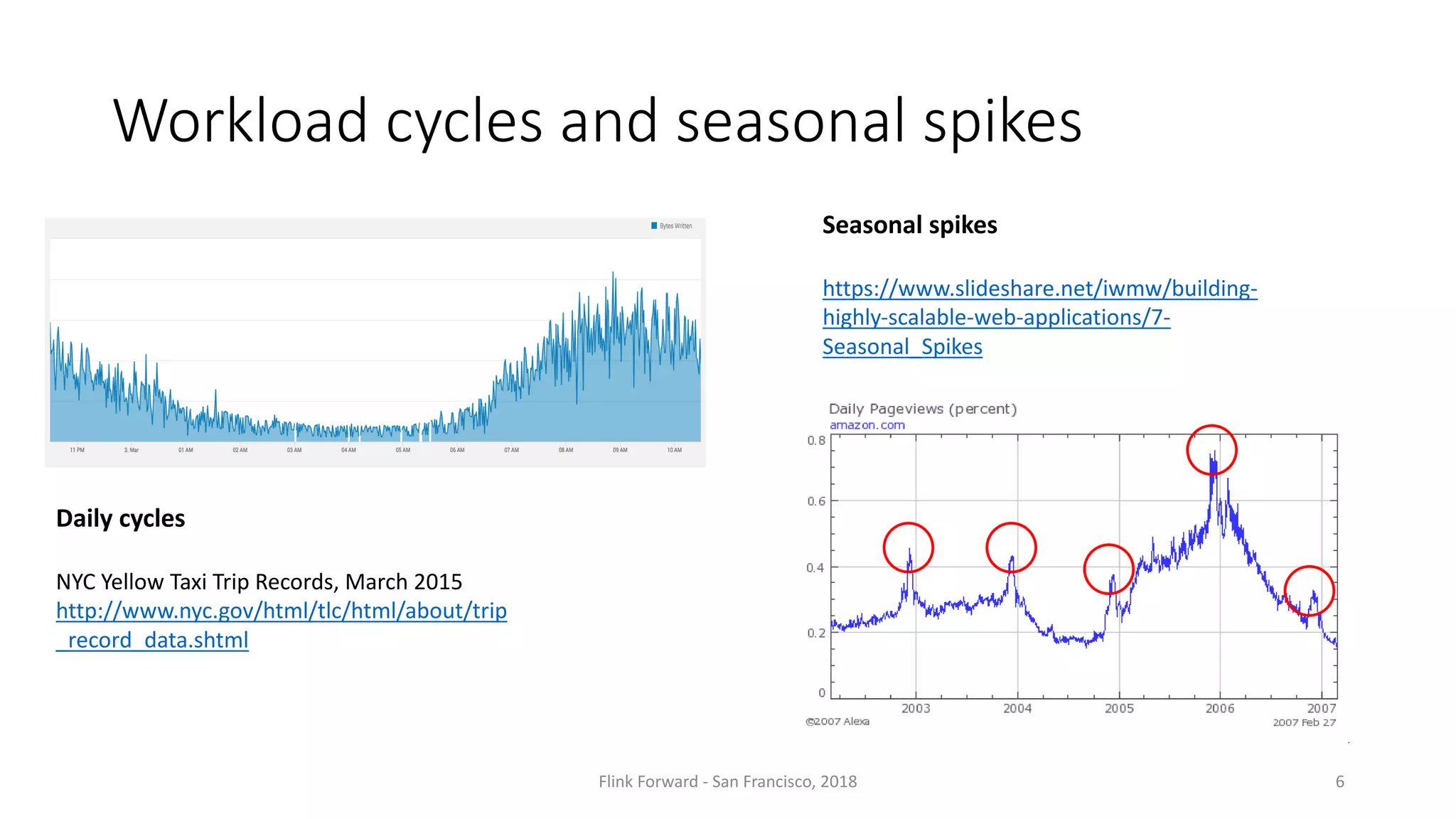

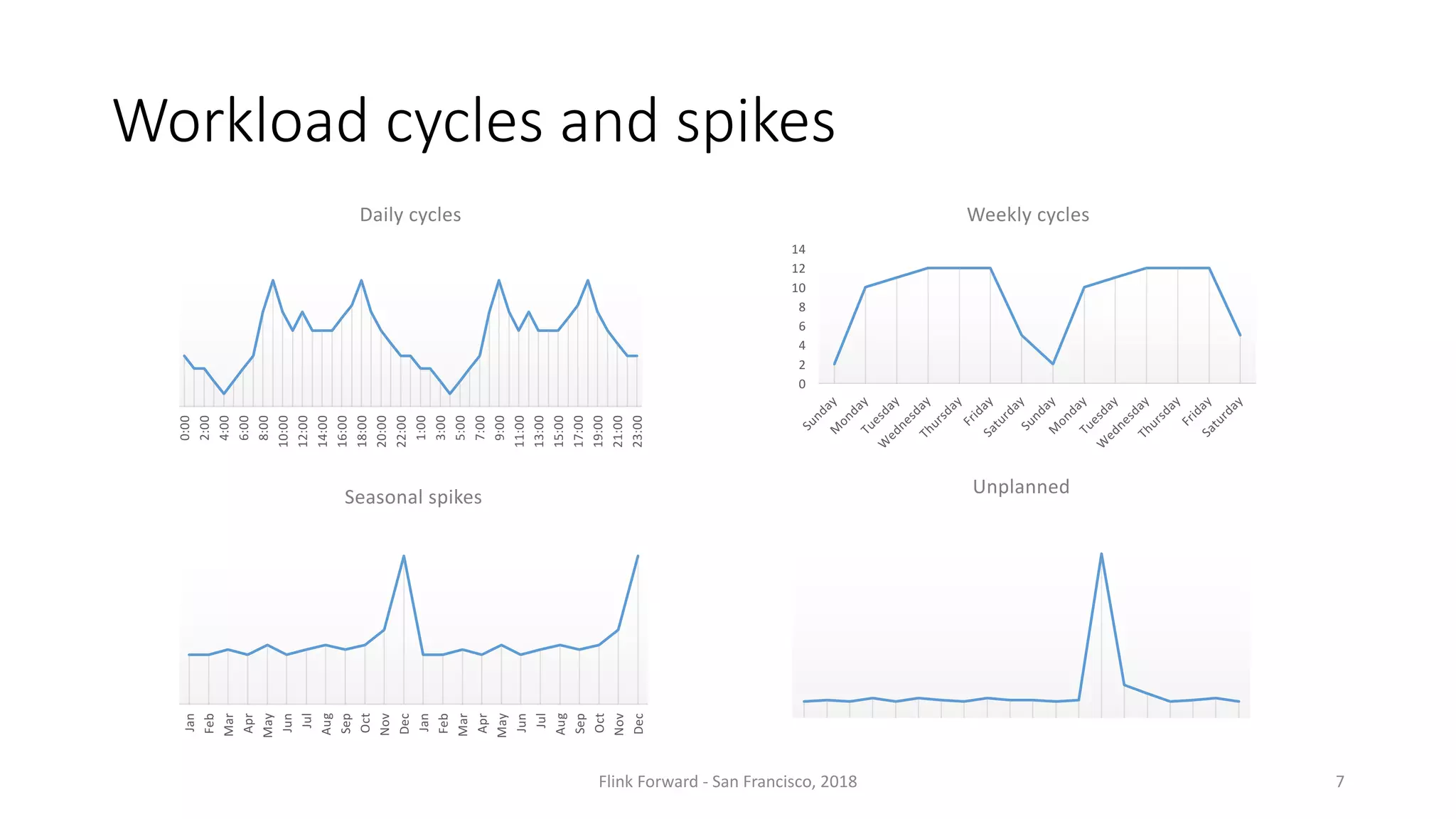

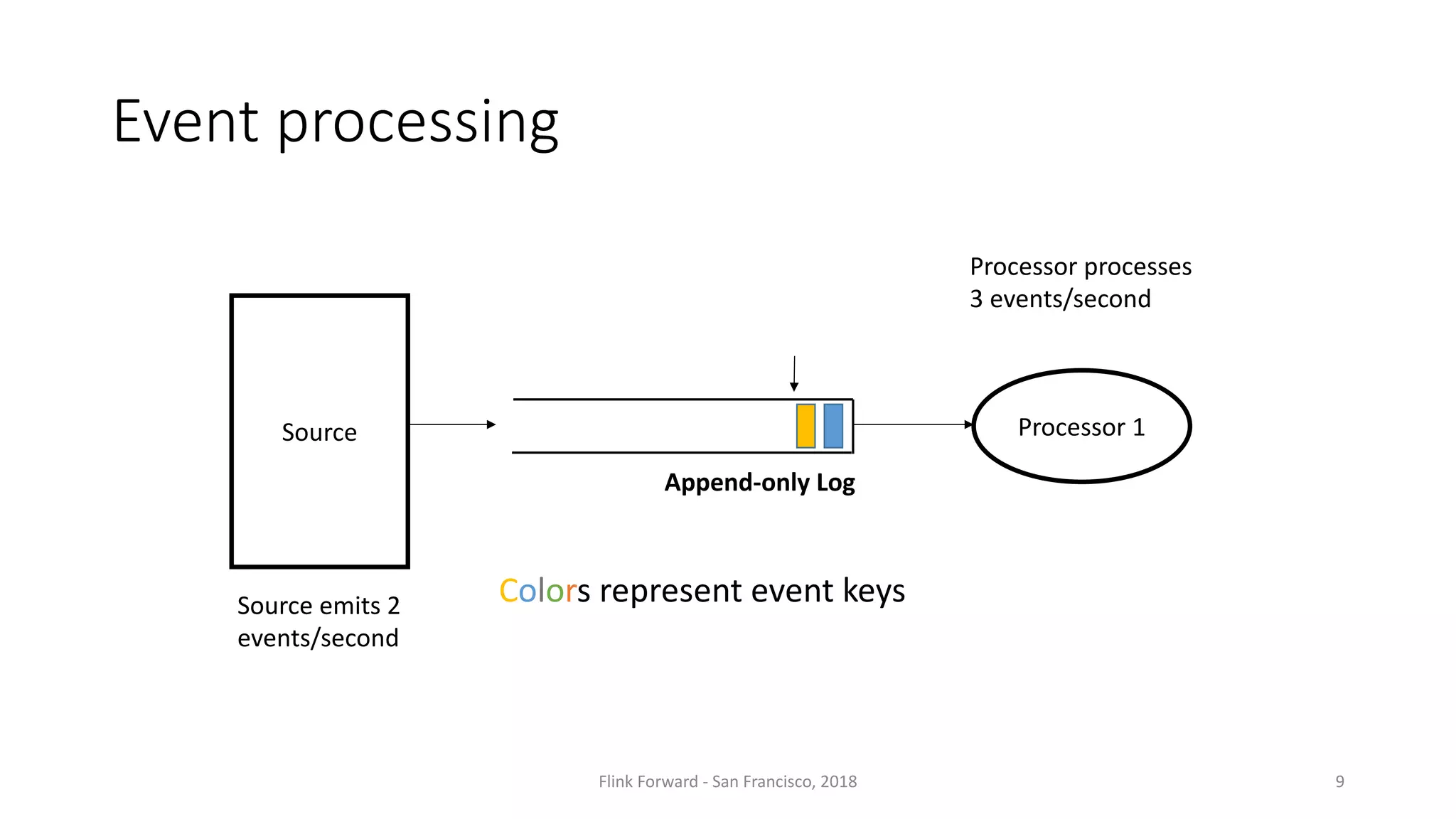

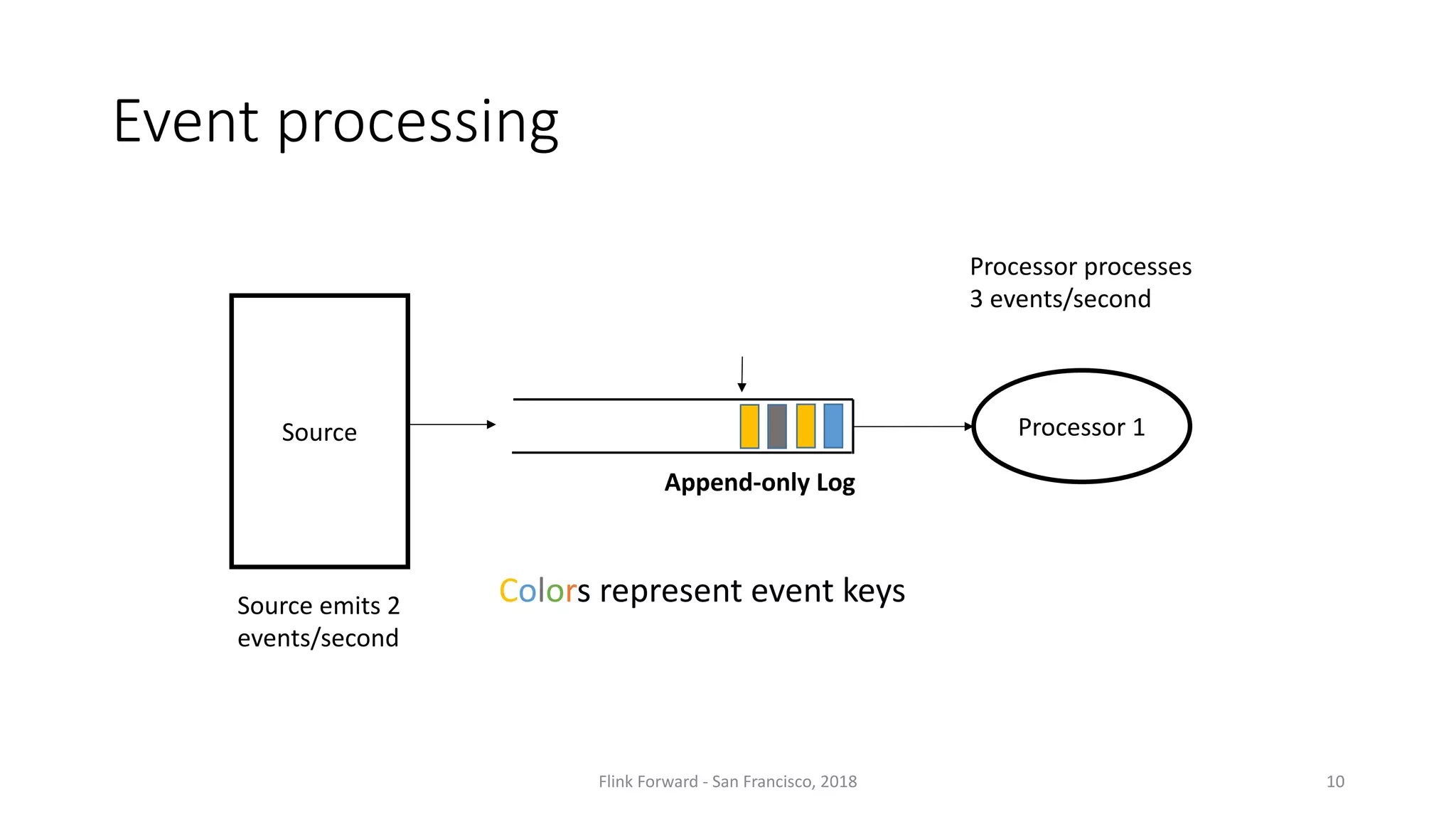

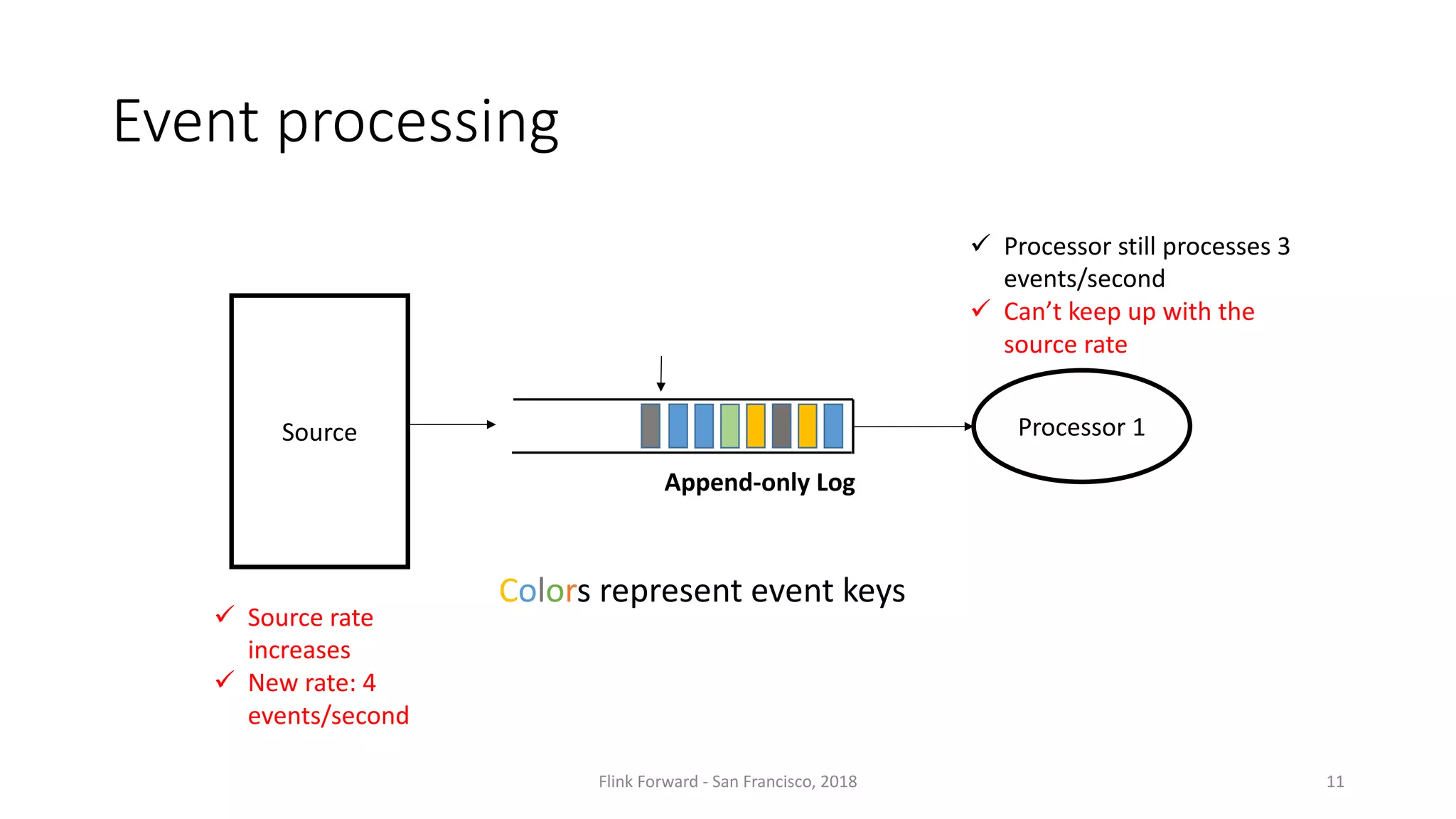

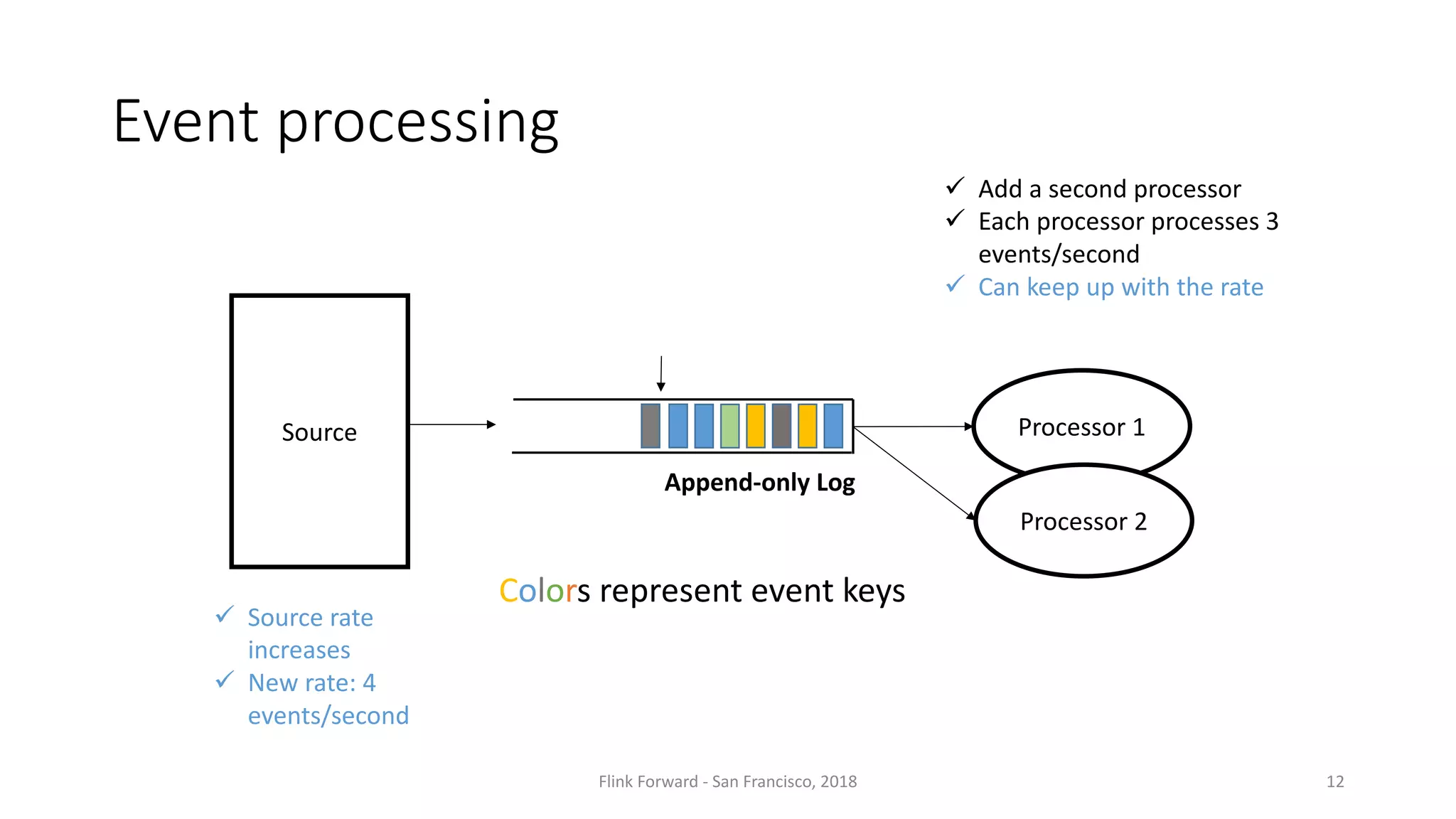

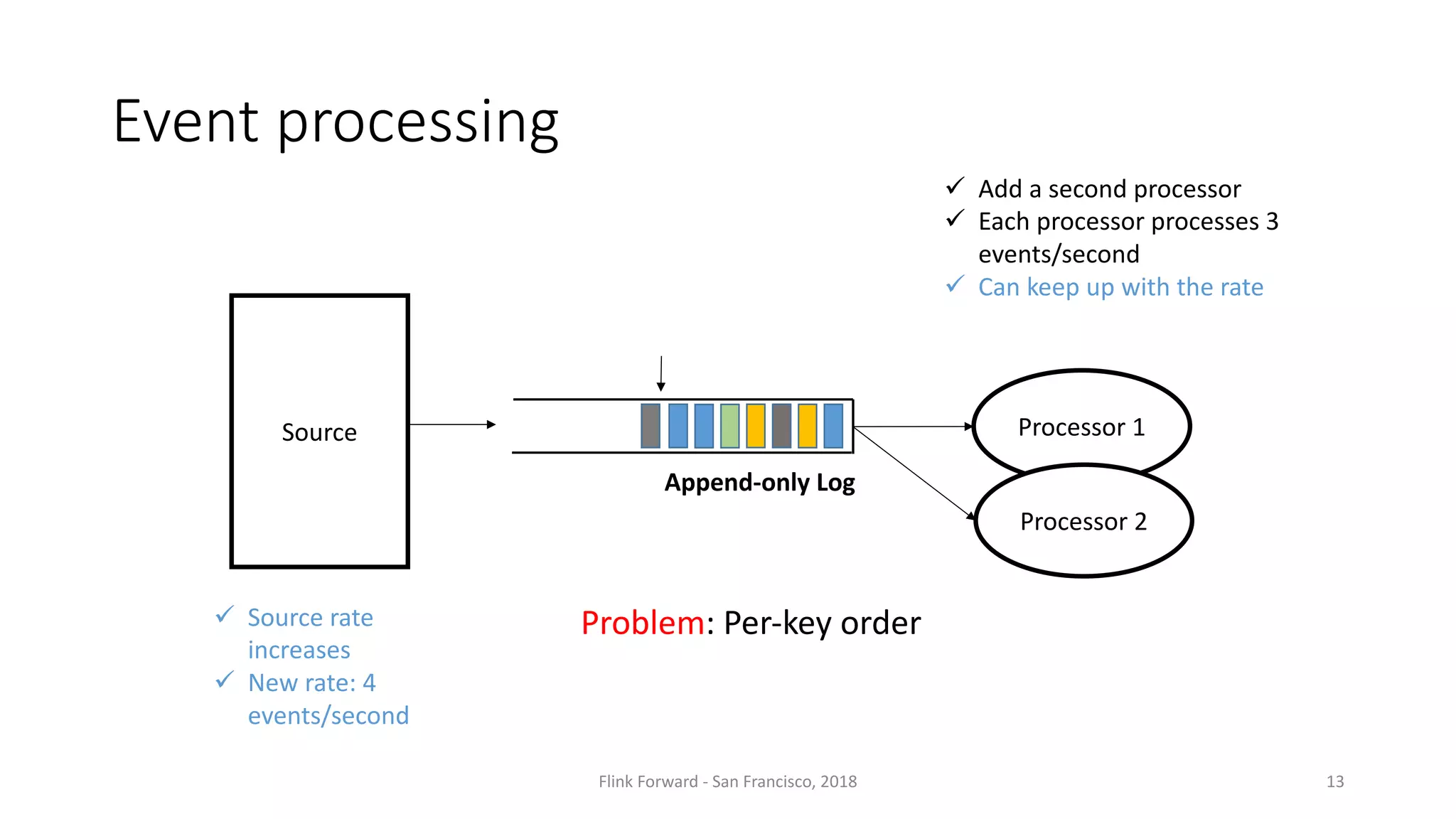

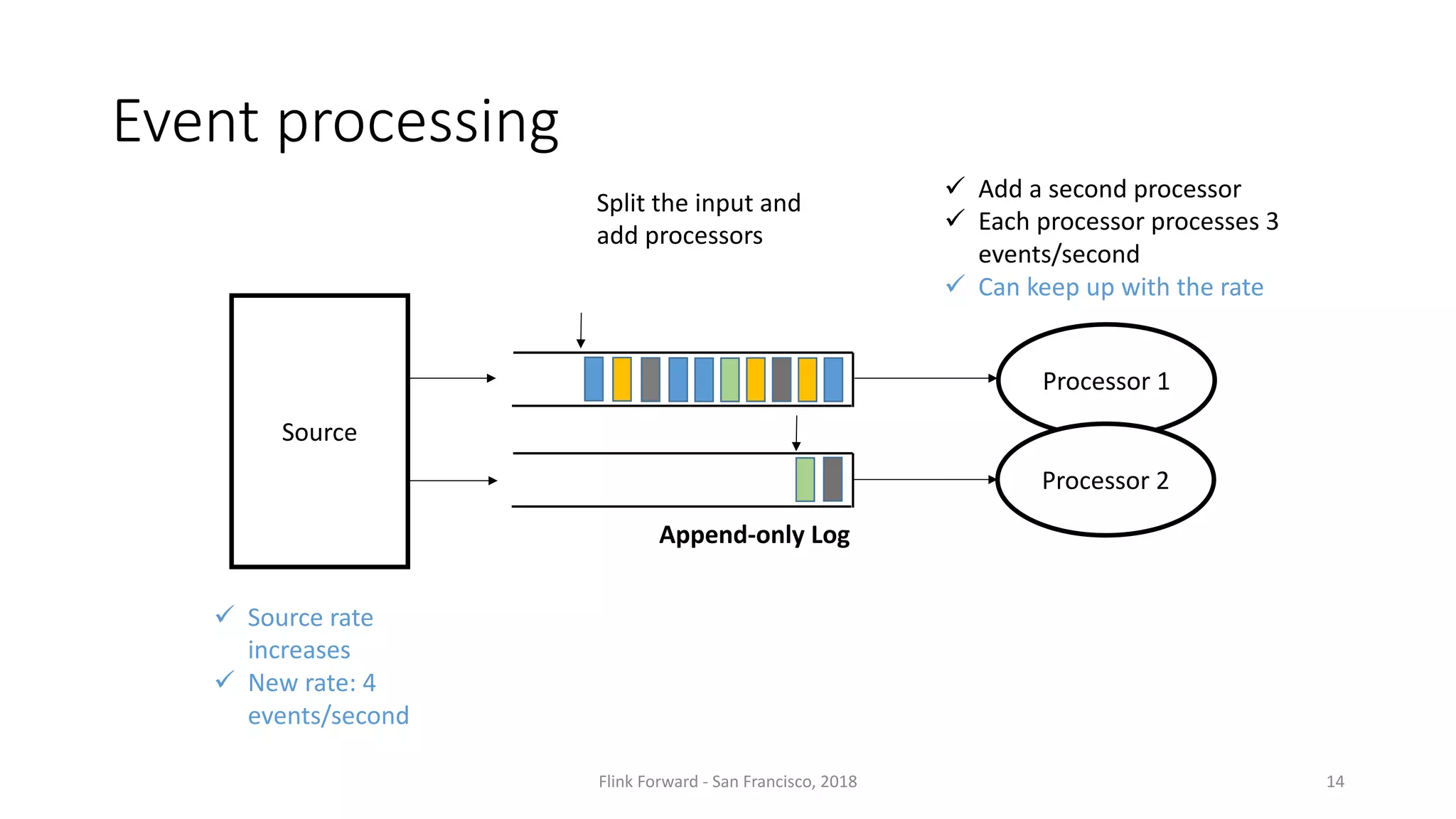

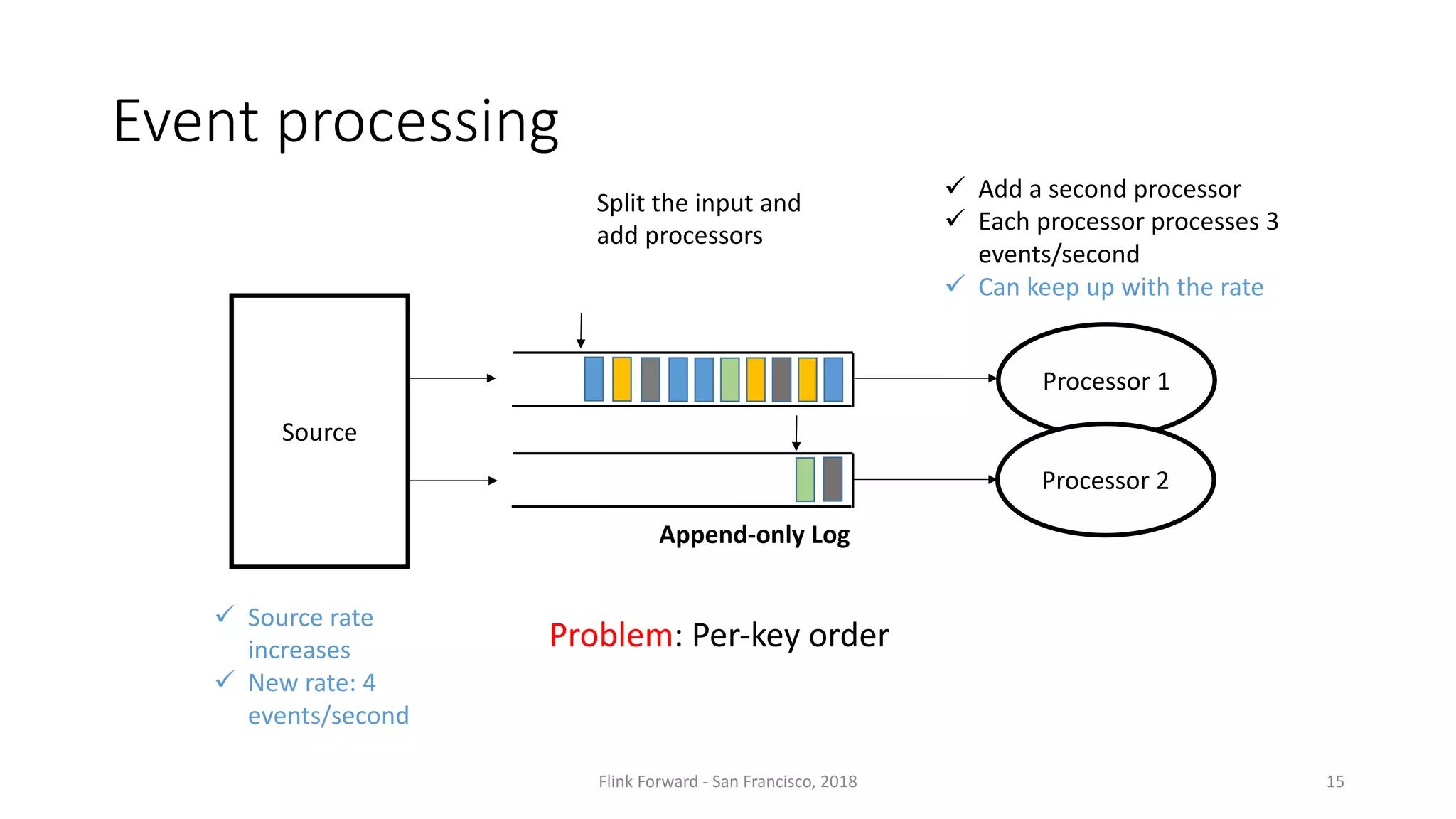

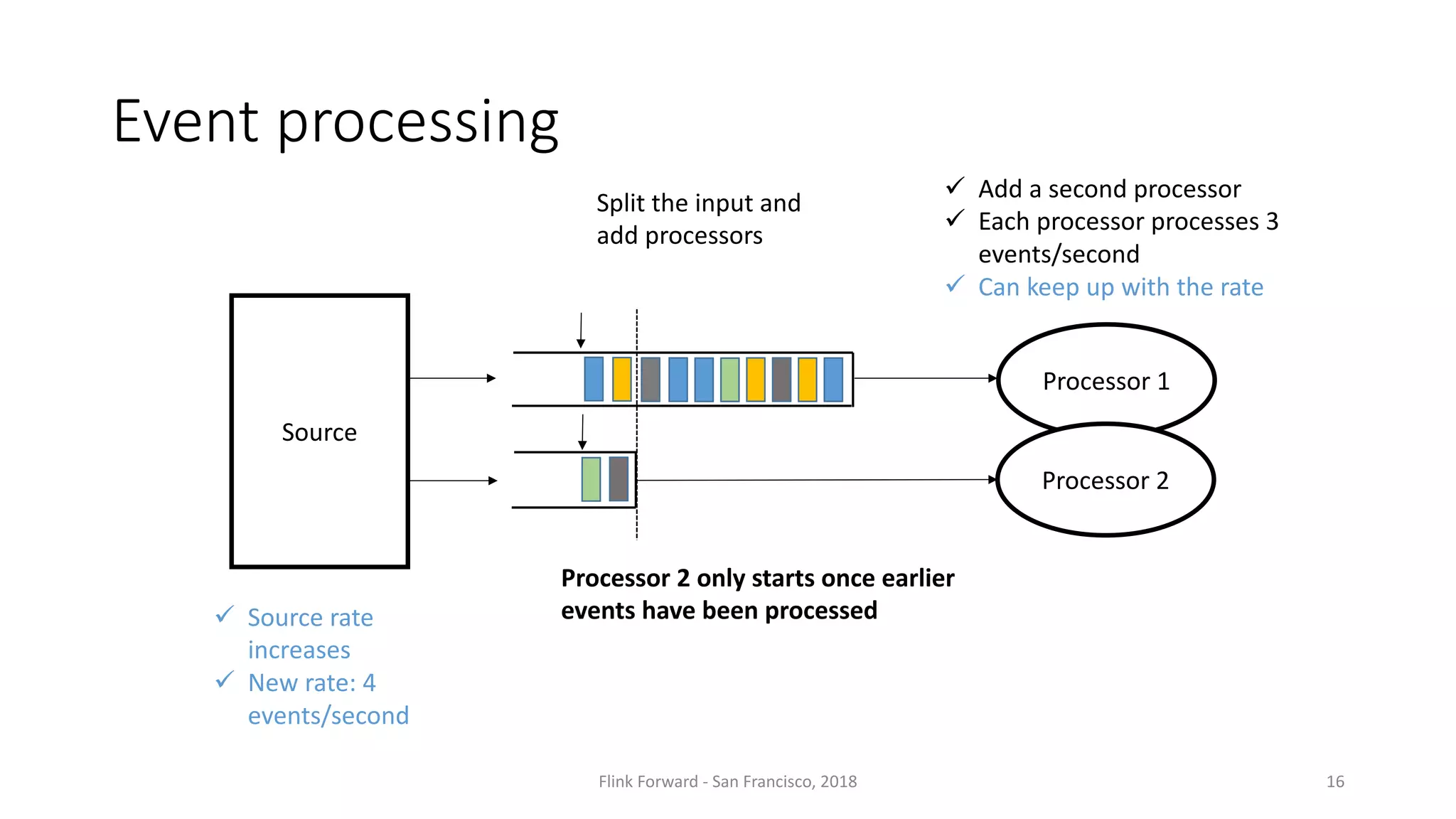

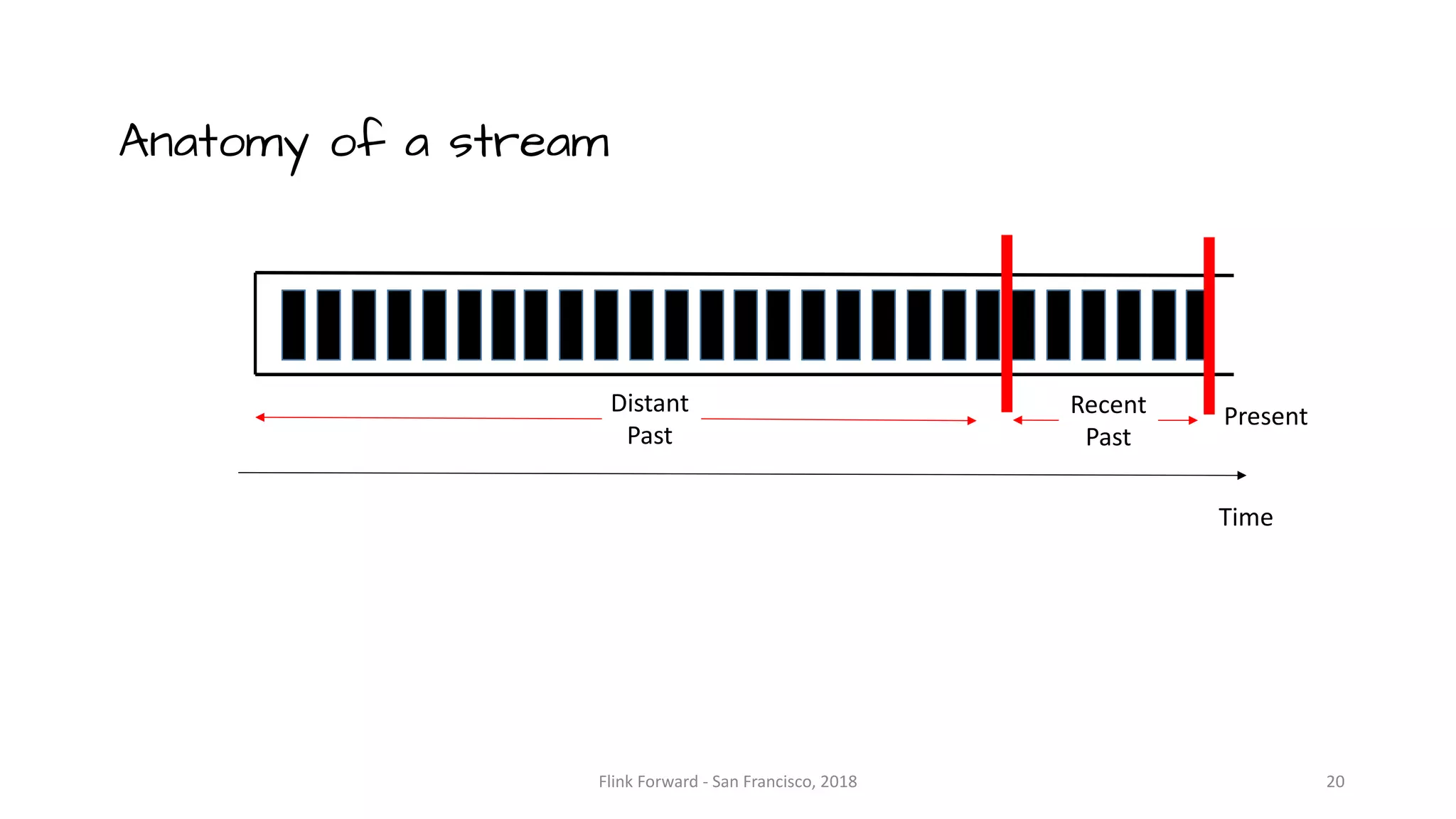

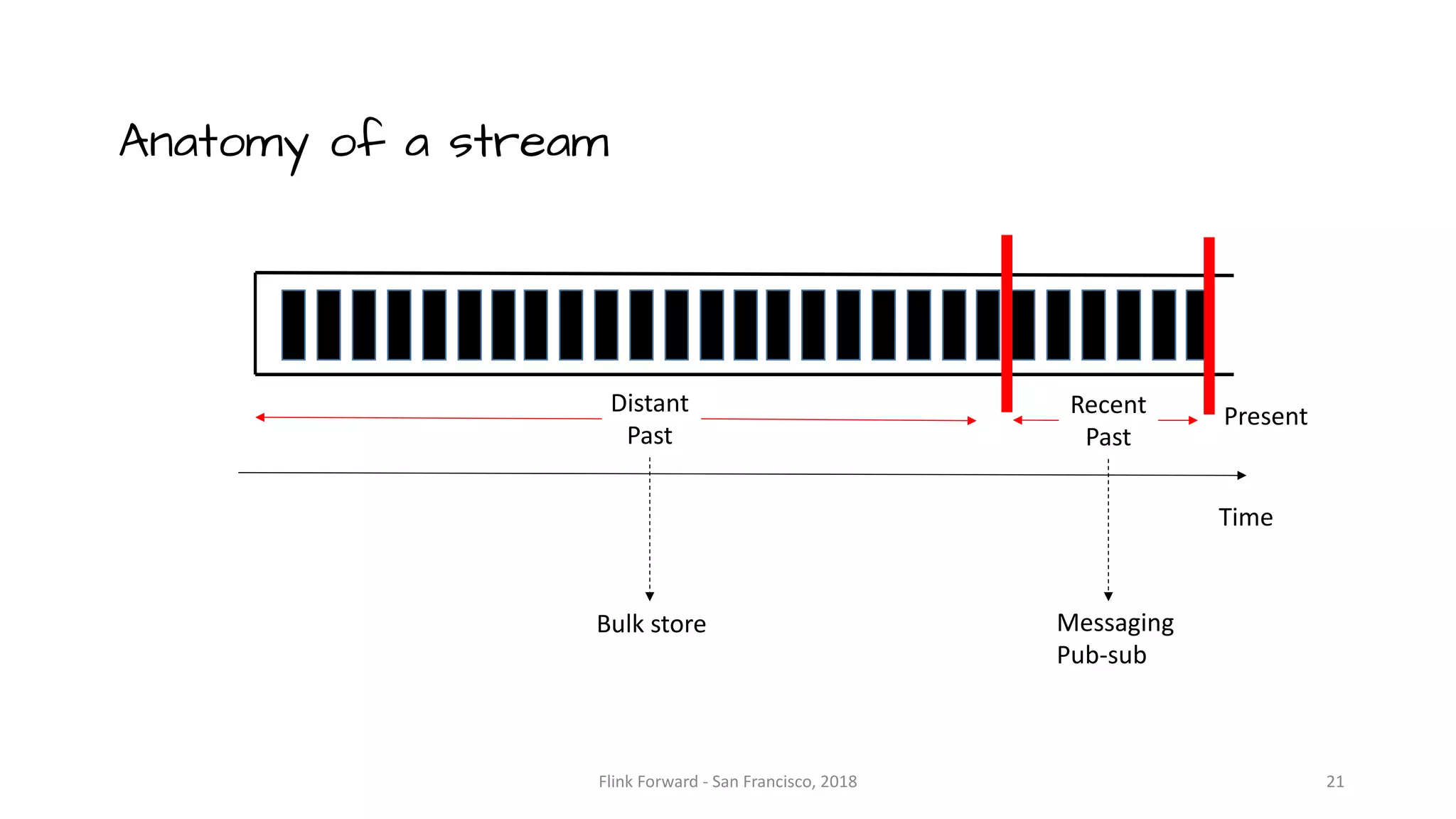

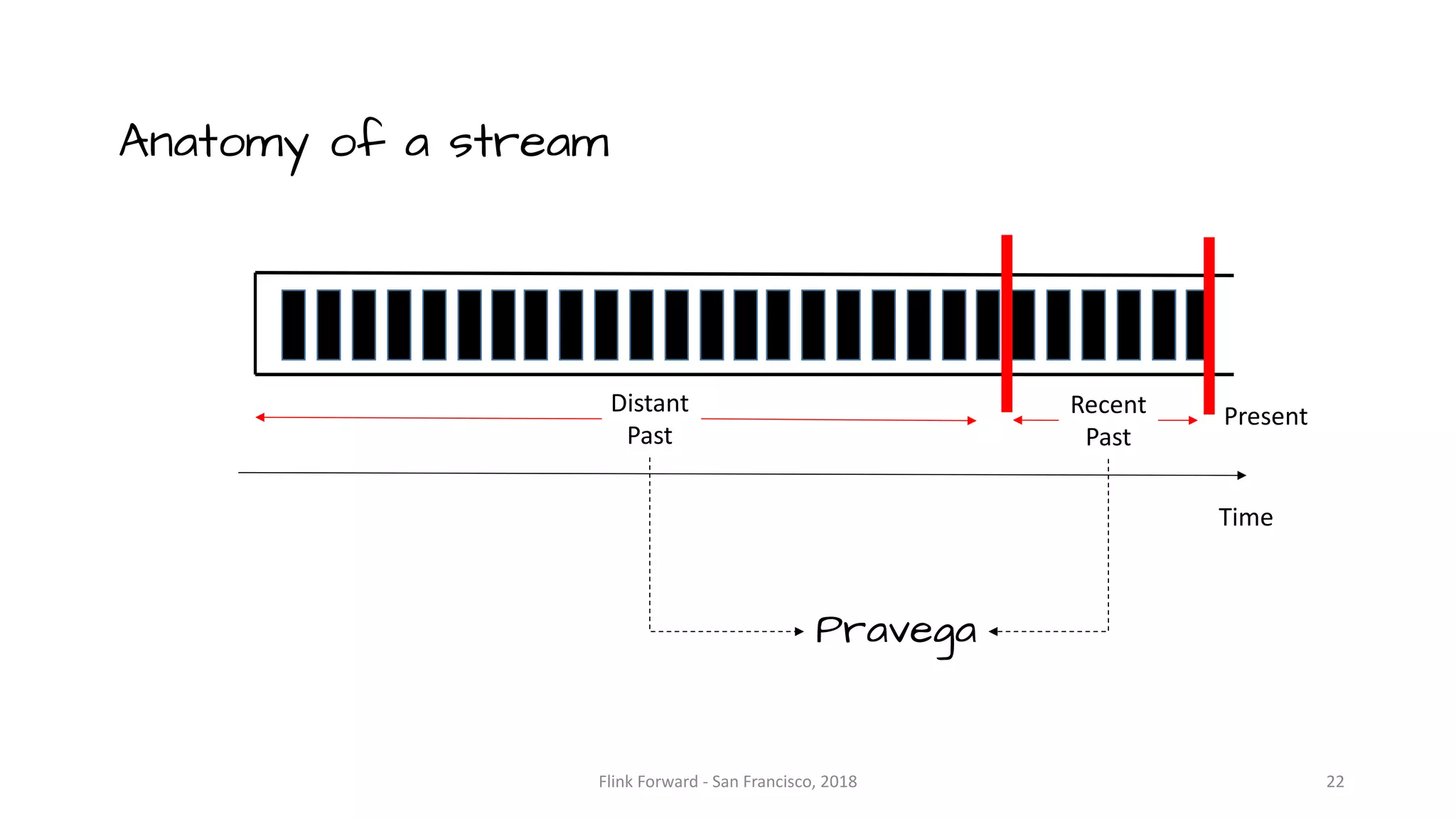

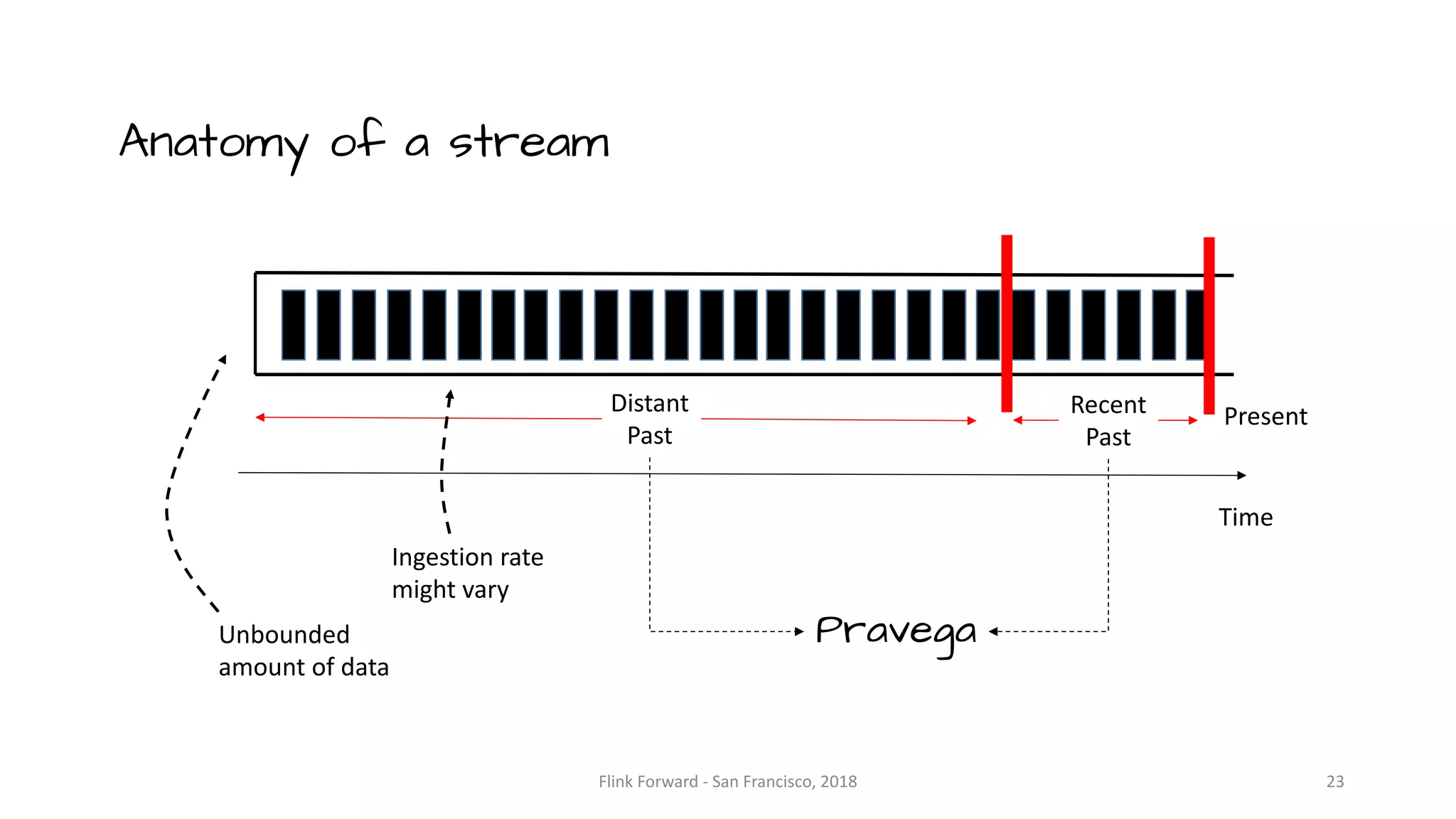

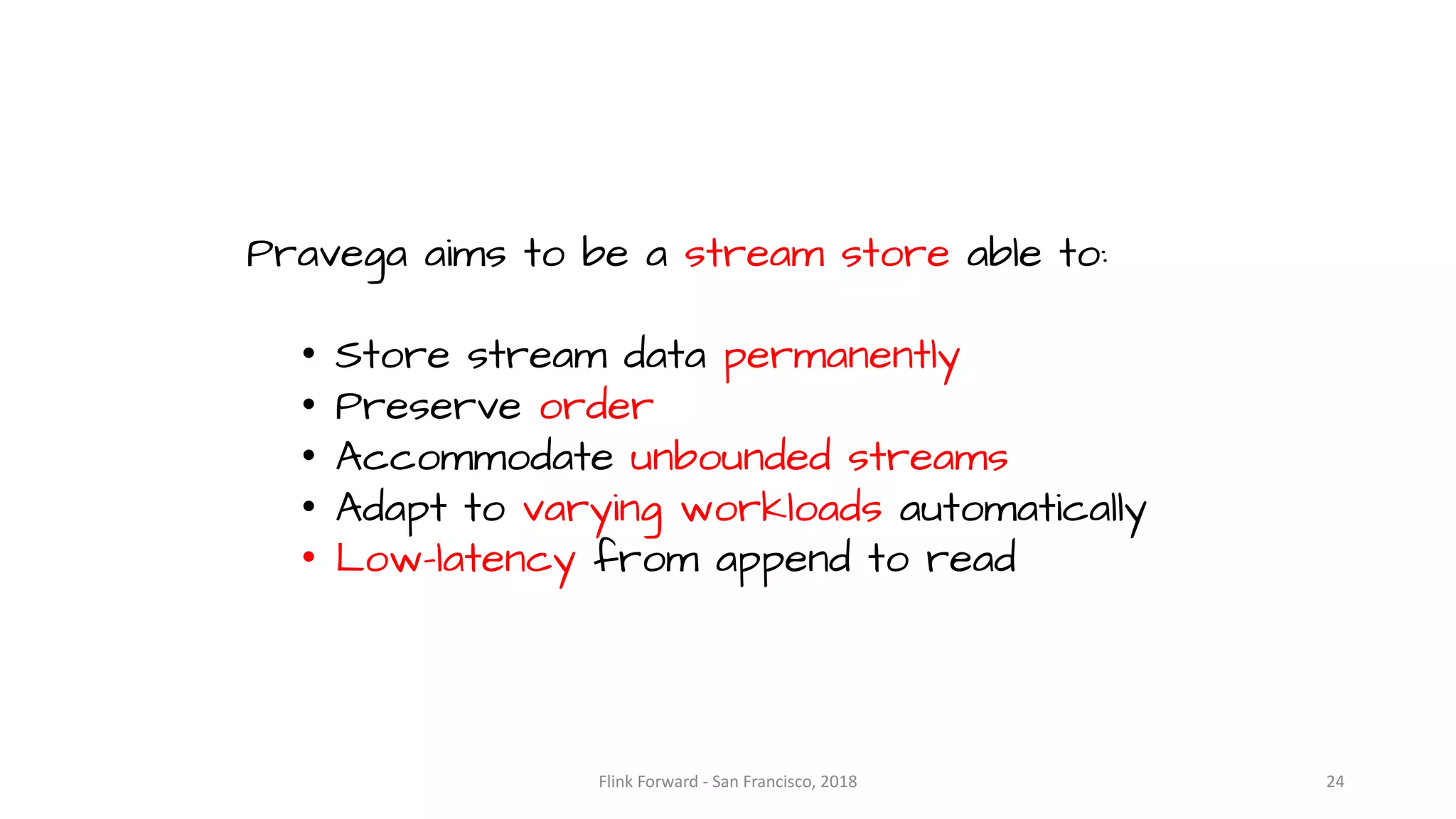

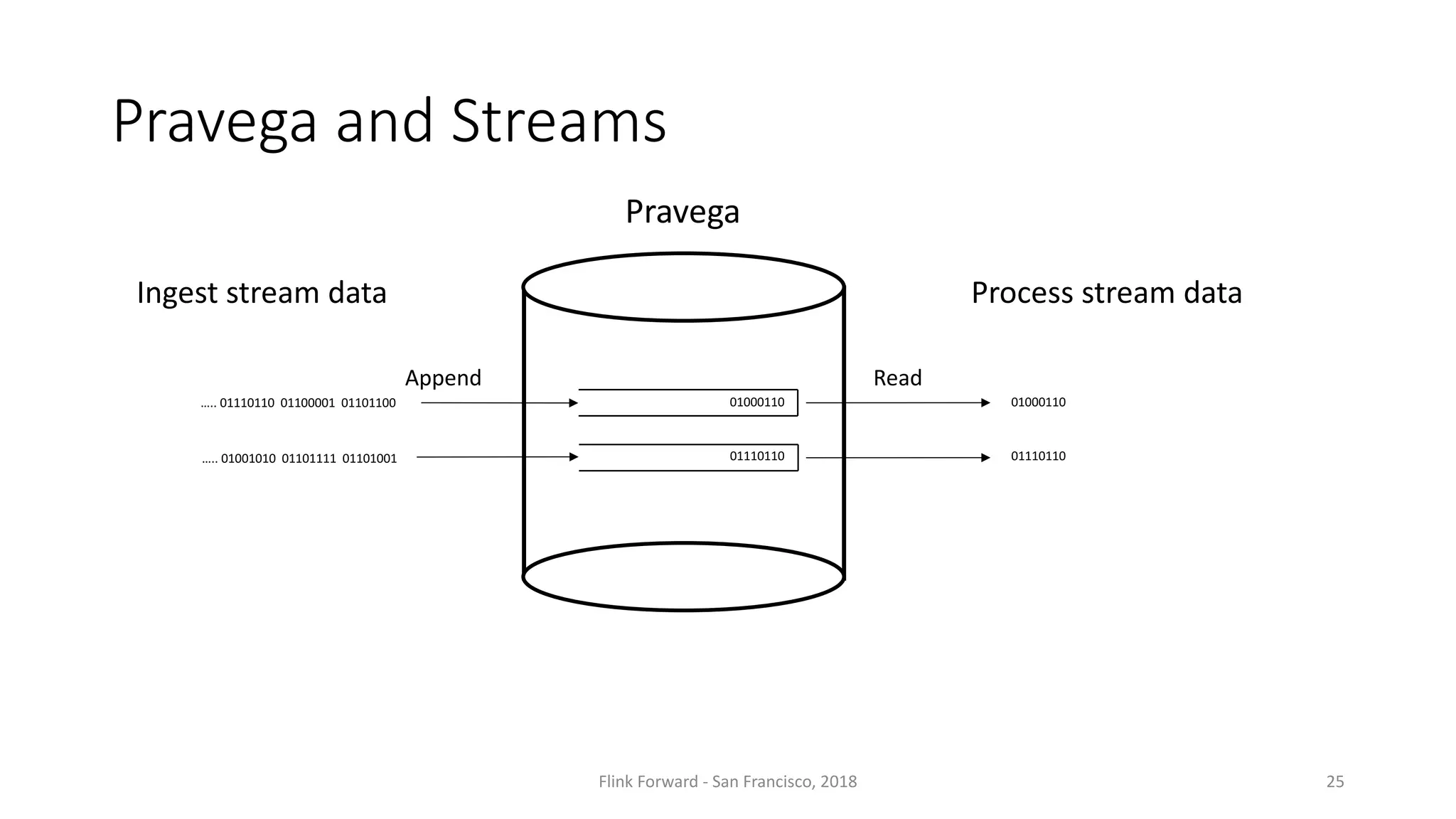

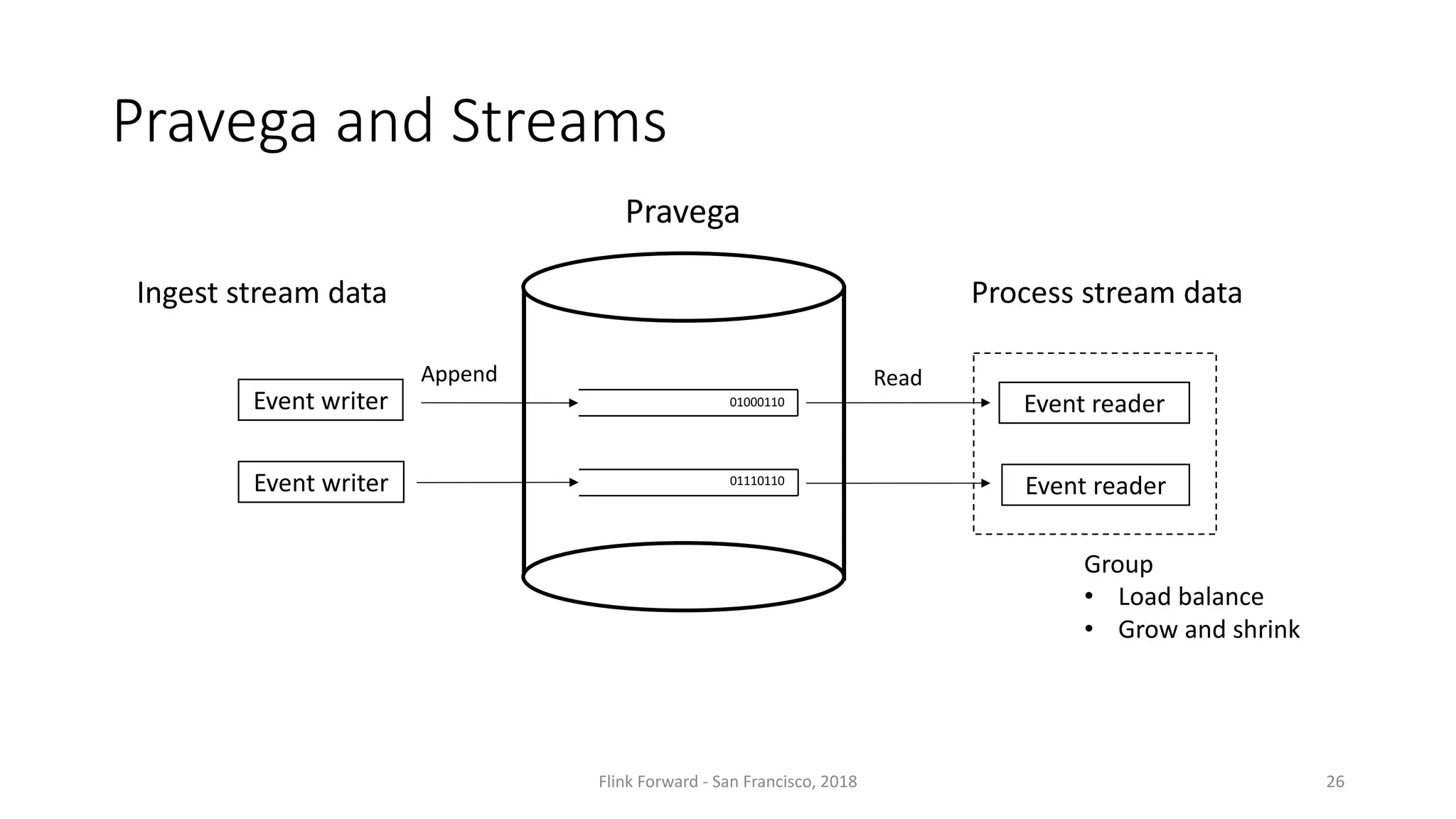

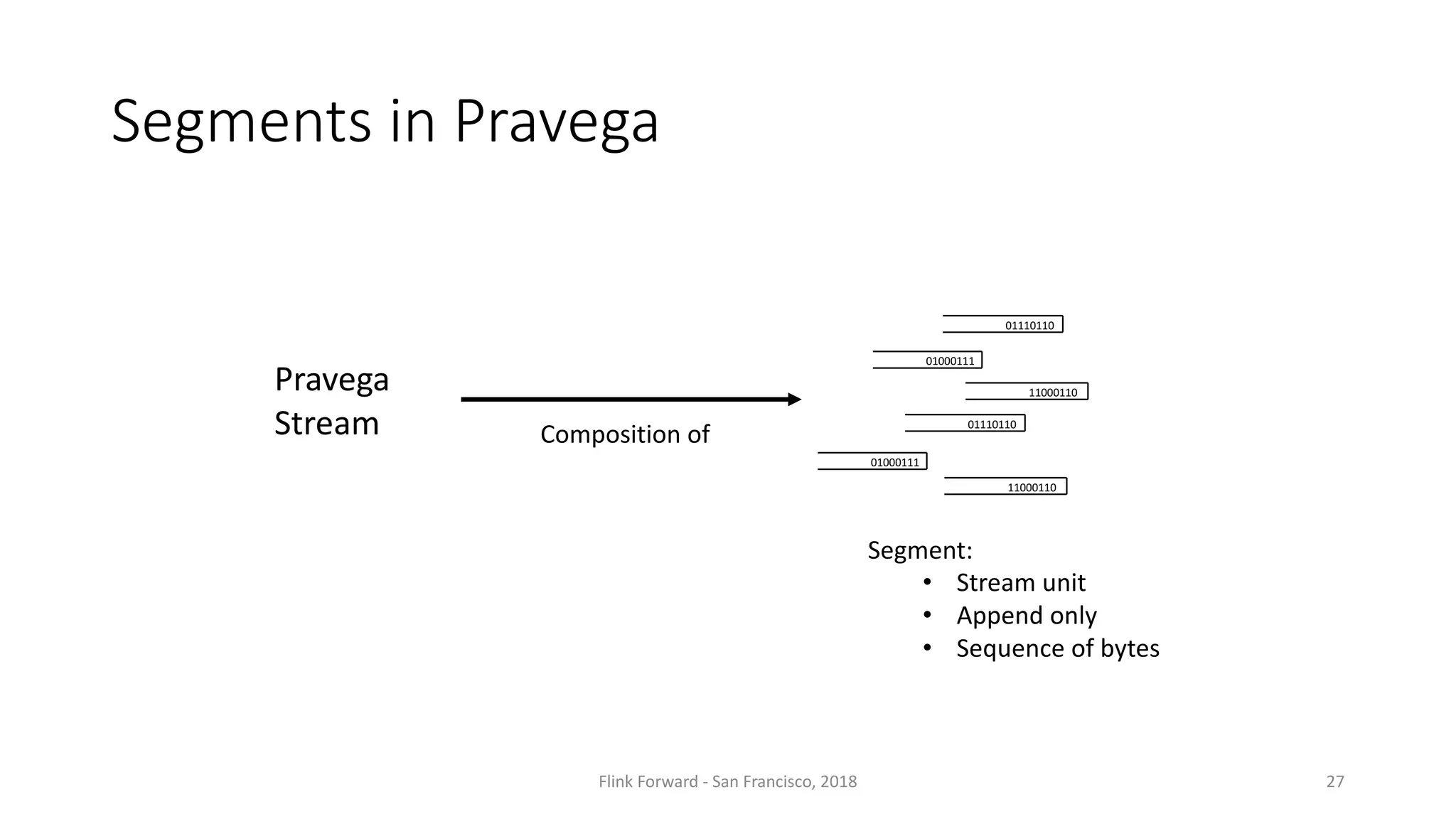

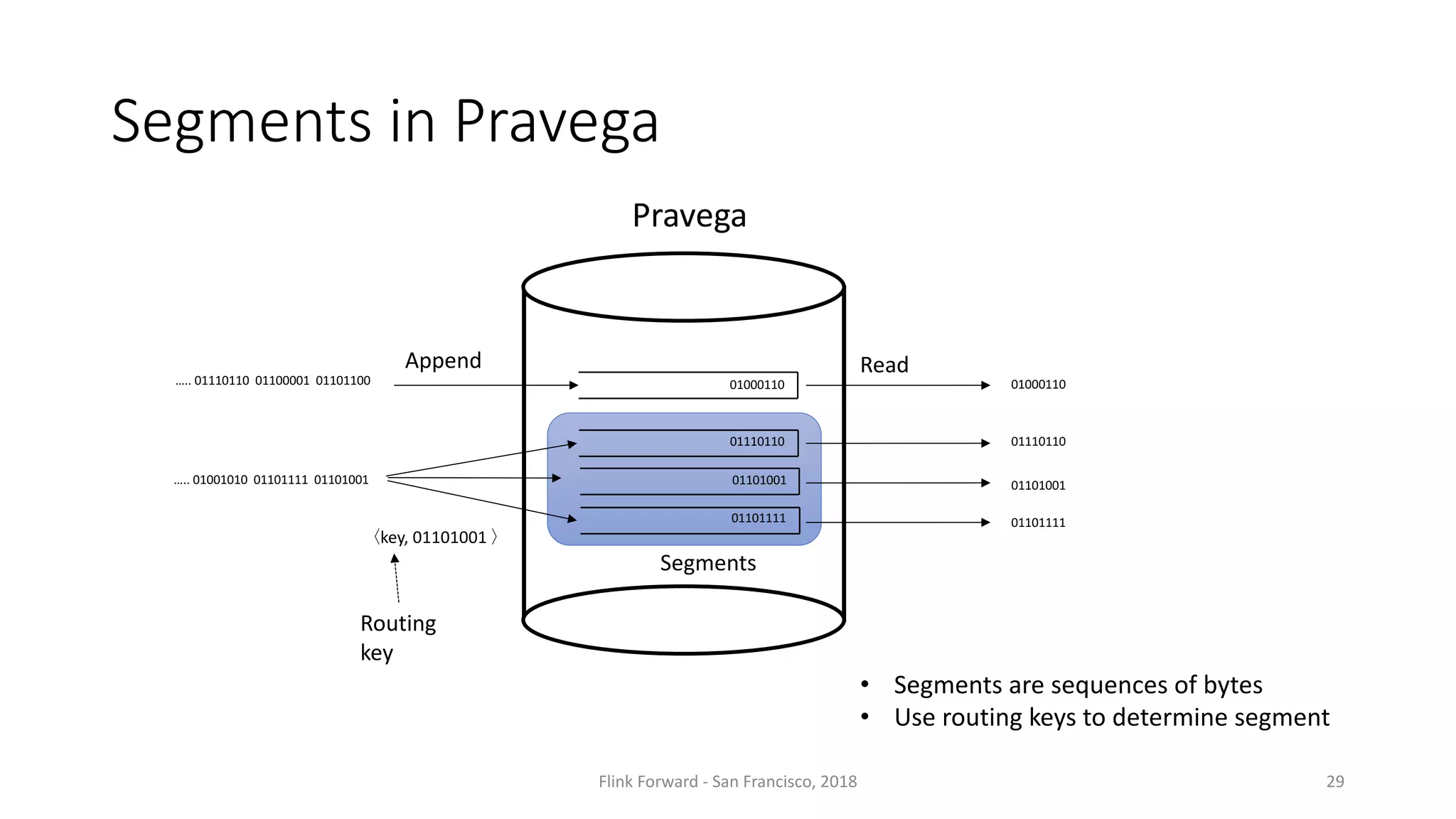

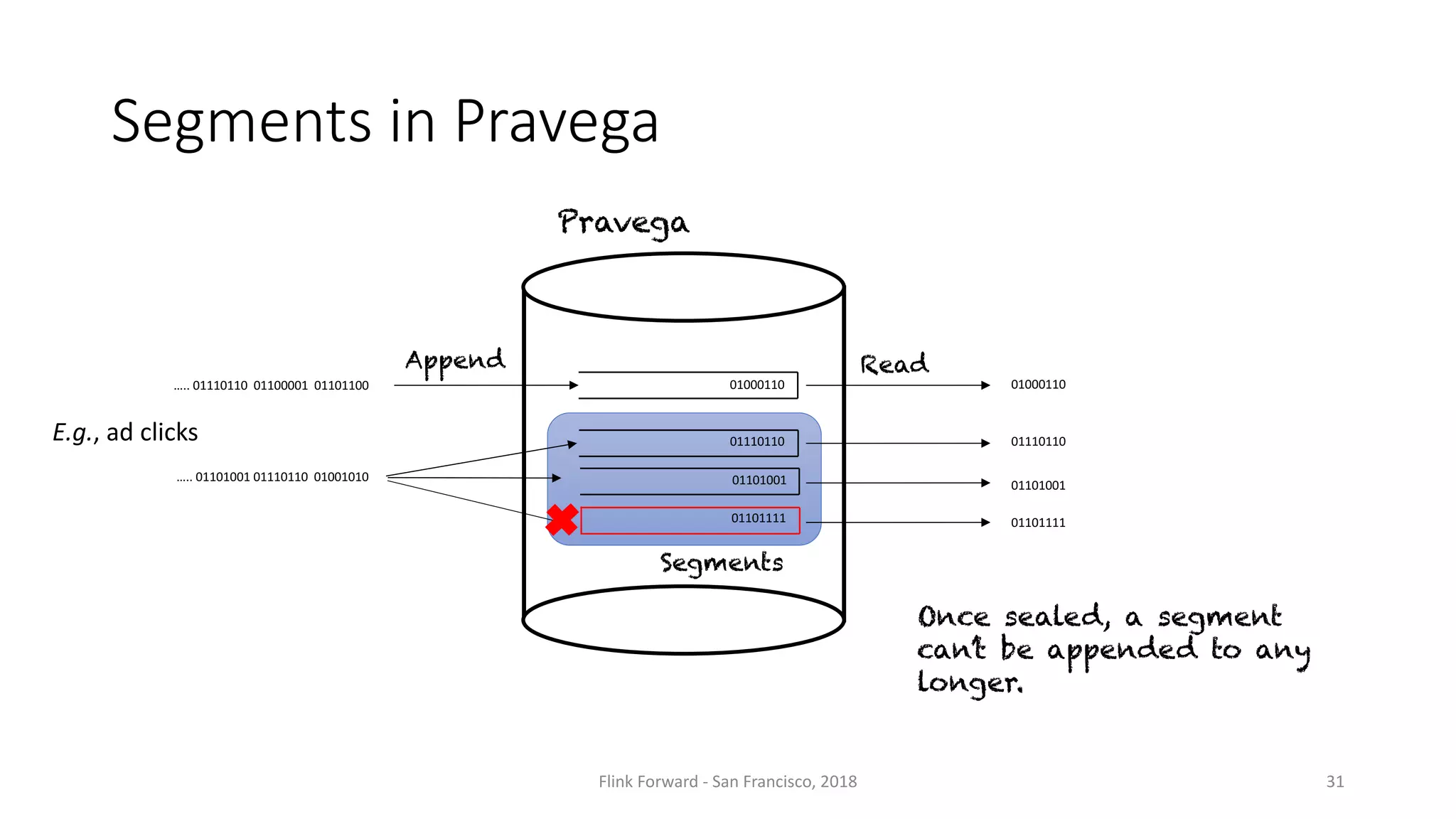

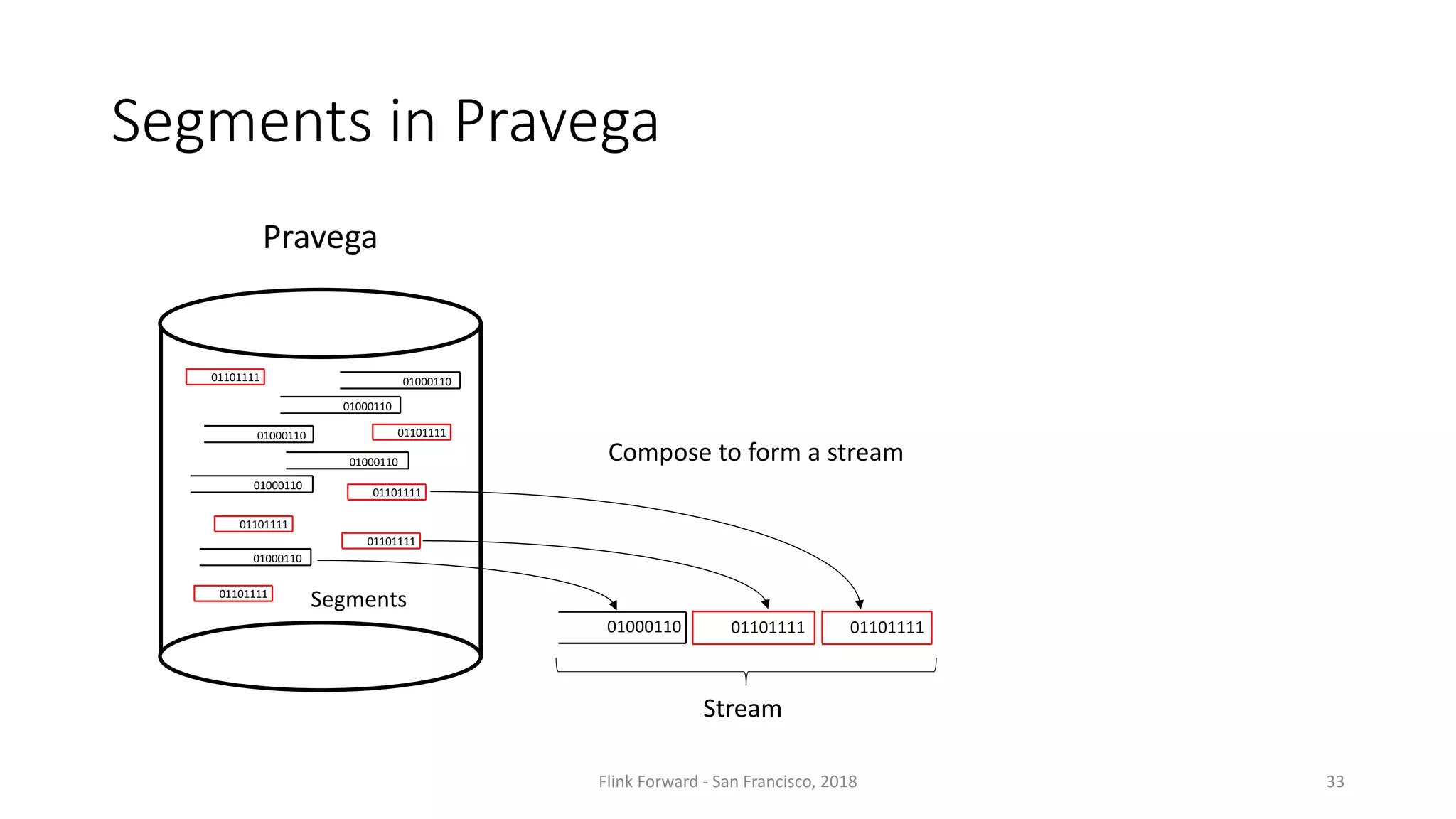

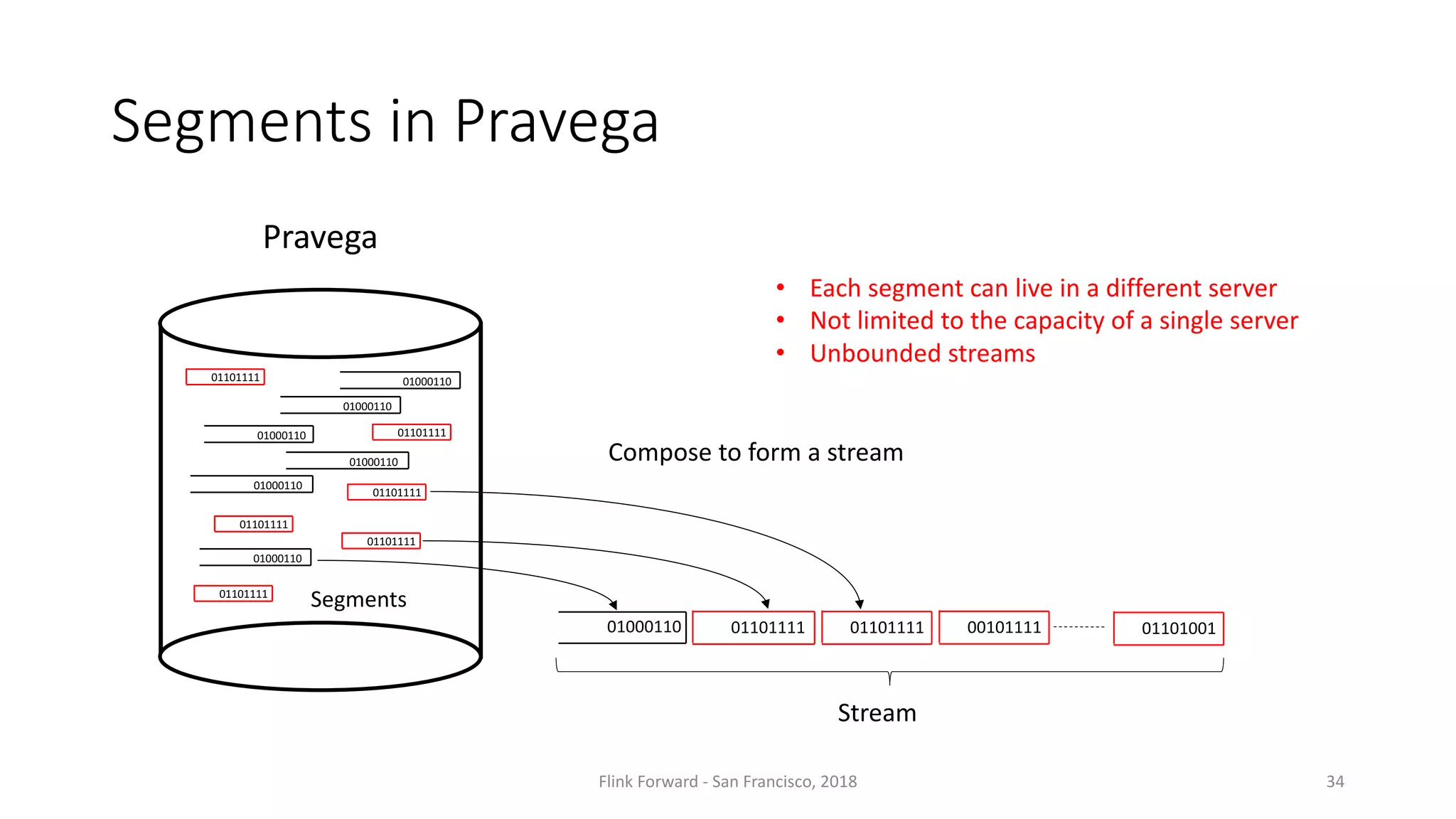

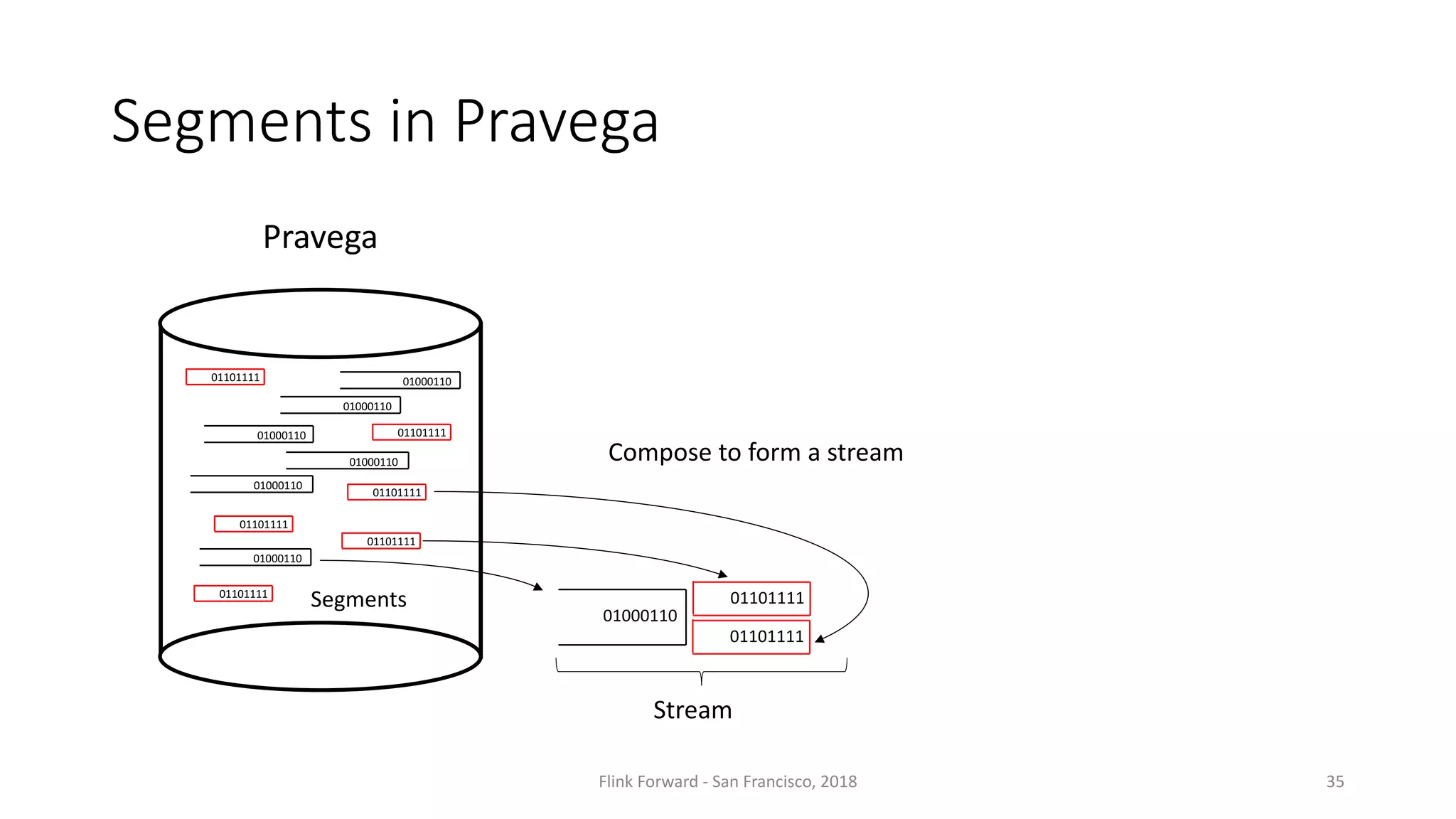

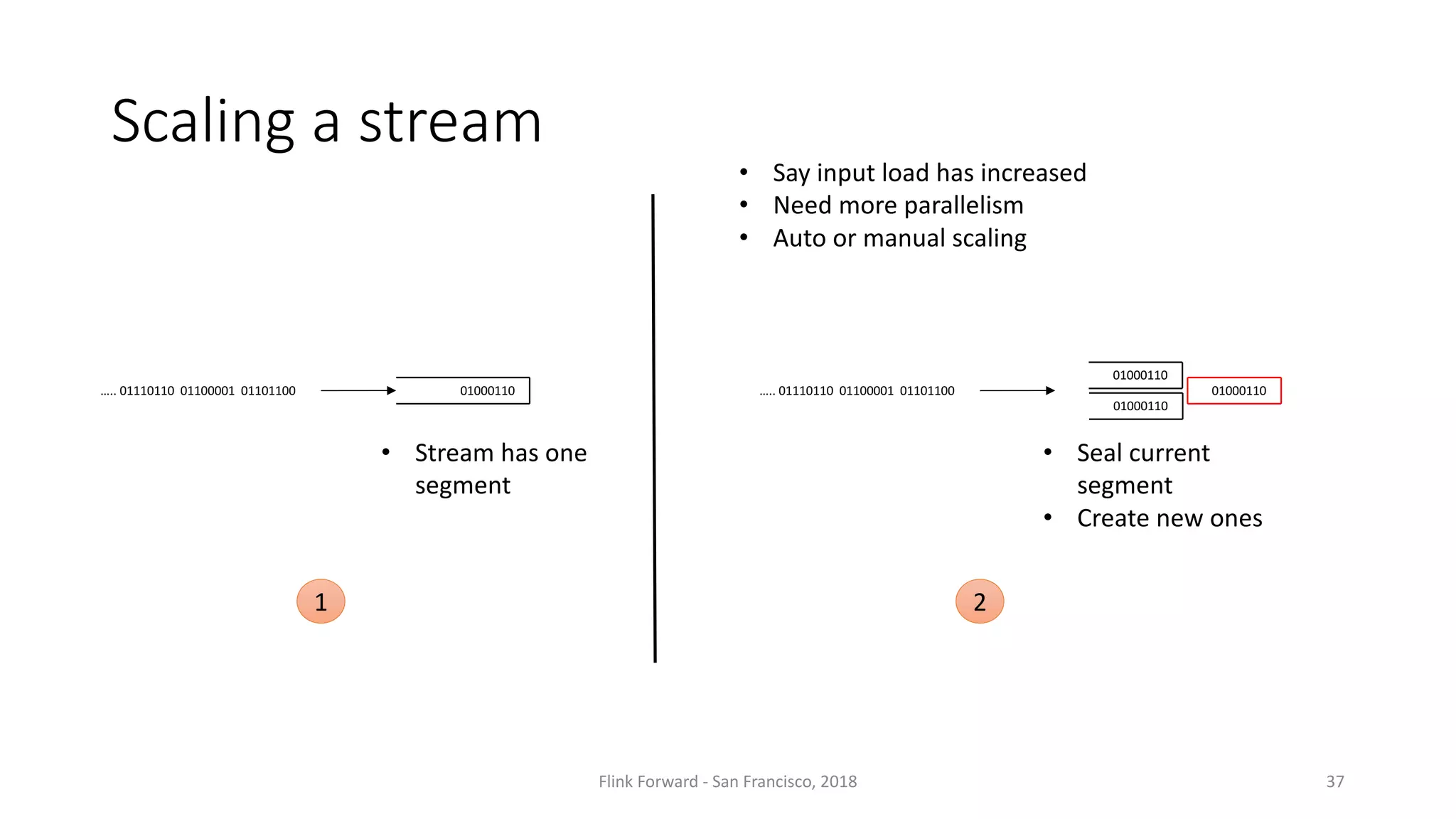

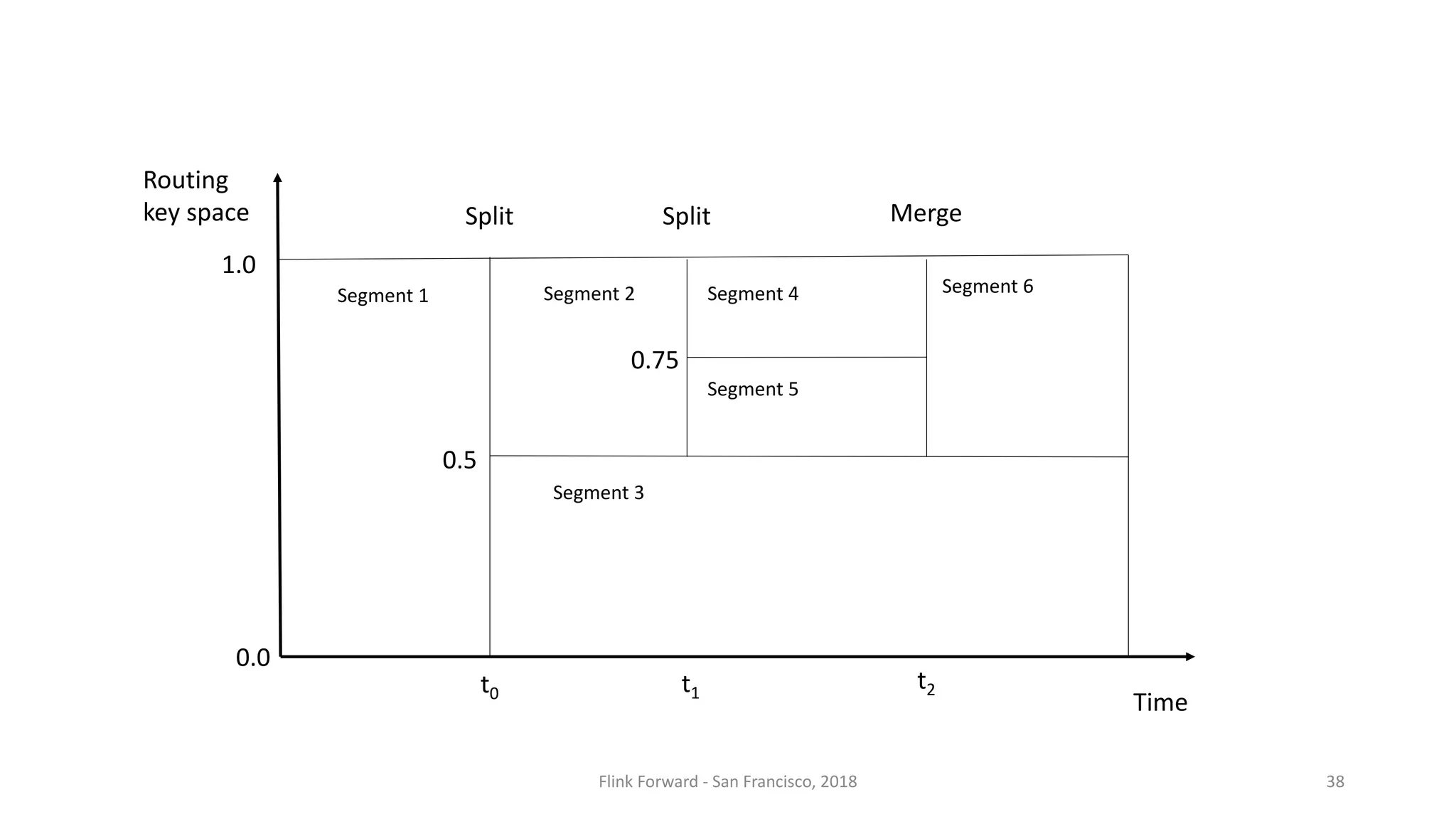

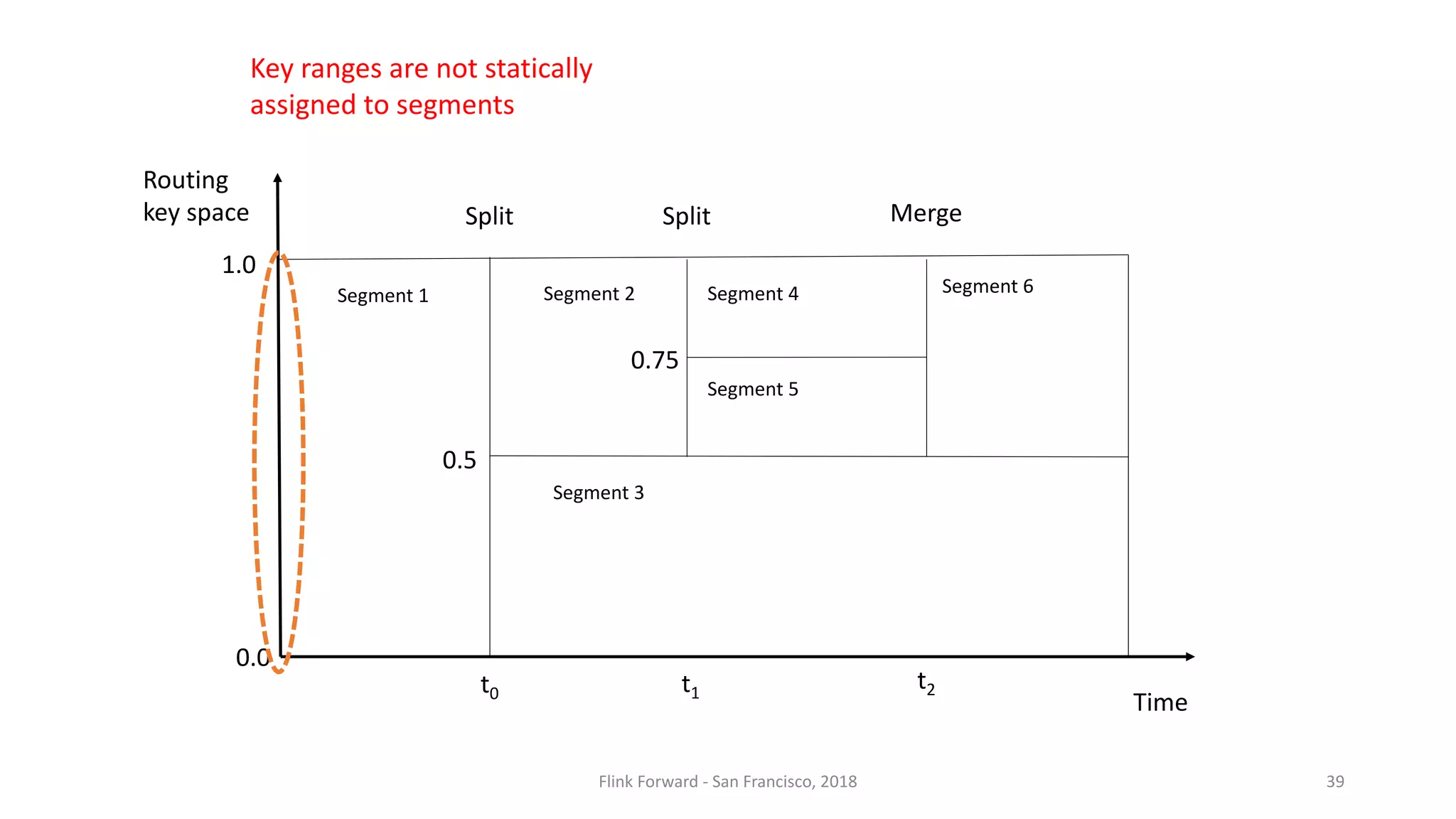

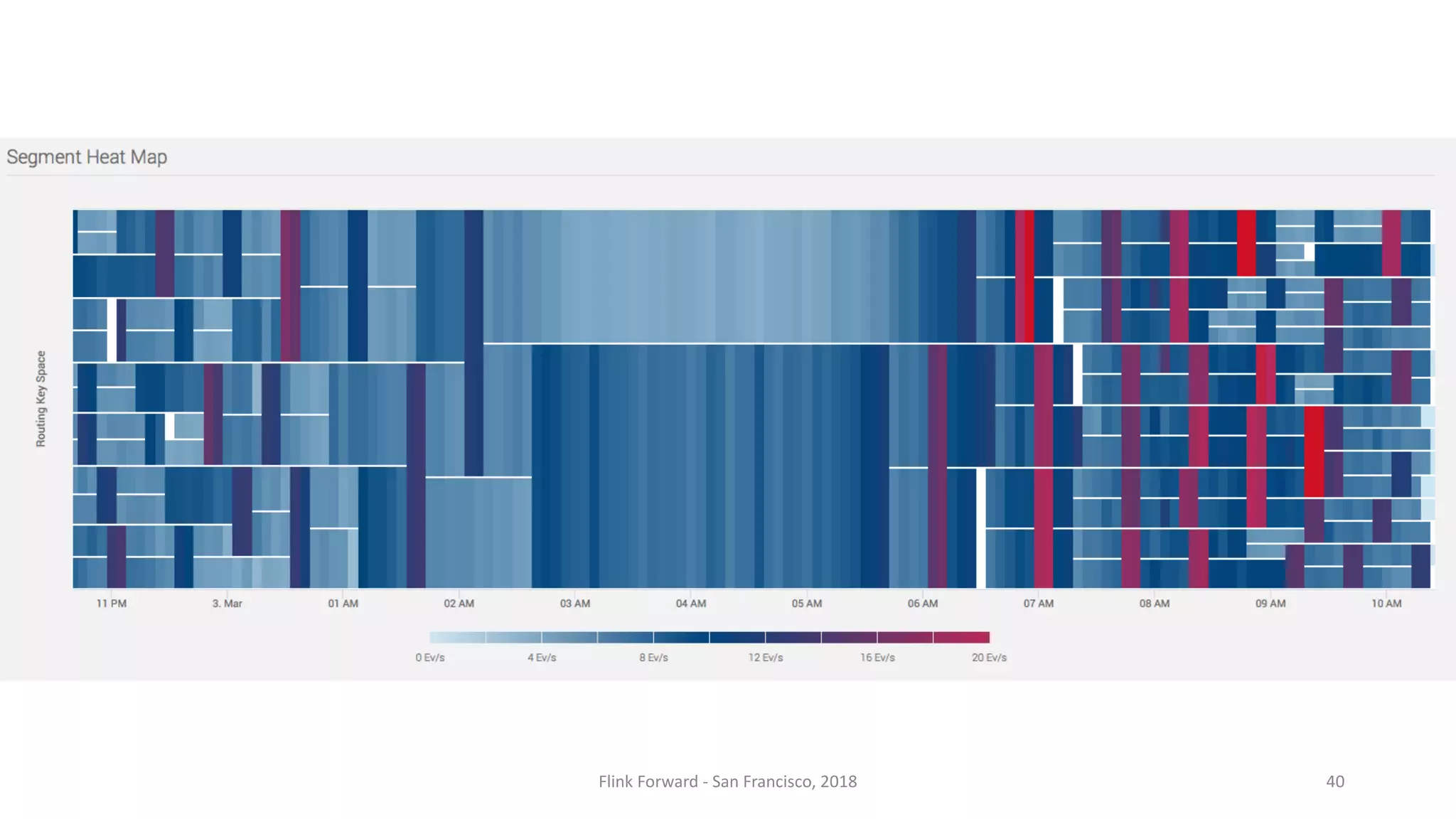

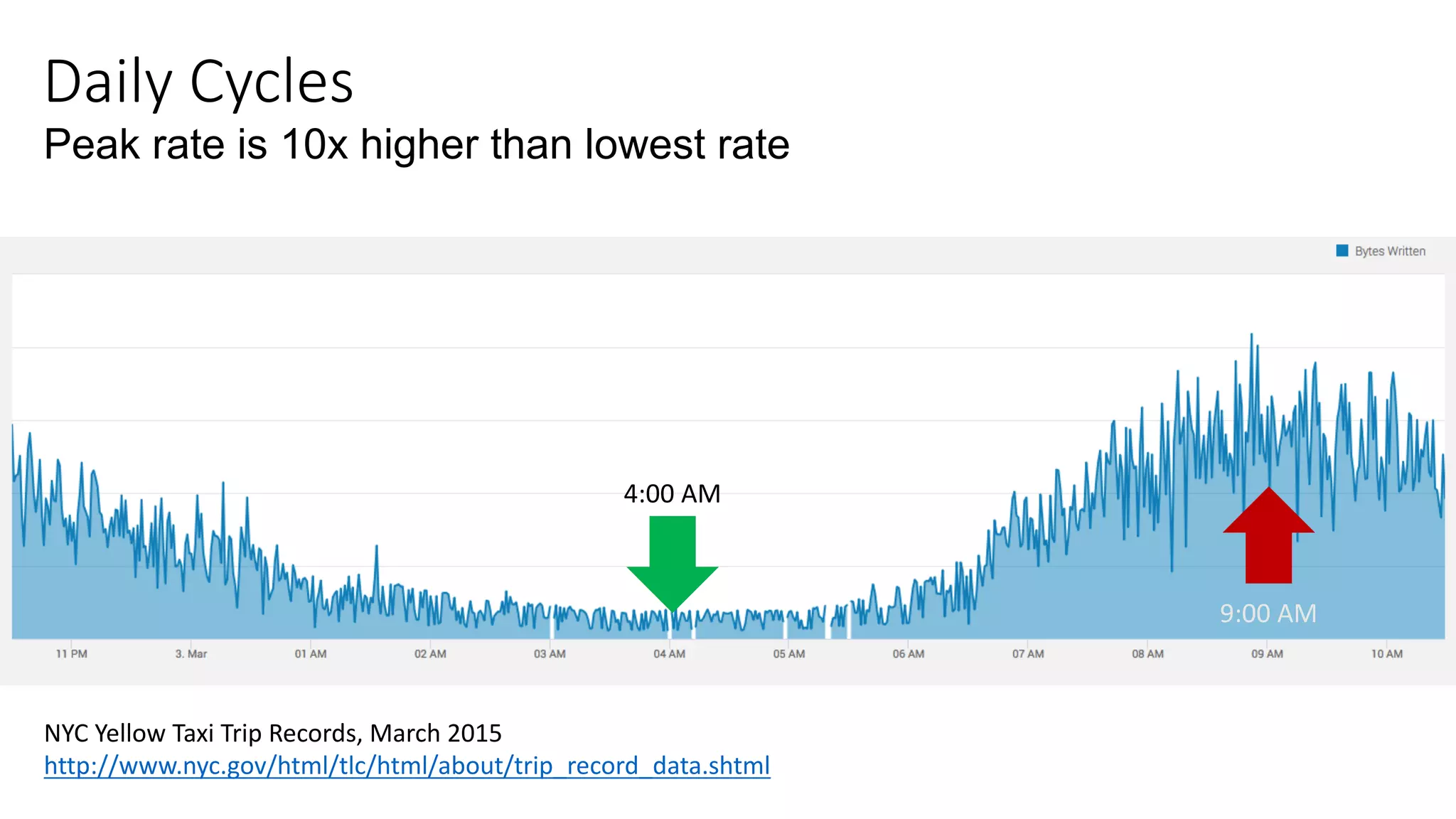

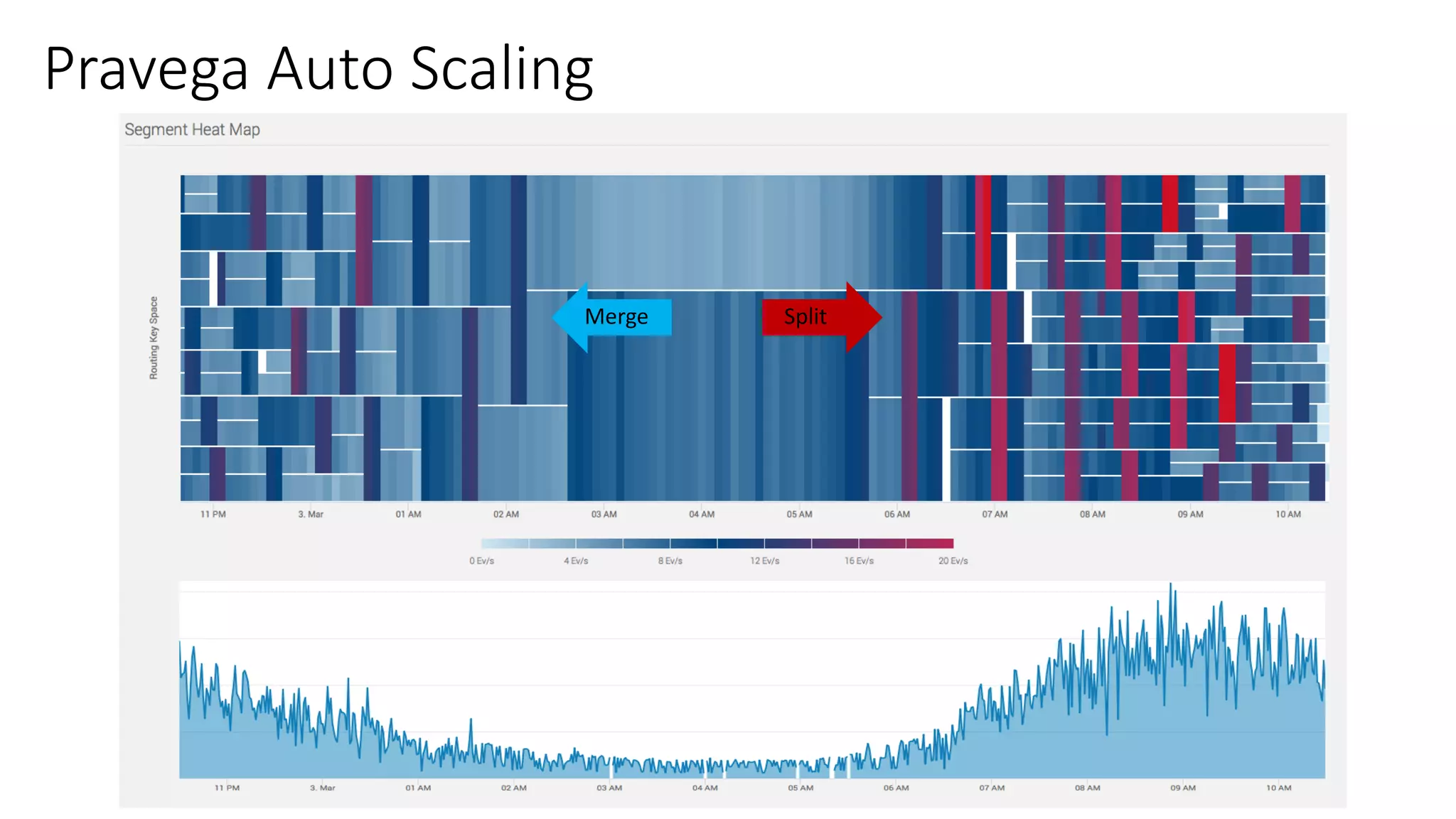

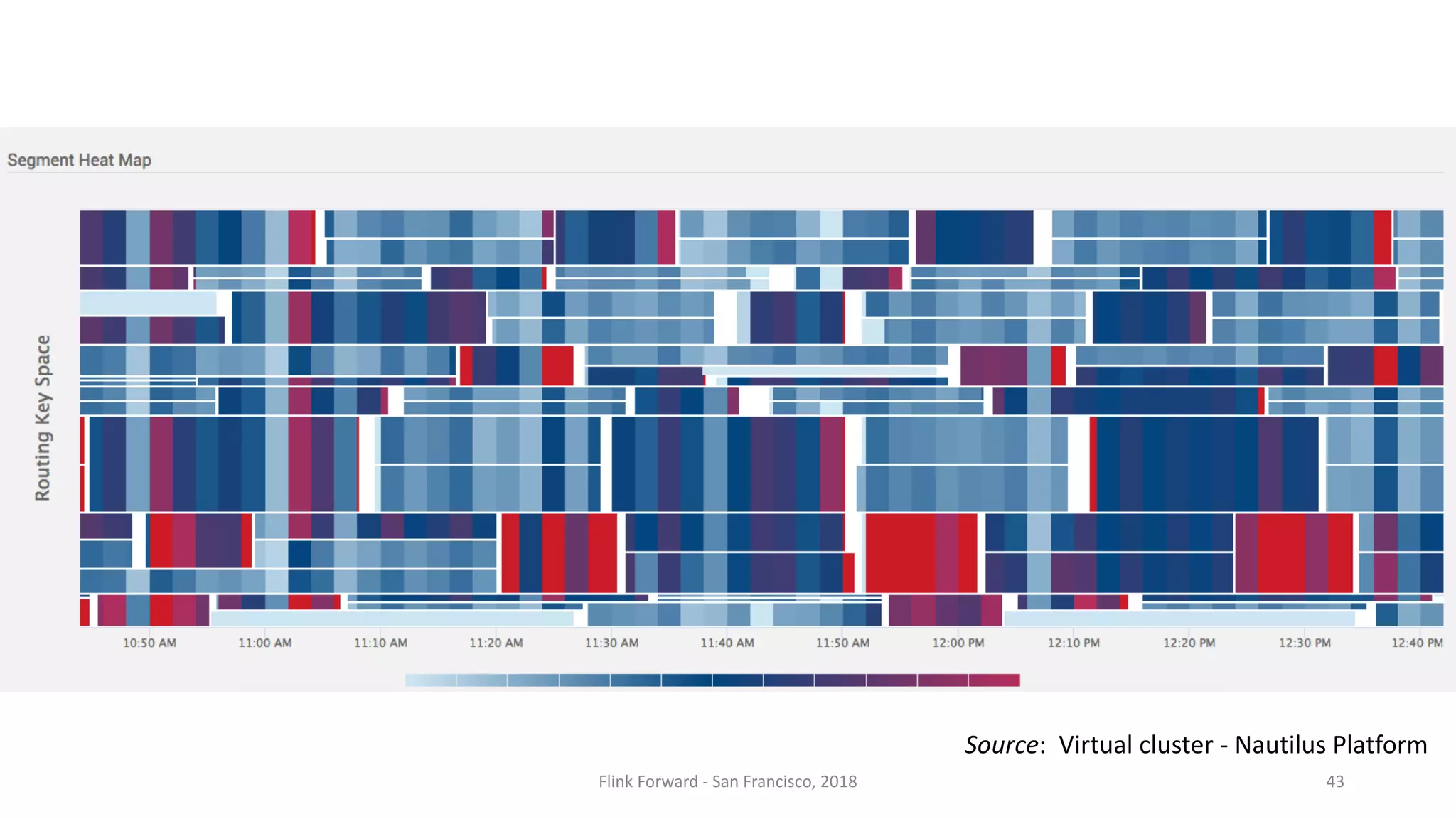

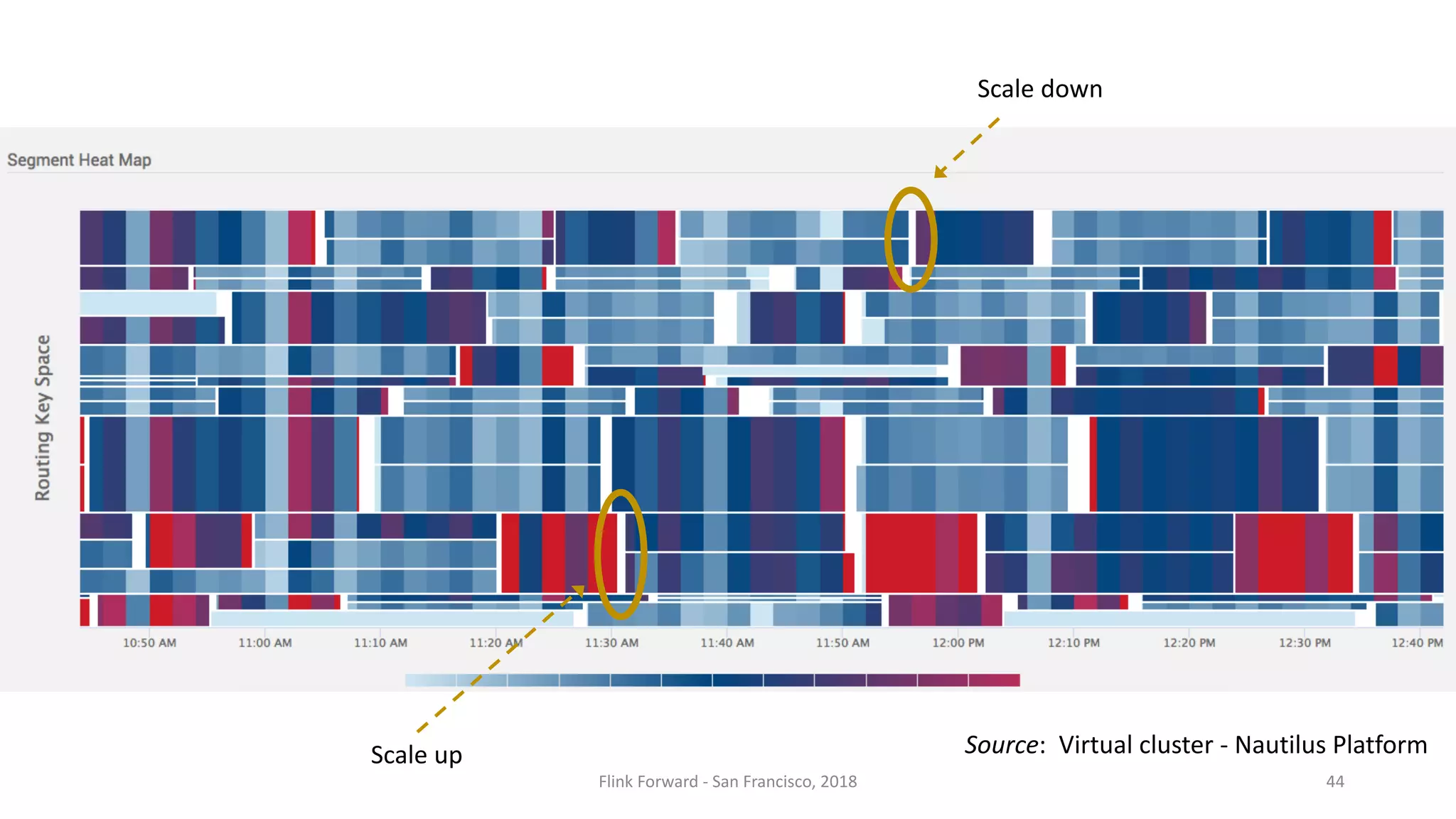

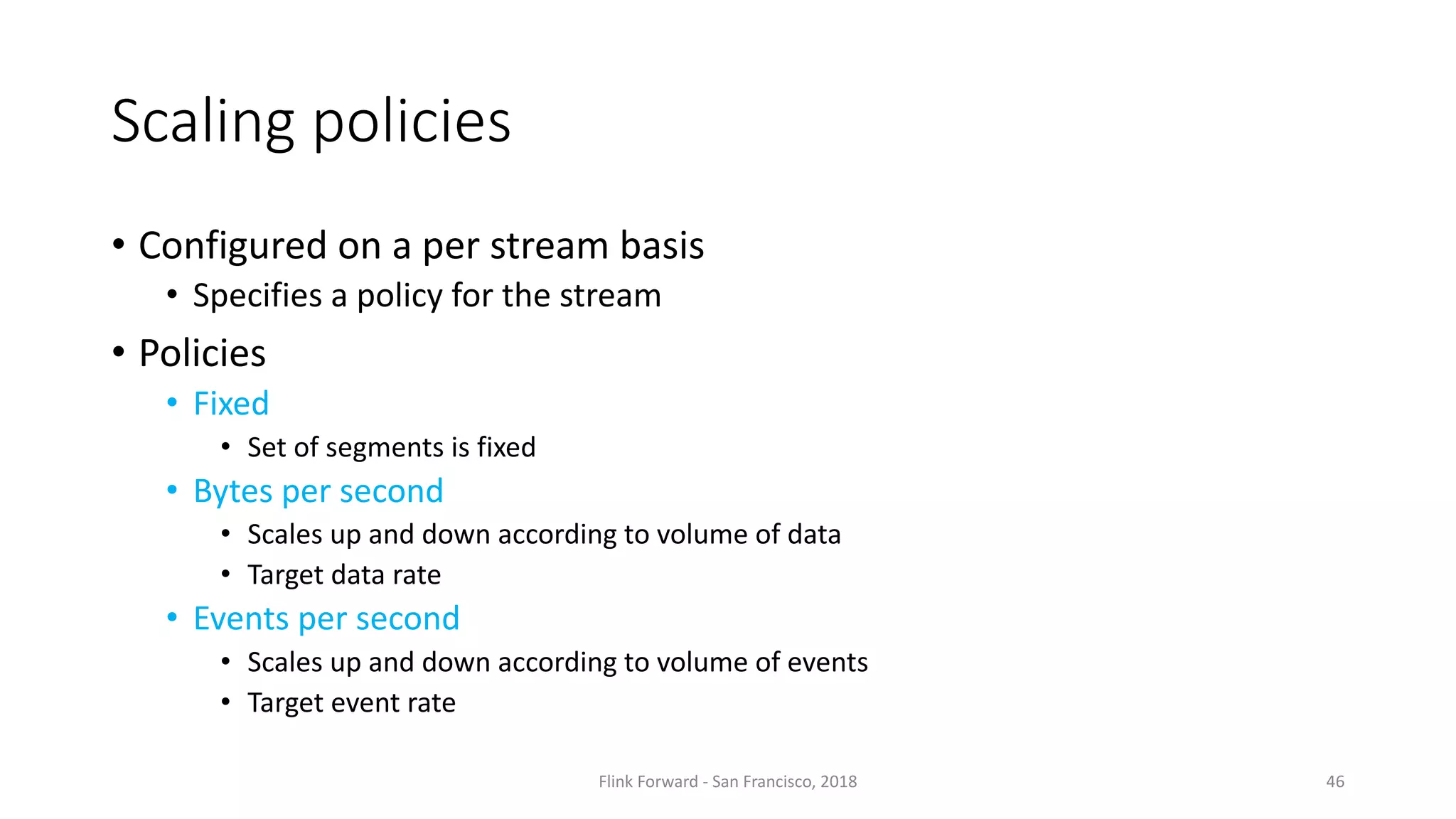

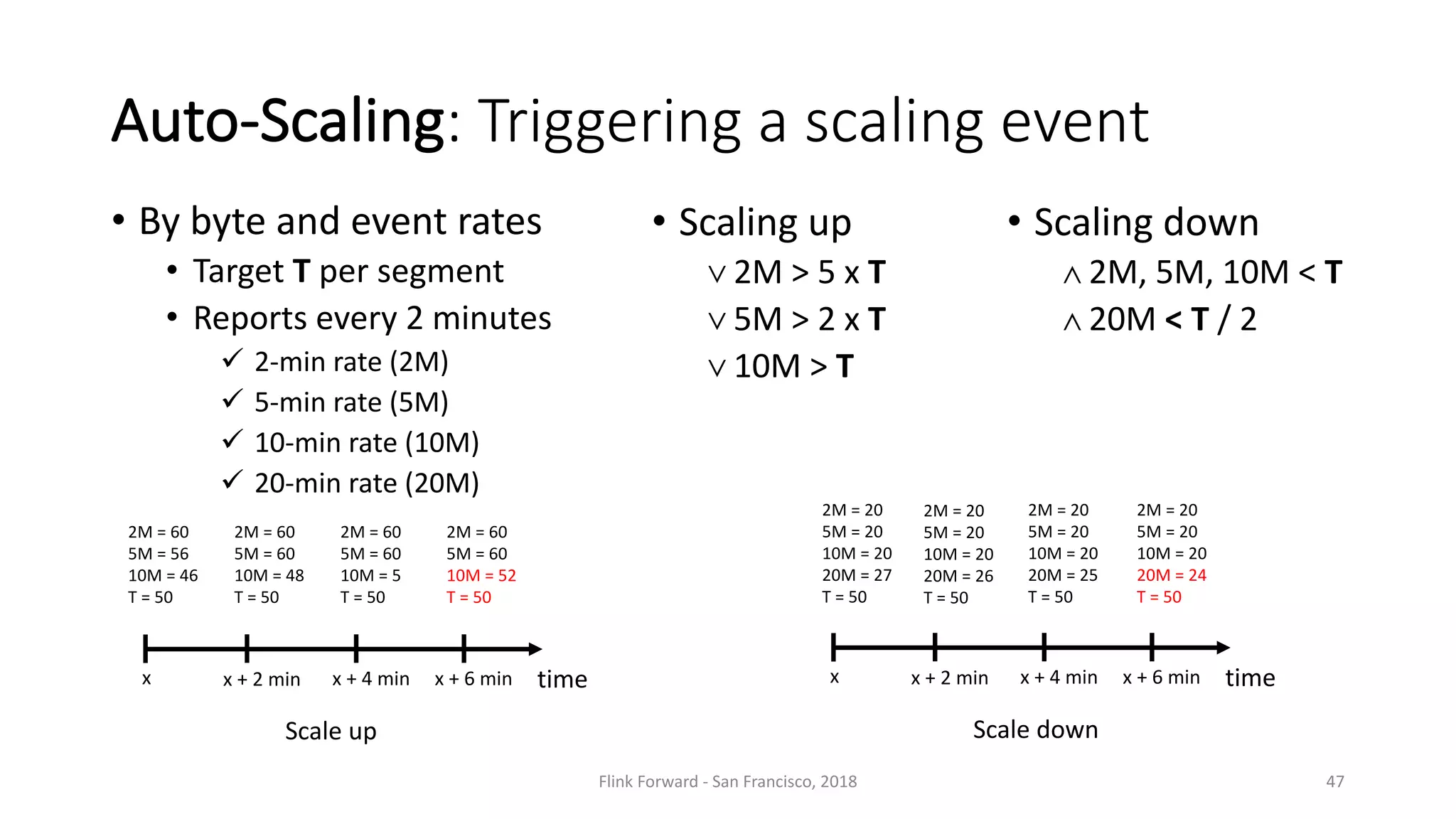

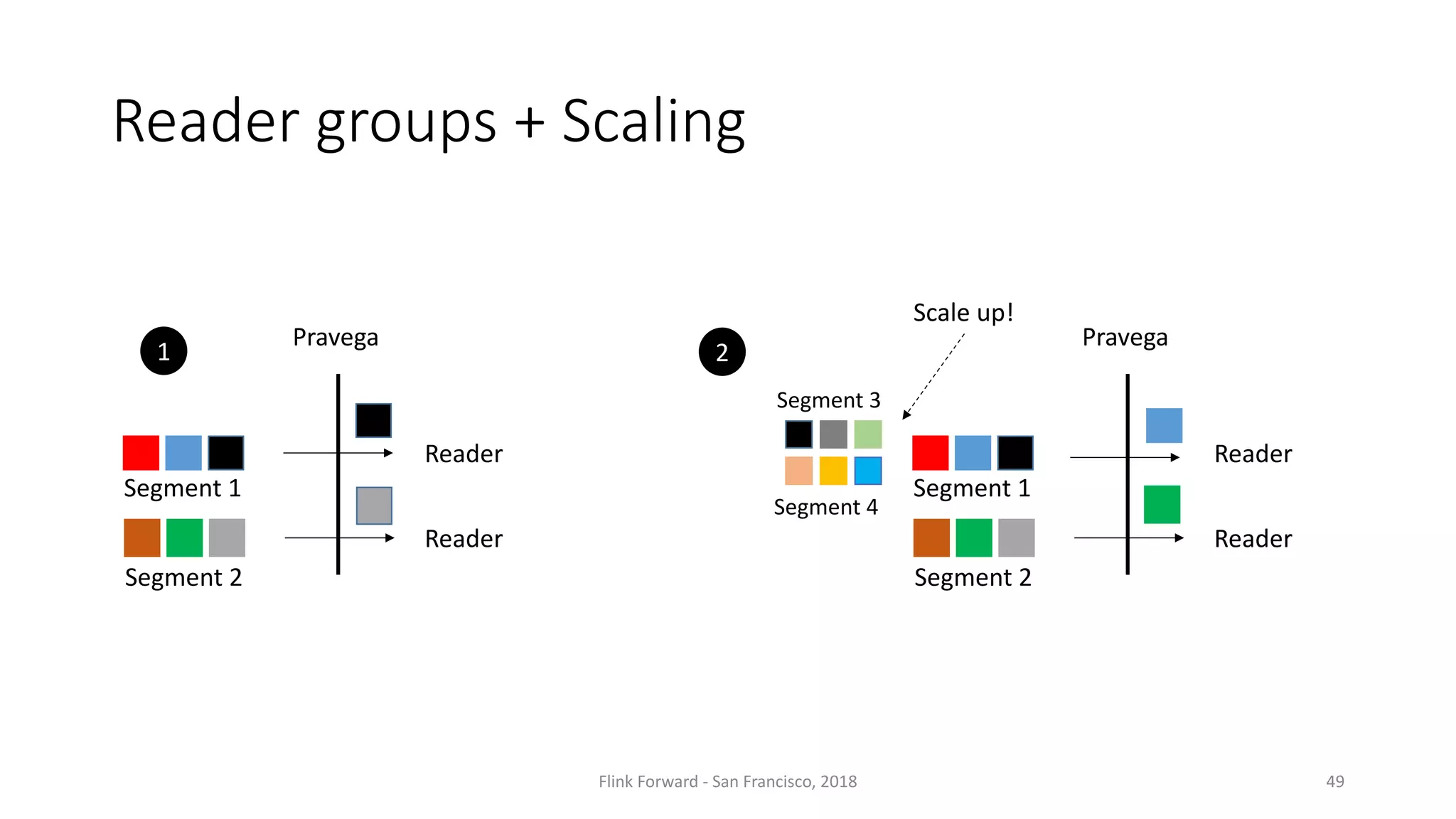

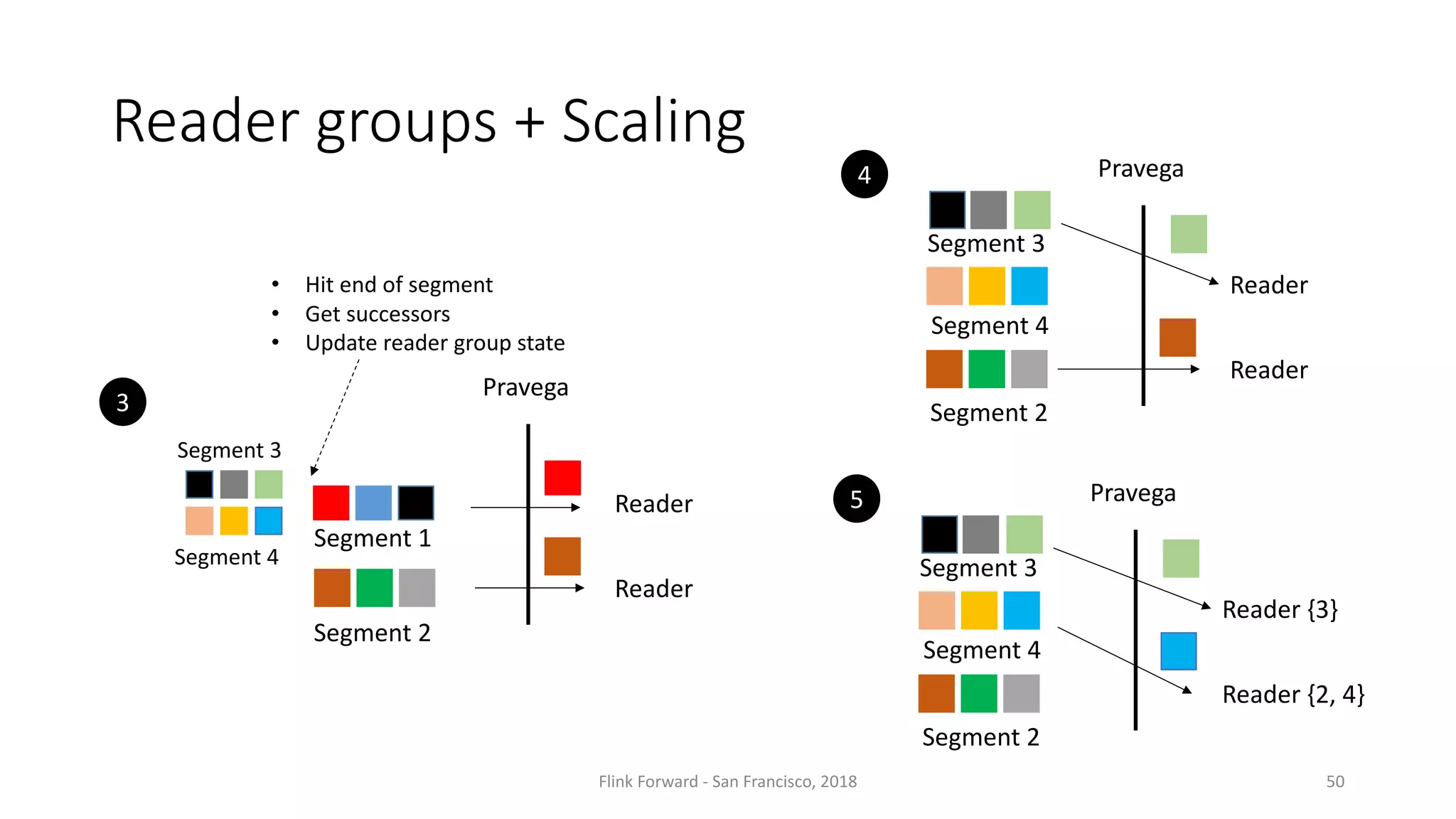

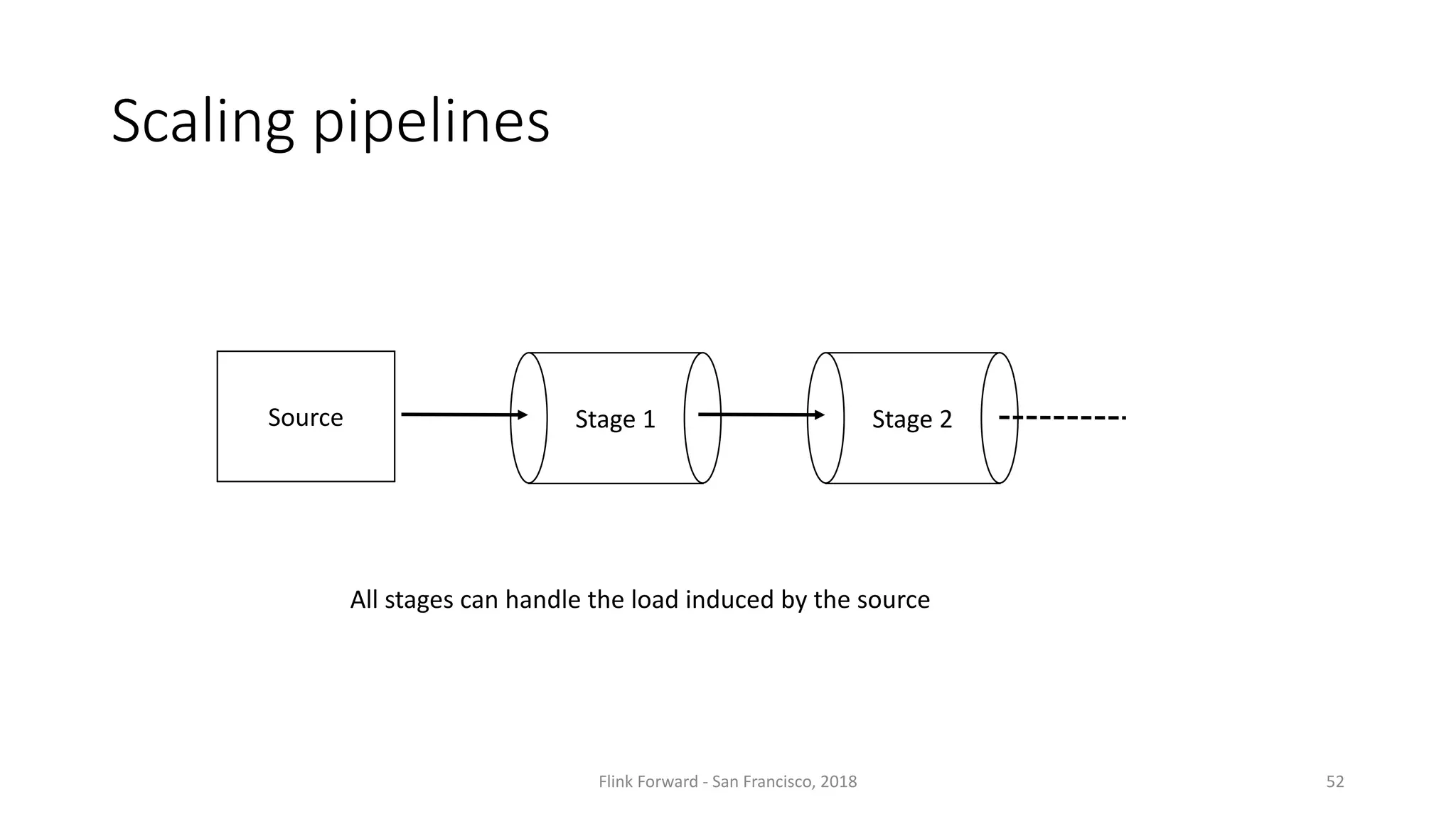

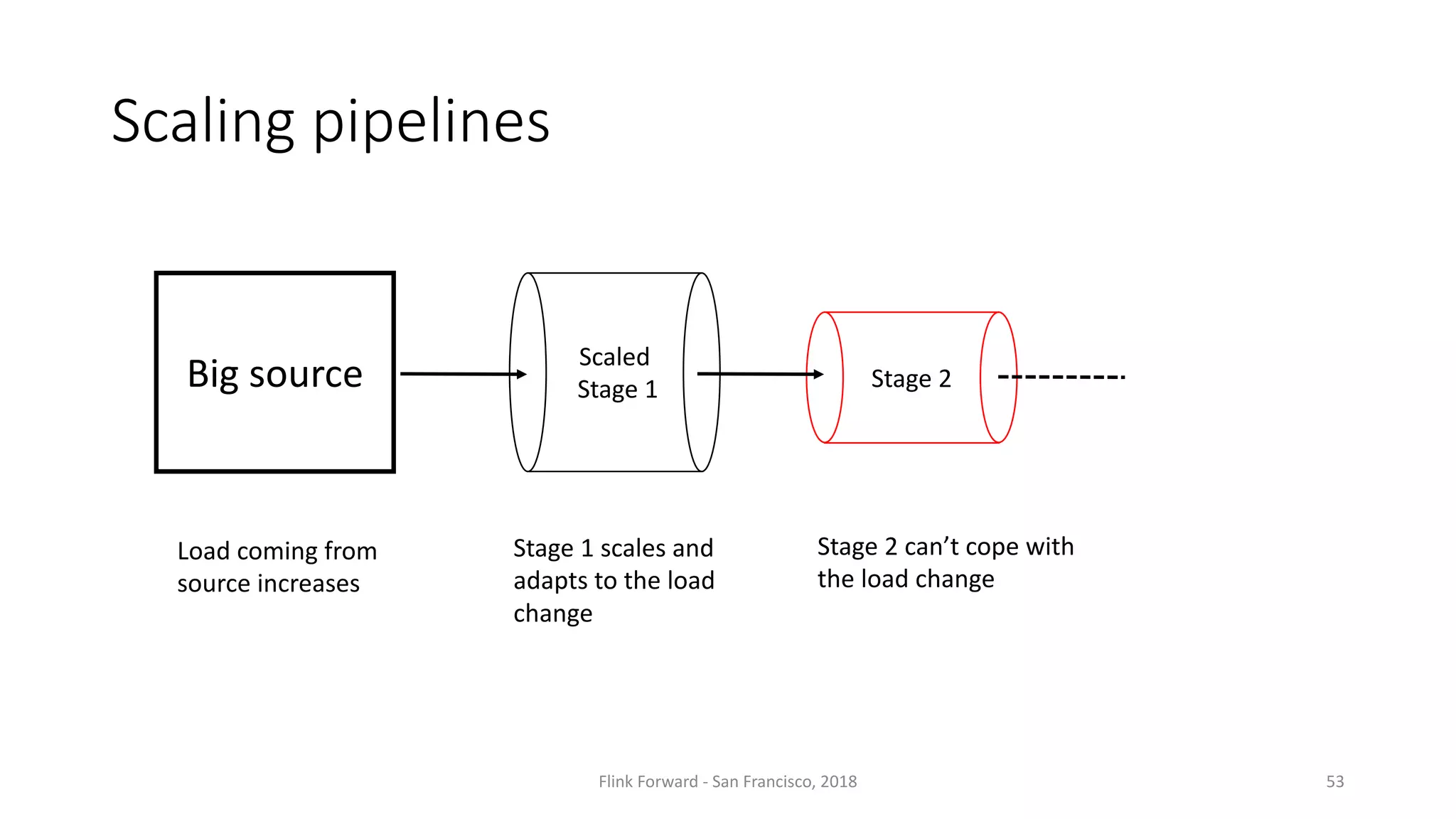

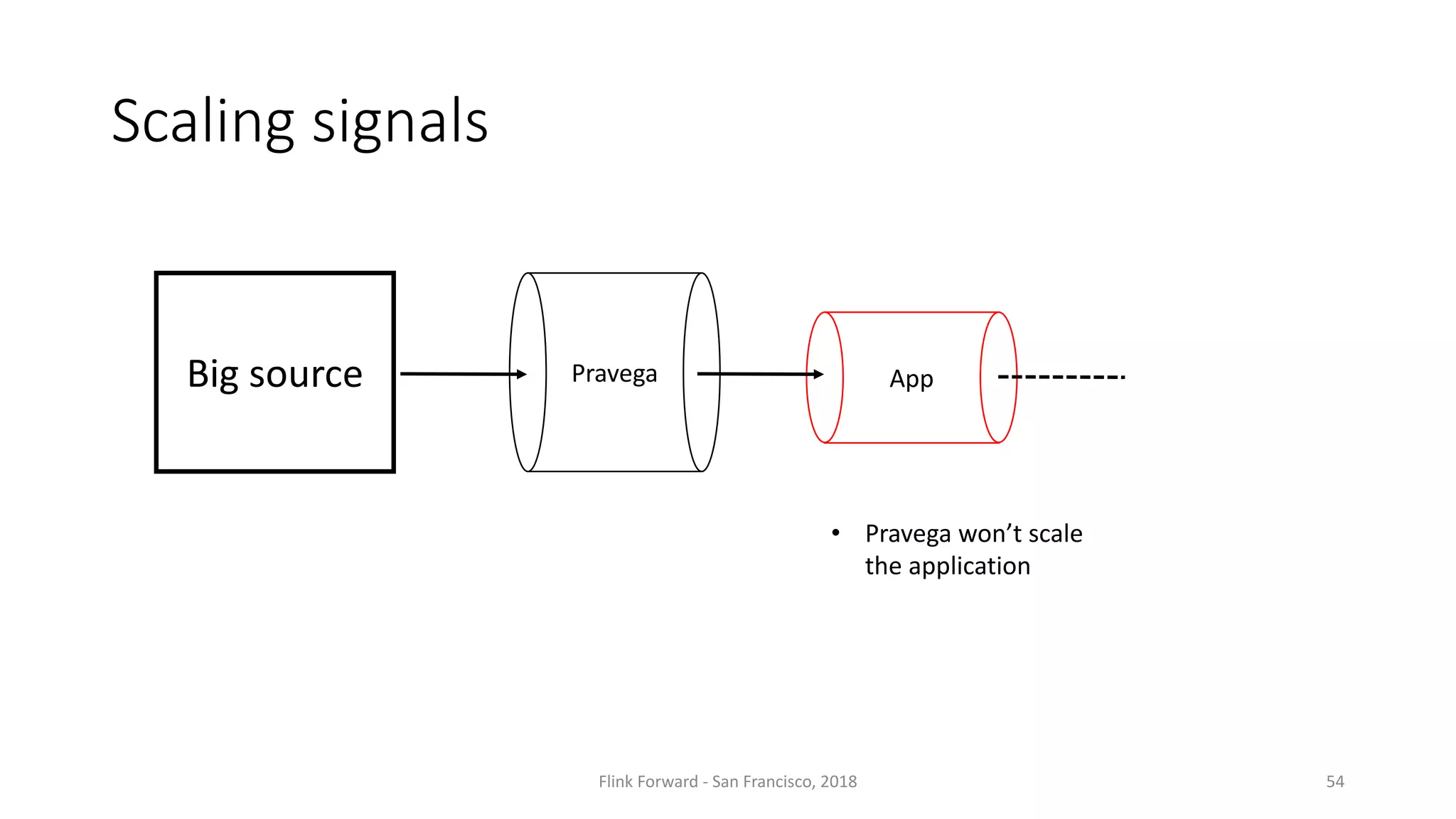

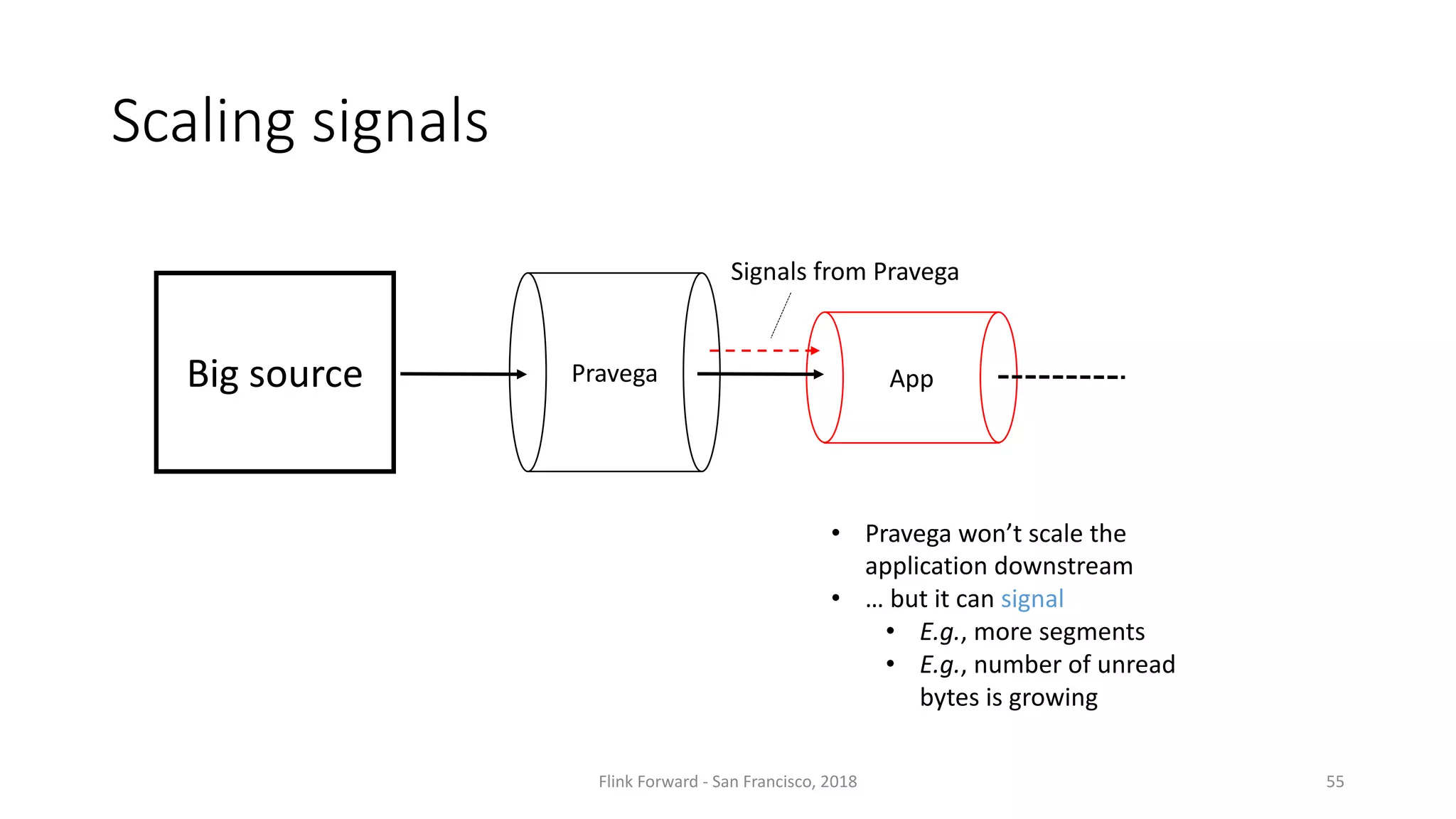

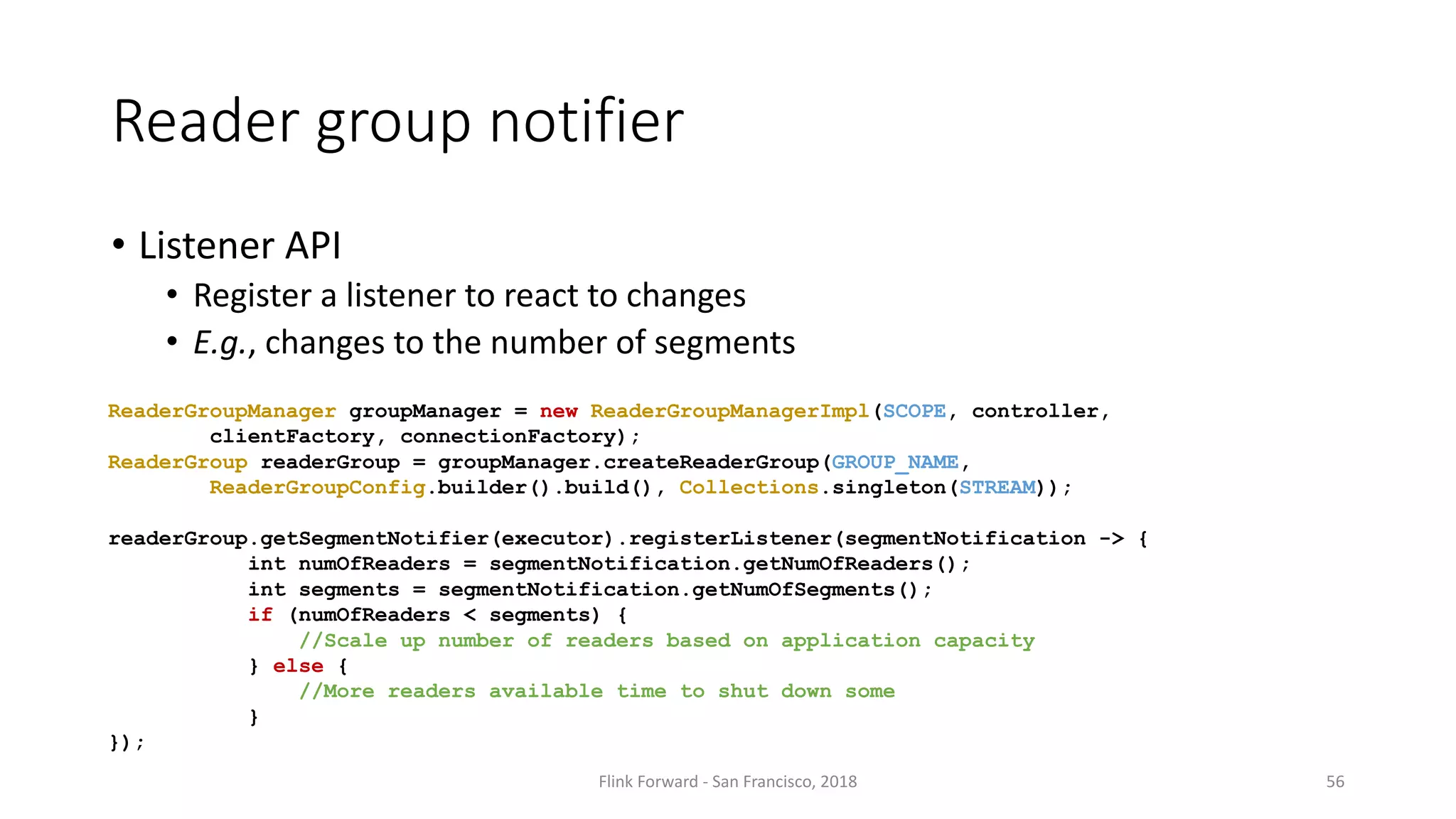

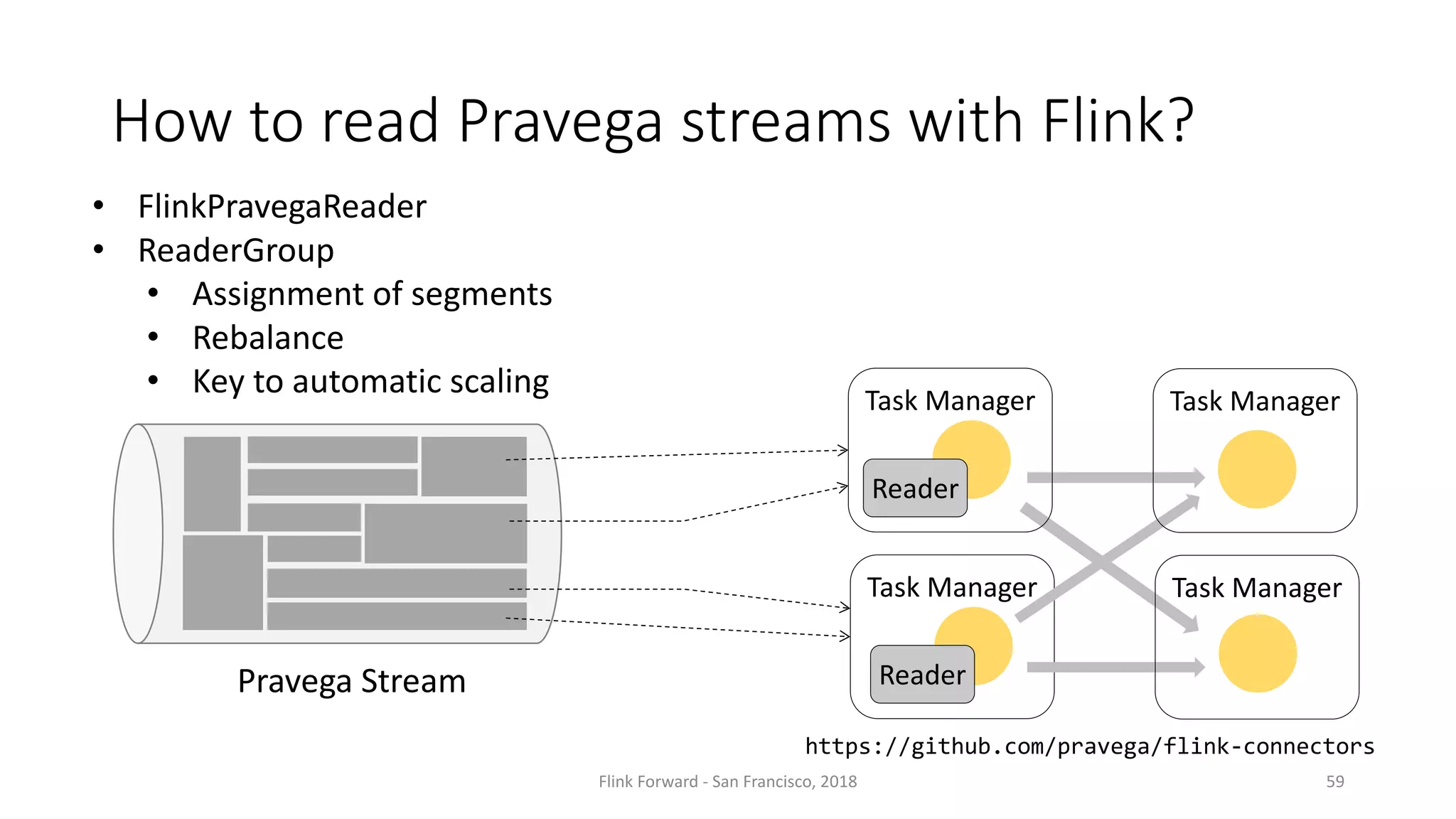

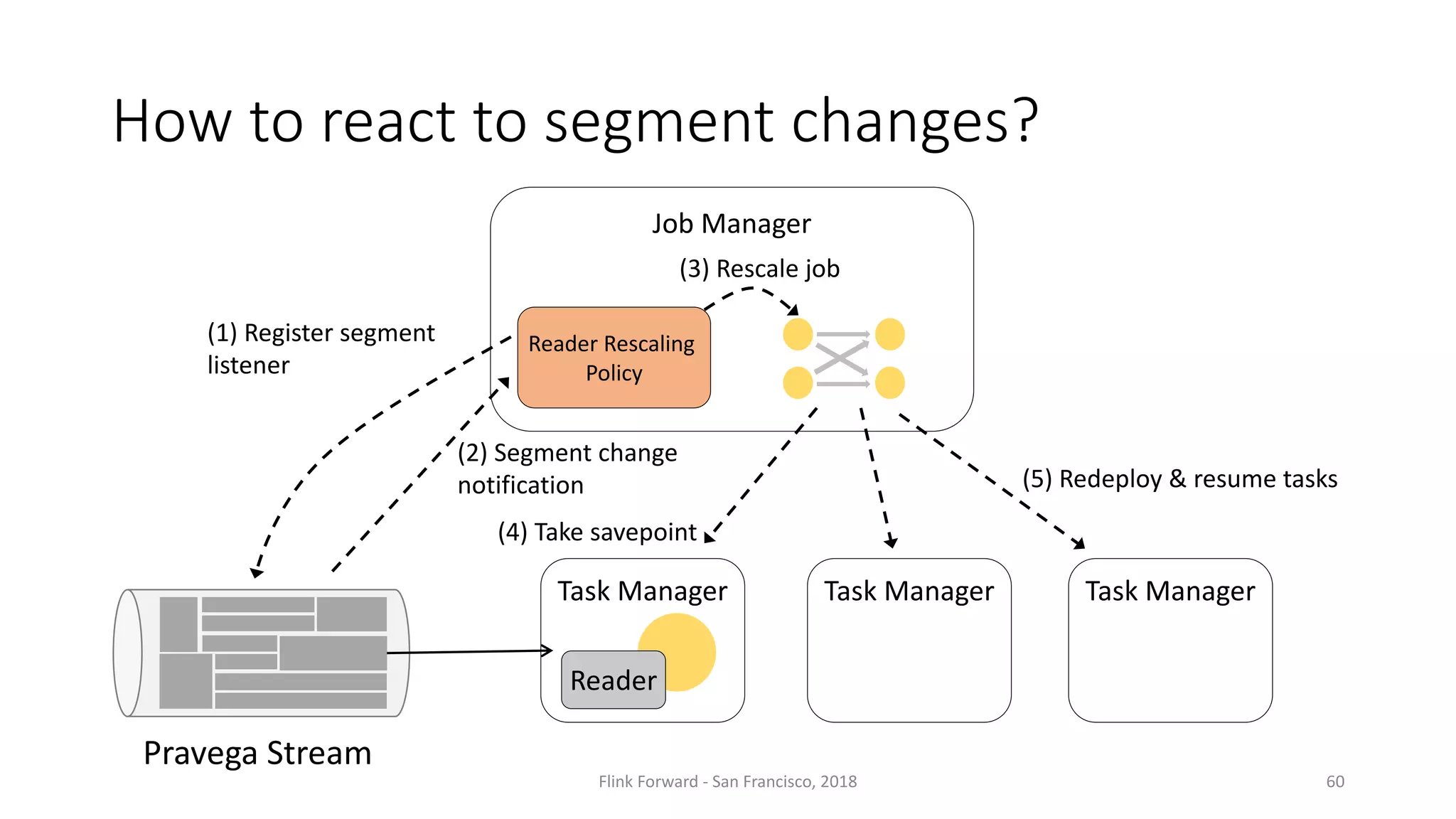

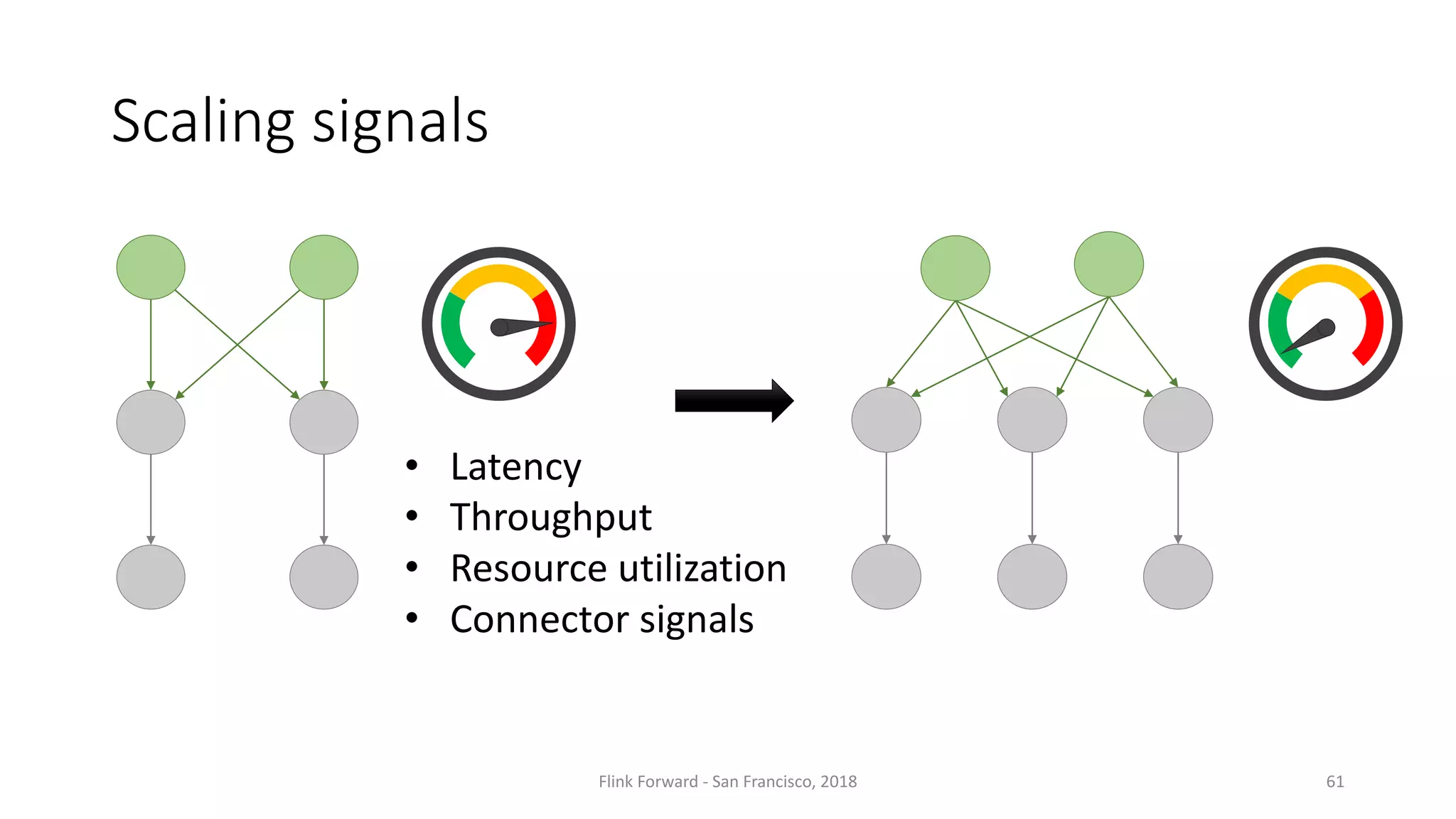

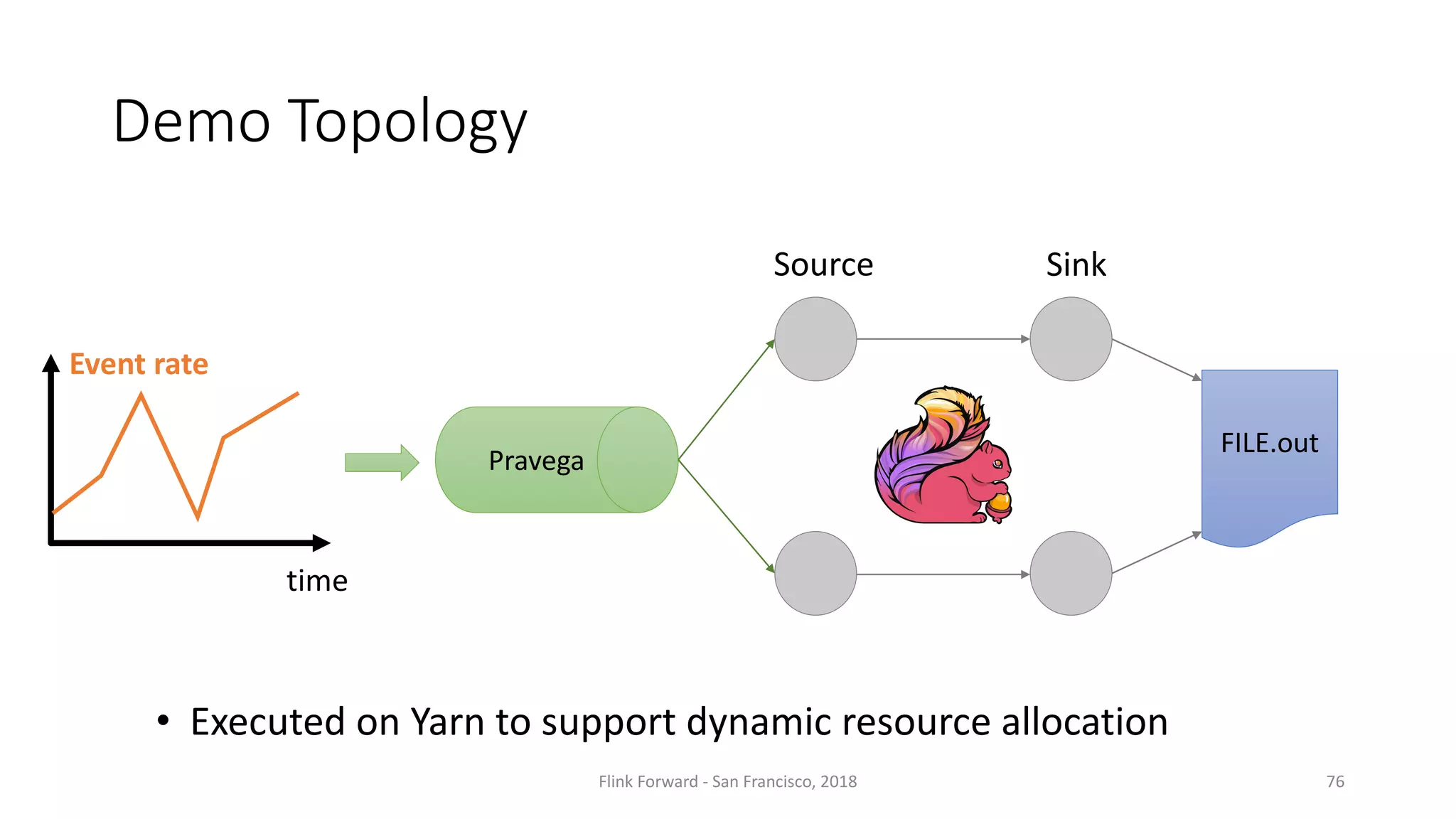

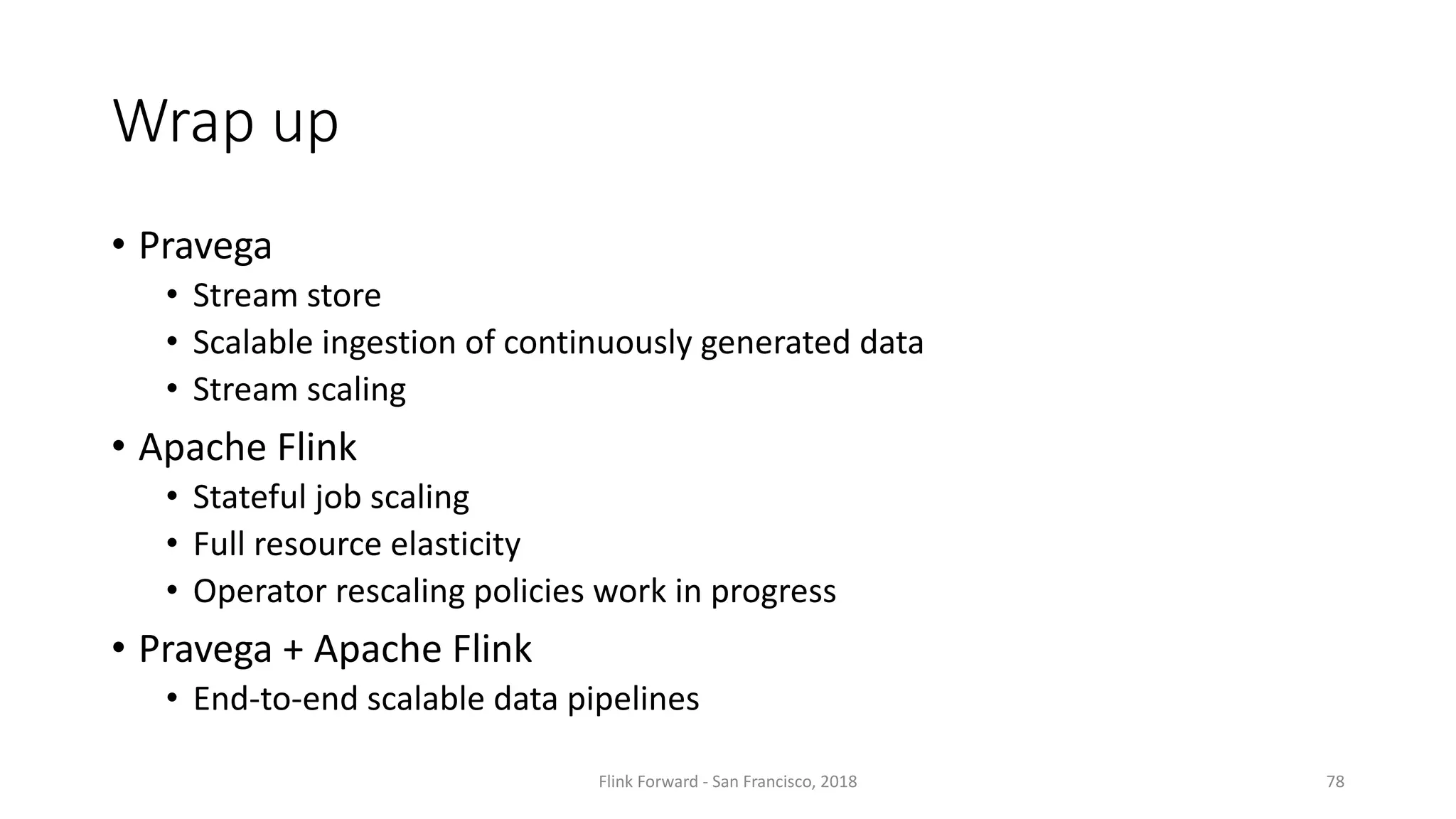

The document covers scaling stream data pipelines. Streams from social networks, online shopping, server monitoring, and IoT sensors are becoming more common, and their workloads follow daily, weekly, and seasonal cycles that cause spikes. Event processing scales by adding more processors as the input rate increases. The document introduces Pravega, a stream storage system that stores streams permanently while preserving ordering and scaling to varying workloads. A Pravega stream consists of segments, which can be split or merged to scale the stream. The document describes Pravega's auto-scaling policies, which trigger scaling events based on throughput metrics, and closes with how reader groups allow Pravega to maintain ordering during scaling.
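
The split-and-merge idea can be illustrated with a small toy model. The sketch below is not Pravega's API or implementation: `Segment`, `split`, `merge`, `autoscale`, and `route` are hypothetical names, and both the key space `[0, 1)` and the rule that a segment counts as "cold" below half the target rate are assumptions made for illustration. It shows one scaling pass that splits segments whose event rate exceeds a target and merges adjacent cold segments, while routing keys keep mapping to the same position in the key space.

```python
import hashlib
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class Segment:
    """Toy segment: owns the routing-key range [lo, hi) of the key space [0, 1)."""
    lo: Fraction
    hi: Fraction


def split(seg, factor=2):
    """Split one segment into `factor` equal, contiguous sub-ranges."""
    width = (seg.hi - seg.lo) / factor
    return [Segment(seg.lo + i * width, seg.lo + (i + 1) * width)
            for i in range(factor)]


def merge(a, b):
    """Merge two adjacent segments into one covering both ranges."""
    if a.hi != b.lo:
        raise ValueError("only adjacent segments can be merged")
    return Segment(a.lo, b.hi)


def autoscale(segments, rates, target_rate, scale_factor=2, min_segments=1):
    """One scaling pass over the stream (illustrative policy only).

    A segment above `target_rate` events/s is split. Two adjacent segments
    that are both below half the target (an assumed "cold" threshold) are
    merged, as long as the stream keeps at least `min_segments` segments.
    """
    ordered = sorted(segments, key=lambda s: s.lo)
    cold = target_rate / 2
    result, i = [], 0
    while i < len(ordered):
        seg = ordered[i]
        nxt = ordered[i + 1] if i + 1 < len(ordered) else None
        # Segment count if we merge now and change nothing afterwards.
        count_after_merge = len(result) + (len(ordered) - i) - 1
        if rates[seg] > target_rate:
            result.extend(split(seg, scale_factor))
            i += 1
        elif (nxt is not None and rates[seg] < cold and rates[nxt] < cold
              and count_after_merge >= min_segments):
            result.append(merge(seg, nxt))
            i += 2
        else:
            result.append(seg)
            i += 1
    return result


def key_position(routing_key):
    """Hash a routing key to a stable position in [0, 1)."""
    digest = hashlib.sha256(routing_key.encode()).digest()
    return Fraction(int.from_bytes(digest[:8], "big"), 2 ** 64)


def route(segments, routing_key):
    """Return the segment whose range contains the key's position."""
    pos = key_position(routing_key)
    return next(s for s in segments if s.lo <= pos < s.hi)


# Two segments; the first one is hot (250 events/s against a target of 100).
stream = [Segment(Fraction(0), Fraction(1, 2)), Segment(Fraction(1, 2), Fraction(1))]
observed = {stream[0]: 250, stream[1]: 40}
scaled = autoscale(stream, observed, target_rate=100)
print(len(scaled), "segments after scaling")
```

In this example the hot first segment is split in two and the second is left alone, so the stream ends up with three segments. Because a key's position in the key space never changes, every event for a given routing key lands in exactly one segment at a time, which is the property that makes it possible to reason about per-key ordering across a scaling event.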