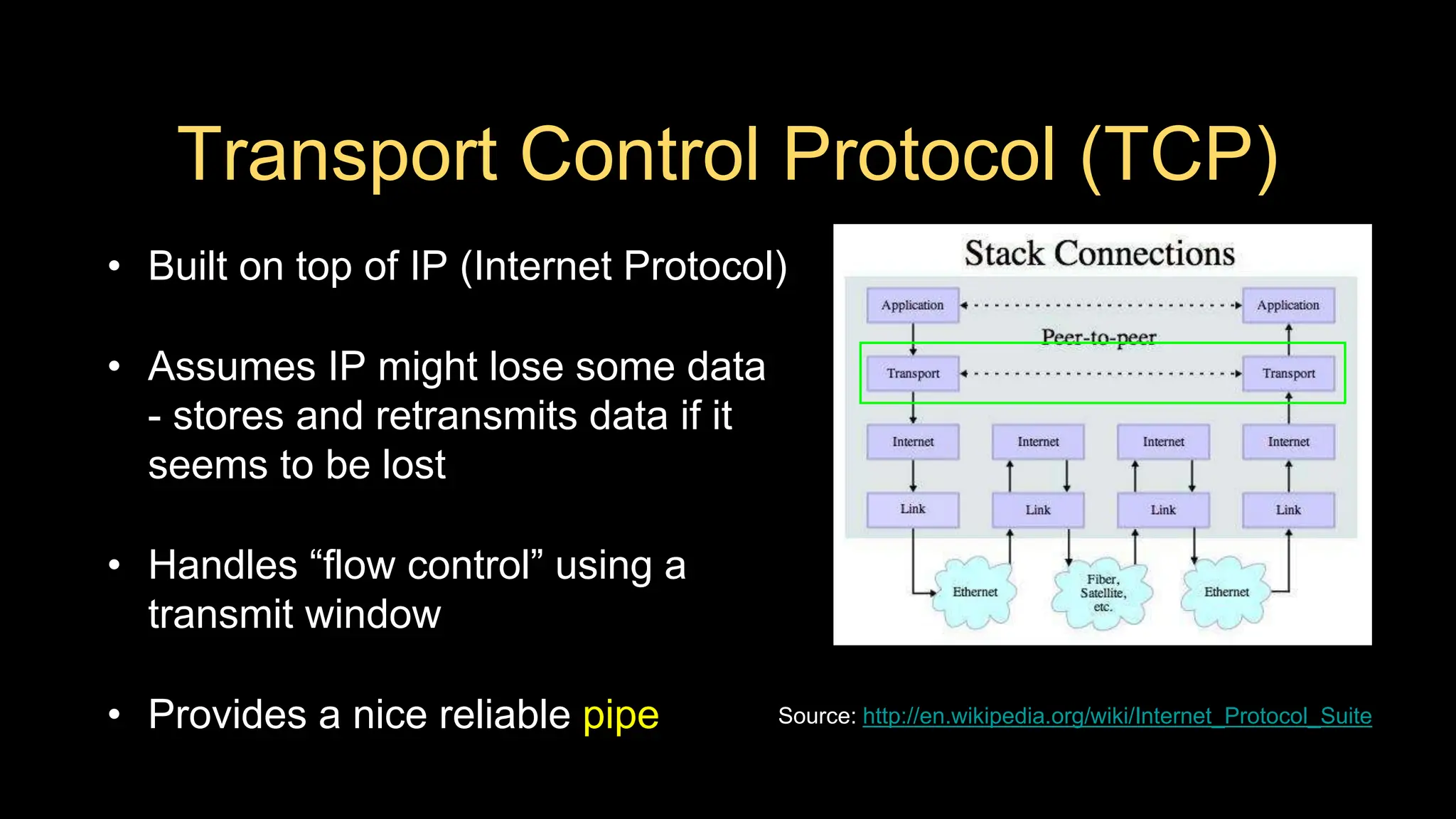

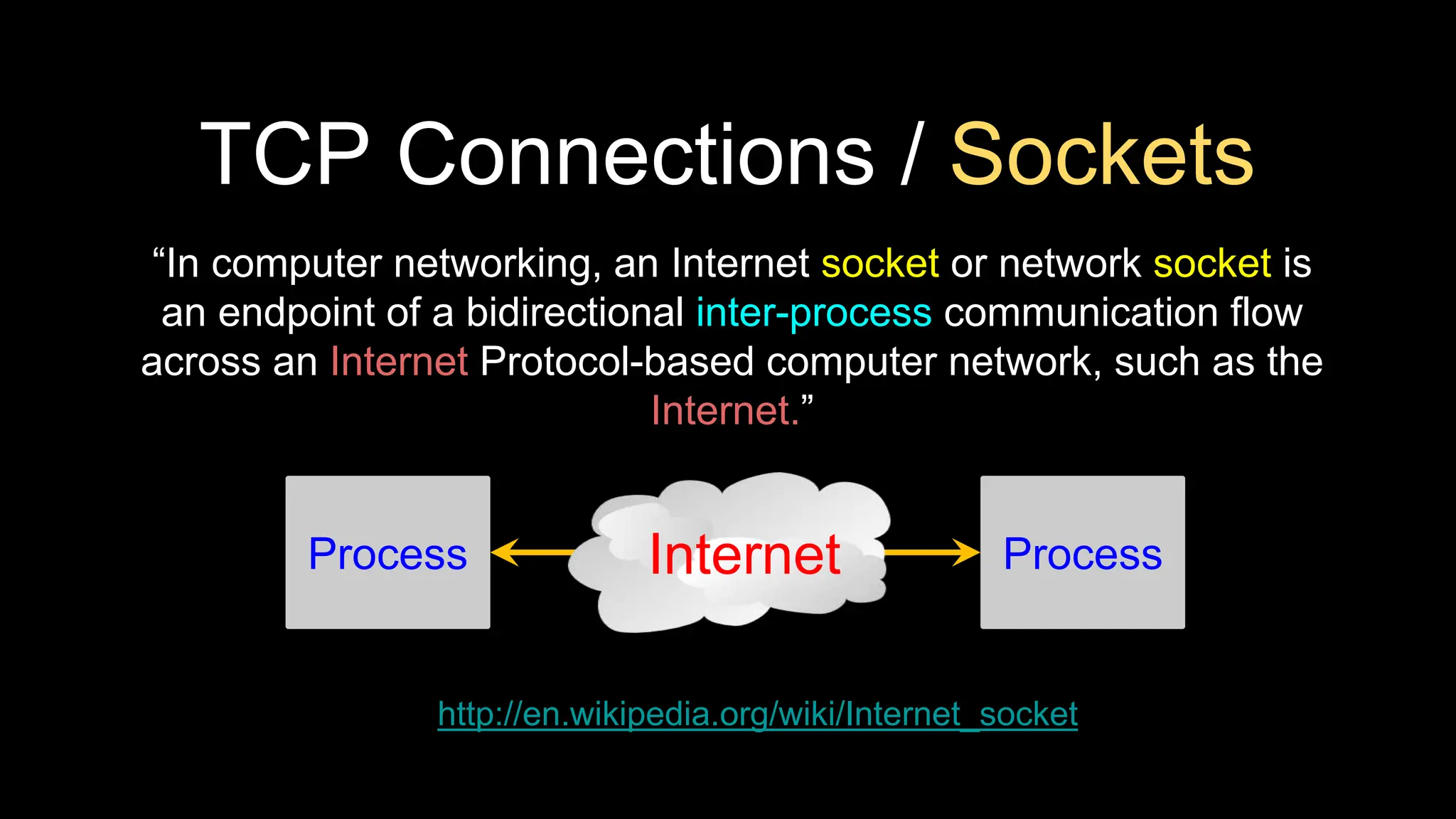

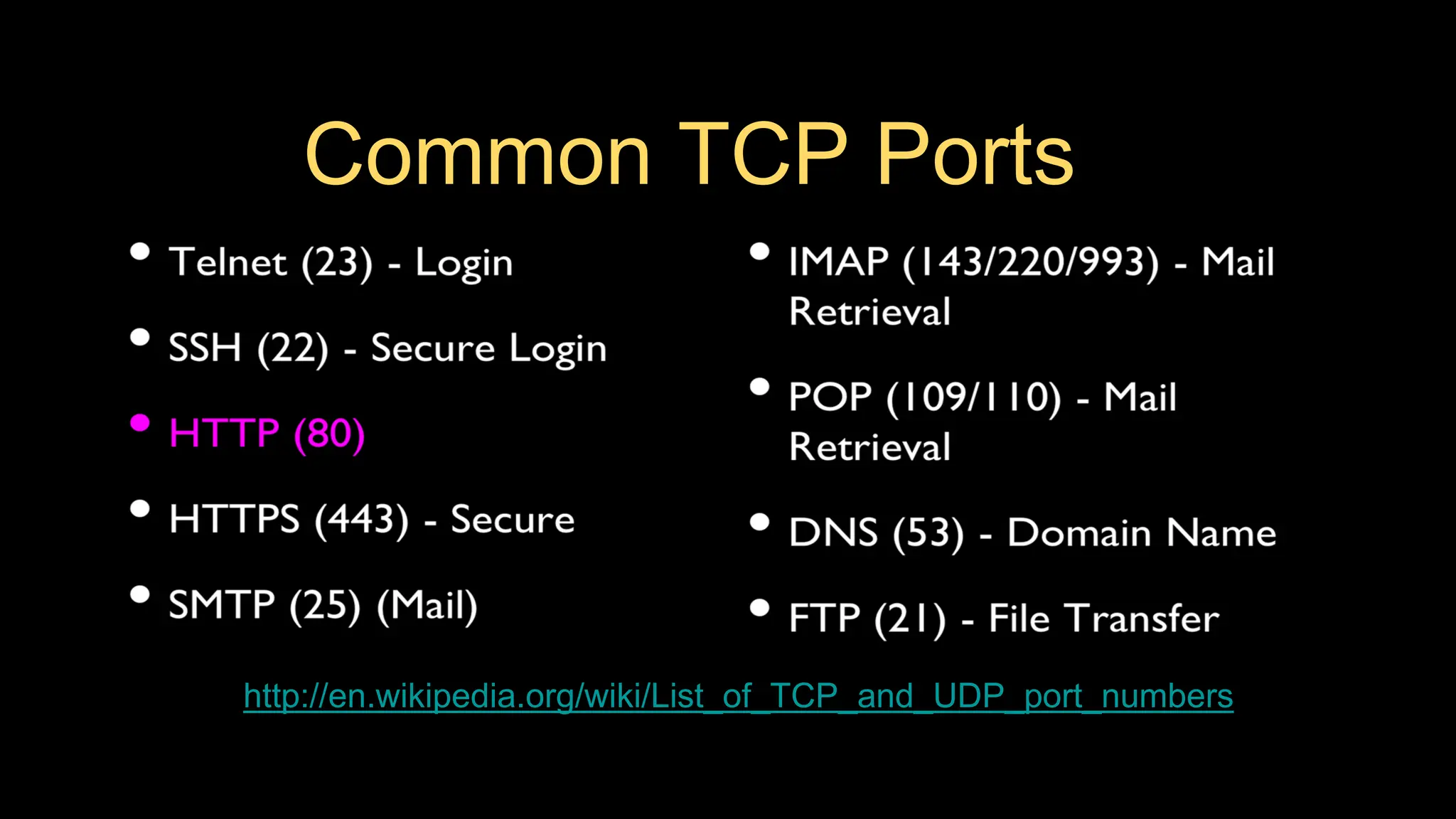

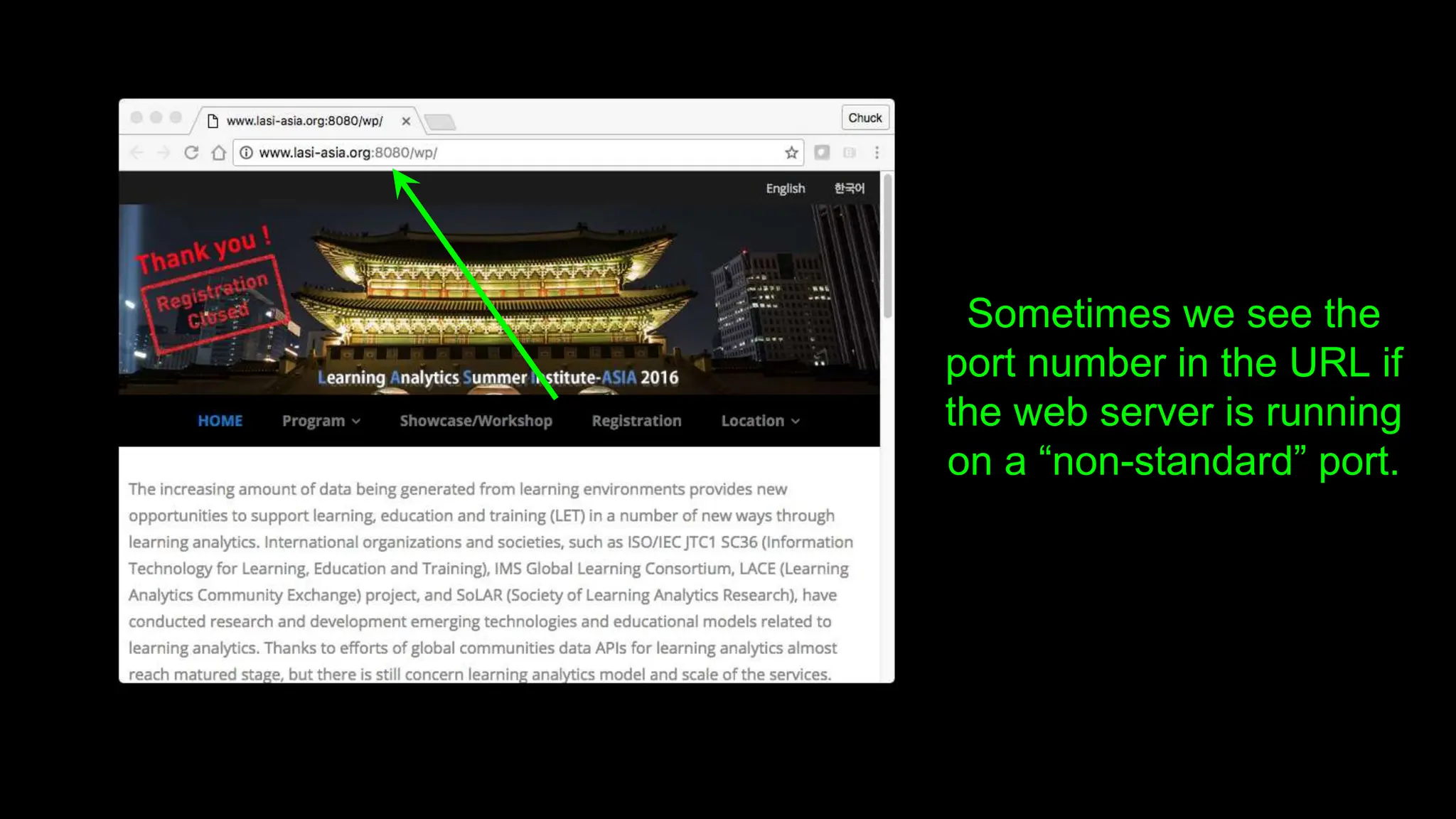

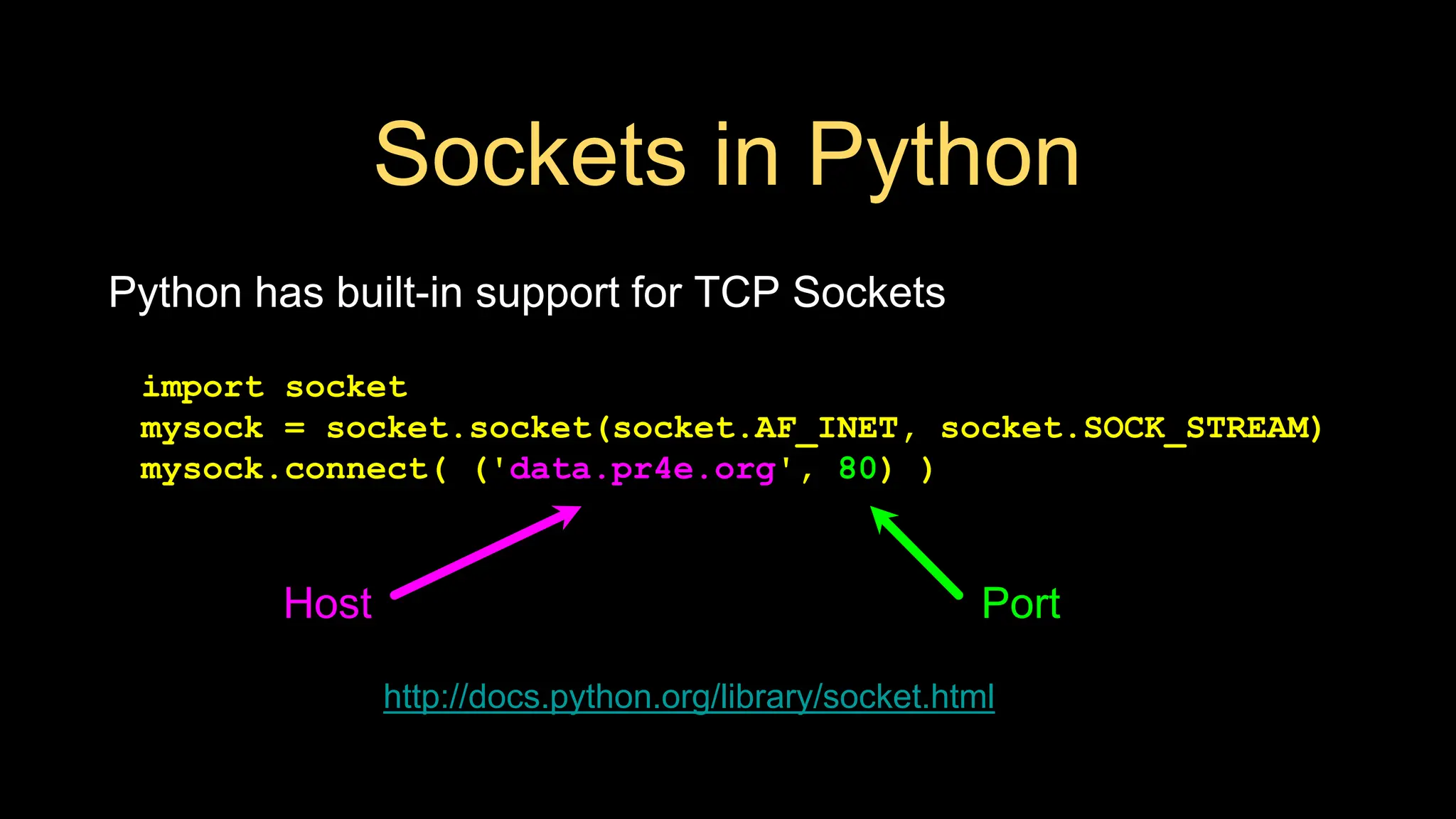

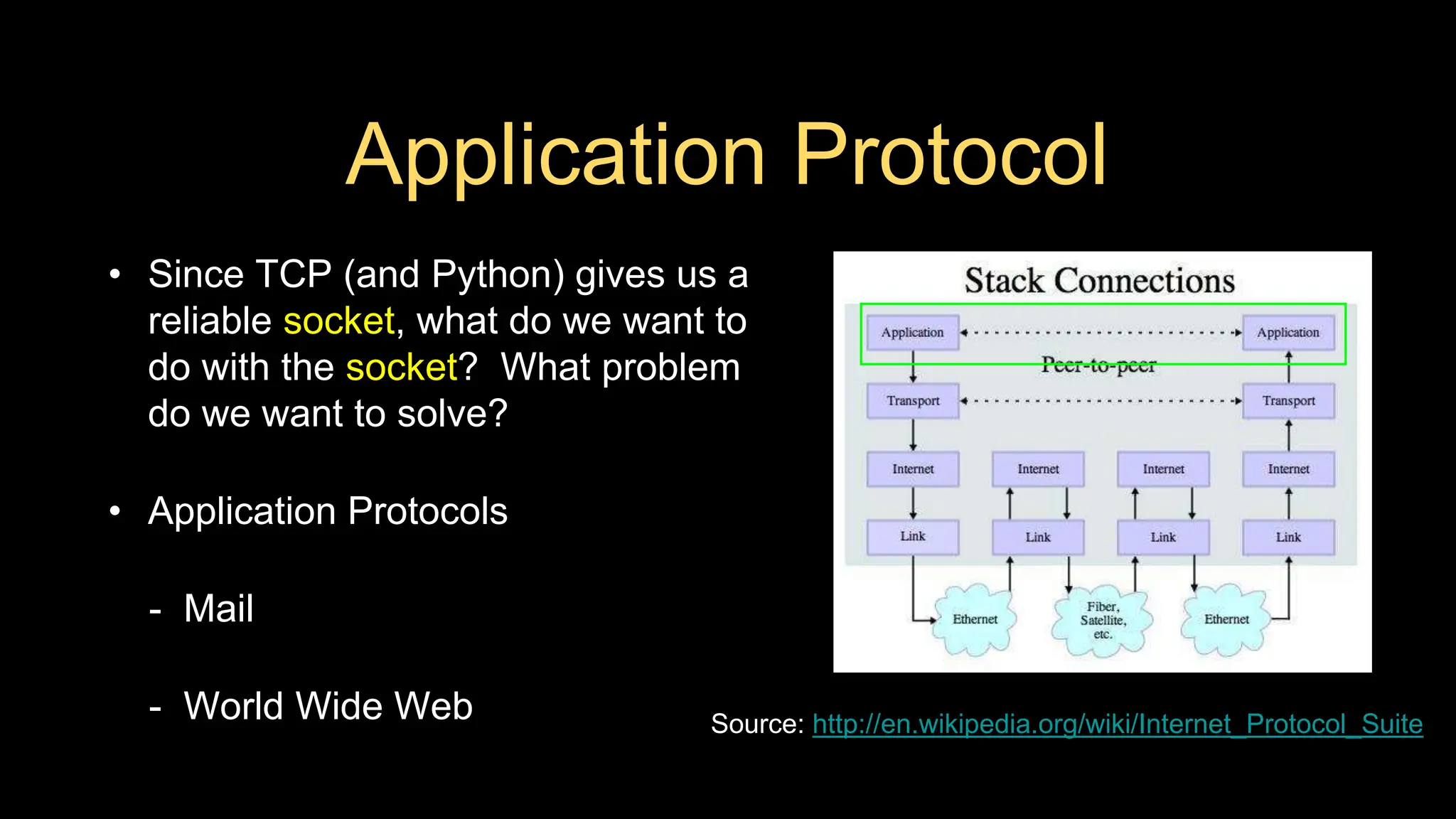

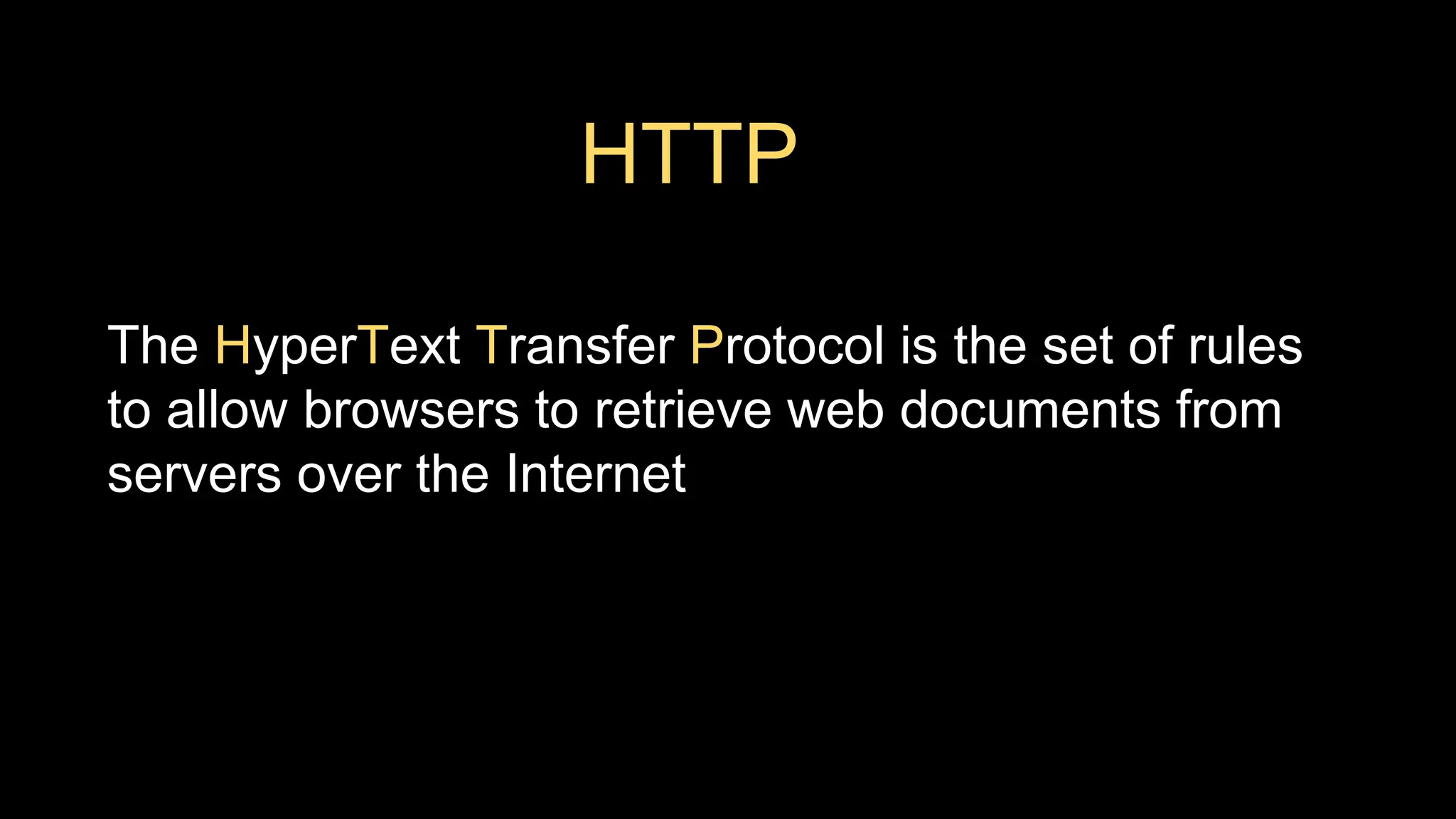

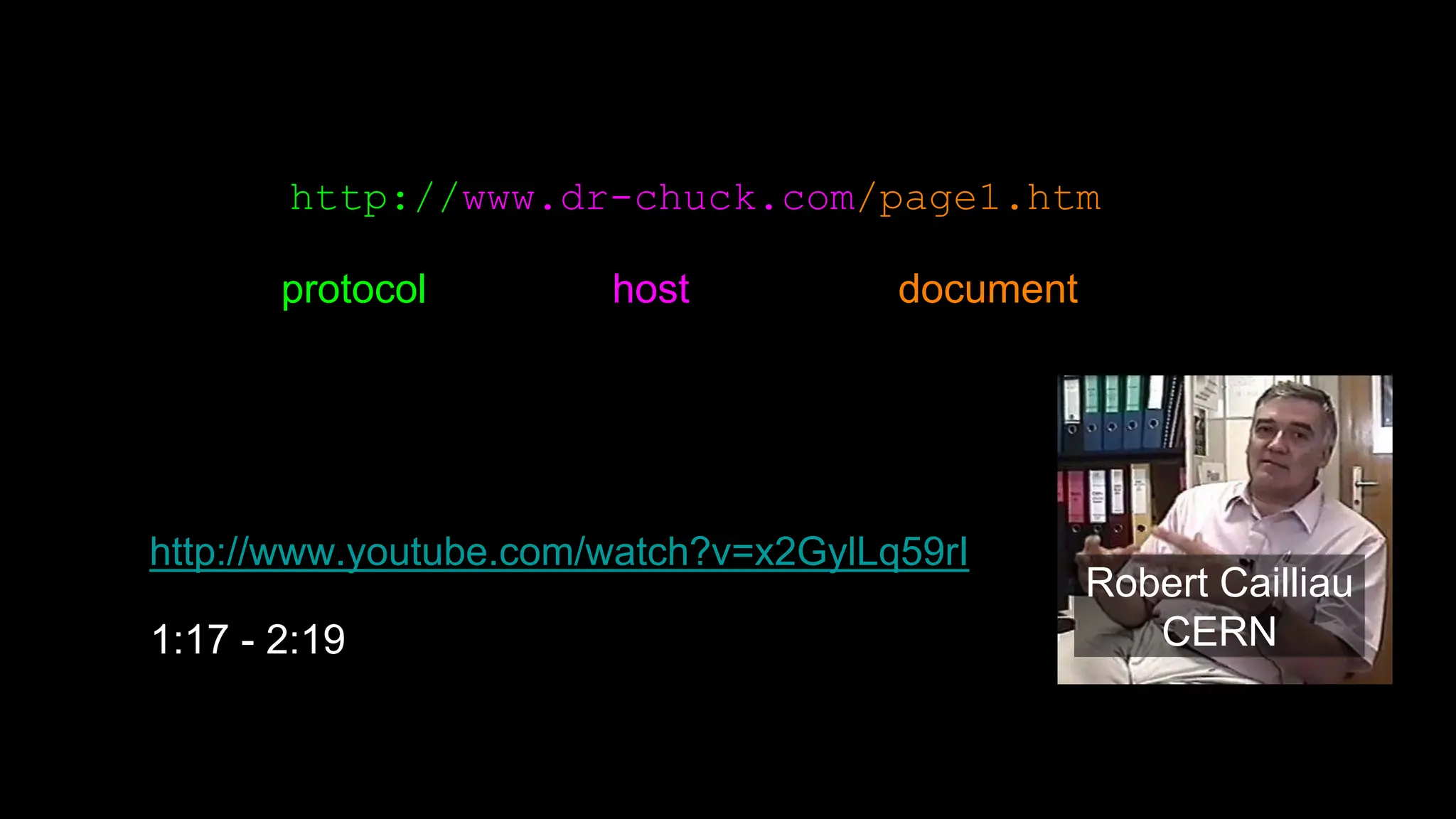

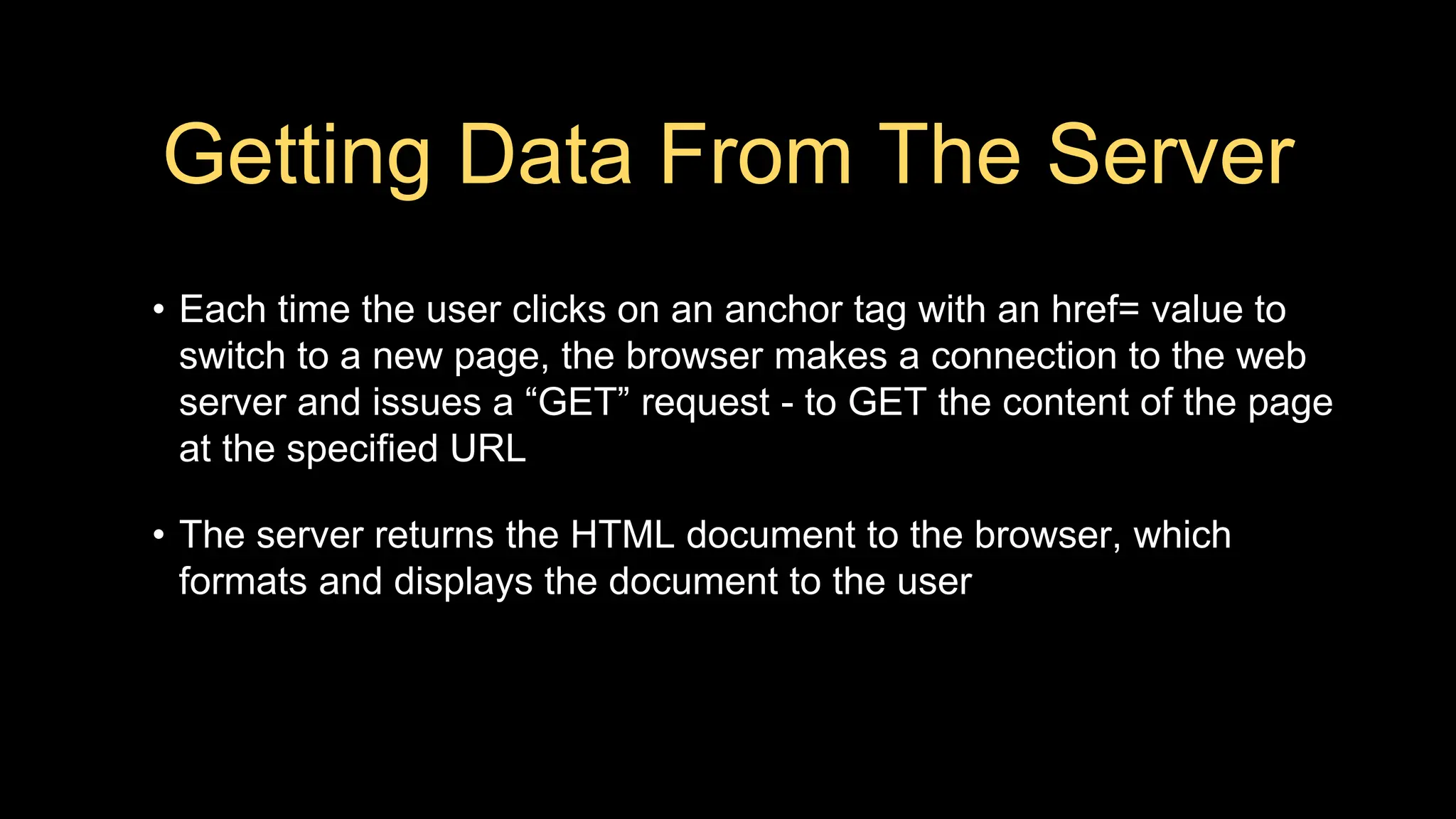

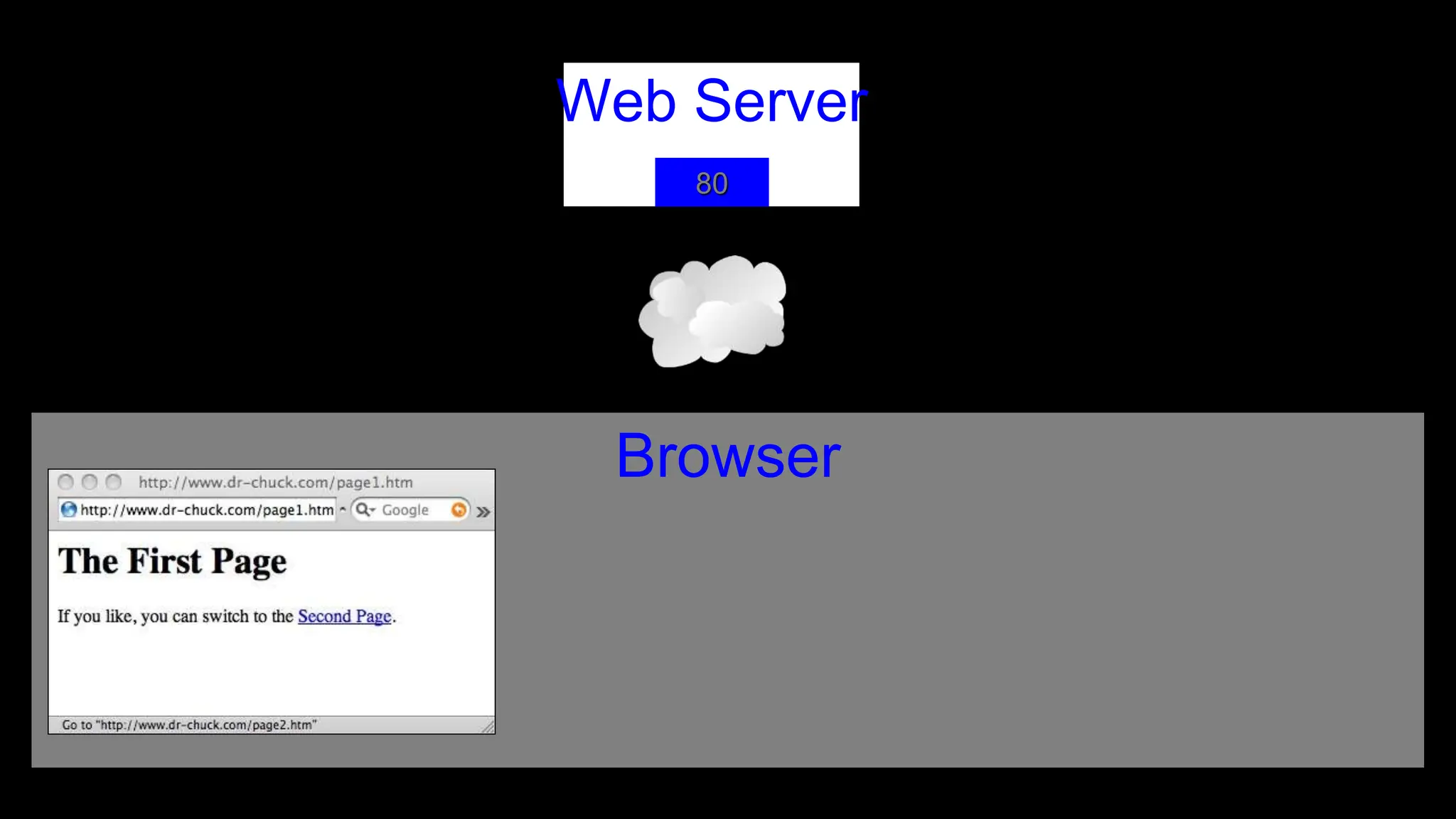

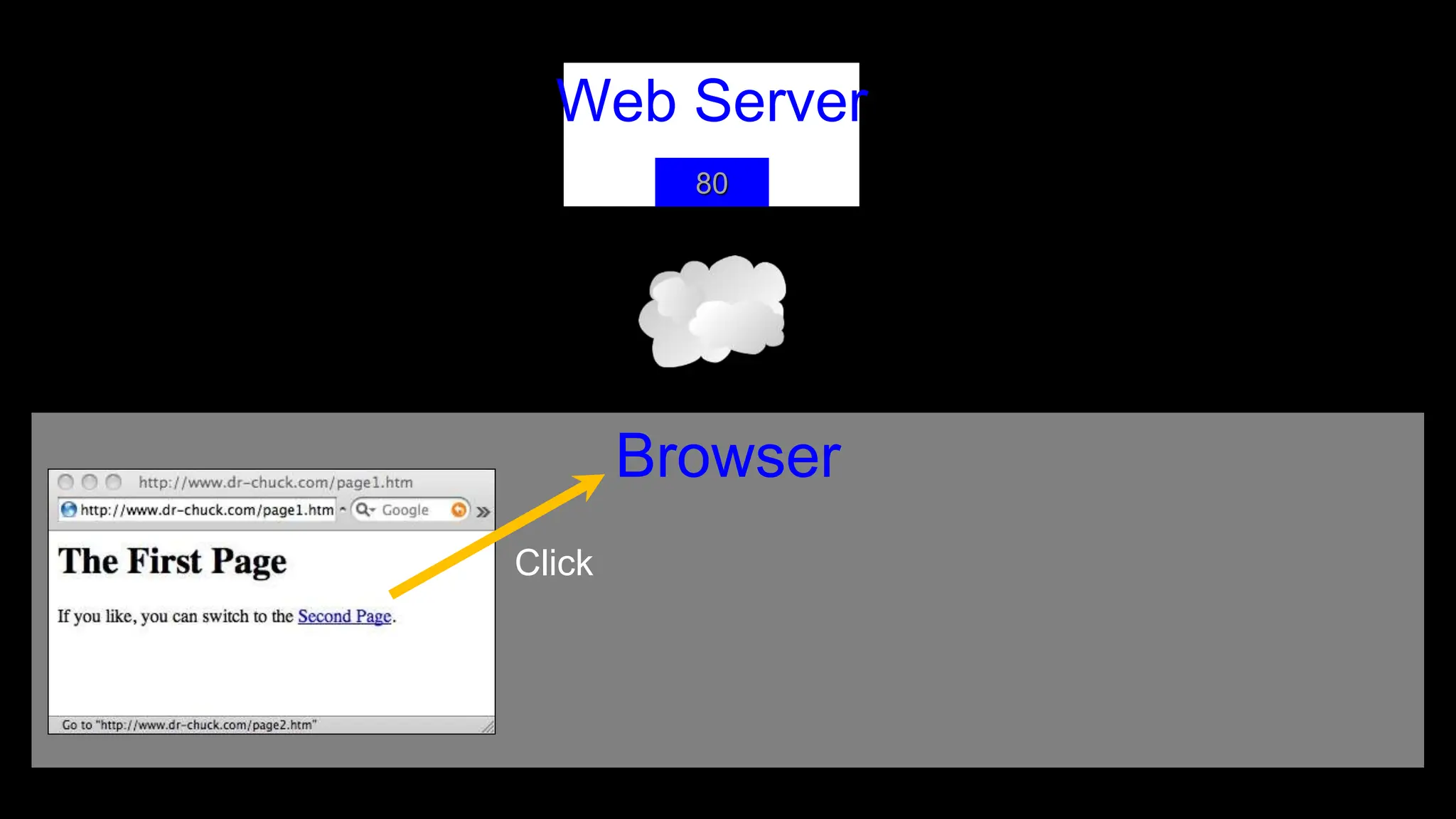

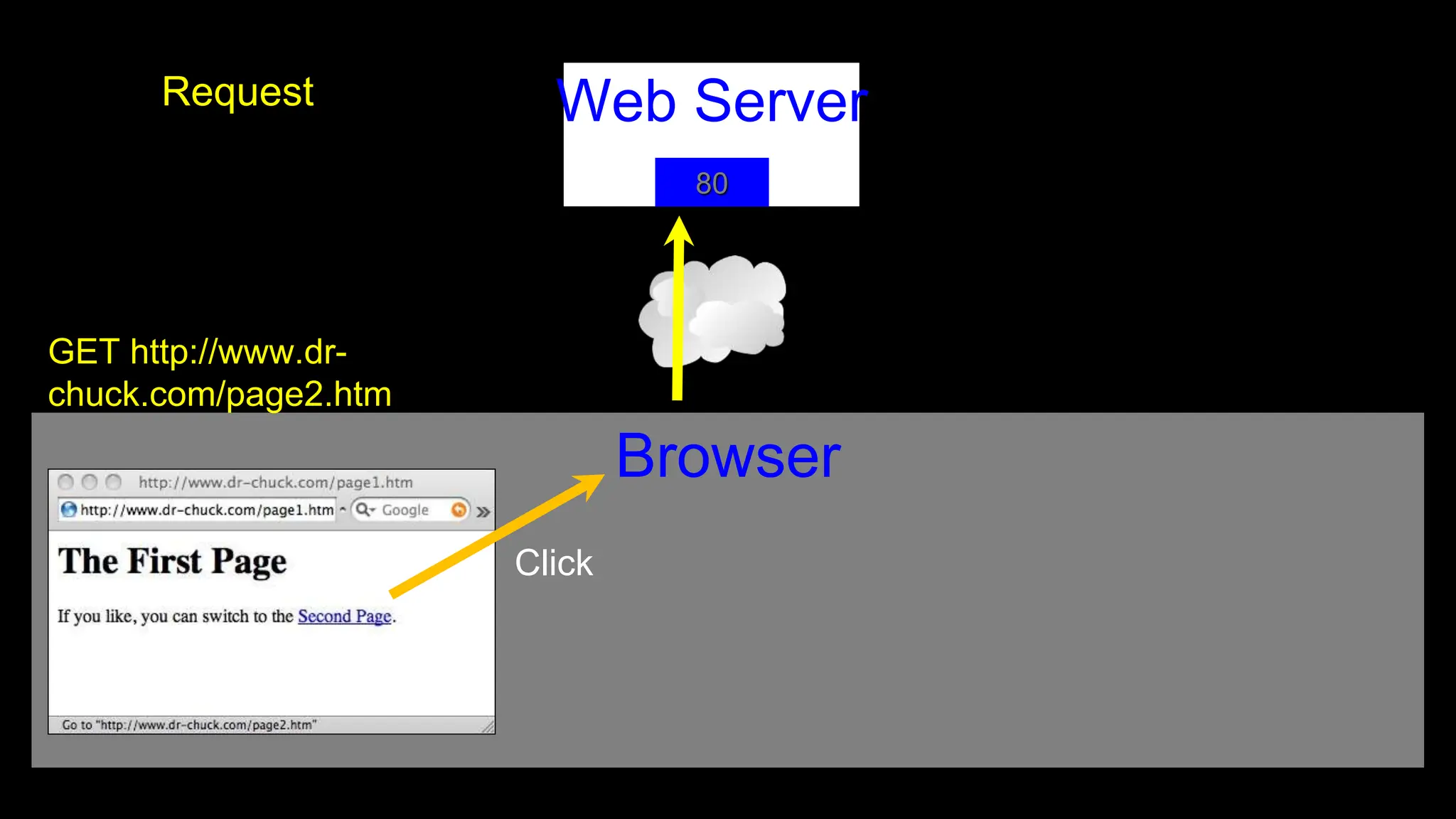

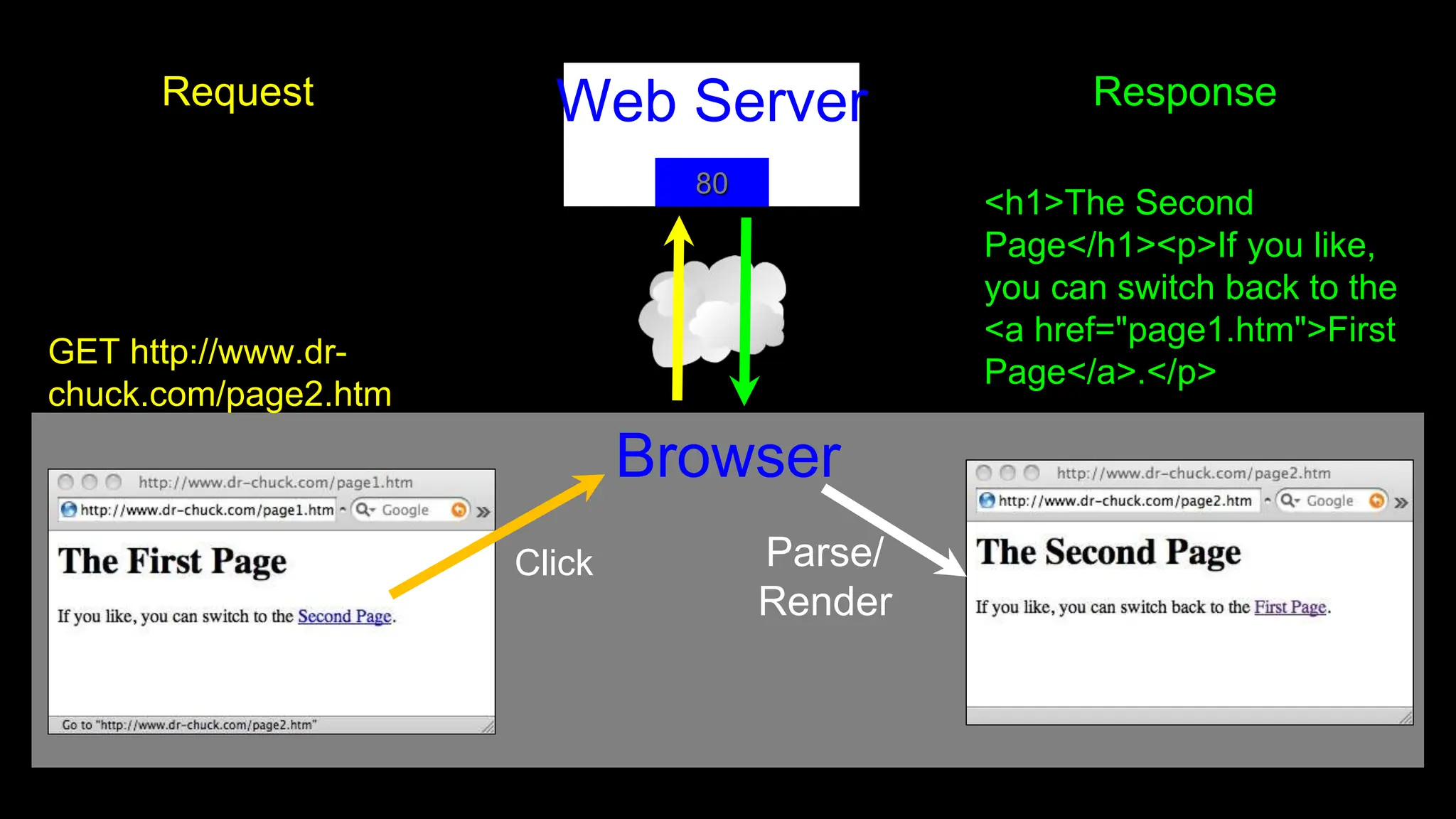

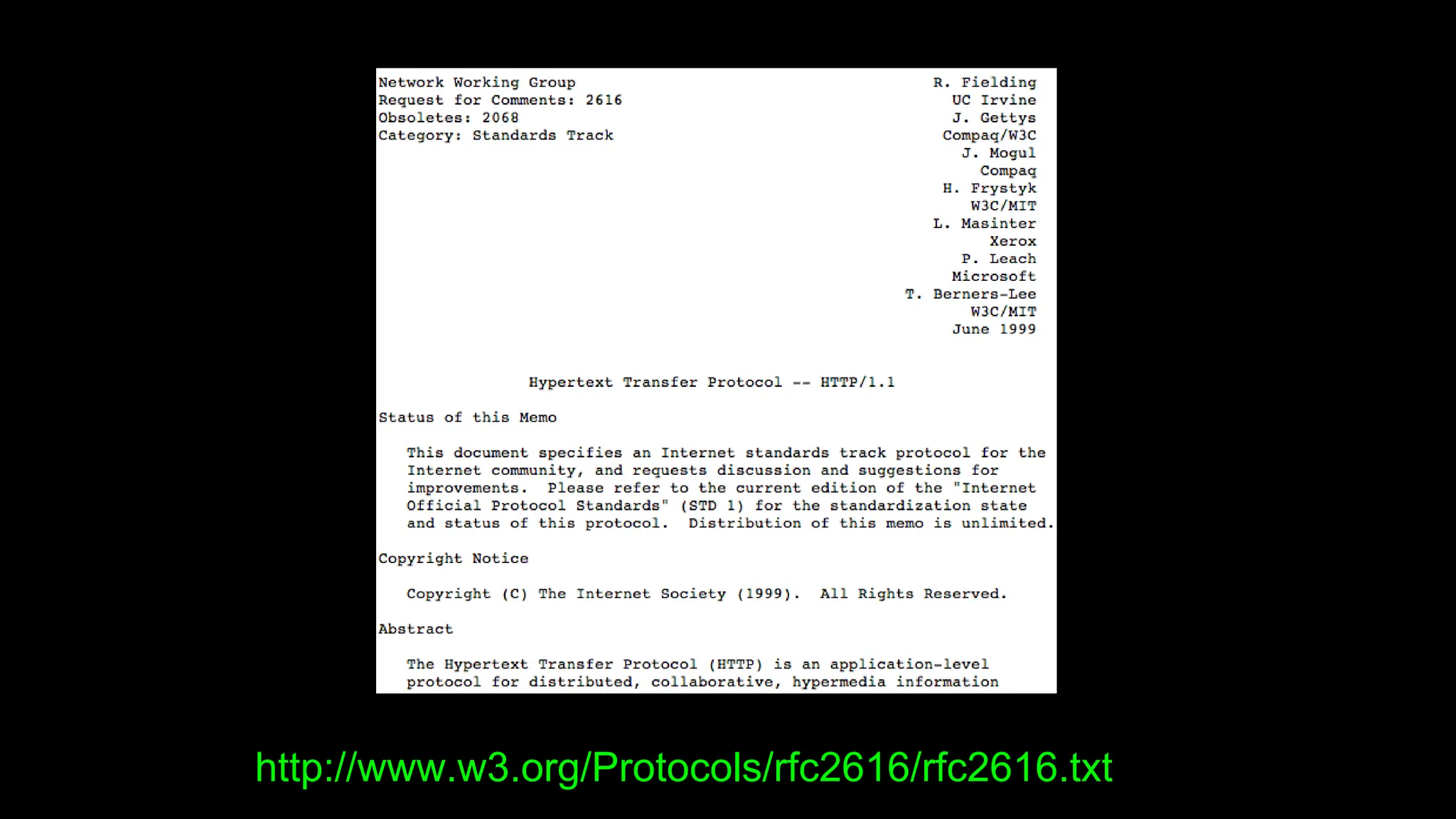

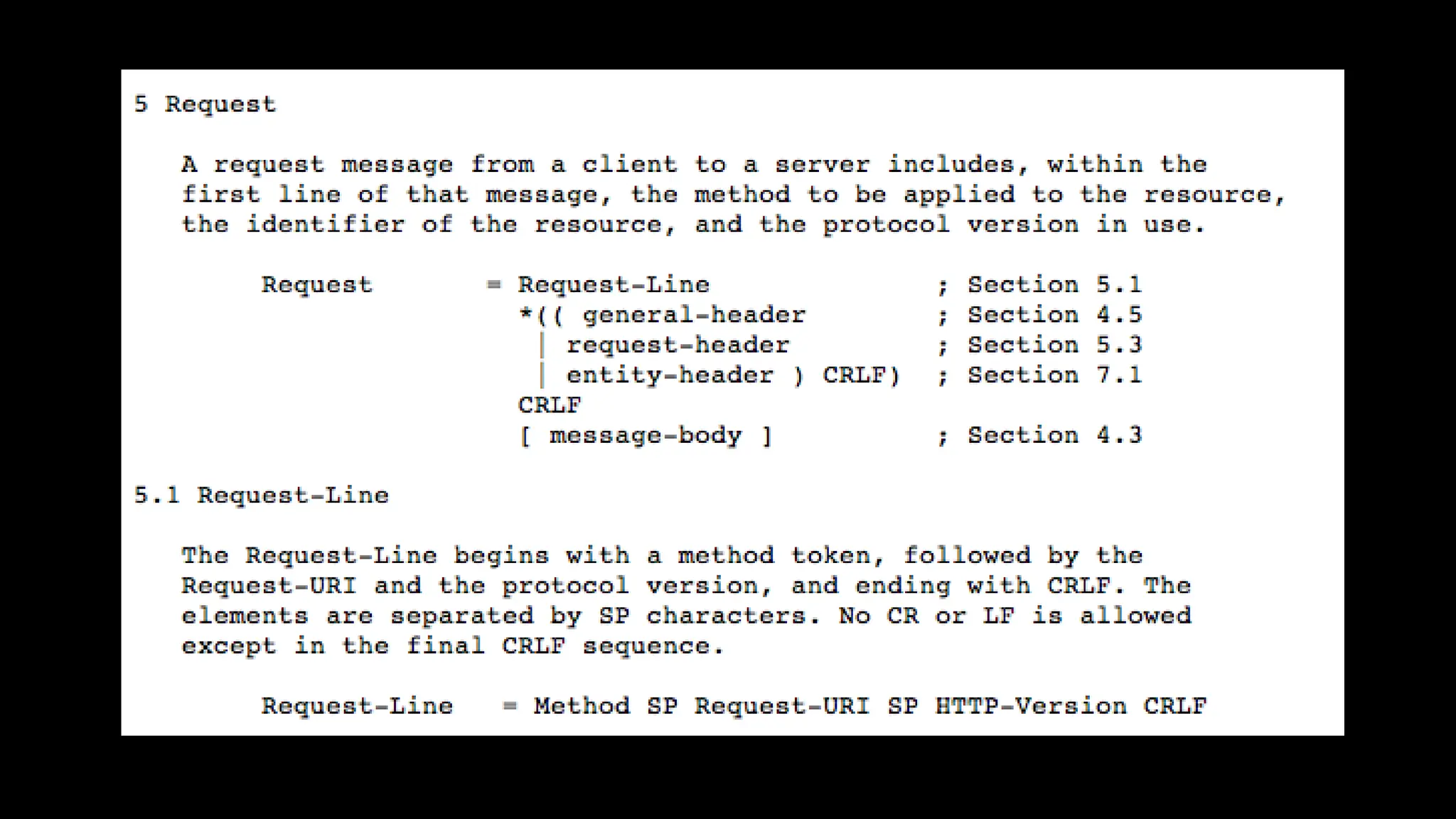

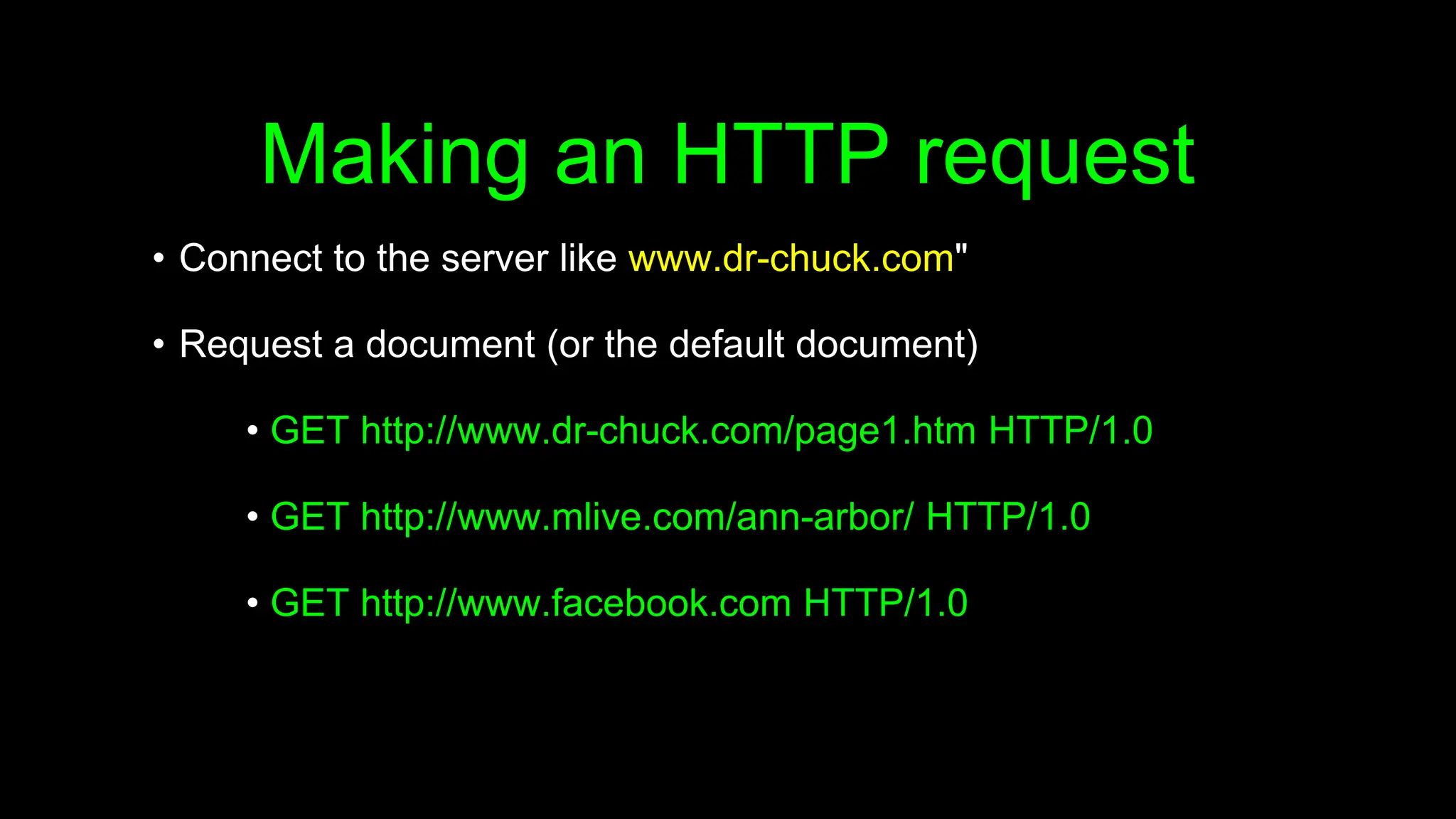

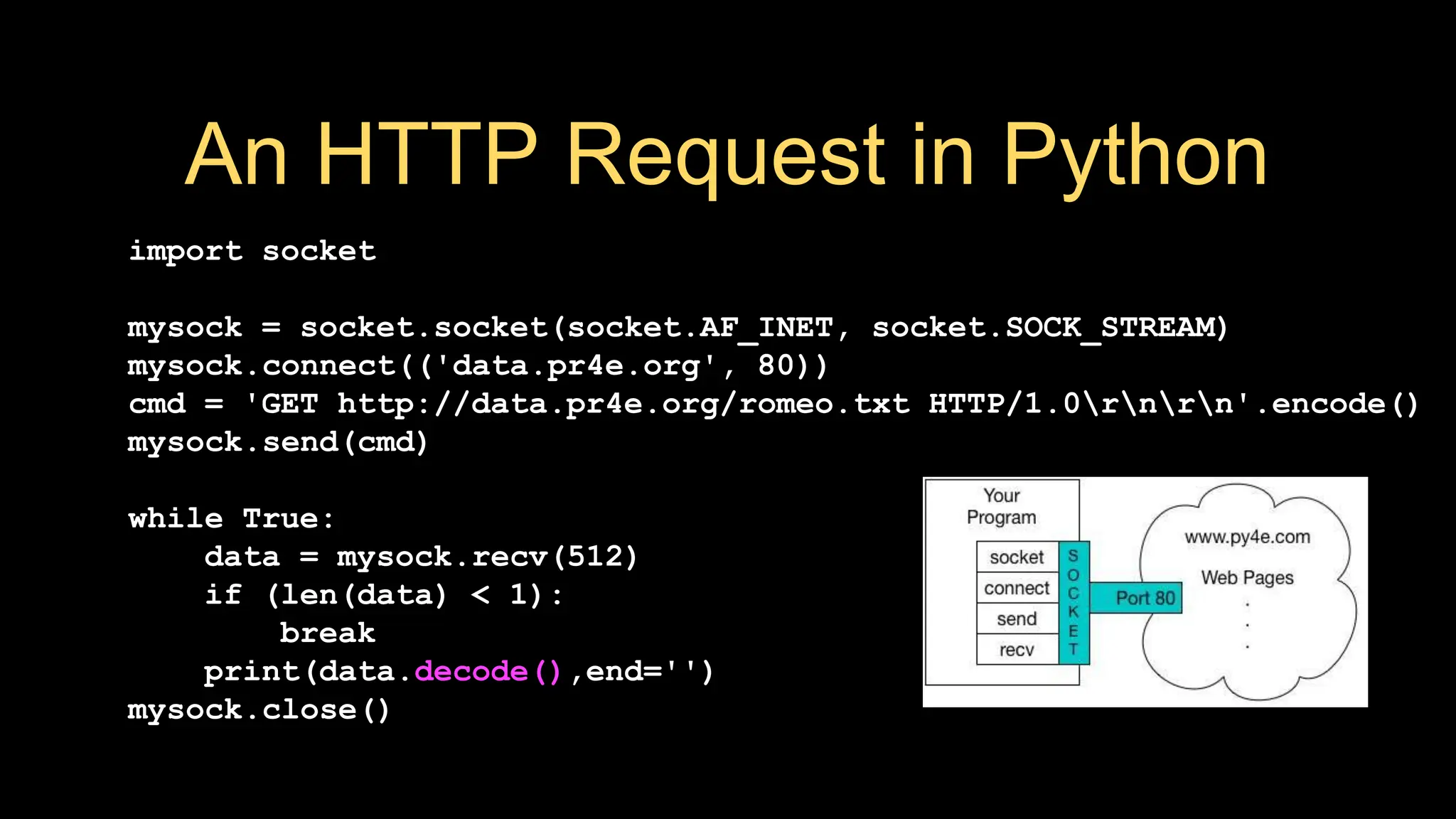

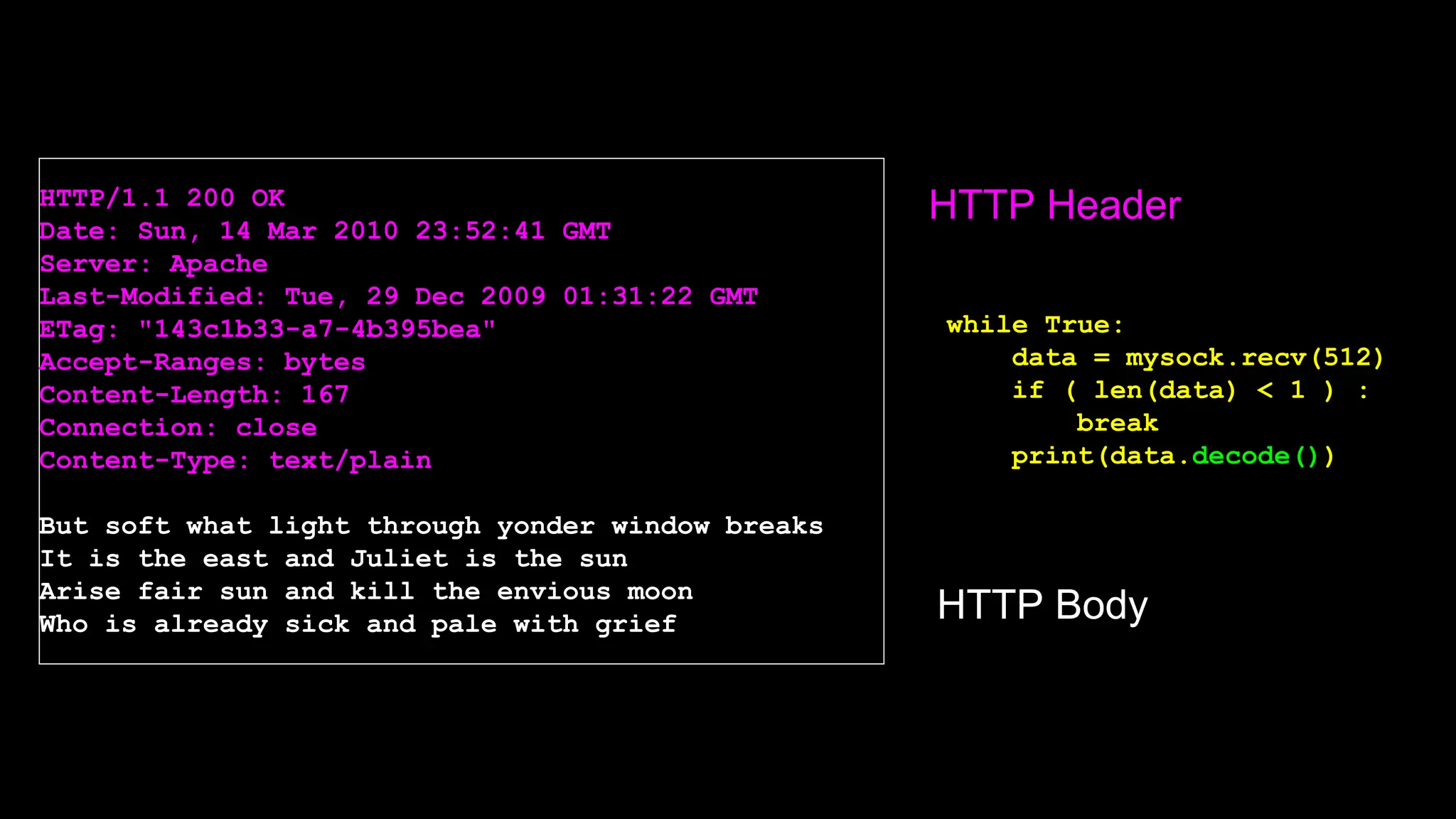

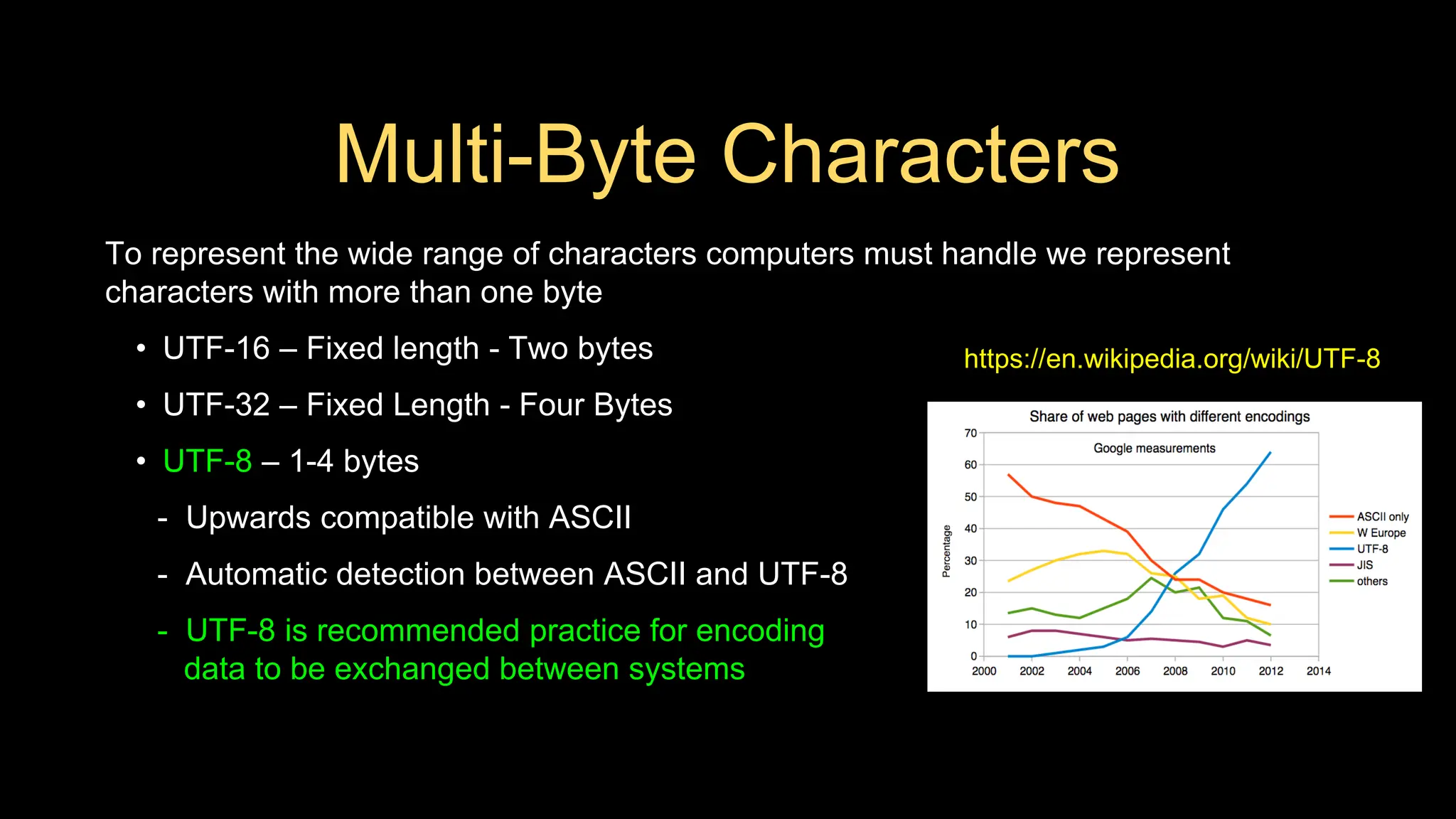

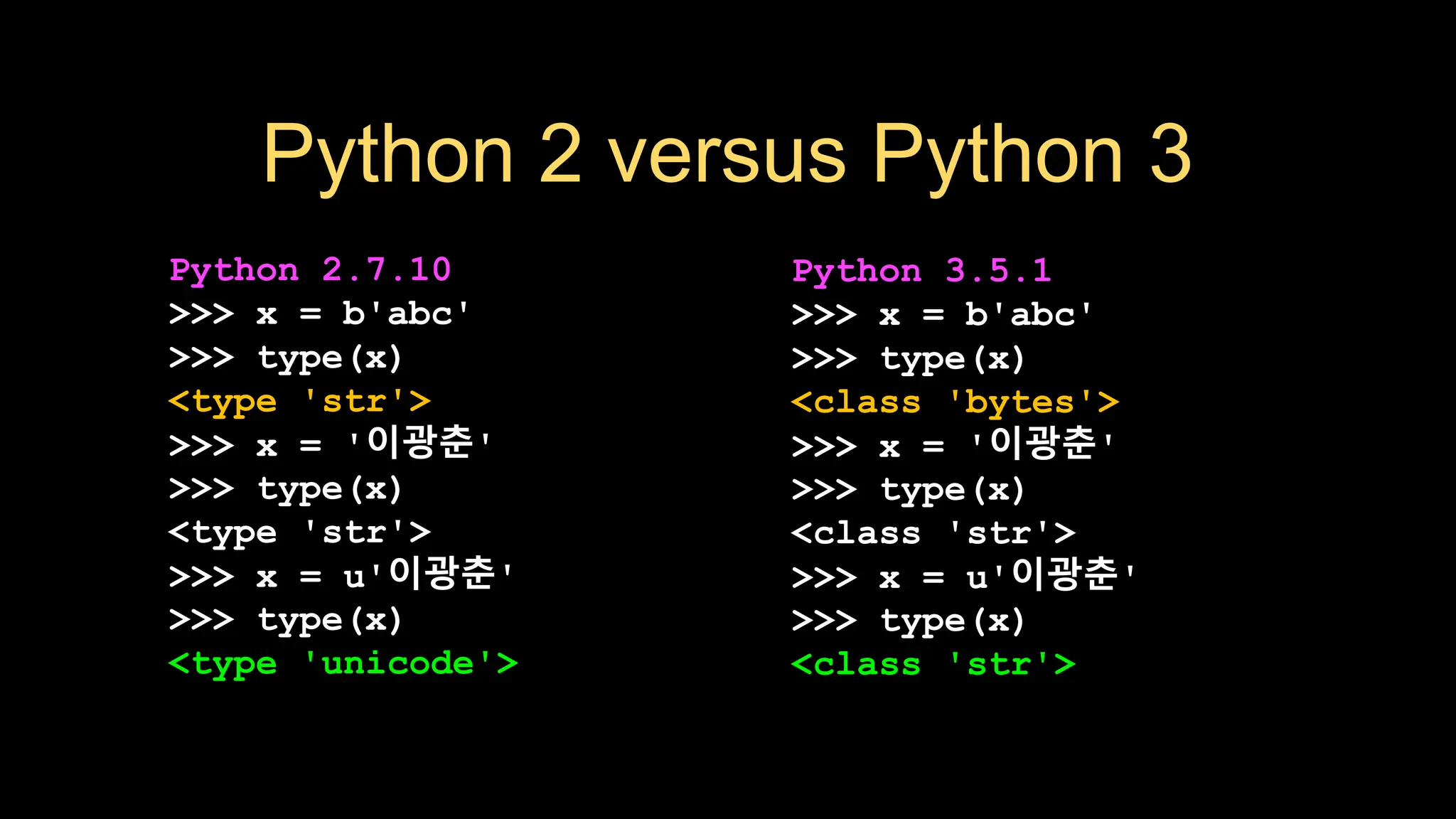

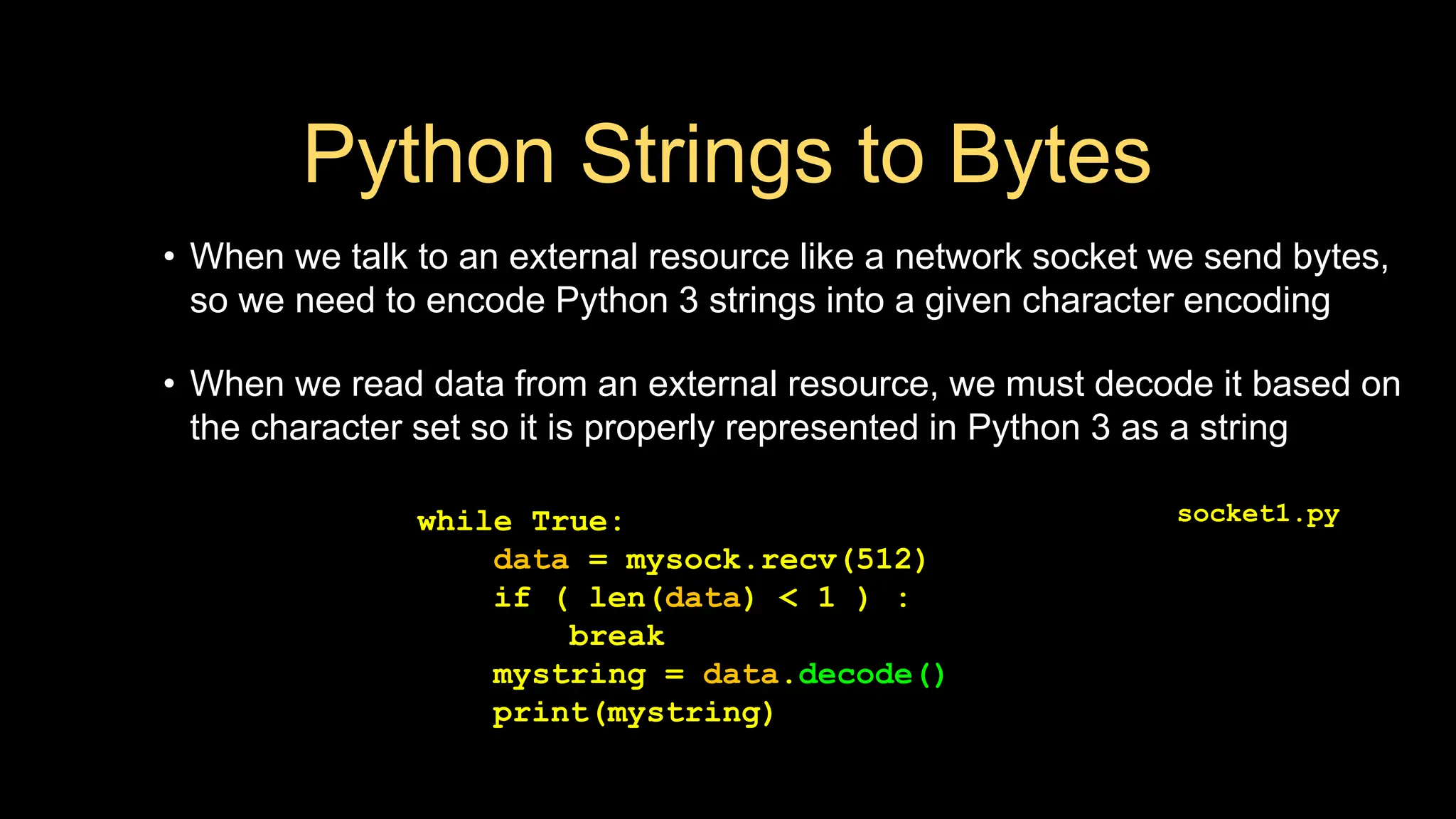

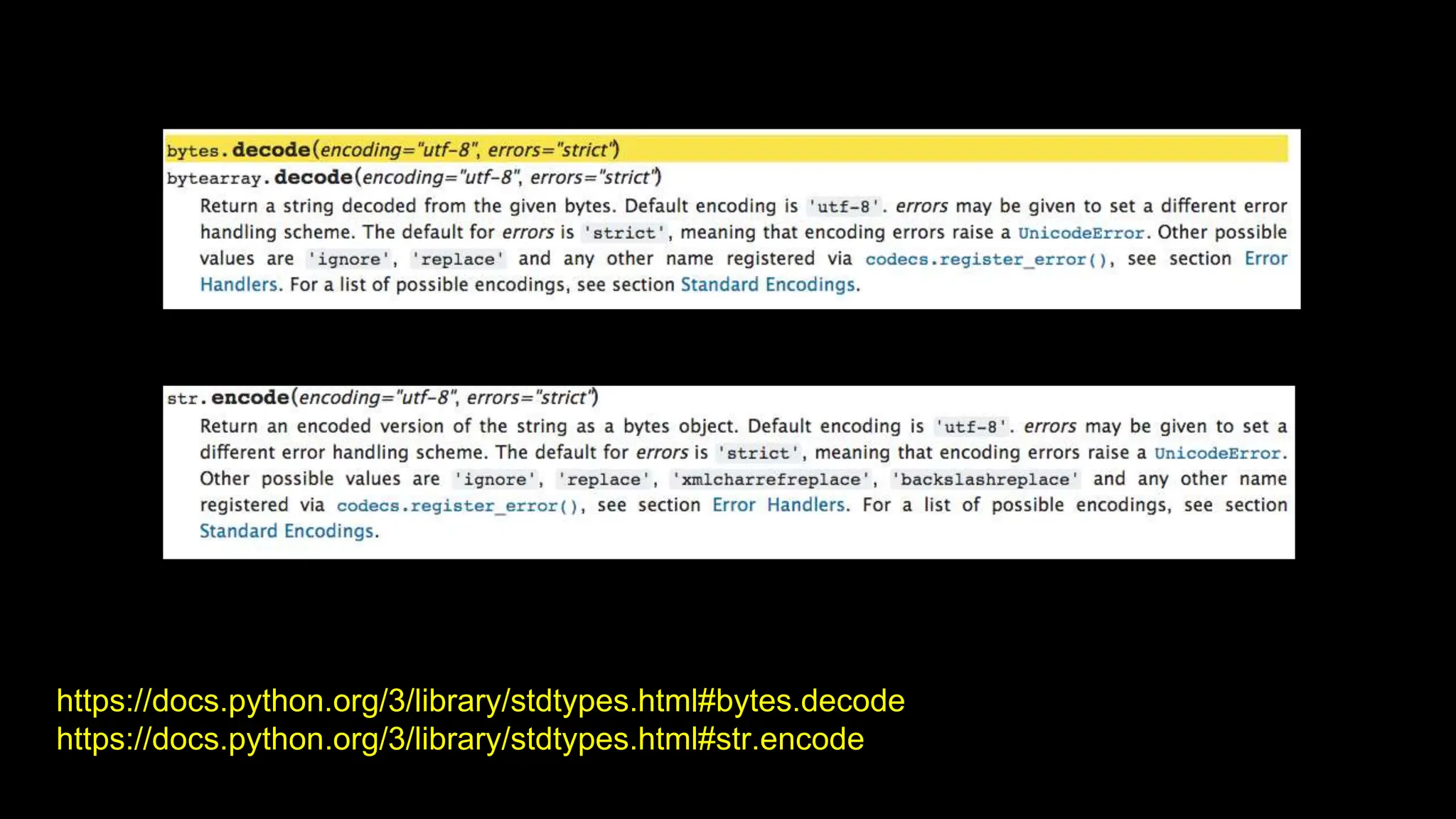

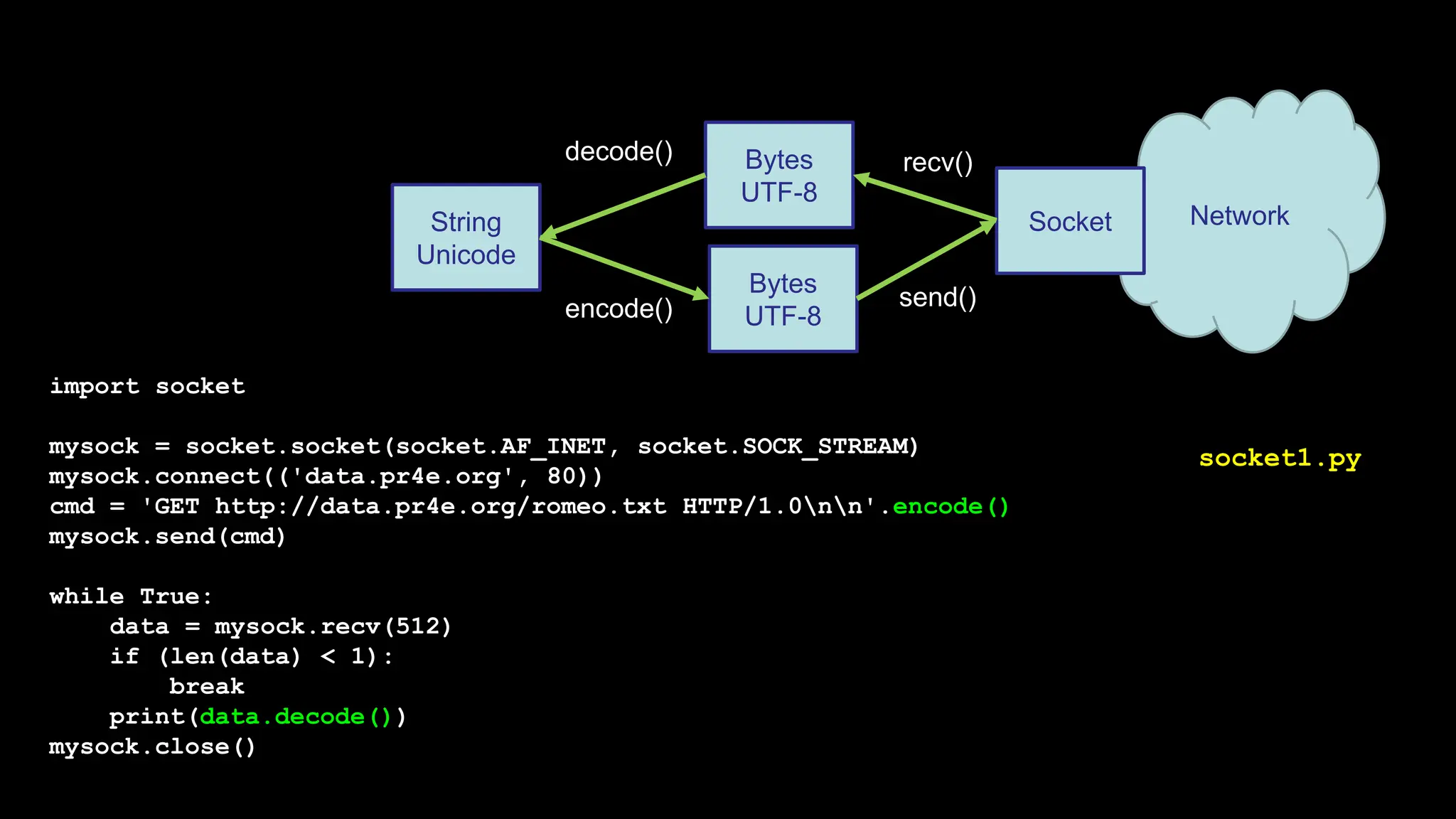

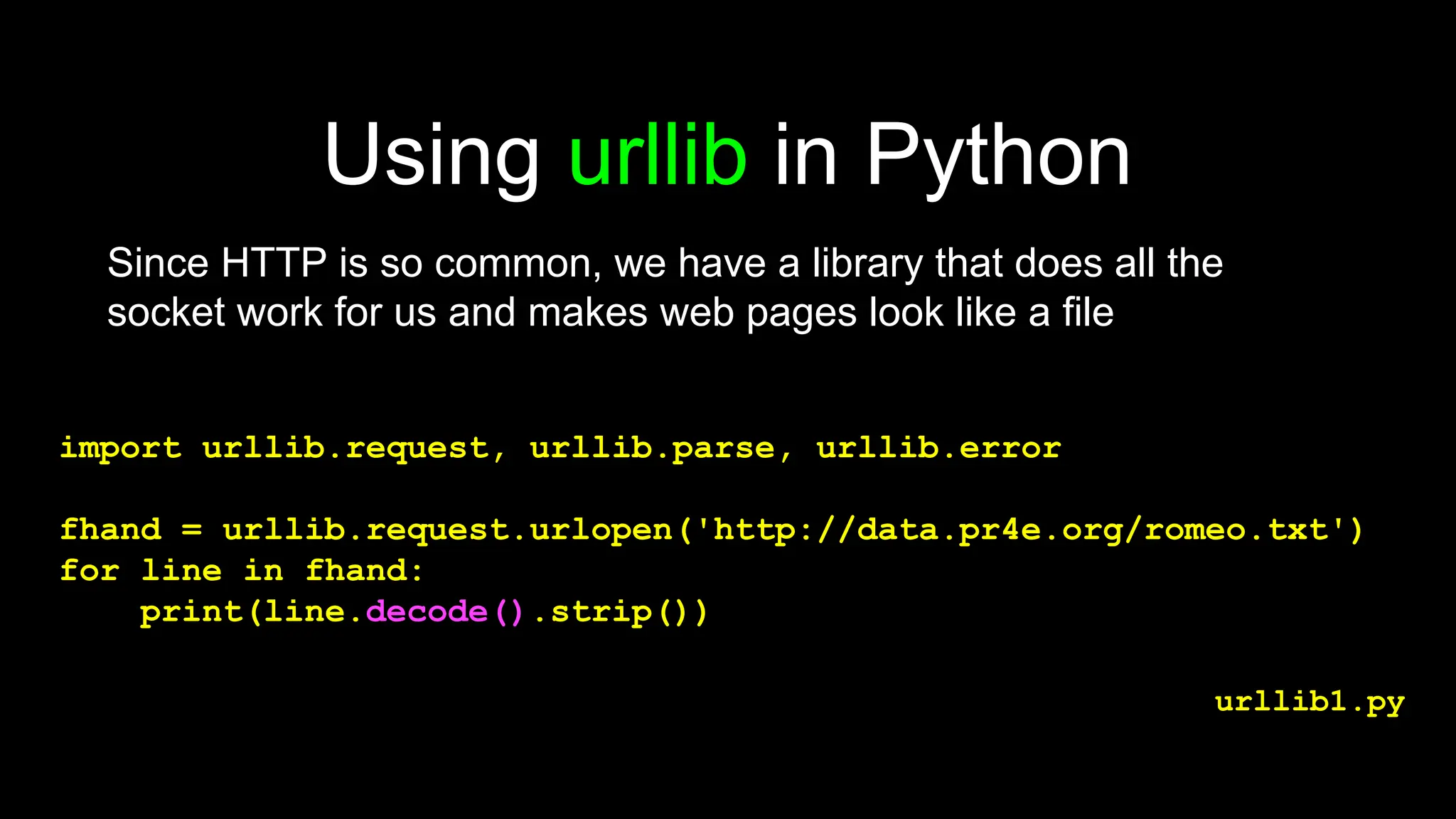

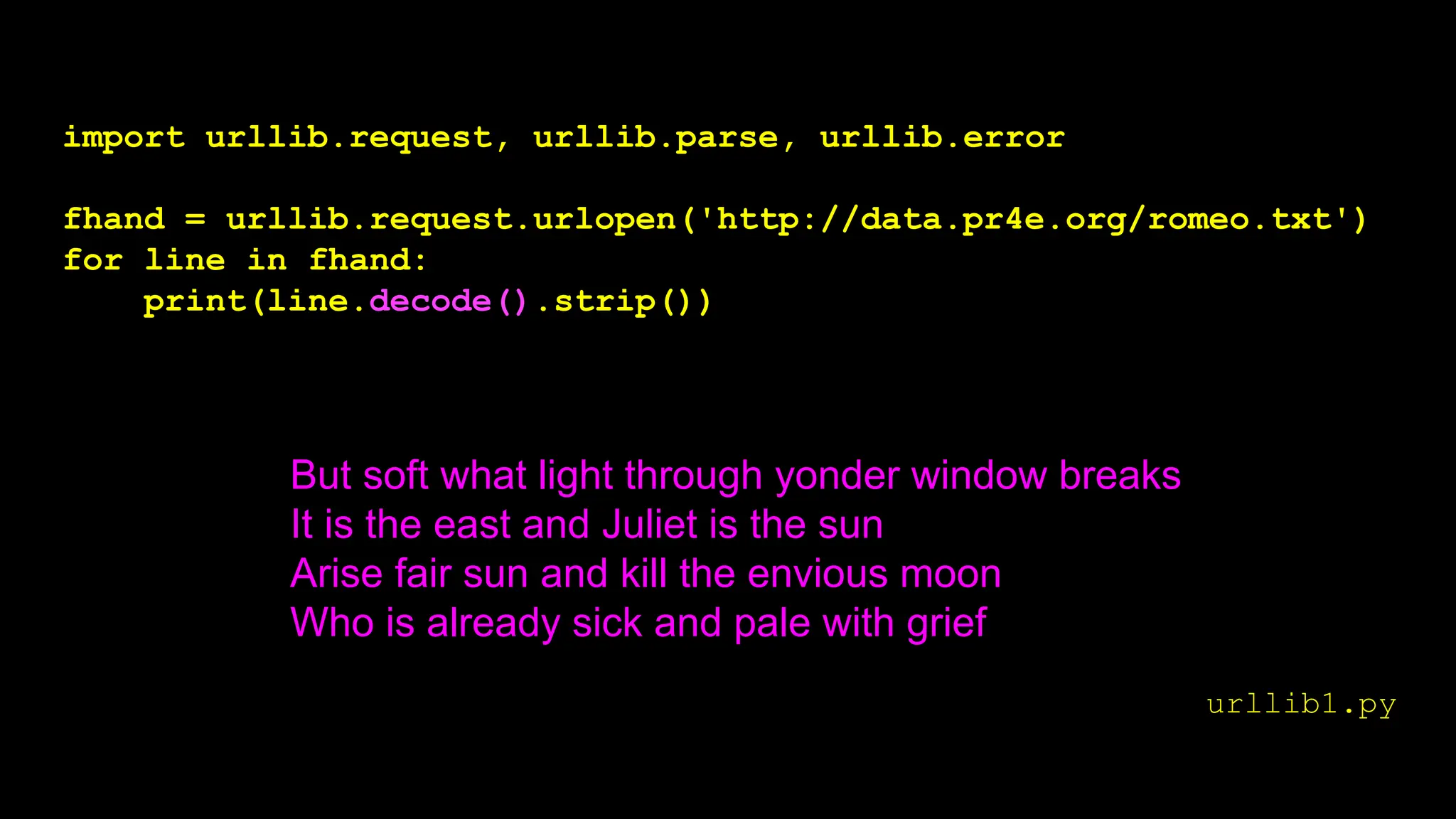

This document discusses network architecture, focusing on the Transmission Control Protocol (TCP) and the services it provides, such as flow control and retransmission of lost data. It describes how Python supports TCP sockets and gives an overview of application protocols, specifically the Hypertext Transfer Protocol (HTTP), detailing how web browsers request documents from web servers. It also covers why Unicode and string encoding matter in Python when exchanging data with network resources: data travels over a socket as bytes, so strings must be encoded before sending and decoded after receiving.
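As a rough illustration of the socket and encoding points above, the following sketch builds an HTTP request as a string, encodes it to bytes, and decodes whatever comes back. The helper names `build_get_request` and `fetch` are hypothetical, chosen for this example only, and the sketch assumes the server answers plain HTTP on port 80.

```python
import socket


def build_get_request(host: str, path: str = "/") -> bytes:
    """Return a minimal HTTP/1.0 GET request, encoded as bytes."""
    # Each header line ends with CRLF; a blank line ends the request.
    request = f"GET {path} HTTP/1.0\r\nHost: {host}\r\n\r\n"
    return request.encode()  # str -> bytes (UTF-8 by default)


def fetch(host: str, path: str = "/", port: int = 80) -> str:
    """Send the request over a TCP socket and return the decoded reply."""
    with socket.create_connection((host, port), timeout=10) as sock:
        sock.sendall(build_get_request(host, path))
        chunks = []
        while True:
            data = sock.recv(512)  # bytes arrive in pieces
            if not data:           # empty bytes: the server closed the connection
                break
            chunks.append(data)
    # bytes -> str; undecodable bytes are replaced rather than raising
    return b"".join(chunks).decode(errors="replace")
```

Calling `fetch("data.pr4e.org", "/page1.htm")` would perform the network round trip; `build_get_request` can be inspected on its own without any connection.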

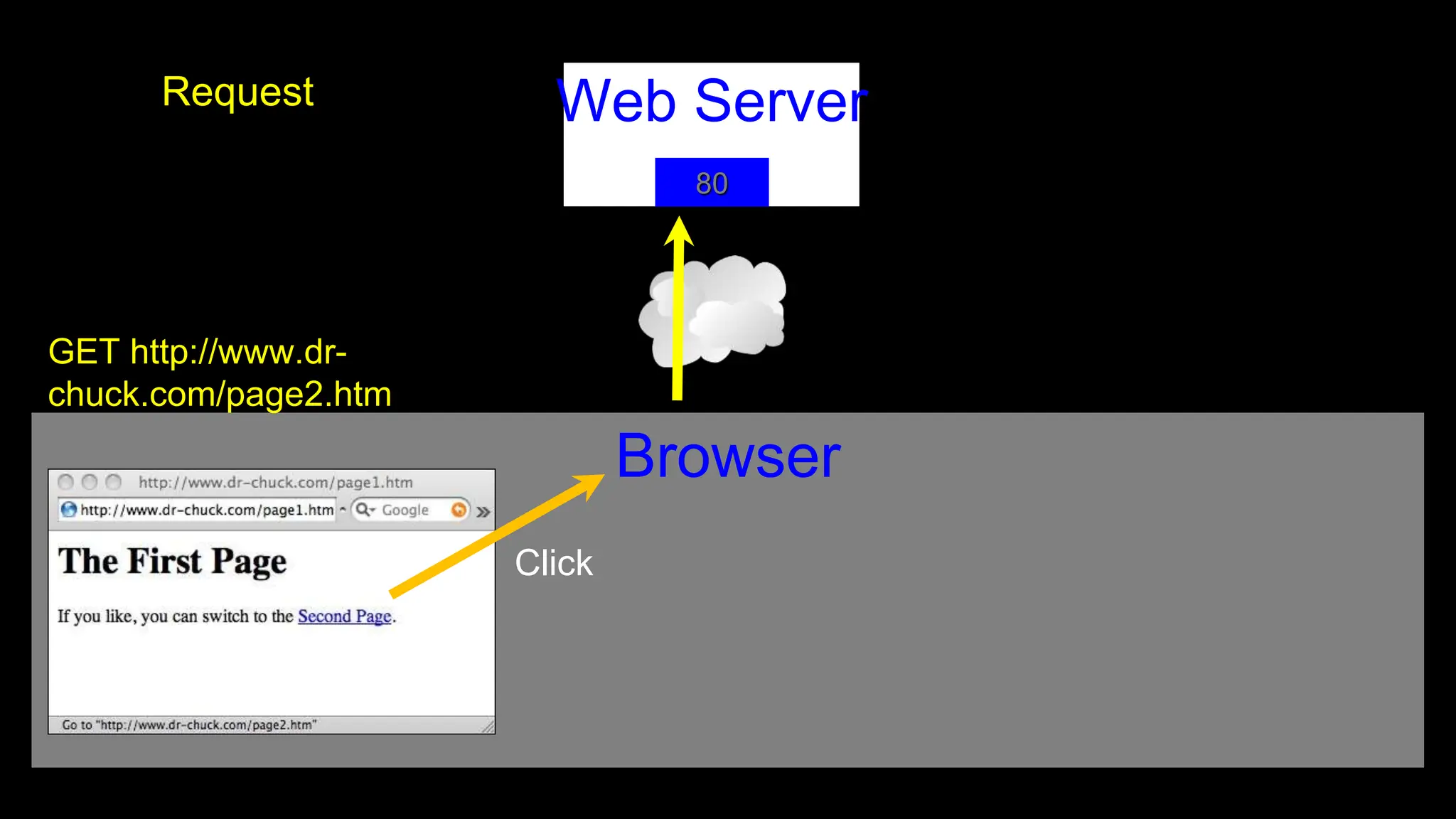

![Browser Web Server Note: Many servers do not support HTTP 1.0 $ telnet data.pr4e.org 80 Trying 74.208.28.177... Connected to data.pr4e.org. Escape character is '^]'. GET http://data.pr4e.org/page1.htm HTTP/1.0 HTTP/1.1 200 OK Date: Tue, 30 Jan 2024 15:30:13 GMT Server: Apache/2.4.18 (Ubuntu) Last-Modified: Mon, 15 May 2017 11:11:47 GMT Content-Length: 128 Content-Type: text/html <h1>The First Page</h1> <p>If you like, you can switch to the <a href="http://data.pr4e.org/page2.htm">Second Page</a>.</p> Connection closed by foreign host.](https://image.slidesharecdn.com/pythonlearn-12-http-240525014507-db546470/75/Pythonlearn-12-HTTP-Network-Programming-29-2048.jpg)
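The response in the telnet session above has a fixed shape: a status line, header lines, a blank line, then the document body. A minimal sketch of splitting such a response apart is shown below. The function name `parse_response` is hypothetical, and the sample bytes are an abbreviated copy of the session above with CRLF line endings assumed.

```python
def parse_response(raw: bytes):
    """Split a raw HTTP response into (status, reason, headers, body)."""
    # The first blank line (CRLF CRLF) separates headers from the body.
    head, _, body = raw.partition(b"\r\n\r\n")
    lines = head.decode("iso-8859-1").split("\r\n")
    # Status line looks like: HTTP/1.1 200 OK
    _version, status, reason = lines[0].split(" ", 2)
    headers = {}
    for line in lines[1:]:
        # Split on the first colon only, so values such as dates stay whole.
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return int(status), reason, headers, body


# Abbreviated sample modelled on the telnet session above.
sample = (
    b"HTTP/1.1 200 OK\r\n"
    b"Date: Tue, 30 Jan 2024 15:30:13 GMT\r\n"
    b"Content-Type: text/html\r\n"
    b"\r\n"
    b"<h1>The First Page</h1>\n"
)

status, reason, headers, body = parse_response(sample)
print(status, reason, headers["content-type"])
```

Header names are lowercased here because HTTP treats them as case-insensitive; that is a design choice of this sketch, not something the slides prescribe.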

![Like a File... import urllib.request, urllib.parse, urllib.error fhand = urllib.request.urlopen('http://data.pr4e.org/romeo.txt') counts = dict() for line in fhand: words = line.decode().split() for word in words: counts[word] = counts.get(word, 0) + 1 print(counts) urlwords.py](https://image.slidesharecdn.com/pythonlearn-12-http-240525014507-db546470/75/Pythonlearn-12-HTTP-Network-Programming-50-2048.jpg)
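The `urlwords.py` slide above treats the object returned by `urllib.request.urlopen` like a file whose lines are bytes. The sketch below isolates the counting step into a hypothetical `count_words` function and feeds it an in-memory `io.BytesIO` stand-in, so the decoding behavior can be tried without a network connection. The two sample lines are illustrative input, not fetched data.

```python
import io


def count_words(lines):
    """Count words in an iterable of byte lines, as yielded by urlopen()."""
    counts = dict()
    for line in lines:
        # Each line is bytes; decode() turns it into str (UTF-8 by default).
        words = line.decode().split()
        for word in words:
            counts[word] = counts.get(word, 0) + 1
    return counts


# Stand-in for the response object: iterating BytesIO also yields byte lines.
fake_response = io.BytesIO(
    b"But soft what light\nIt is the east and Juliet is the sun\n"
)
counts = count_words(fake_response)
print(counts)
```

With a live connection, the same function could be called as `count_words(urllib.request.urlopen('http://data.pr4e.org/romeo.txt'))`, since the response object is iterable line by line.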