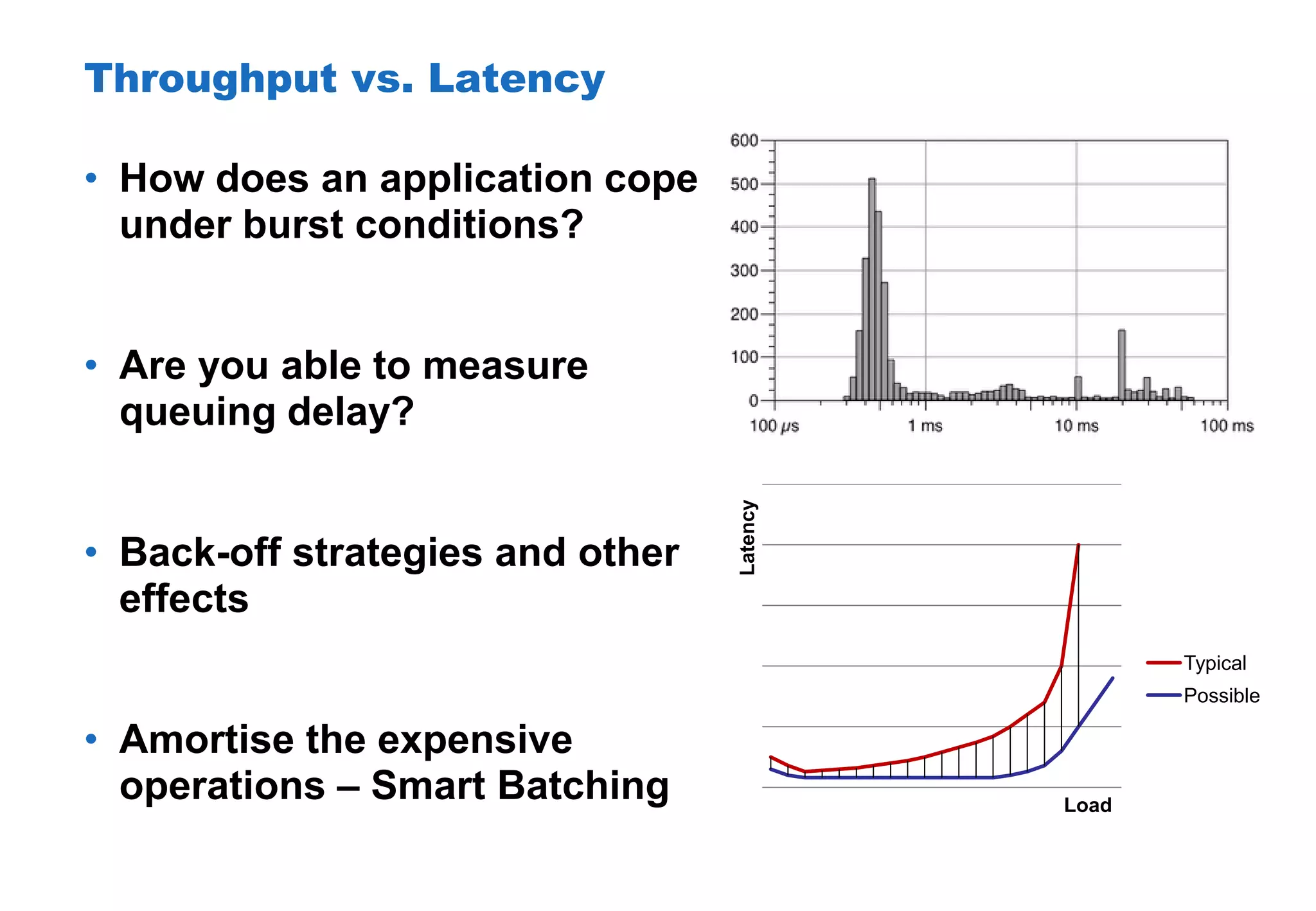

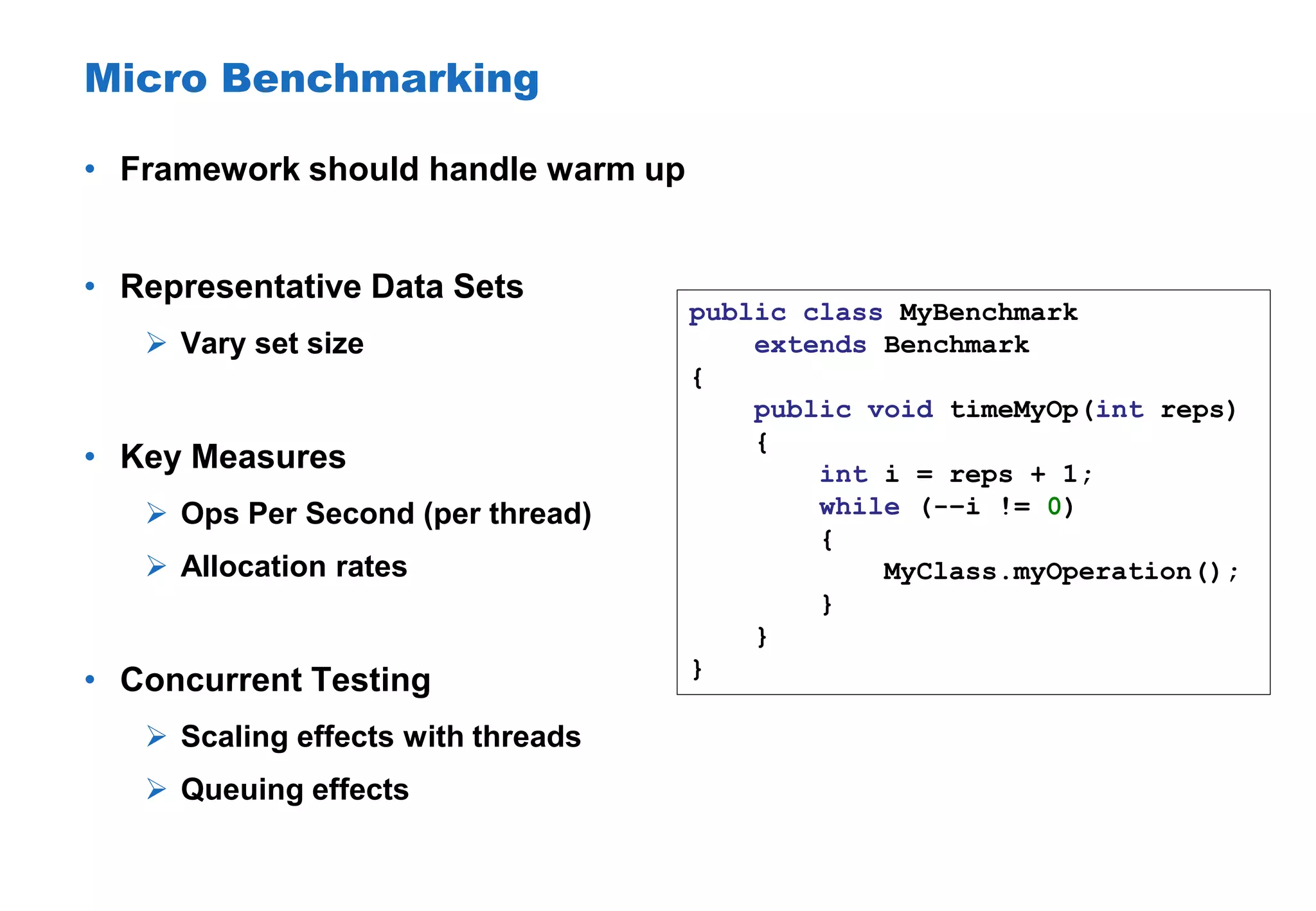

The document discusses performance testing for Java applications. It focuses on key metrics such as throughput and latency, and on the main types of performance test, such as stress testing and capacity testing. It stresses understanding the application's architectural layers, following sound testing practices, and treating performance as a responsibility shared by all developers rather than a separate team. It also covers tools and strategies for effective performance profiling, and warns against premature optimization and against common Java-specific pitfalls.
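Throughput and latency are usually derived from the same raw data: the per-request latencies recorded during a measurement window. The following is a minimal sketch, not taken from the slides. The class `LatencyStats`, its methods, and the sample numbers are hypothetical, and it assumes the nearest-rank percentile definition (other tools interpolate between samples).

```java
import java.util.Arrays;

/**
 * Hypothetical helper: derives throughput and latency percentiles
 * from per-request latencies recorded during one measurement window.
 */
public class LatencyStats {

    /** Requests completed per second over a window of the given length. */
    public static double throughputPerSecond(int completedRequests, long windowNanos) {
        return completedRequests / (windowNanos / 1_000_000_000.0);
    }

    /**
     * Nearest-rank percentile (p in (0, 100]) of the recorded latencies.
     * Sorts a copy so the caller's array is left untouched.
     */
    public static long percentile(long[] latencies, double p) {
        if (latencies.length == 0) throw new IllegalArgumentException("no samples");
        if (p <= 0 || p > 100) throw new IllegalArgumentException("p must be in (0, 100]");
        long[] sorted = latencies.clone();
        Arrays.sort(sorted);
        int rank = (int) Math.ceil(p * sorted.length / 100.0); // 1-based rank
        return sorted[rank - 1];
    }

    public static void main(String[] args) {
        // Ten made-up request latencies in milliseconds, converted to nanoseconds.
        long[] ms = {12, 15, 11, 90, 14, 13, 16, 12, 200, 15};
        long[] nanos = Arrays.stream(ms).map(m -> m * 1_000_000L).toArray();

        // Assume all ten requests completed within a 2-second window.
        double tput = throughputPerSecond(nanos.length, 2_000_000_000L);
        System.out.printf("throughput = %.1f req/s%n", tput);
        System.out.printf("p50 = %d ms, p90 = %d ms, p99 = %d ms%n",
                percentile(nanos, 50) / 1_000_000,
                percentile(nanos, 90) / 1_000_000,
                percentile(nanos, 99) / 1_000_000);
    }
}
```

Percentiles tell a different story from the mean: the two slow samples barely move the median but dominate the tail. With only ten samples, the nearest-rank p99 is simply the maximum, so tail percentiles need many more samples before they mean much.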

![Anatomy Of A Micro Benchmark](https://image.slidesharecdn.com/untitled-130417100607-phpapp02/75/Performance-Testing-Java-Applications-23-2048.jpg)

```java
public class MapBenchmark extends Benchmark {
    private int size;
    private Map<Long, String> map = new MySpecialMap<Long, String>();
    private long[] keys;
    private String[] values;

    // setup method to init keys and values

    public void timePutOperation(int reps) {
        for (int i = 0; i < reps; i++) {
            map.put(keys[i], values[i]);
        }
    }
}
```
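The slide's `timePutOperation(int reps)` follows the "time method taking a repetition count" convention that, as far as I know, comes from Google Caliper, where the harness chooses `reps`. Because the slide's loop indexes `keys[i]` directly, its setup must size the arrays to at least `reps`. The following is a minimal hand-rolled sketch of the same idea, assuming no harness is available. `HashMap` stands in for the slide's `MySpecialMap`, the class name and key-generation scheme are illustrative, and `i % size` keeps the loop in bounds. It also shows two Java-specific precautions: a warm-up phase so the JIT can compile the hot loop before timing, and consuming the result so the work cannot be discarded as dead code. For real measurements, a dedicated harness such as JMH handles these issues far more rigorously.

```java
import java.util.HashMap;
import java.util.Map;

/** Illustrative hand-rolled version of the slide's map put benchmark. */
public class MapPutMicroBenchmark {
    private final int size;
    private final long[] keys;
    private final String[] values;

    public MapPutMicroBenchmark(int size) {
        this.size = size;
        this.keys = new long[size];
        this.values = new String[size];
        // Setup runs once, outside the timed region. Multiplying by an odd
        // constant is a bijection on long, so all keys are distinct but scattered.
        for (int i = 0; i < size; i++) {
            keys[i] = i * 0x9E3779B97F4A7C15L;
            values[i] = "v" + i;
        }
    }

    /**
     * Timed body: performs reps puts into a fresh map. Indexing with i % size
     * stays in bounds even when reps exceeds the number of prepared keys.
     * Returns the map size so the result is used.
     */
    public int timePutOperation(int reps) {
        Map<Long, String> map = new HashMap<>();
        for (int i = 0; i < reps; i++) {
            map.put(keys[i % size], values[i % size]);
        }
        return map.size();
    }

    /** Runs the body for several rounds and returns the mean nanoseconds per put. */
    public double nanosPerPut(int reps, int rounds) {
        long sink = 0;
        long start = System.nanoTime();
        for (int r = 0; r < rounds; r++) {
            sink += timePutOperation(reps);
        }
        long elapsed = System.nanoTime() - start;
        if (sink < 0) System.out.println(sink); // never true; keeps sink observably used
        return (double) elapsed / ((long) reps * rounds);
    }

    public static void main(String[] args) {
        MapPutMicroBenchmark bench = new MapPutMicroBenchmark(10_000);
        // Warm-up: give the JIT a chance to compile the hot loop before measuring.
        for (int i = 0; i < 20; i++) {
            bench.nanosPerPut(10_000, 10);
        }
        System.out.printf("~%.1f ns per put%n", bench.nanosPerPut(10_000, 50));
    }
}
```

The printed figure varies between machines, JVM versions, and runs. A single hand-rolled number like this is best treated as a rough indication, not a result to optimize against.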