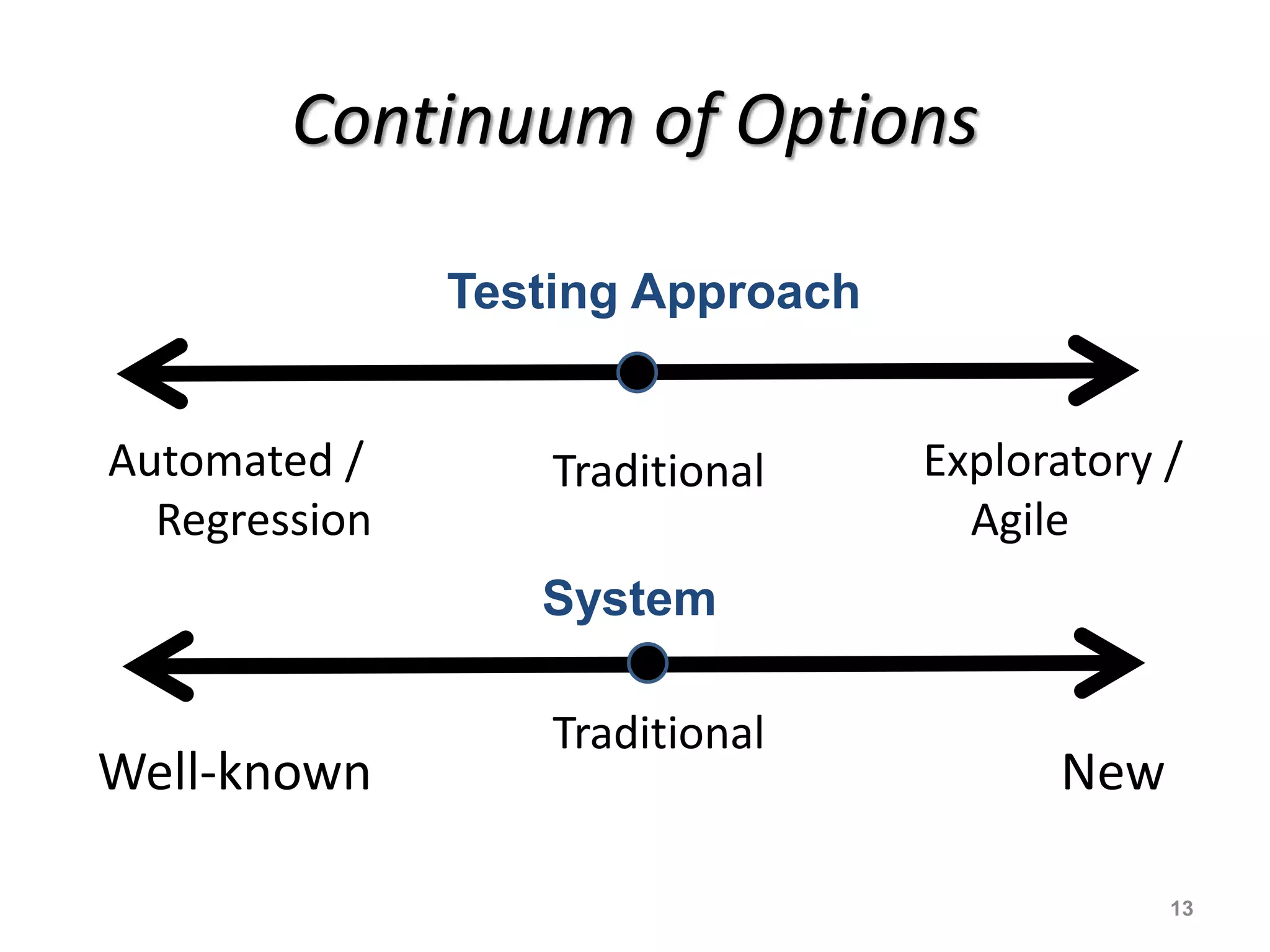

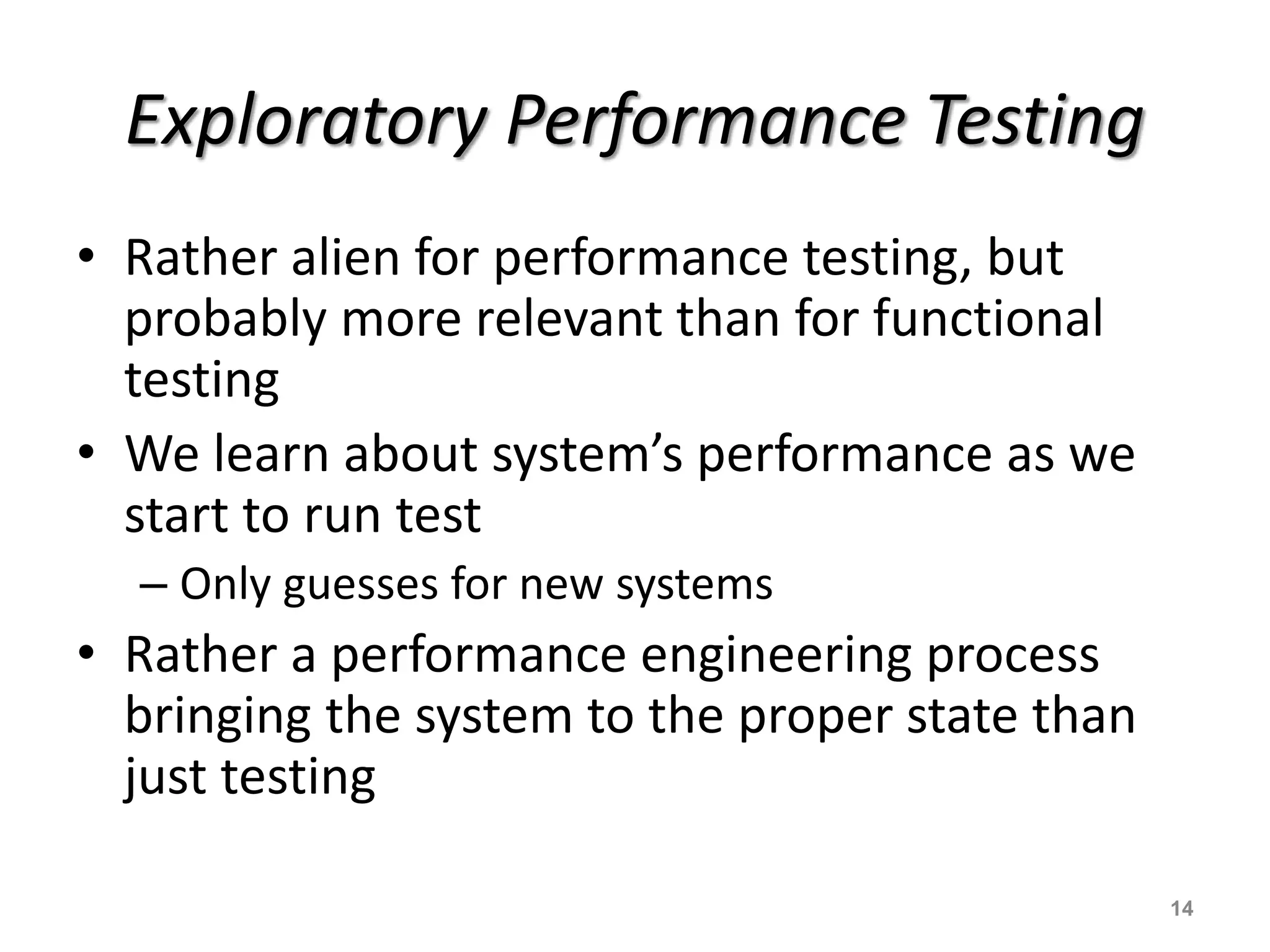

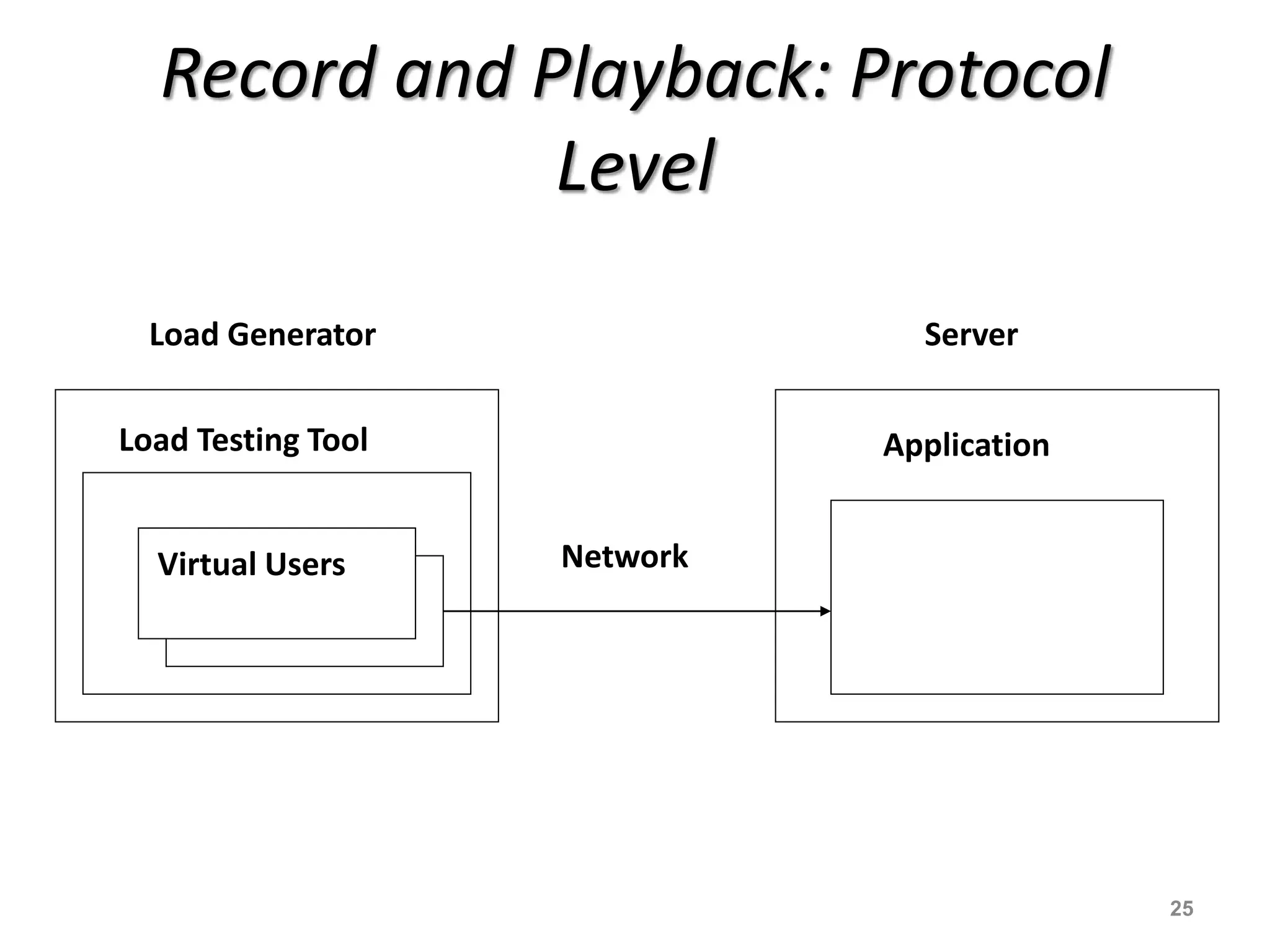

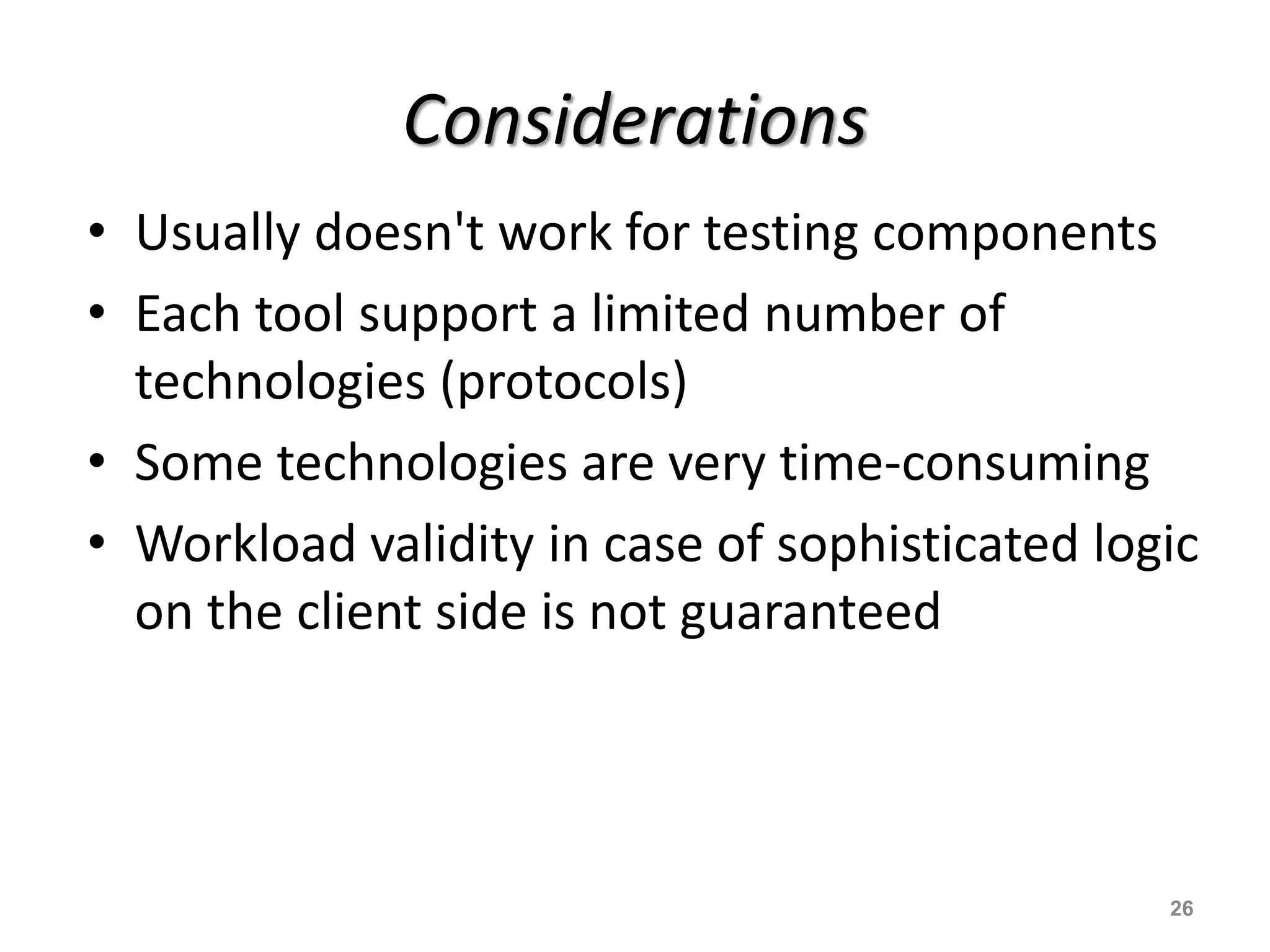

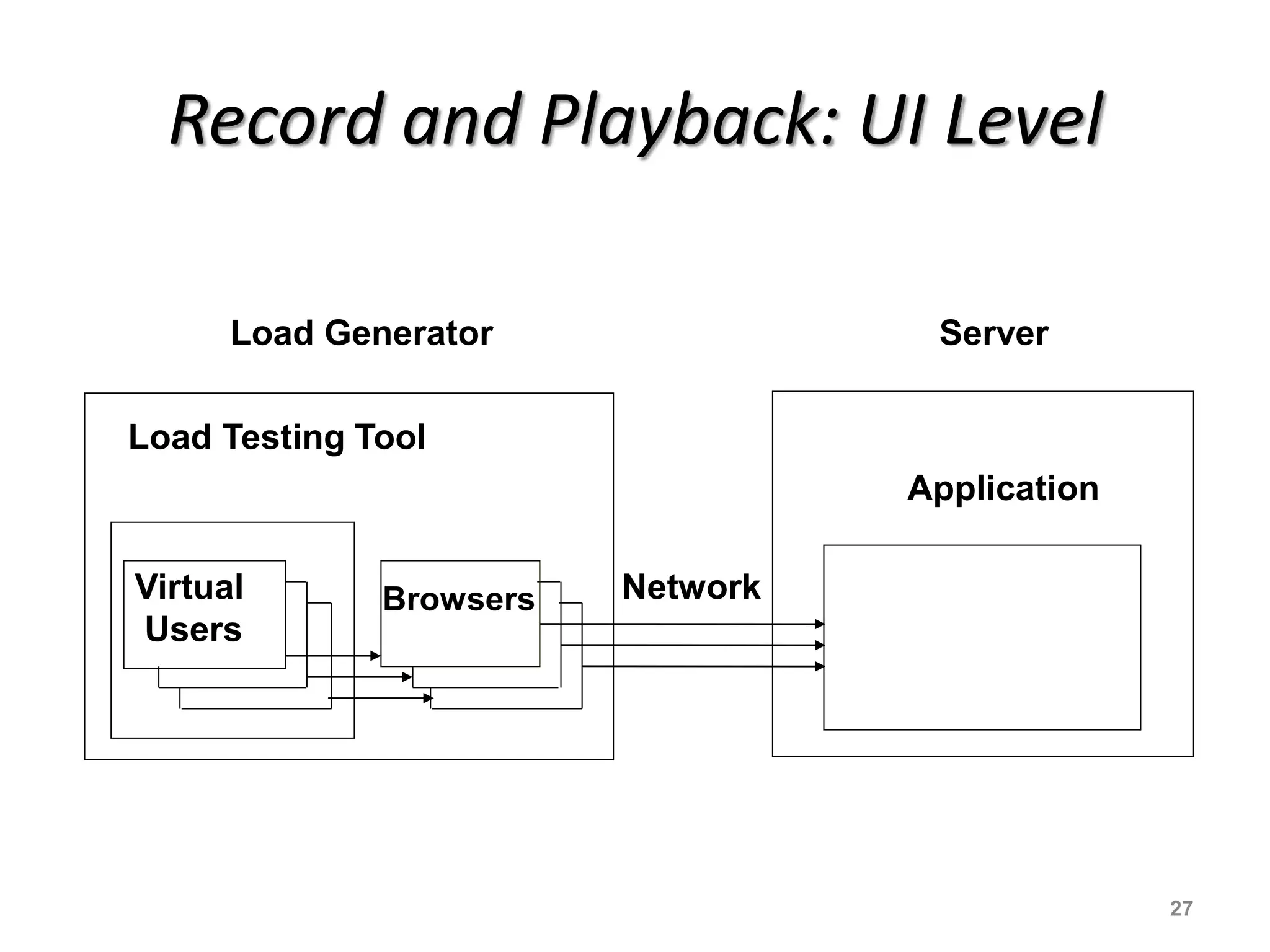

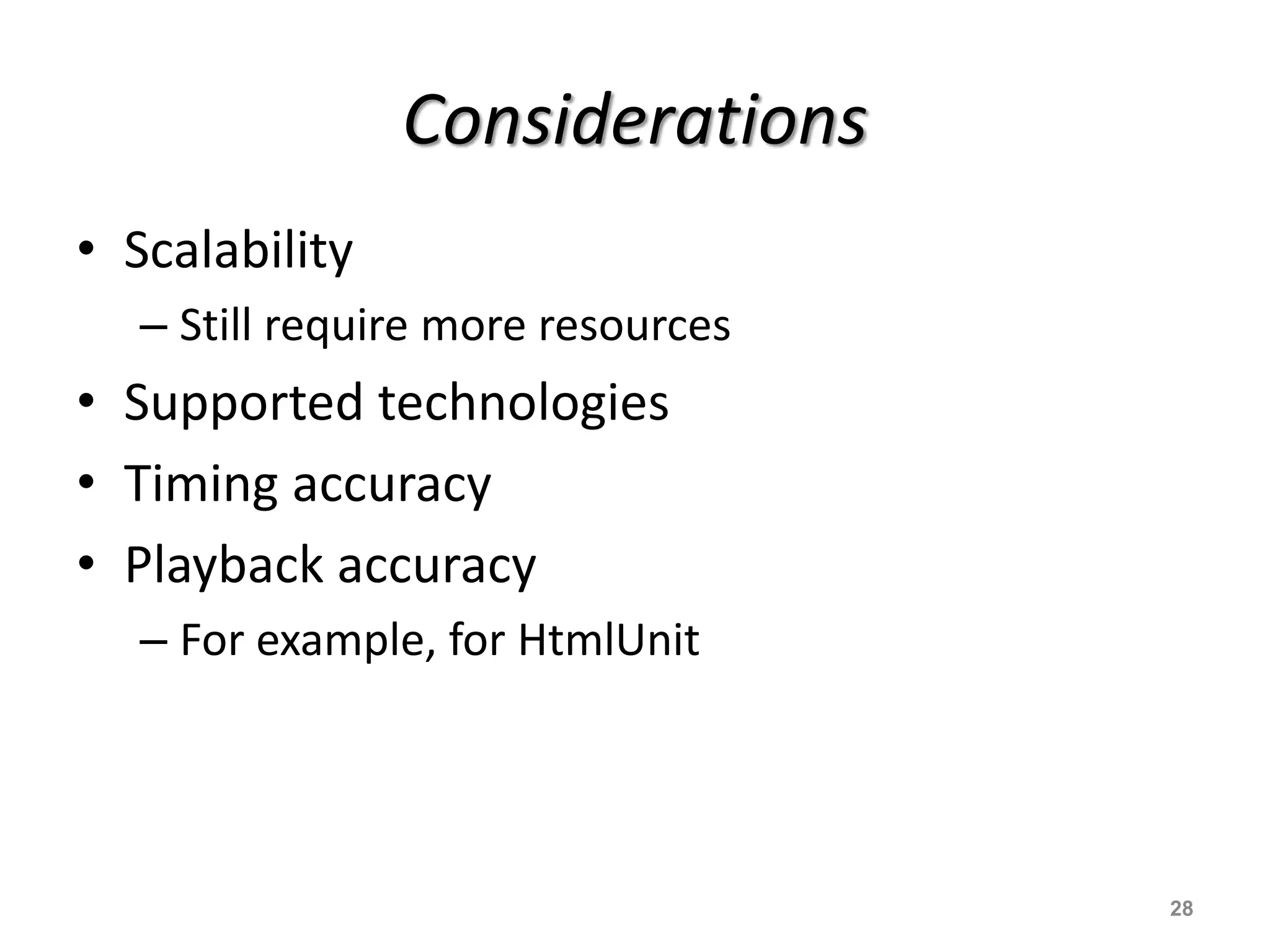

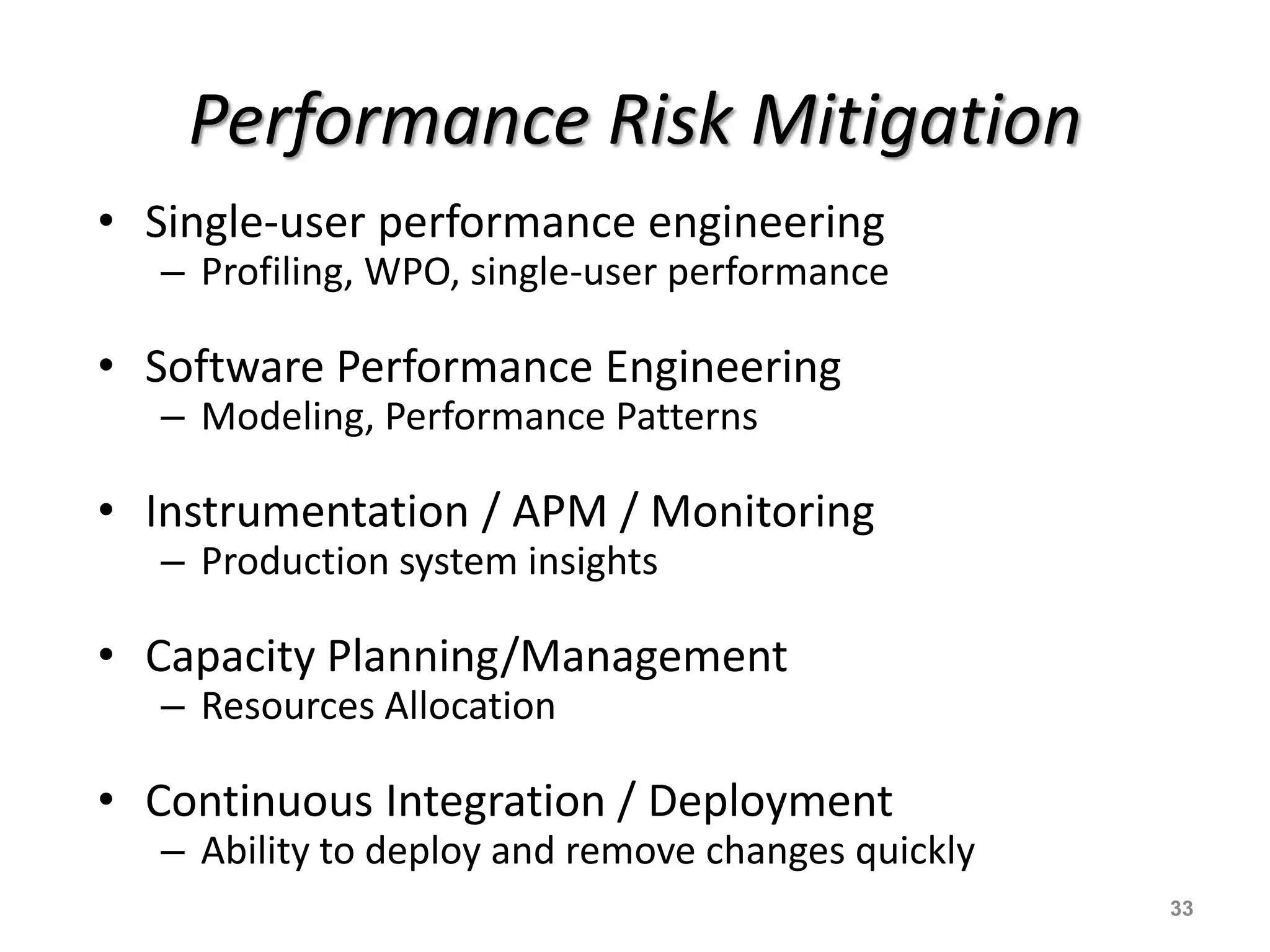

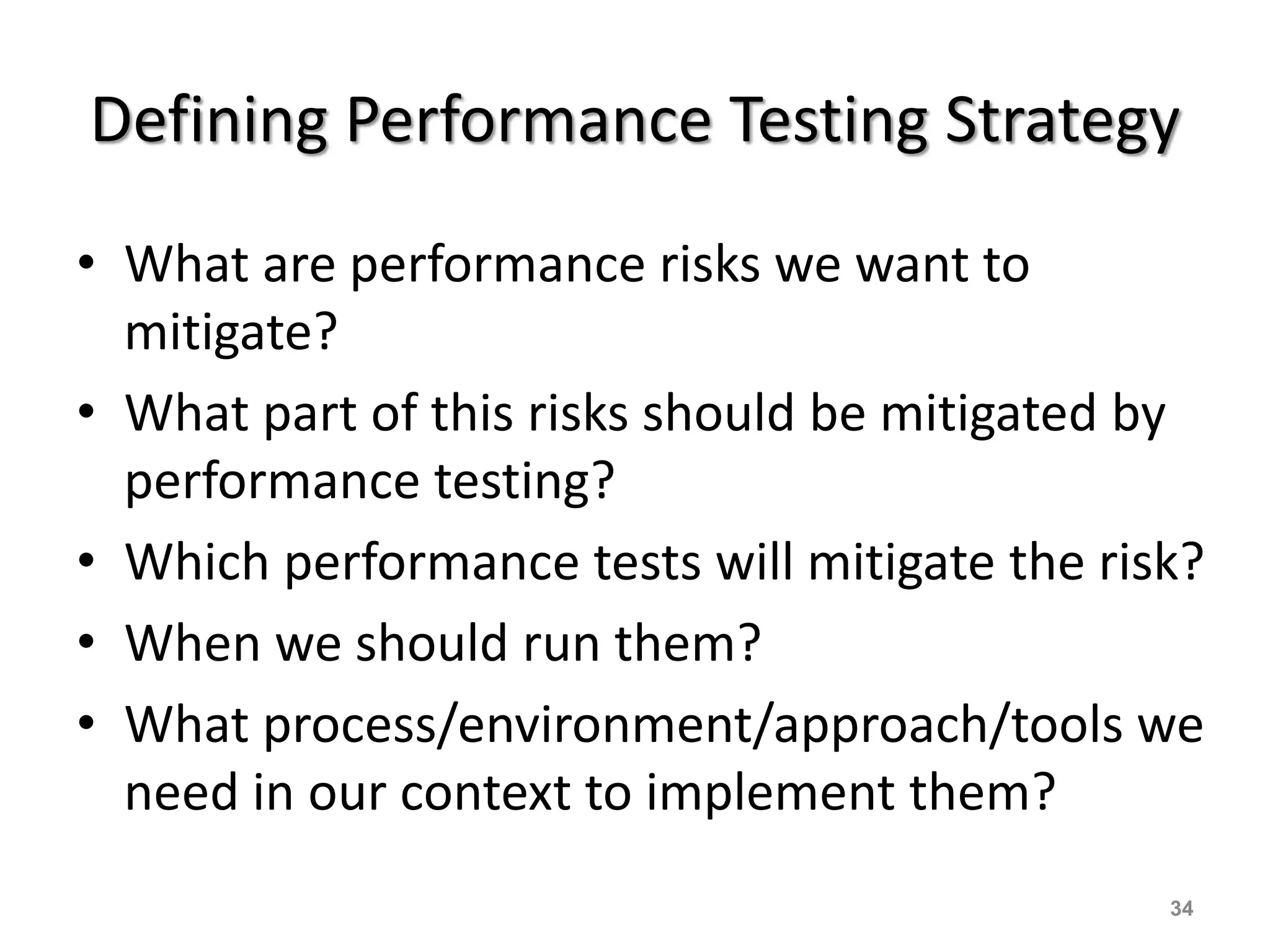

This presentation covers context-driven performance testing. It advocates starting performance testing early in agile development, using exploratory and continuous testing approaches. Tests can be run at the component level and in different environments, such as cloud, lab, and production. Load can be generated through record and playback, through programming, or by using production workloads. Defining a performance testing strategy means deciding, based on the project context, which risks matter, which tests address them, when to run those tests, and which processes to follow. The strategy is one part of an overall performance engineering approach.
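Of the load generation options mentioned above, the "programming" one can be sketched in a few lines. The following is a minimal illustration, not the presentation's own tooling: `component_under_test` is a hypothetical stand-in for whatever component is being exercised, and the user counts and simulated delay are arbitrary assumptions.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import median


def component_under_test(payload: int) -> int:
    """Hypothetical stand-in for the component being exercised."""
    time.sleep(0.001)  # simulate a small amount of work (assumed value)
    return payload * 2


def timed_call(payload: int) -> float:
    """Call the component once and return the elapsed time in seconds."""
    start = time.perf_counter()
    component_under_test(payload)
    return time.perf_counter() - start


def run_load(virtual_users: int, requests_per_user: int) -> list:
    """Issue requests from a pool of worker threads and collect latencies."""
    total_requests = virtual_users * requests_per_user
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        return list(pool.map(timed_call, range(total_requests)))


def summarize(latencies: list) -> dict:
    """Return the request count, median latency, and an approximate p95."""
    ordered = sorted(latencies)
    p95_index = max(0, int(len(ordered) * 0.95) - 1)
    return {
        "count": len(ordered),
        "median_s": median(ordered),
        "p95_s": ordered[p95_index],
    }


if __name__ == "__main__":
    print(summarize(run_load(virtual_users=4, requests_per_user=5)))
```

Because the target is an ordinary function, the same harness could be pointed at a single component early in development, well before a full system is available to test.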

![Slide 10: The Main Issue on the Agile Side](https://image.slidesharecdn.com/tlc2020contextdrivenperformancetesting-200930014640/75/Context-Driven-Performance-Testing-10-2048.jpg)

The Main Issue on the Agile Side

- It doesn’t [always] work this way in practice
- That is why you have “Hardening Iterations”, “Technical Debt” and similar notions
- Same old problem: functionality gets priority over performance

![Slide 35: Examples](https://image.slidesharecdn.com/tlc2020contextdrivenperformancetesting-200930014640/75/Context-Driven-Performance-Testing-35-2048.jpg)

Examples

- Handling full/extra load: system level, production[-like env], realistic load
- Catching regressions: continuous testing, limited scale/env
- Early detection of performance problems: exploratory tests, targeted workload
- Performance optimization/investigation: dedicated env, targeted workload
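The examples on slide 35 amount to a lookup from testing goal to test setup. A small sketch of that idea follows. The `Approach` class, the `STRATEGY` table, and the `plan_for` helper are hypothetical names introduced here for illustration. Fields the slide leaves unstated are kept as `None` rather than guessed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Approach:
    """One row of the slide: how a given goal is tested."""
    test_style: Optional[str]
    environment: Optional[str]
    workload: Optional[str]


# Values are taken from the slide text; None marks what the slide does not state.
STRATEGY = {
    "handling full/extra load": Approach(
        "system level", "production or production-like", "realistic load"),
    "catching regressions": Approach(
        "continuous testing", "limited scale/env", None),
    "early detection of performance problems": Approach(
        "exploratory tests", None, "targeted workload"),
    "performance optimization/investigation": Approach(
        None, "dedicated env", "targeted workload"),
}


def plan_for(goal: str) -> Approach:
    """Look up the approach for a goal, ignoring case and extra spaces."""
    key = goal.strip().lower()
    if key not in STRATEGY:
        raise KeyError(f"no approach recorded for goal: {goal!r}")
    return STRATEGY[key]


if __name__ == "__main__":
    for goal, approach in STRATEGY.items():
        print(f"{goal}: {approach}")
```

Keeping the mapping as data makes the context-dependence explicit: a project with different risks would change the table, not the lookup.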