This document presents a detailed overview of parsing techniques in compiler design, particularly focusing on bottom-up parsing methods such as shift-reduce and operator-precedence parsing. It discusses concepts like rightmost derivation, handle pruning, and the construction of parsing tables, along with examples and the advantages and disadvantages of various parsing methods, including LR parsing techniques. Additionally, it addresses the complexities involved in grammar handling, conflict resolution, and the implementation of different parsers.

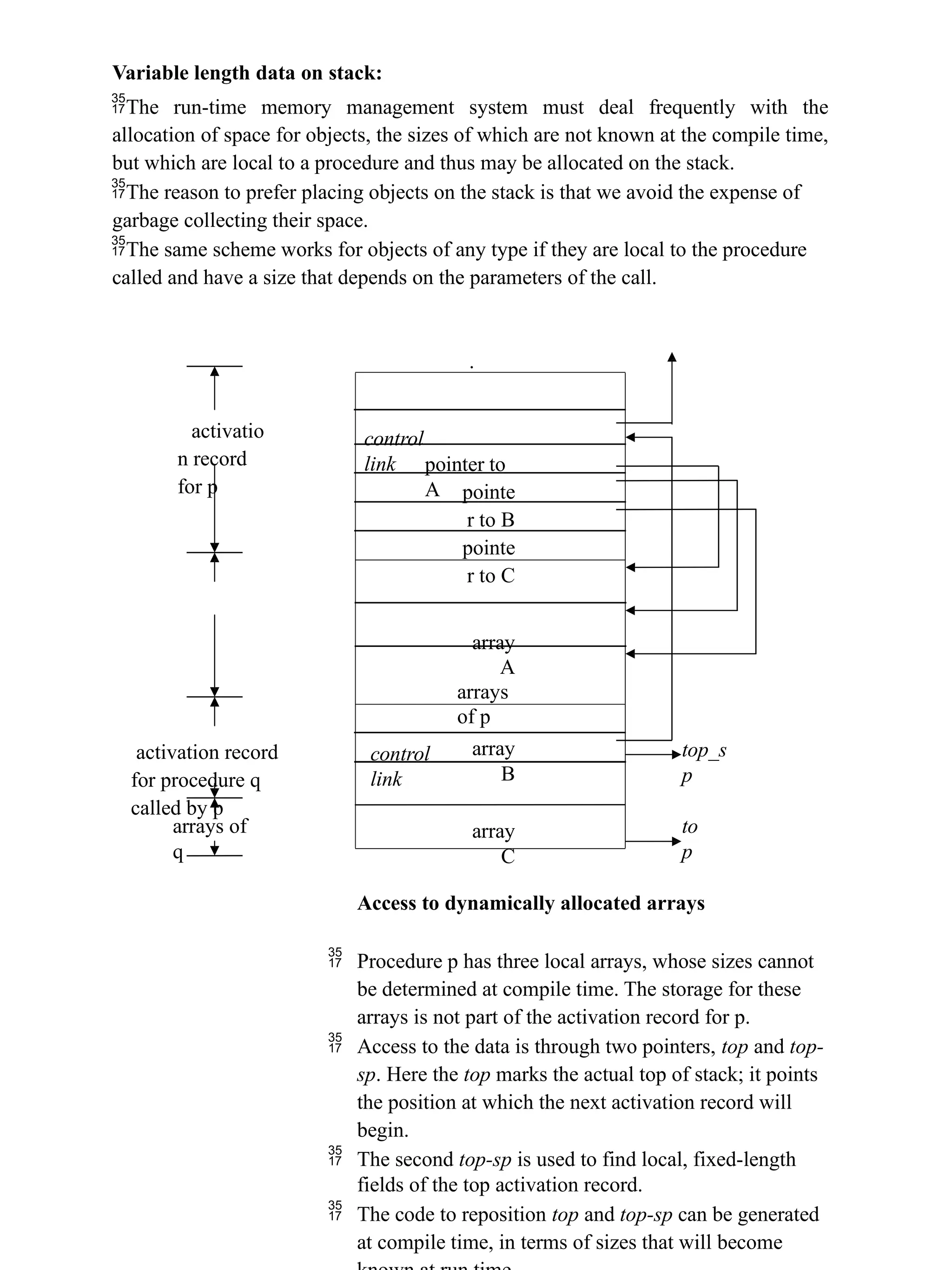

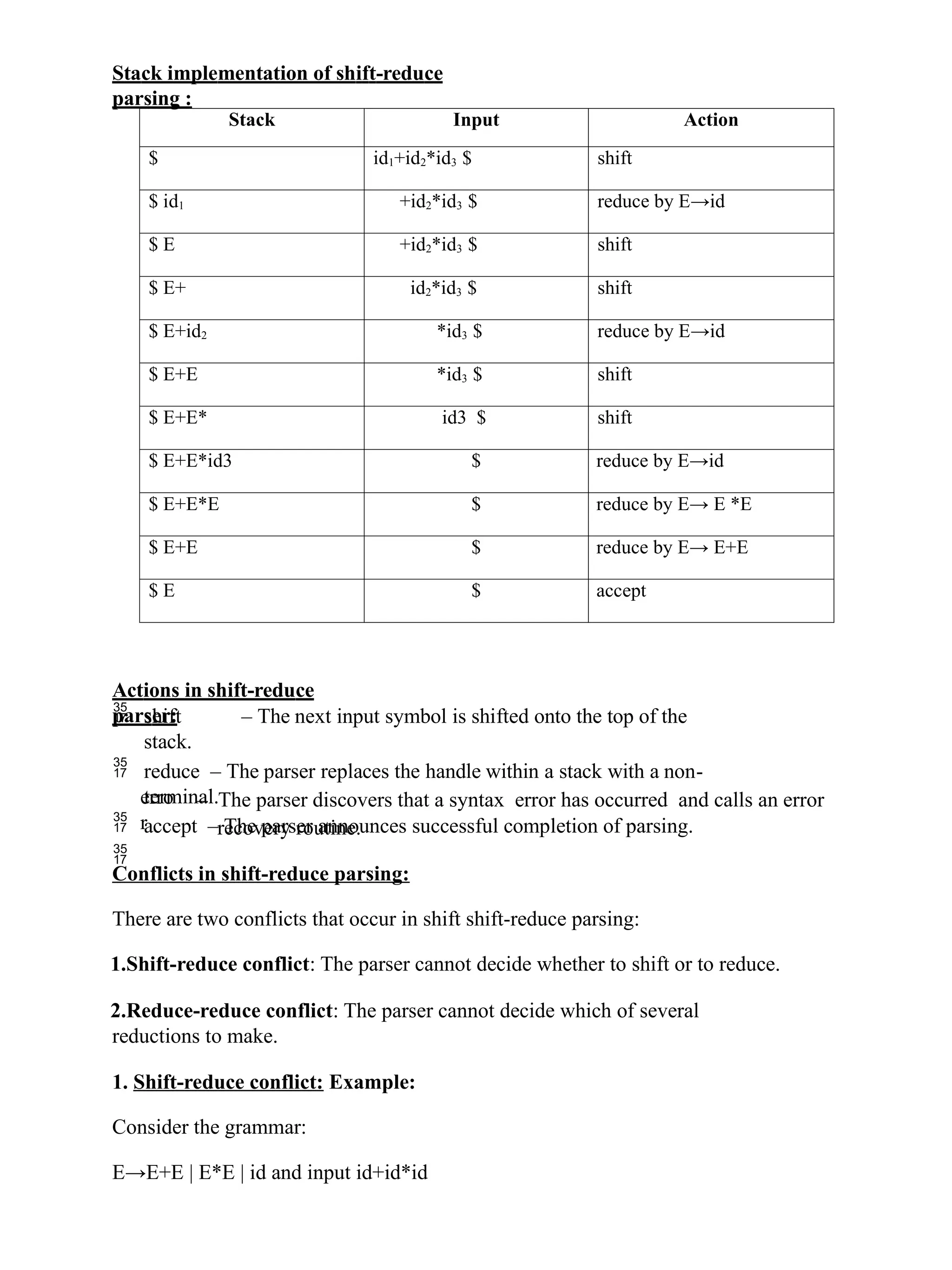

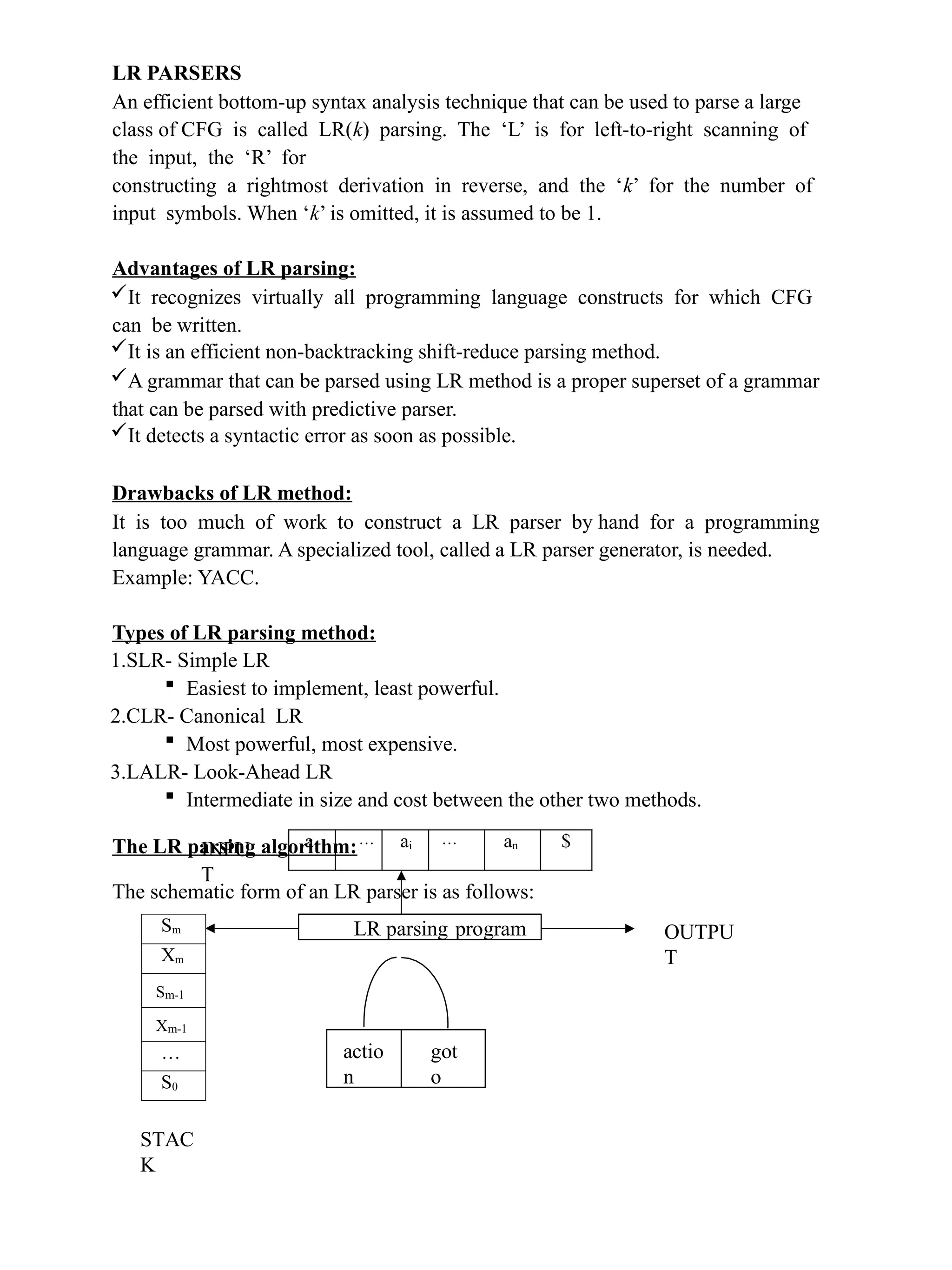

![It consists of : an input, an output, a stack, a driver program, and a parsing table that has two parts (action and goto). The driver program is the same for all LR parser. The parsing program reads characters from an input buffer one at a time. The program uses a stack to store a string of the form s0X1s1X2s2…Xmsm, where sm is on top. Each Xi is a grammar symbol and each si is a state. The parsing table consists of two parts : action and goto functions. Action : The parsing program determines sm, the state currently on top of stack, and ai, the current input symbol. It then consults action[sm,ai] in the action table which can have one of four values : 1.shift s, where s is a state, 2.reduce by a grammar production A → β, 3.accept, and 4.error. Goto : The function goto takes a state and grammar symbol as arguments and produces a state. LR Parsing algorithm: Input: An input string w and an LR parsing table with functions action and goto for grammar G. Output: If w is in L(G), a bottom-up-parse for w; otherwise, an error indication. Method: Initially, the parser has s0 on its stack, where s0 is the initial state, and w$ in the input buffer. The parser then executes the following program : set ip to point to the first input symbol of w$; repeat forever begin let s be the state on top of the stack and a the symbol pointed to by ip; if action[s, a] = shift s’ then begin push a then s’ on top of the stack; advance ip to the next input symbol end else if action[s, a] = reduce A→β then begin pop 2* | β | symbols off the stack; let s’ be the state now on top of the stack; push A then goto[s’, A] on top of the stack; output the production A→ β end else if action[s, a] = accept then return else error( ) end](https://image.slidesharecdn.com/cdunit2-241120091923-d1979eed/75/Parsing-techniques-notations-methods-of-parsing-in-compiler-design-9-2048.jpg)

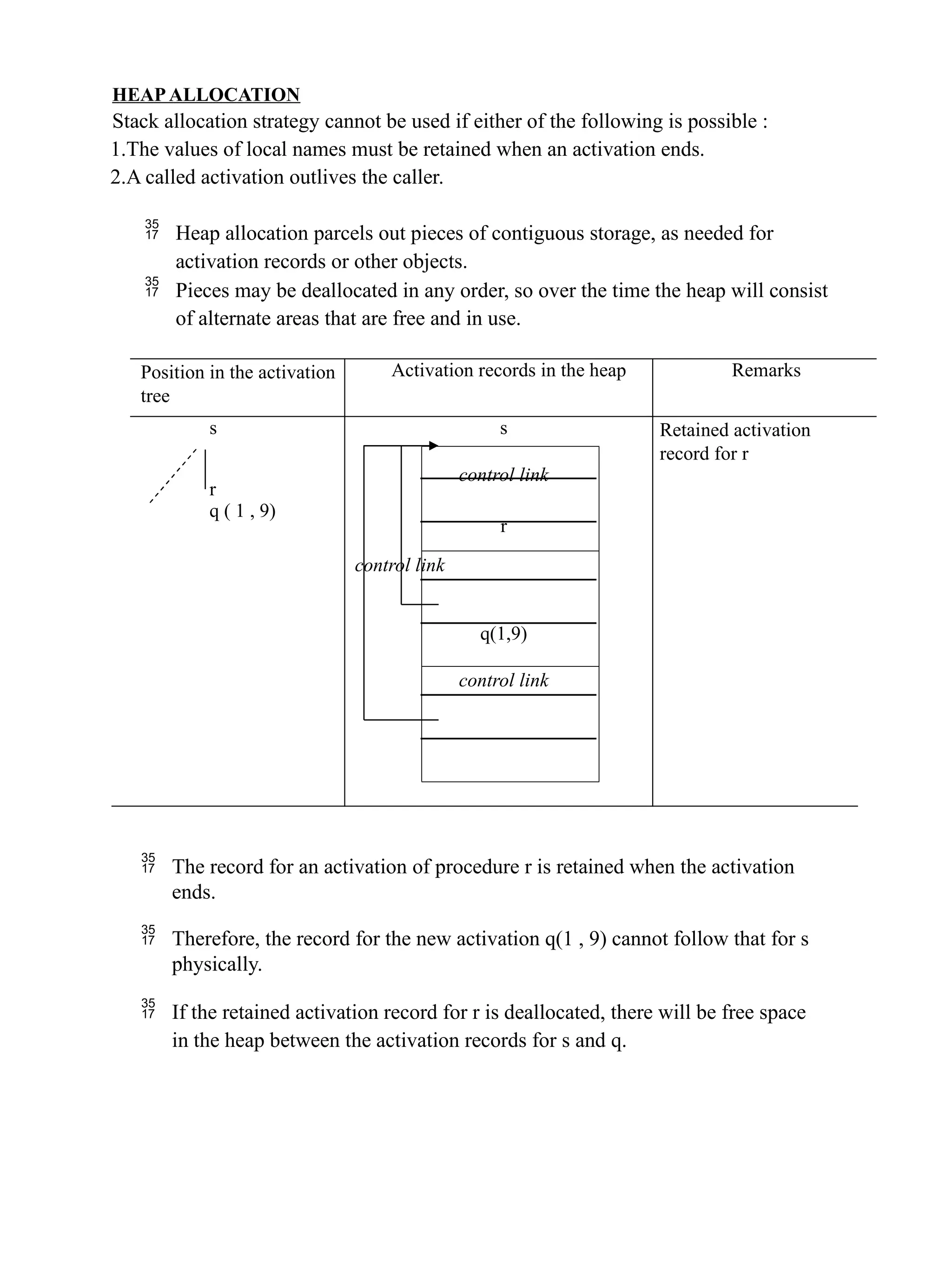

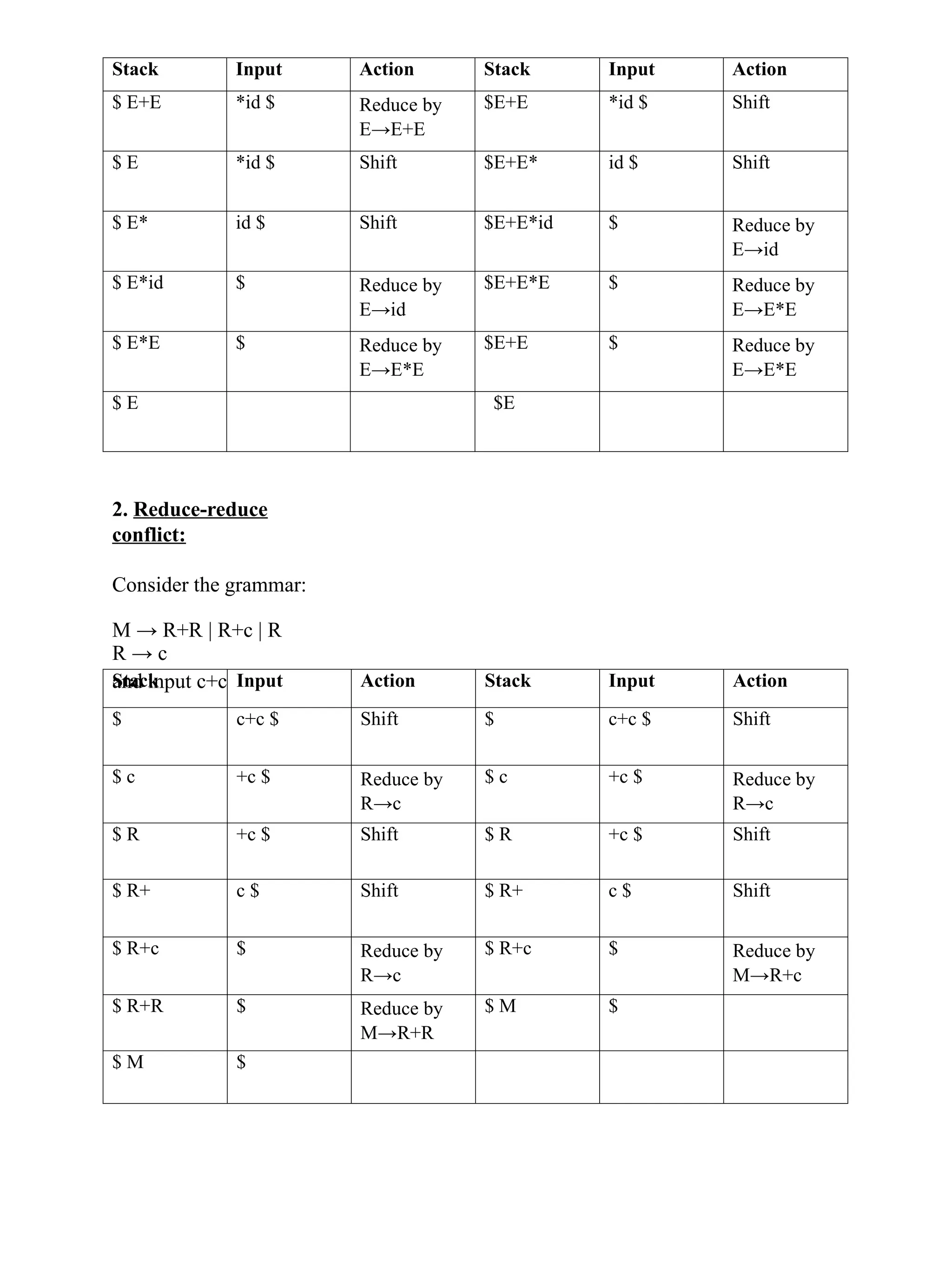

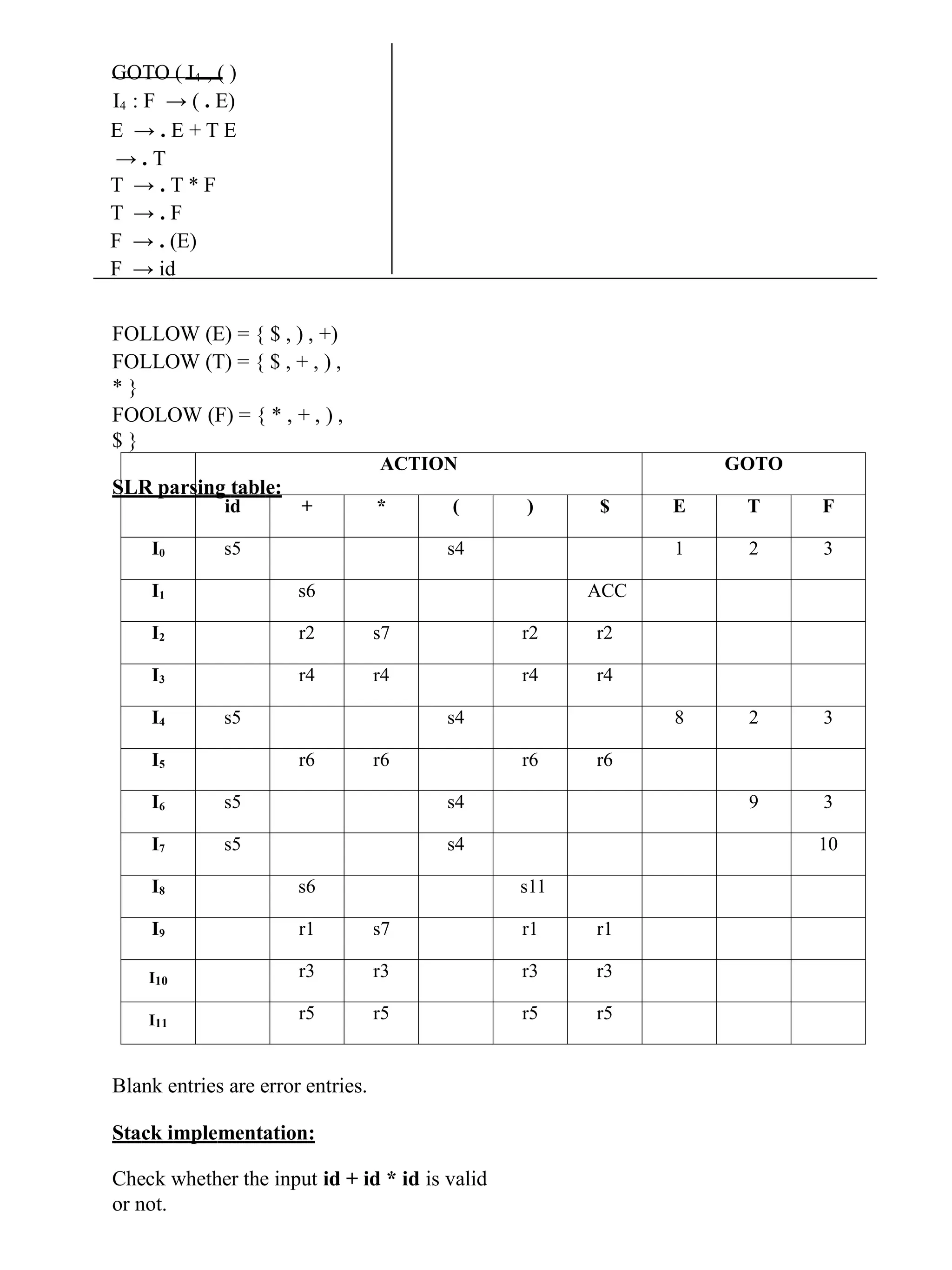

![CONSTRUCTING SLR(1) PARSING TABLE: To perform SLR parsing, take grammar as input and do the following: 1.Find LR(0) items. 2.Completing the closure. 3.Compute goto(I,X), where, I is set of items and X is grammar symbol. LR(0) items: An LR(0) item of a grammar G is a production of G with a dot at some position of the right side. For example, production A → XYZ yields the four items : A → . XYZ A → X . YZ A → XY . Z A → XYZ . Closure operation: If I is a set of items for a grammar G, then closure(I) is the set of items constructed from I by the two rules: 4.Initially, every item in I is added to closure(I). 5.If A → α . Bβ is in closure(I) and B → γ is a production, then add the item B → . γ to I , if it is not already there. We apply this rule until no more new items can be added to closure(I). Goto operation: Goto(I, X) is defined to be the closure of the set of all items [A→ αX . β] such that [A→ α . Xβ] is in I. Steps to construct SLR parsing table for grammar G are: 1. Augment G and produce G’ 2. Construct the canonical collection of set of items C for G’ 3. Construct the parsing action function action and goto using the following algorithm that requires FOLLOW(A) for each non-terminal of grammar. Algorithm for construction of SLR parsing table: Input : An augmented grammar G’ Output : The SLR parsing table functions action and goto for G’ Method : 6.Construct C = {I0, I1, …. In}, the collection of sets of LR(0) items for G’. 7.State i is constructed from Ii.. The parsing functions for state i are determined as follows: (a) If [A→α∙aβ] is in Ii and goto(Ii,a) = Ij, then set action[i,a] to “shift j”. Here a must be terminal. (b) If [A→α∙] is in Ii , then set action[i,a] to “reduce A→α” for all a in FOLLOW(A). (c) If [S’→S.] is in Ii, then set action[i,$] to “accept”.](https://image.slidesharecdn.com/cdunit2-241120091923-d1979eed/75/Parsing-techniques-notations-methods-of-parsing-in-compiler-design-10-2048.jpg)

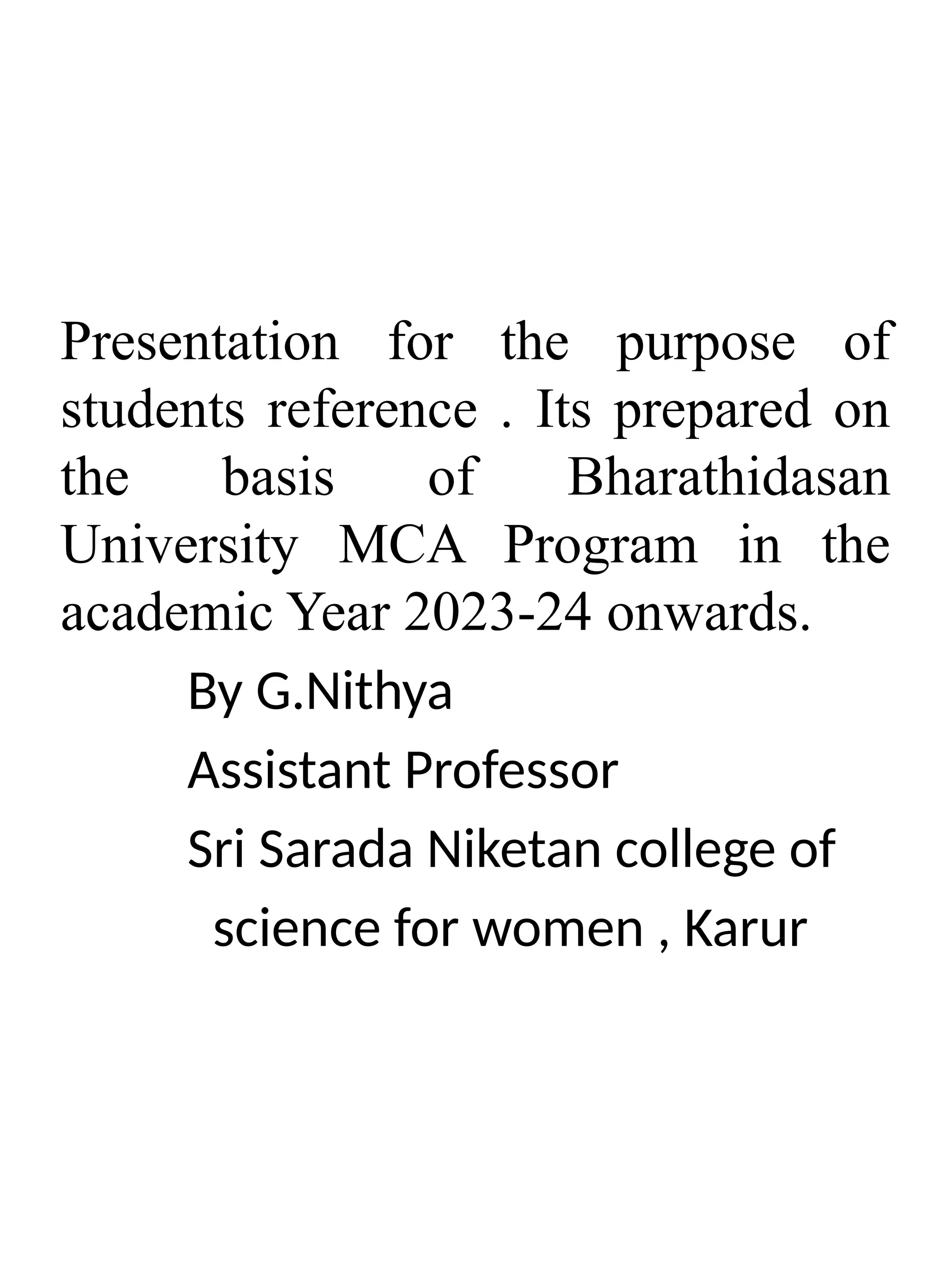

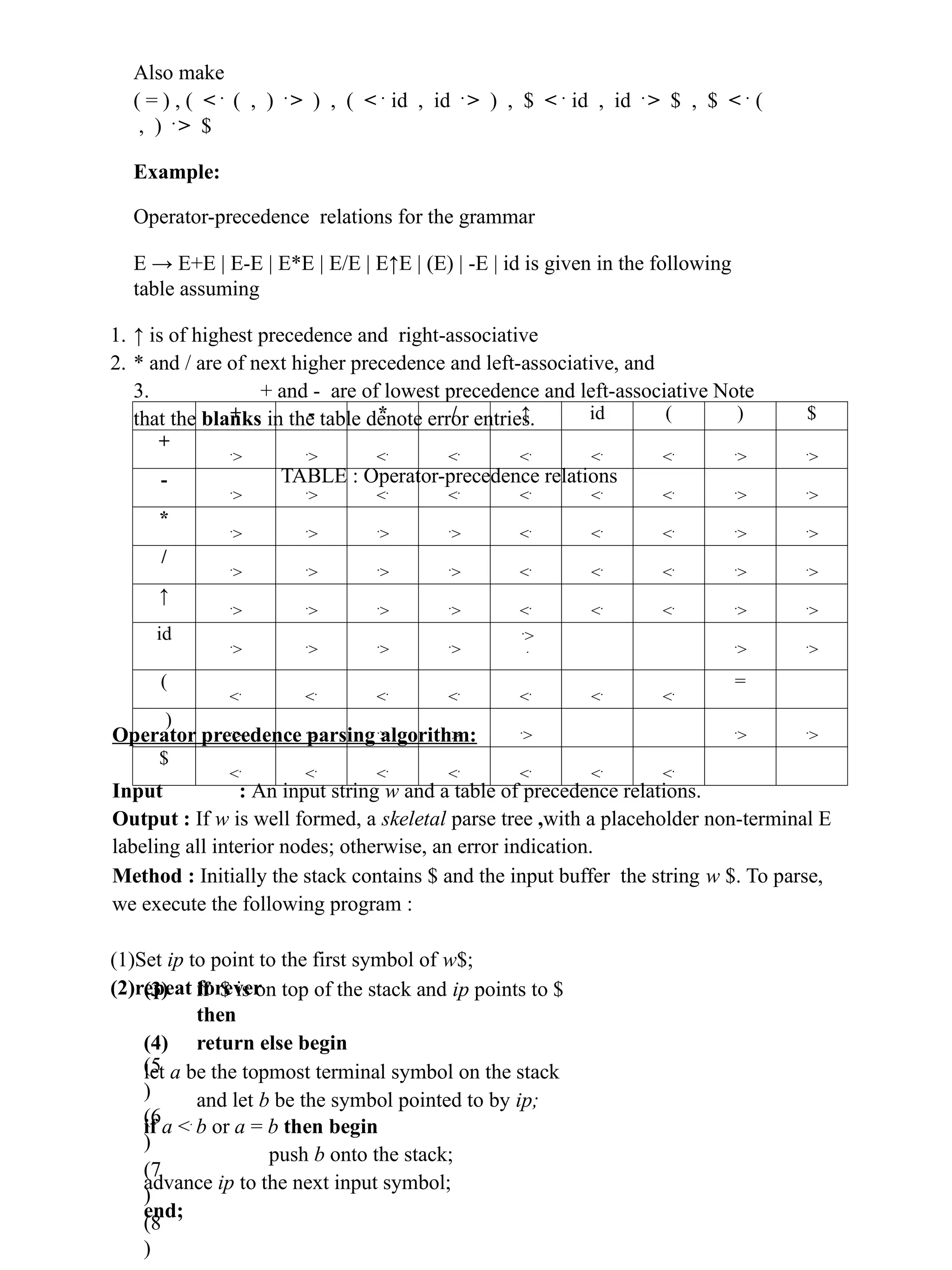

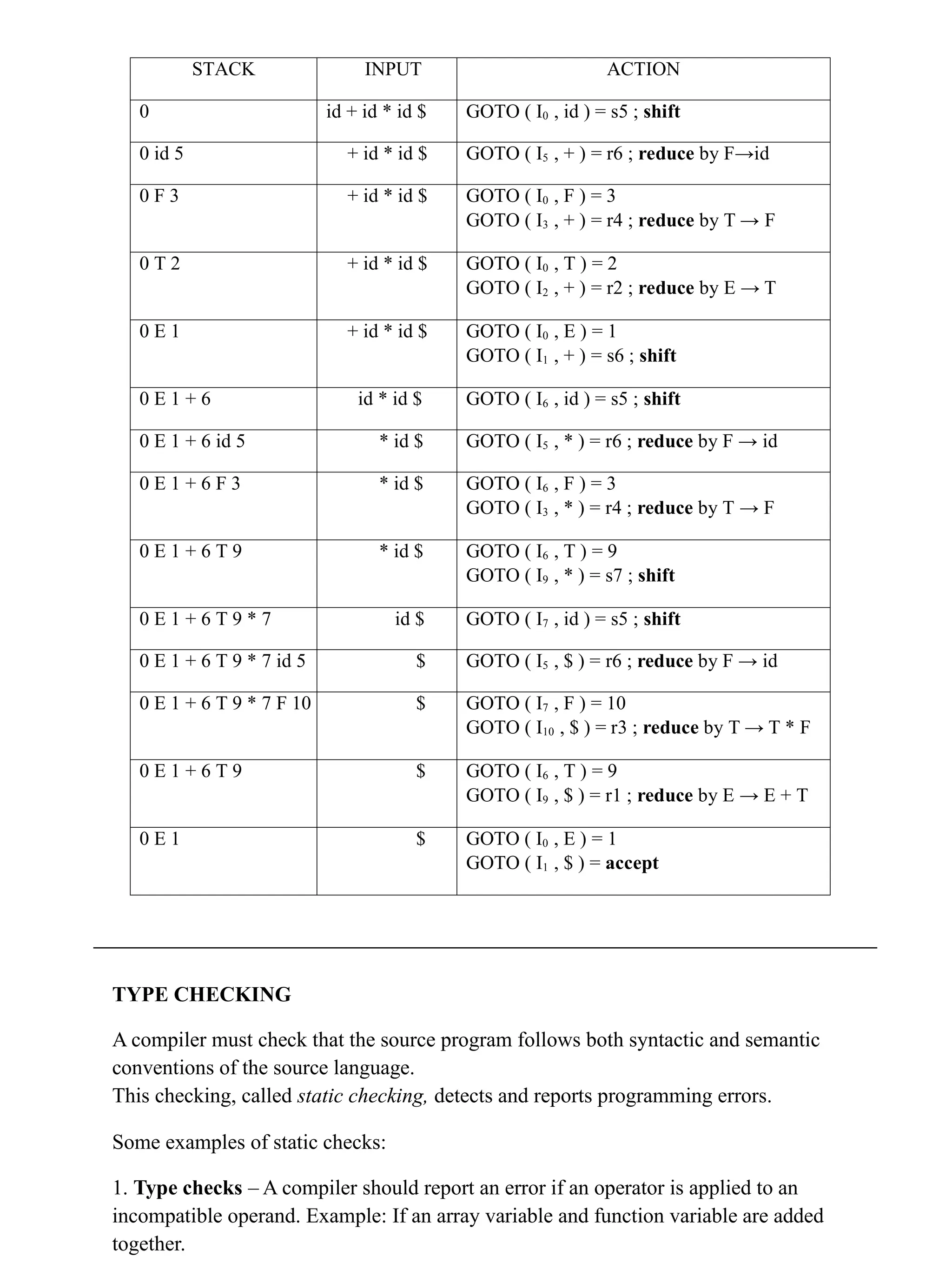

![3. The goto transitions for state i are constructed for all non-terminals A using the rule: If goto(Ii,A) = Ij, then goto[i,A] = j. 4. All entries not defined by rules (2) and (3) are made “error” 5. The initial state of the parser is the one constructed from the set of items containing [S’→.S]. Example for SLR parsing: Construct SLR parsing for the following grammar : G : E → E + T | T T → T * F | F F → (E) | id The given grammar is : Step 1 : Convert given grammar into augmented grammar. Augmented grammar : E’ → E E → E + T E → T T → T * F T → F F → (E) F → id Step 2 : Find LR (0) items. I0 : E’ → . E E → . E + T E → . T T → . T * F T → . F F → . (E) F → . id GOTO ( I0 , E) GOTO ( I4 , id ) I1 : E’ → E . E → E . + T I5 : F → id . G : E → E + T ------ (1) E →T ------ (2) T → T * F ------ (3) T → F ------ (4) F → (E) ------ (5) F → id ------ (6)](https://image.slidesharecdn.com/cdunit2-241120091923-d1979eed/75/Parsing-techniques-notations-methods-of-parsing-in-compiler-design-11-2048.jpg)

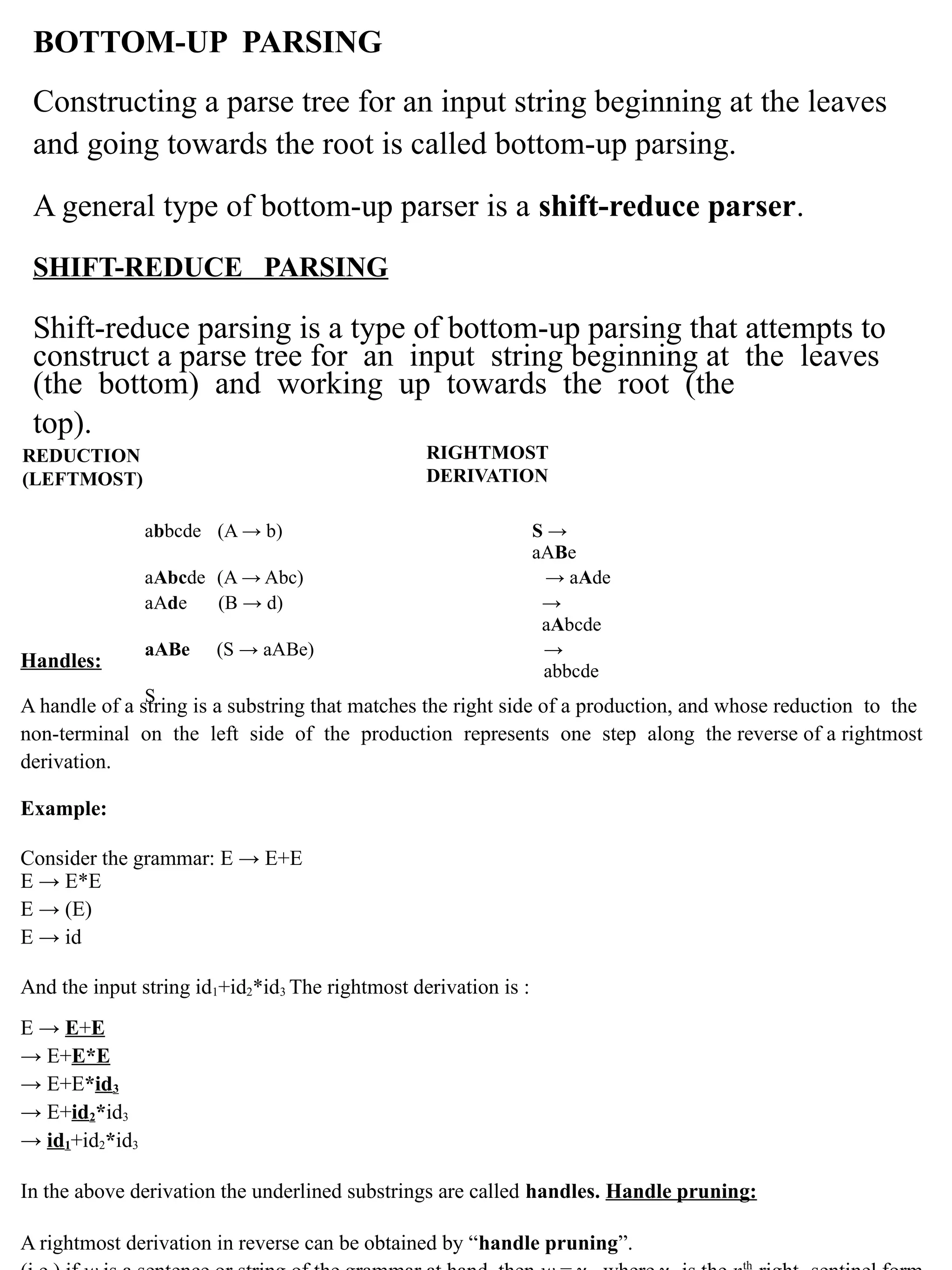

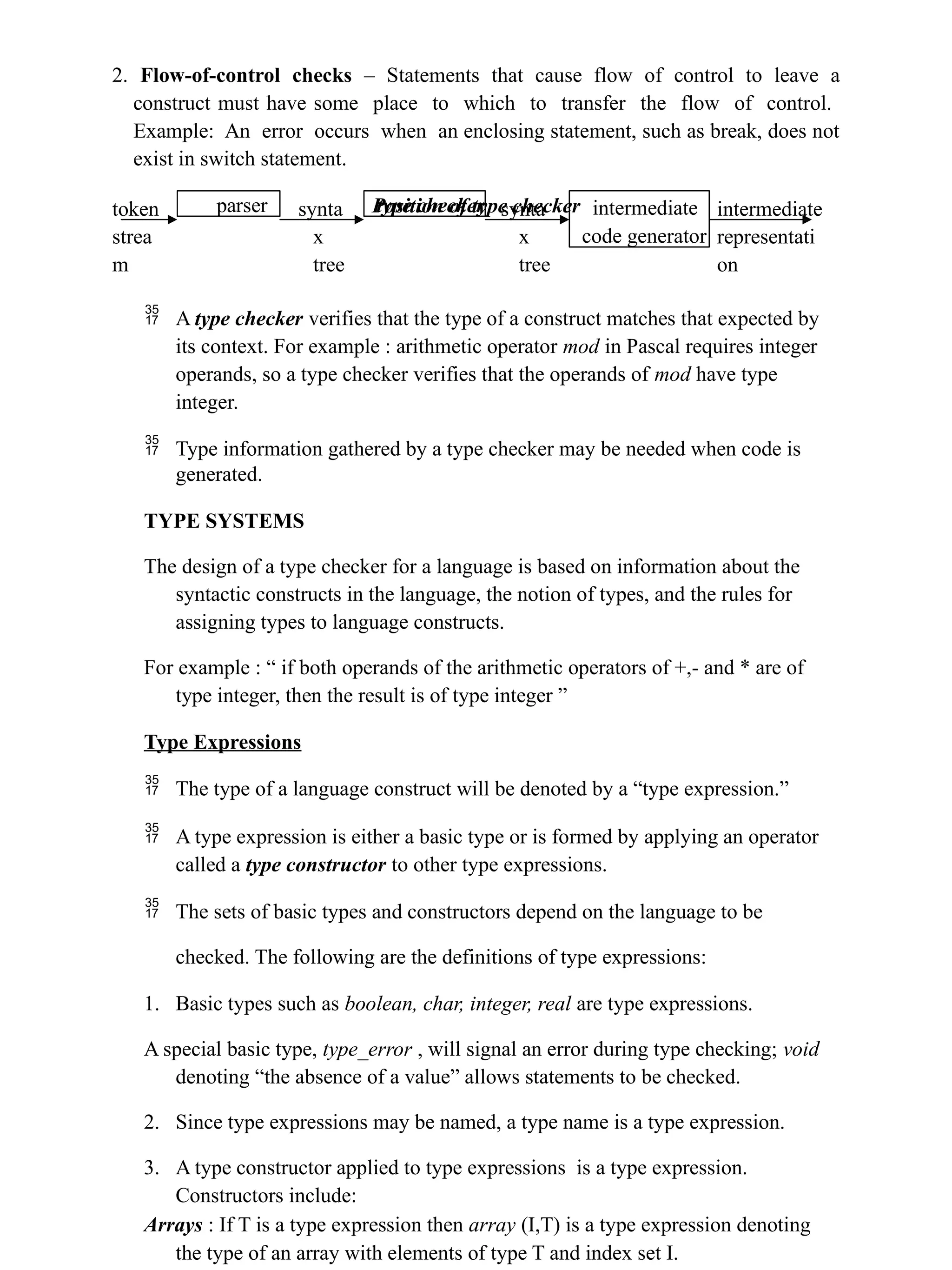

![Records : The difference between a record and a product is that the fields of a record have names. The record type constructor will be applied to a tuple formed from field names and field types. For example: type row = record address: integer; lexeme: array[1..15] of char end; var table: array[1...101] of row; declares the type name row representing the type expression record((address X integer) X (lexeme X array(1..15,char))) and the variable table to be an array of records of this type. Pointers : If T is a type expression, then pointer(T) is a type expression denoting the type “pointer to an object of type T”. For example, var p: ↑ row declares variable p to have type pointer(row). Functions : A function in programming languages maps a domain type D to a range type R. The type of such function is denoted by the type expression D → R 4. Type expressions may contain variables whose values are type expressions. Tree representation for char x char → pointer (integer) → x pointe r cha r cha r intege r Type systems A type system is a collection of rules for assigning type expressions to the various parts of a program. A type checker implements a type system. It is specified in a syntax-directed manner. Different type systems may be used by different compilers or processors of the same language. Static and Dynamic Checking of Types Checking done by a compiler is said to be static, while checking done when the target program runs is termed dynamic. Any check can be done dynamically, if the target code carries the type of an element along with the value of that element.](https://image.slidesharecdn.com/cdunit2-241120091923-d1979eed/75/Parsing-techniques-notations-methods-of-parsing-in-compiler-design-16-2048.jpg)

![Sound type system A sound type system eliminates the need for dynamic checking for type errors because it allows us to determine statically that these errors cannot occur when the target program runs. That is, if a sound type system assigns a type other than type_error to a program part, then type errors cannot occur when the target code for the program part is run. Strongly typed language A language is strongly typed if its compiler can guarantee that the programs it accepts will execute without type errors. Error Recovery Since type checking has the potential for catching errors in program, it is desirable for type checker to recover from errors, so it can check the rest of the input. Error handling has to be designed into the type system right from the start; the type checking rules must be prepared to cope with errors. SPECIFICATION OF A SIMPLE TYPE CHECKER Here, we specify a type checker for a simple language in which the type of each identifier must be declared before the identifier is used. The type checker is a translation scheme that synthesizes the type of each expression from the types of its subexpressions. The type checker can handle arrays, pointers, statements and functions. A Simple Language Consider the following grammar: P → D ; E D → D ; D | id : T T → char | integer | array [ num ] of T | ↑ T E → literal | num | id | E mod E | E [ E ] | E ↑ Translation scheme: P → D ; E D → D ; D D → id : T T → char T → integer T → ↑ T1 { addtype (id.entry , T.type) } { T.type : = char } { T.type : = integer } { T.type : = pointer(T1.type) } T → array [ num ] of T1 { T.type : = array ( 1… num.val , T1.type) } In the above language, → There are two basic types : char and integer ; → type_error is used to signal errors; → the prefix operator ↑ builds a pointer type. Example , ↑ integer leads to the type expression pointer ( integer ).](https://image.slidesharecdn.com/cdunit2-241120091923-d1979eed/75/Parsing-techniques-notations-methods-of-parsing-in-compiler-design-17-2048.jpg)

![Type checking of expressions In the following rules, the attribute type for E gives the type expression assigned to the expression generated by E. 1. E → literal E → num { E.type : = char } { E.type : = integer } Here, constants represented by the tokens literal and num have type char and integer. 2. E → id { E.type : = lookup ( id.entry ) } lookup ( e ) is used to fetch the type saved in the symbol table entry pointed to by e. 3. E → E1 mod E2 { E.type : = if E1. type = integer and E2. type = integer then integer else type_error } The expression formed by applying the mod operator to two subexpressions of type integer has type integer; otherwise, its type is type_error. 4. E → E1 [ E2 ] { E.type : = if E2.type = integer and E1.type = array(s,t) then t else type_error } In an array reference E1 [ E2 ] , the index expression E2 must have type integer. The result is the element type t obtained from the type array(s,t) of E1. 5. E → E1 ↑ { E.type : = if E1.type = pointer (t) then t else type_error } The postfix operator ↑ yields the object pointed to by its operand. The type of E ↑ is the type t of the object pointed to by the pointer E. Type checking of statements Statements do not have values; hence the basic type void can be assigned to them. If an error is detected within a statement, then type_error is assigned. Translation scheme for checking the type of statements: 1. Assignment statement: S → id : = E { S.type : = if id.type = E.type then void else type_error } 2. Conditional statement: S → if E then S1 { S.type : = if E.type = boolean then S1.type else type_error } 3. While statement: S → while E do S1 { S.type : = if E.type = boolean then S1.type else type_error }](https://image.slidesharecdn.com/cdunit2-241120091923-d1979eed/75/Parsing-techniques-notations-methods-of-parsing-in-compiler-design-18-2048.jpg)

![4. Sequence of statements: S → S1 ; S2 { S.type : = if S1.type = void and S1.type = void then void else type_error } Type checking of functions The rule for checking the type of a function application is : E → E1 ( E2) { E.type : = if E2.type = s and E1.type = s → t then t else type_error } SOURCE LANGUAGE ISSUES Procedures: A procedure definition is a declaration that associates an identifier with a statement. The identifier is the procedure name, and the statement is the procedure body. For example, the following is the definition of procedure named readarray : procedure readarray; var i : integer; begin for i : = 1 to 9 do read(a[i]) end; When a procedure name appears within an executable statement, the procedure is said to be called at that point. Activation trees: An activation tree is used to depict the way control enters and leaves activations. In an activation tree, 1.Each node represents an activation of a procedure. 2.The root represents the activation of the main program. 3.The node for a is the parent of the node for b if and only if control flows from activation a to b. 4.The node for a is to the left of the node for b if and only if the lifetime of a occurs before the lifetime of b. Control stack: A control stack is used to keep track of live procedure activations. The idea is to push the node for an activation onto the control stack as the activation begins and to pop the node when the activation ends. The contents of the control stack are related to paths to the root of the activation tree. When node n is at the top of control stack, the stack contains](https://image.slidesharecdn.com/cdunit2-241120091923-d1979eed/75/Parsing-techniques-notations-methods-of-parsing-in-compiler-design-19-2048.jpg)