This document summarizes research on parallelizing the graceful graph labeling problem using OpenMP on multi-core processors. It introduces the concepts of parallelization, multi-core architecture, and OpenMP. An algorithm is designed to parallelize graceful labeling by distributing graph vertices across processor cores. Execution time and speedup are measured for graphs of increasing size, showing improved speedup and reduced time with parallelization. Results show consistent performance gains as graph size increases due to better utilization of the multi-core architecture.

![Page 3 Execution time – Time taken to execute the program The factor by which multi-core shows improvement in finding solution is called speed up the overall speedup S is given by the below equation . Speedup, S = Tparallel Tseries Where, Tserial – serial program execution time Tparallel – parallel program execution time No of Vertices Serial Execution Time(Micro sec) Parallel Execution Time(Micro sec) 10*10 0412 0371 50*50 2313 2062 100*100 72501 65210 150*150 18751 16782 200*200 27983 26123 250*250 42516 41710 300*300 66543 61287 350*350 78142 60286 400*400 90421 84371 450*450 111242 107342 Table 1:Execution Time Table 1 shows that for 10*10, there is no much difference in execution time between serial and parallel execution times. However, for 450*450, there is a considerable amount of difference between serial execution time and parallel execution time. When the number of vertices increases, the corresponding difference between serial and parallel execution time also increases. 3. Speedup The speedup is calculated by using the fraction of code parallelized with speed obtained from serial and parallel execution time which is shown in Table 2. Table 2 Speedup 4. CONCLUSIONS In this work, we learned how OpenMp programming techniques are beneficial to multicore systems. We also found out the execution time of both serial and parallel programs. From the vertices 10 to 450, it shows consistent improvement. It is concluded that by parallelization the speedup, and execution time are improved. . 5. REFERENCES [1] Brankovic,&Wanless (2011). Graceful Labelling: State of the Art, Applications and Future Directions. Mathematics in Computer Science, 5(1), 11– 20. doi:10.1007/s11786-011-0073-6 [2] Yamazaki ,Kurzak , Wu , Zounon , &Dongarra (2018). Symmetric Indefinite Linear Solver Using OpenMP Task on Multicore Architectures. IEEE Transactions on Parallel and Distributed Systems, 29(8), No of Vertices Speedup 10*10 1.112 50*50 1.121 100*100 1.123 150*150 1.125 200*200 1.125 250*250 1.123 300*300 1.127 350*350 1.130 400*400 1.134 450*450 1.148](https://image.slidesharecdn.com/ijsred-v3i5p23-200919143251/75/Parallelization-of-Graceful-Labeling-Using-Open-MP-3-2048.jpg)

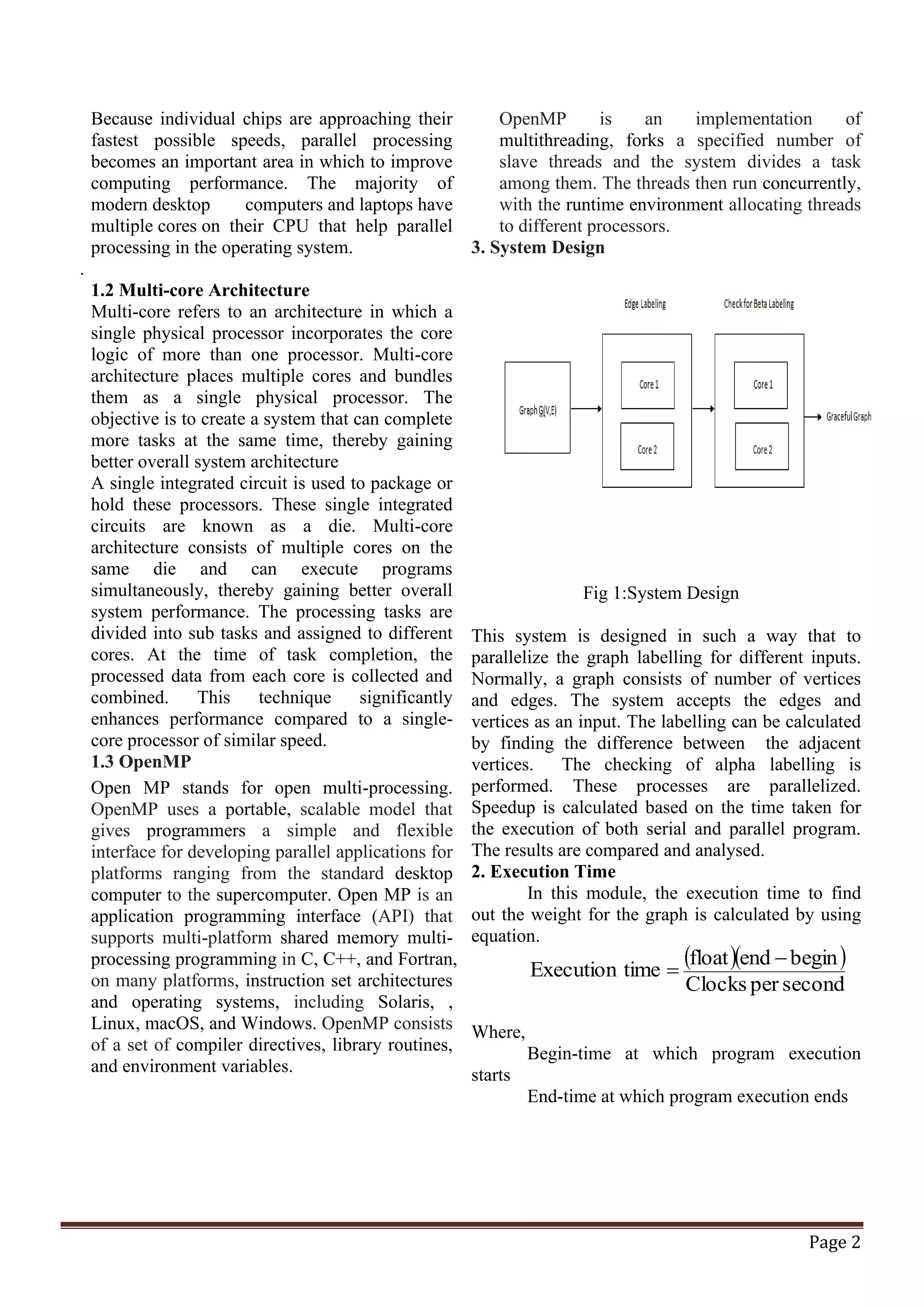

![Page 4 1879– 1892. doi:10.1109/tpds.2018.2808964 [3] Peipei , Tai , &Yuanyuan(2014). The Generation of k-Graceful Figure and Graceful Label.2014 Fourth International Conference on Instrumentation and Measurement, Computer, Communication and Control. doi:10.1109/imccc.2014.94 [4] Zeng K, Tang Y, & Liu F (2011). Parallization of Adaboost Algorithm through Hybrid MPI/OpenMP and Transactional Memory.2011 19th International Euromicro Conference on Parallel, Distributed and Network-Based Processing. doi:10.1109/pdp.2011.97 [5] SheelaKathavate and N.K. Srinath, Efficiency of Parallel Algorithms on Multi Core Systems Using OpenMP, International Journal of Advanced Research in Computer and Communication Engineering Vol. 3, Issue 10, October 2014. [6] ChetanArora, Subhashis Banerjee, PremKalra, and S.N. Maheshwari, An Efficient GraphCut Algorithm for Computer Vision Problems, Department of Computer Science and Engineering, Indian Institute of Technology, New Delhi, India@Springer- Verlag Berlin Heidelberg 2010. [7] P.K.D Sridevi,A.Sindhuja,R.Muthuselvi “Parallelization of maze generation and solving in multicore Using OpenMP” International Conference on Innovations in Engineering and Technology, ICIET 16, 6th and 7th April 2016 conducted by KLN College of Engineering,Madurai. [8] R.Muthuselvi, M.Muneeswari, K.Sudha, V.Vasantha “Parallelization of Graph Labelling Problem in Multicore using OpenMP” International Conference on Trends in Electronics and Informatics ICEI 2017 May 11th and 12th 2017 conducted by SCAD Engineering College, Thirunelveli [9] Rohit Chandra, Leonardo Dagum, Dave Kohr, DrorMaydan, Jeff McDonald, Ramesh Menon ' Parallel Programming in OpenMP' ch.1-6, pp.1-249. [10] J.A. Mac Dougall, M. Miller, Slamin and W. D. Wallis,Vertexmagic total labelling og graphs,Util,Math.,61(2002)3-4. [11] K.A. Hawick, A. Leist and D.P. Playne, Parallel Graph Component Labelling with GPUs and CUDA,International Journal of Engineering and Technology Vol.3,2014. .](https://image.slidesharecdn.com/ijsred-v3i5p23-200919143251/75/Parallelization-of-Graceful-Labeling-Using-Open-MP-4-2048.jpg)