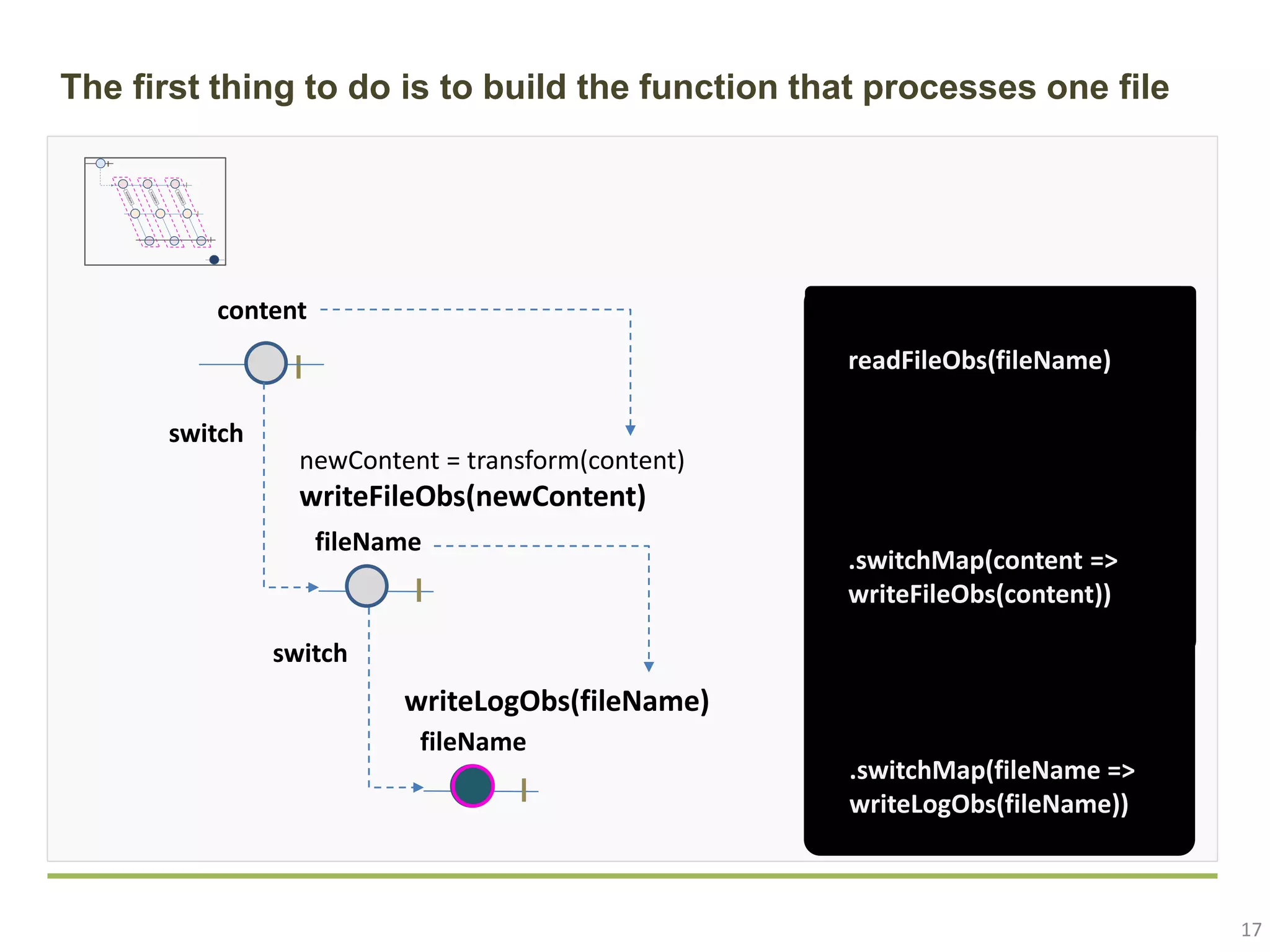

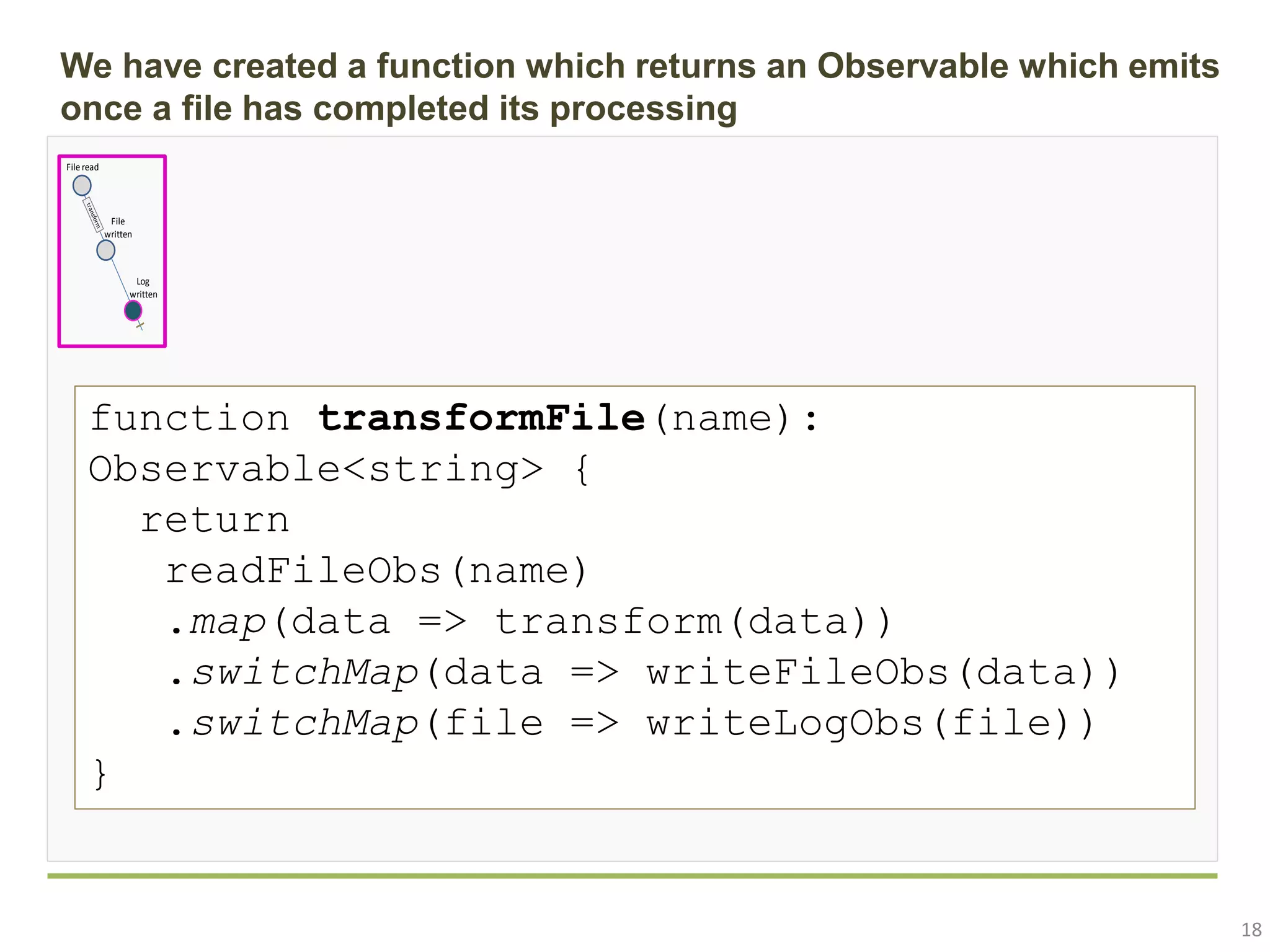

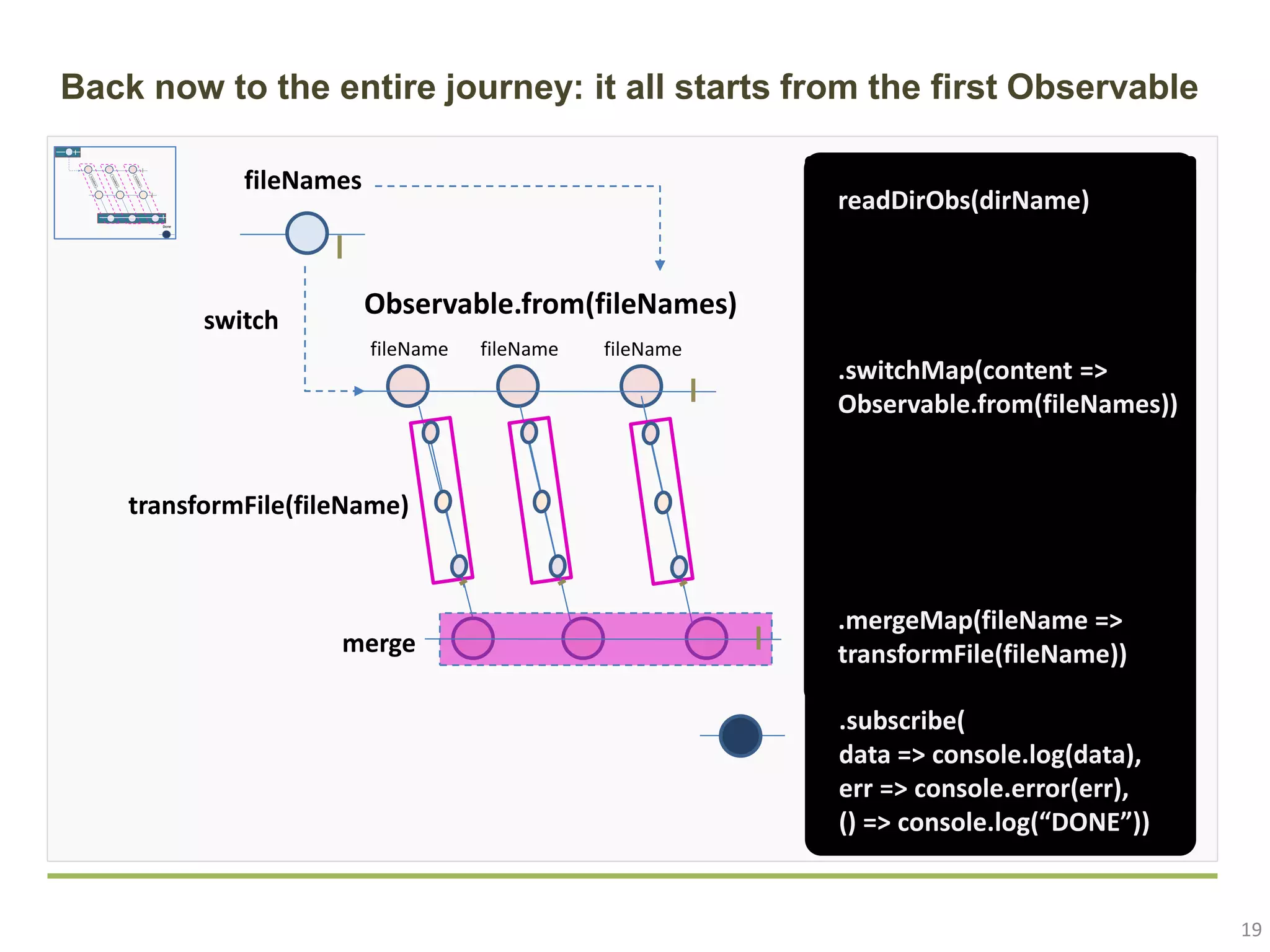

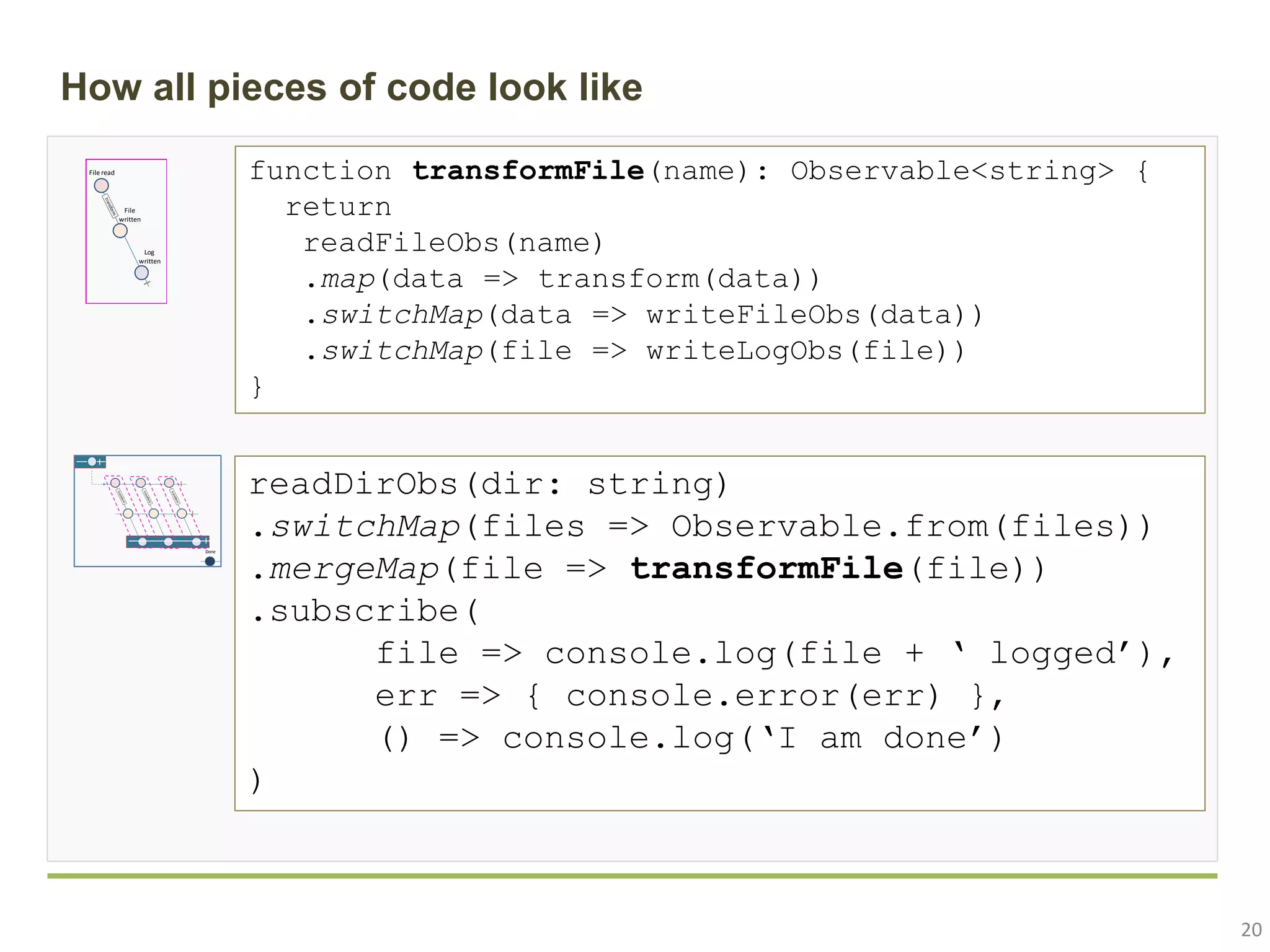

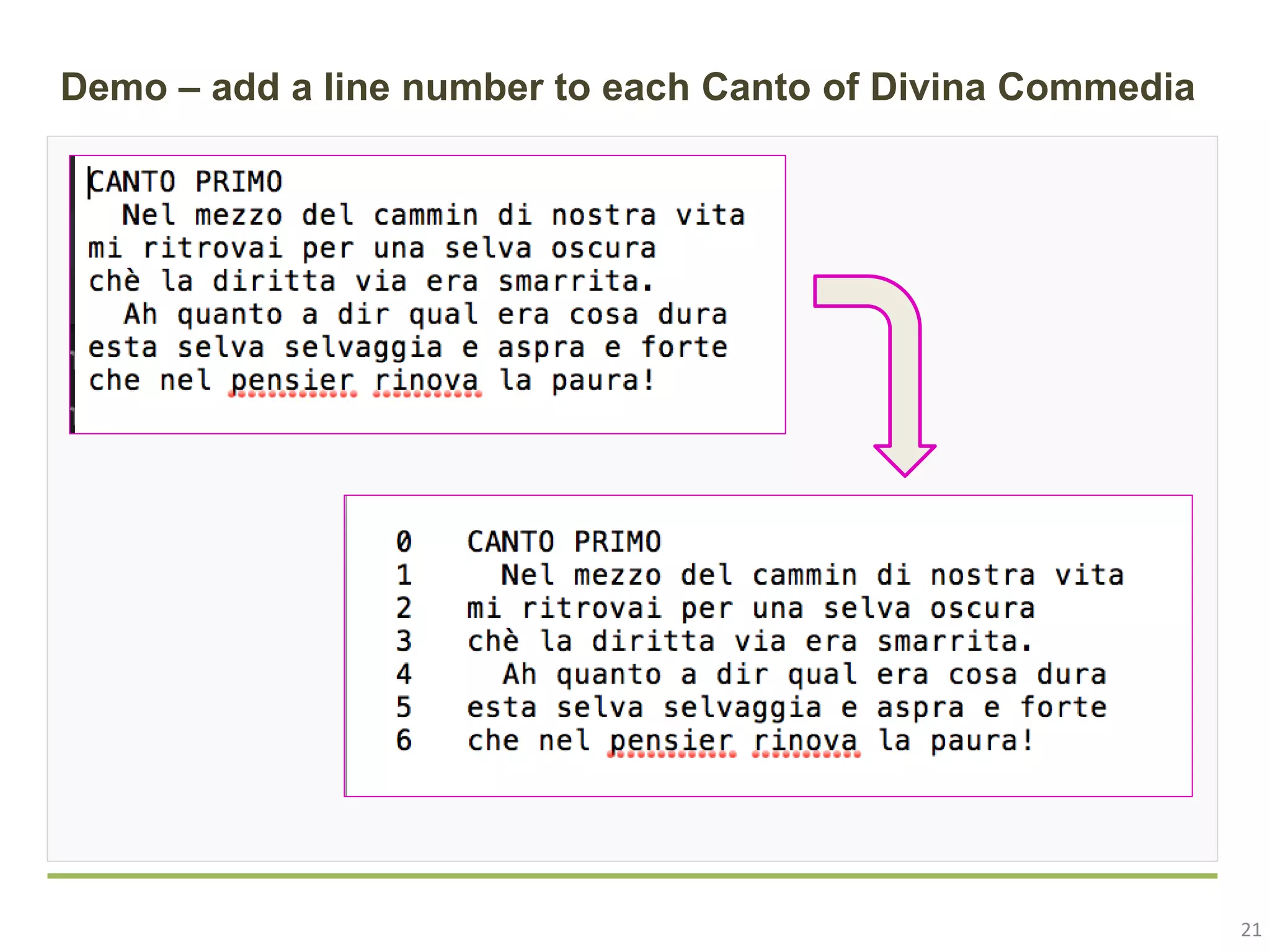

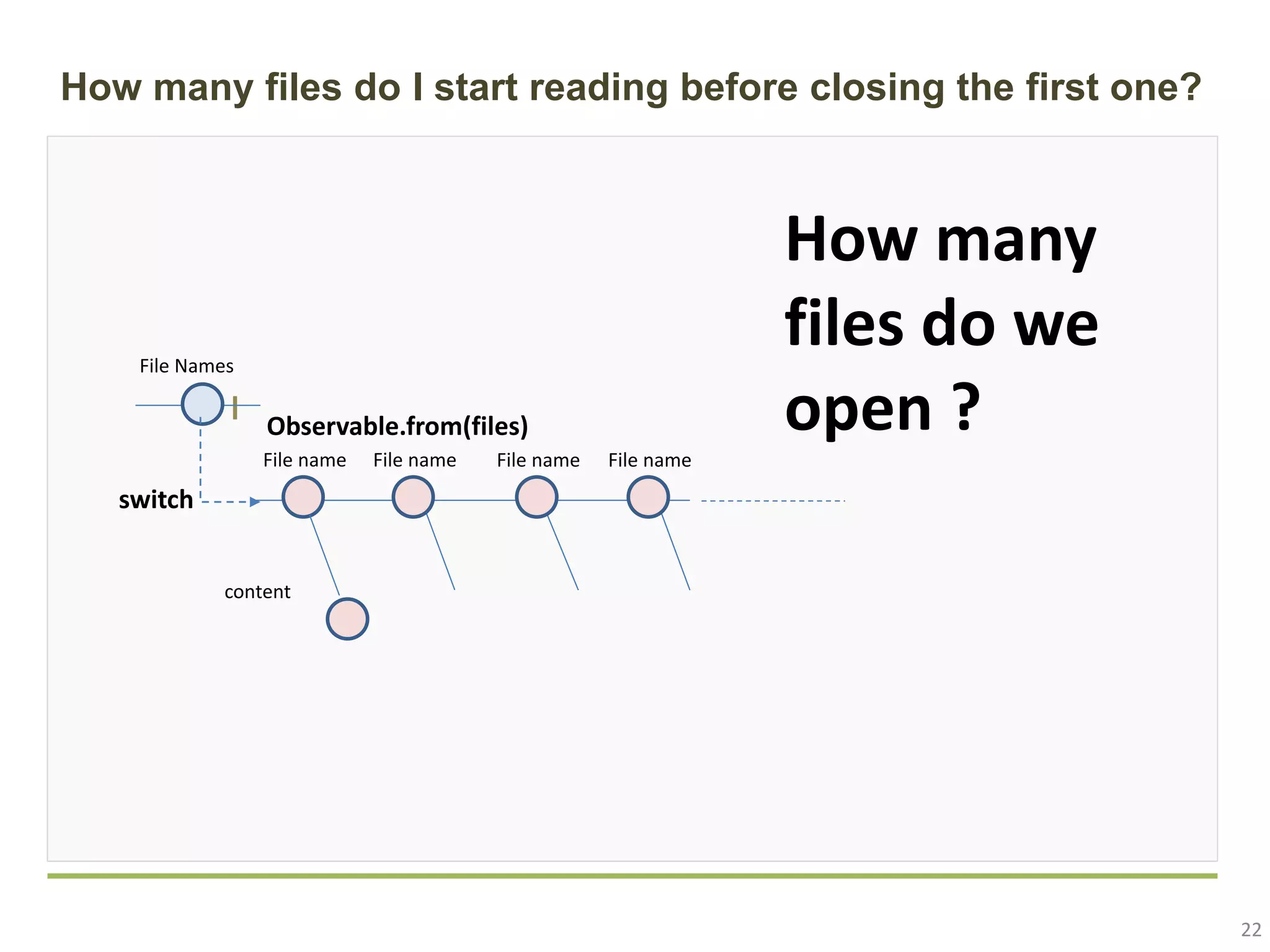

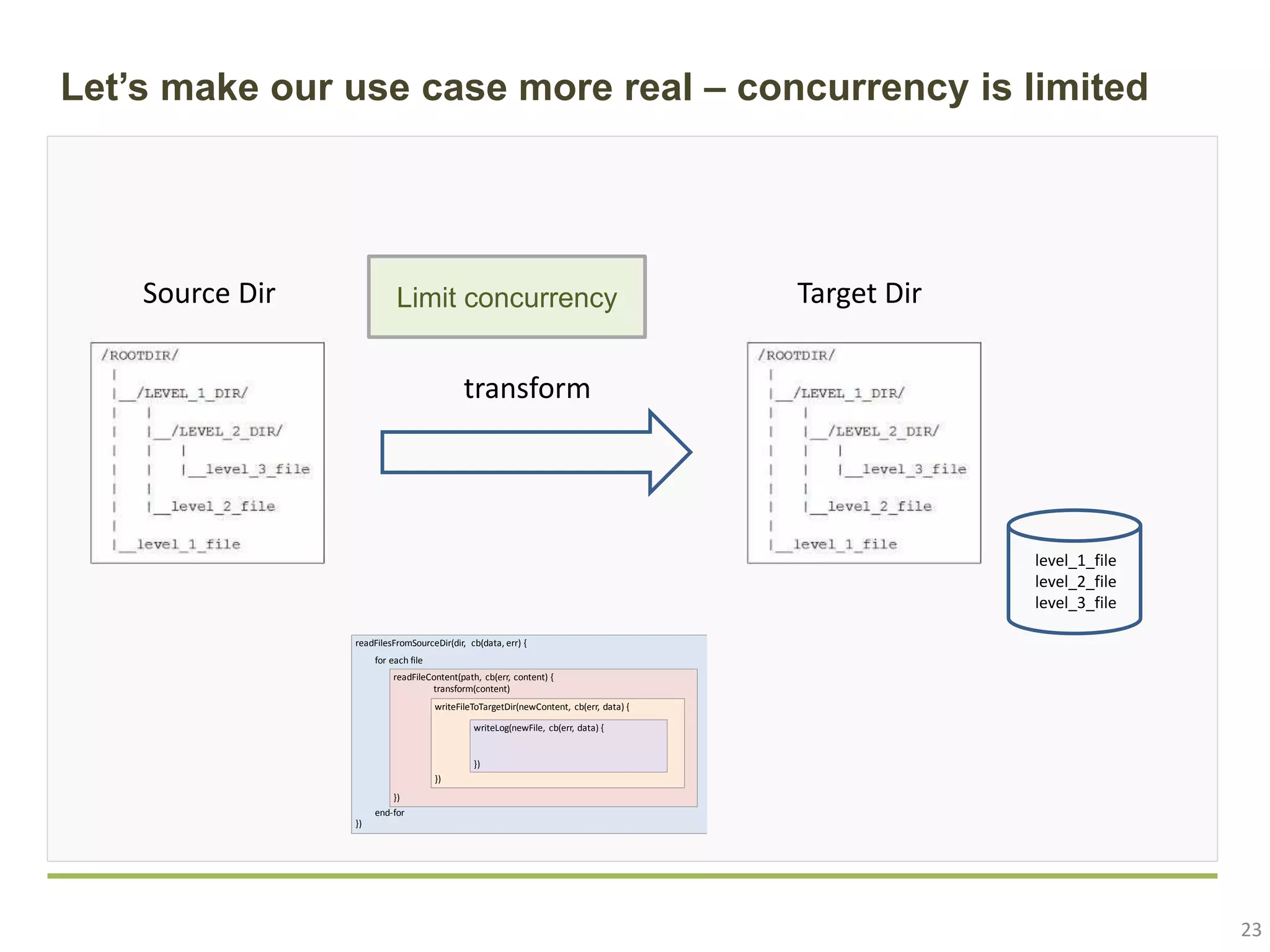

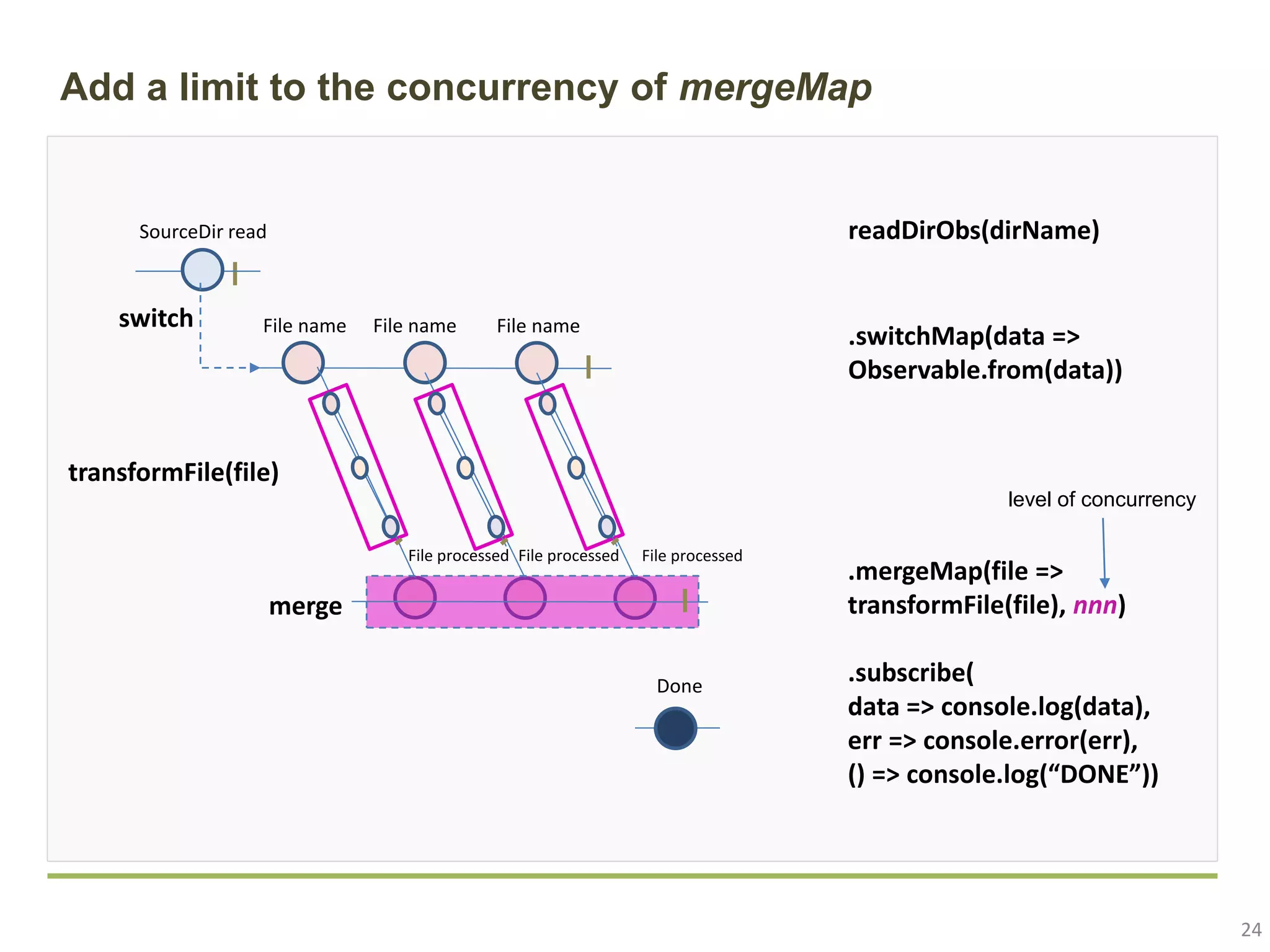

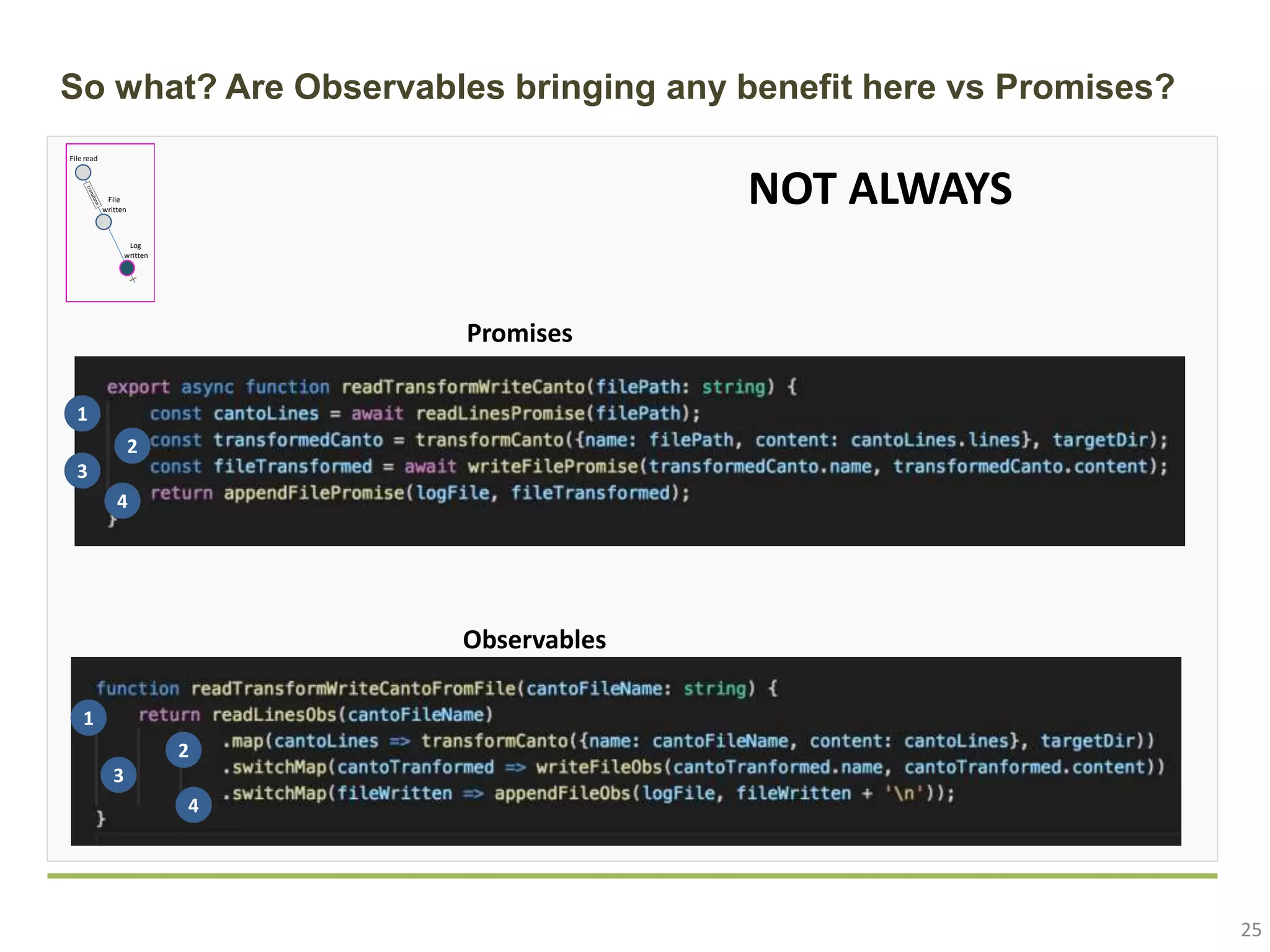

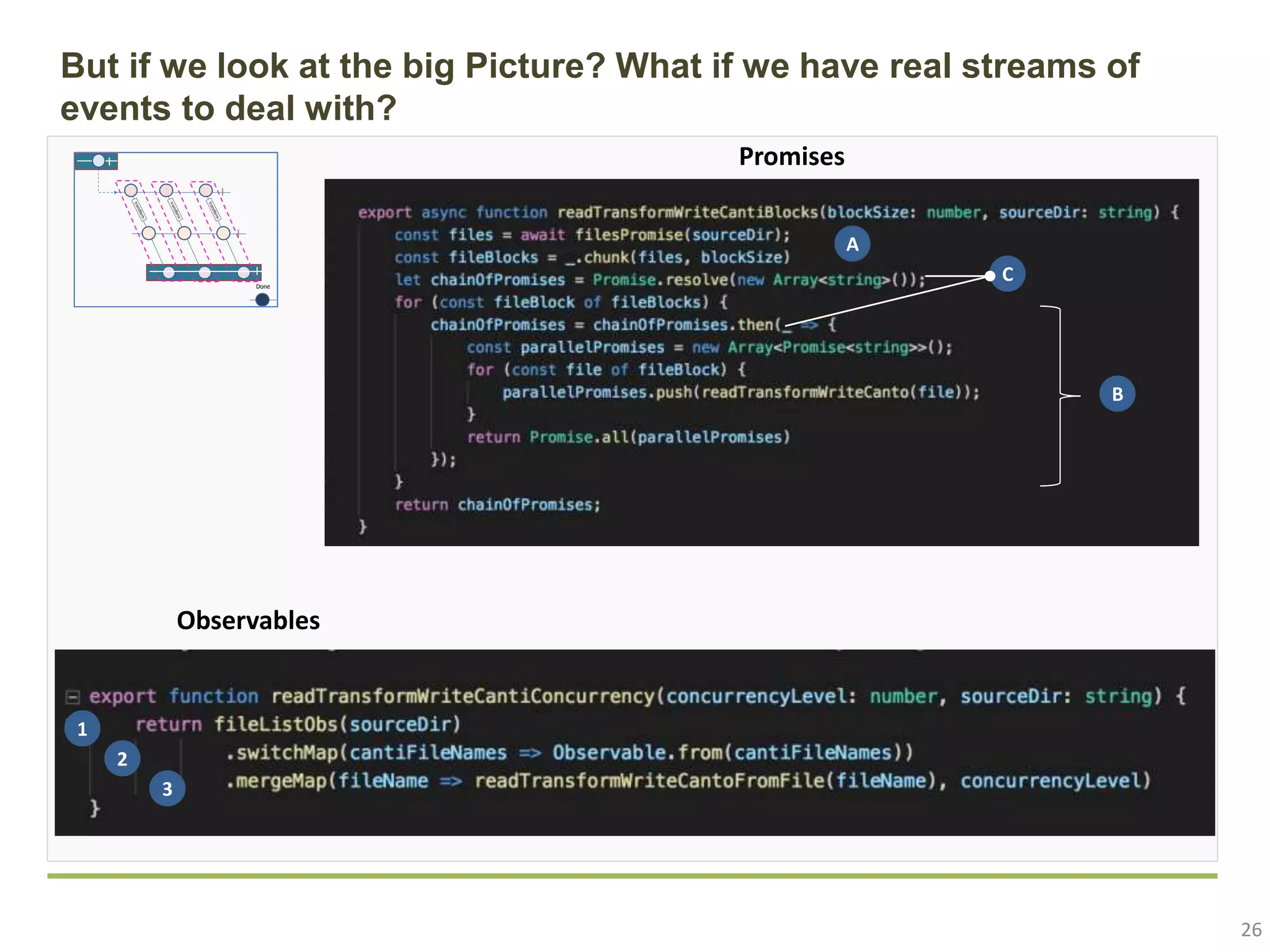

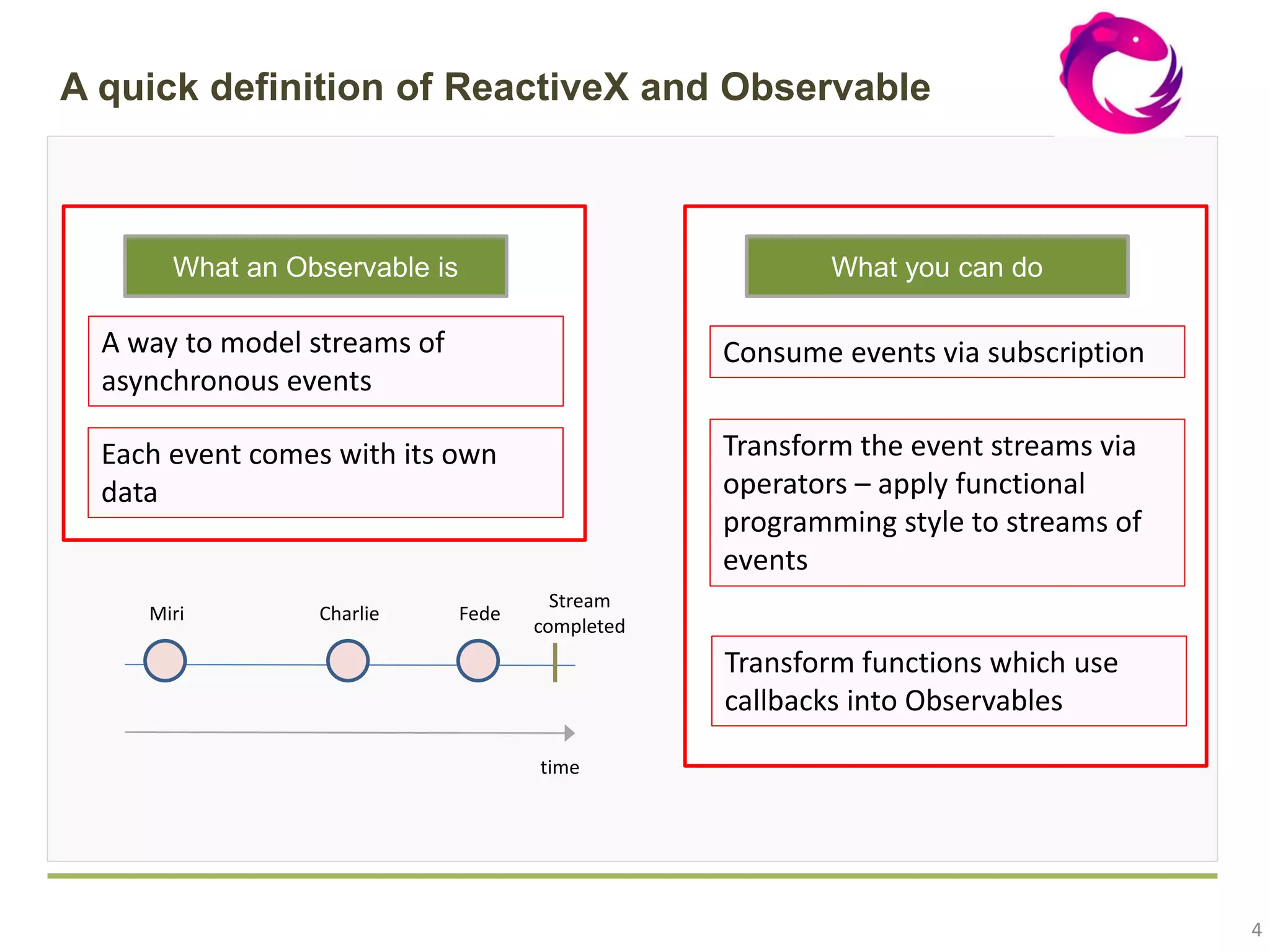

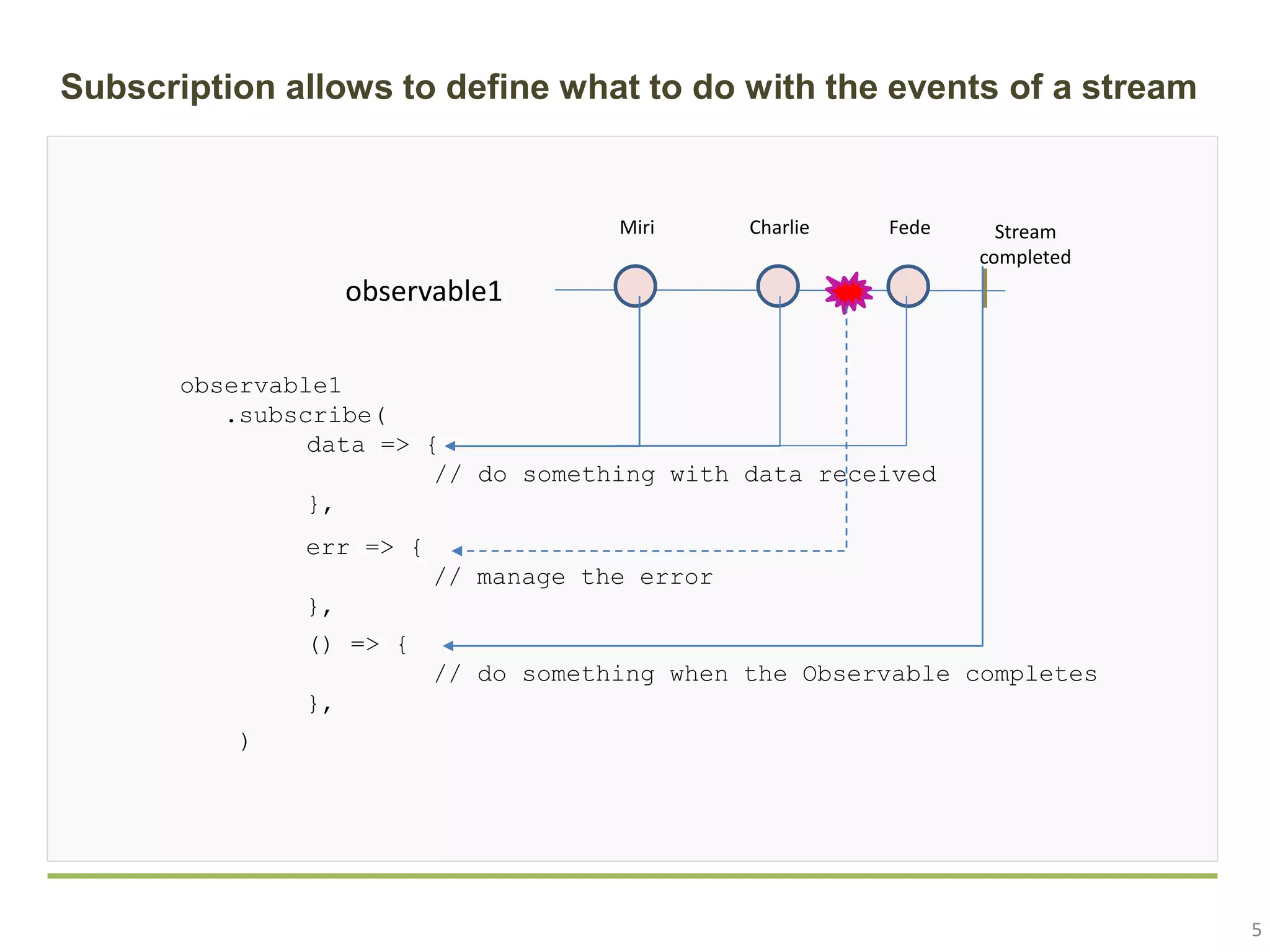

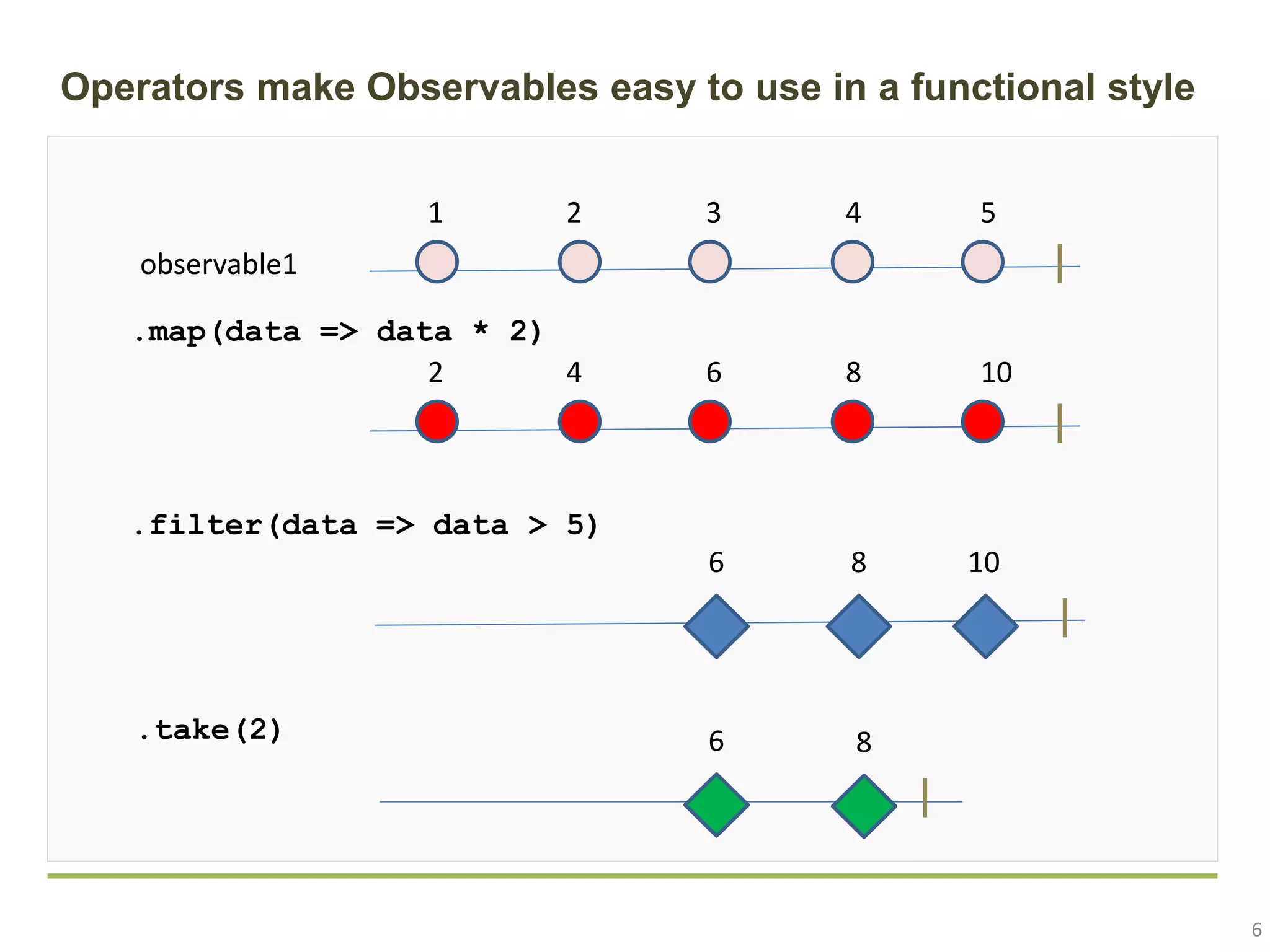

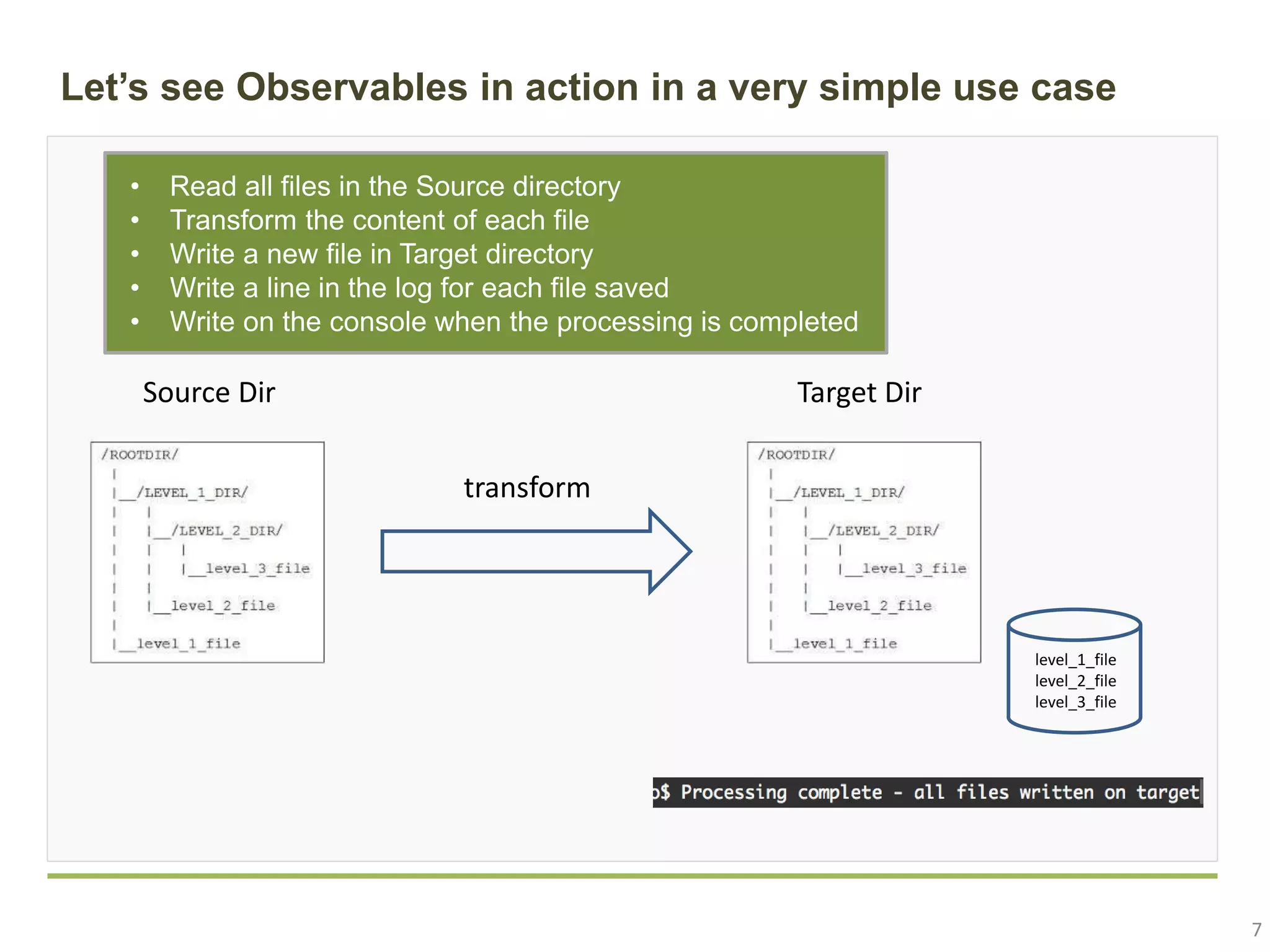

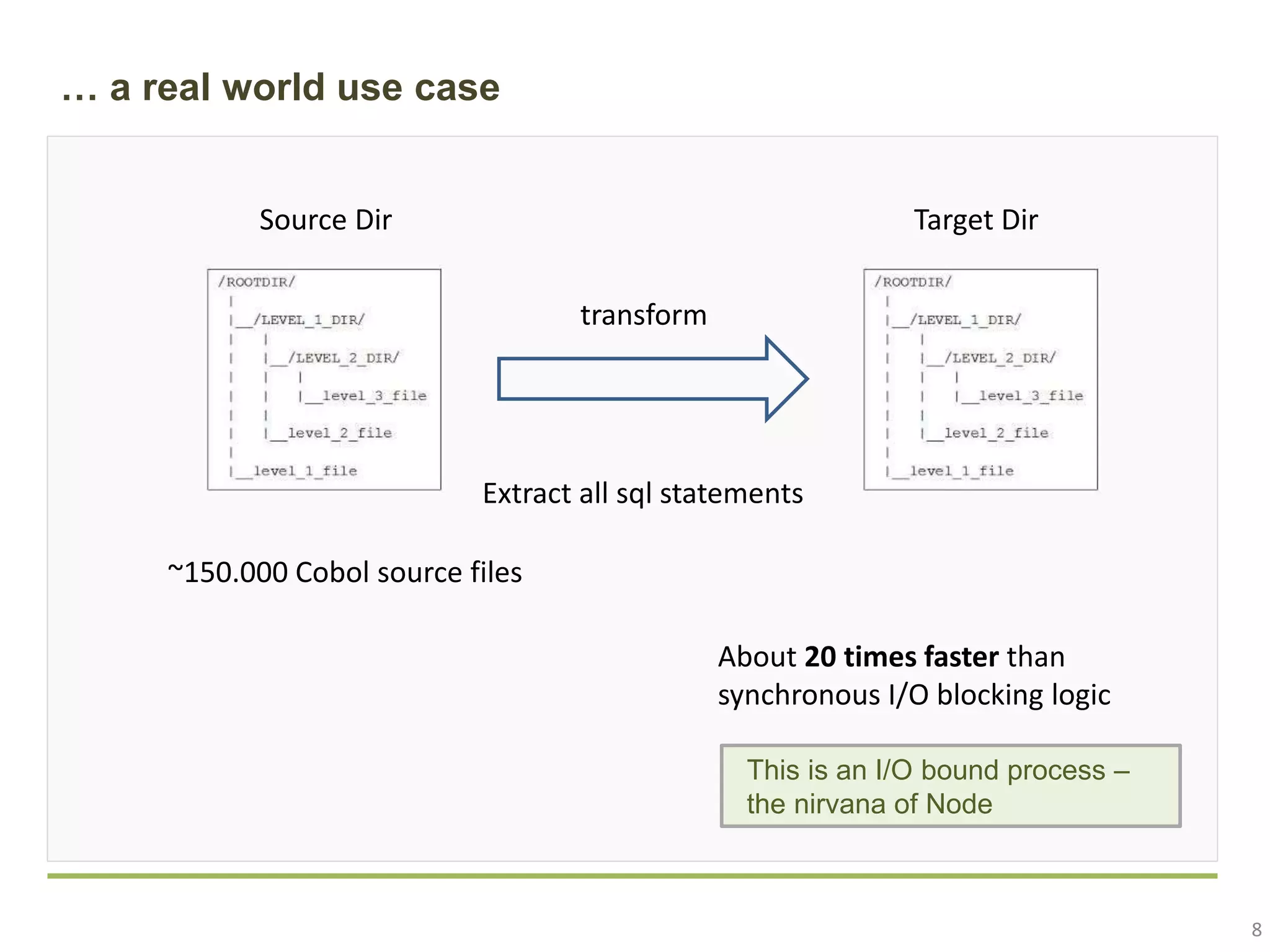

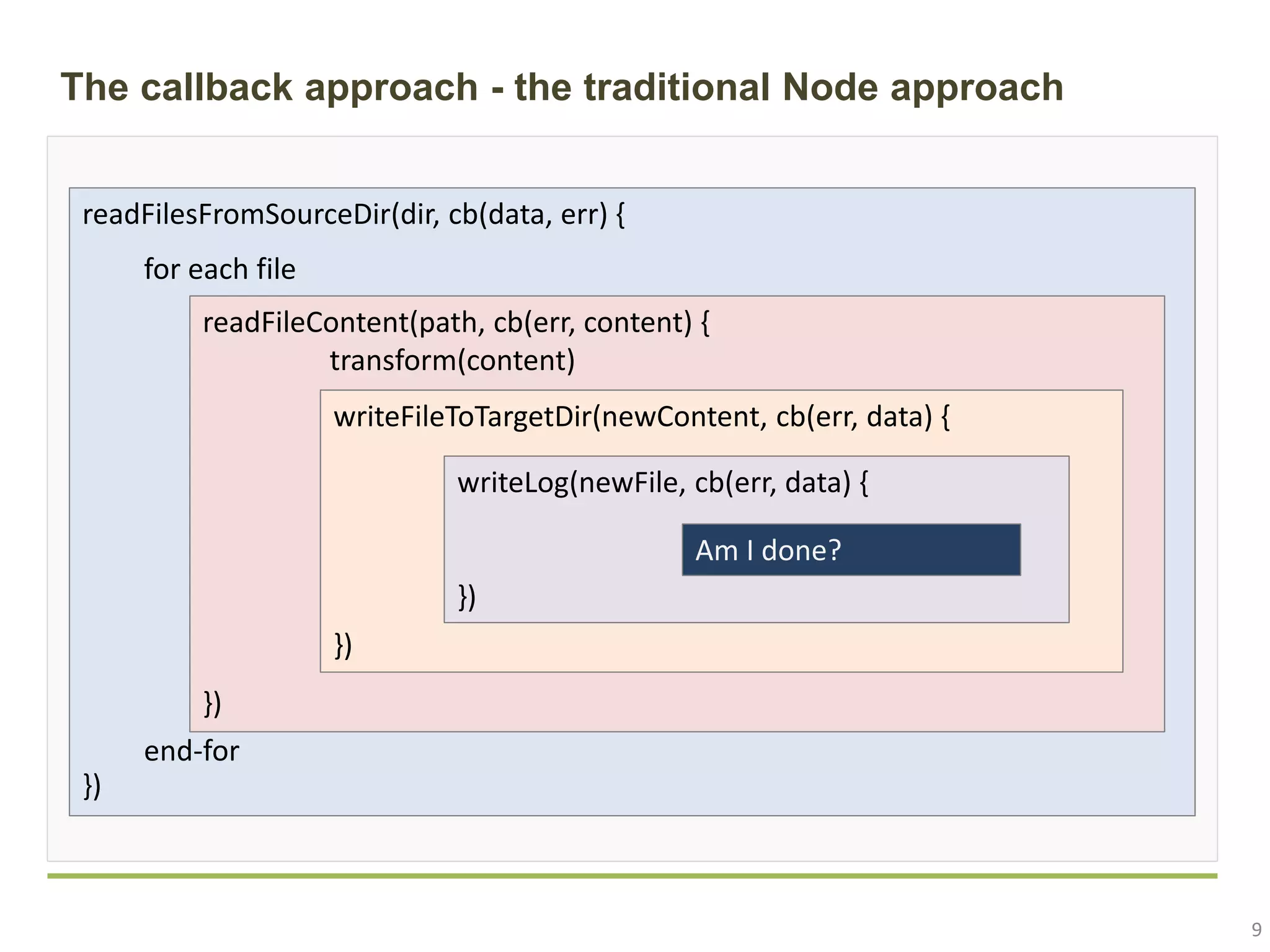

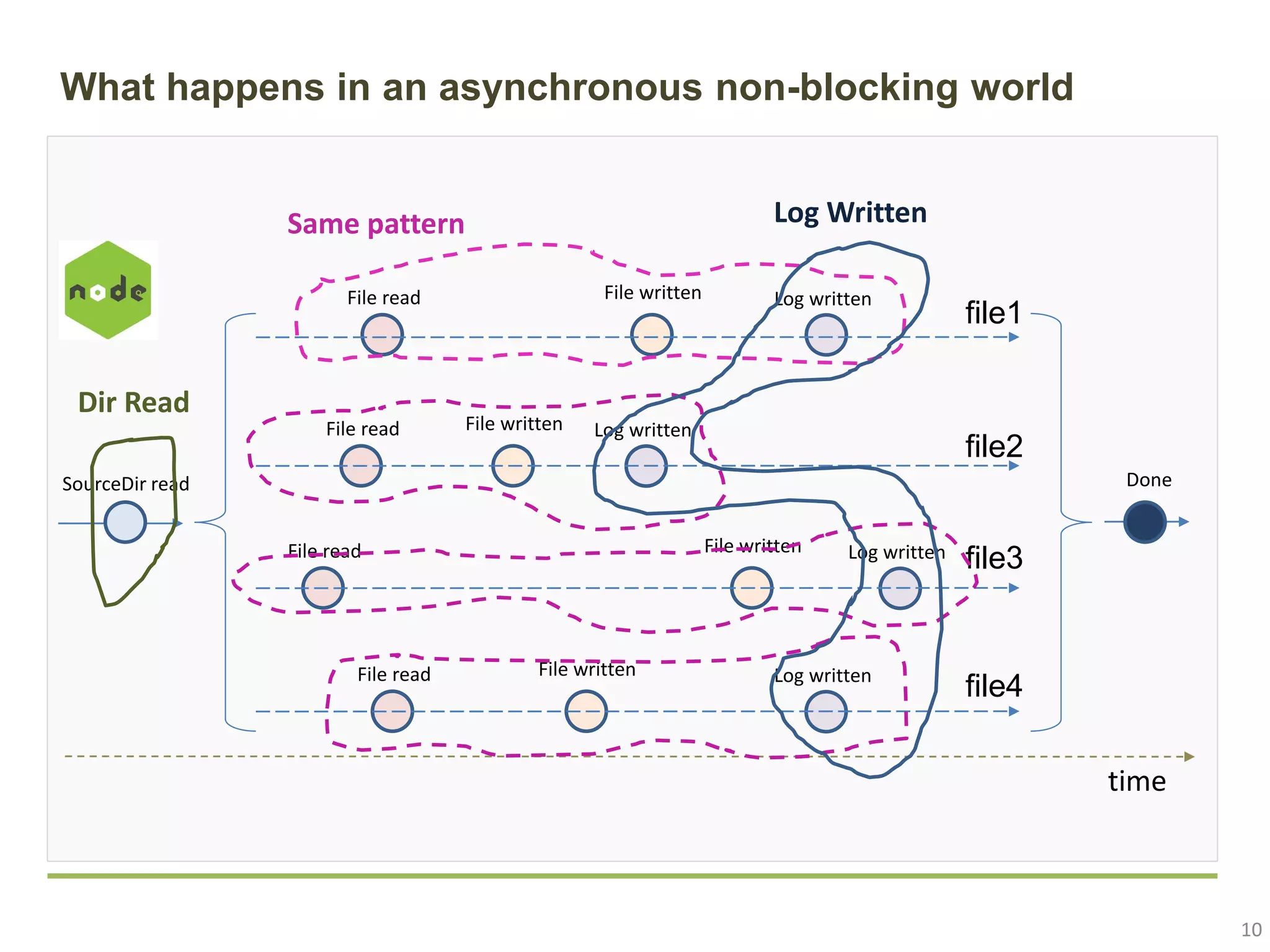

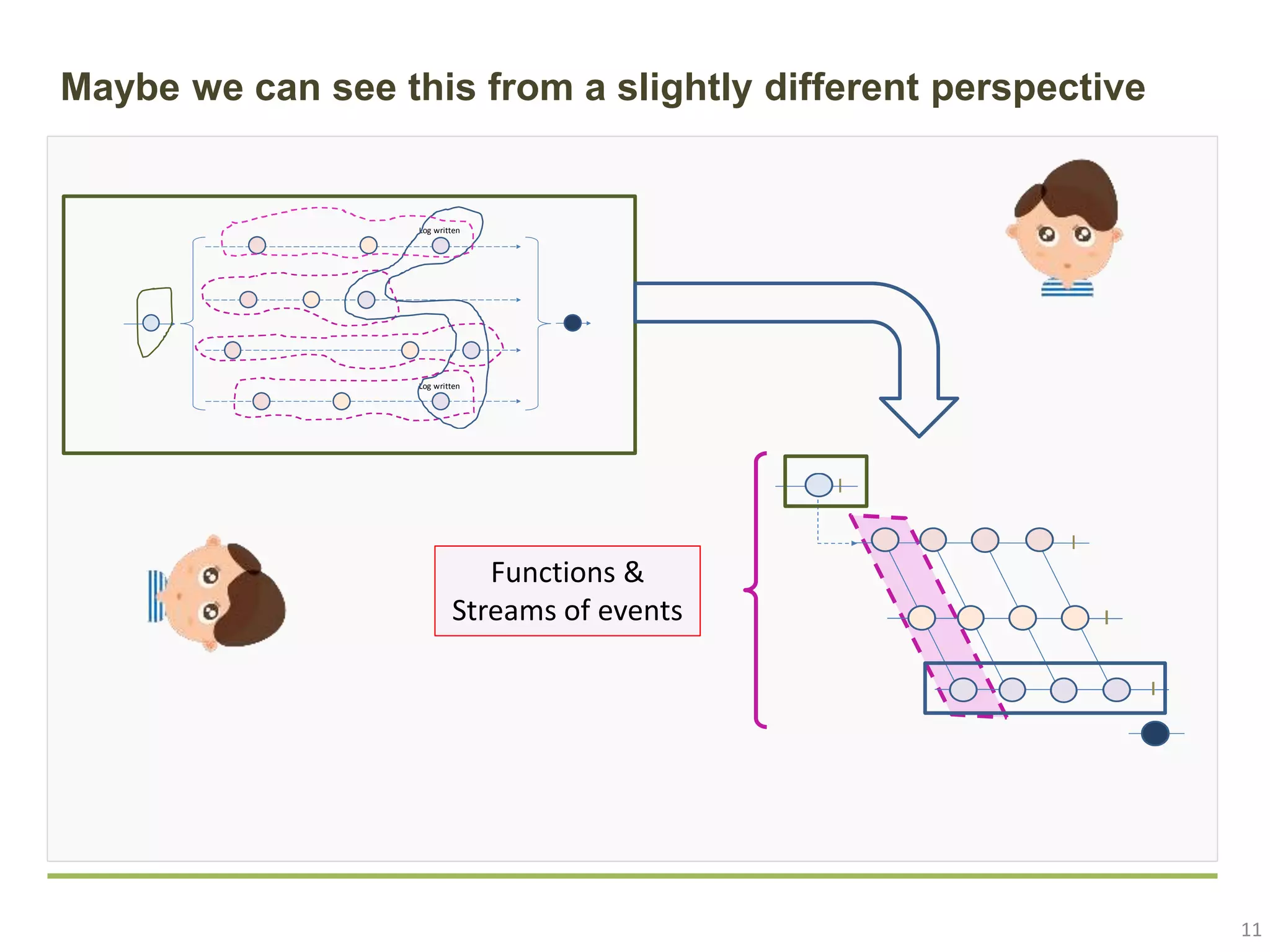

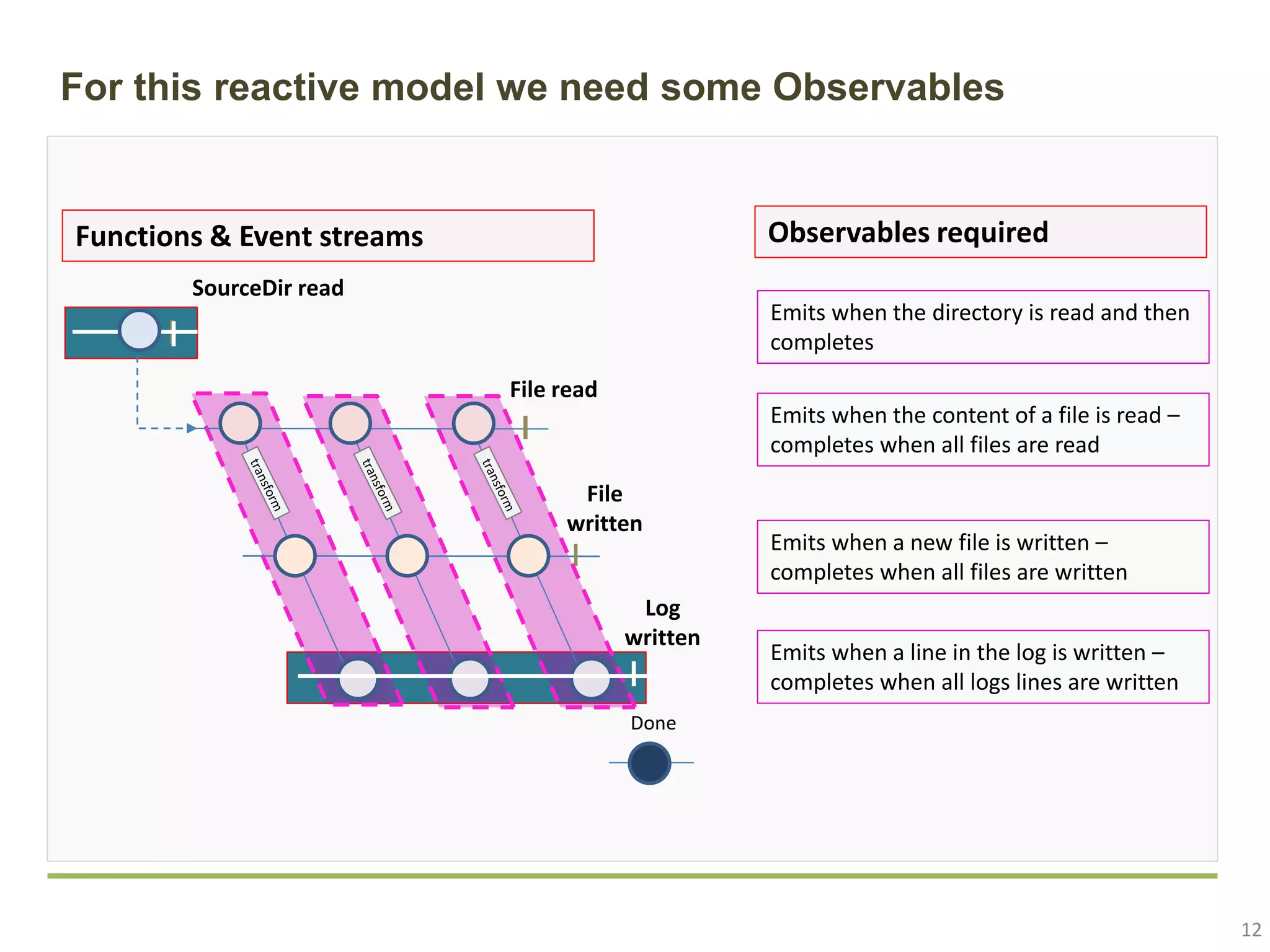

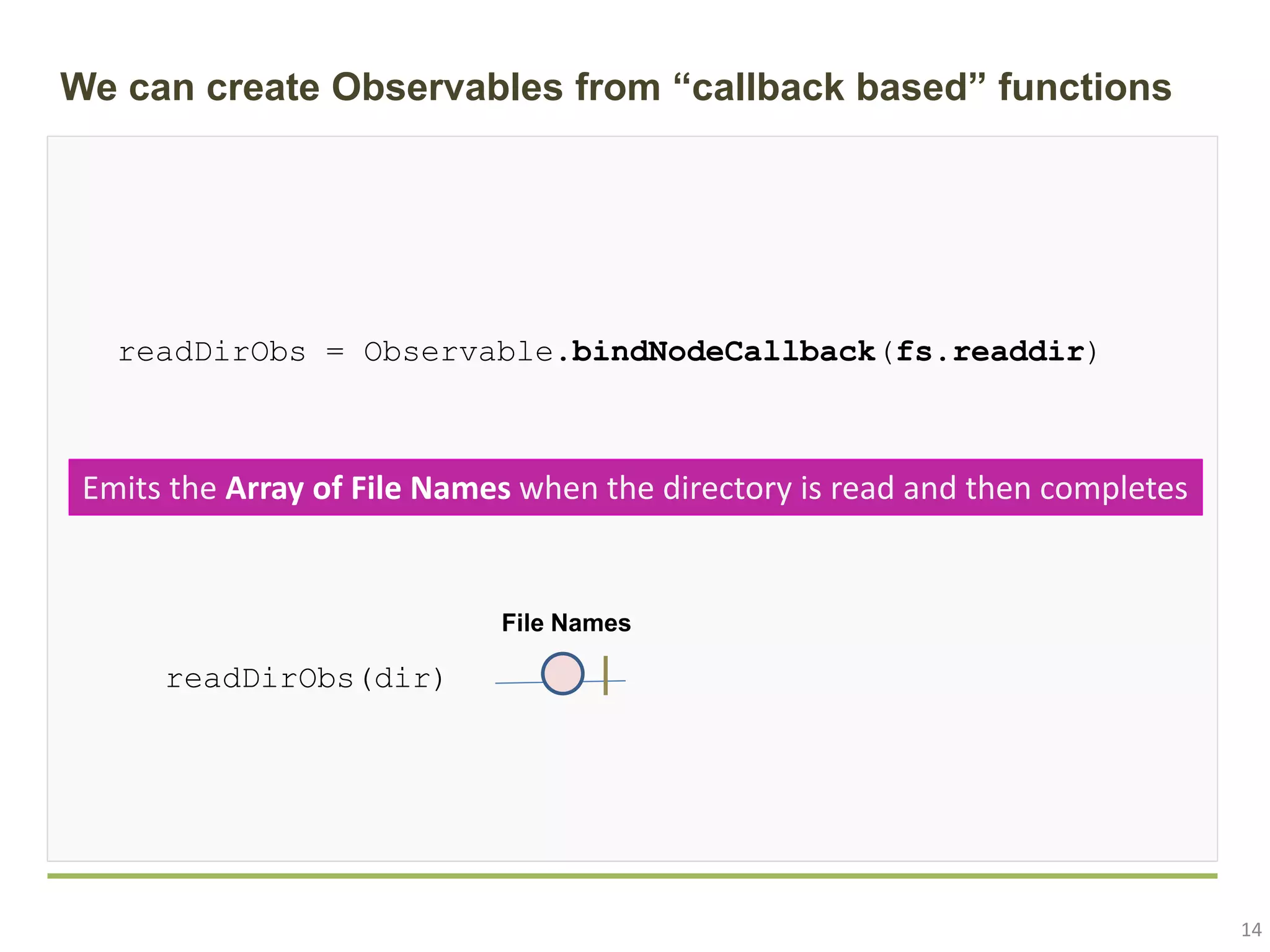

The document discusses how ReactiveX and observables can enhance asynchronous non-blocking programming in environments like Node.js. It explains the observable pattern, how to transform event streams, and provides a real-world use case that demonstrates the efficiency of processing I/O-bound tasks. The document also contrasts observables with promises and highlights the benefits of using observables for handling asynchronous event streams.

![Slide 13: We need to start from what Node gives us: "callback based" functions](https://image.slidesharecdn.com/piccinin-2018-04-codemotion-noderxjs-7-180709104735/75/Make-RxJS-and-Node-dance-how-reactive-programming-can-help-in-asynchronous-non-blocking-environments-Enrico-Piccinin-Codemotion-Rome-2018-13-2048.jpg)

Slide 13: We need to start from what Node gives us: "callback based" functions.

- Read directory: `fs.readdir(path[, options], callback)`
- Read file: `fs.readFile(path[, options], callback)`
- Write file: `fs.writeFile(file, data[, options], callback)`
- Append line: `fs.write(fd, string[, position[, encoding]], callback)`

The slide sketches the processing steps in pseudocode, with a "for each file ... end-for" loop and the closing question "Am I done?":

- `readFilesFromSourceDir(dir, cb(data, err) { })`
- `readFileContent(path, cb(err, content) { transform(content) })`
- `writeFileToTargetDir(newContent, cb(err, data) { })`
- `writeLog(newFile, cb(err, data) { })`
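The callback-based steps listed on this slide can be sketched with Node's built-in `fs` module roughly as follows. This is a minimal illustration, not the speaker's code: `transformDir` and its parameters are hypothetical names, the sketch assumes the source directory contains only regular files and that the target directory already exists, and the logging step is left out. The manual `pending` counter is one way to answer the slide's "Am I done?" question when every file is processed by independent callbacks.

```javascript
const fs = require('node:fs');
const path = require('node:path');

// Hypothetical helper (not from the talk): reads every file in sourceDir,
// applies transform to its content, writes the result to targetDir, and
// calls done(err, writtenPaths) once all files have been written.
function transformDir(sourceDir, targetDir, transform, done) {
  fs.readdir(sourceDir, (dirErr, files) => {
    if (dirErr) return done(dirErr);
    if (files.length === 0) return done(null, []);

    let pending = files.length; // manual bookkeeping: "Am I done?"
    let failed = false;         // ensures done is called at most once on error
    const written = [];

    for (const file of files) {
      fs.readFile(path.join(sourceDir, file), 'utf8', (readErr, content) => {
        if (failed) return;
        if (readErr) { failed = true; return done(readErr); }

        const target = path.join(targetDir, file);
        fs.writeFile(target, transform(content), (writeErr) => {
          if (failed) return;
          if (writeErr) { failed = true; return done(writeErr); }

          written.push(target);
          pending -= 1;
          if (pending === 0) done(null, written); // last write has finished
        });
      });
    }
  });
}
```

Even in this small sketch, completion tracking and error handling have to be threaded through every nested callback by hand.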

![Slide 15: Observables can be created also from Arrays](https://image.slidesharecdn.com/piccinin-2018-04-codemotion-noderxjs-7-180709104735/75/Make-RxJS-and-Node-dance-how-reactive-programming-can-help-in-asynchronous-non-blocking-environments-Enrico-Piccinin-Codemotion-Rome-2018-15-2048.jpg)

Slide 15: Observables can also be created from Arrays. Given `names = ["Gina", "Fede", "Maria", "Carl", "Rob"]` and `namesObs = Observable.from(names)`, the observable `namesObs` emits Gina, Fede, Maria, Carl, Rob in order. It emits sequentially as many events as there are objects in the Array, each event carrying the object itself as its data, and then completes.
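The emit-each-item-then-complete behaviour described on this slide can be illustrated without any library. The sketch below uses a hand-rolled stand-in, `fromArray`, which is a hypothetical name and not the RxJS implementation; with RxJS itself one would use the library's own `from` creation function (the slide writes it as `Observable.from`).

```javascript
// Minimal stand-in for an array-backed observable; NOT the RxJS implementation.
// subscribe() pushes each item to observer.next, then signals completion.
function fromArray(items) {
  return {
    subscribe(observer) {
      for (const item of items) observer.next(item);
      observer.complete();
    },
  };
}

const names = ['Gina', 'Fede', 'Maria', 'Carl', 'Rob'];
const namesObs = fromArray(names);

const received = [];
let completed = false;

// Nothing is emitted until someone subscribes.
namesObs.subscribe({
  next: (name) => received.push(name), // one event per array element
  complete: () => { completed = true; }, // fired once, after the last element
});
```

After the `subscribe` call returns, `received` holds the five names in their original order and `completed` is `true`.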

![Slide 16: Now we have all the tools we need to build our solution](https://image.slidesharecdn.com/piccinin-2018-04-codemotion-noderxjs-7-180709104735/75/Make-RxJS-and-Node-dance-how-reactive-programming-can-help-in-asynchronous-non-blocking-environments-Enrico-Piccinin-Codemotion-Rome-2018-16-2048.jpg)

Slide 16: Now we have all the tools we need to build our solution.

- We can create Observables from callback based functions, such as `readFilesFromSourceDir(dir, cb(data, err) { })`, `readFileContent(path, cb(err, content) { transform(content) })`, `writeFileToTargetDir(newContent, cb(err, data) { })` and `writeLog(newFile, cb(err, data) { })`.
- We can create Observables from Arrays, such as `[1, 2, 3, … ]`.
- We can transform Observables into other Observables via operators: `observable2 = operator( observable1 )`.
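The relation `observable2 = operator( observable1 )` from this slide can be sketched as a plain function that takes one observable and returns another. The code below is an illustrative stand-in under stated assumptions: `fromArray` and `map` are hand-rolled here and are not the RxJS implementations, although RxJS provides operators of the same shape.

```javascript
// Minimal array-backed observable; NOT the RxJS implementation.
function fromArray(items) {
  return {
    subscribe(observer) {
      for (const item of items) observer.next(item);
      observer.complete();
    },
  };
}

// An operator, in this sketch, is a function from observable to observable.
// map(project) builds one that applies project to every emitted value.
function map(project) {
  return (source) => ({
    subscribe(observer) {
      source.subscribe({
        next: (value) => observer.next(project(value)),
        complete: () => observer.complete(),
      });
    },
  });
}

const observable1 = fromArray([1, 2, 3]);
const operator = map((n) => n * 10);
const observable2 = operator(observable1); // observable1 itself is unchanged

const output = [];
observable2.subscribe({
  next: (value) => output.push(value),
  complete: () => output.push('done'),
});
// output is now [10, 20, 30, 'done']
```

Because an operator returns a new observable rather than modifying its input, operators can be chained, which is what lets the read, transform and write steps be composed into a single stream.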