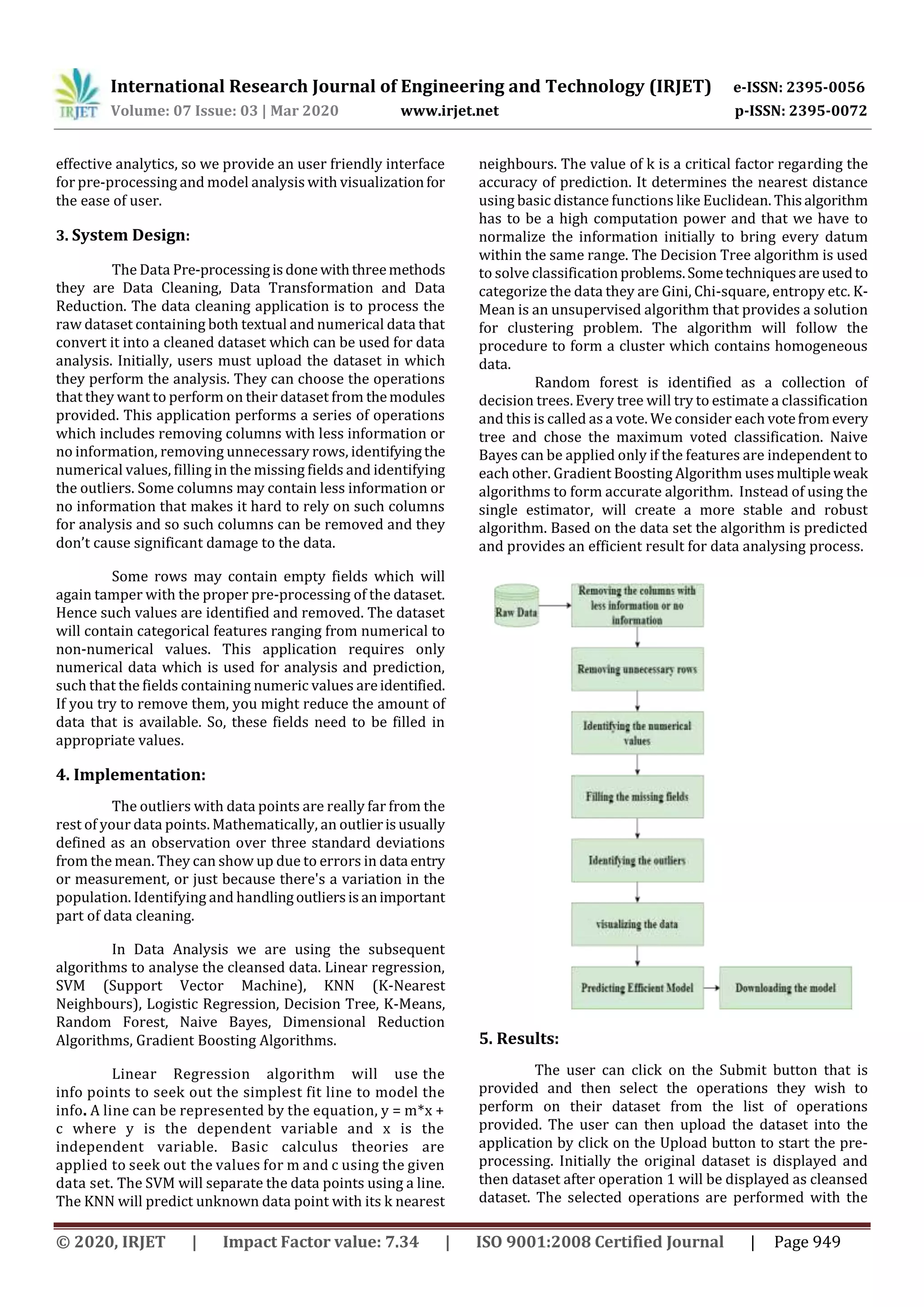

This document presents a tool for preprocessing and visualizing data using machine learning models. It aims to simplify preprocessing for users by performing tasks such as data cleaning, transformation, and reduction. The tool takes in a raw dataset and cleans it by removing missing values, outliers, and similar errors. It then lets users apply machine learning algorithms such as linear regression, KNN, and random forest for analysis, and the processed and predicted data can be visualized. The tool is intended to save time by automating preprocessing and providing visual outputs for machine learning analysis of large datasets.
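The flow summarized above (clean the raw data, then apply a model) can be illustrated with a minimal sketch. It is not the tool's actual implementation: the helper names `drop_incomplete` and `fit_line`, the sample data, and the choice of ordinary least squares as the linear regression step are all assumptions made for illustration, and only the Python standard library is used.

```python
from statistics import mean


def drop_incomplete(rows):
    """Keep only the rows in which every field has a value (None = missing)."""
    return [row for row in rows if all(value is not None for value in row)]


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


# Hypothetical raw dataset of (hours_studied, exam_score) pairs.
raw = [(1.0, 52.0), (2.0, 54.0), (None, 60.0), (3.0, 56.0), (4.0, None), (5.0, 60.0)]

# Step 1: cleaning. Rows with a missing value are removed.
clean = drop_incomplete(raw)

# Step 2: modelling. A straight line is fitted to the cleaned rows.
xs = [row[0] for row in clean]
ys = [row[1] for row in clean]
slope, intercept = fit_line(xs, ys)

# Step 3: prediction for a new input; this value could then be plotted.
predicted = slope * 6.0 + intercept
print(f"score = {slope:.2f} * hours + {intercept:.2f}; prediction for 6 hours: {predicted:.2f}")
```

Dropping incomplete rows is the simplest cleaning strategy; a real dataset would usually call for filling values in or a library such as pandas or scikit-learn, which this sketch deliberately avoids so that it stays self-contained.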

![International Research Journal of Engineering and Technology (IRJET), Volume 07, Issue 03, page 948](https://image.slidesharecdn.com/irjet-v7i3177-201205022605/75/IRJET-An-User-Friendly-Interface-for-Data-Preprocessing-and-Visualization-using-Machine-Learning-Models-1-2048.jpg)

International Research Journal of Engineering and Technology (IRJET) | e-ISSN: 2395-0056 | p-ISSN: 2395-0072 | Volume: 07 Issue: 03 | Mar 2020 | www.irjet.net | © 2020, IRJET | Impact Factor value: 7.34 | ISO 9001:2008 Certified Journal | Page 948

An User Friendly Interface for Data Preprocessing and Visualization using Machine Learning Models

Mr. S. Yoganand¹, Bharathi Kannan R², Daya Meenakshi B³

¹Assistant Professor, Department of Computer Science and Engineering, Agni College of Technology, Chennai-130, Tamil Nadu, India.

²,³UG Student, Department of Computer Science and Engineering, Agni College of Technology, Chennai-130, Tamil Nadu, India.

Abstract – Machine learning is one of the most efficient techniques for prediction and classification problems. Today, industries all over the world depend on machine learning models, which has led into the century of data analytics. There is no proper and efficient tool for handling datasets that uses machine learning models for data prediction and visualization. This paper therefore proposes a user-friendly approach to handling machine learning models for data prediction and visualization. A tool is developed that performs data cleaning, a prerequisite for data analysis, and then provides a visual representation of the cleansed data. The tool takes as input a structured dataset containing both textual and numerical data, which is processed using machine learning algorithms to obtain a pre-processed dataset. The process goes through a series of steps to produce visualized and predicted data according to the chosen algorithm.

Key Words: Machine learning, visualization, pre-processing, tool, user interface.

1. INTRODUCTION

An organisation uses datasets for predictive analysis, and an important concern in these cases is data quality. Using noisy data can hamper the correctness of the analysis. Common errors include missing values and duplicates. These errors need to be corrected for reliable decisions and analytics. Users must know the effects of using noisy data before proceeding with the cleaning process. Noise removal improves model performance, because noise may disturb the discovery of important information.

Machine learning is a widely appreciated application of Artificial Intelligence. It is used to learn automatically, without human assistance, from huge datasets that contain a large number of data fields. With the data provided by the system after applying the machine learning algorithms, organizations are able to work more effectively and gain profit over their competitors. A system that uses machine learning techniques can predict what the structure of the data looks like and adjust the data according to that structure.

The main challenge for a machine learning model is dealing with large data sources during the data cleaning process. Data cleaning is carried out by taking in huge datasets, which are checked for possible errors using data pre-processing techniques. Other challenges include avoiding learning from noisy data, avoiding building a prejudiced model, and not making excuses for compromising on the quality of the data. The best practices for data cleaning using machine learning techniques are filling in missing values, removing unnecessary rows, reducing the size of the data, and implementing a good quality plan. The success of machine learning applications depends on the amount of good-quality data given to them. However, cleaning may not be considered a main area in data pre-processing.

A system that uses powerful algorithms to process noisy data can still yield bad results if an irrelevant or wrong training set is given. In the proposed model, ML algorithms find the different patterns in the data and group it automatically into clean and noisy data, which helps reduce execution time.

2. Related Work:

Data pre-processing is used to convert raw data into a pre-processed dataset [1]. In machine learning, data pre-processing transforms or encodes the data so that the algorithm can handle it easily. It consists of the following interactive steps. Data cleaning detects and corrects inaccurate records in a record set or table by replacing, modifying, or deleting the noisy data. Data integration combines data residing in different sources and provides the user with a unified view of these data [2]. The process of selecting suitable data for a research project impacts data integrity, whereas data transformation converts data from a source format into a resultant format [3].

The tools available for data processing and visualization include KNIME, Shogun, Oryx 2, TensorFlow, Weka, RapidMiner, Trifacta Wrangler, and Python [12] [13].

In this paper, we focus on removing noisy data: identifying numerical values, predicting and filling in missing values, and detecting outliers that hamper data analysis [11]. We propose a system that simplifies the process for the user and allows for better processing. In summary, machine learning for data cleaning might be the only way to provide complete and trustworthy data sets for
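The cleaning tasks named in the text (removing duplicates, filling in missing values, and detecting outliers in numerical data) can be sketched as follows. This is a hedged illustration, not the paper's method: the paper does not state which techniques its tool uses, so median filling and the 1.5 × IQR outlier rule are swapped in as common defaults, and the function names and sample rows are hypothetical.

```python
from statistics import median, quantiles


def remove_duplicates(rows):
    """Drop repeated rows, keeping the first occurrence and the original order."""
    seen = set()
    unique = []
    for row in rows:
        if row not in seen:
            seen.add(row)
            unique.append(row)
    return unique


def fill_missing(values):
    """Replace None with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in values if v < low or v > high]


# Hypothetical noisy dataset of (record_id, age); None marks a missing value.
rows = [("a", 21.0), ("b", 23.0), ("b", 23.0), ("c", None),
        ("d", 22.0), ("e", 24.0), ("f", 25.0), ("g", 95.0)]

unique = remove_duplicates(rows)                    # the repeated "b" row is dropped
ages = fill_missing([age for _, age in unique])     # the missing age becomes the median
outliers = iqr_outliers(ages)                       # ages far outside the quartile range

print(len(unique), ages, outliers)
```

The median is used for filling because it is less sensitive than the mean to extreme values such as the age of 95 in the sample rows; whether a flagged outlier should be removed or kept remains a decision for the user.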

![International Research Journal of Engineering and Technology (IRJET), Volume 07, Issue 03, page 950](https://image.slidesharecdn.com/irjet-v7i3177-201205022605/75/IRJET-An-User-Friendly-Interface-for-Data-Preprocessing-and-Visualization-using-Machine-Learning-Models-3-2048.jpg)

Cleansed dataset; finally, the user performs the data analysis with the required algorithm to obtain the result as a visualization, which the user can download.

[Figures: Upload Noisy Dataset; Displaying the Noisy Dataset; Preprocessing the Data; Applying Machine Learning Model; Output]

6. Conclusion:

Our system performs data cleaning, data transformation, and data reduction as part of data pre-processing. It takes raw datasets into the application, pre-processes them to clean up all the noisy data, and presents the cleansed data visually to the user once pre-processing is done. The system saves a lot of time because manual cleaning can be avoided. After cleansing, the user can select a machine learning model, which provides its results as plots. The system serves users who want to clean huge datasets and visualize the analysis of the pre-processed data. In future, accuracy measurement and comparison of the machine learning algorithms can be added within the user-friendly interface.

REFERENCES

[1] Cristian Felix, Anshul Vikram Pandey, and Enrico Bertini, “TextTile: An Interactive Visualization Tool for Seamless Exploratory Analysis of Structured Data and Unstructured Text,” IEEE, 2018.

[2] Huawen Liu, Xuelong Li, Jiuyong Li, and Shichao Zhang, “Efficient Outlier Detection for High-Dimensional Data,” IEEE, 2019.

[3] M. Bostock, V. Ogievetsky, and J. Heer, “Data-driven documents,” IEEE, 2011.

![International Research Journal of Engineering and Technology (IRJET), Volume 07, Issue 03, page 951](https://image.slidesharecdn.com/irjet-v7i3177-201205022605/75/IRJET-An-User-Friendly-Interface-for-Data-Preprocessing-and-Visualization-using-Machine-Learning-Models-4-2048.jpg)

[4] F. Beck, S. Koch, and D. Weiskopf, “Visual Analysis and Dissemination of Scientific Literature Collections with SurVis,” IEEE, 2016.

[5] Parke Godfrey, Jarek Gryz, and Pioter Lasek, “Interactive visualisation of large datasets,” IEEE, 2016.

[6] Dileep Kumar Koshley and Raju Hadler, “Data Cleaning: An Abstraction-based approach,” IEEE, 2015.

[7] Mehmet Adil Yalçın, Niklas Elmqvist, and Benjamin B. Bederson, “Keshif: Rapid and Expressive Tabular Data Exploration for Novices,” IEEE, 2018.

[8] S. Papadimitriou, H. Kitagawa, P. B. Gibbons, and C. Faloutsos, “LOCI: Fast outlier detection using the local correlation integral,” IEEE 19th Int. Conf. Data Eng. (ICDE), Bengaluru, India, 2003, pp. 315–326.

[9] Y. Pang, J. Cao, and X. Li, “Learning sampling distributions for efficient object detection,” IEEE Trans. Cybern., vol. 47, no. 1, pp. 117–129, Jan. 2017.

[10] M. Gupta, J. Gao, C. C. Aggarwal, and J. Han, “Outlier detection for temporal data: A survey,” IEEE Trans. Knowl. Data Eng., vol. 26, no. 9, pp. 2250–2267, Sep. 2014.

[11] S. F. Roth and J. Mattis, “Automating the presentation of information,” in Artificial Intelligence Applications, 1991. Proceedings, Seventh IEEE Conference on, vol. 1. IEEE, 1991, pp. 90–97.

[12] M. Bostock and J. Heer, “Protovis: A graphical toolkit for visualization,” Visualization and Computer Graphics, IEEE Transactions on, vol. 15, no. 6, pp. 1121–1128, 2009.

[13] A. Dziedzic, J. Duggan, A. J. Elmore, V. Gadepally, and M. Stonebraker, “BigDAWG: a polystore for diverse interactive applications,” in IEEE Viz Data Systems for Interactive Analysis, 2015.

[14] P. Bohannon, W. Fan, F. Geerts, X. Jia, and A. Kementsietsidis, “Conditional functional dependencies for data cleaning,” in Data Engineering, IEEE 23rd International Conference on, pp. 746–755. IEEE, 2007.